Abstract

A number of models from mathematics, physics, probability theory and statistics can be described in terms of Wishart matrices and their eigenvalues. The most prominent example being the Laguerre ensembles of the spectrum of Wishart matrix. We aim to express extreme points of the joint eigenvalue probability density distribution of a Wishart matrix using optimisation techniques for the Vandermonde determinant over certain surfaces implicitly defined by univariate polynomials.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Vandermonde determinant

- Orthogonal ensembles

- Gaussian ensembles

- Wishart ensembles

- Eigenvalue density optimization

MSC 2010 Classification

34.1 Introduction

In this work, we review and investigate the Gaussian \(\beta \)-ensembles as the basis for a generalized Wishart density distribution and how this can be optimized over various surfaces, in particular a unit sphere. We take advantage of the general properties of Vandermonde determinant. To begin with, we give a brief outline of key terms including but not limited to Gaussian univariate and multivariate distributions, the Chi-squared density, the Wishart density, the occurrence of random matrices, their join eigenvalue probability distribution, the \(\beta \)-ensembles, the Vandermonde matrix and its determinant. We then illustrate the optimization of the joint probability density function of the \(\beta \)-ensembles over a unit sphere based on the characteristic properties of the Vandermonde determinant.

34.1.1 Univariate and Multivariate Normal Distribution

Definition 34.1

The univariate normal probability density function (Gaussian normal density) for a random variable X, which is the basis for construction of many multivariate distributions that occur in statistics, can be expressed as [4]:

where \(\alpha \) and k is chosen so that the integral of (34.1) over the entire \(x-\)axis is unity and \(\beta \) is equal to the expectation of X, that is, \(\mathbb {E}[X] = \beta \). It is then said that X follows a normal probability density function with parameters \(\alpha \) and \(\beta \), also expressed as \(X\sim \mathcal {N}(\alpha ,\beta )\).

The density function of the multivariate normal distribution of random variables say \(X_{1},\, \ldots ,\, X_{p}\) is defined analogously. If the scalar variable x in (34.1) is directly replaced by the vector \(\mathbf {X} = (X_{1}, \,\ldots ,\, X_{p})^{\top }\), the scalar constant \(\beta \) is replaced by a vector \(\mathbf {b} = (b_{1},\, \ldots , \,b_{p})^{\top }\) and the positive definite matrix

The expression

is replaced by the quadratic form

Thus, the density of the p-variate normal distribution becomes

where \(\top \) denotes transpose and \(K > 0\) is chosen so that the integral over the entire p-dimensional Euclidean space \(x_{1}, \ldots , x_{p}\) is unity.

Theorem 34.1

If the density of a p-dimensional random vector \(\mathbf {X}\) is

then the expected value of \(\mathbf {X}\) is \(\mathbf {b}\) and the covariance matrix is \(\mathbf {A}^{-1}\), see [4]. Conversely, given a vector \(\pmb {\mu }\) and a positive definite matrix \(\pmb {\varSigma }\), there is a multivariate normal density

such that the expected value of the density is \(\pmb {\mu }\) and the covariance matrix is \(\pmb {\varSigma }\).

The density (34.5) is often denoted as \(\mathbf {X} \sim \mathcal {N}_{p}(\pmb {\mu }, \pmb {\varSigma })\).

For example, the diagonal elements of the covariance matrix, \(\pmb {\varSigma }_{ii}\), is the variance of the ith component of \(\mathbf {X}\), which may sometimes be denoted by \(\sigma _{i}^{2}\). The correlation between \(X_{i}\) and \(X_{j}\) is defined as

where \(\sigma _k\) denotes the standard deviation of \(X_k\) and \(\sigma _{ij} = \pmb {\varSigma }_{ij}\). This measure of association is symmetric in \(X_{i}\) and \(X_{j}\) such that \(\rho _{ij} = \rho _{ji}\). Since

is positive-definite, the determinant

is positive. Therefore \(-1< \rho _{ij} < 1\).

34.1.2 Wishart Distribution

The matrix distribution that is now known as a Wishart distribution, was first derived by Wishart in the late 1920s [56]. It is usually regarded as a multivariate extension of the \(\chi ^{2}-\)distribution.

Theorem 34.2

The sum of squares, \(\displaystyle \pmb {\chi }^{2} = Z_{1}^{2} + \cdots + Z_{n}^{2}\) of n- independent standard normal variables \(Z_{i}\) of mean 0 and variance 1, that is, distributed as \(\mathcal {N}(0,1)\) has a \(\chi ^{2}\)-distribution defined by:

where \(\mathrm {\Gamma }\left( \cdot \right) \) is the Gamma function [40].

Definition 34.2

Let \(\mathbf {X} = (X_{1}, \ldots , X_{n})\), where \(X_{i} \sim \mathcal {N}(\mu _{i}, \pmb {\varSigma })\) and \(\mathbf {X}_{i}\) is independent of \(\mathbf {X}_{j}\), where \(i\not = j\). The matrix \(\mathbf {W}:p\times p\) is said to be Wishart distributed [56] if and only if \(\mathbf {W} = \mathbf {X}\mathbf {X}^{\top }\) for some matrix \(\mathbf {X}\) in a family of Gaussian matrices \(\mathbf {G}_{m \times n}, m \le n\), that is, \(\mathbf {X} \sim \mathcal {N}_{m,n}(\pmb {\mu }, \pmb {\varSigma }, \mathbf {I})\) where \(\pmb {\varSigma }\ge 0\). If \(\pmb {\mu } = 0\) we have a central Wishart distribution which will be denoted by \(\mathbf {W} \sim \mathcal {W}_{m}(\pmb {\varSigma }, n)\), and if \(\pmb {\mu } \not =0\) we have a non-central Wishart distribution which will be denoted \(\mathbf {W} \sim \mathcal {W}_{m}(\pmb {\varSigma }, n, \pmb {\triangle })\), where \(\pmb {\triangle } = \pmb {\mu }\pmb {\mu }^{\top }\) and n is the number of degrees of freedom.

In our study, we shall mainly focus on the central Wishart distribution for which \(\pmb {\mu } = 0\) and \(\mathbf {X} \sim \mathcal {N}_{m,n}(\pmb {\mu }, \pmb {\varSigma }, \mathbf {I})\)

Theorem 34.3

([4]) Given a random matrix \(\mathbf {W}\) which can be expressed as \(\displaystyle \mathbf {W} = \mathbf {X}\mathbf {X}^{\top } \) where \(\mathbf {X}_{1}, \cdots , \mathbf {X}_{n}, ~(n \ge p)\) are independent, each with the distribution \(\mathcal {N}_{p}(\pmb {\mu }, \pmb {\varSigma })\). Then, the distribution of \(\mathbf {W} \sim \mathcal {W}_{p}(\pmb {\varSigma }, n)\). If \(\pmb {\varSigma } > 0\), then the random matrix \(\mathbf {W}\) has a joint density functions:

where the multivariate Gamma function is given by

If \(p=1, \pmb {\mu } = \mathbf {0}\) and \(\pmb {\varSigma } = \mathbf {1}\), then the Wishart matrix is identical to a central \(\pmb {\chi }^{2}\)-variable with n degrees of freedom as defined in (34.6).

Theorem 34.4

([24, 39]) If \(\mathbf {X}\) is distributed as \(\mathcal {N}(\pmb {\mu }, \displaystyle \pmb {\varSigma }),\) then the probability density distribution of the eigenvalues of \(\mathbf {X}\mathbf {X}^{\top }\), denoted \(\pmb {\lambda } = (\lambda _{1}, \ldots , \lambda _{m})\), is given by:

where \(\mathbf {D} = \mathrm {diag}(\lambda _{i})\) and \(\Gamma \) is the Gamma function.

It will prove useful that (34.9) contains the term \(\displaystyle \prod _{i < j}(\lambda _{i} - \lambda _{j})\) which is the determinant of a Vandermonde matrix [46]. A Vandermonde matrix is a well-known type of matrix that appears in many different applications both in mathematics, physics and recently in multivariate statistics, most famously curve-fitting using polynomials, for details see [46].

Definition 34.3

Square Vandermonde matrices of size \(n \times n\) are determined by N values \(\mathbf {x}=(x_1,\ldots ,x_n)\) and is defined as follows:

The determinant of the Vandermonde matrix is well known.

Lemma 34.1

The determinant of square Vandermonde matrices has the form

This determinant is also referred to as the Vandermonde determinant or Vandermonde polynomial or Vandermondian [46].

We take advantage of this fact of Vandermonde determinant to establish the relationship between the product of Vandermonde matrices and joint eigenvalue probability density functions for large random matrices that occur in various areas of both classical mechanics, mathematics, statistics and many other areas of science. We also illustrate the optimization of these densities based of the extreme points Vandermonde determinant.

The extreme points of the Vandermonde determinant appears in random matrix theory, for example to compute the limiting value of the so called Stieltjes transform using the method sometimes called the ‘Coulomb gas analogy’ [32]. This is also closely related to many problems in quantum mechanics and statistical mechanics. For an overview of some other applications of the extreme points see [35].

In the next section we give a brief overview of random matrix theory (RMT).

34.2 Overview of Random Matrix Theory

Random matrices were first introduced in mathematical statistics in the late 1920s [56] and today the joint probability density function of eigenvalues of random matrices play a significant role both in probability theory, mathematical physics and quantum mechanics [22]. A random matrix, in simple terms can be defined as any matrix whose real or complex valued entries are random variables.

Random matrix theory primarily discusses the properties large or complex matrices with random variables as entries by utilizing the existing probability laws, in particularly, Gaussian distributions [4, 5]. The main motivational question in the probabilistic approach to random matrices is: what can be said about the probabilities of a few or if not all of its eigenvalues and eigenvectors? This question is significant in many areas of science including particle physics, mathematics, statistics and finance as highlighted here under.

In nuclear physics random matrices were applied in the modelling of the nuclei of heavy atoms [55]. The main idea was to investigate the spacing between the lines in the electromagnetic spectrum of a heavy atom nucleus, e.g. Uranium 238, which resembles the separation between the eigenvalues of a random matrix [32]. These random matrices have also been employed in solid-state physics to model the chaotic behaviour of large disordered Hamiltonians in terms of mean field approximation [14]. Random matrices have also been applied in quantum chaos to characterise the spectral statistics of quantum systems [9, 12].

Random unitary matrix transformations has also appears in theoretical physics, e.g. the boson sampling model [1] has been applied in quantum optics to describe the advantages of quantum computation over classical computation. Random unitary transformations can also be directly implemented in an optical circuit, by mapping their parameters to optical circuit components [41].

Other applications in theoretical physics include, analysing the chiral Dirac operator [28, 52] quantum chromodynamics, quantum gravity in two dimensions [21], in mesoscopic physics random matrices are used to characterise materials of intermediate length [43], spin-transfer torque [42], the fractional quantum Hall effect [10], Anderson localization [25], quantum dots [59] and superconductors [7], electrodynamic properties of structural materials [58], describing electrical conduction properties of disordered organic and inorganic materials [57], quantum gravity [15] and string theory [8].

In mathematics some application include the distribution of the zeros of the Riemann zeta function [27], enumeration of permutations having certain particularities in which the random matrices can help to derive polynomials permutation patterns [38], counting of certain knots and links as applies to folding and coloring [8].

In multivariate statistics random matrices were introduced for statistical analysis of large samples in estimation of covariance matrices [18,19,20, 33, 45, 56]. More significant results have proven that to extend the classical scalar inequalities for improved analysis of a structured dimension reduction based on largest eigenvalues of finite sums of random Hermitian matrices [48].

Random matrices have also been applied to financial modelling especially risk models and time series [6, 23, 51, 56].

Random matrices also are increasingly used to model the network of synaptic connections between neurons in the brain as applies to neural networks or neuroscience. Neuronal networks can help to construct dynamical models based on random connectivity matrix [44]. This has also helped to establish the link relating the statistical properties of the spectrum of biologically inspired random matrix models to the dynamical behaviour of randomly connected neural networks [13, 26, 36, 47, 53].

In optimal control theory random matrices appear as coefficients in the state equation of linear evolution. In most problems the values of the parameters in these matrices are not known with certainty, in which case there are random matrices in the state equation and the problem is known as one of stochastic control [11, 49, 50].

In the next section, we will discuss some well-known ensembles that that appear in the mathematical study of random matrices.

34.3 Classical Random Matrix Ensembles

The key famously known classical ensembles include the Gaussian Orthogonal Ensembles (G.O.E), the Gaussian Unitary Ensembles (G.U.E), the Gaussian Symplectic Ensembles (GSE), the Wishart Ensembles (W.E), the MANOVA Ensembles (M.E) and the Circular Ensembles (C.E). These can be derived from the multivariate Gaussian matrix, \(\mathbf {G}_{\beta }, \beta = 1, 2, 4\). Since, the multivariate Gaussian possesses an inherent orthogonal property from the standard normal distribution, that is, they remain invariant under orthogonal transformations. More detailed discussions on these ensembles can be found in [3, 4, 32, 37, 54, 56].

Definition 34.4

([29]) The Gaussian Orthogonal Ensembles (G.O.E) are characterised by the symmetric matrix \(\mathbf {X} = \mathbf {G}_{1}(N,N)\) obtained as \(\left( \mathbf {X} + \mathbf {X}^{\top }\right) /2\). The diagonal entries of \(\mathbf {X}\) are independent and identically distributes (i.i.d) with a standard normal distribution \(\mathcal {N}(0,1)\) while the off-diagonal entries are i.i.d with a standard normal distribution \(\mathcal {N}_{1}(0,1/2)\). That is, a random matrix \(\mathbf {X}\) is called the Gaussian Orthogonal Ensemble (GOE), if it is symmetric and real-valued (\(X_{ij} = X_{ji}\)) and has

Definition 34.5

([16, 29]) The Gaussian Unitary Ensembles (G.U.E), are characterised by the Hermitian complex-valued matrix \(\mathbf {H} = \mathbf {G}_{2}(N,N)\) obtained as \(\left( \mathbf {H} + \mathbf {H}^{\top ^*}\right) /2\) where \(\top ^*\) is the operation of taking the Hermitian transpose, that is, the Hermitian or conjugate transpose of \(\mathbf {H}\), and expressed as \((\mathbf {H}^{\top ^*})_{ij} = \overline{\mathbf {H}}_{ji}\). The diagonal entries of \(\mathbf {H}\) are independent and identically distributes (i.i.d) with a standard normal distribution \(\mathcal {N}(0,1)\) while the off-diagonal entries are i.i.d with a standard normal distribution \(\mathcal {N}_{2}(0,1/2)\). That is, random matrix \(\mathbf {H}\) is called a Gaussian Unitary Ensemble (GUE), if it is complex-valued, Hermitian \((\mathbf {H}_{ij}^{\top ^*} = \overline{\mathbf {H}}_{ji})\), and the entries satisfy

Definition 34.6

([6, 29]) The Gaussian Symplectic Ensembles (GSE), are characterised by the self-dual matrix \(\mathbf {S} = \mathbf {G}_{4}(N,N)\) obtained as \(\left( \mathbf {S} + \mathbf {S}^{\top ^*}\right) /2\) where \(\top ^*\) represents the operation of taking the conjugate transpose of a quaternion matrix. The diagonal entries \(\mathbf {H}\) are independent and identically distributes (i.i.d) with a standard normal distribution \(\mathcal {N}(0,1)\) while the off-diagonal entries are i.i.d with a standard normal distribution \(\mathcal {N}_{4}(0,1/2)\).

Definition 34.7

([6, 29]) The Wishart Ensembles (W.E), \(\mathcal {W}_{\beta }(m,n), m \ge n\), are characterised by the symmetric, Hermitian or self-dual matrix \(\mathbf {W} = \mathbf {W}_{\beta }(N,N)\) obtained as \(\mathbf {W} = \mathbf {A}\mathbf {A}^{\top }, \mathbf {W} = \mathbf {H}\mathbf {H}^{\top }\), or \(\mathbf {W} = \mathbf {S}\mathbf {S}^{\top }\) where \(\top \) represents the operation of taking the usual transposes of defined in G.O.E, G.U.E and G.S.E above respectively.

Definition 34.8

([6, 29]) The MANOVA Ensembles (M.E), \(\mathcal {J}_{\beta }(m_{1}, m_{2},n), m_{1}, m_{2} \ge n\), are characterised by the symmetric, Hermitian or self-dual matrix \(\mathbf {A}/(\mathbf {A} + \mathbf {B})\) where \(\mathbf {A}\) and \(\mathbf {B}\) are \(\mathbf {W}_{\beta }(m_{1},n)\) and \(\mathbf {W}_{\beta }(m_{2},n)\) respectively.

Definition 34.9

([16, 29]) The Circular Ensembles (C.E), are characterised by the special matrix \(\mathbf {U}\mathbf {U}^{\top }\) where \(\mathbf {U}_{\beta }, \beta = 1, 2\) is a uniformly distributed unitary matrix.

Lemma 34.2

([29]) From the Gaussian normal distribution with mean \(\mu \) and variance \(\sigma ^{2}\), that is, \(\mathbf {X}\sim \mathcal {N}(\mu , \sigma ^{2})\), given by (34.1) and the multivariate normal distribution with mean vector \(\pmb {\mu }\) and the covariance matrix is \(\pmb {\varSigma }\), \(\mathcal {N}_{N}(\pmb {\mu },\pmb {\varSigma })\) given in (34.5), then it can be verified that the joint density of \(\mathbf {A}\) is written as:

where \(\Vert \mathbf {A} \Vert _{F}\) represents the Frobenius norm of \(\mathbf {A}\).

Theorem 34.5

([4, 16]) If we let \(\mathbf {X}\) be an \(N \times N\) random matrix with entries that are independently identically distributed as \(\mathcal {N}(0,1)\), then the joint density distribution of the Gaussian ensembles is given by:

Theorem 34.6

([16, 32]) Considering a Wishart matrix \(\mathbf {W}_{\beta }(m,n) = \mathbf {X}\mathbf {X}^{\top }\) where \(\mathbf {X} = \mathbf {G}_{\beta }(m,n)\) is a multivariate Gaussian matrix. Then, the joint elements of \(\mathbf {W}_{\beta }(m,n)\) can be computed in two steps, first writing \(\mathbf {W} = \mathbf {Q}\mathbf {R}\) and then integrating out \(\mathbf {Q}\) leaving \(\mathbf {R}\). Secondly applying the transformation \(\mathbf {W} = \mathbf {R}\mathbf {R}^{\top }\), which is the famous Cholesky factorization of matrices in numerical analysis. Then the joint density distribution for Wishart ensembles of \(\mathbf {W}\) is given by:

Here we notice that the density distribution for both the Gaussian and Wishart ensembles are made up of determinant term and exponential trace term. This generalizes the fact that indeed the determinant term is actually the Vandermonde determinant in (34.11) for the case of the joint eigenvalue density functions. This concept further explained in the next section.

34.4 The Vandermonde Determinant and Joint Eigenvalue Probability Densities for Random Matrices

To obtain the joint eigenvalue densities for random matrices, we apply the the principle of matrix factorization, for instance if the random matrix \(\mathbf {X}\) is expressed as \(\mathbf {X} = \mathbf {Q} \pmb {\varLambda } \mathbf {Q}^{\top }\), then \(\pmb {\varLambda }\) directly gives the eigenvalues \(\mathbf {X}\) [24]. Applying the Jacobian technique for joint density transformation, see for example [4], this yields the joint densities of eigenvalues and eigenvectors.

Lemma 34.3

The three Gaussian ensembles have joint eigenvalues probability density function [32, 37] given by

where \(\beta = 1\) representing reals, \(\beta = 2\) representing the complexes, and \(\beta = 4\) representing the quaternion, and

Lemma 34.4

([24, 32]) The Wishart (or Laguerre) ensembles have a joint eigenvalue probability density distribution given by

where \(\alpha = \frac{\beta }{2}m\) and \(p = 1 + \frac{\beta }{2}(N-1)\). The \(\beta \) parameter is decided by what type of elements are in the Wishart matrix, real-valued elements corresponds to \(\beta = 1\), complex-valued elements correspond to \(\beta = 2\) and quarternion elements correspond to \(\beta = 4\), and the normalizing constant \(C_N^{\beta ,\alpha }\) is given by

Thus the joint eigenvalue probability density distribution for all the ensembles can be summarized in the following theorem [18, 29, 32].

Theorem 34.7

Suppose that \(\mathbf {X}_{N} \in \mathcal {H}^{\beta }\) for \(\beta = 1,2,4\). Then, the distribution of eigenvalues of \(\mathbf {X}_{N}\) is given by

where \(\bar{C}_{N}^{(\beta )}\) are normalized constants and can be computed explicitly.

From (34.17) it should be noted that trivially, the properties of a probability density function, that is,

do hold as verified in [32]. We also notice that the term \(\displaystyle \prod _{i<j}|x_{i} - x_{j}|^{\beta }\) in the expression (34.17) is the determinant of the famous Vandermonde matrix (34.10) raised to the power \(\beta = 1, 2, 4\). For example, from (34.10) and (34.11) and applying the principles of linear algebra, that is, if \(\mathbf {A}\) is an \(N\times N\) matrix, then \(|\mathbf {A}^{\beta }| = |\mathbf {A}|^{\beta }\), for determinants. Thus,

It should also be noted that the trace exponential term

in (34.17) is a product of weight functions of Freud type [30, 34] of the form

where \(\alpha = 1, 1/2, 1/4\). The weights \(\omega (x)\) are bounded, that is, \(\displaystyle 0 \le |\omega (x)| \le 1\). If we assume the random variables \(\mathbf {X} = \{x_{1}, \ldots , x_{N}\}\) having normal probability density function with mean \(\mu = 0\) and variance \(\sigma ^{2} = 1\), that is, \(x_{i}\) are independent identically distributed i.i.d. as \(\mathcal {N}(0,1)\), then it follows that we can construct the normal density in terms of a Gaussian weights such that

Also, from the definition of the p-th moment of a probability density function

and we have equivalently in terms of Gaussian weights

Thus focusing on the coefficients terms of \(x_{i}\), that is,

we generate a weighted Vandermonde matrix of the \(\omega (\mathbf {x})\) weighted form as follows:

The determinant of the Vandermonde matrix in (34.19) can also be obtained taking advantage of the Gaussian weights of the form:

and properties of determinant that is, if say \(\mathbf {A}\) is an \(N \times N\) matrix, then \(|\alpha \mathbf {A}| = \alpha ^{N}|A|\). Thus,

If \(\mathbf {X}\) has a central normal distribution, then for any finite non-negative integer p the plain central moments are given by

where n!! denotes the double factorial, that is, the product of numbers from n to 1 that have same parity as n.

The absolute central moment coincides with the plain moments for all even orders and are non-zero for odd orders. Thus, for any non-negative integer p

Considering \(\mathbf {X}_{1}, \ldots , \mathbf {X}_{N}\) independently normally distributed random variables with mean \(\mu = 0\), then the p-th product moment can be expressed as

Thus from (34.22), the joint p-th product moment will be given by

This, thorough examination, generates an equivalent expression for multivariate Gamma function as defined in (34.16), which is also the normalizing constant for the joint eigenvalue density for \(\beta \)-ensembles as given in (34.17). Thus, the same normalizing coefficient can be introduced in the expression (34.20) to lead to the same result as in (34.17).

Basing on the above close link between the Vandermonde determinant, then it is plausible enough to consider the general optimization of Vandermonde determinant over the polynomial constraint defined by trace factor \(\displaystyle \sum _{i=1}^{N}x_{i}^{2}\) in the bounded exponential term. We will apply the method of Lagrange multipliers to optimize the density (34.12) to optimize the Vandermonde determinant on the unit sphere and other surfaces, which in turn optimize the joint eigenvalue density as will be demonstrated in the next section.

34.5 Optimising the Joint Eigenvalue Probability Density Function

Lemma 34.5

For any symmetric \(n \times n\) matrix A with eigenvalues \(\{ \lambda _i, i = 1,\ldots ,n \}\) that are all distinct, and any polynomial P:

Proof

By definition, for any eigenvalue \(\lambda \) and eigenvector \(\mathbf {v}\) we must have \(\mathbf {A}\mathbf {v} = \lambda \mathbf {v}\) and thus

and thus \(P(\lambda )\) is an eigenvalue of \(P(\mathbf {A})\). For any matrix, \(\mathbf {A}\), the sum of eigenvalues is equal to the trace of the matrix

when multiplicities are taken into account. For the matrices considered in the Lemma 34.5 all eigenvalues are distinct. Thus applying this property to the matrix \(P(\mathbf {A})\) gives the desired statement.

Lemma 34.6

A Wishart distributed matrix \(\mathbf {W}\) as defined in Definition 34.2 will be a symmetric \(n \times n\) matrix.

Proof

From the definition \(\mathbf {W}\) is a \(p \times p\) matrix such that \(\mathbf {W} = \mathbf {X}\mathbf {X}^{\top }\). Then

and thus \(\mathbf {W}\) is symmetric.

Lemma 34.7

Suppose we have a Wishart distributed matrix \(\mathbf {W}\) with the probability density function of its eigenvalues given by

where \(C_n\) is a normalising constant, m is a positive integer, \(\beta > 1\) and P is a polynomial with real coefficients. Then the vector of eigenvalues of \(\mathbf {W}\) will lie on the surface defined by

Proof

Since \(\mathbf {W}\) is symmetric by Lemma 34.6 then it will also have real eigenvalues. By Lemma 34.5

and thus the point given by \(\mathbf {\lambda } = (\lambda _{1}, \lambda _{2}, \ldots , \lambda _{n})\) will be on the surface defined by

To find the maximum values we can use the method of Lagrange multipliers and find eigenvectors such that

where \(\eta \) is some real-valued constant. Computing the left-hand side gives

Thus the stationary points of (34.23) on the surface given by (34.24) are the solution to the equation system

If we denote the value of \(\mathbb {P}\) in a stationary point with \(P_s\) then the system above can be rewritten as

The equation system described by (34.25) appears when one tries to optimize the Vandermonde determinant on a surface defined by a univariate polynomial. This problem also appears in other settings, such as finding the Fekete points on a surface [34], certain electrostatics problems [17] and D-optimal design [35]. This equation system can be rewritten as an ordinary differential equation.

Consider the polynomial

and note that

Thus in each of the extreme points we will have the relation

for some \(\rho \in \mathbb {R}\). Since each \(\lambda _j\) is a root of \(f(\lambda )\) we see that the left hand side in the differential equation must be a polynomial with the same roots as \(f(\lambda )\), thus we can conclude that for any \(\lambda \in \mathbb {R}\)

where Q is a polynomial of degree \((\deg (p)-2)\).

Consider the \(\beta \) ensemble described by (34.17). For this ensemble the polynomial that defines the surface that the eigenvalues will be on is \(p(\lambda ) = \lambda ^2\). Thus by Lemma 34.7 the surface becomes a sphere with radius \(\sqrt{\text{ Tr }(\mathbf {W}^2)}\).

The solution to the equation system given by (34.25) on the unit sphere has been known for a long time, see [46] or [31, 35] for a more explicit description. The solution is given as the roots of a polynomial, in this case the solution can be written as the roots of the rescaled Hermite polynomials, the explicit expression for the polynomial whose roots give the maximum points is

where \(H_n\) denotes the nth (physicist) Hermite polynomial [2].

The solution on the unit sphere can then be used to find the vector of eigenvalues that maximizes the probability density function \(\mathbb {P}(\lambda )\) given by (34.17). Since rescaling the vector of eigenvalues affects the probability density depending on the length of the original vector in the following way

the unit sphere solution can be rescaled so that it ends up on the appropriate sphere.

For other polynomials that define the surface that the eigenvalues lie on similar techniques, for instance for some polynomials of the form \(P(\lambda ) = \lambda ^k\) where k is an even positive integer techniques like the ones demonstrated in [35] or [34] can be employed.

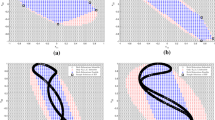

For a \(\beta \) ensemble the extreme points of \(\mathbb {P}\) share the properties of the extreme points of the Vandermonde determinant, for example all the extreme points will lie on the intersection of the sphere and the plane \(\displaystyle \sum _{k=1}^{n} \lambda _{k} = 0\). What this can look like for \(n=3\) is shown in Fig. 34.1 and for \(n=4\) in Fig. 34.2.

To visualize the location of the extreme points we use a technique described in detail in [31].

It can be shown that the extreme points of \(v_4(\mathbf {x})\) on the sphere all lie in the hyperplane \(x_1+x_2+x_3+x_4=0\). The intersection of this hyperplane with the unit sphere in \(\mathbb {R}^4\) can be described as a unit sphere in \(\mathbb {R}^3\), under a suitable basis, and can then be easily visualized.

This can be realized using the transformation

where \(\mathbf {x}\) is the coordinate vector in \(\mathbb {R}^4\) and \(\mathbf {t}\) is the corresponding coordinate vector in \(\mathbb {R}^3\). This will give a new sphere that can be parametrised using angles as normal.

Similar visualizations of the locations of extreme points on the unit sphere can be constructed up to \(n=7\), see [31] for further discussion.

Illustration of the expression given by (34.23) on the unit sphere in three dimensions, with parameters \(n = 3\), \(m = 2\), \(\beta = 2\) and \(C_n = 1\). Note that this expression is not correctly normalized and therefore not the exact value of the probability density distribution. On the right the value of the expression on the sphere is drawn and on the left the sphere has been parametrized in such a way that the point of the sphere given by \(\left( \frac{1}{\sqrt{3}},\frac{1}{\sqrt{3}},\frac{1}{\sqrt{3}}\right) \) corresponds to the point (0, 0)

Illustration of the expression given by (34.23) on the unit sphere in four dimensions, with parameters \(n = 4\), \(m = 2\), \(\beta = 2\) and \(C_n = 1\). Note that this expression is not correctly normalized and therefore not the exact value of the probability density distribution. In order to visualize the locations of the extreme points in four dimensions using only a two-dimensional surface the transformation given in (34.28) is used

34.6 Summary

In our study we establish that finding the extreme points for the probability distribution of the eigenvalues of a Wishart matrix can be done by finding the extreme points on a sphere with a radius related to the trace of the Wishart matrix. This close link between the Vandermonde determinant and the joint eigenvalue probability density function for \(\beta \)-ensembles helps to study more properties and applications of the probability density functions that occur in random matrices. As illustrated in Fig. 34.1, such results can be used to explain the distribution of charges over a unit sphere which agrees with Coloumb’s theory for electrostatic charge distribution. In this case the extreme points of the probability density function of the eigenvalues happen to be the zeros of deformed Hermite polynomials given by (34.27).

References

Aaronson, S., Arkhipov, A.: The computational complexity of linear optics. Theory. Comput. 9, 333–342 (2013)

Abramowitz, M., Stegun, I.: Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Dover, New York (1964)

Anderson, G.W., Guionnet, A., Zeitouni, O.: An Introduction to Random Matrices. Cambridge Studies in Advanced Mathematics, vol. 118. Cambridge University Press (2010)

Anderson, T.W.: An Introduction to Multivariate Statistical Analysis, 3rd edn. Wiley, Publication (2003)

Anderson, T.W., Girshick, M.A.: Some extensions of Wishart distribution. Ann. Math. Statist. 15(4), 345–357 (1944)

Bai, Z., Fang, Z., Liang, Y.C.: Spectral Theory of Large Dimensional Random Matrices and Its application to Wireless Communication and Finance: Random Matrix Theory and Its Applications. World Scientific Publishing Co., Pte., Ltd. (2014)

Bahcall, S.R.: Random Matrix Model for Superconductors in a Magnetic Field. Phys. Rev. Lett. 77(26), 5276–5279 (1996)

Bleher, P.M., Its, A.R. (eds.).: Random Matrix Models and Their Applications, MSRI Publications, vol. 40. Cambridge University Press (2001)

Bohigas, O., Giannoni, M.J., Schmit, S.: Characterization of chaotic quantum spectra and universality of level fluctuation laws. Phys. Rev. Lett. 52(1), 1–4 (1984)

Callaway, D.J.: Random matrices, fractional statistics, and the quantum Hall effect. Phys. Rev. B 43(10), 8641–8643 (1991)

Chow, G.P.: Analysis and Control of Dynamic Economic Systems. Wiley, New York (1976). ISBN 0-471-15616-7

Cotler, J., Hunter–Jones, N., Liu, J., Yoshida, B.: Chaos, Complexity and Random Matrices. J. High. Energy. Phys. 2017(48) (2017)

del Molino, L.C.G., Luis, C., Khashayar, P., Touboul, J., Wainrib, G.: Synchronization in random balanced networks. Phys. Rev. E. 88(4), 042824 (2013)

Derrida, B.: Random–energy model: limit of a family of disordered models. Phys. Rev. Lett. 45(2), 79 (1980)

Di Francesco, P.: 2D Quantum gravity, matrix models and graph combinatorics. In: Brezin E., Kazakov V., Serban D., Wiegmann P., Zabrodin A. (eds.). Applications of Random Matrices in Physics. NATO Science Series II: Mathematics, Physics and Chemistry, vol 221, 33–88, Springer, Dordrecht (2006)

Dumitriu, I., Edelman, A.: Matrix models for beta ensembles. J. Math. Phys. 43(11), 5830–5847 (2002)

Dimitrov, D.K., Shapiro, B.: Electrostatic problems with a rational constraint and degenerate Lamé equations. Potential Anal. 52, 645–659 (2020)

Edelman, A., Rao, N.R.: Random matrix theory. Acta. Numer. 14, 233–297 (2005)

Efron, B., Morris, C.N.: Stein’s paradox in statistics. Sci. Am. 236(5), 119–127 (1977)

Efron, B., Morris, C.N.: Multivariate empirical Bayes and estimation of covariance matrices. Ann. Stat. 4(1), 22–32 (1976)

Franchini, F., Kravtsov, V.E.: Horizon in random matrix theory, the Hawking radiation, and flow of cold atoms. Phys. Rev. Lett. 103(16), 166401 (2009)

Girko, V.L.: Theory of Random Determinants. Kluwer Academic Publishers (1990)

Harnad, J.: Random Matrices, Random Processes and Integral Systems. CRM–Series in Mathematical Physics, Springer Science and Business Media (2011)

James, A.T.: The distribution of latent roots of the covariance matrix. Ann. Math. Statist. 31(1), 151–158 (1960)

Janssen, M., Pracz, K.: Correlated random band matrices: localization-delocalization transitions. Phys. Rev. E. 62(6), 6278–6286 (2000)

Kanaka, R., Abbott, L.: Eigenvalue spectra of random matrices for neural networks. Phys. Rev. Lett. 97(18), 188104 (2006)

Keating J (1993) The Riemann zeta-function and quantum chaology. In: Quantum Chaos. School of Physics Enrico Fermi, vol. CXIX, 145–185. Elsevier

Kemal, S.M.: Universality in Random Matrix Models of Quantum Chromodynamics. Doctoral Dissertation, 91191 State University of New York (1999)

König, W.: Orthogonal polynomial ensembles in probability theory. Probab. Surv. 2, 385–447 (2005)

Lubinsky, D.S.: A survey of weighted polynomial approximation with exponential weights. Surv. Approx. Theory. 3, 1–105 (2007)

Lundengård, K., Österberg, J., Silvestrov, S.: Extreme points of the Vandermonde determinant on the sphere and some limits involving the generalized Vandermonde determinant, In: Silvestrov, S., Malyarenko, A., Rančić, M. (eds.), Algebraic Structures and Applications, Springer Proceedings in Mathematics and Statistics, vol. 317. Springer (2020). arXiv, eprint arXiv:1312.6193

Mehta, M.L.: Random Matrices and the Statistical Theory of Energy Levels. Academic Press, New York, London (1967)

Markowitz, H.: Portfolio selection. J. Financ. 7(1), 77–91 (1952)

Muhumuza, A.K., Lundengård, K., Österberg, J., Silvestrov, S., Mango, J.M., Kakuba, G.: The generalized Vandermonde interpolation polynomial based on divided differences. In: Skiadas, C. H. (ed.), Proceedings of the 5th Stochastic Modeling Techniques and Data Analysis International Conference with Demographics Workshop, Chania, Crete, Greece, 2018, ISAST: International Society for the Advancement of Science and Technology, 443–456 (2018)

Muhumuza, A.K., Lundengård, K., Österberg, J., Silvestrov, S., Mango, J.M., Kakuba, G.: Extreme points of the Vandermonde determinant on surfaces implicitly determined by a univariate polynomial, In: Silvestrov, S., Malyarenko, A., Rančić, M. (eds.), Algebraic Structures and Applications, Springer Proceedings in Mathematics and Statistics, vol. 317. Springer (2020)

Muir, D., Mrsic-Flogel, T.: Eigenspectrum bounds for semirandom matrices with modular and spatial structure for neural networks. Phys. Rev. E 91(4), 042808 (2015)

Muirhead, R.J.: Aspect of Multivariate Statistical Theory, vol. 197. Wiley (1982)

Novak, J.I.: Topics in Combinatorics and Random Matrix Theory. Kingston, Ontario, Canada (2009)

Parlett, B.N.: The Symmetric Eigenvalue Problem, vol. 20. The Society for Industrial and Applied Mathematics (SIAM) (1998)

Pearson, K.: On the criterion that a certain given system of deviations from the probable in the case of correlated system of variables is such that it can reasonably supposed to have arisen from random sampling. Pil. Mg. 50(302), 157–175 (1900)

Russel, N., Chakhmakhchyan, l., O’Brien, J., Laing, A.: Direct dialling of Haar random unitary matrices. New J. Phys. 9(3), 033007 (2017)

Rychkov, V.S., Borlenghi, S., Jaffres, H., Fert, A., Waintal, X.: Spin torque and waviness in magnetic multilayers: a bridge between Valet-Fert theory and quantum approaches. Phys. Rev. Lett. 103(6), 066602 (2009)

Sánchez, D., Büttiker, M.: Magnetic-field asymmetry of nonlinear mesoscopic transport. Phys. Rev. Lett. 93(10), 106802 (2004)

Sompolinsky, H., Crisanti, A., Sommers, H.: Chaos in random neural networks. Phys. Rev. Lett. 61(3), 259–262 (1988)

Stein, C.: Inadmissibility of the Usual Estimator for the Mean of a Multivariate Normal Distribution. Stanford University Stanford, United States (1956)

Szegő, G.: Orthogonal Polynomials. American Mathematics Society (1939)

Timme, M., Wolf, F., Geisel, T.: Topological speed limits to network synchronization. Phys. Rev. Lett. 92(7), 074101 (2004)

Tropp, J.: User-friendly tail bounds for sums of random matrices. Found. Comput. Math. 12, 389–434 (2011)

Turnovsky, S.: The stability properties of optimal economic policies. Rev. Econ. Stud. 64(1), 136–148 (1974)

Turnovsky, S.: Optimal stabilization policies for stochastic linear systems: the case of correlated multiplicative and additive disturbances. Rev. Econ. Stud. 43(1), 191–194 (1976)

Van der Vaart, A.W.: Asymptotic Statistics, vol. 3. Cambridge University Press (2000)

Verbaarschot, J.J., Wettig, T.: Random matrix theory and chiral symmetry in QCD. Annu. Rev. Nucl. Part. Sci. 50(1), 343–410 (2000)

Wainrib, G., Touboul, J.: Topological and dynamical complexity of random neural networks. Phys. Rev. Lett. 110(11), 118101 (2013)

Wigner, E.P.: Random Matrices in Physics. SIAM Review 9(1), 1–23 (1967)

Wigner, E.P.: Characteristic vectors of bordered matrices with infinite dimension. Ann. Math. 62(3), 524–540 (1955)

Wishart, J.: The generalised product moment distribution in samples from a normal multivariate population. Biometrika 20A(1/2), 32–52 (1928)

Zanon, N., Pichard, J.-L.: Random matrix theory and universal statistics for disordered quantum conductors with spin-dependent hopping. J. Phys. 49(6), 907–920 (1988)

Ziegler, K.: Random matrix approach to light scattering on complex particles. In: The Fifth International Kharkov Symposium on Physics and Engineering of Microwaves, Millimeter, and Submillimeter Waves (IEEE Cat. No. 04EX828), Ukraine, 21–26 June 2004, vol. 1, 208–210 (2004)

Zumbühl, D.M., Miller, J.B., Marcus, C.M., Campman, K., Gossard, A.C.: Spin–orbit coupling, antilocalization, and parallel magnetic fields in quantum dots. Phys. Rev. Lett. 89(27), 276803 (2002)

Acknowledgements

We acknowledge the financial support for this research by the Swedish International Development Agency, (Sida), Grant No.316, International Science Program, (ISP) in Mathematical Sciences, (IPMS). We are also grateful to the Division of Applied Mathematics, Mälardalen University for providing an excellent and inspiring environment for research education and research.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Muhumuza, A.K., Lundengård, K., Österberg, J., Silvestrov, S., Mango, J.M., Kakuba, G. (2020). Optimization of the Wishart Joint Eigenvalue Probability Density Distribution Based on the Vandermonde Determinant. In: Silvestrov, S., Malyarenko, A., Rančić, M. (eds) Algebraic Structures and Applications. SPAS 2017. Springer Proceedings in Mathematics & Statistics, vol 317. Springer, Cham. https://doi.org/10.1007/978-3-030-41850-2_34

Download citation

DOI: https://doi.org/10.1007/978-3-030-41850-2_34

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-41849-6

Online ISBN: 978-3-030-41850-2

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)