Abstract

The values of the determinant of Vandermonde matrices with real elements are analyzed both visually and analytically over the unit sphere in various dimensions. For three dimensions, some generalized Vandermonde matrices are also analyzed visually. The extreme points of the ordinary Vandermonde determinant on finite-dimensional unit spheres are given as the roots of rescaled Hermite polynomials, and a recursion relation is provided for the polynomial coefficients. Analytical expressions for these roots are also given for dimensions three to seven. A transformation of the optimization problem is provided, and some relations involving limits between the ordinary and generalized Vandermonde matrices are discussed.


32.1 Introduction

Extreme points and values of the Vandermonde determinant and its generalizations constrained to surfaces have been considered in previous work by the authors. That work also covers applications to approximation and interpolation of functions and data, curve fitting, electromagnetism, lightning modelling, electromagnetic compatibility, probability theory and financial engineering [1,2,3,4,5]. Algebraic varieties defined by Vandermonde determinant functions also have interesting algebraic and geometric properties and structure from the point of view of algebraic geometry and commutative algebra [6,7,8].

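The ordinary Vandermonde matrices are defined as

\[ V_{mn}(\mathbf{x}_n) = \left[x_j^{i-1}\right]_{mn} = \begin{bmatrix} 1 & 1 & \cdots & 1\\ x_1 & x_2 & \cdots & x_n\\ \vdots & \vdots & \ddots & \vdots\\ x_1^{m-1} & x_2^{m-1} & \cdots & x_n^{m-1} \end{bmatrix}. \]

Note that some authors use the transpose as the definition, and some also let the indices run from 0. All entries in the first row of a Vandermonde matrix are ones. With the convention \(0^0=1\) this holds even when some \(x_j\) is zero.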

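In this article the following notation will sometimes be used. For an index set \(I=\{i_1,i_2,\ldots,i_k\}\) we write

\[ \mathbf{x}_I = (x_{i_1},x_{i_2},\ldots,x_{i_k}). \]

For the sake of convenience we will use \(\mathbf {x}_n\) to mean \(\mathbf {x}_{I_n}\) where \(I_n = \{ 1, 2, \ldots , n \}\).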

We have the following well known theorem.

Theorem 32.1

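The determinant of (square) Vandermonde matrices is given by

\[ v_n(\mathbf{x}_n) \equiv \det V_n(\mathbf{x}_n) = \prod_{1\le i<j\le n}(x_j-x_i), \]

where \(V_n \equiv V_{nn}\).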

This determinant is also simply referred to as the Vandermonde determinant.

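The Vandermonde determinant is a special case of the generalized Vandermonde determinant. A generalized Vandermonde matrix is determined by two vectors \(\mathbf {x}_n=(x_1,\ldots ,x_n)\in K^n\) and \(\mathbf {a}_m=(a_1,\ldots ,a_m)\in K^m\), where K is usually the real or complex field. It is defined as

\[ G_{mn}(\mathbf{x}_n,\mathbf{a}_m) = \left[x_j^{a_i}\right]_{mn} = \begin{bmatrix} x_1^{a_1} & x_2^{a_1} & \cdots & x_n^{a_1}\\ \vdots & \vdots & \ddots & \vdots\\ x_1^{a_m} & x_2^{a_m} & \cdots & x_n^{a_m} \end{bmatrix}, \tag{32.1} \]

so that \(V_{mn}(\mathbf{x}_n)=G_{mn}(\mathbf{x}_n,(0,1,\ldots,m-1))\).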

For square matrices only one index is given, \(G_n \equiv G_{nn}\).
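The definitions above can be checked numerically. The following Python sketch is our own illustration, not code from the paper. The helper names `det`, `vandermonde`, `gen_vandermonde` and `v_product` are hypothetical. The sketch builds \(V_{mn}\) and \(G_{mn}\) with rows indexed by the exponents, as in the definitions, and compares \(\det V_n(\mathbf{x}_n)\) with the product formula of Theorem 32.1.

```python
from itertools import combinations, permutations
from math import isclose, prod


def det(m):
    """Determinant via the Leibniz expansion (fine for the small n used here)."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        inversions = sum(perm[i] > perm[j] for i, j in combinations(range(n), 2))
        term = prod(m[i][perm[i]] for i in range(n))
        total += -term if inversions % 2 else term
    return total


def vandermonde(x, m=None):
    """V_mn(x): row i (0-based) holds x_1**i, ..., x_n**i, so the first row is all ones."""
    m = len(x) if m is None else m
    return [[xj ** i for xj in x] for i in range(m)]


def gen_vandermonde(x, a):
    """G_mn(x, a): row i holds x_1**a_i, ..., x_n**a_i."""
    return [[xj ** ai for xj in x] for ai in a]


def v_product(x):
    """Product formula of Theorem 32.1: prod over i < j of (x_j - x_i)."""
    return prod(x[j] - x[i] for i, j in combinations(range(len(x)), 2))


x = [0.3, -1.2, 0.7, 2.0]
print(det(vandermonde(x)), v_product(x), isclose(det(vandermonde(x)), v_product(x)))
```

The Leibniz expansion costs on the order of \(n!\,n^2\) operations. It is only meant for the small n (at most 7) considered in this paper, and it works unchanged for complex entries.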

Note that the term generalized Vandermonde matrix has been used for several kinds of matrices that are neither equivalent to nor special cases of (32.1), see [9] for instance.

The determinant of generalized Vandermonde matrices,

\[ g_n(\mathbf{x}_n,\mathbf{a}_n) = \det G_n(\mathbf{x}_n,\mathbf{a}_n), \]

and its connections to difference equations, symmetric polynomials and representation theory have been considered, for example, in [10, 11].

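Vandermonde matrices can be used in polynomial interpolation. Let \(c_0+c_1x+\cdots +c_{n-1}x^{n-1}\) be the unique polynomial that passes through n points \((x_i,y_i)\in \mathbb {C}^2\) with distinct \(x_i\). Its coefficients are given by

\[ \begin{bmatrix} c_0 & c_1 & \cdots & c_{n-1} \end{bmatrix} = \begin{bmatrix} y_1 & y_2 & \cdots & y_n \end{bmatrix} V_n(\mathbf{x}_n)^{-1}. \]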

In this context the \(x_i\) are called nodes. There are also many applications of Vandermonde and generalized Vandermonde determinants, for example in differential equations [9], difference equations and representation theory [10], and time series analysis [12].
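Here is a minimal sketch of the interpolation formula. It assumes real nodes and values, whereas the text allows complex ones. Rather than forming \(V_n(\mathbf{x}_n)^{-1}\) explicitly, it solves the equivalent linear system \(V_n(\mathbf{x}_n)^T\mathbf{c}=\mathbf{y}\) by Gaussian elimination. The names `solve` and `interpolation_coefficients` are hypothetical helpers.

```python
from math import isclose


def solve(a, b):
    """Solve a c = b by Gaussian elimination with partial pivoting (real entries)."""
    n = len(a)
    m = [list(map(float, row)) + [float(bi)] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    c = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = m[r][n] - sum(m[r][k] * c[k] for k in range(r + 1, n))
        c[r] = s / m[r][r]
    return c


def interpolation_coefficients(xs, ys):
    """Coefficients c_0..c_{n-1} with sum_i c_i * x_j**i = y_j at every node x_j.

    The system matrix is V_n(x)^T, whose row j is (1, x_j, x_j**2, ...);
    it is invertible exactly when the nodes are distinct.
    """
    n = len(xs)
    vt = [[xj ** i for i in range(n)] for xj in xs]
    return solve(vt, ys)


xs, ys = [-1.0, 0.0, 2.0], [2.0, 1.0, 5.0]
c = interpolation_coefficients(xs, ys)
print(c)  # approximately [1.0, 0.0, 1.0], i.e. p(x) = 1 + x**2
print(all(isclose(sum(ci * xj ** i for i, ci in enumerate(c)), yj) for xj, yj in zip(xs, ys)))
```

Solving the system is usually preferable to computing the inverse. Even so, Vandermonde systems become ill-conditioned quickly as n grows.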

In Sect. 32.1.1 we introduce the reader to the behavior of the Vandermonde determinant \(v_3(\mathbf {x}_3)=(x_3-x_2)(x_3-x_1)(x_2-x_1)\) by some introductory visualizations. We also consider \(g_3(\mathbf {x}_3,\mathbf {a}_3)\) for some choices of exponents \(\mathbf {a}_3\).

In Sect. 32.2 we optimize the Vandermonde determinant \(v_n\) over the unit sphere \(S^{n-1}\) in \(\mathbb {R}^n\) finding a rescaled Hermite polynomial whose roots give the extreme points. In Sect. 32.2.2 we arrive at the results for the special case \(v_3\) in a slightly different way. In Sect. 32.2.3 we present a transformation of the optimization problem into an equivalent form with known optimum value. In Sect. 32.2.4 we extend the range of visualizations to \(v_4,\ldots ,v_7\) by exploiting some analytical results. In Sect. 32.3 we prove some limits involving the generalized Vandermonde matrix and determinant.

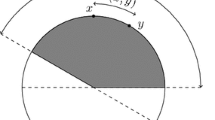

32.1.1 Visual Exploration in 3D

In this section we plot the values of the determinant

\[ v_3(\mathbf{x}_3) = (x_3-x_2)(x_3-x_1)(x_2-x_1), \]

together with the generalized Vandermonde determinant \(g_3(\mathbf {x}_3,\mathbf {a}_3)\) for three different choices of \(\mathbf {a}_3\). All plots are over the unit sphere \(x_1^2 + x_2^2 + x_3^2=1\) in \(\mathbb {R}^3\), but the determinant exhibits the same general behavior over centered spheres of any radius. This follows directly from (32.1) and the fact that each term of the determinant contains exactly one element from each row. For any scalar c we get

\[ g_n(c\,\mathbf{x}_n,\mathbf{a}_n) = c^{\sum_{i=1}^n a_i}\,g_n(\mathbf{x}_n,\mathbf{a}_n), \]

which for \(v_n\) becomes

\[ v_n(c\,\mathbf{x}_n) = c^{\frac{n(n-1)}{2}}\,v_n(\mathbf{x}_n), \]

and so the values over different radii differ only by a constant factor.
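The scaling property can be seen term by term in the Leibniz expansion. Every term picks exactly one entry from each row, and row i contributes the factor \(c^{a_i}\). Below is a small Python check. The helper names are hypothetical, and the check assumes real entries and integer exponents, so that negative coordinates pose no branch issues.

```python
from itertools import combinations, permutations
from math import isclose, prod


def det(m):
    """Determinant via the Leibniz expansion: one entry from each row per term."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        inversions = sum(perm[i] > perm[j] for i, j in combinations(range(n), 2))
        term = prod(m[i][perm[i]] for i in range(n))
        total += -term if inversions % 2 else term
    return total


def g(x, a):
    """Generalized Vandermonde determinant g_n(x, a) = det[x_j ** a_i]."""
    return det([[xj ** ai for xj in x] for ai in a])


x = [0.4, -0.9, 1.3]
c = 1.7
for a in ([0, 1, 2], [0, 2, 3], [1, 2, 3]):
    scaled = g([c * xj for xj in x], a)
    predicted = c ** sum(a) * g(x, a)  # c**(a_1 + ... + a_n) times g_n(x, a)
    print(a, scaled, predicted, isclose(scaled, predicted))
```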

In Fig. 32.1 the value of \(v_3(\mathbf {x}_3)\) has been plotted over the unit sphere, and the curves where the determinant vanishes are traced as black lines. The coordinates in Fig. 32.1b are related to \(\mathbf {x}_3\) by

where the columns in the product of the two matrices are the basis vectors in \(\mathbb {R}^3\). The unit sphere in \(\mathbb {R}^3\) can also be described using spherical coordinates. In Fig. 32.1c the following parametrization was used.

We will use this \(\mathbf {t}\)-basis and spherical parametrization throughout this section.

From the plots in Fig. 32.1 it can be seen that \(v_3\) appears to have \(6=3!\) extreme points over the unit sphere. It can also be seen that all extreme points appear to lie in the plane through the origin that is orthogonal to an apparent symmetry axis in the direction (1, 1, 1), the direction of \(t_3\). We will see later that the extreme points of \(v_n\) indeed lie in the hyperplane \(\sum _{i=1}^n x_i=0\) for all n (Theorem 32.3), and that \(v_n\) has exactly n! extreme points (Remark 32.1).

The black lines where \(v_3(\mathbf {x}_3)\) vanishes are actually the intersections between the sphere and the three planes \(x_3-x_1=0\), \(x_3-x_2=0\) and \(x_2-x_1=0\), as these differences appear as factors in \(v_3(\mathbf {x}_3)\).

We will see later on that the extreme points are the six points obtained by permuting the coordinates of

\[ \mathbf{x} = \frac{1}{\sqrt{2}}(-1,0,1). \]

For reasons that will become clear in Sect. 32.2.1, it is also useful to think of these coordinates as the roots of the polynomial

\[ P_3(x) = x^3 - \frac{1}{2}x. \]

So far we have only considered the behavior of \(v_3(\mathbf {x}_3)\), that is \(g_3(\mathbf {x}_3,\mathbf {a}_3)\) with \(\mathbf {a}_3=(0,1,2)\). We now consider three generalized Vandermonde determinants, namely \(g_3\) with \(\mathbf {a}_3=(0,1,3)\), \(\mathbf {a}_3=(0,2,3)\) and \(\mathbf {a}_3=(1,2,3)\). These three determinants show increasingly more structure and they all have a neat formula in terms of \(v_3\) and the elementary symmetric polynomials

\[ e_k(x_1,\ldots,x_n) = \sum_{1\le i_1<i_2<\cdots<i_k\le n} x_{i_1}x_{i_2}\cdots x_{i_k}, \]

where we will simply use \(e_k\) whenever n is clear from the context.

In Fig. 32.2 we see the determinant

\[ g_3(\mathbf{x}_3,(0,1,3)) = v_3(\mathbf{x}_3)\,e_1 \]

plotted over the unit sphere. The expression \(v_3(\mathbf {x}_3)e_{1}\) is easy to derive. The factor \(v_3(\mathbf {x}_3)\) is present because the determinant must vanish whenever any two columns are equal, which is exactly what the Vandermonde determinant expresses. The factor \(e_1\) then follows by a simple polynomial division. The plots show an extra black circle where the determinant vanishes, compared to Fig. 32.1. This circle lies in the plane \(e_1=x_1+x_2+x_3=0\), where we previously found the extreme points of \(v_3(\mathbf {x}_3)\). It therefore doubles the number of extreme points to \(2\cdot 3!\).

A similar treatment can be made of the remaining two generalized determinants that we are interested in, plotted in Figs. 32.3 and 32.4.

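The four determinants treated so far are collected in Table 32.1:

- \(g_3(\mathbf{x}_3,(0,1,2))=v_3\)
- \(g_3(\mathbf{x}_3,(0,1,3))=v_3e_1\)
- \(g_3(\mathbf{x}_3,(0,2,3))=v_3e_2\)
- \(g_3(\mathbf{x}_3,(1,2,3))=v_3e_3\)

Derivation of these determinants is straightforward. We note that all but one of them vanish on a set of planes through the origin. For \(\mathbf {a}=(0,2,3)\) we have the usual Vandermonde planes, but the intersection of \(e_2=0\) with the unit sphere consists of two circles, since on the sphere

\[ e_2 = x_1x_2+x_1x_3+x_2x_3 = \frac{(x_1+x_2+x_3)^2-(x_1^2+x_2^2+x_3^2)}{2} = \frac{(x_1+x_2+x_3)^2-1}{2}. \]

Hence \(g_3(\mathbf {x}_3,(0,2,3))\) vanishes on the sphere along two circles, lying in the planes \(x_1+x_2+x_3 + 1=0\) and \(x_1+x_2+x_3 - 1=0\). These can be seen in Fig. 32.3 as the two black circles perpendicular to the direction (1, 1, 1).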
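The identities collected in Table 32.1, and the expression for \(e_2\) on the sphere, can be spot-checked at a point of the unit sphere. Here is a Python sketch with hypothetical helper names.

```python
from itertools import combinations, permutations
from math import isclose, prod, sqrt


def det(m):
    """Determinant via the Leibniz expansion."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        inversions = sum(perm[i] > perm[j] for i, j in combinations(range(n), 2))
        term = prod(m[i][perm[i]] for i in range(n))
        total += -term if inversions % 2 else term
    return total


def g(x, a):
    """Generalized Vandermonde determinant g_n(x, a) = det[x_j ** a_i]."""
    return det([[xj ** ai for xj in x] for ai in a])


def e(k, x):
    """Elementary symmetric polynomial e_k(x_1, ..., x_n)."""
    return sum(prod(c) for c in combinations(x, k))


raw = [0.2, -0.5, 0.9]
norm = sqrt(sum(t * t for t in raw))
x = [t / norm for t in raw]            # a point on the unit sphere
v3 = g(x, [0, 1, 2])
for a, k in (([0, 1, 3], 1), ([0, 2, 3], 2), ([1, 2, 3], 3)):
    print(a, g(x, a), v3 * e(k, x))    # g_3(x, a) = v_3(x) e_k(x)
print(e(2, x), (sum(x) ** 2 - 1) / 2, isclose(e(2, x), (sum(x) ** 2 - 1) / 2))
```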

By symmetry, \(v_3\) and \(g_3(\mathbf {x}_3,(0,1,3))\) take the same absolute value at all of their respective local extreme points. In contrast, \(g_3(\mathbf {x}_3,(0,2,3))\) and \(g_3(\mathbf {x}_3,(1,2,3))\) each take different absolute values at some of their extreme points, as can be seen in Figs. 32.2, 32.3 and 32.4.

32.2 Optimizing the Vandermonde Determinant over the Unit Sphere

In this section we consider the extreme points of the Vandermonde determinant on the unit sphere \(S^{n-1}\) in \(\mathbb {R}^n\). We want both to find an analytical solution and to identify some properties of the determinant that can help us visualize it in a region around the extreme points in dimensions \(n > 3\).

32.2.1 The Extreme Points Given by Roots of a Polynomial

The extreme points of the Vandermonde determinant on the unit sphere in \(\mathbb {R}^n\) are known and given by Theorem 32.4 where we present a special case of Theorem 6.7.3 in ‘Orthogonal polynomials’ by Gábor Szegő [13]. We will also provide a proof that is more explicit than the one in [13] and that exposes more of the rich symmetric properties of the Vandermonde determinant. For the sake of convenience some properties related to the extreme points of the Vandermonde determinant defined by real vectors \(\mathbf {x}_n\) will be presented before Theorem 32.4.

Theorem 32.2

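For any \(1 \le k \le n\)

\[ \frac{\partial v_n}{\partial x_k} = \sum_{i=1,\, i\ne k}^{n}\frac{v_n(\mathbf{x}_n)}{x_k-x_i}. \tag{32.5} \]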

This theorem will be proven after the introduction of the following useful lemma:

Lemma 32.1

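For any \(1 \le k \le n-1\)

\[ \frac{\partial v_n}{\partial x_k} = \frac{v_n(\mathbf{x}_n)}{v_{n-1}(\mathbf{x}_{n-1})}\frac{\partial v_{n-1}}{\partial x_k} + \frac{v_n(\mathbf{x}_n)}{x_k-x_n} \tag{32.6} \]

and

\[ \frac{\partial v_n}{\partial x_n} = \sum_{i=1}^{n-1}\frac{v_n(\mathbf{x}_n)}{x_n-x_i}. \tag{32.7} \]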

Proof

Note that the determinant can be described recursively:

\[ v_n(\mathbf{x}_n) = v_{n-1}(\mathbf{x}_{n-1})\prod_{i=1}^{n-1}(x_n-x_i). \tag{32.8} \]

Formula (32.6) follows immediately from applying the product rule to (32.8). Formula (32.7) follows from (32.8), the product rule and the fact that \(v_{n-1}(\mathbf {x}_{n-1})\) is independent of \(x_n\).

Proof

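(Proof of Theorem 32.2) By Lemma 32.1, the case \(k = n\) of formula (32.5) follows immediately from (32.7). The case \(1 \le k < n\) will be proved by induction. Using (32.6) gives

\[ \frac{\partial v_n}{\partial x_k} = \frac{v_n(\mathbf{x}_n)}{v_{n-1}(\mathbf{x}_{n-1})}\frac{\partial v_{n-1}}{\partial x_k} + \frac{v_n(\mathbf{x}_n)}{x_k-x_n}. \]

Supposing that formula (32.5) is true for \(n-1\) results in

\[ \frac{\partial v_n}{\partial x_k} = \frac{v_n(\mathbf{x}_n)}{v_{n-1}(\mathbf{x}_{n-1})}\sum_{i=1,\, i\ne k}^{n-1}\frac{v_{n-1}(\mathbf{x}_{n-1})}{x_k-x_i} + \frac{v_n(\mathbf{x}_n)}{x_k-x_n} = \sum_{i=1,\, i\ne k}^{n}\frac{v_n(\mathbf{x}_n)}{x_k-x_i}. \]

Showing that (32.5) is true for \(n=2\) completes the proof. With \(v_2(\mathbf{x}_2)=x_2-x_1\) we have \(\frac{\partial v_2}{\partial x_1}=-1=\frac{v_2(\mathbf{x}_2)}{x_1-x_2}\) and \(\frac{\partial v_2}{\partial x_2}=1=\frac{v_2(\mathbf{x}_2)}{x_2-x_1}\).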
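Formula (32.5) can be compared against a central finite difference of the product formula. Below is a minimal Python sketch. The helper names are hypothetical, and the step size h is an arbitrary choice.

```python
from itertools import combinations
from math import isclose, prod


def v(x):
    """v_n(x) from the product formula of Theorem 32.1."""
    return prod(x[j] - x[i] for i, j in combinations(range(len(x)), 2))


def dv_formula(x, k):
    """Right-hand side of (32.5): sum over i != k of v_n(x) / (x_k - x_i)."""
    vx = v(x)
    return sum(vx / (x[k] - x[i]) for i in range(len(x)) if i != k)


def dv_numeric(x, k, h=1e-6):
    """Central finite-difference approximation of d v_n / d x_k."""
    xp, xm = list(x), list(x)
    xp[k] += h
    xm[k] -= h
    return (v(xp) - v(xm)) / (2 * h)


x = [0.1, -0.7, 0.55, 1.2, -0.3]
for k in range(len(x)):
    print(k, dv_formula(x, k), dv_numeric(x, k), isclose(dv_formula(x, k), dv_numeric(x, k), rel_tol=1e-5))
```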

Theorem 32.3

The extreme points of \(v_n(\mathbf {x}_n)\) on the unit sphere can all be found in the hyperplane defined by

\[ \sum_{i=1}^n x_i = 0. \tag{32.9} \]

This theorem will be proved after the introduction of the following useful lemma:

Lemma 32.2

For any \(n \ge 2\) the sum of the partial derivatives of \(v_n(\mathbf {x}_n)\) is zero:

\[ \sum_{k=1}^n\frac{\partial v_n}{\partial x_k} = 0. \tag{32.10} \]

Proof

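This lemma is easily proven using Lemma 32.1 and induction:

\[ \sum_{k=1}^n\frac{\partial v_n}{\partial x_k} = \frac{v_n(\mathbf{x}_n)}{v_{n-1}(\mathbf{x}_{n-1})}\sum_{k=1}^{n-1}\frac{\partial v_{n-1}}{\partial x_k} + \sum_{k=1}^{n-1}\frac{v_n(\mathbf{x}_n)}{x_k-x_n} + \sum_{i=1}^{n-1}\frac{v_n(\mathbf{x}_n)}{x_n-x_i} = \frac{v_n(\mathbf{x}_n)}{v_{n-1}(\mathbf{x}_{n-1})}\sum_{k=1}^{n-1}\frac{\partial v_{n-1}}{\partial x_k}. \]

Thus if (32.10) is true for \(n-1\), then it is also true for n. Showing that the equation holds for \(n=2\) is very simple:

\[ \frac{\partial v_2}{\partial x_1} + \frac{\partial v_2}{\partial x_2} = -1 + 1 = 0. \]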
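Lemma 32.2 is the infinitesimal form of a translation invariance. Because \(v_n\) depends only on the differences \(x_j-x_i\), we have \(v_n(\mathbf{x}_n+s(1,\ldots,1))=v_n(\mathbf{x}_n)\) for every s. Its derivative along \((1,\ldots,1)\), which is the sum of the partial derivatives, therefore vanishes. The following Python sketch, with hypothetical helper names, checks both statements numerically.

```python
from itertools import combinations
from math import isclose, prod


def v(x):
    return prod(x[j] - x[i] for i, j in combinations(range(len(x)), 2))


def gradient(x):
    """Gradient of v_n from (32.5)."""
    vx = v(x)
    return [sum(vx / (x[k] - x[i]) for i in range(len(x)) if i != k) for k in range(len(x))]


x = [0.1, -0.7, 0.55, 1.2, -0.3]
shifted = [t + 0.37 for t in x]       # move x along the direction (1, ..., 1)
print(sum(gradient(x)))               # zero up to rounding (Lemma 32.2)
print(v(x), v(shifted), isclose(v(x), v(shifted)))
```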

Proof

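(Proof of Theorem 32.3) By the method of Lagrange multipliers, any \(\mathbf {x}_n\) on the unit sphere that is an extreme point of the Vandermonde determinant is also a stationary point of the Lagrange function

\[ \Lambda(\mathbf{x}_n,\lambda) = v_n(\mathbf{x}_n) - \lambda\left(\sum_{k=1}^n x_k^2 - 1\right) \]

for some \(\lambda \). Explicitly this requirement becomes

\[ \frac{\partial v_n}{\partial x_k} - 2\lambda x_k = 0, \quad k=1,\ldots,n, \tag{32.11} \]

\[ \sum_{k=1}^n x_k^2 - 1 = 0. \tag{32.12} \]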

Equation (32.12) corresponds to the restriction to the unit sphere and is therefore immediately fulfilled. Since all the partial derivatives of the Lagrange function vanish, their sum vanishes as well. Combining this with Lemma 32.2 gives

\[ \lambda\sum_{k=1}^n x_k = 0. \tag{32.13} \]

There are two ways to fulfill condition (32.13): either \(\lambda = 0\) or \(\sum _{k=1}^n x_k= 0\). When \(\lambda = 0\), equations (32.11) reduce to

\[ \frac{\partial v_n}{\partial x_k} = 0, \quad k=1,\ldots,n. \]

This can only be true if \(v_n(\mathbf {x}_n)=0\), which is of no interest to us. To see why, suppose \(v_n(\mathbf {x}_n)\ne 0\). Then all coordinates are distinct, and by (32.5) we would need \(\sum_{i\ne k}\frac{1}{x_k-x_i}=0\) for every k. This fails for the index k of the largest coordinate, since there every term is positive. Hence all extreme points must lie in the hyperplane \(\sum _{k=1}^n x_k= 0\).

Theorem 32.4

A point on the unit sphere in \(\mathbb {R}^n\), \(\mathbf {x}_n = (x_1, x_2, \ldots , x_n)\), is an extreme point of the Vandermonde determinant if and only if all \(x_i\), \(i \in \{ 1,2,\ldots ,n \}\), are distinct roots of the rescaled Hermite polynomial

\[ P_n(x) = \left(2n(n-1)\right)^{-\frac{n}{2}}\,H_n\left(\sqrt{\frac{n(n-1)}{2}}\,x\right). \tag{32.14} \]

Remark 32.1

Note that if \(\mathbf {x}_n = (x_1, x_2, \ldots , x_n)\) is an extreme point of the Vandermonde determinant, then any other point whose coordinates are a permutation of the coordinates of \(\mathbf {x}_n\) is also an extreme point. This follows because the determinant is, by definition, alternating with respect to the columns of the matrix, and the \(x_i\) define the columns of the Vandermonde matrix. Thus any permutation of the \(x_i\) gives the same value of \(\left| v_n(\mathbf {x}_n)\right| \). Since there are n! permutations, there are at least n! extreme points. The roots of the polynomial (32.14) define the set of \(x_i\) fully, and thus there are exactly n! extreme points. At n!/2 of them \(v_n\) is positive, and at the other n!/2 it is negative.

Remark 32.2

All terms in \(P_n(x)\) are of even order if n is even and of odd order when n is odd. This means that the roots of \(P_n(x)\) will be symmetrical in the sense that if \(x_i\) is a root then \(-x_i\) is also a root.

Proof

(Proof of Theorem 32.4) By the method of Lagrange multipliers condition (32.11) must be fulfilled for any extreme point. If \(\mathbf {x}_n\) is a fixed extreme point so that

then (32.11) can be written explicitly, using (32.5), as

or alternatively by introducing a new multiplier \(\rho \) as

By forming the polynomial \(f(x) = (x-x_1)(x-x_2)\cdots (x-x_n)\) and noting that

we can rewrite (32.15) as

or

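The last equation holds for every k. Replacing \(x_k\) by x in its left-hand side therefore gives a polynomial of degree at most n that vanishes at all n distinct roots of f(x). It must then be a constant multiple of f(x), so we must have

for some constant c. To find c, the \(x^n\) terms on the left- and right-hand sides of (32.16) are compared: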

Thus the following differential equation for f(x) must be satisfied

Choosing \(x = az\) gives

By setting \(g(z) = f(az)\) and choosing \(a = \sqrt{\frac{n}{\rho }}\), we obtain a differential equation that matches the definition of the Hermite polynomials:

\[ g''(z) - 2z\,g'(z) + 2n\,g(z) = 0. \tag{32.18} \]

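Since g is a polynomial, the solutions of (32.18) are \(g(z) = b H_n(z)\), where b is a constant. In particular the roots of f are \(x_i=a z_i\), where the \(z_i\) are the roots of \(H_n\). An exact expression for the constant a can be found using Lemma 32.3, which for convenience is stated and proved after this theorem. We get

\[ 1 = \sum_{i=1}^n x_i^2 = a^2\sum_{i=1}^n z_i^2 = a^2\,\frac{n(n-1)}{2}, \]

and so

\[ a = \sqrt{\frac{2}{n(n-1)}}. \]

Thus condition (32.11) is fulfilled when the \(x_i\) are the roots of

\[ P(x) = b\,H_n\left(\frac{x}{a}\right) = b\,H_n\left(\sqrt{\frac{n(n-1)}{2}}\,x\right). \]

Choosing \(b = \left( 2n(n-1)\right) ^{-\frac{n}{2}}\) gives \(P_n(x)\) with leading coefficient 1. This can be confirmed by calculating the leading coefficient of P(x) using the explicit expression for the Hermite polynomials (32.20). This completes the proof.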
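Here is a numerical check of Theorem 32.4 as a minimal Python sketch. As in the proof, it assumes that the extreme point is obtained from the zeros \(z_i\) of \(H_n\) by the rescaling \(x_i = z_i/\sqrt{n(n-1)/2}\). It checks the stationarity condition in the form \(\sum_{i\ne k} 1/(x_k-x_i) = \frac{n(n-1)}{2}x_k\). That form is our own restatement:

- Dividing (32.11) by \(v_n\) via (32.5) makes the left side proportional to \(x_k\).
- Multiplying by \(x_k\) and summing over k turns the left side into the number of pairs, \(n(n-1)/2\).
- On the unit sphere this fixes the constant at \(n(n-1)/2\).

The helper names are hypothetical. The roots are found by simple bracketing and bisection, not by any method from the paper.

```python
from math import isclose, sqrt


def hermite(n, y):
    """Physicists' Hermite polynomial H_n(y) via H_{k+1} = 2y H_k - 2k H_{k-1}."""
    if n == 0:
        return 1.0
    h_prev, h = 1.0, 2.0 * y
    for k in range(1, n):
        h_prev, h = h, 2.0 * y * h - 2.0 * k * h_prev
    return h


def extreme_point(n, steps=20001):
    """Coordinates x_i = z_i / sqrt(n(n-1)/2), with z_i the zeros of H_n.

    By Lemma 32.3 the x_i satisfy sum x_i**2 = 1, so every |x_i| < 1 and it
    suffices to scan (-1, 1) for sign changes of H_n(scale * x).
    """
    scale = sqrt(n * (n - 1) / 2.0)

    def f(t):
        return hermite(n, scale * t)

    grid = [-1.0 + 2.0 * i / steps for i in range(steps + 1)]  # odd steps: 0 is not a grid node
    vals = [f(t) for t in grid]
    roots = []
    for lo, hi, flo, fhi in zip(grid, grid[1:], vals, vals[1:]):
        if flo * fhi < 0:  # a root lies in [lo, hi]; refine it by bisection
            for _ in range(100):
                mid = 0.5 * (lo + hi)
                if f(lo) * f(mid) <= 0:
                    hi = mid
                else:
                    lo = mid
            roots.append(0.5 * (lo + hi))
    return roots


def stationarity_residual(x):
    """max over k of | sum_{i != k} 1/(x_k - x_i) - n(n-1)/2 * x_k |."""
    n = len(x)
    rho = n * (n - 1) / 2.0
    return max(abs(sum(1.0 / (x[k] - x[i]) for i in range(n) if i != k) - rho * x[k])
               for k in range(n))


points = {n: extreme_point(n) for n in range(2, 8)}
for n, x in points.items():
    print(n, len(x), isclose(sum(t * t for t in x), 1.0), sum(x), stationarity_residual(x))
```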

Lemma 32.3

Let \(x_i\), \(i = 1,2,\ldots ,n\), be the roots of the Hermite polynomial \(H_n(x)\). Then

\[ \sum_{i=1}^n x_i^2 = \frac{n(n-1)}{2}. \]

Proof

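Let \(e_k(x_1,\ldots ,x_n)\) denote the elementary symmetric polynomials. Then \(H_n(x)\) can be written as

\[ H_n(x) = 2^n\prod_{i=1}^n(x-x_i) = 2^n\left(x^n - e_1x^{n-1} + e_2x^{n-2} + q(x)\right), \]

where q(x) is a polynomial of degree at most \(n-3\). Noting that

\[ \sum_{i=1}^n x_i^2 = e_1^2 - 2e_2, \tag{32.19} \]

it is clear that the sum of the squares of the roots can be expressed using the coefficients of \(x^n\), \(x^{n-1}\) and \(x^{n-2}\). The explicit expression for \(H_n(x)\) is [13]

\[ H_n(x) = n!\sum_{m=0}^{\lfloor n/2\rfloor}\frac{(-1)^m}{m!\,(n-2m)!}(2x)^{n-2m}. \tag{32.20} \]

Comparing the coefficients in the two expressions for \(H_n(x)\) gives

\[ e_1 = 0, \qquad e_2 = -\frac{n(n-1)}{4}. \]

Thus by (32.19)

\[ \sum_{i=1}^n x_i^2 = 0 + \frac{n(n-1)}{2} = \frac{n(n-1)}{2}. \]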
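Lemma 32.3 only needs the three leading coefficients of \(H_n\). Below is a small exact check in Python using `fractions.Fraction`. The coefficients come from the explicit formula written in its standard form, \(H_n(x)=n!\sum_{m}(-1)^m(2x)^{n-2m}/(m!\,(n-2m)!)\). The helper names are hypothetical.

```python
from fractions import Fraction
from math import factorial


def hermite_coefficients(n):
    """{power: coefficient} of H_n from n! * sum_m (-1)^m (2x)^(n-2m) / (m! (n-2m)!)."""
    return {
        n - 2 * m: (-1) ** m * (factorial(n) * 2 ** (n - 2 * m) // (factorial(m) * factorial(n - 2 * m)))
        for m in range(n // 2 + 1)
    }


def sum_of_squared_roots(n):
    """e_1**2 - 2*e_2 for the roots of H_n, read off from the leading coefficients (Vieta)."""
    c = hermite_coefficients(n)
    lead = Fraction(c[n])
    e1 = -Fraction(c.get(n - 1, 0)) / lead
    e2 = Fraction(c.get(n - 2, 0)) / lead
    return e1 ** 2 - 2 * e2


print(hermite_coefficients(4))                         # {4: 16, 2: -48, 0: 12}
print([sum_of_squared_roots(n) for n in range(2, 8)])  # n(n-1)/2 for each n
```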

Theorem 32.5

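The coefficients \(a_k\) of the terms \(x^k\) in \(P_n(x)\), given by (32.14), satisfy the following relations:

\[ a_n = 1,\qquad a_{n-1} = 0,\qquad a_{n-2} = -\frac{1}{2},\qquad a_k = -\frac{(k+1)(k+2)}{n(n-1)(n-k)}\,a_{k+2},\quad 0\le k\le n-3. \tag{32.21} \]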

Proof

Equation (32.17) tells us that

That \(a_n = 1\) follows from the definition of \(P_n\). That \(a_{n-1} = 0\) follows from the Hermite polynomials having only terms of odd powers when n is odd and only even powers when n is even. That \(a_{n-2} = -\frac{1}{2}\) can easily be shown using the definition of \(P_n\) and the explicit formula for the Hermite polynomials (32.20). It also follows from Lemma 32.3, since the roots of \(P_n\) satisfy \(\sum_i x_i^2 = -2a_{n-2} = 1\).

The value of \(\rho \) can be found by comparing the \(x^{n-2}\) terms in (32.22):

From this it follows that

Comparing the \(x^{n-l}\) terms in (32.22) gives the following relation

which is equivalent to

Letting \(k = n-l\) gives (32.21).
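The recursion can be cross-checked against the Hermite form of \(P_n\). The sketch below uses the recursion \(a_k = -\frac{(k+1)(k+2)}{n(n-1)(n-k)}a_{k+2}\). This is our own reconstruction, obtained by inserting \(f(x)=\sum_k a_k x^k\) into \(f''(x) - n(n-1)xf'(x) + n^2(n-1)f(x) = 0\). That equation is what the proof's differential equation becomes once \(\rho\) is fixed by \(\sum_i x_i^2 = 1\), again by our own computation. The comparison uses the closed form that follows from \(P_n(x) = (2n(n-1))^{-n/2}H_n(\sqrt{n(n-1)/2}\,x)\) and the explicit Hermite coefficients. All arithmetic is exact with `fractions.Fraction`, and the helper names are hypothetical.

```python
from fractions import Fraction
from math import factorial


def p_coefficients_recursive(n):
    """[a_0, ..., a_n] of P_n from a_n = 1, a_{n-1} = 0 and
    a_k = -(k+1)(k+2) a_{k+2} / (n(n-1)(n-k))."""
    a = [Fraction(0)] * (n + 1)
    a[n] = Fraction(1)
    for k in range(n - 2, -1, -1):
        a[k] = -Fraction((k + 1) * (k + 2), n * (n - 1) * (n - k)) * a[k + 2]
    return a


def p_coefficients_from_hermite(n):
    """Same coefficients from the rescaled Hermite polynomial: with s = n(n-1)/2,
    the coefficient of x^(n-2m) is (-1)^m n! / (4^m m! (n-2m)! s^m)."""
    s = Fraction(n * (n - 1), 2)
    a = [Fraction(0)] * (n + 1)
    for m in range(n // 2 + 1):
        a[n - 2 * m] = Fraction((-1) ** m * factorial(n),
                                4 ** m * factorial(m) * factorial(n - 2 * m)) / s ** m
    return a


print(p_coefficients_recursive(4))  # a_0..a_4 = 1/48, 0, -1/2, 0, 1
print(all(p_coefficients_recursive(n) == p_coefficients_from_hermite(n) for n in range(2, 15)))
```

Exact rational arithmetic keeps the comparison free of rounding. The recursion costs only O(n) operations per polynomial.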

32.2.2 Extreme Points of the Vandermonde Determinant on the Three Dimensional Unit Sphere

It is fairly simple to describe \(v_3(\mathbf {x}_3)\) on the circle formed by the intersection of the unit sphere and the plane \(x_1+x_2+x_3=0\). Rodrigues’ rotation formula, applied to rotation about the axis \(\frac{1}{\sqrt{3}}(1,1,1)\) by the angle \(\theta \), gives the rotation matrix

\[ R_\theta = \frac{1}{3}\begin{bmatrix} 1+2\cos\theta & 1-\cos\theta-\sqrt{3}\sin\theta & 1-\cos\theta+\sqrt{3}\sin\theta\\ 1-\cos\theta+\sqrt{3}\sin\theta & 1+2\cos\theta & 1-\cos\theta-\sqrt{3}\sin\theta\\ 1-\cos\theta-\sqrt{3}\sin\theta & 1-\cos\theta+\sqrt{3}\sin\theta & 1+2\cos\theta \end{bmatrix}. \]

Any point on this circle can then be rotated to any other point on the circle by letting \(R_\theta \) act on it. Choosing the point \(\mathbf {x} = \frac{1}{\sqrt{2}}\left( -1,0,1\right) \) gives the Vandermonde determinant a convenient form on the circle, since

\[ R_\theta\mathbf{x} = \frac{1}{\sqrt{6}}\left(\sin\theta-\sqrt{3}\cos\theta,\; -2\sin\theta,\; \sin\theta+\sqrt{3}\cos\theta\right), \]

which gives

\[ v_3(R_\theta\mathbf{x}) = \frac{1}{\sqrt{2}}\left(4\cos^3\theta-3\cos\theta\right) = \frac{1}{\sqrt{2}}\cos(3\theta). \]

The final equality follows from \(\cos (n\theta ) = T_n(\cos (\theta ))\), where \(T_n\) is the nth Chebyshev polynomial of the first kind. From formula (32.14) it follows that \(P_3(x) = x^3-\frac{1}{2}x\). This is a rescaled version of \(T_3(x)=4x^3-3x\), namely \(P_3(x) = \frac{1}{3\sqrt{6}}\,T_3\left(\sqrt{\tfrac{3}{2}}\,x\right)\). For higher dimensions the relationship between the Chebyshev polynomials and \(P_n\) is not as simple.

Finding the maximum points of \(v_3(\mathbf {x}_3)\) in this form is simple. The Vandermonde determinant is maximal when \(3\theta = 2k\pi \) for some integer k. This gives three local maxima, corresponding to \(\theta _1 = 0\), \(\theta _2 = \frac{2\pi }{3}\) and \(\theta _3 = \frac{4\pi }{3}\). These points correspond to cyclic permutations of the coordinates of \(\mathbf {x} = \frac{1}{\sqrt{2}}\left( -1,0,1\right) \). Analogously, the minima of \(v_3(\mathbf {x}_3)\), where \(3\theta=(2k+1)\pi\), can be shown to be a transposition followed by a cyclic permutation of the coordinates of \(\mathbf {x}\). Thus any permutation of the coordinates of \(\mathbf {x}\) corresponds to a local extreme point, as stated in Sect. 32.1.1.
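The closed form \(v_3(R_\theta\mathbf{x}) = \cos(3\theta)/\sqrt{2}\) on the circle \(S^2\cap\{x_1+x_2+x_3=0\}\) can be verified numerically. The sketch builds the Rodrigues rotation about \(\mathbf{k}=(1,1,1)/\sqrt{3}\) in the standard form \(R_\theta = \cos\theta\, I + \sin\theta\,[\mathbf{k}]_\times + (1-\cos\theta)\mathbf{k}\mathbf{k}^T\), written out entry by entry. The helper names are hypothetical.

```python
from math import cos, isclose, pi, sin, sqrt


def rotation(theta):
    """Rodrigues rotation by theta about the unit axis k = (1, 1, 1)/sqrt(3)."""
    c, s = cos(theta), sin(theta)
    d = (1 + 2 * c) / 3                # diagonal entries
    p = (1 - c - sqrt(3) * s) / 3      # entries (1,2), (2,3), (3,1)
    q = (1 - c + sqrt(3) * s) / 3      # entries (1,3), (2,1), (3,2)
    return [[d, p, q], [q, d, p], [p, q, d]]


def apply(m, x):
    return [sum(m[i][j] * x[j] for j in range(3)) for i in range(3)]


def v3(x):
    return (x[2] - x[1]) * (x[2] - x[0]) * (x[1] - x[0])


x0 = [-1 / sqrt(2), 0.0, 1 / sqrt(2)]
for theta in (0.0, 0.5, pi / 3, 2 * pi / 3, 4 * pi / 3):
    y = apply(rotation(theta), x0)
    print(round(theta, 4), v3(y), cos(3 * theta) / sqrt(2), isclose(v3(y), cos(3 * theta) / sqrt(2), abs_tol=1e-12))
```

Rotation by \(2\pi/3\) maps \((x_1,x_2,x_3)\) to \((x_3,x_1,x_2)\), which is the cyclic permutation mentioned above.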

32.2.3 A Transformation of the Optimization Problem

In this section we transform the problem of optimizing the Vandermonde determinant over the unit sphere into a related system of two equations.

Lemma 32.4

For any \(n\ge 2\) the dot product between the gradient of \(v_n(\mathbf {x}_n)\) and \(\mathbf {x}_n\) is proportional to \(v_n(\mathbf {x}_n)\). More precisely,

\[ \nabla v_n(\mathbf{x}_n)\cdot\mathbf{x}_n = \frac{n(n-1)}{2}\,v_n(\mathbf{x}_n). \tag{32.23} \]

Proof

Using Theorem 32.2 we have

Now, for each distinct pair of indices \(k=a,i=b\) in the last double sum we have that the indices \(k=b,i=a\) also appear. And so we continue

which proves the lemma.
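Lemma 32.4 is also an instance of Euler's theorem for homogeneous functions. The function \(v_n\) is a product of \(n(n-1)/2\) linear factors and hence homogeneous of that degree, so \(\mathbf{x}\cdot\nabla v_n = \frac{n(n-1)}{2}v_n\). Below is a quick numerical check in Python using the gradient from Theorem 32.2. The helper names are hypothetical.

```python
from itertools import combinations
from math import isclose, prod


def v(x):
    return prod(x[j] - x[i] for i, j in combinations(range(len(x)), 2))


def gradient(x):
    """Gradient of v_n via (32.5): d v_n / d x_k = sum_{i != k} v_n(x) / (x_k - x_i)."""
    vx = v(x)
    return [sum(vx / (x[k] - x[i]) for i in range(len(x)) if i != k) for k in range(len(x))]


x = [0.3, -1.1, 0.8, 2.4, -0.6, 1.5]
n = len(x)
lhs = sum(gk * xk for gk, xk in zip(gradient(x), x))
rhs = n * (n - 1) / 2 * v(x)
print(lhs, rhs, isclose(lhs, rhs))
```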

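Recall that an objective function \(f(\mathbf {x})\), restricted to the points satisfying an equality constraint \(g(\mathbf {x})=0\), can only attain an extremum where its gradient is linearly dependent on the gradient of the constraint function. With Lagrange multipliers this is expressed as

\[ \nabla f(\mathbf{x}) = \lambda\,\nabla g(\mathbf{x}), \]

where \(\lambda \) is some scalar constant. We can also express this using a dot product:

\[ \nabla f\cdot\nabla g = |\nabla f|\,|\nabla g|\cos\theta. \]

We are interested in the case where both \(\nabla f\) and \(\nabla g\) are non-zero. Linear dependence then requires \(\cos \theta =\pm 1\), and squaring gives

\[ (\nabla f\cdot\nabla g)^2 = |\nabla f|^2\,|\nabla g|^2, \]

which can also be expressed as

\[ \left(\sum_{k=1}^n\frac{\partial f}{\partial x_k}\frac{\partial g}{\partial x_k}\right)^2 = \left(\sum_{k=1}^n\left(\frac{\partial f}{\partial x_k}\right)^2\right)\left(\sum_{k=1}^n\left(\frac{\partial g}{\partial x_k}\right)^2\right). \tag{32.24} \]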

Theorem 32.6

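The problem of finding the vectors \(\mathbf {x}_n\) that maximize the absolute value of the Vandermonde determinant over the unit sphere,

\[ \max_{\mathbf{x}_n\in S^{n-1}}\left|v_n(\mathbf{x}_n)\right|, \tag{32.25} \]

has exactly the same solution set as the related problem

\[ \sum_{k=1}^n x_k^2 = 1, \qquad \sum_{1\le i<j\le n}\frac{1}{(x_j-x_i)^2} = \frac{n^2(n-1)^2}{8}. \tag{32.26} \]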

Proof

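We apply (32.24) to the problem of optimizing the Vandermonde determinant \(v_n(\mathbf {x}_n)\) over the unit sphere. Here the constraint is \(g(\mathbf{x}_n)=\sum_k x_k^2-1\), so \(\partial g/\partial x_k = 2x_k\), and we get

\[ \left(\sum_{k=1}^n x_k\frac{\partial v_n}{\partial x_k}\right)^2 = \left(\sum_{k=1}^n x_k^2\right)\sum_{k=1}^n\left(\frac{\partial v_n}{\partial x_k}\right)^2 = \sum_{k=1}^n\left(\frac{\partial v_n}{\partial x_k}\right)^2. \tag{32.27} \]

By applying Lemma 32.4, the left part of (32.27) can be written as

\[ \left(\frac{n(n-1)}{2}\right)^2 v_n(\mathbf{x}_n)^2. \]

Using Theorem 32.2, the right part of (32.27) can be rewritten as

\[ \sum_{k=1}^n\left(\sum_{i\ne k}\frac{v_n(\mathbf{x}_n)}{x_k-x_i}\right)^2, \]

and by expanding the square we continue

\[ = v_n(\mathbf{x}_n)^2\left(\sum_{k=1}^n\sum_{i\ne k}\frac{1}{(x_k-x_i)^2} + \sum_{k=1}^n\sum_{i\ne k}\sum_{j\ne k,i}\frac{1}{(x_k-x_i)(x_k-x_j)}\right). \]

The triple sum runs over all distinct i, k, j. We can therefore write it as one sum over the sets \(\{a,b,c\}\) of three distinct indices by expanding permutations:

\[ \sum_{k=1}^n\sum_{i\ne k}\sum_{j\ne k,i}\frac{1}{(x_k-x_i)(x_k-x_j)} = 2\sum_{1\le a<b<c\le n}\left(\frac{1}{(x_a-x_b)(x_a-x_c)}+\frac{1}{(x_b-x_a)(x_b-x_c)}+\frac{1}{(x_c-x_a)(x_c-x_b)}\right) = 0. \]

The bracket vanishes identically. Over the common denominator \((x_a-x_b)(x_a-x_c)(x_b-x_c)\) its numerator is \((x_b-x_c)-(x_a-x_c)+(x_a-x_b)=0\). We continue by joining the simplified left and right parts of (32.27):

\[ \frac{n^2(n-1)^2}{4}\,v_n(\mathbf{x}_n)^2 = 2\,v_n(\mathbf{x}_n)^2\sum_{1\le i<k\le n}\frac{1}{(x_k-x_i)^2}. \]

Since \(v_n(\mathbf{x}_n)\ne 0\) at any maximum, the result follows as

\[ \sum_{1\le i<k\le n}\frac{1}{(x_k-x_i)^2} = \frac{n^2(n-1)^2}{8}. \]

This captures the linear dependence requirement of the problem. What remains is to require the solutions to lie on the unit sphere.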
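The second equation of the related problem can be checked at the known extreme points. In this sketch the target value \(n^2(n-1)^2/8\) is the constant we obtain by carrying the proof's last step through, that is, by dividing by \(2v_n(\mathbf{x}_n)^2\). The points are the roots of \(P_3(x)=x^3-\frac{1}{2}x\) and \(P_4(x)=x^4-\frac{1}{2}x^2+\frac{1}{48}\), written out by hand. The helper names are hypothetical.

```python
from itertools import combinations
from math import isclose, sqrt


def inverse_square_sum(x):
    """Sum over i < j of 1 / (x_j - x_i)**2."""
    return sum(1.0 / (x[j] - x[i]) ** 2 for i, j in combinations(range(len(x)), 2))


def target(n):
    return n ** 2 * (n - 1) ** 2 / 8


r1, r2 = sqrt((3 + sqrt(6)) / 12), sqrt((3 - sqrt(6)) / 12)
extreme = {
    3: [-1 / sqrt(2), 0.0, 1 / sqrt(2)],  # roots of P_3
    4: [-r1, -r2, r2, r1],                # roots of P_4
}
for n, x in extreme.items():
    print(n, inverse_square_sum(x), target(n), isclose(inverse_square_sum(x), target(n)))

# A point on the sphere that is not an extreme point gives a different value.
other = [t / sqrt(14.0) for t in (1.0, 2.0, 3.0)]
print(inverse_square_sum(other), target(3))  # about 31.5 versus 4.5
```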

32.2.4 Further Visual Exploration

Visualizing the determinant \(v_3(\mathbf {x}_3)\) on the unit sphere is straightforward, and so is visualizing \(g_3(\mathbf {x}_3,\mathbf {a})\) for different \(\mathbf {a}\), since all points on the sphere can be viewed directly in a contour map. In higher dimensions we need to reduce the set of visualized points somehow. In this section we provide visualizations for \(v_4,\ldots ,v_7\) by using symmetry properties of the Vandermonde determinant.

32.2.4.1 Four Dimensions

By Theorem 32.3 we know that the extreme points of \(v_4(\mathbf {x}_4)\) on the sphere all lie in the hyperplane \(x_1+x_2+x_3+x_4=0\). The intersection of this hyperplane with the unit sphere in \(\mathbb {R}^4\) can be described, in a suitable basis, as a unit sphere in \(\mathbb {R}^3\), and can then be easily visualized.

This can be realized using the transformation

The result of plotting \(v_4(\mathbf {x}_4)\) after performing this transformation can be seen in Fig. 32.5. All \(24 = 4!\) extreme points are clearly visible.

From Fig. 32.5 we see that whenever there is a local maximum, there is also a local maximum on the opposite side of the sphere, and the same holds for minima. This is due to the exponents in the rows of \(V_n\). From (32.2) we have

\[ v_n(-\mathbf{x}_n) = (-1)^{\frac{n(n-1)}{2}}\,v_n(\mathbf{x}_n), \]

and so opposite points are both maxima or both minima if \(n=4k\) or \(n=4k+1\) for some \(k \in \mathbb {Z}^+\) and opposite points are of different types if \(n=4k-2\) or \(n=4k-1\) for some \(k \in \mathbb {Z}^+\).
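The sign rule can be checked directly from the product formula. The following short Python sketch, with hypothetical helper names, computes \(v_n(-\mathbf{x}_n)/v_n(\mathbf{x}_n)\) for \(n = 2,\ldots,7\) and the resulting classification of opposite points.

```python
from itertools import combinations
from math import isclose, prod


def v(x):
    return prod(x[j] - x[i] for i, j in combinations(range(len(x)), 2))


x = [0.35, -0.8, 1.1, 0.05, -0.45, 0.6, -1.3]
for n in range(2, 8):
    xn = x[:n]
    ratio = v([-t for t in xn]) / v(xn)
    sign = (-1) ** (n * (n - 1) // 2)
    kind = "same type" if n % 4 in (0, 1) else "opposite types"
    print(n, ratio, sign, isclose(ratio, sign), kind)
```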

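By Theorem 32.4 the extreme points on the unit sphere for \(v_4(\mathbf {x}_4)\) are described by the roots of the polynomial

\[ P_4(x) = x^4 - \frac{1}{2}x^2 + \frac{1}{48}. \]

The roots of \(P_4(x)\) are:

\[ x = \pm\sqrt{\frac{3\pm\sqrt{6}}{12}} = \pm\frac{1}{2}\sqrt{1\pm\sqrt{\tfrac{2}{3}}}. \]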

By Theorem 32.4 or 32.5 we see that the polynomials providing the coordinates of the extreme points have either only even or only odd powers. It follows that the nonzero coordinates of the extreme points come in pairs \(x_i,-x_i\), and that one coordinate is zero when n is odd. Furthermore, by Theorem 32.3 the extreme points of \(v_5(\mathbf {x}_5)\) on the sphere all lie in the hyperplane \(x_1+x_2+x_3+x_4+x_5=0\).

We use this to visualize \(v_5(\mathbf {x}_5)\) by selecting a subspace of \(\mathbb {R}^5\) that contains all points that have coordinates which are symmetrically placed on the real line, \((x_1,x_2,0,-x_2,-x_1)\).

The coordinates in Fig. 32.6a are related to \(\mathbf {x}_5\) by

The result, see Fig. 32.6, is a visualization of a subspace containing 8 of the 120 extreme points. The condition that the coordinates come in symmetric pairs can also be fulfilled in two other subspaces. Their points can be described as \((x_1,x_2,0,-x_1,-x_2)\) and \((x_2,-x_2,0,x_1,-x_1)\). This means that a transformation similar to (32.30) can be used to describe \(3 \cdot 8 = 24\) different extreme points.

The transformation (32.30) corresponds to choosing \(x_3 = 0\). Choosing another coordinate to be zero will give a different subspace of \(\mathbb {R}^5\) which behaves identically to the visualized one. This multiplies the number of extreme points by five to the expected \(5 \cdot 4! = 120\).

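By Theorem 32.4, the extreme points on the unit sphere for \(v_5(\mathbf {x}_5)\) are described by the roots of the polynomial

\[ P_5(x) = x^5 - \frac{1}{2}x^3 + \frac{3}{80}x. \]

The roots of \(P_5(x)\) are:

\[ x = 0, \qquad x = \pm\sqrt{\frac{5\pm\sqrt{10}}{20}}. \]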

As for \(v_5(\mathbf {x}_5)\) we use symmetry to visualize \(v_6(\mathbf {x}_6)\). We select a subspace of \(\mathbb {R}^6\) that contains all symmetrical points \((x_1,x_2,x_3,-x_3,-x_2,-x_1)\) on the sphere.

The coordinates in Fig. 32.7a are related to \(\mathbf {x}_6\) by

In Fig. 32.7 there are 48 visible extreme points. The remaining extreme points can be found using arguments analogous to those in the five-dimensional case.

By Theorem 32.4 the extreme points on the unit sphere for \(v_6(\mathbf {x}_6)\) are described by the roots of the polynomial

\[ P_6(x) = x^6 - \frac{1}{2}x^4 + \frac{1}{20}x^2 - \frac{1}{1800}. \]

The roots of \(P_6(x)\) are:

As for \(v_6(\mathbf {x}_6)\) we use symmetry to visualize \(v_7(\mathbf {x}_7)\). We select a subspace of \(\mathbb {R}^7\) that contains all symmetrical points \((x_1,x_2,x_3,0,-x_3,-x_2,-x_1)\) on the sphere.

The coordinates in Fig. 32.8a are related to \(\mathbf {x}_7\) by

In Fig. 32.8, 48 extreme points are visible, just as in the six-dimensional case. This is expected, since the transformation corresponds to choosing \(x_4 = 0\). That choice restricts us to a six-dimensional subspace of \(\mathbb {R}^7\), which can then be visualized in the same way as the six-dimensional case. The remaining extreme points can be found using arguments analogous to those in the five-dimensional case.

By Theorem 32.4 the extreme points on the unit sphere for \(v_7(\mathbf {x}_7)\) are described by the roots of the polynomial

\[ P_7(x) = x^7 - \frac{1}{2}x^5 + \frac{5}{84}x^3 - \frac{5}{3528}x. \]

The roots of \(P_7(x)\) are:
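The closed forms can be complemented by a direct numerical computation. This Python sketch, with hypothetical helper names, builds the coefficients of \(P_n\) with the recursion \(a_k = -\frac{(k+1)(k+2)}{n(n-1)(n-k)}a_{k+2}\), which is our own rendering of Theorem 32.5. It locates the roots by bracketing and bisection on \((-1,1)\). They must lie there, because their squares sum to 1.

```python
from fractions import Fraction
from math import isclose, sqrt


def p_coefficients(n):
    """[a_0, ..., a_n] of P_n from a_n = 1, a_{n-1} = 0 and the recursion."""
    a = [Fraction(0)] * (n + 1)
    a[n] = Fraction(1)
    for k in range(n - 2, -1, -1):
        a[k] = -Fraction((k + 1) * (k + 2), n * (n - 1) * (n - k)) * a[k + 2]
    return [float(c) for c in a]


def evaluate(coeffs, t):
    """Horner evaluation of sum_k coeffs[k] * t**k."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * t + c
    return result


def roots(n, steps=20001):
    """All n roots of P_n, bracketed on an odd grid over (-1, 1) and bisected."""
    coeffs = p_coefficients(n)

    def f(t):
        return evaluate(coeffs, t)

    grid = [-1.0 + 2.0 * i / steps for i in range(steps + 1)]
    vals = [f(t) for t in grid]
    found = []
    for lo, hi, flo, fhi in zip(grid, grid[1:], vals, vals[1:]):
        if flo * fhi < 0:
            for _ in range(100):
                mid = 0.5 * (lo + hi)
                if f(lo) * f(mid) <= 0:
                    hi = mid
                else:
                    lo = mid
            found.append(0.5 * (lo + hi))
    return found


extreme = {n: roots(n) for n in range(4, 8)}
for n, x in extreme.items():
    print(n, [round(t, 6) for t in x])
print(isclose(extreme[4][-1], sqrt((3 + sqrt(6)) / 12)))  # largest root of P_4
```

Each printed list gives the coordinates of one extreme point of \(v_n\). The remaining extreme points are its permutations.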

32.3 Some Limit Theorems Involving the Generalized Vandermonde Matrix

Let \(D_k\) be the diagonal matrix

\[ D_k = \operatorname{diag}\left(\frac{1}{0!},\frac{1}{1!},\ldots,\frac{1}{(k-1)!}\right). \]

Theorem 32.7

For any \(\mathbf {x}\in \mathbb {C}^n\) and \(\mathbf {a}\in \mathbb {C}^m\) with \(x_j\ne 0\) for all j we have

\[ G_{mn}(\mathbf{x},\mathbf{a}) = \lim_{k\to\infty} V_{km}(\mathbf{a})^T D_k\, V_{kn}(\log\mathbf{x}), \]

where the convergence is entry-wise and \(\log \mathbf {x}=(\log x_1,\ldots ,\log x_n)\). The branch of the complex logarithm \(\log (\cdots )\) is fixed and defines the expression \(x_j^{a_i}\) by

\[ x_j^{a_i} = e^{a_i\log x_j}. \]

We will prove this theorem after presenting some results for a larger class of matrices.

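Generalized Vandermonde matrices are a special case of matrices of the form

\[ A_{mn} = \left[f(x_j,a_i)\right]_{mn}, \]

where f is a function. Suppose that \(\mathbf {x}\) is fixed. Then each entry is a function of one variable:

\[ f_j(a) = f(x_j,a), \quad j=1,\ldots,n. \]

If all these functions \(f_j\) are analytic in a neighborhood of some common \(a_0\), then they have power series expansions around \(a_0\). Denote the power series coefficients of \(f_j\) by \(c_{j*}\), so that \(f_j(a)=\sum_{l=0}^{\infty}c_{jl}(a-a_0)^l\). Provided every \(a_i\) lies inside the corresponding discs of convergence, we may write

\[ A_{mn} = \lim_{k\to\infty} V_{km}(\mathbf{a}-a_0)^T\,C_{kn}, \]

where \(\mathbf{a}-a_0=(a_1-a_0,\ldots,a_m-a_0)\), convergence holds for each entry of \(A_{m n}\), and

\[ C_{kn} = \left[c_{j,i-1}\right]_{kn}, \]

that is, the entry in row i and column j of \(C_{kn}\) is \(c_{j,i-1}\).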

Proof

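(Proof of Theorem 32.7) With the complex logarithmic function \(\log (\cdots )\) defined to lie in a fixed branch, we may write generalized Vandermonde matrices as

\[ G_{mn}(\mathbf{x},\mathbf{a}) = \left[e^{a_i\log x_j}\right]_{mn} = \left[f_j(a_i)\right]_{mn}, \]

where

\[ f_j(a) = e^{a\log x_j}. \]

These functions \(f_j\) are entire (analytic everywhere) whenever \(x_j\ne 0\). By the power series of the exponential function, expanded around \(a_0=0\), we have

\[ f_j(a) = \sum_{l=0}^{\infty}\frac{(\log x_j)^l}{l!}\,a^l, \qquad\text{so}\qquad C_{kn} = D_k V_{kn}(\log\mathbf{x}), \]

and by (32.41) we get

\[ G_{mn}(\mathbf{x},\mathbf{a}) = \lim_{k\to\infty} V_{km}(\mathbf{a})^T D_k\, V_{kn}(\log\mathbf{x}), \]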

which concludes the proof.
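Theorem 32.7 can be illustrated by truncating the limit at a finite k. The sketch assumes real positive \(x_j\), so that the principal real logarithm can serve as the fixed branch. It forms \(V_{km}(\mathbf{a})^T D_k V_{kn}(\log\mathbf{x})\) with \(D_k=\mathrm{diag}(1/0!,\ldots,1/(k-1)!)\) entry by entry and compares it with \(G_{mn}(\mathbf{x},\mathbf{a})=[x_j^{a_i}]\). The helper names and test values are our own choices.

```python
from math import factorial, isclose, log


def truncated_factorization(x, a, k):
    """Entry (i, j) of V_km(a)^T D_k V_kn(log x): sum_{l<k} a_i**l * (log x_j)**l / l!."""
    vt_a = [[ai ** l for l in range(k)] for ai in a]          # V_km(a)^T, size m x k
    d = [1.0 / factorial(l) for l in range(k)]                 # diagonal of D_k
    v_log = [[log(xj) ** l for xj in x] for l in range(k)]     # V_kn(log x), size k x n
    return [[sum(vt_a[i][l] * d[l] * v_log[l][j] for l in range(k)) for j in range(len(x))]
            for i in range(len(a))]


x = [0.5, 1.0, 2.0, 3.5]
a = [0.0, 0.7, 1.5]
exact = [[xj ** ai for xj in x] for ai in a]                    # G_mn(x, a)
for k in (3, 8, 30):
    approx = truncated_factorization(x, a, k)
    err = max(abs(approx[i][j] - exact[i][j]) for i in range(len(a)) for j in range(len(x)))
    print(k, err)
print(all(isclose(p, q, rel_tol=1e-12)
          for rp, rq in zip(truncated_factorization(x, a, 30), exact) for p, q in zip(rp, rq)))
```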

Theorem 32.8

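If \(n\ge 2\), \(\mathbf {x},\mathbf {a}\in \mathbb {C}^n\), \(x_j\ne 0\) for all j and \(v_n(\mathbf {a})\ne 0\), then

\[ \lim_{t\to 0}\frac{g_n(\mathbf{x},\mathbf{a}t)}{v_n(\mathbf{a}t)} = \frac{\prod_{1\le i<j\le n}(\log x_j-\log x_i)}{\prod_{i=1}^{n-1} i!}. \]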

We will prove this theorem after some intermediate results.

Let \(\mathbf {i}_n=(1,2,\ldots ,n)\), and let \(P_{kn}\) be the set of all vectors \(\mathbf {p}\in \mathbb {N}^n\) such that

\[ 1\le p_1<p_2<\cdots<p_n\le k, \]

and \(Q_{kn}=\{\mathbf {p}\in P_{kn}:p_n=k\}\). An \(N\times N\) minor of a matrix \(A\in M_{m n}\) is determined by two vectors \(\mathbf {k}\in P_{mN}\) and \(\mathbf {l}\in P_{nN}\) and is defined as

\[ A_{\mathbf{k},\mathbf{l}} = \det\left[a_{k_i l_j}\right]_{i,j=1}^{N}. \]

Using this notation, the determinant of the product of two matrices \(A\in M_{n k}\) and \(B\in M_{k n}\) can be written using the Cauchy–Binet formula [14, p. 18] as

\[ \det(AB) = \sum_{\mathbf{p}\in P_{kn}} A_{\mathbf{i}_n,\mathbf{p}}\,B_{\mathbf{p},\mathbf{i}_n}. \]

Lemma 32.5

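If \(\mathbf {x},\mathbf {a}\in \mathbb {C}^n\) and \(x_j\ne 0\) for all j, then we can write the determinant of generalized Vandermonde matrices as

\[ g_n(\mathbf{x},\mathbf{a}) = \sum_{k=n}^{\infty}\sum_{\mathbf{p}\in Q_{kn}}\frac{g_n(\mathbf{a},\mathbf{p}-\mathbf{1})\,g_n(\log\mathbf{x},\mathbf{p}-\mathbf{1})}{\prod_{j=1}^{n}(p_j-1)!}, \]

where \(\mathbf{1}=(1,\ldots,1)\).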

Proof

By (32.39), the continuity of the determinant function, the associativity of matrix multiplication, and (32.42), we get

We recognize that \(D_k\) is a diagonal matrix that scales the columns of \(V_{kn}(\mathbf {a})^T\):

and so

that is

and the result follows by recognizing that as k is increased to \(k+1\) in the limit, the sum will contain all the previous terms (corresponding to \(\mathbf {p}\in P_{kn}\)) with the addition of new terms corresponding to \(\mathbf {p}\in Q_{(k+1)n}\).

Proof

(Proof of Theorem 32.8) When the summation in Lemma 32.5 is applied to \(g_n(\mathbf {x},\mathbf {a}t)\) the resulting expression will contain factors

where

The lowest exponent for t will occur exactly once, for \(k=n\), when \(\mathbf {q}=\mathbf {i}_n\), and it is

and by splitting the sum we get

The final result can now be proven by rewriting the denominator in the theorem as \(v_n(\mathbf {a}t)=t^{M} v_n(\mathbf {a})\), taking the limit, and finally expanding \(v_n(\log \mathbf {x})\) by Theorem 32.1.
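Theorem 32.8 is easiest to appreciate numerically. The sketch assumes the limit reads \(\lim_{t\to0} g_n(\mathbf{x},\mathbf{a}t)/v_n(\mathbf{a}t) = v_n(\log\mathbf{x})/\prod_{i=1}^{n-1} i!\), which is what the proof yields. In Lemma 32.5, the \(k=n\) term with \(\mathbf{p}=\mathbf{i}_n\) contributes \(v_n(\mathbf{a}t)\,v_n(\log\mathbf{x})\det D_n\), and \(\det D_n = 1/\prod_{i=1}^{n-1} i!\). The sketch uses real positive \(x_j\) and hypothetical helper names. We expect the error to shrink roughly linearly in t.

```python
from itertools import combinations, permutations
from math import factorial, isclose, log, prod


def det(m):
    """Determinant via the Leibniz expansion."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        inversions = sum(perm[i] > perm[j] for i, j in combinations(range(n), 2))
        term = prod(m[i][perm[i]] for i in range(n))
        total += -term if inversions % 2 else term
    return total


def g(x, a):
    """g_n(x, a) = det[x_j ** a_i] for real x_j > 0."""
    return det([[xj ** ai for xj in x] for ai in a])


def v(x):
    return prod(x[j] - x[i] for i, j in combinations(range(len(x)), 2))


x = [0.5, 1.5, 2.5]
a = [0.2, 1.1, 2.3]
n = len(x)
limit = v([log(xj) for xj in x]) / prod(factorial(i) for i in range(1, n))
for t in (1e-1, 1e-2, 1e-3):
    at = [ai * t for ai in a]
    ratio = g(x, at) / v(at)
    print(t, ratio, limit, isclose(ratio, limit, rel_tol=1e-2))
```

Very small t is not helpful in floating point. The determinant then cancels down to roughly \(t^{n(n-1)/2}\), so rounding error eventually dominates the truncation error.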

32.4 Conclusions

The visualizations in Sect. 32.1.1 suggested that the extreme points of the ordinary Vandermonde determinant on the unit sphere in \(\mathbb {R}^3\) have some interesting symmetry properties. Sect. 32.2 then proved that extreme points can only appear under certain symmetry conditions, see Theorem 32.3, Remark 32.2 and Theorem 32.5. This also allowed visualization of the extreme points of the ordinary Vandermonde determinant on the unit sphere in some higher-dimensional spaces \(\mathbb {R}^n\), more specifically for \(n = 4,5,6,7\).

The exact location of the extreme points for any finite dimension could also be determined as roots of the polynomial given in Theorem 32.4. Exact solutions for these roots were also given for the dimensions that were visualized, see Sects. 32.2.2 and 32.2.4.

Some visual investigation of the generalized Vandermonde matrix was also done in Sect. 32.1.1, but no clear way to find or illustrate its extreme points was found. The authors intend to explore this problem further.

Sect. 32.3 proved some limit theorems. They involve a factorization of a generalized Vandermonde matrix using an ordinary Vandermonde matrix. They also involve the ratio between the determinant of a generalized Vandermonde matrix and the determinant of a related ordinary Vandermonde matrix.

References

[1] Lundengård, K., Österberg, J., Silvestrov, S.: Extreme points of the Vandermonde determinant on the sphere and some limits involving the generalized Vandermonde determinant (2013). arXiv:1312.6193 [math.CA]

[2] Lundengård, K., Österberg, J., Silvestrov, S.: Optimization of the determinant of the Vandermonde matrix and related matrices. Methodol. Comput. Appl. Probab. 20, 1417–1428 (2018)

[3] Muhumuza, A.K., Lundengård, K., Österberg, J., Silvestrov, S., Mango, J.M., Kakuba, G.: Extreme points of the Vandermonde determinant on surfaces implicitly determined by a univariate polynomial. In: Silvestrov, S., Malyarenko, A., Rančić, M. (eds.) Algebraic Structures and Applications, Springer Proceedings in Mathematics and Statistics, vol. 317. Springer (2020)

[4] Muhumuza, A.K., Lundengård, K., Österberg, J., Silvestrov, S., Mango, J.M., Kakuba, G.: Optimization of the Wishart joint eigenvalue probability density distribution based on the Vandermonde determinant. In: Silvestrov, S., Malyarenko, A., Rančić, M. (eds.) Algebraic Structures and Applications, Springer Proceedings in Mathematics and Statistics, vol. 317. Springer (2020)

[5] Lundengård, K., Rančić, M., Javor, V., Silvestrov, S.: Electrostatic discharge currents representation using the multi-peaked analytically extended function by interpolation on a \(D\)-optimal design. Facta Univ. Ser.: Electron. Energ. 32(1), 25–49 (2019)

[6] Dimitrov, D., Shapiro, B.: Electrostatic problems with a rational constraint and degenerate Lamé operators. Potential Anal. 52(4), 645–659 (2020)

[7] Fröberg, R., Shapiro, B.: Vandermonde varieties and relations among Schur polynomials (2013). arXiv:1302.1298 [math.AG]

[8] Fröberg, R., Shapiro, B.: On Vandermonde varieties. Math. Scand. 119(1), 73–91 (2016)

[9] Kalman, D.: The generalized Vandermonde matrix. Math. Mag. 57, 15–21 (1984)

[10] Ernst, T., Silvestrov, S.: Shift difference equations, symmetric polynomials and representations of the symmetric group. U. U. D. M. Rep. 1999, 14 (1999)

[11] Ernst, T.: Generalized Vandermonde determinants. U. U. D. M. Rep. 2000, 6 (2000)

[12] Klein, A., Spreij, P.: Some results on Vandermonde matrices with an application to time series analysis. SIAM J. Matrix Anal. Appl. 25, 213–223 (2003)

[13] Szegő, G.: Orthogonal Polynomials. American Mathematical Society (1939)

[14] Serre, D.: Matrices: Theory and Applications. Springer, Berlin (2002)


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Lundengård, K., Österberg, J., Silvestrov, S. (2020). Extreme Points of the Vandermonde Determinant on the Sphere and Some Limits Involving the Generalized Vandermonde Determinant. In: Silvestrov, S., Malyarenko, A., Rančić, M. (eds) Algebraic Structures and Applications. SPAS 2017. Springer Proceedings in Mathematics & Statistics, vol 317. Springer, Cham. https://doi.org/10.1007/978-3-030-41850-2_32


DOI: https://doi.org/10.1007/978-3-030-41850-2_32


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-41849-6

Online ISBN: 978-3-030-41850-2

eBook Packages: Mathematics and Statistics; Mathematics and Statistics (R0)