Abstract

The practicality of adopting Life Cycle Assessment to support decision-making can be limited by the resource-intensive nature of data collection and Life Cycle Inventory modelling. The number of chemical products increases continuously, and long-term analyses show that overall growth of chemicals production and demand as well as faster growth in emerging regions is a behaviour that is expected to continue in the future. Regrettably, chemical inventories are typically among the most challenging to model because of the lack of available data and the large number of material and energy flows. This explains why it is so important for the Life Cycle Assessment community to have effective methods to implement life cycle inventories of chemicals available. This chapter deals with the issues of Life Cycle Inventory analysis for chemical processes and the related lack of data concerning inventories of basic and fine chemicals substances. The overall aim of the chapter is to illustrate the different possibilities/approaches that the scientific Life Cycle Assessment community has developed in order to overcome such a lack of data concerning the inventories of a specific (basic and/or fine) chemical substance both for input and output flows. Their main advantages and drawbacks are identified and discussed briefly.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

1 Introduction

The Life Cycle Inventory (LCI) analysis step within the ISO 14040 and 14044 standards involves the compilation and quantification of input/output data for a given product system throughout its life cycle (ISO 2006a, b). Data concerning energy and raw material inputs, products and co-products, waste, emissions to air, discharges to water and soil and other environmental aspects have to be collected (ISO 2006a, b). LCI is considered the most time-consuming, complicated and resource-demanding part of a Life Cycle Assessment (LCA) study (Laurent et al. 2014) and therefore a very critical phase within the entire LCA activities, as the degree of quantification of the inputs and outputs directly affect the following impact assessment and interpretation activities.

This challenge is particularly true in the case of chemical products. Tens of thousands of chemicals are currently in commerce, and hundreds more are introduced every year (USEPA 2016a). In the United States of America, the Toxic Substances Control Act (TSCA) Inventory listed about 85,000 chemicals in 2016 (USEPA 2016b). In Europe, over the first 10 years of the REACH Regulation, nearly 90,000 registrations for chemicals manufactured in, or imported to the EU at above one tonne a year have been submitted (ECHA 2018). The long-term analysis shows that overall growth of chemicals production and demand as well as faster growth in emerging countries is a trend that should continue in the near future. World chemicals sales are expected to reach the level of €6.3 trillion in 2030 (Cefic 2018). To compound the problem, for many of the fine chemicals the bill of materials may involve anywhere from twenty, fifty or more chemical compounds (depending on the complexity), each of which will require their own inventory data to accomplish the assessment (Jiménez-González and Overcash 2014). Because there are so many chemicals—and establishing respective data inventories is expensive and time-consuming—only a small number of today’s chemicals are represented in current LCI databases.

Compiling LCIs of chemical compound production can be a complex and challenging endeavour. However, several methods have been proposed in recent decades to facilitate their creation. This chapter aims to illustrate how the scientific LCA community has proposed to overcome the lack of data concerning life cycle inventories of chemicals and investigates the main advantages and drawbacks of each of these approaches.

2 Life Cycle Inventory Approaches for Chemicals

In the past decades, several efforts have been made to categorize the methods applied for LCI compilation. Suh and Huppes (2005) identified three different types of approaches, i.e. computational approaches, the economic input–output (EIO) analysis, and combinations of those two approaches. Being part of the first type, process flow diagrams are the oldest and the most common practice in LCI compilation, showing how processes of a product system are interconnected through commodity flows. Each process is represented as a ratio between several inputs and outputs. Using plain algebra, the amount of commodities fulfilling a certain functional unit is obtained. The second computational approach reviewed by Suh and Huppes (2005) is the matrix representation where a system of linear equations is used to solve an inventory problem. Next to this, the authors examined the application of EIO within LCA—starting in early 1990s, when macroeconomic models are combined with sector-level environmental data to estimate total supply-chain impacts of the production (Moriguchi et al. 1993). Hybrid approaches—linking process-based and EIO-based analysis and attempting to exploit the respective strengths and advantages of each of these two approaches—can be further distinguished into the following types: tiered hybrid analysis; IO-based hybrid analysis; integrated hybrid analysis. This review by Suh and Huppes (2005) is not focused on LCA applied on chemistry but it is the first clear classification of LCI methods and cites several case studies related to synthetic (chemical) products (such as e.g. Joshi 1999; Strømman 2001).

In 2014, Jiménez-González and Overcash examined the evolution of the application of LCA in the pharmaceutical and the chemical sector and analysed various methods for gathering inventory data (Jiménez-González and Overcash 2014). They fundamentally retraced the categories identified by Suh and Huppes (2005), thus listing process-based inventories, economic input–output inventories and hybrid approaches. In addition, they included methods such as industrial groups sharing manufacturing data with a third party (Boustead 2005) or streamlined tools (such as Wernet et al. 2009).

In their work about uncertainties within LCI data, Williams et al. (2009) introduced a different nomenclature scheme, distinguishing between bottom-up, top-down and hybrid approaches. In a bottom-up approach, each process along the supply chain of a product is described in terms of its proper inputs and outputs. Top-down approaches start from general, often economic and/or economy-based, data with the aim to extract process-specific information in the form of economic input–output inventories and hybrid approaches are a combination of the two approaches. Such a distinction between bottom-up and top-down approaches was proposed in recent years in several articles concerning new methods with a high aggregated level for implementing LCI of chemical products, like Cashman et al. (2016), Mittal et al. (2018), or de Camargo et al. (2018).

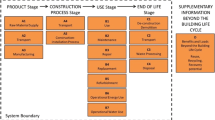

In this chapter, this classification in bottom-up, top-down and hybrid approaches is followed in order to guarantee a broader classification and thus a more comprehensive coverage of the issue of finding suitable LCI data for chemicals. Figure 1.1 summarizes the different methods covered in the chapter.

3 Bottom-Up Approaches

Bottom-up approaches move from the most detailed level towards the most general. In LCI, a bottom-up approach starts from a finely granulated, detailed process system by identifying the smallest transformation activities (covering the various inputs and outputs as shown in Fig. 1.2) and studying them attentively. These activities are then combined to form larger processes, with a successive incorporation of smaller processes into larger processes, until the entire system is implemented.

3.1 Direct Data Collection and Existing LCI Databases

Outlined as above, the LCI compilation from a bottom-up standpoint requires the collection of quantitative information about inputs and outputs, unit process after unit process.

Ideally, data collection for the unit process inventories is done at a relevant production/manufacturing site (primary data). This means gathering raw data at the plant and transforming them into inventory entries (material and energy inputs, co-products, emissions, waste streams). Raw data at facility level can be obtained from several relevant sources, e.g. by consulting bill of materials, collecting process monitoring data, proposing questionnaires or surveys to the plant personnel and performing on-site measurements. Conducting a proper and meaningful collection of raw data at plant for LCI purposes is not an easy task. Apart from the considerable effort in gathering all the relevant information, the LCA analyst has to reconcile discrepancies between different data sources in the plant and properly validate the resulting dataset by means of consistency and completeness checks. A structured approach is highly recommended and standards (ISO 14040 and 14044) offer useful guidance to the generation of unit process datasets from primary data. Further suggestions are given by the Global Guidance Principles for Life Cycle Assessment Databases, a publication issued under the auspices of the United Nations Environment Programme (Sonnemann et al. 2013).

An evident hindrance to the collection of relevant primary data for industrial operations at a chemical processing plant is represented by confidentiality issues. Frequently, information cannot be disclosed by companies or the time required to get publication clearance is not compatible with the time constraints of the LCA study.

Even when access to primary data at the plant is possible, data other than first-hand plant information (secondary data) are still needed to complete the LCA study. For example, an LCA investigator could obtain all the relevant information related to a manufacturing site of specialty chemicals, thus producing a detailed unit process dataset for the products of the site, but they are still in the need to find LCI data covering the supply chain of raw materials, as well as each related background process (e.g. generation of electricity, transport phases and auxiliary services). For this purpose, commercial databases, such as ecoinvent (Wernet et al. 2016) and GaBi (Thinkstep 2019), which are typically included in the licence of LCA software packages, are of widespread use in the LCA community. Gate-to-gate unit process datasets, as well as cradle-to-gate inventories, are given, with variable geographical and time validity. Public LCI databases, free or subscription-based, have been developed in countries such as Australia (Australian LCI Database Initiative; AusLCI 2019), Canada (Canadian Raw Materials Database; CRMD 2019), Japan (IDEA-LCA; AIST 2019), Sweden (SPINE@CPM; CPM 2019), Thailand (Thai National LCI Database; Wolf et al. 2016) United States (U.S. LCI Database; NREL 2019), but the extent of data coverage is generally limited, compared to commercial databases (Curran 2012). Other relevant data providers are represented by industrial organizations: for example, both the American Plastics Council and Plastics Europe manage free LCI databases for plastics manufacturing created on information provided by member companies.

At the European level, a harmonization effort of LCI databases has been started with the creation of the Life Cycle Data Network (JRC 2019), a common infrastructure where data from different organizations are published upon compliance with entry-level requirements. In the framework of the Single Market for Green Products initiative launched in April 2013, the European Commission proposed the development of the so-called Product Environmental Footprint (PEF) as a common method of measuring the environmental performance of products, with the aim to standardize the communication of the environmental impacts of products from companies to consumers. For several product categories, PEF category rules (PEFCRs) have been developed to provide specific guidance in LCI and LCIA calculations, with the ultimate aim of bolstering reproducibility and comparability of LCA results. The PEFCRs present the guidelines that companies should follow in the calculation of the PEF of their products and explicate how to refer to secondary datasets for all the raw, intermediate and auxiliary materials that are not produced by the company. PEF-compliant secondary datasets are already in development and are distributed through the Life Cycle Data Network. With particular reference to the use of chemicals, in the PEFCRs developed during the pilot stage of the initiative significant effort has been devoted to associate specific functions required in the manufacturing of a product to the families of chemical compounds that can have that function and to identify reference substances within each family for which LCI data are available. For example, in the production of leather, a required auxiliary compound is the tanning agent. The PEFCR for leather (De Rosa-Giglio et al. 2018) identifies categories of chemical products suitable for tanning (mineral agents, synthetic organic agents and vegetable tannins) and families within the categories (e.g. Al-, Cr-, or Zr-based agents in the mineral agents category), for which at least a representative compound presents a related LCI dataset available (e.g. aluminium sulphate for the Al-based agents). This systematization effort, which will be replicated in all the future PEFCRs, has the goal to simplify and homogenize the use of secondary datasets when primary data are lacking.

3.2 Process-Based Methods

Process-based methods of different levels of complexity focus on the production process to obtain the inventory data related to the production of a given chemical substance. Available approaches are vastly different in terms of data/time requirements and resulting accuracy (Parvatker and Eckelman 2019). Here, process-based methods are classified into three categories, depending on the information required for their use and the kind of LCI data that can be extracted (see Table 1.1): process chemistry, conceptual process design, and process modelling and simulation.

3.2.1 Process Chemistry

In the absence of any process data, the basis for estimating the LCI associated to the production of a given chemical substance is the reaction stoichiometry. For known chemicals, published balanced reaction pathways can be found easily in open datasets, such as the Ullmann’s Encyclopedia of Industrial Chemistry (Elvers 2011) or the Kirk-Othmer’s Encyclopedia of Chemical Technology (Kroschwitz and Seidel 2004), while for a newly synthesized product or a novel synthesis route developed at laboratory scale, stoichiometric data are directly available to the investigator from her own experimental protocol. It should come to no surprise that inventory compilation based on stoichiometry constitutes probably the most common approach for LCI data collection and even established LCI databases rely heavily on stoichiometric assumptions (Hischier et al. 2005).

Reaction stoichiometry allows calculating, for a unit of given chemical produced, the associated mass flows of reactants, by-products (emissions for LCI purposes) and co-products (if present, they are used for allocation or system expansion). If known, yield of reaction can be taken into account in the calculation. Regarding energy flows, basic thermodynamic data of the involved chemical species (heat of formation and heat capacity) can be used for a raw estimate of the energy requirements for heating (endothermic reactions) or cooling (exothermic reactions). The heat associated with a chemical reaction is the algebraic sum of the heats of the formation of reactants and products. The heat of formation of a compound can be retrieved from databases, e.g. the NIST-JANAF Thermochemical Tables (Chase 1998). In the absence of data, expeditious methods such as the group additivity approach (Holmes and Aubry 2012), which requires only the knowledge of the chemical structure of the compound, ensure an acceptable estimate of the heat of formation for LCA purposes. Likewise, the sensible heat to be provided to reactants to reach reaction conditions can be calculated from the specific heat capacity of the compounds, which can be retrieved from sources like the NIST Chemistry WebBook (Linstrom and Mallard 2019).

There are clear limitations to the information that can be extracted from reaction stoichiometry. This approach allows the main raw materials and products of the synthesis route to be traced, but neglects auxiliary materials, such as catalysts and solvents. Both classes of materials cannot be excluded without due consideration. As for solvents, e.g. 80–90% of the total mass involved in the production of an active pharmaceutical ingredient can be ascribed to solvent use (Raymond et al. 2010). Furthermore, the stoichiometric approach is inherently limited to reactions and ignores pre- and post-reaction stages. In particular, it excludes from LCI compilation the separation and purification stages, which in several contexts like, e.g. the bio-based industry (Huang et al. 2008), are the most energy-intensive steps.

3.2.2 Conceptual Process Design and Scale-Up Methods

To increase the level of detail and the representativeness of the estimated LCI, it is necessary to move up from process chemistry to process design considerations. The definition of the process requires: listing all direct and indirect chemicals involved in the process, determining the conditions for each operation (temperature, pressure and composition of process streams), assuming the efficiencies in chemical conversion and separation stages, and, finally, elaborating the flow diagram of the process (Jimenez-Gonzalez et al. 2000). As a result, the definition of a conceptual process flowsheet and the related unit operations allows drawing the mass and energy balances that provide the material and energy flows of the process.

The data needed to define the process can be extracted from relevant scientific or technical literature and from patent information. When it is not possible to retrieve adequate process details, information gaps can be filled by reasonable estimates and assumptions based on chemical engineering knowledge. Unfortunately, there is no general protocol for process flowsheeting (Papadokonstantakis et al. 2016). Nonetheless, general good practices for early process design, ranging from simple heuristics to short-cut models, have been developed and consolidated in the discipline. Guidance in the selection of assumptions (process yield, solvent recycling, heat recovery efficiency, etc.), as well as empirical design equations, can be found in reference texts such as the Coulson and Richardson’s Chemical Engineering Series (Sinnott 2005) or the Perry’s Chemical Engineers’ Handbook (Green and Perry 2007). Losses both in terms of energy and materials can be estimated following rules of thumb (Hall 2017). Diffuse and fugitive emissions can also be approximated using generic emission factors, like the ones collected by the US EPA under the AP-42 Compilation of Air Pollutant Emission Factors (US EPA 2010). Simplified methods to evaluate storage emissions or process vent emissions are proposed by Smith et al. (2017).

In addition, if the process under study can be decomposed into subprocesses, it is worth recalling that the LCA analyst can rely on established LCI databases (ecoinvent, Thinkstep, etc.) to model rather ‘standard’ process blocks and perform conceptual process design just for the most case-specific operations, filling specific gate-to-gate data gaps.

In many cases, the LCA practitioner dealing with process design considerations needs to analyse the environmental footprint of a novel chemical or a new route for the synthesis of a known chemical, discovered at the laboratory stage. Since the main interest is to develop a credible LCI of the theoretical future industrial scale, rather than conduct the mere LCA of the laboratory-based process, the investigator has to perform a prospective scale-up. The primary data gathered at laboratory level (material/energy inputs) cannot be generally considered representative of the same synthesis performed at commercial scale (Khoo et al. 2018). At lab stage, the aim of the researchers is just to demonstrate that a certain synthesis protocol works and the process itself is not optimized in terms of consumption of energy and auxiliary materials (Hischier et al. 2018). Several impacts can be reduced significantly when the process is established at industrial scale, thanks to an increased technological maturity, the advantages of economies of scale, and opportunities for heat integration and materials recycling (Arvidsson and Molander 2016; Gavankar et al. 2015).

A full process scale-up procedure would require a detailed feasibility study, generally involving fluid-dynamic modelling of equipment, pilot-scale experimental campaigns and process optimization trials (Righi et al. 2018). Nonetheless, simplified approaches have been proposed for the necessities of scale-up in the context of LCI data generation. This issue will be dealt in detail in Chap. 6. Here, it is just mentioned that process scale-up for LCA purposes is a topic of vivid discussion in the recent scholarly literature. Several considerations are based on expert judgment, e.g. in assessing how much solvent could be spared in industrial operation compared to the laboratory synthesis of a novel chemical compound (Hischier et al. 2018). In analogy with well-known scaling rules historically proposed for equipment cost estimation, other authors suggested the use of power-law relationships to estimate, e.g. how energy consumption scales with process size (Caduff et al. 2014). A more systematic approach is represented by scale-up frameworks proposed, e.g. by Shibasaki et al. (2007) and Zhou et al. (2017), with specific reference to the transition between pilot scale and commercial scale and, e.g. by Piccinno et al. (2016) and Simon et al. (2016), concerning the scaling of laboratory-based processes. These frameworks guide the user in the translation of lab operations into industrial process units, by providing simple scale-up formulae and leading the LCA practitioner in the analysis of similarities with existing industrial processes.

Another aspect that is generally overlooked in a laboratory synthesis but needs to be addressed considering its prospective industrial counterpart is the fate of waste streams (solid residues, gaseous and liquid effluents). The management methods adopted at lab scale might not be extended to the industrial scale. The LCA analyst might refer to the Best Available Technique reference documents, BREFs (European IPPC Bureau 2019), for an overview of the state-of-the-art on air and water pollution control devices in the industrial sector of interest. In BREFs, technologies for pollutant abatement are reviewed systematically, providing quantitative information on expected removal efficiency and associated consumption of reactants and energy. With a similar aim, Li et al. (2018) developed modular LCIs for some standard air pollution control units in the petrochemical sector. Differently from flue gases, effluents like waste solvents or wastewater streams might sometimes undergo treatment in external facilities (e.g. municipal wastewater treatment plants and municipal solid waste incinerators). To keep track of these streams and the inputs/outputs associated with their treatment, multi-input allocation models for LCI generation have been developed (see, e.g. Köhler et al. 2007 for wastewater treatment processes in the chemical sector and Seyler et al. 2004 for waste-solvent incineration).

3.2.3 Process Simulation

Once the process is defined, its modelling can be assisted by process simulation tools. Several commercial chemical process simulation (CPS) software packages are available on the market. Relevant examples include Aspen Plus, CHEMCAD, HYSYS, and Pro/II (Foo 2017).

All the considerations made above are still valid here, but the use of a process simulator offers a series of advantages over the simple process design calculations mentioned above. Besides providing material and energy flows for each equipment, process simulation allows for a quick screening of alternative plant configurations. Sensitivity analyses around operating conditions can be performed to assess how assumptions on the process variables influence the inventory data, thus exploring the uncertainties associated with the generated data.

Another advantage of computer-based tools is the relative ease in studying opportunities of process integration. This includes both energy and material integration within a process, as well as ‘total site’ integration, if the modelled process is to be realized, e.g. in an industrial park sharing infrastructure and utilities between processes. Generally speaking, integration can decrease markedly the entity of input and output flows in LCI, although trade-offs may also take place when, for instance, the recycling of by-products requires energy-intensive operations (Papadokonstantakis et al. 2016). A notable example of the use of CPS software to explore process integration in an LCA viewpoint is the recent work by Lari et al. (2018), where detailed process simulation allowed assessing the potential environmental advantages of integrating different glycerol upgrading routes within a single biorefinery.

The flexibility of CPS software is increased by the possibility to integrate external models within the framework of process simulation. For example, with the aim to determine the environmental footprint of the use of different solid sorbents for the abatement of gaseous acid pollutants, Dal Pozzo et al. (2017) nested a detailed reaction model inside a simulation of the acid gas treatment system in a CPS environment, thus coupling an accurate description of the gas–solid reaction with the modelling of unit operations and utilities provided by the CPS software. Likewise, the outputs of a process simulation can be interfaced with other, ancillary software tools. For example, the US EPA offers a suite of free tools for the estimation of uncontrolled (diffuse and fugitive) emissions from chemical processing equipment like, e.g. TANKS for the modelling of storage emissions (US EPA 2006a) or WATER9 for the modelling of fugitive emissions from wastewater treatment operations (US EPA 2006b), which can be complemented to process simulation.

3.3 Dedicated LCI Software

As the bottom-up approach relies on process design methods and it requires an extensive engineering knowledge that can be challenging, in addition to the above-described CPS software tools, dedicated software and/or tools have been developed in the past few years in order to support designers, engineers and LCA practitioners in view of establishing LCI data of chemical substances. A thorough web search, using search terms such as ‘Life Cycle Inventory and tool’ and ‘Inventory and chemicals’ has been carried out, but revealed a limited number of suitable tools (listed in Table 1.2).

CLiCC

The Chemical Life Cycle Collaborative (CLiCC) tool is composed of three modules addressing different goals and needs of its user: (i) a screening-level assessment, (ii) a ‘full’ Life Cycle Assessment and (iii) Risk Assessment. With its first module, CLiCC enables a screening of life-cycle impacts for new chemicals, and thus can be used as early predictive tool, offering at the same time support for alternative comparisons. The second module (i.e. LCA) contains two sub-modules about life cycle inventory (LCI) estimates and life cycle impact assessment (LCIA) estimates. The first sub-module covers ‘cradle-to-gate’ inputs/outputs of chemical production via a customizable general chemical production model. The tool contains already more than 1.100 chemical-manufacturing process modules. Their input data—collected from publicly available industrial reports and general references such as Ullmann’s Encyclopedia of Industrial Chemistry (Elvers 2011)—have been converted into LCI data. These LCI data have been established on the basis of stoichiometric equations in order to provide estimates of raw materials, efficiency, and product selectivity. The related energy requirements of each manufacturing process (for cooling or heating, as well as the energy gained/lost during the different reaction steps) are established using thermodynamic theories (such as Gibbs free energy). The emissions were estimated using a chemical factor model. For new substances, the user can choose to use one of the existing data as ‘proxy’ chemical or to build a ‘new’ LCI, based on own input data. For the latter, reactants, co-products, by-products by mass, as well as the electricity and heating values are required. Artificial Neural Network (ANNs) is applied to characterize the relationship between chemical descriptors and life cycle impacts (CED, Acidification, GWP, Eco-indicator, Human health and ecosystem quality) (Song et al. 2017).

EATOS

The Environmental Assessment Tool for Organic Synthesis (EATOS) is an easy-to-use tool that can be used to discuss and compare chemical reactions to their potential environmental impact to make them more environmentally friendly (Eissen and Lenoir 2017). The inventory data are established on the basis of the stoichiometry of the synthesis of the chemical under investigation. The tool provides a default list of synthesis mechanisms, however new synthesis routes can be added by the user. Only the input of the stoichiometric reaction equation and the amount of starting materials are required for the determination of the mass balancing. The tool comprises a weighting function that allows accounting the relevant environmental aspects for an estimation of the synthesis’ danger potential. Quantitative material flows are weighted with their qualitative characteristics or weighting factor (‘Q’). The tool comprises the claiming of resource consumption, risks, human toxicity, chronic toxicity, ecotoxicity, ozone creation, air pollution, accumulation, greenhouse effect, eutrophication and acidification as impact categories. For each category, a specific weighting factor (the values range from 0 to 10) is provided. Chemical data such as MAK value, hazard symbol, LD50, LC50, WGK value, POCP, BCF and R-phrases are used to determine the Q factors. The software provides a graphical evaluation that is useful for understanding which is the phase that has more environmental impacts, as well as for comparing different processes.

Ecosolvent

This tool from ETH Zürich is a dedicated tool for the environmental assessment of waste-solvent treatment in the chemical industry. It is valuable to support a decision in chemical industry based on a retrospective assessment of the environmental impact of waste-solvent processes (Capello et al. 2007, 2008; Amelio et al. 2014). The tool comprises four different LCI models representing the most commonly used waste-solvent technologies—i.e. solvent recovery by distillation, thermal treatment in a cement kiln, waste-solvent incineration and wastewater treatment in case of aqueous distillation residues (Capello et al. 2005; Seyler et al. 2005). The integrated LCI model calculates waste-solvent specific inventory flow as a function of the waste-solvent composition and the treatment technology. The tool has a tiered structure; precise information on the treatment technologies, i.e. measured steam consumption of a distillation process, can be entered and the results show a relatively small uncertainty range. However, the tool may also be used if such precise information is missing and generic data are used to abridge the lack of information. In this case the results show larger uncertainty ranges. The model relies on the principle of a multi-input allocation model—for a detail explanation see Azapagic and Clift (1999)—allowing for ‘a calculation of the environmental impacts of a specific product out of measurement data for a mixture of several products’ (Seyler et al. 2005). The statistical evaluation provides an empirical average value as well as the fitting of probability distributions for all the inventory parameters; these data can be used to perform a quantitative uncertainty analysis (i.e. Monte Carlo simulation). Inventory data for ancillaries, fuels, energy from industry data and the ecoinvent database are applied.

Fine Chem

This tool from ETH Zürich is a dedicated tool to estimate the resource use and environmental impacts of petrochemical production (Wernet et al. 2008, 2009). The tool can be applied for the purposes of screening LCA, supply chain management and process design. The tool estimates the inventory data, of chemicals’ production stage, based on the molecular structure of a chemical. The tool has been developed by using neural network model to estimate the key production parameters directly from the chemicals’ molecular structure. The neural network model was established on mass and energy flow data on petrochemicals productions of 338 chemicals, based on industrial production data and on the ecoinvent database. The molecular structure-based model offers several advantages, such as, a low number of inputs data and a priori knowledge of the production process is not required. As an input ten chemical descriptors are required (i.e. number of nitrogen and halogen atoms, molecular weight, etc.). Results are cradle-to gate inventory data, as well as related Life Cycle Impact Assessment results (i.e. CED, GWP, Eco-indicator 99 score). Also, the tool provides an uncertainty analysis of the estimated values, allowing an assessment of their prediction accuracy.

WW LCI v3.0

A further tool (programmed in MS Excel) that is designed to calculate LCI of wastewater discharges down to the drain or directly into the aquatic environmental (Munoz et al. 2017; Kalbar et al. 2018; Munoz 2019). It provides a database on the wastewater treatment levels and sludge disposal practices of 81 different countries. Its database has been established on public data sources (i.e. Eurostat, OECD statistic and country-specific statistic). The Inventory data are calculated including infrastructure requirements, energy consumption, auxiliary materials for the treatment of wastewater, disposal of sludge and sewage. Four wastewater treatment levels are accounted: primary treatment (suspended solid settling), secondary treatment (aerobic biological treatment), tertiary treatment (nitrogen and phosphorus removal, sand filtration, disinfection by chlorination) and septic tank (on-site primary treatment). The outputs of wastewater level treatments are, i.e. fraction of chemical of effluent discharged in natural water body and the fraction of chemical discharged in the sludge. Sludge treatments by means of anaerobic digestion, composting, incineration and landfilling are modelled. The sludge treatments processes are assessed by mass balance equation (i.e. conversion of sludge into compost, combustion of biogas), and the data for the infrastructures are based on the ecoinvent data set (Munoz et al. 2017). In the model proposed by WW LCI, the fate of chemical (i.e. degradability) is included. Thus, the exchanges with the environment are assessed on the basis of the predicted behaviours (fate factor values) of the chemical in the wastewater treatment plant (WWTP) and in the environment. The fate factors of chemicals in WWTPs are assessed using SimpleTreat model (Franco et al. 2013) and USES-LCA (Van Zelm et al. 2009) is used to estimate the fate of the chemical in the environment.

Table 1.3 (on the next two pages) summarizes the key aspects of these various tools described in the preceding paragraphs above.

4 Top-Down Approach

Top-down approaches start, opposite to the above-described bottom-up approaches, from the most general level and proceed towards a much more (case-study) specific level. In an LCI analysis, a top-down approach uses general data (e.g. at global or national level) to derive the input and output flows definition of a specific system.

4.1 Economic Input–Output Analysis

Economic input–output (EIO) analysis is a macroeconomic technique where the complex interdependencies across different sectors/branches of an economy are represented by a set of linear equations. EIO analysis was theorized and developed by Leontief in the 1930s (Leontief 1936). The most basic form of EIO analysis involves so-called input–output tables. Such tables quantify the supply chain for all sectors of an economy displaying information using a series of rows and columns of data. Industry sectors are listed in the heads of each row and each column. The data in each row represents the amount of output sent from an industrial sector to the different column sectors for productive use. The data in each column corresponds to the amount of inputs used in that industrial sector.

In the 1970s, along with increasing concerns on the environment, Leontief and co-authors proposed to include environmental externalities in input–output models. In such a way, pollutant emissions and natural resources consumption are treated as sectors of the input–output model (Leontief and Ford 1970). In 1995, Lave and co-workers proposed the economic input–output life-cycle analysis (EIO-LCA) to address the problem of subjective boundary definition (Lave et al. 1995). The EIO-LCA models incorporate economic matrices of sector-based environmental and resource-use coefficients. Those models allow the LCA researchers and practitioners to evaluate the environmental impacts associated with a product, as represented by one or more economic sectors. Since the initial implementation in the US (Hendrickson et al. 1998), EIO-LCA models have been developed for many countries around the world and have been widely used for analysing a wide range of products, including fuels, chemicals, and plastics, as described in the following.

4.1.1 Mathematical Structure of Economic Input-Output Life-Cycle Assessment

The essential tool for the analysis is a matrix indicating sector-to-sector flows of purchases, which is denoted by A and called the direct requirements coefficient matrix. An element aij of matrix A represents the monetary value of the input required from sector i to produce one unit of monetary output of sector j (i = 1…n, and j = 1…n) (e.g. value of electricity required to produce one monetary unit of steel). I is the identity matrix of dimensions n by n (to account for the output of each sector production stage itself). Finally, Y represents the vector of the required output of each sector (e.g. 100 dollars of steel) and X represents the vector of total inputs of each sector (e.g. dollars of electricity required to produce 100 dollar of steel). Then X can be obtained by multiplying the matrix (I + A) by the vector of required outputs:

In (1.1) only direct (first-level supplier) inputs are taken into considerations. It is possible to consider also the second-level supplier requirements considering the matrix AA, the third-level supplier requirements by the AAA matrix, etc. Therefore, the X vector including all supplier outputs can be obtained as:

The expression (I + A + AA + AAA + …) can be shown to be equivalent to (I − A)−1; then (1.2) can be written as:

The (I − A)−1 matrix is commonly named Leontief Inverse matrix.

This EIO analysis can be adapted to environmental purposes. An environmental impact would characterize the discharges into air, water, underground, and on land of an output from each industry. Suppose B is a k by n matrix of environmental burden coefficients, where bkj is environmental burden k (e.g. carbon monoxide emissions) per dollar output of sector j; and M is the vector of total environmental burdens. Then, the vector of total environmental burdens is calculated by:

4.1.2 EIO-LCA Applied to Chemicals

Lave et al. (1995) proposed the first EIO-LCA approach with the aim of capturing economic interdependencies for examining the economy-wide environmental implications of a product. The authors compared plastic cups to paper cups and they applied the US economic input–output tables that were compiled for 519 sectors. The two products have been approximated by the corresponding commodity sectors. In detail, they modelled the paper cups by using the data for the industry sector ‘Paperboard Containers and Boxes’ and the plastic cups by using the industry sector ‘Plastic Materials and Resins’. The electricity consumption and the toxic chemicals release have been used as environmental burdens. The toxic chemicals release was characterized by destination media (air, water, underground, land) and by substances (320 toxics were included). Results highlighted the relevance of indirect suppliers that are generally neglected by ‘conventional’ (bottom-up) LCI.

Joshi (1999) proposed an analytical model consisting of the 498 sectors of US economic input–output tables augmented with various sector-level environmental burden vectors, including energy use, non-renewable ores use, conventional pollutant emissions, toxic releases, hazardous solid waste generation and fertilizer use (as an indicator of the eutrophication potential). Impacts from individual pollutant emissions were aggregated using appropriate characterization factors in global warming, acidification, ozone depletion, toxicity and energy use. In addition to the EIO-LCA approach, Joshi (1999) proposed five alternative models that (i) introduce a new hypothetical sector entering into the economy (Model II); (ii) use selective disaggregation of aggregate input–output data (Models III and IV); (iii) include use and end-of-life phases (Models V and VI). These alternative models were, subsequently, classified as ‘hybrid approaches’. The author presented a case study comparing steel and plastic automobile fuel tank systems and he also compared the results of EIO-LCA to a conventional process-based LCA. The author highlighted that despite using very different methodologies there is a high degree of correspondence between the comparable results from these two approaches. Moreover, the absolute amount of impacts reported in EIO-LCA is generally lower than those reported by the process-based approach (Joshi 1999).

In 1999, the EIO-LCA method was transformed into a user-friendly online tool by researchers at the Green Design Institute of Carnegie Mellon University. The website is free for non-commercial use. The EIO-LCA models available on the site apply the EIO-LCA method to various national and state economies. Each model is comprised of national economic input–output models and publicly available resource use and emissions data. Since 1999, the online tool has been accessed over 1 million times by researchers, LCA practitioners, business users, students and others (CMU Green Design Institute 2019).

While the method found applications in many countries (e.g. Australia, Germany, Denmark, Japan), in the USA the efforts in the EIO-LCA development continued and permitted great progresses. In 2005, a new environmental database for the US, named ‘Comprehensive Environmental Data Archive (CEDA) 3.0’ was launched (Suh 2005). CEDA 3.0 covered a total of 1,344 environmental flows (both resource and emissions). The toxic pollutants part of the database comprises about one thousand toxic chemicals. Data derived from Toxics Releases Inventory, National Toxics Inventory and National Center for Food and Agricultural Policy databases.

The next step was made by Hawkins and co-authors (Hawkins et al. 2007). The authors combined Materials Flow Analysis (MFA) and EIO model to create the Mixed-Unit Input-Output (MUIO) model. In their approach the sector output, which is generally considered in monetary terms, can be also expressed in physical units. Therefore, the MUIO model provides the total physical and monetary output required to meet an additional final demand by sector and stage, in the supply chain. In their study, they tested the model by using physical and monetary units to describe the output of sectors that produce heavy metals and products containing these metals. Material flow data were obtained from the US Geological Survey.

Generally, EIO-LCA allows evaluating the environmental impacts of chemical emissions just on the basis of the overall mass of chemicals released into the environment, without taking into consideration the potential concentration of those chemicals in the different environmental media. This is performed through the data supplied by individual plants of specified industries about their toxic releases into air, water, land and underground. Wright et al. (2008) integrated EIO-LCA with a multimedia fate and transport model (CHEMGL) and a human risk assessment tool for a screen-level-analysis to examine the relative risk posed during each life cycle stage of a chemical. The CHEMGL model was applied to predict the chemical concentration in environmental compartments, for each life cycle stage of a chemical (the production, manufacturing and consumption stages). The predicted concentration is then input into a risk assessment tool. The latter assesses the human and fish risk potential, per unit of chemical released, by combining toxicity data (i.e. reference dose) and exposure via inhalation and ingestion. Finally, the relative risk, in units of toluene equivalent, is determined by multiplying the risk potential per unit release by the environmental release into the air boundary layer, surface water, surface soil and groundwater based on EIO-LCA model. This integrated life cycle methodology allows chemical designers to evaluate each stage and assess areas where the risk can be minimized using alternative chemicals or process operations.

Recently, Meng and Sager (2017) provided a global view of China’s petrochemical industry’s energy consumption and CO2 emissions by using an EIO-LCA model from both production and demand perspectives. The method permits the authors to calculate not only the direct energy consumption and energy-related CO2 emissions but also the indirect amounts that can be identified throughout the supply chain. The results of the study indicate that the indirect energy consumption and CO2 emissions embodied and conveyed throughout the supply chain are highly significant, though often overlooked. The Chinese petrochemical industry accounts for 23% of the total energy consumption and 32% of CO2 emissions. These indirect loads cannot be neglected in determining the role and responsibilities of sectors in energy conservation and emissions reduction.

The EIO-LCA addresses the conventional LCA limitation of excluding a large set of activities from the analysis with narrow study boundaries since the entire national economy is included in the boundary of this analysis. Moreover, it offers the advantage that analyses can be performed in minutes, not months. Also, all data used by the model are publicly available government data and therefore valid and accepted. The main limitation of EIO-LCA analysis is the problem of data aggregation, resulting in a limited granularity: the product of interest, indeed, is approximated by its commodity sector in the national input–output tables with respect to input requirements; in this way the inventory data suffers from scarce accuracy.

4.2 Data Mining, Web Mining and Big Data Applied to Chemicals

According to Hand et al. (2001), ‘Data mining is the analysis of (often large) observational data sets to find unsuspected relationships and to summarize the data in novel ways that are both understandable and useful to the data owner’.

Data mining could be another suitable approach to compile chemical inventories (Cashman et al. 2016). Relevant examples in chemical fields are the exploitation of the national inventories to develop EIO-LCA models. For example, Suh (2005) integrated US EPA Toxics Release Inventory (TRI) and National Emissions Inventory (NEI) to develop sector-average emission data for all industries covered in the EIO tables, including petrochemical production. Recently, Sengupta et al. (2015) highlighted that the potential for underreporting in national emission inventories can result in significant potential for underestimation of emission factors when calculated on a sector-average basis. In this regard, the authors proposed an approach that can be used to improve the estimation of sector-average emission factors for use in EIO-LCA studies and, starting from national inventories, used it for a case study estimating environmental impacts of ethanol and gasoline.

Data mining is an important approach also for gathering characterization data. For example, using the physicochemical and toxicity data available in databases from the European Chemical Agency, the European Food Safety Authority and the University of Hertfordshire, EC-JRC has calculated ecotoxicity and toxicity characterization factors for over 6,000 chemical substances (Saouter et al. 2018).

Web mining is the use of data mining to discover and extract information from web pages. In the last few years, many national statistical institutes provide open data concerning several specific sectors (e.g. environment, economy, society, etc.) that could be extremely useful for implementing LCA models. Often such data have different formats and are incomplete and it is therefore difficult to share and compare them. In order to overcome these problems, the Semantic Web proposes standards to promote common data formats and exchange protocols on the web (Berners-Lee et al. 2001). The US EPA is implementing semantic management of linked open data (LOD) for environmental databases such as Toxics Release Inventory (TRI) and the Chemical Data Reporting tool (CDR). This shift can support a transition to semantic data mining for improved inventory modelling. In this sense, very recently, some scientific papers analysed the use of semantic information resources for improving LCA (Cashman et al. 2016; Ingwersen et al. 2015; Kuczenski et al. 2016; Zhang et al. 2015). In the field of chemical products and processes, the work of Cashman et al. (2016) is of special interest. These authors have proposed a method for standardizing and automating the discovery and use of publicly available data at the US EPA for chemical-manufacturing LCI. The method is applicable to chemicals traced in CDR. In 2018, Mittal and co-authors presented two coupled ontologies, i.e. semantic data models, with the ultimate goal of developing an automated life cycle inventory modelling (Mittal et al. 2018). The ontologies, called Lineage and Process, were developed in the Web Ontology Language. The described ontological modelling provides a means to identify and eventually predict the synthesis route of a chemical, while connecting this knowledge with relevant process information.

The definition of Big Data generally refers to large and unwieldy groups of data that regular database management tools have difficulty in capturing, storing, sharing and managing (Sharma and Gulia 2014). Recently, the use of Big Data to collect a great amount of data for implementing LCI has prompted much interest in the LCA community. Cooper et al. (2013) reported several examples of Big Data collected by national agencies and institutions and used in LCI and LCIA. Song et al. (2017) analysed the use of Big Data in environmental performance evaluation with a focus on thermal power plants. The authors highlighted that combined use of Big Data and LCA should be significantly improved before being successfully applied to assess environmental performances.

5 Hybrid Approach Applied to Chemicals

Hybrid techniques attempt to combine the benefits of both process-based and EIO analyses, while minimizing their limitations. Process-based analysis is accurate and detailed but is generally time-intensive and can be subject to considerable variability. EIO analysis is relatively fast and representative at the national level; moreover, the system boundaries are wide-ranging; however, it can be less accurate than process-based LCI (Treloar 1997). As stipulated above, Suh and Huppes (2005) distinguished three categories of hybrid analyses: (i) tiered hybrid or process-based hybrid; (ii) IO-based hybrid and (iii) integrated hybrid. Tiered hybrid analysis utilizes process-based inventory data for use and disposal phases, as well as for several important upstream processes, and then the remaining input data are imported from an EIO-based LCI (Suh and Huppes 2005). An example of its first application is the Model II proposed by Joshi (1999). Then, in order to improve the tiered hybrid analysis, Suh and Huppes (2002) introduced the Missing Inventory Estimation Tool (MIET) that combines the strengths of process-based LCA and EIO-LCA. The general strategy of MIET is to minimize the use of EIO tables for major processes by restricting its application only to the flows located at the margin of the system boundaries. IO-based hybrid analysis is based on the extraction of a particular path from EIO table and substituting them with process-based data (Islam et al. 2016). The disaggregation procedure is the most essential part of IO-based hybrid approach. Models III and IV proposed by Joshi (1999) concern disaggregation procedures in hybrid approaches. An integrated hybrid approach implies that the process-based data is fully incorporated into the IO model, represented in a technology matrix by physical units per unit operation time of each process while the input–output system is represented by monetary units (Suh et al. 2004).

Hybrid approach has been applied successfully also to the chemical sector. Biofuel sector shows several applications of hybrid LCI analysis. Life cycle water consumption of corn-based ethanol, soybean biodiesel, cellulosic ethanol from switch grass and microbial biodiesel was assessed by Harto et al. (2010). The tiered hybrid model was applied due to the wide range of technologies being covered. Tiered hybrid analysis was used also by Strogen and Horvath (2013) in order to assess life-cycle greenhouse gas (GHG) emissions of petroleum and biofuels. Hybrid LCI analysis detailed physical data were used as inputs to model energy production and consumption processes, and economic data were used to characterize manufacturing, construction and maintenance activities. In Watanabe et al. (2016) a hybrid approach was applied to the first- and second-generation ethanol production in Brazil. The method is similar to IO-based hybrid analysis: data on direct and downstream requirements are collected according to the process analysis, whereas the remaining upstream requirements are covered by EIO tables. Data for each biorefinery from process-based LCI were inserted as a group of new sectors and commodities into the IO model by following the approach of Joshi (1999). A very promising approach is the combined use of multi-objective optimization and integrated hybrid LCA (Yue et al. 2016). This quantifies both direct and indirect environmental impacts and incorporates them into the decision-making process in addition to the conventional economic criteria. That approach has been demonstrated in the bioethanol supply chain. Liu et al. (2018) have applied the tiered hybrid model to the environmental assessment of biofuels from corn-stover: the impacts caused by the direct chemical emissions are evaluated by process-based approach, whereas the environmental impacts caused by the indirect chemical emissions are analysed by the EIO-LCA model. The indirect emissions are found to be significant.

Two further examples of hybrid approach applied to chemical products are the studies of Tatari et al. (2012) and Rodríguez-Alloza et al. (2015) on warm-mix asphalts. The former study developed a tiered hybrid LCA model and focused on the thermodynamic aspects, the latter one performed an IO-based hybrid analysis.

A final example is the work of Alvarez-Gaitan et al. (2013), which has developed a tiered hybrid LCA of water treatment chemicals. The authors compared these results with process and input–output models for caustic soda, sodium hypochlorite, ferric chloride, aluminium sulphate, fluorosilicic acid, calcium oxide and chlorine gas. In many cases, very close results have been obtained from process-based- and hybrid-LCA. Moreover, the research shows that where there are important price fluctuations in the raw materials, hybrid modelling provided a more robust output.

Discussion and Conclusions

Existing life cycle databases cover only a portion of the vast and increasing variety of chemicals available on the market. LCA practitioners willing to investigate the environmental footprint of novel products and novel production routes have the need to obtain first-hand representative data on the related material and energy flows. This chapter offered a concise overview of the possible approaches to generating inventory data.

In bottom-up approaches, the LCI is developed from process considerations, ranging from the mere knowledge of reaction stoichiometry to a full-fledged process simulation, passing through process design calculations of gradually increasing complexity. A clear advantage of this approach is the control on the modelled system. For the LCA analyst that develops a process-based LCI, the gate-to-gate production process of a target chemical is not anymore a non-detailed ‘black box’, with just known inputs and outputs, as in the traditional LCA practice. As such, LCIs generated from process-based considerations are potentially more detailed than LCIs found in commercial databases and offer to their creators the flexibility to assess changes in the process conditions and an increased awareness of the uncertainties in the inventory data.

Clearly enough, increasing the level of detail in LCI generation comes at the price of increasing time effort and increasing requirements of domain knowledge in industrial chemistry and chemical engineering. In particular, process modelling and simulation is a powerful approach, but solid expertise is required to generate meaningful, representative LCI results. The trade-off between accuracy in LCI modelling and time/expertise constraints has to be clear to the LCA practitioner. Not every LCA study requires the highest level of detail in inventory compilation and, depending on the specific goal and scope, the choice of simpler process-based methods can be more than adequate.

In this respect, dedicated LCI software tools have been developed to streamline the compilation of inventories for specific systems. The development of these tools is a rather new area of LCA-related research. In fact, only 5 dedicated LCI software could have been identified for the area of synthesis of chemical products. These tools are not in all cases covering all the life cycle stages of the actual chemical synthesis—i.e. some of them focus only on a single stage (e.g. discharges) of the entire life cycle of a chemical. One part of these tools also include an LCIA module and therefore allows a full LCA computation of (the synthesis of) chemical products. These dedicated tools are particularly useful in screening applications. When a vast number of alternative chemicals has to be compared to identify the ‘greener’ choice (e.g. for a solvent), it would be unfeasible to elaborate process flow diagrams for the production of each compound, whereas the use of a dedicated tool can give a swift, approximate result. On the other hand, care should be given in using such tools properly, for example avoiding extrapolation to product categories/production routes not covered by the software (e.g. using for the production of a chemical via a bio-based route a tool trained only for conventional, fossil-based processes).

At the other end of the spectrum compared to bottom-up methods, top-down approaches use general data to compile LCI for a specific system. EIO-LCA takes a top-down approach and treats the whole economy as the boundary of analysis, thus overcoming the conventional LCA limitation of excluding a large set of activities from the analysed system because of a narrow boundary definition. EIO-LCA offers the advantage that analyses can be performed very quickly. Also, all data used by the model are publicly available government data; therefore, the issue of preserving the confidentiality of industrially sensitive information does not exist. The main limitation of EIO-LCA analysis is the problem of aggregation. Therefore, the product of interest has to be approximated by its commodity sector in the national input–output tables with respect to input requirements and environmental coefficients. Moreover, EIO-LCA accounts the upstream environmental burdens associated with raw materials extraction and manufacturing phases, but not those associated with use and end-of-life options. Lastly, EIO-LCA allocates environmental burdens based on market value.

Web mining will continue and even increase over the coming decades and it shows enormous potential for applications in LCA. Currently, there is the need of standardization of format and the Semantic Web offers a common framework for standardizing data. Big Data appears as a vital source of data and the LCA community has already started to use it. However, this open and collaborative model for data production creates new challenges in data integration and harmonization. Therefore, there is also a need to verify applicability, reliability and stability of an application of Big Data in the assessment of environmental performances. All of this is fundamental in order to provide environmental performance assessments by using Big Data in order to provide a scientific basis for supporting environmental management policies. However, to date, a significant improvement in the integration of Big Data and LCA is needed for their successful application in environmental performance evaluations.

A hybrid approach combines the strengths of top-down input–output analysis and bottom-up process analysis, allowing for specificity, accuracy and system completeness while eliminating boundary truncation errors. That approach allows direct and indirect burdens to be included and some authors demonstrate that indirect burdens are often not negligible. Free online input–output tools, like EIO-LCA (Carnegie Mellon University Green Design Institute 2012), would be helpful in the future development of hybrid models.

With such a variety of methods available for LCI compilation, the LCA analyst has to make a choice based on the specific study to be developed. Meyer et al. (2019) recently introduced the concept of ‘purpose-driven reconciliation’ to propose a logical framework for approach selection. Depending on the goal and scope of the LCA study to perform (e.g. whether it has a regulatory or design purpose, whether it assesses a novel or existing substance, whether it is a screening of alternative chemicals or a detailed comparison of process schemes, etc.), the most important constraint can be complying with a data quality criterion, or meeting the timeline to decision, or addressing specific data gaps. By analysing the trade-offs of each available approach, as has been briefly done in this chapter, the LCA practitioner should identify which of these generally meets the constraints and opt for it, while leaving open the possibility of using alternative methods to retrieve specific missing information or even to revisit the decision-making approach, if needed.

References

Alvarez-Gaitan PJ, Peters MG, Rowley VH et al (2013) A hybrid life cycle assessment of water treatment chemicals: an Australian experience. Int J Life Cycle Assess 18:1291–1301

Amelio A, Genduso G, Vreysen S et al (2014) Guidelines based on life cycle assessment for solvent selection during the process design and evaluation of treatment alternatives. Green Chem 16:3045

Arvidsson R, Molander S (2016) Prospective life cycle assessment of epitaxial graphene production at different manufacturing scales and maturity. J Ind Ecol 21:1153–1164

AusLCI (2019) Australian Life Cycle Inventory Database Initiative. http://www.auslci.com.au. Accessed 30 May 2019

Azapagic A, Clift R (1999) Allocation of environmental burdens in multiple-function systems. J Cleaner Prod 7:101–119

Berners-Lee T, Hendler J, Lassila O (2001) The semantic web. Sci Am 284:34–43

Boustead I (2005) Eco-profiles of the European plastics industry. Association of Plastics Manufacturers in Europe (Plastics Europe), Brussels

Caduff M, Huijbregts MAJ, Koehler A et al (2014) Scaling relationships in life cycle assessment. J Ind Ecol 18:393–406

Capello C, Hellweg S, Badertscher B et al (2007) Environmental assessment of waste-solvent treatment options. J Ind Ecol 4:26–38

Capello C, Hellweg S, Badertshcer B et al (2005) Life cycle inventory of waste solvent distillation: statistical analysis of empirical data. Environ Sci Technol 39:5885–5892

Capello C, Hellweg S, Hungerbühler K (2008) Environmental assessment of waste-solvent treatments options. J Ind Ecol 12:111–127

Carnegie Mellon University (CMU) Green Design Institute (2019) Economic input-output life cycle assessment (EIO-LCA) model. CMU, Pittsburgh. http://www.eiolca.net. Accessed 28 Feb 2019

Cashman SA, Meyer DE, Edelen NA et al (2016) Mining available data from the United States environmental protection agency to support rapid life cycle inventory modeling of chemical manufacturing. Environ Sci Technol 50:9013–9025

Cefic (2018). FACTS & FIGURES of the European chemical industry. http://www.cefic.org/Documents/RESOURCES/Reports-and-Brochure/Cefic_FactsAnd_Figures_2018_Industrial_BROCHURE_TRADE.pdf. Accessed 28 Feb 2019

Chase WM (1998) NIST-JANAF Thermochemical Tables. American Institute of Physics for the National Institute of Standards and Technology, Washington, DC

Cooper J, Noon M, Jones C et al (2013) Big data in life cycle assessment. J Ind Ecol 17:796–799

CPM (2019) SPINE@CPM— the operational prototype for industrial LCA databases. Center for Environmental Assessment of Product and Material Systems, Chalmers University, Göteborg, Sweden. http://cpmdatabase.cpm.chalmers.se/AboutDatabase_1.htm. Accessed 30 May 2019

CRMD (2019) Canadian raw materials database. University of Waterloo. http://uwaterloo.ca/canadian-raw-materials-database/. Accessed 30 May 2019

Curran MA (2012) Sourcing Life Cycle Inventory Data. In: Curran MA (ed) Life cycle assessment handbook: a guide for environmentally sustainable products. Wiley, Hoboken, NJ p, pp 105–141

Dal Pozzo A, Guglielmi D, Antonioni G et al (2017) Sustainability analysis of dry treatment technologies for acid gas removal in waste-to-energy plants. J Cleaner Prod 162:1061–1074

de Camargo AM, Forin S, Macedo K et al (2018) The implementation of organizational LCA to internally manage the environmental impacts of a broad product portfolio: an example for a cosmetics, fragrances, and toiletry provider. Int J Life Cycle Assess 24(1):104–116

De Rosa-Giglio P, Fontanella A, Gonzalez-Quijano G, Ioannidis I, Nucci B, Brugnoli F (2018) Product environmental footprint category rules - Leather. https://ec.europa.eu/environment/eussd/smgp/pdf/PEFCR_leather.pdf. Accessed 19 Nov 2019

ECHA (2018) 21,551 chemicals on EU market now registered. https://echa.europa.eu/-/21-551-chemicals-on-eu-market-now-registered. Accessed 28 Feb 2019

Eissen M, Lenoir D (2017) Mass efficiency of alkene syntheses with tri-and tetrasubstituted double bonds. ACS Sustain Chem Eng 5:10459–10473

Elvers (ed) (2011) Ullmann’s encyclopedia of industrial chemistry, 7th edn. Wiley-VCH, Weinheim

European IPPC Bureau (2019) Reference documents under the IPPC directive and the IED. http://eippcb.jrc.ec.europa.eu/reference. Accessed 28 Feb 2019

Foo D (2017) Chemical engineering process simulation, 1st edn. Elsevier, Amsterdam

Franco A, Stuikis J, Gouin T et al (2013) Evolution of the sewage treatment plant model SimpleTreat: use of realistic biodegradability tests in probabilistic model simulations. Integr Environ Assess Manage 9:569–579

Gavankar S, Suh S, Keller AA (2015) The role of scale and technology maturity in life cycle assessment of emerging technologies: a case study on carbon nanotubes. J Ind Ecol 19:51–60

Green D, Perry R (2007) Perry’s chemical engineer’s handbook, 8th edn. McGraw-Hill, New York, NY

Khoo HH, Isoni V, Sharratt PN (2018) LCI data selection criteria for a multidisciplinary research team: LCA applied to solvents and chemicals. Sustain Prod Consum 16:68–87

Hall S (2017) Rules of thumb for chemical engineers, 6th edn. Elsevier, Amsterdam

Hand D, Mannila H, Smyth P (2001) Principles of data mining. MIT Press, Cambridge, MA

Harto C, Meyers R, Williams E (2010) Life cycle water use of low-carbon transport fuels. Energ Policy 38:4933–4944

Hawkins T, Hendrickson C, Higgins C et al (2007) A mixed-unit input-output model for environmental life-cycle assessment and material flow analysis. Environ Sci Technol 41:1024–1031

Hendrickson C, Horvath A, Joshi S et al (1998) Economic input-output models for lifecycle assessment. Environ Sci Technol 13:184A–191A

Hischier R, Hellweg S, Capello C et al (2005) Establishing life cycle inventories of chemicals based on differing data availability. Int J Life Cycle Assess 10:59–67

Hischier R, Kwon NH, Brog J-P et al (2018) Early-stage sustainability evaluation of nanoscale cathode materials for Lithium ion batteries. Chemsuschem 11:2068–2076

Holmes JL, Aubry C (2012) Group additivity values for estimating the enthalpy of formation of organic compounds: an update and reappraisal. 2. C, H, N, O, S, and Halogens. J Phys Chem A 116:7196–7209

Huang H-J, Ramaswamy S, Tschirner UW et al (2008) A review of separation technologies in current and future biorefineries. Sep Purif Technol 62:1–21

Ingwersen WW, Hawkins TR, Transue TR et al (2015) A new data architecture for advancing life cycle assessment. Int J Life Cycle Assess 20:520–526

IDEA (2019) Inventory database for environmental analysis. National Institute of Advanced Industrial Science and Technology, Japan. http://www.idea-lca.jp. Accessed 30 May 2019

ISO (2006a) Environmental management—life cycle assessment—principles and framework. Geneva, International Standardization Organization (ISO), European Standard EN ISO 14040

ISO (2006b) Environmental management—life cycle assessment—requirements and guidelines. Geneva, International Standardisation Organisation (ISO), European Standard EN ISO 14044

Islam S, Ponnambalam SG, Loong Lam H (2016) Review on life cycle inventory: methods, examples and applications. J Cleaner Prod 136:266–278

Jiménez-González C, Kim S, Overcash MR (2000) Methodology for developing gate-to-gate life cycle inventory information. Int J Life Cycle Assess 5:153–159

Jiménez-González C, Overcash MR (2014) The evolution of life cycle assessment in pharmaceutical and chemical applications—a perspective. Green Chem 16:3392–3400

Joshi S (1999) Product environmental life-cycle assessment using input output techniques. J Ind Ecol 3:95–120

JRC (2019) Life cycle data network. Joint Research Centre, European Union. https://eplca.jrc.ec.europa.eu/LCDN/. Accessed 30 May 2019

Kalbar PP, Munoz I, Birkved M (2018) WW LCIv2: a second-generation life cycle inventory model for chemicals discharged to wastewater system. Sci Total Environ 622:1649–1657

Köhler A, Hellweg S, Recan E et al (2007) Input-dependent life-cycle inventory model of industrial wastewater-treatment processes in the chemical sector. Environ Sci Technol 41:5515–5522

Kroschwitz JI, Seidel A (eds) (2004) Kirk-Othmer encyclopedia of chemical technology, 5th edn. Wiley-Interscience, Weinheim

Kuczenski B, Davis CB, Rivela B et al (2016) Semantic catalogs for life cycle assessment data. J Cleaner Prod 137:1109–1117

Linstrom PJ, Mallard WG (eds) (2019) NIST Chemistry WebBook, NIST Standard Reference Database Number 69. National Institute of Standards and Technology, Gaithersburg, MD. https://doi.org/10.18434/T4D303. Accessed 28 Feb 2019

Lari GM, Pastore G, Haus M et al (2018) Environmental and economical perspectives of a glycerol biorefinery. Energy Environ Sci 11:1012–1029

Laurent A, Bakas I, Clavreul J et al (2014) Review of LCA studies of solid waste management systems—Part I: Lessons learned and perspectives. Waste Manage 34:573–588

Lave LB, Cobas-Flores E, Hendrickson CT et al (1995) Using input-output analysis to estimate economy-wide discharges. Environ Sci Technol 29:420A–426A

Leontief WW (1936) Quantitative input and output relations in the economic systems of the United States. Rev Econ Stat 18:105–125

Leontief WW, Ford D (1970) Environmental repercussions and the economic structure: an input–output approach. Rev Econ Stat 52:262–271

Li S, Feliachi Y, Agbleze S, Ruiz-Mercado GJ et al (2018) A process systems framework for rapid generation of life cycle inventories for pollution control and sustainability evaluation. Clean Technol Environ Policy 20:1543–1561

Liu C, Huang Y, Wang X et al (2018) Total environmental impacts of biofuels from corn stover using a hybrid life cycle assessment model combining process life cycle assessment and economic input-output life cycle assessment. Integr Environ Assess Manage 14:139–149

Meng L, Sager J (2017) Energy consumption and energy-related CO2 emissions from China’s petrochemical industry based on an environmental input-output life cycle assessment. Energies 10:1–12

Meyer DE, Mittal VK, Ingwersen WW et al (2019) Purpose-driven reconciliation of approaches to estimate chemical releases. ACS Sustain Chem Eng 7:1260–1270

Mittal V, Bailin S, Gonzalez M et al (2018) Toward automated inventory modeling in life cycle assessment: the utility of semantic data modeling to predict real-world chemical production. ACS Sustain Chem Eng 6:1961–1976

Moriguchi Y, Kondo Y, Shimizu H (1993) Analyzing the life cycle impact of cars: the case of CO2. Ind Environ 16:42–45

Munoz I, Otte N, Van Hoof G et al (2017) A model and tool to calculate life cycle inventories of chemicals discharged down the drain. Int J Life Cycle Assess 22:986–1004

Munoz I (2019) Wastewater life cycle inventory initiative. WW LCI 3.0: changes and improvements to WW LCI v2. 2.-0 LCA Consultants, Aalborg

NREL (2019) U.S. Life cycle inventory database. National Renewable Energy Laboratory, Lakewood, CO, USA. https://www.nrel.gov/lci/. Accessed 30 May 2019

Papadokonstantakis S, Karka P, Kikuchi Y et al (2016) Challenges for model-based life cycle inventories and impact assessment in early to basic process design stages. In: Ruiz-Mercado G, Cabezas H (eds) Sustainability in the design, synthesis and analysis of chemical engineering processes. Butterworth-Heinemann, Oxford, p 295

Parvatker AG, Eckelman MJ (2019) Comparative evaluation of chemical life cycle inventory generation methods and implications for life cycle assessment results. ACS Sustain Chem Eng 7:350–367

Piccinno F, Hischier R, Seeger S et al (2016) From laboratory to industrial scale: a scale-up framework for chemical processes in life cycle assessment studies. J Cleaner Prod 135:1085–1097

Raymond MJ, Slater CS, Savelski MJ (2010) LCA approach to the analysis of solvent waste issues in the pharmaceutical industry. Green Chem 12:1826–1834

Righi S, Baioli F, Dal Pozzo A et al (2018) Integrating life cycle inventory and process design techniques for the early estimate of energy and material consumption data. Energies 11:970

Rodriguez-Alloza AM, Malik A, Lenzen M et al (2015) Hybrid input-output life cycle assessment of warm mix asphalt mixtures. J Cleaner Prod 90:171–182

Saouter E, Biganzoli F, Ceriani L, et al (2018) Environmental footprint: update of life cycle impact assessment methods—ecotoxicity freshwater, human toxicity cancer, and non-cancer, EUR 29495 EN. Publications Office of the European Union, Luxembourg, ISBN 978-92-79-98183-8. JRC114227. https://doi.org/10.2760/611799

Sengupta D, Hawkins TR, Smith RL (2015) Using national inventories for estimating environmental impacts of products from industrial sectors: a case study of ethanol and gasoline. Int J Life Cycle Assess 20:597–607

Seyler C, Hellweg S, Monteil M et al (2004) Life cycle inventory for use of waste solvent as fuel substitute in the cement industry: a multi-input allocation model. Int J Life Cycle Assess 10:120–130

Seyler C, Hofstetter TB, Hungerbuhler K (2005) Life cycle inventory for thermal treatment of waste solvent form chemical industry: a multi-input allocation model. J Cleaner Prod 13:1211–1224

Sharma A, Gulia P (2014) Analysis of big data. IJCSMC 3:56–68

Shibasaki M, Fischer M, Barthel L (2007) Effects on life cycle assessment—scale up of processes. In: Takata S, Umeda Y (eds) Advances in life cycle engineering for sustainable manufacturing businesses. Springer, London, London, p 377

Simon B, Bachtin K, Kilic A et al (2016) Proposal of a framework for scale-up life cycle inventory: a case of nanofibers for lithium iron phosphate cathode applications. Integr Environ Assess Manage 12:465–477

Sinnott RK (2005) Coulson and Richardson’s chemical engineering series: volume 6—chemical engineering design, 4th edn. Butterworth-Heinemann, Oxford

Smith RL, Ruiz-Mercado GJ, Meyer DE et al (2017) Coupling computer-aided process simulation and estimations of emissions and land use for rapid life cycle inventory modeling. ACS Sustain Chem Eng 5:3786–3794

Song R, Keller AA, Suh S (2017) Rapid life-cycle impact screening using artificial neural networks. Environ Sci Technol 51:10777–10785

Sonnemann G, Vigon B, Rack M et al (2013) Global guidance principles for life cycle assessment databases: development of training material and other implementation activities on the publication. Int J Life Cycle Assess 18:1169–1172

Strømman A (2001) LCA of hydrogen production from a steam methane reforming plant with CO2 sequestration and deposition. Paper presented at the 1st Industrial Ecology Conference, Leiden, The Netherlands, 12–14 November 2001

Strogen B, Horvath A (2013) Greenhouse gas emissions from the construction, manufacturing, operation, and maintenance of U.S. distribution infrastructure for petroleum and biofuels. J Infrastruct Syst 19:371–383

Suh S, Huppes G (2002) Missing inventory estimation tool using extended input output analysis. Int J Life Cycle Assess 7:134–140

Suh S, Lenzen M, Treloar GJ et al (2004) System boundary selection in life-cycle inventories using hybrid approaches. Environ Sci Technol 38:657–664

Suh S (2005) Developing a sectoral environmental database for input–output analysis: the comprehensive environmental data archive of the US. Econ Syst Res 17:449–469

Suh S, Huppes G (2005) Methods for life cycle inventory of a product. J Cleaner Prod 13:687–697