Abstract

There is increasing evidence that shape and texture descriptors from imaging data could be used as image biomarkers for computer-assisted diagnosis and prognostication in a number of clinical conditions. It is believed that such quantitative features may help uncover patterns that would otherwise go unnoticed by the human eye, thus offering significant advantages over traditional visual interpretation. The objective of this paper is to provide an overview of the steps involved in the process, from image acquisition to feature extraction and classification. A significant part of the work deals with the description of the most common texture and shape features used in the literature; open issues, perspectives and directions for future research are also discussed.

1 Introduction

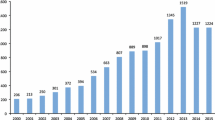

Recent technological advances, including new imaging modalities as well as storage, sharing and computing resources, have facilitated the collection of very large amounts of three-dimensional medical data [1]. In this scenario, shape and texture analysis of such data has received increasing attention in recent years. The overall objective is to extract quantitative parameters (biomarkers) from the imaging data that correlate with clinical features such as disease phenotype and/or survival. The whole process, usually referred to as radiomics, can be regarded as an improvement on the traditional practice in which medical images were used mostly as pictures for qualitative visual interpretation [2, 3]. In the management of oncologic disorders, for instance, a number of studies have supported the use of radiomics for a variety of tasks, including prediction of outcome [4, 5] and response to treatment [6, 7]; discrimination between benign, malignant, primary and metastatic lesions [8,9,10]; and classification of histologic subtypes [11].

Radiomics, however, is still a new discipline and far from mature. Significant obstacles prevent its application on a large scale, chief among them the lack of datasets large enough for building models and classifiers, and the absence of standards establishing how the biomarkers should be computed [12]. The objective of this paper is to provide an overview of the steps involved, discuss the open issues and indicate directions for future research. A significant part of the paper deals with the description of the most common texture and shape features used in the literature.

2 Methods

The flow-chart of Fig. 1 summarises the overall workflow in radiomics. Image acquisition is always the first step and can optionally be followed by a pre-processing phase. Segmentation is then required to separate the region of interest (ROI) from the background. Feature extraction is the core of the procedure and consists of computing a set of meaningful parameters (features) from the ROI. The features can then undergo a post-processing step such as selection and/or reduction. Finally, the resulting data are fed to a classifier or regression model suitable for the task at hand.

2.1 Image Acquisition

There are three main classes of medical imaging modalities providing three-dimensional data [13]: Computed Tomography (CT), Positron Emission Tomography (PET) and Magnetic Resonance Imaging (MRI).

Computed Tomography is based on the differential absorption of X-rays by different tissue types; the signal therefore depends mainly on tissue density [14]. Positron Emission Tomography estimates the metabolic activity of the tissue by measuring the radioactive decay of specific radio-tracers. The tracers used in PET contain isotopes (e.g. \(^{11}C\), \(^{15}O\) and \(^{18}F\)) that emit positrons through \(\beta^{+}\) decay. Each positron annihilates with an electron in the tissue, emitting two \(\gamma \) rays approximately 180\(^{\circ }\) apart that are detected by the sensors [15]. Finally, in Magnetic Resonance Imaging the signal comes from protons (hydrogen nuclei) contained in water and lipids. The signal in this case depends on the relaxation time, i.e. the time the nuclei take to return to the equilibrium magnetisation state once the radio-frequency excitation pulse is switched off [16].

In all these imaging modalities scanning usually proceeds axially (head to feet), producing a variable number of fixed-size axial cross-sections (slices). A three-dimensional voxel model is then reconstructed by stacking the slices.
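
Stacking the slices into a voxel array can be sketched with NumPy as below. The sketch assumes a hypothetical input format in which each slice comes as a `(z_position, pixel_array)` pair (in practice the axial position is read from the image headers) and that all slices share the same in-plane size; `stack_slices` is an illustrative name, not part of any library.

```python
import numpy as np


def stack_slices(slices):
    """Stack 2D axial slices into a 3D voxel array ordered by axial position.

    slices: iterable of (z_position, 2D array) pairs (hypothetical input
    format); all arrays must have the same in-plane shape.
    """
    # Sort by axial position so that neighbouring slices end up adjacent
    ordered = sorted(slices, key=lambda item: item[0])
    shapes = {np.shape(img) for _, img in ordered}
    if len(shapes) != 1:
        raise ValueError("need at least one slice, all with the same in-plane size")
    # Axis 0 indexes the slice, axes 1 and 2 the in-plane rows and columns
    return np.stack([np.asarray(img) for _, img in ordered], axis=0)
```

Sorting explicitly, rather than trusting the order in which the files are read, guards against slices stored out of sequence.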

2.2 Pre-processing

Pre-processing usually involves one or more of the following operations: (1) windowing (rescaling), (2) filtering and (3) resampling. Although frequently overlooked, pre-processing is a fundamental step in the pipeline with significant effects on the overall results, as shown for instance in [17, 18].

Windowing consists of applying a lower and an upper threshold to the raw intensity values returned by the scanner, thereby excluding from the analysis the values that fall outside that range. In CT, for instance, windowing is routinely used to exclude anatomic parts (e.g. bones) that are considered not relevant to the disease under investigation.
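
A minimal NumPy sketch of windowing as described here, in which out-of-range voxels are excluded (set to NaN and flagged in a boolean mask) rather than clipped. The function name `apply_window` is illustrative; the thresholds are left to the caller since suitable values depend on the modality and the tissue of interest.

```python
import numpy as np


def apply_window(volume, lower, upper):
    """Keep only voxels whose raw intensity lies in [lower, upper].

    Returns the windowed volume (excluded voxels set to NaN) and the boolean
    mask of retained voxels, so that later steps can ignore excluded values.
    """
    volume = np.asarray(volume, dtype=float)
    inside = (volume >= lower) & (volume <= upper)
    windowed = np.where(inside, volume, np.nan)
    return windowed, inside
```

When out-of-range voxels should be kept but saturated instead, `np.clip(volume, lower, upper)` is the usual alternative.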

Filtering can be carried out to reduce noise and/or to highlight features at different spatial scales. A variety of methods can be used for this purpose, such as Butterworth smoothing [18], Gaussian [19] and Laplacian of Gaussian [7, 20] filters.
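
Gaussian smoothing is separable, so a 3D filter reduces to three 1D convolutions. The NumPy-only sketch below makes this explicit; with SciPy available one would normally call `scipy.ndimage.gaussian_filter` (and `scipy.ndimage.gaussian_laplace` for the Laplacian of Gaussian). The function names, the truncation radius of four standard deviations and the replicated-border handling are assumptions of this sketch.

```python
import numpy as np


def gaussian_kernel_1d(sigma, truncate=4.0):
    """Sampled, normalised 1D Gaussian kernel of radius ceil(truncate * sigma)."""
    radius = int(np.ceil(truncate * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def gaussian_smooth(volume, sigma):
    """Separable Gaussian smoothing of an N-D array with replicated borders.

    sigma is expressed in voxels and applied equally along every axis.
    """
    out = np.asarray(volume, dtype=float)
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    for axis in range(out.ndim):
        # Pad along this axis only, then convolve every 1D line with the kernel;
        # 'valid' mode returns exactly the original length after padding by r
        pad = [(0, 0)] * out.ndim
        pad[axis] = (r, r)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(lambda line: np.convolve(line, k, mode="valid"), axis, padded)
    return out
```

Because the kernel is normalised, a constant region is left unchanged, while the noise variance is reduced in proportion to the filter width.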

Resampling involves changing the number of bits (bit depth) used for encoding the intensity values. The bit depth of the raw data depends on the scanning device and the settings used (12 and 16 bits are typical values in practice). These are usually reduced to lower values (downsampling) before feature extraction: eight, six and four bits are common choices [6, 18, 21, 22].
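
Reducing the bit depth amounts to mapping the raw intensities onto \(2^{b}\) grey levels. The sketch below assumes equal-width bins between the minimum and maximum intensity (other schemes, e.g. bins of fixed width, are also used in the literature) and finite input values; `quantise` is an illustrative name.

```python
import numpy as np


def quantise(volume, n_bits, vmin=None, vmax=None):
    """Map intensities to 2**n_bits equal-width levels 0 .. 2**n_bits - 1.

    vmin/vmax default to the data range; values outside it are clamped.
    """
    volume = np.asarray(volume, dtype=float)
    n_levels = 2 ** n_bits
    lo = volume.min() if vmin is None else vmin
    hi = volume.max() if vmax is None else vmax
    if hi <= lo:
        # Constant input: every voxel falls into the lowest level
        return np.zeros(volume.shape, dtype=int)
    scaled = (volume - lo) / (hi - lo) * n_levels
    # The maximum maps to n_levels before clamping; fold it into the top level
    return np.clip(np.floor(scaled), 0, n_levels - 1).astype(int)
```

For example, with `n_bits=2` the values 0.24 and 0.25 of a [0, 1] range fall into levels 0 and 1 respectively, which shows how strongly the bin edges can affect later texture features.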

2.3 Segmentation

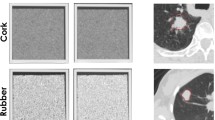

The objective of segmentation is to identify the part of the scan (ROI) that is relevant to the analysis. A ROI usually represents a clinically relevant region, for instance a potentially cancerous lesion (Fig. 2). Segmentation is a crucial step, since it provides the input to all the subsequent phases. Unfortunately, it is also a tedious and time-consuming procedure. Although a number of methods have been investigated for automating the process (among others thresholding [23, 24], region growing [23,24,25], edge detection [23, 24] and convolutional networks [26, 27]), segmentation remains by and large a manual procedure in which the experience and sensitivity of the physician play a major role. Besides, the decision whether to include or exclude dubious areas such as necrosis, atelectasis, inflammation and/or oedema is essentially the clinician’s responsibility and, as such, hard to automate.
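
Region growing, one of the semi-automatic methods cited above, can be sketched as a breadth-first flood fill from a seed voxel placed by the operator. The homogeneity criterion used here (a fixed tolerance around the seed intensity, with 6-connectivity) is one simple choice among many and an assumption of this sketch; `region_grow` is an illustrative name.

```python
from collections import deque

import numpy as np


def region_grow(volume, seed, tolerance):
    """Grow a 3D region from `seed` over 6-connected voxels whose intensity
    differs from the seed intensity by at most `tolerance`."""
    volume = np.asarray(volume, dtype=float)
    seed = tuple(seed)
    mask = np.zeros(volume.shape, dtype=bool)
    ref = volume[seed]
    mask[seed] = True
    queue = deque([seed])
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in steps:
            n = (z + dz, y + dy, x + dx)
            # Visit each in-bounds neighbour once and test the intensity criterion
            if all(0 <= c < s for c, s in zip(n, volume.shape)) and not mask[n]:
                if abs(volume[n] - ref) <= tolerance:
                    mask[n] = True
                    queue.append(n)
    return mask
```

The result depends on both the seed position and the tolerance, which is one reason why such methods still require expert supervision.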

2.4 Feature Extraction

Feature extraction can be considered the ‘core’ of the whole procedure and consists of computing meaningful parameters from the regions of interest. There are two main strategies for feature extraction: the ‘hand-designed’ or ‘hand-crafted’ paradigm on the one hand, and Deep Learning on the other.

In the hand-designed approach the functions for feature extraction (also referred to as image descriptors) are designed by hand, based on prior knowledge about filtering, perceptual models and/or relatively intuitive visual properties (e.g. coarseness, busyness, contrast, etc.). This model-driven, ‘a priori’ paradigm is independent of the data being analysed. By contrast, Deep Learning is a data-driven, ‘a posteriori’ strategy in which the descriptors are essentially shaped by the data. The feature extractors, in this case, are built from combinable blocks (layers) of which only the overall skeleton is defined a priori; their behaviour depends on a large number of free parameters whose values need to be determined by training over huge sets of data. In this paper we are mostly concerned with the hand-designed paradigm; for an overview of Deep Learning and its potential applications in the field we refer the reader to Refs. [28,29,30].

Regardless of the method used, there are some desirable properties that one would always expect from features. First, they should be discriminative, i.e. they should enable good separation among the classes involved in the problem investigated (e.g. benign vs. malignant). Second, they should be interpretable on the basis of some physical characteristics (e.g. round/elongated, coarse/fine, etc.). Third, they should be few: this, again, facilitates interpretation, limits the computational overhead and reduces the chances of overfitting. Below we briefly review some of the most common shape and texture features used in radiomics.

Shape Features. Shape features have been investigated as potential biomarkers for a range of diseases. In oncologic disorders, for instance, lesions presenting ill-defined (‘spiculated’) borders are considered suggestive of malignancy, aggressiveness and generally worse prognosis, whereas those with regular, well-defined margins are more frequently indicative of benign or less aggressive lesions [31,32,33]. Different parameters have been proposed for the quantitative evaluation of shape, among them compactness, spherical disproportion, sphericity and surface-to-volume ratio (Eqs. 1–4). Denoting by A the surface area of the ROI, by V its volume and by R the radius of a sphere of volume V, we have:
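
In one widely used form (the normalisation of compactness in particular varies across the literature; another common variant is \(V/(\sqrt{\pi}A^{3/2})\)), the four descriptors read:

\[
\mathrm{compactness} = \frac{36\pi V^{2}}{A^{3}}, \qquad
\mathrm{spherical\ disproportion} = \frac{A}{4\pi R^{2}}, \qquad
\mathrm{sphericity} = \frac{\pi^{1/3}(6V)^{2/3}}{A}, \qquad
\mathrm{surface\text{-}to\text{-}volume\ ratio} = \frac{A}{V},
\qquad \text{with } R = \left(\frac{3V}{4\pi}\right)^{1/3}.
\]

With these definitions the first three descriptors are dimensionless and equal 1 for a perfect sphere, while the surface-to-volume ratio has units of inverse length.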

Compactness, spherical disproportion and surface-to-volume ratio from CT, for instance, were found predictive of malignancy in lung lesions [34]; surface-to-volume ratio from MRI showed potential to differentiate between clinically significant and non-significant prostate cancer [31]; and functional sphericity from PET images correlated with clinical outcome in non-small-cell lung cancer [32].
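
A sketch of how such descriptors might be computed from a segmented ROI, assuming a binary voxel mask with known voxel spacing. The compactness formula used (\(36\pi V^{2}/A^{3}\)) is one of several normalisations in use, and the surface area is estimated by counting exposed voxel faces, a deliberately simple choice that overestimates the area of smooth, curved surfaces; mesh-based estimates (e.g. marching cubes) are more accurate. Function names are illustrative.

```python
import numpy as np


def shape_features_from_av(area, volume):
    """Shape descriptors from surface area A and volume V."""
    radius = (3.0 * volume / (4.0 * np.pi)) ** (1.0 / 3.0)  # equivalent-sphere radius R
    return {
        "compactness": 36.0 * np.pi * volume ** 2 / area ** 3,
        "spherical_disproportion": area / (4.0 * np.pi * radius ** 2),
        "sphericity": np.pi ** (1.0 / 3.0) * (6.0 * volume) ** (2.0 / 3.0) / area,
        "surface_to_volume": area / volume,
    }


def area_volume_from_mask(mask, spacing=(1.0, 1.0, 1.0)):
    """Estimate A and V of a binary 3D ROI by counting voxels and exposed faces.

    spacing: voxel size along (z, y, x).
    """
    m = np.pad(np.asarray(mask, dtype=bool), 1)  # pad so border voxels expose faces
    dz, dy, dx = spacing
    volume = m.sum() * dz * dy * dx
    face_area = (dy * dx, dz * dx, dz * dy)  # area of faces normal to z, y, x
    area = 0.0
    for axis in range(3):
        # A face is exposed wherever a voxel and its neighbour along `axis` differ
        exposed = np.count_nonzero(np.diff(m.astype(np.int8), axis=axis))
        area += exposed * face_area[axis]
    return area, volume
```

Typical use is `shape_features_from_av(*area_volume_from_mask(roi_mask, spacing))`, where `roi_mask` and `spacing` stand for the segmentation output and the voxel size.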

Texture Features

Basic Statistics. These are parameters that can be computed directly from the raw data with no further processing; resampling is not required. They include mean, maximum, median, range, standard deviation, skewness and kurtosis (for definitions and formulae see also [35]). All these features are by definition invariant to geometric transformations of the input data such as rotation, mirroring, scaling and/or voxel permutation. Most of them are also rather intuitive and straightforward to implement.
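
The sketch below computes these statistics with NumPy. It uses only the multiset of intensity values inside the ROI, never their positions, which is why the results are invariant to voxel permutation. Skewness and kurtosis are computed from population (biased) central moments, and kurtosis is the plain fourth standardised moment (3 for a Gaussian) rather than excess kurtosis; other conventions exist, and the function name is illustrative.

```python
import numpy as np


def basic_statistics(values):
    """First-order statistics of the intensities inside the ROI."""
    x = np.asarray(values, dtype=float).ravel()
    mean = x.mean()
    centred = x - mean
    m2 = np.mean(centred ** 2)  # population variance
    std = np.sqrt(m2)
    return {
        "mean": mean,
        "maximum": x.max(),
        "median": np.median(x),
        "range": x.max() - x.min(),
        "std": std,
        # Standardised third and fourth central moments (0.0 for constant input)
        "skewness": np.mean(centred ** 3) / std ** 3 if std > 0 else 0.0,
        "kurtosis": np.mean(centred ** 4) / m2 ** 2 if m2 > 0 else 0.0,
    }
```

For the values 1, 2, 3, 4, 5 this gives mean 3, range 4, standard deviation \(\sqrt{2}\), skewness 0 and kurtosis 1.7.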

Histogram-Based Features. These features are derived from the probability distribution (histogram) of the intensity levels within the ROI. Features like energy (Eq. 5, sometimes also referred to as uniformity) and entropy (Eq. 6) are routinely used for assessing the ‘heterogeneity’ of tumour lesions. There is indeed evidence that higher heterogeneity may correlate with worse overall prognosis and response to treatment [36,37,38,39]. Histogram-based statistics are invariant to geometric transformations of the input data, just as basic statistics are, but they depend heavily on the resampling scheme used. Given N the number of quantisation levels and \(p_i\) the probability of occurrence of the i-th intensity level, we have:
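
In their standard form (with the convention \(0 \log_2 0 = 0\)):

\[
\mathrm{energy}_{H} = \sum_{i=1}^{N} p_i^{2}, \qquad
\mathrm{entropy}_{H} = -\sum_{i=1}^{N} p_i \log_2 p_i,
\]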

where entropy is expressed in bits. Subscript ‘H’ indicates that the features are computed from histograms and differentiates them from those computed from co-occurrence matrices (see below).
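
Histogram energy and entropy can be computed as sketched below, assuming the ROI intensities have already been requantised to integer levels 0, ..., N-1 (the function name is illustrative). The dependence on the resampling scheme is visible here: the same ROI quantised to a different N yields a different histogram and hence different values.

```python
import numpy as np


def histogram_features(levels, n_levels):
    """Energy (uniformity) and entropy, in bits, of the grey-level histogram.

    levels: integer grey levels (0 .. n_levels-1) of the voxels inside the ROI.
    """
    counts = np.bincount(np.asarray(levels).ravel(), minlength=n_levels)
    p = counts / counts.sum()
    nz = p[p > 0]  # convention: 0 * log2(0) = 0
    return {
        "energy_H": float(np.sum(p ** 2)),
        "entropy_H": float(-np.sum(nz * np.log2(nz))),
    }
```

The two features sit at opposite extremes: a single-level ROI gives energy 1 and entropy 0, whereas a uniform histogram over N levels gives energy 1/N and entropy \(\log_2 N\) bits.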

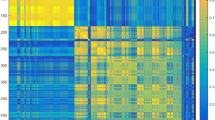

Grey-Level Co-occurrence Matrices. Co-occurrence matrices (GLCM) represent the two-dimensional joint distribution of the intensity levels of pairs of voxels separated by a given displacement vector. By changing the orientation and the length of the vector, GLCM can probe the local signal variation at different scales and orientations. Co-occurrence matrices, a classic tool in texture analysis, were originally designed for planar images [40], but their extension to three-dimensional data is straightforward [41]. In this case there are 26 possible orientations for a given scale and as many GLCM, of which, however, only 13 are non-redundant, since opposite displacement vectors yield transposed matrices. A GLCM with values mainly clustered around the main diagonal indicates a texture with low variability; a highly dispersed matrix is characteristic of a variable texture. To capture this behaviour one usually extracts global parameters from the GLCM, such as contrast, energy, entropy and homogeneity (Eqs. 7–10). Again, these have shown potential as clinical biomarkers in a number of studies [5, 6, 22, 42]. Denoting by i and j the intensity levels of two voxels separated by a given displacement vector and by \(p(i, j)\) the corresponding normalised GLCM entry, we have:
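
In the usual notation, with N the number of quantisation levels (homogeneity is shown here in the \(1/(1+|i-j|)\) form; some authors use \((i-j)^{2}\) in the denominator instead):

\[
\mathrm{contrast} = \sum_{i=1}^{N}\sum_{j=1}^{N}(i-j)^{2}\,p(i,j), \qquad
\mathrm{energy}_{\mathrm{GLCM}} = \sum_{i=1}^{N}\sum_{j=1}^{N}p(i,j)^{2},
\]

\[
\mathrm{entropy}_{\mathrm{GLCM}} = -\sum_{i=1}^{N}\sum_{j=1}^{N}p(i,j)\log_{2}p(i,j), \qquad
\mathrm{homogeneity} = \sum_{i=1}^{N}\sum_{j=1}^{N}\frac{p(i,j)}{1+|i-j|}.
\]

A minimal NumPy sketch of the whole procedure follows, assuming the ROI has already been requantised to integer levels 0, ..., N-1. Levels are 0-based in the code, which does not affect these four features, since they depend only on \(p(i,j)\) and on the difference i - j. The helper names are illustrative, and the matrices are made symmetric by counting each pair in both directions, a common but not universal choice.

```python
import numpy as np


def unique_offsets(distance=1):
    """The 13 non-redundant 3D displacement vectors at a given Chebyshev distance.

    Of each pair (v, -v) only the lexicographically positive vector is kept,
    since the GLCM for -v is the transpose of the GLCM for v.
    """
    offsets = [(dz, dy, dx) for dz in (-1, 0, 1) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    return [tuple(distance * c for c in o) for o in offsets if o > (0, 0, 0)]


def glcm_3d(levels, offset, n_levels, mask=None, symmetric=True):
    """Normalised GLCM of an integer-valued 3D array for one displacement vector."""
    levels = np.asarray(levels)
    src, dst = [], []
    for d, size in zip(offset, levels.shape):
        # Reference voxels at k and neighbours at k + d, both inside the array
        n = max(0, size - abs(d))
        start = max(0, -d)
        src.append(slice(start, start + n))
        dst.append(slice(start + d, start + d + n))
    a = levels[tuple(src)].ravel()
    b = levels[tuple(dst)].ravel()
    if mask is not None:
        mask = np.asarray(mask, dtype=bool)
        keep = (mask[tuple(src)] & mask[tuple(dst)]).ravel()  # both voxels in the ROI
        a, b = a[keep], b[keep]
    m = np.zeros((n_levels, n_levels))
    np.add.at(m, (a, b), 1)
    if symmetric:
        m = m + m.T  # count each pair in both directions
    total = m.sum()
    return m / total if total > 0 else m


def glcm_features(p):
    """Contrast, energy, entropy (bits) and homogeneity of a normalised GLCM."""
    i, j = np.indices(p.shape)
    nz = p > 0
    return {
        "contrast": float(np.sum((i - j) ** 2 * p)),
        "energy": float(np.sum(p ** 2)),
        "entropy": float(-np.sum(p[nz] * np.log2(p[nz]))),
        "homogeneity": float(np.sum(p / (1.0 + np.abs(i - j)))),
    }
```

In practice the features are often computed for each of the 13 offsets returned by `unique_offsets()` and then averaged, which reduces their dependence on orientation.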

Other Texture Features. Texture analysis has been an area of intense research for more than forty years and, as a result, the number of available methods is huge. Among those that have received attention in the field of radiomics are neighbourhood grey-tone difference matrices (NGTDM [6, 21, 22, 43]), grey-level run-length matrices (GLRLM [21, 22, 44]), Local Binary Patterns (LBP [17, 45]), Laws’ masks [46, 47] and wavelets [48, 49]. For definitions and further details we refer the reader to the references given.
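
As an example of these other methods, Galloway's run-length matrix [44] can be sketched as below. The sketch counts runs along a single axis of a requantised array and derives one feature from it, short run emphasis (SRE); a full implementation would also restrict runs to the ROI and repeat the count over the 13 directions. Function names are illustrative.

```python
import numpy as np


def glrlm_along_axis(levels, n_levels, axis=-1):
    """Grey-level run-length matrix for runs along one axis of an integer array.

    Entry [g, r - 1] counts the runs of r consecutive voxels with grey level g.
    """
    lines = np.moveaxis(np.asarray(levels), axis, -1)
    lines = lines.reshape(-1, lines.shape[-1])
    rlm = np.zeros((n_levels, lines.shape[1]), dtype=int)
    for line in lines:
        start = 0
        for k in range(1, len(line) + 1):
            # A run ends at the end of the line or where the grey level changes
            if k == len(line) or line[k] != line[start]:
                rlm[line[start], k - start - 1] += 1
                start = k
    return rlm


def short_run_emphasis(rlm):
    """SRE: run counts weighted by 1/r**2, normalised by the total number of runs."""
    r = np.arange(1, rlm.shape[1] + 1)
    return float((rlm / r ** 2).sum() / rlm.sum())
```

SRE is close to 1 for fine textures dominated by short runs and decreases as runs become longer, i.e. as the texture becomes coarser.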

2.5 Post-processing

The features returned by the extraction phase can undergo further processing to (a) reduce their number and (b) increase their discrimination capability. The main strategies to achieve this goal are feature selection and feature generation [50]. The first aims at identifying the most discriminative features so as to reduce their overall number while retaining as much information as possible. This is particularly important in radiomics, where some shape and texture features tend to be highly correlated with each other, as recently shown in [51]. The second consists of generating new features from the original ones via a suitable transformation, for instance Principal Component Analysis (PCA) or Independent Component Analysis (ICA) [50].
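
Both strategies can be sketched with NumPy. The correlation filter below is one simple selection scheme (the 0.9 threshold is an arbitrary illustrative value), and PCA is computed through the singular value decomposition of the standardised feature matrix. Function names are illustrative, and the sketch assumes no constant feature columns.

```python
import numpy as np


def drop_correlated(features, threshold=0.9):
    """Greedy correlation filter: keep a column only if its absolute Pearson
    correlation with every previously kept column is below `threshold`.

    features: (n_samples, n_features) array. Returns the indices of kept columns.
    """
    corr = np.abs(np.corrcoef(features, rowvar=False))
    kept = []
    for j in range(corr.shape[0]):
        if all(corr[j, k] < threshold for k in kept):
            kept.append(j)
    return kept


def pca(features, n_components):
    """Project standardised features onto their first principal components.

    Returns the component scores and the fraction of variance each explains.
    """
    x = np.asarray(features, dtype=float)
    x = (x - x.mean(axis=0)) / x.std(axis=0)  # assumes no constant columns
    _, s, vt = np.linalg.svd(x, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return x @ vt[:n_components].T, explained[:n_components]
```

The filter keeps the original, interpretable features, whereas the PCA scores are uncorrelated by construction but are mixtures of all inputs and therefore harder to interpret.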

2.6 Data Analysis/Classification

The last step consists of feeding the features to a classifier to make predictions about the disease type (computer-assisted diagnosis) and/or the clinical outlook (prognostication). To this end, suitable machine learning models and large enough sets of labelled data (training set) are required. As for the model, one can choose among a number of different solutions (e.g. linear classifiers, Support Vector Machines, Classification Trees, neural networks and/or combinations of them [50, 52]); the main problem is selecting the right model for the specific task. Getting the right data for training, however, can be rather hard, since it requires finding large enough sets of manually classified/annotated clinical records. For prognostication the data also need to be longitudinal, which implies following up a cohort of patients for a long period of time.
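
Whatever the model, its performance should be estimated on cases not used for training. The minimal k-fold cross-validation loop below illustrates this; a nearest-centroid classifier is used only because it fits in a few lines and stands in for the models listed above, and the function name, fold count and seed are illustrative assumptions.

```python
import numpy as np


def nearest_centroid_cv(features, labels, n_folds=5, seed=0):
    """k-fold cross-validated accuracy of a nearest-centroid classifier.

    Features are standardised with statistics from the training fold only,
    so that no information leaks from the test fold.
    """
    x = np.asarray(features, dtype=float)
    y = np.asarray(labels)
    idx = np.random.default_rng(seed).permutation(len(y))
    correct = 0
    for test in np.array_split(idx, n_folds):
        train = np.setdiff1d(idx, test)
        mu, sd = x[train].mean(axis=0), x[train].std(axis=0) + 1e-12
        xtr, xte = (x[train] - mu) / sd, (x[test] - mu) / sd
        classes = np.unique(y[train])
        centroids = np.array([xtr[y[train] == c].mean(axis=0) for c in classes])
        # Assign each test case to the class with the closest centroid
        dist = np.linalg.norm(xte[:, None, :] - centroids[None, :, :], axis=2)
        correct += np.sum(classes[dist.argmin(axis=1)] == y[test])
    return correct / len(y)
```

With the small cohorts typical of radiomics, the spread of accuracy across folds is as informative as its mean.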

3 Discussion

A number of recent studies have advocated the use of shape and texture descriptors as potential image biomarkers in the diagnosis and treatment of a range of disorders. Still, clear advantages for patient management above and beyond traditional imaging techniques and basic biomarkers are yet to be demonstrated [37]. From a technical standpoint there are at least three major hurdles that still limit the applicability of radiomics on a large scale. The first is the lack of standardisation in all the steps detailed in Sect. 2. Differences in image acquisition parameters, feature definitions and naming conventions, and pre- and post-processing procedures are all sources of variation that reduce reproducibility, introduce artefacts and eventually lead to biased results [36]. To overcome this problem, an important standardisation initiative is in progress [12]. The second is the lack of imaging datasets large enough to train the classifiers. To fill this gap, international, open-access repositories are being developed [53]. The third is that some steps of the pipeline (e.g. lesion segmentation) still rely too heavily on human intervention, which may lead to biased results and low reproducibility [54].

4 Conclusions

Shape and texture features from three-dimensional biomedical data have been attracting much research interest in recent years. It is believed that such quantitative imaging features may help uncover patterns that would otherwise go unnoticed by the human eye. In this paper we have provided an overview of the methods, issues and perspectives. On the whole, radiomics clearly has the potential to improve patient management in a number of diseases, but there are still significant obstacles along the way, chief among them the absence of standardisation and the lack of large clinical datasets.

References

Chalkidou, A., O’Doherty, M., Marsden, P.: False discovery rates in PET and CT studies with texture features: a systematic review. PLoS ONE 10(5), article no. e0124165 (2015)

Gillies, R., Kinahan, P., Hricak, H.: Radiomics: images are more than pictures, they are data. Radiology 278(2), 563–577 (2016)

Keek, S., Leijenaar, R., Jochems, A., Woodruff, H.: A review on radiomics and the future of theranostics for patient selection in precision medicine. Br. J. Radiol. 91(1091), article no. 20170926 (2018)

Wang, X., Sun, W., Liang, H., Mao, X., Lu, Z.: Radiomics signatures of computed tomography imaging for predicting risk categorization and clinical stage of thymomas. BioMed Res. Int. 2019, 10 (2019). article ID 3616852

Song, J., Liu, Z., Zhong, W., Huang, Y., Ma, Z., Dong, D., Liang, C., Tian, J.: Non-small cell lung cancer: quantitative phenotypic analysis of CT images as a potential marker of prognosis. Sci. Rep. 6, article no. 38282 (2016)

Pyka, T., Bundschuh, R., Andratschke, N., Mayer, B., Specht, H., Papp, L., Zsótér, N., Essler, M.: Textural features in pre-treatment [F18]-FDG-PET/CT are correlated with risk of local recurrence and disease-specific survival in early stage NSCLC patients receiving primary stereotactic radiation therapy. Radiat. Oncol. 10(1), article no. 100 (2015)

Ravanelli, M., Farina, D., Morassi, M., Roca, E., Cavalleri, G., Tassi, G., Maroldi, R.: Texture analysis of advanced non-small cell lung cancer (NSCLC) on contrast-enhanced computed tomography: prediction of the response to the first-line chemotherapy. Eur. Radiol. 23(12), 3450–3455 (2013)

Andersen, M., Harders, S., Ganeshan, B., Thygesen, J., Madsen, H., Rasmussen, F.: CT texture analysis can help differentiate between malignant and benign lymph nodes in the mediastinum in patients suspected for lung cancer. Acta Radiol. 57(6), 669–676 (2016)

Dennie, C., Thornhill, R., Sethi-Virmani, V., Souza, C., Bayanati, H., Gupta, A., Maziak, D.: Role of quantitative computed tomography texture analysis in the differentiation of primary lung cancer and granulomatous nodules. Quant. Imaging Med. Surg. 6(1), 6–15 (2016)

Kirienko, M., Cozzi, L., Rossi, A., Voulaz, E., Antunovic, L., Fogliata, A., Chiti, A., Sollini, M.: Ability of FDG PET and CT radiomics features to differentiate between primary and metastatic lung lesions. Eur. J. Nucl. Med. Mol. Imaging 45(10), 1649–1660 (2018)

Zhu, X., Dong, D., Chen, Z., Fang, M., Zhang, L., Song, J., Yu, D., Zang, Y., Liu, Z., Shi, J., Tian, J.: Radiomic signature as a diagnostic factor for histologic subtype classification of non-small cell lung cancer. Eur. Radiol. 28(7), 2772–2778 (2018)

Hatt, M., Vallieres, M., Visvikis, D., Zwanenburg, A.: IBSI: an international community radiomics standardization initiative. J. Nucl. Med. 59(1 supp.), 287 (2018)

Barrie Smith, N., Webb, A.: Introduction to Medical Imaging: Physics, Engineering and Clinical Applications. Cambridge University Press, Cambridge (2011)

Goldman, L.: Principles of CT and CT technology. J. Nucl. Med. Technol. 35(3), 115–128 (2007)

Basu, S., Kwee, T., Surti, S., Akin, E., Yoo, D., Alavi, A.: Fundamentals of PET and PET/CT imaging. Ann. N. Y. Acad. Sci. 1228(1), 1–18 (2011)

Grover, V., Tognarelli, J., Crossey, M., Cox, I., Taylor-Robinson, S., McPhail, M.: Magnetic resonance imaging: principles and techniques: lessons for clinicians. J. Clin. Exp. Hepatol. 5(3), 246–255 (2015)

Bagher-Ebadian, H., Siddiqui, F., Liu, C., Movsas, B., Chetty, I.: On the impact of smoothing and noise on robustness of CT and CBCT radiomics features for patients with head and neck cancers. Med. Phys. 44(5), 1755–1770 (2017)

Fave, X., Zhang, L., Yang, J., Mackin, D., Balter, P., Gomez, D., Followill, D., Jones, A., Stingo, F., Court, L.: Impact of image preprocessing on the volume dependence and prognostic potential of radiomics features in non-small cell lung cancer. Transl. Cancer Res. 5(4), 349–363 (2016)

Brunese, L., Greco, B., Setola, F., Lassandro, F., Guarracino, M., De Rimini, M., Piccolo, S., De Rosa, N., Muto, R., Bianco, A., Muto, P., Grassi, R., Rotondo, A.: Non-small cell lung cancer evaluated with quantitative contrast-enhanced CT and PET-CT: net enhancement and standardized uptake values are related to tumour size and histology. Med. Sci. Monit. 19(1), 95–101 (2013)

Ganeshan, B., Goh, V., Mandeville, H., Ng, Q., Hoskin, P., Miles, K.: Non-small cell lung cancer: histopathologic correlates for texture parameters at CT. Radiology 266(1), 326–336 (2013)

Bianconi, F., Fravolini, M., Bello-Cerezo, R., Minestrini, M., Scialpi, M., Palumbo, B.: Evaluation of shape and textural features from CT as prognostic biomarkers in non-small cell lung cancer. Anticancer Res. 38(4), 2155–2160 (2018)

Yip, S., McCall, K., Aristophanous, M., Chen, A., Aerts, H., Berbeco, R.: Comparison of texture features derived from static and respiratory-gated PET images in non-small cell lung cancer. PLoS ONE 9(12), article no. e115510 (2014)

Zheng, L., Lei, Y.: A review of image segmentation methods for lung nodule detection based on computed tomography images. In: Proceedings of the 2nd International Conference on Electronic Information Technology and Computer Engineering. MATEC Web of Conferences, Shanghai, China, vol. 232, October 2018. art. no. 02001

Jayanthi, M.: Comparative study of different techniques used for medical image segmentation of liver from abdominal CT scan. In: Proceedings of the 2016 IEEE International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), Chennai, India, pp. 1462–1465, March 2016. art. no. 7566379

Sandor, T., Metcalf, D., Kim, Y.J.: Segmentation of brain CT images using the concept of region growing. Int. J. Biomed. Comput. 29(2), 133–147 (1991)

Chen, L., Bentley, P., Mori, K., Misawa, K., Fujiwara, M., Rueckert, D.: DRINet for medical image segmentation. IEEE Trans. Med. Imaging 37(11), 2453–2462 (2018). art. no. 8357580

Chlebus, G., Schenk, A., Moltz, J., van Ginneken, B., Hahn, H., Meine, H.: Automatic liver tumor segmentation in CT with fully convolutional neural networks and object-based postprocessing. Sci. Rep. 8(1), article no. 15497 (2018)

Vial, A., Stirling, D., Field, M., Ros, M., Ritz, C., Carolan, M., Holloway, L., Miller, A.: The role of deep learning and radiomic feature extraction in cancer-specific predictive modelling: a review. Transl. Cancer Res. 7(3), 803–816 (2018)

Lecun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521(7553), 436–444 (2015)

Xie, D., Zhang, L., Bai, L.: Deep learning in visual computing and signal processing. Appl. Comput. Intell. Soft Comput., article no. 1320780, February 2017

Cuocolo, R., Stanzione, A., Ponsiglione, A., Romeo, V., Verde, F., Creta, M., La Rocca, R., Longo, N., Pace, L., Imbriaco, M.: Clinically significant prostate cancer detection on MRI: a radiomic shape features study. Eur. J. Radiol. 116, 144–149 (2019)

Hatt, M., Laurent, B., Fayad, H., Jaouen, V., Visvikis, D., Le Rest, C.: Tumour functional sphericity from PET images: prognostic value in NSCLC and impact of delineation method. Eur. J. Nucl. Med. Mol. Imaging 45(4), 630–641 (2018)

Limkin, E., Reuzé, S., Carré, A., Sun, R., Schernberg, A., Alexis, A., Deutsch, E., Ferté, C., Robert, C.: The complexity of tumor shape, spiculatedness, correlates with tumor radiomic shape features. Sci. Rep. 9(1), article no. 4329, (2019)

Wang, J., Liu, X., Dong, D., Song, J., Xu, M., Zang, Y., Tian, J.: Prediction of malignant and benign of lung tumor using a quantitative radiomic method. In: Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBS), Orlando, United States, pp. 1272–1275, October 2016. art. no. 7590938

Newbold, P., Carlson, W., Thorne, B.: Statistics for Business and Economics, 6th edn. Pearson Education International (2007)

Bashir, U., Siddique, M., McLean, E., Goh, V., Cook, G.: Imaging heterogeneity in lung cancer: techniques, applications, and challenges. Am. J. Roentgenol. 207(3), 534–543 (2016)

O’Connor, J., Rose, C., Waterton, J., Carano, R., Parker, G., Jackson, A.: Imaging intratumor heterogeneity: role in therapy response, resistance, and clinical outcome. Clin. Cancer Res. 21(2), 249–257 (2015)

Win, T., Miles, K., Janes, S., Ganeshan, B., Shastry, M., Endozo, R., Meagher, M., Shortman, R., Wan, S., Kayani, I., Ell, P., Groves, A.: Tumor heterogeneity and permeability as measured on the CT component of PET/CT predict survival in patients with non-small cell lung cancer. Clin. Cancer Res. 19(13), 3591–3599 (2013)

Ganeshan, B., Panayiotou, E., Burnand, K., Dizdarevic, S., Miles, K.: Tumour heterogeneity in non-small cell lung carcinoma assessed by CT texture analysis: a potential marker of survival. Eur. Radiol. 22(4), 796–802 (2012)

Haralick, R.M., Shanmugam, K., Dinstein, I.: Textural features for image classification. IEEE Trans. Syst. Man Cybern. 3(6), 610–621 (1973)

Ben Othmen, E., Sayadi, M., Fnaiech, F.: 3D gray level co-occurrence matrices for volumetric texture classification. In: Proceedings of the 3rd International Conference on Systems and Control (ICSC), Algiers, Algeria, pp. 833–837, October 2013

Aerts, H., Velazquez, E., Leijenaar, R., Parmar, C., Grossmann, P., Carvalho, S., Bussink, J., Monshouwer, R., Haibe-Kains, B., Rietveld, D., Hoebers, F., Rietbergen, M., Leemans, C., Dekker, A., Quackenbush, J., Gillies, R., Lambin, P.: Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat. Commun. 5, article no. 4006 (2014)

Amadasun, M., King, R.: Textural features corresponding to textural properties. IEEE Trans. Syst. Man Cybern. 19(5), 1264–1274 (1989)

Galloway, M.: Texture analysis using gray level run lengths. Comput. Graph. Image Process. 4(2), 172–179 (1975)

Ojala, T., Pietikäinen, M., Mäenpää, T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 24(7), 971–987 (2002)

Hawkins, S., Korecki, J., Balagurunathan, Y., Gu, Y., Kumar, V., Basu, S., Hall, L., Goldgof, D., Gatenby, R., Gillies, R.: Predicting outcomes of nonsmall cell lung cancer using CT image features. IEEE Access 2, 1418–1426 (2014). art. no. 6966732

Laws, K.: Rapid texture identification. In: Wiener, T. (ed.) Image Processing for Missile Guidance. SPIE Proceedings, vol. 0238 (1980)

Balagurunathan, Y., Gu, Y., Wang, H., Kumar, V., Grove, O., Hawkins, S., Kim, J., Goldgof, D., Hall, L., Gatenby, R., Gillies, R.: Reproducibility and prognosis of quantitative features extracted from CT images. Transl. Oncol. 7(1), 72–87 (2014)

Daubechies, I.: CBMS-NSF Regional Conference Series in Applied Mathematics, Ten Lectures on Wavelets, vol. 61. Society for Industrial and Applied Mathematics (1992)

Theodoridis, S., Koutroumbas, K.: Pattern Recognition, 3rd edn. Academic Press, Burlington (2006)

Bianconi, F., Palumbo, I., Fravolini, M., Chiari, R., Minestrini, M., Brunese, L., Palumbo, B.: Texture analysis on [18F]FDG PET/CT in non-small-cell lung cancer: correlations between PET features, CT features, and histological types. Molecular Imaging and Biology (2019, in press)

Duda, R., Hart, P., Stork, D.: Pattern Classification, 2nd edn. Wiley, New York (2001)

The Cancer Imaging Archive (TCIA). http://www.cancerimagingarchive.net/. Accessed 04 June 2019

Rizzo, S., Botta, F., Raimondi, S., Origgi, D., Fanciullo, C., Morganti, A., Bellomi, M.: Radiomics: the facts and the challenges of image analysis. Eur. Radiol. Exp. 2(1), 36 (2018)

Feedback Medical Ltd: TexRAD (Texture + Radiology). https://fbkmed.com/texrad-landing-2/. Accessed 20 July 2019

Nioche, C., Orlhac, F., Boughdad, S., Reuze, S., Goya-Outi, J., Robert, C., Pellot-Barakat, C., Soussan, M., Frouin, F., Buvat, I.: LIFEx: a freeware for radiomic feature calculation in multimodality imaging to accelerate advances in the characterization of tumor heterogeneity. Cancer Res. 78(16), 4786–4789 (2018)

van Griethuysen, J., Fedorov, A., Parmar, C., Hosny, A., Narayan, V., Beets-Tan, R., Fillion-Robin, J.C., Pieper, S., Aerts, H.: Computational radiomics system to decode the radiographic phenotype. Cancer Res. 77(21), e104–7 (2017)

Acknowledgements

This work was partially supported by the Department of Engineering at the University of Perugia, Italy, under the Fundamental Research programme 2017.

Appendix: Tools and Packages for Radiomics

A number of applications are already available for managing radiomics data. Among them, TexRAD (Feedback Medical Ltd, Cambridge, UK) is a commercial tool enabling lesion segmentation, feature extraction and statistical interpretation of radiomic data [55]. LIFEx (IMIV/CEA, Orsay, France) is a freeware package allowing textural analysis and radiomic feature measurements from PET, CT, ultrasound and MR images [56]. Finally, Pyradiomics (Computational Imaging & Bioinformatics Lab, Harvard Medical School, Cambridge, USA) is an open-source set of Python libraries for the extraction of radiomics data from medical images, as well as image loading and pre-processing [57].

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Bianconi, F., Fravolini, M.L., Palumbo, I., Palumbo, B. (2020). Shape and Texture Analysis of Radiomic Data for Computer-Assisted Diagnosis and Prognostication: An Overview. In: Rizzi, C., Andrisano, A.O., Leali, F., Gherardini, F., Pini, F., Vergnano, A. (eds) Design Tools and Methods in Industrial Engineering. ADM 2019. Lecture Notes in Mechanical Engineering. Springer, Cham. https://doi.org/10.1007/978-3-030-31154-4_1

DOI: https://doi.org/10.1007/978-3-030-31154-4_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-31153-7

Online ISBN: 978-3-030-31154-4

eBook Packages: Engineering, Engineering (R0)