Abstract

Hidden graphs are flexible abstractions composed of a set of known vertices (nodes), whereas the set of edges is not known in advance. To uncover the edges, multiple edge probing queries must be executed by evaluating a function f(u, v) that returns true if nodes u and v are connected and false otherwise. Evidently, the graph can be revealed completely if all possible \(n(n-1)/2\) probes are executed for a graph containing n nodes. However, the function f() is usually computationally intensive, and therefore executing all possible probing queries results in high execution costs. The target is to provide answers to useful queries by executing as few probing queries as possible. In this work, we study the problem of discovering the top-k nodes of a hidden bipartite graph with the highest degrees, by using distributed algorithms. In particular, we use Apache Spark and provide experimental results showing that significant performance improvements are achieved in comparison to existing centralized approaches.

1 Introduction

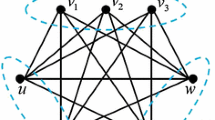

Nowadays, graphs are used to model real-life problems in many diverse application domains, such as social network analysis, searching and mining the Web, and pattern mining in bioinformatics and neuroscience. Graph mining is an active and growing research area, aiming at knowledge discovery from graph data [1]. In this work, we focus on simple bipartite graphs, where the set of nodes is composed of two subsets B (the black nodes) and W (the white nodes), such that \(V = B \cup W\) and \(B \cap W = \emptyset \).

Bipartite graphs have many interesting applications in diverse fields. For example, a bipartite graph may be used to represent product purchases by customers. In this case, an edge exists between a product p and a customer c when p was purchased by c. As another example, in an Information Retrieval or Text Mining application a bipartite graph may be used to associate different types of tokens that exist in a document. Thus, an edge between a document d and a token t represents the fact that the token t appears in document d.

In conventional bipartite graphs, the sets of vertices B and W as well as the set of edges E are known in advance. Nodes and edges are organized in a way that enables efficient execution of fundamental graph-oriented computational tasks, such as finding high-degree nodes, computing shortest paths, and detecting communities. Usually, the adjacency list representation is used, which is a good compromise between space requirements and computational efficiency. However, a concept that has recently started to gain significant interest is that of hidden graphs. In contrast to a conventional graph, a hidden bipartite graph is defined as G(B, W, f()), where B and W are the subsets of nodes and f() is a function \(B \times W \rightarrow \{0,1\}\) which takes as input two vertex identifiers and returns true if the corresponding edge exists and false otherwise.

Formally, for n nodes, there is an exponential number of different graphs that may be generated (the exact number is \(2^{\binom{n}{2}}\)). Materializing all these graphs demands significant space. Moreover, it is unlikely that all of them will eventually be used. In contrast, a hidden graph enables the representation of any possible graph by specifying the appropriate function f(). Note that f() may require the execution of a complex algorithm in order to decide whether two nodes are connected by an edge. Therefore, interesting what-if scenarios may be tested with respect to the satisfaction of meaningful properties. Evidently, the complete graph structure may be revealed if all possible \(n_b \times n_w\) edge probing queries are executed, where \(n_b = |B|\) and \(n_w = |W|\). However, this solution involves a quadratic number of probes, which is expected to be highly inefficient, given that f() is computationally intensive and that real-world graphs are usually very large. Therefore, the target is to solve a graph-oriented problem by using as few edge probing queries as possible.

The problem we are targeting is the discovery of the k nodes with the highest degrees, where the degree of a node v is defined as the number of neighbors of v. This problem has been addressed previously in [6] and [8]. In this work, we are interested in solving the problem by using distributed algorithms over Big Data architectures. This enables the analysis of massive hidden graphs and provides a baseline for executing more complex graph mining tasks, overcoming the limitations imposed by centralized approaches. In particular, we study distributed algorithms for the discovery of the top-k degree nodes in Apache Spark [9] over YARN and HDFS [7], and we offer experimental results based on a cluster of 32 physical machines. Experiments demonstrate that the proposed techniques are scalable and can be used for the analysis of hidden networks.
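As a rough illustration of the scale involved, the exhaustive probe count can be worked out for the DBLP sizes reported in Section 4 (\(n_b = 4,000,150\), \(n_w = 1,425,813\)):

\[ n_b \times n_w = 4{,}000{,}150 \times 1{,}425{,}813 = 5{,}703{,}465{,}871{,}950 \approx 5.7 \times 10^{12}. \]

Similarly, the number of distinct graphs grows very quickly with the number of nodes: already for \(n = 5\) there are \(2^{\binom{5}{2}} = 2^{10} = 1024\) possible graphs.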

2 Related Research

A hidden graph is able to represent arbitrary relationship types among graph nodes. A research direction that is strongly related to hidden graphs is graph property testing [4]. In this case, one is interested in detecting whether a graph satisfies a property or not by using fast approximate algorithms. Evidently, detecting graph properties efficiently is extremely important. However, an even more useful and more challenging task is to detect specific subgraphs or nodes that satisfy specific properties, by using as few edge probing queries as possible.

Another research direction related to hidden graphs focuses on learning a graph or a subgraph by issuing edge probing queries on pairs or sets of nodes (group testing) [2]. A similar topic is the reconstruction of subgraphs that satisfy certain structural properties [3]. An important difference between graph property testing and analyzing hidden graphs is that in the first case the graph is known, whereas in the second case the set of edges is unknown and must be revealed gradually. Moreover, property testing focuses on checking whether a specific property holds or not, whereas in hidden graph analysis we are interested in answering specific queries exactly. The problem we attack is the discovery of the top-k nodes with the highest degrees. This problem was solved in [6] and [8] assuming a centralized environment.

The basic algorithm used in [6] was extended in [5] for unipartite graphs, towards detecting if a hidden graph contains a k-core or not. Again, the algorithm proposed in [5] is centralized and its main objective is to minimize the number of edge probing queries performed.

In this paper, we take one step forward towards the design and performance evaluation of distributed algorithms for detecting the top-k nodes with the highest degrees in hidden bipartite graphs. We note that this is the first work in the literature to attack the specific problem in a distributed setting.

3 Proposed Approach

The detection of the top-k nodes with the highest degrees in a bipartite graph has been studied in [6]. In that paper, the authors present the Switch-On-Empty (SOE) algorithm, which provides an optimal solution with respect to the required number of probing queries. SOE receives as input a hidden bipartite graph with bipartitions B and W. The output is composed of the k vertices from B or W with the highest degrees. Without loss of generality, we focus on the vertices of B. For a vertex \(b_1 \in B\), SOE selects a vertex \(w_1 \in W\) and executes \(f(b_1, w_1)\). If the edge \((b_1,w_1)\) is solid (i.e., it exists), SOE continues to perform probes between \(b_1\) and another vertex \(w_2 \in W\); if it is empty, the algorithm proceeds with \(b_2 \in B\). A round is complete when all vertices of B have been considered. After each round, some vertices can be safely included in the result set R, and they are removed from B. SOE continues until the upper bound of the degrees of the vertices remaining in B is less than the current k-th highest degree determined so far.
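The round structure described above can be sketched in a few lines of Python. This is a simplified, single-machine reading of SOE based only on the description in this section, not the implementation of [6]; the function name `soe_top_k`, the fixed probing order over W and the toy adjacency data are assumptions made for illustration.

```python
def soe_top_k(B, W, f, k):
    """Simplified Switch-On-Empty sketch.

    Returns (top-k list of (vertex, degree), number of probes issued).
    """
    W = list(W)
    next_pos = {b: 0 for b in B}   # index in W of the next vertex to probe
    solid = {b: 0 for b in B}      # solid edges found so far
    active = list(B)               # vertices with probes still pending
    done = {}                      # vertex -> exact degree
    probes = 0

    while active:
        still_active = []
        for b in active:                       # one round over B
            while next_pos[b] < len(W):
                w = W[next_pos[b]]
                next_pos[b] += 1
                probes += 1
                if f(b, w):
                    solid[b] += 1              # solid edge: keep probing b
                else:
                    break                      # empty edge: switch to next b
            if next_pos[b] == len(W):
                done[b] = solid[b]             # all probes done: degree exact
            else:
                still_active.append(b)
        active = still_active

        if len(done) >= k:
            kth = sorted(done.values(), reverse=True)[k - 1]
            # Upper bound on the degree of an unfinished vertex:
            # solid edges so far plus all edges not probed yet.
            upper = max((solid[b] + len(W) - next_pos[b] for b in active),
                        default=-1)
            if upper < kth:
                break

    top = sorted(done.items(), key=lambda kv: -kv[1])[:k]
    return top, probes


# Toy hidden graph (the adjacency is known here only to define f).
adj = {"b1": {"w1", "w2", "w3", "w4"}, "b2": {"w2"},
       "b3": {"w1", "w3", "w5"}, "b4": set()}
B = ["b1", "b2", "b3", "b4"]
W = ["w1", "w2", "w3", "w4", "w5"]
top, probes = soe_top_k(B, W, lambda b, w: w in adj[b], k=2)
```

On this toy input the two highest-degree vertices are found without probing all \(|B| \times |W| = 20\) pairs, because low-degree vertices are abandoned once their upper bound falls below the k-th degree found so far.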

In this work, we proceed one step further in order to detect high-degree nodes in large graphs using multiple resources. Our algorithms are implemented in the Apache Spark engine [9] using the Scala programming language. In the sequel, we focus on the algorithms developed to solve the distributed detection of top-k nodes in a hidden bipartite graph. The first algorithm, Distributed Switch-On-Empty (DSOE), is a distributed version of the SOE algorithm and the second algorithm, DSOE\(^*\), is an improved and more flexible version of DSOE.

3.1 The DSOE Algorithm

The main mechanism of DSOE has the following rationale. For all vertices \(b \in B\) we execute, in parallel, edge-probing queries until we get a certain number of negative results, denoted by f (not to be confused with the probing function f()). The value of f depends on the size of the graph and is reduced exponentially in every iteration: since the degree distribution of most real-life graphs follows a power law, we do not expect to find many vertices with a high degree. The closer we get to exhausting all \(w \in W\), the smaller we set f, because we want to avoid as many unnecessary queries as possible. When a vertex \(b \in B\) completes all possible probes, it is added to the answer set R. If \(|R| \ge k\), then we need one last batch of routines to finalize R. Algorithm 1 contains the pseudocode of DSOE.
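The mechanism can be illustrated with a single-process Python sketch. It is not the Spark implementation or Algorithm 1. The names `run_routine` and `dsoe_top_k`, the initial budget of negative results and the halving schedule are assumptions, since the text only states that the budget is reduced exponentially. The per-vertex routine is written as a pure function so that it could be applied to all vertices in parallel.

```python
def run_routine(state, W, f, budget):
    """One routine for a single vertex: probe until `budget` negative
    answers are seen or W is exhausted. `state` is (vertex, next_pos, solid)."""
    b, pos, solid = state
    negatives = 0
    while pos < len(W) and negatives < budget:
        if f(b, W[pos]):
            solid += 1
        else:
            negatives += 1
        pos += 1
    return (b, pos, solid)


def dsoe_top_k(B, W, f, k, initial_budget=4):
    W = list(W)
    states = [(b, 0, 0) for b in B]
    R = {}                       # answer set: vertex -> exact degree
    budget = initial_budget      # assumed starting value
    last_batch = False
    while states:
        # In a distributed engine this step would be a parallel map over
        # the vertices of B; a list comprehension stands in for it here.
        states = [run_routine(s, W, f, budget) for s in states]
        for b, pos, solid in states:
            if pos == len(W):
                R[b] = solid     # all probes done: degree is exact
        states = [s for s in states if s[1] < len(W)]
        if last_batch:
            break
        if len(R) >= k:
            last_batch = True    # one more batch to finalize R
        budget = max(1, budget // 2)   # assumed schedule: halve, floor at 1
    return sorted(R.items(), key=lambda kv: -kv[1])[:k]


# Toy hidden graph (the adjacency is known here only to define f).
adj = {"b1": {"w1", "w2", "w3", "w4"}, "b2": {"w2"},
       "b3": {"w1", "w3", "w5"}, "b4": set()}
B = ["b1", "b2", "b3", "b4"]
W = ["w1", "w2", "w3", "w4", "w5"]


def probe(b, w):
    return w in adj[b]


result = dsoe_top_k(B, W, probe, k=2)
```

Because every unfinished vertex has accumulated exactly the same number of negative answers after each batch, vertices that finish earlier cannot have a smaller degree than vertices that finish later, which is what makes the early stop safe in this sketch.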

In this part we show that our conditions regarding R are correct. Assume that a vertex \(b_1 \in B\) completes all possible probes in routine \(r_1\). Then \(d(b_1) = |W| - r_1\), or \(d(b_1) = |W|- r_1 - 1\) in case the last probe was negative. Similarly, for a vertex \(b_2 \in B\) that completes its probes in a routine \(r_2\) with \(r_2>r_1\), we have \(d(b_2) = |W| - r_2\) or \(d(b_2) = |W|- r_2 - 1\). We can conclude that \(d(b_1) \ge d(b_2)\), and equality is possible only if \(r_2 = r_1 + 1\). For this reason we run a last routine when \(|R| \ge k\).
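The inequality can be written out in one chain. Let \(b_2\) denote any vertex that completes its probes in a later routine \(r_2 > r_1\), so that \(r_2 \ge r_1 + 1\). Using the degree expressions above:

\[ d(b_2) \;\le\; |W| - r_2 \;\le\; |W| - r_1 - 1 \;\le\; d(b_1). \]

Equality throughout requires \(d(b_2) = |W| - r_2\), \(r_2 = r_1 + 1\) and \(d(b_1) = |W| - r_1 - 1\), so a tie can only occur between vertices that finish in consecutive routines.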

3.2 The DSOE\(^*\) Algorithm

DSOE is the natural extension of SOE. However, we advance one step further in order to improve the runtime as much as possible. A large number of probes is inevitable for large graphs, so executing a small number of additional probes in exchange for a shorter execution time may be beneficial.

DSOE\(^*\) requires an initial prediction of the vertex degrees, which is obtained from a sample of probes. Then we execute all possible edge-probing queries for the vertices that are predicted to have a large degree. This computation provides a threshold for the remaining vertices, so that no time is wasted on low-degree vertices.

More specifically, DSOE\(^*\) initially performs some random edge-probing queries \(\forall b \in B\). The number of these queries is set to \(\log (\log (|W|))\). Then we repeatedly execute a batch of routines \(\forall b \in B\), with the difference that this time a single routine for a vertex \(b \in B\) is complete after N negative edge-probing queries, where N is determined by the prediction process.

When a vertex exhausts all possible queries, it is added to a temporary set M. The contents of M provide a threshold T, which is set to the minimum degree among the vertices in M. Probing queries are executed \(\forall b \in B\) until \(maxPossibleD(b)<T\). The vertices that have completed all possible probes are added to M. Finally, the k best vertices of M with respect to the degree are returned as the final answer. Algorithm 2 contains the pseudocode of DSOE\(^*\).
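Two of the quantities used by DSOE\(^*\) can be illustrated with a small Python sketch: the size of the initial sample and the pruning test against the threshold T. This is not Algorithm 2. The function names, the use of the natural logarithm, the rounding up, and the reading of maxPossibleD(b) as "solid edges found so far plus edges not yet probed" are assumptions of this sketch, since the text does not fix them.

```python
import math


def initial_sample_size(n_w):
    """Number of random initial probes per vertex, log(log(|W|)).
    Natural logarithm and rounding up are assumptions of this sketch."""
    if n_w < 3:
        return 1          # log(log(n_w)) is undefined or negative here
    return max(1, math.ceil(math.log(math.log(n_w))))


def max_possible_degree(solid, probed, n_w):
    """Best case for a vertex: every edge not probed yet turns out solid."""
    return solid + (n_w - probed)


def prune(states, threshold, n_w):
    """Keep only vertices whose degree could still reach the threshold T.
    `states` maps vertex -> (solid edges found, probes executed)."""
    return {b: (s, p) for b, (s, p) in states.items()
            if max_possible_degree(s, p, n_w) >= threshold}


# Toy usage with |W| = 10 and threshold T = 6 (illustrative values).
states = {"b1": (4, 5), "b2": (1, 7), "b3": (0, 3)}
survivors = prune(states, threshold=6, n_w=10)
```

In the toy usage, b2 is dropped because even if all of its three remaining probes were positive its degree would be 4, which is below T.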

4 Performance Evaluation

In this section, we present performance evaluation results depicting the efficiency of the proposed distributed algorithms. All experiments have been conducted in a cluster of 32 physical nodes (machines) running Hadoop 2.7 and Spark 2.1.0. One node is used as the master, whereas the remaining 31 nodes are used as workers. The data resides in HDFS and YARN is used as the resource manager.

All datasets used in the performance evaluation correspond to real-world networks. The networks have different numbers of black and white nodes as well as different numbers of edges. More specifically, we have used networks from three sources: DBLP, YOUTUBE (in two variants, YOUTUBE1 and YOUTUBE2), and WIKIPEDIA. All networks are publicly available at the Koblenz Network Collection (http://konect.uni-koblenz.de/).

DBLP. The DBLP network is the authorship network from the DBLP computer science bibliography. It has \(|B| = 4,000,150\) and \(|W| = 1,425,813\); the highest degree is 114 for set B and 951 for set W, and the average degree is 2.1622 for B and 6.0660 for W.

YOUTUBE1. This is the bipartite network of YouTube users and their group memberships. It has \(|B| = 94,238\) and \(|W| = 30,087\); the highest degree is 1,035 for set B and 7,591 for set W, and the average degree is 3.1130 for B and 9.7504 for W.

YOUTUBE2. This network represents friendship relationships between YouTube users. Nodes are users and an undirected edge between two nodes indicates a friendship. We transform this graph into a bipartite one by duplicating its vertices: the set B is one copy of the nodes, whereas W is the other. A node of B and a node of W are connected if their corresponding nodes are connected in the initial graph. Evidently, for this graph the statistics for the two sets B and W are exactly the same: \(|B| = |W| = 1,134,890\), the maximum degree is 28,754 and the average degree is 5.2650.
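The duplication step can be sketched as follows. This is a minimal illustration on a toy friendship graph, not the preprocessing code used in the experiments; the function name `bipartite_probe_from_undirected` and the toy edge list are assumptions. The construction is the one commonly called the bipartite double cover of the original graph.

```python
def bipartite_probe_from_undirected(edges):
    """Build f(b, w) for a bipartite graph obtained by duplicating the
    nodes of an undirected graph: copy u in B is connected to copy v in W
    iff {u, v} is an edge of the original graph."""
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)

    def f(b, w):
        return w in adjacency.get(b, set())

    return f


# Toy friendship graph with edges 1-2, 1-3, 2-3, 3-4.
edges = [(1, 2), (1, 3), (2, 3), (3, 4)]
nodes = [1, 2, 3, 4]
f = bipartite_probe_from_undirected(edges)
degrees_B = {b: sum(f(b, w) for w in nodes) for b in nodes}
degrees_W = {w: sum(f(b, w) for b in nodes) for w in nodes}
```

Since every original edge {u, v} yields both (u, v) and (v, u) in the bipartite graph, each copy of a node keeps its original degree, which is why the statistics of B and W coincide.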

WIKIPEDIA. This is the bipartite network of English Wikipedia articles and the categories that contain them. For this graph we have \(|B|= 182,947\) and \(|W|= 1,853,493\); the highest degree is 11,593 for B and 54 for W, and the average degree is 20.748 for B and 2.048 for W.

In the sequel, we present some representative experimental results demonstrating the performance of the proposed techniques. First, we compare DSOE and DSOE\(^*\) with respect to runtime (i.e., the time needed to complete the computation in the cluster) by varying the number of Spark executors. For this experiment, we start with 8 Spark executors and gradually increase their number, keeping \(k=10\). The DBLP graph has been used in this case. Figure 1(a) shows the runtime of both algorithms as the number of executors increases. It is evident that both algorithms are scalable, since there is a significant speedup as we double the number of executors. Moreover, DSOE\(^*\) shows better performance than DSOE.

Another important factor is the number of probes as a function of k. We run our algorithms on a significantly smaller dataset (YOUTUBE1) for different values of k (i.e., 1, 10, 100 and 1000). The corresponding results are given in Fig. 1(b). The first observation is that both algorithms perform a significant number of probes. However, this was expected, taking into account that if the average node degree is very small or very large, then many probes are required before the algorithms can provide the answer, as has been shown in [6]. Also, in general DSOE\(^*\) requires fewer probes than DSOE for small values of k.

One more very interesting aspect is the performance with respect to the graph size. For this reason we apply DSOE\(^*\) on the DBLP graph twice: in the first execution we have \(|B| = 4,000,150\) and \(|W| = 1,425,813\), and in the second one we reverse the direction of the queries, from W to B. This way the graphs that we compare have exactly the same number of edges and vertices, but they differ significantly in the cardinalities of B and W. Figure 2(a) presents the corresponding results. It is observed that in the reverse case the runtime is significantly higher, since for every node in W there are more options to perform probes on B. In general, the cost of the algorithm drops if the cardinality of B is larger than that of W.

The goal of the last experiment is to test scalability. First we focus on B and then on W. For this experiment the DBLP, YOUTUBE2 and WIKIPEDIA datasets have been used. These graphs have almost the same cardinality in one set and differ in the cardinality of the other. For all tests we have used 64 Spark executors and the implementation of the DSOE\(^*\) algorithm with \(k=10\). The corresponding results are given in Fig. 2(b). It is evident that although the cardinalities of both B and W have an impact on performance, execution time is more sensitive to the cardinality of W when used as the source set.

5 Conclusions

In this work, we study for the first time the distributed detection of the top-k nodes with the highest degrees in a hidden graph. Since the set of edges is not available a priori, edge probing queries must be applied in order to explore the graph. We have designed two algorithms to attack the problem (DSOE and DSOE\(^*\)) and evaluated their performance on real-world networks. In general, the algorithms are scalable, showing good speedup factors as the number of Spark executors increases.

By studying the experimental results, one important observation is that the number of probes is in general large. Therefore, more research is required to significantly reduce the number of probes while preserving the good quality of the answer. Also, it is interesting to evaluate the performance of the algorithms on even larger networks containing significantly larger numbers of nodes and edges.

References

[1] Aggarwal, C.C., Wang, H.: Managing and Mining Graph Data. Springer, New York (2010). https://doi.org/10.1007/978-1-4419-6045-0

[2] Alon, N., Asodi, V.: Learning a hidden subgraph. SIAM J. Discrete Math. 18(4), 697–712 (2005)

[3] Bouvel, M., Grebinski, V., Kucherov, G.: Combinatorial search on graphs motivated by bioinformatics applications: a brief survey. In: Kratsch, D. (ed.) WG 2005. LNCS, vol. 3787, pp. 16–27. Springer, Heidelberg (2005). https://doi.org/10.1007/11604686_2

[4] Goldreich, O., Goldwasser, S., Ron, D.: Property testing and its connection to learning and approximation. J. ACM 45(4), 653–750 (1998)

[5] Strouthopoulos, P., Papadopoulos, A.N.: Core discovery in hidden graphs. CoRR (to appear in Data and Knowledge Engineering) abs/1712.02827 (2017)

[6] Tao, Y., Sheng, C., Li, J.: Finding maximum degrees in hidden bipartite graphs. In: Proceedings ACM International Conference on Management of Data (SIGMOD), Indianapolis, IN, pp. 891–902 (2010)

[7] White, T.: Hadoop: The Definitive Guide, 4th edn. O’Reilly Media Inc., Sebastopol (2015)

[8] Yiu, M.L., Lo, E., Wang, J.: Identifying the most connected vertices in hidden bipartite graphs using group testing. IEEE Trans. Knowl. Data Eng. 25(10), 2245–2256 (2013)

[9] Zaharia, M., et al.: Apache Spark: a unified engine for big data processing. Commun. ACM 59(11), 56–65 (2016)

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Kostoglou, P., Papadopoulos, A.N., Manolopoulos, Y. (2019). Distributed Computation of Top-k Degrees in Hidden Bipartite Graphs. In: Welzer, T., et al. New Trends in Databases and Information Systems. ADBIS 2019. Communications in Computer and Information Science, vol 1064. Springer, Cham. https://doi.org/10.1007/978-3-030-30278-8_1

DOI: https://doi.org/10.1007/978-3-030-30278-8_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30277-1

Online ISBN: 978-3-030-30278-8

eBook Packages: Computer Science (R0)