Abstract

Building and using models to make sense of phenomena or to design solutions to problems is a key science and engineering practice. Using technology-based tools in class can promote the development of students’ modeling practice, systems thinking, and causal reasoning. In this chapter we focus on the development of students’ system modeling competence that became evident as students engaged in the modeling practice while using an online modeling tool in the context of a high school chemistry unit. We describe and provide examples for four aspects of system modeling competence: (1) defining the boundaries of the system by including components in the model that are relevant to the phenomena under investigation, (2) determining appropriate relationships between components in the model, (3) using evidence and reasoning to build, evaluate, and revise models, and (4) interpreting the behavior of a model to determine its usefulness in explaining and making predictions about phenomena. We discuss how building, using, evaluating, and revising models can be classified into the four system modeling competence aspects, and how technology tools can support the development of students’ system modeling competence.



1 Introduction

The modeling practice, which includes constructing, using, evaluating, and revising models, has always been central to the work of scientists and has recently gained more prominence in science classrooms. In parallel, the prominence of systems thinking and system modeling has grown in science education as students are expected to investigate complex systems to make sense of phenomena (National Research Council [NRC], 2012). As technologically advanced modeling tools have become more widely used in STEM education, the question arises as to how these tools influence learning and how they can be used to probe theories about learning through modeling. The reverse question may also be asked: how do theories of modeling influence the development of digital modeling tools? Our prior experiences and understandings of scientific modeling, its importance in the development of many areas of science, and our belief in the educational value of system modeling in particular inspired us to develop a modeling tool that could provide a better on-ramp to systems thinking. In turn, classroom use of the modeling tool provided us with a new and detailed view into student modeling practices and challenges.

We begin with a brief overview of the theoretical framework related to the development of system modeling tools, then describe the tool itself and several aspects of system modeling competence that it is designed to support. We conclude by discussing how the aspects of system modeling competence correspond with the ideas of modeling competence presented in this book.

2 A Framework for System Modeling Competence

The Next Generation Science Standards (NGSS Lead States, 2013), a set of internationally benchmarked standards for science education widely used in the United States, defines the modeling practice at the secondary school level as developing, revising, using, and evaluating models to predict and explain phenomena (Appendix F, p. 6). Lehrer and Schauble (2015) point out that many philosophers and researchers have identified modeling as the signature practice of science, and that the other seven science and engineering practices of the NGSS are “deployed in the goal of constructing, revising, critiquing, and contesting models of the natural world” (p. 1241). Furthermore, the modeling practice is viewed as critical both for advancing scientific knowledge and for helping students make sense of phenomena and share their ideas (Harrison & Treagust, 2000; Passmore, Gouvea, & Giere, 2014; Schwarz et al., 2009). Engaging learners in constructing, using, evaluating, and revising models is key to helping them build usable knowledge (Pellegrino & Hilton, 2012) that can explain and predict phenomena and solve problems.

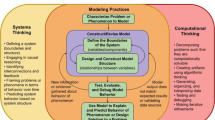

An important part of modeling stressed by A Framework for K-12 Science Education (NRC, 2012) is the ability to generate models. Schwarz et al. (2009) maintain that it is crucial to involve learners in the construction of models rather than primarily working with models provided by teachers or scientific authorities, and that by doing so students can articulate their own understanding. Clement (2000, 2008) argues for the importance of having students construct explanatory models, but found that most students did not do so without appropriate support. In the Schwarz et al. (2009) study, with the right supports, elementary and middle school students were able to develop a more sophisticated view of modeling, construct models that included explanatory mechanisms, and use these models to make predictions about closely related phenomena. However, students face several challenges when constructing and using models and have few opportunities to engage in this practice (Harrison & Treagust, 2000; Schwarz et al., 2009).

Engaging in the modeling practice often means thinking about phenomena from a system perspective, commonly referred to as ‘systems thinking.’ Systems thinking encompasses the cognitive processes involved in understanding and working with complex systems. It includes consideration of the system boundaries, the components of the system, the interactions between components within the system and between different systems, and the fact that systems have emergent properties arising from the behavior of the system (Passmore et al., 2014). Models are tools that represent the investigated system and can support students in figuring out how complex systems behave and in predicting the outcome of changes in those systems (Yoon et al., 2015). As described in A Framework for K-12 Science Education (NRC, 2012), “An explicit model of a system under study can be a useful tool not only for gaining understanding of the system but also for conveying it to others. Models of a system can range in complexity from lists and simple sketches to detailed computer simulations or functioning prototypes. Models can be valuable in predicting a system’s behaviors or in diagnosing problems or failures in its functioning, regardless of what type of system is being examined” (pp. 91–92).

The basic structure of a system model is essentially a network of causal links. Systems thinking is challenging for students: the dynamic nature of these models, together with the difficulties people have with causal reasoning, interferes with the ability to design complex system models and predict their outcomes (Zimmerman, 2007; Chinn & Brewer, 2001). We may be able to conceptualize each component of a model, but ‘running’ the entire model in our heads is nearly impossible. The outcomes of complex system models can be counterintuitive, and it is difficult to know a priori which components of the system will have a significant impact. The difficulties of understanding complex systems are well documented. Engaging in the modeling practice through system modeling can help students develop a systems thinking perspective (Booth Sweeney & Sterman, 2000, 2007; Dörner, 1980, 1996; Fretz et al., 2002; Hmelo-Silver & Pfeffer, 2004; Jacobson & Wilensky, 2006).

Students can engage in modeling in a number of different ways. Commonly this occurs through the construction of a physical representation, illustration, or model diagram (Krajcik & Merritt, 2012). It also occurs through the exploration of simulations designed and produced by others (Rutten, Van Joolingen, & Van Der Veen, 2012). However, it is uncommon for students to create their own testable models from scratch. Such models are usually computational and require significant expertise in computer programming, writing mathematical equations, or both. Our challenge was to overcome these barriers and make the construction, testing, sharing, and revising of computational models accessible to many more students. Opening up the full range of engagement in the modeling practice puts within reach growth in students’ modeling practices, and a level of modeling competence, that would not otherwise be possible.

A framework for system modeling competence should encompass key features of how students build, evaluate, use, and revise models. We have identified four aspects of system modeling competence that appear to be necessary in order for students to construct system models useful for understanding natural phenomena:

1. Defining the boundaries of the system by including components in the model that are relevant to the phenomenon under investigation.
2. Determining appropriate relationships between components in the model.
3. Using evidence and reasoning to build, evaluate, use, and revise models.
4. Interpreting the behavior of a model to determine its usefulness in explaining and making predictions about phenomena.

The first two aspects of the framework for system modeling competence encompass the most common challenges we have observed in students’ model building (Damelin, 2017). These relate primarily to model structure and provide insight into students’ systems thinking and causal reasoning. The third aspect stems from the question that always arises after a student has defined a relationship between components in a model: ‘How do I know this relationship is right?’ To answer that question, students can compare the output of a model to validating data sources such as publicly available datasets, results from their own experiments, teacher demonstrations, and readings. The fourth aspect comes from how models are used in the real world to explain and make predictions about phenomena or to solve a problem. All four aspects of the framework for system modeling competence are important for students to engage in when designing, testing, and revising models, and when building their understanding of the purpose and nature of models. In this chapter we describe the four aspects of system modeling competence and illustrate them with several examples from students’ models developed in a high school curricular unit.

3 Development of the Modeling Tool

As part of a U.S. National Science Foundation-funded project (Footnote 1), we developed a modeling tool called SageModeler and embedded it in an environment that would allow the model output to be compared with external validating data sources. Our hypothesis was that an iterative approach to model construction that uses real-world data and experiences as evidence for the relationships between components of the model would result in students creating models they could use to explain and make predictions about the phenomenon under study.

SageModeler (Footnote 2), a free, web-based tool, is designed to support students, beginning in middle school, in engaging in systems thinking. SageModeler was inspired by a previously designed modeling tool, Model-it (Metcalf-Jackson, Krajcik, & Soloway, 2000). It facilitates the diagramming of a system and makes it possible to calculate and visualize model output without requiring students to write equations or code. Several scaffolds were built into the software to achieve these goals (Damelin, Krajcik, McIntyre, & Bielik, 2017).

Students begin by dragging images that represent model components to the canvas and linking them together to represent a relationship between those components. This initial system diagram provides an opportunity for students to make their first choices about what should be included within the boundaries of the system and to indicate how the causal chains will direct model behavior. At this point the model diagram is a visual representation of a student’s systems thinking. This feature of the software supports students in engaging in aspect 1 above, defining the boundaries of the system.

In order for the system diagram to become a runnable model, each component is treated as a variable that can be calculated by the modeling engine. The next step is to define each relationship link in the model so that the effect of one variable on each variable it is linked to can be calculated. To do this without coding or writing equations, students construct a verbal description of how one variable affects another. For example, in a model of gas properties, students could use the relationship inspector to construct a sentence such as: An increase in Volume causes Pressure to [decrease] by [about the same] (Fig. 16.1). The bracketed parts of that sentence are chosen from drop-down menus, and the resulting relationship is also depicted as a graph. Defining relationships with words helps students overcome the mathematical obstacles typically associated with creating computational models and allows them to focus on a conceptual understanding of the relationships between variables (Stephens & Ke, 2017). This feature of the software engages students in aspect 2, producing a model that instantiates the student’s thinking about the workings of a phenomenon and that can now be tested.
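The sentence-based relationship inspector can be pictured as a small data structure: two menu choices that both render a readable sentence and select a curve for computing the effect variable. The sketch below is only an illustration under stated assumptions, not SageModeler's implementation. The 0 to 100 scale, the class name `Relationship`, and the particular curve assigned to each menu label are hypothetical; only the menu labels themselves ("about the same", "a little", "a lot", "more and more") come from this chapter.

```python
from dataclasses import dataclass

SCALE_MAX = 100.0  # assumption: every variable is normalized to a 0..100 scale

# Hypothetical curves for the "by [amount]" menu. Each maps 0..100 onto 0..100
# for an increasing relationship; a decreasing one is mirrored in apply().
AMOUNT_CURVES = {
    "about the same": lambda x: x,
    "a little": lambda x: 0.5 * x,
    "a lot": lambda x: min(SCALE_MAX, 2.0 * x),
    "more and more": lambda x: x * x / SCALE_MAX,
}


@dataclass
class Relationship:
    cause: str
    effect: str
    direction: str  # "increase" or "decrease", chosen from a menu
    amount: str     # one of the AMOUNT_CURVES keys, chosen from a menu

    def sentence(self) -> str:
        """Render the link as the sentence a student reads and edits."""
        return (f"An increase in {self.cause} causes {self.effect} "
                f"to {self.direction} by {self.amount}")

    def apply(self, cause_value: float) -> float:
        """Value of the effect variable for a given value of the cause variable."""
        y = AMOUNT_CURVES[self.amount](cause_value)
        return y if self.direction == "increase" else SCALE_MAX - y


gas = Relationship("Volume", "Pressure", "decrease", "about the same")
print(gas.sentence())   # An increase in Volume causes Pressure to decrease by about the same
print(gas.apply(30.0))  # 70.0
```

The linear "decrease by about the same" curve is a semi-quantitative stand-in: it captures the direction of the gas relationship, not the exact inverse proportionality of Boyle's law. That trade-off is what lets students reason about direction and relative strength without writing equations.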

Once a system includes variables and relationships between those variables, the model can be run, generating tables and graphs that provide feedback on the behavior of the model. To simplify comparing model output with other data sources, SageModeler is embedded in CODAP, the Common Online Data Analysis Platform (Finzer & Damelin, 2016). CODAP is an intuitive graphing and data analysis platform that takes the outputs generated by the system model, as well as any other data source—from published data sets to results of computational models or student physical experiments—and combines them into a single analytic environment. Students use the feedback from the visualizations in CODAP to inform iterative cycles of creating, testing, and evaluating their models. Here students engage in aspects 3 and 4, using model output in comparison with an external data set to validate choices made about model components and relationships, resulting in a working model that can be used to explain and make predictions about the phenomenon under study.
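Running a model amounts to computing every variable from the ones that affect it, causes before effects, and then setting the output beside another data source. The sketch below illustrates that loop under stated assumptions. It does not use CODAP or SageModeler; the link functions, the averaging rule for variables with several causes, the names `run_model` and `mean_absolute_error`, and the "observed" points are all hypothetical.

```python
from graphlib import TopologicalSorter

DEFAULT_VALUE = 50.0  # assumed starting value for an unlinked, unset variable

# Hypothetical links, each mapping a cause value (0..100) to an effect value.
LINKS = {
    ("Temperature", "Air pressure inside"): lambda t: t,                      # increases about the same
    ("Air pressure inside", "Likelihood of implosion"): lambda p: 100.0 - p,  # decreases about the same
}


def run_model(inputs: dict[str, float]) -> dict[str, float]:
    """Compute every variable, visiting causes before their effects."""
    predecessors: dict[str, set[str]] = {}
    for cause, effect in LINKS:
        predecessors.setdefault(cause, set())
        predecessors.setdefault(effect, set()).add(cause)
    values = dict(inputs)
    for var in TopologicalSorter(predecessors).static_order():
        if var in values:
            continue  # independent variable set by the student
        contributions = [f(values[c]) for (c, e), f in LINKS.items() if e == var]
        # Averaging several incoming links is an assumption of this sketch.
        values[var] = sum(contributions) / len(contributions) if contributions else DEFAULT_VALUE
    return values


def mean_absolute_error(observed, input_var, output_var):
    """Average gap between model output and (input, observed output) pairs."""
    gaps = [abs(run_model({input_var: x})[output_var] - y) for x, y in observed]
    return sum(gaps) / len(gaps)


# Placeholder validating data: invented points for illustration, not measurements.
observed = [(20.0, 25.0), (50.0, 50.0), (80.0, 75.0)]
print(run_model({"Temperature": 30.0}))
print(mean_absolute_error(observed, "Temperature", "Air pressure inside"))  # about 3.33
```

A single summary number such as mean absolute error is only one possible comparison; in the classroom work described above the comparison is visual, with students reading model output and external data side by side in graphs.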

To discuss the aspects of system modeling competence in the context of a model created with SageModeler, we use the initial and final models created by a pair of high school students engaged in a chemistry unit about the emergent properties of gases. The phenomenon that was the focus of the unit was framed for students in the following way:

It was the end of a long work day on the railway. It was a cooler day and a chilled rain was falling from the sky. A few of the workers were given the task of steam cleaning one of the 67,000-pound, half-inch-thick steel tankers. When they were done, they sealed up the tanker and went home. Not long after they left, disaster struck, and the steel-walled tanker collapsed in on itself. So, how can something that can’t be seen crush a 67,000-pound oil tanker made of half-inch steel? (Footnote 3)

The driving question of the unit was, ‘How can something that can’t be seen crush a 67,000 lb. oil tanker made of half inch steel?’

The initial model created by one pair of students is shown in Fig. 16.2.

Fig. 16.2 Initial model created by one student pair. The red arrows indicate positive relationships (i.e., as one variable increases, so will the other). The thickness of the arrow represents how much of an effect one variable has on another: a thin arrow indicates a small effect, a thick arrow indicates a large effect, and a gradually thickening arrow indicates a change by ‘more and more’.

Below we expand upon each aspect of system modeling competence, illustrating them with examples from student models in Figs. 16.2 and 16.3.

Fig. 16.3 Final model from the same student pair as in Fig. 16.2, after 3 weeks of classroom activities and four model revisions. The red arrows indicate positive relationships (as one variable increases, so does the other), and the blue arrows indicate negative relationships (as one variable increases, the other decreases). The thickness of the arrow represents how much of an effect one variable has on another.

4 Results

4.1 Define Boundaries of a System by Choosing Components in the Model That Are Relevant to the Phenomenon Under Investigation

When constructing a model of some phenomenon it is important that the model is expansive enough to include all the relevant components and relationships to produce appropriate behaviors, but not so expansive that it complicates the model, hindering understanding of the system. This aspect can manifest itself in two ways.

(a) Distinguishing between objects and variables

In order for a system model created using the modeling tool to generate data, each component of the model must be a variable that represents a measurable quantity or quality, something that can be defined on a low-to-high scale. Many students, especially in early iterations of their models, include objects or other components that have no inherent measurement scale. One scaffold designed into the modeling tool to support students in this aspect is the sentence in the relationship inspector used to define the effect of one variable on another (Fig. 16.1). When defining these relationships, students are asked to choose the appropriate semi-quantitative effect (about the same, a little, a lot, etc.). If students have not labeled a component as a measurable variable, the resulting sentence will not make sense. For example, consider ‘an increase in composition of air causes temperature change to increase.’ What does it mean to increase the composition of air? The strangeness of this sentence should signal to the student that the composition of air variable should be relabeled as something measurable or, as in this case, removed if it is not relevant to the model behavior. These students came to that realization themselves in a later iteration of this model (Fig. 16.3).

(b) Choosing relevant variables through consideration of appropriate scope and significance of effect

There are two questions of scope regarding variable relevance: Is the variable related to the phenomenon being modeled, and if so, is the level of detail implied by the variable appropriate for the questions being asked of the model? The first question is easier for students to address, and may be supported by asking whether the model would still explain the phenomenon if that variable were removed. However, early in the development of a model, before the components of the system and their effects are well understood, decisions about which components to include can be challenging. The second question, regarding the level of detail a model should include, tends to be harder to answer. It would be inappropriate for every model to drill down to the level of atomic or subatomic interactions, while some models do require this level of detail. The scope of variables to include therefore depends not only on the phenomenon being modeled, but also on which features of the phenomenon are important to understand. In the case of emergent properties of gases, a molecular-level understanding, while not absolutely necessary, provides a richer and more widely applicable model.

Even when variables are all clearly within an appropriate boundary of the system being modeled and are at an appropriate level of detail, some variables will have a greater effect than others. Variables can be related to the phenomenon but have so little effect on the model output as to be insignificant. Including these variables only complicates the model and obstructs exploration of the salient features. In Fig. 16.2 the variable Elevation is included and linked to Air pressure (Inside and Outside). While it is scientifically correct that elevation will affect air pressure, the effect will be insignificant on a model of this phenomenon, which occurs under typical atmospheric conditions.
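One way to make "so little effect as to be insignificant" concrete is a one-at-a-time sensitivity check: move each input across the range it could plausibly take in the scenario while holding the others fixed, and see how much the output changes. The sketch below uses a simplified physical model written only for illustration. The numbers (sealing at 101.3 kPa and 373 K, a drop of roughly 0.012 kPa per metre of elevation near sea level, a 5 m elevation range, the 1% threshold) and the function names are assumptions, and the model ignores steam condensation.

```python
def pressure_difference(inputs: dict[str, float]) -> float:
    """Hypothetical model output: outside minus inside air pressure, in kPa."""
    # Inside: sealed air whose pressure scales with absolute temperature
    # (Gay-Lussac's law), assuming 101.3 kPa when sealed at 373 K. Steam
    # condensation, which matters a great deal for the real tanker, is ignored.
    inside = 101.3 * inputs["temperature_K"] / 373.0
    # Outside: near sea level, pressure falls by roughly 0.012 kPa per metre.
    outside = 101.3 - 0.012 * inputs["elevation_m"]
    return outside - inside


def output_swing(model, baseline, var, low, high):
    """How much the output changes when one input spans its plausible range."""
    return abs(model({**baseline, var: high}) - model({**baseline, var: low}))


def negligible_inputs(model, baseline, ranges, rel_threshold=0.01):
    """Inputs whose swing is below rel_threshold times the largest swing."""
    swings = {v: output_swing(model, baseline, v, lo, hi) for v, (lo, hi) in ranges.items()}
    largest = max(swings.values())
    return [v for v, s in swings.items() if s < rel_threshold * largest]


baseline = {"temperature_K": 283.0, "elevation_m": 0.0}
# Assumed plausible ranges within the scenario: the tanker cools from steam
# temperature to a cool rainy day, but it does not change elevation much.
ranges = {"temperature_K": (283.0, 373.0), "elevation_m": (0.0, 5.0)}
print(negligible_inputs(pressure_difference, baseline, ranges))  # ['elevation_m']
```

The check does not say that elevation never matters for air pressure; it says that over the range elevation actually varies in this scenario, its effect is dwarfed by that of temperature, which is the reasoning applied above to the Elevation variable in Fig. 16.2.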

4.2 Determine Appropriate Causal and Correlational Relationships Between Components in the Model

Defining the interactions and relationships between elements in the model is critical for developing a good scientific model. These relationships will determine the outcome of the model. When using modeling tools, this aspect can manifest itself in two ways:

(a) Defining logically correct and scientifically accurate relationships to represent interactions between variables

There are several ways a link between two variables could be incorrect:

(i) There may be no relationship between variable A and variable B. Although A and B may covary, a change in one may not cause a change in the other. Linking these two variables in a model might produce the expected outcomes, but there would be no rationale for including that link in a causal chain.

(ii) There is a relationship, but the way it is defined doesn’t match the real-world behavior of the interaction between variable A and variable B. For example, some students correctly predicted that an increase in (external) pressure would decrease the volume of a gas (assuming the container can change size), but they defined a directly proportional relationship rather than an inversely proportional one.

(iii) The direction of causality is reversed. It was not uncommon for one or more relationships in a causal chain to be reversed.

An example of this aspect can be seen in how the pair of students reversed the link between air pressure and temperature from their initial model (Fig. 16.2) to their final model (Fig. 16.3). In the phenomenon explored in this unit, temperature affects air pressure rather than the other way around, and the students recognized this during their model building and testing iterations. They also changed the relationship between air pressure and likelihood of implosion from positive to negative, as they realized that an increase in pressure inside the tanker would decrease the chance of the tanker imploding.
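Case (ii) above can be stated precisely. For a fixed amount of gas $n$ at constant temperature $T$, Boyle's law makes volume $V$ inversely, not directly, proportional to pressure $P$, so a correct link from pressure to volume must carry a negative sign ($k$ is a positive constant):

$$
PV = k \quad (\text{fixed } n,\ T)
\quad\Longrightarrow\quad
V = \frac{k}{P},
\qquad
\frac{dV}{dP} = -\frac{k}{P^{2}} < 0,
$$

whereas the directly proportional link some students defined, $V = cP$ with $c > 0$, gives $dV/dP = c > 0$, predicting that volume grows as external pressure grows.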

(b) Defining direct relationships between variables

This is one of the most complicated tasks for students when constructing models. There are two considerations related to the directness of relationships:

(i) Large gaps in the causal chain. For example, linking temperature directly to pressure might be acceptable if a model describes what happens at a macroscopic level. However, if a molecular-level explanation is expected, one could argue that other variables should come between temperature and pressure; a chain such as temperature → molecular kinetic energy → speed of molecules → number and strength of molecular collisions → pressure might be more appropriate.

(ii) Inclusion of indirect relationships between variables. Students often show one variable having an effect on two or more other variables in the model. It is not typical for one variable to truly have a direct effect on many others; even less likely to be accurate is a variable that is connected both to the beginning of a causal chain and to a later part of the same chain of linked variables.

Identifying large gaps, b(i), overlaps with the issue of defining system boundaries, because a relationship defined across a large gap leaves relevant variables out of the model. Identifying indirect relationships, b(ii), is the greater challenge for students.

In Fig. 16.3, the variable Volume of Air in Tanker is linked to the Implosion variable in two ways. This raises several issues, one of which concerns appropriate labeling of variables. Across model iterations the students attached different meanings to the Volume of Air variable: at one point it referred to the amount of air molecules, and at other points to the volume of the tanker. Though they had not untangled all of these issues by the end of the unit, they did consider it problematic that Volume affected Implosion via two different causal chains in their model. One of them noted in an interview that the link that did not include Air Pressure was unnecessary and that they planned to remove it.
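The pattern described in b(ii) and in the Fig. 16.3 example, a direct link A → C alongside a longer chain A → B → C, can be detected mechanically: for each link, ask whether its effect is still reachable from its cause once that link is removed. The sketch below does this with a breadth-first search. The three-link graph is a simplified, hypothetical reconstruction containing only the pathways named in the text, not the full model in Fig. 16.3, and the function names are illustrative.

```python
from collections import deque


def reachable_without(graph, start, target, skipped):
    """True if target can be reached from start without using the skipped link."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if (node, nxt) == skipped or nxt in seen:
                continue
            if nxt == target:
                return True
            seen.add(nxt)
            queue.append(nxt)
    return False


def shortcut_links(graph):
    """Links A -> C that duplicate a longer causal chain A -> ... -> C."""
    return [(cause, effect)
            for cause, effects in graph.items()
            for effect in effects
            if reachable_without(graph, cause, effect, (cause, effect))]


# Hypothetical, simplified model: each variable maps to the variables it affects.
model = {
    "Temperature": ["Air pressure"],
    "Volume of air in tanker": ["Air pressure", "Implosion"],
    "Air pressure": ["Implosion"],
}
print(shortcut_links(model))  # [('Volume of air in tanker', 'Implosion')]
```

A flagged link is a prompt for discussion rather than an automatic error: two genuinely different mechanisms can connect the same pair of variables, but students should then be able to name both.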

4.3 Using Evidence and Reasoning to Build, Evaluate, and Revise Models

A well-designed model, one with explanatory and predictive power regarding real-world phenomena, should use evidence to justify which variables are included and how the relationships are defined. Evidence for including individual model components can come from empirical data students collect or from external data sources, and should be supported by reasoning based on scientific principles.

For example, at the beginning of the properties of gases curricular unit, students were introduced to a phenomenon and driving question and asked to construct an initial model that they thought would help answer the driving question. Because they had not had much experience beyond observing the phenomenon, most of the relationships and some of the variables they used in their initial models were speculative, based on prior knowledge or intuitions about how to define the relationships. As the unit progressed, students conducted experiments, explored simulations, and discussed articles that gave them a foundation on which to defend their choices for specific relationships they had defined. We often observed students modifying those relationships and adding and deleting variables soon after engaging with these validating data sources.

4.4 Interpreting the Behavior of the Model to Determine Its Usefulness in Explaining and Generating Predictions About the Phenomena

One of our goals was to create a tool and associated curriculum that would support students in experiencing the modeling practice in a way similar to how scientists engage in modeling and systems thinking. Thus, this aspect of system modeling competence is related to students’ ability to use models in much the way scientists do. Achieving this aspect of competence means that students have not only created a testable model, but also understand how to run it, can visualize its output, can compare the model behavior with expected real-world behavior, can make predictions about similar events, and can use it to answer the unit’s driving question about the core phenomenon being modeled.

Although the students in the emergent properties of gases unit considered the validity of individual relationships between directly connected pairs of variables in their models, they showed less competence in considering the behavior of their models as a whole when predicting the investigated phenomena. For example, the pair of students who produced the model shown in Fig. 16.3 did not appear to notice that their model gave two different predictions for the effect of temperature on implosion. Their final model showed both a positive relationship between temperature and pressure and a negative relationship between those same two variables. Such a situation can be legitimate when two different causal pathways genuinely exist, or when a feedback loop causes an oscillating effect. However, two different predictions for the effect of a single variable usually indicate a problem with the model. These students constructed graphs of the relationships between different pairs of variables in their model, but did not treat the overall behavior of the model as feedback that could have helped them detect, diagnose, and resolve problems, in this case arising from inconsistency in the way they were thinking about the variable Volume of Air in Tanker.
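Checking the model as a whole, rather than one link at a time, can start with a simple question: do all causal paths from a variable to the outcome agree in sign? The sketch below enumerates simple paths, multiplies the signs of their links (+1 for a positive link, -1 for a negative one), and reports a conflict when paths disagree. The link set and function names are hypothetical and only illustrate the kind of inconsistency described above; the link set is not a transcription of Fig. 16.3.

```python
def path_signs(links, source, target):
    """List (path, sign) for every simple causal path from source to target.

    links maps (cause, effect) -> +1 (positive link) or -1 (negative link).
    A path's sign is the product of its link signs.
    """
    found = []

    def walk(node, path, sign):
        if node == target:
            found.append((path, sign))
            return
        for (cause, effect), s in links.items():
            if cause == node and effect not in path:  # never revisit a variable
                walk(effect, path + [effect], sign * s)

    walk(source, [source], 1)
    return found


def has_conflicting_predictions(links, source, target):
    """True when some paths predict an increase and others a decrease."""
    return {s for _, s in path_signs(links, source, target)} == {1, -1}


# Hypothetical link set illustrating the problem; not a transcription of Fig. 16.3.
links = {
    ("Temperature", "Air pressure"): +1,
    ("Air pressure", "Implosion"): -1,
    ("Temperature", "Volume of air in tanker"): +1,
    ("Volume of air in tanker", "Implosion"): +1,
}
for path, sign in path_signs(links, "Temperature", "Implosion"):
    print(" -> ".join(path), "+" if sign > 0 else "-")
print(has_conflicting_predictions(links, "Temperature", "Implosion"))  # True
```

Because the search never revisits a variable, it cannot detect the oscillating feedback effects mentioned above; those show up only when a model is run over time. A sign conflict is also not proof of an error when two real pathways compete, but it is the kind of whole-model feedback that could have prompted these students to revisit the Volume of Air in Tanker variable.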

5 Discussion

In the examples provided above, students encountered some challenges with causal reasoning similar to those described by Jonassen and Ionas (2008), Schauble (1996), and Koslowski and Masnick (2002). These challenges were most apparent when students were asked to provide evidence and justifications for the variables and relationships defined in their models and to explain how their model addressed the driving question in the unit. However, students’ model-based explanations improved after each model revision, indicating that the technology-rich modeling environment and curricular materials offered support to students in developing their causal reasoning.

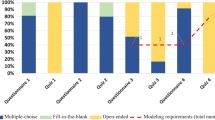

In line with the goals of scientific modeling practice (NRC, 2012; Schwarz et al., 2009), this chapter provided examples of how students utilized a modeling tool to construct, use, evaluate, and revise their own models. Iterative cycles of model testing and revision stand at the heart of the modeling practice (Lehrer & Schauble, 2006; Magnani, Nersessian, & Thagard, 1999). Students iteratively revised their models to explain the phenomenon under investigation by honing the boundaries of the system and improving relationships between the variables in their models. This process is recommended by Schwarz et al. (2009) and aligns with the modeling cycle presented in Chap. 2 of this book and described by Krell, Upmeier zu Belzen, and Krüger (2014), in which students move between the experimental world and the model world. Our evidence supports the notion that students require repeated, appropriate opportunities to use validating data sources to develop, test, evaluate, and revise their models in order to gain this competence. Students were able to take the ideas they learned in their real-world investigations and incorporate them, with the support of the teacher and their peers, into their computational models. Although we saw improvements with each iteration, the examples in this chapter indicate that it was not easy for students to build toward all four aspects of system modeling competence discussed here. Most students made progress toward them, showing the greatest gains in aspects 1 and 2, which relate to model structure, but the results suggest that progress toward aspects 3 and 4 would have benefited from more explicit connections to validating data sources and more explicit support for using the models to make predictions about specific phenomena.

The impetus for developing a modeling tool for students was to support them in developing, using, testing, and revising models, which are key aspects of the modeling practice. The focus was primarily on the more general aspects (aspects 3 and 4) articulated in the framework for system modeling competence. This informed how we designed the tool to support sense-making with models through comparative data analysis and ease of model construction. We provided scaffolds to encourage students to articulate evidence for defining particular relationships and designed tools for sharing models and supporting peer review. These features scaffolded students’ growth toward aspect 3: using evidence and reasoning to build, evaluate, use, and revise models. We also built the tool on an existing data analytic environment, which was designed for student visualization of data and facilitates the comparison of data across multiple data sources. This feature of the tool design supports student growth in aspect 4: interpreting the behavior of a model to determine its usefulness in explaining and making predictions about phenomena.

At the same time that the framework for system modeling competence was informing the design of the modeling tool, our experience with students during tool development influenced the development of the framework. Aspects 1 and 2 grew primarily out of challenges we observed students encountering when building system models. Some of these were anticipated, such as the need to include only measurable variables as model components, but other barriers to students’ generation of useful models also proved to be significant obstacles. In particular, the issues students encountered in causal reasoning and graph literacy spawned many discussions about how to address them, both through software scaffolds, such as the design of the relationship inspector (Fig. 16.1), and through pedagogical scaffolds, which included teacher materials to support targeted discussions around these issues and easy ways for the teacher to project student models for discussion.

The framework for modeling competence (FMC; Chap. 1) arose in a different context from ours, but connections can be drawn between the two approaches. Grosslight, Unger, Jay, and Smith (1991) defined three general levels of model understanding. In level I, students consider models to be mere copies of reality. In level II, students still consider models to be copies of reality, but they begin to understand that models can be used for different purposes and can focus on certain features of the target reality. In level III, students perceive models as representational tools for developing and testing ideas. They also understand that the roles of the modeler and of the models can change as understanding develops. Building on these levels, Krell, Upmeier zu Belzen, and Krüger (2014) developed the FMC, which proposes three levels of understanding for each of five aspects of modeling (Chap. 1). Levels I and II in this framework treat models as descriptions of reality. Level III treats models as predictive research tools for testing different hypotheses (Krell et al., 2014; Krell & Krüger, 2016; Krell, Reinisch, & Krüger, 2015).

We suggest that students with level III modeling competence would perform at a high level on the four aspects of system modeling competence. We would expect this especially of students at level III in the aspects testing models and changing models. Successfully testing and changing a model requires attending to all four aspects described in this chapter: choosing the relevant components of the system, determining the appropriate causal relationships between components, using evidence to support the model, and interpreting the behavior of the model. For the aspect purpose of models, students who understand the predictive power of a model (level III) should be more competent in interpreting the model's behavior, because the output of the simulation is meant to be used to predict the real-world behavior of the system being modeled. It is important to emphasize that the four system modeling aspects described in this chapter are not defined as levels. They are ideas about what students need to know and be able to do.

Our views on the aspect nature of models differ somewhat from those presented in Chap. 2. We believe that the explanatory power of a model is just as important as its predictive power. A good model that provides a complete and appropriate explanation of the phenomenon under investigation is just as meaningful for students’ learning as a model that is used to test hypotheses and predict changes in the system.

6 Conclusions

Modeling and systems thinking play an important role in supporting students in developing usable knowledge of science (Pellegrino & Hilton, 2012). Technologically advanced learning tools, such as the modeling tool described in this chapter, have the potential to support students’ engagement in the modeling practice. They can also provide an environment for demonstrating competence in modeling, systems thinking, and causal reasoning (Fishman, Marx, Blumenfeld, Krajcik, & Soloway, 2004; Quintana et al., 2004; Wagh, Cook-Whitt, & Wilensky, 2017; Yoon et al., 2015). However, as others have found (e.g., Fretz et al., 2002), students face substantial challenges when learning to engage in modeling, systems thinking, and causal reasoning. We believe that modeling tools that engage students in understanding phenomena through constructing, testing, evaluating, and revising models increase students’ competence in the four aspects of system modeling competence described in this chapter.

We have described the development of an online computational modeling tool and discussed four key aspects of the framework for system modeling competence. We illustrated these aspects with examples of competence drawn from students’ models in a high school chemistry unit that used the modeling tool. We believe that building toward these four aspects of system modeling competence provides a way to evaluate whether students are productively engaged in the modeling practice. This articulation of the framework for system modeling competence can also guide designers who are creating tools, curricula, and teacher supports to encourage student growth related to systems and system modeling.

Notes

1. This material is based upon work supported by the National Science Foundation under Grant Nos. 1417900 and 1417809. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

2. SageModeler can be freely accessed at https://learn.concord.org/building-models

3. The lead author of the chemistry unit was Erin Cothran, a teacher at Hudson High School in Hudson, MA.

References

Booth Sweeney, L., & Sterman, J. D. (2000). Bathtub dynamics: Initial results of a systems thinking inventory. System Dynamics Review, 16(4), 249–286.

Booth Sweeney, L., & Sterman, J. D. (2007). Thinking about systems: Student and teacher conceptions of natural and social systems. System Dynamics Review, 23(2–3), 285–311.

Chinn, C., & Brewer, W. (2001). Models of data: A theory of how people evaluate data. Cognition and Instruction, 19(3), 323–393.

Clement, J. (2000). Model based learning as a key research area for science education. International Journal of Science Education, 22(9), 1041–1053.

Clement, J. (2008). Creative model construction in scientists and students. Dordrecht, The Netherlands: Springer.

Damelin, D. (2017). Technical and conceptual challenges for students designing systems models. Paper presented at the Annual Meeting of the National Association for Research in Science Teaching (NARST), San Antonio, TX.

Damelin, D., Krajcik, J., McIntyre, C., & Bielik, T. (2017). Students making system models: An accessible approach. Science Scope, 40(5), 78–82.

Dörner, D. (1980). On the difficulties people have in dealing with complexity. Simulations and Games, 11(1), 87–106.

Dörner, D. (1996). The logic of failure: Recognizing and avoiding error in complex situations (R. Kimber & R. Kimber, Trans.). New York: Metropolitan Books.

Finzer, W., & Damelin, D. (2016). Design perspective on the common online data analysis platform. In C. E. Konold (Chair), Student thinking, learning, and inquiry with the common online data analysis platform. Symposium conducted at the meeting of the American Educational Research Association, Washington, DC.

Fishman, B., Marx, R. W., Blumenfeld, P., Krajcik, J., & Soloway, E. (2004). Creating a framework for research on systemic technology innovations. The Journal of the Learning Sciences, 13(1), 43–76.

Fretz, E. B., Wu, H. K., Zhang, B., Davis, E. A., Krajcik, J. S., & Soloway, E. (2002). An investigation of software scaffolds supporting modeling practices. Research in Science Education, 32(4), 567–589.

Grosslight, L., Unger, C., Jay, E., & Smith, C. L. (1991). Understanding models and their use in science: Conceptions of middle and high school students and experts. Journal of Research in Science Teaching, 28(9), 799–822.

Harrison, A. G., & Treagust, D. F. (2000). A typology of school science models. International Journal of Science Education, 22(9), 1011–1026.

Hmelo-Silver, C. E., & Pfeffer, M. G. (2004). Comparing expert and novice understanding of a complex system from the perspective of structures, behaviors, and functions. Cognitive Science, 28(1), 127–138.

Jacobson, M. J., & Wilensky, U. (2006). Complex systems in education: Scientific and educational importance and implications for the learning sciences. The Journal of the Learning Sciences, 15(1), 11–34.

Jonassen, D. H., & Ionas, I. G. (2008). Designing effective supports for causal reasoning. Educational Technology Research and Development, 56(3), 287–308.

Koslowski, B., & Masnick, A. (2002). The development of causal reasoning. In U. Goswami (Ed.), Blackwell handbook of childhood cognitive development (pp. 257–281). Oxford, UK: Blackwell Publishing, Ltd.

Krajcik, J., & Merritt, J. (2012). Engaging students in scientific practices: What does constructing and revising models look like in the science classroom? The Science Teacher, 79(3), 38.

Krell, M., & Krüger, D. (2016). Testing models: A key aspect to promote teaching-activities related to models and modelling in biology lessons? Journal of Biological Education, 50(2), 160–173.

Krell, M., Reinisch, B., & Krüger, D. (2015). Analyzing students’ understanding of models and modeling referring to the disciplines biology, chemistry, and physics. Research in Science Education, 45(3), 367–393.

Krell, M., Upmeier zu Belzen, A., & Krüger, D. (2014). Students’ levels of understanding models and modelling in biology: Global or aspect-dependent? Research in Science Education, 44(1), 109–132.

Lehrer, R., & Schauble, L. (2006). Cultivating model-based reasoning in science education. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (pp. 371–387). New York: Cambridge University Press.

Lehrer, R., & Schauble, L. (2015). Development of scientific thinking. In R. Lerner, L. Liben, & U. Müller (Eds.), Handbook of child psychology and developmental science: Vol. 2. Cognitive processes (7th ed., pp. 671–714). Hoboken, NJ: Wiley.

Magnani, L., Nersessian, N., & Thagard, P. (Eds.). (1999). Model-based reasoning in scientific discovery. New York: Kluwer Academic/Plenum Publishers.

Metcalf-Jackson, S., Krajcik, J., & Soloway, E. (2000). Model-it: A design retrospective. In M. Jacobson & R. Kozma (Eds.), Advanced designs for the technologies of learning: Innovations in science and mathematics education. Hillsdale, NJ: Erlbaum.

National Research Council. (2012). A framework for K-12 science education: Practices, crosscutting concepts, and core ideas. Washington, DC: The National Academies Press.

NGSS Lead States. (2013). Next generation science standards: For states, by states. Washington, DC: The National Academies Press.

Passmore, C., Gouvea, J. S., & Giere, R. (2014). Models in science and in learning science: Focusing scientific practice on sense-making. In International handbook of research in history, philosophy and science teaching (pp. 1171–1202). Amsterdam, The Netherlands: Springer.

Pellegrino, J. W., & Hilton, M. L. (2012). Education for life and work: Developing transferable knowledge and skills in the 21st century. Washington, DC: The National Academies Press.

Quintana, C., Reiser, B. J., Davis, E. A., Krajcik, J., Fretz, E., Duncan, R. G., et al. (2004). A scaffolding design framework for software to support science inquiry. The Journal of the Learning Sciences, 13(3), 337–386.

Rutten, N., Van Joolingen, W. R., & Van Der Veen, J. T. (2012). The learning effects of computer simulations in science education. Computers & Education, 58(1), 136–153.

Schauble, L. (1996). The development of scientific reasoning in knowledge-rich contexts. Developmental Psychology, 32(1), 102–119.

Schwarz, C. V., Reiser, B. J., Davis, E. A., Kenyon, L., Achér, A., Fortus, D., et al. (2009). Developing a learning progression for scientific modeling: Making scientific modeling accessible and meaningful for learners. Journal of Research in Science Teaching, 46(6), 632–654.

Stephens, L., & Ke, L. (2017). Explanations and relationships in students’ mental and external models. Paper presented at the Annual Meeting of the National Association for Research in Science Teaching (NARST), San Antonio, TX.

Wagh, A., Cook-Whitt, K., & Wilensky, U. (2017). Bridging inquiry-based science and constructionism: Exploring the alignment between students tinkering with code of computational models and goals of inquiry. Journal of Research in Science Teaching, 54(5), 615–641.

Yoon, S. A., Anderson, E., Koehler-Yom, J., Klopfer, E., Sheldon, J., Wendel, D., et al. (2015). Designing curriculum and instruction for computer-supported complex systems teaching and learning in high school science classrooms. J-STEM: Journal of Research in STEM Education, 1(1), 4–14.

Zimmerman, C. (2007). The development of scientific thinking skills in elementary and middle school. Developmental Review, 27(2), 172–223.

Copyright information

© 2019 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Bielik, T., Stephens, L., Damelin, D., Krajcik, J.S. (2019). Designing Technology Environments to Support System Modeling Competence. In: Upmeier zu Belzen, A., Krüger, D., van Driel, J. (eds) Towards a Competence-Based View on Models and Modeling in Science Education. Models and Modeling in Science Education, vol 12. Springer, Cham. https://doi.org/10.1007/978-3-030-30255-9_16

DOI: https://doi.org/10.1007/978-3-030-30255-9_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30254-2

Online ISBN: 978-3-030-30255-9

eBook Packages: Education, Education (R0)