Abstract

Mobile map applications have become a ubiquitous and important tool for navigation and exploration. However, existing map applications rely heavily on visual information and are targeted towards persons with normal vision. Users with visual impairments face significant barriers when using these apps; currently, no major map app offers an accessible, intuitive or understandable presentation of map data in a non-visual format. The goal of this ongoing research project is to explore how mobile map application data can be presented in an accessible and understandable way for visually impaired persons. As a first step, existing solutions were identified and analysed. It was found that information retention is highest when a combination of different output modalities is used. Three major non-visual modalities were identified: voice output (speech synthesis), ambient sounds (e.g. running water or birdsong), and vibration patterns. An initial prototype combining all of these modalities was created, and first user tests were performed. Based on the test results, new iterations of the app are being developed. These will be tested and improved, after which a proof of concept will be developed, and ultimately a functioning app will be created and tested by target users.

1 Introduction

According to a study by the Swiss Central Association for the Blind (SZB) in 2012, there are about 325,000 visually impaired persons in Switzerland [1]. Persons with visual impairments encounter many major obstacles, one of which is the difficulty of navigating and exploring unknown areas independently. To explore an unknown area, they cannot easily resort to maps, since commercially available maps are aimed at people with normal vision.

One solution is tactile maps, on which the information can be felt with the fingers. These are made using a special paper printing process which causes printed areas to swell upwards. However, these maps have significant disadvantages: they are expensive to produce, take up a lot of space, and generally present very little information on one page, since small details would be too difficult to distinguish by touch.

In recent decades, the presentation of geographical information has moved away from printed formats in favour of more convenient digital formats. Mobile map applications have become a ubiquitous and important tool for navigation and exploration. They have the advantage of being size-adjustable, meaning that the user can decide on the scope and level of detail they wish to be shown.

However, existing map applications are still heavily based on visual information, and, like most printed maps, are targeted towards persons with normal vision. Users with visual impairments face significant barriers when using these apps – currently, no major map app offers an accessible, intuitive or understandable presentation of map data in a non-visual format. This makes it essentially impossible for persons with visual impairments to quickly get a sense of a given environment, something which is particularly important before travelling to a new or unknown destination.

The goal of this research project is to explore how mobile map application data can be displayed in an accessible and understandable way for visually impaired persons in such a manner that it offers a quick and intuitive overview of a location and its surroundings.

1.1 Existing Research

In 2011, Zeng et al. [2] examined existing mapping systems for visually impaired persons. In their investigations, they distinguished between desktop and mobile solutions. Desktop solutions were defined as those used in a fixed location, whereas mobile solutions are GPS-based and are mainly used on smartphones. The authors concluded that, at the time of publication, few mobile applications were suitable for persons with visual impairments, but that many different solutions could be expected in the future. They note that audio output offers great potential, but that a combination of mobile application and braille output is also conceivable, as it distracts the user less from the everyday sounds around them.

A 2011 study by Poppinga et al. [3] examined whether a digital map using speech and vibration provides better accessibility than standard digital maps. The test subjects were instructed to use their finger to detect a network of streets and then attempt to draw it on paper. The results showed that it is generally possible to provide a visually impaired person with an overview of a map using this output method. These findings are very promising and serve as a basis for this work. Given that smartphone technology has improved continuously since 2011, it is expected that even better results can be achieved today.

2 Methods

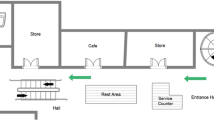

A smartphone-based prototype app was created, offering the user simplified maps of fictional places. To explore the area, the user must move their finger across the screen. The map elements are presented as rectangles for maximum clarity and simplicity. In future prototypes, the concept will be expanded so that more complex shapes can be represented.
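The rectangle-based map model described above can be illustrated with a minimal sketch. It is not taken from the prototype: the class `MapElement`, the function `element_at`, the element names, and all coordinates are assumptions chosen for illustration, loosely following the forest cabin scenario.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class MapElement:
    """A map element simplified to an axis-aligned rectangle (hypothetical model)."""
    name: str
    kind: str      # e.g. "forest", "lake", "street", "cabin"
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        # True if the touch point lies inside the rectangle.
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


def element_at(elements: Sequence[MapElement], x: float, y: float) -> Optional[MapElement]:
    """Return the topmost element under the finger, or None for empty space."""
    # Later entries are treated as lying on top, so search from the end.
    for element in reversed(elements):
        if element.contains(x, y):
            return element
    return None


# Assumed layout on a 300 x 500 screen; all values are illustrative only.
SCENE = [
    MapElement("Forest", "forest", 0, 0, 300, 150),
    MapElement("Lake", "lake", 20, 350, 120, 100),
    MapElement("Forest Road", "street", 250, 0, 20, 500),
    MapElement("Cabin", "cabin", 120, 220, 60, 60),
]
```

Each touch-move event would call `element_at` with the current finger position; the result decides which output, if any, is triggered.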

The initial prototype presents a sample scenario: the user has been invited to a birthday party in a forest cabin (Fig. 1). The user’s task is to use the app to get an overview of the location and its surroundings. In the app, the cabin appears in the center of the screen; near it and around it are a forest, a lake, and one road. Using their finger to explore on the map, the user encounters different vibration patterns, sounds, and/or speech synthesis for each of these different elements.

Four different output methods were used for the first prototype (Table 1). These were chosen to enable all important information to be conveyed as clearly as possible. In the case of forests and bodies of water, names and exact boundaries are not of primary significance; it is more important that the user knows where a forest or body of water is located relative to other elements. Therefore, only audio clips were used as output, without additional vibration or voice output. This allows users to explore the area without being distracted by less relevant information. It is conceivable that the name of the body of water or the forest could be output via an additional user action. For streets, the name and direction are of highest importance. As such, when the street is touched, the device starts to vibrate and the name of the street is output once by voice. The vibration emitted during continuous finger contact with the screen offers users a sense of the street’s path. The street name is output again every time the street is touched anew, allowing different streets to be more easily distinguished.

The forest cabin is in the center of the map. If it is touched, the cabin name is heard in an endless loop. This form of output may not be an option for real maps, as few buildings have a name.

Table 1 serves as a legend to Fig. 1. It also describes which output modalities were used for the corresponding elements when the user touches them with their finger.
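The output behaviour described above can be sketched as a small controller that reacts when the finger enters or leaves an element. This is a sketch under assumptions, not the prototype's code: the class `FeedbackController` and the command strings are hypothetical, and outputs are recorded as text because real audio and haptic calls are platform-specific.

```python
from typing import List, Optional, Tuple

# A touched element is modelled as (kind, name); None means empty space.
Touched = Optional[Tuple[str, str]]


class FeedbackController:
    """Maps element kinds to output modalities (hypothetical sketch)."""

    def __init__(self) -> None:
        self.commands: List[str] = []   # recorded output commands
        self._active: Touched = None    # element currently under the finger

    def on_touch(self, touched: Touched) -> None:
        # Nothing changes while the finger stays on the same element.
        if touched == self._active:
            return
        # Leaving an element stops its sound, vibration, or speech.
        if self._active is not None:
            self.commands.append("stop_all")
        self._active = touched
        if touched is None:
            return
        kind, name = touched
        if kind in ("forest", "lake"):
            # Ambient audio clip only, no vibration or voice.
            self.commands.append(f"play_ambient:{kind}")
        elif kind == "street":
            # Continuous vibration plus the name spoken once per contact.
            self.commands.append("start_vibration")
            self.commands.append(f"speak_once:{name}")
        elif kind == "cabin":
            # The cabin name is repeated in a loop while touched.
            self.commands.append(f"speak_loop:{name}")
```

Because the street name is spoken on every entry, a finger that leaves the street and returns triggers the announcement again.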

This initial prototype was first tested with a group of people who have normal vision. To make testing with sighted persons possible, the map elements were hidden so that the subjects saw only a white screen.

Since this was a first attempt, the user survey was conducted without any measurable or comparable criteria. It was based on the question of what the subjects were able to perceive and how they rated the experience.

3 Results

The subjects were able to recognize and distinguish all elements while operating the app. They were able to find their way around, and in a follow-up conversation they were able to remember which elements could be found on the map.

There was initially some difficulty in deciding whether the water was a lake or a river. However, most of the subjects were able to determine that it was a lake upon further exploration of the map, based on the shape of the water. They also expressed the desire to be able to learn the name of the lake through a user action.

The repetitive speech output for the cabin turned out to be less than optimal. Although it was possible to identify where the cabin was located, it was difficult to determine its outline or boundaries. In addition, difficulties arose in finding the way leading to and from the cabin.

It quickly became clear to the subjects that the vibrating line was supposed to be a road. This realization was supported by the street name being spoken every time the line was touched. Because the finger repeatedly left and re-entered the defined area of the road during touch exploration, the app spoke the street name repeatedly.

The subsequent discussions with the test subjects showed that the persistent vibration for the road was perceived as too strong and disturbing. A lighter vibration pattern with accompanying sound reproduction would help determine the type of road. In addition, it would be sufficient if the street name were only issued by an additional user action, since the name of a street may not be relevant in every case.
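One way to read the subjects' suggestions is sketched below: a lighter vibration with an accompanying sound on contact, and the street name spoken only on request. The class `OnDemandStreetFeedback`, its method names, and the command strings are assumptions for illustration; the text does not specify which gesture the additional user action would be, so it is modelled here as an abstract `request_details` call.

```python
from typing import List, Optional


class OnDemandStreetFeedback:
    """Street output with the name spoken only on request (hypothetical sketch)."""

    def __init__(self) -> None:
        self.commands: List[str] = []        # recorded output commands
        self._street: Optional[str] = None   # street currently under the finger

    def enter(self, street_name: str) -> None:
        # Entering a street starts a light vibration and a road sound,
        # but does not announce the name.
        if self._street == street_name:
            return
        self._street = street_name
        self.commands.append("start_vibration:light")
        self.commands.append("play_sound:road")

    def leave(self) -> None:
        # Leaving the street stops all output.
        if self._street is not None:
            self.commands.append("stop_all")
            self._street = None

    def request_details(self) -> None:
        # The additional user action: speak the name only while on a street.
        if self._street is not None:
            self.commands.append(f"speak_once:{self._street}")
```

With this variant, leaving and re-entering the street no longer repeats the name; it is heard only when the user asks for it.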

4 Discussion and Future Work

The first prototype has shown that it is possible to explore a simple map just by outputting vibration, sounds and speech. In future iterations, the selected output options for the lake and forest will be maintained. For the output of the street, a concept with a combination of vibration pattern and audio output will be explored. It will also be important to examine the possibilities of additional user actions with the map which could offer more detailed information on certain elements when desired, such as the names of bodies of water.

Based on the experience gained with the initial prototype, the next one is currently being implemented with improvements and a new scenario. In addition to the prototypes, isolated analyses of individual details will be carried out, and the collected findings will be summarized. Finally, a concept will be developed for how to present map data in a mobile app, and a proof of concept will be implemented and usability-tested with selected users. Based on the test results, a map application will be developed and then field-tested with a larger group of target users.

References

1. Sehbehinderung und Blindheit: Entwicklung in der Schweiz. Unpublished study by the Swiss Central Association for the Blind (SZB) (2013)

2. Zeng, L., Weber, G.: Accessible maps for the visually impaired. In: Proceedings of IFIP INTERACT 2011, Workshop on ADDW, pp. 54–60 (2011)

3. Poppinga, B., Pielot, M., Magnusson, C., Rassmus-Gröhn, K.: TouchOver map: audio-tactile exploration of interactive maps. In: MobileHCI 2011, pp. 545–550 (2011)

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Darvishy, A., Hutter, HP., Frei, J. (2020). Making Mobile Map Applications Accessible for Visually Impaired People. In: Ahram, T., Taiar, R., Colson, S., Choplin, A. (eds) Human Interaction and Emerging Technologies. IHIET 2019. Advances in Intelligent Systems and Computing, vol 1018. Springer, Cham. https://doi.org/10.1007/978-3-030-25629-6_61

DOI: https://doi.org/10.1007/978-3-030-25629-6_61

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-25628-9

Online ISBN: 978-3-030-25629-6

eBook Packages: Engineering, Engineering (R0)