Abstract

High-quality research methods underpin strong scholarship in international business (IB) research. This book is a testimony to the maturity of the field and highlights both how far we have come and how far we still have to go. In this chapter, we first reiterate the importance of applying sound research methods while conducting IB research, and then proceed to identify a number of particularly salient methodological challenges facing scholars in IB research. We next review some key points in the Commentaries and Reflections that accompany the JIBS articles selected for this volume. Our chapter ends with a set of general recommendations to IB scholars in the pursuit of continuous high-quality scholarship.

We thank Agnieszka Chidlow, Henrik Gundelach, Stewart R. Miller, and Catherine Welch for their comments and help on an earlier draft of this chapter.


Introduction

It is because cross-national research is so consuming of time and other resources that we ought to be willing to settle for less than the ideal research designs. It is also in this context that we should be more forgiving of researchers who might seem opportunistic in selecting the countries and problems for cross-cultural research. (Sekaran 1983: 69)

Most, perhaps all, international business (IB) scholars would now agree that the above statement, made more than 35 years ago, is no longer valid. The challenges and costs associated with obtaining adequate samples and/or measures do not free a researcher from the responsibility of crafting a well-thought-out research design and adopting rigorous research methods. Gone are the days when IB researchers could be excused for a relative lack of methodological rigor due to “real-world constraints”, such as the absence of requisite financial resources to deploy the most advanced methodological approaches in complex international settings (Yang et al. 2006). For today’s scholars, staying up to date—that is, understanding and using the best available and most appropriate research methods—clearly matters.

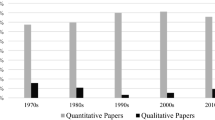

There have been several calls for expanding research settings and using more advanced methods to analyse complex, cross-border phenomena. A recent review of the methodological trajectories found in JIBS over the past 50 years (1970–2019) shows a dramatic rise in sophistication of the methods deployed (Nielsen et al. 2019), which is a testament to a maturing field. In fact, most IB journals with high Web-of-Science impact scores now formally or informally adhere to rigorous methodological standards and impose requirements on authors similar to the ones prevailing at the leading journals in other business disciplines such as marketing, organizational studies, and strategy (e.g., Hahn and Ang 2017; Meyer et al. 2017).

Moreover, during the past two decades, much has been written on sound methodological practices and rigor in IB research, some of which is documented in this book (see also, e.g., Nielsen and Raswant 2018; Nielsen et al. 2019). While Organizational Research Methods remains the primary outlet for pure methods articles, several of our scholarly journals, particularly JIBS and the Academy of Management Journal, have regularly published editorials and articles on best practices in research methods. Some of the best JIBS editorials on research methods have been included in this book.

IB scholars, like academics everywhere, study basic research methods in undergraduate and graduate classes such as statistics, econometrics, qualitative methods, and research design. We learn or teach ourselves how to use STATA, SPSS, SAS, or NVivo. These activities happen early in our careers, so we need to update our knowledge of best research methods practices on a regular basis. To partially address this need, within professional associations such as the Academy of International Business (AIB) and the Academy of Management (AOM), much attention is paid to sharing knowledge on best research practices through, for example, doctoral and junior faculty consortia. The Research Methods Shared Interest Group (RM-SIG) was recently established within the AIB to promote the advancement, quality, diversity, and understanding of research methodologies by IB scholars. The RM-SIG is just one example of a broad range of initiatives being undertaken by the global community of IB scholars to both help keep scholars abreast of the latest research methods and to push the field forward in the methods sphere.

We view our book as part of this ongoing initiative, which has spread across all business and social science disciplines, of improving the overall quality of methods used in business research. Our specific focus in this book is best practices in IB research methods. We take stock of some key challenges faced by the field in the realm of research design and methods deployed, and we also discuss recent advances in overcoming these challenges. We view our book as a unique, up-to-date reference source on good and best practices. By identifying and assembling a set of exemplary JIBS articles together with commentaries and reflections on these articles, we hope to share with the IB research community at large what now constitutes these best practices.

Our objectives for this introductory chapter are four-fold. First, we think that it is important to reiterate how and why high-quality research methods matter to IB scholars. Our second goal is to identify a number of base-line systemic methodological challenges facing scholars in IB research. A third goal is to introduce the various JIBS articles included in the book, which were selected because they represent sound and best practices to help overcome these challenges. We also briefly introduce the insightful commentaries and reflections provided by leading scholars on each of the JIBS pieces. Our last goal is to provide recommendations to IB scholars in the hope that the field will continue its positive trajectory and evolve into a net exporter of research methodology.

How and Why High-Quality Research Methods Matter?

The answer to the question “How and why do high-quality methods matter in international business research?” may be self-evident; however, we believe it is worth reiterating the benefits to the field of IB studies of using high-quality research methods, and the costs of using inadequate, outdated, or sloppy methods.

First, the benefits. Acting with academic integrity means being consistent with “the values of honesty, trust, fairness, respect and responsibility in learning, teaching and research” (Bretag 2019). Acting with integrity in our research requires using high-quality research methods that promote the “truth” and minimize error. Our research is often motivated by puzzles we see around us in the real world. We use and test theories in order to better understand the world in which we live. We want our research to be credible and useful to other scholars, policy makers, managers, and the public. As is the case with any area of research, the choice and application of specific research methods largely determine the quality of subsequent knowledge creation, as well as the intellectual contribution made to the field. A well-crafted methodological approach can go a long way towards establishing a study’s rigor and relevance, thereby enhancing its potential impact, both in terms of scholarly advancement and improvement of managerial and policy practice. Only by practising the state-of-the-art in terms of research design, including sampling, measurement, analysis, and interpretation of results, will other scholars have confidence in the field’s findings. In fact, sound methodology—“research that implements sound scientific methods and processes in both quantitative and qualitative or both theoretical and empirical domains”—is one of the seven core guiding principles of the movement for Responsible Research in Business and Management (https://rrbm.network/).

However, scholars often view the benefits from research integrity as accruing only in the long term and primarily to society as a whole. In the short term, pressures to publish and the desire for tenure and promotion may be much more salient. Eden, Lund Dean, and Vaaler (2018: 21–22) argue that academia is full of “research pitfalls for the unwary” that can derail even well-intentioned faculty who believe they are acting with academic integrity. Doctoral students and junior faculty members are especially susceptible to these pitfalls due to the challenges they face as new entrants to academia—the liabilities of newness, resource dependence, and outsiderness.

The polar opposite of academic integrity is “scientists behaving badly” by engaging in academic misconduct/dishonesty (Eden 2010; Bedeian, Taylor and Miller 2010). IB scholars, similar to scholars throughout the social and physical sciences, are familiar with the three main types of academic misconduct: falsification (manipulating or distorting data or results), fabrication (inventing data or cases), and plagiarism (copying without attribution). In FFP (falsification, fabrication, and plagiarism) cases, researchers fail to tell the truth in scientific communications about their research (Butler et al. 2017). Such academic misconduct corrupts the research process and damages public trust in scientific literature. Research misconduct occasionally leads to retraction of the published work, and there is empirical evidence that the majority of retracted journal articles were retracted due to misconduct by the authors (Fang et al. 2012). Retraction also carries with it significant financial and personal consequences for the authors and substantial ripple effects on one’s colleagues, students, prior collaborators, and home institutions; see, for example, the types and estimates of costs in Stern, Casadevall, Steen and Fang (2014); Michalek, Hutson, Wicher and Trump (2010); Tourish and Craig (2018); and Hussinger and Pellens (2019).

Because of the huge costs involved when scientists behave badly, most of our universities, journals, and professional associations now have Codes of Ethics that outline, prohibit, and punish research misconduct. The Academy of International Business (AIB), for example, now has three ethics codes, one each for the AIB journals, members, and leadership (https://www.aib.world/about/ethics/). In fact, to the best of our knowledge, JIBS was the first scholarly business journal to have its own code of ethics (Eden 2010). Many of our journals and professional organizations also belong to COPE, the Committee on Publication Ethics (https://publicationethics.org/), which provides detailed process maps for handling various types of academic misconduct.

While the costs of academic dishonesty are well understood, there are also huge costs to scholarly inquiry from engaging in the grey area between academic integrity and misconduct, that is, in what has been called “sloppy science” or “questionable research practices” (QRPs) (Bouter et al. 2016). QRPs are research methods that “operate in the ambiguous space between what one might consider best practices and academic misconduct” (Banks et al. 2016a: 6). QRPs can occur in the design, analysis, or reporting stages of research. The typical motivation for QRPs is the desire of authors to present evidence favouring their hypotheses and to increase the likelihood of publication in a high-impact journal (Edwards and Roy 2017; Eden et al. 2018).

Banks et al. (2016a) and Banks et al. (2016b) identify six types of QRPs: selectively reporting hypotheses, excluding data post hoc, HARKing (hypothesizing after the results are known), selectively including control variables, falsifying data, and poor reporting of p values. Bouter et al. (2016) provide a list of 60 major and minor research misbehaviours, which they group into 4 areas based on research stage: study design, data collection, reporting, and collaboration. The authors conclude that selective reporting and citing, together with flaws in quality assurance and mentoring, are the top four examples of researchers cutting corners and engaging in sloppy research practices.

QRPs have high costs; they can “harm the development of theory, evidence-based practice, and perceptions of the rigor and relevance of science” (Banks et al. 2016b: 323). Incorrect statistical procedures can lead to flawed validity estimates, as shown in Antonakis and Dietz (2011). Engaging in low-quality or unethical research methods makes it impossible for other scholars to reproduce and replicate our results, leading to an overall distrust in scholarly publications (Rynes et al. 2018: 2995).

Evidence that scholars in the social and physical sciences, including in our business schools, do engage in QRPs and academic dishonesty is widespread, ranging from lists of retracted articles on Retraction Watch (http://retractionwatch.org) to stories in the New York Times and Nature. The evidence suggests that while only a small percentage of researchers may engage in academic misconduct (FFP), sloppy science is much more widespread (Bouter et al. 2016; Butler et al. 2017; Hall and Martin 2019).

We believe that the spread of QRPs in academia is partly because not everyone shares our interest in and passion for research methods. For many, if not most, academics, learning the “ins and outs” (the “dos and don’ts”) of particular research methods is difficult, a bit like “bad medicine”—you know it must be good for you because it tastes terrible. Math and statistics anxiety exist even among doctoral students and perhaps even more so among full professors! Over our careers, we have seen many examples of faculty who learned one research method early in their careers and relied on that method for all their projects, rather than learning new, more appropriate methods. We have also seen “slicing and dicing” of research projects where the workload was parsed out among co-authors, and no supervision of, or interaction with, the co-author assigned to write the research methods and results sections was provided. When scholars cut corners due to math/statistics anxiety and/or laziness, they open the door to questionable research practices.

QRPs are clearly an issue facing all social scientists, not only IB researchers. Science is in the middle of a “reproducibility and replicability crisis” and “international business is not immune”, as Aguinis, Cascio, and Ramani (2017: 653) argue. Once scholars begin to have doubts about the findings of scientific research, we have started on the path towards viewing all research results with a jaundiced eye as “fake news”. We know that the conclusions drawn from an empirical study are only as solid as the methodological practices that underlie the research. If we want to raise the quality and impact of IB research, we need to bring not only rigor but also transparency and credible expectations of reliability back into our research methods. We turn now to a discussion of how to do this.

Methodological Challenges

Our second goal in this chapter is to identify a number of base-line systemic methodological challenges facing scholars in IB research. These methodological challenges, we argue, seem to plague IB research because of the types of research questions asked and the cross-border contexts studied.

For example, consider distance as a concept in IB research. A great number of measures and methodologies have attempted to capture the complexity of distance between nations (even accounting for within country variations), and the implications for firms operating within and across different types of distance (e.g., geographic, cultural, economic, institutional). Many IB research questions in the realm of distance can only be answered by taking into account multiple levels of analysis beyond the country level, including individuals (e.g., senior executives), headquarters, subsidiaries, strategic groups, industries, and so on (Nielsen and Nielsen 2010). However, the methods used to examine multilevel phenomena in IB studies (such as variance decomposition) have often been relatively unsophisticated and may have left key questions unanswered (Peterson et al. 2012). Moreover, of special interest to IB research that seeks practical relevance are the executives who formulate, implement, and monitor strategic initiatives related to IB operations (Tihanyi et al. 2000; Nielsen and Nielsen 2011). The methodological approaches deployed to tap into executives’ motives, preferences, values, and ultimate decisions (such as the usage of demographic proxies) have, at times, been limited in their capacity to describe and explain these complex phenomena (Lawrence 1997).

Below we identify some of the most salient methodological challenges facing IB researchers. This list of challenges is by no means exhaustive and we invite the community of IB scholars to contribute to this list and—more importantly—to provide input into possible solutions and best practices, for instance, by actively partaking in the AIB RM-SIG activities and/or contributing to the website (https://rmsig.aib.world/) or newsletter.

The discussion below is organized around three major research phases in terms of methodological choices: (1) Problem definition and research questions; (2) Research design and data collection; and (3) Data analysis and interpretation of results.

Phase 1: Problem Definition and Research Questions

As suggested above, IB refers to a complex set of phenomena, which require attention to both similarities and differences between domestic and foreign operations at multiple levels of analysis. We see the following key methodological challenges in the realm of problem definition.

1. Is the problem truly international?

Isolating the international (cross-border/cultural) influence on the key relationship(s) in the study may require a deep understanding of both the domestic (i.e., the country of origin) and foreign (i.e., country of operations) business environments, in terms of political, institutional, economic, social, cultural, and behavioural characteristics. Methodologically, this may require input from researchers who are familiar with these environments and/or necessitate field trips to establish the nature of the “international” phenomenon.

2. Are concepts and theories equivalent and comparable across contexts (cases, countries, cultures, etc.)?

Much current debate in IB revolves around the applicability of “standard” internationalization theories to emerging market firms (e.g., Santangelo and Meyer 2011; Cuervo-Cazurra 2012; Ramamurti 2012). To the extent that the applicability of theories and their key assumptions (e.g., the degree of confidence in the reliability of societal institutions) differ across national borders, IB researchers must embed such differences in their research design and develop suitable research questions with attention to equivalence and compatibility across contexts. It is now widely recognized that much IB research may inherently be about “contextualising business” (Teagarden et al. 2018), but at the same time scholars must guard against the possibility of alternative explanations or biases introduced by the very nature of the context(s) being investigated. Explicitly identifying contextual influences and their potential impacts, both in the design of a particular study and in the interpretation of its outcomes, is critical for determining the boundaries within which the theories used might be applicable. For instance, the impact of a variable such as state ownership on a variety of outcomes (e.g., the probability of going international or of engaging in international mergers vis-à-vis other entry modes or the location of outward foreign direct investment) may be largely dependent on the political and institutional environment in the country of origin (Estrin et al. 2016; He et al. 2016a, b). State ownership in China versus Norway may have completely different implications for explaining and predicting international expansion moves.

3. What types of research questions are being asked?

A significant challenge is to establish a clear linkage between the specificity versus general nature of the research questions asked, and the related ambition to explain empirical phenomena and extend theory. IB scholars sometimes claim they will try to answer general research questions, such as: Where do firms locate their international operations? What entry mode choice is the best given the nature of the knowledge assets involved? Do firms benefit from internationalization? Unfortunately, the research design, including inter alia sample limitations and a restricted set of variables, then sometimes leads scholars to overestimate the generalizability of their results and to make exaggerated claims as to their contributions to theory. There is nothing wrong with relatively narrow, phenomenon-driven empirical research, but such research is unlikely to answer general research questions with important implications for theory. In this realm, IB scholars should always remember that their research questions should drive data collection and choice of methodology—not the other way around.

Phase 2: Research Design and Data Collection

Research design and data collection efforts are also susceptible to a number of challenges that are especially salient in IB research. The research design must ensure the equivalence and comparability of primary and secondary data, which may be related to different environmental contexts. Here again, we see three main challenges.

1. What is an appropriate sample?

In many instances, particularly in developing countries, reliable information about the target population may not be available from secondary sources. Government data may be unavailable or highly biased. Lists of targeted respondents may not be available commercially (e.g., small samples of certain respondents, such as entrepreneurial women in some cultures). In general terms, sampling is often performed with the implicit assumption that all sampled firms or individuals in a nation share the same underlying characteristics, such as national cultural characteristics, but this is often untrue. For example, in an extreme case, a US-based company entering China might be managed by Chinese immigrants, and a potential joint venture partner in China considered by this US firm might be managed by US-trained executives or even US natives. The point is to avoid sampling in IB studies on the basis of convenience, without properly evaluating whether assumed characteristics of the sample actually hold. In the presence of inadequate sampling, any purported contributions to IB theory development must be viewed with suspicion. In the realm of cross-cultural studies, Ryen, Truman, Mertens, and Humphries (2000) and Marschan-Piekkari and Welch (2004) highlight various challenges associated with deploying qualitative techniques in developing countries; for example, respondents in cross-national surveys may interpret specific ideas or concepts put forward by researchers from developed countries in a culturally specific manner, rendering any comparisons among nations untenable.

2. What is an appropriate sample size?

The answer may be much more complex than suggested by rules of thumb or generally accepted conventions in statistical analysis. The simple reason is that samples in IB research may have much stronger heterogeneity in terms of relevant variables than in domestic settings. For example, when assessing the impact of cultural distance on governance mode choice (such as a joint venture versus a wholly owned operation), it does not suffice to take into account the normal distance variables, such as geographic distance, that would be considered in domestic settings. A variety of distance parameters should be considered (e.g., institutional and cultural/psychic distance). In addition, the actual impact of these additional distance variables will depend on firm-level parameters such as the composition of the top management team (e.g., international experience and cultural diversity). As a result of the greater number of relevant variables, requisite sample size should also increase, in this case to isolate the discrete effects of a larger number of explanatory variables on governance choice.

3. How to avoid non-sampling errors?

In IB research spanning multiple cultures, both measurement non-equivalence and variations in interviewer quality can lead to non-sampling errors. The increasing availability of large international surveys has opened a wide avenue of new possibilities for researchers interested in cross-national and even longitudinal comparisons. Such surveys build on constructs measured mostly by multiple indicators, with the explicit goal of making comparisons across different countries, regions, and time points. However, past research has shown that the same scales can have different reliabilities in different cultures. Davis et al. (1981) demonstrated that two sources of “measure unreliability”, namely the assessment method deployed and the nature of the construct, can confound the comparability of cross-cultural findings. Thus, substantive relationships among constructs must be adjusted for unequal reliabilities before valid inferences can be drawn. Hence, it is critical to assess whether questions “travel” effectively across national and cultural borders (Jowell et al. 2007).

It is also important to test empirically the extent to which survey responses are cross-nationally equivalent, rather than erroneously assuming equivalence. IB scholars are therefore advised to test the assumption of measurement equivalence empirically, for instance, by applying a generalized latent variable approach (Rabe-Hesketh and Skrondal 2004) or optimal scaling procedures (Mullen 1995). Other possible strategies include (1) identifying subgroups of countries and concepts where measurement equivalence holds, and continuing with cross-country comparisons within these subgroups; (2) determining how severe the violation of measurement equivalence is, and whether it might still allow meaningful comparisons across countries; and (3) at a minimum, trying to explain the individual, societal, or historical sources of measurement non-equivalence, and the potential impact thereof on results (Davidov et al. 2014).
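One simple way to see why unequal reliabilities matter is the classical correction for attenuation, which divides an observed correlation by the square root of the product of the two scale reliabilities. The sketch below is only an illustration of that general idea, not the procedure used in any of the studies cited above; the country labels, correlations, and reliabilities are hypothetical numbers chosen for the example.

```python
import math


def disattenuate(r_xy, rel_x, rel_y):
    """Classical correction for attenuation: r_xy / sqrt(rel_x * rel_y)."""
    if not (0 < rel_x <= 1 and 0 < rel_y <= 1):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r_xy / math.sqrt(rel_x * rel_y)


# Hypothetical inputs: (observed correlation, reliability of X, reliability of Y).
# The same scales are assumed to be less reliable in country_B.
observed = {
    "country_A": (0.40, 0.90, 0.85),
    "country_B": (0.30, 0.60, 0.65),
}

corrected = {name: disattenuate(*vals) for name, vals in observed.items()}

for name, value in corrected.items():
    print(f"{name}: observed r = {observed[name][0]:.2f}, corrected r = {value:.2f}")
```

With these made-up inputs, the apparent difference between the two observed correlations largely disappears once each is corrected, which is the kind of spurious cross-country contrast that unequal reliabilities can produce.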

Phase 3: Data Analysis and Interpretation of Results

The internal validity of IB research improves if the outcomes of a study have fewer rival explanations. The approach adopted to analyse data and interpret results should address and control for such alternative explanations (Cuervo-Cazurra et al. 2016). Here, IB researchers can formulate plausible, rival hypotheses that could explain the results (see, e.g., Nielsen and Raswant 2018). Even with rival hypotheses in play, we see the following four challenges:

1. How to address outliers?

IB research may be particularly susceptible to the impact of outliers for two reasons. First, outlier outcomes are often included in IB studies, even though the economic actors responsible for the outlier results were not considered ex ante as being members of the target population. One example is the presence of first-generation immigrant managers or firm owners in studies of national companies’ choices of foreign locations for international activities. The results of these studies may still be relevant, but if the purpose of the study is, for example, to assess the impact of national cultural distances (measured as the supposed distances between one home country and a number of potential host countries) on location choices, then the aggregate responses of immigrant managers and owners will very possibly function as outlier outcomes. The reason is that their decision-making on location may not be influenced at all by the national cultural distances considered, for example, if their first-choice foreign location is their country (or region) of origin. Second, again in the realm of distance, many multinational enterprises (MNEs) employ expatriates, who are likely to have characteristics different from those shared by the general population of managers, whether in their home country or in the host country where they work. It would therefore be a mistake to assess, for example, head office–subsidiary interactions based on characteristics of the home and host countries at play, when expatriate executives play key roles in these interactions. Various statistical techniques are available to assist IB scholars in identifying multivariate outliers (e.g., see Mullen et al. 1995).
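As a hedged illustration of what identifying a multivariate outlier can look like, the sketch below computes squared Mahalanobis distances for two variables and flags observations above a chi-square reference value. This is one common screening device, not necessarily the technique described in the work cited above. The data are invented, the variable names are hypothetical, and the 95% cut-off (and the chi-square approximation itself, which is rough in small samples) is an assumption of the example.

```python
import math


def mahalanobis_sq(points):
    """Squared Mahalanobis distance of each (x, y) pair from the sample centroid."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # Sample variances and covariance (denominator n - 1).
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    det = sxx * syy - sxy ** 2
    if det <= 0:
        raise ValueError("covariance matrix is singular")
    distances = []
    for x, y in points:
        dx, dy = x - mx, y - my
        # Closed-form d' S^{-1} d for a 2 x 2 covariance matrix.
        distances.append((syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det)
    return distances


# Hypothetical scores: (cultural distance, perceived risk) for 12 managers.
# The last manager is unremarkable on each variable taken separately,
# but breaks the positive association shown by the others.
points = [(1, 1), (2, 2), (3, 3), (4, 4), (5, 5), (1, 2),
          (2, 1), (4, 5), (5, 4), (2, 3), (3, 2), (5, 1)]

d2 = mahalanobis_sq(points)
# For 2 degrees of freedom the chi-square quantile is -2 * ln(1 - p).
cutoff = -2 * math.log(0.05)
flagged = [i for i, d in enumerate(d2) if d > cutoff]
print("flagged observations:", flagged)
```

The flagged case would pass a variable-by-variable range check, which is why a multivariate screen can be useful when respondents such as immigrant or expatriate managers differ from the target population in their pattern of responses rather than in any single score.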

2. How to choose the level(s) of analysis?

Many IB phenomena are by default multilevel in nature. For instance, MNEs are nested within home and host country contexts. By the same token, subsidiaries are nested within MNE “hierarchies”, typically the headquarter(s) of the parent company. A number of scholars have emphasized that it is imperative to approach IB phenomena at a variety of levels of theory and analysis (Arregle et al. 2006; Peterson et al. 2012; Goerzen et al. 2013), with due attention paid to nesting or cross-level effects (Andersson et al. 2014). Failure to account for the multilevel structure of hierarchically nested data is likely to yield statistical problems. Such problems arise from improperly disaggregating datasets, thereby violating the assumption of independence among observations and ignoring intra-class correlations that increase the risk of type I and type II errors (Snijders and Bosker 2011; Raudenbush and Bryk 2002). If these problems arise, random coefficients modelling (RCM) offers three substantial advantages over traditional statistical models (Raudenbush and Bryk 2002): (1) improved estimation of effects within each level; (2) the possibility of formulating and testing hypotheses about cross-level effects; and (3) partitioning the variance and covariance components among levels.

In addition, IB phenomena are often influenced by contexts that are interwoven in a more complex fashion than “simply” being hierarchically nested. On the one hand, MNE subsidiaries are nested within their parent companies, but also within national contexts (e.g., home/host country contexts) in a hierarchical way. On the other hand, MNEs (both parent companies and their subsidiaries) are cross-nested within home and host countries, as well as within industries, but the countries involved are not nested within industries or vice versa. Empirically (as well as conceptually), it is therefore important to recognize heterogeneity at the firm, industry, and country levels, as well as cross-nested embeddedness. Here, a special application of RCM, namely cross-classified random coefficients modelling (CCRCM), may help isolate the effects of the cross-cutting hierarchies (e.g., country and industry) on the dependent variable (e.g., firm performance), thereby avoiding model under-specification and biased results (Fielding and Goldstein 2006). Though still uncommon in IB research (for a recent example, see Estrin et al. 2017), scholars are strongly encouraged to account for the nested structure of the IB phenomena they study, and for non-hierarchical embeddedness in particular, when theorizing about, and testing the effects of, context on firm (industry, team, or individual) behaviour.

Also related to the presence of multiple levels is the challenge of ecological fallacies. These refer to the unqualified usage in one level of analysis of the variable scores that were derived from analysis at another level. As one example, an ecological fallacy comes into play when a researcher uses culture-level scores (e.g., based on Hofstede’s cultural dimensions or GLOBE measures) without conducting individual-level analyses to interpret individual behaviour. Conversely, a problem of “atomistic” fallacy arises when a researcher constructs culture-related indices based on individual-level measurements (attitudes, values, behaviours), without conducting societal-level cultural analysis (Schwartz 1994). Culture can be important for many IB decisions and outcomes, whether as a distance measure (Beugelsdijk et al. 2018) or a contextual control variable (Nielsen and Raswant 2018). Yet, ecological fallacy challenges are seldom addressed fully, despite ample evidence that they matter (Brewer and Venaik 2014; Hofstede 2001: 16; House et al. 2004: 99).
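Returning to the nesting problem raised at the start of this challenge, a quick diagnostic before choosing a model is to estimate how much of the variance in an outcome lies between higher-level units. The sketch below computes a one-way ANOVA estimate of the intra-class correlation, often labelled ICC(1), together with the familiar design effect 1 + (m − 1) × ICC for clusters of size m. It assumes balanced groups, and the performance scores and country grouping are invented for illustration; it is a diagnostic sketch, not a substitute for the random coefficients models discussed above.

```python
def icc1(groups):
    """One-way ANOVA estimate of ICC(1) for balanced groups."""
    k = len(groups)
    n = len(groups[0])
    if k < 2 or n < 2 or any(len(g) != n for g in groups):
        raise ValueError("need at least 2 balanced groups of size >= 2")
    grand = sum(sum(g) for g in groups) / (k * n)
    means = [sum(g) / n for g in groups]
    # Mean squares between and within groups.
    msb = n * sum((m - grand) ** 2 for m in means) / (k - 1)
    msw = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g) / (k * (n - 1))
    return (msb - msw) / (msb + (n - 1) * msw)


def design_effect(icc, cluster_size):
    """Variance inflation from clustering: 1 + (m - 1) * ICC."""
    return 1 + (cluster_size - 1) * icc


# Hypothetical performance scores for three subsidiaries in each of three host countries.
scores_by_country = [[4, 5, 6], [7, 8, 9], [10, 11, 12]]

icc = icc1(scores_by_country)
deff = design_effect(icc, cluster_size=3)
effective_n = 9 / deff

print(f"ICC(1) = {icc:.3f}, design effect = {deff:.2f}, effective n = {effective_n:.1f}")
```

In this deliberately extreme example most of the variance sits between countries, so nine subsidiaries carry roughly the information of three independent observations; treating them as nine independent cases would understate standard errors.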

3. How to avoid personal bias in interpreting and reporting results?

In IB studies, a researcher working out of a particular context (such as a national culture or a set of national economic institutions relevant to IB transactions) must often interpret data gathered in various other contexts. The researcher’s own context-dependent biases may then affect her or his interpretation of the outcomes. We noted above that concepts may not easily “travel” across borders, and that theories and methods are not necessarily “equivalent” across contexts. One should therefore avoid assuming too easily the universality of concepts, theories, and methods. In addition, researchers themselves may potentially introduce another bias based on their personal ethnocentrism and other context-determined preferences.

These biases often remain undetected, especially when scholars build upon extant streams of equally biased research, sometimes amplified by individuals and “clubs” of like-minded scholars adopting the same methods and involved in editorial reviewing processes. Individuals may actually have a preference for—and may thereby be instrumental to—long waves of biased research being published. Meade and Brislin (1973) suggested a partial solution to this problem, relevant especially in the context of multinational research teams. They suggested that researchers from each country should independently interpret the results obtained, so that inter-interpreter reliability can be assessed.

On a positive note, both the average number of authors and the national diversity of authors of JIBS articles (see Footnote 1) have increased substantially over the past 50 years. In the 1970s, the average JIBS article had 1.48 authors, with 17 percent of first authors being from a country other than the United States. In the 2000s (2000–2009), these numbers increased to 2.33 authors per article, with 55 percent of first authors being from a country other than the United States. Since 2010, these numbers have further increased to 2.88 authors per article and 62 percent non-US first authors (Nielsen et al. 2019).

Even if an author has reflected adequately on the questions outlined above, there is still work to be done, since a wide range of methods-related decisions must still be made. To aid the reader in making those decisions, we now turn to some methodological advances in conducting IB research. In the next section, we examine 11 JIBS publications that were designed to promote a level of sophistication on a par with or ahead of other business disciplines, while keeping in mind the specificities of IB research. We augment these pieces with new Commentaries and Reflections on how the field has advanced since these JIBS articles were first published.

Methodological Advances

Our third goal in writing this introductory chapter is to introduce the remaining chapters in this book. These chapters were selected because they represent good and best practices to help overcome the methodological challenges we identified above. Each chapter included in this volume represents a significant advance in IB methods, given the field’s unique features.

We have organized the remainder of this book into 11 distinct Parts. Each Part has one to three chapters. The first chapter is an original JIBS article on a particular methods topic published between 2010 and 2019. Each JIBS article is followed by an insightful Commentary from one or more content experts who deliver forward-looking observations on the importance of the methodological challenges considered and on the most effective ways to respond to such challenges. Four Parts also include a third chapter, a Further Reflections note prepared by one or more of the authors of the original JIBS article (see Footnote 2).

The three co-editors selected the 11 original JIBS articles included in this book after a detailed, lengthy, and iterative search process. We chose 2010 as our starting year so as to include 10 years of JIBS publications. We used several criteria for selection: quantitative ones, such as citation counts, and qualitative ones, based on our own re-reading of the articles, in which we assessed their contribution on three dimensions. First, we wanted each article to represent a different methodological challenge in IB research. Second, an important selection criterion was our assessment of each article’s likely contribution to raising the rigor and relevance of contemporary IB scholarship. Third, we also used as a selection criterion the need to respect diversity and plurality in methodological focus, thereby acknowledging the importance of both qualitative and quantitative methods, as well as mixed-methods approaches.

Parts II through IV (Chaps. 2, 3, 4, 5, 6, 7, and 8) in this book are concerned with recurring methodological challenges in contemporary IB research and offer best practices to overcome these challenges. Parts V through VII (Chaps. 9, 10, 11, 12, 13, 14, and 15) deal with methodological challenges and advances in qualitative research in IB. Parts VIII through X (Chaps. 16, 17, 18, 19, 20, 21, and 22) discuss methodological challenges in quantitative methods and suggest ways to deal with these challenges. The volume concludes with Parts XI and XII (Chaps. 23, 24, 25, 26, and 27), which focus on frontier methodological challenges in IB research.

In the rest of this section we summarize the main ideas presented in each of the 11 Commentaries and 4 Reflections chapters. Since these 15 chapters also provide summaries of the original 11 JIBS articles, we do not include them here.

Part II (Chaps. 2 and 3) deals with the reproducibility and replicability of research findings. In his Commentary on Chap. 2, “Science’s Reproducibility and Replicability Crisis: International Business Is Not Immune” by Aguinis, Cascio, and Ramani (2017), Andrew Delios argues in Chap. 3 that the solutions and recommendations provided to improve our empirical methods—mainly the use of meta-analysis—miss the opportunity to question more fundamentally whether existing research protocols should continue to be standard operating procedures or replaced. In Delios’ view, the so-called replication crisis is not a crisis; “it is a reality and a logical off-shoot of the accepted research standards we have in the field of IB research”. He suggests that our decision as a community of scholars is not whether we should engage in replication or reproducibility studies but rather “whether we want to make the investments necessary to re-think the core of our methods and to address the long-standing systemic challenges to conducting good, repeatable empirical research in international business”.

Part III (Chaps. 4 and 5) builds further on this theme and suggests best practices with respect to conducting, reporting, and discussing the results of quantitative hypothesis testing, so as to increase rigor in IB research. Agnieszka Chidlow, William Greene, and Stewart Miller discuss and augment the insights from Chap. 4, “What’s in a p? Reassessing Best Practices for Conducting and Reporting Hypothesis-Testing Research” by Meyer, Witteloostuijn, and Beugelsdijk (2017). Their Commentary suggests a more rational approach to reporting the actual level of significance by placing the burden of interpretive skill on the researcher, since there is no “right” or “wrong” level of significance in hypothesis testing. Scholars are encouraged to give higher priority to selecting appropriate levels of significance for a given problem instead of following the misleading culture of the “old asterisks habit”. The idiosyncratic features of many IB phenomena call into question conventionally accepted significance levels “because different classes of research may require different levels of alpha”. The commenters also discuss the pros and cons of modern technology in ensuring credible and ethical research designs and execution. The authors ultimately place the burden on the entire IB scholarly community (authors, co-authors, reviewers, editors, and PhD supervisors) to avoid QRPs such as HARKing and p-hacking.

Part IV (Chaps. 6, 7, and 8) completes the discussion of recurring challenges and best practices by addressing alternative explanations to improve the validity and generalizability (i.e., “trustworthiness”) of empirical research in IB. Jonathan Doh comments on Chap. 6, “Can I Trust Your Findings? Ruling Out Alternative Explanations in International Business Research” by Cuervo-Cazurra, Andersson, Brannen, Nielsen, and Reuber (2016). The original JIBS article provides guidance on how to ensure that authors establish the “correct” relationships and mechanisms so that readers can rely on their findings. Doh in his Commentary (Chap. 7) makes several important observations, including that “too often scholars are fearful of revealing any findings or cases that are contrary to their overall hypotheses (whether formal or informal) and may somewhat subconsciously or unknowingly suppress this countervailing information”. He also reiterates points made by previous commenters that “the core challenge in IB concerns some of the generally accepted norms, practices, and assumptions that undergird what we consider to be an acceptable empirical exposition”. Doh closes with a plea for more attention to societal “grand challenges” and argues that while such research “may require interdisciplinary approaches, multilevel methods, and consideration of a diverse range of societal actors and influences, it offers an organizing principle for IB research that seeks to achieve relevance, rigor, and real-world contribution”.

Chapter 8 provides Further Reflections on the original JIBS article (Chap. 6) written by three of the authors (Brannen, Cuervo-Cazurra, and Reuber). They provide two observations: (1) how difficult it is for scholars to take up the challenge of tackling significant, bold, real-world phenomena with an open-minded, interdisciplinary multi-methods approach, and (2) how challenging it is to review mixed-methods articles. To help with the latter, the authors make several astute suggestions for reviewers of mixed-methods articles, including allowing for multiple story lines to develop while paying particular attention to how data are used to build evidence. Their hope is to increase methodological ambidexterity among IB scholars.

Parts V through VII (Chaps. 9, 10, 11, 12, 13, 14, and 15) deal with methodological challenges in qualitative research in IB. Part V (Chaps. 9, 10, and 11) focuses on how to theorize from qualitative research, especially case studies, and the critical role of context. In Chap. 10, Kathleen Eisenhardt makes several important points in her Commentary on Chap. 9, “Theorising from Case Studies: Towards a Pluralist Future for International Business Research” by Welch, Piekkari, Plakoyiannaki, and Paavilainen-Mäntymäki (2011). Specifically, Eisenhardt argues that “while helpful, the article’s central typology and 2x2 create artificial distinctions” and goes on to suggest that “its interpretation of theory building cases combines cherry-picked phrases with an eighteenth-century view of positivism”. In Eisenhardt’s view, the role of context was and is always central to qualitative research, and cases can be used to both develop and test theory. She views cases as independent experiments where replication logic is germane and where one should seek to develop an underlying theoretical logic. Eisenhardt concludes that there is an emerging recognition of the similarity across inductive methods as well as the relevance of specific methods for different types of research questions and contexts. She advocates for more attention to the role of language in defining and naming constructs, which may take on different meanings in different cultural or linguistic contexts. New technologies, such as machine learning and big data, may offer promise for the future with regard to meeting some of the challenges of case study research.

Eisenhardt’s Commentary is followed by Further Reflections (Chap. 11) provided by the four original authors of the 2011 article. In their reflections piece, the authors outline three ways in which the themes of their 2011 article have been further developed since its publication. Firstly, they point to an increase in studies taking an abduction approach, which emphasizes a theoretical starting point and offers qualitative researchers a vocabulary to articulate how they iterate between theory and data. Secondly, the authors emphasize a need for more holistic explanations, dissolving the dichotomy between qualitative and quantitative research. Such approaches may include rarely used methodologies, such as longitudinal single cases, historical methods, and use of retrospective data, which may help researchers trace causal mechanisms over time and develop process explanations addressing how social change emerges and evolves. Finally, the authors see a general trend toward combining contextualization with causal explanation, which holds great promise for future IB research.

Part VI (Chaps. 12 and 13) investigates the linkages between historical and qualitative analyses and suggests that more attention be paid to longitudinal qualitative research in IB. Catherine Welch comments on Chap. 12, “Bridging History and Reductionism: A Key Role for Longitudinal Qualitative Research” by Burgelman (2011). Her Commentary in Chap. 13 provides not only new insights on the methodological challenges of conducting longitudinal qualitative research in IB, but also on the potential value added that might result from this approach. She highlights how a longitudinal, qualitative research approach would allow IB scholars to “go beyond reductionist forms of explanations to account for complex causality, system effects, context dependence, non-linear processes and the indeterminacy of the social world”. The implications of what she calls “Burgelman’s vision” are far reaching: it requires us to “rethink the research questions we pose, the analytical techniques we use, the nature of the theories we develop, and the way we view our role as social theorists”. She contrasts the “standard” approach to qualitative research with the alternative offered by the longitudinal qualitative research “vision” of Burgelman and concludes that to realize this would entail a paradigmatic shift yet to be implemented in IB research. However, there is hope; these two paradigms may be mutually supportive as “longitudinal qualitative research may form a ‘bridge’ between history and reductionist research, and a ‘stepping stone’ to formal mathematical models”. In Welch’s words: “IB researchers have the opportunity to diversify and enrich the methods we use and the theories we develop”.

Part VII (Chaps. 14 and 15) discusses the relevance and applicability of Fuzzy-set Qualitative Comparative Analysis (fsQCA) for advancing IB theory. Stav Fainshmidt comments on Chap. 14, “Predicting Stakeholder Orientation in the Multinational Enterprise: A Mid-Range Theory” by Crilly (2011). Fainshmidt’s Commentary in Chap. 15 discusses some of the key judgement calls that researchers using fsQCA must make throughout the analytical process. Applying fsQCA may help IB scholars to straddle both qualitative and quantitative analyses in an iterative manner, which helps to pinpoint causal mechanisms as well as generalize and contextualize qualitative findings that often span multiple levels of analysis. Yet such analysis requires important judgement calls regarding (among other things): (1) calibration, (2) frequency, and (3) consistency. Fainshmidt points to several other analyses that may augment the ones proposed by Crilly, such as varying the frequency threshold, evaluating the impact of alternative calibration approaches, and revisiting decisions related to counterfactuals. He also suggests using the proportional reduction in inconsistency (PRI) statistic. This should help researchers who utilize fsQCA to correct for the potential contribution of paradoxical cases and to identify paradoxical rows in the truth table, thereby producing a more accurate solution. He cautions, however, that these analyses “should be considered in light of the decisions made in the main analysis and the context of the study or data at hand”.
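To make the consistency and PRI statistics mentioned above more concrete, the sketch below computes both for one configuration, using the standard set-theoretic formulas. The fuzzy membership scores are invented for illustration and do not come from Crilly (2011) or from Fainshmidt's Commentary; the variable names are likewise hypothetical.

```python
# Minimal sketch of fsQCA sufficiency consistency and PRI.
# Membership scores are hypothetical fuzzy values in [0, 1].

def consistency(x, y):
    """Consistency of 'x is sufficient for y': sum(min(x, y)) / sum(x)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(x)


def pri(x, y):
    """Proportional reduction in inconsistency.

    Discounts the part of x that is a subset of both y and not-y.
    Undefined (division by zero) if x lies entirely in that overlap.
    """
    overlap_xy = sum(min(a, b) for a, b in zip(x, y))
    overlap_both = sum(min(a, b, 1 - b) for a, b in zip(x, y))
    return (overlap_xy - overlap_both) / (sum(x) - overlap_both)


# Case memberships in one configuration (truth-table row) and in the outcome.
configuration = [0.9, 0.8, 0.6, 0.3]
outcome = [1.0, 0.7, 0.5, 0.4]
not_outcome = [1 - y for y in outcome]

cons_outcome = consistency(configuration, outcome)
cons_negation = consistency(configuration, not_outcome)
pri_score = pri(configuration, outcome)

print(f"consistency with outcome:     {cons_outcome:.3f}")
print(f"consistency with non-outcome: {cons_negation:.3f}")
print(f"PRI:                          {pri_score:.3f}")
```

A row whose consistency is high for both the outcome and its negation is a candidate paradoxical row; PRI falls below raw consistency in such cases, which is why reporting it alongside consistency can be informative.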

Parts VIII through X (Chaps. 16, 17, 18, 19, 20, 21, and 22) discuss methodological challenges in quantitative methods and suggest ways to deal with them. Part VIII (Chaps. 16 and 17) draws attention to the difficulty of adequately theorizing and accurately empirically testing interaction effects within and across levels of analysis in IB research. Jose Cortina discusses Chap. 16, “Explaining Interaction Effects Within and Across Levels of Analysis”, by Andersson, Cuervo-Cazurra, and Nielsen (2014) and makes three important observations in his Commentary in Chap. 17. First, Cortina notes how conceptual diagrams, which are intended to aid comprehension, often have the opposite effect because they do not represent the statistical model being tested. Specifically, he points to the importance of including an arrow between the moderator (Z) and the dependent variable (Y) because “the coefficient for the product must reflect rate of change in Y per unit increase in the product holding both of its components constant”. Second, Cortina provides mathematical evidence for the fact that there is no moderator-predictor distinction. Therefore, he argues, researchers should clarify why it makes sense to say that the effect of X on Y depends on the level of Z as opposed to the effect of Z on Y depends on the level of X. Finally, Cortina proposes restricted variance interaction (reasoning) as a potential tool that may help researchers move from a general notion regarding Z moderating the X-Y relationship to a variable-specific justification for a particular interaction pattern.
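The symmetry behind the second observation can be seen from the standard two-way interaction model. The equations below are a generic textbook formulation (the coefficient symbols are chosen for this sketch) rather than a reproduction of Cortina's own derivation.

```latex
\begin{align}
  Y &= b_0 + b_1 X + b_2 Z + b_3 XZ + e, \\
  \frac{\partial Y}{\partial X} &= b_1 + b_3 Z, \qquad
  \frac{\partial Y}{\partial Z} = b_2 + b_3 X.
\end{align}
```

The same coefficient b_3 governs both how the effect of X changes with Z and how the effect of Z changes with X, so the statistical model alone cannot designate one variable as the moderator; that designation has to come from theory.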

Part IX (Chaps. 18 and 19) reflects on another critical methodological challenge facing many IB researchers, namely that of endogeneity. Myles Shaver discusses Chap. 18, “Endogeneity in International Business Research” by Reeb, Sakakibara, and Mahmood (2012); his Commentary in Chap. 19 presents three points to complement the issues raised by the authors. Shaver starts by reminding us about what exactly causality is and how to establish causal identification. He illustrates the importance of paying attention to both guiding theory and alternative explanations (or theories) that would or could lead to the same relationship between the variables we study. Shaver points out that authors must take several steps toward causal identification and must view this as a process, rather than deploy a supposedly “simple” fix. He offers three specific observations (presented here in a different order): (1) the difficulty of establishing causal identification is linked to the nature of the data we collect; (2) causal identification is best established through a cumulative body of research and a plurality of approaches; and (3) establishing causal identification requires both well-crafted theories and well-crafted alternative theoretical mechanisms.

Part X (Chaps. 20, 21, and 22) addresses another important issue in quantitative IB research, namely that of common method variance (CMV). Harold Doty and Marina Astakhova discuss uncommon methods variance (UMV) in their Commentary on Chap. 20, “Common Method Variance in International Business Research” by Chang, Van Witteloostuijn, and Eden (2010). Their Commentary in Chap. 21 offers four guidelines that will help reviewers evaluate the extent to which CMV threatens the validity of a study’s findings. These guidelines encourage reviewers (and authors) to ask critical questions related to: (1) the extent to which single source or self-report measures may be the most theoretically appropriate measurement approach in a particular study; (2) how the content of the constructs may help judge the potential for biased results; (3) how likely it is that the observed correlations are biased given reported reliabilities; and (4) whether the larger nomological network would appear to make sense.

In a Further Reflection (Chap. 22) on their JIBS article, Van Witteloostuijn, Eden, and Chang reiterate the importance of making appropriate ex ante research design decisions in order to avoid or minimize such issues. They also provide compelling evidence for the continued importance and relevance of the single-respondent–one-shot survey design in many instances. Therefore, ex ante approaches to CMV issues are preferable to ex post remedies. The authors end by musing over the extent to which CMV issues may also apply to other research designs than single-respondent–one-shot survey designs, arguing for the importance of replication studies—particularly ones that utilize different research designs and methods.

The volume concludes with Parts XI and XII (Chaps. 23, 24, 25, 26, and 27), which focus on frontier methodological challenges in IB research. Part XI (Chaps. 23, 24, and 25) delves into the complexities of modelling the multilevel nature of IB phenomena. Robert Vandenberg discusses opportunities and challenges specific to multilevel research in his insightful Commentary on Chap. 23, “Multilevel Models in International Business Research” by Peterson, Arregle, and Martin (2012). Vandenberg in his Chap. 24 Commentary points to four “hidden” jewels in the article that may not be apparent to the reader: (1) not addressing cross-level direct effects; (2) not using the term cross-level when addressing how a level 2 variable may moderate the slopes of an X-Y relationship within each level 2 unit; (3) introducing the concept of cross-classified cases; and (4) centring. He also addresses two methodological advancements since the publication of the article: (a) ML structural equation modelling; and (b) incorporating more than just two levels into multilevel analysis. Vandenberg has very strong views on the statistical possibilities (or impossibility, as he argues) of testing particular types of relationships, such as cross-level direct effects, and he also urges authors not to use the term “cross-level interaction” at all. More constructively, he urges scholars to pay due attention to cross-classified cases, that is, data that are not hierarchically nested, which is often the case in IB research (see also our earlier discussion under the heading How to choose the level(s) of analysis?). Finally, he also points to the importance of centring in multilevel analysis. He concludes by discussing the two recent advances (at least to IB scholars) pertaining to complex multilevel structural equation modelling (MLSEM) and models with more than two levels.

Further reflecting on the scope for the use of multilevel models (MLMs) in IB research, one of the original authors (Martin) in Chap. 25 reviews conditions and solutions for the estimation of MLMs where the dependent variable is not continuous. He points to several powerful and well-documented software packages that allow for the estimation of such models, but cautions that guidelines for appropriate use of MLM (particularly with non-continuous dependent variables) are less well documented and that sample size requirements are generally more demanding. With regard to sample size requirements, Martin reminds us that most power simulation studies use a single predictor (typically at level 2), thus rendering true power analysis difficult. He ends by offering two suggestions that may help researchers overcome the issues resulting from small sample size: (1) using repeated measures at level 1 and (2) using bootstrapping and a Bayesian estimator leveraging Gibbs sampling to reduce the number of unique subjects required.

Cultural distance is one of the most commonly used proxies to assess the general difficulties firms will face when operating across borders. Part XII (Chaps. 26 and 27) concludes this research methods volume by providing insightful recommendations about how to conduct distance research in IB via a detailed analysis of the various measures for cultural distance available. Mark Peterson and Yulia Muratova comment on Chap. 26, “Conceptualizing and Measuring Distance in International Business Research: Recurring Questions and Best Practice Guidelines” by Beugelsdijk, Ambos, and Nell (2018). The Peterson-Muratova Commentary in Chap. 27 assesses the recommendation to use the Mahalanobis distance correction in the context of studies of distances between a single home or host country and multiple other countries and finds strong support for its importance. Peterson and Muratova then offer two additional recommendations: (1) occasionally, distance from a single reference country can be meaningfully estimated for some of the country’s salient cultural or institutional characteristics; and (2) when estimates for a reference country require more than the data can provide, the best course of action may be to study cultural and institutional characteristics as variables representing other countries with which it does business.
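For reference, the Mahalanobis distance between the vectors of cultural dimension scores of two countries a and b takes the following general form. The symbols are chosen for this sketch; S denotes the covariance matrix of the dimensions across the countries in the sample.

```latex
\begin{equation}
  d_M(a, b) = \sqrt{(\mathbf{x}_a - \mathbf{x}_b)^{\top} \mathbf{S}^{-1} (\mathbf{x}_a - \mathbf{x}_b)}
\end{equation}
```

When the dimensions are uncorrelated, S is diagonal and the expression reduces to a variance-standardized Euclidean distance; the off-diagonal terms are what correct for correlated dimensions.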

Having briefly pointed out some of the highlights of the other 26 chapters in this book, we now turn to our last goal for this chapter: providing a few recommendations for best practices in research methods, which we hope will be useful for IB researchers.

A Few Suggestions for the Road Ahead

This book is a first, serious attempt to bring together various strands of state-of-the-art thinking on research methods in international business. The volume is intended as a solid reference book for scholars, ranging from research master’s students to senior academics, as they reflect on the best research methods approaches that can reasonably be adopted given the nature of the IB phenomena studied. Deploying the practices suggested in this book will go a long way towards improving the image of IB research as a field of academic inquiry that is methodologically on a par with the more conventional subject areas in business schools.

IB as a field of research is rapidly moving towards maturity. The level of methodological sophistication with which many IB scholars now study cross-border phenomena makes it likely that the IB context will increasingly prove to be fertile ground for developing innovative new methodologies that may inform other disciplines. If this occurs, IB will evolve from being a net methods importer towards becoming an exporter in its own right.

We end this chapter with four methods-related recommendations for IB scholars and reviewers alike, which follow from our long experience with assessing the work of colleagues and from our own work being evaluated by our peers.

1. Rules of thumb and widely accepted conventions as to what constitutes an acceptable methodological approach or interpretation of an outcome should never substitute for independent scholarly judgement and plain common sense.

Many IB scholars legitimately claim that their work is sometimes unfairly judged because of “methods policing” by reviewers and editors, who follow simple heuristics and standard rules that should perhaps not apply to the specific study being assessed. One case in point is that of multicollinearity. As stated by Lindner, Puck, and Verbeke (2019): “Research in IB is affected by prevailing myths about the effects of multicollinearity that hamper effective testing of hypotheses… Econometric texts and theory tend to use ‘clean’ examples (i.e., simple ones with only one problem being present at a time) to analytically make a point about the effect of the violation of an assumption on point estimates or their variance. Yet, IB research usually deals with complex relationships and many interrelated variables. To the best of our knowledge, there is no investigation into how specific econometric and data problems affect regression outcomes in such ‘messy’ cases where, among potential other issues, multicollinearity exists”. Lindner et al. (2019) show that many published JIBS articles explicitly calculate Variance Inflation Factors (VIFs) to address multicollinearity, which in turn helps to determine what variables to include in regression models. In more than 10 percent of the articles studied, variables were dropped when high VIFs had been observed. Yet, the authors demonstrate that high VIFs may be an inappropriate guide to eliminate otherwise relevant parameters from regressions.
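For readers less familiar with the statistic, the sketch below shows what a VIF measures: for predictor j it equals 1 / (1 - R_j^2), where R_j^2 comes from regressing predictor j on the other predictors, and it can equivalently be read off the diagonal of the inverse correlation matrix. The predictor names and values are hypothetical and are not taken from Lindner et al. (2019).

```python
# Minimal sketch: VIFs as the diagonal of the inverse predictor correlation matrix.
# All variable names and numbers are hypothetical.

def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with pivoting.

    Fails with a ZeroDivisionError if predictors are perfectly collinear.
    """
    n = len(matrix)
    aug = [list(row) + [float(i == j) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(columns):
    """Return {predictor name: VIF} for a dict of equal-length columns."""
    names = list(columns)
    corr = [[pearson(columns[a], columns[b]) for b in names] for a in names]
    inverse = invert(corr)
    return {name: inverse[i][i] for i, name in enumerate(names)}


predictors = {
    "cultural_distance": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    "institutional_distance": [2.0, 1.0, 4.0, 3.0, 6.0, 5.0],
    "firm_size": [3.0, 1.0, 2.0, 2.0, 1.0, 3.0],
}
result = vifs(predictors)
for name, value in result.items():
    print(f"{name}: VIF = {value:.2f}")
```

The statistic only describes how strongly predictors overlap with one another; it does not indicate whether a variable is theoretically relevant, which is consistent with the caution against dropping variables on the basis of a VIF threshold alone.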

Conversely, sometimes problematic empirical studies are actually published and become heavily cited because they align with a stream of studies adopting a similar methodological approach and meet a number of supposed quality standards, again as a function of heuristics and conventions, which may be ill advised at best. For example, a large literature exists on the multinationality–performance linkage, which—as far as theory is concerned—builds upon concepts related to the entire historical trajectory of firms, starting from their first international expansion move. But most empirical studies in this realm actually build on cross-sectional data or panel data covering only a limited time span (e.g., 10 years), rather than the firms’ actual histories. An unacceptable discrepancy can therefore be observed between theory and data in a large number of articles, including articles published in leading academic journals, where reviewers and editors accept poor work, based on the standards set in earlier poor work (Verbeke and Forootan 2012).

2. IB researchers should systematically be pushed to disclose their actual command of the data they use, and their knowledge of the economic actors supposedly represented by these data or affected by these data.

This challenge is becoming increasingly important in an era of access to big data and higher sophistication in the information and communications technology sphere. More data and better technology do not necessarily lead IB researchers to have a better command of their data. A few years ago, one of the editors of this volume contacted the OECD because some data collected longitudinally and across countries on inward and outward FDI revealed potential inconsistencies. Upon investigating the discrepancies found, it appeared that these data were collected by national agencies, and that the data sources drawn from, as well as the methods to collect and collate the data, changed every year in some countries. The OECD itself had been making significant changes to its data aggregation methods over time (these were noted in footnotes under the data tables, in font size 3 and therefore difficult to decipher). The recommendation of an OECD expert was therefore never to assess FDI trajectories over periods longer than 3–5 years. This expert stated: “comparisons within a country over more than 3–5 years will at best be like comparing apples and oranges; comparing across countries over time, will be like comparing apples and sports cars”.

The above example is indicative of a major problem facing IB researchers. The main problem is not that national and international agencies change their methods and sources to collect data, sometimes in a non-transparent way. The problem is that many IB researchers are unaware (or choose to remain unaware) of this situation and thus overestimate the quality and consistency of their data. Especially in an era when scholars are pushed to analyse large databases and to deploy sophisticated statistical techniques, it would appear that many of them simply do not conduct any background investigation of their data. That is, they know little or nothing about the firms in their samples and are ignorant of these firms’ historical trajectories and the real-world meaning behind the evolution of the values of the parameters they study. In some cases, they do not even have a basic command of the language in which the data were collected or published. They just view it as their task to test hypotheses, but without any in-depth understanding of the subjects they are studying. After the empirical analyses have been performed, very few IB scholars confront the managers or owners of the firms they have analysed with the results and conclusions of their studies. As a result, entire bodies of completely speculative research come into existence and feed off each other, without due diligence.

The answer to the above is clearly for journal editors and reviewers to demand tangible proof that the authors of articles have a proper command of their data. It should be mandatory for editors to ask authors submitting manuscripts which due diligence measures they undertook to ascertain the quality of the underlying data and the plausibility of the results found. In many cases, this may imply contacting data collection agencies as well as firms, both ex ante (at the time of formulating hypotheses and collecting data) and ex post (after the results of the empirical analysis have been computed).

3. IB researchers should systematically consider the possibility of “combinatorial notions” being associated with particular outcomes, rather than assuming from the outset that one key independent variable affects the focal outcome with the impact moderated by other variables.

As one example, the fsQCA approach discussed earlier in this chapter examines combinations of factors linked to an outcome variable in “complex” situations or “rich contexts”. “Complex” here means that the various parameters considered work in concert to influence the outcome variable. Different combinations of these parameters can lead equifinally to the same outcome. What is perhaps more critical is that no individual parameter might in and of itself determine a particular outcome, in sharp contrast with conventional regression analysis, where scholars typically identify a key independent variable.
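The set-theoretic logic described above can be made concrete with a small sketch. It is illustrative only: the membership scores are invented, the conditions A, B, C and the outcome Y are hypothetical, and the code relies on the standard fuzzy-set conventions (intersection as the minimum of memberships; consistency of a sufficient condition as the sum of min(x, y) divided by the sum of x). It is not a substitute for dedicated fsQCA software, which also handles calibration and truth-table minimization.

```python
def fuzzy_and(*memberships):
    """Fuzzy-set intersection: membership in a combination is the minimum of its parts."""
    return min(memberships)

def consistency(condition, outcome):
    """Degree to which the condition is a subset of the outcome: sum(min(x, y)) / sum(x)."""
    return sum(min(x, y) for x, y in zip(condition, outcome)) / sum(condition)

def coverage(condition, outcome):
    """Share of the outcome accounted for by the condition: sum(min(x, y)) / sum(y)."""
    return sum(min(x, y) for x, y in zip(condition, outcome)) / sum(outcome)

# Hypothetical fuzzy membership scores for six firms (invented for illustration)
A = [0.9, 0.8, 0.1, 0.2, 0.7, 0.3]
B = [0.8, 0.9, 0.2, 0.1, 0.2, 0.9]
C = [0.1, 0.2, 0.9, 0.8, 0.3, 0.2]
Y = [0.9, 0.8, 0.8, 0.9, 0.3, 0.4]  # outcome

# Membership of each firm in the combination "A AND B"
A_and_B = [fuzzy_and(a, b) for a, b in zip(A, B)]

for label, cond in [("A", A), ("B", B), ("A AND B", A_and_B), ("C", C)]:
    print(f"{label:8s} consistency = {consistency(cond, Y):.2f}  "
          f"coverage = {coverage(cond, Y):.2f}")
```

In this toy data, both the combination A AND B and the single condition C are highly consistent with the outcome, while A or B alone is less so, which is the pattern the text calls equifinality.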

As with any model, there might be alternative explanations. In fsQCA these are taken into account by considering all theory-anchored alternative explanations prevailing in the literature. FsQCA does not use control variables, because it does not measure linear, causal relationships. One of the arguments for the use of fsQCA is precisely that it forces researchers to use a more experimental approach, that is, trying to control for causality ex ante through theory and research design, as opposed to ex post testing through the use of control variables. Thus, using fsQCA entails carefully choosing models informed by causal antecedents for a given phenomenon, as identified in the theory-based literature.

As an alternative to fsQCA, SEM allows for simultaneous equations with multiple x’s and y’s (as well as moderators and mediators), whereas most OLS-type studies do not. The tendency to test regressions hierarchically, with controls first, followed by independent variables, then moderators, and finally the “full” model, which is interpreted only in terms of p-values in relation to hypothesis testing, may not be an optimal approach. Nielsen and Raswant (2018) discuss this issue with regard to controls and suggest that IB scholars should run models both with and without controls (i.e., a model with only x’s and no controls and a full model with controls), in order to tease out the actual effect(s) of the controls. Something similar could be done with particular independent variables that are thought (theoretically) to be interdependent rather than independent of each other. While such interdependence violates OLS assumptions, other estimation techniques can address this challenge.
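The suggestion to estimate models with and without controls can be sketched on simulated data. This is a minimal illustration, not the procedure of Nielsen and Raswant (2018): the variables x, z, and y, the coefficient values, and the helper function `ols` are all assumptions made for the example, and z stands for a hypothetical control that influences both x and y.

```python
import random

def ols(y, columns):
    """OLS coefficients (intercept first) from the normal equations X'X b = X'y."""
    n = len(y)
    X = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    M = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        for r in range(k):
            if r != c:
                f = M[r][c]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[c])]
    return [M[i][k] for i in range(k)]

# Simulated data (assumed): z drives both x and y; the true effect of x on y is 0.5
random.seed(42)
n = 2000
z = [random.gauss(0, 1) for _ in range(n)]
x = [0.8 * zi + random.gauss(0, 1) for zi in z]
y = [0.5 * xi + 1.0 * zi + random.gauss(0, 1) for xi, zi in zip(x, z)]

b_controls_only = ols(y, [z])   # controls, no x
b_x_only = ols(y, [x])          # x, no controls
b_full = ols(y, [x, z])         # x and controls

print("controls only: z =", round(b_controls_only[1], 2))
print("x only:        x =", round(b_x_only[1], 2))
print("full model:    x =", round(b_full[1], 2), " z =", round(b_full[2], 2))
```

In this simulation the coefficient on x is roughly twice as large when z is omitted, because z drives both x and y. Reporting the three models side by side makes the role of the control visible rather than leaving it buried in a single full model.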

4. Technology is a powerful aid in research, but IB scholars should strive for methodological parsimony.

As society transforms and is transformed by new technology, novel pathways materialize for IB researchers to collect and analyse data. New forms of data also become more readily available. As of 2019, the sheer number and sophistication of technological tools that can help scholars collect, analyse, and interpret data is daunting. And more of these tools are on their way, as this very chapter is being written.

For qualitative and quantitative scholars alike, increasingly sophisticated and complex tools are becoming available, and this trend is driven by innovation in technology. For instance, the spread of video and photographic technology allows scholars to use images both as sources of data and as tools for data collection. In addition, the increasingly digital form of most data (either as audio or video files) provides new ways of accessing, developing, analysing, and interpreting data. With the Internet now available to an estimated 60 percent of the world’s population, tables, charts, maps, and articles, in addition to audio and video files, can be easily shared across the globe. Social media platforms such as Facebook, LinkedIn, Twitter, and so on further link previously disparate people throughout the world. For IB researchers, digital tools and platforms offer tremendous opportunities to collect data, both primary and secondary, from diverse cross-country and cross-cultural settings.

Technological advances have also led to new ways of analysing data, with increasingly sophisticated techniques and tools available to both qualitative and quantitative researchers. For instance, various computer-assisted qualitative data analysis software (CAQDAS) packages exist, and with artificial intelligence (AI) on the rise, such programs are likely to become even more capable of sorting through enormous amounts of data in various formats (e.g., text, audio, visual) and drawing out coherent information for analytical purposes. By the same token, quantitative methods have experienced significant technological leaps forward with the coming of Big Data and a shift from analogue to digital storage and distributed processing (e.g., via cloud-based platforms). Statistical software capable of analysing such large and complex data is following suit, with many of the “traditional” packages now offering Big Data programs (e.g., R). As with qualitative software, AI and other innovative technologies may further enhance our abilities to access, process, and interpret increasingly large and complex cross-cultural datasets.

Finally, machine learning may lead to significant improvements in research methodology in IB as it holds the potential to assist both quantitative and qualitative research, and perhaps lead to more mixed-methods applications (see also the Commentary by Eisenhardt in Chap. 10 in this volume). Such advances in technology are likely to enable IB researchers to ask broader questions about IB phenomena that influence (and are influenced by) many if not all of us, and compare and contrast results across regions, countries, sub-cultures, and even individuals—over vast distances in both time and space. It may also lead to more narrow research questions that seek to tease out micro-foundational issues pertaining to individual behaviour(s) within and across contexts.

However, such technological developments raise important issues about the way researchers collect, process, and publish data, and how they produce high-quality analyses. The diversity of software means that there is a need for standards for storing and exchanging data and analyses. Moreover, with more analytical (statistical or other) power comes the risk of drowning good research in technically sophisticated modelling exercises.

While the issue of responsible and ethical research is an important challenge in its own right, we argue that IB scholars should strive for methodological parsimony rather than technical sophistication when designing and carrying out their studies. It may be enticing to apply the newest tools or most complex methodologies in a study—particularly if junior scholars with such skills are involved—but an important caveat surrounds the trade-offs between “necessary” and “sufficient” methodological complexity.

IB researchers would be wise to remember that rigor in methodology does not equate to complexity any more than larger datasets (such as Big Data) can ensure more validity or reliability. To be sure, large datasets may increase power to detect certain phenomena but potentially at the risk of committing type I errors. Add to this the concerns about veracity stemming from noise in the data and scholars may be left with less than desirable outcomes.
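One way to read this caveat is that, with enough observations, even a substantively negligible association passes a conventional significance test. The sketch below holds a hypothetical correlation fixed at r = 0.02 and varies only the sample size. The function name `p_value_for_correlation` is ours, and the p-value uses a normal approximation to the t distribution, an assumption that is reasonable only for large n.

```python
import math

def p_value_for_correlation(r, n):
    """Two-sided p-value for H0: rho = 0, using a normal approximation to the t statistic."""
    t = r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)
    return math.erfc(abs(t) / math.sqrt(2))

r = 0.02  # hypothetical correlation, held fixed across sample sizes
for n in (100, 10_000, 1_000_000):
    p = p_value_for_correlation(r, n)
    print(f"n = {n:>9,}  p = {p:.4f}  variance explained = {r ** 2:.2%}")
```

The effect size never changes across the three rows; only the p-value does. In very large samples, effect sizes therefore deserve at least as much attention as significance levels.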

Another example of how technology is potentially a double-edged sword is the increasing inclusion of graphical user interfaces (GUIs) in many software packages (for instance, in most SEM software packages). While such graphical interfaces may aid the researcher, they do so at the risk of sometimes obscuring the underlying meaning behind the study (i.e., drawing a diagram with arrows between boxes and having the software write the underlying equations moves the researcher one step further from the data and its implications). By the same token, some software packages also allow for automatic removal of outliers, or for capitalization on chance through data-driven modelling procedures such as modification indices.

IB researchers collecting, analysing, and interpreting data from nationally and culturally diverse settings should utilize technologically sophisticated techniques when warranted (e.g., multilevel modelling of nested data). However, they must also avoid the trap of “showing off” newly developed methodologies in situations where these are not necessary. The old adage still holds true: if it ain’t broke, don’t fix it. Many frequently used mainstream techniques, such as regression and ethnographic studies, still work well to address the majority of our IB research questions.

It is the responsibility of the researcher to select and apply the best-suited methodology within a given research setting. Replication is important, and results should never be attributable to a particular method. We strongly recommend that IB scholars consider parsimony over technical sophistication when making such choices. A short statement justifying methodological choices, including selection criteria, is warranted; and in early-round submissions, it can be worthwhile to illustrate the utility of a particular (advanced) technique by comparing and reporting results with more/less parsimonious techniques.

Conclusions

“The values of honesty, trust, fairness, respect and responsibility in learning, teaching and research” (Bretag 2019)—that is what acting with integrity means. To act with integrity in our research requires that we use high-quality research methods that promote the “truth” and minimize error.

FFP (falsification, fabrication, and plagiarism) and QRPs (questionable research practices) are not consistent with research integrity and have serious negative consequences for the credibility of our scholarship. Researchers, we believe, make mistakes mostly because they do not really understand the nuances of using different research methods. There will always be some scholars who engage in research misconduct, and a far larger number who engage in QRPs, but we believe that the bulk of errors in how scholars use research methods is due to unfamiliarity with best practices.

This book is designed to help reduce unfamiliarity hazards by explaining and exploring several best practices in IB research methods. We hope that reading and working through the chapters in this book will enhance research integrity in IB scholarship and serve as inspiration for interesting, high-quality IB research. We also hope that this collection may promote more discussion among IB scholars about the importance and utility of research methods in furthering our field. Only through innovation (in both theory and methodology) can international business scholarship grow and prosper.

Notes

1. The nationality of authors was determined by the university affiliation at the time of publication. Thus, this number does not take into account the multicultural backgrounds of authors in the same country. Hence, this number is likely very conservative and the real increase potentially much higher.

2. The authors of each original article were invited to write a Further Reflections note on their original piece. Some authors chose to do this; others decided not to, inter alia, because they felt there was little new to report in substantive terms.

References

Aguinis, H., W.F. Cascio, and R.S. Ramani. 2017. Science’s reproducibility and replicability crisis: International business is not immune. Journal of International Business Studies 48 (6): 653–663.

Andersson, U., A. Cuervo-Cazurra, and B.B. Nielsen. 2014. From the editors: Explaining interaction effects within and across levels of analysis. Journal of International Business Studies 45: 1063–1071.

Antonakis, J., and J. Dietz. 2011. Looking for validity or testing it? The perils of stepwise regression, extreme-scores analysis, heteroscedasticity, and measurement error. Personality and Individual Differences 50: 409–415.

Arregle, J.-L., L. Hebert, and P.W. Beamish. 2006. Mode of international entry: The advantages of multilevel methods. Management International Review 46: 597–618.

Banks, G.C., E.H. O’Boyle Jr., J.M. Pollack, C.D. White, J.H. Batchelor, C.E. Whelpley, K.A. Abston, A.A. Bennett, and C.L. Adkins. 2016a. Questions about questionable research practices in the field of management: A guest commentary. Journal of Management 42 (1): 5–20.

Banks, G.C., S.G. Rogelberg, H.M. Woznyj, R.S. Landis, and D.E. Rupp. 2016b. Editorial: Evidence on questionable research practices: The good, the bad, and the ugly. Journal of Business and Psychology 31: 323–338.

Bedeian, A.G., S.G. Taylor, and A.N. Miller. 2010. Management science on the credibility bubble: Cardinal sins and various misdemeanors. Academy of Management Learning & Education 9 (4): 715–725.

Beugelsdijk, S., B. Ambos, and P.C. Nell. 2018. Conceptualizing and measuring distance in international business research: Recurring questions and best practice guidelines. Journal of International Business Studies 49 (9): 1113–1137.

Bouter, L.M., J. Tijdink, N. Axelsen, B.C. Martinson, and G. ter Riet. 2016. Ranking major and minor research misbehaviors: Results from a survey among participants of four World Conferences on Research Integrity. Research Integrity and Peer Review 1: 17.

Bretag, T. 2019. Academic integrity. Oxford Research Encyclopedia, Business and Management. Oxford University Press. https://doi.org/10.1093/acrefore/9780190224851.013.147.

Brewer, P., and S. Venaik. 2014. The ecological fallacy in national culture research. Organization Studies 35 (7): 1063–1086.

Burgelman, R.A. 2011. Bridging history and reductionism: A key role for longitudinal qualitative research. Journal of International Business Studies 42 (5): 591–601.