Abstract

We propose FuCoLoT – a Fully Correlational Long-term Tracker. It exploits the novel DCF constrained filter learning method to design a detector that is able to re-detect the target in the whole image efficiently. FuCoLoT maintains several correlation filters trained on different time scales that act as the detector components. A novel mechanism based on the correlation response is used for tracking failure estimation. FuCoLoT achieves state-of-the-art results on standard short-term benchmarks and it outperforms the current best-performing tracker on the long-term UAV20L benchmark by over 19%. It has an order of magnitude smaller memory footprint than its best-performing competitors and runs at 15 fps in a single CPU thread.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

1 Introduction

The computer vision community has recently witnessed significant activity and advances of model-free short-term trackers [22, 40] which localize a target in a video sequence given a single training example in the first frame. Current short-term trackers [1, 12, 14, 28, 37] localize the target moderately well even in the presence of significant appearance and motion changes and they are robust to short-term occlusions. Nevertheless, any adaptation at an inaccurate target position leads to gradual corruption of the visual model, drift and irreversible failure. Another major source of failures of short-term trackers are significant occlusion and target disappearance from the field of view. These problems are addressed by long-term trackers which combine a short-term tracker with a detector that is capable of reinitializing the tracker.

A long-term tracker development is complex as it entails: (i) the design of the two core components - the short term tracker and the detector, (ii) an algorithm for their interaction including the estimation of tracking and detection uncertainty, and (iii) the model adaptation strategy. Initially, memoryless displacement estimators like the flock-of-trackers [20] and the flow calculated at keypoints [34] were considered. Later, methods applied keypoint detectors [19, 31, 34, 35], but these require large and sufficiently well textured targets. Cascades of classifiers [20, 30] and more recently deep feature object detection systems [15] have been proposed to deal with diverse targets. The drawback is in the significant increase of computational complexity and the subsequent reduction in the range of possible applications. Recent long-term trackers either train the detector on the first frame only [15, 34], thus losing the opportunity to learn target appearance variability or adapt the detector [19, 30] and becoming prone to failure due to learning from incorrect training examples.

The FuCoLoT tracker framework: a short-term component of FuCoLoT tracks a visible target (1). At occlusion onset (2), the localization uncertainty is detected and the detection correlation filter is activated in parallel to short-term component to account for the two possible hypotheses of uncertain localization. Once the target becomes visible (3), the detector and short-term tracker interact to recover its position. Detector is deactivated once the localization uncertainty drops.

The paper introduces FuCoLoT - a novel Fully Correlational Long-term Tracker. FuCoLoT is the first long-term tracker that exploits the novel discriminative correlation filter (DCF) learning method based on the ADMM optimization that allows to control the support of the discriminative filter. The method was first used in CSRDCF [28] to limit the DCF filter support to the object segmentation and to avoid problems with shapes not well approximated by a rectangle.

The first contribution of the paper is the observation, and its use, that the ADMM optimization allows DCF to search in an area with size unrelated to the object, e.g. in the whole image. The decoupling of the target and the search region sizes allows implementing the detector as a DCF. Both the short-term tracker and the detector of FuCoLoT are DCFs operating efficiently on the same representation, making FuCoLoT “fully correlational”. For some time, DCFs have been the state-of-the-art in short-term tracking, topping a number of recent benchmarks [22, 23, 23, 24, 40]. However, with the standard learning algorithm [18], a correlation filter cannot be used for detection because of two reasons: (i) the dominance of the background in the search regions which necessarily has the same size as the target model and (ii) the effects of the periodic extension on the borders. Only recently theoretical breakthroughs [10, 21, 28] allowed constraining the non-zero filter response to the area covered by the target.

As a second contribution, FuCoLoT uses correlation filters trained on different time scales as a detector to achieve resistance to occlusions, disappearance, or short-term tracking problems of different duration. Both the detectors and its short-term tracker is implemented by a CSRDCF core [28], see Fig. 1.

The estimation of the relative confidence of the detectors and the short-term tracker, as well as of the localization uncertainty, is facilitated by the fact that both the detector and the short-term tracker output the same representation - the correlation response. We show that this leads to a simple and effective method that controls their interaction. As another contribution, a stabilizing mechanism is introduced that enables the detector to recover from model contamination.

Extensive experiments show that the proposed FuCoLoT tracker by far outperforms all trackers on a long-term benchmark and achieves excellent performance even on short-term benchmarks. FuCoLoT has a small memory footprint, does not require GPUs and runs at 15 fps on a CPU since both the detectors and the short-term tracker enjoy efficient implementation through FFT.

2 Related Work

We briefly overview the most related short-term DCFs and long-term trackers.

Short-Term DCFs. Since their inception as the MOSSE tracker [3], several advances have made discriminative correlation filters the most widely used methodology in short-term tracking [22]. Major boosts in performance followed introduction of kernels [18], multi-channel formulations [11, 16] and scale estimation [8, 27]. Hand-crafted features have been recently replaced with deep features trained for classification [9, 12] as well as features trained for localization [37]. Another line of research lead to constrained filter learning approaches [10, 28] that allow learning a filter with the effective size smaller than the training patch.

Long-Term Trackers. The long-term trackers combine a short-term tracker with a detector – an architecture first proposed by Kalal et al. [20] and now commonly used in long-term trackers. The seminal work of Kalal et al. [20] proposes a memory-less flock of flows as a short-term tracker and a template-based detector run in parallel. They propose a P-N learning approach in which the short-term tracker provides training examples for the detector and pruning events are used to reduce contamination of the detector model. The detector is implemented as a cascade to reduce the computational complexity.

Another paradigm was pioneered by Pernici et al. [35]. Their approach casts localization as local keypoint descriptors matching with a weak geometrical model. They propose an approach to reduce contamination of the keypoints model that occurs at adaptation during occlusion. Nebehay et al. [34] have shown that a keypoint tracker can be utilized even without updating and using pairs of correspondences in a GHT framework to track deformable models. Maresca and Petrosino [31] have extend the GHT approach by integrating various descriptors and introducing a conservative updating mechanism. The keypoint methods require a large and well textured target, which limits their application scenarios.

Several methods achieve long-term capabilities by careful model updates and detection of detrimental events like occlusion. Grabner et al. [17] proposed an on-line semi-supervised boosting method that combines a prior and online-adapted classifiers to reduce drifting. Chang et al. [5] apply log-polar transform for tracking failure detection. Kwak et al. [26] proposed occlusion detection by decomposing the target model into a grid of cells and learning an occlusion classifier for each cell. Beyer et al. [2] proposed a Bayes filter for target loss detection and re-detection for multi-target tracking.

Recent long-term trackers have shifted back to the tracker-detector paradigm of Kalal et al. [20], mainly due to availability of DCF trackers [18] which provide a robust and fast short-term tracking component. Ma et al. [29, 30] proposed a combination of KCF tracker [18] and a random ferns classifier as a detector. Similarly, Hong et al. [19] combine a KCF tracker with a SIFT-based detector which is also used to detect occlusions.

The most extreme example of using a fast tracker and a slow detector is the recent work of Fan and Ling [15]. They combine a DSST [8] tracker with a CNN detector [36] which verifies and potentially corrects proposals of the short-term tracker. The tracker achieved excellent results on the challenging long-term benchmark [32], but requires a GPU, has a huge memory footprint and requires parallel implementation with backtracking to achieve a reasonable runtime.

3 Fully Correlational Long-Term Tracker

In the following we describe the proposed long-term tracking approach based on constrained discriminative correlation filters. The constrained DCF is overviewed in Sect. 3.1, Sect. 3.2 overviews the short-term component, Sect. 3.3 describes detection of tracking uncertainty, Sect. 3.4 describes the detector and the long-term tracker is described in Sect. 3.5.

3.1 Constrained Discriminative Filter Formulation

FuCoLoT is based on discriminative correlation filters. Given a search region of size \(W \times H\) a set of \(N_d\) feature channels \(\mathbf {f} = \{ \mathbf {f}_d \}_{d=1}^{N_d}\), where \(\mathbf {f}_d\in \mathcal {R}^{W\times H}\), are extracted. A set of \(N_d\) correlation filters \(\mathbf {h} = \{ \mathbf {h}_d \}_{d=1}^{N_d}\), where \(\mathbf {h}_d\in \mathcal {R}^{W\times H}\), are correlated with the extracted features and the object position is estimated as the location of the maximum of the weighted correlation responses

where \(\star \) represents circular correlation, which is efficiently implemented by a Fast Fourier Transform and \(\{ w_d \}_{d=1}^{N_d}\) are channel weights. The target scale can be efficiently estimated by another correlation filter trained over the scale-space [8].

We apply the recently proposed filter learning technique (CSRDCF [28]), which uses the alternating direction method of multipliers (ADMM [4]) to constrain the learned filter support by a binary segmentation mask. In the following we provide a brief overview of the learning framework and refer the reader to the original paper [28] for details.

Constrained Learning. Since feature channels are treated independently, we will assume a single feature channel (i.e., \(N_d=1\)) in the following. A channel feature \(\mathbf {f}\) is extracted from a learning region and a fast segmentation method [25] is applied to produce a binary mask \(\mathbf {m}\in \{0,1\}^{W \times H}\) that approximately separates the target from the background. Next a filter of the same size as the training region is learned, with support constrained by the mask \(\mathbf {m}\). The discriminative filter \(\mathbf {h}\) is learned by introducing a dual variable \(\mathbf {h}_c\) and minimizing the following augmented Lagrangian

where \(\mathbf {g}\) is a desired output, \(\hat{\mathbf {l}}\) is a complex Lagrange multiplier, \(\hat{(\cdot )}=\mathcal {F}(\cdot )\) denotes Fourier transformed variable, \([\cdot ]_\mathrm {Re}\) is an operator that removes the imaginary part and \(\mu \) is a non-negative real number. The solution is obtained via ADMM [4] iterations of two closed-form solutions:

where \(\mathcal {F}^{-1}(\cdot )\) denotes the inverse Fourier transform. In the case of multiple channels, the approach independently learns a single filter per channel. Since the support of the learned filter is constrained to be smaller than the learning region, the maximum response on the training region reflects the reliability of the learned filter [28]. These values are used as per-channel weights \(w_d\) in (1) for improved target localization.

Note that the constrained learning [28] estimates a filter implicitly padded with zeros to match the learning region size. In contrast to the standard approach to filter learning like e.g., [18] and multiplying with a mask post-hoc, the padding is explicitly enforced during learning, resulting in an increased filter robustness. We make an observation that adding or removing the zeros at filter borders keeps the filter unchanged, thus correlation on an arbitrary large region via FFT is possible by zero padding the filter to match the size. These properties make the constrained learning an excellent candidate to train the short-term component (Sect. 3.4) as well as the detector (Sect. 3.4) in a long-term tracker.

The short-term component (left) estimates the target location at the maximum response of its DCF within a search region centered at the estimate in the previous frame. The detector (right) estimates the target location as the maximum in the whole image of the response of its DCF multiplied by the motion model \(\pi (\mathbf {x}_{t})\). If tracking fails, the prior \(\pi (\mathbf {x}_{t})\) spreads.

3.2 The Short-Term Component

The CSRDCF [28] tracker is used as the short-term component in FuCoLoT. The short-term component is run within a search region centered on the target position predicted from the previous frame. The new target position hypothesis \(\mathbf {x}^\mathrm {ST}_t\) is estimated as the location of the maximum of the correlation response between the short-term filter \(\mathbf {h}^\mathrm {ST}_{t}\) and the features extracted from the search region (see Fig. 2, left).

The visual model of the short-term component \(\mathbf {h}^\mathrm {ST}\) is updated by a weighted running average

where \(\mathbf {h}^\mathrm {ST}_{t}\) is the correlation filter used to localize the target, \(\tilde{\mathbf {h}}^\mathrm {ST}_{t}\) the filter estimated by constrained filter learning (Sect. 3.1) in the current frame, and \(\eta \) is the update factor.

3.3 Tracking Uncertainty Estimation

Tracking uncertainty estimation is crucial for minimizing short-term visual model contamination as well as for activating target re-detection after events like occlusion. We propose a self-adaptive approach for tracking uncertainty based on the maximum correlation response.

Confident localization produces a well expressed local maximum in the correlation response \(\mathbf {r}_t\), which can be measured by the peak-to-sidelobe ratio \(\mathrm {PSR}(\mathbf {r}_t)\) [3] and by the peak absolute value \(\mathrm {max}(\mathbf {r}_t)\). Empirically, we observed that multiplying the two measures leads to improved performance, therefore the localization quality is defined as the product

The following observations were used in design of tracking uncertainty (or failure) detection: (i) relative value of the localization quality \(q_t\) depends on target appearance changes and is only a weak indicator of tracking uncertainty, and (ii) events like occlusion occur on a relatively short time-scale and are reflected in a significant reduction of the localization quality. Let \(\overline{q}_t\) be the average localization quality computed over the recent \(N_{q}\) confidently tracked frames. Tracking is considered uncertain if the ratio between \(\overline{q}_t\) and \({q}_t\) exceeds a predefined threshold \(\tau _q\), i.e.,

In practice, the ratio between the average and current localization quality significantly increases during occlusion, indicating a highly uncertain tracking, and does not require threshold fine-tuning (an example is shown in Fig. 3).

Localization uncertainty measure (7), reflects the correlation response peak strength relative to its past values. The measure rises fast during occlusion and drops immediately afterwards.

3.4 Target Loss Recovery

A visual model not contaminated by false training examples is desirable for reliable re-detection after a long period of target loss. The only known certainly uncontaminated filter is the one learned at initialization. However, for a short-term occlusions, the most recent uncontaminated model would likely yield a better detection. While contamination of the short-term visual model (Sect. 3.2) is reduced by the long-term system (Sect. 3.5), it cannot be prevented. We thus maintain as set of several filters \(\mathcal {H}^{\mathrm {DE}} = \{ \mathbf {h}_{i}^{\mathrm {DE}} \}_{i\in 1,\ldots N_{\mathrm {DE}}}\) updated at different temporal scales to deal with potential model contamination.

The filters updated frequently learn recent appearance changes, while the less frequently updated filters increase robustness to learning during potentially undetected tracking failure. In our approach, one of the filters is never updated (the initial filter), which guarantees full recovery from potential contamination of the updated filters if a view similar to the initial training image appears. The i-th filter is updated every \(n_{i}^{\mathrm {DE}}\) frames similarly as the short-term filter:

A random-walk motion model is added as a principled approach to modeling the growth of the target search region size. The target position prior \(\pi (\mathbf {x}_t)=\mathcal {N}(\mathbf {x}_t; \mathbf {x}_{c}, \varSigma _t)\) at time-step t is a Gaussian with a diagonal covariance \(\varSigma _t=\mathrm {diag}(\sigma _{xt}^2, \sigma _{yt}^2)\) centered at the last confidently estimated position \(\mathbf {x}_{c}\). The variances in the motion model gradually increase with the number of frames \(\varDelta _t\) since the last confident estimation, i.e., \([\sigma _{xt}, \sigma _{yt}] = [x_w, x_h] \alpha _{s}^{\varDelta _t}\), where \(\alpha _{s}\) is scale increase parameter, \(x_w\) and \(x_h\) are the target width and height, respectively.

During target re-detection, a filter is selected from \(\mathcal {H}^{\mathrm {DE}}\) and correlated with features extracted from the entire image. The detected position \(\mathbf {x}^\mathrm {DE}_t\) is estimated as the location maximum of the correlation response multiplied with the motion prior \(\pi (\mathbf {x}_t)\) as shown in Fig. 2 (right). For implementation efficiency only a single filter is evaluated on each image. The algorithm cycles through all filters in \(\mathcal {H}^{\mathrm {DE}}\) and all target size scales \(\mathcal {S}^{\mathrm {DE}}\) in subsequent images until the target is detected. In practice this means that all filters are evaluated approximately within a second of the sequence (Sect. 4.1).

3.5 Tracking with FuCoLoT

The FuCoLoT integrates the short-term component (Sect. 3.2), the uncertainty estimator (Sect. 3.3) and target recovery (Sect. 3.4) as follows.

Initialization. The long-term tracker is initialized in the first frame and the learned initialization model \(\mathbf {h}^\mathrm {ST}_1\) is stored. In the remaining frames, \(N_\mathrm {DE}\) visual models are maintained at different time-scales for target localization and detection \(\{ \mathbf {h}_{i}^{\mathrm {DE}} \}_{i\in 1,\ldots N_{\mathrm {DE}}}\), where the model updated at every frame is the short-term visual model, i.e., \(\mathbf {h}^\mathrm {ST}_t=\mathbf {h}_{N_\mathrm {DE}}^\mathrm {DE}\), and the model that is never updated is equal to the initialization model, i.e., \(\mathbf {h}_{1}^\mathrm {DE}=\mathbf {h}^\mathrm {ST}_1\).

Localization. A tracking iteration at frame t starts with the target position \(\mathbf {x}_{t-1}\) from the previous frame, a tracking quality score \(q_{t-1}\) and the mean \(\overline{q}_{t-1}\) over the recent \(N_q\) confidently tracked frames. A region is extracted around \(\mathbf {x}_{t-1}\) in the current image and the correlation response is computed using the short-term component model \(\mathbf {h}^\mathrm {ST}_{t-1}\) (Sect. 3.2). Position \(\mathbf {x}^\mathrm {ST}_t\) and localization quality \(q^\mathrm {ST}_t\) (6) are estimated from the correlation response \(\mathbf {r}_{t}^{\mathrm {ST}}\). When tracking is confident at \(t-1\), i.e., the uncertainty (7) \(\overline{q}_t/q_t\) was smaller than \(\tau _q\), only the short-term component is run. Otherwise the detector (Sect. 3.4) is activated as well to address potential target loss. The detector filter \(\mathbf {h}^\mathrm {DE}_i\) is chosen from the sequence of stored detectors \(\mathcal {H}^{\mathrm {DE}}\) and correlated with the features extracted from the entire image. The detection hypothesis \(\mathbf {x}^\mathrm {DE}_t\) is obtained as the location of the maximum of the correlation multiplied by the motion model \(\pi (\mathbf {x}_t)\), while the localization quality \(q^\mathrm {DE}_t\) (6) is computed only on the correlation response.

Update. In case the detector has not been activated, the short-term position is taken as the final target position estimate. Alternatively, both position hypotheses, i.e., the position estimated by the short-term component as well as the position estimated by the detector, are considered. The final target position is estimated as the one with higher quality score, i.e.,

If the estimated position is reliable (7), a constrained filter \(\tilde{\mathbf {h}}^\mathrm {ST}_{t}\) is estimated according to [28] and the short-term component (5) and detector (8) are updated. Otherwise the models are not updated, i.e., \(\eta =0\) in (5) and (8).

4 Experiments

4.1 Implementation Details

We use the same standard HOG [7] and colornames [11, 39] features in the short-term component and in the detector. All the parameters of the CSRDCF filter learning are the same as in [28], including filter learning rate \(\eta = 0.02\) and regularization \(\lambda = 0.01\). We use 5 filters in the detector, updated with the following frequencies \(\{ 0, 1/250, 1/50, 1/10, 1 \}\) and size scale factors \(\{ 0.5, 0.7, 1, 1.2, 1.5, 2 \}\).

The random-walk motion model region growth parameter was set to \(\alpha _{s}=1.05\). The uncertainty threshold was set to \(\tau _{q}=2.7\) and the parameter “recent frames” was \(N_{q}=100\). The parameters did not require fine tuning and were kept constant throughout all experiments. Our Matlab implementation runs at 15 fps on OTB100 [40], 8 fps on VOT16 [23] and 6 fps on UAV20L [32] dataset. The experiments were conducted on an Intel Core i7 3.4 GHz standard desktop.

4.2 Evaluation on a Long-Term Benchmark

The long-term performance of the FuCoLoT is analyzed on the recent long-term benchmark UAV20L [32] that contains results of 11 trackers on 20 long term sequences with average sequence length 2934 frames. To reduce clutter in the plots we include top-performing tracker SRDCF [10] and all long-term trackers in the benchmark, i.e., MUSTER [19] and TLD [20]. We add the most recent state-of-the-art long-term trackers CMT [34], LCT [30], and PTAV [15] in the analysis, as well as the recent state-of-the-art short-term DCF trackers CSRDCF [28], CCOT [12] and CNN-based MDNet [33] and SiamFC [1].

UAV20L [32] benchmark results. The precision plot (left) and the success plot (right).

Results in Fig. 4 show that on benchmark, FuCoLoT by far outperforms all top baseline trackers as well as all the recent long-term state-of-the-art. In particular FuCoLoT outperforms the recent long-term correlation filter LCT [30] by \(102\%\) in precision and \(106\%\) in success measures. The FuCoLoT also outperforms the currently best-performing published long-term tracker PTAV [15] by over \(22\%\) and \(26\%\) in precision and success measures, respectively. This is an excellent result especially considering that FuCoLoT does not apply deep features and backtracking like PTAV [15] and that it runs in near-realtime on a single thread CPU. The FuCoLoT outperforms the second-best tracker on UAV20L, MDNet [33] which uses pre-trained network and runs at cca. 1fps, by \(21\%\) in precision and \(19\%\) in success measure.

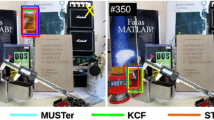

Qualitative results of the FuCoLoT and four state-of-the-art trackers on four sequences from [32]. The selected sequences contain full occlusions which highlight the re-detection ability of a tracker.

Table 1 shows tracking performance in terms of the AUC measure for the twelve attributes annotated in the UAV20L benchmark. The FuCoLoT is the top performing tracker across all attributes, including full occlusion and out-of-view, where it outperforms the second-best PTAV and MDNet by \(29\%\) and \(11\%\), respectively. These attributes focus on the long-term tracker capabilities since they require target re-detection.

Figure 5 shows qualitative tracking examples for the FuCoLoT and four state-of-the-art trackers: PTAV [15], CSRDCF [28], MUSTER [19] and TLD [20]. In all these sequences the target becomes fully occluded at least once. FuCoLot is the only tracker that is able to successfully track the target throughout Group2, Group3 and Person19 sequences, which shows the strength of the proposed correlation filter based detector. In Person 17 sequence, the occlusion is shorter, thus the short-term CSRDCF [28] and long-term PTAV [15] are able to track as well.

4.3 Re-detection Capability Experiment

In the original UAV20L [32] dataset, the target disappears and reappears only 39 times, resulting in only 4% of frames with the target absent. This setup does not significantly expose the target re-detection capability of the tested trackers. To address this, we have cropped the images in all sequences to 40% of their size around the center at target initial position. An example of the dataset modification is shown in Fig. 6. This modification increased the target disappearance/reappearance to 114 cases, and the target is absent in 34% of the frames.

The top six trackers from Sect. 4.2 and a long-term baseline TLD [20] were re-evaluated on the modified dataset (results in Table 2). The gap between the FuCoLoT and the other trackers is further increased. FuCoLoT outperforms the second-best MDNet [33] by 30% and the recent long-term state-of-the-art tracker PTAV [15] by 47%. Note that FuCoLoT outperforms all CNN-based trackers using only hand-crafted features, which speaks in favor of the highly efficient architecture.

4.4 Ablation Study

Several modifications of the FuCoLoT detector were tested to expose the contributions of different parts in our architecture. Two variants used the filter extracted at initialization in the detector with a single scale detection (\(\mathrm {FuCoLoT_{D^{0}S^{1}}}\)) and multiple scale detection (\(\mathrm {FuCoLoT_{D^{0}S^{M}}}\)) and one variant used the most recent filter from the short-term tracker in the detector with multiple scale detection (\(\mathrm {FuCoLoT_{D^{ST}S^{M}}}\)). The results are summarized in Table 3.

In single-scale detection, the most recent short-term filter (\(\mathrm {FuCoLoT_{D^{ST}S^{1}}}\)) marginally improves the performance of the (\(\mathrm {FuCoLoT_{D^{0}S^{1}}}\)) and achieves 0.499 AUC. The performance improves to 0.505 AUC by adding multiple scales search in the detector (\(\mathrm {FuCoLoT_{D^{ST}S^{M}}}\)) and further improves to 0.533 AUC when considering filters with variable temporal updating in the detector (\(\mathrm {FuCoLoT}\)). For reference, all FuCoLoT variants significantly outperform the FuCoLOT short-term tracker without our detector, i.e. the CSRDCF [28] tracker.

4.5 Performance on Short-Term Benchmarks

For completeness, we first evaluate the performance of FuCoLoT on the popular short-term benchmarks: OTB100 [40], and VOT2016 [23]. A standard no-reset evaluation (OPE [40]) is applied to focus on long-term behavior: a tracker is initialized in the first frame and left to track until the end of the sequence.

Tracking quality is measured by precision and success plots. The success plot shows all threshold values, the proportion of frames with the overlap between the predicted and ground truth bounding boxes as greater than a threshold. The results are summarized by areas under these plots which are shown in the legend. The precision plots in Figs. 7 and 8 show a similar statistics computed from the center error. The results in the legends are summarized by percentage of frames tracked with an center error less than 20 pixels.

OTB100 [40] benchmark results. The precision plot (left) and the success plot (right).

The benchmarks results have some long-term trackers and the most recent PTAV [15] – the currently best-performing published long-term tracker. Note that PTAV applies preemptive tracking with backtracking and requires future frames to predict position of the tracked object which limits its applicability.

OTB100 [40] contains results of 29 trackers evaluated on 100 sequences with average sequence length of 589 frames. We show only the results for top-performing recent baselines, and recent top-performing state-of-the-art trackers SRDCF [10], MUSTER [19], LCT [30] PTAV [15] and CSRDCF [28].

The FuCoLoT ranks among the top on this benchmark (Fig. 7) outperforming all baselines as well as state-of-the-art SRDCF, CSRDCF and MUSTER. Using only handcrafted features, the FuCoLoT achieves comparable performance to the PTAV [15] which uses deep features for re-detection and backtracking.

VOT2016 [23] benchmark results. The precision plot (left) and the success plot (right).

VOT2016 [23] is a challenging recent short-term tracking benchmark which evaluates 70 trackers on 60 sequences with the average sequence length of 358 frames. The dataset was created using a methodology that selected sequences which are difficult to track, thus the target appearance varies much more than in other benchmarks. In the interest of visibility, we show only top-performing trackers on no-reset evaluation, i.e., SSAT [23, 33], TCNN [23, 33], CCOT [12], MDNetN [23, 33], GGTv2 [13], MLDF [38], DNT [6], DeepSRDCF [10], SiamRN [1] and FCF [23]. We add CSRDCF [28] and the long-term trackers TLD [20], LCT [30], MUSTER [19], CMT [34] and PTAV [15].

The FuCoLoT is ranked fifth (Fig. 8) according to the tracking success measure, outperforming 65 trackers, including trackers with deep features, CSR-DCF and PTAV. Note that four trackers with better performance than FuCoLoT (SSAT, TCNN, CCOT and MDNetN) are computationally very expensive CNN-based trackers. They are optimized for accurate tracking on short sequences, without an ability for re-detection. The FuCoLoT outperforms all long-term trackers on this benchmark (TLD, CMT, LCT, MUSTER and PTAV).

5 Conclusion

A fully-correlational long-term tracker – FuCoLot – was proposed. FuCoLoT is the first long-term tracker that exploits the novel DCF constrained filter learning method [28]. The constrained filter learning based detector is able to re-detect the target in the whole image efficiently. FuCoLoT maintains several correlation filters trained on different time scales that act as the detector components. A novel mechanism based on the correlation response quality is used for tracking uncertainty estimation which drives interaction between the short-term component and the detector.

On the UAV20L long-term benchmark [32] FuCoLoT outperforms the best method by over 19%. Experimental evaluation on short-term benchmarks [23, 40] showed state-of-the-art performance. The Matlab implementation running at 15 fps will be made publicly available.

References

Bertinetto, L., Valmadre, J., Henriques, J.F., Vedaldi, A., Torr, P.H.S.: Fully-convolutional siamese networks for object tracking. In: Hua, G., Jégou, H. (eds.) ECCV 2016. LNCS, vol. 9914, pp. 850–865. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-48881-3_56

Beyer, L., Breuers, S., Kurin, V., Leibe, B.: Towards a principled integration of multi-camera re-identification and tracking through optimal Bayes filters. CoRR abs/1705.04608 (2017)

Bolme, D.S., Beveridge, J.R., Draper, B.A., Lui, Y.M.: Visual object tracking using adaptive correlation filters. In: Computer Vision and Pattern Recognition, pp. 2544–2550 (2010)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

Chang, H.J., Park, M.S., Jeong, H., Choi, J.Y.: Tracking failure detection by imitating human visual perception. In: Proceedings of the International Conference on Image Processing, pp. 3293–3296 (2011)

Chi, Z., Li, H., Lu, H., Yang, M.H.: Dual deep network for visual tracking. IEEE Trans. Image Process. 26(4), 2005–2015 (2017)

Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: Computer Vision and Pattern Recognition, vol. 1, pp. 886–893 (2005)

Danelljan, M., Häger, G., Khan, F.S., Felsberg, M.: Discriminative scale space tracking. IEEE Trans. Pattern Anal. Mach. Intell. 39(8), 1561–1575 (2017)

Danelljan, M., Bhat, G., Shahbaz Khan, F., Felsberg, M.: Eco: efficient convolution operators for tracking. In: Computer Vision and Pattern Recognition, pp. 6638–6646 (2017)

Danelljan, M., Hager, G., Shahbaz Khan, F., Felsberg, M.: Learning spatially regularized correlation filters for visual tracking. In: International Conference on Computer Vision, pp. 4310–4318 (2015)

Danelljan, M., Khan, F.S., Felsberg, M., van de Weijer, J.: Adaptive color attributes for real-time visual tracking, pp. 1090–1097 (2014)

Danelljan, M., Robinson, A., Shahbaz Khan, F., Felsberg, M.: Beyond correlation filters: learning continuous convolution operators for visual tracking. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9909, pp. 472–488. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46454-1_29

Du, D., Qi, H., Wen, L., Tian, Q., Huang, Q., Lyu, S.: Geometric hypergraph learning for visual tracking. IEEE Trans. Cyber. 47(12), 4182–4195 (2017)

Du, D., Wen, L., Qi, H., Huang, Q., Tian, Q., Lyu, S.: Iterative graph seeking for object tracking. IEEE Trans. Image Process. 27(4), 1809–1821 (2018)

Fan, H., Ling, H.: Parallel tracking and verifying: a framework for real-time and high accuracy visual tracking. In: International Conference on Computer Vision, pp. 5486–5494 (2017)

Galoogahi, H.K., Sim, T., Lucey, S.: Multi-channel correlation filters. In: International Conference on Computer Vision, pp. 3072–3079 (2013)

Grabner, H., Leistner, C., Bischof, H.: Semi-supervised on-line boosting for robust tracking. In: Forsyth, D., Torr, P., Zisserman, A. (eds.) ECCV 2008. LNCS, vol. 5302, pp. 234–247. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-88682-2_19

Henriques, J.F., Caseiro, R., Martins, P., Batista, J.: High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 37(3), 583–596 (2015)

Hong, Z., Chen, Z., Wang, C., Mei, X., Prokhorov, D., Tao, D.: Multi-store tracker (muster): a cognitive psychology inspired approach to object tracking. In: Computer Vision and Pattern Recognition, pp. 749–758, June 2015

Kalal, Z., Mikolajczyk, K., Matas, J.: Tracking-learning-detection. IEEE Trans. Pattern Anal. Mach. Intell. 34(7), 1409–1422 (2012)

Kiani Galoogahi, H., Sim, T., Lucey, S.: Correlation filters with limited boundaries. In: Computer Vision and Pattern Recognition, pp. 4630–4638 (2015)

Kristan, M., et al.: A novel performance evaluation methodology for single-target trackers. IEEE Trans. Pattern Anal. Mach. Intell. 38, 2137 (2016)

Kristan, M., et al.: A novel performance evaluation methodology for single-target trackers. In: Proceedings of the European Conference on Computer Vision (2016)

Kristan, M., et al.: The visual object tracking vot2015 challenge results. In: International Conference on Computer Vision (2015)

Kristan, M., Perš, J., Sulič, V., Kovačič, S.: A graphical model for rapid obstacle image-map estimation from unmanned surface vehicles. In: Cremers, D., Reid, I., Saito, H., Yang, M.-H. (eds.) ACCV 2014. LNCS, vol. 9004, pp. 391–406. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-16808-1_27

Kwak, S., Nam, W., Han, B., Han, J.H.: Learning occlusion with likelihoods for visual tracking. In: International Conference on Computer Vision, pp. 1551–1558 (2011)

Li, Y., Zhu, J.: A scale adaptive kernel correlation filter tracker with feature integration. In: Agapito, L., Bronstein, M.M., Rother, C. (eds.) ECCV 2014. LNCS, vol. 8926, pp. 254–265. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-16181-5_18

Lukežič., Vojíř, T., Čehovin Zajc, L., Matas, J., Kristan, M.: Discriminative correlation filter with channel and spatial reliability. In: Computer Vision and Pattern Recognition, pp. 6309–6318 (2017)

Ma, C., Huang, J.B., Yang, X., Yang, M.H.: Adaptive correlation filters with long-term and short-term memory for object tracking. Int. J. Comput. Vis. 126(8), 771–796 (2018)

Ma, C., Yang, X., Zhang, C., Yang, M.H.: Long-term correlation tracking. In: Computer Vision and Pattern Recognition, pp. 5388–5396 (2015)

Maresca, M.E., Petrosino, A.: MATRIOSKA: a multi-level approach to fast tracking by learning. In: Petrosino, A. (ed.) ICIAP 2013. LNCS, vol. 8157, pp. 419–428. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-41184-7_43

Mueller, M., Smith, N., Ghanem, B.: A benchmark and simulator for UAV tracking. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9905, pp. 445–461. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46448-0_27

Nam, H., Han, B.: Learning multi-domain convolutional neural networks for visual tracking. In: Computer Vision and Pattern Recognition, pp. 4293–4302, June 2016

Nebehay, G., Pflugfelder, R.: Clustering of static-adaptive correspondences for deformable object tracking. In: Computer Vision and Pattern Recognition, pp. 2784–2791 (2015)

Pernici, F., Del Bimbo, A.: Object tracking by oversampling local features. IEEE Trans. Pattern Anal. Mach. Intell. 36(12), 2538–2551 (2013)

Tao, R., Gavves, E., Smeulders, A.W.M.: Siamese instance search for tracking. In: Computer Vision and Pattern Recognition, pp. 1420–1429 (2016)

Valmadre, J., Bertinetto, L., Henriques, J., Vedaldi, A., Torr, P.H.S.: End-to-end representation learning for correlation filter based tracking. In: Computer Vision and Pattern Recognition, pp. 2805–2813 (2017)

Wang, L., Ouyang, W., Wang, X., Lu, H.: Visual tracking with fully convolutional networks. In: International Conference on Computer Vision, pp. 3119–3127, December 2015

van de Weijer, J., Schmid, C., Verbeek, J., Larlus, D.: Learning color names for real-world applications. IEEE Trans. Image Process. 18(7), 1512–1523 (2009)

Wu, Y., Lim, J., Yang, M.H.: Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 37(9), 1834–1848 (2015)

Acknowledgement

This work was partly supported by the following research programs and projects: Slovenian research agency research programs and projects P2-0214 and J2-8175. Jiří Matas and Tomáš Vojíř were supported by The Czech Science Foundation Project GACR P103/12/G084 and Toyota Motor Europe.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Lukežič, A., Zajc, L.Č., Vojíř, T., Matas, J., Kristan, M. (2019). FuCoLoT – A Fully-Correlational Long-Term Tracker. In: Jawahar, C., Li, H., Mori, G., Schindler, K. (eds) Computer Vision – ACCV 2018. ACCV 2018. Lecture Notes in Computer Science(), vol 11362. Springer, Cham. https://doi.org/10.1007/978-3-030-20890-5_38

Download citation

DOI: https://doi.org/10.1007/978-3-030-20890-5_38

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20889-9

Online ISBN: 978-3-030-20890-5

eBook Packages: Computer ScienceComputer Science (R0)