Abstract

Computational thinking (CT) is a term widely used to describe algorithmic thinking and logical reasoning concepts and processes, often related to computer programming. As such, CT as a cognitive ability builds on concepts and processes that derive from computer programming but are applicable to wider real-life problems and STEM domains. CT has recently been argued to be a fundamental skill for 21st-century education and an early indicator of academic success that should be introduced and trained as early as primary school. Accordingly, we developed three life-size board games, Crabs & Turtles: A Series of Computational Adventures, that aim to provide an unplugged, gamified, and low-threshold introduction to CT by presenting basic coding concepts and computational thinking processes to 8- to 9-year-old primary school children. For the design and development of these educational board games, we followed a rapid prototyping approach. In the current study, we report an empirical evaluation of the game experience of our educational board games with students of the target age group. In particular, we quantitatively analyzed the player experience of primary school student participants. Results indicate an overall positive game experience for all three board games. Future studies are planned to further evaluate learning outcomes in educational interventions with children.

1 Introduction

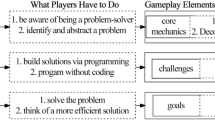

Computational Thinking (CT) denotes the mental ability to create a computational solution to a problem by first decomposing it and then developing a structured, algorithmic solution procedure [1, 2]. CT as a cognitive ability is argued to reflect the application of fundamental concepts and reasoning processes that derive from computer science and informatics to wider everyday activities and problems, but also to STEM (Science, Technology, Engineering, and Mathematics) domains [3]. The construct of CT as a cognitive ability shares common concepts with computer programming as a practical skill. Central concepts in computer programming are sequences, operators, data/variables, conditionals, events, loops, and parallelism [4]. Correspondingly, CT draws on processes such as decomposition, algorithmic thinking, conditional logic, pattern recognition, evaluation, abstraction, and generalization, which reflect cognitive counterparts of these central programming concepts [2, 5].

CT, as a rather general problem-solving strategy applicable to different domains, has been identified as a fundamental 21st-century skill [1]. It has been suggested that instruction in CT concepts may improve students’ analytical skills and provide an early indication and prediction of academic success [6]. Therefore, CT is considered a key competence for everyone, not just for computer scientists [1], comparable to literacy and numeracy [7], and one that should be taught and acquired early in education.

Recent research has focused on the benefits of CT and its integration into educational curricula, which has led to several adaptations and reforms of educational programs at all levels of education worldwide [8, 9]. Educational initiatives and governmental institutions all over the world have been working on integrating CT into the curricula of primary, secondary, and higher education [10,11,12,13].

The societal relevance of CT led us to design and develop a CT training course for primary school children that introduces computer programming concepts and CT processes, applied to different STEAM (Science, Technology, Engineering, Art, and Mathematics) domains (for information on the overall course structure, see [14]). Importantly, to offer a low-threshold introduction to CT that utilizes embodied learning [15], we developed the unplugged life-size board games Crabs & Turtles: A Series of Computational Adventures (for a more detailed description of the games, see [16]) for our CT training course.

Crabs & Turtles shares common ideas with Papert’s educational Logo Turtle [17] and Logo-inspired gamified educational activities [18]. The Logo Turtle transferred programming-like commands and algorithms to the real world by applying them, for the first time, to an observable, moving, haptic object: the Turtle. The unplugged life-size game design allows embodied training of simple computational concepts (for the concept of embodied cognition, see [15]) and encourages active engagement and participation of students (for an overview, see [19]). The games’ target group is primary and secondary school students (8–12 years old) with no prior programming knowledge. We deliberately chose an unplugged format, taking into account common concerns regarding the introduction of computer programming to young children [20, 21]. The unplugged format fosters the understanding that CT processes do not occur only within digital contexts but have a wider application in real-life problem solving.

The design and development of the games followed an iterative, user-centered process [22]. More specifically, we first tested initial design ideas with a custom-made life-size game in a pilot educational intervention with primary school children [23]. Later on, we developed an early prototype and tested its usability with primary school students during a short workshop session. After integrating feedback from both previous stages, we quantitatively examined users’ game experience in an adult sample to ensure the games’ appropriateness for children before evaluating them with the target age group [16]. Feedback from this study was integrated again, resulting in the latest version of the games. The game experience of this final version was then evaluated in the target age group; the results of this evaluation are reported in the current article.

2 Games Description

Crabs & Turtles [16] consists of three different games: i. The Treasure Hunt, ii. The Race, and iii. Patterns. The games are designed for children at primary school level, focusing specifically on 3rd and 4th graders, and are intended to be used as integrated educational interventions in the classroom. Teachers play a central role in their implementation by acting as the game master in all three games, which can be played independently of each other and in any order. The games aim to introduce and train CT-related processes such as abstraction, algorithms, decomposition, evaluation, and patterns. In particular, they focus on mathematical (i.e., addition, multiplication, subtraction, and angular degrees) and coding (i.e., conditionals, constants and variables, events, loops, operators, and sequences) concepts related to those processes.

The Treasure Hunt (see Fig. 1) is the first game of Crabs & Turtles. Playing in teams of two, players strategically move their pawn (either a crab or a turtle) on a grid board to collect food treasure items for it. To do so, each team has to efficiently build sequences of instructions from specific command cards that move its pawn across the board to gather treasures. Teams also need to obey rules and movement restrictions imposed by the environment. For example, crab pawns can only move across water or stone tiles, and turtle pawns only across grass or stone tiles. The main learning objective of the game is a general introduction to algorithmic thinking and sequential problem solving, as well as the consideration of restrictions and the use of simple conditional orders. Coding concepts explicitly addressed in this game are sequences and loops. Players are awarded badges for successfully applying coding concepts during the game (e.g. loop badge, sequence badge, etc.). Along with coding concepts, students become familiar with handling angular degrees in spatial orientation. The winner of the game is the team that first collects a specific number of food treasure items.
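To make the interplay of command sequences, loops, angular turns, and tile restrictions concrete, the following Python sketch simulates a pawn executing a sequence of command cards. The card names (`FORWARD`, `LEFT`, `RIGHT`, and a loop card written as `REPEAT n CARD`), the 90-degree turns, and the letter encoding of the board (`W` = water, `G` = grass, `S` = stone) are assumptions for illustration, not the actual card set of The Treasure Hunt.

```python
from typing import Dict, List, Set, Tuple

# Assumed encoding: headings in degrees clockwise from north (up);
# x grows to the right, y grows downwards (row index).
STEP: Dict[int, Tuple[int, int]] = {0: (0, -1), 90: (1, 0), 180: (0, 1), 270: (-1, 0)}

# Movement restriction from the text: crabs on water or stone, turtles on grass or stone.
ALLOWED: Dict[str, Set[str]] = {"crab": {"W", "S"}, "turtle": {"G", "S"}}


def expand(cards: List[str]) -> List[str]:
    """Unroll hypothetical loop cards 'REPEAT n CARD' into a flat sequence."""
    flat: List[str] = []
    for card in cards:
        parts = card.split()
        if parts[0] == "REPEAT":
            flat.extend([parts[2]] * int(parts[1]))
        else:
            flat.append(card)
    return flat


def run(board: List[str], pawn: str, start: Tuple[int, int], heading: int,
        cards: List[str]) -> Tuple[int, int]:
    """Execute a card sequence and return the pawn's final (x, y) position."""
    x, y = start
    for card in expand(cards):
        if card == "RIGHT":
            heading = (heading + 90) % 360
        elif card == "LEFT":
            heading = (heading - 90) % 360
        elif card == "FORWARD":
            dx, dy = STEP[heading]
            nx, ny = x + dx, y + dy
            on_board = 0 <= ny < len(board) and 0 <= nx < len(board[ny])
            # Conditional check: the target tile must exist and suit the pawn.
            if not on_board or board[ny][nx] not in ALLOWED[pawn]:
                raise ValueError(f"{pawn} cannot move to {(nx, ny)}")
            x, y = nx, ny
        else:
            raise ValueError(f"unknown card {card!r}")
    return x, y
```

For example, on a hypothetical board `["WWSG", "GWSG", "GGSS"]`, a crab starting at the top-left corner facing east ends at `(2, 2)` after the cards `REPEAT 2 FORWARD`, `RIGHT`, `REPEAT 2 FORWARD`, whereas a turtle asked to step onto a water tile is stopped by the restriction check.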

The Race (see Fig. 2) is the second game of Crabs & Turtles. In this game, players in teams of two have to reach the end of the game board by solving math-related riddles and handling the changing values of variables (e.g. increasing or decreasing values). This game specifically focuses on coding concepts related to mathematics. In particular, the coding concepts explicitly addressed in this game are constants and variables, conditionals, events, and operators. During the game, players are awarded badges for their achievements, such as a variable badge or an addition badge. The mathematical abilities trained in the game are addition, subtraction, and multiplication. Accordingly, the riddles of the game consist of equations involving mathematical operations and variables. The winner of the game is the team that first reaches the end of the race in the center of the game board.
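The coding concepts that The Race targets (constants and variables, events, conditionals, and operators) can be illustrated with a short Python sketch in which event cards update a position variable. The finish position, the event names, and the clamping rule are assumptions for illustration; the riddles of the actual game are not specified at this level of detail here.

```python
from typing import List, Tuple

# Illustrative sketch only: FINISH, the event names, and the clamping rule
# are hypothetical and not taken from The Race's actual riddle cards.
FINISH = 20  # constant: assumed position of the finish at the board's center


def apply_event(position: int, event: str, value: int) -> int:
    """Return the new value of the position variable after an event card."""
    # Operators: each event applies one arithmetic operation to the variable.
    if event == "add":
        position = position + value
    elif event == "subtract":
        position = position - value
    elif event == "multiply":
        position = position * value
    else:
        raise ValueError(f"unknown event {event!r}")
    # Conditional: keep the variable within the board (0 to FINISH).
    return max(0, min(position, FINISH))


def play(start: int, events: List[Tuple[str, int]]) -> int:
    """Apply a sequence of event cards to the starting position."""
    position = start
    for event, value in events:
        position = apply_event(position, event, value)
    return position
```

For instance, starting at position 3, the events multiply by 2, add 5, and subtract 4 yield 3 × 2 + 5 − 4 = 7.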

Patterns (see Fig. 3) is the third game of Crabs & Turtles. In this game, children play individually, trying to match two types of cards as fast as possible based on the visual patterns depicted on them. To do so, they have to read color codes, recognize patterns, and follow specific restrictions. The color codes consist of colors, a shape, and an arrow that indicates the order in which the color code is read (see Fig. 3, left). The patterns consist of colorful shapes depicting a star, a square, a circle, and a triangle (see Fig. 3, right). The order and the colors of the shapes differ on each card, so that each card matches only one specific color code. The main learning objective of the game is an introduction to the concept of patterns by identifying color and shape patterns. The winner of the game is the player who collects the most cards.
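The matching step in Patterns amounts to reading a sequence in a prescribed order and comparing it with candidate patterns. The Python sketch below illustrates this under simplifying assumptions that are ours, not the game’s: a color code is a list of colors plus an arrow that is either `"right"` or `"left"`, a pattern card is a left-to-right list of (shape, color) pairs, and a card matches when its colors equal the code read in arrow order. The shape printed on the color code is ignored here for brevity.

```python
from typing import List, Sequence, Tuple

Card = List[Tuple[str, str]]  # hypothetical layout: (shape, color) pairs, left to right


def read_code(colors: Sequence[str], arrow: str) -> List[str]:
    """Return the color code's colors in the reading order given by its arrow."""
    if arrow == "right":
        return list(colors)
    if arrow == "left":
        return list(reversed(colors))
    raise ValueError(f"unknown arrow direction {arrow!r}")


def find_match(colors: Sequence[str], arrow: str, cards: List[Card]) -> int:
    """Return the index of the single pattern card that matches the color code."""
    target = read_code(colors, arrow)
    matches = [i for i, card in enumerate(cards)
               if [color for _shape, color in card] == target]
    # Each color code is assumed to match exactly one card, as described for the game.
    if len(matches) != 1:
        raise ValueError(f"expected exactly one match, found {len(matches)}")
    return matches[0]
```

Reading the same code in the opposite direction selects a different card, which is why the arrow matters as much as the colors themselves.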

3 Evaluation

After a successful two-phase user test evaluation with adult participants [16], we moved on to evaluating the games with primary school children, the actual target group of the games. In our 45-minute gaming sessions, the main focus was on assessing game experience quantitatively to identify potential dysfunctionalities during game play, which can then be addressed before integrating the games into our CT course and evaluating their educational potential. To validate the design approach, we included participants from different primary school grades. The creators of the games acted as instructors and game masters in these sessions.

3.1 Participants

We collected data from 79 primary school students aged 8 to 12 years from 6 different schools in Greece and Germany. Because more than 10% of the items were missing, we excluded the data of 9 participants from further analysis. For another 4 participants who completed more than one game, we had to exclude some of their questionnaires for specific games because responses were missing due to local organizational issues. When fewer items were missing, each missing value was replaced by the mean score of the respective item computed from the other participants. Thus, data from a final sample of 70 participants were considered in the analyses (age in years: mean = 9.44, SD = 0.845; male: 42, female: 20, not indicated: 8).
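The exclusion and imputation rules described above can be expressed compactly. The following Python sketch assumes that each participant’s responses are stored as a list with `None` for missing items and that item means are computed from the retained participants only; both the data layout and the function name `clean` are hypothetical.

```python
from typing import List, Optional

Row = List[Optional[float]]  # one participant's item ratings; None = missing


def clean(responses: List[Row], max_missing: float = 0.10) -> List[List[float]]:
    """Exclude participants with too many missing items, then impute item means."""
    n_items = len(responses[0])
    # Exclusion: drop anyone missing more than max_missing of the items.
    kept = [r for r in responses
            if sum(v is None for v in r) / n_items <= max_missing]
    # Item means are computed from the retained participants who answered the item.
    means = []
    for j in range(n_items):
        answered = [r[j] for r in kept if r[j] is not None]
        if not answered:
            raise ValueError(f"item {j} has no responses to impute from")
        means.append(sum(answered) / len(answered))
    # Imputation: replace each remaining gap with the respective item mean.
    return [[means[j] if r[j] is None else r[j] for j in range(n_items)]
            for r in kept]
```

With 10 items, a participant missing one item (10%) is kept and imputed, while one missing two items (20%) is excluded.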

3.2 Procedure and Materials

In separate teaching sessions, we evaluated the game experience of primary school students. Most participants played all 3 games of Crabs & Turtles. Before participants started playing each game, we provided oral and visual instructions. After playing each game, participants were asked to complete the Game Experience Questionnaire (henceforth GEQ) [24]. We used Greek and German translations of the Core module (33 items) to assess overall game experience. The Core module consists of seven subscales addressing i. Immersion, ii. Flow, iii. Competence, iv. Positive Affect, v. Negative Affect, vi. Tension, and vii. Challenge. For each subscale, we used the average score of the respective items as the dependent variable in our analyses. Each item was rated on a 5-point Likert scale (1 = not at all; 2 = slightly; 3 = moderately; 4 = fairly; 5 = extremely). For example, the fourth item of the Core module reads “I felt happy”, and participants rated how strongly they experienced this feeling on the aforementioned Likert scale by crossing an answer from 1 to 5 (e.g. crossing 4 would mean “I felt fairly happy”).
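Scoring each GEQ subscale as the average of its items can be sketched in Python as follows. The item-to-subscale mapping below is a hypothetical placeholder covering only three subscales and six items; the actual assignment of the 33 Core items to the seven subscales should be taken from the GEQ documentation [24].

```python
from statistics import mean
from typing import Dict, List

# Hypothetical item-to-subscale mapping (1-based item numbers) for illustration
# only; it is NOT the real GEQ Core assignment, which is given in [24].
SUBSCALES: Dict[str, List[int]] = {
    "Competence": [1, 2],
    "Positive Affect": [3, 4],
    "Tension": [5, 6],
}


def score(responses: List[float],
          subscales: Dict[str, List[int]] = SUBSCALES) -> Dict[str, float]:
    """Average the 1-5 Likert ratings of each subscale's items."""
    for r in responses:
        if not 1 <= r <= 5:
            raise ValueError(f"rating {r} outside the 1-5 scale")
    return {name: mean(responses[i - 1] for i in items)
            for name, items in subscales.items()}
```

The resulting per-participant subscale scores are the values that then enter the per-subscale tests.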

Furthermore, we used 4 additional items, also rated on a 5-point Likert scale, to further evaluate overall game experience: Q1. I would explain my experience as playing; Q2. I would explain my experience as learning (Q1 & Q2: 1 = not at all; 2 = not really; 3 = undecided; 4 = somewhat; 5 = very much); Q3. I would recommend the games to a friend; Q4. I would like to play the games again in the future (Q3 & Q4: 1 = not at all; 2 = not really; 3 = undecided; 4 = likely; 5 = very likely). We added these 4 items to measure whether the games were experienced as learning and/or playing, because the GEQ evaluates game experience in general rather than game experience in educational games in particular.

Finally, to evaluate specific design elements of The Treasure Hunt and The Race, namely boards, cards, game pieces, inventory items, and rules, we used 5 more items (e.g. Q: How much did you like the inventory items?), again rated on a 5-point Likert scale (1 = not at all; 2 = slightly; 3 = moderately; 4 = fairly; 5 = extremely). For Patterns, only cards and rules were evaluated.

3.3 Results

The questionnaires were analyzed separately for each game; the results are presented in the following three subsections. We used a conservative approach, analyzing each subscale of the GEQ with a one-sample t-test that compared the mean subscale rating to the middle value (3 = mediocre) of the 5-point Likert scale. The internal consistency (Cronbach’s alpha) of the GEQ as reported by [24] is presented in Table 1 (column α*). In addition, Cronbach’s alpha as obtained in the current sample is reported in Table 1 (column α). The observed internal consistency indicated acceptable reliability (α > .70) for most subscales. However, this was not the case for the subscales Tension/Annoyance (games 2 and 3), Challenge (games 1, 2, and 3), and Negative Affect (game 1). For the analyses of overall game experience and of the specific design elements, we again ran t-tests against the middle of the respective scale. Descriptive results and inferential statistics for the GEQ subscales are summarized in Table 1 and Fig. 4.
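Both statistics used here have short closed forms. The one-sample t statistic against the scale midpoint is t = (M − 3) / (SD / √n) with n − 1 degrees of freedom, and Cronbach’s alpha for k items is α = k / (k − 1) · (1 − sum of the item variances / variance of the participants’ total scores). The Python sketch below implements both with the standard library; the function names and the data layout are our own assumptions.

```python
import math
from statistics import mean, stdev, variance
from typing import List, Tuple


def one_sample_t(ratings: List[float], mu: float = 3.0) -> Tuple[float, int]:
    """Return (t, df) for testing whether the mean rating differs from mu."""
    n = len(ratings)
    standard_error = stdev(ratings) / math.sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(ratings) - mu) / standard_error, n - 1


def cronbach_alpha(items: List[List[float]]) -> float:
    """items[j][i] is participant i's rating on item j of one subscale."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-participant sum scores
    item_variance = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))
```

For example, `one_sample_t([4, 5, 3, 4, 4])` gives t = √10 ≈ 3.16 with 4 degrees of freedom. A two-sided p-value additionally requires the t distribution; with SciPy, `scipy.stats.ttest_1samp(ratings, 3)` returns both the statistic and the p-value.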

Fig. 4. Students’ ratings of the GEQ subscales for each of the three games. The y-axes show mean ratings for each GEQ subscale; the x-axes list the subscales (Comp = Competence; Immersion = Sensory & Imaginative Immersion; Flow = Flow; Tension = Tension/Annoyance; Challenge = Challenge; NegAff = Negative Affect; PosAff = Positive Affect). Error bars depict 1 standard error of the mean.

3.3.1 The Treasure Hunt

Game Experience. Participants rated this game significantly above mediocre on the subscales Competence, Sensory & Imaginative Immersion, and Positive Affect. In contrast, ratings were significantly below mediocre for the subscales Tension/Annoyance, Challenge, and Negative Affect. We did not find a significant difference from mediocre for Flow (see Table 1).

Overall Experience. Ratings significantly above mediocre indicated that participants experienced The Treasure Hunt somewhat as playing (Q1: mean = 4.22, SD = 1.10; t(17) = 6.29, p < 0.001), whereas ratings for experiencing it as a learning activity did not differ from mediocre (Q2: mean = 2.98, SD = 1.51; t(17) = −0.09, p = 0.929). Additionally, ratings significantly above mediocre indicated that participants would likely recommend the game to a friend (Q3: mean = 3.74, SD = 1.37; t(17) = 3.05, p = 0.005) and would likely play it again in the future (Q4: mean = 4.29, SD = 1.08; t(17) = 6.71, p < 0.001).

Design Elements’ Evaluation. The design elements of The Treasure Hunt scored a mean of 4.13 (SD = 1.02) on the 5-point Likert scale. More specifically, users rated all five design elements of The Treasure Hunt (Board: mean = 4.09, SD = 1.17, t(17) = 5.27, p < 0.001; Cards: mean = 3.88, SD = 1.24, t(17) = 4.00, p < 0.001; Game Pieces: mean = 4.50, SD = .84, t(17) = 10.07, p < 0.001; Inventory items: mean = 4.16, SD = 1.17, t(17) = 5.61, p < 0.001 and Rules: mean = 4.02, SD = 1.35, t(17) = 4.27, p < 0.001) significantly above mediocre.

3.3.2 The Race

Game Experience. Participants’ ratings for this game were significantly above mediocre for the Competence, Sensory & Imaginative Immersion, and Positive Affect subscales of the GEQ Core module. In contrast, participants rated the game significantly below mediocre on the subscales Tension/Annoyance, Challenge, and Negative Affect. Ratings for the Flow subscale did not differ significantly from mediocre.

Overall Experience. For The Race, ratings significantly above mediocre indicated that participants experienced the game somewhat as playing (Q1: mean = 4.16, SD = 1.03; t(16) = 5.64, p < 0.001), but not as a learning activity, for which ratings did not differ significantly from mediocre (Q2: mean = 3.21, SD = 1.41; t(17) = 0.74, p = 0.467). Furthermore, ratings significantly above mediocre reflected that participants would likely recommend the game to a friend (Q3: mean = 3.91, SD = 1.12; t(16) = 4.09, p < 0.001) and would likely play it again in the future (Q4: mean = 4.09, SD = 1.15; t(16) = 4.72, p < 0.001).

Design Elements’ Evaluation. Overall, the design elements of The Race were rated positively, with a mean of 4.02 (SD = 1.01). More specifically, ratings significantly above mediocre reflected that participants liked all five design elements (Board: mean = 4.08, SD = 1.08, t(16) = 5.03, p < 0.001; Cards: mean = 3.75, SD = 1.20, t(16) = 3.13, p = 0.005; Game pieces: mean = 4.42, SD = 1.08, t(16) = 6.58, p < 0.001; Inventory items: mean = 3.91, SD = 1.22, t(16) = 3.72, p = 0.001; and Rules: mean = 3.96, SD = 1.27, t(16) = 3.76, p = 0.001).

3.3.3 Patterns

Game Experience. Similar to the other two games, participants’ ratings for Patterns were significantly above mediocre for the Competence, Sensory & Imaginative Immersion, and Positive Affect subscales of the GEQ Core module. Again, ratings for the Tension/Annoyance, Challenge, and Negative Affect subscales were significantly below mediocre, and ratings for the Flow subscale did not differ significantly from mediocre.

Overall Experience. Ratings significantly above mediocre indicated that participants perceived Patterns somewhat as a playing experience (Q1: mean = 3.93, SD = 1.24; t(15) = 4.92, p < 0.001), and ratings marginally above mediocre indicated that they also perceived it as a learning activity (Q2: mean = 3.42, SD = 1.43; t(17) = 1.94, p = 0.059). Moreover, ratings significantly above mediocre indicated that participants would likely recommend the game to a friend (Q3: mean = 3.74, SD = 1.29; t(15) = 3.75, p = 0.001) and play it again (Q4: mean = 4.10, SD = 1.30; t(15) = 5.58, p < 0.001).

Design Elements’ Evaluation. The design elements in Patterns scored a mean of 3.98 (SD = 1.01) on the 5-point Likert scale. The two design elements in the questionnaire for Patterns scored positively as indicated by ratings significantly above mediocre (Cards: mean = 4.00, SD = 1.05, t(15) = 6.27, p < 0.001; and Rules: mean = 3.95, SD = 1.09, t(15) = 5.72, p < 0.001).

4 Discussion and Future Work

The present study aimed at evaluating the game experience of primary school students in the three games of Crabs & Turtles and thus complements a previous evaluation of game experience in adults [16]. After evaluating the game experience in adults and gathering overall positive results and valuable feedback, we completed the design of the final prototype of our games and tested user experience in the actual target group. We play-tested the games and collected data using the GEQ. Quantitative analyses of the users’ game experience provided promising results regarding the validity of our approach.

Student participants rated their game experience after playing each game. Results indicated an overall positive reception of the games. In particular, students reported feeling competent and immersed while playing all three games, as well as experiencing positive affect. On the other hand, overall challenge was rated low. Importantly, tension and negative affect ratings were also low for all three games. In addition, all three games of Crabs & Turtles were experienced as a playing activity, and students would likely be willing to play all three of them again and to recommend them to their friends. The design elements of each game were also rated very positively. In summary, this indicates that we managed to implement CT concepts in three gaming activities while achieving an overall positive game experience in children. However, the actual educational value of each game needs to be investigated comprehensively and evaluated empirically in separate studies, which are currently being conducted in Germany.

The main aim of this study was the quantitative evaluation of primary school students’ game experience in Crabs & Turtles, extending a previous evaluation in adults [16]. Having replicated the overall positive evaluation of game experience in the target group, we can now proceed to a comprehensive evaluation of the cognitive and educational benefits of playing the games. Although the overall results were positive, the rather low scores for challenge and flow in all three games may not be optimal. Therefore, we plan to provide a set of game instructions with multiple adaptations. For example, we will facilitate the selection of difficulty levels based on the number of players, so that the game adapts to classroom conditions (e.g. few or many students) and to students’ understanding of the game (e.g. if the game is well understood and game play seems easy, rules could become gradually more challenging during play). We also plan to introduce a more challenging game mechanic in The Race that fosters competition between the teams in every round by letting all teams try to solve each riddle as fast as possible.

Future studies are planned with primary school students of 3rd and 4th grade to evaluate learning outcomes of the three games and their educational effectiveness on training CT-related skills. The three games, as part of a structured curriculum dedicated to training CT [14], will be evaluated through a pre-/post-test study design using a randomized field trial with a control group in 20 Hector Children’s Academies in Baden-Wuerttemberg, Germany. Moreover, this forthcoming evaluation will aim at investigating cognitive abilities underlying CT and possible transfer effects of the course, using standardized cognitive tests to allow a diverse approach and definition of CT. Finally, we aim at developing digital versions of our board games to allow for individual dynamic adaptation of – for instance – the difficulty of the games.

References

1. Wing, J.M.: Computational thinking. Commun. ACM 49(3), 33 (2006)

2. Wing, J.M.: Computational Thinking: What and Why? The Link - The Magazine of the Carnegie Mellon University School of Computer Science (2010)

3. Wang, P.S.: From Computing to Computational Thinking, 1st edn. Chapman and Hall/CRC, New York (2015)

4. Brennan, K., Resnick, M.: New frameworks for studying and assessing the development of computational thinking. In: Annual American Educational Research Association Meeting, Vancouver, BC, Canada (2012)

5. Astrachan, O., Briggs, A.: The CS principles project. ACM Inroads 3(2), 38 (2012)

6. Haddad, R.J., Kalaani, Y.: Can computational thinking predict academic performance? In: Integrated STEM Education Conference Proceedings. IEEE (2015)

7. Yadav, A., Mayfield, C., Zhou, N., Hambrusch, S., Korb, J.T.: Computational thinking in elementary and secondary teacher education. ACM Trans. Comput. Educ. 14(1), 5 (2014)

8. Tuomi, P., Multisilta, J., Saarikoski, P., Suominen, J.: Coding skills as a success factor for a society. Educ. Inf. Technol. 23(1), 419 (2018)

9. Brown, N.C.C., Sentance, S., Crick, T., Humphreys, S.: Restart: the resurgence of computer science in UK schools. ACM Trans. Comput. Educ. 14(2), 9 (2014)

10. European School Network Homepage: https://www.esnetwork.eu/. Accessed 6 May 2018

11. European Coding Initiative Homepage: http://www.allyouneediscode.eu/. Accessed 6 May 2018

12. Code.org Homepage: https://code.org. Accessed 6 May 2018

13. National Science Foundation Homepage: https://www.nsf.gov/. Accessed 6 May 2018

14. Tsarava, K., Moeller, K., Pinkwart, N., Butz, M., Trautwein, U., Ninaus, M.: Training computational thinking: game-based unplugged and plugged-in activities in primary school. In: 11th European Conference on Games Based Learning Proceedings, ECGBL, ACPI, UK (2017)

15. Barsalou, L.W.: Grounded cognition. Ann. Rev. Psychol. 59, 617 (2008)

16. Tsarava, K., Moeller, K., Ninaus, M.: Training computational thinking through board games: the case of Crabs & Turtles. Int. J. Serious Games 5(2), 25–44 (2018)

17. Papert, S.: Logo Philosophy and Implementation (1999)

18. Papert, S., Solomon, C.: Twenty Things To Do With a Computer (1971)

19. Echeverría, A., et al.: A framework for the design and integration of collaborative classroom games. Comput. Educ. 57(1), 1127 (2011)

20. Grover, S., Pea, R.: Computational thinking in K-12: a review of the state of the field. Educ. Res. 42(1), 38 (2013)

21. Pea, R.D., Kurland, D.M.: On the cognitive effects of learning computer programming. New Ideas Psychol. 2(2), 137 (1984)

22. Fullerton, T.: Game Design Workshop: A Playcentric Approach to Creating Innovative Games. CRC Press, Boca Raton (2008)

23. Tsarava, K.: Programming in Greek with Python. Aristotle University of Thessaloniki (2016)

24. Poels, K., de Kort, Y.A.M., IJsselsteijn, W.A.: D3.3: Game experience questionnaire: development of a self-report measure to assess the psychological impact of digital games. Technische Universiteit Eindhoven, Eindhoven (2007)

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Tsarava, K., Moeller, K., Ninaus, M. (2019). Board Games for Training Computational Thinking. In: Gentile, M., Allegra, M., Söbke, H. (eds) Games and Learning Alliance. GALA 2018. Lecture Notes in Computer Science(), vol 11385. Springer, Cham. https://doi.org/10.1007/978-3-030-11548-7_9

DOI: https://doi.org/10.1007/978-3-030-11548-7_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-11547-0

Online ISBN: 978-3-030-11548-7

eBook Packages: Computer Science, Computer Science (R0)