Abstract

We propose a new numerical method for fractional ordinary differential equation systems based on a judiciously chosen quadrature point. The proposed method is efficient and easy to implement. We show that the convergence order of the method is 2. Numerical results are presented to demonstrate that the computed rates of convergence confirm our theoretical findings.



1 Introduction

We consider the following system of fractional ordinary differential equations:

\[ {_0 D^{\alpha }_t x(t)} = f(t, x(t)), \quad t \in (0, T], \qquad x(0) = x^0, \tag{1} \]

where \(x(t)=(x_1(t), x_2(t), \ldots , x_n(t))^\top \in \mathbb {R}^n\) for a positive integer n, \(T>0\) is a fixed constant, \(f : \mathbb {R}^{n+1} \to \mathbb {R}^n\) is a given mapping, \(x^0 \in \mathbb {R}^n\) is a given initial condition, and \( {_0 D^{\alpha }_t x(t)}=({_0 D^{\alpha _1}_t x_1(t)}, {_0D^{\alpha _2}_t x_2(t)}, \ldots , {_0 D^{\alpha _n}_t x_n(t)})^\top \) with \(\alpha _i \in (0,1)\), where \( {_0 D^{\alpha _i}_tx_i(t)}\) denotes the Caputo derivative of order \(\alpha _i\):

\[ {_0 D^{\alpha _i}_t x_i(t)} = \frac{1}{\varGamma (1-\alpha _i)} \int _0^t \frac{x_i'(\tau )}{(t-\tau )^{\alpha _i}}\, d\tau \]

for \(t > 0\) and \(i=1,2,\ldots ,n\), where \(\varGamma (\cdot )\) denotes the Gamma function.
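The Caputo derivative can be checked numerically against the standard closed form \({_0 D^{\alpha }_t}\, t^\beta = \frac{\varGamma (\beta +1)}{\varGamma (\beta +1-\alpha )} t^{\beta -\alpha }\) for \(\beta >0\). The sketch below is illustrative only and is not part of the proposed method: the helper name `caputo_derivative` is hypothetical, and it assumes x'(t) is available as a callable. It removes the weak singularity of the kernel with the substitution \(u=(t-\tau )^{1-\alpha }\) and then applies a composite midpoint rule.

```python
import math


def caputo_derivative(dx, t, alpha, n=2000):
    """Approximate the Caputo derivative of order alpha in (0, 1) at time t.

    dx is the first derivative x'(tau) given as a callable. The substitution
    u = (t - tau)^(1 - alpha) turns (t - tau)^(-alpha) dtau into du / (1 - alpha),
    so the transformed integrand is free of the weak singularity at tau = t.
    """
    p = 1.0 - alpha
    upper = t ** p                      # u runs over [0, t^(1 - alpha)]
    du = upper / n
    total = 0.0
    for m in range(n):                  # composite midpoint rule in u
        u = (m + 0.5) * du
        tau = t - u ** (1.0 / p)
        total += dx(tau)
    integral = total * du / p
    return integral / math.gamma(1.0 - alpha)


# x(t) = t^2, so x'(t) = 2t and the exact value is Gamma(3)/Gamma(3 - alpha) * t^(2 - alpha)
t, alpha = 0.8, 0.5
approx = caputo_derivative(lambda s: 2.0 * s, t, alpha)
exact = math.gamma(3) / math.gamma(3 - alpha) * t ** (2 - alpha)
```

Removing the singularity by a change of variables keeps the quadrature elementary; a product-integration rule would be an equally valid choice.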

In the open literature, there are a number of methods for solving (1): the Adomian decomposition method [3, 5, 9], the variational iteration method [11, 12], the differential transform method [2], the homotopy perturbation method [10] and the homotopy analysis method [14] have all been applied to this problem. Recently, we proposed a new one-step numerical integration scheme for (1) [8] that is easy to implement and computationally inexpensive. In this paper, we show that the global error of this method is of order \(\mathcal {O}(h^{2})\), where h denotes the mesh size defined in Sect. 2.

The rest of the paper is organized as follows. In Sect. 2, we first transform Eq. (1) into an equivalent Volterra integral equation and then propose an approximation of this integral equation based on a Taylor expansion, together with an error analysis of the approximation. In Sect. 3, we propose an algorithm for implementing the approximate equation and analyse its convergence. Numerical examples are presented in Sect. 4, and Sect. 5 concludes the paper.

2 Approximation

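We first rewrite (1) as the following Volterra integral equation:

\[ x_i(t) = x_i^0 + \frac{1}{\varGamma (\alpha _i)} \int _0^t (t-\tau )^{\alpha _i-1} f_i(\tau , x(\tau ))\, d\tau \tag{2} \]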

for \(t \in (0,T]\) and \(i = 1,2,...,n. \) It has been proven [1, 4, 6] that solving Eq. (1) is equivalent to solving (2). In this section, we will develop a numerical method based on a Taylor expansion to approximate (2) and estimate the approximation error.

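Let N be a given positive integer. We divide [0, T] into N sub-intervals with mesh points \(t_j=j h\) for \(j=0,1,\ldots , N\), where \(h=T/N\). Thus, for \(j=1,2,\ldots ,N\), we have

\[ x_i(t_j) = x_i^0 + \frac{1}{\varGamma (\alpha _i)} \sum _{k=1}^{j} \int _{(k-1)h}^{kh} (jh-\tau )^{\alpha _i-1} f_i(\tau , x(\tau ))\, d\tau . \tag{3} \]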

To approximate the integral on the RHS of (3), we assume that \(f_i(t,x(t))\) is twice continuously differentiable with respect to both t and x. For any k, we expand \(f_i(\tau ,x(\tau ))\) about a point in \(((k-1)h, kh)\), denoted by \(\tau ^i_{jk}\), as

where \(c^i_{jk} \) is the coefficient of the remainder of the expansion and

Therefore, replacing \(f_i(\tau ,x(\tau ))\) in (3) with the RHS of (4) and by direct integration we have
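For reference, the zeroth-order term of the expansion integrates in closed form. Using \(\varGamma (\alpha _i+1)=\alpha _i\varGamma (\alpha _i)\), the weight multiplying \(f_i(\tau ^i_{jk},x(\tau ^i_{jk}))\) after direct integration is (our own elementary computation, consistent with the quantity \(h_{\alpha _i}\) introduced in Sect. 3):

```latex
\frac{1}{\varGamma(\alpha_i)}\int_{(k-1)h}^{kh}(jh-\tau)^{\alpha_i-1}\,d\tau
  = \frac{(jh-(k-1)h)^{\alpha_i}-(jh-kh)^{\alpha_i}}{\alpha_i\,\varGamma(\alpha_i)}
  = \frac{h^{\alpha_i}}{\varGamma(\alpha_i+1)}\Big[(j-k+1)^{\alpha_i}-(j-k)^{\alpha_i}\Big].
```

Summing over \(k=1,\ldots ,j\) telescopes to \(t_j^{\alpha _i}/\varGamma (\alpha _i+1)\), which is the integral of the full kernel over \((0,t_j)\).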

where \(\displaystyle R^i_{jk}=\frac{1}{\varGamma (\alpha _i)}\int _{(k-1)h}^{kh} { (jh-\tau )^{\alpha _i-1}} c^i_{jk} (\tau -\tau ^i_{jk})^2 d\tau \).

From (5) it is clear that \(\tau ^i_{jk}\) should be chosen so that the integral term in (5) vanishes; the truncation error is then \(R^i_{jk}\). The choice of \(\tau ^i_{jk}\) is given in the following theorem.

Theorem 1

For any feasible j and k, the unique solution to

is

Furthermore, \((k-1)h< \tau ^i_{jk} < kh\).

Proof

See the proof of Theorem 2.1 in [7].
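Taking the condition in Theorem 1 to be the vanishing of the first moment \(\int _{(k-1)h}^{kh}(jh-\tau )^{\alpha _i-1}(\tau -\tau ^i_{jk})\,d\tau \), as described before the theorem, the substitution \(s=jh-\tau \) gives by elementary integration \(\tau ^i_{jk} = jh - \frac{\alpha _i}{\alpha _i+1}\,\frac{b^{\alpha _i+1}-a^{\alpha _i+1}}{b^{\alpha _i}-a^{\alpha _i}}\) with \(a=(j-k)h\) and \(b=(j-k+1)h\). This is our own derivation and may be written differently from (6). The sketch below evaluates it and checks the moment numerically; `quadrature_point` and `first_moment` are hypothetical helper names, and the check uses the substitution \(u=s^{\alpha }\) followed by a midpoint rule.

```python
import math


def quadrature_point(j, k, h, alpha):
    """Point tau in ((k-1)h, kh) where the first moment of (jh - tau')^(alpha-1)
    about tau vanishes (closed form derived with s = jh - tau')."""
    a, b = (j - k) * h, (j - k + 1) * h          # range of s = jh - tau'
    ratio = (b ** (alpha + 1) - a ** (alpha + 1)) / (b ** alpha - a ** alpha)
    return j * h - alpha / (alpha + 1) * ratio


def first_moment(j, k, h, alpha, tau, n=4000):
    """Numerically evaluate int_{(k-1)h}^{kh} (jh - tau')^(alpha-1) (tau' - tau) dtau'.

    With s = jh - tau' and u = s^alpha, s^(alpha-1) ds = du / alpha, which removes
    the singularity at s = 0; a composite midpoint rule is applied in u.
    """
    a, b = (j - k) * h, (j - k + 1) * h
    ua, ub = a ** alpha, b ** alpha
    du = (ub - ua) / n
    total = 0.0
    for m in range(n):
        s = (ua + (m + 0.5) * du) ** (1.0 / alpha)
        total += (j * h - s) - tau                # tau' - tau with tau' = jh - s
    return total * du / alpha


h, alpha = 0.1, 0.5
tau_33 = quadrature_point(3, 3, h, alpha)
```

For the last cell (k = j) the formula reduces to \((j-1)h + h/(\alpha _i+1)\), and for cells far from \(t_j\) the point approaches the cell midpoint.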

Substituting the expression for \( \tau ^i_{jk}\) in (6) into (5) and combining the resulting expression with (3), we have the following representation for \(x_i(t_j)\).

for \(j=1,2,\ldots ,N\), where \( \tau ^i_{jk}\) is given in (6) for \(k=1,2,\ldots ,j\) and \(R^i_j=\sum _{k=1}^{j} R^i_{jk} \). Omitting \(R^i_j\) in (7), we have an approximation to (3) with the truncation error \(R^i_j\). An upper bound for \(R^i_j\) is given in the following theorem.

Theorem 2

If f(t, x) is twice continuously differentiable in t and x, then we have \( |R^i_j| \le Ch^2, \) where C denotes a positive constant independent of h.

Proof

See the proof of Theorem 2.2 in [7].

From (7) it is clear that to compute \({x}_i (t_j)\), we need to calculate \(f_i(\tau ^i_{jk},x(\tau ^i_{jk}))\). However, \(x(\tau ^i_{jk})\) is not available directly from the scheme. Thus, approximations to \({x} (\tau ^i_{jk})\) need to be determined. In the next section, we propose a single-step numerical scheme for implementing (7) when the remainder \(R_j^i\) is omitted.

3 Algorithm and Its Convergence

For any j and k satisfying \(1\le k \le j \le N\), since \(\tau ^i_{jk} \in (t_{k-1}, t_k)\) by Theorem 1, we use the following linear interpolation to approximate \(x_i(\tau ^i_{jk})\):

where \( \rho ^i_{jk} : = \frac{\tau ^i_{jk} - t_{k-1} }{h} \in (0,1) \) and \(E_n = (1,1,\ldots ,1)^\top \in \mathbb {R}^n\). Using (8), we approximate \(f_i(\tau ^i_{jk}, x(\tau ^i_{jk}))\) as follows:
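The interpolant \(x(t_{k-1}) + \rho ^i_{jk}(x(t_k)-x(t_{k-1}))\) (the form written out in the proof of Theorem 3) has error \(-\rho (1-\rho )h^2 x''(\xi )/2\) for some \(\xi \) in the cell, which is the source of an \(\mathcal {O}(h^2)\) term. The sketch below (the helper name `interp_error` is hypothetical) measures this error for a scalar function: for \(x(t)=t^2\) it equals \(\rho (1-\rho )h^2\) exactly, and for \(x(t)=\sin t\) halving h reduces it by roughly a factor of 4.

```python
import math


def interp_error(x, t_prev, h, rho):
    """|x(tau) - p(tau)| at tau = t_prev + rho*h, where p linearly interpolates
    x between the mesh points t_prev and t_prev + h (rho in (0, 1))."""
    tau = t_prev + rho * h
    p = x(t_prev) + rho * (x(t_prev + h) - x(t_prev))
    return abs(x(tau) - p)


# halving h should reduce the interpolation error by about a factor of 4
e_coarse = interp_error(math.sin, 0.3, 0.1, 0.4)
e_fine = interp_error(math.sin, 0.3, 0.05, 0.4)
ratio = e_coarse / e_fine
```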

Replacing \(f_i(\tau ^i_{jk},x (\tau ^i_{jk} ) )\) in (7) with the RHS of (9), we have

for \( j=1,2,\ldots ,N\), where \(h_{\alpha _i}=\frac{h^{\alpha _i}}{\varGamma (\alpha _i+1)}\) and \(\tau ^i_{jk}\) is defined in (6). Clearly, (10) defines a time-stepping scheme for (2) if we omit the term \(\mathcal {O}(h^2)\).
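The sketch below implements one reading of (10) with the \(\mathcal {O}(h^2)\) term dropped, namely \(x_i^j = x_i^0 + h_{\alpha _i}\sum _{k=1}^{j}\big[(j-k+1)^{\alpha _i}-(j-k)^{\alpha _i}\big] f_i\big(\tau ^i_{jk}, x^{k-1}+\rho ^i_{jk}(x^k-x^{k-1})\big)\), for a scalar problem (n = 1) with a hypothetical linear right-hand side \(f(t,x)=\lambda x+g(t)\). For such f the implicit equation in \(x^j\) is linear and can be solved directly, so this is not Algorithm A, which uses the explicit variant (13). The names `rho` and `solve_linear_fode` and the manufactured test problem with exact solution \(x(t)=t^3\) are our own assumptions.

```python
import math


def rho(m, alpha):
    """Relative position in (0, 1) of the quadrature point in its cell, m = j - k >= 0."""
    a, b = float(m), float(m + 1)
    ratio = (b ** (alpha + 1) - a ** (alpha + 1)) / (b ** alpha - a ** alpha)
    return b - alpha / (alpha + 1) * ratio


def solve_linear_fode(lam, g, x0, alpha, T, N):
    """Implicit one-point scheme for D^alpha x = lam*x + g(t), x(0) = x0 (n = 1)."""
    h = T / N
    h_alpha = h ** alpha / math.gamma(alpha + 1)
    w = [(m + 1) ** alpha - m ** alpha for m in range(N)]  # exact kernel weights
    r = [rho(m, alpha) for m in range(N)]                   # quadrature point positions
    x = [x0]
    for j in range(1, N + 1):
        acc = x0
        for k in range(1, j):  # cells whose end values are already known
            m = j - k
            tau = (k - 1 + r[m]) * h
            x_tau = (1 - r[m]) * x[k - 1] + r[m] * x[k]  # linear interpolation
            acc += h_alpha * w[m] * (lam * x_tau + g(tau))
        # last cell (k = j): x^j enters linearly, so solve for it directly
        tau = (j - 1 + r[0]) * h
        acc += h_alpha * w[0] * (lam * (1 - r[0]) * x[j - 1] + g(tau))
        x.append(acc / (1 - h_alpha * w[0] * lam * r[0]))
    return x


# manufactured problem: exact x(t) = t^3, whose Caputo derivative is 6 t^(3-alpha) / Gamma(4-alpha)
alpha, lam, T = 0.5, -1.0, 1.0


def g(t):
    return 6 * t ** (3 - alpha) / math.gamma(4 - alpha) - lam * t ** 3


errs = []
for N in (10, 20, 40, 80):
    x = solve_linear_fode(lam, g, 0.0, alpha, T, N)
    errs.append(max(abs(x[j] - (j * T / N) ** 3) for j in range(N + 1)))
rates = [math.log2(e0 / e1) for e0, e1 in zip(errs, errs[1:])]
```

The work per step grows linearly with j because the whole history enters the sum, so the total cost is \(\mathcal {O}(N^2)\).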

The above scheme is implicit, since (10) is a nonlinear system in \(x(t_j)\). We now define an explicit single-step scheme by further approximating the j-th term of the sum in (10) by the following Taylor expansion:

Thus, combining (11) and (10) yields

Letting \(x^{j}: =(x_1^j, x_2^j, \ldots , x_n^j)^\top \) for \(j=0,1,\ldots ,N\) and omitting the truncation error terms of order \(\mathcal {O}(h^2)\) in (12), we define the following single-step time-stepping scheme for approximating (2):

Re-organising (13), we have the following linear system for \(x^j\):

where \(B^j\) is the \( n\times n\) matrix given by

with

for \(i=1,2,\ldots ,n\), \(l=1,2,\ldots ,n \) and \(C^j=(c_1^j, c_2^j, \ldots ,c_n^j )^\top \) with

It is clear that to calculate \(x^j,\) we need to solve the system of equations (14)–(17). It has been shown in [8] that the system (14)–(17) is uniquely solvable when h is sufficiently small.

For a given initial condition \(x^0\), using the above results, we propose the following algorithm for solving (3) numerically.

Algorithm A

1. For a given positive integer N, let \(t_j=jh\) for \(j=0,1,\ldots ,N\), where \(h=T/N\).
2. For \(j=1,2,\ldots ,N\), compute \(x^j\) by solving the linear system (14)–(17).

Using linear interpolation and Taylor's theorem, we prove in the following theorem that, for any \(j=1,2,\ldots ,N\), \(x^j\) generated by Algorithm A converges to the solution of (2) at the rate \(\mathcal {O}(h^2)\) as \(h\rightarrow 0^+\).

Theorem 3

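Let \(x(t_j)\) and \(x^j\) be, respectively, the solution to (3) and the sequence generated by Algorithm A. If f(t, x) is twice continuously differentiable in t and x, then there exists an \(\bar{h} >0\) such that, when \( h <\bar{h}\),

\[ \|x(t_j)-x^j\|_{\infty } \le C h^2, \qquad j=1,2,\ldots ,N, \tag{18} \]

where C is a positive constant independent of h.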

Proof

In what follows, we let C denote a generic positive constant, independent of h. We now prove this theorem by mathematical induction.

When \(j=1\), from (12) we have

Re-organising (19) and using the definitions for \(B^j\) and \(C^j\), we have

Solving (20) yields

Therefore,

It has been proven [8] that the matrices \(B^j\), \(j=1,2, \ldots , N\), satisfy, when \(h<\bar{h}\),

for a constant \(\beta \), independent of h, where \(\bar{h}=\min _{1 \le i \le n} {(\frac{\varGamma {(\alpha _i+1)}}{nM})}^{\frac{1}{\alpha _i}}\) and \(M=\max _{\begin{array}{c} 1 \le i \le n\\ 1 \le l \le n \end{array}} \left| \frac{\partial f_i}{\partial x_l} \right| \). Thus, using [13] and (21), we have
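The threshold \(\bar{h}\) is easy to evaluate once a bound M on the Jacobian entries is known. The helper below is a hypothetical sketch in which M is supplied by the user. Because \(z \mapsto z^{1/\alpha _i}\) is increasing, \(h<\bar{h}\) is equivalent to \(nMh_{\alpha _i}<1\) for every i, with \(h_{\alpha _i}=h^{\alpha _i}/\varGamma (\alpha _i+1)\) as in Sect. 3.

```python
import math


def step_bound(alphas, M):
    """h_bar = min_i (Gamma(alpha_i + 1) / (n * M))^(1 / alpha_i), with n = len(alphas).

    M is assumed to bound |df_i/dx_l| for all i, l; it is not computed here.
    """
    n = len(alphas)
    return min((math.gamma(a + 1) / (n * M)) ** (1.0 / a) for a in alphas)


alphas, M = [0.5, 0.5], 1.0
h_bar = step_bound(alphas, M)      # (Gamma(1.5) / 2)^2 = pi / 16 in this case
h = 0.9 * h_bar                    # any step below the threshold
```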

Therefore, we have

When \(i \ge 2\) and \(h \le \bar{h}\), we assume that

We now show that \( ||x(t_j)-x^j||_{\infty } \le Ch^2\) for \(1 \le j \le i\).

Note that (12) can be re-written in the following form:

where

Similarly, (13) can be re-written as follows.

where

Subtracting (26) from (23) gives

Let us first estimate \(D_{i}^j -\tilde{D}_{i}^j\). From (25) and (28), we have

Since \(f_i\) is twice continuously differentiable, using a Taylor expansion we get

where

where \(\xi =x(t_{j-1})+\theta (x^{j-1}-x(t_{j-1}))\) with \( \theta \in (0,1)\). From the assumption (22) we have \( r_j^i = \mathcal {O}(h^2)\). Similarly, since f is twice continuously differentiable, using (22) it is easy to show that \(f_i(\tau _{jj}^i,x(t_{j-1})) -f_i(\tau _{jj}^i,x^{j-1}) = \mathcal {O}(h^2)\).

Using (31) and the above estimates we have, from (30),

where we have used \(h_{\alpha _i} = h^{\alpha _i}/\varGamma (1+\alpha _i)\) and (22). Thus, from the above expression and (29), we get

Re-organising the above equation gives

where \(B^j\) is defined in (15)–(16) and \(A^j=(A_1^j -\tilde{A}_1^j, A_2^j -\tilde{A}_2^j, \ldots , A_n^j -\tilde{A}_n^j )^\top \). From this equation we have

Thus, we have

for \(j=1,2,\ldots ,N.\) Using [13] and (21), we have

Therefore, we obtain

We now examine \( ||A^j||_{\infty }=\max _{1\le i\le n} |A_i^j -\tilde{A}_i^j|\). For notational simplicity, we let \(x^{jk} = x^{k-1}+\rho _{jk}^i(x^k-x^{k-1})\) and \(x(t_{jk}) = x(t_{k-1})+\rho _{jk}^i(x(t_k)-x(t_{k-1}))\). From (24) and (27), we have

since \(z^{\alpha _i}\) is an increasing function of z for \(\alpha _i \in (0,1)\). Because f is twice continuously differentiable, we have, using a Taylor expansion,

since \(\rho _{jk}^i \in (0,1)\). Thus, from Assumption (22), we have

Replacing \(|f_i(\tau ^i_{jk},x(t_{jk}))-f_i(\tau ^i_{jk},x^{{jk}})|\) in (33) with the above upper bound yields

for \(i = 1,2,..., n\). Thus, we have

Combining the above error bound with (32), we have the estimate (18). Thus, the theorem is proved.

4 Numerical Results

In this section, we use Algorithm A to solve two non-trivial examples. All computations were performed in double precision in the Matlab environment on a PC with an Intel Xeon 3.3 GHz CPU and 16 GB RAM.

Example 1

Consider the following system of fractional differential equations:

where \(\alpha _i \in (0,1)\), \(i=1,2,3\). The exact solution is

We solve the problem using Algorithm A for various values of \(\alpha _i, i=1,2,3\) and \(h_k = 1/(2^k \times 10), k=1,\ldots ,6\). The computed errors \(E^i_{h_k} = \max _{1\le j\le 1/h_k} | x_i^j - x_i(t_j)|\) for the chosen values of \(\alpha _i\) are listed in Table 1. To estimate the rates of convergence, we calculate \(\log _2 (E^i_{h_k}/E^i_{h_{k+1}})\) for \(k=1,\ldots ,5\); these rates, together with the CPU times, are also listed in Table 1. The results in Table 1 show that the method converges at 2nd order for all the chosen values of \(\alpha _i\), as predicted by Theorem 3, indicating that it is robust with respect to \(\alpha \). The CPU times in Table 1 show that the method is also very efficient.
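The rate estimate \(\log _2 (E^i_{h_k}/E^i_{h_{k+1}})\) assumes that h is halved between successive runs, as it is for \(h_k = 1/(2^k \times 10)\). A minimal sketch follows; the function name is hypothetical, and the error values are synthetic (\(E = Ch^2\) with C = 3), not the data of Table 1.

```python
import math


def observed_rates(errors):
    """Estimated orders log2(E_{h_k} / E_{h_{k+1}}) for meshes with h_{k+1} = h_k / 2."""
    return [math.log2(e0 / e1) for e0, e1 in zip(errors, errors[1:])]


# synthetic errors E = C h^2 on h_k = 1/(2^k * 10), k = 1..6
hs = [1.0 / (2 ** k * 10) for k in range(1, 7)]
errors = [3.0 * h ** 2 for h in hs]
rates = observed_rates(errors)
```

A second-order method yields rates close to 2 once h is in the asymptotic regime; the constant C cancels in the ratio.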

Example 2

Consider the following system of fractional differential equations, used in [14]:

The exact solution when \(\alpha _1=\alpha _2=\alpha _3=1\) is

Example 2 is solved using Algorithm A for various values of h and \(\alpha _i, i=1,2,3\). The computed errors and rates of convergence for \(\alpha _1=\alpha _2=\alpha _3=1\) are listed in Table 2, from which we see that the computed rates of convergence are approximately 2, i.e. the error is \(\mathcal {O}(h^2)\), confirming our theoretical result.

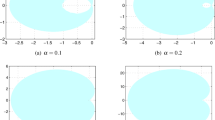

Since the exact solution of Example 2 is unknown when \(\alpha _i <1\) for some i, we are unable to compute the rates of convergence. Instead, we solve the problem for \(\alpha _1=0.7, \alpha _2=0.5, \alpha _3=0.2\) using \(h=1/640\) and plot the computed solution, along with the solution for \(\alpha _i=1\), \(i=1,2,3\), in Fig. 1. From Fig. 1 we see that \(x_1, x_2\) and \(x_3\) of the fractional system grow much faster than those of the integer-order system.

Fig. 1. Computed solutions of Example 2

5 Conclusion

We have proposed and analysed a one-step numerical integration method for a system of fractional differential equations, based on a superconvergent quadrature point that we derived recently. The proposed method is unconditionally stable and easy to implement. We have shown that the method is 2nd-order accurate. Non-trivial examples have been solved by our method, and the numerical results show that it is 2nd-order accurate for all the chosen values of the fractional orders, demonstrating that the method is robust.

References

[1] Diethelm, K., Ford, N.J.: Analysis of fractional differential equations. J. Math. Anal. Appl. 265, 229–248 (2002)

[2] Erturk, V.S., Momani, S.: Solving systems of fractional differential equations using differential transform method. J. Comput. Appl. Math. 215, 142–151 (2008)

[3] Gejji, V.D., Jafari, H.: Adomian decomposition: a tool for solving a system of fractional differential equations. J. Math. Anal. Appl. 301(2), 508–518 (2005)

[4] Guo, B., Pu, X., Huang, F.: Fractional Partial Differential Equations and Their Numerical Solutions. World Scientific, Singapore (2015)

[5] Jafari, H., Gejji, V.D.: Solving a system of nonlinear fractional differential equations using Adomian decomposition. Appl. Math. Comput. 196, 644–651 (2006)

[6] Kilbas, A.A., Marzan, S.A.: Cauchy problem for differential equation with Caputo derivative. Fract. Calc. Appl. Anal. 7(3), 297–321 (2004)

[7] Li, W., Wang, S., Rehbock, V.: A 2nd-order one-point numerical integration scheme for fractional ordinary differential equations. Numer. Algebra Control Optim. 7(3), 273–287 (2017)

[8] Li, W., Wang, S., Rehbock, V.: Numerical solution of fractional optimal control. J. Optim. Theory Appl. (2018). https://doi.org/10.1007/s10957-018-1418-y

[9] Momani, S., Al-Khaled, K.: Numerical solutions for systems of fractional differential equations by the decomposition method. Appl. Math. Comput. 162(3), 1351–1365 (2005)

[10] Momani, S., Odibat, Z.: Homotopy perturbation method for nonlinear partial differential equations of fractional order. Phys. Lett. A 365(5–6), 345–350 (2007)

[11] Momani, S., Odibat, Z.: Numerical approach to differential equations of fractional order. J. Comput. Appl. Math. 207, 96–110 (2007)

[12] Odibat, Z., Momani, S.: Application of variational iteration method to nonlinear differential equations of fractional order. Int. J. Nonlinear Sci. Numer. Simulat. 1(7), 15–27 (2006)

[13] Varga, R.S.: On diagonal dominance arguments for bounding \(\Vert A^{-1}\Vert _{\infty }\). Linear Algebra Appl. 14(3), 211–217 (1976)

[14] Zurigat, M., Momani, S., Odibat, Z., Alawneh, A.: The homotopy analysis method for handling systems of fractional differential equations. Appl. Math. Model. 34, 24–35 (2010)

Acknowledgment

This work is partially supported by US Air Force Office of Scientific Research Project FA2386-15-1-4095.


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, W., Wang, S. (2019). A 2nd-Order Numerical Scheme for Fractional Ordinary Differential Equation Systems. In: Dimov, I., Faragó, I., Vulkov, L. (eds) Finite Difference Methods. Theory and Applications. FDM 2018. Lecture Notes in Computer Science(), vol 11386. Springer, Cham. https://doi.org/10.1007/978-3-030-11539-5_6


DOI: https://doi.org/10.1007/978-3-030-11539-5_6


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-11538-8

Online ISBN: 978-3-030-11539-5

eBook Packages: Computer Science, Computer Science (R0)