Abstract

Management of human and animal wastes is among the major constraints on the sustainable development of human settlements, where we demand increasing amounts of clean water, food, and energy. Most sanitation solutions aim to keep waste away from the site where it is generated, such as households or animal stalls. The misconception that wastes have no useful purpose has resulted in unsustainable systems. However, recovering energy and using the organics and nutrients contained in excreta and solid waste in agriculture can improve soil structure and fertility, increase productivity, and reduce dependency on resource-demanding chemical fertilizers, thus contributing to food security. Anaerobic biodigestion plants can be applied in this context, moving from “treatment” plants to “resource recovery” plants. Recovering biogas in these plants for energy production is highly valuable, and further value can be obtained by recycling the biodegradation products: accumulated sludge and digestate. These fractions should be treated sufficiently to inactivate pathogens to a level that makes their reuse safe. Quantitative microbial risk assessment is an effective approach to estimating risks and can be applied to any scenario of recycling liquid fractions from biogas reactors in agriculture.

12.1 Introduction—Risks and the Resource Recovery Concept

Management of human and animal wastes is nowadays among the major constraints on the sustainable development of human settlements, where we demand increasing amounts of clean water, food, and energy. Water pollution is caused largely by the inappropriate discharge of human and animal wastes into water bodies. Faecal sludge, whether from human or animal systems, has a considerably high pollution potential, and its mismanagement is a common reality in many regions, especially in low- and middle-income countries.

Most sanitation solutions aim to keep waste away from the site where it is generated, such as households or animal stalls. The misconception that wastes have no useful purpose has resulted in unsustainable systems. However, recovering energy and using the organics and nutrients contained in excreta in agriculture can improve soil structure and fertility, increase productivity, and reduce dependency on resource-demanding chemical fertilizers, thus contributing to food security. These benefits can be obtained provided that excreta are treated sufficiently to inactivate pathogens and make them safe.

Innovations in resource recovery are urgently needed, with special focus on integrated waste management, to develop sustainable and resilient energy and sanitation systems for areas where poor infrastructure, water scarcity, and limited energy supply restrict the capacity for economic growth. Such initiatives would contribute to the long-term sustainability of cities, in both their urban and rural areas, and to climate-compatible development.

Anaerobic biodigestion plants can be applied in this context, moving from “treatment” plants to “resource recovery” plants. Recovering biogas in these plants for energy production is highly valuable, and further value can be obtained by recycling the organics and nutrients in the other products of the process: the accumulated sludge and the digestate leaving the bioreactors.

But what are the associated risks? This question is always raised when the recycling of human- and animal-derived wastes is discussed, since these wastes carry high concentrations of a very diverse range of pathogens of public health importance.

The microbial risk associated with the biogas fraction is very low compared with that of the liquid fractions of sludge and digestate, since pathogens remain in those fractions. The objective of this chapter is to present quantitative microbial risk assessment as an effective approach to estimating risks, which can be applied to any scenario of recycling liquid fractions from biogas reactors operating with human and/or animal manure in agriculture.

12.2 Risk Analysis General Concepts

Risk analysis is an effective tool used in diverse fields, including economics, business, engineering, the environment, and human health. It works as a systematic and preventive approach through which risks can be minimized, controlled, or avoided, and it also supports decision-making (Haas et al. 2014).

The process of risk analysis includes risk assessment, risk management, and risk communication. Risk assessment can be qualitative or quantitative and is intended to characterize and estimate all potential risks involved in a process. Quantitative risk assessment assigns numerical values to risk and, through probabilistic calculations, provides an overview of the risks (WHO 2016).

12.2.1 Quantitative Microbial Risk Assessment (QMRA)

Quantitative microbial risk assessment (QMRA) applies risk assessment principles to microbial hazards, with the aim of estimating the health effects associated with the exposure of an individual to a pathogenic microorganism in different scenarios (Haas et al. 2014). By applying systematically collected information to a mathematical model, QMRA enables preventive management of microbiological contamination risks.

QMRA methodology is supported by the World Health Organization (WHO), which describes the process and steps in “Quantitative Microbial Risk Assessment: Application for Water Safety Management”, published in 2016. The methodology is divided into four steps—hazard identification, dose–response model, exposure assessment, and risk characterization—which will be presented below.

(1) Hazard identification

Hazard identification is the first step of QMRA. Its main purpose is to identify the microbial agent, all diseases associated with this pathogen, and the spectrum of human illness it causes.

For this purpose, epidemiological and microbiological studies need to be consulted in order to obtain all necessary information about the microbial agent (pathogenicity, virulence, and infectivity) and about the human response to the microorganism (Haas et al. 2014).

(2) Dose–response model

According to Weir et al. (2017), choosing an adequate dose–response model is essential for a successful analysis. The dose–response model describes the relationship between the level of exposure to a microorganism and the probability that this exposure affects human health.

Dose–response models are expressed as mathematical functions and are fitted to experimental data. Several dose–response models have been developed, each with parameters that best describe the microbial agent concerned (Haas et al. 2014).

The following describes two of the most widely used models: the exponential dose–response model and the beta-Poisson dose–response model.

Exponential dose–response model

The exponential dose–response model assumes that each ingested microorganism has an independent and constant probability (r) of surviving and causing an infection in the host. In other words, this model does not take into account variability in host susceptibility or pathogen infectivity that could affect pathogen behaviour. Equation (12.1) describes this model.

\[ P(d) = 1 - \exp(-r\,d) \tag{12.1} \]

where:

- P(d): risk (probability) of infection;
- d: dose ingested by the individual;
- r: pathogen-specific parameter representing the probability that a single ingested organism survives and infects the host.
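Equation (12.1) can be evaluated directly. The minimal Python sketch below does so; the function name and the value of r are hypothetical and chosen only for illustration, since real values of r are pathogen-specific and come from fitted dose–response studies. It also shows that at low doses P(d) is close to r·d, while at high doses it approaches 1.

```python
import math


def p_inf_exponential(dose: float, r: float) -> float:
    """Probability of infection under the exponential model, Eq. (12.1).

    dose -- mean number of organisms ingested (d)
    r    -- probability that one ingested organism survives and infects
    """
    if dose < 0 or not 0.0 <= r <= 1.0:
        raise ValueError("dose must be >= 0 and r must lie in [0, 1]")
    # -expm1(-x) equals 1 - exp(-x) but stays accurate when r * d is tiny
    return -math.expm1(-r * dose)


# Hypothetical r, for illustration only (not a value from this chapter)
R_EXAMPLE = 0.01
for d in (0.1, 1, 10, 100, 1000):
    p = p_inf_exponential(d, R_EXAMPLE)
    # At low doses P(d) is close to r * d; at high doses it approaches 1
    print(f"d = {d:>6}: P(d) = {p:.4g}  (r*d = {R_EXAMPLE * d:.4g})")
```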

Beta-Poisson dose–response model

The beta-Poisson model differs from the exponential model in that it allows the probability of pathogen survival and infection to vary between pathogen–host pairs. According to Haas et al. (2014), this variation may arise from host characteristics, such as gender, age, or immunity, or from differences in the infectivity of individual pathogens.

In mathematical terms, the parameter r of Eq. (12.1) is no longer constant but varies according to a beta probability distribution described by the parameters α and β. The risk of infection is then calculated using Eq. (12.2).

\[ P(d) = 1 - \left(1 + \frac{d}{\beta}\right)^{-\alpha} \tag{12.2} \]

where:

- P(d): risk of infection;
- d: dose ingested by an individual;
- α and β: parameters of the distribution that describes the survival probability of each microorganism.
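The sketch below evaluates the beta-Poisson expression of Eq. (12.2) next to an exponential curve with the same low-dose slope (r = α/β), to show how allowing r to vary flattens the curve at high doses. This closed form is the usual approximation to the full beta-Poisson model and is commonly stated to hold when β is large and α is much smaller than β. The function names and the values of α and β are hypothetical, for illustration only.

```python
import math


def p_inf_beta_poisson(dose: float, alpha: float, beta: float) -> float:
    """Approximate beta-Poisson model, Eq. (12.2): 1 - (1 + d/beta) ** (-alpha)."""
    if dose < 0 or alpha <= 0 or beta <= 0:
        raise ValueError("dose must be >= 0; alpha and beta must be > 0")
    # Written as -expm1(-alpha * log1p(d / beta)) to keep precision at tiny doses
    return -math.expm1(-alpha * math.log1p(dose / beta))


def p_inf_exponential(dose: float, r: float) -> float:
    """Exponential model, Eq. (12.1), used here only for comparison."""
    return -math.expm1(-r * dose)


# Hypothetical parameters, for illustration only
ALPHA, BETA = 0.2, 20.0
R_SAME_SLOPE = ALPHA / BETA  # exponential r with the same low-dose slope

for d in (0.1, 10, 1_000, 100_000):
    bp = p_inf_beta_poisson(d, ALPHA, BETA)
    ex = p_inf_exponential(d, R_SAME_SLOPE)
    print(f"d = {d:>7}: beta-Poisson {bp:.4f}   exponential {ex:.4f}")
```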

(3) Exposure assessment

Exposure assessment is intended to determine the population exposed to the risk (adults, children or immunocompromised people), the routes of transmission (air, soil, water, ingestion, inhalation, contact), the exposure scenario, and the distribution of the microorganism concentration (WHO 2016; Haas et al. 2014).

To estimate the dose ingested by an individual, the distribution of the microorganism concentration must be known. Ideally, these values should be determined through laboratory analysis for each case; however, quantifying most pathogens can be very challenging and expensive. In such cases, using data from a comprehensive database describing conditions as similar as possible to the case under study is recommended (Haas et al. 2014). The dose ingested by an individual is given by Eq. (12.3).

\[ d = v \times c \tag{12.3} \]

where:

- d: dose ingested by an individual;
- v: volume ingested per exposure;
- c: microorganism concentration.

Defining the exposure scenario makes it possible to identify the level of contact between the individual and the microbial agent, as well as the exposure frequency. These parameters strongly influence the risk of infection. Moreover, once the exposure scenario is established, the average volume ingested can be determined. Values for ingested volumes can be found in the literature and are normally based on experimental studies with volunteers (WHO 2016).
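The sketch below combines Eq. (12.3) with Eq. (12.1) to obtain a per-event risk, and then shows how exposure frequency raises the risk over a year. The annual-risk expression 1 − (1 − P)^n is a common QMRA convention that assumes independent events with identical per-event risk; it is an assumption here, not a formula given in this chapter. All names and numeric inputs are hypothetical.

```python
import math


def dose(volume: float, concentration: float) -> float:
    """Eq. (12.3): dose = volume ingested per exposure x concentration."""
    return volume * concentration


def annual_risk(p_event: float, events_per_year: int) -> float:
    """Probability of at least one infection over a year of exposures.

    Assumes independent events with identical per-event risk:
    P_annual = 1 - (1 - p_event) ** n   (an assumption, not from this chapter)
    """
    if not 0.0 <= p_event <= 1.0:
        raise ValueError("p_event must lie in [0, 1]")
    if p_event == 1.0:
        return 1.0
    return -math.expm1(events_per_year * math.log1p(-p_event))


# Hypothetical inputs, for illustration only
V_ML = 0.1          # mL of material ingested per exposure event
C_PER_ML = 0.5      # organisms per mL in the material
R = 0.01            # exponential dose-response parameter, Eq. (12.1)

d = dose(V_ML, C_PER_ML)
p_event = -math.expm1(-R * d)   # Eq. (12.1)
print(f"dose per event: {d:.3f} organisms; risk per event: {p_event:.2e}")
for n in (1, 10, 50):
    print(f"{n:>3} events per year -> annual risk {annual_risk(p_event, n):.2e}")
```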

(4) Risk characterization

Risk characterization is the last step of QMRA and consists of integrating all data obtained in the previous steps in order to estimate the probability that the risk will occur, as well as its magnitude. According to Haas et al. (2014), all variabilities and uncertainties are taken into consideration during this characterization. Uncertainties can relate to the chosen dose–response model, the model parameters, the ingested volumes, and the microorganism concentrations.

Monte Carlo simulation is usually used to conduct the characterization. This mathematical tool repeatedly samples the input parameters from their probability distributions, simulating a large number of scenarios, and yields the distribution of possible outcomes, including the probability that people become infected when exposed to the pathogens present in those scenarios. The results are expressed as the probability of infection, disease, or death, or as disability-adjusted life years (DALYs), a measure of the healthy life years lost as a consequence of health problems caused by microbiological contamination (Haas et al. 2014).
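A minimal Monte Carlo sketch of risk characterization is shown below, using only the Python standard library. It propagates variability in concentration and ingested volume through Eq. (12.3) and Eq. (12.1) and summarizes the resulting per-event risk distribution. The lognormal and uniform input distributions, their parameters, and the value of r are hypothetical assumptions; a real assessment would fit them to measured or literature data and could go on to convert infections into DALYs, which this sketch does not do.

```python
import math
import random
import statistics


def simulate_risk(n_iter: int = 10_000, seed: int = 1) -> list[float]:
    """Monte Carlo sketch: propagate variability in concentration and
    ingested volume through Eq. (12.3) and Eq. (12.1)."""
    rng = random.Random(seed)      # seeded so runs are reproducible
    r = 0.01                       # hypothetical dose-response parameter
    risks = []
    for _ in range(n_iter):
        # Hypothetical input distributions (assumptions, not chapter data)
        conc = rng.lognormvariate(0.0, 1.0)    # organisms per mL, median 1
        vol = rng.uniform(0.01, 0.1)           # mL ingested per event
        d = vol * conc                         # Eq. (12.3)
        risks.append(-math.expm1(-r * d))      # Eq. (12.1)
    return risks


risks = simulate_risk()
cuts = statistics.quantiles(risks, n=20)   # 19 cut points: 5th ... 95th percentile
p5, p50, p95 = cuts[0], cuts[9], cuts[18]
print(f"mean risk per event: {statistics.fmean(risks):.2e}")
print(f"5th / 50th / 95th percentiles: {p5:.2e} / {p50:.2e} / {p95:.2e}")
```

Reporting percentiles alongside the mean is one way to convey the spread that the simulation produces, rather than a single point estimate.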

12.3 Nutrients in Human and Animal Manure and Microbial Pathogen Content for Risk Assessment

In general, human and animal excreta present a large variety of primary and secondary macronutrients, which would characterize them as compound biofertilizers. Table 12.1 presents a complete characterization of excreta regarding macro- and micronutrients content.

Considering the percentages of macro- and micronutrients in human and animal excreta, the biofertilizers that could be produced as digestate and sludge in biogas plants are comparable to organic fertilizers, although their nutrient concentrations are lower than those of chemical fertilizers.

While human and animal manures have high concentrations of carbon and nutrients, their metal concentrations are low, which is an advantage for the reuse of excreta (Vinnerås 2002; Albihn and Vinnerås 2007).

Although manure application in agriculture has its benefits, its management, especially its storage, is a major limiting factor, since long storage periods can lead to losses of nutrients and carbon. Castellanos-Navarrete et al. (2015) studied manure utilization on farms in western Kenya and found that poor manure management led to low nutrient-cycling efficiencies, with long storage periods contributing to nitrogen and phosphorus losses through volatilization and leaching. The authors also observed carbon losses of up to 51% on the monitored farms, in agreement with previous research indicating that poor storage conditions can lead to carbon losses of 30–55%. However, half of the farms counteracted these carbon losses through biomass additions.

Nutrient losses through leaching can lead to a series of environmental impacts, especially on water bodies. One way of reducing this kind of impact is Ecological Recycling Agriculture (ERA), a form of organic agriculture that aims at closed nutrient cycles (Granstedt et al. 2008) and has the potential to decrease the nitrogen surplus in agricultural soils, and its leaching, through increased nutrient efficiency (Granlund et al. 2015). Granlund et al. (2015) studied Finnish agricultural catchments and simulated a theoretical crop rotation representing ERA cultivation, considering N fixation, mineralization, and manure as nitrogen sources. The authors observed reductions of up to 33% in nitrogen losses from fields under ERA compared with conventional agriculture, which relies mostly on chemical fertilizers. Manure utilization in agriculture can also increase soil organic carbon, improve soil physical and biological properties, and reduce carbon losses, especially when farmyard manure is applied (Baldivieso-Freitas et al. 2018).

The study by Castellanos-Navarrete et al. (2015) shows the importance of manure application in agriculture, since 43% of the total nitrogen inputs into the maize fields studied came from stored manure. Although these amounts were insufficient to prevent major nutrient depletion on most of the farms analysed, they still made a considerable contribution to soil quality, especially in situations such as those observed in the study, where farmers often lack resources and struggle to overcome difficulties. The authors also observed that composted manure provided the largest N inputs to the soil and amounts of carbon similar to those from crop residues left in the fields.

Although manure application has its advantages, Baldivieso-Freitas et al. (2018) indicate that research should address the effects of applying more stabilized organic matter, as it may be better at enhancing soil quality and increasing soil organic matter content. In this context, anaerobic digestion is a good way of treating animal manures while producing biogas, since manures provide an adequate organic substrate. In addition, horticultural fruit wastes, which cannot be processed alone, can be mixed with the manure. Iocoli et al. (2019) treated different animal manures and onion waste by anaerobic digestion and evaluated the fertilizing properties of each manure and of the products of its digestion and co-digestion. The authors found large differences in composition among the unprocessed wastes, whereas the digestates had similar characteristics, which is consistent with the capacity of anaerobic digestion to generate more uniform products.

It can therefore be inferred that digestate is a more uniform fertilizer than unprocessed manures. However, a long hydraulic retention time (HRT) must be applied in the biodigester to promote full degradation of the organic matter, as a shorter HRT may produce an unstable digestate, which can cause unpleasant odours, storage problems, and negative impacts on crops (Iocoli et al. 2019).

In addition to the above characteristics, excreta may contain pathogenic microorganisms and micropollutants (e.g. drug residues and hormones). The highest concentrations of pathogens are found in the faecal fraction, while the highest concentrations of micropollutants are found in the urine fraction of excreta.

Pathogen inactivation is recognized as a limiting factor for reuse at any scale. Similarly, the risk posed by micropollutants in the environment, especially in the aquatic environment, is recognized as a serious environmental problem, although its consequences are still poorly understood (Carrington et al. 1991; Winker 2010; Fatta-Kassinos et al. 2011). From another perspective, however, the presence of micropollutants at low concentrations is not considered a limiting factor for recycling, since applying excreta to soil is believed to provide an additional barrier against their direct release into water bodies.

Enteric pathogens are excreted mainly in the faeces of infected hosts, which can be humans and/or animals. Some pathogens of relevance to public health are presented in Table 12.2, together with their reservoir (e.g. human or animal faeces), the diseases each pathogen causes in humans, the concentrations excreted per gram of faeces, the duration of shedding in faeces, and the infectious dose required for the disease to develop in another person. All of the pathogens presented are zoonotic, i.e. able to infect both animals and humans. Knowledge and collection of data on the pathogen content of biofertilizers produced from excreta are an essential step in conducting the risk analysis.

Disease propagation depends on factors such as the survival of microorganisms in the environment and the infectious dose required to infect a susceptible individual. Pathogen survival in the environment varies with each group and species. Viruses, for example, cannot multiply outside a host; however, they can remain at a stable concentration or decline over time. Protozoa are also unable to multiply in the environment, but they are highly resistant, even to most disinfectants. As for bacteria, some groups can multiply in the environment, while others persist or decline, depending on factors such as nutrient availability and temperature (Leclerc et al. 2008). The infectious dose of a pathogen is the number of organisms necessary to cause an infection; the lower the infectious dose, the fewer microorganisms are needed to cause an infection (Griffin and Tauxe 1991).

The number of microorganisms excreted by an infected host, as well as the duration of shedding, varies depending on the pathogen and the host. For most pathogens presented in Table 12.2, the amount excreted in faeces is considerably high; what differs between pathogens is mainly the duration of shedding, which ranges from days to weeks. Furthermore, the infectious dose is lower for some pathogens, such as Escherichia coli, Shigella spp., Rotavirus, and Giardia intestinalis, and higher for others, meaning that lower doses of these microorganisms are sufficient to cause infection. These pathogens deserve extra attention in QMRA studies on the reuse of human and animal excreta in agriculture. It is also important to evaluate zoonotic pathogens, as they are of particular importance to both public and animal health.

12.4 Inactivation of Pathogens During the Biogas Production—Anaerobic Bioreactors

Natural inactivation of pathogens is usually a slow process; it is therefore necessary to apply treatments aimed at disinfecting effluents and other wastes, such as human faeces, animal manures, and digestate from anaerobic biodigesters (Semenov et al. 2007; Sidhu 2001).

During anaerobic biodigestion, 90% (1 log10) of enteric bacteria and viruses can be inactivated (Fongaro et al. 2014). However, it is important to apply high-efficiency treatments that achieve reductions of 99.9% (3 log10) for most pathogens, depending on the initial pathogen load and the purpose of recycling, so that the digestate can be recycled safely.
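Percentage reductions and log10 reductions describe the same quantity: each log10 unit removes 90% of what remains, so 90% corresponds to 1 log10 and 99.9% to 3 log10. The helpers below are a minimal sketch of that conversion; the function names and the initial load of 10^6 organisms are hypothetical, chosen only to illustrate the arithmetic.

```python
import math


def percent_to_log10(percent_removed: float) -> float:
    """Convert a percentage reduction into log10 reduction units."""
    if not 0.0 <= percent_removed < 100.0:
        raise ValueError("percent_removed must be in [0, 100)")
    return -math.log10(1.0 - percent_removed / 100.0)


def log10_to_percent(log_reduction: float) -> float:
    """Convert log10 reduction units back into a percentage reduction."""
    return 100.0 * (1.0 - 10.0 ** (-log_reduction))


def surviving(initial_count: float, log_reduction: float) -> float:
    """Organisms left after a given log10 reduction."""
    return initial_count * 10.0 ** (-log_reduction)


for pct in (90.0, 99.0, 99.9, 99.99):
    print(f"{pct}% removal = {percent_to_log10(pct):.1f} log10")
# Hypothetical load of 1e6 organisms per gram: 1 log10 vs 3 log10 reduction
print(surviving(1e6, 1.0), surviving(1e6, 3.0))
```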

Alkaline methods are among the main treatments used to reduce pathogens in environmental matrices; they consist of adding alkaline compounds, such as ash and lime, to the waste. The effectiveness of this process is mainly due to the rise in pH, which alkalinizes the cell cytosol and interferes with protein activity, thereby inactivating microorganisms (Magri et al. 2013; Chandran et al. 2009).

Alkaline treatment also shifts the ammonium/ammonia equilibrium towards non-ionized ammonia (NH3), which is an important biocidal agent. Therefore, wastes with high ammonia concentrations, such as sludges and digestates from the biodegradation of human and animal manure, have good potential for ammonia sanitization. Note that this treatment needs to be performed outside the biogas reactor. NH3 inactivates pathogens because, being uncharged, it is soluble in lipids, which facilitates its entry into and diffusion within cells, where it can destabilize cells, destroy membranes, and denature proteins (Emmoth et al. 2011; Bujozek 2001). There is little research on the virucidal mechanism of NH3; however, it has been reported to cause cleavage of the viral genetic material and small structural changes in the virus, which can prevent viruses from entering the host cell and from replicating (Decrey et al. 2015). In addition to NH3, carbonates (\({\text{CO}}_{3}^{2 - }\)), formed as a consequence of pH and chemical equilibrium, probably from organic matter decomposition, have also been reported to have biocidal action (Magri et al. 2013; Chandran et al. 2009).
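Why raising the pH matters can be made concrete with the NH4+/NH3 acid–base equilibrium: the fraction of total ammonia nitrogen present as un-ionized NH3 rises steeply once the pH approaches and exceeds the pKa. The sketch below assumes a widely used empirical temperature relation for the pKa (often attributed to Emerson et al. 1975), which is not given in this chapter; the digestate ammonia concentration is hypothetical.

```python
def ammonium_pka(temp_c: float) -> float:
    """pKa of NH4+/NH3 versus temperature.

    Widely used empirical relation (often attributed to Emerson et al. 1975):
    pKa = 0.09018 + 2729.92 / T, with T in kelvin. Assumption: not from this chapter.
    """
    return 0.09018 + 2729.92 / (temp_c + 273.15)


def nh3_fraction(ph: float, temp_c: float) -> float:
    """Fraction of total ammonia nitrogen (TAN) present as un-ionized NH3."""
    return 1.0 / (1.0 + 10.0 ** (ammonium_pka(temp_c) - ph))


def free_ammonia(tan: float, ph: float, temp_c: float) -> float:
    """Free NH3 in the same units as the TAN input (e.g. mg N/L)."""
    return tan * nh3_fraction(ph, temp_c)


# Hypothetical digestate: 2000 mg N/L total ammonia nitrogen at 25 degrees C
for ph in (7.5, 8.5, 9.5, 10.5):
    print(f"pH {ph}: NH3 fraction {nh3_fraction(ph, 25.0):.3f}, "
          f"free NH3 {free_ammonia(2000.0, ph, 25.0):.0f} mg N/L")
```

The same relation also shows that warmer conditions lower the pKa and therefore increase the free NH3 fraction at a given pH.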

For example, Fongaro et al. (2014) studied pathogen inactivation by free ammonia in swine digestate in Brazil and achieved inactivation on the order of 7 log10 for enterobacteria and 4 log10 for enteric viruses in 23 days of treatment.
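The reported reductions can be turned into average inactivation rates, which makes the difference between bacteria and viruses easier to compare. The sketch below assumes constant, log-linear (first-order) inactivation over the 23 days; real inactivation curves often show shoulders or tailing, so these figures are rough averages only. The function names are hypothetical.

```python
def mean_log_rate(log_reduction: float, days: float) -> float:
    """Average inactivation rate in log10 units per day over the treatment."""
    return log_reduction / days


def days_for(target_log: float, rate: float) -> float:
    """Days for a target log10 reduction, assuming the rate stays constant."""
    return target_log / rate


TREATMENT_DAYS = 23.0
# Log10 reductions reported by Fongaro et al. (2014) after 23 days
reported = {"enterobacteria": 7.0, "enteric viruses": 4.0}

for group, logs in reported.items():
    k = mean_log_rate(logs, TREATMENT_DAYS)
    print(f"{group}: {k:.2f} log10/day; "
          f"T90 about {days_for(1.0, k):.1f} d; "
          f"3 log10 about {days_for(3.0, k):.1f} d")
```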

Heat is widely used in hygienization and disinfection processes, since it causes structural protein denaturation, enzyme inactivation, and nucleic acid denaturation, thus irreversibly preventing pathogen replication in excreta and digestates (Fong and Lipp 2005). Maheshwari et al. (2004) applied a temperature of 50 °C to inactivate adenovirus in substrates used for biodigestion and reported significant inactivation of HAdV 5, with a 4.0 log10 reduction within 10 min of exposure.

Some of the most important parameters to consider during thermal inactivation are the decimal reduction time (D_T), which is the time required to reduce the concentration of viable cells by one log unit (90%) at a given temperature T, and the thermal coefficient (z value), which is the temperature change required to change the D_T value by one log unit (tenfold). Both are related to the heat resistance of a microorganism in a given matrix (Wigginton et al. 2012; Pecson et al. 2007). However, it should be noted that using heat requires energy that could otherwise be used for other purposes.
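The D and z values are linked by the standard relation D(T) = D_ref × 10^((T_ref − T)/z), and the exposure time for an n-log10 reduction is n × D. The sketch below derives D at 50 °C from the 4.0 log10 reduction in 10 min reported by Maheshwari et al. (2004), assuming log-linear inactivation, and then extrapolates with a z value that is purely hypothetical, since none is given in the text. Function names are illustrative.

```python
def d_value(log_reduction: float, minutes: float) -> float:
    """Decimal reduction time D (min per log10) from a log-linear decline."""
    return minutes / log_reduction


def d_at_temperature(d_ref: float, t_ref: float, t: float, z: float) -> float:
    """D at temperature t from D at t_ref and the z value (same units).

    A rise of z degrees divides D by 10: D(t) = D_ref * 10 ** ((t_ref - t) / z).
    """
    return d_ref * 10 ** ((t_ref - t) / z)


def time_for_log_reduction(n_logs: float, d: float) -> float:
    """Exposure time needed for an n-log10 reduction at a given D."""
    return n_logs * d


# D at 50 degrees C from a 4.0 log10 reduction in 10 min (HAdV 5)
d50 = d_value(4.0, 10.0)           # 2.5 min per log10
Z_HYPOTHETICAL = 10.0              # degrees C; hypothetical, for illustration only
for temp in (45.0, 50.0, 55.0):
    d_t = d_at_temperature(d50, 50.0, temp, Z_HYPOTHETICAL)
    print(f"{temp:.0f} C: D = {d_t:.2f} min, "
          f"3 log10 in {time_for_log_reduction(3.0, d_t):.1f} min")
```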

In this context, applying economically feasible techniques to reduce pathogens in environmental matrices, such as those generated by biodigestion, is extremely important for reducing microbiological risks when the nutrients from this process are recycled.

12.5 Scenarios and Data of Risk Exposure on the Biogas Production Chain and Risk Management

In biogas treatment plants, as well as at the sites where excess sludge and digestate are recycled, there are several possible routes through which microbial risk exists to different extents. Here, we focus on the risk posed by sludge and digestate during their application to land for agricultural purposes, since that is considered the most sustainable use in the context of the circular economy.

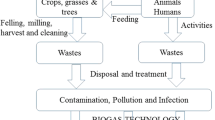

The production of digestate and sludge and the general routes of risk exposure for humans and animals are represented in Figs. 12.1, 12.2, 12.3, 12.4, 12.5, and 12.6.

During manure application on soil, the following main routes of exposure for humans and animals can be highlighted: exposure to aerosols and superficially contaminated soil (Fig. 12.3); exposure to groundwater contaminated by the infiltration of excreta through soil (Fig. 12.4); exposure to contaminated plants (crops) (Fig. 12.5); and exposure to surface water contaminated by agricultural runoff (Fig. 12.6).

The ingestion of contaminated soil and aerosols is normally referred to as indirect contact and accidental ingestion. Workers (in this case, farmers) accidentally ingest soil through hand-to-mouth contact (faecal–oral route), and their hands can become contaminated while working the soil, harvesting crops, or maintaining equipment and tools. Julian et al. (2018) ran simulations (100 per farmer) and predicted final Escherichia coli concentrations on both hands of 1.4 ± 1.3 log10 CFU/cm² across farmers. In this case, E. coli indicates the potential for physical adhesion of enteric pathogens to hands. Compared with the E. coli concentration in human faeces of 10^8 per gram (Table 12.2), the mean hand load found by Julian et al. (2018), 10^1.4 (about 25) CFU/cm², corresponds to less than 0.0001% (about 10^−6.6) of that concentration, which might still be sufficient to cause illness in the case of pathogenic E. coli.
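The comparison between hand contamination and faecal concentration is simple log arithmetic, reproduced below. Note that the two quantities have different units (CFU per cm² of skin versus organisms per gram of faeces), so the ratio is only a rough order-of-magnitude comparison, and the ±1.3 log10 spread reported by Julian et al. (2018) would shift it by more than an order of magnitude either way. Variable names are illustrative.

```python
HAND_LOG10_PER_CM2 = 1.4     # mean E. coli on hands, Julian et al. (2018), log10 CFU/cm2
FAECES_LOG10_PER_G = 8.0     # E. coli in human faeces, Table 12.2: 10^8 per gram

hand_count = 10 ** HAND_LOG10_PER_CM2                       # about 25 CFU/cm2
ratio = 10 ** (HAND_LOG10_PER_CM2 - FAECES_LOG10_PER_G)    # about 10^-6.6
print(f"hand load: {hand_count:.0f} CFU/cm2")
print(f"ratio: {ratio:.2e} ({ratio * 100:.6f} %)")
```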

Moazeni et al. (2017) estimated the infection risk for farmers in contact with soil irrigated with an effluent containing 12–16 pfu/mL of enterovirus. The probability of infection for farmers was 8.8 × 10^−1 per person per year (pppy), more than 2 log units higher than the tolerable infection risk of 2 × 10^−3 pppy targeted in the study.

Risk modelling can also be conducted from the opposite perspective. For instance, Kobayashi et al. (2017) set tolerable disease burdens of 10^−4 and 10^−6 disability-adjusted life years (DALYs) per person per year and calculated the reduction of norovirus genogroup II (GII) that would be required for a sewage effluent to be used for agricultural irrigation. This approach is widely used in decision-making and management processes, as well as in legislation.
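The reverse calculation can be sketched by inverting Eq. (12.1): fix a tolerable risk, find the largest dose that keeps the risk below it, and compare that dose with the untreated dose from Eq. (12.3) to obtain the required log10 reduction. This is a simplified illustration, not the method of Kobayashi et al. (2017): converting a DALY target into an infection-risk target would also require the probability of illness given infection and the disease burden per case, which are omitted here. All names and numbers are hypothetical.

```python
import math


def max_tolerable_dose(p_target: float, r: float) -> float:
    """Invert Eq. (12.1): largest dose whose per-event risk stays <= p_target."""
    return -math.log1p(-p_target) / r


def required_log_reduction(conc: float, volume: float, p_target: float, r: float) -> float:
    """Log10 reduction needed so that volume * treated concentration
    does not exceed the tolerable dose (0 if no treatment is needed)."""
    untreated_dose = conc * volume                 # Eq. (12.3) before treatment
    return max(0.0, math.log10(untreated_dose / max_tolerable_dose(p_target, r)))


# Hypothetical inputs, for illustration only
CONC = 1e4      # organisms per mL in the untreated effluent
VOLUME = 1.0    # mL ingested per exposure event
R = 0.01        # exponential dose-response parameter

for target in (1e-4, 1e-6):
    lrv = required_log_reduction(CONC, VOLUME, target, R)
    print(f"per-event risk target {target:.0e}: about {lrv:.1f} log10 reduction needed")
```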

Understanding the behaviour of enteric bacteria after application to soils is an important element in predicting exposure via adjacent water and in developing regulatory guidelines to manage the risk of faecal contamination of water from soils that have received manures (Haas et al. 1999).

The chemical composition of livestock wastes and the treatment applied after the biogas reactor are some of the elements that will influence the dynamics of surviving enteric bacteria following application to soils (Topp et al. 2009).

Topp et al. (2009) compared the risks of applying fresh untreated manure and treated manure in which a 3-log reduction had been achieved before land application. Without treatment, the risk of infection (expressed as a point estimate of the probability of infection per event) was 1.75 × 10^−4 from Cryptosporidium and 1.27 × 10^−2 from Campylobacter. In contrast, for treated livestock waste with a 1000-fold reduction in pathogen content, the risk was 1.75 × 10^−7 from Cryptosporidium and 1.27 × 10^−5 from Campylobacter.
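The 1000-fold drop in risk after a 3-log reduction follows from the near-linearity of dose–response curves at low doses, where P(d) ≈ r·d. The sketch below, using the exponential model of Eq. (12.1) with hypothetical values of r and dose, shows that the proportionality holds at low doses but breaks down at high doses, where the curve saturates and the same treatment lowers the risk much less than 1000-fold.

```python
import math


def p_inf_exponential(dose: float, r: float) -> float:
    """Eq. (12.1)."""
    return -math.expm1(-r * dose)


def risk_ratio(untreated_dose: float, r: float, log_reduction: float = 3.0) -> float:
    """Untreated risk divided by risk after a given log10 reduction of the dose."""
    treated_dose = untreated_dose * 10 ** (-log_reduction)
    return p_inf_exponential(untreated_dose, r) / p_inf_exponential(treated_dose, r)


R = 0.01   # hypothetical dose-response parameter
for d in (0.01, 1000.0):   # hypothetical untreated doses: low and high
    print(f"untreated dose {d}: risk falls {risk_ratio(d, R):.0f}-fold after 3 log10")
```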

This illustrates the importance of a multi-barrier approach for managing the risk from shed microorganisms. According to Topp et al. (2009), an effective multi-barrier strategy has three major components: (1) managing herd health to minimize the acquisition, potentiation, and release of zoonotic pathogens into the manure; (2) managing the manure during storage to reduce its pathogen content before release into the broader environment; and (3) applying the material to land at a judicious rate and under suitable land, climate, and crop conditions to minimize the off-site movement of contaminants into adjacent surface water or groundwater.

The multi-barrier approach was first applied to water supply systems to ensure safe drinking water; since then, the focus has been shifting from compliance monitoring towards this more holistic approach.
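One common way to account for several concentration-reducing barriers in series is to add their log10 reductions, which is equivalent to multiplying their surviving fractions; this assumes the barriers act independently. The sketch below uses illustrative, assumed log10 values rather than data from this chapter. Barriers such as herd-health management and application rate act mainly on prevalence and transport rather than on concentration, so they are not given log values here.

```python
def total_log_reduction(barriers: dict[str, float]) -> float:
    """Independent barriers in series: log10 reductions add
    (equivalently, surviving fractions multiply)."""
    return sum(barriers.values())


def remaining(initial: float, total_log: float) -> float:
    """Concentration left after the combined log10 reduction."""
    return initial * 10 ** (-total_log)


# Illustrative log10 reductions per barrier (assumed values, not chapter data)
barriers = {
    "anaerobic digestion": 1.0,
    "post-treatment, e.g. ammonia sanitization": 3.0,
    "washing produce before consumption": 1.0,
}
total = total_log_reduction(barriers)
print(f"combined reduction: {total:.1f} log10")
print(f"1e8 organisms per g -> {remaining(1e8, total):.0e} per g")
```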

During the application of biofertilizer to soil, there is also a risk related to aerosols, as mentioned above. According to Courault et al. (2017), the risk decreases with increasing distance from the emission source, and wind speed has a great impact on atmospheric dispersion.

For the exposure route presented in Fig. 12.5, the consumption of contaminated water or plants can be understood as direct contact. The barriers applied to reduce risk in this situation range from washing vegetables before consumption to applying water treatment processes.

Moazeni et al. (2017) also estimated the risk for lettuce consumers and found a lower level of infection risk and disease burden (about 10^−3), which was nevertheless higher than the WHO guideline threshold of 10^−4 DALY pppy.

Mok et al. (2014) evaluated the risks both for people who washed their food and for those who did not. For washers, the median probability of infection ranged from 2.94 × 10^−8 to 1.51 × 10^−3, and the median probability of illness per dose ranged from 1.35 × 10^−16 to 3.52 × 10^−7. For non-washers, the probabilities were one order of magnitude higher. Other important factors that can influence risk in these situations are the type of vegetable, the type of irrigation system, temperature and soil type, the time between harvesting and consumption, and the “resting” period after manure application (Mok and Hamilton 2014; Amoah et al. 2018). For instance, in the study by Amoah et al. (2018), consuming lettuce grown on sludge-amended soil would probably result in infections, but allowing 30 days between sludge application and harvest gave median infection risks similar to the WHO tolerable risk value (10^−4).

For the scenario of agricultural runoff and consequent contamination of surface water, several parameters can influence the extent of risk. Sensitivity analysis is usually performed to determine how the variability in the outputs can be apportioned quantitatively to different sources of variability in the inputs. Clarke et al. (2017) showed that the parameter that most affected the variance in model predictions for the ingestion of river water contaminated with E. coli was the time spent in the stream, which highlights the importance of the residence time of bacteria in that environment. The time that bacteria stay in the stream exposes them to environmental inactivation factors such as temperature, pH, and photolysis, which may in turn influence their growth or die-off rates. The other important parameters were water intake and the initial counts in surface runoff.
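A rank-correlation sensitivity analysis can be sketched as follows: sample the inputs, run the model, and compute the Spearman correlation between each input and the output risk; larger absolute values point to more influential inputs. The model below is a toy, not the model of Clarke et al. (2017): it assumes first-order die-off in the stream and the exponential dose–response model, and every distribution and constant is hypothetical. Spearman correlation is only one of several sensitivity measures, and the chapter does not state which measure Clarke et al. used.

```python
import math
import random


def ranks(values: list[float]) -> list[float]:
    """Rank positions, 1 = smallest (ties are negligible for continuous samples)."""
    order = sorted(range(len(values)), key=values.__getitem__)
    out = [0.0] * len(values)
    for position, index in enumerate(order, start=1):
        out[index] = float(position)
    return out


def pearson(x: list[float], y: list[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman rank correlation = Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def sensitivity(n: int = 5000, seed: int = 42) -> dict[str, float]:
    """Toy runoff model; every distribution and constant here is hypothetical."""
    rng = random.Random(seed)
    samples: dict[str, list[float]] = {
        "initial count in runoff": [], "time in stream": [], "water intake": []}
    risk = []
    k_die_off = 1.0   # first-order die-off rate per day (assumed)
    r = 0.001         # exponential dose-response parameter (assumed)
    for _ in range(n):
        c0 = rng.lognormvariate(math.log(100.0), 1.0)  # organisms per L
        t = rng.uniform(0.5, 5.0)                       # days in the stream
        v = rng.uniform(0.1, 2.0)                       # litres ingested
        d = v * c0 * math.exp(-k_die_off * t)           # dose after die-off
        samples["initial count in runoff"].append(c0)
        samples["time in stream"].append(t)
        samples["water intake"].append(v)
        risk.append(-math.expm1(-r * d))                # Eq. (12.1)
    return {name: spearman(vals, risk) for name, vals in samples.items()}


for name, rho in sensitivity().items():
    print(f"{name:>24}: Spearman rho = {rho:+.2f}")
```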

12.6 Final Considerations

The recovery of biogas for energy production in anaerobic biodigestion plants is already a reality in many countries. Conventional plants are moving from “treatment” plants to “resource recovery” plants, and further value can be obtained by recycling the organics and nutrients in the accumulated sludge and digestate leaving the bioreactors. However, the pathogen content is a limiting factor for safe nutrient recycling in agriculture. In this context, we have presented quantitative microbial risk assessment as an effective approach to estimating risks, which can be applied to any scenario of recycling liquid fractions from biogas reactors in agriculture.

References

Albihn A, Vinnerås B (2007) Biosecurity and arable use of manure and biowaste—treatment alternatives. Livest Sci 112:232–239

Amoah ID et al (2018) Concentration of soil-transmitted helminth eggs in sludge from South Africa and Senegal: a probabilistic estimation of infection risks associated with agricultural application. J Environ Manage 206:1020–1027

Ashbolt NJ (2004) Microbial contamination of drinking water and disease outcomes in developing regions. Toxicology 198(1–3):229–238

Baldivieso-Freitas P et al (2018) Crop yield, weed infestation and soil fertility responses to contrasted ploughing intensity and manure additions in a Mediterranean organic crop rotation. Soil Tillage Res 180

Besser TE et al (2001) Escherichia coli O157:H7 infection of calves: infectious dose and direct contact transmission. Epidemiol Infect 127:555; 129(2):427, 2002

Blaser MJ, Newman LS (1982) A review of human salmonellosis: I. Infective dose. Rev Infect Dis 4(6):1096–1106

Bujozek G (2001) Influence of ammonia and other abiotic factors on microbial activity and pathogen inactivation during processing of high-solid residues. Diss. Manitoba: University of Manitoba, 206 p

Carrington EG, Pike EB, Auty D, Morris R (1991) Destruction of faecal bacteria, enteroviruses and ova of parasites in wastewater sludge by aerobic thermophilic and anaerobic mesophilic digestion. Water Sci Technol 24(2):377–380

Castellanos-Navarrete A, Tittonell P, Rufino MC, Giller KE (2015) Feeding, crop residue and manure management for integrated soil fertility management: a case study from Kenya. Agric Syst 134:24–35

Chandran A, Pradhan SK, Heinonen-Tanski H (2009) Survival of enteric bacteria and coliphage MS2 in pure human urine. J Appl Microbiol 107(5):1651–1657

Clarke R et al (2017) A quantitative microbial risk assessment model for total coliforms and E. coli in surface runoff following application of biosolids to grassland. Environ Pollut 224:739–750

Coia JE (1998) Clinical, microbiological and epidemiological aspects of Escherichia coli O157 infection. FEMS Immunol Med Microbiol 20(1):1–9

Courault D et al (2017) Assessment and risk modeling of airborne enteric viruses emitted from wastewater reused for irrigation. Sci Total Environ 592:512–526

Decrey L, Kazama S, Udert KM, Kohn T (2015) Ammonia as an in situ sanitizer: inactivation kinetics and mechanisms of the ssRNA virus MS2 by NH3. Environ Sci Technol 49(2):1060–1067

De Roda Husman AM, Schets FM (2010) Climate change and recreational water-related infectious diseases. RIVM rapport 330400002

Dizon JJ et al (1967) Studies on cholera carriers. Bull World Health Organ 37(5):737

Emmoth E, Ottoson J, Albihn A, Belák S, Vinnerås B (2011) Ammonia disinfection of hatchery waste for elimination of single-stranded RNA viruses. Appl Environ Microbiol 77(12):3960–3966

Fatta-Kassinos D, Meric S, Nikolaou A (2011) Pharmaceutical residues in environmental waters and wastewater: current state of knowledge and future research. Anal Bioanal Chem 399:251–275

Fong T-T, Lipp EK (2005) Enteric viruses of humans and animals in aquatic environments: health risks, detection, and potential water quality assessment tools. Microbiol Mol Biol Rev 69(2):357–371

Fongaro G (2016) Higienização de dejetos suinícolas visando reciclo agrícola sanitariamente seguro [Sanitization of swine manure aiming at sanitarily safe agricultural recycling]. PhD thesis in Biotechnology and Biosciences (in Portuguese), Departamento de Biotecnologia e Biociências, Universidade Federal de Santa Catarina, Florianópolis, Santa Catarina, 244 p

Fongaro G, Viancelli A, Magri ME, Elmahdy EM, Biesus LL, Kich JD, Kunz A, Barardi CRM (2014) Utility of specific biomarkers to assess safety of swine manure for biofertilizing purposes. Sci Total Environ 479–480:277–283

Granlund K, Rankinen K, Etheridge R, Seuri P, Lehtoranta J (2015) Ecological recycling agriculture can reduce inorganic nitrogen losses – model results from three Finnish catchments. Agric Syst 133:167–176

Granstedt A, Schneider T, Seuri P, Thomsson O (2008) Ecological recycling agriculture to reduce nutrient pollution to the Baltic Sea. Biol Agric Hortic 26(3):279–307

Griffin PM, Tauxe RV (1991) The epidemiology of infections caused by Escherichia coli O157:H7, other enterohemorrhagic E. coli, and the associated hemolytic uremic syndrome. Epidemiol Rev 13:60–98

Haas CN, Rose JB, Gerba CP (1999) Quantitative microbial risk assessment. John Wiley & Sons, New York

Haas CN, Rose JB, Gerba CP (2014) Quantitative microbial risk assessment. John Wiley & Sons, New Jersey, 427 p

Hornick RB et al (1971) The Broad Street pump revisited: response of volunteers to ingested cholera vibrios. Bull N Y Acad Med 47(10):1181

Iocoli GA, Zabaloy MC, Pasdevicelli G, Gómez MA (2019) Use of biogas digestates obtained by anaerobic digestion and co-digestion as fertilizers: characterization, soil biological activity and growth dynamic of Lactuca sativa L. Sci Total Environ 11–19 (In press)

Julian TR et al (2018) High time-resolution simulation of E. coli on hands reveals large variation in microbial exposures amongst Vietnamese farmers using human excreta for agriculture. Sci Total Environ 635:120–131

Kafer SC (2015) Tratamento de lodo anaeróbio em wetlands construídos operados em alta taxa e com a recirculação de líquido percolado [Treatment of anaerobic sludge in constructed wetlands operated at a high rate and with recirculation of percolate]. Master's thesis in Environmental Engineering (in Portuguese), Departamento de Engenharia Sanitária e Ambiental, Universidade Federal de Santa Catarina, Florianópolis, Santa Catarina, 143 p

Kasorndorkbua C et al (2004) Routes of transmission of swine hepatitis E virus in pigs. J Clin Microbiol 42(11):5047–5052

Kobayashi N et al (2017) Removal of human pathogenic viruses in a down-flow hanging sponge (DHS) reactor treating municipal wastewater and health risks associated with utilization of the effluent for agricultural irrigation. Water Res 110:389–398

Leclerc H et al (2008) Microbial agents associated with waterborne diseases. Crit Rev Microbiol 28(4):371–409

Levine MM et al (1973) Pathogenesis of Shigella dysenteriae 1 (Shiga) dysentery. J Infect Dis 127(3):261–270

Magri ME (2013) Aplicação de processos de estabilização e higienização de fezes e urina humanas em banheiros secos segregadores [Application of stabilization and sanitization processes to human faeces and urine in urine-diverting dry toilets]. PhD thesis in Environmental Engineering (in Portuguese), Departamento de Engenharia Sanitária e Ambiental, Universidade Federal de Santa Catarina, Florianópolis, Santa Catarina, 195 p

Magri ME, Philippi LS, Vinnerås B (2013) Inactivation of pathogens in feces by desiccation and urea treatment for application in urine-diverting dry toilets. Appl Environ Microbiol 79(7):2156–2163

Maheshwari G, Jannat R, McCormick L, Hsu D (2004) Thermal inactivation of adenovirus type 5. J Virol Methods 118(2):141–146

Meng X-J et al (1998) Genetic and experimental evidence for cross-species infection by swine hepatitis E virus. J Virol 72(12):9714–9721

Moazeni M et al (2017) Estimation of health risks caused by exposure to enteroviruses from agricultural application of wastewater effluents. Water Res 125:104–113

Mok H-F, Hamilton AJ (2014) Exposure factors for wastewater-irrigated Asian vegetables and a probabilistic rotavirus disease burden model for their consumption. Risk Anal 34(4):602–613

Mok H-F, Barker SF, Hamilton AJ (2014) A probabilistic quantitative microbial risk assessment model of norovirus disease burden from wastewater irrigation of vegetables in Shepparton, Australia. Water Res 54:347–362

Ottosson J (2003) Hygiene aspects of greywater and greywater reuse. Doctoral thesis, Mark och vatten

Pecson BM, Barrios JA, Jiménez BE, Nelson KL (2007) The effects of temperature, pH, and ammonia concentration on the inactivation of Ascaris eggs in sewage sludge. Water Res 41(13):2893–2902

Semenov AV, van Bruggen AHC, van Overbeek L, Termorshuizen AJ, Semenov AM (2007) Influence of temperature fluctuations on Escherichia coli O157:H7 and Salmonella enterica serovar Typhimurium in cow manure. FEMS Microbiol Ecol 60(3):419–428

Sidhu J (2001) The role of indigenous microorganisms in suppression of Salmonella regrowth in composted biosolids. Water Res 35(4):913–920

Smith HI Jr, Freter R, Sweeney FJ Jr (1961) Enumeration of cholera vibrios in fecal samples. J Infect Dis 31–34

Topp E et al (2009) Livestock waste treatment systems for reducing environmental exposure to hazardous enteric pathogens: some considerations. Bioresour Technol 100(22):5395–5398

Tsarev SA et al (1992) Characterization of a prototype strain of hepatitis E virus. Proc Natl Acad Sci 89(2):559–563

Tuttle J et al (1999) Lessons from a large outbreak of Escherichia coli O157:H7 infections: insights into the infectious dose and method of widespread contamination of hamburger patties. Epidemiol Infect 122(2):185–192

Vinnerås B (2002) Possibilities for sustainable nutrient recycling by faecal separation combined with urine diversion. Thesis, Swedish University of Agricultural Sciences, Department of Biometry and Engineering, Uppsala, Sweden, 88 p

Weir MH et al (2017) Development of a microbial dose response visualization and modelling application for QMRA modelers and educators. Environ Model Softw 88:74–83

Wigginton KR, Pecson BM, Sigstam T, Bosshard F, Kohn T (2012) Virus inactivation mechanisms: impact of disinfectants on virus function and structural integrity. Environ Sci Technol 46(21):12069–12078

Winker M (2010) Are pharmaceutical residues in urine a constraint for using urine as a fertilizer? Sustain Sanitation Pract 3:7

World Health Organization et al (2016) Quantitative microbial risk assessment: application for water safety management. World Health Organization, Geneva

Copyright information

© 2019 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Magri, M.E., Carlon, P., Cruz, L.J.M., Dalri-Cecato, L. (2019). Closing the Loop on Biogas Plants: Recycling Digestate and Sludge on Agriculture and Microbial Risk Assessment. In: Treichel, H., Fongaro, G. (eds) Improving Biogas Production. Biofuel and Biorefinery Technologies, vol 9. Springer, Cham. https://doi.org/10.1007/978-3-030-10516-7_12

DOI: https://doi.org/10.1007/978-3-030-10516-7_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-10515-0

Online ISBN: 978-3-030-10516-7

eBook Packages: Energy (R0)