Abstract

With the advent of X-Ray free electron lasers (FELs), the field of serial femtosecond crystallography (SFX) was born, allowing a stream of nanocrystals to be measured individually and diffraction data to be collected and merged to form a complete crystallographic data set. This allows submicron to micron crystals, which were once at best an intermediate step towards larger, usable crystals, to be used in an experiment. SFX and its variants have opened new possibilities in structural biology, including studies with increased temporal resolution, extension to systems with irreversible reactions, and minimization of artifacts related to local radiation damage. Perhaps the most profound aspect of this newly established field is that “molecular movies,” in which the dynamics and kinetics of biomolecules are studied as a function of time, are now attainable for a broad variety of systems, as discussed in Chaps. 11 and 12. However, crystallogenesis has always been one of the historic challenges of crystallography, and preparing samples for serial crystallography methods is no exception. In this chapter, we focus on some of the specific characteristics and considerations inherent in preparing a suitable sample for successful serial crystallographic approaches.


3.1 Introduction

With the advent of X-Ray free electron lasers (FELs), the field of serial femtosecond crystallography (SFX) was born, allowing a stream of nanocrystals to be measured individually and diffraction data to be collected and merged to form a complete crystallographic data set. This allows submicron to micron crystals, which were once at best an intermediate step towards larger, usable crystals, to be used in an experiment. SFX and its variants have opened new possibilities in structural biology, including studies with increased temporal resolution, extension to systems with irreversible reactions, and minimization of artifacts related to local radiation damage. Perhaps the most profound aspect of this newly established field is that “molecular movies,” in which the dynamics and kinetics of biomolecules are studied as a function of time, are now attainable for a broad variety of systems, as discussed in Chaps. 11 and 12. However, crystallogenesis has always been one of the historic challenges of crystallography, and preparing samples for serial crystallography methods is no exception. In this chapter, we focus on some of the specific characteristics and considerations inherent in preparing a suitable sample for successful serial crystallographic approaches.

While this chapter’s title refers directly to “nano-”crystals, the following also applies to small crystals that are not strictly submicron. Synchrotron serial crystallography at a microfocus beamline is also a highly effective technique that is very similar to nanocrystallography at X-Ray FELs but requires larger crystals than the minimum needed for SFX. Furthermore, depending on the specifics of a given experiment, larger crystals, sometimes up to a few tens of microns in the largest dimension, may also be preferable at an X-Ray FEL; this is common for membrane proteins, as discussed in Chap. 4. In other cases, growth of crystals larger than a couple of microns tends to be elusive at best, a challenge common with G-protein coupled receptors (covered in depth in Chap. 10). In any case, the terms “nanocrystal” and “microcrystal” are used somewhat synonymously within the field, with “nanocrystal” appearing in the literature for a broad size regime. For the sake of brevity, we refer to all small crystals suitable for serial crystallography as “nanocrystals” henceforth.

3.2 Nanocrystallogenesis

When approaching nanocrystallogenesis, the parameters governing growth remain largely the same as in macrocrystallography, namely thermodynamics, kinetics, and solubility. The notable difference is that the objective lies in a different region of phase space. To additionally optimize diffraction quality, one must also consider and control crystal size and size homogeneity. These parameters affect data collection in serial crystallography, and failure to optimize them can cause sample delivery to malfunction and/or increase the time and sample needed to complete a data set. It is also very important to consider and prioritize characteristics for a given experiment, especially for a serial experiment aimed at something more complex than a single static structure.

In general, crystallogenesis can be thought of as a multidimensional phase space consisting of any and all conditions experienced by the protein in solution. A simplified depiction is shown in Fig. 3.1 as a 2D slice between protein concentration and a generic precipitant. In practice, however, a comprehensive phase space is highly complicated and can be sensitive to multiple additives, temperature, protein homogeneity, time, pH, etc., essentially anything that forms part of the crystallization environment. While each protein has its own unique phase space, there are generalities that can help guide optimization and avoid a brute-force approach beyond initial screening (even initial screening is not strictly brute force, as most commercial screens rely on empirical successes). It should also be noted that all the traditional pre-screening optimizations (purification, conformational and oligomeric homogeneity, detergent screening, mutagenic engineering, etc.) still play an immense role in the ability to obtain quality samples. We do not go into these details here but refer the reader to texts by Scopes [1] or Doublié [2] for additional reading on the topic.

Fig. 3.1 Generalized solubility phase diagram for crystallization. Illustrates the typical qualitative relationship between solubility and concentrations of protein and precipitating conditions (e.g., salt concentration, pH, temperature). As one or both of these concentrations are increased, the solubility tends to decrease until a supersaturated state is achieved where nucleation is able to occur. The segmented regions in the supersaturated phase qualitatively increase in their propensity for nuclei to form as they move away from the solubility curve. Once supersaturation becomes very large, disordered (non-crystalline) nuclei tend to be favored, illustrated by the amorphous precipitate region in the top right

3.2.1 Thermodynamics of Solubility and Nucleation

In its most fundamental sense, crystal formation is nothing more than the controlled precipitation of a molecule or molecular system such that translational symmetry is achieved through free energy minima. The process is driven thermodynamically through solubility and intermolecular forces. This holds for crystals of any size, and, just as in the macrocrystallogenesis of macromolecules, the first step is to find a foothold within the broad parameter space governing the particular sample of interest. This typically involves trying many conditions in a broad screen, sparsely sampling phase space to look for trends and patterns in solubility [3]. It is important to remember that, in order to find precipitating conditions, one must consider a plethora of variables, including not only the concentration and composition of the protein and mother liquor but also pH, temperature, time and method, among others. Fig. 3.1, a generic crystallization diagram, is a useful tool for visualizing phase space, but it should be stressed that it is simplified: as mentioned above, many variables govern the actual phase space, making it n-dimensional. The “precipitating condition” on the x-axis can be thought of either as representing the entire suite of precipitating variables other than the protein concentration itself, or as a single variable, amounting to a “slice” of the n-dimensional phase space.

In contrast to macrocrystallogenesis, where a single or a few large crystals are desired, the main goal for a serial crystallography sample is to obtain many small crystals. That is to say, the objective is to reach a completely different area of phase space. Highly supersaturating conditions are the crux of this, allowing the formation of many nuclei and rapid depletion of the protein concentration. In Fig. 3.1, this corresponds to approaching the border between the nucleation and amorphous precipitate regions. To achieve this and guide crystallogenic optimization, we must first consider how nucleation and crystallization are driven.

For spontaneous nucleation and crystal growth to be favorable, the free energy of the system must decrease during the process. From a physicochemical perspective, the formation of nuclei is a stochastic function of concentration. As the concentration increases, so does the chance that macromolecules collide and, subsequently, that some of these collisions occur with a favorable orientation that lowers the local energy (collisions with unfavorable orientations also occur but eventually dissipate). In terms of the free energy of crystallization, this condition can be written as

$$\Delta G_{\mathrm{cryst}} = \Delta H_{\mathrm{cryst}} - T\,\Delta S_{\mathrm{cryst}} < 0 \qquad (3.1)$$

Since a crystal is inherently ordered, the entropy change of the macromolecules upon crystallization is always negative (a positive contribution to the free energy). Therefore, the loss of degrees of freedom of the protein must be compensated by an entropic gain from disrupted solvent shells (there may also be an enthalpic contribution, but it has generally been shown to be minimal in comparison [4]). Even after such an event, the solution remains dynamic, and subsequent collisions can cause nuclei to grow or disperse. Phase transitions in general (e.g., nanocrystallization) are intrinsically a competition between destabilizing interfacial (surface area) free energy components and stabilizing bulk (volume) free energy components. For simplicity, consider an ideal case in which the surface energy does not vary with orientation (i.e., a spherical nucleus). The free energy of the nucleus as a function of its radius then takes the Gibbs-Thomson form for a condensed droplet, namely

$$\Delta G(r) = 4\pi r^{2}\gamma - \frac{4\pi r^{3}}{3\nu}\,k_{B}T\ln S \qquad (3.2)$$

where k_B and T are the Boltzmann constant and temperature, S is the supersaturation (the ratio of the protein concentration to its solubility), ν is the volume element occupied by one additional molecule in the crystal packing, r is the radius of the droplet, and γ is the specific surface free energy [5]. Certainly, in real nucleation γ depends anisotropically on facet composition, but the fundamental form of the equation remains a competition between a positive surface term (acting similarly to an activation barrier) and a negative (stabilizing) volumetric term that dominates as r becomes larger. Consequently, a critical radius r* is obtained by setting the derivative of Eq. (3.2) with respect to r to zero:

$$\frac{d\,\Delta G}{dr} = 8\pi r\gamma - \frac{4\pi r^{2}}{\nu}\,k_{B}T\ln S = 0 \quad\Rightarrow\quad r^{*} = \frac{2\gamma\nu}{k_{B}T\ln S} \qquad (3.3)$$

In essence, a nucleus reaches critical size when the stabilizing volumetric contribution gained by adding one more molecule outweighs the accompanying increase in surface energy [5]. To reach this point, however, smaller, quasi-stable (subcritical) nuclei must keep growing against an uphill free energy barrier until the critical size is reached.
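The competition between the surface and volume terms can be checked numerically. The Python sketch below evaluates the spherical-nucleus free energy ΔG(r) = 4πr²γ − (4πr³/3ν) k_BT ln S, its critical radius r* = 2γν/(k_BT ln S), and the barrier height ΔG(r*) = 16πγ³ν²/(3(k_BT ln S)²), which follows from substituting r* back into ΔG(r). The values of γ, ν, T and S are hypothetical and chosen only for illustration; they are not measured values for any particular protein.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant (J/K)


def nucleus_free_energy(r, gamma, nu, T, S):
    """Spherical nucleus: surface term minus bulk term (J)."""
    surface = 4.0 * math.pi * r**2 * gamma
    bulk = (4.0 * math.pi * r**3 / (3.0 * nu)) * K_B * T * math.log(S)
    return surface - bulk


def critical_radius(gamma, nu, T, S):
    """Radius at which d(Delta G)/dr = 0; requires supersaturation S > 1."""
    if S <= 1.0:
        raise ValueError("a finite critical radius requires supersaturation S > 1")
    return 2.0 * gamma * nu / (K_B * T * math.log(S))


def barrier_height(gamma, nu, T, S):
    """Delta G evaluated at r*: 16*pi*gamma^3*nu^2 / (3*(k_B*T*ln S)^2)."""
    dmu = K_B * T * math.log(S)
    return 16.0 * math.pi * gamma**3 * nu**2 / (3.0 * dmu**2)


if __name__ == "__main__":
    # Hypothetical, illustrative parameters (not measured values).
    gamma = 2e-4   # surface free energy (J/m^2)
    nu = 1e-25     # volume per molecule in the crystal (m^3)
    T = 293.0      # temperature (K)
    for S in (1.5, 3.0, 6.0):
        r_star = critical_radius(gamma, nu, T, S)
        barrier_kT = barrier_height(gamma, nu, T, S) / (K_B * T)
        print(f"S={S}: r*={r_star * 1e9:.1f} nm, barrier={barrier_kT:.1f} k_BT")
```

Raising S shrinks both r* and the barrier, which is a quantitative way of saying that higher supersaturation makes critical nuclei easier to reach.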

For any degree of supersaturation, critical nuclei can form given enough time, but at low levels of supersaturation, dissolution of subcritical nuclei dominates. This region is typically represented as the heterogeneous nucleation or growth zone (see Fig. 3.1). As can be understood qualitatively, higher degrees of supersaturation lead to more collision events and shorter, more feasible time scales for critical nuclei to form. This is represented as the spontaneous nucleation zone. At extremely high supersaturation reached through increased protein concentration, collisions become so frequent that they overcome the preference for the energetically favorable orientations necessary for quasi-stable nuclei, as the lifetimes of unstable nuclei are outcompeted by collisions. Alternatively, increasingly strong precipitating conditions also lead to higher supersaturation and change the potential energy surface of collisions, resulting in slower relaxation. Both of these can lead to critical radii being reached without the molecules exhibiting translational symmetry, resulting in an amorphous precipitate. It should be noted that, other than the solubility line, the zone boundaries represented in crystallization phase diagrams are not well defined and are, in the absence of a conventional metric, somewhat arbitrary. This does not diminish their usefulness, especially in conveying and differentiating the phase space trajectories experienced in different methods. For any set of sample characteristics, fine screening of solution content and physical parameters is crucial. One of the most impactful factors in reaching your goal is the chosen method, to which the following section is dedicated.
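How steeply nucleation responds to supersaturation can be illustrated with the classical nucleation theory expression J = A exp(−ΔG*/k_BT), where ΔG* = 16πγ³ν²/(3(k_BT ln S)²) is the barrier at the critical radius of a spherical nucleus. The sketch below assumes, for simplicity, that the kinetic prefactor A does not depend on S (in reality it does), so only ratios of rates are meaningful. The γ, ν and T values are hypothetical and serve only to show the trend.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant (J/K)


def barrier_over_kT(gamma, nu, T, S):
    """Nucleation barrier Delta G* / (k_B T) for a spherical nucleus, S > 1."""
    kT = K_B * T
    return 16.0 * math.pi * gamma**3 * nu**2 / (3.0 * kT**3 * math.log(S) ** 2)


def log10_rate_ratio(S, S_ref, gamma, nu, T):
    """log10 of J(S)/J(S_ref), assuming an S-independent prefactor A."""
    delta = barrier_over_kT(gamma, nu, T, S_ref) - barrier_over_kT(gamma, nu, T, S)
    return delta / math.log(10.0)


if __name__ == "__main__":
    gamma, nu, T = 2e-4, 1e-25, 293.0  # hypothetical, illustrative values
    for S in (1.5, 2.0, 3.0, 5.0, 8.0):
        print(f"S={S}: barrier={barrier_over_kT(gamma, nu, T, S):6.1f} k_BT, "
              f"log10(J/J(S=2))={log10_rate_ratio(S, 2.0, gamma, nu, T):+6.1f}")
```

Because the barrier scales as 1/(ln S)², the computed rate ratio spans many orders of magnitude over a modest range of S, mirroring the qualitative jump from the growth zone into the spontaneous nucleation zone.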

3.2.2 Methods

It has been almost 60 years since the first protein crystal structure was published [6], and even longer since the first protein crystals were observed in 1840 [7]. Since then, many ways to produce macromolecular crystals have been devised. Vapor diffusion, batch, free interface diffusion, and dialysis are among the most historically popular ways to make macrocrystals, each with its own benefits and challenges (these and other methods have been covered extensively elsewhere [8, 9], and the reader is referred to these publications for a detailed review). Again, when aiming for nanocrystals, we are simply trying to access a different area of phase space, so in most cases these techniques can simply be adapted to suit our purpose. Another pervasive theme in sample production for serial crystallography is the sheer mass of protein needed for a successful data set, sometimes hundreds of milligrams! This is certainly something to consider and, when possible, scaling up crystallization setup volumes should be considered, as it can improve consistency throughout data collection in addition to reducing the tedium of sample preparation.
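To put protein mass in perspective, it can help to estimate how many crystals a given amount of protein corresponds to, since that number scales with the inverse cube of crystal size. The sketch below assumes cubic crystals, a protein density of about 1.35 g/cm³, a solvent content of 50% by volume and a user-supplied crystallization yield; all of these are illustrative assumptions, not values from this chapter.

```python
def protein_mass_per_crystal_g(edge_um, solvent_fraction=0.5, protein_density_g_cm3=1.35):
    """Mass of protein (g) in one cubic crystal with the given edge length (um)."""
    volume_cm3 = (edge_um * 1e-4) ** 3  # 1 um = 1e-4 cm
    return volume_cm3 * (1.0 - solvent_fraction) * protein_density_g_cm3


def crystals_from_mass(protein_mg, edge_um, yield_fraction=0.5, **kwargs):
    """Approximate number of crystals obtainable from protein_mg of protein.

    yield_fraction is a hypothetical fraction of the protein that ends up in crystals.
    """
    crystallized_g = protein_mg * 1e-3 * yield_fraction
    return crystallized_g / protein_mass_per_crystal_g(edge_um, **kwargs)


if __name__ == "__main__":
    for edge in (0.5, 1.0, 5.0, 20.0):
        n = crystals_from_mass(protein_mg=1.0, edge_um=edge)
        print(f"{edge:5.1f} um crystals from 1 mg protein: ~{n:.2e}")
```

Because the count scales with the inverse cube of the edge length, halving crystal size yields roughly eight times as many crystals from the same amount of protein.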

It is important to remember that crystals are not grown statically, and the method and its implementation will largely affect experimental crystallogenesis results. Once a foothold condition is found, fine screening around it should be done with multiple methods. This is especially important for nanocrystals because of the multitude of parameters that need to be optimized simultaneously. While one method may give the best diffraction, this must sometimes be weighed against size, yield or even growth time. It is important to keep the specifics of the experiment at hand in mind. If the goal is simply to obtain a novel structure of a protein that resists forming large, well-ordered crystals, then diffraction quality will of course take precedence. However, this is not always the case. For example, in a kinetic study with substrate mixing, there are multiple variables to optimize simultaneously. In this type of experiment, as reaction time regimes become short, size and size homogeneity become increasingly important for maintaining temporally homogeneous data sets along a reaction timeline. Of course, improving resolution is still very important, but as long as the resolution is sufficient to see conformational changes in a particular system, one's efforts may be better focused on the other parameters.

3.2.2.1 Adaptation of Existing Conditions

While obtaining structures from samples that only seem to form small crystals is certainly a benefit of SFX, such samples represent only a fraction of targets. The ability to “outrun” radiation damage, the high temporal resolving power and the extreme brilliance of an X-Ray FEL open completely new areas of study and allow some of the shortcomings of other light sources to be overcome. For example, the structures of metalloproteins can be determined without site-specific radiation damage [10, 11], irreversible reactions and conformational homogeneity in transient states can be probed [12, 13], and ultrafast time regimes (sub-ps) are now accessible for studying dynamics [14, 15]. This opens many avenues of progress for proteins that already have a static crystal structure and, consequently, well-established crystallization conditions. With this in mind, a primary goal of this chapter is to guide the translation of existing macrocrystallogenic conditions into those suitable for SFX, and initial conditions are assumed to be available.

By far, the most common and effective way to get a foothold on possible crystallization conditions is large-scale sparse-matrix screening, and this remains true for nanocrystallization. The results are interpreted differently when searching for conditions that give nanocrystals, but in most cases a large-crystal “hit” in a screen can be optimized into nanocrystal conditions, just as a shower of crystals can be optimized into macrocrystal conditions. It should also be noted that most large-scale screening is done by vapor diffusion. While vapor diffusion can still yield nanocrystals, the drop moves through phase space as it concentrates by evaporation, and the method is generally “slow” at inducing nucleation. In its traditional form, it is also an extremely tedious way to obtain the milligrams of sample generally needed for a serial crystallography experiment. That is not to say that it cannot be used, and one can certainly imagine a volumetrically upscaled sitting drop setup, but it is rarely the most convenient or optimal technique. It is important to keep in mind that, in general, stronger precipitating conditions lead to smaller crystals. While this is certainly true for chemical precipitants (e.g., salt, polyethylene glycol (PEG)), it is worthwhile to think about kinetics, or time, as well. For example, in a vapor diffusion experiment, the volume and concentration of the well solution control how fast the sample cocktail concentrates via evaporation. Parameters such as temperature or viscosity can also have a large effect on the rate of nucleus formation. For example, a highly viscous precipitant (e.g., PEG) can slow down the rate at which protein molecules collide in solution compared with a lower-viscosity precipitant, which favors much faster diffusion. Likewise, crystallogenesis at a higher temperature increases the thermal energy available in solution, giving faster diffusion and thus tending to favor more nuclei and smaller crystals (of course, caution must be taken when varying the temperature too much, to avoid unwanted effects such as denaturation or the expansion of water upon freezing). The speed of a method depends on its trajectory through the crystallization diagram: the time spent in each region of phase space dictates the overall results of a given setup.
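The influence of viscosity and temperature on how quickly protein molecules encounter one another can be illustrated with the Stokes-Einstein relation, D = k_BT/(6πηr_h), for a sphere of hydrodynamic radius r_h in a medium of viscosity η. The sketch below uses a hypothetical 3 nm hydrodynamic radius and illustrative viscosities; note that η itself depends on temperature, so both effects have to be supplied explicitly.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant (J/K)


def stokes_einstein_D(T_kelvin, viscosity_pa_s, hydrodynamic_radius_m):
    """Translational diffusion coefficient (m^2/s) of a sphere."""
    return K_B * T_kelvin / (6.0 * math.pi * viscosity_pa_s * hydrodynamic_radius_m)


if __name__ == "__main__":
    r_h = 3e-9  # hypothetical protein hydrodynamic radius (m)
    cases = {
        "water-like, 20 C": (293.15, 1.0e-3),
        "water-like, 4 C": (277.15, 1.57e-3),  # approximate viscosity of water near 4 C
        "viscous PEG solution, 20 C (hypothetical)": (293.15, 10e-3),
    }
    for label, (T, eta) in cases.items():
        print(f"{label}: D = {stokes_einstein_D(T, eta, r_h):.2e} m^2/s")
```

A tenfold increase in viscosity at constant temperature lowers D tenfold, consistent with the slower collisions described above; cooling water from 20 °C to 4 °C lowers D through T and, more strongly, through the rise in η.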

3.2.2.2 Free Interface Diffusion

One method that has been adapted from a traditional technique is nanocrystalline free interface diffusion (FID), originally described by Kupitz et al. in 2014 [16] and shown in Fig. 3.2. In an FID setup, the protein and precipitant solutions are combined to create a layered mixture, similar to the microcapillary approaches used traditionally [17]. The difference lies in the need for larger volumes and high nucleation rates. Typically, the less dense of the two solutions (usually the protein solution) is first aliquoted into a vessel, often a microcentrifuge tube, whose sloped sides can serve as an additional parameter by influencing the volume and concentration profile of the mixing region. A quick centrifugation can help remove any bubbles and create a flat interface before the second solution is added. Once a flat interface is obtained, the denser solution is added dropwise through the center of the surface. It forms a bottom layer and perturbs the interface slightly, giving a small-volume mixing zone. This allows very high concentrations of each solution at the interface, higher than can be achieved with thorough mixing (most other methods require mixing a protein-containing solution with a precipitant-containing solution, which necessarily dilutes both). The perturbation from dropping one solution through the other tends to speed up the process, favoring nucleation by creating a minimal but necessary mixing region.

Fig. 3.2 Schematic of a nanocrystal free interface diffusion (FID) setup. The denser solution (either protein or precipitant) is added dropwise to the center of the other, producing a layered setup with a mixing region between the layers. The mixing region acts as an interface with high local concentrations of both protein and precipitating conditions, allowing diffusive mixing. Its concentrations and volume depend upon the container geometry, droplet size and the viscosities of the two solutions. The sloped sides of a microcentrifuge tube (pictured here) allow the surface area-to-volume ratio to be varied easily, though this should be taken into account when attempting to upscale a setup (adapted from Kupitz et al. [16])

The access to high-nucleation regions of phase space at this interface can cause nanocrystals to form; as they grow larger, they tend to settle towards the bottom layer. When the precipitant is denser, as is common for precipitants containing high concentrations of salt or PEG, this acts as a sort of auto-quenching, as the crystals settle away from the free-protein layer and into the precipitant-rich layer. Different volumetric ratios, total volumes and even droplet radii can have a profound effect on the results of this method, and upscaling while conserving volume ratios tends to eventually degrade reproducibility and quality. This limit is highly specific to a given protein/mother liquor combination and is likely a function of the mixing region profile, which depends upon surface area and volume ratios, viscosities and perturbation. Gentle centrifugation can enhance gravitational settling and can also expedite crystal formation and promote uniform growth. As can be expected, the two layers eventually mix completely over extended periods; to prevent any loss of quality or even dissolution, crystals should be harvested and/or quenched before diffusion is complete (the time sensitivity of this will be a function of the miscibility of the layers as well as the volume-to-interfacial surface area ratio). This method can be particularly useful when precipitant and/or protein concentrations are constrained by solubility in other methods and a smaller crystal size is desired, because saturated concentrations can be reached at the interface. It should be noted, however, that reproducibility is sensitive to even minor differences in setup, due to the many variables involved, such as volume, ratio, drop size, position of the perturbation at the interface, and container (Fig. 3.3).

Fig. 3.3 Nanocrystal FID phase diagram. The red line shows a conceptual path experienced by proteins moving through the interface into the mixing region and finally depositing as part of a crystal. The spacing of the arrows denotes a likely temporal trajectory: diffusion slowly allows the protein to enter the mixing region, after which it adsorbs more rapidly onto a nucleus before settling as crystal growth is achieved

3.2.2.3 Batch Nanocrystallization

Batch crystallization is a “cocktail” approach in which the protein and precipitating conditions are homogenized. Owing to its ease and simplicity, it is particularly useful for making the large volumes often necessary for SFX experiments. It has the added benefits of reduced sensitivity to user technique and to volumetric scaling, though care should be taken to ensure that scaling does not compromise homogeneity. As Fig. 3.4 shows, there is no movement through phase space prior to nucleation (or its absence). Protein stock and precipitating mother liquor are typically added to a vial or microcentrifuge tube and homogenized either by pipette mixing or with a magnetic stir bar. The stir bar keeps the mixture homogeneous as protein begins to precipitate and allows either the protein or the precipitant to be added slowly, avoiding high-concentration interfaces with significant contact time. Analogously, when pipette mixing, multiple aliquots can be serially pipette mixed to introduce the precipitating conditions more gradually. This is perhaps the most convenient method, as it is a short, simple setup that can usually be upscaled to full experimental volumes suitable for consistent data collection. While this method can in principle be carried out in a vessel of any size, experimental demand usually requires a few milliliters of sample for a full data set. Fine screening with a dilution gradient using a crystallization robot can help explore specific batch conditions prior to upscaling. It is also worth noting that microfluidic approaches have recently been used successfully to fine screen batch conditions with minimal sample volumes [18].
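Setting up a batch dilution gradient, and later upscaling it, comes down to simple volume arithmetic: each component's volume is its final concentration times the total volume divided by its stock concentration, with buffer making up the remainder. The sketch below is a hypothetical helper (not part of any robot's software); it assumes additive volumes and matching units for final and stock concentrations, and the stock values in the example are illustrative only.

```python
def batch_volumes(protein_final, protein_stock, precip_final, precip_stock, total_volume):
    """Return (protein, precipitant, buffer) volumes for one batch setup.

    Concentrations can be in any unit as long as final and stock values match
    (e.g. mg/mL for protein, % w/v for precipitant). Volumes are assumed additive.
    """
    v_protein = protein_final * total_volume / protein_stock
    v_precip = precip_final * total_volume / precip_stock
    v_buffer = total_volume - v_protein - v_precip
    if v_buffer < 0:
        raise ValueError("stocks are too dilute to reach these final concentrations")
    return v_protein, v_precip, v_buffer


def precipitant_gradient(start, stop, steps):
    """Evenly spaced final precipitant concentrations for a fine screen."""
    if steps < 2:
        return [start]
    step = (stop - start) / (steps - 1)
    return [start + i * step for i in range(steps)]


if __name__ == "__main__":
    # Hypothetical stocks: 20 mg/mL protein, 50% w/v PEG; 100 uL screening drops.
    for peg in precipitant_gradient(10.0, 20.0, 5):
        vp, vx, vb = batch_volumes(10.0, 20.0, peg, 50.0, 100.0)
        print(f"PEG {peg:4.1f}%: protein {vp:.1f} uL, PEG {vx:.1f} uL, buffer {vb:.1f} uL")
    # Upscaling a chosen condition keeps the ratios and multiplies the volumes.
    print(batch_volumes(10.0, 20.0, 15.0, 50.0, 2000.0))
```

Keeping the final concentrations fixed while changing only total_volume is one straightforward way to carry a fine-screened condition to the milliliter scale without altering its position in phase space.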

Fig. 3.4 Batch crystallization phase diagram. Illustration of the “cocktail” method, in which protein and precipitant are homogenized, essentially picking a supersaturated point in phase space and letting it evolve toward equilibrium. As can be seen, crystal size (among other parameters) is highly sensitive to these conditions and tends toward smaller crystals further away from the solubility curve. The use of seeding is also highlighted here, as it often allows milder conditions to give a similar outcome. Seeding can also encourage homogeneity and accelerate crystallogenesis

3.2.2.4 Other Methods and Sample Delivery Considerations

There are many other methods that have been used successfully to grow macromolecular nanocrystals, although they are thus far somewhat less general. Almost any method used for macrocrystal growth can be adapted to nanocrystal generation; the key is choosing parameters that enter a different region of phase space. In fact, even producing nanocrystals by mechanically crushing macrocrystals has had success [19], although caution should be taken with this approach, as homogeneity and quality can be severely impacted to a degree that depends strongly on the crystal contacts within the crystal.

A particularly interesting crystallization method that should be mentioned is in vivo crystallization upon over-expression. Using baculovirus-Sf9 cells, multiple proteins have now been shown to crystallize in vivo [20], and in one case un-lysed cells were injected successfully [21]. While the mechanism behind in vivo crystallization and its general applicability are currently unclear, progress in this area is certainly worth monitoring.

One crystallization method that has been extremely successful with membrane proteins, particularly G-protein coupled receptors, is the use of the lipidic cubic phase (LCP) as both a crystallization and a delivery medium. LCP is a bicontinuous mesophase whose membrane-mimetic properties allow type I crystal packing (i.e., stacked, membrane-embedded planar 2D crystals lacking detergent micelles [22]), typically leading to tighter packing and often higher resolution than obtainable with crystals grown in detergents or other surfactants (in surfo). Chapter 4 is dedicated to this method, as it has been so successful with some of the most challenging proteins.

One of the clear advantages of an LCP-compatible system for serial crystallography is the ability to create a much slower-moving viscous jet, which reduces sample consumption by orders of magnitude. This reduction in sample requirements has driven the development of alternative viscous carriers, into which pre-grown crystals can be embedded, requiring 1–2 orders of magnitude less material [23,24,25].
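The savings from a slower viscous jet follow directly from volumetric flow rate multiplied by collection time. In the sketch below, the flow rates, collection time and protein concentration are hypothetical round numbers chosen only to show the arithmetic; real values depend on the injector, the carrier and the sample.

```python
def sample_consumed(flow_rate_ul_per_min, hours, protein_mg_per_ml):
    """Return (volume in mL, protein in mg) consumed during continuous delivery."""
    volume_ml = flow_rate_ul_per_min * hours * 60.0 / 1000.0
    return volume_ml, volume_ml * protein_mg_per_ml


if __name__ == "__main__":
    hours = 12.0    # hypothetical total collection time
    protein = 10.0  # hypothetical protein content of the delivered sample (mg/mL)
    for label, flow in (("liquid jet (hypothetical)", 10.0),
                        ("viscous jet (hypothetical)", 0.2)):
        vol, mg = sample_consumed(flow, hours, protein)
        print(f"{label}: {vol:.2f} mL, {mg:.1f} mg protein")
```

With these placeholder numbers the difference is a factor of 50, i.e. between one and two orders of magnitude; since consumption is linear in flow rate, any slower carrier reduces protein demand proportionally.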

A useful tool that can be implemented with any method is seeding, the use of pre-grown crystals to “seed” nucleation. These seeds are of course obtained by some initial crystallization method, but adding them to a subsequent crystallization attempt can have a profound effect. Thinking back to the crystallization phase diagrams, seeding makes different areas of phase space accessible. Using seeds also tends to encourage homogeneity in crystal size [26] and across different batches. The most convenient side effect is that, while pursuing nanocrystallogenic optimization, any sample made along the way can be used for subsequent trials and can easily be doped into any method.

3.2.3 Stability and Storage

Since serial crystallography avoids radiation damage by continuously replenishing the sample during data collection, cryocooling techniques and their optimization are, in general, unnecessary. Instead, one must consider how the sample is stored and handled prior to sample introduction to best preserve diffraction quality and the physical characteristics optimized during crystallogenesis.

Crystal size is one of the characteristics most sensitive to the storage method and technique. Ideally, crystals are kept in their mother liquor or a variant thereof. This has the benefit of requiring only resuspension of the sample prior to introduction and data collection (once an optimal crystal density has been determined and achieved). It is not uncommon, however, for the precipitating conditions used in initial crystallogenesis to allow further growth over time, since many crystallization experiments have a slow growth phase due to supersaturated conditions in the mother liquor. This can occur as free protein continues to adsorb to crystal surfaces. It is therefore highly advisable either to remove uncrystallized protein as soon as the desired crystals are obtained or to further decrease solubility, though the impact depends strongly on how long the sample must be stored and on the specific conditions. Even when an optimized method leaves little protein in solution, this step can still be necessary. Fortunately, it can usually be achieved by allowing the crystals to settle, carefully removing the supernatant and replacing it with fresh mother liquor, effectively “washing” the crystals. Note that this shifts the solution out of equilibrium, causing surface molecules to dissolve back into solution until equilibrium is re-established. For larger crystals this can be negligible, but for smaller crystals it may be significant; it may then be advisable to find a minimal concentration that maintains stability or to shift to stronger precipitating conditions, effectively decreasing the equilibrium concentration of free protein.
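The effectiveness of repeated washing can be estimated with a simple dilution model: if, after the crystals settle, a volume V_ret of old solution is left behind and the suspension is topped up to V_tot with fresh mother liquor, each wash leaves a fraction V_ret/V_tot of the previous free protein. The sketch below assumes ideal mixing and ignores the re-dissolution of surface molecules described above, so it gives a lower bound on the free protein actually present; the volumes in the example are hypothetical.

```python
def residual_free_protein(v_retained, v_total, washes):
    """Fraction of the original free protein left after n ideal washes."""
    if not 0 < v_retained < v_total:
        raise ValueError("retained volume must be positive and smaller than total volume")
    return (v_retained / v_total) ** washes


def washes_needed(v_retained, v_total, target_fraction):
    """Smallest number of ideal washes that brings free protein to or below target."""
    n = 0
    while residual_free_protein(v_retained, v_total, n) > target_fraction:
        n += 1
    return n


if __name__ == "__main__":
    # Hypothetical: 100 uL suspension, 10 uL left above the settled crystals.
    for n in range(4):
        print(n, residual_free_protein(10.0, 100.0, n))
    print("washes for <=0.5% free protein:", washes_needed(10.0, 100.0, 0.005))
```

Leaving less supernatant behind per wash reduces the number of washes needed, which can be traded off against the risk of disturbing settled nanocrystals.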

Ostwald ripening, the process by which larger crystals tend to grow while smaller ones tend to dissolve due to thermodynamic favorability, may also alter the size, homogeneity and number of individual crystals. This occurs because, even though crystals have formed, the solution is effectively in a dynamic equilibrium, with solubility low but not strictly zero. Because molecules on the surface of a crystal make fewer contacts with the bulk crystal, they periodically detach into solution before stochastically recombining with a crystal. Smaller crystals have a larger surface-to-volume ratio and therefore a higher effective solubility, so in the long run this exchange transfers material from small crystals to large ones. Certainly, the more size-homogeneous the sample, the slower this process, but absolute size homogeneity is practically impossible to achieve. One way to avoid Ostwald ripening is to further decrease the solubility of the protein, effectively quenching the exchange. Simply “washing” the sample as described above with a stronger precipitating condition can achieve this, although a dramatic change in solubility can sometimes damage the crystals, in which case a stepwise approach is preferable. Temperature can also be used to decrease solubility, though again care must be taken to monitor unwanted effects on crystal quality.
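The size dependence behind Ostwald ripening can be made explicit with the Gibbs-Thomson (Ostwald-Freundlich) relation, c(r)/c_∞ = exp(2γν/(r k_BT)), which follows from the same surface-versus-volume balance used for nucleation: a particle of radius r is in equilibrium with exactly the supersaturation S for which r is the critical radius 2γν/(k_BT ln S). The parameters below are hypothetical, illustrative values, not measurements.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant (J/K)


def solubility_ratio(radius_m, gamma, nu, T):
    """c(r)/c_inf from the Gibbs-Thomson (Ostwald-Freundlich) relation."""
    return math.exp(2.0 * gamma * nu / (radius_m * K_B * T))


def critical_radius(gamma, nu, T, S):
    """r* = 2*gamma*nu / (k_B*T*ln S), for supersaturation S > 1."""
    return 2.0 * gamma * nu / (K_B * T * math.log(S))


if __name__ == "__main__":
    gamma, nu, T = 2e-4, 1e-25, 293.0  # hypothetical, illustrative values
    for r_nm in (20, 50, 200, 1000, 5000):
        excess = solubility_ratio(r_nm * 1e-9, gamma, nu, T) - 1.0
        print(f"r = {r_nm:5d} nm: solubility {100 * excess:.2f}% above bulk")
```

Because smaller crystals sit in equilibrium with a higher free-protein concentration than larger ones, protein diffuses from small to large crystals through solution; lowering the overall solubility, as suggested above, slows this exchange.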

As data are usually collected at or near biological temperatures, stability during transport can also be a concern. Fortunately, because of the highly nucleating conditions needed for a plethora of small crystals, nanocrystallogenesis is typically a fast process, and samples can often be grown on site within hours or days before an experiment. If this is not the case, it is imperative to ensure that the integrity of the sample is not compromised during transport by anticipating environmental perturbations such as handling or temperature variation. As automation and remote data collection become more widespread in serial experiments, this will likely become an increasingly general consideration. Temperature-controlled containers, elimination of gas from the sample headspace, shock absorption, or even embedding the crystals in a viscous medium (if viable) are among the approaches that can ameliorate shipping concerns. As always, testing and characterization to optimize results and protect precious sample are vital.

3.3 Considerations and Characterization for SFX Optimization

3.3.1 Characteristics: What Is Optimal?

Several characteristics unique to serial crystallography must be controlled and optimized for a given sample. Size homogeneity and sample density are always general concerns, affecting data collection efficiency and quality. Size itself plays a vital role, and sometimes bigger is better. In many circumstances, however, it is not, and thoughtful selection is crucial to a successful experiment. Time-resolved studies are a prime example: there it can be important to minimize both the crystal size and the width of the size distribution. Size governs how homogeneously the reaction is initiated, because the activation trigger (e.g., light, substrate) reaches the molecules in a crystal differently depending on the crystal volume. With a chemical trigger, molecules towards the center of a crystal will on average experience delayed reaction initiation, because the reactant must first diffuse into the crystal. With an optical trigger, molecules farther along the direction of pump laser propagation experience increasing attenuation of the pump power, leading to lower yields of reaction initiation. As in the chemical case, there is also a spread in initiation times, but on the order of tens to hundreds of femtoseconds for micrometer-sized crystals. This must still be taken into account when exploring dynamics on these timescales and, as in the diffusive case, smaller crystals are preferable for homogeneous initiation. Homogeneity of the reaction timeline matters because the serial snapshots are merged into one data set, and a broad distribution of initiation times will broaden the conformational heterogeneity in the merged data. This is covered in more detail in Chaps. 11 and 12.
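The sources of initiation heterogeneity named above can be compared with order-of-magnitude estimates. The sketch below uses t ≈ (L/2)²/D for substrate diffusion to the crystal center, t = nL/c for the pump pulse crossing the crystal, and Beer–Lambert attenuation for the pump intensity reaching the far face. The in-crystal diffusion coefficient (100 μm²/s), refractive index (1.5) and absorbance per micrometer (0.05) are hypothetical placeholders, not values from the text.

```python
SPEED_OF_LIGHT_M_PER_S = 2.998e8


def diffusion_time_s(size_um: float, d_um2_per_s: float = 100.0) -> float:
    """Order-of-magnitude time for a substrate to diffuse to the crystal centre.

    Uses t ~ (L/2)^2 / D; the in-crystal D is an assumed placeholder.
    """
    return (size_um / 2.0) ** 2 / d_um2_per_s


def optical_transit_fs(size_um: float, refractive_index: float = 1.5) -> float:
    """Time for the pump pulse to cross the crystal, n * L / c, in femtoseconds."""
    return refractive_index * size_um * 1e-6 / SPEED_OF_LIGHT_M_PER_S * 1e15


def pump_fraction_at_exit(size_um: float, absorbance_per_um: float = 0.05) -> float:
    """Beer-Lambert: fraction of pump intensity left at the far face, 10^(-A*L)."""
    return 10.0 ** (-absorbance_per_um * size_um)


for size in (0.5, 2.0, 10.0, 50.0):
    print(f"{size:>5} um: diffusion ~{diffusion_time_s(size) * 1e3:.2g} ms, "
          f"optical transit ~{optical_transit_fs(size):.0f} fs, "
          f"pump left at exit {pump_fraction_at_exit(size):.0%}")
```

Under these assumptions, diffusion times grow with the square of the crystal size (milliseconds at 1 μm, hundreds of milliseconds at 10 μm), whereas the optical spread is a few femtoseconds per micrometer of crystal.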

Sample density, that is, the concentration of crystals in a suspension, is another key factor. Depending on the X-Ray focal spot size, crystal size and jet size, the optimal sample density can be estimated by approximating the hit rate versus concentration with a Poisson distribution (it usually falls in the 10⁹–10¹¹ crystals/mL range), though the Poisson approximation becomes less accurate as the crystal size becomes much larger than the beam focal spot. It is also important to verify that the protein-rich phase is in fact crystalline and, especially in the submicron range, this is not always straightforward. Small crystals are often mistaken for amorphous precipitate, even by the trained eye. Microscopy and diffraction techniques that can be used to verify crystallinity are covered in Sect. 3.3.2.
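The Poisson estimate mentioned above can be sketched in a few lines. The code assumes point-like crystals distributed uniformly in the jet and approximates the illuminated volume as a cylinder with the beam's cross-section and a length equal to the jet diameter; the 1 μm beam and 5 μm jet are hypothetical example values. As noted in the text, the approximation degrades once crystals are much larger than the focal spot.

```python
import math


def interaction_volume_ml(beam_diameter_um: float, jet_diameter_um: float) -> float:
    """Illuminated jet volume per pulse: beam cross-section times jet diameter."""
    area_um2 = math.pi * (beam_diameter_um / 2.0) ** 2
    volume_um3 = area_um2 * jet_diameter_um
    return volume_um3 * 1e-12  # 1 um^3 = 1e-12 mL


def hit_fractions(crystals_per_ml: float, beam_diameter_um: float,
                  jet_diameter_um: float) -> dict:
    """Poisson probabilities of 0, exactly 1, and >= 2 crystals per pulse."""
    lam = crystals_per_ml * interaction_volume_ml(beam_diameter_um, jet_diameter_um)
    p0 = math.exp(-lam)
    p1 = lam * p0
    return {"mean": lam, "blank": p0, "single": p1, "multiple": 1.0 - p0 - p1}


for density in (1e9, 1e10, 1e11):
    f = hit_fractions(density, beam_diameter_um=1.0, jet_diameter_um=5.0)
    print(f"{density:.0e}/mL: hit rate {1 - f['blank']:.1%}, "
          f"multiple hits {f['multiple']:.1%}")
```

With these example dimensions, 10¹¹ crystals/mL gives a hit rate of roughly one third, with a few percent of pulses hitting more than one crystal. In this model, the fraction of single hits is largest when the mean number of crystals per pulse equals one.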

3.3.1.1 Data Analysis Considerations

In serial crystallography, data are collected as a series of diffraction “snapshots” from different crystals, which are merged to form a complete data set. Unlike in a rotation series on a goniometer, only partial reflections are measured, so the structure-factor intensities must be estimated by Monte Carlo integration, that is, by averaging over many partial measurements of each reflection. The high multiplicity required means that a large amount of sample is needed even in an ideal case, where every crystal has exactly the same size, morphology, quality, etc. Any sample heterogeneity means that even more measurements, that is, single-snapshot diffraction patterns, are needed for the data to converge to reliable and comparable intensities.
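The effect of heterogeneity on the number of patterns needed can be illustrated with a toy Monte Carlo model. Each observation of one reflection is drawn as a random partiality multiplied by a crystal scale factor; the uniform partiality and a scale proportional to crystal volume (with a Gaussian-distributed linear size) are simplifying assumptions, not the error model of any real merging program. Because the standard error of a mean falls as 1/√N, the coefficient of variation of the observations sets how many are needed to reach a target precision.

```python
import random
import statistics


def observations_needed(size_sd_fraction: float,
                        target_rel_error: float = 0.01,
                        n_samples: int = 100_000,
                        seed: int = 1) -> int:
    """Observations of one reflection needed for a given relative standard error.

    Toy model (assumption): observation = partiality * scale, with
    partiality ~ Uniform(0, 1) and scale = size**3 (proportional to crystal
    volume), where size ~ Normal(1, size_sd_fraction), clipped at 0.05.
    """
    rng = random.Random(seed)
    observations = []
    for _ in range(n_samples):
        partiality = rng.random()
        size = max(0.05, rng.gauss(1.0, size_sd_fraction))
        observations.append(partiality * size ** 3)
    cv = statistics.pstdev(observations) / statistics.fmean(observations)
    # Standard error of the mean ~ cv / sqrt(N), so N ~ (cv / target)^2
    return round((cv / target_rel_error) ** 2)


for sd in (0.0, 0.1, 0.3):
    print(f"size SD {sd:.0%}: ~{observations_needed(sd)} observations per reflection")
```

In this toy model, a 30% spread in crystal size raises the required number of observations nearly fourfold relative to a perfectly uniform sample.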

In early SFX studies, more than 10⁵ diffraction patterns were thought to be necessary to determine structures [27]. This number has decreased considerably in the past few years, and full data sets have now been obtained from fewer than 10,000 images [23, 29, 30] (in some cases, fewer than 1000 [28]). A narrower size distribution of protein nanocrystals, however, might greatly reduce the number of diffraction patterns required for successful merging and integration. In addition, the peak intensity of Bragg reflections in the individual diffraction patterns scales quadratically with the number of unit cells in the crystal, which can lead to large variations in peak intensity. Thus, high size inhomogeneity not only introduces another parameter that must be addressed by the Monte Carlo methods, but practical considerations may also necessitate attenuating the beam to avoid detector damage. Because the attenuation is usually set according to the most strongly scattering crystals, this can reduce the signal-to-noise ratio (SNR) of patterns from the smaller crystals in a sample.

Another consideration for data analysis related to crystal size is the experimental solution of the phase problem, that is, phasing. There is great interest in improving SFX data analysis for de novo structure determination, and crystal size homogeneity may play a crucial role in this effort. For example, Spence et al. have proposed that the coherent diffraction intensities between the Bragg reflections of sufficiently small crystals could be used for novel phasing approaches [31]. These coherent, shape-transformed Bragg reflections allow two-dimensional projection images of the entire nanocrystal to be obtained, which could be used to solve the crystallographic phase problem in SFX without prior information, crystal modifications, or resolution restrictions. This approach is expected to work best for a specific range of crystal sizes: when crystals become too small (containing too few unit cells), the inter-Bragg diffraction intensities become weak, so an optimal intermediate size is desired [32]. Methods exploiting these properties are discussed in detail in Chap. 8. Whether exploiting the discreteness of nanocrystals for novel phasing approaches or minimizing the time and amount of sample needed for a complete data set, there is clear motivation to gain control over crystallogenic parameters in order to improve data quality.
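The inter-Bragg intensities arise from the crystal's shape transform. A one-dimensional sketch, assuming an idealized row of N identical unit cells, shows two effects relevant here: the Bragg peak height grows as N², while N − 2 weak subsidiary maxima, spaced about 1/N apart in reciprocal-lattice units, fill the gap between neighboring Bragg peaks.

```python
import math


def shape_transform_1d(h: float, n_cells: int) -> float:
    """Interference function |sum_j exp(2*pi*i*h*j)|^2 = sin^2(pi*N*h) / sin^2(pi*h).

    h is a continuous reciprocal-lattice coordinate; at integer h (Bragg
    positions) the expression tends to N^2.
    """
    s = math.sin(math.pi * h)
    if abs(s) < 1e-12:
        return float(n_cells ** 2)
    return (math.sin(math.pi * n_cells * h) / s) ** 2


for n in (5, 20, 100):
    peak = shape_transform_1d(0.0, n)
    first_fringe = shape_transform_1d(1.5 / n, n)  # near the first subsidiary maximum
    print(f"N = {n:>3}: Bragg peak {peak:.0f}, "
          f"first fringe / peak = {first_fringe / peak:.3f}, "
          f"fringe spacing ~ {1 / n:.3f}")
```

For large N the first fringe is only a few percent of the Bragg peak, and the fringes become finer as N grows.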

3.3.1.2 Practicality: Sample and Hardware

Some practical concerns arising from the hardware used for SFX experiments must also be considered. From the sample delivery point of view, most experiments have been performed using some type of gas-focused liquid jet (sample delivery is covered in detail in Chap. 4), and to minimize wasted sample, the constrictions in the hardware are often very small (nozzles usually contain a capillary with a 30–100 μm inner diameter). The minimum flow rate for a stable jet (and hence the minimal sample consumption) can vary with buffer composition, especially viscosity. Because polymeric precipitants such as polyethylene glycol (PEG) are common, this is a frequent concern, and it is worth experimenting to minimize viscosity during final sample preparation. Setting up small aliquots at different precipitant concentrations and monitoring crystal integrity over time can save later frustration over data collection hindered by a clogged nozzle or an inconsistent jet.
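Why viscosity matters for narrow nozzles can be seen from the Hagen–Poiseuille relation for laminar flow in a round capillary, ΔP = 8μLQ/(πr⁴). The sketch treats the suspension as a Newtonian fluid, which concentrated polymer solutions and dense crystal slurries may not be; the 1 m line length, 10 μL/min flow rate and viscosity values are hypothetical examples.

```python
import math


def pressure_drop_bar(flow_ul_per_min: float, inner_diameter_um: float,
                      length_m: float, viscosity_mpa_s: float) -> float:
    """Hagen-Poiseuille pressure drop dP = 8*mu*L*Q / (pi*r^4), returned in bar.

    Assumes steady laminar flow of a Newtonian fluid in a straight round capillary.
    """
    q_m3_per_s = flow_ul_per_min * 1e-9 / 60.0   # uL/min -> m^3/s
    radius_m = inner_diameter_um * 1e-6 / 2.0
    mu_pa_s = viscosity_mpa_s * 1e-3             # mPa*s -> Pa*s
    dp_pa = 8.0 * mu_pa_s * length_m * q_m3_per_s / (math.pi * radius_m ** 4)
    return dp_pa / 1e5


def sample_used_ml(flow_ul_per_min: float, hours: float) -> float:
    """Sample volume consumed at a constant flow rate."""
    return flow_ul_per_min * 60.0 * hours / 1000.0


for viscosity in (1.0, 5.0, 20.0):  # water-like to a viscous buffer (illustrative)
    dp = pressure_drop_bar(10.0, 50.0, 1.0, viscosity)
    print(f"{viscosity:>4} mPa*s: ~{dp:.0f} bar through 1 m of 50 um capillary")
print(f"10 uL/min for 12 h uses {sample_used_ml(10.0, 12.0):.1f} mL")
```

With these example values, a water-like buffer needs roughly 11 bar; the pressure scales linearly with viscosity and rises sixteenfold if the inner diameter is halved, because of the r⁴ dependence.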

Another issue arising from the small dimensions of sample delivery hardware is clogging by the crystals themselves or by other particles. One must certainly select a nozzle size appropriate for the crystals employed (a good rule of thumb is an inner diameter at least twice the size of the largest crystals in the batch), but even a sample with relatively good size homogeneity will often contain a few large outliers. Keep in mind that optimal sample densities are on the order of 10⁹–10¹¹ crystals/mL and that it takes only one crystal to clog the nozzle. It is therefore in the user’s best interest to have filtering systems in place upstream of the injection hardware to avoid losing time fixing or replacing it. In-line filtering is almost always a necessity, but it is often also advisable to “pre-filter” the sample before loading it into a sample delivery reservoir. Many commercially available plumbing and filter setups can be adapted to this purpose, for example standard liquid chromatography hardware. Note that not all filters are created equal: different filter types and quoted porosities can have detrimental effects on a crystalline sample, such as shearing crystals apart. It is advisable to test the effect of filtration on the sample before a beamtime in order to know which filter works best for a given crystal suspension. The density at which a crystal suspension is filtered can also have an effect; in general, it is prudent to filter at low concentration and then allow the sample to settle before removing supernatant to concentrate it. Filtering can also improve size homogeneity during sample preparation if the size distribution is bimodal or multimodal.
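The point that it takes only one crystal can be quantified if the size distribution is known. The sketch assumes a log-normal size distribution (a common but here hypothetical choice) and interprets the rule of thumb above as meaning that crystals larger than half the nozzle inner diameter are a clogging risk. The median size, geometric standard deviation, volume and density in the example are illustrative only.

```python
import math


def fraction_larger_than(threshold_um: float, median_um: float,
                         geometric_sd: float) -> float:
    """Upper-tail fraction of a log-normal size distribution above threshold_um."""
    z = math.log(threshold_um / median_um) / math.log(geometric_sd)
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def expected_oversized(volume_ml: float, crystals_per_ml: float, nozzle_id_um: float,
                       median_um: float, geometric_sd: float) -> float:
    """Expected number of crystals larger than half the nozzle inner diameter."""
    threshold_um = nozzle_id_um / 2.0
    return volume_ml * crystals_per_ml * fraction_larger_than(
        threshold_um, median_um, geometric_sd)


n_bad = expected_oversized(volume_ml=1.0, crystals_per_ml=1e10, nozzle_id_um=50.0,
                           median_um=2.0, geometric_sd=1.5)
print(f"Expected crystals > 25 um in 1 mL: {n_bad:.1f}")
```

With these numbers, a few crystals larger than 25 μm are expected per milliliter even though the median crystal is only 2 μm, which illustrates why pre-filtering is worthwhile.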

Even when a particular sample is not strongly limited in quantity, or when another method of sample introduction is used that sidesteps the above-mentioned concerns, size heterogeneity can cause other problems. In addition to the data quality and efficiency concerns addressed in the previous section, saturation or even destruction of the detector must be considered. Especially with the extremely brilliant X-Ray FELs, a well-diffracting crystal can easily exceed the intensity at which a detector is damaged. Even without damage, saturation can occur, obscuring the values of structure factors (i.e., intensities above the saturation threshold will be incorrectly recorded as the threshold value). To avoid this, the beam is usually attenuated to a level that reliably avoids damage and minimizes saturation. Recalling that the peak scattered intensity scales quadratically with the number of unit cells illuminated by the beam, it becomes clear how attenuating for the largest crystals introduced into the beam can quickly raise the lower size limit for useful data collection.
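How attenuation for the largest crystals penalizes small ones follows from the quadratic scaling above. The sketch assumes cubic crystals fully inside the beam, so the number of illuminated unit cells grows with the cube of the edge length and the peak intensity with its sixth power; partial illumination by a small focus would weaken this dependence. The 2 μm reference size is hypothetical.

```python
def relative_peak_intensity(edge_um: float, reference_edge_um: float) -> float:
    """Peak Bragg intensity of a crystal relative to a reference crystal.

    Assumes I_peak ~ N^2 with N ~ edge^3 (cubic crystal fully illuminated).
    """
    n_ratio = (edge_um / reference_edge_um) ** 3
    return n_ratio ** 2


largest_um = 2.0  # hypothetical largest crystal, for which the beam is attenuated
for edge in (2.0, 1.0, 0.5, 0.25):
    print(f"{edge:>4} um crystal: {relative_peak_intensity(edge, largest_um):.1e} "
          f"of the peak intensity of the {largest_um} um crystal")
```

Under this assumption, halving the crystal edge reduces the peak intensity 64-fold, so attenuating for a 2 μm crystal leaves a 0.5 μm crystal with only about 0.02% of that signal.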

3.3.1.3 Control of Homogeneity Through Post Growth Methods

Once crystals are obtained in suitable concentrations, it is important to characterize their size homogeneity. This can be accomplished with the methods described in Sect. 3.3.2. We emphasize, however, that dynamic processes may play a significant role, requiring stringent analysis of crystals prior to crystallographic measurements. Crystals may grow, aggregate or dissolve after production, and these processes need to be carefully accounted for. To reduce the number of unwanted larger crystals, a straightforward filtering approach may be employed. While this approach is suitable when sufficiently large amounts of crystals are available, it may not be applicable when the concentration of smaller crystals is low or when the crystals are prone to damage during mechanical filtering. In addition, as discussed above, novel phasing approaches may require specific size ranges and narrow size distributions, which call for more sophisticated methods of post-growth crystal sizing.

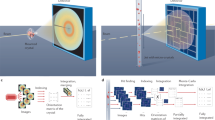

One approach to fractionating crystals by size has recently been proposed by Abdallah et al. [33]. In this microfluidic method, crystals are deflected from their migration path while flowing through a micrometer-sized constriction and are collected in different outlet reservoirs of the device. The method relies on dielectrophoresis, in which the applied dielectrophoretic force scales with the cube of the crystal radius [33]. In the application demonstrated by Abdallah et al., the crystals experienced negative dielectrophoresis, which repelled large objects into a central stream while smaller particles were deflected into side channels, from which the smaller crystals can be recovered. This dielectrophoretic crystal sorting was first demonstrated with photosystem I (PSI) crystals, as shown in Fig. 3.5. The sorted crystals were characterized with various techniques, demonstrating that they were not degraded during sorting and that they retained excellent diffraction quality [34]. This continuous sorting method was further developed for higher throughput to handle the mL volumes of crystal suspension needed for liquid-jet-based injection in SFX with X-Ray FELs [34].
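The r³ scaling quoted above is that of the standard time-averaged dielectrophoretic force on a sphere, F = 2πε_m r³ Re[K] ∇|E|², where ε_m is the medium permittivity and K the Clausius–Mossotti factor. Balancing it against Stokes drag (6πηrv) shows why sorting by size works: the drift velocity scales as r². In the sketch below, the field-gradient term, the Clausius–Mossotti factor (−0.5, i.e., negative dielectrophoresis) and the medium permittivity are placeholder assumptions, and crystals are treated as spheres.

```python
import math

EPS0 = 8.854e-12  # vacuum permittivity, F/m


def dep_force_n(radius_m: float, grad_e2_v2_per_m3: float,
                rel_permittivity_medium: float = 78.0,
                clausius_mossotti_re: float = -0.5) -> float:
    """Time-averaged DEP force on a sphere: 2*pi*eps_m*r^3*Re[K]*grad(|E|^2).

    A negative value (Re[K] < 0) means the force points toward lower field strength.
    """
    eps_m = rel_permittivity_medium * EPS0
    return (2.0 * math.pi * eps_m * radius_m ** 3
            * clausius_mossotti_re * grad_e2_v2_per_m3)


def dep_drift_velocity_m_s(radius_m: float, grad_e2_v2_per_m3: float,
                           viscosity_pa_s: float = 1e-3) -> float:
    """Terminal velocity where the DEP force balances Stokes drag 6*pi*eta*r*v."""
    force = dep_force_n(radius_m, grad_e2_v2_per_m3)
    return force / (6.0 * math.pi * viscosity_pa_s * radius_m)


grad = 1e15  # hypothetical field-gradient term, V^2/m^3
for r_nm in (100, 300, 1000):
    v = dep_drift_velocity_m_s(r_nm * 1e-9, grad)
    print(f"r = {r_nm:>4} nm: DEP drift {v * 1e6:.3g} um/s")
```

Doubling the radius increases the force eightfold but the drift velocity only fourfold, since drag also grows with r.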

Microfluidic Sorting: (a) Image of the microfluidic sorter used for high-throughput crystal sorting. (b) Mechanism of crystal sorting in the microfluidic device near the constriction region. Electroosmotic flow (EOF) is used to deliver sample to the constriction region. The green arrows indicate the flow of large and small particles after the constriction region, where sorting occurs. (c) Dynamic light scattering (DLS) analysis of the PSI crystal suspension prior to sorting. (d) DLS analysis of the PSI crystal suspension after sorting with the dielectrophoretic sorter, indicating crystals <600 nm. (e) PSI crystal suspension prior to dielectrophoretic sorting and (f) after sorting in the low-throughput version, where a homogeneous size fraction of about 100 nm was achieved (adapted from Abdallah et al. [33])

3.3.2 Characterization

During the crystal screening and growth process, one needs to continually characterize the crystallogenic results to guide optimization. Compared to macrocrystals, this can be a significantly more complex task due to the necessity for high density, homogeneous crystal suspensions typical in serial crystallography, which are also generally harder or sometimes impossible to visualize with routine microscopy. This is not to say that the task is necessarily always difficult, just that it requires a thoughtful approach with a larger arsenal of tools than is traditionally necessary.

3.3.2.1 Optical Detection: Visualizing Your Crystals

While it is still a good idea first to look at potential crystals under an optical microscope, scoring the results can be difficult, especially as crystals approach one micron or smaller. Using a polarizing filter to look for birefringence can be particularly useful for crystals with sizes near this threshold. Because the birefringence signal scales with crystal size (thickness), its usefulness does have a lower limit. Nonetheless, it can certainly enhance the ability to differentiate crystals from amorphous precipitate and often provides enough contrast to reveal crystals below a micron. Note that birefringence requires optical anisotropy and will therefore be absent or diminished in high-symmetry space groups (e.g., cubic). On the other hand, this allows many common salt crystals, such as cubic sodium chloride, to be ruled out, which can be a concern given the high salt concentrations that can arise in nanocrystal production.
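The size dependence of the birefringence signal follows from the standard expression for a birefringent plate between crossed polarizers, I/I₀ = sin²(2φ)·sin²(πΔn d/λ), where φ is the angle between the optic axis and the polarizer, Δn the birefringence, d the thickness and λ the wavelength. For thin crystals the second factor grows roughly as d², so the signal fades quickly below a micron. The Δn of 0.005 and the 550 nm wavelength used below are placeholders, not measured protein-crystal values.

```python
import math


def crossed_polarizer_fraction(thickness_um: float, delta_n: float = 0.005,
                               wavelength_nm: float = 550.0,
                               axis_angle_deg: float = 45.0) -> float:
    """Fraction of incident light transmitted through crossed polarizers by a
    birefringent plate: sin^2(2*phi) * sin^2(pi * delta_n * d / lambda)."""
    retardation_phase = math.pi * delta_n * (thickness_um * 1e3) / wavelength_nm
    return (math.sin(math.radians(2.0 * axis_angle_deg)) ** 2
            * math.sin(retardation_phase) ** 2)


for d in (0.3, 1.0, 3.0, 10.0):
    print(f"{d:>4} um thick: I/I0 = {crossed_polarizer_fraction(d):.1e}")
```

In this model, a tenfold thinner crystal transmits close to a hundredfold less light, and a crystal with its optic axis parallel to the polarizer transmits nothing.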

One of the most useful ways to identify crystallinity is second harmonic generation (SHG) microscopy. There has been considerable effort in developing this technique, specifically for identifying nanocrystals, and commercial instruments such as Formulatrix’s SONICC (second order nonlinear imaging of chiral crystals) have enjoyed success within the community. SHG relies on the fact that, under very intense illumination, two-photon processes become significant and light at twice the frequency (half the wavelength) of the fundamental is generated. In our case, this depends on the nonlinear polarizability (hyperpolarizability) of both the molecule and the crystal as a whole, with contributions from additional unit cells interfering constructively. In practice, this means that SHG is only observable from crystals (or other periodic objects) and differentiates them from amorphous material, often down to ∼100 nm or smaller. It is also very sensitive to anisotropy, and complete destructive interference occurs in any centrosymmetric space group. As with birefringence, crystals in cubic space groups are missed, but false positives from most salts are avoided. In addition, other high-symmetry space groups may give a diminished signal, albeit not strictly zero [35]. The signal also depends inherently on the hyperpolarizability of the protein itself, which can lead to varying size limits or even make the method infeasible. Commercial dyes have been developed that can intercalate into the crystal and enhance the signal, a noteworthy aid when aiming for the smaller end of the size regime. A good cross-validation for potential nanocrystals observed by either SHG or polarized microscopy is UV microscopy, which readily distinguishes protein from salt by way of tryptophan fluorescence. In fact, the SONICC instrument couples an ultraviolet two-photon excitation fluorescence (UV-TPEF) method with SHG imaging, allowing overlapping images of a drop for secondary characterization.
This is a valuable cross-validation for proteins containing aromatic residues that either crystallize in a space group unfavorable for SONICC (false negatives) or are crystallized with a precipitant that can itself form chiral, non-protein crystals (false positives). Multiphoton excitation is inherently confocal, which reduces the background and narrows the depth of field, particularly important in the smallest size regime or with unoptimized conditions. Excellent reviews of the principles of SHG and UV-TPEF imaging can be found in Kissick et al. [36] and Madden et al. [37], respectively.

3.3.2.2 Light Scattering Techniques for Size Determination

Light scattering methods are traditionally used to characterize particle sizes in suspension. Among these, dynamic light scattering (DLS) and nanoparticle tracking analysis (NTA) have primarily been applied to the characterization of crystal suspensions. NTA has only recently become commercially available but has found immediate application in crystallography. DLS is a well-established method used routinely in nanoparticle and microparticle analysis.

DLS takes advantage of the scattering characteristics of suspended particles in solution. It is thus not surprising that DLS has also been employed for the characterization of suspensions of small crystals required for crystallography and in particular the smaller crystals needed for SFX. In DLS, a laser is directed into the crystal suspension and the scattered light is measured at some fixed angle. Since the particles in the solution exhibit Brownian motion, the measured scattered light intensity will also vary randomly over time, typically in the microsecond time regime. If the particles are large, the time variations at the detector are slow, whereas for small particles the time variations are faster. The time fluctuations of this scattering are recorded in DLS and related to the particle size distribution in solution using suitable correlation analysis.

We may write the normalized autocorrelation function of the scattered light intensity as [38]:

\[ G^{(2)}(t) = \frac{\langle I(t')\,I(t'+t)\rangle}{\langle I\rangle^{2}} = 1 + \beta\,\lvert g(t)\rvert^{2} \tag{3.5} \]

where t is the time, β (≤ 1) is an instrument-dependent coherence factor, and g(t) is the normalized first-order time autocorrelation function. For monodisperse particles, g(t) is an exponential function whose decay is governed by the particle diffusion coefficient, D, and the scattering vector, q:

\[ g(t) = \exp\left(-D q^{2} t\right) \tag{3.6} \]

The magnitude of the scattering vector is given by:

\[ q = \frac{4\pi n}{\lambda}\,\sin\left(\frac{\theta}{2}\right) \tag{3.7} \]

with n the refractive index of the solvent, λ the vacuum wavelength of the laser, and θ the scattering angle. Equation (3.6) shows the direct relation of g(t) to the diffusion coefficient and thus to the size of a particle. The latter is obtained through the Stokes–Einstein relation:

\[ D = \frac{k_{B} T}{6 \pi \eta r} \tag{3.8} \]

Here, \( k_B \) is the Boltzmann constant, T is the temperature, η is the viscosity, and r is the radius of a sphere. A DLS measurement thus determines the diffusion coefficient of a particle as if it were a sphere. For non-spherical particles, r is therefore interpreted as the radius of the equivalent sphere, and results are usually reported as the hydrodynamic diameter (\( d_h = 2r \)). Suitable mathematical corrections need to be applied to obtain the true size of non-spherical particles from DLS measurements.
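The monodisperse analysis can be sketched end to end: generate an ideal single-exponential g(t) for a known radius, recover the decay rate Γ = Dq² from the slope of ln g(t), and convert D back to a radius with the Stokes–Einstein relation. The 633 nm laser, 90° detection angle, water-like solvent and noise-free data are illustrative assumptions; real correlograms are noisy and are analyzed by instrument software.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def scattering_vector(wavelength_m: float, angle_deg: float,
                      n_medium: float = 1.33) -> float:
    """q = (4*pi*n / lambda) * sin(theta / 2), in 1/m."""
    return 4.0 * math.pi * n_medium / wavelength_m * math.sin(math.radians(angle_deg) / 2.0)


def stokes_einstein_d(radius_m: float, temperature_k: float = 293.15,
                      viscosity_pa_s: float = 1.0e-3) -> float:
    """D = k_B*T / (6*pi*eta*r)."""
    return K_B * temperature_k / (6.0 * math.pi * viscosity_pa_s * radius_m)


def radius_from_d(d_m2_s: float, temperature_k: float = 293.15,
                  viscosity_pa_s: float = 1.0e-3) -> float:
    """Stokes-Einstein relation solved for the (hydrodynamic) radius."""
    return K_B * temperature_k / (6.0 * math.pi * viscosity_pa_s * d_m2_s)


def fit_decay_rate(times_s, g_values) -> float:
    """Least-squares slope of ln g(t) = -Gamma * t (line through the origin)."""
    num = sum(t * math.log(g) for t, g in zip(times_s, g_values))
    den = sum(t * t for t in times_s)
    return -num / den


q = scattering_vector(633e-9, 90.0)
true_radius = 200e-9
gamma_true = stokes_einstein_d(true_radius) * q ** 2
times = [i * 10e-6 for i in range(1, 301)]       # 10 us to 3 ms
g = [math.exp(-gamma_true * t) for t in times]   # ideal, noise-free g(t)
gamma_fit = fit_decay_rate(times, g)
r_fit = radius_from_d(gamma_fit / q ** 2)
print(f"recovered hydrodynamic diameter: {2 * r_fit * 1e9:.1f} nm")
```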

Most particle suspensions, and crystal suspensions in particular, are not monodisperse. To account for polydispersity in DLS measurements, one introduces the method of “cumulants,” in which ln g(t) is expanded as a power series in t: the first cumulant gives the mean decay rate, and the second, normalized by the square of the first, gives the polydispersity index. Alternatively, g(t) can be modeled as a weighted sum of exponential decay functions, each accounting for a specific subset of particles. Analyzing polydispersity in DLS measurements requires suitable software, which is included with most commercial DLS instruments. Interestingly, although DLS typically cannot distinguish between crystalline and amorphous particles, a technique has been developed in which the depolarized component of the scattered light is attributed to birefringence, thereby indicating crystallinity [39]. For a more detailed description of the theoretical framework of DLS, we refer the interested reader to the literature, for example a review by Pecora [38] or a book by Schmitz [40].
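A second-order cumulant fit can be sketched with the standard library: fit ln g(t) with a quadratic in t, read the mean decay rate Γ̄ from the linear term and the polydispersity index μ₂/Γ̄² from the quadratic term. The synthetic bimodal g(t), the fit window and the hand-written 3 × 3 solver are illustrative assumptions; commercial DLS software uses more robust, weighted fitting.

```python
import math


def _solve_3x3(a, b):
    """Gaussian elimination with partial pivoting for a 3x3 linear system."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def cumulant_fit(times_s, g_values):
    """Fit ln g(t) = c0 - Gamma*t + (mu2/2)*t^2; return (Gamma, mu2 / Gamma^2)."""
    t_scale = max(times_s)                    # rescale time to (0, 1] for conditioning
    taus = [t / t_scale for t in times_s]
    ys = [math.log(g) for g in g_values]
    s = [sum(tau ** k for tau in taus) for k in range(5)]
    a = [[s[i + j] for j in range(3)] for i in range(3)]   # normal equations
    b = [sum(y * tau ** k for tau, y in zip(taus, ys)) for k in range(3)]
    _, c1, c2 = _solve_3x3(a, b)
    gamma = -c1 / t_scale
    mu2 = 2.0 * c2 / t_scale ** 2
    return gamma, mu2 / gamma ** 2


times = [i * 5e-6 for i in range(1, 101)]       # 5 us to 0.5 ms
mono = [math.exp(-1100.0 * t) for t in times]
bimodal = [0.5 * math.exp(-200.0 * t) + 0.5 * math.exp(-2000.0 * t) for t in times]
for label, g in (("monodisperse", mono), ("bimodal", bimodal)):
    gamma, pdi = cumulant_fit(times, g)
    print(f"{label:>12}: mean decay rate {gamma:.0f} 1/s, PDI {pdi:.2f}")
```

Both samples share the same mean decay rate; only the polydispersity index separates the bimodal mixture from the monodisperse one.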

The size range suitable for DLS analysis spans from as low as 1 nm well into the micron regime, where optical characterization becomes available. However, measurements need to be designed carefully to account for gravitational settling, which may compete with Brownian motion for μm-sized crystals. Moreover, for particles much smaller than the wavelength of light, the scattered intensity scales with the sixth power of the particle diameter, so a particle ten times larger scatters a million times more intensely. This relation must be kept in mind in DLS, as the scattering from larger particles can easily overwhelm the much weaker scattering from smaller particles and bias the data analysis. Small crystals can thus easily be overlooked in suspensions that contain larger particles, such that the particle sizes potentially most useful for SFX may go unrecognized.
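Both caveats can be checked with back-of-the-envelope numbers. The first function applies the d⁶ weighting to a mixture to show how a small number fraction of large particles can dominate the scattered intensity (strictly valid only for particles much smaller than the wavelength, so values for micron-sized particles are indicative only). The second compares Stokes settling with Brownian motion over one second; the crystal–buffer density difference of 200 kg/m³ is an assumed placeholder.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K
G_ACCEL = 9.81       # gravitational acceleration, m/s^2


def intensity_fractions(diameters_nm, number_fractions):
    """Share of scattered intensity per population, assuming I ~ N * d^6."""
    weights = [n * d ** 6 for d, n in zip(diameters_nm, number_fractions)]
    total = sum(weights)
    return [w / total for w in weights]


def settling_vs_diffusion_um(radius_m, seconds=1.0, density_diff_kg_m3=200.0,
                             viscosity_pa_s=1e-3, temperature_k=293.15):
    """Return (Stokes settling distance, 1D RMS Brownian displacement) in um."""
    v_settle = (2.0 * density_diff_kg_m3 * G_ACCEL * radius_m ** 2
                / (9.0 * viscosity_pa_s))
    d_coeff = K_B * temperature_k / (6.0 * math.pi * viscosity_pa_s * radius_m)
    return v_settle * seconds * 1e6, math.sqrt(2.0 * d_coeff * seconds) * 1e6


print(intensity_fractions([100, 1000], [0.999, 0.001]))  # 0.1% large particles by number
for r_um in (0.1, 1.0, 5.0):
    settle, diffuse = settling_vs_diffusion_um(r_um * 1e-6)
    print(f"r = {r_um} um: settles {settle:.3g} um in 1 s, diffuses ~{diffuse:.3g} um")
```

With these placeholders, 0.1% of 1 μm particles by number carry almost all of the scattered intensity, and settling and diffusion become comparable for crystals around 1 μm in radius.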

Nevertheless, DLS has become a routine tool for size characterization, and it is powerful when (1) different crystallization batches are compared, (2) rapid analysis is necessary, such as during a beamtime prior to injection, (3) the amount of crystal suspension is limited and incompatible with the sample cell size for NTA (see below), or the sample cannot be diluted for NTA, and (4) a broad size range, from several tens of nanometers up to micrometers, is to be characterized.

DLS instruments offer a variety of cuvettes and thus variation in the sample volume to be analyzed. Standard measurements can routinely be carried out in volumes from 1 mL down to 50 μL. For crystallization trials performed in small volumes, such as hanging drops or even miniaturized trials on microfluidic platforms [38], it becomes important to perform DLS analysis in volumes below 50 μL. This can be accomplished with instruments equipped with specialized optics, such that the DLS laser can be directed into a hanging drop or a microfluidic channel.

As an alternative to DLS, particle tracking has been applied to characterize crystal size distributions. In particle tracking methods, the scattering or fluorescence of small objects below 1 μm is recorded by video microscopy; hence, the method is often referred to as nanoparticle tracking analysis, or NTA. A laser of a given wavelength is directed into a sample chamber, and the displacement of individual particles from frame to frame is recorded. The mean square displacement of a particle, \( \overline{x^{2}} \), is related to the diffusion coefficient, D, in the two-dimensional case via:

\[ \overline{x^{2}} = 4 D t \tag{3.9} \]

where t is the time. Once D is determined, it can be related to the particle size, or more precisely its hydrodynamic radius, \( r_H \), via the Stokes–Einstein relation shown in Eq. (3.8). Be aware that D depends on temperature and viscosity as well as on particle size, so the temperature and viscosity used in the conversion must be updated whenever either of them changes.
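The tracking-to-size step can be sketched by simulating a two-dimensional Brownian track with a known D, estimating D from the single-frame mean square displacement (\( \overline{x^2} = 4Dt \), with t the frame interval), and converting it to a hydrodynamic radius with the Stokes–Einstein relation. The 30 frames/s camera, particle size, single long track and water-like solvent are hypothetical; real NTA software also handles localization noise, drift and short tracks, which this sketch ignores.

```python
import math
import random

K_B = 1.380649e-23  # Boltzmann constant, J/K


def simulate_track(d_m2_s, dt_s, n_steps, rng):
    """2D Brownian track: each coordinate step is Normal(0, sqrt(2*D*dt))."""
    sigma = math.sqrt(2.0 * d_m2_s * dt_s)
    x = y = 0.0
    track = [(x, y)]
    for _ in range(n_steps):
        x += rng.gauss(0.0, sigma)
        y += rng.gauss(0.0, sigma)
        track.append((x, y))
    return track


def estimate_d(track, dt_s):
    """D from the single-lag 2D mean square displacement: MSD = 4*D*dt."""
    steps = zip(track, track[1:])
    sq = [(x1 - x0) ** 2 + (y1 - y0) ** 2 for (x0, y0), (x1, y1) in steps]
    return sum(sq) / len(sq) / (4.0 * dt_s)


def hydrodynamic_radius(d_m2_s, temperature_k=293.15, viscosity_pa_s=1.0e-3):
    """Stokes-Einstein relation solved for r_H."""
    return K_B * temperature_k / (6.0 * math.pi * viscosity_pa_s * d_m2_s)


dt = 1.0 / 30.0                    # hypothetical 30 frames/s camera
true_r = 150e-9
true_d = K_B * 293.15 / (6.0 * math.pi * 1.0e-3 * true_r)
track = simulate_track(true_d, dt, 5000, random.Random(0))
d_est = estimate_d(track, dt)
print(f"estimated r_H = {hydrodynamic_radius(d_est) * 1e9:.0f} nm "
      f"(true {true_r * 1e9:.0f} nm)")
```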

Suitable algorithms can track the particle motions and displacements and determine particle sizes. Because NTA is a direct visualization method, particles can be counted and particle concentrations thus determined. This is an important additional data point for SFX experiments, as the particle concentration can be adjusted to optimize hit rates. Since NTA tracks single particles, it also allows a more detailed analysis of multimodal distributions than DLS, an ensemble method, and it is less prone to the masking of smaller particles by the much stronger scattering of larger particles that affects DLS [40].

Particle tracking analysis per se is not a novel method and can easily be implemented with suitable imaging instrumentation and free software packages [18], such as those available for ImageJ [41]. Its recent commercial availability has, however, greatly facilitated the use of NTA in crystallography. The NanoSight instrument from Malvern (UK) has suitable measurement cells that allow size distribution analysis of proteins and protein crystals in the range from ∼30 nm to 2 μm. Consequently, crystal suspensions with expected particle sizes beyond this range should be analyzed by DLS. Another consideration in particle tracking analysis is the size of the measurement chamber, which requires several hundred microliters to fill. If only a limited amount of crystal suspension is available, the sample needs to be recovered after the measurement or, in the worst case, this analysis cannot be performed.

3.3.2.3 Transmission Electron Microscopy

Transmission electron microscopy (TEM) is a useful tool in crystallography and has provided valuable information on the quality of micro- and nanocrystals prior to serial crystallography. TEM is a vacuum technique in which an electron beam is directed through a thin specimen. Microcrystals and nanocrystals are typically mounted on a thin grid for TEM imaging, using a negative stain for increased contrast. The electron beam interacts with both the electron cloud and the nuclei of the atoms in the crystals, leading to electron scattering. The scattered electrons pass through an objective lens, which focuses them to create the primary image. Additional lenses are used to form a highly magnified final image of the primary image.

As an imaging technique for nm-sized particles, TEM provides unequivocal information on the size of nanocrystals and microcrystals. It is the most direct method of visualizing and assessing crystal size, but it is not suited to fast analysis. Analyzing the size distribution of protein crystals with TEM is time-consuming and requires a sophisticated and expensive instrument as well as specialized training. Provided enough crystalline material is at hand, this analysis could be automated to provide size and heterogeneity information for a particular crystallization trial. In practice, however, faster and less cumbersome techniques such as NTA and DLS are usually preferred for size-based analysis.

The major strength of TEM lies in revealing information about crystallinity. TEM can confirm the existence of nanocrystals, reveal variations in crystal form, and help evaluate diffraction quality. TEM allows direct visualization of the crystal lattice, and the Fourier transform of a TEM image of a protein crystal reveals a pattern of “Bragg” spots analogous to an electron diffraction pattern. In general, the higher the order of the observed spots, the better the diffraction quality of the protein crystals.
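The link between lattice order and the highest visible Fourier order can be illustrated in one dimension. The sketch models a line profile across a lattice image as a periodic train of Gaussian fringes, with the fringe width standing in for disorder or blurring (an assumption made purely for illustration), and computes its discrete Fourier transform. It is not a processing workflow for real TEM images, which are two-dimensional and noisy.

```python
import cmath
import math


def lattice_profile(n_pixels, period_px, width_px):
    """Periodic line profile: Gaussian fringes centred on every lattice site."""
    profile = []
    for idx in range(n_pixels):
        pos = idx % period_px
        # include neighbouring sites so the profile is smooth and truly periodic
        value = sum(math.exp(-0.5 * ((pos - m * period_px) / width_px) ** 2)
                    for m in range(-2, 3))
        profile.append(value)
    return profile


def dft_magnitudes(signal):
    """Plain O(N^2) discrete Fourier transform magnitudes."""
    n = len(signal)
    return [abs(sum(signal[idx] * cmath.exp(-2j * math.pi * k * idx / n)
                    for idx in range(n)))
            for k in range(n)]


def order_amplitudes(profile, period_px, max_order=3):
    """Fourier amplitude at each lattice order, relative to the first order."""
    mags = dft_magnitudes(profile)
    fundamental = len(profile) // period_px
    return [mags[m * fundamental] / mags[fundamental] for m in range(1, max_order + 1)]


sharp = lattice_profile(256, 8, 0.8)    # well-ordered lattice: narrow fringes
blurred = lattice_profile(256, 8, 3.0)  # disordered or blurred lattice: broad fringes
print("sharp  :", [f"{a:.3f}" for a in order_amplitudes(sharp, 8)])
print("blurred:", [f"{a:.1e}" for a in order_amplitudes(blurred, 8)])
```

The narrow-fringe profile retains substantial second- and third-order amplitudes, whereas the broad-fringe profile shows essentially only the first order.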

Stevenson et al. demonstrated that the Bragg spots obtained from TEM analysis of several protein crystals are indicative of crystal quality [42]. TEM analysis can reveal variations in the crystal lattice between different crystallization methods as well as crystal pathologies, including lattice defects, anisotropic diffraction, and contamination of nanocrystal nuclei by heavy protein aggregates.

TEM thus constitutes a useful tool to identify nanocrystals for challenging protein targets [43] and to evaluate and optimize crystal growth [44]. TEM has also been used to characterize crystal quality after size fractionation of protein crystals with microfluidic tools. Abdallah et al. used negative-stain TEM (following a procedure previously published by Stevenson et al. [45]) to show that PSI crystals subjected to size-based sorting had excellent lattice resolution and highly ordered Bragg spots, as shown in Fig. 3.6. Indeed, SFX at an X-Ray FEL was successful with these sorted crystals [45].

TEM imaging of nanocrystals: (a) TEM image of PSI crystals prior to microfluidic size fractionation and corresponding Bragg spots after Fourier transformation (inset); (b) TEM image of negatively stained (uranyl acetate) PSI crystals after microfluidic size fractionation and corresponding Bragg spots after Fourier transformation (inset). TEM analysis confirms excellent crystallinity of PSI crystals after fractionation (Reproduced with permission of the International Union of Crystallography and adapted from Stevenson et al. [44])

3.3.2.4 Powder Diffraction

As anyone who has grown aesthetically beautiful crystals only to find that their diffraction is less than stellar can attest, you never know until you shoot them! While their size generally prohibits single-crystal diffraction at home X-Ray sources, nanocrystals are perfectly suited to powder diffraction at more accessible X-Ray sources. If possible, this should be attempted before a serial crystallography experiment. Although the comparatively low flux can prevent determining the actual diffraction limit attainable at a more powerful source, powder diffraction is the best way to test for a diffracting sample and can differentiate between batches or conditions by comparing resolution limits. It is also very easy to prepare, since nanocrystal suspensions are typically already a “powder.” However, at higher resolution the powder rings become more closely spaced and fainter, eventually becoming indiscernible from the background, which limits the attainable resolution. The widths of the powder diffraction rings can be evaluated to obtain a rough estimate of the size of the crystals contributing to them, though a reliable size estimate is likely limited to a very small size regime (less than a few hundred nanometers). Other information can also be obtained; for example, the spacing of the rings gives information about the lattice spacings in the crystal.
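Both uses of the powder rings mentioned above can be sketched with textbook relations: Bragg's law, d = λ/(2 sin θ), for the lattice spacing from the ring radius on a flat detector perpendicular to the beam, and the Scherrer equation, τ = Kλ/(β cos θ), for the crystallite size from the ring width β (in radians, after removing instrumental broadening). The Cu Kα wavelength (1.5418 Å), shape factor K = 0.9 and geometry values are illustrative assumptions. Because β shrinks as τ grows, the size broadening from larger crystals soon becomes too small to separate from instrumental broadening.

```python
import math


def d_spacing_angstrom(ring_radius_mm, detector_distance_mm, wavelength_angstrom=1.5418):
    """Bragg's law d = lambda / (2 sin(theta)), with 2*theta = atan(R / D)."""
    two_theta = math.atan(ring_radius_mm / detector_distance_mm)
    return wavelength_angstrom / (2.0 * math.sin(two_theta / 2.0))


def scherrer_size_nm(fwhm_deg, two_theta_deg, wavelength_angstrom=1.5418,
                     shape_factor=0.9):
    """Scherrer estimate tau = K*lambda / (beta*cos(theta)), returned in nm.

    fwhm_deg is the ring width in degrees 2-theta, instrumental broadening removed.
    """
    beta_rad = math.radians(fwhm_deg)
    theta_rad = math.radians(two_theta_deg / 2.0)
    tau_angstrom = shape_factor * wavelength_angstrom / (beta_rad * math.cos(theta_rad))
    return tau_angstrom / 10.0


print(f"ring at 3.08 mm, 100 mm distance: d = {d_spacing_angstrom(3.08, 100.0):.1f} A")
for fwhm in (0.5, 0.1, 0.02):
    print(f"FWHM {fwhm} deg at 2-theta = 2 deg: ~{scherrer_size_nm(fwhm, 2.0):.0f} nm")
```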

The easiest way to do this is to harvest an aliquot of the sample (about 10 μL of pelleted nanocrystals usually works well) and place it in an X-Ray transparent mountable capillary (e.g., MiTeGen MicroRT). This should be centrifuged to create a dense powder pellet, and the supernatant removed to keep the pellet dense. In practice, some mother liquor should be kept elsewhere in the tube to prevent the pellet from drying, which can affect resolution. The sealed capillary can then be mounted and data collected on the dense nanocrystalline pellet. Be sure to mimic the conditions of your actual experiment as closely as possible (e.g., lighting for light-sensitive proteins, temperature, mother liquor composition).

3.4 Recap

Experimental beamtime at X-Ray FELs is currently much more limited than at other light sources. The careful development and characterization of crystal samples for SFX experiments with X-Ray FELs is thus extremely important. Unique characteristics must be considered for each specific experiment, and they have a profound effect on the quality of the data that can be obtained. Generally, as many characterization methods as possible should be applied; this chapter has summarized the most important methods currently in use. The primary factor to consider in initial nanocrystallization trials is the use of higher concentrations, which is generally key to converting macrocrystal conditions into nanocrystal conditions. The nanocrystals obtained need to be carefully characterized by size, size homogeneity, density, quantity, and quality, all of which play a critical role in a successful experiment. Cross-validation is always helpful when characterizing nanocrystals. With the further development and improvement of facilities at existing X-Ray FEL instruments, and with the next generation of X-Ray FELs either coming online or in the planning stages, characterization facilities are expected to become considerably more abundant and state of the art. This will certainly facilitate crystal characterization prior to SFX with X-Ray FELs in future experiments. In addition, as Chap. 8 explores, the move towards the smaller size regimes accessible in crystallography has made it possible to exploit characteristics inherent to truly discrete crystals, resulting in new modes of data collection and analysis.
