Abstract

This chapter describes and illustrates how concepts of ecological integrity, thresholds, and reference conditions can be integrated into a research and monitoring framework for natural resource management. Ecological integrity has been defined as a measure of the composition, structure, and function of an ecosystem in relation to the system’s natural or historical range of variation, as well as perturbations caused by natural or anthropogenic agents of change. Using ecological integrity to communicate with managers requires five steps, often implemented iteratively: (1) document the scale of the project and the current conceptual understanding and reference conditions of the ecosystem, (2) select appropriate metrics representing integrity, (3) define externally verified assessment points (metric values that signify an ecological change or need for management action) for the metrics, (4) collect data and calculate metric scores, and (5) summarize the status of the ecosystem using a variety of reporting methods. While we present the steps linearly for conceptual clarity, actual implementation of this approach may require addressing the steps in a different order or revisiting steps (such as metric selection) multiple times as data are collected. Knowledge of relevant ecological thresholds is important when metrics are selected, because thresholds identify where small changes in an environmental driver produce large responses in the ecosystem. Metrics with thresholds at or just beyond the limits of a system’s range of natural variability can be excellent, since moving beyond the normal range produces a marked change in their values. Alternatively, metrics with thresholds within but near the edge of the range of natural variability can serve as harbingers of potential change. Identifying thresholds also contributes to decisions about selection of assessment points. 
In particular, if there is a significant resistance to perturbation in an ecosystem, with threshold behavior not occurring until well beyond the historical range of variation, this may provide a scientific basis for shifting an ecological assessment point beyond the historical range. We present two case studies using ongoing monitoring by the US National Park Service Vital Signs program that illustrate the use of an ecological integrity approach to communicate ecosystem status to resource managers. The Wetland Ecological Integrity in Rocky Mountain National Park case study uses an analytical approach that specifically incorporates threshold detection into the process of establishing assessment points. The Forest Ecological Integrity of Northeastern National Parks case study describes a method for reporting ecological integrity to resource managers and other decision makers. We believe our approach has the potential for wide applicability for natural resource management.

Keywords

- Assessment point

- Communication tool

- Conceptual diagram

- Condition metric

- Ecological integrity

- Ecological threshold

- Forest

- Index of biological integrity

- Natural variability

- Wetland

Introduction

Ecological thresholds have been defined in many ways, including the commonly used definition from Groffman et al. (2006): “an ecosystem quality, property or phenomenon … where small changes in an environmental driver produce large responses in the ecosystem.” As scientists tasked with monitoring long-term trends in natural resource conditions, we are keenly interested in using multiple methods to detect important thresholds, be they strict ecological thresholds as defined by Groffman et al. (2006), or simply a point along a continuum that reflects a shift to an undesirable state. As communicators who are required to convey complex results to decision makers and the public, we need a simple, flexible framework suitable for reporting data analyses in a way that can be easily understood and applied. The goal of this chapter is to provide you with an approach, based on the concept of ecological integrity, that incorporates threshold ideas and reference conditions and is broadly applicable for presenting research and monitoring results to decision makers. We present two case studies using ongoing monitoring by the US National Park Service Vital Signs program (Fancy et al. 2009) that illustrate the use of an ecological integrity approach to communicate ecosystem status to resource managers. Each example has different objectives and a different emphasis in order to demonstrate some of the range of applications of the general approach.

“Ecological integrity” builds on the related concepts of biological integrity and ecological health, and is a useful endpoint for ecological assessment and reporting (Czech 2004). “Integrity” is defined as the quality of being unimpaired, sound, or complete. To have integrity, an ecosystem should be relatively unimpaired across a range of characteristics, and across spatial and temporal scales (De Leo and Levin 1997). Ecological integrity has been defined as a measure of the composition, structure, and function of an ecosystem in relation to the system’s natural or historical range of variation, as well as perturbations caused by natural or anthropogenic agents of change (Karr and Dudley 1981). An ecological system has integrity “when its dominant ecological characteristics (e.g., elements of composition, structure, function, and ecological processes) occur within their natural ranges of variation and can withstand and recover from most perturbations imposed by natural environmental dynamics or human disruptions” (Parrish et al. 2003).

As Tierney et al. (2009) describe, ecological integrity can be difficult to assess. One approach builds on the Index of Biological Integrity (IBI), which was originally used to interpret stream integrity based on 12 metrics that reflected the condition, reproduction, composition, and abundance of fish species (Karr 1981). Each metric was rated by comparing measured values with the values expected under relatively unimpaired conditions, and the ratings were aggregated into a total score. Related biotic indices have sought to assess the integrity of other aquatic and wetland ecosystems, primarily via faunal (and more recently, floral) assemblages. Building upon this foundation, others have suggested measuring the integrity of ecosystems by developing suites of indicators or metrics comprising the key biological, physical, and functional attributes of those ecosystems (Andreasen et al. 2001; Parrish et al. 2003; Mack and Kentula 2010).
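
The rate-then-aggregate logic of an IBI-style index can be sketched in a few lines. This is a minimal illustration rather than Karr's published procedure: the metric names, the cut points, and the choice of a 5/3/1 rating scale are assumptions made for the example, and a real index would derive its cut points from reference-site data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricRule:
    """Cut points for rating one metric (all values here are hypothetical)."""
    name: str
    fair_cut: float   # boundary between the lowest and the middle rating
    good_cut: float   # boundary between the middle and the highest rating
    higher_is_better: bool = True


def rate_metric(value: float, rule: MetricRule) -> int:
    """Rate a measured value as 5 (near reference), 3, or 1 (strongly degraded)."""
    # Flip signs for metrics where larger values indicate degradation,
    # so that one comparison direction serves both kinds of metric.
    sign = 1.0 if rule.higher_is_better else -1.0
    v, fair, good = sign * value, sign * rule.fair_cut, sign * rule.good_cut
    if v >= good:
        return 5
    if v >= fair:
        return 3
    return 1


def index_score(measurements: dict, rules: list) -> int:
    """Sum the per-metric ratings into a single index value."""
    return sum(rate_metric(measurements[r.name], r) for r in rules)


# Hypothetical two-metric example.
rules = [
    MetricRule("native_species_richness", fair_cut=5, good_cut=10),
    MetricRule("pct_tolerant_individuals", fair_cut=40, good_cut=20,
               higher_is_better=False),
]
site = {"native_species_richness": 12, "pct_tolerant_individuals": 30}
print(index_score(site, rules))  # richness rates 5, tolerant share rates 3
```

Keeping the per-metric ratings available alongside the total makes it possible to report both the summary score and its components.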

For the purpose of communicating information about ecosystem condition to managers, ecological integrity can be summarized as one or more metrics of ecosystem composition, structure, and function. The acceptable ranges of these metrics are established through knowledge of their natural variability at defined spatial and temporal scales and their resistance to perturbation (Tierney et al. 2009). In some cases, extensive data sets and prior research are available to determine the natural range of variation; in other cases, an initial period of baseline data collection or expert judgment can be used to establish the acceptable ranges. Regardless of the specifics of how these ranges are developed, attention to potential ecological thresholds is important. Managers are particularly concerned about nonlinear effects near thresholds that produce outsized impacts on resource condition or shift ecosystems into new and unnatural stable states (Groffman et al. 2006). An example of such a dramatic shift in an ecosystem’s state (cited in Groffman et al. 2006) is Florida Bay, which in the 1990s abruptly shifted from an oligotrophic clear water system dominated by seagrasses to a turbid system dominated by phytoplankton blooms. Knowledge of the strength and location of thresholds like the one that led to the ecological shift in Florida Bay allows scientists and managers to develop precautionary “assessment points” for metrics that will trigger action before the threshold is reached (Bennetts et al. 2007). In this chapter, we use the term “ecological threshold” in the sense implied by Groffman et al. (2006), to refer to a nonlinear response by a system to a stressor. We follow Bennetts et al. (2007) in their use of “assessment point” to refer to a value along the continuum of a metric that has relevance to managers, including an ecological threshold.
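
To make the idea of a nonlinear response concrete, the sketch below searches for the single step change in a response that best splits observations ordered along a stressor gradient. It is one simple change-point heuristic among many and is not the analysis used in the case studies; the function names, the `min_points` setting, and the data are all hypothetical.

```python
def sse(values):
    """Sum of squared deviations of the values from their mean."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def find_step_threshold(driver, response, min_points=3):
    """Return (threshold, fraction of variation explained) for the best step.

    Observations are sorted by driver value, every admissible split is tried,
    and the split that minimizes the within-segment sum of squares is kept.
    Returns (None, 0.0) when no split improves on a single mean.
    """
    pairs = sorted(zip(driver, response))
    xs = [p[0] for p in pairs]
    ys = [p[1] for p in pairs]
    total = sse(ys)
    best_split, best_sse = None, total
    for i in range(min_points, len(ys) - min_points + 1):
        if xs[i] == xs[i - 1]:
            continue  # cannot place a threshold between tied driver values
        candidate = sse(ys[:i]) + sse(ys[i:])
        if candidate < best_sse:
            best_split, best_sse = i, candidate
    if best_split is None:
        return None, 0.0
    threshold = (xs[best_split - 1] + xs[best_split]) / 2
    return threshold, 1 - best_sse / total


# Hypothetical data: the response collapses between driver values 5 and 6.
driver = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
response = [10, 11, 10, 9, 10, 2, 1, 2, 3, 2]
threshold, explained = find_step_threshold(driver, response)
print(threshold, round(explained, 3))
```

A high explained fraction only suggests a step-like pattern; whether it reflects a true ecological threshold still has to be judged against the conceptual understanding of the system.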

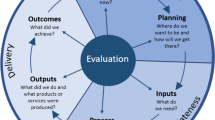

Using ecological integrity to communicate with managers requires five steps, often implemented iteratively: (1) document the scale of the project and the current conceptual understanding of the ecosystem, (2) select appropriate metrics, (3) define assessment points, (4) collect data and calculate metrics, and (5) produce a report or other communication tool. In particular, steps 2 and 3 may be revisited multiple times as a monitoring program develops and data are collected and analyzed, causing scientists to rethink the metric choices and assessment points. This five-step process shares a number of characteristics with other frameworks for developing research and monitoring programs (e.g., Fancy and Bennetts 2012). The next sections cover these steps in depth, and highlight places where knowledge of ecological thresholds fits into the framework. We then present two case studies illustrating the use of an ecological integrity approach to communicate ecosystem status to resource managers.

The Ecological Integrity Framework

Define Scale and Develop Conceptual Diagram

Begin by defining the scale, specifically the spatial and temporal scale of the ecological system being evaluated. This includes documenting the geographic boundary of the system and the specific features of the system within that boundary. The spatial scale is equivalent to a statistical population, and can be general (e.g., forests of the USA) or specific (e.g., Pitch Pine Woodlands in Acadia National Park greater than 0.5 ha in areal extent and correctly identified on the 2003 vegetation map). The temporal scale is also important, and includes consideration of the timing of data collection (e.g., summer only or year-round) and the planned duration (e.g., one time or repeated). Clear spatial and temporal scales are essential for data collection, and will help guide the development of the conceptual diagram and metrics.

Conceptual ecological diagrams or models that describe major ecosystem functions and delineate linkages between key ecosystem attributes and known stressors or agents of change are an essential tool for identifying and interpreting metrics with high ecological and management relevance (Fig. 10.1) (Noon 2003). The specific features of conceptual diagrams can vary, and the approach can include models that organize the linkages among on-site condition and patch size with surrounding landscape attributes (Unnasch et al. 2009; Faber-Langendoen et al. 2012). Here we focus on the primary components of integrity: composition, structure, and function. Composition refers to the species making up the ecosystem, including overall species richness and evenness. Structure means the physical characteristics of the system at multiple scales, including vertical stratification, physical substrates and microhabitats, and landscape level features like patchiness and connectivity. Function covers dynamic characteristics like species demography and interactions as well as ecological processes like carbon, nitrogen, and water cycling. For each of these components, it is essential to document the important ecological features, and how they relate to one another, including aspects of the ecosystem that are important for resource managers.

Fig. 10.1 Example conceptual diagram documenting the expected effects of climate change on tidal marsh systems. (From Stevens et al. 2010)

Next, consider the ecosystem drivers, or the factors that work to maintain the system in its current state, and stressors that can disrupt the system. Formally, drivers are external forces like climate, fire, and natural disturbance that have large scale influences on natural systems (National Park Service 2012). In contrast, stressors are perturbations to a system that are either foreign to the system or are applied at an excessive (or deficient) level (Barrett et al. 1976). Stressors therefore can cause a shift in the status of a driver, with potentially cascading effects on the ecosystem. While considering the ecosystem drivers, think about and document the different pathways through which the drivers and stressors can affect ecosystem composition, structure, and function. It may help to distinguish between two different types of drivers: external drivers (like climate) create an effect, while internal drivers (like nutrient levels) convey the effect to the biota. Understanding the linkages whereby internal drivers mediate or transfer the effects of human disturbance to the biotic communities can be important for devising interventions to restore the system. The conceptual diagram is also the first place to consider the potential impacts of ecological thresholds. Are some stressors more likely to produce nonlinear or threshold effects on ecosystems than others? For example, will an increase in atmospheric deposition of nitrogen cause a sudden shift in trophic status of an aquatic system, or a gradual change?

Your conceptual diagram may be a simple figure with supplemental text that describes ecological components and potential effects of stressors (e.g., Mitchell et al. 2006), or it may be a highly structured set of models and submodels that makes specific hypotheses about the mechanistic relationships between model elements (e.g., Miller et al. 2010a). Whatever the level of detail chosen, the goal is to formally document the current understanding of the ecosystem, including known and potential threshold effects, in a way that supports the selection of a suite of metrics suitable for representing ecological integrity. Looking to the future, statistical methods now exist that can permit conceptual diagrams to be translated into formal causal network hypotheses, which can be evaluated using empirical data (Grace et al. 2010). As knowledge of the ecosystem improves, the conceptual diagram or model should be periodically updated, and the changes should be reviewed to determine whether changes in metrics or assessment points are warranted.

Select Metrics

The second step in determining ecological integrity is identifying a limited number of metrics that best distinguish condition classes or gradients from a highly impacted, degraded, or depauperate state to a relatively unimpaired, complete, and functioning state. These metrics can be a single response measure (field measurement) but more commonly they are calculated values based on field data. They may be properties that typify a particular ecosystem or attributes that change predictably in response to anthropogenic stress. The suite of metrics selected should be comprehensive enough to incorporate composition, structure, and function of an ecosystem across the spatial and temporal scales defined at the beginning of the previous step. Ideally, indicators of the magnitude of key stressors acting upon the system will be included to increase understanding of the relationships between stressors and effects (Tierney et al. 2009). Developing effective metrics requires access to existing studies or pilot data so that a variety of metrics can be calculated and assessed; this process may be iterative, as initial data collection efforts demonstrate the need for revised metrics and potentially different data.

When choosing metrics, consider the following four fundamental questions (Kurtz et al. 2001): (1) Is the metric conceptually relevant? Conceptually relevant metrics are related to the characteristics of the ecosystem or to the stressors that affect its integrity, and can provide information that is meaningful to resource managers. (2) Can the metric be feasibly implemented? The most feasible metrics can be sampled and measured using methods that are technically sound, appropriate, efficient, and inexpensive. (3) Is the response variability understood? Every metric has an associated measurement error, temporal variability, and spatial variability, and the best metrics will have low error and variability compared to the variability in the ecological component or stressor it is designed to measure. In other words, good metrics have high discriminatory ability, and the signal from the metric is not lost in measurement error or environmental noise. Ideally the metric will be measured across a range of sites that span the gradient of stressor levels (DeKeyser et al. 2003), and verified to show a clear response to the stressor. (4) Is the metric interpretable and useful? The best metrics provide information on ecological integrity that is meaningful to resource managers.
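
One rough way to put a number on discriminatory ability is a signal-to-noise ratio: the variance among sites divided by the variance among repeat measurements at the same site. The sketch below assumes that repeat visits are available for each site. The statistic itself, the function name, and the data are illustrative assumptions, not a procedure prescribed by Kurtz et al. (2001).

```python
from statistics import mean, variance


def signal_to_noise(repeat_visits):
    """Ratio of among-site variance to average within-site variance.

    repeat_visits maps a site identifier to a list of two or more repeated
    measurements of the same metric at that site.
    """
    site_means = [mean(v) for v in repeat_visits.values()]
    # Variance of the site means; this still contains a small share of
    # measurement noise, so the ratio is a rough screening value only.
    signal = variance(site_means)
    # Average within-site variance (equal to the pooled variance when every
    # site has the same number of visits).
    noise = mean([variance(v) for v in repeat_visits.values()])
    return float("inf") if noise == 0 else signal / noise


# Hypothetical repeat measurements of one metric at three sites.
visits = {"site_a": [10, 12], "site_b": [20, 22], "site_c": [30, 28]}
print(round(signal_to_noise(visits), 2))
```

Metrics with a ratio near or below 1 are candidates for revision or removal, because differences among sites would be hard to separate from measurement noise.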

Part of the process of selecting metrics should include exploring the relationship between each metric and ecological condition, with explicit consideration of threshold behavior. Indicators with thresholds at or just beyond the limits of a system’s range of natural variability (Fig. 10.2a) can be excellent ecological integrity metrics, since in this case, moving beyond the normal range produces a marked change in the value of the indicator that should be easier to detect. Indicators with thresholds within but near the edge of the range of natural variability (Fig. 10.2b) can also be suitable ecological integrity metrics, because they can serve as an early warning of potential change. However, indicators with thresholds far inside or outside the range of natural variability (Fig. 10.2c, d) are usually poor ecological integrity metrics, since they can lead to false alarms or not show a change until after the ecosystem has fundamentally changed (although see below for a situation where Fig. 10.2d may be a good metric).
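
The four situations described above can be expressed as a small classifier that compares the location of a threshold with the range of natural variability. The text does not quantify "near" or "far", so the `edge_fraction` parameter (the share of the range width that counts as near an edge) and the category labels are assumptions made for illustration.

```python
def classify_threshold_position(threshold, nat_low, nat_high, edge_fraction=0.10):
    """Classify where a threshold sits relative to the natural range.

    Returns one of:
      "at_or_just_beyond"  strong candidate metric (Fig. 10.2a situation)
      "inside_near_edge"   early-warning metric (Fig. 10.2b situation)
      "far_inside"         prone to false alarms (Fig. 10.2c situation)
      "far_outside"        responds late (Fig. 10.2d situation)
    """
    width = nat_high - nat_low
    if width <= 0:
        raise ValueError("nat_high must be greater than nat_low")
    margin = edge_fraction * width
    strictly_inside = nat_low < threshold < nat_high
    # Distance from the threshold to the nearest limit of the natural range.
    distance = min(abs(threshold - nat_low), abs(threshold - nat_high))
    if distance <= margin:
        return "inside_near_edge" if strictly_inside else "at_or_just_beyond"
    return "far_inside" if strictly_inside else "far_outside"


# Hypothetical natural range of 0 to 100 for some metric driver.
for t in (105, 95, 50, 150):
    print(t, classify_threshold_position(t, 0, 100))
```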

Determine Assessment Points

Once you have selected metrics, review the list and think about how you plan to report ecological integrity to decision makers. Is it important for describing the overall condition of systems to be able to arrive at a single number representing ecological integrity derived from the suite of metrics, such as through an Index of Biotic Integrity or other modeling approach (Karr 1991)? Or, will it be more valuable to provide a set of metrics that reflects different components of the system’s overall integrity? A single value is often attractive because of its simplicity, but you risk oversimplification and difficulty interpreting its meaning. If you decide to combine a set of metrics into a single summary metric, any data analyses and modeling should be clearly documented, with assessment points usually developed for the summary metric rather than the component metrics. On the other hand, a suite of metrics can provide more nuanced insight into particular aspects of ecological integrity that may be at risk. In most situations, it will help to present a combination metric like an IBI as an overall summary, while also including some or all of its component metrics, which may have more direct management relevance and be easier to interpret.

For each final metric, establish assessment points that distinguish expected or acceptable conditions from undesired ones that warrant concern, further evaluation, or management action (Bennetts et al. 2007). Assessment points are “preselected points along a continuum of resource-indicator values where scientists and managers have together agreed that they want to stop and assess the status or trend of a resource relative to program goals, natural variation, or potential concerns” (Bennetts et al. 2007). Based on Bennetts et al., we define two categories of assessment points that are useful for ecological integrity reporting: ecological assessment points related to ecosystem condition, and management assessment points derived from the goals of resource managers. Types of management assessment points include surveillance assessment points that indicate when extra attention, research, and planning are needed; and action assessment points that define when management action should be taken. Two or more assessment points can share the same metric value, such as when action and ecological assessment points are identical. Alternatively, one category of assessment point may have multiple values, such as when one ecological assessment point represents the point where a system exceeds its range of natural variation and additional ecological assessment points indicate different levels of degradation.

Ecological assessment points are derived from some characterization of either natural or historical variability. Estimates of historical or natural variation in ecosystem attributes provide a reference for gauging the effects of current anthropogenic stressors, while at the same time recognizing the inherent natural variation in ecosystems across space, time, and stages of ecological succession (Landres et al. 1999). This may be empirically derived from the extant distribution of a metric across a defined spatial and temporal scale (especially of relatively pristine ecosystems like large wilderness areas or national parks), inferred from the best available information prior to meaningful anthropogenic disturbance (e.g., paleoecological reconstructions) or via models of ecosystem dynamics. In some cases, there are no relevant existing data for one or more metrics, and in these cases, initial assessment points should be established based on expert judgment or baseline data collection (e.g., the first 5 years of data, assuming the sample design is appropriate for this purpose). Although all of these provide useful insight, our understanding of historical and natural conditions in many ecosystems relies on a limited number of key studies, and care must be taken when extrapolating these data to other areas (Tierney et al. 2009). Whatever the source of the data, our understanding of the range of natural variation and any ecological assessment points based on this knowledge need to be periodically reviewed and updated to ensure that we are using the best available information for decisions.
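
When baseline data are the only source available, one simple empirical characterization of the range of variation is a pair of percentiles of the baseline values. The sketch below uses the 5th and 95th percentiles. That choice, the function name, and the data are assumptions for illustration, and other summaries may suit a given metric better.

```python
from statistics import quantiles


def baseline_range(baseline_values, lower_pct=5, upper_pct=95):
    """Return (low, high) percentile limits from baseline measurements.

    The "inclusive" method interpolates between observed values, so the
    limits never fall outside the observed minimum and maximum.
    """
    cuts = quantiles(baseline_values, n=100, method="inclusive")
    # cuts[k - 1] is the k-th percentile.
    return cuts[lower_pct - 1], cuts[upper_pct - 1]


# Hypothetical baseline values pooled over the first years of monitoring.
baseline = list(range(1, 22))
low, high = baseline_range(baseline)
print(low, high)
```

Limits derived this way inherit any anthropogenic influence already present in the baseline period, which is one reason the resulting assessment points need periodic review.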

If you are confident that a nonlinearity in a metric’s functional form corresponds to a true ecological threshold, it may make sense to use the threshold as an ecological assessment point rather than strictly relying on the range of natural variability. This aligns with the idea of ecological integrity including resistance to perturbation in addition to the historical or natural variation in the system (Parrish et al. 2003). Even though an ecosystem component may function within a certain range, it may be that integrity (as measured by the particular metric) does not change noticeably unless the range of natural variation is exceeded by a large amount (e.g., Fig. 10.2d). In this situation, ecological integrity may not be threatened by exceeding the range of natural variation, but it would be altered by exceeding the ecological threshold, so the latter point may be more suitable for an ecological assessment point.

In many cases, the point where a metric’s value indicates that an ecosystem has exceeded its range of natural variation (a critical ecological assessment point) can also be used as an action assessment point. This is the point where active steps need to be taken to bring the ecosystem back within the natural range. A separate surveillance assessment point can be established near this point but within the natural range of variation, indicating a need for vigilance and planning for potential corrective measures (Fig. 10.3). In other cases, particularly when metrics exhibit thresholds near an ecological assessment point, the placement of action and surveillance assessment points may need to be adjusted (Fig. 10.4). Setting an action assessment point near the ecological threshold will maximize the discriminatory ability of the assessment effort and help ensure that action is not taken in the absence of a real change in ecological integrity. A surveillance assessment point is best set where the metric’s value begins to enter the zone where small changes in the ecological state begin to produce a large effect on the metric (Fig. 10.4). In making these decisions, it is also important to consider the lag times associated with system response, because lagged responses can increase the need to anticipate a system’s approach to a threshold so that actions can prevent further degradation (Contamin and Ellison 2009).

Action and surveillance assessment points may be shifted from an ecological assessment point in some additional situations. One of these is when there is high uncertainty in measurements of a metric. In this case, one must balance the risk of delaying discovery of an ecological problem with that of falsely identifying a problem. If you determine the risk of delaying discovery to be more important, assessment points should be shifted inside the natural range of variation. If the risk of falsely reporting a problem is more important, then shift the assessment point outside the natural range of variation. You may also want to consider dropping this metric, improving the precision of the measurement, or quantifying measurement error through quality assurance and quality control procedures.
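
The direction of the shift described above can be written down explicitly. In this sketch the size of the shift is a multiple `k` of the measurement standard deviation. That scaling rule, the priority labels, and the function name are assumptions for illustration, since how far to shift a point is a judgment for scientists and managers to make together.

```python
def shift_assessment_point(natural_limit, measurement_sd, priority,
                           is_upper_limit=True, k=1.0):
    """Shift an assessment point away from a limit of the natural range.

    priority = "early_detection"   moves the point inside the natural range
    priority = "avoid_false_alarm" moves the point outside the natural range
    """
    offset = k * measurement_sd
    # For an upper limit, "inside the range" means a smaller value;
    # for a lower limit, it means a larger value.
    inward = -offset if is_upper_limit else offset
    if priority == "early_detection":
        return natural_limit + inward
    if priority == "avoid_false_alarm":
        return natural_limit - inward
    raise ValueError("priority must be 'early_detection' or 'avoid_false_alarm'")


# Hypothetical upper limit of 100 with a measurement standard deviation of 4.
print(shift_assessment_point(100, 4, "early_detection"))
print(shift_assessment_point(100, 4, "avoid_false_alarm"))
```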

Another type of situation occurs when resource managers have a goal other than ecological integrity, or when an ecosystem is already well outside of ecological integrity and interim recovery goals are needed. In these cases, ecological assessment points still serve as valuable, science-based benchmarks, but the action and surveillance points will likely be set relative to management targets or “utility thresholds” (Nichols et al. 2012) chosen for their relevance to decision makers. For example, managers of a historic site or a military base may be willing to accept some deviations from ecological integrity in order to preserve the historic scene or military readiness, and can benefit from working with scientists to set reasonable action and surveillance assessment points that protect ecological integrity as much as possible.

After establishing assessment points for each metric, you should thoroughly document the relevant spatial and temporal scales, information used in determining the natural range of variation and resistance to perturbation, implications of sampling uncertainty, and all decisions regarding where to place assessment points. These decisions may be based on statistical analyses, professional judgment (e.g., through discussions with resource managers), or they may be somewhat arbitrary. It is important to make the basis of all decisions clear and easily accessible in order to facilitate periodic reviews and revisions (Fancy et al. 2009).

Collect Data and Calculate Metrics

Some amount of data collection probably happened before metrics were chosen and assessment points defined, and this information is important to the previous steps and for future iterations of the ecological integrity framework. Existing data can be particularly valuable in determining whether metrics are feasible, with appropriately understood response variability (Kurtz et al. 2001). In many cases, though, the conceptual diagramming and metric selection process identifies new or different metrics that have not previously been collected or calculated, so new data and analyses are needed before indicators of ecological integrity can be estimated. Data collection should be matched to the desired spatial and temporal scale defined in the first step of the framework. This typically entails a sampling design focused on the statistical population, but it is also possible to use a well-chosen set of index sites to document site-specific trends, although this prohibits rigorous extrapolation to the full population.

Regularly scheduled new data collection and metric calculation, typically integrated into long-term ecological monitoring using detailed protocols (see Oakley et al. 2003 for guidelines), is essential for providing up-to-date ecological integrity data to decision makers. An extensive longitudinal data set for a population of sites provides a foundation for testing hypotheses about relationships among ecological components and stressors that are based on the conceptual diagram, and facilitates updating the diagram. Longitudinal data also help to clarify temporal variability of metrics and can uncover metrics that are highly correlated and thus duplicative and unnecessary for continued use in ecological integrity reporting.
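
Screening for duplicative metrics can start with pairwise correlations across sites or visits. The sketch below flags metric pairs whose Pearson correlation exceeds a cutoff. The cutoff of 0.9, the metric names, and the data are hypothetical, and a flagged pair should prompt review rather than automatic removal.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (neither constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def redundant_pairs(metrics, cutoff=0.9):
    """List (metric_1, metric_2, r) for pairs with |r| at or above the cutoff.

    metrics maps a metric name to its values, ordered by the same sites.
    """
    flagged = []
    for (name1, v1), (name2, v2) in combinations(metrics.items(), 2):
        r = pearson(v1, v2)
        if abs(r) >= cutoff:
            flagged.append((name1, name2, round(r, 3)))
    return flagged


# Hypothetical metric values at five sites.
metrics = {
    "tree_regeneration": [1, 2, 3, 4, 5],
    "seedling_density": [2, 4, 6, 8, 10],
    "snag_abundance": [5, 1, 4, 2, 3],
}
print(redundant_pairs(metrics))
```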

Report Results

The final step in the ecological integrity framework is to ensure that results reach the hands of decision makers in a timely manner and in a format that is accessible and useful. This can be a one or two page “brief” that presents the highlights for upper-level administrators or a longer report with more detail for resource managers. Regardless of format, the information should describe the spatial and temporal scale and refrain from extrapolating beyond the data. It should include a simple summary that illustrates metric values for sites or management units in relation to the established assessment points, plus audience-appropriate explanations of each metric and key findings and recommendations. Ecological integrity reports also need to highlight measurement or other uncertainties, including uncertainties about ecological assessment points.

Although a variety of reporting approaches are possible, some of the authors (B. Mitchell, G. Tierney, and K. Miller) have had success using a “stoplight” system, where “Good” (green) represents an acceptable condition, “Caution” (yellow) indicates that the surveillance assessment point has been passed and a problem may exist, and “Significant Concern” (red) means that the action assessment point has been passed and that an undesirable condition exists that may require management correction (Tierney et al. 2009). A similar approach would categorize the condition of sites as “Good,” “Moderate,” and “Poor” (e.g., James-Pirri et al. 2012). It is important not to raise a false alarm when historical information or the data have high levels of uncertainty. One way to avoid this pitfall is by avoiding use of the “Significant Concern” category for metrics where there is uncertainty about the location of an ecological assessment point; in these cases, it may help to define a “Caution” or surveillance assessment point and defer decisions on other assessment points until additional data are available.
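
The stoplight categories map directly onto a small classification function. The sketch below assumes that a point counts as "passed" when the metric value reaches or exceeds it in the worsening direction, and it allows the action assessment point to be left undefined so that an uncertain metric can only be rated "Good" or "Caution". The function name and parameters are hypothetical.

```python
def stoplight(value, surveillance_point, action_point=None, higher_is_worse=True):
    """Return "Good", "Caution", or "Significant Concern" for one metric value.

    Pass action_point=None when the action assessment point has been deferred;
    the rating then never goes beyond "Caution".
    """
    # Work in a frame where larger numbers always mean worse condition.
    sign = 1.0 if higher_is_worse else -1.0
    v = sign * value
    s = sign * surveillance_point
    a = None if action_point is None else sign * action_point
    if a is not None and a < s:
        raise ValueError("action point must lie at or beyond the surveillance point")
    if a is not None and v >= a:
        return "Significant Concern"
    if v >= s:
        return "Caution"
    return "Good"


# Hypothetical metric where higher values indicate worse condition.
for value in (3, 7, 12):
    print(value, stoplight(value, surveillance_point=5, action_point=10))
```

The same function can be applied to every metric and site in a monitoring data set to populate a summary table for a tiered report.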

In our experience, the most effective reporting approach has been a tiered system, with short summaries pointing the way to a more detailed report that contains links or references to the most detailed raw data, descriptions of the conceptual diagram, metrics, assessment points, and data collection methods. Tiered reports allow decision makers to start with the simplest summaries, and drill down to the level of detail that is most appropriate for them. This approach also ensures maximum transparency, by making it easy to find the raw data, rationale for the choice of metrics, justifications for the assessment points, and data collection methods.

Wetland Ecological Integrity in Rocky Mountain National Park

The National Park Service (NPS) Rocky Mountain Inventory and Monitoring Network (ROMN) is using the ecological integrity framework to monitor and report the condition of wetlands in several park units. Here we focus on the process followed in Rocky Mountain National Park (ROMO). ROMO is a large park in the North Central Rockies of Colorado. Most of the park is designated wilderness, and it has important wetland resources that support iconic wildlife such as elk and beaver.

Define Scale and Develop Conceptual Diagram

The primary spatial scales of interest for wetland monitoring in ROMO included specific individual wetlands as well as the complete population of wetlands across the park. Most sites were selected using a spatially balanced survey design (Stevens and Olsen 2004) that allows unbiased estimation (Olsen et al. 1999) for the population of wetlands in the park. Because implementing a survey in a park like ROMO is expensive, these sites are sampled across time using a paneled structure (Urquhart and Kincaid 1999). Additional annual monitoring is conducted at a subset of hand-picked “sentinel” wetlands that are either representative of key wetland types in the park or have management significance. Sentinel sites allow more detailed treatments of the ecology of place (Billick and Pierce 2010) and are more efficient to monitor, but do not statistically represent wetland resources throughout the park. The temporal scale of interest is long term. Shorter term variation is important and the sample design and analyses attempt to accommodate it, but the true power and utility of the approach may not be realized for several years.

As with all NPS monitoring networks, ROMN developed conceptual diagrams as part of its general monitoring plan (Britten et al. 2007). These were revisited during the development of the wetland monitoring protocol (Fig. 10.5) (Schweiger et al. 2010a, 2010b) to ensure that park-specific drivers and stressors were included (in ROMO, beaver and ungulate herbivory), as well as more global threats like anthropogenic hydrological alterations (Gage and Cooper 2009), climate change (Field et al. 2007), and aerial nitrogen deposition (Baron et al. 2009).

Select Metrics

Metrics were selected by the ROMN using two strategies. First, several were defined a priori based on conceptual diagramming. Second, and perhaps more importantly, a set of metrics was developed using a large pilot effort in the park and a series of analyses and models (summarized below). This ensured that the metrics would be scale-appropriate, ecologically responsive, efficient, and logistically feasible given budget constraints. Using pilot data also allowed the ROMN to work with resource managers to evaluate the management relevance of candidate metrics. All metrics were related to ecosystem composition, structure, function, and key stressors: the core elements of ecological integrity.

Compositional metrics were based on the wetland vegetation assemblage, which was particularly important at ROMO because wetlands are biodiversity hotspots, containing approximately 37 % of the park’s plant taxa within less than 4 % of its area (Schweiger et al. 2010b). An a priori decision was made to focus on wetland vegetation as the primary biological response measure given cost considerations, the integrative and likely sensitive response of vegetation to wetland disturbance (Mack 2001), and its central role in nearly all wetland functions. Vascular and nonvascular vegetation was sampled using a suite of nested plots at each site (Peet et al. 1998), and the data were developed into both individual metrics and Indices of Biotic Integrity (IBI) for each wetland type.

Wetland extent was the primary structural metric. This is because larger wetlands are likely better buffered from disturbance; their vegetation typically remains more intact and diverse (Risvold and Fonda 2001); and hydrologic services like water storage and purification function more naturally (Cooper et al. 2006; Mitsch and Gosselink 2007). Extent was quantified using the survey design and analysis of field assays of individual wetland complex area and type.

Hydrology can serve both a structural and a functional role in wetlands, and was also selected as a core metric. The hydrology of a wetland is likely one of the most important drivers of its extent, type, and condition (Gage and Cooper 2009), but because hydrology primarily affects wetlands via patterns in hydrologic variability, it is a difficult and expensive metric to monitor. ROMN measured instantaneous ground water depths at the peak of vegetation growth and development, when deviations from the range of natural variation should be most meaningful. ROMN also continuously recorded water table depths at sentinel sites and will integrate these more meaningful data in the future.

Other functional metrics were related to water chemistry and wetland soils. For water chemistry, ROMN focused on data that could be collected using a hand-held probe: pH, specific conductance, and temperature. Nutrients and other analytes were considered, but ROMN decided that the laboratory costs would be too high. The network addressed wetland soils by determining percent organic matter, depth of peat, and a suite of structural aspects like texture and horizon depths at their monitoring sites. A more detailed set of parameters, including minerals, soil pH, carbon and nitrogen content, and cation exchange capacity, was measured at sentinel sites, and these more complete characterizations of the soil resource will be integrated in the future.

The ROMN wetland conceptual diagram included stressors with strong hypothesized or known effects on wetland ecological integrity. Anthropogenic disturbance was estimated at the site scale, meso scale (wetland buffers), and landscape scale (the catchment of each wetland) through a series of measures of land use and cover, hydrologic alterations, and physical/chemical disturbances. Example response measures included estimates of intensive land use such as roads, trails, structures, dams, and ditches that have been shown to strongly influence wetland condition (Mack 2007; Lemly and Rocchio 2009). The individual disturbance indicators were combined into a Human Disturbance Index (HDI) following an approach similar to Faber-Langendoen et al. (2006) and Lemly and Rocchio (2009). The HDI provides an independent measure of wetland condition against which vegetation attributes can be assessed to determine their relationship with human disturbance.

To incorporate the important role of natural disturbances in the park, several measures of stress not directly due to anthropogenic factors were developed. For example, beavers play an important role in shaping and maintaining wetlands in the park (Baker et al. 2005), and the network included a metric of the extent of beaver presence in ROMO wetlands. Similarly, the large elk herd at the park is a stressor to woody species like Salix spp. (Baker et al. 2005), and ROMN defined three browse metrics, including percent of dead stems, percent of crown dieback, and percent of browsed live stems.

Determine Assessment Points

Several of the ROMN’s ecological assessment points were developed based on existing literature (especially Faber-Langendoen et al. 2006 and Lemly and Rocchio 2009), collective experience with wetlands in the park, and discussions with park resource managers. This was the case for wetland extent, fen hydrology, some water chemistry parameters, most soil metrics, and elk browse. These responses had some existing science to support their assessment points, but that science was not always specific to ROMO and therefore may not necessarily reflect wetland ecology in the park. These assessment points represent a starting point for assessing ecological integrity, and will be reviewed as more data are collected and additional research is conducted.

A key element of the ROMN approach is the empirical development of park-specific reference conditions and ecological assessment points for wetland vegetation. The ROMN protocol adopted and modified methods for quantifying reference distributions and ecological assessment points created over the last two decades (Stoddard et al. 2006). The ROMN felt this was necessary because of the paucity of established assessment points or relevant thresholds for wetland vegetation, as well as the possible inappropriateness of applying existing regional research results to ROMO. National parks like ROMO are often unique landscapes with largely intact habitats and few of the anthropogenic stressors that structure wetland condition in more developed landscapes. There are gradients in human disturbance across the park, but they encompass a different range than broader landscapes and likely reflect different stressors.

The ROMN approach required several steps and was based on pilot data from over 300 sample events at 140 sites collected between 2007 and 2009. First, data were classified into three wetland types (fens, wet meadows, and riparian) based on extensive prior wetland classification work in the region (Cooper 1998). Second, the HDI was generated for each site, and each site was assigned to an a priori disturbance class based on Colorado Natural Heritage Program break points established using professional judgment (Fig. 10.6). Third, metrics that described distinct responses of vegetation to anthropogenic disturbance were generated. Examples of metrics include percent invasive species (which might be expected to increase with disturbance), mean conservatism score (a measure of the fidelity of plant species to intact or degraded habitat that decreases with disturbance; Wilhelm and Masters 1995), and percent moss cover (which tends to decrease with disturbance). In total, ROMN created over 130 candidate metrics. The best metrics were selected from the full list by choosing the ones that were most strongly predictive of the anthropogenic disturbance gradient and that passed various statistical tests (including information content, reproducibility, independence from other metrics, and interpretability; Stoddard et al. 2008). The final metrics had meaningful responses to disturbance, were ecologically interpretable, were not redundant, and had favorable precision. ROMN also looked at relationships with environmental gradients like elevation and precipitation. If a metric responded to a natural environmental driver in the reference set of wetlands, ROMN statistically adjusted the data to remove the influence (Stoddard et al. 2008). This step was important in ROMO because several metrics did covary with environmental features, and these relationships can confound our ability to detect a response to the HDI.
Finally, the best metrics were summed and scaled to range from 0 to 10, with ecological integrity increasing with the score. This final combined metric is an Index of Biological Integrity (IBI; Karr 1991; Mack 2001; Miller et al. 2006; Mack 2007) that the ROMN interprets as a synthetic estimate of wetland ecological integrity in the park.
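As a rough illustration of the final scoring step, the sketch below combines a few component metrics into a 0 to 10 index. The source does not describe the exact scaling used by ROMN, so the min-max rescaling, the equal weighting, the function names, and the three-site data set are all assumptions made for illustration only.

```python
def rescale(values, higher_is_better=True):
    """Min-max rescale a list of per-site values to the 0-1 interval."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # No spread across sites: the metric carries no information here.
        return [0.0 for _ in values]
    scaled = [(v - lo) / (hi - lo) for v in values]
    # Invert metrics that increase with disturbance so 1 always means "intact".
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def ibi_scores(metrics, higher_is_better):
    """Average the rescaled metrics per site and express the result on 0-10."""
    names = list(metrics)
    scaled = {n: rescale(metrics[n], higher_is_better[n]) for n in names}
    n_sites = len(metrics[names[0]])
    return [10.0 * sum(scaled[n][i] for n in names) / len(names)
            for i in range(n_sites)]


# Hypothetical values for three sites (not ROMN data).
metrics = {
    "mean_conservatism": [7.0, 5.0, 3.0],
    "percent_invasive": [0.0, 10.0, 40.0],
    "percent_moss": [30.0, 15.0, 0.0],
}
higher_is_better = {
    "mean_conservatism": True,
    "percent_invasive": False,  # increases with disturbance, so it is inverted
    "percent_moss": True,
}
scores = ibi_scores(metrics, higher_is_better)
print([round(s, 2) for s in scores])  # [10.0, 5.83, 0.0]
```

Inverting the metrics that increase with disturbance before summing is what makes the combined score rise with ecological integrity, as the text requires.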

Fig. 10.6 Map of Human Disturbance Index (HDI) values in ROMO wetlands (all types). HDI ranges from ~0 to ~100. Reference, impacted, and highly impacted sites as defined by Colorado Natural Heritage Program (CNHP) arbitrary breakpoints (<33.67 reference, 33.67–66.67 impacted, and >66.67 highly impacted) are indicated by the size and color of each point (larger circles and redder colors indicate sites with more human disturbance). A clear gradient exists from high-to-low HDI scores with higher disturbance in low elevation front country wetlands on both the east and west sides of the park.

The final IBI for each of the three wetland types contained between four and six component metrics that described a broad spectrum of wetland vegetation response to disturbance. The component metrics were distinct for each wetland type, but metrics based on either species invasiveness or species conservatism occurred in each IBI. While the component metrics all had significant relationships with disturbance, each final index had stronger relationships (R² between 0.30 and 0.61) with the HDI than the individual component metrics. This result suggests that the indices were integrating ecological response and were likely meaningful indicators of the ecological integrity of wetlands in the park (Karr and Chu 1997).

To incorporate relevant ecological thresholds and establish ecological assessment points, the ROMN conducted a series of analyses to define condition classes specific for each wetland type. Regression tree models were used to determine change points in a predictor variable that best distinguished groups of values of a response variable. Reciprocal regression tree analyses (De’ath and Fabricius 2000) were conducted, one using IBI values as the predictor and HDI as the response and the other with the roles reversed, to estimate a threshold for each variable. Figure 10.7 is a riparian wetland example of the relationship between IBI and HDI, including the calculated threshold values. All three IBIs were best split into only two classes: “reference” or “nonreference.” Importantly, these ratings are specific to ROMO. In other landscapes, only two classes might suggest the final model was not very precise, but the ROMN believes this accurately describes the distribution in the park. Wetlands near visitor facilities, roads, and some park boundaries were often disturbed while wetlands in the wilderness backcountry were largely intact. Finally, all IBI models strongly discriminated among HDI classes, suggesting that there were ecological thresholds in the park’s wetland vegetation communities that were suitable for use as ecological assessment points, and that it will be possible to place novel sites into the correct ecological integrity category most of the time (Hawkins et al. 2000).
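The idea behind the change-point search can be sketched with a single-split regression tree: the split value of the predictor is chosen to minimize the summed within-group squared error of the response. This is a simplified stand-in for the published analysis, not a reproduction of it; the function names and the eight-site data set are hypothetical, and a full regression tree analysis would also involve pruning and cross-validation.

```python
def sum_squared_error(values):
    """Sum of squared deviations from the group mean."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(predictor, response):
    """Return the predictor value that best separates the response into two groups."""
    pairs = sorted(zip(predictor, response))
    best_threshold, best_cost = None, float("inf")
    for i in range(1, len(pairs)):
        left_x, right_x = pairs[i - 1][0], pairs[i][0]
        if left_x == right_x:
            continue  # cannot split between identical predictor values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        cost = sum_squared_error(left) + sum_squared_error(right)
        if cost < best_cost:
            # Place the threshold midway between the neighboring predictor values.
            best_threshold, best_cost = (left_x + right_x) / 2, cost
    return best_threshold


# Hypothetical sites: low-disturbance sites score high on the IBI.
hdi = [5, 10, 15, 20, 40, 50, 60, 70]
ibi = [8.0, 7.5, 8.2, 7.8, 3.0, 2.5, 3.2, 2.8]

hdi_threshold = best_split(hdi, ibi)   # HDI as predictor, IBI as response
ibi_threshold = best_split(ibi, hdi)   # reciprocal analysis
print(hdi_threshold, round(ibi_threshold, 2))  # 30.0 5.35
```

Running the search in both directions yields one threshold on each axis, which corresponds to the vertical and horizontal lines drawn on a scatter plot of IBI against HDI.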

Fig. 10.7 Scatter plot of ROMO riparian wetland IBI vs. HDI. Linear model and Pearson correlations in inset show strong relationship with HDI. Classification error rate was derived using cross-validation models. Ecological assessment points based on threshold values (vertical and horizontal lines on the figure with corresponding scores of HDI = 30.25 and IBI = 4.94) were generated using regression tree models. (See Schweiger et al. (2010b) for details.)

Both the reference and nonreference classes were characterized by a range of values at the appropriate scale (park rather than state or ecoregion). For reference sites, this reflects the natural range of variability, and for disturbed sites, it reflects variation due to human impacts plus the underlying natural variability. One of the primary reasons for the modeling effort conducted by the ROMN was to define the park-specific condition gradient from reference to impacted. This gradient in ROMO may be quite different from that of the larger landscape; a wetland designated nonreference in ROMO might be considered relatively intact if the scale of the assessment were broader.

Because most of the sample sites used to generate the IBI models were from a survey design, the ROMN used design-based analysis (Olsen et al. 1999) with the IBI-based ecological assessment points (and many other metrics, see Schweiger et al. 2010b) to estimate wetland condition at the population scale. Fig. 10.8 shows an example of one of the key outputs from these analyses using the ROMO fen IBI—a cumulative distribution function (CDF). Generally speaking, a CDF is an “area so far” function of the probability distribution for a response or a metric. Graphical presentations of CDFs aid visualization of the probability distribution and readily facilitate interpreting thresholds placed within the distribution by locating the percentage of the response that is above or below the threshold. Using the threshold of 5.64 in the IBI generated from the regression tree analysis, the proportion of all fens in the park in an impacted and a reference state are shown graphically in Fig. 10.8. Sixty-nine percent (± 20 %) of ROMO fen habitat is in a reference state and 31 % (± 20 %) is in a nonreference condition.

Fig. 10.8 Cumulative distribution function (CDF) of ROMO fen IBI. Green and red regions on the figure are based on where the ecological assessment point of 5.64 intersects the curve. Using the properties of a CDF, this defines the percentage (or area) of the ROMO fen resource that is in impacted (30.8 ± 20 %) and reference (69.2 ± 20 %) condition classes. (See Schweiger et al. (2010b) for details.)
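Reading a condition estimate off a CDF amounts to evaluating the weighted empirical distribution at the assessment point. The sketch below shows that single step, using the fen assessment point of 5.64 from the text; the site scores and survey weights are invented for illustration, the function name is hypothetical, and the design-based variance estimation needed for the confidence bounds is deliberately omitted.

```python
def weighted_cdf_at(values, weights, threshold):
    """Weighted proportion of the resource with a value at or below the threshold."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    below = sum(w for v, w in zip(values, weights) if v <= threshold)
    return below / total


# Hypothetical fen IBI scores and survey design weights (not ROMN data).
fen_ibi = [3.1, 4.8, 5.2, 6.0, 7.4, 8.1]
weights = [1.0, 1.0, 2.0, 2.0, 2.0, 2.0]

ASSESSMENT_POINT = 5.64  # fen IBI threshold reported in the text
nonreference = weighted_cdf_at(fen_ibi, weights, ASSESSMENT_POINT)
reference = 1.0 - nonreference
print(f"nonreference: {nonreference:.0%}, reference: {reference:.0%}")
```

The weights stand in for the area or number of wetlands each sampled site represents under the survey design, which is what allows a sample of sites to describe the whole population of fens.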

These analyses and results define the baseline of wetland condition for long-term monitoring in ROMN. Additionally, the ROMN approach to developing metrics and assessment points facilitates the distillation of large volumes of ecological data into concise results that decision makers can use for resource management.

Collect Data and Calculate Metrics

Select elements of the pilot summarized above also served as the initial monitoring effort for wetlands in the park. The ROMN is currently reviewing what worked and what did not within the pilot and finalizing long-term plans for continued wetland monitoring in ROMO and other NPS units. Current plans include statistical sampling of the park’s wetland population every 5–10 years, plus annual monitoring at four sentinel wetland complexes (Schweiger et al. 2010a). This frequency of data collection and recalculation of ecological integrity metrics will ensure that current information is available for resource managers, and that they have time to plan and react to changing conditions. The ROMN is also working directly with the park to develop related management assessment points based on the ecological assessment points developed during the pilot, the management needs of the park, and the precision of the wetland protocol.

Report Results

Now that ROMN has completed the pilot and its initial assessment of wetland ecological integrity in the park, the network is developing a suite of products to convey the results to managers, the public, and others. ROMN is developing concise “resource briefs” suitable for nonscientists, plus website content and other summaries. These documents will link to more detailed products that will include metric and assessment point justifications, field sampling methods, and analysis details (Schweiger et al. 2010a). This means that park managers and other stakeholders will have access to relevant summary information on wetland ecological integrity at ROMO, and will have the option of digging deeper to investigate the science behind each assessment.

Forest Ecological Integrity in Northeastern National Parks

Another example of the ecological integrity framework in action is forest monitoring in northeastern national parks. The NPS Northeast Temperate Inventory and Monitoring Network (NETN) monitors forests in ten national parks, including Acadia National Park (ACAD), Marsh-Billings-Rockefeller National Historical Park (MABI), and Morristown National Historical Park (MORR). Covering over 14,000 ha, ACAD is situated on the coast of Maine, and is dominated by second-growth spruce–fir forests that have had minimal management for nearly 100 years. MABI is a small (225 ha) park in rural Vermont with an ongoing forestry operation. The park is dominated by forest land, which consists of a patchwork of northern hardwoods and monoculture conifer plantations. MORR, a 691 ha park in suburban New Jersey, is predominantly northern hardwoods and is heavily impacted by invasive species and browsing by white-tailed deer. These three parks are the focus of this example, although NETN monitors and reports forest ecological integrity for the larger group.

Define Scale and Develop Conceptual Diagram

NETN defined its scale for forest ecological integrity as the long-term monitoring of forest condition (and more open woodland communities at ACAD) within park boundaries during the summer season. Within this population, permanent plots were stratified by park and selected using a spatially balanced random sample (Stevens and Olsen 2004). Like the Rocky Mountain Network, NETN developed a conceptual diagram for forests during the development of their monitoring plan (Mitchell et al. 2006; Fancy et al. 2009) and used this diagram during the metric selection process. NETN evaluates ecological integrity of forested systems using the plot data and 13 metrics of ecological composition, structure, and function that are broadly applicable across northeastern temperate forests (Tierney et al. 2009, 2010).

Select Metrics

NETN uses five composition metrics. Tree regeneration indicates the quantity and composition of established tree seedlings and therefore of potential future canopy composition, and is substantially impacted by a historically large eastern US population of white-tailed deer (Odocoileus virginianus) (Cote et al. 2004). Tree condition, based on qualitative observations of disease, pests, pathogens, and canopy foliage problems, provides an early warning indicator of infestation, disease, or decline of one or more species. Biotic homogenization is the process by which regional biodiversity declines over time, due to the addition of widespread exotic species and the loss of native species (Olden and Rooney 2006); this metric can be calculated between site pairs as a simple ratio of the number of species present at both sites to the total number of species present at either site (Jaccard’s Similarity Index; Olden and Poff 2003). Invasive exotic plant species exploit and alter habitat, and are monitored by recording the frequency of 22 exotic species that are highly invasive in northeastern forest, woodland, and successional habitats. Deer browse can affect understory plant composition in addition to tree regeneration (Augustine and DeCalesta 2003), and NETN monitors the change between monitoring events in the abundance of common preferred browse species and unpalatable species.
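The Jaccard calculation mentioned above is simple enough to show directly. In this minimal sketch the function name and the two species lists are hypothetical; returning 0.0 for two empty lists is an arbitrary convention chosen here, since the index is undefined in that case.

```python
def jaccard_similarity(site_a, site_b):
    """Species shared by both sites divided by species found at either site."""
    a, b = set(site_a), set(site_b)
    union = a | b
    if not union:
        return 0.0  # convention for two empty species lists (an assumption)
    return len(a & b) / len(union)


# Hypothetical species lists for two plots.
plot_1 = ["Acer saccharum", "Fagus grandifolia", "Berberis thunbergii"]
plot_2 = ["Acer saccharum", "Berberis thunbergii", "Picea rubens", "Abies balsamea"]

similarity = jaccard_similarity(plot_1, plot_2)
print(similarity)  # 0.4 (2 shared species out of 5 in total)
```

An increase in pairwise similarity between monitoring events would suggest that sites are converging on the same species pool, which is the signal of homogenization.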

The network’s structural forest ecological integrity metrics include two landscape metrics and three stand-level metrics. Forest patch size strongly impacts habitat suitability for a variety of taxa (Fahrig 2003), with larger forest patches supporting larger populations of fauna and more native, specialist, and forest interior-dwelling species. Human land use, based on the percentage of land area containing human land use versus “natural” land use within a 50 ha (400 m radius) circle around each forest plot, is used to estimate the impacts of habitat loss within a local neighborhood. The stand-level metrics are stand structural class, snag abundance, and coarse woody debris (CWD) volume. Using the method of Goodell and Faber-Langendoen (2007), NETN calculates stand structural stage from tree size and canopy position measurements; this metric helps the network assess altered disturbance regimes coincident with global change and exotic pest and pathogen outbreaks (Dale et al. 2001). Dead wood, in the form of snags (standing dead trees) and fallen CWD, is an important structural component that provides necessary habitat for many forest taxa. Silviculture and land management often reduce the quantity and quality of dead wood, but ecologically based land management can retain or enhance these features (Keeton 2006).

The NETN also selected three metrics of ecosystem function: canopy tree growth and mortality, acid stress, and nitrogen saturation. Decreased growth or elevated mortality rates may indicate a particular health problem, such as sugar maple decline (Duchesne et al. 2003), or may indicate a regional environmental stress (Dobbertin 2005). Acid stress (primarily from atmospheric deposition) is measured in forest soil based on the molar ratio of calcium to aluminum (Cronan and Grigal 1995). Nitrogen saturation (also from atmospheric deposition) may exacerbate the effects of acidification (Aber et al. 1998) and is measured in forest soil based on the ratio of carbon to nitrogen.

Determine Assessment Points

Once the metrics were identified, the NETN developed assessment points. NETN established action and surveillance assessment points based on ecological assessment points, using existing research whenever possible. If the available data suggested a range of values for the limits of natural variation, the network typically created a surveillance assessment point at the lower (more “natural”) value and an action assessment point at the higher (less “natural”) value (Tierney et al. 2010). Because NETN’s field methods and many of their metrics were closely related to methods used by the well-established US Forest Service’s Forest Inventory and Analysis (FIA) program (http://www.fia.fs.fed.us/), in some cases there were scientifically-based ecological assessment points or existing baseline data to facilitate the process. For example, assessment points for the tree regeneration metric were partly based on FIA research (McWilliams et al. 2005), using a stocking ratio metric and associated assessment point that varies by forest type and is partly based on a proposed metric for detecting ungulate impacts in forests (Sweetapple and Nugent 2004). In other cases, the FIA data were used as baseline data. This was the case for the tree growth rate assessment points, which were based on FIA regional and species-specific patterns (Tierney et al. 2010). A few NETN assessment points rely on comparisons to baseline data collected by the NETN. For the biotic homogenization and indicator browse metrics, assessment points were based on the changes from the baseline condition rather than a comparison to predetermined values, given the challenges of establishing historical baselines for these metrics (Tierney et al. 2010).

Most NETN assessment points were established by reviewing and applying existing research, and this is the primary place where the existence of ecological thresholds played an integral role in the process. For example, Aber et al. (2003) compiled data from sites across the northeastern USA and discovered that nitrification increased sharply below a C:N ratio of 20–25. Additionally, the Indicators of Forest Ecosystem Functioning (IFEF) database compiled data from sites across Europe and found that, below a C:N ratio of 25, overall nitrate leaching was significantly higher and more strongly correlated to nitrogen deposition (MacDonald et al. 2002). NETN used this research to establish a surveillance assessment point (“Caution” rating) at a C:N ratio of 25, and an action assessment point (“Significant Concern” rating) at a C:N ratio of 20. Ecological thresholds are also present in the minimum habitat patch sizes needed to support species. Kennedy et al. (2003) reviewed the available research, and found that minimum patch areas ranged up to 1 ha for invertebrates, up to 10 ha for small mammals, and up to 50 ha for the majority (75 %) of bird species, with much bigger patch sizes needed to support large mammals. The relatively small parks for which the NETN metric was designed could not independently support large mammal populations. Therefore, the network chose ecological assessment points based on the threshold patch sizes needed to support birds, small mammals, and invertebrates.
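The two threshold examples in this paragraph can be expressed as a short sketch. The C:N cutoffs of 25 and 20 come from the text, as do the upper minimum patch areas from the Kennedy et al. (2003) review; the function names, the treatment of a ratio exactly at a cutoff as not yet passed, and the use of the review's upper bounds as simple lookup values are assumptions, and the lookup is not a statement of NETN's actual patch size assessment points.

```python
def rate_cn_ratio(cn_ratio: float) -> str:
    """Rate a forest soil carbon-to-nitrogen ratio (lower is worse)."""
    if cn_ratio < 20:
        return "Significant Concern"  # beyond the action assessment point
    if cn_ratio < 25:
        return "Caution"              # beyond the surveillance assessment point
    return "Good"


# Upper end of the minimum patch areas (ha) summarized in the text.
MIN_PATCH_AREA_HA = {
    "invertebrates": 1.0,
    "small mammals": 10.0,
    "most bird species": 50.0,
}


def groups_supported(patch_area_ha: float):
    """List the taxonomic groups whose minimum patch area is met."""
    return [group for group, minimum in MIN_PATCH_AREA_HA.items()
            if patch_area_ha >= minimum]


print(rate_cn_ratio(30.0))     # Good
print(rate_cn_ratio(22.0))     # Caution
print(rate_cn_ratio(18.0))     # Significant Concern
print(groups_supported(12.0))  # ['invertebrates', 'small mammals']
```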

Collect Data and Calculate Metrics

NETN has been collecting data annually since 2006 at 350 fixed plots. Plot numbers vary across the ten parks, from as few as ten plots (one plot for every 2 forested hectares) at Weir Farm National Historic Site to as many as 176 plots (one plot for every 73 forested hectares) at ACAD. Half the plots at each of the network’s small parks are sampled every other year, and a quarter of ACAD’s plots are sampled each year (Tierney et al. 2010). Metrics are automatically calculated by the network’s monitoring database at least once per complete sampling cycle (every 4 years). Interim calculations are often produced using the current year’s data or a rolling window of the most recent 4 years. This frequency of data collection and metric calculation ensures that there are always current data available to address the needs of park managers.
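An interim calculation over a rolling window might look like the following sketch, which keeps the most recent visit to each plot within the last 4 years and averages across plots. The record layout, function name, and values are hypothetical, and the network's monitoring database may well compute its interim summaries differently.

```python
def rolling_window_mean(records, current_year, window=4):
    """Mean of the latest value per plot among visits in the last `window` years.

    `records` is an iterable of (plot_id, year, value) tuples.
    """
    latest = {}
    for plot_id, year, value in records:
        if current_year - window < year <= current_year:
            # Keep only the most recent visit to each plot inside the window.
            if plot_id not in latest or year > latest[plot_id][0]:
                latest[plot_id] = (year, value)
    if not latest:
        return None  # no plots were visited inside the window
    return sum(value for _, value in latest.values()) / len(latest)


# Hypothetical visits: one panel of plots is sampled each year of a 4-year cycle.
visits = [
    ("A", 2006, 10.0),
    ("B", 2007, 20.0),
    ("C", 2008, 30.0),
    ("D", 2009, 40.0),
    ("A", 2010, 14.0),  # plot A is revisited when the cycle restarts
]
print(rolling_window_mean(visits, 2009))  # 25.0
print(rolling_window_mean(visits, 2010))  # 26.0
```

Because each plot contributes only its latest visit, the window always covers one full sampling cycle without counting any plot twice.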

Report Results

An integral part of the Northeast Temperate Network’s forest monitoring is producing a variety of reports that ensure park managers are aware of current forest conditions, that they have information explaining these conditions, and that they have access to the raw data if they need to explore the summary information more fully. The foundation of the NETN approach is simple summary tables (e.g., the comparison of three parks in Table 10.1) that provide an intuitive scorecard for managers and help them see the status of their park and how it compares to other network parks. Most of the metrics in the table are assessed park-wide or by management unit (for some of the larger parks). A few metrics, such as tree condition and tree regeneration, can be assessed accurately at the plot scale, allowing for the use of pie charts to convey the proportion of the park’s forest in each assessment category (Table 10.1).

The scorecard table suggests that ACAD is doing well, with all park-wide metrics within the ecological assessment points, and a small percentage of plots indicating poor integrity for the tree condition and regeneration metrics. Conditions at MABI warrant close ongoing observation, since many of the metrics had scores between the surveillance and action assessment points. In addition, tree regeneration is likely inhibited at the park, and nitrogen saturation may have reached problematic levels. This park has chosen to be proactive about forest condition, and projects ranging from extensive invasive plant removal to silvicultural actions that increase snag and coarse woody debris abundance will likely improve the park’s scores in the future. MORR’s ratings are more checkered than those of the other parks. Although the structural and functional metric ratings indicate a reasonably good condition (albeit with excess nitrogen deposition), the compositional metrics indicate some problems. In particular, invasive plants are having a significant effect on plant diversity, and overabundant deer have effectively eliminated tree regeneration in much of the park. Both of these issues are high priorities for park managers.

While the ecological integrity scorecard is great for an at-a-glance summary, NETN makes sure to supplement the scores with additional details that include the actual values and assessment points for each metric, as well as discussion about the implications of the scores and possible corrective actions (e.g., Miller et al. 2010b). This information is reported at the park level, and when possible (for parks with more plots), the scores and interpretation are provided for management units within each park. Network staff also produce a series of resource briefs that highlight key information from the more technical report (e.g., Fig. 10.9); these publications are often popular with higher level managers as well as park education staff and interested members of the public. The scorecard report and resource briefs always provide citations or links to additional information, including the monitoring protocol that documents the ecological integrity metrics and assessment points (Tierney et al. 2010). All of these reports and communication tools are intended to support and supplement (rather than replace) regular in-depth data analyses and scientific reports that will explore trends and patterns in the long-term data set. The whole range of publications is organized and made available in digital format to resource managers through the NETN web site, so that they can quickly locate information when the need arises.

Conclusions

The ecological integrity framework is a powerful tool for organizing complex data sets and conveying important information to resource managers. Even when managers do not have the time or background to fully explore the statistics or threshold dynamics that led to the choices of different ecological assessment points, they intuitively grasp the idea of an ecological system being inside or outside its historical range of variability. This framework has a number of important features. It can accommodate ecological thresholds, where they exist, at multiple points in the process, particularly in the choice of suitable assessment points. In many cases, thresholds facilitate the process, particularly when they occur near limits of the range of historical variation of a system. The presence of threshold behavior in a metric can help guide the development of assessment points, because thresholds indicate places where the metric value changes rapidly (and can be detected more easily) in response to changes in the system. A lack of clear thresholds makes the identification of ecological assessment points somewhat more arbitrary, but also allows for greater flexibility in the choice of surveillance and action points.

Thresholds also contribute to the framework by highlighting an important exception to the usual practice of setting assessment points around the historical range of variation. If there is significant resistance to perturbation in an ecosystem, with threshold behavior not occurring until well beyond the historical range of variation, this may provide a scientific basis for shifting an ecological assessment point beyond the historical range. For example, suppose an ecosystem has a natural range of variation in its carbon-to-nitrogen ratio of 40–80, but research shows no effects of nitrogen saturation until the ratio drops below 25. This situation suggests placement of an ecological assessment point at a ratio of 25, even though 40 represents the lower bound of the range of natural variation.
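
The carbon-to-nitrogen example can be written out as a short calculation. The Python sketch below is only an illustration, not part of any NPS protocol. The names `NaturalRange`, `lower_assessment_point`, and `needs_attention` are hypothetical. The rule that a threshold lying inside the natural range leaves the default assessment point unchanged is our assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NaturalRange:
    """Hypothetical container for a metric's range of natural variation."""
    lower: float
    upper: float


def lower_assessment_point(natural_range: NaturalRange,
                           threshold: Optional[float] = None) -> float:
    """Return the lower assessment point for a metric.

    Default: the lower bound of the range of natural variation.
    If a documented threshold lies below that bound (the system resists
    perturbation), shift the assessment point out to the threshold.
    Assumption: a threshold inside the range leaves the default unchanged.
    """
    if threshold is not None and threshold < natural_range.lower:
        return threshold
    return natural_range.lower


def needs_attention(value: float, assessment_point: float) -> bool:
    """True when an observed value has dropped below the assessment point."""
    return value < assessment_point


# Values from the example in the text: natural C:N range 40-80,
# no nitrogen saturation effects until the ratio drops below 25.
cn_range = NaturalRange(lower=40.0, upper=80.0)
default_point = lower_assessment_point(cn_range)
shifted_point = lower_assessment_point(cn_range, threshold=25.0)

observed = 32.0  # hypothetical plot-level C:N ratio
print(default_point, needs_attention(observed, default_point))
print(shifted_point, needs_attention(observed, shifted_point))
```

With the assessment point left at the edge of the natural range, the hypothetical ratio of 32 would be flagged. With the point shifted to the threshold of 25, it would not. This difference is the practical consequence of the exception described above.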

Other valuable features of the framework stem from its focus on providing useful and timely information to resource managers. The framework is transparent, since decisions and analyses are documented and easily available for review. It is appropriate for a variety of audiences, particularly when a hierarchy of publications is produced that vary in technical detail and allow readers to find their level of comfort, while keeping the full details within easy reach. The framework is also iterative and easily integrated into the adaptive management cycle (Lancia et al. 1996). The iterative nature of the framework is particularly apparent early in the process, when one step in the framework often requires revisiting other steps. For example, initial data collection may reveal that a metric is more variable or more expensive to measure than originally expected, triggering a re-evaluation of the metric selection and assessment points. Alternatively, a new publication and ongoing data collection may reveal that a hypothesized relationship in the conceptual diagram was incorrect; this may suggest new hypotheses, new metrics, and even the discontinuation of current metrics. The ecological integrity framework can play a central role in the adaptive management cycle by regularly reporting current results to managers, incorporating new information (including the results of management actions and data analyses) into the conceptual foundation of the framework, and making modifications to metrics and reporting that reflect new knowledge.

References

Aber, J., W. McDowell, K. Nadelhoffer, A. Magill, G. Berntson, M. Kamakea, S. McNulty, W. Currie, L. Rustad, and I. Fernandez. 1998. Nitrogen saturation in temperate forest ecosystems: Hypotheses revisited. Bioscience 48:921–934.

Aber, J. D., C. L. Goodale, S. V. Ollinger, M. L. Smith, A. H. Magill, M. E. Martin, R. A. Hallett, and J. L. Stoddard. 2003. Is nitrogen deposition altering the nitrogen status of northeastern forests? Bioscience 53:375–389.

Andreasen, J. K., R. V. O’Neill, R. Noss, and N. C. Slosser. 2001. Considerations for the development of a terrestrial index of ecological integrity. Ecological Indicators 1:21–35.

Augustine, D. J., and D. DeCalesta. 2003. Defining deer overabundance and threats to forest communities: From individual plants to landscape structure. Ecoscience 10:472–486.

Baker, B. W., H. C. Ducharme, D. C. S. Mitchell, T. R. Stanley, and H. R. Peinetti. 2005. Interaction of beaver and elk herbivory reduces standing crop of willow. Ecological Applications 15:110–118.

Baron, J. S., T. M. Schmidt, and M. D. Hartman. 2009. Climate-induced changes in high elevation stream nitrate dynamics. Global Change Biology 15:1777–1789.

Barrett, G. W., G. M. Van Dyne, and E. P. Odum. 1976. Stress ecology. Bioscience 26:192–194.

Bennetts, R. E., J. E. Gross, K. Cahill, C. McIntyre, B. B. Bingham, A. Hubbard, L. Cameron, and S. L. Carter. 2007. Linking monitoring to management and planning: Assessment points as a generalized approach. The George Wright Forum 24:59–77.

Billick, I., and M. Pierce, eds. 2010. The ecology of place: Contributions of place-based research to ecological understanding. Chicago: The University of Chicago Press.

Britten, M., E. W. Schweiger, B. Frakes, D. Manier, and D. Pillmore. 2007. Rocky Mountain Network vital signs monitoring plan. (Natural resource report NPS/ROMN/NRR-2007/010.) Fort Collins: National Park Service.

Contamin, R., and A. M. Ellison. 2009. Indicators of regime shifts in ecological systems: What do we need to know and when do we need to know it? Ecological Applications 19:799–816.

Cooper, D. J. 1998. Classification of Colorado’s wetlands for use in HGM functional assessment: A first approximation. In A characterization and functional assessment of reference wetlands in Colorado. Denver: Department of Natural Resources, Colorado Geological Survey—Division of Minerals and Geology.

Cooper, D. J., J. Dickens, N. T. Hobbs, L. Christensen, and L. Landrum. 2006. Hydrologic, geomorphic and climatic processes controlling willow establishment in a montane ecosystem. Hydrological Processes 20:1845–1864.

Cote, S. D., T. P. Rooney, J. P. Tremblay, C. Dussault, and D. M. Waller. 2004. Ecological impacts of deer overabundance. Annual Review of Ecology Evolution and Systematics 35:113–147.

Cronan, C. S., and D. F. Grigal. 1995. Use of calcium/aluminum ratios as indicators of stress in forest ecosystems. Journal of Environmental Quality 24:209–226.

Czech, B. 2004. A chronological frame of reference for ecological integrity and natural conditions. Natural Resources Journal 44:1113–1136.

Dale, V. H., L. A. Joyce, S. McNulty, R. P. Neilson, M. P. Ayres, M. D. Flannigan, P. J. Hanson, L. C. Irland, A. E. Lugo, C. J. Peterson, D. Simberloff, F. J. Swanson, B. J. Stocks, and B. M. Wotton. 2001. Climate change and forest disturbances. Bioscience 51:723–734.

De’ath, G., and K. E. Fabricius. 2000. Classification and regression trees: A powerful yet simple technique for ecological data analysis. Ecology 81:3178–3192.

De Leo, G. A., and S. Levin. 1997. The multifaceted aspects of ecosystem integrity. Conservation Ecology 1(1): 3.

DeKeyser, E. S., D. R. Kirby, and M. J. Ell. 2003. An index of plant community integrity: Development of the methodology for assessing prairie wetland plant communities. Ecological Indicators 3:119–133.

Dobbertin, M. 2005. Tree growth as indicator of tree vitality and of tree reaction to environmental stress: A review. European Journal of Forest Research 124:319–333.

Duchesne, L., R. Ouimet, and C. Morneau. 2003. Assessment of sugar maple health based on basal area growth pattern. Canadian Journal of Forest Research 33:2074–2080.

Faber-Langendoen, D., J. Rocchio, M. Schafale, C. Nordman, M. Pyne, J. Teague, T. Foti, and P. J. Comer. 2006. Ecological integrity assessment and performance measures for wetland mitigation. Arlington: NatureServe.

Faber-Langendoen, D., C. Hedge, M. Kost, S. Thomas, L. Smart, R. Smyth, J. Drake, and S. Menard. 2012. Assessment of wetland ecosystem condition across landscape regions: A multi-metric approach. (EPA/600/R-12/021a.) Washington, D.C.: U.S. Environmental Protection Agency, Office of Research and Development.

Fahrig, L. 2003. Effects of habitat fragmentation on biodiversity. Annual Review of Ecology Evolution and Systematics 34:487–515.

Fancy, S. G., and R. E. Bennetts. 2012. Institutionalizing an effective long-term monitoring program in the US National Park Service. In Design and analysis of long-term ecological monitoring studies, ed. R. A. Gitzen, J. J. Millspaugh, A. B. Cooper, and D. S. Licht, 481–497. Cambridge: Cambridge University Press.

Fancy, S. G., J. E. Gross, and S. L. Carter. 2009. Monitoring the condition of natural resources in US national parks. Environmental Monitoring and Assessment 151:161–174.

Field, C. B., L. D. Mortsch, M. Brklacich, D. L. Forbes, P. Kovacs, J. A. Patz, S. W. Running, and M. J. Scott. 2007. North America. In Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the fourth assessment report of the Intergovernmental Panel on Climate Change, ed. M. L. Parry, O. F. Canziani, J. P. Palutikof, P. J. van der Linden, and C. E. Hanson, 617–652. Cambridge: Cambridge University Press.

Gage, E., and D. Cooper. 2009. Historical range of variation assessment for wetland and riparian ecosystems, U.S. Forest Service, Rocky Mountain Region. Fort Collins: Colorado State University.

Goodell, L., and D. Faber-Langendoen. 2007. Development of stand structural stage indices to characterize forest condition in Upstate New York. Forest Ecology and Management 249:158–170.

Grace, J. B., T. M. Anderson, H. Olff, and S. M. Scheiner. 2010. On the specification of structural equation models for ecological systems. Ecological Monographs 80:67–87.

Groffman, P. M., J. S. Baron, T. Blett, A. J. Gold, I. Goodman, L. H. Gunderson, B. M. Levinson, M. A. Palmer, H. W. Paerl, G. D. Peterson, N. L. Poff, D. W. Rejeski, J. F. Reynolds, M. G. Turner, K. C. Weathers, and J. Wiens. 2006. Ecological thresholds: The key to successful environmental management or an important concept with no practical application? Ecosystems 9:1–13.

Hawkins, C. P., R. H. Norris, J. N. Hogue, and J. W. Feminella. 2000. Development and evaluation of predictive models for measuring the biological integrity of streams. Ecological Applications 10:1456–1477.

James-Pirri, M.-J., J. L. Swanson, C. T. Roman, H. S. Ginsberg, and J. F. Heltshe. 2012. Ecological thresholds for salt marsh nekton and vegetation communities. In Application of threshold concepts in natural resource decision making, ed. G. Guntenspergen. New York: Springer.

Karr, J. R. 1981. Assessment of biotic integrity using fish communities. Fisheries 6:21–27.

Karr, J. R. 1991. Biological integrity—a long-neglected aspect of water-resource management. Ecological Applications 1:66–84.

Karr, J. R., and E. W. Chu. 1997. Biological monitoring: Essential foundation for ecological risk assessment. Human and Ecological Risk Assessment 3:993–1004.

Karr, J. R., and D. R. Dudley. 1981. Ecological perspectives on water quality goals. Environmental Management 5:55–68.

Keeton, W. S. 2006. Managing for late-successional/old-growth characteristics in northern hardwood-conifer forests. Forest Ecology and Management 235:129–142.

Kennedy, C., J. Wilkinson, and J. Balch. 2003. Conservation thresholds for land use planners. Washington, D.C.: Environmental Law Institute.

Kurtz, J. C., L. E. Jackson, and W. S. Fisher. 2001. Strategies for evaluating indicators based on guidelines from the Environmental Protection Agency’s Office of Research and Development. Ecological Indicators 1:49–60.

Lancia, R. A., C. E. Braun, M. W. Collopy, R. D. Dueser, J. G. Kie, C. J. Martinka, J. D. Nichols, T. D. Nudds, W. R. Porath, and N. G. Tilghman. 1996. ARM! For the future: Adaptive resource management in the wildlife profession. Wildlife Society Bulletin 24:436–442.

Landres, P. B., P. Morgan, and F. J. Swanson. 1999. Overview of the use of natural variability concepts in managing ecological systems. Ecological Applications 9:1179–1188.

Lemly, J., and J. Rocchio. 2009. Vegetation index of biotic integrity (VIBI) for headwater wetlands in the southern Rocky Mountains: Version 2.0: Calibration of selected VIBI models. Fort Collins: Colorado Natural Heritage Program.

MacDonald, J. A., N. B. Dise, E. Matzner, M. Armbruster, P. Gundersen, and M. Forsius. 2002. Nitrogen input together with ecosystem nitrogen enrichment predict nitrate leaching from European forests. Global Change Biology 8:1028–1033.

Mack, J. J. 2001. Vegetation index of biotic integrity (VIBI) for wetlands: Ecoregional, hydrogeomorphic, and plant community comparisons with preliminary wetland aquatic life use designations. In D. o. S. W. Ohio Environmental Protection Agency, ed. Wetland Ecology Group, 99. Columbus: State of Ohio Environmental Protection Agency.

Mack, J. J. 2007. Developing a wetland IBI with statewide application after multiple testing iterations. Ecological Indicators 7:864–881.

Mack, J. J., and M. E. Kentula. 2010. Metric similarity in vegetation-based wetland assessment methods. (EPA/600/R-10/140.) Washington, D.C.: U.S. Environmental Protection Agency, Office of Research and Development.

McWilliams, W. H., T. W. Bowersox, P. H. Brose, D. A. Devlin, J. C. Finley, K. W. Gottschalk, S. Horsley, S. L. King, B. M. LaPoint, T. W. Lister, L. H. McCormick, G. W. Miller, C. T. Scott, H. Steele, K. C. Steiner, S. L. Stout, J. A. Westfall, and R. L. White. 2005. Measuring tree seedlings and associated understory vegetation in Pennsylvania’s forests. In Proceedings of the Fourth Annual Forest Inventory and Analysis Symposium. General Technical Report NC-252, ed. R. E. McRoberts, G. A. Reams, P. C. Van Deusen, W. H. McWilliams, and C. J. Cieszewski, 21–26. St. Paul: U.S. Department of Agriculture, Forest Service, North Central Research Station.

Miller, S. J., D. H. Wardrop, W. M. Mahaney, and R. R. Brooks. 2006. A plant-based index of biological integrity (IBI) for headwater wetlands in central Pennsylvania. Ecological Indicators 6:290–312.

Miller, D. M., S. P. Finn, A. Woodward, and A. Torregrosa. 2010a. Conceptual models for landscape monitoring. In Conceptual ecological models to guide integrated landscape monitoring of the Great Basin, ed. D. M. Miller, S. P. Finn, A. Woodward, A. Torregrosa, M. E. Miller, D. R. Bedford, and A. M. Brasher, 1–12. Reston: United States Geological Survey.

Miller, K. M., G. L. Tierney, and B. R. Mitchell. 2010b. Northeast Temperate Network forest health monitoring report: 2006–2009. (Natural Resource Report NPS/NETN/NRR-2010/206.) Fort Collins: National Park Service.

Mitchell, B. R., W. G. Shriver, F. Dieffenbach, T. Moore, D. Faber-Langendoen, G. Tierney, P. Lombard, and J. P. Gibbs. 2006. Northeast Temperate Network vital signs monitoring plan. Woodstock: National Park Service, Northeast Temperate Network.

Mitsch, W. J., and J. G. Gosselink. 2007. Wetlands. 4th ed. Hoboken: Wiley.

National Park Service. 2012. Guidance for designing an integrated monitoring program. (Natural Resource Report NPS/NRSS/NRR—545.) Fort Collins: National Park Service.

Nichols, J. D., M. J. Eaton, and J. Martin. 2012. Thresholds for conservation and management: Structured decision making as a conceptual framework. In Application of threshold concepts in natural resource decision making, ed. G. Guntenspergen. New York: Springer.

Noon, B. R. 2003. Conceptual issues in monitoring ecological resources. In Monitoring ecosystems: Interdisciplinary approaches for evaluating ecoregional initiatives, ed. D. E. Busch, and J. E. Trexler, 27–72. Washington, D.C.: Island Press.

Oakley, K. L., L. P. Thomas, and S. G. Fancy. 2003. Guidelines for long-term monitoring protocols. Wildlife Society Bulletin 31:1000–1003.