Abstract

We used data envelopment analysis to measure the relative performance of New York State school districts in the 2011–2012 academic year and provided detailed alternative improvement pathways for each district. We found that 201 of the 624 (32.2 %) school districts with one or more high schools and 28 of the 31 (90.3 %) school districts with no high school were on the performance frontier. Using a mixed orientation, we found evidence that FTE teachers could be reduced by 8.4 %, FTE teacher support personnel by 17.2 %, and FTE building administration and professional staff personnel by 9.4 %. In addition, we found that the percentage of students who score 3 or 4 could increase by 4.9 % points on the English exam, by 5.0 % points on the mathematics exam, and by 5.8 % points on the science exam, and that the average graduation rate could increase by 5.4 % points.

Keywords

- Data envelopment analysis (DEA)

- School district performance

- Education policy

- Education administration

- Education finance

- Benchmarking

13.1 Introduction

In 2011, New York State’s 695 school districts (New York State Education Department n.d.) spent $53.7 billion (U.S. Census Bureau 2011, Table 6) to educate almost 2.7 million elementary and secondary pupils (U.S. Census Bureau 2011, Table 19), a cost of over $19,000 per pupil (U.S. Census Bureau 2011, Table 8). Elementary and secondary education accounts for nearly one-quarter of all state and local expenditures in New York State (U.S. Government Spending n.d.). While New York State has some excellent school districts, others struggle with poor standardized test scores and low graduation rates. Many of the reasons for the differences among school districts are widely accepted. These include differences in wealth, English proficiency, and inefficient use of resources.

Given the high cost of public education and its critical importance for the future of New York and the nation, it is natural for taxpayers, legislators, and administration officials to hold public education institutions accountable for producing high quality outcomes. To do so, we must measure the performance of each school district in an objective, data-informed manner. Commonly used methods for performance measurement under these circumstances are often called benchmarking models. When applied to school districts, a benchmarking model identifies leading school districts, called benchmark school districts, and facilitates the comparison of other school districts to the benchmark school districts. Non-benchmark school districts can then focus on specific ways to improve their performance and thereby that of the overall statewide school system.

In this chapter, we present an appropriate benchmarking methodology, apply that methodology to measure the performance of New York State school districts in the 2011–2012 academic year, and provide detailed alternative improvement pathways for each school district.

13.2 Choosing an Appropriate Benchmarking Methodology

There are several methods used to perform benchmarking analysis. They differ in the nature of the data employed and the manner in which the data are analyzed. They also differ in their fundamental philosophies.

Some approaches compare individual units to some measure of central tendency, such as a mean or a median. For example, we might measure the financial performance of each firm within an industry by comparing its net income to the average net income of all firms in the industry. A moment’s reflection reveals that large firms will outperform small firms simply due to their size and without regard to their managerial performance. We might attempt to correct for this by computing each firm’s net income divided by its total assets, called the firm’s return on assets. This approach is called ratio analysis, and a firm’s performance might be measured by comparing its return on assets to the mean (or median) return on assets of all firms in the industry. Ratio analysis, however, assumes constant returns to scale—the marginal value of each dollar of assets is the same regardless of the size of the firm—and this may be a poor assumption in certain applications.
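To make the size effect concrete, here is a minimal Python sketch of ratio analysis with three hypothetical firms (the names and dollar figures are invented for illustration). Compared on raw net income, the largest firm looks best; compared on return on assets, it looks worst.

```python
from statistics import mean

# Hypothetical firms: (name, net income, total assets), in millions of dollars.
firms = [("Small Co", 2.0, 10.0), ("Mid Co", 9.0, 100.0), ("Large Co", 40.0, 1000.0)]


def relative_to_mean(values):
    """Each value minus the mean of all values (positive = above average)."""
    avg = mean(values)
    return [v - avg for v in values]


net_income = [ni for _, ni, _ in firms]
roa = [ni / assets for _, ni, assets in firms]  # return on assets

for (name, _, _), raw_gap, roa_gap in zip(firms, relative_to_mean(net_income), relative_to_mean(roa)):
    print(f"{name}: net income {raw_gap:+.1f} vs. mean, ROA {roa_gap:+.3f} vs. mean")
```

Dividing by total assets is exactly where the constant returns to scale assumption enters: every dollar of assets is expected to earn the same amount regardless of firm size.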

To avoid this assumption, we might perform a regression analysis using net income as the dependent variable and total assets as the independent variable. The performance of an individual firm would be determined by its position relative to the regression model, that is, a firm would be considered to be performing well if its net income were higher than predicted by the model given its total assets. We point out, however, that regression is a (conditional) averaging technique and measures units relative to average, rather than best, performance, and therefore does not achieve the primary objective of benchmarking.

Other approaches compare individual units to a measure of best, rather than average, performance. For example, we might modify ratio analysis by comparing a firm’s return on assets to the largest return on assets of all firms in the industry. This has the advantage of revealing how much the firm needs to improve its return on assets to become a financial leader in the industry. Using such a methodology, we would encourage firms to focus on the best performers, rather than on the average performers, in their industry.
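A sketch of the best-performer variant of ratio analysis, again with hypothetical return-on-assets figures: each firm's gap is measured against the industry leader rather than against the mean.

```python
# Hypothetical return on assets (ROA) for firms in one industry.
roa = {"Firm A": 0.04, "Firm B": 0.09, "Firm C": 0.12, "Firm D": 0.07}

best_firm = max(roa, key=roa.get)  # the industry leader on this single ratio
best = roa[best_firm]

# Improvement each firm needs, in ROA percentage points, to match the leader.
gap_to_best = {firm: round((best - value) * 100, 2) for firm, value in roa.items()}
print(best_firm, gap_to_best)
```

Because only one ratio is used, the leader here need not lead on any other dimension of performance.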

The complexity of business organizations means that no one ratio can possibly measure the multiple dimensions of a firm’s financial performance. Therefore, financial analysts often report a plethora of ratios, each measuring one specific aspect of the firm’s performance. The result can be a bewildering array of financial ratios requiring the analyst to piece together the ratios to create a complete, and inevitably subjective, picture of the firm’s financial performance.

Fortunately, there is a methodology, called data envelopment analysis (DEA), that overcomes the problems associated with ratio analysis of complex organizations. As described in the next section, DEA employs a linear programming model to identify units, called decision-making units or DMUs, whose performance, measured across multiple dimensions, is not exceeded by any other unit or even by any combination of other units. Cook et al. (2014) argue persuasively that DEA is a powerful “balanced benchmarking” tool in helping units to achieve best practices.

13.3 Data Envelopment Analysis

DEA has proven to be a successful tool in performance benchmarking. It is particularly well suited when measuring the performance of units along multiple dimensions, as is the case with complex organizations such as school districts. DEA has been used since the 1950s in a wide variety of applications, including health care, banking, pupil transportation, and most recently, education. DEA’s mathematical development may be traced to Charnes et al. (1978), who built on the work of Farrell (1957) and others. The technique is well documented in the management science literature (Charnes et al. 1978, 1979, 1981; Sexton 1986; Sexton et al. 1986; Cooper et al. 1999), and it has received increasing attention as researchers have wrestled with problems of productivity measurement in the services and nonmarket sectors of the economy. Cooper et al. (2011) covers several methodological improvements in DEA and describes a wide variety of applications in banking, engineering, health care, and services. Emrouznejad et al. (2008) provided a review of more than 4000 DEA articles. Liu et al. (2013) use a citation-based approach to survey the DEA literature and report finding 4936 DEA papers in the literature. See deazone.com for an extensive bibliography of DEA publications as well as a DEA tutorial and DEA software.

DEA empirically identifies the best performers by forming the performance frontier based on observed indicators from all units. Consequently, DEA bases the resulting performance scores and potential performance improvements entirely on the actual performance of other DMUs, free of any questionable assumptions regarding the mathematical form of the underlying production function. On balance, many analysts view DEA as preferable to other forms of performance measurement.

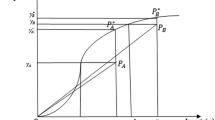

Figures 13.1 and 13.2 illustrate the performance frontier for a simple model of school districts. We can use this simple model, which is clearly inadequate for capturing the complexity of school districts, to demonstrate the fundamental concepts of DEA. In this model, we assume that each school district employs only one type of resource, full-time equivalent (FTE) teachers, and prepares students for only one type of standardized test, mathematics at the appropriate grade level, measured as the percentage of students who score at a given level or higher. Each school district is represented by a point in the scatterplot.

In Fig. 13.1, school districts A, B, and C define the performance frontier. In each case, there is no school district or weighted average of school districts that has fewer FTE teachers per 100 students and a higher percentage of students who scored 3 or 4 on the standardized mathematics test. Such a school district, if it existed, would lie to the northwest of A, B, or C; no such district exists, and neither does any point on a straight line between two districts.
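In the one-resource, one-measure setting of Figs. 13.1 and 13.2, the resource reduction frontier can be found without a general linear programming solver. The sketch below assumes variable returns to scale (nonnegative weights summing to 1, as in the Appendix) and uses hypothetical districts, not the data behind the figures. Minimizing \( \sum_j \lambda_j x_j \) subject to \( \sum_j \lambda_j y_j \ge y_d \) and \( \sum_j \lambda_j = 1 \) leaves only two constraints, so some optimal solution mixes at most two districts; checking every single district and every pair is therefore exact.

```python
from itertools import combinations

# Hypothetical districts: name -> (FTE teachers per 100 students, % scoring 3 or 4 in math).
districts = {
    "A": (6.5, 70.0),
    "B": (7.5, 85.0),
    "C": (9.0, 95.0),
    "D": (8.4, 77.0),
    "E": (9.5, 80.0),
}


def min_resource(points, y_target):
    """Smallest resource level of any convex combination of points whose
    performance is at least y_target (variable returns to scale)."""
    best = float("inf")
    for x, y in points:  # single districts
        if y >= y_target:
            best = min(best, x)
    for (x1, y1), (x2, y2) in combinations(points, 2):  # pairs of districts
        if y1 > y2:
            (x1, y1), (x2, y2) = (x2, y2), (x1, y1)
        if y1 < y_target <= y2:
            t = (y_target - y1) / (y2 - y1)  # weight on the higher-performing district
            best = min(best, (1 - t) * x1 + t * x2)
    return best


def efficiency(name):
    """Resource reduction efficiency E*: target resource / actual resource."""
    x, y = districts[name]
    return min_resource(list(districts.values()), y) / x


for name in districts:
    print(name, round(efficiency(name), 3))
```

With these made-up values, A, B, and C score 1.0 and lie on the frontier, while D scores about 0.83 and E about 0.75.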

School district D, in Fig. 13.2, does not lie on the performance frontier and therefore its performance can improve. In principle, D can choose to move anywhere on the performance frontier. If school district D chooses to focus on resource reduction without test performance change, it would move to the left, reaching the performance frontier at point DRR. This move would require a reduction from 8.39 to 7.71 FTE teachers per 100 students. If school district D enrolls 10,000 students, this would be a reduction from 839 to 771 teachers, or 8.1 %. We refer to this strategy as the resource reduction orientation.

If school district D chooses to focus on performance enhancement without resource reduction, it would move upward, reaching the performance frontier at point DPE. This move would require 94.6 % of its students to score 3 or 4 on the standardized mathematics test, up from 77 %. If 1000 students in school district D sat for the standardized mathematics test, the number scoring 3 or 4 would increase from 770 to 946, or by 22.9 %. We refer to this strategy as the performance enhancement orientation.

School district D might prefer an intermediate approach that includes both resource reduction and performance enhancement and move to point DM. This entails both a reduction in FTE teachers per 100 students from 8.39 to 7.80 and an increase in the percentage of students who score 3 or 4 on the standardized mathematics test from 77 to 82.4 %. If school district D enrolls 10,000 students and 1000 of them sit for the test, this means a reduction from 839 to 780 teachers, or 7.0 %, and an increase in students scoring 3 or 4 from 770 to 824, also 7.0 %. We refer to this strategy as the mixed orientation. The mixed orientation has the feature that the percentage decrease in each resource equals the percentage increase in each performance measure.
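The arithmetic behind the three targets for school district D can be checked with a few lines of Python. The sketch uses only values stated in the text (8.39 FTE teachers per 100 students, 77 % scoring 3 or 4, 10,000 students enrolled, 1000 test takers) and the target coordinates given for Fig. 13.2; the labels D_RR, D_PE, and D_M are just names for the three targets.

```python
def pct_change(before, after):
    """Percentage change from before to after."""
    return 100 * (after - before) / before


students = 10_000      # enrollment assumed in the text
test_takers = 1_000    # number sitting the math test, assumed in the text
actual = (8.39, 77.0)  # (FTE teachers per 100 students, % scoring 3 or 4)

# Target coordinates as stated for Fig. 13.2.
targets = {"D_RR": (7.71, 77.0), "D_PE": (8.39, 94.6), "D_M": (7.80, 82.4)}

teachers_now = actual[0] * students / 100
passers_now = actual[1] * test_takers / 100
for name, (ratio, share) in targets.items():
    teachers = ratio * students / 100
    passers = share * test_takers / 100
    print(f"{name}: {teachers:.0f} teachers ({pct_change(teachers_now, teachers):+.1f} %), "
          f"{passers:.0f} scoring 3 or 4 ({pct_change(passers_now, passers):+.1f} %)")
```

The D_M line shows the defining feature of the mixed orientation: the 7.0 % fall in teachers matches the 7.0 % rise in students scoring 3 or 4.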

The three points DRR, DPE, and DM are called targets for school district D because they represent three possible goals for D to achieve to reach the performance frontier. School district D can choose its target anywhere on the performance frontier, but these three points represent reasonable reference points for D as it improves its overall performance.

Of course, this model does not consider other resources used by school districts such as teacher support personnel and other staff, nor does it consider standardized test scores in science or English. It also ignores graduation rates in school districts with one or more high schools. Moreover, it does not recognize differences in important district characteristics such as the number of elementary and secondary students, the percentage of students who qualify for free or reduced price lunch or who have limited English proficiency, or the district’s combined wealth ratio.

When other measures are included in the model, we can no longer rely on a simple graphical method to identify a school district’s target school district. For this purpose, we rely on the linear programming model that we describe in detail in the Appendix. Nonetheless, the target school district will have the same basic interpretation. Relative to the school district in question, the target school district consumes the same or less of each resource, its students perform the same or better on each standardized test, its graduation rate is at least as high (if applicable), it educates the same number or more students, and it operates under the same or worse district characteristics.
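The defining property of a target school district described above can be written as a direction-aware comparison. This is a sketch only: the measure names, the subset of measures, and all district values are hypothetical, and the directions follow the text (resources no higher, performance and enrollment no lower, characteristics no more favorable).

```python
# Hypothetical subset of measures, each tagged with the direction in which a valid
# target may differ from the district: "le" = same or less, "ge" = same or more.
SENSES = {
    "fte_teachers": "le",            # resource: same or less
    "fte_teacher_support": "le",     # resource: same or less
    "pct_math_3_4": "ge",            # performance: same or better
    "grad_rate": "ge",               # performance: same or better
    "secondary_students": "ge",      # educates the same number or more students
    "pct_free_reduced_lunch": "ge",  # same or worse characteristic
    "combined_wealth_ratio": "le",   # same or worse characteristic (less wealth)
}


def is_valid_target(target, district, senses=SENSES, tol=1e-9):
    """True if target is no worse than district on every measure, in its stated direction."""
    for key, sense in senses.items():
        diff = target[key] - district[key]
        if sense == "le" and diff > tol:
            return False
        if sense == "ge" and diff < -tol:
            return False
    return True


district = {"fte_teachers": 839, "fte_teacher_support": 120, "pct_math_3_4": 77.0,
            "grad_rate": 80.0, "secondary_students": 4000,
            "pct_free_reduced_lunch": 40.0, "combined_wealth_ratio": 0.9}
target = dict(district, fte_teachers=780, pct_math_3_4=82.4)

print(is_valid_target(target, district))                                   # True
print(is_valid_target(dict(target, combined_wealth_ratio=1.2), district))  # False: wealthier
```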

13.4 A DEA Model for School District Performance in New York State

To apply the DEA methodology to measure the performance of New York State school districts, we began by identifying three categories of important school district measurements. They were:

- resources consumed;

- performance measures; and

- district characteristics.

We defined the resources consumed as:

- FTE teachers;

- FTE teacher support (teacher assistants + teacher aides); and

- building administration and professional staff (principals + assistant principals + other professional staff + paraprofessionals).

For school districts with no high school, we defined the performance measures as:

- percentage of students scoring at or above level 3 on ELA grade 6;

- percentage of students scoring at or above level 3 on math grade 6; and

- percentage of students scoring at or above level 3 on science grade 4.

For school districts with one or more high schools, we defined the performance measures as:

- total cohort results in secondary-level English after 4 years of instruction: percentage scoring at levels 3–4;

- total cohort results in secondary-level math after 4 years of instruction: percentage scoring at levels 3–4;

- grade 8 science: percentage scoring at levels 3–4, all students; and

- 4-year graduation rate as of August.

We defined the district characteristics as:

- number of elementary school students;

- number of secondary school students;

- percentage of students with free or reduced price lunch;

- percentage of students with limited English proficiency; and

- school district’s combined wealth ratio.

We recognize that other choices of variables are possible. We use this particular set of variables because it captures a reasonable range of resources consumed, performance dimensions to be measured, and district characteristics to be taken into account. Other variables may be added if statewide data are available for every school district. Our objective is to illustrate the model and its ability to provide school districts with useful feedback for strategic planning and other purposes.

We consider all three possible orientations. The resource reduction orientation seeks to reduce resource consumption as much as possible while maintaining performance measures at their current levels. The performance enhancement orientation seeks to improve performance measures as much as possible while maintaining resource consumption at current levels. The mixed orientation seeks to improve performance measures and reduce resource consumption simultaneously in a balanced way.

We present the results of all three orientations to provide school district administrators with alternative options for reaching the performance frontier. One district might elect to focus on resource reduction; another might opt for increases in test scores and graduation rate, while a third might prefer a blended strategy that combines these two objectives. Moreover, there are infinitely many points on the performance frontier toward which a district may move; the three that we present are designed to highlight three possible alternatives.

We point out that the performance frontier is unaffected by the choice of orientation. Any district that lies on the performance frontier in one orientation will also lie on it in any other orientation. Orientation only determines the location of the target district on the performance frontier.

13.5 Data and Results

We obtained complete data for 624 public school districts with one or more high schools and 31 public school districts with no high school for the academic year 2011–2012. Complete data were unavailable for certain districts. All data were obtained from the New York State Education Department.

13.6 Results for Three Example Districts

Table 13.1 shows the results for three districts based on the model described above. These districts were selected to illustrate the manner in which the model results can be presented to school districts and how they might be interpreted.

School district A would reduce all three resources by 18.3 % under the resource reduction orientation and by 4.0 % under the mixed orientation, but would not reduce any resources under the performance enhancement orientation. Improvements in English and science would be virtually the same under all three orientations (about 4 %), but the improvements in math and graduation rate are notably higher under either the performance enhancement or the mixed orientation. The message for school district A is that it can raise all three test measures by about 4 % and its graduation rate by about 8 % with little or no reduction in resources. Alternatively, it can improve English and science (but not math) by about 4 % and its graduation rate by 4–5 % even with significant resource reductions. The choice of strategy would be influenced by many other factors not reflected in the model.

School district B can reduce its FTE teachers by at least 6.9 %, but its greater opportunity lies in teacher support, which it can reduce by at least 27.4 %. Despite these reductions, it can improve English by almost 7 % and math by almost 4 %.

School district C is performing very well regardless of orientation, with the exception of math, which it can improve by almost 14 %.

13.7 Statewide Results

We found no evidence that 201 of the 624 (32.2 %) school districts with one or more high schools can reduce resource consumption or improve performance. The same statement applies to 28 of the 31 (90.3 %) school districts with no high school. Put another way, each of these school districts serves as its own target school district—none of these school districts can simultaneously reduce each of its resources and improve each of its performance measures while operating under the same district characteristics.

13.8 Districts with One or More High Schools

The 624 school districts with one or more high schools employed 126,470 FTE teachers, 33,035 FTE teacher support personnel, and 25,492.5 FTE building administration and professional staff in the academic year 2011–2012. The average percentage of students who scored 3 or 4 on the English exam was 84.4 %; on the mathematics exam, the average was 86.0 %, and on the science exam, the average was 81.6 %. The average graduation rate was 84.2 %. See Table 13.2.

Using a mixed orientation, we found evidence that the number of FTE teachers can be reduced by 8.4 %, the number of FTE teacher support personnel by 17.2 %, and the number of FTE building administration and professional staff personnel by 9.4 %. In addition, the average¹ percentage of students who score 3 or 4 on the English exam can rise by 4.9 % points, by 5.0 % points on the mathematics exam, and by 5.8 % points on the science exam. Moreover, the average² graduation rate can rise by 5.4 % points.
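Notes 1 and 2 state that these are unweighted averages. A short sketch with three hypothetical districts shows why an unweighted average of district percentages can differ from a statewide, student-weighted percentage: a large district with a low percentage counts the same as a small district with a high one.

```python
# Hypothetical districts: (students tested, % scoring 3 or 4).
districts = [(5000, 70.0), (200, 95.0), (300, 90.0)]

# Unweighted: every district counts equally (the averages reported in this section).
unweighted = sum(pct for _, pct in districts) / len(districts)

# Weighted by students tested: equivalent to pooling all students statewide.
weighted = sum(n * pct for n, pct in districts) / sum(n for n, _ in districts)

print(round(unweighted, 1), round(weighted, 1))  # 85.0 72.0
```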

Using a resource reduction orientation, we found evidence that the number of FTE teachers can be reduced by 19.1 %, the number of FTE teacher support personnel can be reduced by 22.3 %, and the number of FTE building administration and professional staff personnel can be reduced by 19.3 %. In addition, the average percentage of students who score 3 or 4 on the English exam can rise by 2.2 % points, by 2.4 % points on the mathematics exam, and by 3.7 % points on the science exam. Moreover, the average graduation rate can rise by 2.3 % points.

Finally, using a performance enhancement orientation, we found evidence that the number of FTE teachers can be reduced by 5.7 %, the number of FTE teacher support personnel by 15.5 %, and the number of FTE building administration and professional staff personnel by 7.1 %. In addition, the average percentage of students who score 3 or 4 on the English exam can rise by 5.3 % points, by 5.3 % points on the mathematics exam, and by 6.0 % points on the science exam. Moreover, the average graduation rate can rise by 6.8 % points.

Figures 13.3, 13.4, and 13.5 illustrate the potential improvements in the three resource categories. For districts that lie on the diagonal of one of these graphs, there is no evidence that they could reduce their use of this resource category. Other districts have the potential to reduce resource consumption by the amount that they lie below the diagonal.

Figures 13.6, 13.7, 13.8, and 13.9 illustrate the potential improvements in the four performance measures. For districts that lie on the diagonal of one of these graphs, there is no evidence that they could improve their performance in this dimension. Other districts have the potential to improve by the amount that they lie above the diagonal.

Figure 13.10 shows the histograms of the school districts for each of the three factor performances associated with the resources, excluding those districts for which no improvement is possible. Figure 13.11 shows the histograms of the school districts for each of the four factor performances associated with the performance measures, again excluding those for which no improvement is possible.

13.9 Districts Without a High School

The 31 school districts with no high school employed 2233 FTE teachers, 762 FTE teacher support personnel, and 416 FTE building administration and professional staff in the academic year 2011–2012. The average percentage of students who scored 3 or 4 on the English exam was 84.4 %; on the mathematics exam, the average was 86.0 %, and on the science exam, the average was 81.6 %. See Table 13.3.

Using a mixed orientation, we found evidence that the number of FTE teachers can be reduced by 0.2 %, the number of FTE teacher support personnel by 4.3 %, and the number of FTE building administration and professional staff personnel by 3.3 %. In addition, the average percentage of students who score 3 or 4 on the English exam can rise by 0.4 % points, by 0.9 % points on the mathematics exam, and by 0.3 % points on the science exam.

Using a resource reduction orientation, we found evidence that the number of FTE teachers can be reduced by 0.8 %, the number of FTE teacher support personnel by 4.6 %, and the number of FTE building administration and professional staff personnel by 4.8 %. In addition, the average percentage of students who score 3 or 4 on the English exam can rise by 0.6 % points, by 0.6 % points on the mathematics exam, and by 0.0 % points on the science exam.

Finally, using a performance enhancement orientation, we found evidence that the number of FTE teachers can be reduced by 0.0 %, the number of FTE teacher support personnel by 4.3 %, and the number of FTE building administration and professional staff personnel by 3.0 %. In addition, the average percentage of students who score 3 or 4 on the English exam can rise by 0.4 % points, by 0.9 % points on the mathematics exam, and by 0.3 % points on the science exam.

13.10 Implementation

We reiterate that other choices of variables are possible. An important first step is for the school districts and the New York State Education Department (NYSED) to work together to modify this model as necessary. For example, the current model does not include data on Regents exam scores. In principle, the only requirement is that complete data exist for all school districts for the specified school year. In addition, it is important to provide a complete data set so that all school districts, especially those in New York City, can be included. This data set needs to be compiled for the latest school year for which complete data are available.

The NYSED would need to determine the distribution of model results. Perhaps the initial distribution during a pilot phase should be restricted to the school districts and NYSED. This would give school districts the opportunity to better understand the full meaning of their own results and to begin to incorporate the results into their operations and planning. The pilot phase would also allow school districts and NYSED to suggest further improvements in the model.

Ultimately, the model can serve as a key element in a quality improvement cycle. By providing direct feedback to each school district about its performance along multiple dimensions, it supports district decisions about how to improve and allows districts to demonstrate that their decisions have in fact had the desired effects.

13.11 Conclusions

We have presented a flexible model that allows school districts and NYSED to measure school district performance throughout New York State. The model provides multiple, mathematically derived performance measures that allow school districts to detect specific areas for improvement. The model also enables NYSED to identify school districts that are the top performers in the state and others that most require improvement.

The results of a preliminary version of the model applied to data from the 2011–2012 school year show that approximately one-third of the school districts in New York State are performing as well as can be expected given their local school district characteristics. Another 26.8–42.3 %, depending on the specific resource or performance measure, can improve by no more than 10 %.

Nonetheless, substantial statewide improvements are possible. Using the mixed orientation, for example, if every school district were to reach its target, New York State would have between 8 and 17 % fewer personnel, 6–7 % more students scoring 3 or 4 on standardized tests, and 6 % more students graduating within 4 years.

Public education is critically important to the future of New York State and the nation. This model offers the potential to support public school education leaders in recognizing where improvements are possible and in taking appropriate action to implement those improvements.

Notes

1. These are unweighted averages and therefore they do not represent the statewide percentages.

2. See previous footnote.

References

Charnes A, Cooper WW, Rhodes E (1978) Measuring the efficiency of decision making units. Eur J Oper Res 2:429–444

Charnes A, Cooper WW, Rhodes E (1979) Measuring the efficiency of decision making units: short communication. Eur J Oper Res 3:339

Charnes A, Cooper WW, Rhodes E (1981) Evaluating program and managerial efficiency: an application of data envelopment analysis to program follow through. Manag Sci 27:668–697

Cook WD, Tone K, Zhu J (2014) Data envelopment analysis: prior to choosing a model. Omega 44:1–4

Cooper WW, Seiford LM, Tone K (1999) Data envelopment analysis: a comprehensive text with models, applications, references and DEA-Solver software. Kluwer, Boston

Cooper WW, Seiford LM, Zhu J (2011) Handbook on data envelopment analysis. Springer, New York

Emrouznejad A, Parker BR, Tavares G (2008) Evaluation of research in efficiency and productivity: a survey and analysis of the first 30 years of scholarly literature in DEA. Socioecon Plann Sci 42:151–157

Farrell MJ (1957) The measurement of productive efficiency. J R Stat Soc Ser A 120(3):253–290

Liu JS, Lu LYY, Lu WM, Lin BJY (2013) Data envelopment analysis 1978–2010: a citation-based literature survey. Omega 41:3–15

New York State Education Department (n.d.) Guide to reorganization of school districts. http://www.p12.nysed.gov/mgtserv/sch_dist_org/GuideToReorganizationOfSchoolDistricts.htm. Accessed 17 Mar 2014

Sexton TR (1986) The methodology of data envelopment analysis. In: Silkman RH (ed) Measuring efficiency: an assessment of data envelopment analysis. New Directions for Program Evaluation, no 32. Jossey-Bass, San Francisco, pp 7–29

Sexton TR, Silkman RH, Hogan A (1986) Data envelopment analysis: critique and extensions. In: Silkman RH (ed) Measuring efficiency: an assessment of data envelopment analysis. New Directions for Program Evaluation, no 32. Jossey-Bass, San Francisco, pp 73–105

U.S. Census Bureau (2011) Public education finances. http://www2.census.gov/govs/school/11f33pub.pdf. Accessed 17 Mar 2014

U.S. Government Spending (n.d.) New York State spending. http://www.usgovernmentspending.com/New_York_state_spending.html. Accessed 17 Mar 2014

Appendix: The Mathematics of the DEA Model

We use two slightly different DEA models in this chapter, one for school districts with one or more high schools, and one for school districts without a high school. The differences lie in the performance measures (different points at which test scores are measured, and no graduation rate for school districts with no high school). In addition, each model is employed with three different orientations (resource reduction, performance enhancement, and mixed). The text that follows describes the model for school districts with one or more high schools.

Let \( n = 624 \) be the number of school districts to be analyzed. The DEA literature refers to units under analysis as decision-making units, or DMUs. Let \( X_{ij} \) be the amount of resource i consumed by DMU j, for i = 1, 2, 3 and j = 1, 2, …, 624. In particular, let \( X_{1j} \) be the FTE teachers in DMU j, let \( X_{2j} \) be the FTE teacher support in DMU j, and let \( X_{3j} \) be the FTE building administration and professional staff in DMU j.

Let \( Y_{rj} \) be performance measure r achieved by DMU j, for r = 1, 2, 3, 4 and j = 1, 2, …, 624. In particular, let \( Y_{1j} \) be the percentage of students scoring at levels 3 or 4 in secondary-level English after 4 years of instruction in DMU j, let \( Y_{2j} \) be the percentage of students scoring at levels 3 or 4 in secondary-level math after 4 years of instruction in DMU j, let \( Y_{3j} \) be the percentage of students scoring at levels 3 or 4 in Grade 8 Science in DMU j, and let \( Y_{4j} \) be the 4-year graduation rate as of August in DMU j.

Let \( S_{kj} \) be the value of site characteristic k at DMU j, for k = 1, 2, 3, 4, 5 and j = 1, 2, …, 624. In particular, let \( S_{1j} \) be the number of elementary school students in DMU j, let \( S_{2j} \) be the number of secondary school students in DMU j, let \( S_{3j} \) be the percentage of students with free or reduced price lunch in DMU j, let \( S_{4j} \) be the percentage of students with limited English proficiency in DMU j, and let \( S_{5j} \) be the combined wealth ratio in DMU j.

13.1.1 The Resource Reduction DEA Model

The resource reduction DEA model with variable returns to scale for DMU d, d = 1, 2, …, 624, is given below. We must solve n = 624 linear programs, one for each DMU, to perform the entire DEA.

Min E d | (13.1) | |

subject to | ||

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{X}_{1j}\le {E}_d{X}_{1d} \) | (13.2a) | FTE teachers |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{X}_{2j}\le {E}_d{X}_{2d} \) | (13.2b) | FTE teacher support |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{X}_{3j}\le {E}_d{X}_{3d} \) | (13.2c) | Building administration and professional staff |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{1j}\ge {Y}_{1d} \) | (13.3a) | Secondary level English (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{2j}\ge {Y}_{2d} \) | (13.3b) | Secondary level math (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{3j}\ge {Y}_{3d} \) | (13.3c) | Grade 8 science (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{4j}\ge {Y}_{4d} \) | (13.3d) | Graduation rate (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{1j}\ge {S}_{1d} \) | (13.4a) | Number of elementary school students |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{2j}\ge {S}_{2d} \) | (13.4b) | Number of secondary school students |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{3j}\ge {S}_{3d} \) | (13.4c) | Percentage of students with free or reduced price lunch |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{4j}\ge {S}_{4d} \) | (13.4d) | Percentage of students with limited English proficiency |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{5j}\le {S}_{5d} \) | (13.4e) | School district’s combined wealth ratio |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j=1 \) | (13.5) | Variable returns to scale |

\( \lambda_j \ge 0 \text{ for } j = 1, 2, \dots, 624 \) | (13.6) | Nonnegativity |

\( {E}_d\ge 0 \) | (13.7) | Nonnegativity |
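The resource reduction model can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' Excel/VBA implementation: it assumes NumPy and SciPy's `scipy.optimize.linprog` (HiGHS method) in place of Premium Solver Pro, and the helper name `resource_reduction_efficiency`, the `favorable` flags, and the three-DMU toy data are hypothetical. The list comprehension at the end plays the role of solving one linear program per DMU.

```python
import numpy as np
from scipy.optimize import linprog


def resource_reduction_efficiency(X, Y, S, favorable, d):
    """Hypothetical helper: solve model (13.1)-(13.7) for DMU d and return E_d*.

    X is (resources x DMUs), Y is (performance measures x DMUs), S is
    (site characteristics x DMUs); favorable[k] is True when larger S[k]
    lowers the need for resources (like the combined wealth ratio).
    The decision vector is [lambda_1, ..., lambda_n, E_d].
    """
    X, Y, S = (np.asarray(a, dtype=float) for a in (X, Y, S))
    n = X.shape[1]
    c = np.zeros(n + 1)
    c[-1] = 1.0  # (13.1): minimize E_d

    rows, rhs = [], []
    for i in range(X.shape[0]):  # (13.2): sum_j lambda_j X_ij - E_d X_id <= 0
        rows.append(np.append(X[i], -X[i, d]))
        rhs.append(0.0)
    for r in range(Y.shape[0]):  # (13.3): -sum_j lambda_j Y_rj <= -Y_rd
        rows.append(np.append(-Y[r], 0.0))
        rhs.append(-Y[r, d])
    for k in range(S.shape[0]):  # (13.4): >= if unfavorable, <= if favorable
        sign = 1.0 if favorable[k] else -1.0
        rows.append(np.append(sign * S[k], 0.0))
        rhs.append(sign * S[k, d])

    A_eq = np.append(np.ones(n), 0.0).reshape(1, -1)  # (13.5): sum_j lambda_j = 1
    res = linprog(c, A_ub=np.array(rows), b_ub=np.array(rhs),
                  A_eq=A_eq, b_eq=[1.0],
                  bounds=[(0, None)] * (n + 1),  # (13.6)-(13.7)
                  method="highs")
    if res.status != 0:
        raise RuntimeError(res.message)
    return res.x[-1]


# Hypothetical toy data: one resource, one performance measure,
# and one unfavorable site characteristic for three DMUs.
X = [[2, 4, 4]]
Y = [[1, 2, 1]]
S = [[10, 30, 20]]
efficiencies = [resource_reduction_efficiency(X, Y, S, [False], d) for d in range(3)]
```

On this toy data the first two DMUs are efficient (E* = 1) and the third scores about 0.75: its target is an equal mix of the first two DMUs, which uses 3 units of the resource instead of 4 while matching the third DMU's site characteristic of 20. If the site characteristic were the same (20) for every DMU, the first DMU alone would dominate the third and its score would fall to 0.5, which illustrates how the site constraints keep a district from being compared with peers operating under easier conditions.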

We observe that setting \( \lambda_d = 1 \), \( \lambda_j = 0 \) for \( j \ne d \), and \( E_d = 1 \) is a feasible, but not necessarily optimal, solution to the linear program for DMU d, since it reproduces DMU d's own values and therefore satisfies every constraint with equality. This implies that \( E_d^* \), the optimal value of \( E_d \), must be less than or equal to 1. The optimal value \( E_d^* \) is the overall efficiency of DMU d. Because of (13.5) and (13.6), the left-hand sides of (13.2)–(13.4) are weighted averages of the resources, performance measures, and site characteristics, respectively, of the 624 DMUs. At optimality, that is, with each \( \lambda_j \) replaced by \( \lambda_j^* \), we call the left-hand sides of (13.2a)–(13.4e) the target resources, target performance measures, and target site characteristics, respectively, for DMU d.
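Because the targets are ordinary weighted averages, they can be computed directly once the \( \lambda_j^* \) are known. The sketch below is illustrative only: the helper names `targets` and `implied_efficiency` and the weights λ = (0.5, 0.5, 0) are hypothetical, not results from the chapter. It also mirrors the feasibility argument: putting all weight on DMU d reproduces DMU d's own values, which corresponds to \( E_d = 1 \).

```python
def targets(values, lam, tol=1e-9):
    """Left-hand sides of (13.2)-(13.4): one weighted average per row of values.

    values is a list of rows (one row per resource, performance measure, or
    site characteristic), each row holding one entry per DMU.
    """
    if min(lam) < 0 or abs(sum(lam) - 1.0) > tol:
        raise ValueError("weights must satisfy (13.5)-(13.6)")
    return [sum(l * v for l, v in zip(lam, row)) for row in values]


def implied_efficiency(target_resources, actual_resources):
    """Smallest E_d with target resource <= E_d * actual resource for every resource."""
    return max(t / a for t, a in zip(target_resources, actual_resources))


X = [[2, 4, 4]]        # hypothetical resource levels of three DMUs
lam = [0.5, 0.5, 0.0]  # hypothetical optimal weights for the third DMU
target_x = targets(X, lam)
print(target_x, implied_efficiency(target_x, [4]))  # [3.0] 0.75
```

With the weights (0, 0, 1), the same call returns the third DMU's actual resource level of 4, and the implied efficiency is 1.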

Constraints (13.2a)–(13.2c) imply that each target resource will be less than or equal to the actual level of that resource at DMU d; in fact, each is at most \( E_d^* \) times that level. Similarly, (13.3a)–(13.3d) imply that each target performance measure will be greater than or equal to the actual level of that performance measure at DMU d.

The direction of each site characteristic inequality in (13.4a)–(13.4e) depends on how the site characteristic influences efficiency. Constraints (13.4a)–(13.4d) correspond to unfavorable site characteristics (larger values imply, on average, a greater need for resources to obtain a given performance level); therefore, we use the greater-than-or-equal-to sign. Constraint (13.4e) corresponds to a favorable site characteristic (larger values imply, on average, a lesser need for resources to obtain a given performance level); therefore, we use the less-than-or-equal-to sign. Thus, (13.4a)–(13.4e) imply that the value of each target site characteristic will be the same as or worse than the actual value of that site characteristic at DMU d.

Thus, the optimal solution to the linear program for DMU d identifies a hypothetical target DMU \( d^* \) that, relative to DMU d, (a) consumes the same or less of every resource, (b) achieves the same or greater level of every performance measure, and (c) operates under the same or worse site characteristics. Moreover, the objective function (13.1) ensures that the target DMU \( d^* \) consumes resource levels that are reduced by the largest possible across-the-board percentage.
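Properties (a)–(c) can be checked mechanically for any proposed target. The following sketch is hypothetical (the `Profile` type, the `is_valid_target` helper, and the sample numbers are illustrative, not data from the study); it encodes the sign convention of (13.4a)–(13.4e): an unfavorable site characteristic must be at least as large at the target, and a favorable one, such as the combined wealth ratio, must be at most as large.

```python
from typing import NamedTuple, Sequence


class Profile(NamedTuple):
    resources: Sequence[float]
    performance: Sequence[float]
    site: Sequence[float]


def is_valid_target(target: Profile, actual: Profile,
                    favorable: Sequence[bool], tol: float = 1e-9) -> bool:
    # (a) the target consumes the same or less of every resource
    less_resources = all(t <= a + tol
                         for t, a in zip(target.resources, actual.resources))
    # (b) the target achieves the same or greater level of every performance measure
    more_performance = all(t >= a - tol
                           for t, a in zip(target.performance, actual.performance))
    # (c) the target faces the same or worse site characteristics:
    #     <= for favorable characteristics, >= for unfavorable ones
    worse_site = all(t <= a + tol if fav else t >= a - tol
                     for t, a, fav in zip(target.site, actual.site, favorable))
    return less_resources and more_performance and worse_site


# Hypothetical district: one resource, one performance measure, and two site
# characteristics (free/reduced-price lunch %, unfavorable; wealth ratio, favorable).
actual = Profile(resources=[4.0], performance=[70.0], site=[20.0, 1.0])
target = Profile(resources=[3.0], performance=[75.0], site=[20.0, 0.9])
print(is_valid_target(target, actual, favorable=[False, True]))  # True
```

A target with a higher wealth ratio than the district itself (say 1.2) would fail check (c), because it would be operating under easier conditions.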

Of course, to proceed we must assume that a DMU could in fact operate exactly as DMU \( d^* \) does. In the theory of production, this is the standard assumption that the production possibility set is convex. In this context, the production possibility set is the set of all vectors \( \{\boldsymbol{X}_i, \boldsymbol{Y}_r \mid \boldsymbol{S}_k\} \) of resources, performance measures, and site characteristics such that it is possible for a DMU to use resource levels \( \boldsymbol{X}_i \) to produce performance measures \( \boldsymbol{Y}_r \) under site characteristics \( \boldsymbol{S}_k \). Because \( d^* \) is a convex combination of observed DMUs, the convexity assumption ensures that DMU \( d^* \) is feasible and that it is reasonable to expect that DMU d could modify its operations to match those of \( d^* \).

We solve the linear programs in Microsoft Excel© with the Premium Solver Pro© add-in (Frontline Systems, Inc., Incline Village, NV), using a macro written in Visual Basic for Applications© (VBA) to solve the 624 linear programs sequentially and save the results within the spreadsheet. The Basic Solver© and VBA© are available in all versions of Microsoft Excel©, but the Basic Solver© is limited to 200 variables and 100 constraints. Because each linear program has one variable per DMU plus \( E_d \), and one constraint per resource, performance measure, and site characteristic plus the convexity constraint (13.5), this limits the problem to no more than 199 DMUs and no more than 99 resources, performance measures, and site characteristics combined. Our 624-DMU application therefore requires the Premium Solver Pro©.
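The size limits follow from simple counting. The sketch below uses hypothetical helpers (not part of any Solver API) that count the decision variables and constraints of one resource reduction linear program, treating the nonnegativity conditions (13.6)–(13.7) as variable bounds rather than constraints; that convention yields the 199-DMU and 99-measure limits stated in the text.

```python
def lp_size(n_dmus: int, n_resources: int, n_performance: int, n_site: int):
    """Return (variables, constraints) for one linear program of model (13.1)-(13.7)."""
    variables = n_dmus + 1  # lambda_1, ..., lambda_n plus E_d
    constraints = n_resources + n_performance + n_site + 1  # (13.2)-(13.4) plus (13.5)
    return variables, constraints


def fits_basic_solver(variables: int, constraints: int,
                      max_variables: int = 200, max_constraints: int = 100) -> bool:
    """Compare a problem size against the Basic Solver limits stated in the text."""
    return variables <= max_variables and constraints <= max_constraints


size = lp_size(n_dmus=624, n_resources=3, n_performance=4, n_site=5)
print(size, fits_basic_solver(*size))  # (625, 13) False
```

The constraint count (13) is far below the limit; it is the number of DMUs that rules out the Basic Solver for this application.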

13.1.2 The Performance Enhancement DEA Model

The performance enhancement DEA model with variable returns to scale for DMU d, d = 1, 2, …, 624, is given below. In this model, we remove \( E_d \) from the objective function and from the resource constraints (13.9a)–(13.9c), and we introduce \( \theta_d \) as the new objective function (13.8), now to be maximized, and into the performance enhancement constraints (13.10a)–(13.10d). As before, setting \( \lambda_d = 1 \), \( \lambda_j = 0 \) for \( j \ne d \), and \( \theta_d = 1 \) is feasible, so the optimal value \( \theta_d^* \) is now greater than or equal to one; it is called the inverse efficiency of DMU d.

\( \max \theta_d \) | (13.8) | |

subject to | ||

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{X}_{1j}\le {X}_{1d} \) | (13.9a) | FTE teachers |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{X}_{2j}\le {X}_{2d} \) | (13.9b) | FTE teacher support |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{X}_{3j}\le {X}_{3d} \) | (13.9c) | Building administration and professional staff |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{1j}\ge {\theta}_d{Y}_{1d} \) | (13.10a) | Secondary level English (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{2j}\ge {\theta}_d{Y}_{2d} \) | (13.10b) | Secondary level math (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{3j}\ge {\theta}_d{Y}_{3d} \) | (13.10c) | Grade 8 science (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{4j}\ge {\theta}_d{Y}_{4d} \) | (13.10d) | Graduation rate (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{1j}\ge {S}_{1d} \) | (13.11a) | Number of elementary school students |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{2j}\ge {S}_{2d} \) | (13.11b) | Number of secondary school students |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{3j}\ge {S}_{3d} \) | (13.11c) | Percentage of students with free or reduced price lunch |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{4j}\ge {S}_{4d} \) | (13.11d) | Percentage of students with limited English proficiency |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{5j}\le {S}_{5d} \) | (13.11e) | School district’s combined wealth ratio |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j=1 \) | (13.12) | Variable returns to scale |

\( \lambda_j \ge 0 \text{ for } j = 1, 2, \dots, 624 \) | (13.13) | Nonnegativity |

\( {\theta}_d\ge 0 \) | (13.14) | Nonnegativity |
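A minimal Python sketch of the performance enhancement model follows, again assuming NumPy and SciPy's `scipy.optimize.linprog` (HiGHS method) rather than the Excel tools the chapter uses. The helper name `performance_enhancement_score` and the toy data are hypothetical. Since `linprog` minimizes, the sketch maximizes \( \theta_d \) by minimizing \( -\theta_d \).

```python
import numpy as np
from scipy.optimize import linprog


def performance_enhancement_score(X, Y, S, favorable, d):
    """Hypothetical helper: solve model (13.8)-(13.14) for DMU d and return theta_d*.

    Decision vector is [lambda_1, ..., lambda_n, theta_d].
    """
    X, Y, S = (np.asarray(a, dtype=float) for a in (X, Y, S))
    n = X.shape[1]
    c = np.zeros(n + 1)
    c[-1] = -1.0  # (13.8): maximize theta_d by minimizing -theta_d

    rows, rhs = [], []
    for i in range(X.shape[0]):  # (13.9): sum_j lambda_j X_ij <= X_id
        rows.append(np.append(X[i], 0.0))
        rhs.append(X[i, d])
    for r in range(Y.shape[0]):  # (13.10): theta_d Y_rd - sum_j lambda_j Y_rj <= 0
        rows.append(np.append(-Y[r], Y[r, d]))
        rhs.append(0.0)
    for k in range(S.shape[0]):  # (13.11): >= if unfavorable, <= if favorable
        sign = 1.0 if favorable[k] else -1.0
        rows.append(np.append(sign * S[k], 0.0))
        rhs.append(sign * S[k, d])

    A_eq = np.append(np.ones(n), 0.0).reshape(1, -1)  # (13.12): sum_j lambda_j = 1
    res = linprog(c, A_ub=np.array(rows), b_ub=np.array(rhs),
                  A_eq=A_eq, b_eq=[1.0],
                  bounds=[(0, None)] * (n + 1),  # (13.13)-(13.14)
                  method="highs")
    if res.status != 0:
        raise RuntimeError(res.message)
    return res.x[-1]


X, Y, S = [[2, 4, 4]], [[1, 2, 1]], [[10, 30, 20]]  # hypothetical toy data
scores = [performance_enhancement_score(X, Y, S, [False], d) for d in range(3)]
```

On this toy data the first two DMUs score 1, while the third scores about 2: the second DMU uses no more of the resource, faces a worse site characteristic (30 versus 20), and achieves twice the performance.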

13.1.3 The Mixed DEA Model

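The mixed DEA model with variable returns to scale for DMU d, d = 1, 2, …, 624, is given below. In this model, we keep both \( E_d \) and \( \theta_d \) in the constraints, and we may choose either to minimize \( E_d \) or to maximize \( \theta_d \). We introduce a new constraint (13.20) that balances the goals of reducing resources and enhancing performance. Because (13.20) fixes \( \theta_d = 2 - E_d \), the two objectives are equivalent, and any proportional reduction in resources is matched by the same proportional increase in performance.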

\( \min E_d \) or \( \max \theta_d \) | (13.15) | |

subject to | ||

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{X}_{1j}\le {E}_d{X}_{1d} \) | (13.16a) | FTE teachers |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{X}_{2j}\le {E}_d{X}_{2d} \) | (13.16b) | FTE teacher support |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{X}_{3j}\le {E}_d{X}_{3d} \) | (13.16c) | Building administration and professional staff |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{1j}\ge {\theta}_d{Y}_{1d} \) | (13.17a) | Secondary level English (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{2j}\ge {\theta}_d{Y}_{2d} \) | (13.17b) | Secondary level math (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{3j}\ge {\theta}_d{Y}_{3d} \) | (13.17c) | Grade 8 science (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{Y}_{4j}\ge {\theta}_d{Y}_{4d} \) | (13.17d) | Graduation rate (%) |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{1j}\ge {S}_{1d} \) | (13.18a) | Number of elementary school students |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{2j}\ge {S}_{2d} \) | (13.18b) | Number of secondary school students |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{3j}\ge {S}_{3d} \) | (13.18c) | Percentage of students with free or reduced price lunch |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{4j}\ge {S}_{4d} \) | (13.18d) | Percentage of students with limited English proficiency |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j{S}_{5j}\le {S}_{5d} \) | (13.18e) | School district’s combined wealth ratio |

\( {\displaystyle \sum_{j=1}^n}{\lambda}_j=1 \) | (13.19) | Variable returns to scale |

\( {E}_d+{\theta}_d=2 \) | (13.20) | Balance resource reduction and performance enhancement |

\( \lambda_j \ge 0 \text{ for } j = 1, 2, \dots, 624 \) | (13.21) | Nonnegativity |

\( {E}_d,\ {\theta}_d\ge 0 \) | (13.22) | Nonnegativity |
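The mixed model can be sketched the same way, under the same assumptions (NumPy plus SciPy's `scipy.optimize.linprog` with the HiGHS method; the helper name `mixed_scores`, its `objective` parameter, and the toy data are hypothetical). Running it with either objective is a quick way to see that, under (13.20), minimizing \( E_d \) and maximizing \( \theta_d \) lead to the same optimum.

```python
import numpy as np
from scipy.optimize import linprog


def mixed_scores(X, Y, S, favorable, d, objective="min_E"):
    """Hypothetical helper: solve model (13.15)-(13.22) for DMU d.

    Decision vector is [lambda_1, ..., lambda_n, E_d, theta_d];
    returns (E_d*, theta_d*).
    """
    X, Y, S = (np.asarray(a, dtype=float) for a in (X, Y, S))
    n = X.shape[1]
    c = np.zeros(n + 2)
    if objective == "min_E":
        c[-2] = 1.0   # (13.15): minimize E_d
    else:
        c[-1] = -1.0  # (13.15): maximize theta_d

    rows, rhs = [], []
    for i in range(X.shape[0]):  # (13.16): sum_j lambda_j X_ij - E_d X_id <= 0
        rows.append(np.concatenate([X[i], [-X[i, d], 0.0]]))
        rhs.append(0.0)
    for r in range(Y.shape[0]):  # (13.17): theta_d Y_rd - sum_j lambda_j Y_rj <= 0
        rows.append(np.concatenate([-Y[r], [0.0, Y[r, d]]]))
        rhs.append(0.0)
    for k in range(S.shape[0]):  # (13.18): >= if unfavorable, <= if favorable
        sign = 1.0 if favorable[k] else -1.0
        rows.append(np.concatenate([sign * S[k], [0.0, 0.0]]))
        rhs.append(sign * S[k, d])

    A_eq = np.zeros((2, n + 2))
    A_eq[0, :n] = 1.0  # (13.19): sum_j lambda_j = 1
    A_eq[1, n:] = 1.0  # (13.20): E_d + theta_d = 2
    res = linprog(c, A_ub=np.array(rows), b_ub=np.array(rhs),
                  A_eq=A_eq, b_eq=[1.0, 2.0],
                  bounds=[(0, None)] * (n + 2),  # (13.21)-(13.22)
                  method="highs")
    if res.status != 0:
        raise RuntimeError(res.message)
    return res.x[-2], res.x[-1]


X, Y, S = [[2, 4, 4]], [[1, 2, 1]], [[10, 30, 20]]  # hypothetical toy data
print(mixed_scores(X, Y, S, [False], 2))
print(mixed_scores(X, Y, S, [False], 2, objective="max_theta"))
```

For the third toy DMU both calls give approximately \( E^* = 0.75 \) and \( \theta^* = 1.25 \): a target that uses 25% less of the resource while achieving at least 25% more on the performance measure.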


Copyright information

© 2016 Springer Science+Business Media New York

About this chapter

Cite this chapter

Sexton, T.R., Comunale, C., Higuera, M.S., Stickle, K. (2016). Performance Benchmarking of School Districts in New York State. In: Zhu, J. (eds) Data Envelopment Analysis. International Series in Operations Research & Management Science, vol 238. Springer, Boston, MA. https://doi.org/10.1007/978-1-4899-7684-0_13


DOI: https://doi.org/10.1007/978-1-4899-7684-0_13


Publisher Name: Springer, Boston, MA

Print ISBN: 978-1-4899-7682-6

Online ISBN: 978-1-4899-7684-0

eBook Packages: Business and Management (R0)