Abstract

The growth in energy demand and the advent of the deregulated market mean that system stability limits must be considered in modern power system reliability analysis. In this chapter, a general analytical method for the probabilistic evaluation of power system transient stability is discussed, and some of the basic contributions available in the relevant literature, together with previous results of the authors, are reviewed. The first part of the chapter reviews the basic methods for defining transient stability probability in terms of appropriate random variables (RVs) (e.g. system load, fault clearing time and critical clearing time) and for computing it analytically or numerically. It also shows that ignoring uncertainty in the above parameters may lead to a serious underestimation of instability probability (IP). A Bayesian statistical inference approach is then proposed for probabilistic transient stability assessment; in particular, both point and interval estimation of the transient IP of a given system are discussed. Estimation is needed because the parameters affecting transient stability probability (e.g. mean values and variances of the above RVs) are generally not known and have to be estimated. The use of “dynamic” Bayes estimation relies on the availability of well-established system models for the description of load evolution in time. In the second part, the new aspect of on-line statistical estimation of transient IP is investigated in order to predict transient stability based on a typical dynamic linear model for the stochastic evolution of the system load. A new Bayesian approach, based on recursive Bayes estimation or Kalman filtering, is then proposed to perform this estimation; as illustrated in the last part of the chapter, such an approach seems to be very appropriate for on-line dynamic security assessment.
The reported numerical application confirms that the proposed estimation technique constitutes a very fast and efficient method for “tracking” transient stability versus time. In particular, the high relative efficiency of this method compared with traditional maximum likelihood estimation is confirmed by means of a large series of numerical simulations performed assuming typical system parameter values. The above results could be very important in a modern liberalized market in which fast and large variations are expected to have a significant effect on transient stability probability. Finally, some results on the robustness of the estimation procedure are also briefly discussed in order to demonstrate that the efficiency of the methodology holds irrespective of the basic probabilistic assumptions made for the system parameter distributions.

The singular and plural of names are always spelled the same; boldface characters are used for vectors; random variables (RVs) are denoted by uppercase letters.


1 Introduction

Stability assessment has long been recognized as a fundamental requirement in power system planning, design, operation and control. Transient stability can be defined as a property of an assigned power system to remain in a certain equilibrium point under normal conditions and reach a satisfactory equilibrium point after large disturbances such as faults, loss of generation, line switching, etc. [1]. Transient stability, therefore, constitutes a key aspect of modern power system reliability, and this fact is increasingly recognized in the modern power systems literature [2]. Indeed, some methods based on reliability theory are used in this chapter to perform an efficient assessment of power system stability. The traditional approach to transient stability analysis is deterministic, being based on the “worst case” approach. More specifically, transient stability quantitative assessment is generally performed on a three-phase fault on specific system buses as well as considering the load demand attaining its peak value over a prefixed time interval.

The application of probabilistic techniques for transient stability analysis was introduced in a series of articles by Billinton and Kuruganty [3–7], motivated by the random nature of:

- the system steady-state operating conditions;
- the time of fault occurrence;
- the fault type and location;
- the fault clearing phenomenon.

In fact, the steady-state operating conditions that heavily affect stability strongly depend on the load, which is a random process due to its intrinsic nature. This is especially evident in planning studies where the load level is the major source of uncertainty.

The time to clear the fault (fault clearing time, FCT), a crucial parameter in stability investigations, is also not known in advance, and so it should also be regarded as an RV. The probabilistic approach has also been explored in other significant articles such as [8], based on Monte Carlo simulations and [9], based on the “conditional probability” approach. An exhaustive account of the topic and the relevant bibliography can be found in the book by Anders [10, Chap. 12] which clearly states that: “stability analysis is basically a probabilistic rather than a deterministic problem”.

The analytical computation of the probability distributions of the intermediate RVs is one of the most challenging aspects due to the complexity of the mathematical models, as also pointed out by Anders [10, p. 577].

In a few articles [11–15], some theoretical results from probability theory and statistics have been used to develop an analytical approach to the transient stability evaluation of electrical power systems, based on a critical examination of the basic probability distributions. Instability probability (IP) over a certain period of time, with regard to a given fault, is defined and calculated by means of both the critical clearing time (CCT) distribution and the FCT distribution. This analytical approach, which overcomes the drawbacks of Monte Carlo simulations, is very useful in actual operation since it permits a straightforward sensitivity analysis of IP with regard to system parameters, thus highlighting those which most influence the system stability characteristics and providing a quantitative tool for performing proper preventive control actions. For similar reasons, the proposed analytical probabilistic approach is also a powerful tool for the practical problem of estimating the basic parameters relevant to transient stability assessment, such as IP or other measures of “stability margin” (SM). This topic, generally neglected or dealt with through approximate “sensitivity analyses” in the literature, was addressed in [16], where an effort was made to tailor a simple, analytical, transient stability probability estimator possessing the required characteristics of both efficiency and robustness, in the framework of classical estimation.

In this chapter, a new Bayesian approach is proposed in order to provide this estimation: such an approach appears to be the most suitable one for on-line transient stability assessment. The numerical application performed confirms that the estimation technique is able to adequately “track” the transient stability in time, being far more efficient than the classical maximum likelihood (ML) estimation of the IP. This could be an interesting property in a modern liberalized market in which fast and large variations are expected to have a significant effect on transient stability probability.

In the final part of the chapter, some results on the robustness of the estimation procedure are also briefly discussed in order to illustrate that the efficiency of the methodology holds irrespective of the basic probabilistic assumptions made with regard to the “a priori” distributions of the various system parameters.

In the four Appendices to the chapter:

1. a mathematical study of the IP versus the system parameters is illustrated in order to establish a proper “sensitivity analysis” of system stability, which can be useful in the design stage;
2. some basic properties of Bayesian estimation, relevant for the problem under study, are briefly mentioned, and some properties already derived by the authors in previous articles for the interval estimation of the IP are also included.

2 Probabilistic Modelling for Transient Stability Analyses

2.1 Definition and Evaluation of Transient IP

Many state variables of an electrical power system possess an intrinsically stochastic nature and, consequently, a probabilistic description of transient stability aspects is able to infer interesting deductions also in terms of control actions for improving system robustness. For instance, the steady-state conditions, fault conditions and circuit breaker clearing times are not precisely known or predictable. The various involved uncertainties should be properly taken into account using suitable probabilistic models. In a probabilistic frame, both the network configuration and faults are described as random quantities. According to this approach, all faults potentially causing instability and all the possible network states at the instant of fault (e.g. in terms of the requested loads at load buses) have to be considered, together with their probability of occurrence. Once the cost of any consequence brought about by the loss of stability is known, IP assessment allows the instability risk to be evaluated. A risk value provides a quantitative measure for undertaking adequate preventive control actions for stability improvement and avoiding the need for conservative or “worst-case” criteria like those based on the classical deterministic analyses.

Hence, in order to effectively apply a probabilistic approach, a preliminary identification of the relevant statistical parameters has to be performed. This is a crucial step since they are potentially infinite: the choice can be made according to the required degree of accuracy.

Formally, let a proper probability space \( (O, P, S) \) be defined, where \( O \) is the sample space of all possible outcomes, \( S \) a sigma-algebra of events and \( P \) an additive probability measure over \( S \). For the purpose of stability investigation, the sample space \( O \) may be defined as the product space \( O = O_1 \times O_2 \), in which \( O_1 \) is the set of all possible disturbances which can (potentially) affect system stability and \( O_2 \) is the set of all possible “state vector” trajectories after the disturbance. This requires the definition of a proper “state vector”, i.e. a vector whose components are all the system variables on whose values stability assessment is based (see also the following Eq. 2).

Let the random event I be the event of instability (over a given time horizon H of power system operation) and let (C 1,…, C m ) be a finite set of random events constituting all the credible—and mutually exclusive—disturbances (“contingencies”) which can affect the system operation in H and potentially make system stability worse. Then, the IP in the interval H is provided, according to the total probability theorem, by:

\[ P(I) = \sum_{j=1}^{m} P(I \mid C_j)\,P(C_j) \tag{1} \]

where P(C j ) = probability of occurrence of the disturbance C j in the time horizon under consideration; P(I|C j ) = probability of instability once the disturbance C j occurred.

The above relation may also still hold, at least as an approximation, when the disturbances C k are not mutually exclusive random events, provided that the joint probability of two (or more) disturbances is negligible: this typically happens in very short time (VST) operation, on which the second part of this chapter focusses.

In the following, IP strictly denotes a term like P(I|C) where C is the given fault.

As long as the fault statistics are known from available data of the system under consideration, the P(C j ) terms can be considered known terms; the P(I|C j ) terms are evaluated as shown in the following so that P(I) is readily obtained from the above relation.
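
As a minimal sketch of the total probability computation described above, the following Python fragment sums the products P(C j )·P(I|C j ) over a set of contingencies. The function name and all numerical values are hypothetical and serve only to illustrate the arithmetic; they are not taken from any real system.

```python
def total_instability_probability(p_fault, p_instab_given_fault):
    """Return P(I) = sum_j P(I | C_j) * P(C_j).

    Assumes the contingencies C_j are mutually exclusive (or that joint
    occurrences are negligible) over the time horizon considered.
    """
    if len(p_fault) != len(p_instab_given_fault):
        raise ValueError("both lists must have one entry per contingency")
    return sum(pc * pi for pc, pi in zip(p_fault, p_instab_given_fault))


# Hypothetical values for three contingencies (illustration only).
p_fault = [0.02, 0.01, 0.005]   # P(C_j) in the horizon H
p_cond = [0.004, 0.02, 0.10]    # P(I | C_j)
p_instability = total_instability_probability(p_fault, p_cond)
print(f"P(I) = {p_instability:.5f}")
```

In this made-up case the rarest contingency dominates P(I) because its conditional IP is by far the largest, which is the kind of ranking such a decomposition makes visible.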

A quite similar reasoning still applies if the fault location is modelled by an RV.

Basic RVs are load demand and the FCT. Aiming at the description of the system stability characteristics as a consequence of a given fault (for instance, a three-phase short-circuit), IP may be expressed as a function of these RVs.

For an assigned electrical power system, characterized by a state vector x 0 at time t 0 in which the fault is supposed to occur, let us denote the stability region of the post-fault equilibrium point with S. Naturally, x 0 is an RV (more precisely, a random vector), mainly due to the random nature of the load demand which, as previously mentioned, has a significant effect on the operation state. Let τ be the FCT: denoting by x(t, τ; x 0) the state vector trajectory at the time t after the fault clearing, the CCT for transient stability can be defined as follows:

This relation clearly shows the dependence of the critical time on the random initial state x 0, thus T cr is also an RV. As discussed in [11–13], the FCT should also be regarded as an RV which will be denoted by T cl.

By keeping in mind that the system maintains its stability conditions if and only if the FCT is smaller than the CCT, i.e. T cl < T cr, the IP for a given fault can be expressed as:

\[ q = P(T_{cl} > T_{cr}) \tag{3} \]

Formally, the model in the above relation is quite similar to the “Stress–Strength” model in reliability theory: indeed, if the failure of a certain device or system is caused by the occurrence of a “stress” T cl greater than the “strength” T cr of the device or system, then the above probability q represents the unreliability (failure probability) of the device or system.

Once the probability distributions of T cl and T cr are known, q = P(T cl > T cr) may be easily computed, as is well known in probability theory, as shown below. In fact, by describing both T cl and T cr as continuous non-negative RVs, with joint probability density function (pdf) f(t cr, t cl), the IP q is expressed as:

\[ q = \iint_{t_{cl} > t_{cr}} f(t_{cr}, t_{cl})\, dt_{cr}\, dt_{cl} = \int_{0}^{\infty} \int_{t_{cr}}^{\infty} f(t_{cr}, t_{cl})\, dt_{cl}\, dt_{cr} \tag{4a} \]

In practice, the two RVs are always considered in the literature as being statistically independent of each other since they are related to independent phenomena (as discussed in Sect. 2.3): under this assumption, let f cl(t) and f cr(t) be the marginal pdfs of the RVs T cl and T cr, respectively, and let F cl(t) and F cr(t) be the corresponding cumulative distribution functions (cdfs). The above expression may then be rewritten as follows:

\[ q = \int_{0}^{\infty} f_{cr}(t)\,\bigl[1 - F_{cl}(t)\bigr]\, dt \tag{4b} \]

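Alternatively, by conditioning the instability event on the values of the clearing time T cl, q can also be equivalently expressed as:

\[ q = \int_{0}^{\infty} P(T_{cr} < t)\, f_{cl}(t)\, dt = \int_{0}^{\infty} F_{cr}(t)\, f_{cl}(t)\, dt \tag{4c} \]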
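
The single-integral form obtained by conditioning on the clearing time, i.e. q equal to the integral over t ≥ 0 of f cl(t)·F cr(t) under independence, can be checked numerically with a short sketch. The exponential distributions below are a purely hypothetical choice (they are not the models adopted in this chapter) and are used only because the result then has a simple closed form for comparison; the function name, rates and integration settings are likewise assumptions.

```python
import math


def instability_probability(f_cl, cdf_cr, upper, n=100_000):
    """Midpoint-rule estimate of q = integral_0^upper f_cl(t) * F_cr(t) dt."""
    h = upper / n
    return h * sum(f_cl((i + 0.5) * h) * cdf_cr((i + 0.5) * h) for i in range(n))


# Hypothetical exponential models (illustration only).
rate_cl, rate_cr = 20.0, 5.0  # 1/s: mean clearing time 0.05 s, mean CCT 0.2 s


def f_cl(t):
    """pdf of the clearing time T_cl."""
    return rate_cl * math.exp(-rate_cl * t)


def cdf_cr(t):
    """cdf of the critical clearing time T_cr."""
    return 1.0 - math.exp(-rate_cr * t)


q_numeric = instability_probability(f_cl, cdf_cr, upper=5.0)
q_exact = rate_cr / (rate_cl + rate_cr)  # closed form for two exponentials
print(q_numeric, q_exact)
```

The upper integration limit only needs to be large enough for the clearing-time density to be negligible beyond it.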

In order to evaluate the probability q, the following preliminary steps have to be taken:

- load demand and clearing time randomness have to be properly characterized in terms of distributions on the basis of realistic assumptions, by also taking the available data into account;
- the CCT distribution has to be evaluated in terms of the load distribution since there is a conceptual and analytical relationship between them (this aspect will be adequately discussed in the following sections);
- finally, the evaluation of the above integral has to be performed: often, this integration requires the use of numerical computation, but the analytical approach of this method allows it to be evaluated in a closed form.

This procedure is straightforward for the single-machine case (the so-called “one-machine infinite bus” system), as discussed in [11] since an analytical expression between T cr and the load demand, based on the well-known equal area criterion, can be demonstrated. Besides, in [12], the procedure was also extended to a multi-machine system by resorting to the so-called “Extended Equal Area Criterion” [17]. This procedure allows difficulties arising in the evaluation of T cr distribution to be overcome since T cr can be analytically expressed as a function of the load demand.

2.2 Probabilistic Modelling of the CCT

In this section, the functional expression between the CCT and the load demand L at the instant of contingency is discussed. Due to the random nature of the load demand evolution and the unpredictability of the instant of fault, the load active power L has to be correctly regarded as an RV. This implies that T cr is also an RV and its probability distribution may be calculated in terms of the load distribution which is generally estimated by load forecasting. In a quite general way, the load demand over time can be efficiently described through a continuous random process, L(t).

With reference to a generic time instant t, the load probability cumulative distribution function is denoted by F L (l; t) and is defined over the non-negative real numbers as F L (l; t) = P(L(t) ≤ l), l ≥ 0.

On the basis of the central limit theorem, it is generally assumed that L(t) can be described by a Gaussian random process [18]. The functional dependence between T cr and L can be described in a compact way as T cr = g(L) where g(·) is a continuous non-negative function over the positive real axis. Moreover, it can be proved that g(·) is a decreasing function of the active power L. Since T cr is a function of the RV L and the function g(·) is continuous and non-negative, the CCT is also represented by a continuous non-negative RV. Once the distribution function of L is known, in principle, the distribution function of T cr can be calculated by means of well-known theorems with regard to RV transformations applied to the analytical relation. Nevertheless, since the function g(·) is not analytically invertible, a closed-form expression for the probability distribution function of the variable T cr cannot be obtained. In these cases, the problem of the distribution evaluation is often solved using a stochastic Monte Carlo simulation.

However, in [11], an approximate method for the analytical calculation of the probability distribution function of T cr has been presented. The first step for the analytical evaluation is the approximation of the true characteristic g(L) with a simpler, invertible, analytical function. In particular, a log-linear model has proved to be very adequate when expressing the above characteristic for any given set of electrical parameters:

\[ T_{cr} = \beta_0 \exp(-\beta_1 L) \]

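or, taking natural logarithms and letting \( a = \ln \beta_0 \) and \( b = \beta_1 \):

\[ \ln T_{cr} = a - bL \]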

The model coefficients (β0, β1) are positive constants (so that: a is real, b positive), depending on the electrical parameters of the system. They can be efficiently determined by performing a linear regression of the natural logarithm of T cr with regard to the load; i.e. according to the least-square method, a and b are chosen as the values minimizing the sum of the square deviations:

\[ \sum_{i=1}^{n} \bigl[\ln T_{cr,i} - (a - b L_i)\bigr]^2 \]

the points (T cr,i , L i ; i = 1,…, n) being chosen assuming a proper step in the interval (L 1, L n ) in which they will probably occur. For instance, a μ ± 4σ interval may be chosen to represent the load values generated by a Gaussian distribution with mean μ and standard deviation σ.
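
The regression step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name is hypothetical, and the (L i , T cr,i ) samples are synthetic, generated from an assumed exactly log-linear curve on a μ ± 4σ grid rather than from a transient stability simulation, so the fit simply recovers the coefficients used to generate them.

```python
import math


def fit_log_linear(loads, t_cr):
    """Least-squares fit of ln(T_cr) = a - b * L; returns (a, b)."""
    n = len(loads)
    y = [math.log(t) for t in t_cr]
    mean_l = sum(loads) / n
    mean_y = sum(y) / n
    sxx = sum((l - mean_l) ** 2 for l in loads)
    sxy = sum((l - mean_l) * (yi - mean_y) for l, yi in zip(loads, y))
    slope = sxy / sxx              # slope of ln(T_cr) versus L (negative)
    return mean_y - slope * mean_l, -slope


# Hypothetical load statistics (per unit) and synthetic CCT samples.
mu, sigma = 1.0, 0.05
loads = [mu + sigma * (-4.0 + 0.5 * i) for i in range(17)]   # mu - 4s ... mu + 4s
t_cr = [math.exp(0.5 - 2.4 * l) for l in loads]              # assumed "true" curve

a, b = fit_log_linear(loads, t_cr)
print(f"a = {a:.4f}, b = {b:.4f}")
```

With CCT values computed from a real system model, the residuals of this fit would indicate how adequate the log-linear approximation is over the chosen load interval.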

On the basis of the model (see Sect. 2.1), the evaluation of the cdf of T cr in terms of the probability distribution function of the load L is straightforward and, for non-negative values of the CCT, it is expressed by:

\[ F_{cr}(t) = P(T_{cr} \le t) = P\!\left(L \ge \frac{a - \ln t}{b}\right) = 1 - F_L\!\left(\frac{a - \ln t}{b}\right), \quad t > 0 \tag{7} \]

The above cdf is, of course, equal to zero for negative values of the argument. The above relation is quite general, i.e. independent of any particular assumption made about the load distribution. Moreover, the cdf of the load L(t), i.e. the function F L (·) which appears on the right-hand side of the previous equation, depends on time t (even if this is not explicitly expressed in the previous equation); therefore, the expression of the cdf of T cr also depends on time, and it is valid for any particular time instant t at which the fault occurs. The hypothesis of a Gaussian distribution, generally adopted to describe the load, implies a Log-Normal distribution for the CCT, and this model will be used in the sequel. It should be stressed, however, that this distribution varies with time, although this fact may not be apparent at first sight. Indeed, time is not always represented explicitly in the relevant equations, which often refer to given short time intervals in which the above RVs (the load, and thus the CCT) and the corresponding distributions may be considered constant. In the next interval, however, this distribution is subject to change. This should be quite clear in the framework of dynamic estimation.
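
Since ln T cr = a − bL with b > 0, the event T cr ≤ t is the event L ≥ (a − ln t)/b, so the CCT cdf is 1 − F L ((a − ln t)/b) for t > 0. The sketch below evaluates this for an arbitrary load cdf and, for a Gaussian load, cross-checks it against the equivalent Log-Normal form. The function name and the values of a, b, μ and σ are hypothetical.

```python
import math
from statistics import NormalDist


def cct_cdf(t, a, b, load_cdf):
    """cdf of T_cr = exp(a - b * L), for b > 0 and any load cdf F_L."""
    if t <= 0.0:
        return 0.0
    return 1.0 - load_cdf((a - math.log(t)) / b)


# Hypothetical regression coefficients and Gaussian load (illustration only).
a, b = 0.5, 2.4
load = NormalDist(mu=1.0, sigma=0.05)

# The CCT corresponding to the mean load is the median of T_cr.
t_median = math.exp(a - b * load.mean)
print(cct_cdf(t_median, a, b, load.cdf))

# Cross-check: ln(T_cr) is Gaussian with mean a - b*mu and std b*sigma.
log_cct = NormalDist(mu=a - b * load.mean, sigma=b * load.stdev)
t = 0.14
print(cct_cdf(t, a, b, load.cdf), log_cct.cdf(math.log(t)))
```

Passing the load cdf as a callable keeps the function independent of the Gaussian assumption, in line with the generality noted in the text.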

2.3 Analytical Evaluation of IP: A General Methodology

As previously stated, the time interval needed for fault clearing (comprehensive of the time for the fast reclosure of the faulted line) should also be regarded as an RV, here denoted by T cl. The arc extinction phenomenon, in fact, is intrinsically not deterministic; the randomness of T cl may also be due to imperfect switching which can depend on the wear conditions of the poles caused by previous faults. Moreover, the (random) environmental conditions (temperature, humidity) also influence the clearing time T cl. The RV T cl is assumed to be continuous, non-negative and independent from the time instant of fault occurrence since the above phenomena can be considered independent of those which cause the fault. According to the definition of the CCT, it is natural to define the probability of instability after a contingency occurring at a given time instant t as:

\[ q(t) = P\{T_{cl} > T_{cr}(t)\} = P\{T_{cl} > g[L(t)]\} \tag{8} \]

In (8), the relation T cr = g[L(t)] between the CCT and the load at (intended as “immediately before”) the instant t of the contingency is explicitly presented. In order to obtain IP over a prefixed time horizon (0, h), the statistics of the random process of faults should be taken into account. This means that (8) must be integrated with the probability distribution of the number of faults in (0, h) which is indeed a random process. A Poisson stochastic process [10, 19] may be generally assumed as valid for this purpose. However, a different and simpler approach is possible [11]. The stability event over (0, h) may be defined as the property whereby—in the whole interval–stress T cl never exceeds strength T cr, which depends on time t through function g. Hence, the IP can be defined as the probability that T cr is exceeded by T cl for at least one time instant t in (0, h): this happens if and only if, as time t varies in (0, h), T cl exceeds the minimum value of T cr attainable in this interval.

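Mathematically speaking, the IP, q, can be expressed as follows:

\[ q = P\{\exists\, t \in (0, h) : T_{cl} > g[L(t)]\} = P\Bigl\{T_{cl} > \inf_{0 < t < h} g[L(t)]\Bigr\} \tag{9} \]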

Let the peak load value, Λ = sup (L(t); 0 < t < h), over the interval (0, h) be introduced: since L(t) is a continuous random process, Λ is a continuous RV whose probability distribution function is denoted by F Λ(λ). As previously mentioned, the function g[L(t)] is continuous and decreasing versus L. Therefore, the “minimum” CCT over (0, h), again denoted by T cr, can be expressed as follows:

\[ T_{cr} = \inf_{0 < t < h} g[L(t)] = g(\Lambda) \tag{10} \]

Hence, the IP is expressed by:

\[ q = P(T_{cl} > T_{cr}) = P\{T_{cl} > g(\Lambda)\} \tag{11} \]

Hence, by keeping in mind the expression in (7), T cr can be expressed in terms of the peak load Λ, so that its probability distribution function F cr(t cr) can be written as follows:

\[ F_{cr}(t_{cr}) = 1 - F_{\Lambda}\!\left(\frac{a - \ln t_{cr}}{b}\right), \quad t_{cr} > 0 \]

where the constants a and b depend on the particular system, but are indeed constant with time (unless system topology changes; this case is excluded here but can be dealt with the same methodology, once it occurs, by simply computing the new values of a and b).

The problem can then be easily solved once the peak load and the clearing time distributions are known. The distribution function and the probability density function of the clearing time T cl are denoted by F(t) and f(t), respectively. Assuming, as reasonable, that the variables T cl and T cr are statistically independent, the IP in Eq. 11 can then be calculated as in Eqs. 4b or 4c. This approach, taken from [13], expresses the IP over an arbitrarily large interval—once the pdf of peak load is known—and is useful if a planning horizon is being studied. It was presented here for the sake of completeness: the application in this chapter is in fact devoted to on-line stability assessment for VST applications, related to time intervals typically lasting 1 h or less, so that in those intervals, the load L may be considered as a constant, albeit unknown (random) value so that, in practice, it will be modelled through an RV instead of a stochastic process. However, such a distinction does not affect the methodology followed in the sequel, since—as anticipated—the Gaussian distribution which will be used here is widely employed for describing the load process since it is also common practice to describe the peak load uncertainty by means of a Gaussian RV whose expected value is the forecasted peak load. For very large time horizons, the Extreme value (EV) distribution is also a natural candidate for describing the peak load [20]: this model—as well as others—can also be handled in practice with no particular problems as shown in [13], using the same methodology illustrated here.

3 Analytical IP Evaluation for Gaussian Load and Log-Normal FCT

3.1 Analytical Expression of IP

In this section, the analytical expression of the statistical parameter q—the IP of the given system under a given fault occurrence—is discussed on the basis of reasonable assumptions for the distribution functions of the RVs L (and consequently T cr) and T cl. For the sake of notation simplicity, the RVs T cr and T cl will be, respectively, named T x and T y .

The load L is then assumed to be a N(μ L , σ L ) RV: then, letting l be a given possible load value, L is characterized by the following pdf over (−∞ < l < +∞):

\[ f_L(l) = \frac{1}{\sigma_L \sqrt{2\pi}} \exp\!\left[-\frac{(l - \mu_L)^2}{2\sigma_L^2}\right] \]

The interval of possible values is (−∞ < l < +∞) only theoretically, being derived from the Gaussian representation. In fact, the probability of negative values for L should, of course, be equal to zero; in practice, it is known that P(L < l) ≈ 0 if l < μ − 3σ.

The natural logarithm of T x is also normally distributed on the basis of the above-discussed relationship X = ln(T x ) = a − bL. Hence, the distribution of T x can be described by a Log-Normal distribution. The authors have shown [11–13], by means of extended numerical simulations and adequate statistical tests, that the proposed Log-Normal model for the distribution of the critical time is very adequate when the load is a Gaussian RV.

The Log-Normal pdf with parameters α (scale) and β (shape) is expressed by:

\[ f(t) = \frac{1}{\beta\, t \sqrt{2\pi}} \exp\!\left[-\frac{(\ln t - \alpha)^2}{2\beta^2}\right], \quad t > 0 \tag{13} \]

and the density f(t) is zero for t < 0.

In expression (13) α and β represent the mean value and the standard deviation of the natural logarithm of the Log-Normal variable, respectively; the mean value μ and the standard deviation σ, corresponding to Eq. 13, are:

\[ \mu = \exp\!\left(\alpha + \frac{\beta^2}{2}\right), \qquad \sigma = \mu \sqrt{\exp(\beta^2) - 1} \tag{14} \]

In this section, T x is thus assumed to follow a Log-Normal distribution, with parameters α x and β x .

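Since α x and β x are the mean value and the standard deviation of X = ln(T x ), the natural logarithm of the CCT (see (13) and (14)), they can be obtained from the statistical parameters μ L , σ L of the load and from the regression coefficients a and b through the relationship X = a − bL:

\[ \alpha_x = E[X] = a - b\,\mu_L, \qquad \beta_x = SD[X] = b\,\sigma_L \]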

A proper probabilistic modelling for the clearing time T y must also be introduced.

If there is a lack of experimental data, a Gaussian distribution is often assumed. However, the Gaussian distribution does not appear to be a very adequate and flexible choice, and the Log-Normal model is used instead, as in [11–13]: the Log-Normal pdf is indeed very flexible, since it can assume a large variety of shapes with positive “skewness index” which allows for a typical long “right tail” [21] whereas the Gaussian model only allows a single shape for the distribution of the clearing time, i.e. a symmetrical (bell-shaped) distribution around the mean value which is not likely to occur in real applications. The presence of a right tail in the Log-Normal density accounts for the possibility of relatively large clearing times compared with the expected value: thus, the Log-Normal assumption corresponds to a conservative approach which is appropriate when the exact distribution is unknown. The Log-Normal assumption for T y also permits a straightforward analytical calculation of the IP, without being restrictive, since other distributions may be adopted with the same methodology as shown in [13], requiring only elementary numerical methods.

Furthermore, if the β coefficient of the Log-Normal pdf is small enough, the pdf tends to become symmetrical and may also satisfactorily approximate a Gaussian model.

In this section, the IP computation is performed under the previously discussed hypothesis that both the clearing time T y and the minimum CCT T x are described by Log-Normal, independent, RVs. It is, therefore, assumed that T y has a Log-Normal distribution with parameters α y and β y , with density \( f_{{T_{y} }} (t_{y} ) \) expressed by (13). As a particular case, in VST applications the FCT may be considered as a known constant (as discussed in Sect. 3). With reference to the choice of α y and β y , they can be related to the values of \( \mu_{{T_{y} }} \) (mean value of T y ) and \( \sigma_{{T_{y} }} \) (standard deviation of T y ), on which some information could be known in practice.

Denoting by \( v_{y} = {\frac{{\sigma_{{T_{y} }} }}{{\mu_{{T_{y} }} }}} \) the coefficient of variation (CV) of T y , the relations specifying α y and β y as functions of \( \mu_{{T_{y} }} \) and \( \sigma_{{T_{y} }} \) are the following:

\[ \beta_y = \sqrt{\ln\bigl(1 + v_y^2\bigr)}, \qquad \alpha_y = \ln \mu_{T_y} - \frac{\beta_y^2}{2} \]

Different values can be considered for the parameters \( \mu_{{T_{y} }} \) and v y , in order to establish a sensitivity analysis. The IP variability versus the mean FCT \( \mu_{{T_{y} }} \) is particularly interesting since such a mean clearing time is a practical measure of the reliability level of the protection system.
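
For a sensitivity study of this kind, the conversion between the (mean, CV) description and the Log-Normal parameters (α, β) is the basic building block. The sketch below uses the standard Log-Normal moment relations, β² = ln(1 + v²) and α = ln μ − β²/2, together with their inverse; the function names are hypothetical, and the values 0.10 s and 0.10 are those used for the FCT in the numerical example of Sect. 3.2.

```python
import math


def lognormal_params(mean, cv):
    """Return (alpha, beta) of a Log-Normal RV with given mean and CV."""
    beta = math.sqrt(math.log(1.0 + cv ** 2))
    alpha = math.log(mean) - 0.5 * beta ** 2
    return alpha, beta


def lognormal_moments(alpha, beta):
    """Return (mean, standard deviation) of a Log-Normal RV."""
    mean = math.exp(alpha + 0.5 * beta ** 2)
    std = mean * math.sqrt(math.exp(beta ** 2) - 1.0)
    return mean, std


alpha_y, beta_y = lognormal_params(mean=0.10, cv=0.10)
mean_back, std_back = lognormal_moments(alpha_y, beta_y)
print(alpha_y, beta_y, mean_back, std_back / mean_back)
```

Note that β depends on the CV only, so changing the mean FCT at fixed CV shifts α y while leaving β y unchanged.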

The determination of the probability q, when T x and T y are Log-Normal and independent of each other, is now considered.

First, the following auxiliary RVs are introduced:

\[ X = \ln T_x, \qquad Y = \ln T_y, \qquad Z = X - Y \]

Under the assumed hypotheses, the probability laws of the above RVs X and Y are, respectively, N(α x , β x ) and N(α y , β y ), where (using from now on the symbol α x instead of αcr and, in general, the suffixes (x, y) instead of (cr, cl)):

\[ \alpha_x = E[X], \quad \beta_x = SD[X], \qquad \alpha_y = E[Y], \quad \beta_y = SD[Y] \]

According to the well-known properties of the Gaussian distribution, the variable Z, being the difference between two independent Gaussian RVs, is also Gaussian with mean value and standard deviation given by:

\[ \mu_z = E[Z] = \alpha_x - \alpha_y, \qquad \sigma_z = \sqrt{\beta_x^2 + \beta_y^2} \]

It is worth remarking, although obvious, that in practice μ z is always positive (α x > α y ) since the FCT must always be small enough compared with the CCT for the system to possess an acceptable level of stability, namely a very small IP value. Since IP = P(Z < 0), this can occur only if E[X] is larger than E[Y]: this intuitive fact will be confirmed by the computations in the following.

By introducing the standard Gaussian distribution function:

\[ \Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} \exp\!\left(-\frac{s^2}{2}\right) ds \]

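the IP can be easily computed as:

\[ q = P(T_y > T_x) = P(Z < 0) = \Phi\!\left(-\frac{\mu_z}{\sigma_z}\right) = 1 - \Phi\!\left(\frac{\mu_z}{\sigma_z}\right) \]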

by using the well-known property: Φ(−x) = 1 − Φ(x), valid for each real number x.

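Alternatively, the “complementary standard Gaussian cumulative distribution function” (CSGCDF) can be used, as is done here:

\[ \Psi(x) = 1 - \Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{x}^{\infty} \exp\!\left(-\frac{s^2}{2}\right) ds \]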

Since the CSGCDF Ψ(x) is a strictly decreasing function of x from the value Ψ(−∞) = 1 to the value Ψ(∞) = 0, with Ψ(0) = 0.5 (see Appendix 1 for some curves), the IP can be expressed by the more compact expression:

\[ q = \Psi(u) \]

where

\[ u = \frac{\mu_z}{\sigma_z} = \frac{\alpha_x - \alpha_y}{\sqrt{\beta_x^2 + \beta_y^2}} \]

The above quantity u, which plays a key role in the statistical assessment of the IP, can be defined as the “SM” of the system (under the given fault) since the larger the value of u, the smaller the IP. Confirmation of the fact that the relation (α x > α y ) must always be satisfied in practice (although it is not mandatory on theoretical grounds) is that, unless this happens, the IP is greater than 0.5 (if α x = α y , then u = 0 → q = 0.5).
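
The closed form q = Ψ(u), with u = (α x − α y )/√(β x ² + β y ²), can be cross-checked against a crude Monte Carlo simulation of the two independent Log-Normal RVs. The following is a sketch only: the function names, the parameter values (chosen to give a fairly large IP so that few samples suffice), the sample size and the seed are all hypothetical.

```python
import math
import random
from statistics import NormalDist


def instability_probability(alpha_x, beta_x, alpha_y, beta_y):
    """Closed-form IP: q = Psi(u), u = (alpha_x - alpha_y) / sqrt(bx^2 + by^2)."""
    u = (alpha_x - alpha_y) / math.hypot(beta_x, beta_y)
    return NormalDist().cdf(-u)  # Psi(u) = 1 - Phi(u) = Phi(-u)


def monte_carlo_ip(alpha_x, beta_x, alpha_y, beta_y, n, seed=0):
    """Fraction of sampled cases in which the FCT exceeds the CCT."""
    rng = random.Random(seed)
    unstable = sum(
        rng.lognormvariate(alpha_y, beta_y) > rng.lognormvariate(alpha_x, beta_x)
        for _ in range(n)
    )
    return unstable / n


# Hypothetical Log-Normal parameters (illustration only).
params = (-1.9, 0.15, -2.1, 0.15)
q_closed = instability_probability(*params)
q_mc = monte_carlo_ip(*params, n=100_000)
print(q_closed, q_mc)
```

For the very small IP values of practical interest, the Monte Carlo estimate needs far more samples to reach a comparable relative accuracy, which is one practical argument for the analytical expression.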

3.2 A Numerical Example

As a numerical example, typical mean values of the CCT and FCT (which will be used in the applications in the chapter) are μ x = 0.145 s and μ y = 0.10 s, respectively.

It is, therefore, assumed that the CCT and FCT follow two independent LN distributions with mean values as above; moreover, a common CV value of 0.1 is assumed for both the CCT and the FCT (i.e. v x = v y = 0.10). The following values of (αx, α y , βx, β y ) correspond to these values:

having used the relations already stated:

It can be seen that α x > α y , as expected. The above values β x and β y in the example are equal since they depend on the CV value only.

Finally, the SM value is \( u = {\frac{{\alpha_{x} - \alpha_{y} }}{{\sqrt {\beta_{x}^{2} + \beta_{y}^{2} } }}} = 2.634 \) and the IP is then evaluated as q = Ψ(u) = 0.00423.

For high values of the SM like the one above, it is worth noting that (see Appendix 1 too) the IP is very sensitive to the variations of system parameters such as the mean FCT μ y , as can also be seen by taking the derivative of q with regard to μ y . For instance, if the mean FCT increases from the above 0.10 to 0.11 s (a 10% increase), with the same CVs, then the IP increases to 0.0251 (a 493% increase!). The IP variation compared with both CV variations is also very high. This is just an example of some analytical remarks, briefly discussed below, which may be useful in actual practice.
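The figures of this example can be checked with a few lines of Python. The sketch below assumes the standard Log-Normal moment relations β² = ln(1 + v²) and α = ln μ − β²/2 (taken here to be the "relations already stated"); the function names are illustrative and do not come from the chapter.

```python
import math

def ln_params(mean, cv):
    """Return (alpha, beta): mean and SD of ln(T) for a Log-Normal T
    with the given mean and coefficient of variation (assumed relations)."""
    beta2 = math.log(1.0 + cv ** 2)
    alpha = math.log(mean) - 0.5 * beta2
    return alpha, math.sqrt(beta2)

def psi(u):
    """Complementary standard Gaussian cdf, Psi(u) = 1 - Phi(u)."""
    return 0.5 * math.erfc(u / math.sqrt(2.0))

def instability_probability(mu_x, mu_y, cv_x, cv_y):
    """Return (u, q): stability margin and instability probability."""
    a_x, b_x = ln_params(mu_x, cv_x)   # CCT parameters
    a_y, b_y = ln_params(mu_y, cv_y)   # FCT parameters
    u = (a_x - a_y) / math.hypot(b_x, b_y)
    return u, psi(u)

# Base case of the example, then the case with mean FCT raised to 0.11 s.
u, q = instability_probability(0.145, 0.10, 0.10, 0.10)
u2, q2 = instability_probability(0.145, 0.11, 0.10, 0.10)
print(f"mean FCT 0.10 s: u = {u:.3f}, q = {q:.5f}")
print(f"mean FCT 0.11 s: u = {u2:.3f}, q = {q2:.5f}")
print(f"relative IP increase: {100.0 * (q2 / q - 1.0):.0f}%")
```

Small differences in the last printed digit with respect to the values quoted in the text are to be expected, depending on rounding.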

3.3 Some Final Remarks on IP Sensitivity and Its Estimation

Deferring a more detailed illustration of the IP expression to Appendix 1, this section concludes by highlighting some basic facts which are easily deduced when observing the expression of q:

Since Ψ is a decreasing function of its argument, then, as intuitive, q decreases (and stability improves) as the SM increases, e.g. the mean CCT μ x increases, or the mean FCT μ y decreases; for given values of μ x and μ y , it can be verified, also analytically (see Appendix 1), that the IP also increases when the CV of the CCT and/or FCT increases.

In other words, IP increases as the uncertainty about the above times increases: this consideration has the practical implication that, if uncertainty in load values (which entails uncertainty in the CCT) and/or in FCT is neglected (i.e. their CV values are assumed as zero), the IP may be undesirably underestimated.
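The effect of uncertainty on the IP can be illustrated numerically. The following sketch keeps the mean values of the example in Sect. 3.2 and varies a common CV for the CCT and the FCT; it assumes the standard Log-Normal relation β² = ln(1 + v²), and the helper name is hypothetical.

```python
import math

def ip_for_common_cv(mu_x, mu_y, cv):
    """IP for Log-Normal CCT and FCT with means mu_x, mu_y and a common CV."""
    beta2 = math.log(1.0 + cv ** 2)          # variance of each logarithm
    # With a common CV the -beta2/2 terms cancel in alpha_x - alpha_y.
    u = math.log(mu_x / mu_y) / math.sqrt(2.0 * beta2)
    return 0.5 * math.erfc(u / math.sqrt(2.0))

cvs = (0.05, 0.10, 0.15, 0.20)
ips = [ip_for_common_cv(0.145, 0.10, cv) for cv in cvs]
for cv, ip in zip(cvs, ips):
    print(f"CV = {cv:.2f}  ->  IP = {ip:.3e}")
```

For fixed mean values the computed IP grows with the CV, so treating the times as deterministic (CV close to zero) yields a much smaller IP than any realistic level of uncertainty.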

It can also be seen that q(u) decreases very quickly towards 0—as exemplified in the above numerical example—especially when the SM u is large enough. This and other mathematical aspects of the relation between the IP and its parameters are discussed and also illustrated graphically, with some details in Appendix 1, in which a sensitivity analysis of the IP is also illustrated.

The great advantage of the proposed analytical approach, compared with numerical methods or Monte Carlo simulation, lies in the ease with which it allows this sensitivity analysis to be performed with regard to system parameters. This is clearly a very desirable property in view of an efficient system design (i.e. with regard to the protection system: deciding how to improve its performance by lowering the mean value of the FCT, or improving data acquisition in order to reduce its SD; or, with regard to the network topology: devising appropriate actions to increase the mean value of the CCT, and similar actions).

It can be seen that the IP value obtained by the above methodology is only a (statistical) point estimate of the “true” IP since it is obtained from estimated values of the true parameters α y , α x , β y , β x (as far as the CCT parameters are concerned, they are “forecasted” since they are obtained on the basis of a load forecast; the FCT parameters are estimated from available field or laboratory data). The topic of estimation is discussed in a Bayesian framework in the following sections where ML estimation is also mentioned.

The problem of IP sensitivity described above and estimation are closely related. The above results, and those in Appendix 1, show that, in view of the above high sensitivity of the IP to system parameters, particular attention should be paid to developing an efficient estimation of the characteristic parameters of the CCT and FCT.

4 Bayesian Statistical Inference for Transient Stability

4.1 Introduction

Bayesian inference [22–24] is becoming more and more popular as a powerful tool in all engineering applications, including recent applications to power system analysis. This section and the following ones, including the last ones which focus on numerical applications, are devoted to a novel methodology for the Bayesian statistical estimation, or briefly “Bayesian estimation”, of the IP. In particular, here we are interested in developing a proper methodology for making inference about the transient IP, q, once prior information and experimental data are available regarding the unknown parameters on which q depends.

It has been seen that the analytical expression of the IP value requires efficient statistical estimates of the true parameters α y , α x , β y , β x to be evaluated in actual practice (e.g. the CCT parameters, as pointed out before, depend on the load parameters, which are not known, but estimated as a consequence of a load forecast). The extreme IP sensitivity in the region of the values of practical interest (i.e. those yielding IP values of the order of 1e−3 or less) reinforces the need for an efficient estimation.

The aim of the inference is to establish both point and interval estimates of the unknown probability q = Ψ(u) given that the parameters (α x , β x , α y , β y ) of the two LN distributions must be estimated on the basis of the available random samples (T xk : k = 1,…, n) and (T yk : k = 1,…, m).

Bayesian inference [22–25] successfully provides a coherent and effective probabilistic framework for sequentially updating estimates of model parameters as demonstrated by the ever increasing number of publications addressed to it in both theoretical and applied fields. Bayes estimation, therefore, appears to be quite adequate for on-line sequential estimation of model parameters. For well-known reasons, moreover, it is particularly efficient (compared with traditional classic estimation, based on ML methods, briefly mentioned in the final part of this section) when rare events are of interest, as is the case here. This is so true that it is currently proposed even when there are no data (see, e.g. [26] for a recent application).

The core of the Bayesian approach is the description of all uncertainties present in the problem by means of probability, and its philosophical roots lie in the subjective meaning of probability [25]. According to such philosophy, the unknown parameters to be estimated are considered RVs, characterized by given distributions whose meaning is not a description of their “variability” (parameters are indeed considered fixed but unknown quantities) but a description of the observer’s uncertainty about their true values. Let ω = (ω1, ω2,…, ω n ) be the n-dimensional vector of the parameters to be estimated. The first step in a Bayes estimation process is to introduce, in order to express the available knowledge on the parameters before observing data, a “prior” probability distribution, characterized, in the continuous case considered here, by a joint (n-dimensional) pdf over the parameter space Ω:

This prior pdf is often—but not always—chosen “subjectively”, which does not mean “arbitrarily”, but means “on the basis of the knowledge available to the analyst”, also using “objective” pieces of information which in most cases could not be used in classical (frequentist) statistical estimation [22–25] which does not admit the existence of a prior pdf.

Then, the data D are observed according to a formal probability model which is assumed to represent the probabilistic mechanism that has generated the observed data D, for some (unknown) value of ω. This model gives rise to the “likelihood function” (LF), L(D|ω), i.e. the conditional probability of the data, given ω ∈ Ω. After observing the data D, all the new (updated) available knowledge is contained in the corresponding posterior distribution of ω. This is represented by a posterior joint probability density, g(ω|D), obtained from Bayes’ theorem:

\[ g(\omega |D) = \frac{g(\omega )\,L(D|\omega )}{\int_{\Omega } g(\omega )\,L(D|\omega )\,{\rm d}\omega } \]

where the denominator is the n-fold integral over the whole parameter space Ω. Then, if a function τ = τ(ω) of the parameters in ω is the subject of estimation, according to the well-known “mean square error” (MSE) criterion, the best Bayes “point” estimate, denoted by τ°, is given by the posterior mean of τ, given the data D. This may be obtained by well-known rules related to the expectation of a function of RVs [19] by:

The particular case τ(ω) = ω k , for any given k value (k = 1,2,…, n), yields the Bayes estimate of the single parameter ω k .

Alternatively, by denoting the prior pdf of τ by h(·), i.e. the pdf induced—by a proper manipulation of the pdf g(ω)—on the space of τ values by the transformation τ = τ(ω), and introducing, analogously, the posterior pdf of τ, h(τ|D), the above expectation may be obtained equivalently by the following integral:

with Ξ being the space of τ values. In practice, and also in this application, it is very difficult, if not impossible, to deduce an analytical expression for the posterior pdf of τ, and the above expectation may be more easily obtained by the integral over Ω even if, in most cases, it is evaluated numerically or by means of simulation.

Unlike classical estimation, which is inherently focussed on the point estimate, here the point estimate is only a particular piece of information: indeed, Bayesian inference aims to express all the available knowledge on the parameters not by a single value but by means of the complete posterior pdf of τ, h(τ|D), introduced above. The point estimate is only a “synthesis” of this pdf. This pdf (which would have no meaning in classical inference, where τ is regarded as an unknown constant, not an RV) is the “key” information provided by Bayes estimation since it allows any probabilistic statement about the values of τ to be expressed. Typically this pdf is used to form a “Bayesian confidence interval” (BCI) or “Bayesian credible interval” of the unknown τ, defined as:

so that P(τ1 < τ < τ2) = π, where π is a given probability. The BCI is expressed in terms of the posterior pdf of τ as follows:

In practice, the above relation is generally not sufficient to find the BCI but further requirements, such as a search for the “Highest Posterior Density” regions [23], allow the determination of both the unknowns (τ1, τ2) above.
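The steps above (prior, likelihood, posterior, point estimate, interval) can be sketched numerically on a grid. The example below is only illustrative: it assumes a single unknown parameter (the mean of Gaussian data with known SD), a Gaussian prior, and hypothetical numbers that are not taken from the chapter. It computes an equal-tailed interval, which for a symmetric unimodal posterior such as this one coincides with the Highest Posterior Density interval.

```python
import math

def gauss_pdf(x, mean, sd):
    """Gaussian pdf with the given mean and standard deviation."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))

# Hypothetical inputs (not taken from the chapter).
data = [-1.95, -1.90, -1.98]       # observations, Gaussian with unknown mean
sd_known = 0.10                    # known sampling SD
prior_mean, prior_sd = -2.0, 0.2   # Gaussian prior on the unknown mean

# Grid over the parameter space.
step = 0.001
grid = [-3.0 + i * step for i in range(2001)]

# Bayes' theorem: posterior proportional to prior times likelihood.
unnorm = [gauss_pdf(w, prior_mean, prior_sd)
          * math.prod(gauss_pdf(x, w, sd_known) for x in data)
          for w in grid]
norm = sum(unnorm) * step          # numerical value of the denominator
post = [p / norm for p in unnorm]

# Point estimate under the MSE criterion: the posterior mean.
post_mean = sum(w * p for w, p in zip(grid, post)) * step

def posterior_quantile(prob):
    """Smallest grid value whose cumulative posterior probability reaches prob."""
    acc = 0.0
    for w, p in zip(grid, post):
        acc += p * step
        if acc >= prob:
            return w
    return grid[-1]

# Equal-tailed 95% Bayesian credible interval (tau1, tau2).
tau1, tau2 = posterior_quantile(0.025), posterior_quantile(0.975)
print(f"posterior mean = {post_mean:.4f}, 95% BCI = ({tau1:.3f}, {tau2:.3f})")
```

In this conjugate setting the grid result can be compared with the closed-form Gaussian posterior; for non-conjugate priors the same grid procedure still applies, at the cost of a finer or wider grid.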

4.2 A General Methodology for Bayesian Inference on Transient IP

Let us transpose the above concepts of Bayesian inference to the estimation of the IP:

with u being the SM whereas Ψ(z) is the CSGCDF Ψ(z) = 1 − Φ(z):

For the purpose of Bayes estimation, in the most general case, all the parameters shall be considered unknown. Therefore, in the Bayes approach, the four parameters (α x ,α y ,β x ,β y ), and also the SM u and the IP itself, q, are regarded as realizations of RVs, which will be denoted by the following capital letters in the sequel (see Footnote 2):

The symbols M and V are also chosen for “mnemonic” reasons since, as mentioned below, they correspond to mean values and variances, in particular of the logarithm of the CCT (T x ) and of the logarithm of the FCT (T y ):

Bayes estimation, therefore, consists of assessing prior distributions to the above parameters (M x ,M y ,V x ,V y ), and then evaluating point and interval estimates of the unknown parameter IP, described by the RV Q, function of the RV U:

The above relations specify the relation between the basic RV (M x ,M y ,V x ,V y ) and the IP Q:

These relations appear to be quite complicated, in particular due to the presence of the special function Ψ(U), whatever the choice of the prior pdf of the basic RV, a topic which is dealt with below. The same argument also applies, a fortiori, to the posterior pdf. In practice, it is impossible to evaluate the pdf of Q—be it prior or posterior—analytically. It can be, however, handled numerically by resorting to a reasonable “Beta approximation”, for example—introduced in a different study by Martz et al. [27] and also used by the authors in the above-mentioned (a.m.) article [16] in the framework of ML estimation. This approximation is illustrated in Appendix 3 and has been shown to be very adequate, although not being the only possible approximation, since every pdf over (0, 1) which can be rather smooth and flexible may be a good candidate (possible alternative choices studied by the authors are also mentioned in Appendix 2). In any case, once the numerical pdf of Q is obtained, its usefulness in this application seems to consist, first of all, in establishing a proper “upper confidence bound” for IP, i.e. a q UP value that makes the probability of the “desirable” event (Q < q UP) high enough, say 0.95 or 0.99. Therefore, by denoting this high probability value with η, interest may be focussed on the determination of a q UP value so that:

With a sufficiently large probability value, we are, therefore, assured that the “true” IP, Q, is smaller than the “upper bound” q UP, which is the Bayes counterpart of a classical upper confidence bound at the confidence level 100η%.

An important characteristic, perhaps the most important one, of the Bayes inference methods is the one, already mentioned, of allowing any probabilistic statement on the values under investigation, here the IP, to be expressed, e.g. in terms of the above BCIs.

This is the core of “Bayes inference”, which is something more than pure estimation, and this is also the reason behind the heading of this section (“Bayesian inference” rather than “Bayesian estimation”). Also, in many practical cases (e.g. in order to control if some prefixed requirements or standards are met by system performances), an interval estimate may be more significant than the point estimate alone.

However, also in view of the analytical or numerical difficulties mentioned above associated with the establishment of the BCI, in actual practice, there is no doubt that the typical objective of the Bayes methods is to assess the point estimate of Q. This is the topic dealt with from now on. This point estimate of Q may be evaluated after the assessment or evaluation of the:

- prior parameters’ pdf: \( g_{{M_{x} ,M_{y} ,V_{x} ,V_{y} }} \) (m x ,m y ,v x ,v y ), briefly denoted as g(m x ,m y ,v x ,v y );

- the LF: L(D|(m x ,m y ,v x ,v y )), which is given in this case by the conditional joint pdf of the observed data (CCT values, i.e. the times T xk , and FCT values, i.e. the times T yk , recorded in the interval of interest for the IP prediction). This joint pdf is conditional on the parameters (m x ,m y ,v x ,v y );

- posterior parameters’ pdf: \( g_{{M_{x} ,M_{y} ,V_{x} ,V_{y} }} \) (m x ,m y ,v x ,v y |D), briefly denoted as g(m x ,m y ,v x ,v y |D), obtained from the prior pdf and the LF by means of the Bayes theorem as illustrated above.

Finally, the Bayes estimate, denoted by Q°, of the IP Q is given in principle by the four-dimensional integral:

with Ω the parameter space specified above for the four parameters (m x ,m y ,v x ,v y ), Q(m x ,m y ,v x ,v y ) = Ψ(u) (lowercase letters are used for the single determinations of the RVs being studied), \( u = {\frac{{m_{x} - m_{y} }}{{\sqrt {v_{x} + v_{y} } }}} \), and Ψ the above CSGCDF.

As far as the choice of the prior pdf for the above parameters is concerned, it is well known that the simplest natural candidates are the so-called “conjugate prior pdf” [23, 24]: this means adopting Gaussian prior pdf for the mean values M x and M y and Inverted Gamma prior pdf for the variances V x and V y (see Appendix 2). These are indeed the conjugate prior pdf of the mean and variance for both the Normal and the Log-Normal sampling distributions [21–24].

The above integral may appear quite cumbersome, yet its evaluation can be made—at least in some cases—relatively simple, observing that the particular form of the random IP, Q(U), can be reformulated in terms of the RV: M = M x − M y , V = V x + V y , \( S = \sqrt V . \) Hence Q = Ψ(M/S).

If prior and posterior information on the four parameters (M x ,M y ,V x ,V y ) is recast into prior and posterior information on the difference M = (M x − M y ) and the sum V = (V x + V y ), the above integral, hence, reduces to a double integral (with respect to the pdf of M and S) which can be solved using methods related to Bayes estimation of Gaussian probabilities [23, 24].

The above transformation between the pdf of (M x ,M y ,V x ,V y ) and those of M and V may be effected by elementary RV transformations, taking advantage of the assumed s-independence between the CCT and the FCT which logically implies the s-independence between their mean values and variances. For instance, adopting conjugate Gaussian prior pdf both for M x and M y , assumed as s-independent, then the prior pdf for M = (M x − M y ) is again Gaussian with obvious values of the parameters; the same holds, as is well known, for the posterior pdf. As far as the variances are concerned, the same reasoning does not apply for the above-mentioned conjugate Inverted Gamma pdf which is typically adopted as the prior pdf. However, if one is able to express information directly in terms of the sum of variances V = (V x + V y ), by using an Inverted Gamma pdf for V, the classical results of Bayes estimation for the Gaussian model (mentioned in Appendix 2) may still be applied. In general, however, if other prior models are chosen, the above estimation must be carried out numerically. This poses no particular problem nowadays since specific codes and algorithms have been devised for such purposes [22–24].
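When the double integral over (M, V) has to be evaluated numerically, plain Monte Carlo sampling is one simple option. The sketch below assumes a Gaussian pdf for M = M x − M y and an Inverted Gamma pdf for V = V x + V y , taken as independent; all numerical values are hypothetical placeholders, not parameters from the chapter. It returns both a point estimate of Q and an upper bound q UP at probability η.

```python
import math
import random

def psi(u):
    """Complementary standard Gaussian cdf, Psi(u) = 1 - Phi(u)."""
    return 0.5 * math.erfc(u / math.sqrt(2.0))

rng = random.Random(0)             # fixed seed for reproducibility

# Hypothetical (prior or posterior) parameters, for illustration only.
m_mean, m_sd = 0.37, 0.03          # Gaussian pdf of M = M_x - M_y
v_shape, v_scale = 20.0, 0.38      # Inverted Gamma pdf of V = V_x + V_y

n_draws = 20000
samples = []
for _ in range(n_draws):
    m = rng.gauss(m_mean, m_sd)
    # If G ~ Gamma(shape, 1), then scale / G ~ Inverted Gamma(shape, scale).
    v = v_scale / rng.gammavariate(v_shape, 1.0)
    samples.append(psi(m / math.sqrt(v)))   # Q = Psi(M / S), S = sqrt(V)

q_point = sum(samples) / n_draws            # Monte Carlo estimate of E[Q]
samples.sort()
eta = 0.95
q_up = samples[int(eta * n_draws) - 1]      # empirical eta-quantile of Q

print(f"point estimate of Q = {q_point:.5f}")
print(f"upper bound q_UP (eta = {eta}) = {q_up:.5f}")
```

The same loop can accommodate non-conjugate pdfs by replacing the two sampling lines, which is the main reason for preferring simulation in the general case.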

A major simplification occurs in the particular case considered in the application of this contribution, i.e. in VST applications in which the FCT may be considered in practice as a known constant (as discussed in the following section).

4.3 A Simplified Method for Bayes Estimation of the IP in the Event of VST Stability Prediction

The general theoretical problem of the Bayes estimation for the IP, discussed above, will not be pursued here in view of the VST application of this contribution. In this case, indeed, an event of instability—in a very short interval lasting typically 1 h—is very unlikely as confirmed by the typical values of the IP illustrated in the previous section and observed in actual system practice. Observing new FCT values is a very rare event.

For practical purposes, the FCT T y can, therefore, be considered a known constant instead of an RV. This constant value is the prior estimate of the FCT before observing data since no inference can be made out of them; i.e. T y assumes a deterministic value, t y = t*, estimated from previous experiences.

Alternatively, the FCT may be considered as an RV, with the assumed law LN(α y ,β y ), whose parameters are characterized by their prior pdf, since it is highly improbable that new data can change our information about the FCT in a VST interval (or the data are so rarely acquired that they do not change the prior pdf much).

The two cases are equivalent, as will be shown later, so that in the sequel reference will be made to the first one (i.e. a deterministic value, t*, of the FCT T y is assumed).

Let t be the FCT and T x the RV describing the CCT in the given interval under investigation. Two alternative hypotheses can be assumed for the RV describing the load: (1) the load has a constant value (i.e. it is unknown, but constant in time) due to the interval shortness; (2) the load variations with time are considered—adopting a more rigorous approach—not negligible: then, reference to the peak load is made in the interval. As previously shown, the two cases are formally equivalent.

Then, the IP value in that interval is given—by using the usual transformation from an LN cdf into a Gaussian one:

always using

Obviously, the above relation could also be deduced from the general one:

with α y = ln(t); β y = 0 (since T y is deterministic, as is its logarithm Y, so that its only assumed value, ln(t), coincides with its mean value whereas its SD β y is zero).

The consequent IP expression is, therefore, equal to:

which can be handled like that of a Gaussian cdf, as shown in the sequel, in order to perform a Bayes point estimation of the IP.

In the framework of Bayes estimation, let us assume that the mean value α x of X = ln(T x ), T x being the CCT, is an RV, denoted as M (by analogy with the previous section), whereas its SD β x = s is known, as assumed in common practice (see Footnote 3).

Let the prior information about the unknown parameter M be described by a conjugate prior normal distribution with known parameters (m 0, s 0), i.e. M ∽ N(m 0, s 0), so that the prior pdf of M is (bear in mind that M, the mean value of the RV X = ln(T x ), can be negative; see Footnote 4):

By using results in Appendix 2, the posterior pdf of M, after observing data X, is again Gaussian:

with posterior mean and variance given by:

where

X k being a generic log-CCT value of the sample X.

The Bayes point estimate of the IP is, therefore, given by:

which, after changing the variable to \( z = {\frac{m - \tau }{s}} \) and some manipulation (see [19], in the chapter titled “Transmission Expansion Planning: A Methodology to Include Security Criteria and Uncertainties Using Optimization Techniques”), can be shown to be equal to:

This estimator will be used in the final numerical application related to VST stability prediction, in which, on the basis of an adequate dynamic model of the load (and the CCT), the posterior means and variances will be updated at each time step.
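The simplified estimation procedure can be sketched as follows. The code assumes the standard conjugate update for a Gaussian mean with known SD, and the closed form Q° = Ψ((m 1 − ln t)/√(s² + s 1²)), which follows from the fact that a Gaussian variable with a Gaussian-distributed mean is again Gaussian; this is offered as a plausible reading of the elided equations, not as a transcription of them. All numerical inputs are hypothetical, and the closed form is cross-checked against direct numerical integration.

```python
import math

def psi(u):
    """Complementary standard Gaussian cdf, Psi(u) = 1 - Phi(u)."""
    return 0.5 * math.erfc(u / math.sqrt(2.0))

def posterior_of_mean(m0, s0, s, xs):
    """Conjugate update for a Gaussian mean with known SD s:
    prior N(m0, s0), data xs; returns posterior mean and SD (m1, s1)."""
    precision = 1.0 / s0 ** 2 + len(xs) / s ** 2
    m1 = (m0 / s0 ** 2 + sum(xs) / s ** 2) / precision
    return m1, math.sqrt(1.0 / precision)

def bayes_ip_closed_form(m1, s1, s, t):
    """Posterior mean of Psi((M - ln t)/s) for M ~ N(m1, s1) (assumed closed form)."""
    return psi((m1 - math.log(t)) / math.sqrt(s ** 2 + s1 ** 2))

def bayes_ip_numeric(m1, s1, s, t, n=4000):
    """Same quantity by trapezoidal integration over m1 +/- 8 s1."""
    lo, hi = m1 - 8.0 * s1, m1 + 8.0 * s1
    h = (hi - lo) / n
    total = 0.0
    for i in range(n + 1):
        m = lo + i * h
        weight = 0.5 if i in (0, n) else 1.0
        dens = math.exp(-0.5 * ((m - m1) / s1) ** 2) / (s1 * math.sqrt(2.0 * math.pi))
        total += weight * psi((m - math.log(t)) / s) * dens
    return total * h

# Hypothetical inputs: prior on the mean log-CCT, one observed log-CCT, FCT t.
m1, s1 = posterior_of_mean(m0=math.log(0.145), s0=0.05, s=0.10, xs=[-1.90])
q_closed = bayes_ip_closed_form(m1, s1, s=0.10, t=0.10)
q_numeric = bayes_ip_numeric(m1, s1, s=0.10, t=0.10)
print(f"m1 = {m1:.4f}, s1 = {s1:.4f}")
print(f"closed form: {q_closed:.6e}, numerical: {q_numeric:.6e}")
```

With a single observation (n = 1) the posterior mean is simply a precision-weighted average of the prior mean and the observed log-CCT.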

In these applications, typically only one datum of the CCT is observed at each step, i.e. at the generic kth step, the one derived from the measured or forecasted load value L k (so n = 1 will be used in the above relations). This “data” scarcity renders the Bayes estimation more attractive, as discussed above.

Finally, let us briefly examine the second case, mentioned above, with regard to the knowledge of the pdf of the RV T y . Let us assume that it is an RV, and not a constant as above, letting the parameters of the RV T y , i.e. (α y , β y )—denoted as (α, β) in the sequel—be distributed according to their prior pdf, which remain unchanged after every interval, since no new FCT value is obtained. Let us assume, as above, that only the mean of Y, α = α y is unknown with a prior conjugate Gaussian distribution N(μ o, σo). Consequently, the pdf of Y (conditional to α y = α) and the pdf of α are, respectively:

Then, using the total probability theorem for continuous RV [19] or known results from Bayesian estimation theory [22–24] (see also Appendix 2), it can be seen that the marginal pdf is still a Gaussian pdf:

In the light of this fact, it is not difficult to show that (40) still holds with properly re-arranged values of the constants (α x , β x ).

4.4 A Mention of the Classical Estimation of the IP

Here, only a brief account of classical (ML) estimation of Q is given in order to compare it with the one adopted here. Some details can be found in [21, 28]. As stated in Sect. 4.1, let us assume that the following data are available: X = (X 1,.., X n ), where X k = ln(T xk ), k = 1,…, n: i.e. X is a random sample of n elements constituted by the natural logarithms of a CCT sample, and let Y = (Y 1,.., Y m ), where Y k = ln(T yk ), k = 1,…, m: i.e. Y is a random sample of m elements constituted by the natural logarithms of the FCT sample which can be obtained from field or laboratory data on the system protection components, with regard to the assumed kind of fault.

By referring, for easier notation, to estimated quantities with capital letters, the most widely adopted estimators (A x , B x , A y , B y ) of the above four parameters—for the well-known properties of the ML estimation [21, 28]—are given, for the LN variables under study, by:

These estimators indeed maximize, compared with any other function of the data, the LF L[(X,Y)|(α x , α y , β x , β y )].

In practice, these estimators coincide with the sample estimators of the mean values (A x and A y ) and standard deviations (B x and B y ) of the Normal RV X = ln(T x ) and Y = ln(T y ), and show some desirable properties such as consistency. Moreover, the log-mean estimators A x and A y are also unbiased estimators of α x and α y , respectively.

Then, the ML estimator Q* of q is given by:

In [16], the authors analysed the classical point and interval estimates of Q based on an estimator of this type whose properties are not easy to assess.
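For comparison, the classical procedure can be sketched in a few lines. The sample values below are hypothetical, the ML estimators of the SDs use the divisor n (not n − 1), and Q* is obtained by plugging the estimates into the IP expression, which is assumed here to be the form of the elided estimator.

```python
import math

def ml_log_params(times):
    """ML estimates (A, B) of the mean and SD of ln(T) from a sample of times."""
    logs = [math.log(t) for t in times]
    n = len(logs)
    a = sum(logs) / n
    b = math.sqrt(sum((x - a) ** 2 for x in logs) / n)   # ML: divisor n
    return a, b

def psi(u):
    """Complementary standard Gaussian cdf, Psi(u) = 1 - Phi(u)."""
    return 0.5 * math.erfc(u / math.sqrt(2.0))

# Hypothetical samples in seconds (illustrative only).
cct_sample = [0.150, 0.139, 0.162, 0.141, 0.133, 0.155]
fct_sample = [0.098, 0.105, 0.092, 0.110, 0.101]

a_x, b_x = ml_log_params(cct_sample)
a_y, b_y = ml_log_params(fct_sample)

# Plug-in estimator of the IP.
u_ml = (a_x - a_y) / math.hypot(b_x, b_y)
q_ml = psi(u_ml)
print(f"A_x = {a_x:.4f}, B_x = {b_x:.4f}, A_y = {a_y:.4f}, B_y = {b_y:.4f}")
print(f"u* = {u_ml:.3f}, Q* = {q_ml:.3e}")
```

With samples this small the estimated SDs are very unstable, and so is Q*, since the IP is highly sensitive to them.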

As a final remark, it should be clear that, when prior information is available, as in most engineering applications and also in this case, the Bayes estimator definitely performs better than the ML estimators. This is especially evident in on-line estimation, as will be shown later, since very few data can be collected for inference. Typically, indeed, no data are available on FCT if the fault does not occur, and this non-occurrence is of course very likely; only one datum is available on CCT, based on the forecasted load value for the time interval under investigation.

5 Dynamic Bayesian Estimation of Mean CCT and IP for VST Applications

5.1 Introduction

In this section, devoted to VST system operation, the principle of recursive Bayesian estimation is applied for a fast and efficient on-line evaluation of the mean CCT (actually, of its natural logarithm), and thus of the IP, in a dynamic framework. This evaluation exploits:

1. the above-discussed relation between the CCT and the system load L;

2. the probabilistic knowledge of the time evolution of the load which is generally available in VST applications.

With regard to point (1), reference is made here for illustrative purposes to a single-machine system (see Footnote 5), or to a system which is reducible to it. The above-discussed log-linear characteristic is therefore assumed to hold, at any given instant (for a given network topology), between the CCT T x and the load L:

with model coefficients (β0, β1) which are positive known constants, depending on the electrical parameters of the system. As mentioned above, they can be determined by performing a linear regression of the natural logarithms of T x with respect to the load. This is accomplished after computing the CCT values off-line, for the given network and fault, by means of an appropriate system model based on the classical Lyapunov direct methods for transient stability analysis and sensitivity. Therefore, by denoting—as before—the natural logarithm of T x by X and the values of X and L at a given time instant t k by (X k , L k ), respectively, the following relation is assumed:

with

This linear relation between the logarithm of the CCT (LCCT in the following) and the system load is the basis for dynamic estimation. In particular, the proposed Bayes recursive estimation uses known results in dynamic estimation—such as the Kalman filter theory—which are well established under the hypothesis that the series {X k } to be estimated is a Gaussian time series. Such hypothesis is true if the load L(t) is a Gaussian process as above assumed (generalizations to other kinds of load distribution are of course possible without particular problems).

With regard to point (2), namely, the load evolution in time, an adequate load evolution model must be chosen like those adopted for VST load forecasting algorithms.

In particular, we must consider the given time instants t 1, t 2,…, t k ,… of interest for VST operation (typically, the successive hours of a certain time interval in which the network topology is assumed as fixed). Then, the following “dynamic linear model” (DLM) [29], or “autoregressive model”, is often satisfactorily adopted for the stochastic process (L k , k = 1,2,…), which is supposed to generate the load values at times t k according to the “system equation”:

in which {λ k } is a “White Gaussian Noise” (WGN) sequence, i.e. a set of IID Gaussian RVs with mean 0 and known SD, denoted by W. This is formally expressed as:

The sequence is “initiated” by a value L 0 (load value at time t = 0) which is (like all the L k values) an RV, as appropriate in a Bayes framework, with known pdf representing our prior information. It is also assumed to be a Gaussian RV (with known mean \( \mu_{{L_{0} }} \) and known SD \( \sigma_{{L_{0} }} \)), statistically independent of any finite set of the sequence {λ k }.

The above model tries to capture a reasonable “Markovian” dependence between the successive random values L k+1 and L k in a simple way, suitable for VST applications. However, it may be extended without excessive difficulty to cover, e.g. more complex autoregressive models, such as ARIMA processes, or non-linear models [29].

Generally, the values of the load L k are not measurable with precision but their acquisition is subject to forecasting or measurement errors (also taking into account possible time delays or even missing values in the acquisition process). The following “observations equation” is typically adopted for the estimation of the DLM:

where {ν k } is another WGN sequence, with mean 0, and known SD sν, statistically independent of the sequence {λ k } and all the other RVs in the model:

The above assumptions assure that both L k and Y k are Gaussian sequences.
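A short simulation may help to visualize the DLM. Since the displayed equations are not reproduced here, the sketch assumes the simplest random-walk form of the system equation, L k = L k−1 + λ k , together with the observation equation Y k = L k + ν k ; the parameter values (in per unit) and the function name are hypothetical.

```python
import random

def simulate_load_dlm(n_steps, mu_l0, sigma_l0, w_sd, s_sd, seed=0):
    """Simulate an assumed random-walk DLM for the load.

    System equation (assumed form):  L_k = L_{k-1} + lambda_k, lambda_k ~ N(0, w_sd)
    Observation equation:            Y_k = L_k + nu_k,         nu_k ~ N(0, s_sd)
    """
    rng = random.Random(seed)
    load = rng.gauss(mu_l0, sigma_l0)          # initial RV L_0
    loads, observations = [], []
    for _ in range(n_steps):
        load = load + rng.gauss(0.0, w_sd)     # state transition
        loads.append(load)
        observations.append(load + rng.gauss(0.0, s_sd))  # noisy acquisition
    return loads, observations

# 24 hourly steps with hypothetical per-unit parameters.
loads, observations = simulate_load_dlm(24, mu_l0=1.0, sigma_l0=0.05,
                                        w_sd=0.02, s_sd=0.01)
for k, (l_k, y_k) in enumerate(zip(loads[:5], observations[:5]), start=1):
    print(f"k = {k}: L_k = {l_k:.4f}, Y_k = {y_k:.4f}")
```

Because every term is a linear combination of independent Gaussian RVs, both simulated sequences are Gaussian, as stated above.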

Analogously to “Kalman filtering” language [29], the basic DLM equations (46a) and (47a) can be, respectively, regarded, as the “state equation” and the “measurement equation”.

In order to define a similar DLM for the LCCT values, and repeating for convenience equation (45), let us define the sequences:

It is easy to see that these definitions, observing that {ξ k } and {η k } are still WGN sequences, allow the definition of a DLM for the sequence of the LCCT values as follows:

where (ξ k , η k ) are, respectively, the system and measurement noise for the DLM of the LCCT.

The above assumptions for (X 0, ξ k , η k ) are formally expressed as follows:

The SD w of the model and the SD s of the measures, appearing in the above relations, are clearly related to the above SD (W,S) of λ k and ν k by the following, obvious, linear relations (see Footnote 6):

Finally, the initial mean and SD of the LCCT sequence X k , i.e. those of X 0 (first equation of 49b), denoted simply by (μ0,σ0) are obviously expressed in terms of the corresponding initial load L 0 parameters \( (\mu_{{L_{0} }} ,\sigma_{{L_{0} }} ) \) in (46c) as follows:

It is apparent from the second equation (48) and the above hypotheses summarized in (49a) and (49b) that the observed LCCT Z k , being the sum of two independent Gaussian RVs, X k and the measurement noise η k , is still a Gaussian RV whose marginal pdf is easily deducible (it is sufficient to compute its mean value and variance, as shown below). Moreover, if X k were known, the conditional distribution of Z k , η k being a Gaussian RV with zero mean, would be Gaussian with mean equal to X k and known SD s. Formally (see Footnote 7):

Therefore, in the framework adopted here for the estimation process, X k is the unknown (unobservable) mean value of the observable Gaussian RV Z k , with known SD s. In other words, interest here is focused on the estimation of the mean value of the LCCT, so that the results mentioned above (and recalled in Appendix 2) related to the estimation of the unknown mean value of a Gaussian RV may be adopted. For brevity, the term “LCCT” (either as an acronym or in full) will, however, still be used in the sequel instead of the more correct “mean LCCT”.

The X k sequence is “initiated” by a value X 0 which, based on prior information for the load L 0, is again assumed to be a Gaussian RV, as above reported, statistically independent of any finite set of the sequences {ξ k } and {η k }.

5.2 Estimation Methodology

Once the measurements (z 1,z 2,…, z k ) have been assigned until time instant t k , the optimal dynamical state estimate \( \hat{X}_{k} \) of the “true” state X k at time t k —according to the Bayesian approach to estimation—is provided by a posteriori “MSE” minimization:

This can be accomplished, as will be shown, using recursive Bayesian estimation (Appendix 2, see also [28]), which is essentially summarized by the following recursive relationship.

where \( {}^{ - }{\hat{\rm x}}_{k} \) represents the state estimate at instant t k before z k is known, i.e. the a priori estimate at stage k, and G k is a constant obtained, as shown below, from the above “minimum MSE” criterion. The above relation is essentially equivalent to the Kalman filter, but is obtained using the Bayes estimation process, as discussed in [29]: compared with the classic Kalman filter derivation, this method has the advantage of accounting for the random nature of the state X and of allowing any probabilistic statement about this state to be computed. The constant G k corresponds to the well-known “Kalman gain” [28].

The following stages to which the Bayes procedure is applied can be defined:

Stage “0”, or “a priori” Stage: “Stage 0” means the initial stage before any observation is available. Therefore, in this stage the only available information is the a priori characterization for the RV X at time instant t0:

Thus, from a Bayesian point of view with quadratic “Loss function”, the initial optimal estimate is \( \hat{X}_{0} = \mu_{0} . \)

Stage 1: In this stage and in the following stages, according to the Bayes methodology, two kinds of information are available: that available before the measurement (here, the first observation z 1) is acquired, and that available after it. The first (prior) information yields the prior estimate; the second (posterior) information yields the posterior estimate.

Before the first measurement z 1 is performed, the following a priori estimation can be given:

where the prior mean value and variance (Footnote 8) are determined by:

Once the measurement z 1 is known, the aim is to estimate X 1 conditional on z 1, where z 1 denotes the observed realization of the RV Z 1. Z 1 is still a Gaussian RV, with conditional mean (given X 1) equal to X 1 and SD equal to that of η 1, i.e. s. Formally:

and since E[Z 1|X 1] = X 1, it can be deduced that the posterior mean (i.e. the Bayes estimate) of X 1 is:

where

The posterior estimate is used as the prior for the next stage according to recursive Bayesian estimation, as illustrated in Appendix 2. By applying this algorithm recursively, the following result at time t k can be obtained.

Generic Stage k: By applying recursive Bayesian estimation, we can immediately verify (e.g. by induction) that the following relation—clearly appearing as the general case of (59)—holds at time instant t k :

Similarly, the following recursive formulation for the Bayesian estimate at time t k can be obtained:

where

As is well known, this estimate has the notable property of minimizing the posterior MSE at every time instant t k , expressed by:

The recursive procedure yields the pdf of X k at each stage k (unlike in “static” Bayes estimation, the posterior pdf of X changes with time) and also allows Bayes estimation of the IP. This follows directly from the results derived in Sect. 4.3: since (61) is the posterior mean of the LCCT and \( \sigma_{k}^{2} \) its posterior variance, re-arranging Eq. 40 gives the following recursive Bayesian estimate of the IP at time t k :

Confidence intervals, in particular the previously illustrated “upper confidence bounds”, may also be computed for both the LCCT and the IP. In the following numerical application, for the sake of brevity, the estimation procedure is illustrated only for the LCCT sequence, which is Gaussian, so that its results are more easily interpreted. Moreover, the confidence interval assessment is straightforward for the LCCT sequence, whereas for the IP sequence, since no analytical result exists, it can be computed by applying the procedure illustrated in Appendix 3 at each step using the above-mentioned Beta approximation. Numerical simulation results were similar as regards parameter point estimation. A numerical example of the BCI computation is reported in Appendix 3 for the IP only, since the computation is straightforward for the LCCT.
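The stage-by-stage procedure of this section can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes a random-walk state equation X k = X k−1 + ξ k with observation Z k = X k + η k , so that the prior variance at stage k is the previous posterior variance plus w². The function name `recursive_bayes` and its arguments are hypothetical.

```python
def recursive_bayes(z, mu0, var0, w, s):
    """Scalar recursive Bayes (Kalman-type) estimate of a Gaussian state.

    Assumed model (an illustration, not taken verbatim from the chapter):
        X_k = X_{k-1} + xi_k,  xi_k  ~ N(0, w^2)   (state equation)
        Z_k = X_k + eta_k,     eta_k ~ N(0, s^2)   (measurement, s > 0)
    mu0, var0 are the prior mean and variance of X_0 (stage 0).
    Returns posterior means, posterior variances and gains, one per z_k.
    """
    means, variances, gains = [], [], []
    x_hat, var = mu0, var0            # stage 0: estimate is the prior mean
    for z_k in z:
        prior_mean = x_hat            # a priori estimate, before z_k is known
        prior_var = var + w ** 2      # uncertainty grows by the system noise
        gain = prior_var / (prior_var + s ** 2)          # "Kalman gain" G_k
        x_hat = prior_mean + gain * (z_k - prior_mean)   # posterior mean
        var = (1.0 - gain) * prior_var                   # posterior variance
        means.append(x_hat)
        variances.append(var)
        gains.append(gain)
    return means, variances, gains


# Illustrative call with made-up observations of the LCCT.
example_means, example_vars, example_gains = recursive_bayes(
    z=[-1.90, -2.05, -1.85], mu0=-1.9310, var0=0.1741 ** 2, w=0.2, s=0.4
)
```

Because the posterior at each stage is Gaussian with the returned mean and variance, any probabilistic statement about X k (for instance an upper confidence bound) can be computed from these two numbers alone.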

5.3 Concluding Remark

The proposed procedure is based on a relation (CCT–Load) which can be analysed and computed off-line, for the given network, once and for all, so that the on-line estimation shown here does not require the solution of the system model at each iteration. This allows the intervals over which stability is assessed to be shortened and favours reliable and efficient security assessment. A distributed version of the proposed Kalman filtering approach can be applied in the case of large power systems [30].

6 Numerical Application of the Bayes Recursive Dynamic Model

In this section, a simple numerical application—based on typical load and CCT values and simulated patterns of the load process in time—is presented in order to illustrate the on-line estimation of the LCCT in VST operation.

The load process is assumed to follow the DLM model, with (k = 1,2…):

in which

- {λ k } is a “WGN” sequence, WGN(0, W);
- {ν k } is another WGN sequence, WGN(0, S);

the two sequences are statistically independent of each other and the other RV in the model.

For simplicity of notation, the SDs in the above WGN sequences (λ k , ν k ) are, respectively, denoted as (W, S) instead of (σλ, σ ν ) as in the previous section. The lowercase letters (w, s) will be used for the LCCT sequence.

The evolution model of the LCCT corresponding to (65) is, as already deduced:

with the already discussed basic assumptions

and with the SD of the WGN sequences (ξ k , η k ), respectively, equal to w = bW, s = bS.
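A sample path of the load and of the corresponding LCCT can be generated as in the following sketch. It assumes a random-walk load equation and the linear map X = a − bL; the function name `simulate_lcct_dlm` is hypothetical, and Python's `random.gauss` is used here in place of the MATLAB routine mentioned later in the text.

```python
import random


def simulate_lcct_dlm(n, a, b, mu_l0, sd_l0, W, S, seed=0):
    """Simulate n steps of a load DLM and the induced LCCT sequences.

    Assumed (illustrative) load model:
        L_k = L_{k-1} + lambda_k,  lambda_k ~ N(0, W^2)
        Y_k = L_k + nu_k,          nu_k     ~ N(0, S^2)
    with L_0 ~ N(mu_l0, sd_l0^2) and LCCT obtained as X = a - b * L.
    Returns (true loads, measured loads, true LCCT, observed LCCT).
    """
    rng = random.Random(seed)
    load = rng.gauss(mu_l0, sd_l0)          # initial load L_0
    loads, measured, x, z = [], [], [], []
    for _ in range(n):
        load = load + rng.gauss(0.0, W)     # system equation
        y = load + rng.gauss(0.0, S)        # measurement equation
        loads.append(load)
        measured.append(y)
        x.append(a - b * load)              # true LCCT X_k
        z.append(a - b * y)                 # observed LCCT Z_k
    return loads, measured, x, z


loads, measured, x, z = simulate_lcct_dlm(
    n=120, a=1.7242, b=4.1774, mu_l0=0.8750, sd_l0=0.0417, W=0.05, S=0.10
)
```

With this construction the difference Z k − X k equals −b ν k , whose SD is bS = s, and the increments of X k have SD bW = w, in agreement with the relations stated above.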

For the sake of a numerical example, let us assume that the starting value of the system load, L 0, measured in p.u., is a Gaussian RV with mean \( \mu_{{L_{0} }} = 0.8750\;{\text{p}} . {\text{u}} . \) and SD \( \sigma_{{L_{0} }} = 0.0417\;{\text{p}} . {\text{u}} . \)

These values imply that L 0, with probability 0.9973, assumes values in the following interval (0.75–1 p.u.) of amplitude equal to \( 6\sigma_{{L_{0} }} \) around the mean value \( \mu_{{L_{0} }} . \)

Let us also assume that, in the log-linear model X = a − bL, the following values of the regression coefficients have been computed: a = 1.7242, b = 4.1774.

Consequently, the mean and SD of X, i.e. the parameters of the LN pdf of the CCT, are equal to \( \mu_{{X_{0} }} = - 1.9310; \) and \( \sigma_{{X_{0} }} = 0.1741. \) The mean value corresponds to a CCT of about 0.145 s, which was used for the numerical examples illustrated above.
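The figures quoted above can be checked with a few lines of arithmetic. The sketch below only re-derives the stated values from a, b and the load parameters; the variable names are illustrative.

```python
import math

a, b = 1.7242, 4.1774            # regression coefficients of X = a - b*L
mu_l0, sd_l0 = 0.8750, 0.0417    # mean and SD of the initial load (p.u.)

mu_x0 = a - b * mu_l0            # mean of X_0 (log of the CCT)
sd_x0 = b * sd_l0                # SD of X_0
cct_at_mean = math.exp(mu_x0)    # CCT (s) corresponding to the mean of X_0

# Probability that a Gaussian RV lies within 3 SDs of its mean.
p_within_3sd = math.erf(3.0 / math.sqrt(2.0))

interval = (mu_l0 - 3.0 * sd_l0, mu_l0 + 3.0 * sd_l0)
```

With the rounded value 0.0417 the product b·σ gives about 0.1742; the quoted 0.1741 is obtained when the unrounded SD 0.25/6 is used.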

The numerical results, obtained by means of stochastic simulation of the above sequences, will be expressed in relation to the values of the “primary” SD values (S, W) of the load model and the initial load variance, V 0, i.e. the variance of L 0, a value which is chosen by the analyst in a Bayes methodology, on the basis of her/his prior information.

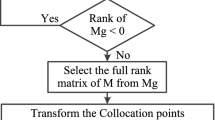

More specifically, the values (0.05 and 0.1) will be used for S and W, and these values will be swapped with each other in the course of the application to obtain at least some basic information on the sensitivity of the results to variations of the model parameters. The initial variance, V 0, of the model (i.e. the variance of X 0, a value which is subjectively chosen by the analyst in a Bayes methodology) will in turn take one of two values, 0 or 1, corresponding to different degrees of belief in the prior information (very strong in the first case, slight in the second). An example of a possible sample path of the load sequence with these parameters (and V 0 = 0), simulated by means of the “normrnd” function of MATLAB®, is illustrated in Fig. 1 over a time interval covering 500 h of system operation (the corresponding series of LCCT values is shown in Fig. 2, darker curve).

Fig. 1 Example of load pattern {L k }, generated by a DLM (65) with parameters: W = 0.05, S = 0.10, and initial variance V 0 = 0

Fig. 2 Example of LCCT pattern {X k } and its estimated values, generated by the DLM of the LCCT corresponding to (65), with parameters: W = 0.05, S = 0.10, and initial variance V 0 = 0

To each load sequence generated by a DLM there corresponds, as discussed above, an LCCT sequence of X k values, also constituting a DLM, which is estimated by the recursive approach through the values X k °. Fig. 2 shows, for a sample path of N = 120 time values, the sequence of LCCT values and of its estimates, obtained with the same values of W, S, V 0 as in Fig. 1.

The efficiency of the estimation method is evaluated using extensive Monte Carlo simulations [31]. In particular, the model performance has been summarized for any given set of time instants (t 1, t 2,…, t N ) by the average squared error (ASE) (Footnote 9):

in which ζ j is the quantity to be estimated (in this case, the LCCT X j at any given instant t j ) and ζ j ° is its estimate. In practice, given the length N of a sequence (here N = 120 is chosen), M simulated sequences have been generated by the same algorithm, and the average of the squared error values obtained has been reported as a sample estimate of the “true” squared error. Extensive simulations were based on M = \( 10^{4} \) replications for each simulated trial; only a significant subset of the relevant results is reported in the following.
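As a sketch of how this summary statistic can be computed, the function below takes the ASE to be the mean of the squared differences between the true values and their estimates over the N instants, and then averages it over the M replications. This reading of the definition is an assumption, and the function names are hypothetical.

```python
def average_squared_error(true_values, estimates):
    """Mean of squared errors over one sequence of N instants."""
    if not true_values or len(true_values) != len(estimates):
        raise ValueError("sequences must be non-empty and of equal length")
    n = len(true_values)
    return sum((t - e) ** 2 for t, e in zip(true_values, estimates)) / n


def monte_carlo_ase(per_sequence_ase):
    """Average the ASE values obtained from M simulated sequences."""
    if not per_sequence_ase:
        raise ValueError("at least one replication is required")
    return sum(per_sequence_ase) / len(per_sequence_ase)
```

For example, `average_squared_error([1, 2, 3], [1, 2, 5])` averages the squared errors 0, 0 and 4 over three instants.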

The ASE obtained using the traditional ML method has also been evaluated; as is well known from estimation theory for the mean of a normal RV, the ML estimator at time k is equal to the sample mean of the k values Z j (j = 1,…, k) observed so far. The precision (as measured by the relative bias and the maximum relative estimation error) of the dynamic Bayes estimator of the LCCT has also been verified. The basic statistics describing the efficiency of the proposed estimates, which are estimated at the end of each simulation case study and reported below, are:

- ASEB: average squared error of the Bayes estimator;
- ASEL: average squared error of the ML estimator;
- ARE = ASEL/ASEB: average relative efficiency of the Bayes estimator compared with the ML estimator.

The ARE ratio, which is in a sense the dynamical counterpart of the classic “relative efficiency” of the Bayes estimator compared with the ML estimator used for a “static” parameter, is a summary measure of the efficiency of the estimation method. The more the ARE value exceeds unity, the more efficient the Bayes estimate is when compared with the ML estimate.
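The comparison between the two estimators can be reproduced in outline with the following self-contained Monte Carlo sketch. It assumes a random-walk LCCT model, uses the running sample mean as the ML estimator, and adopts a small number of replications for speed. All function names and default values are illustrative, and the numbers it produces are not meant to reproduce the tables of this section.

```python
import random


def simulate(n, w, s, mu0, sd0, rng):
    """One path of the assumed model X_k = X_{k-1} + xi_k, Z_k = X_k + eta_k."""
    x = rng.gauss(mu0, sd0)
    xs, zs = [], []
    for _ in range(n):
        x += rng.gauss(0.0, w)
        xs.append(x)
        zs.append(x + rng.gauss(0.0, s))
    return xs, zs


def bayes_estimates(zs, mu0, var0, w, s):
    """Recursive Bayes (Kalman-type) posterior means."""
    x_hat, var, out = mu0, var0, []
    for z in zs:
        prior_var = var + w * w
        gain = prior_var / (prior_var + s * s)
        x_hat += gain * (z - x_hat)
        var = (1.0 - gain) * prior_var
        out.append(x_hat)
    return out


def ml_estimates(zs):
    """ML estimate at time k: sample mean of the first k observations."""
    total, out = 0.0, []
    for k, z in enumerate(zs, start=1):
        total += z
        out.append(total / k)
    return out


def ase(truth, est):
    """Average squared error over one sequence."""
    return sum((t - e) ** 2 for t, e in zip(truth, est)) / len(truth)


def average_relative_efficiency(n=120, m=200, w=4.1774 * 0.05,
                                s=4.1774 * 0.10, mu0=-1.9310,
                                sd0=0.1741, seed=1):
    """Return (ASEB, ASEL, ARE) averaged over m simulated sequences."""
    rng = random.Random(seed)
    ase_b = ase_l = 0.0
    for _ in range(m):
        xs, zs = simulate(n, w, s, mu0, sd0, rng)
        ase_b += ase(xs, bayes_estimates(zs, mu0, sd0 ** 2, w, s))
        ase_l += ase(xs, ml_estimates(zs))
    return ase_b / m, ase_l / m, ase_l / ase_b
```

Under a drifting state the sample mean weights old and recent observations equally, which is why an ARE above unity is to be expected in this setting.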

Many different combinations of values of the model parameters (W, S, V 0) have been adopted to explore the estimation performance. In the following, results are reported for the eight combinations of the triplet (W, S, V 0) indicated below:

1. (0.05, 0.10, 0)
2. (0.05, 0.10, 1)
3. (0.10, 0.05, 0)
4. (0.10, 0.05, 1)
5. (0.025, 0.05, 0)
6. (0.025, 0.05, 1)
7. (0.05, 0.025, 0)
8. (0.05, 0.025, 1)

It is recalled that the above quantities are the SDs describing uncertainty in the system load model (i.e. the equations in L k and Y k from which those for the LCCT are derived). The SDs of the LCCT dynamic model, s and w, are larger than the corresponding load model parameters (W, S) indicated here, since s = bS and w = bW, with b = 4.1774, as reported above.
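For reference, the LCCT noise SDs implied by each combination follow directly from w = bW and s = bS. The short sketch below tabulates them; the variable names are illustrative.

```python
b = 4.1774  # regression coefficient of the log-linear model X = a - b*L

# (W, S, V0) triplets for the eight combinations listed above.
combinations = [
    (0.05, 0.10, 0), (0.05, 0.10, 1),
    (0.10, 0.05, 0), (0.10, 0.05, 1),
    (0.025, 0.05, 0), (0.025, 0.05, 1),
    (0.05, 0.025, 0), (0.05, 0.025, 1),
]

# Corresponding (w, s) pairs of the LCCT model.
lcct_sds = [(b * W, b * S) for W, S, _ in combinations]

for case, ((W, S, V0), (w, s)) in enumerate(zip(combinations, lcct_sds), 1):
    print(f"case {case}: W={W}, S={S}, V0={V0} -> w={w:.4f}, s={s:.4f}")
```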

It is seen that in the first four cases the values (0.05, 0.10) or (0.10, 0.05) are used for (W, S), and every combination is obtained from the previous one by changing the value of the initial variance from V 0 = 0 to V 0 = 1, or by swapping the values of S and W. For instance, in cases 3 and 4, the values of W and S are swapped in relation to cases 1 and 2. In cases 1 and 3, V 0 = 0 was chosen; in cases 2 and 4, V 0 = 1 was chosen.

An analogous method has been used to form combinations (5)–(8), using the values (0.025, 0.05) for (W, S), i.e. half of the values (0.05, 0.10) used in the first four combinations. It is noted that the SD values of the first four combinations may be too high (particularly because of the value 0.1 for S or W), especially for VST applications. Indeed, some unrealistic values have been obtained in the course of the simulations for the LCCT (and, thus, for the IP). They have been reported here only to show that the estimation procedure works quite well even in these unrealistic cases, in which high SD values could imply high estimation errors.

Indeed, it is observed that, as typically occurs, the different choices do not affect the performance of the methodology.

Out of the many numerical simulations which have been performed, the most significant have been reported in the two tables of this section, Table 1 being relevant to the first four combinations, Table 2 relevant to the other four combinations.

For each case, the results of three different simulations (proofs), amongst all the ones performed, are reported. In particular: proof #1 is, for any given sample size, the one with the “worst” results (i.e. when the ARE takes its lowest observed value); proof #3 is the one with the “best” results (i.e. when the ARE takes its highest observed value); proof #2 gives the average results for the ARE, and is thus intermediate between proof #1 and proof #3. So, a total of 12 proofs is shown in each table. For example, in Table 1, case 1.1 (with ARE = 3.4485) precedes case 1.2 (with ARE = 7.2570). The three cases 1.1, 1.2 and 1.3 all refer to the same combination of values of (W, S and V 0), i.e. (0.05, 0.10 and 0), the first of the eight combinations reported above.

One of the results in Table 1 (the case 1.2) is also reported in Fig. 2, already mentioned.