Abstract

This chapter summarises recent developments in personalised medicine in psychiatry, focusing on ADHD and depression and their associated biomarkers and phenotypes. Several neurophysiological subtypes in ADHD and depression and their relation to treatment outcome are reviewed. The first important subgroup is the ‘impaired vigilance’ subgroup, often reported as showing excess frontal theta or alpha activity. This EEG subtype accounts well for ADHD symptoms within the EEG Vigilance model, and these ADHD patients respond well to stimulant medication. In depression, this subtype might be unresponsive to antidepressant treatments, and some studies suggest that these depressive patients might respond better to stimulant medication. Further research should investigate whether sleep problems underlie this impaired vigilance subgroup, which could open a route to more specific treatments for this subgroup. Finally, a slow individual alpha peak frequency is an endophenotype associated with treatment resistance in ADHD and depression. Future studies should incorporate this endophenotype in clinical trials to further investigate the efficacy of new treatments in this substantial subgroup of patients.




1 Introduction

The landscape in psychiatry has recently undergone a dramatic change. Large-scale studies investigating the effects of conventional treatments for ADHD and depression in clinical practice have demonstrated, at the group level, limited efficacy of antidepressant medication and cognitive behavioural therapy in depression (STAR*D: Rush et al. 2006), an overestimation of the effects of cognitive behavioural therapy for depression as a result of publication bias (Cuijpers et al. 2010) and limited long-term effects of stimulant medication, multicomponent behaviour therapy and multimodal treatment in ADHD (NIMH-MTA trial: Molina et al. 2009), although a latent class analysis identified a subgroup of children who showed sustained effects of treatment at 2-year follow-up (Swanson et al. 2007). Furthermore, several large pharmaceutical companies announced that they would ‘…pull the plug on drug discovery in some areas of neuroscience…’ (Miller 2010). This can be considered a worrying development, since there is still much to improve in treatments for psychiatric disorders. These conclusions about limited efficacy and long-term effects are all based on group-averaged data, yet the same data also show that a proportion of patients respond to antidepressants (Rush et al. 2006) and that a subgroup of patients shows long-term effects (Swanson et al. 2007). Therefore, a move beyond data on the average effectiveness of treatments towards identifying the best treatment for any individual (Simon and Perlis 2010), i.e. personalised medicine, is crucial.

The fact that only subgroups of patients respond to treatment raises important questions about the assumption of neurobiological homogeneity within psychiatric disorders, and is instead suggestive of neurobiological heterogeneity. In personalised medicine, the goal is to prescribe the right treatment for the right person at the right time, as opposed to the current ‘trial-and-error’ approach, by using biomarkers or endophenotypes.

From the point of view that biomarkers should be cost-effective, easily applicable and implementable within the routine diagnostic procedure, quantitative EEG (QEEG) seems appropriate. The question remains, however, whether it should be considered a diagnostic or a prognostic technique. Although several EEG biomarkers have shown robust discriminative power regarding neuropsychiatric conditions (for depression also see Olbrich and Arns 2013), it seems unlikely that biomarkers will replace clinical diagnosis in the foreseeable future (Savitz et al. 2013). As another illustration, consider any psychiatric disorder as defined in the DSM-IV or DSM-5 (DSM). Besides a list of behavioural symptoms, there is always a final criterion that the complaints result in ‘impairments in daily life’. This criterion in particular makes it almost impossible to devise a neurobiological test to replace DSM-based diagnosis, since for one person a given level of impulsivity and inattention is considered a blessing (e.g. an artist or CEO), whereas for another person the same level is considered a curse, resulting in a diagnosis only for the latter.

Given the recent development of personalised medicine (in line with the NIMH Strategic Plan on Research Domain Criteria, or RDoC, and also termed precision medicine) and the above limitations of current psychiatric diagnosis and treatments, in this chapter we focus on the prognostic use of QEEG in psychiatry.

This prognostic use of EEG or QEEG has a long history. For example, Satterfield et al. (1971, 1973) were the first to investigate the potential use of EEG in predicting treatment outcome to stimulant medication (main results outlined further on). In 1957, both Fink, Kahn and Oaks (Fink and Kahn 1957) and Roth et al. (1957) investigated EEG predictors of response to ECT in depression. Fink recently summarised these findings eloquently: ‘Slowing of EEG rhythms was necessary for clinical improvement in ECT’ (Fink 2010).

2 Personalised Medicine: Biomarkers and Endophenotypes

Personalised medicine aims to provide the right treatment to the right person at the right time, as opposed to the current ‘trial-and-error’ approach. Genotypic and phenotypic information (or ‘biomarkers’) lies at the basis of this approach. However, 2011 marked the 10th anniversary of the completion of the Human Genome Project, which has sparked numerous large-scale genome-wide association (GWA) and other genotyping studies in psychiatric disorders, yet the variants identified account for only a few percent of the genetic variance (Lander 2011). This suggests that a strictly genetic approach to personalised medicine in psychiatry will not be as promising as initially expected. The notion of personalised medicine implies heterogeneity within a given DSM-IV disorder, rather than homogeneity, at least from a brain-function perspective. Therefore, a single DSM-IV disorder is expected to comprise a variety of ‘endophenotypes’ or ‘biomarkers’, each potentially requiring a different treatment.

The National Institutes of Health defined a biomarker as ‘A characteristic that is objectively measured and evaluated as an indicator of normal biologic processes, pathogenic processes or pharmacologic responses to a therapeutic intervention’ (De Gruttola et al. 2001). The idea behind an endophenotype, by contrast, is that it is an intermediate step between genotype and behaviour and is thus more closely related to the genotype than behaviour alone. Endophenotypes can therefore be investigated to yield more information on the underlying genotype. Given the interest in genetic linkage studies over the last couple of years, this term has become topical again. In parallel, many studies have used terms such as biological marker, trait and biomarker. Here it is important to note that, in line with Gottesman and Gould (2003), an ‘endophenotype’ refers to a marker that also fulfils certain heritability criteria, whereas a ‘biomarker’ simply refers to differences between patient groups, which do not necessarily have a hereditary basis.

Older studies attempting to aid the prescription process with more objective knowledge have studied biological (e.g. neurotransmitter metabolites), psychometric (personality questionnaires), neuropsychological (cognitive function) and psychophysiological (EEG, ERP) techniques (Joyce and Paykel 1989). Biological techniques (such as neurotransmitter metabolites) have to date shown little promise as reliable predictors of treatment response and are not yet recommended for routine clinical practice (Joyce and Paykel 1989; Bruder et al. 1999). Similarly, the clinical utility of ‘behavioural phenotypes’ remains poor, and at present none of these predictors is clinically useful in predicting outcome to the various antidepressant treatments (Simon and Perlis 2010; Cuijpers et al. 2012; Bagby et al. 2002).

There has also been renewed interest in other measures such as pharmacogenomics (Frieling and Tadić 2013) and pharmacometabolomics (Hefner et al. 2013), which are speculated to hold promise for personalised medicine. To date, however, pharmacogenomics has not shown promising results in predicting treatment outcome in psychiatric disorders (Johnson and Gonzalez 2012; Ji et al. 2011; Menke 2013), and pharmacometabolomics is at most considered potentially promising, with few reports on its role in personalised medicine (Johnson and Gonzalez 2012; Quinones and Kaddurah-Daouk 2009).

Recent studies suggest that more direct measures of brain function, such as psychophysiology and neuropsychology, may be more reliable in predicting treatment response in depression (Olbrich and Arns 2013). The underlying idea is that neurophysiological data from the EEG, for example, capture ongoing neuronal activity on the timescale at which it takes place, surpassing other modalities such as neuroimaging techniques like fMRI or PET in temporal resolution. Further, the EEG is not a surrogate marker of neuronal activity (such as the blood-oxygen-level-dependent (BOLD) signal in fMRI or glucose utilisation in PET) but gives insight into actual cortical activity. The EEG can therefore help to define stable endophenotypes incorporating the effects of both nature and nurture. This potentially makes the EEG an ideal candidate biomarker for predicting treatment outcome.

3 EEG as an Endophenotype?

Many twin and family studies have investigated the heritability of the EEG (Vogel 1970) and found that many aspects of the EEG are heritable. In a meta-analysis, van Beijsterveldt and van Baal (2002) demonstrated high heritability for measures such as the alpha peak frequency (81 %), alpha EEG power (79 %), P300 amplitude (60 %) and P300 latency (51 %), all suggesting that EEG and ERP parameters fulfil the definition of an endophenotype. Below, two examples of EEG phenotypes are discussed in more detail.
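To show how twin data can yield heritability percentages like those above, the sketch below implements Falconer's classical approximation, which compares within-pair correlations of identical (MZ) and fraternal (DZ) twins. It illustrates the logic only: it is not necessarily the model-fitting approach used in the studies pooled by van Beijsterveldt and van Baal (2002), and the function name and correlations in the usage line are hypothetical.

```python
def falconer_heritability(r_mz: float, r_dz: float) -> float:
    """Falconer's twin-study approximation: h2 = 2 * (r_MZ - r_DZ).

    MZ twins share (nearly) all of their genes and DZ twins on average half of
    their segregating genes, so doubling the difference between the two
    within-pair correlations estimates the additive genetic share of variance.
    """
    for r in (r_mz, r_dz):
        if not -1.0 <= r <= 1.0:
            raise ValueError("correlations must lie in [-1, 1]")
    h2 = 2.0 * (r_mz - r_dz)
    # Sampling noise can push the raw estimate outside [0, 1]; clamp it.
    return min(max(h2, 0.0), 1.0)


# Hypothetical within-pair correlations for some EEG measure (not study data).
print(falconer_heritability(r_mz=0.85, r_dz=0.45))
```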

3.1 Low-Voltage (Alpha) EEG (LVA) and Alpha Power

LVA is the most well-described EEG phenotype to date and was first described by Adrian and Matthews (1934). The latter author exhibited an EEG in which the alpha rhythm ‘…may not appear at all at the beginning of an examination, and seldom persists for long without intermission…’. The LVA EEG is known to be heritable (autosomal dominant), and the heritability of alpha power is estimated at 79–93 % (Smit et al. 2005, 2010; Anokhin et al. 1992). Low-voltage EEG is a well-described endophenotype in anxiety and alcoholism (Enoch et al. 2003; Ehlers et al. 1999; Bierut et al. 2002). Alpha power and LVA have been associated with a few chromosomal loci (Ehlers et al. 1999; Enoch et al. 2008) but also with single genes: a serotonin receptor gene (HTR3B) (Ducci et al. 2009), the corticotropin-releasing hormone binding protein gene (CRH-BP) (Enoch et al. 2008), a gamma-aminobutyric acid (GABA)-B receptor gene (Winterer et al. 2003) and the BDNF Val66Met polymorphism (Gatt et al. 2008; Zoon et al. 2013).

3.2 Alpha Peak Frequency (APF)

The APF has been shown to be the most reproducible and heritable EEG characteristic (van Beijsterveldt and van Baal 2002; Smit et al. 2005; Posthuma et al. 2001). It has been associated with the COMT gene, with the Val/Val genotype marked by a 1.4 Hz slower APF than the Met/Met group (Bodenmann et al. 2009). However, this finding could not be replicated in two large independent samples in our lab (Veth et al. submitted), casting doubt on this specific association; further studies are required to unravel the genetic underpinnings of this measure.
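The text does not specify how the APF is extracted. A common, simple definition, assumed here, is the frequency with maximal power within an alpha search window of a resting-state power spectrum (for example one estimated with Welch's method from eyes-closed posterior EEG). The function name, the 7–13 Hz window and the toy spectrum are illustrative assumptions; other definitions, such as a spectral centre of gravity, are also in use.

```python
from typing import Sequence, Tuple


def alpha_peak_frequency(
    freqs: Sequence[float],
    power: Sequence[float],
    band: Tuple[float, float] = (7.0, 13.0),  # assumed alpha search window (Hz)
) -> float:
    """Frequency (Hz) of maximal spectral power inside the alpha search window.

    `freqs` and `power` describe a precomputed power spectrum; this function
    only locates the peak and does not estimate the spectrum itself.
    """
    if len(freqs) != len(power):
        raise ValueError("freqs and power must have the same length")
    lo, hi = band
    candidates = [(p, f) for f, p in zip(freqs, power) if lo <= f <= hi]
    if not candidates:
        raise ValueError("no spectral bins inside the alpha search window")
    _, peak_freq = max(candidates)  # ties resolved towards the higher frequency
    return peak_freq


# Toy spectrum on 0.5 Hz bins from 0 to 30 Hz, peaking at 8 Hz (illustrative only).
freqs = [i * 0.5 for i in range(61)]
power = [max(0.0, 1.0 - abs(f - 8.0)) for f in freqs]
print(alpha_peak_frequency(freqs, power))
```

Taking the raw maximum keeps the sketch simple; the estimate can be no finer than the spectral bin width, and with noisy spectra a smoothed or centre-of-gravity estimate may be preferable.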

In summary, the EEG has a long history of identifying biomarkers or endophenotypes that aid the prediction of treatment outcome, and it can be considered a stable, reproducible measure of brain activity with high heritability.

4 ADHD

Considerable research has been carried out into the neurophysiology of ADHD. The first report describing EEG findings in ‘behavior problem children’ dates from 1938 (Jasper et al. 1938), when the authors described a distinct EEG pattern: ‘…There were occasionally two or three waves also in the central or frontal regions at frequencies below what is considered the normal alpha range, that is, at frequencies of 5–6/s…’ (Jasper et al. 1938, p. 644). We now know this to be frontal theta, although the term theta was not introduced until 1944 (Walter and Dovey 1944). Within this group of ‘behavior problem children’ they described a ‘Class 1’ as ‘hyperactive, impulsive and highly variable’, which closely resembles the current diagnosis of ADHD. The most prominent features in this group were the occurrence of slow waves over one or more regions and an ‘abnormal EEG’ in 83 % of the cases. Within ‘Class 1’ they also reported a subgroup which they termed ‘sub-alpha rhythm’, with slow, regular frontal activity that occurred in a similar way to the posterior alpha (‘…In other cases a 5–6/s rhythm would predominate in the anterior head regions simultaneous with an 8–10/s rhythm from the posterior regions…’), already hinting at the heterogeneity of EEG findings that persists to date and is explained further below. Satterfield and colleagues (1971, 1973) were the first to investigate the potential use of EEG in predicting treatment outcome to stimulant medication. They found that children with excess slow wave activity and large-amplitude evoked potentials were more likely to respond to stimulant medication (Satterfield et al. 1971) or, more generally, that abnormal EEG findings could be considered a predictor of positive treatment outcome (Satterfield et al. 1973). Below, the ADHD literature is reviewed in more detail, focusing on the main subtypes for which at least one replication study has been published.

4.1 ‘Excess Theta’ and ‘Theta/Beta Ratio’: Impaired Vigilance Regulation

The most consistent findings reported in the ADHD literature since the introduction of quantitative EEG are increased absolute theta power and an increased theta/beta ratio (TBR). The clearest demonstration of the ‘diagnostic utility’ of this measure comes from Monastra et al. (1999), who showed in a multi-centre study of 482 subjects that, using the TBR at a single electrode location (Cz), children with ADHD could be classified with an accuracy of 88 %. Note that most of these studies treated the EEG as a diagnostic tool for ADHD, which is not automatically compatible with using the EEG for predictive purposes (as part of personalised medicine). The two aims have conflicting implications: the diagnostic use of EEG assumes homogeneity among patients with ADHD, whereas the predictive approach assumes heterogeneity (‘A predictive biomarker is a baseline characteristic that categorises patients by their likelihood for response to a particular treatment’ (Savitz et al. 2013)).
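The TBR at a single site such as Cz is simply theta power divided by beta power. As an illustration, the sketch below computes it from a precomputed power spectrum, together with absolute and relative theta power (relative theta being the measure Boutros et al. (2005) found more discriminative). The band limits (theta 4–8 Hz, beta 13–21 Hz, total 1–30 Hz) and all names are assumptions for illustration; published studies use slightly different ranges.

```python
from typing import Dict, Sequence, Tuple

# Assumed band limits in Hz (illustrative; studies differ).
THETA: Tuple[float, float] = (4.0, 8.0)
BETA: Tuple[float, float] = (13.0, 21.0)
TOTAL: Tuple[float, float] = (1.0, 30.0)


def band_power(freqs: Sequence[float], power: Sequence[float],
               band: Tuple[float, float]) -> float:
    """Sum of spectral power over bins with lo <= f < hi.

    Multiplying by the bin width would turn this into an integral; the width
    cancels in the ratios below, so absolute values here are in bin units.
    """
    lo, hi = band
    return sum(p for f, p in zip(freqs, power) if lo <= f < hi)


def theta_metrics(freqs: Sequence[float], power: Sequence[float]) -> Dict[str, float]:
    """Absolute theta, relative theta and theta/beta ratio for one electrode."""
    theta = band_power(freqs, power, THETA)
    beta = band_power(freqs, power, BETA)
    total = band_power(freqs, power, TOTAL)
    if beta <= 0.0 or total <= 0.0:
        raise ValueError("beta and total power must be positive")
    return {
        "abs_theta": theta,
        "rel_theta": theta / total,  # theta as a fraction of 1-30 Hz power
        "tbr": theta / beta,
    }


# Flat toy spectrum on 0.5 Hz bins: every bin has unit power.
freqs = [i * 0.5 for i in range(61)]
print(theta_metrics(freqs, [1.0] * len(freqs)))
```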

Three meta-analyses have investigated the diagnostic value of theta power and the TBR in ADHD compared to healthy controls. Boutros and colleagues (2005) concluded that increased theta power in ADHD is a sufficiently robust finding to warrant further development as a diagnostic test for ADHD, with data suggesting that relative theta power is an even stronger discriminator than absolute theta power. In 2006, Snyder and Hall conducted a meta-analysis specifically investigating the TBR, theta and beta, and concluded that an elevated TBR is ‘…a commonly observed trait in ADHD relative to controls… by statistical extrapolation, the effect size of 3.08 predicts a sensitivity and specificity of 94 %…’ (Snyder and Hall 2006, p. 453). However, this extrapolation from an effect size (ES) to sensitivity and specificity is problematic [see Arns et al. (2013a, b) for details], and the extrapolated values from Snyder and Hall (2006) should therefore not be considered accurate. A more recent meta-analysis incorporating newer studies refines these findings and shows a clear ‘time effect’: earlier studies demonstrated the largest ES and more recent studies the smallest ES between ADHD and non-ADHD groups (Arns et al. 2013a). This chronological effect was mostly related to an increasing TBR in the non-ADHD control groups, which the authors interpreted as possibly related to the decreasing sleep duration observed in non-ADHD children over time (Arns et al. 2013a, b; Iglowstein et al. 2003; Dollman et al. 2007), a decline also found in a meta-analysis covering the last 100 years (Matricciani et al. 2011). Chronically reduced sleep duration amounts to sleep restriction, which results in increased fatigue and increased theta [see Arns and Kenemans (2012) for a review].
Nevertheless, it was concluded that a substantial subgroup of ADHD patients (estimated at 26–38 %) is characterised by an increased TBR, even in recent studies (Arns et al. 2013a, b). Excess theta and an elevated TBR are also favourable predictors of treatment outcome to stimulant medication (Arns et al. 2008; Clarke et al. 2002; Suffin and Emory 1995) and neurofeedback (Arns et al. 2012a; Monastra et al. 2002), demonstrating the predictive value of this measure.
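The ‘94 %’ figure quoted from Snyder and Hall (2006) can be reproduced under a simple idealisation: two normally distributed groups with equal variance whose means differ by Cohen's d, with the cut-off placed midway between them, so that sensitivity and specificity both equal Φ(d/2). The sketch below is an illustration of that idealisation, not necessarily their exact calculation or the argument made in Arns et al. (2013a, b); the function names are hypothetical. Its assumptions of two homogeneous, normal groups sit uneasily with the finding that only a subgroup of ADHD patients shows an elevated TBR, which is one reason such extrapolations warrant caution.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def implied_sensitivity_specificity(d: float) -> float:
    """Sensitivity (equal to specificity) implied by Cohen's d in an idealised model.

    Assumes both groups are normal with equal variance and the cut-off lies
    midway between the group means, i.e. d/2 standard deviations from each mean.
    """
    return normal_cdf(d / 2.0)


print(round(implied_sensitivity_specificity(3.08), 3))  # 0.938, i.e. about 94 %
```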

Conceptually, the EEG subtype with excess theta and/or an enhanced TBR in ADHD is consistent with the EEG Vigilance model originally developed by Bente (1964) and further developed by Hegerl et al. (2012). The model also overlaps with what is sometimes referred to as ‘underarousal’ and with the EEG cluster described as ‘cortical hypoarousal’ (Clarke et al. 2011).

The EEG Vigilance framework can be regarded as an extension of the sleep stage model, focusing on the eyes-closed resting state and the transitions from relaxed wakefulness through drowsiness to sleep onset, as seen in stage N2. The EEG allows different functional brain states to be classified on a time scale of, e.g., 1-s epochs, reflecting decreasing levels of vigilance from W to A1, A2, A3, B1, B2 and B3. Stage W reflects a desynchronised, low-amplitude EEG, which occurs, e.g., during mental arithmetic. The three A stages are characterised by alpha activity that is dominant posteriorly (A1), equally distributed (A2) or shifted anteriorly (A3, alpha anteriorisation), whereas the B stages reflect the lowest vigilance stages, characterised by alpha drop-out or a low-voltage EEG with slow horizontal eye movements (B1), followed by increased frontal theta and delta activity (B2/3). These vigilance stages are followed by sleep onset, with the occurrence of K-complexes and sleep spindles marking the transition to stage C in the vigilance model, or classically to stage N2 sleep.

The sequence of EEG vigilance stages assessed in an individual reflects that person's ability to relax or fall asleep. Owing to its high temporal resolution (1-s epochs), the approach is sensitive to brief drops in vigilance, in contrast to traditional sleep medicine measures. Using a clustering method, three types of EEG vigilance regulation have been defined in a group of healthy subjects: a stable type, a slowly declining type and an unstable type (Olbrich et al. 2012). Stable or rigid EEG Vigilance regulation means that an individual remains in higher vigilance stages for an extended time and does not exhibit lower vigilance stages. This appears as rigid parietal/occipital alpha (stage A1), which is often seen in depression (Olbrich et al. 2012; Ulrich et al. 1990; Hegerl et al. 2012). Unstable EEG Vigilance regulation, on the other hand, means that an individual very quickly drops to lower EEG Vigilance stages, displaying characteristic drowsiness EEG patterns such as frontal theta (stage B2/3), and switches more often between EEG Vigilance stages. This labile or unstable pattern is often seen in ADHD (Sander et al. 2010). The often-reported ‘excess theta’ in ADHD mentioned above should thus be viewed as a predominance of the low-vigilance B2/3 stages.
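The regulation types above are derived from the sequence of per-epoch stage labels. Purely as an illustration, the sketch below computes a few descriptive features of such a sequence (mean vigilance level, stage switches per minute, proportion of B2/3 epochs, time to the first drop into a B stage) that would tend to separate a rigid A1 pattern from an unstable one. The stage labels are assumed to come from an existing classifier, and the ordinal coding, feature set and names are hypothetical; this is not the clustering procedure of Olbrich et al. (2012).

```python
from typing import Dict, List

# Ordinal coding of EEG Vigilance stages, highest (W) to lowest (C), in the
# order given in the text; B2 and B3 are pooled here as "B2/3".
STAGES: List[str] = ["W", "A1", "A2", "A3", "B1", "B2/3", "C"]
LEVEL: Dict[str, int] = {s: len(STAGES) - 1 - i for i, s in enumerate(STAGES)}  # W=6 ... C=0


def vigilance_summary(stages: List[str], epoch_s: float = 1.0) -> Dict[str, float]:
    """Descriptive features of a sequence of per-epoch vigilance stage labels."""
    if not stages:
        raise ValueError("empty stage sequence")
    levels = [LEVEL[s] for s in stages]
    minutes = len(stages) * epoch_s / 60.0
    # Count transitions between consecutive epochs with different labels.
    switches = sum(1 for a, b in zip(stages, stages[1:]) if a != b)
    # First epoch in a B stage or in C (i.e. at or below B1).
    first_low = next((i for i, lv in enumerate(levels) if lv <= LEVEL["B1"]), None)
    return {
        "mean_level": sum(levels) / len(levels),
        "switches_per_min": switches / minutes,
        "frac_B23": stages.count("B2/3") / len(stages),
        "sec_to_first_B": first_low * epoch_s if first_low is not None else float("inf"),
    }


rigid = ["A1"] * 60              # one minute of stable posterior alpha
unstable = ["A1", "B2/3"] * 30   # one minute alternating with frontal theta
print(vigilance_summary(rigid))
print(vigilance_summary(unstable))
```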

A summary of this model is depicted in Fig. 1. Within this model, unstable vigilance regulation explains the cognitive deficits that characterise ADHD and Attention Deficit Disorder (ADD), such as impaired sustained attention, while the hyperactivity aspect of ADHD is explained as vigilance-stabilising behaviour, i.e. an attempt to upregulate vigilance.

To summarise, in the majority of ADHD patients an EEG pattern is observed that is indicative of reduced and unstable vigilance regulation (i.e. the same EEG signature a healthy but fatigued person might show at the end of the day). Interpreting increased theta activity as a pattern of decreased tonic arousal suggests that the hyperactive behaviour of ADHD patients can be seen as an autostabilisation mechanism: externalising, risk-taking and sensation-seeking behaviour that increases vigilance. Further, decreased vigilance in a subgroup of patients with ADHD explains the positive effects of stimulant medication: vigilance is shifted to a high and stable level without the need for externalising behaviour. Interestingly, a similar pattern of reduced EEG vigilance, along with sensation-seeking behaviour, is sometimes found in manic patients (Small et al. 1997). Again, this subtype with reduced vigilance seems responsive to stimulant medication (Schoenknecht et al. 2010).

Recent reviews increasingly focus on sleep problems as the underlying aetiology of ADHD, at least in a subgroup of patients (Arns and Kenemans 2012; Miano et al. 2012). A majority of ADHD patients can be characterised by sleep onset insomnia caused by a delayed circadian phase (van der Heijden et al. 2005; Van Veen et al. 2010). Although this cannot be considered a full-blown sleep disorder, chronic sleep onset insomnia can result in chronic sleep restriction, which is known to impair vigilance, attention and cognition (Van Dongen et al. 2003; Axelsson et al. 2008; Belenky et al. 2003). This is further supported by a recent meta-analysis incorporating data from 35,936 healthy children, which reported that sleep duration is positively correlated with school performance and executive function, and negatively correlated with internalising and externalising behaviour problems (Astill et al. 2012). Furthermore, symptoms associated with ADHD can be induced in healthy children by sleep restriction (Fallone et al. 2001; Golan et al. 2004), and a week of sleep restriction has also been shown to increase theta EEG power (effect size = 0.53; Beebe et al. 2010). These studies demonstrate that sustained sleep restriction results in impaired vigilance regulation (excess theta) as well as impaired attention, suggesting an overlap between ADHD symptoms and sleep disruption. Chronobiological treatments that normalise this delayed circadian phase, e.g. early morning bright light (Rybak et al. 2006) and sustained melatonin treatment (Hoebert et al. 2009), have been shown to normalise sleep onset insomnia and also to improve ADHD symptoms. Therefore, this subgroup of ADHD patients with excess theta and an elevated TBR is considered a group with impaired vigilance regulation caused by a delayed circadian phase (also see Fig. 1, Arns and Kenemans (2012) for a review, and Arns et al. (2013a, b)).
This explains why vigilance-stabilising treatments such as stimulant medication have been shown to be particularly effective in this subgroup (Arns et al. 2008; Clarke et al. 2002; Suffin and Emory 1995), whereas sustained chronobiological treatment (resulting in long-term normalisation) and neurofeedback are also expected to be efficacious (Arns and Kenemans 2012).

Thus, conceptually, this excess theta subgroup can be interpreted as a subgroup with impaired vigilance regulation, likely caused by sleep restriction and/or other factors systematically influencing sleep duration.

4.2 The ‘Slow Individual Alpha Peak Frequency’ Subgroup

As noted above, the early study by Jasper et al. (1938) in behaviour problem children identified a cluster that most closely resembles what we would now call ADHD. Within this ‘Class 1’ cluster they also reported an additional subgroup, which they termed ‘sub-alpha rhythm’, with slow, regular frontal activity that occurred in a similar way to the posterior alpha (‘…In other cases a 5–6/s rhythm would predominate in the anterior head regions simultaneous with an 8–10/s rhythm from the posterior regions…’). Nowadays we would consider this a slowed alpha peak frequency (APF). Interestingly, since the introduction of quantitative EEG in the 1960s almost no studies have reported on the APF in ADHD, whereas older studies consistently reported on this measure (Arns 2012). Since ADHD children with a slow APF have been shown not to respond well to stimulant medication (Arns et al. 2008), whereas ADHD children with excess theta do (Clarke et al. 2002; Suffin and Emory 1995), it is crucial to dissociate these two EEG subtypes, which tend to overlap in the EEG frequency domain. As pointed out by Arns et al. (2008) and further demonstrated by Lansbergen et al. (2011), the often-reported increased TBR in ADHD actually combines the excess frontal theta group (interpreted as the ‘impaired vigilance regulation subgroup’) and a slow APF subgroup, in whom the alpha frequency has slowed to such a degree that it overlaps with the theta band (4–8 Hz). Therefore, in addition to the limitations of the TBR described above, this is a further reason why the TBR is probably not a specific measure: it incorporates different subtypes of ADHD. From a personalised medicine perspective this is not optimal, since these subtypes respond differentially to medication and are hypothesised to have different underlying pathophysiologies.
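The overlap argument can be made concrete with a toy model. Below, two synthetic spectra share the same 1/f background and differ only in where the alpha peak sits (10 Hz versus 7.5 Hz); no ‘excess theta’ is added to either. The spectral shape, the 1 Hz peak width, the band limits (theta 4–8 Hz, beta 13–21 Hz) and the function names are arbitrary assumptions chosen for illustration, not parameters from Arns et al. (2008) or Lansbergen et al. (2011).

```python
import math
from typing import List, Tuple


def toy_spectrum(alpha_peak: float, step: float = 0.5) -> Tuple[List[float], List[float]]:
    """Toy resting spectrum: 1/f background plus a Gaussian alpha peak (SD 1 Hz)."""
    freqs = [1.0 + i * step for i in range(int(29.0 / step) + 1)]  # 1-30 Hz
    power = [1.0 / f + math.exp(-((f - alpha_peak) ** 2) / 2.0) for f in freqs]
    return freqs, power


def theta_beta_ratio(freqs: List[float], power: List[float]) -> float:
    """Theta (4-8 Hz) power divided by beta (13-21 Hz) power, summed over bins."""
    theta = sum(p for f, p in zip(freqs, power) if 4.0 <= f < 8.0)
    beta = sum(p for f, p in zip(freqs, power) if 13.0 <= f < 21.0)
    return theta / beta


# Same background, no added theta: only the alpha peak frequency differs.
for peak in (10.0, 7.5):
    print(f"APF {peak} Hz -> TBR {theta_beta_ratio(*toy_spectrum(peak)):.2f}")
```

Because the slowed alpha peak falls inside the theta band, the TBR of the 7.5 Hz spectrum is several times higher even though nothing in it represents drowsiness-related frontal theta, which is why a high TBR alone cannot tell the two subtypes apart.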

Several studies have now demonstrated that a slow APF is associated with non-response to several treatments, such as stimulant medication in ADHD (Arns et al. 2008), rTMS in depression (Arns et al. 2010; Arns 2012), antidepressant medication (Ulrich et al. 1984), neurofeedback in ADHD with respect to comorbid depressive symptoms (Arns et al. 2012a) and antipsychotic medication (Itil et al. 1975). Since the alpha peak frequency has a heritability of 81 % (van Beijsterveldt and van Baal 2002), a slow APF might be considered a non-specific predictor, or even an endophenotype, for non-response to treatment across a range of disorders. This subgroup comprises a substantial proportion of patients (28 % in ADHD: Arns et al. (2008); 17 % in depression: Arns et al. (2012b)) for whom no effective treatment is currently known [see Arns (2012) for a review].

4.3 Paroxysmal EEG Abnormalities and Epileptiform Discharges

Older studies preceding the era of quantitative EEG mainly employed visual inspection of the EEG, such as the identification of epileptiform or paroxysmal activity, and estimated the incidence of paroxysmal patterns in ADHD (or former diagnostic classes of ADHD) as ranging from 12–15 % (Satterfield et al. 1973; Capute et al. 1968; Hemmer et al. 2001) to approximately 30 % (Hughes et al. 2000), which is high compared to 1–2 % in normal populations (Goodwin 1947; Richter et al. 1971). Note that these individuals did not present with convulsions and thus did not have a clinical diagnosis of epilepsy, but simply exhibited a paroxysmal EEG without a history of seizures. In autism, a prevalence of 46–86 % for paroxysmal or epileptiform EEG abnormalities has been reported (Parmeggiani et al. 2010; Yasuhara 2010); hence, the findings of older research on ‘abnormal’ EEG might have been partly confounded by a subgroup with autism, since autism was not included as a diagnostic entity in the DSM until the release of the DSM-III in 1980.

The exact implications of paroxysmal and epileptiform EEG activity in subjects without a history of clinical seizures are not well understood, and it is good clinical practice not to treat these subjects with anticonvulsant medication (‘Treat the patient, not the EEG’). In a very large study of healthy jet fighter pilots, Lennox-Buchthal et al. (1960) classified 6.4 % as ‘marked and paroxysmally abnormal’. Moreover, pilots with such EEGs were three times more likely to be involved in a plane crash due to pilot error, indicating that even though these people are not ‘epileptic’, their brains are ‘not normal’; the presence of a paroxysmal EEG remains an exclusion criterion for becoming a pilot to this day. Interestingly, several studies found that ADHD patients (Itil and Rizzo 1967; Davids et al. 2006; Silva et al. 1996) and patients with autism (Yasuhara 2010) do respond to anticonvulsant medication. The reported effect size for carbamazepine in the treatment of ADHD was 1.01, quite similar to the effect size for stimulant medication (Wood et al. 2007). Furthermore, some studies have demonstrated that interictal and/or subclinical spike activity has detrimental effects on neuropsychological, neurobehavioural, neurodevelopmental, learning and/or autonomic functions, and some children with subclinical spike patterns respond to anticonvulsant medication both with a reduction of spikes in the EEG and with improvements in memory and attention (Mintz et al. 2009). As in other psychiatric disorders such as panic disorder (Adamaszek et al. 2011), these findings suggest the existence of a subgroup with a paroxysmal EEG who might respond better to anticonvulsant medication; however, further research is required to substantiate this.

4.4 Excess Beta Subgroup

There is clear evidence for a subgroup of ADHD patients characterised by excess beta or beta-spindles, making up 13–20 % of patients (Chabot and Serfontein 1996; Clarke et al. 2001a). Several studies demonstrated that these patients do respond to stimulant medication (Clarke et al. 2003; Chabot et al. 1999; Hermens et al. 2005). Relatively little is known about this excess beta group and about the occurrence of beta-spindles. The latter are generally observed as a grapho-element indicating sleep onset (AASM Manual) and can also be found in patients with mania (Small et al. 1997). They also occur as a medication effect of vigilance-decreasing agents such as benzodiazepines (Blume 2006) or barbiturates (Schwartz et al. 1971). Furthermore, Clarke et al. (2001b) reported that this ADHD subgroup was more prone to moody behaviour and temper tantrums, and Barry et al. (2009) reported that the ERPs of this subgroup differed substantially from those of ADHD children without excess beta, suggesting a different dysfunctional network underlying their complaints. Interestingly, the ERPs of the excess beta subgroup appear more normal than those of the ADHD subgroup without excess beta.

Originally, Gibbs & Gibbs in 1950 (see Niedermeyer and Lopes da Silva 1993) distinguished two types of predominantly fast EEG: moderately increased beta, which they termed ‘F1’, and markedly increased beta, which they termed ‘F2’. Records of the F1 type were considered ‘abnormal’ until the 1940s, after which Gibbs & Gibbs considered only the F2 type ‘abnormal’. Today, electroencephalographers take a more lenient view of fast tracings (Niedermeyer and Lopes da Silva 1993, p. 161). At present, the only EEG pattern in the beta range considered abnormal is ‘paroxysmal fast activity’ or the ‘beta band seizure pattern’, which most often occurs during non-REM sleep but also during waking (Stern and Engel 2004). This pattern is quite rare (4 in 3,000) and is most often seen in Lennox–Gastaut syndrome (Halasz et al. 2004). Vogel (1970) also described an EEG pattern of ‘occipital slow beta waves’, also termed ‘quick alpha variants 16–19/s’, which responds to eye opening in the same way as alpha and has a similar topographic distribution. This pattern was found in only 0.6 % of a large population of healthy air force applicants; given its very low prevalence and occipital dominance, it is unlikely to explain the ‘excess beta’ or ‘beta spindling’ subtype observed in ADHD. Therefore, the ADHD subgroup with excess beta or beta spindling (assuming paroxysmal fast activity has been excluded) can neurologically be considered a ‘normal variant’. Neurophysiologically, however, it can be considered a separate subgroup of ADHD that does respond to stimulant medication (Chabot et al. 1999; Hermens et al. 2005). The ‘beta-spindle’ group probably also represents a subgroup with impaired vigilance (see above), as beta-spindles are common signs of sleep onset.
More research is required to investigate the exact underlying neurophysiology of this subtype and whether other treatments could more specifically target excess beta or beta spindling.

5 Depression

Lemere published the first description of EEG findings related to depression in 1936 (Lemere 1936). After inspecting the EEGs of healthy people and several psychiatric patients, he concluded: ‘…The ability to produce “good” alpha waves seems to be a neurophysiological characteristic which is related in some way to the affective capacity of the individual…’. Increased alpha power is still considered a hallmark of depression (see e.g. Itil 1983), and recent studies suggest that this endophenotype mediates the association between the BDNF Val66Met polymorphism and trait depression (Gatt et al. 2008; Zoon et al. 2013).

One of the first attempts to use the EEG as a prognostic tool in depression dates from 1957: Roth et al. (1957) investigated barbiturate-induced EEG changes (an increase in delta) and found that these predicted, to some degree, the long-term outcome (3–6 months) of ECT in depression. Many studies have demonstrated that greater ‘induced’ delta EEG power predicts a favourable outcome to ECT, whether measured as ictal EEG power (Nobler et al. 2000), ECT-induced delta (Fink and Kahn 1957; Fink 2010; Volavka et al. 1972) or barbiturate-induced delta (Roth et al. 1957). As Max Fink concluded in a recent review, ‘slowing of EEG rhythms was necessary for clinical improvement in ECT’ (Fink 2010).

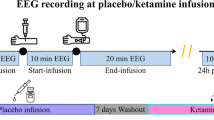

5.1 Metabolic Activity in the Anterior Cingulate (ACC) and Other Structures

Mayberg et al. (1997) reported that increased pre-treatment resting glucose metabolism in the rostral anterior cingulate (BA 24a/b) predicted a favourable response to antidepressants. Two earlier studies had already reported a similar relation between increased ACC metabolism and response to sleep deprivation (Ebert et al. 1994; Wu et al. 1992), which later studies confirmed (Smith et al. 1999; Wu et al. 1999). These findings sparked considerable research interest in the link between the ACC and treatment response in depression, and this is now the best-investigated finding in treatment prediction in depression. To integrate these findings, a recent meta-analysis included 23 studies (Pizzagalli 2011). Nineteen studies reported that responders to antidepressant treatments showed increased pre-treatment ACC activity, whereas the remaining four found the opposite. The overall effect size was large (ES = 0.918). The relationship between increased ACC activity and a favourable antidepressant response was found consistently across treatments (SSRI, TCA, ketamine, rTMS and sleep deprivation) and imaging modalities, and did not depend on medication status at baseline (Pizzagalli 2011). No clear relationship between activity in the anterior cingulate and specific neurotransmitter systems has been reported (Mulert et al. 2007), and treatment-resistant depressive patients have also been shown to respond to deep brain stimulation of ACC areas (see Hamani et al. 2011 for a review). Together, these findings suggest that ACC activity is a reliable biomarker of antidepressant treatment response in general.

Most studies have used PET, SPECT or fMRI to assess activity in the ACC. However, LORETA (low resolution brain electromagnetic tomography), an algorithm for computing intracortical source estimates from the EEG, also makes it possible to assess ACC activity from scalp-EEG time series (Pascual-Marqui et al. 1994). Increased theta in the ACC, as assessed with LORETA, has been shown to reflect increased metabolism in the ACC (Pizzagalli et al. 2003), and several studies have successfully used this technique to probe ACC activity (reviewed in Pizzagalli 2011).

5.2 EEG Markers in Depression

In QEEG research, various pre-treatment differences in EEG measures have been reported to be associated with improved antidepressant treatment outcomes. The following summarises findings that have been replicated in at least one study and relate to baseline measures predicting treatment outcome. The goal should be to identify biomarkers that not only predict treatment response validly and effectively but can also be linked to the underlying pathomechanisms of depression. Only a marker that fits the prevailing view of pathogenesis, or even widens our understanding of it, will be trusted in routine clinical diagnostics. EEG research on predictive biomarkers therefore has to bridge the gap between the analysis of electrophysiological time series on the one hand and psychopathology, behaviour and the clinical picture on the other.

Decreased theta has consistently been associated with a favourable outcome to different antidepressant treatments (Arns et al. 2012b; Iosifescu et al. 2009; Olbrich and Arns 2013; with the exception of Cook et al. 1999), as has lower delta power (Knott et al. 2000). Given that most LORETA studies found an association between increased theta in the ACC and treatment response, these findings appear contradictory. However, Knott et al. (2000) and Arns et al. (2012b) analysed EEG activity across all sites, and Iosifescu et al. (2009) only looked at Fp1, Fpz and Fp2. Since frontal-midline theta has been localised to the medial pre-frontal cortex and anterior cingulate (Ishii et al. 1999; Asada et al. 1999), one would expect the increased theta to be reflected only at frontal-midline sites. This was indeed reported by Spronk and colleagues, who found increased theta at Fz to be associated with a favourable treatment outcome (Spronk et al. 2011). These findings should therefore be interpreted as follows: increased generalised slow EEG power predicts non-response, whereas increased ACC theta or frontal-midline theta predicts response. The two reflect different types of theta activity: ACC theta, also referred to as phasic theta, is expressed as frontal-midline theta and relates to information processing, whereas tonic theta is widespread over frontal regions and relates to drowsiness or unstable vigilance regulation (for a review of the different roles of tonic and phasic theta, see Klimesch 1999).
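The dilution argument above can be illustrated with a toy calculation. This is only a sketch: the 13-electrode list and all power values are hypothetical, and real analyses would use measured spectral power. It shows that a theta increase confined to Fz is seen in full at that electrode, but shrinks to 1/N of its size when theta is averaged over all N recorded sites.

```python
from statistics import mean

# Hypothetical subset of 10-20 system electrodes; power values are arbitrary units.
ELECTRODES = ["Fp1", "Fp2", "F3", "Fz", "F4", "C3", "Cz", "C4", "P3", "Pz", "P4", "O1", "O2"]


def generalised_theta(theta_by_site: dict[str, float]) -> float:
    """Global measure: theta power averaged over every recorded electrode."""
    return mean(theta_by_site.values())


def frontal_midline_theta(theta_by_site: dict[str, float], site: str = "Fz") -> float:
    """Focal measure: theta power at a single frontal-midline electrode."""
    return theta_by_site[site]


baseline = {site: 10.0 for site in ELECTRODES}
focal_increase = dict(baseline, Fz=baseline["Fz"] + 6.0)  # theta rises at Fz only

fz_change = frontal_midline_theta(focal_increase) - frontal_midline_theta(baseline)
global_change = generalised_theta(focal_increase) - generalised_theta(baseline)
print(fz_change)                # 6.0
print(round(global_change, 3))  # 0.462
```

With 13 sites, a 6-unit increase at Fz raises the global mean by only 6/13 of a unit, which is why a focal frontal-midline effect can be invisible in an all-site average.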

Hegerl et al. (2012) and Olbrich et al. (2012) demonstrated a clear difference in EEG vigilance regulation between patients with depression and matched controls. Depressed patients exhibited hyperstable vigilance regulation, expressed as a higher proportion of A1 stages (parietal alpha) and a lower proportion of B2/3 and C stages (frontal theta). This is consistent with a study by Ulrich and Fürstenberg (1999) and with other studies demonstrating increased parietal alpha (Itil 1983; Pollock and Schneider 1990), as first observed by Lemere (1936). Vogel (1970) described a pattern of ‘Monotonous High Alpha Waves’ with simple autosomal dominant inheritance. Vogel’s description of this pattern (‘Kontinuität’, i.e. continuity) closely resembles the ‘hyperrigid’ or ‘hyperstable’ EEG vigilance described by Hegerl and Hensch (2012), suggesting that it indeed reflects a trait-like form of EEG vigilance regulation.

Furthermore, increased pre-treatment alpha has been associated with improved outcome to antidepressant medication (Ulrich et al. 1984; Bruder et al. 2001; Tenke et al. 2011), and most antidepressants also decrease alpha activity (see Itil 1983 for an overview). The subgroup of non-responders characterised by frontal theta might therefore be interpreted as a subgroup with decreased EEG vigilance regulation (Hegerl and Hensch 2012; Olbrich and Arns 2013), as opposed to the typically reported increased or hyperstable vigilance regulation (‘hyperstable’ parietal alpha). Given that patients with decreased EEG vigilance regulation respond better to stimulant medication (mania: Hegerl et al. 2010; Bschor et al. 2001; Schoenknecht et al. 2010; ADHD: Arns et al. 2008; Sander et al. 2010), it is tempting to speculate that this subgroup of non-responders might respond better to stimulant medication or other vigilance-stabilising treatments. A recent Cochrane review did report significant improvements in depressive and fatigue symptoms with short-term stimulant medication as add-on therapy in depressed patients, but the clinical significance remained unclear and very few controlled studies could be included, limiting the generality of this finding (Candy et al. 2008). However, Suffin and Emory (1995) successfully applied stimulant medication in a subgroup of depressed patients with excess theta, a finding replicated in a prospective randomised controlled trial (Debattista et al. 2010).
Therefore, along the same lines as discussed above for sleep problems as a core pathophysiology of ADHD, future research should investigate EEG vigilance regulation and the presence of sleep problems in this subgroup, in order to develop appropriate treatments for patients who do not respond to gold-standard antidepressant treatments.

In summary, responders to antidepressant treatments such as medication and rTMS are generally characterised by increased parieto-occipital alpha (a ‘hyperstable’ vigilance regulation) and increased theta in the rostral anterior cingulate (Pizzagalli 2011), reflected as frontal-midline theta. A subgroup of non-responders to antidepressant treatments is characterised by generalised increased frontal theta, reflecting decreased EEG vigilance regulation. It is hypothesised that this latter group might respond better to vigilance-stabilising treatments such as psychostimulants, or to chronobiological treatments such as melatonin or early-morning bright light.

5.3 Alpha Peak Frequency in Depression

In one of the earliest studies investigating EEG predictors of treatment response in depression, Ulrich et al. (1984) found that non-responders to the tricyclic antidepressant (TCA) amitriptyline and to pirlindole (a tetracyclic compound) had a slower alpha peak frequency (APF; 8 Hz) than responders (9.5 Hz). Furthermore, after 4 weeks of treatment only responders showed an increase of 0.5 Hz in their APF, whereas non-responders did not. More recently, depressed patients with a slow pre-treatment APF have also been shown to respond less well to rTMS (Arns et al. 2010, 2012b). Furthermore, as discussed above, a slow APF could represent a generic biomarker for non-response.
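As a rough illustration of how an APF can be read off a spectrum, the sketch below picks the frequency with maximal power in an assumed 7–13 Hz alpha band. The band limits, the 128 Hz sampling rate and the synthetic signals are assumptions chosen for the example; a real analysis would usually average spectra over many artefact-free (for instance eyes-closed) epochs, and some studies may prefer other estimators, such as a centre-of-gravity frequency, over simple peak picking. A naive DFT is used only to keep the sketch free of third-party dependencies.

```python
import cmath
import math


def band_spectrum(signal: list[float], fs: float, f_lo: float, f_hi: float) -> list[tuple[float, float]]:
    """(frequency, power) pairs for DFT bins between f_lo and f_hi, inclusive.

    A naive single-window DFT, used here only to avoid external dependencies.
    """
    n = len(signal)
    resolution = fs / n  # spacing between DFT bins, in Hz
    spectrum = []
    for k in range(math.ceil(f_lo / resolution), math.floor(f_hi / resolution) + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
        spectrum.append((k * resolution, abs(coeff) ** 2 / n))
    return spectrum


def alpha_peak_frequency(signal: list[float], fs: float,
                         band: tuple[float, float] = (7.0, 13.0)) -> float:
    """Frequency of maximal power within the (assumed) alpha band."""
    spectrum = band_spectrum(signal, fs, *band)
    peak_freq, _ = max(spectrum, key=lambda pair: pair[1])
    return peak_freq


# Synthetic 4 s 'EEG' at 128 Hz: an alpha component plus a 4 Hz theta component.
fs = 128
t = [i / fs for i in range(4 * fs)]
fast_alpha = [math.sin(2 * math.pi * 9.5 * x) + 0.5 * math.sin(2 * math.pi * 4 * x) for x in t]
slow_alpha = [math.sin(2 * math.pi * 8.0 * x) + 0.5 * math.sin(2 * math.pi * 4 * x) for x in t]

print(alpha_peak_frequency(fast_alpha, fs))  # 9.5
print(alpha_peak_frequency(slow_alpha, fs))  # 8.0
```

The two synthetic signals merely echo the group means reported by Ulrich et al. (9.5 Hz for responders, 8 Hz for non-responders); the 4 Hz component lies outside the search band and therefore does not affect the estimate.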

5.4 Treatment Emergent or Pharmacodynamic Biomarkers in Depression

The measures discussed above were all baseline measures, investigated for their ability to predict treatment outcome. Another well-investigated line of research concerns ‘treatment-emergent’ or ‘pharmacodynamic’ biomarkers (Savitz et al. 2013): the EEG is recorded at baseline and again after several days of treatment, and the change between the two recordings is used to predict treatment outcome. This approach has mainly been applied to antidepressants: because these drugs generally take 4–6 weeks to show clinical effects, knowing within several days whether a drug is likely to work is clinically relevant. Two of these methods, EEG cordance and the Antidepressant Treatment Response (ATR) index, are discussed in more detail below.

5.5 EEG Cordance

The EEG cordance method was initially developed by Leuchter and colleagues to provide a measure with face validity for detecting cortical deafferentation (Leuchter et al. 1994a, b). They observed that the EEG over a white-matter lesion often exhibited decreased absolute theta power but increased relative theta power, which they termed ‘discordant’. The EEG cordance method therefore combines absolute and relative EEG power. Negative values of this measure (discordance), specifically in theta or beta, reflect low perfusion or metabolism, whereas positive values (concordance), specifically in alpha, reflect high perfusion or metabolism (Leuchter et al. 1994a, b). This has been confirmed by comparing EEG cordance with simultaneously measured perfusion using PET (Leuchter et al. 1999).
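A simplified sketch of a cordance computation for one frequency band follows. It assumes that absolute and relative power are each z-transformed across electrodes and then summed per electrode; this is our reading of the approach, not a verified reimplementation of the published pipeline, whose preprocessing steps (such as montage choice and artefact rejection) are omitted. The use of the population standard deviation, the electrode names and all power values are illustrative assumptions. Following the text, a negative value is flagged as discordant.

```python
from statistics import mean, pstdev


def zscore_across_electrodes(values: dict[str, float]) -> dict[str, float]:
    """Z-transform one band's values across electrodes (population SD, an assumption)."""
    mu = mean(values.values())
    sd = pstdev(values.values())
    return {site: (v - mu) / sd for site, v in values.items()}


def cordance(band_power: dict[str, float], total_power: dict[str, float]) -> dict[str, float]:
    """Per electrode: z-scored absolute band power plus z-scored relative band power."""
    relative = {site: band_power[site] / total_power[site] for site in band_power}
    z_abs = zscore_across_electrodes(band_power)
    z_rel = zscore_across_electrodes(relative)
    return {site: z_abs[site] + z_rel[site] for site in band_power}


# Hypothetical absolute theta and total power per electrode, arbitrary units.
theta = {"Fp1": 2.0, "Fpz": 5.0, "Fp2": 6.0, "Fz": 7.0}
total = {"Fp1": 40.0, "Fpz": 50.0, "Fp2": 50.0, "Fz": 50.0}

theta_cordance = cordance(theta, total)
discordant_sites = [site for site, value in theta_cordance.items() if value < 0]
print(discordant_sites)  # ['Fp1', 'Fpz']
```

Because each z-scored map sums to zero across electrodes, cordance in this form is a relative measure: it says which sites are low or high compared with the rest of the montage, not whether a site is abnormal in absolute terms.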

In a first study, depressive patients with a ‘discordant’ brain state at baseline were found to be non-responders (Cook et al. 1999). Subjects were classified as ‘discordant’ if more than 30 % of all electrodes exhibited discordance, or if fewer electrodes were discordant but highly deviant. Central (Cz, FC1, FC2) theta cordance was also related to outcome after ECT (Stubbeman et al. 2004). More recent studies have focused on theta cordance at pre-frontal electrodes (Fp1, Fp2, Fpz) and found that a decrease in theta cordance over 48 h to 2 weeks of treatment predicted longer-term treatment outcome (Cook et al. 2002, 2005). In independent replication studies, Bares et al. (2007, 2008, 2010) also found that responders were characterised by a decrease in pre-frontal (Fp1, Fp2, Fz) theta cordance after 1 week. Furthermore, Cook et al. (2005) demonstrated that a medication wash-out period before the QEEG recording is not essential for the reliable use of EEG cordance. The same cordance effects have been observed with SSRIs, SNRIs, TCAs, rTMS and ECT, suggesting that the change in frontal theta cordance reflects the early beneficial effects of treatment and does not depend on treatment type. Across studies of depressive patients treated with various antidepressant medications, decreases in pre-frontal theta cordance 1 week after the start of medication have consistently predicted response, with overall accuracy ranging from 72 to 88 % (Iosifescu et al. 2009).
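The two decision rules in this paragraph can be sketched as follows, given per-electrode cordance values. The 30 % threshold comes from the text; the second baseline criterion (fewer but highly deviant electrodes) is left out because no threshold is given here. The pre-frontal site list (Fp1, Fpz, Fp2, as in the Cook et al. studies) and the plain ‘any decrease’ rule are simplifying assumptions, not the published decision criteria, and all cordance values are hypothetical.

```python
from statistics import mean


def is_discordant_state(cordance_by_site: dict[str, float], threshold: float = 0.30) -> bool:
    """Baseline rule: more than `threshold` of electrodes show negative (discordant) cordance."""
    n_discordant = sum(1 for value in cordance_by_site.values() if value < 0)
    return n_discordant / len(cordance_by_site) > threshold


def theta_cordance_decreased(baseline: dict[str, float], week1: dict[str, float],
                             sites: tuple[str, ...] = ("Fp1", "Fpz", "Fp2")) -> bool:
    """Treatment-emergent rule: mean pre-frontal theta cordance is lower after 1 week."""
    return mean(week1[s] for s in sites) < mean(baseline[s] for s in sites)


# Hypothetical theta cordance values for one patient.
baseline_map = {"Fp1": 0.4, "Fpz": 0.2, "Fp2": -0.1, "Fz": 0.5, "Cz": 0.3}
week1_map = {"Fp1": -0.1, "Fpz": -0.3, "Fp2": -0.6, "Fz": 0.4, "Cz": 0.3}

print(is_discordant_state(baseline_map))                   # False
print(theta_cordance_decreased(baseline_map, week1_map))   # True
```

The first rule needs only a baseline recording, whereas the second is a treatment-emergent measure and can only be applied once the patient has been on treatment for about a week.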

Placebo responders, in contrast, showed an increase in pre-frontal theta cordance (Leuchter et al. 2002). A more recent study from this group refined this by examining right-medial frontal sites and found that theta cordance after 1 week decreased only in medication responders and not in placebo responders (Cook et al. 2009), demonstrating that the measure is specific to medication response rather than to placebo response.

A limitation of this measure is that combining absolute and relative EEG power into a single cordance value makes the underlying neuronal activity harder to interpret (Kuo and Tsai 2010).

5.6 Antidepressant Treatment Response

The ATR measure was also developed by Leuchter and colleagues (2009a, b) and is commercialised by Aspect Medical Systems. The first results with this measure were published in 2009 by Iosifescu et al. (2009), demonstrating that the ATR predicted outcome to an SSRI or venlafaxine with an accuracy of 70 % (82 % sensitivity; 54 % specificity). Recently, the results of a large clinical trial investigating the ATR (BRITE-MD) were published (Leuchter et al. 2009a, b). This measure is based on EEG recorded from Fpz (FT7 and FT8) and is a non-linear weighted combination of (1) combined relative alpha and theta power (3–12 Hz/2–20 Hz) at baseline and (2) the difference between absolute alpha1 power (8.5–12 Hz) at baseline and absolute alpha2 power (9–11.5 Hz) after 1 week of treatment (Leuchter et al. 2009a, b). A high ATR value predicted response to an SSRI with 74 % overall accuracy (58 % sensitivity, 91 % specificity). Interestingly, in another study, they reported that patients with a low ATR responded better to the atypical antidepressant bupropion (Leuchter et al. 2009a, b), suggesting that this measure identifies two subgroups of depressive patients, with implications for the choice between two types of antidepressant.
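Because overall accuracy mixes sensitivity and specificity, the figures above are easier to interpret with a small worked calculation. The sketch below uses the standard definitions of accuracy and of positive and negative predictive value (PPV, NPV). The responder proportions it loops over are hypothetical, since they are not given in the text, and the code does not reproduce how the cited studies computed their accuracy figures.

```python
def overall_accuracy(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Accuracy as the prevalence-weighted mix of sensitivity and specificity."""
    return sensitivity * prevalence + specificity * (1.0 - prevalence)


def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV): how often a positive / negative prediction is correct."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    true_neg = specificity * (1.0 - prevalence)
    false_neg = (1.0 - sensitivity) * prevalence
    return true_pos / (true_pos + false_pos), true_neg / (true_neg + false_neg)


# Sensitivity and specificity as quoted above for the SSRI prediction;
# the responder proportions are hypothetical.
for p in (0.3, 0.5, 0.7):
    acc = overall_accuracy(0.58, 0.91, p)
    ppv, npv = predictive_values(0.58, 0.91, p)
    print(f"responders={p:.0%}  accuracy={acc:.3f}  PPV={ppv:.3f}  NPV={npv:.3f}")
```

With these inputs, accuracy falls from about 0.81 to about 0.68 as the responder proportion rises from 30 % to 70 %, because the weaker sensitivity then carries more weight. The high specificity keeps a positive (high-ATR) prediction correct in most cases at all three proportions, whereas a low ATR becomes a much weaker signal when responders are common.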

A disadvantage of this method is that, as a ‘treatment-emergent biomarker’, it requires patients to have started the medication before any prediction can be made. In addition, it could not be applied in 15 % of patients because of ECG artefacts (Leuchter et al. 2009a, b).

6 Conclusion

Much research has been conducted in ADHD and depression on the potential of the EEG as a marker for predicting treatment outcome, and the results are promising. The next step would be to integrate these different metrics further, take advantage of the different information they provide about the underlying neuronal activity, and investigate their similarities and differences, so that EEG- and ERP-based data can be used in practice to predict treatment outcome. Finally, some examples have been presented in which the identification of EEG-based subgroups sheds more light on the underlying pathology of the disease and can thus be used to develop more effective treatments for the different subgroups.

References

Adamaszek M, Olbrich S, Gallinat J (2011) The diagnostic value of clinical EEG in detecting abnormal synchronicity in panic disorder. Clin EEG Neurosci 42(3):166–174

Adrian ED, Matthews BHC (1934) The Berger rhythm: potential changes from the occipital lobes in man. Brain: J Neurol 57(4):355

Anokhin A, Steinlein O, Fischer C, Mao Y, Vogt P, Schalt E, Vogel F (1992) A genetic study of the human low-voltage electroencephalogram. Hum Genet 90(1–2):99–112

Arns M (2012) EEG-based personalized medicine in ADHD: Individual alpha peak frequency as an endophenotype associated with nonresponse. J Neurotherapy 16(2):123–141

Arns M, Kenemans JL (2012) Neurofeedback in ADHD and insomnia: vigilance stabilization through sleep spindles and circadian networks. Neurosci Biobehav Rev. doi:10.1016/j.neubiorev.2012.10.006

Arns M, Conners CK, Kraemer HC (2013a) A decade of EEG theta/beta ratio research in ADHD: a meta-analysis. J Attention Disord 17(5):374–383

Arns M, Drinkenburg WHIM, Kenemans JL (2012a) The effects of QEEG-informed neurofeedback in ADHD: an open-label pilot study. Appl Psychophysiol Biofeedback 37(3):171–180

Arns M, Drinkenburg WHIM, Fitzgerald PB, Kenemans JL (2012b) Neurophysiological predictors of non-response to rTMS in depression. Brain Stimulation 5:569–576

Arns M, Gunkelman J, Breteler M, Spronk D (2008) EEG phenotypes predict treatment outcome to stimulants in children with ADHD. J Integr Neurosci 7(3):421–438

Arns M, Spronk D, Fitzgerald PB (2010) Potential differential effects of 9 and 10 Hz rTMS in the treatment of depression. Brain Stimulation 3(2):124–126

Arns M, van der Heijden KB, Arnold LE, Kenemans JL (2013b) Geographic variation in the prevalence of attention-deficit/hyperactivity disorder: the sunny perspective. Biol Psychiatry. doi:10.1016/j.biopsych.2013.02.010

Asada H, Fukuda Y, Tsunoda S, Yamaguchi M, Tonoike M (1999) Frontal midline theta rhythms reflect alternative activation of prefrontal cortex and anterior cingulate cortex in humans. Neurosci Lett 274(1):29–32

Astill RG, Van der Heijden KB, Van Ijzendoorn MH, Van Someren EJ (2012) Sleep, cognition, and behavioral problems in school-age children: a century of research meta-analyzed. Psychol Bull 138:155–159

Axelsson J, Kecklund G, Åkerstedt T, Donofrio P, Lekander M, Ingre M (2008) Sleepiness and performance in response to repeated sleep restriction and subsequent recovery during semi-laboratory conditions. Chronobiol Int 25(2–3):297–308

Bagby RM, Ryder AG, Cristi C (2002) Psychosocial and clinical predictors of response to pharmacotherapy for depression. J Psychiatry Neurosci: JPN 27(4):250–257

Bares M, Brunovsky M, Kopecek M, Novak T, Stopkova P, Kozeny J, Sos P, Krajca V, Höschl C (2008) Early reduction in prefrontal theta QEEG cordance value predicts response to venlafaxine treatment in patients with resistant depressive disorder. European Psychiatry: J Assoc Eur Psychiatrists 23(5):350–355

Bares M, Brunovsky M, Kopecek M, Stopkova P, Novak T, Kozeny J, Höschl C (2007) Changes in QEEG prefrontal cordance as a predictor of response to antidepressants in patients with treatment resistant depressive disorder: a pilot study. J Psychiatr Res 41(3–4):319–325

Bares M, Brunovsky M, Novak T, Kopecek M, Stopkova P, Sos P, Krajca V, Höschl C (2010) The change of prefrontal QEEG theta cordance as a predictor of response to bupropion treatment in patients who had failed to respond to previous antidepressant treatments. Eur Neuropsychopharmacol 20(7):459–466

Barry RJ, Clarke AR, McCarthy R, Selikowitz M, Brown CR (2009) Event-related potentials in children with attention-deficit/hyperactivity disorder and excess beta activity in the EEG. Acta Neuropsychologica 7(4):249

Beebe DW, Rose D, Amin R (2010) Attention, learning, and arousal of experimentally sleep-restricted adolescents in a simulated classroom. J Adolesc Health 47(5):523–525

Belenky G, Wesensten NJ, Thorne DR, Thomas ML, Sing HC, Redmond DP, Russo MB, Balkin TJ (2003) Patterns of performance degradation and restoration during sleep restriction and subsequent recovery: a sleep dose-response study. J Sleep Res 12(1):1–12

Bente D (1964) Die Insuffizienz des Vigilitätstonus eine klinische und elektroencephalographische Studie zum Aufbau narkoleptischer und neurasthenischer Syndrome, Habilitationsschrift

Bierut LJ, Saccone NL, Rice JP, Goate A, Foroud T, Edenberg H, Almasy L, Conneally PM, Crowe R, Hesselbrock V, Li TK, Nurnberger J, Porjesz B, Schuckit MA, Tischfield J, Begleiter H, Reich T (2002) Defining alcohol-related phenotypes in humans, the collaborative study on the genetics of alcohol. Alcohol Res Health 26(3):208–213

Blume WT (2006) Drug effects on EEG. J Clin Neurophysiol 23(4):306

Bodenmann S, Rusterholz T, Dürr R, Stoll C, Bachmann V, Geissler E, Jaggi-Schwarz K, Landolt HP (2009) The functional Val158Met polymorphism of COMT predicts interindividual differences in brain alpha oscillations in young men. J Neurosci 29(35):10855–10862

Boutros N, Fraenkel L, Feingold A (2005) A four-step approach for developing diagnostic tests in psychiatry: EEG in ADHD as a test case. J Neuropsychiatry Clin Neurosci 17(4):455–464

Bruder GE, Stewart JW, Tenke CE, McGrath PJ, Leite P, Bhattacharya N, Quitkin FM (2001) Electroencephalographic and perceptual asymmetry differences between responders and nonresponders to an SSRI antidepressant. Biol Psychiatry 49(5):416–425

Bruder GE, Tenke CE, Stewart JW, McGrath PJ, Quitkin FM (1999) Predictors of therapeutic response to treatments for depression: a review of electrophysiologic and dichotic listening studies. CNS Spectr 4(8):30–36

Bschor T, Müller-Oerlinghausen B, Ulrich G (2001) Decreased level of EEG-vigilance in acute mania as a possible predictor for a rapid effect of methylphenidate: a case study. Clinical EEG (Electroencephalography) 32(1):36–39

Candy M, Jones L, Williams R, Tookman A, King M (2008) Psychostimulants for depression. Cochrane Database Syst Rev 16(2):CD006722

Capute AJ, Niedermeyer EFL, Richardson F (1968) The electroencephalogram in children with minimal cerebral dysfunction. Pediatrics 41(6):1104

Chabot RJ, Serfontein G (1996) Quantitative electroencephalographic profiles of children with attention deficit disorder. Biol Psychiatry 40(10):951–963

Chabot RJ, Orgill AA, Crawford G, Harris MJ, Serfontein G (1999) Behavioral and electrophysiologic predictors of treatment response to stimulants in children with attention disorders. J Child Neurol 14(6):343–351

Clarke AR, Barry RJ, Dupuy FE, Heckel LD, McCarthy R, Selikowitz M, Johnstone SJ (2011) Behavioural differences between EEG-defined subgroups of children with attention-deficit/hyperactivity disorder. Clin Neurophysiol 122(7):1333–1341

Clarke AR, Barry RJ, McCarthy R, Selikowitz M (1998) EEG analysis in attention-deficit/hyperactivity disorder: a comparative study of two subtypes. Psychiatry Res 81(1):19–29

Clarke AR, Barry RJ, McCarthy R, Selikowitz M (2001a) EEG-defined subtypes of children with attention-deficit/hyperactivity disorder. Clin Neurophysiol 112(11):2098–2105

Clarke AR, Barry RJ, McCarthy R, Selikowitz M (2001b) Excess beta activity in children with attention-deficit/hyperactivity disorder: an atypical electrophysiological group. Psychiatry Res 103(2–3):205–218

Clarke AR, Barry RJ, McCarthy R, Selikowitz M, Croft RJ (2002) EEG differences between good and poor responders to methylphenidate in boys with the inattentive type of attention-deficit/hyperactivity disorder. Clin Neurophysiol 113(8):1191–1198

Clarke AR, Barry RJ, McCarthy R, Selikowitz M, Clarke DC, Croft RJ (2003) Effects of stimulant medications on children with attention-deficit/hyperactivity disorder and excessive beta activity in their EEG. Clin Neurophysiol 114(9):1729–1737

Cook IA, Hunter AM, Abrams M, Siegman B, Leuchter AF (2009) Midline and right frontal brain function as a physiologic biomarker of remission in major depression. Psychiatry Res: Neuroimaging 174:152–157

Cook IA, Leuchter AF, Morgan M, Witte E, Stubbeman WF, Abrams M, Rosenberg S, Uijtdehaage SH (2002) Early changes in prefrontal activity characterize clinical responders to antidepressants. Neuropsychopharmacology 27(1):120–131

Cook IA, Leuchter AF, Morgan ML, Stubbeman W, Siegman B, Abrams M (2005) Changes in prefrontal activity characterize clinical response in SSRI nonresponders: a pilot study. J Psychiatr Res 39(5):461–466

Cook IA, Leuchter AF, Witte E, Abrams M, Uijtdehaage SH, Stubbeman W, Rosenberg-Thompson S, Anderson-Hanley C, Dunkin JJ (1999) Neurophysiologic predictors of treatment response to fluoxetine in major depression. Psychiatry Res 85(3):263–273

Cuijpers P, Reynolds CF, Donker T, Li J, Andersson G, Beekman A (2012) Personalized treatment of adult depression: medication, psychotherapy or both? A systematic review. Depress Anxiety 29(10):855–864

Cuijpers P, Smit F, Bohlmeijer E, Hollon SD, Andersson G (2010) Efficacy of cognitive-behavioural therapy and other psychological treatments for adult depression: meta-analytic study of publication bias. Br J Psychiatry 196:173–178

Davids E, Kis B, Specka M, Gastpar M (2006) A pilot clinical trial of oxcarbazepine in adults with attention-deficit hyperactivity disorder. Prog Neuropsychopharmacol Biol Psychiatry 30(6):1033–1038

Debattista C, Kinrys G, Hoffman D, Goldstein C, Zajecka J, Kocsis J, Teicher M, Potkin S, Preda A, Multani G, Brandt L, Schiller M, Iosifescu D, Fava M (2010) The use of referenced-EEG (rEEG) in assisting medication selection for the treatment of depression. J Psychiatr Res 45(1):64–75

Dollman J, Ridley K, Olds T, Lowe E (2007) Trends in the duration of school-day sleep among 10–15 year-old South Australians between 1985 and 2004. Acta Paediatr 96(7):1011–1014

Ducci F, Enoch MA, Yuan Q, Shen PH, White KV, Hodgkinson C, Albaugh B, Virkkunen M, Goldman D (2009) HTR3B is associated with alcoholism with antisocial behavior and alpha EEG power–an intermediate phenotype for alcoholism and co-morbid behaviors. Alcohol 43(1):73–84

Ebert D, Feistel H, Barocka A, Kaschka W (1994) Increased limbic blood flow and total sleep deprivation in major depression with melancholia. Psychiatry Res 55(2):101–109

Ehlers CL, Garcia-Andrade C, Wall TL, Cloutier D, Phillips E (1999) Electroencephalographic responses to alcohol challenge in native American mission Indians. Biol Psychiatry 45(6):776–787

Enoch MA, Schuckit MA, Johnson BA, Goldman D (2003) Genetics of alcoholism using intermediate phenotypes. Alcohol Clin Exp Res 27(2):169–176

Enoch MA, Shen PH, Ducci F, Yuan Q, Liu J, White KV, Albaugh B, Hodgkinson CA, Goldman D (2008) Common genetic origins for EEG, alcoholism and anxiety: the role of CRH-BP. PLoS ONE 3(10):e3620

Fallone G, Acebo C, Arnedt JT, Seifer R, Carskadon MA (2001) Effects of acute sleep restriction on behavior, sustained attention, and response inhibition in children. Percept Mot Skills 93(1):213–229

Fink M (2010) Remembering the lost neuroscience of pharmaco-EEG. Acta Psychiatr Scand 121(3):161–173

Fink M, Kahn RL (1957) Relation of electroencephalographic delta activity to behavioral response in electroshock; quantitative serial studies. AMA Arch Neurol Psychiatry 78(5):516–525

Frieling H, Tadić A (2013) Value of genetic and epigenetic testing as biomarkers of response to antidepressant treatment. Int Rev Psychiatry 25(5):572–578

Gatt JM, Kuan SA, Dobson-Stone C, Paul RH, Joffe RT, Kemp AH, Gordon E, Schofield PR, Williams LM (2008) Association between BDNF Val66Met polymorphism and trait depression is mediated via resting EEG alpha band activity. Biol Psychol 79(2):275–284

Golan N, Shahar E, Ravid S, Pillar G (2004) Sleep disorders and daytime sleepiness in children with attention-deficit/hyperactive disorder. Sleep 27(2):261–266

Goodwin JE (1947) The significance of alpha variants in the EEG, and their relationship to an epileptiform syndrome. Am J Psychiatry 104(6):369–379

Gottesman II, Gould TD (2003) The endophenotype concept in psychiatry: etymology and strategic intentions. Am J Psychiatry 160(4):636–645

De Gruttola VG, Clax P, DeMets DL, Downing GJ, Ellenberg SS, Friedman L, Gail MH, Prentice R, Wittes J, Zeger SL (2001) Considerations in the evaluation of surrogate endpoints in clinical trials, summary of a national institutes of health workshop. Control Clin Trials 22(5):485–502

Halasz P, Janszky J, Barcs G, Szűcs A (2004) Generalised paroxysmal fast activity (GPFA) is not always a sign of malignant epileptic encephalopathy. Seizure 13(4):270–276

Hamani C, Mayberg H, Stone S, Laxton A, Haber S, Lozano AM (2011) The subcallosal cingulate gyrus in the context of major depression. Biol Psychiatry 69(4):301–308

Hefner G, Laib AK, Sigurdsson H, Hohner M, Hiemke C (2013) The value of drug and metabolite concentration in blood as a biomarker of psychopharmacological therapy. Int Rev Psychiatry 25(5):494–508

Hegerl U, Hensch T (2012) The vigilance regulation model of affective disorders and ADHD. Neurosci Biobehav Rev. doi:10.1016/j.neubiorev.2012.10.008

Hegerl U, Himmerich H, Engmann B, Hensch T (2010) Mania and attention-deficit/hyperactivity disorder: common symptomatology, common pathophysiology and common treatment? Curr Opin Psychiatry 23(1):1–7

Hegerl U, Wilk K, Olbrich S, Schoenknecht P, Sander C (2012) Hyperstable regulation of vigilance in patients with major depressive disorder. World J Biol Psychiatry 13(6):436–446

Hemmer SA, Pasternak JF, Zecker SG, Trommer BL (2001) Stimulant therapy and seizure risk in children with ADHD. Pediatr Neurol 24(2):99–102

Hermens DF, Cooper NJ, Kohn M, Clarke S, Gordon E (2005) Predicting stimulant medication response in ADHD: evidence from an integrated profile of neuropsychological, psychophysiological and clinical factors. J Integr Neurosci 4(1):107–121

Hoebert M, van der Heijden KB, van Geijlswijk IM, Smits MG (2009) Long-term follow-up of melatonin treatment in children with ADHD and chronic sleep onset insomnia. J Pineal Res 47(1):1–7

Hughes JR, DeLeo AJ, Melyn MA (2000) The electroencephalogram in attention deficit-hyperactivity disorder: emphasis on epileptiform discharges. Epilepsy Behav: E&B 1(4):271–277

Iglowstein I, Jenni G, Molinari L, Largo H (2003) Sleep duration from infancy to adolescence: reference values and generational trends. Pediatrics 111(2):302–307

Iosifescu DV, Greenwald S, Devlin P, Mischoulon D, Denninger JW, Alpert JE, Fava M (2009) Frontal EEG predictors of treatment outcome in major depressive disorder. Eur Neuropsychopharmacol 19(11):772–777

Ishii R, Shinosaki K, Ukai S, Inouye T, Ishihara T, Yoshimine T, Hirabuki N, Asada H, Kihara T, Robinson SE, Takeda M (1999) Medial prefrontal cortex generates frontal midline theta rhythm. NeuroReport 10(4):675–679

Itil TM (1983) The discovery of antidepressant drugs by computer-analyzed human cerebral bio-electrical potentials (CEEG). Prog Neurobiol 20(3–4):185–249

Itil TM, Rizzo AE (1967) Behavior and quantitative EEG correlations during treatment of behavior-disturbed adolescents. Electroencephalogr Clin Neurophysiol 23(1):81

Itil TM, Marasa J, Saletu B, Davis S, Mucciardi AN (1975) Computerized EEG: predictor of outcome in schizophrenia. J Nerv Ment Dis 160(3):188–203

Jasper HH, Solomon P, Bradley C (1938) Electroencephalographic analyses of behavior problem children. Am J Psychiatry 95(3):641

Ji Y, Hebbring S, Zhu H, Jenkins GD, Biernacka J, Snyder K, Drews M, Fiehn O, Zeng Z, Schaid D, Mrazek DA, Kaddurah-Daouk R, Weinshilboum RM (2011) Glycine and a glycine dehydrogenase (GLDC) SNP as citalopram/escitalopram response biomarkers in depression: pharmacometabolomics-informed pharmacogenomics. Clin Pharmacol Ther 89(1):97–104

Johnson CH, Gonzalez FJ (2012) Challenges and opportunities of metabolomics. J Cell Physiol 227(8):2975–2981

Joyce PR, Paykel ES (1989) Predictors of drug response in depression. Arch Gen Psychiatry 46(1):89–99

Knott V, Mahoney C, Kennedy S, Evans K (2000) Pre-treatment EEG and it’s relationship to depression severity and paroxetine treatment outcome. Pharmacopsychiatry 33(6):201–205

Kuo CC, Tsai JF (2010) Cordance or antidepressant treatment response (ATR) index? Psychiatry Res 180(1):60; author reply 61–62

Lander ES (2011) Initial impact of the sequencing of the human genome. Nature 470(7333):187–197

Lansbergen M, Arns M, van Dongen-Boomsma M, Spronk D, Buitelaar JK (2011) The increase in theta/beta ratio on resting state EEG in boys with attention-deficit/hyperactivity disorder is mediated by slow alpha peak frequency. Prog Neuropsychopharmacol Biol Psychiatry 35:47–52

Lemere F (1936) The significance of individual differences in the Berger rhythm. Brain 59:366–375

Lennox-Buchthal M, Buchthal F, Rosenfalck P (1960) Correlation of electroencephalographic findings with crash rate of military jet pilots. Epilepsia 1:366–372

Leuchter AF, Cook IA, Gilmer WS, Marangell LB, Burgoyne KS, Howland RH, Trivedi MH, Zisook S, Jain R, Fava M, Iosifescu D, Greenwald S (2009a) Effectiveness of a quantitative electroencephalographic biomarker for predicting differential response or remission with escitalopram and bupropion in major depressive disorder. Psychiatry Res 169(2):132–138

Leuchter AF, Cook IA, Lufkin RB, Dunkin J, Newton TF, Cummings JL, Mackey JK, Walter DO (1994a) Cordance: a new method for assessment of cerebral perfusion and metabolism using quantitative electroencephalography. NeuroImage 1(3):208–219

Leuchter AF, Cook IA, Marangell LB, Gilmer WS, Burgoyne KS, Howland RH, Trivedi MH, Zisook S, Jain R, McCracken JT, Fava M, Iosifescu D, Greenwald S (2009b) Comparative effectiveness of biomarkers and clinical indicators for predicting outcomes of SSRI treatment in major depressive disorder: results of the BRITE-MD study. Psychiatry Res 169(2):124–131

Leuchter AF, Cook IA, Mena I, Dunkin JJ, Cummings JL, Newton TF, Migneco O, Lufkin RB, Walter DO, Lachenbruch PA (1994b) Assessment of cerebral perfusion using quantitative EEG cordance. Psychiatry Res 55(3):141–152

Leuchter AF, Cook IA, Witte EA, Morgan M, Abrams M (2002) Changes in brain function of depressed subjects during treatment with placebo. Am J Psychiatry 159(1):122–129

Leuchter AF, Uijtdehaage SH, Cook IA, O’Hara R, Mandelkern M (1999) Relationship between brain electrical activity and cortical perfusion in normal subjects. Psychiatry Res 90(2):125–140

Matricciani L, Olds T, Williams M (2011) A review of evidence for the claim that children are sleeping less than in the past. Sleep 34(5):651–659

Mayberg HS, Brannan SK, Mahurin RK, Jerabek PA, Brickman JS, Tekell JL, Silva JA, McGinnis S, Glass TG, Martin CC, Fox PT (1997) Cingulate function in depression: a potential predictor of treatment response. NeuroReport 8(4):1057–1061

Menke A (2013) Gene expression: biomarker of antidepressant therapy? Int Rev Psychiatry 25(5):579–591

Miano S, Parisi P, Villa MP (2012) The sleep phenotypes of attention deficit hyperactivity disorder: the role of arousal during sleep and implications for treatment. Med Hypotheses 79(2):147–153

Miller G (2010) Is pharma running out of brainy ideas? Science 329(5991):502

Mintz M, Legoff D, Scornaienchi J, Brown M, Levin-Allen S, Mintz P, Smith C (2009) The underrecognized epilepsy spectrum: the effects of levetiracetam on neuropsychological functioning in relation to subclinical spike production. J Child Neurol 24(7):807–815

Molina BS, Hinshaw SP, Swanson JM, Arnold LE, Vitiello B, Jensen PS, Epstein JN, Hoza B, Hechtman L, Abikoff HB, Elliott GR, Greenhill LL, Newcorn JH, Wells KC, Wigal T, Gibbons RD, Hur K, Houck PR, MTA Cooperative Group (2009) The MTA at 8 years: prospective follow-up of children treated for combined-type ADHD in a multisite study. J Am Acad Child Adolesc Psychiatry 48(5):484–500

Monastra VJ, Lubar JF, Linden M, VanDeusen P, Green G, Wing W, Phillips A, Fenger TN (1999) Assessing attention deficit hyperactivity disorder via quantitative electroencephalography: an initial validation study. Neuropsychology 13(3):424–433

Monastra VJ, Monastra DM, George S (2002) The effects of stimulant therapy, EEG biofeedback, and parenting style on the primary symptoms of attention-deficit/hyperactivity disorder. Appl Psychophysiol Biofeedback 27(4):231–249

Mulert C, Juckel G, Brunnmeier M, Karch S, Leicht G, Mergl R, Möller HJ, Hegerl U, Pogarell O (2007) Prediction of treatment response in major depression: integration of concepts. J Affect Disord 98(3):215–225

Niedermeyer E, Lopes da Silva FH (1993) Electroencephalography: basic principles, clinical applications, and related fields. Williams & Wilkins, Baltimore

Nobler MS, Luber B, Moeller JR, Katzman GP, Prudic J, Devanand DP, Dichter GS, Sackeim HA (2000) Quantitative EEG during seizures induced by electroconvulsive therapy: relations to treatment modality and clinical features. I. Global analyses. J ECT 16(3):211

Olbrich S, Arns M (2013) EEG biomarkers in major depressive disorder: discriminative power and prediction of treatment response. Int Rev Psychiatry 25(5):604–618

Olbrich S, Sander C, Minkwitz J, Chittka T, Mergl R, Hegerl U, Himmerich H (2012) EEG vigilance regulation patterns and their discriminative power to separate patients with major depression from healthy controls. Neuropsychobiology 65(4):188–194

Parmeggiani A, Barcia G, Posar A, Raimondi E, Santucci M, Scaduto MC (2010) Epilepsy and EEG paroxysmal abnormalities in autism spectrum disorders. Brain Dev 32(9):783–789

Pascual-Marqui RD, Michel CM, Lehmann D (1994) Low resolution electromagnetic tomography: a new method for localizing electrical activity in the brain. Int J Psychophysiol 18(1):49–65

Pizzagalli DA (2011) Frontocingulate dysfunction in depression: toward biomarkers of treatment response. Neuropsychopharmacology 36:183–206

Pizzagalli DA, Oakes TR, Davidson RJ (2003) Coupling of theta activity and glucose metabolism in the human rostral anterior cingulate cortex: an EEG/PET study of normal and depressed subjects. Psychophysiology 40(6):939–949

Pollock VE, Schneider LS (1990) Quantitative, waking EEG research on depression. Biol Psychiatry 27(7):757–780

Posthuma D, Neale MC, Boomsma DI, de Geus EJ (2001) Are smarter brains running faster? Heritability of alpha peak frequency, IQ, and their interrelation. Behav Genet 31(6):567–579

Quinones MP, Kaddurah-Daouk R (2009) Metabolomics tools for identifying biomarkers for neuropsychiatric diseases. Neurobiol Dis 35(2):165–176

Richter PL, Zimmerman EA, Raichle ME, Liske E (1971) Electroencephalograms of 2,947 United States air force academy cadets (1965–1969). Aerosp Med 42(9):1011–1014

Roth M, Kay DW, Shaw J, Green J (1957) Prognosis and pentothal induced electroencephalographic changes in electro-convulsive treatment; an approach to the problem of regulation of convulsive therapy. Electroencephalogr Clin Neurophysiol 9(2):225–237

Rush AJ, Trivedi MH, Wisniewski SR, Nierenberg AA, Stewart JW, Warden D, Niederehe G, Thase ME, Lavori PW, Lebowitz BD, McGrath PJ, Rosenbaum JF, Sackeim HA, Kupfer DJ, Luther J, Fava M (2006) Acute and longer-term outcomes in depressed outpatients requiring one or several treatment steps: a STAR*D report. Am J Psychiatry 163(11):1905–1917

Rybak YE, McNeely HE, Mackenzie BE, Jain UR, Levitan RD (2006) An open trial of light therapy in adult attention-deficit/hyperactivity disorder. J Clin Psychiatry 67(10):1527–1535

Sander C, Arns M, Olbrich S, Hegerl U (2010) EEG-vigilance and response to stimulants in paediatric patients with attention deficit/hyperactivity disorder. Clin Neurophysiol 121:1511–1518

Satterfield JH, Cantwell DP, Saul RE, Lesser LI, Podosin RL (1973) Response to stimulant drug treatment in hyperactive children: prediction from EEG and neurological findings. J Autism Child Schizophr 3(1):36–48

Satterfield JH, Lesser LI, Podosin RL (1971) Evoked cortical potentials in hyperkinetic children. Calif Med 115(3):48

Savitz JB, Rauch SL, Drevets WC (2013) Clinical application of brain imaging for the diagnosis of mood disorders: the current state of play. Mol Psychiatry 18(5):528–539

Schoenknecht P, Olbrich S, Sander C, Spindler P, Hegerl U (2010) Treatment of acute mania with modafinil monotherapy. Biol Psychiatry 67:e55–e57

Schwartz J, Feldstein S, Fink M, Shapiro DM, Itil TM (1971) Evidence for a characteristic EEG frequency response to thiopental. Electroencephalogr Clin Neurophysiol 31(2):149–153

Silva RR, Munoz DM, Alpert M (1996) Carbamazepine use in children and adolescents with features of attention-deficit hyperactivity disorder: a meta-analysis. J Am Acad Child Adolesc Psychiatry 35(3):352–358

Simon GE, Perlis RH (2010) Personalized medicine for depression: can we match patients with treatments? Am J Psychiatry 167(12):1445–1455

Small JG, Milstein V, Medlock CE (1997) Clinical EEG findings in mania. Clin Electroencephalogr 28(4):229–235

Smit DJ, Boersma M, van Beijsterveldt CE, Posthuma D, Boomsma DI, Stam CJ, de Geus EJ (2010) Endophenotypes in a dynamically connected brain. Behav Genet 40(2):167–177

Smit DJ, Posthuma D, Boomsma DI, de Geus EJ (2005) Heritability of background EEG across the power spectrum. Psychophysiology 42(6):691–697

Smith GS, Reynolds CF, Pollock B, Derbyshire S, Nofzinger E, Dew MA, Houck PR, Milko D, Meltzer CC, Kupfer DJ (1999) Cerebral glucose metabolic response to combined total sleep deprivation and antidepressant treatment in geriatric depression. Am J Psychiatry 156(5):683–689

Snyder SM, Hall JR (2006) A meta-analysis of quantitative EEG power associated with attention-deficit hyperactivity disorder. J Clin Neurophysiol 23(5):440–455

Spronk D, Arns M, Barnett KJ, Cooper NJ, Gordon E (2011) An investigation of EEG, genetic and cognitive markers of treatment response to antidepressant medication in patients with major depressive disorder: a pilot study. J Affect Disord 128:41–48

Stern JM, Engel J (2004) Atlas of EEG patterns. Lippincott Williams & Wilkins, Baltimore

Stubbeman WF, Leuchter AF, Cook IA, Shurman BD, Morgan M, Gunay I, Gonzalez S (2004) Pretreatment neurophysiologic function and ECT response in depression. J ECT 20(3):142–144

Suffin SC, Emory WH (1995) Neurometric subgroups in attentional and affective disorders and their association with pharmacotherapeutic outcome. Clin Electroencephalogr 26(2):76–83

Swanson JM, Hinshaw SP, Arnold LE, Gibbons RD, Marcus S, Hur K, Jensen PS, Vitiello B, Abikoff HB, Greenhill LL, Hechtman L, Pelham WE, Wells KC, Conners CK, March JS, Elliott GR, Epstein JN, Hoagwood K, Hoza B, Molina BS, Newcorn JH, Severe JB, Wigal T (2007) Secondary evaluations of MTA 36-month outcomes: propensity score and growth mixture model analyses. J Am Acad Child Adolesc Psychiatry 46(8):1003–1014

Tenke CE, Kayser J, Manna CG, Fekri S, Kroppmann CJ, Schaller JD, Alschuler DM, Stewart JW, McGrath PJ, Bruder GE (2011) Current source density measures of electroencephalographic alpha predict antidepressant treatment response. Biol Psychiatry 70(4):388–394

Ulrich G, Fürstenberg U (1999) Quantitative assessment of dynamic electroencephalogram (EEG) organization as a tool for subtyping depressive syndromes. Eur Psychiatry 14(4):217–229

Ulrich G, Herrmann WM, Hegerl U, Müller-Oerlinghausen B (1990) Effect of lithium on the dynamics of electroencephalographic vigilance in healthy subjects. J Affect Disord 20(1):19–25

Ulrich G, Renfordt E, Zeller G, Frick K (1984) Interrelation between changes in the EEG and psychopathology under pharmacotherapy for endogenous depression. A contribution to the predictor question. Pharmacopsychiatry 17(6):178–183

van Beijsterveldt CE, van Baal GC (2002) Twin and family studies of the human electroencephalogram: a review and a meta-analysis. Biol Psychol 61(1–2):111–138

van der Heijden KB, Blok MJ, Spee K, Archer SN, Smits MG, Curfs LM, Gunning WB (2005) No evidence to support an association of PER3 clock gene polymorphism with ADHD-related idiopathic chronic sleep onset insomnia. Biol Rhythm Res 36(5):381–388