Abstract

We present a review of different computational methods to describe time-dependent phenomena in open quantum systems and their extension to a density-functional framework. We focus the discussion on electron emission processes in atoms and molecules addressing excited-state lifetimes and dissipative processes. Initially we analyze the concept of an electronic resonance, a central concept in spectroscopy associated with a metastable state from which an electron eventually escapes (electronic lifetime). Resonances play a fundamental role in many time-dependent molecular phenomena but can be rationalized from a time-independent context in terms of scattering states. We introduce the method of complex scaling, which is used to capture resonant states as localized states in the spirit of usual bound-state methods, and work on its extension to static and time-dependent density-functional theory. In a time-dependent setting, complex scaling can be used to describe excitations in the continuum as well as wave packet dynamics leading to electron emission. This process can also be treated by using open boundary conditions which allow time-dependent simulations of emission processes without artificial reflections at the boundaries (i.e., borders of the simulation box). We compare in detail different schemes to implement open boundaries, namely transparent boundaries using Green functions, and absorbing boundaries in the form of complex absorbing potentials and mask functions. The last two are regularly used together with time-dependent density-functional theory to describe the electron emission dynamics of atoms and molecules. Finally, we discuss approaches to the calculation of energy and angle-resolved time-dependent pump–probe photoelectron spectroscopy of molecular systems.


1 Introduction

All natural phenomena occur away from equilibrium. Non-equilibrium systems range in scale from the microscopic (such as nanostructures and bacteria) to the geological, and away-from-equilibrium processes occur on timescales ranging from nanoseconds to millennia. Despite the ubiquity of non-equilibrium systems and processes, most of the current understanding of physical and biological systems is based on equilibrium concepts. In interacting many-body systems, however, electronic states more often than not have finite lifetimes because of the coupling to the environment or to a continuum of states (resonance processes). Even if we were able to prepare a perfectly isolated quantum system, we would need to regard a measurement of the system as bringing the system into contact with an environment. Even a single atom in vacuum cannot be regarded as completely isolated, because the atom is embedded in the surrounding photon field (spontaneous emission). Other examples where the coupling to the surroundings plays a prominent role include hot electron relaxation in bulk systems and surfaces after laser irradiation, thermalization caused by electron–phonon coupling, decoherence in pump–probe experiments, exciton propagation and relaxation in biological chromophores, and vibrational relaxation in nanomaterials and molecular systems. Understanding these decay mechanisms provides important information about electron correlations, quantum coherence, and dissipative and decoherence processes, and controlling these processes has important implications. For instance, it would make it possible to enhance the performance of molecular/solid-based optoelectronic devices.

In this context, density-functional theory (DFT) provides an exact theoretical framework which could yield observable quantities directly, by-passing the need to calculate the many-body wavefunction Ψ. Hohenberg and Kohn [1] proved that all observable properties of a static many-electron system can be extracted exactly from the one-body ground-state density alone (density–potential mapping). Later, Runge and Gross extended this theorem to time-dependent systems [2]. Time-dependent density-functional theory (TDDFT) is a rigorous reformulation of the non-relativistic time-dependent quantum mechanics of many-body systems. The central theorem of TDDFT is the Runge–Gross theorem, which proves a one-to-one correspondence between the time-dependent external potential \( v_{\mathrm{ext}}(\mathbf{r},t) \) and the electronic one-body density \( n(\mathbf{r},t) \) for many-body systems evolving from a fixed initial state \( \Psi_0 \). This implies that all observable properties of the interacting many-electron system can be extracted from the one-body time-dependent density alone [2]. What has made both DFT and TDDFT so successful is the Kohn–Sham scheme [3]: the density of the interacting many-electron system is obtained as the density of an auxiliary system of non-interacting fermions, living in a one-body potential. Because of the excellent balance between the computational load it requires and the accuracy it provides, TDDFT is now a tool of choice for reasonably accurate and reliable predictions of excited-state properties in solid state physics, chemistry, and biophysics, in both the linear and nonlinear regimes. However, there exist many situations where the electronic degrees of freedom are not isolated but must be treated as a subsystem embedded in an environment, which influences it in a non-negligible way. Those situations go beyond the realm of the original formulation of TDDFT, which is meant to tackle the isolated dynamics of electronic systems. It is therefore clear that there is a need to extend density-functional approaches to the realm of open quantum systems to allow us to treat the processes described above.

Burke and co-workers recently introduced a TDDFT approach based on a Kohn–Sham master equation [4], and in recent work this has been pursued by the group of Aspuru-Guzik [5–7]. This group also proposed a description of open quantum systems in terms of a unitarily evolving closed Kohn–Sham system [7, 8]. The theory of open quantum systems (OQS) mostly deals with the situation where the environment exchanges energy and momentum with the system but particle number is conserved ([9, Chap. 10]). The case where the environment exchanges particles with the system is an equally important problem which has been less developed. Here we review methods developed to address this kind of problem, and describe the theoretical frameworks and approximations that can be used to describe particle exchange.

Solving the problem of describing a system which exchanges electrons with the environment is only half the challenge. In fact, even in the ideal case where one is able to calculate the correct time-dependent wavefunction, one is faced with the additional problem that some observables may require the knowledge of the complete wavefunction or of eigenstates in the continuum. This includes ionization products such as photoelectron spectra and resonance lifetimes/widths, and is also connected to the measurement process of an open system. These problems are even more severe in the case of DFT and TDDFT, where the density is the only physical object, and where finding the explicit density-functional linking to a physical observable is a daunting task.

This review is structured as follows. We first introduce the general concept of resonance in Sect. 2 and describe how it can be observed in many different physical situations. Then in Sect. 3 we introduce the reader to the basic concepts of the complex scaling theory which is one of the most important tools for studying shape resonances in a static framework. In Sect. 4 we review the successful extension of the complex scaling theory to the realm of DFT, including some recent work adapting the method to the time-dependent realm. In Sect. 5 we review several methods for the incorporation of boundary conditions with the TDDFT equations in order to include the dynamic exchange of electrons with an environment/reservoir. We discuss the strategies for describing specific observables in Sect. 6 where we focus on the case of electron photoemission.

Unless otherwise specified, atomic units are used throughout (\( \hbar = m_e = e = 4\pi\varepsilon_0 = 1 \)).

2 Resonances

Consider a system acted upon by an external oscillating force characterized by some energy and corresponding frequency. If the system responds particularly strongly close to a particular frequency, we call that a resonance process. The typical textbook case is that of a classical damped harmonic oscillator acted upon by an external sinusoidal force. For each frequency the system responds by oscillating with some amplitude, and the resonances appear as strong narrow peaks in the amplitude.

This simple model has two important properties that are very general to any type of resonance: First, if the oscillatory force is turned off, the resonant oscillation decays as governed by the damping force, and the rate of decay is proportional to the width of the resonance peak. Second, if we consider the phase of the oscillation of the system with respect to that of the external force, we see that it shifts quickly by up to π as the energy passes that of the resonance. The rate with which it shifts is inversely proportional to the decay rate.
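The two properties above can be checked numerically for the textbook oscillator. The following is a minimal sketch, assuming the equation of motion x'' + γx' + ω0²x = cos(ωt) with illustrative values ω0 = 1 and γ = 0.05; the function name `response` and all parameter values are assumptions made for this example only.

```python
import numpy as np

def response(omega, omega0=1.0, gamma=0.05):
    """Complex steady-state response of x'' + gamma*x' + omega0^2*x = exp(i*omega*t)."""
    return 1.0 / (omega0**2 - omega**2 + 1j * gamma * omega)

omega0, gamma = 1.0, 0.05
omega = np.linspace(0.5, 1.5, 200001)
chi = response(omega, omega0, gamma)

# Full width at half maximum of the absorbed power |chi|^2.
power = np.abs(chi) ** 2
above_half = omega[power >= 0.5 * power.max()]
fwhm = above_half[-1] - above_half[0]

# Phase lag of the oscillation relative to the driving force.
phase_lag = -np.angle(chi)
lag_at_resonance = -np.angle(response(omega0, omega0, gamma))

print("FWHM of |chi|^2:", fwhm, " damping rate gamma:", gamma)
print("phase lag far below / at / far above resonance:",
      phase_lag[0], lag_at_resonance, phase_lag[-1])
```

For weak damping the width of the power peak approaches the damping rate γ, which is also the rate at which the energy of the free oscillation decays, and the phase lag sweeps from nearly 0 to nearly π while passing through π/2 at ω0.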

We mention here a few commonly studied types of resonance in atomic, molecular, and condensed-matter physics:

- Plasmon resonances, where the whole electron charge density in a material resonates with incoming light. Surface plasmon resonances are central to the field of plasmonics.

- Scattering resonances, where incident electrons interact with an atom or molecule. Near a resonance energy, the electrons couple strongly and the scattering cross-section shows a peak. The process may be understood as the incoming electron becoming temporarily trapped in a metastable state before escaping.

- Asymmetric Fano resonances [10], which occur when two coupled excitation pathways interfere with each other.

- Autoionizing resonances, wherein a system such as an atom or molecule is unstable with respect to the ejection of one or more electrons. These are similar to the resonances observed in time-resolved spectroscopies and in the electron scattering experiments mentioned above.

- Electron transport processes in molecular junctions, where a bias voltage causes electrons to jump from one metallic lead onto a metastable state at a molecule and then escape through another lead. Such processes have, for example, been studied using DFT plus non-equilibrium Green functions represented with atomic basis sets [11–14].

- Adsorption of an atom onto a surface, where the continuum states of the surface couple with the discrete atomic states, which then become unstable and broaden into resonances. The Newns–Anderson model [15] describes this process for a one-electron adsorbate.

There are many further classes of resonance which we do not mention here. Below we consider only a small class of resonances, namely scattering or autoionizing ones. In this context, a resonance is a metastable quantum mechanical state that the system possesses, and which can be associated with a wavefunction. Below we describe some mathematical properties of such resonances, with the objective of eventually calculating them from static or time-dependent DFT.

2.1 Definition and Properties of Resonant States

Let us consider a typical scattering experiment where an incoming electron is captured by an atom and is temporarily trapped before it escapes again. Whereas scattering processes are clearly time-dependent, resonances can nevertheless be captured from time-independent methods as static properties of the system. A conceptually simple method is to study the phase δ of the wavefunction in the asymptotic region, where in one dimension it takes the form cos(kx + δ). See for instance the simple demonstration by Gellene [16], which we consider again later. The resonance energy can be estimated by locating the energy where the phase shift δ changes most rapidly, and the width can be estimated from the maximum rate of change. This intuitively relates the resonance to a strong coupling of the system with continuum states in a narrow energy interval, as we noted in the beginning.

A more mathematically precise way of identifying a resonance is, following the work of Siegert [17], to search for complex energies corresponding to singularities of the scattering cross-section. A pole close to the real energy axis would produce a peak in the scattering cross-section for real energies, consistent with a resonance. As noted by Siegert, the corresponding condition on a wavefunction ψ(r) (see Footnote 1) is that far away from the scattering region

\[ \psi(\mathbf{r}) \sim \frac{e^{ikr}}{r}, \tag{1} \]

with the energy

\[ \varepsilon - \frac{i\Gamma}{2} = \frac{k^2}{2}, \]

where ε > 0 is the real resonance energy, and Γ > 0 its width. This yields a discrete set of resonant states characterized by being purely outgoing waves. States obeying the boundary condition (1) are frequently called Siegert or Gamow–Siegert states, and they diverge as r → ∞. See, for example, Hatano et al. [18] for a detailed description of resonant states.

Most computational methods in quantum mechanics work in terms of square integrable states, and thus cannot straightforwardly represent a resonance wavefunction. An elegant solution to this problem is the complex scaling method, where one uses complex spatial coordinates to suppress the exponential divergence. One thus solves for functions that obey the usual boundary conditions, ψ(r) → 0 for r → ∞. This also has the convenient advantage that the boundary conditions no longer depend on k. The method relies on the properties of analytic functions to transform the Hamiltonian into a non-Hermitian operator whose point spectrum consists exactly of that of the bound states along the negative real axis plus the complex resonance energies which have positive real part and negative imaginary part. The wavefunctions of bound as well as resonance states are square integrable analytic continuations of the original ones. These properties make the complex scaling method a powerful computational tool as it can make use of many existing methods which do not otherwise apply to unbounded scattering states.

Although the complex scaling method clearly works with any kind of particles in a finite system, here we explicitly assume that we are dealing with electrons temporarily trapped by simple potentials representing atoms or molecules. The electrons eventually tunnel out to a far-away region which we do not wish to represent explicitly in the calculation. We are thus dealing with the specific case of an open quantum system where we only have particles leaving the system.

3 Calculation of Resonances from Complex Scaling

The complex scaling method was initially developed by Aguilar, Balslev, Combes, and Simon [19–21], and is based on a scaling \( \mathbf{r}\to \mathbf{r}{e}^{i\theta } \) of the position variable in the Schrödinger equation. This is referred to as uniform complex scaling. Here we review uniform complex scaling in the simple case of independent particles. Most recent work is based on a later generalization called exterior complex scaling [22], which we consider later. The following is a rather informal description of complex scaling, focusing on a few important cases. More information can be found in any of the many existing reviews [23–27].

3.1 Formalism

Consider the standard independent-particle time-independent Schrödinger equation for a finite system:

\[ \widehat{H}\psi \left(\mathbf{r}\right)=\varepsilon\, \psi \left(\mathbf{r}\right). \]

The Hamiltonian is \( \widehat{H}=-\frac{1}{2}{\nabla}^2+v\left(\mathbf{r}\right) \), where \( v\left(\mathbf{r}\right) \) is some reasonably well-behaved potential which approaches zero as r → ∞. Formally, the potential has to be dilation (or dilatation) analytic [19], but the method has been applied successfully to potentials that are not, an example of which is the Stark effect [28–30]. For our informal review we only insist that it be analytic in relevant parts of the complex plane.

The spectrum of \( \widehat{H} \) consists of a negative point spectrum corresponding to the bound states, and the continuum ε ≥ 0. The goal of complex scaling is to identify resonances associated with positive energies somewhere within the continuum.

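The complex scaling operation is implemented by the operator \( {\widehat{R}}_{\theta } \) defined by

\[ {\widehat{R}}_{\theta}\psi \left(\mathbf{r}\right)={e}^{iN\theta /2}\psi \left(\mathbf{r}{e}^{i\theta}\right), \tag{4} \]

where N is the number of spatial coordinates on which the scaling is applied (thrice the number of particles in the 3D many-body case). The scaling angle θ is a fixed number formally supposed to lie within 0 ≤ θ ≤ π/4, although this depends on the analyticity of the potential. The scaling operation transforms the position and momentum operators as \( x\to x{e}^{i\theta } \) and \( \mathrm{d}/\mathrm{d}x\to {e}^{-i\theta}\mathrm{d}/\mathrm{d}x \), so that the Hamiltonian transforms to

\[ {\widehat{H}}_{\theta }={\widehat{R}}_{\theta}\widehat{H}{\widehat{R}}_{\theta}^{-1}=-\frac{1}{2}{e}^{-i2\theta }{\nabla}^2+{v}_{\theta}\left(\mathbf{r}\right), \]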

with

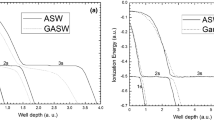

The transformation maps the potential to its analytic continuation on \( \mathbf{r}{e}^{i\theta } \) in the complex plane. The interesting property of \( {\widehat{H}}_{\theta } \) is how its spectrum and eigenstates are related to those of \( \widehat{H} \). First of all, \( {\widehat{H}}_{\theta } \) is non-Hermitian and therefore admits complex eigenvalues. The continuous spectrum “swings down” by an angle of 2θ as shown in Fig. 1. Meanwhile, the energies of any bound states remain unaffected. Finally, for sufficiently large θ, new eigenvalues materialize which are independent of further increase of θ and which are taken to represent resonances. Let us have a closer look at each of these three effects separately.

Fig. 1 Effect of complex scaling on the spectrum for the 1D potential \( v(x)=3\left({x}^2-2\right)\exp \left(-{x}^2/4\right) \). Bound-state eigenvalues (bold circles) are independent of θ while the continuous spectrum rotates by −2θ around the threshold 0. Because of the finite size of the simulation box, the numerically calculated unbound states (uncircled) do not fall exactly on the line arg z = −2θ. Resonances (thin circles) are resolved when θ is sufficiently large for them to segregate from the continuum states. Calculated using a uniform real-space grid from −18 to 18 a.u. with 250 points and a fourth-order finite-difference stencil for the Laplacian
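The behaviour shown in the figure can be reproduced qualitatively with a few lines of code. The following is a minimal sketch, not the setup used for the figure: it assumes a simple second-order (three-point) finite-difference Laplacian on 400 grid points instead of the fourth-order stencil, and the function names `potential` and `scaled_hamiltonian` are chosen for this example only.

```python
import numpy as np

def potential(z):
    """Model potential of Fig. 1, evaluated at (possibly complex) positions z."""
    return 3.0 * (z**2 - 2.0) * np.exp(-z**2 / 4.0)

def scaled_hamiltonian(theta, n=400, box=18.0):
    """Uniformly complex-scaled 1D Hamiltonian on a real grid (assumed 3-point stencil)."""
    x = np.linspace(-box, box, n)
    h = x[1] - x[0]
    lap = (np.diag(np.full(n, -2.0))
           + np.diag(np.ones(n - 1), 1)
           + np.diag(np.ones(n - 1), -1)) / h**2
    # Kinetic term picks up exp(-2i*theta); the potential is analytically continued.
    return -0.5 * np.exp(-2j * theta) * lap + np.diag(potential(x * np.exp(1j * theta)))

unscaled = np.linalg.eigvals(scaled_hamiltonian(0.0))
scaled = np.linalg.eigvals(scaled_hamiltonian(0.3))

# The lowest bound state is the eigenvalue with the smallest real part in both cases.
ground_unscaled = unscaled[np.argmin(unscaled.real)]
ground_scaled = scaled[np.argmin(scaled.real)]
print("ground state, theta = 0.0:", ground_unscaled)
print("ground state, theta = 0.3:", ground_scaled)
```

Plotting `scaled.real` against `scaled.imag` for a few angles should show the pattern described in the caption: bound states that stay put, a continuum that rotates, and isolated eigenvalues that detach from the continuum once θ is large enough. The small residual θ-dependence of the bound-state energy is a discretization effect.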

3.2 Bound States

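Suppose ϕ(r) and ψ(r) are square-integrable and reasonably well-behaved states. We consider first the scaling operation \( {\widehat{U}}_{\eta}\psi \left(\mathbf{r}\right)={e}^{N\eta /2}\psi \left(\mathbf{r}{e}^{\eta}\right) \), where η is a real number. This operation is easily seen to be unitary; for example, it preserves scalar products:

\[ \left\langle {\widehat{U}}_{\eta}\phi \Big|{\widehat{U}}_{\eta}\psi \right\rangle =\int {e}^{N\eta }{\phi}^{\ast}\left(\mathbf{r}{e}^{\eta}\right)\psi \left(\mathbf{r}{e}^{\eta}\right)\mathrm{d}\mathbf{r}=\int {\phi}^{\ast}\left({\mathbf{r}}^{\prime}\right)\psi \left({\mathbf{r}}^{\prime}\right)\mathrm{d}{\mathbf{r}}^{\prime }=\left\langle \phi |\psi \right\rangle, \]

where we used the substitution \( {\mathbf{r}}^{\prime }=\mathbf{r}{e}^{\eta } \). A real scaling therefore preserves matrix elements and eigenvalues.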

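The derivation of the complex scaling method starts with the unitarity of the real scaling, then considers the extension to the complex plane of the scaling parameter η. However, as we shall see, the situation becomes radically different when the scaling is complex. In order for the method to be correct, the scaling operation must retain some property resembling unitarity to make sure that observables do not arbitrarily change with the scaling parameter. The analytic continuations of functions defined originally on the real axis are not always within the Hilbert space (hence breaking unitarity). However, for suitable states and operators, the complex scaling operation corresponds simply to a change of integration path which preserves scalar products. Let us consider a matrix element of some local operator

\[ \left\langle \phi \left|\widehat{O}\right|\psi \right\rangle =\int {\phi}^{\ast}\left(\mathbf{r}\right)O\left(\mathbf{r}\right)\psi \left(\mathbf{r}\right)\mathrm{d}\mathbf{r}. \]

This integral is taken for each coordinate axis over all real numbers from −∞ to ∞. Imagine now that we liberate each position coordinate and allow it to take complex values. Then, instead, we take the integral over some complex path, such as the three-segment path in Fig. 2. If the diagonal segment is long enough (L → ∞ in the figure), and the integrand is analytic and sufficiently localized, then the integral along the vertical segments vanishes. Thus the integral over the diagonal \( z=x{e}^{i\theta } \) is independent of θ and equal to that along the real line:

\[ \int {\phi}^{\ast}\left(\mathbf{r}\right)O\left(\mathbf{r}\right)\psi \left(\mathbf{r}\right)\mathrm{d}\mathbf{r}={\int}_{C_{\theta }}\overline{\phi}\left(\mathbf{z}\right)O\left(\mathbf{z}\right)\psi \left(\mathbf{z}\right)\mathrm{d}\mathbf{z}, \]

where \( {C}_{\theta } \) denotes the rotated path \( \mathbf{z}=\mathbf{r}{e}^{i\theta } \) with real r, and \( \overline{\phi}\left(\mathbf{z}\right)={\left[\phi \left({\mathbf{z}}^{\ast}\right)\right]}^{\ast } \) is the analytic continuation of \( {\phi}^{\ast}\left(\mathbf{r}\right) \) away from the real axis.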

Fig. 2 Complex integration path with directions indicated by arrows. If the integrand is suitably localized and analytic on the integration path, the indicated path becomes equivalent to that over the real axis from −∞ to ∞ as L → ∞. This ensures that the unphysical complex scaling angle does not affect matrix elements or expectation values

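The substitution \( {\mathbf{r}}^{\prime }=\mathbf{r}{e}^{i\theta } \) then transforms the integral back so the integration variable is (unlike the integrand) real:

\[ \left\langle \phi \left|\widehat{O}\right|\psi \right\rangle =\int {\overline{\phi}}_{\theta}\left(\mathbf{r}\right){O}_{\theta}\left(\mathbf{r}\right){\psi}_{\theta}\left(\mathbf{r}\right)\mathrm{d}\mathbf{r}, \]

with

\[ {\psi}_{\theta}\left(\mathbf{r}\right)={e}^{iN\theta /2}\psi \left(\mathbf{r}{e}^{i\theta}\right),\kern1em {O}_{\theta}\left(\mathbf{r}\right)=O\left(\mathbf{r}{e}^{i\theta}\right), \]

\[ {\overline{\phi}}_{\theta}\left(\mathbf{r}\right)={e}^{iN\theta /2}{\left[\phi \left(\mathbf{r}{e}^{-i\theta}\right)\right]}^{\ast }={\left[{\widehat{R}}_{-\theta}\phi \left(\mathbf{r}\right)\right]}^{\ast }. \tag{12} \]

Note how (i) the complex prefactors of \( {e}^{iN\theta /2} \) from (4) serve to “absorb” exactly the volume element \( {e}^{iN\theta } \) produced by the variable substitution, and (ii) the left states or bras are effectively rotated by −θ. Furthermore, if the unscaled state ϕ(r) is real, the cumbersome notation for \( {\overline{\phi}}_{\theta}\left(\mathbf{r}\right) \) of (12) can be avoided:

\[ {\overline{\phi}}_{\theta}\left(\mathbf{r}\right)={\phi}_{\theta}\left(\mathbf{r}\right)={e}^{iN\theta /2}\phi \left(\mathbf{r}{e}^{i\theta}\right). \]

We can then calculate the matrix element without conjugating anything.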

What we have established is that the complex scaling operation corresponds to a change of integration path when calculating matrix elements. For states and operators that produce a sufficiently localized integrand and do not possess poles that interfere with the integration path, it preserves values of matrix elements. In particular this guarantees that observables or eigenvalues of bound states under complex scaling, at least for sufficiently small values of θ, are independent of θ.
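The path independence can be illustrated with a localized analytic integrand. The following is a minimal sketch in one dimension, assuming the Gaussian integrand exp(−z²) (whose integral along the real axis is √π) and a plain Riemann sum on a uniform grid; the helper name `rotated_integral` is an assumption made for this example.

```python
import numpy as np

def rotated_integral(f, theta, half_width=20.0, n=8001):
    """Integrate f along z = x*exp(i*theta), x real, including the volume element."""
    x = np.linspace(-half_width, half_width, n)
    h = x[1] - x[0]
    z = x * np.exp(1j * theta)
    # dz = exp(i*theta) dx is the (complex) volume element of the rotated path.
    return np.sum(f(z)) * h * np.exp(1j * theta)

def gaussian(z):
    return np.exp(-z**2)

values = [rotated_integral(gaussian, theta) for theta in (0.0, 0.3, 0.6)]
for theta, value in zip((0.0, 0.3, 0.6), values):
    print(theta, value)
```

All three angles give √π to numerical precision. For θ approaching π/4 the Gaussian stops decaying along the rotated path, which mirrors the restriction on the scaling angle mentioned earlier.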

3.3 Continuum States

The previous discussion does not apply to states that are not localized, such as continuum states. Let us consider the complex-scaled Schrödinger equation for a free particle in one dimension:

\[ -\frac{1}{2}{e}^{-i2\theta}\frac{{\mathrm{d}}^2}{\mathrm{d}{x}^2}{\psi}_{\theta }(x)={\varepsilon}_{\theta }{\psi}_{\theta }(x). \tag{15} \]

We immediately see that this is the same differential equation as the unscaled one, and thus has the usual set of solutions:

\[ {\psi}_{\theta }(x)=A{e}^{ikx}+B{e}^{-ikx}. \tag{16} \]

As per the standard procedure, let us say that the particle is confined to some finite box. We then require that \( {\psi}_{\theta }(x) \) be 0 on the boundaries, which quantizes k to a set of real positive numbers. Taking the limit of large boxes, we see that solutions exist for all k > 0. It follows that the energy \( {\varepsilon}_{\theta } \) in (15) must become complex according to

\[ {\varepsilon}_{\theta }={e}^{-i2\theta}\frac{k^2}{2}. \]

Evidently the spectrum has been rotated by an angle of −2θ into the fourth quadrant of the complex plane. Meanwhile, the solution wavefunctions for the free particle have the same form as without the complex scaling operation.
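The rotation of the free-particle spectrum is easy to verify on a grid. The following is a minimal sketch, assuming a particle in a box of half-width 18 a.u. discretized with a three-point finite-difference Laplacian (the grid size, box size, and the name `free_spectrum` are choices made for this example). Because the scaled Hamiltonian is then just exp(−2iθ) times a real symmetric positive-definite matrix, every eigenvalue lies exactly on the ray arg z = −2θ.

```python
import numpy as np

def free_spectrum(theta, n=200, box=18.0):
    """Eigenvalues of the complex-scaled free-particle Hamiltonian in a finite box."""
    x = np.linspace(-box, box, n)
    h = x[1] - x[0]
    lap = (np.diag(np.full(n, -2.0))
           + np.diag(np.ones(n - 1), 1)
           + np.diag(np.ones(n - 1), -1)) / h**2
    return np.linalg.eigvals(-0.5 * np.exp(-2j * theta) * lap)

theta = 0.4
energies = free_spectrum(theta)
angles = np.angle(energies)
print("expected angle:", -2 * theta)
print("min/max angle :", angles.min(), angles.max())
```

Once a potential is added, the box eigenvalues are perturbed and no longer sit exactly on the rotated ray.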

What, then, is so interesting about the complex-scaled solutions \( {\psi}_{\theta }(x) \)? Because they are not normalizable, and because their energy depends on the scaling angle θ, they are not of much use computationally. However, we can gain some insight by scaling them back to θ = 0 to obtain

\[ {\widehat{R}}_{-\theta }{\psi}_{\theta }(x)={e}^{-i\theta /2}\left[A{e}^{ikx{e}^{-i\theta }}+B{e}^{-ikx{e}^{-i\theta }}\right]. \]

For x → ∞ the right-going term diverges whereas the left-going one dies out. For x → −∞ it is the left-going one which survives. The solution \( {\psi}_{\theta }(x) \) to the complex-scaled problem therefore resembles an outgoing, exponentially diverging state. We see intuitively that the complex scaling operation may have something to say about the outgoing character of states. However, as mentioned, the states \( {\psi}_{\theta }(x) \) are not normalizable and their energies depend on θ. The main effect of the complex scaling operation was to move the continuous spectrum of the Hamiltonian away from the real axis, close to which we find the resonance eigenvalues as we see later.

If the system consists of a central, (almost) localized potential surrounded by vacuum, an unbound state still has the form (16) almost everywhere in space. Importantly and non-trivially, this also works with the Coulomb potential in spite of its long range. The complex scaling transformation still causes the continuous spectrum to rotate by exactly −2θ. In numerical representations this is only approximately true because of incompleteness of the basis and in particular finite simulation boxes as in Fig. 1.

We note here that the method cannot in general be combined with extended (periodic) systems, because complex scaling fundamentally works in terms of the asymptotic form of decaying functions. For example, a metal would possess occupied continuum states which do not decay at the end of the cell. This makes their properties depend on the scaling angle θ as we saw for free particles. However, from what we have seen so far, one could well imagine using complex scaling in some directions and not others – for example, to describe electrons escaping in the z direction from a surface which is periodic along x and y, or radially from a one-dimensional nanowire.

3.4 Resonant States

From standard scattering theory we know that resonances are associated with wavefunctions that diverge exponentially at increasing distances. If the resonance is generated by a short-range potential, the resonance wavefunction must far away equal or approach that of a free particle.

In one dimension the resonance wavefunction must therefore have the asymptotic form

\[ \psi (x)\sim {e}^{ikx}={e}^{ipx}{e}^{qx}\kern1em \left(x\to \infty \right), \]

where we have used the complex wavenumber k = p − iq with positive p (so the wave is outgoing) and positive q (so it diverges exponentially). Now apply the complex scaling transformation to this function:

\[ {\widehat{R}}_{\theta}\psi (x)\sim {e}^{i\theta /2}{e}^{i\left(p-iq\right)x{e}^{i\theta }}={e}^{i\theta /2}{e}^{i\left(p\cos \theta +q\sin \theta \right)x}{e}^{-\left(p\sin \theta -q\cos \theta \right)x}. \]

This function is square integrable if q < p tan θ. Physically we would expect a resonance peak to be located at a positive energy, and the resonance width to be much smaller than the resonance energy. The energy of this wave is \( {\left(p-iq\right)}^2/2=\left({p}^2-{q}^2-2ipq\right)/2 \), and we would thus expect p to be much greater than q for any resonance. Some intermediate value of θ therefore easily ensures that q < p tan θ, i.e., that the resonance wavefunction is square integrable.
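The condition q < p tan θ and the relation between the wavenumber and the resonance parameters can be collected in a few helper functions. This is a minimal sketch; the function names and the sample values p = 2 and q = 0.1 are assumptions chosen for illustration, and the width follows from identifying (p − iq)²/2 with ε − iΓ/2.

```python
import math

def resonance_parameters(p, q):
    """Resonance energy and width for k = p - i*q, from k^2/2 = energy - i*width/2."""
    energy = 0.5 * (p * p - q * q)
    width = 2.0 * p * q
    return energy, width

def decay_rate(p, q, theta):
    """Asymptotic decay constant of the scaled state exp(i*k*x*exp(i*theta)), x -> +inf.

    Positive values mean the scaled wavefunction is square integrable.
    """
    return p * math.sin(theta) - q * math.cos(theta)

def minimum_angle(p, q):
    """Smallest scaling angle above which the resonance becomes square integrable."""
    return math.atan2(q, p)

p, q = 2.0, 0.1
energy, width = resonance_parameters(p, q)
print("energy:", energy, "width:", width)
print("minimum angle:", minimum_angle(p, q))
print("decay rate at theta = 0.01:", decay_rate(p, q, 0.01))  # negative: still diverges
print("decay rate at theta = 0.30:", decay_rate(p, q, 0.30))  # positive: localized
```

Narrow resonances (q much smaller than p) are thus uncovered already at small angles, whereas broad ones require larger θ.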

We conclude from this that the Siegert wavefunction representing a resonant state indeed becomes square integrable under adequate complex scaling. This makes matrix elements with resonant states invariant to variations in θ, similarly to bound states, as long as the variation of θ does not make them unbounded (see Footnote 2).

The numerical convergence of resonance energies and widths is a non-trivial issue with complex scaling. When using a numerical representation such as a finite basis set, matrix elements are not perfectly independent of θ. For a given system it is standard practice to compare calculated resonance energies and widths over a range of different θ-values, looking for a stationary point or a cusp which, following the “complex virial theorem,” would best approximate the fully converged complex energy [32, 33].

3.5 Exterior Complex Scaling

We established previously that the complex scaling operation preserves scalar products of square integrable states because it corresponds to a change of integration contour of an analytic function. Suppose we want to calculate a resonance of a molecule in the Born–Oppenheimer approximation. The nuclear point charges cause poles in the Coulomb potential at each nuclear position. Uniform complex scaling does not work because of these poles. A solution to this problem is to change the integration contour to avoid the poles. From complex analysis we know that we could have chosen many other integration paths, corresponding to other definitions of the scaling operation \( {\widehat{R}}_{\theta } \), and those contours would equally well preserve scalar products as long as the integration contours have the same start and end points and do not enclose poles. This is the basis for exterior complex scaling which was proposed by Simon [22] to solve exactly this problem. Another method is to use the analytic continuation of matrix elements within a basis set representation [34–36], which effectively approximates the exterior complex scaling approach [37].

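We thus complex-scale the exterior of a region containing all the point charges by an operation, here written in one dimension with the scaling onset at \( \left|x\right|={x}_0 \), of the form

\[ {\widehat{R}}_{\theta}\psi (x)=\left\{\begin{array}{ll}\psi (x), & \left|x\right|\le {x}_0\\ {}\psi \left(\pm {x}_0+\left(x\mp {x}_0\right){e}^{i\theta}\right), & \pm x>{x}_0.\end{array}\right. \tag{21} \]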

The uniform and exterior scaling integration contours are shown in Fig. 3. The important condition for exterior scaling is that the scaling retains the asymptotic form \( x\to x{e}^{i\theta } \), which ensures outgoing-wave boundary conditions. Because the integration contour is not differentiable, neither is an exterior complex-scaled function that corresponds to a smooth original function. Recall from uniform scaling that we needed to multiply by \( {e}^{i\theta /2} \) to “absorb” the now complex volume element when integrating. We have not done anything to the volume element in (21), and therefore we need to apply a factor of \( {e}^{i\theta } \) when calculating integrals over the complex segments. Alternatively, most authors define the exterior scaling operation so the wavefunction in the exterior segments includes the complex prefactor; the functions then become discontinuous [38], but we do not need to consider the volume element when integrating. Here we have followed the original convention of Simon [22] where the function is always continuous. As long as the discontinuities of the complex-scaled functions or their derivatives are well incorporated into the numerical basis set used to represent them, they are harmless.

Fig. 3 Possible complex integration contours for uniform, exterior, and smooth exterior complex scaling with θ = 0.6. The contours must be continuously deformable (without crossing any poles) back to the real axis in order for them to be equivalent to a real integration. Note that, as per basic complex analysis, the contours themselves do not have to be differentiable; it is sufficient that the integrand be analytic

If for numerical reasons we want smooth functions everywhere, we can equally well choose a smooth integration contour. This is called smooth exterior complex scaling. The scaling operator here acts by applying a smooth function x → z = F(x) to the position coordinate, with \( F(x)\sim x{e}^{i\theta } \) for large |x|.
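The three kinds of contour can be written down explicitly. The following is a minimal sketch: the uniform and sharp exterior contours follow directly from the text, whereas the particular smooth contour (obtained by integrating a tanh-shaped switching function for F′) is only one possible choice, assumed here for illustration, as are the onset and width parameters and all function names.

```python
import numpy as np

def uniform_contour(x, theta):
    """Uniform scaling: every point is rotated into the complex plane."""
    return np.asarray(x, dtype=float) * np.exp(1j * theta)

def exterior_contour(x, theta, onset):
    """Sharp exterior scaling: real for |x| <= onset, rotated about +-onset outside."""
    x = np.asarray(x, dtype=float)
    edge = np.sign(x) * onset
    return np.where(np.abs(x) > onset, edge + (x - edge) * np.exp(1j * theta), x)

def smooth_exterior_contour(x, theta, onset, width=1.0):
    """Smooth exterior scaling with the assumed choice
    F'(x) = 1 + (exp(i*theta) - 1) * (1 + tanh((|x| - onset) / width)) / 2."""
    x = np.asarray(x, dtype=float)
    r = np.abs(x)

    def log_cosh(u):
        # Overflow-safe log(cosh(u)).
        return np.logaddexp(u, -u) - np.log(2.0)

    # Integral of the switching function from 0 to r.
    switched = 0.5 * r + 0.5 * width * (log_cosh((r - onset) / width)
                                        - log_cosh(onset / width))
    return x + (np.exp(1j * theta) - 1.0) * np.sign(x) * switched

theta, onset = 0.6, 10.0
for x in (1.0, 9.0, 11.0, 1000.0):
    print(x, exterior_contour(x, theta, onset), smooth_exterior_contour(x, theta, onset))
```

Both exterior contours stay on the real axis well inside the onset and approach the uniformly rotated ray far outside, up to a constant offset that becomes negligible relative to |x|.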

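Once again we have the choice of where to include the smoothly varying volume element: either in the definition of the scaling operation, or explicitly when integrating. This yields different expressions which are given, for example, by Moiseyev [39]. If we include the volume element in the scaling operation, it reads

\[ {\widehat{R}}_F\psi (x)={\left[{F}^{\prime }(x)\right]}^{1/2}\psi \left(F(x)\right). \]

The Hamiltonian subject to this transformation is

\[ {\widehat{H}}_F=-\frac{1}{2}{\left[{F}^{\prime }(x)\right]}^{-2}\frac{{\mathrm{d}}^2}{\mathrm{d}{x}^2}+{V}_0^F(x)+{V}_1^F(x)\frac{\mathrm{d}}{\mathrm{d}x}+v\left(F(x)\right), \tag{23} \]

where

\[ {V}_0^F(x)=\frac{F^{\prime \prime \prime }(x)}{4{\left[{F}^{\prime }(x)\right]}^3}-\frac{5{\left[{F}^{\prime \prime }(x)\right]}^2}{8{\left[{F}^{\prime }(x)\right]}^4},\kern1em {V}_1^F(x)=\frac{F^{\prime \prime }(x)}{{\left[{F}^{\prime }(x)\right]}^3}. \]

An example contour is shown in Fig. 3. The contour defined by F can be quite general, but one would choose F(x) = x within the interior region such that \( {V}_0^F(x)={V}_1^F(x)=0 \) and \( {\left[{F}^{\prime }(x)\right]}^{-2}=1 \). Note how (23) then reduces to the usual Schrödinger equation as it should. With this formulation we do not need to mind any discontinuities of wavefunctions, their derivatives, or the Jacobian, and standard methods such as finite-difference stencils can be applied straightforwardly as long as F is adequately differentiable.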

How do the different types of complex scaling discussed above compare computationally? The basic equations of exterior and smooth exterior complex scaling are clearly more complicated than those for uniform scaling. However, as mentioned, the purpose of exterior complex scaling is that it admits potentials that are not analytic within the interior region. This includes any strictly localized function such as most atomic pseudopotentials, which is a major advantage for advanced self-consistent field methods such as DFT. Another advantage of exterior scaling is that, within the interior region, quantities such as the density retain their true physical values rather than a difficult-to-interpret complex continuation which is also numerically difficult to rotate back to real space.

For real-space methods, an advantage of smooth exterior complex scaling is that one can transparently use finite-difference stencils as per (23). Standard finite-difference stencils, representing, for instance, the kinetic operator, do not work on a non-differentiable contour, although one can derive special stencils for this case [40]. Basis sets should also take the discontinuity into account. Finite-element representations involving some kind of basis are commonly used; see, for example, Rescigno et al. [41] and Scrinzi and Elander [42]. Rescigno and co-workers have reported that finite-element calculations with a basis set which properly takes the discontinuity of “sharp” exterior scaling into account require fewer functions than a purely analytic basis set using smooth scaling [41]. A more detailed discussion of the numerical representations and basis sets can be found in work by McCurdy et al. [24], who also argue that grid-based methods enjoy a similar advantage with sharp exterior complex scaling, provided the scaling onset is exactly on a grid point (see Footnote 3).

3.6 Example: Resonance in One Dimension

Let us perform an analytic calculation of a resonance using complex scaling to see how exactly the resonance emerges. We consider a barrier formed by the piece-wise constant potential

seen in Fig. 4. Both Gellene [16] and Simons [43] have considered this problem previously. As the rectangular barrier is not an analytic function, we cannot use uniform complex scaling. However, nothing stops us from using exterior scaling, with the scaling transformation starting somewhere outside the barrier at x = c > b.

We thus use the contour

For x ≥ c the Hamiltonian is therefore \( -\frac{1}{2}{\mathrm{e}}^{-i2\theta}\frac{{\mathrm{d}}^2}{\mathrm{d}{x}^2} \). This gives us four regions, within each of which the wavefunction must be a solution to the Schrödinger equation for a free particle but with different local momenta \( {k}_1 \), \( {k}_2 \), and \( {k}_3^{\theta } \), which may be complex:
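A piecewise form consistent with the coefficient names and boundary conditions used in the following paragraphs can be written as below. This is a reconstruction from the surrounding text rather than a quotation; in particular, which of C and D multiplies which exponential in the barrier region is a convention assumed here.

\[
\begin{aligned}
{\psi}_1(x) &= A\sin \left({k}_1x\right), & 0\le x \lt a,\\
{\psi}_2(x) &= C{\mathrm{e}}^{i{k}_2x}+D{\mathrm{e}}^{-i{k}_2x}, & a\le x \lt b,\\
{\psi}_3(x) &= F{\mathrm{e}}^{i{k}_1x}+G{\mathrm{e}}^{-i{k}_1x}, & b\le x \lt c,\\
{\psi}_4^{\theta }(x) &= I{\mathrm{e}}^{i{k}_3^{\theta }x}+J{\mathrm{e}}^{-i{k}_3^{\theta }x}, & x\ge c.
\end{aligned}
\]

With this choice F and I multiply the outgoing components and G and J the incoming ones, in line with the discussion of these coefficients further on.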

The expression for \( {\psi}_1(x) \) has been chosen to fulfill the boundary condition \( {\psi}_1(0)=0 \), and A eventually determines the normalization of the state. To relate the three wavenumbers \( {k}_1 \), \( {k}_2 \), and \( {k}_3^{\theta } \), we note that applying the Hamiltonian to the wavefunction must yield the same energy eigenvalue \( {\varepsilon}_{\theta }={k}_1^2/2={k}_2^2/2+{V}_0={\left({\mathrm{e}}^{-i\theta }{k}_3^{\theta}\right)}^2/2 \) within each segment. From this we may take \( {k}_3^{\theta }={k}_1{\mathrm{e}}^{i\theta } \).

The segments must be joined continuously and differentiably, i.e., \( {\psi}_1(a)={\psi}_2(a) \) and \( {\psi}_1^{\prime }(a)={\psi}_2^{\prime }(a) \) at x = a. Likewise \( {\psi}_2(b)={\psi}_3(b) \) and \( {\psi}_2^{\prime }(b)={\psi}_3^{\prime }(b) \). At x = c, the onset of the scaled exterior region, the derivative \( {\psi}_3^{\prime }(c) \) must match the scaled derivative \( {\psi}_4^{\theta \prime }(c) \), so the derivative becomes discontinuous [22]:

(We have here, for esthetic reasons, chosen not to include the square root of the volume element or Jacobian in the definition of \( {\psi}_4^{\theta }(x) \); if we had, the function itself would have been discontinuous as discussed in Sect. 3.5.)

We thus have two equations at each of the points a, b, and c, for a total of six equations. A seventh equation follows from the requirement that the function be square integrable. These seven equations determine the six unknown coefficients C, D, F, G, I, and J, and further quantize the energy so that we get solutions only for specific wavenumbers \( {k}_1 \), \( {k}_2 \), and \( {k}_3^{\theta } \).

Gellene [16] provides expressions for the coefficients C, D, F, and G in terms of A so that \( {\psi}_1(x) \), \( {\psi}_2(x) \), and \( {\psi}_3(x) \) match at the points a and b. The resonances are then found by considering the phase shift between the outgoing and incoming coefficients F and G of \( {\psi}_3(x) \). However, this is very different in our case using complex scaling; here, the coefficients I and J of \( {\psi}_4^{\theta }(x) \) must ensure square integrability.

Physically, we would expect of a resonance that its energy is much greater than its width. The wavenumber k 1 then has real and imaginary parts k 1 = p − iq such that p ≫ q. The wavefunction can thus be written as

For scaling angles θ not too close to zero, the first term converges whereas the second diverges as x → ∞, and so we conclude that J = 0. Relating the right and left values and derivatives of \( {\psi}_3(x) \) and \( {\psi}_4^{\theta }(x) \) at x = c we get

and it immediately follows that G = 0, i.e., there is no incoming wave component. This is very different from the Hermitian treatment demonstrated by Gellene which yields F = G *, exactly balancing the outgoing and incoming flux. We see that, as previously discussed, the square integrability requirement of the complex-scaled solution ensures that waves are purely outgoing. In a simple model we could just as easily have forgotten everything about complex scaling and set G = 0 immediately. However, in a numerical calculation things are not so simple, and we have to rely on the complex scaling transformation to ensure square integrability and to extract the resonant states in a tractable form.

In a more complicated potential generated by multiple atoms, the situation would be similar sufficiently far away from the system. The asymptotic form of the wavefunctions may differ slightly because of long-range interactions such as the Coulomb interaction, but this does not prevent the exponentially localizing effect of the complex scaling operation from functioning.

However, let us get back to the determination of the resonance eigenvalues. The requirement that G = 0 allows us to proceed, linking F, D, and C by means of the differentiability and continuity requirements. Once all coefficients are eliminated, the condition for resonance is

For any energy ε − iΓ/2, the wavenumbers k 1 and k 2 are uniquely determined. The solutions can then be determined numerically. The three complex resonance energies closest to 0 are given by the real parts ε = 0.421, 1.65, 3.57, and half-widths Γ/2 = 0.00138, 0.0189, 0.138. Figure 5 shows the corresponding resonance wavefunctions. The eigenvalues slightly disagree with those by Gellene who works effectively on the real axis. This is because the two methods are different: With complex scaling we find an eigenvalue in the complex plane which corresponds exactly to an outgoing wave. Working on the real axis, we would find the real energy which responds most strongly to that eigenvalue. However, as the complex eigenvalue gets further away from the real axis, location and width soon begin to differ.
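The numerical determination of a resonance energy can be sketched as follows. The snippet imposes the purely outgoing condition G = 0 directly, eliminates the remaining coefficients by matching values and derivatives at a and b, and locates a complex root with Newton's method. The barrier parameters `a`, `b`, `V0`, the starting guess, and the function names are hypothetical; they are not the parameters behind the values quoted above, so the output is not expected to reproduce those numbers. For convenience the barrier solution is written with \( \kappa =\sqrt{2\left({V}_0-\varepsilon \right)} \) instead of \( {k}_2 \).

```python
import numpy as np

def mismatch(E, a, b, V0):
    """Matching condition f(E) = 0 for a purely outgoing wave beyond the barrier.

    Region 1 (0 <= x < a): psi = A sin(k1 x), V = 0, hard wall at x = 0.
    Region 2 (a <= x < b): psi = C exp(kappa (x - b)) + D exp(-kappa (x - b)), V = V0.
    Region 3 (x >= b):     psi = F exp(i k1 x), i.e. G = 0.
    """
    E = complex(E)
    k1 = np.sqrt(2.0 * E)
    kappa = np.sqrt(2.0 * (V0 - E))
    L = b - a
    # C and D are fixed (up to a common factor) by psi'/psi = i k1 at x = b.
    C = kappa + 1j * k1
    D = kappa - 1j * k1
    psi2_a = C * np.exp(-kappa * L) + D * np.exp(kappa * L)
    dpsi2_a = kappa * (C * np.exp(-kappa * L) - D * np.exp(kappa * L))
    # Continuity of value and derivative at x = a, with A eliminated.
    return dpsi2_a * np.sin(k1 * a) - k1 * np.cos(k1 * a) * psi2_a

def find_resonance(E_guess, a, b, V0, h=1e-6, tol=1e-12, max_iter=50):
    """Newton iteration in the complex energy plane with a finite-difference derivative."""
    E = complex(E_guess)
    for _ in range(max_iter):
        f = mismatch(E, a, b, V0)
        df = (mismatch(E + h, a, b, V0) - mismatch(E - h, a, b, V0)) / (2.0 * h)
        step = f / df
        E -= step
        if abs(step) < tol:
            break
    return E

# Hypothetical barrier; the guess 0.9 is a rough bound-state estimate for a thick barrier.
a, b, V0 = 2.0, 3.0, 4.0
E_res = find_resonance(0.9, a, b, V0)
print("resonance position:", E_res.real, " half-width:", -E_res.imag)
```

The root has a small negative imaginary part, which is the half-width Γ/2. In a realistic calculation no such closed-form matching condition is available, which is why the complex-scaled eigenvalue problem is solved instead.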

4 Density Functional Resonance Theory

As the complex scaling formalism is based on the many-particle Schrödinger equation, the method inherits the same exponential computational cost with respect to the number of particles. The method in the original form is therefore practical only for systems with very few particles, such as small atoms or molecules, using, for example, correlated basis sets [44, 45]. However, larger systems require more scalable computational methods, of which many have been investigated. Of particular interest are self-consistent field methods such as Hartree–Fock [46], post-Hartree–Fock methods [47, 48], and DFT [49, 50]. DFT as always has the drawback that it relies on a complicated formalism including an approximation of the exchange and correlation effects which is difficult to control, but its inarguable performance advantages nevertheless make it more than worthy of consideration. Below we describe the extension of DFT with complex scaling.

4.1 Complex Scaling and DFT

DFT is based on the minimization of a functional of the real electron density. The minimum of the functional and the corresponding electron density are the ground-state energy and electron density [1, 3]. For practical calculations one uses a set of single-particle states or Kohn–Sham states to facilitate evaluation of the kinetic part of the functional. The Kohn–Sham energy functional contains the following contributions: the kinetic energy, the Hartree energy, the exchange–correlation (XC) energy, and the energy from a system-dependent external potential. The kinetic energy functional depends explicitly on the Kohn–Sham wavefunctions whereas the others depend on them only through the density. Either way, all the terms can be understood as sums of matrix elements of operators. We know from Sect. 3.2 how the complex scaling operation conserves matrix elements of states that are spatially localized, provided that the operators are analytic. We can therefore reasonably expect complex scaling to be made to work within DFT, once we know how each term in the energy functional scales. The combination has been dubbed density functional resonance theory (DFRT) [50].

One would thus propose a complex-valued energy functional

with the complex-scaled density

where \( {f}_n \) are occupation numbers, and N the number of dimensions in which the coordinates are complex-scaled. In (38) the kinetic and external contributions are complex-scaled as normal. In the Hartree energy, \( {\rho}_{\theta}\left(\mathbf{r}\right) \) denotes the complex-scaled charge density, which is the electron density \( {n}_{\theta}\left(\mathbf{r}\right) \) plus any other contributions such as pseudopotential charges (whose complex-scaled form is uniquely determined by requiring that their Hartree potential scales as normal). The Hartree energy itself scales as \( {E}_{\mathrm{H}}^{\theta}\left[{n}_{\theta}\right]={\mathrm{e}}^{-i\theta }{E}_{\mathrm{H}}\left[{n}_{\theta}\right] \), i.e., the standard Hartree functional is applied to the complex density, with the factor \( {\mathrm{e}}^{-i\theta } \) appearing because of the 1/r kernel. We discuss the complex XC energy functional \( {E}_{\mathrm{xc}}^{\theta}\left[{n}_{\theta}\right] \) later.

Because the energy functional (38) is complex, “minimizing” it does not strictly make sense. Nevertheless, the lowest-energy resonance is obtainable as a stationary point of the complex energy functional [51]. An equation for the stationary point can, as normal, be obtained by taking the derivative with respect to the wavefunctions plus a set of Lagrange multipliers which ensure normalization. This yields the complex-scaled Kohn–Sham equations

for \( {\psi}_{\theta n}\left(\mathbf{r}\right) \) and \( {\varepsilon}_{\theta n} \), where we have taken the derivative with respect to the left states \( {\overline{\psi}}_{\theta n}\left(\mathbf{r}\right) \). If the unscaled Hamiltonian is real, the states can be chosen to be real so that \( {\overline{\psi}}_{\theta n}\left(\mathbf{r}\right)={\psi}_{\theta n}\left(\mathbf{r}\right) \). In general, however, we could equally well have derived a Hamiltonian for the left states \( {\overline{\psi}}_{\theta n}\left(\mathbf{r}\right) \).

In the Kohn–Sham equations (40) we have introduced the effective potential

defined as the density-derivatives of terms in the energy functional. The Hartree potential is

which allows the potential to be determined from the charge density by solving a complex Poisson problem using standard techniques. What remains to be discussed now is the XC functional.

4.2 Complex Scaling of Exchange and Correlation

The first DFRT calculations were carried out in a one-dimensional model potential with two electrons in the same (singlet) state [50]. The method was demonstrated using the exact KS potential, which in this case is

along with exact exchange (EXX) which, in this case, simply cancels out half the Coulomb energy. However, for systems with more particles, and indeed for realistic numerical calculations in the style of modern DFT software, the XC functional would have to be one of the many commonly used approximations. For simplicity we ignore any notion of spin below. The simplest functional is the local density approximation (LDA), the complex scaling of which was studied by Larsen et al. [49]. The first question is whether the functional is analytic. The exchange energy is given by

where the fractional power \( {n}^{4/3} \) is three-valued on the complex numbers and we must mind the branch cuts. Following the arguments of Sect. 3.2 for handling the change in complex contour, the integral scales as follows as long as we do not run into a branch cut:

The complex-scaled XC potential is naturally defined as

Taking the derivative with respect to n θ (r) we get the exchange potential

i.e., the expression is consistent with analytically continuing the expression for the unscaled potential.

As already noted, the expressions are three-valued because of the fractional power. In Larsen et al. [49] this was resolved by “stitching” the potential from the three branches of the cube root: at the origin, the potential must be real as the spatial coordinate is real. Further away, whenever the cube root encounters a branch cut, one of the other branches is chosen to restore analyticity. This procedure is illustrated in Fig. 6.

Fig. 6 “Stitching” branches of the cube root for the LDA exchange potential. The procedure starts at x = 0 where we know that the potential must be real. When the density takes the value of a branch cut of the cube root (indicated by arrows), the function must switch to a different branch to retain analyticity. The stitched function, indicated by the shaded gray band, is analytic everywhere and always follows one of the three branches of the cube root. In this example the density is a Gaussian function. From Larsen et al. [49]
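The stitching idea can be illustrated with a short Python sketch. It is not the implementation of Larsen et al. [49]; it simply follows the cube root continuously along a one-dimensional grid by choosing, at each point, the branch closest to the value at the previous point. The Gaussian model density continued to \( x{\mathrm{e}}^{i\theta } \), the function name `stitched_cube_root`, and the omission of the overall complex-scaling phase factors are assumptions made for illustration.

```python
import numpy as np

def stitched_cube_root(values):
    """Continuous cube root along a path, starting from the principal root."""
    omega = np.exp(2j * np.pi / 3.0)      # primitive third root of unity
    roots = np.empty(len(values), dtype=complex)
    roots[0] = complex(values[0]) ** (1.0 / 3.0)
    for i in range(1, len(values)):
        principal = complex(values[i]) ** (1.0 / 3.0)
        candidates = principal * omega ** np.arange(3)
        # Pick the branch closest to the previous point to stay analytic.
        roots[i] = candidates[np.argmin(np.abs(candidates - roots[i - 1]))]
    return roots

theta = 0.5                                # illustrative scaling angle
x = np.linspace(0.0, 3.0, 601)
density = np.exp(-(x * np.exp(1j * theta)) ** 2)   # Gaussian continued to the contour

stitched = stitched_cube_root(density)
principal_only = density ** (1.0 / 3.0)    # jumps where the branch cut is crossed

# LDA exchange potential built from the stitched root (phase prefactors omitted).
v_x = -(3.0 / np.pi) ** (1.0 / 3.0) * stitched
print(np.max(np.abs(stitched - principal_only)))
```

For a Gaussian the analytic cube root is known in closed form, which makes it easy to check that the stitched function follows it while the principal branch alone does not. The nearest-branch rule relies on the grid being fine enough that the function changes little between neighbouring points.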

Following the Perdew–Wang parametrization of the LDA correlation functional [52], the correlation potential is given by

where

and \( {r}_s \) is the Wigner–Seitz radius, i.e., \( {r}_s\left(\mathbf{r}\right)={\left[3/\left(4\pi n\left(\mathbf{r}\right)\right)\right]}^{1/3} \). The complex logarithm can be stitched quite analogously to the cube root. Other XC functionals can be stitched similarly, provided that they do not contain poles that get in the way of the integration contour. With exterior complex scaling we avoid scaling the regions of space where most of the action happens, potentially avoiding these problems. We mention a recent time-dependent study [53] which uses smooth exterior complex scaling with the LB94 [54] XC model potential for spin σ:

with

This expression also has several issues with analyticity as it involves both division and fractional powers. In Telnov et al. [53] the exterior scaling contour was probably chosen so as to avoid these, but unfortunately the issue was not mentioned.

4.3 Resonance Lifetimes in DFRT

In this section we present a few results from DFRT on physical systems. Figure 7 shows the ionization rate of a helium atom in an electric field as a function of field strength calculated with different methods: LDA, EXX (Hartree–Fock), ADK [55], and an accurate correlated-electron calculation by Scrinzi [45].

Fig. 7 Ionization rates of the helium atom in static electric fields from different methods. The accuracy at low field strengths is determined by how well the XC functional predicts the energy of the highest occupied orbital, which LDA is known to greatly overestimate. From Larsen et al. [49]

ADK is a simple approximation which is correct in the limit of weak fields. The ionization potential of the atom entirely determines the form of the curve in this limit. Precisely because low-field asymptotics are determined by the value of the ionization potential, the utility of a functional in this limit is directly linked to the precision with which it estimates the ionization potential, i.e., which energy it assigns to the highest occupied state.

LDA is well known to overestimate this energy, and therefore yields ionization rates that are too high at low fields. This problem is attributed to the wrong asymptotic decay of the LDA potential [54]. Meanwhile, Hartree–Fock is known to produce accurate orbital energies, and the decay of the exact exchange potential has the correct asymptotic form. EXX also yields results that are close to the reference by Scrinzi. This all suggests that a good XC functional for DFRT resonance lifetime calculations is one retaining the correct asymptotic form of the potential, such as the previously mentioned LB94 functional.

4.4 Time-Dependence in Complex Scaling

In this section we consider the extension of complex scaling to time-dependent simulations. Most obviously, one could simulate the dynamics of a system whose initial state is derived from a resonance. However, the method has been found useful for another practical reason, namely that complex scaling can be used to avoid the effects of waves reflecting from the boundaries. An early approach by Parker and McCurdy [56] showed that a complex basis set, with properties closely related to the complex scaling method, reduced the number of basis functions needed to properly represent a Gaussian wave packet under time evolution. The authors found that the representation avoided reflection effects produced by incompleteness of the basis sets as the wave packet moved away from the central region.

Exterior complex scaling is now widely used as a practical absorber to prevent the wave reflections caused by the finite size of the simulation box. Details of its use in this context are given in Sect. 5.6.

Let us go back to the basic question of how to time evolve complex-scaled states. Bengtsson and co-workers [57, 58] have considered this problem in detail. The time evolution of a state vector and its corresponding functional (or bra) are determined by

We apply the complex rotation operator and get

A general state \( {\psi}_{\theta}\left(\mathbf{r}t\right) \) can be time-evolved according to its expansion in eigenstates. If \( {\phi}_{\theta}^0\left(\mathbf{r}\right) \) is an eigenstate with energy \( {\varepsilon}_{\theta } \), then

If, further, the eigenstate represents a resonance, so that its energy has a negative imaginary part, \( {\phi}_{\theta}\left(\mathbf{r}t\right) \) decays exponentially while everywhere maintaining its shape. To calculate a general expectation value after a certain time, we would, according to (10), need to apply the left state \( {\overline{\psi}}_{\theta}\left(\mathbf{r}t\right)={\left[{\psi}_{-\theta}\left(\mathbf{r}t\right)\right]}^{*} \) as per (12). The left state can be time evolved using (54) with −θ. The Hamiltonian \( {\widehat{H}}_{-\theta } \) is the conjugate of \( {\widehat{H}}_{\theta } \), so all eigenvalues are likewise conjugated. If \( {\psi}_{\theta}\left(\mathbf{r}t\right) \) contains exponentially decaying components, the corresponding components of \( {\psi}_{-\theta}\left(\mathbf{r}t\right) \) exponentially increase at the same rate (one could equivalently say that they propagate backward in time [59]). In principle the increase of the left state would be cancelled by the decay of the right so that the norm, calculated using both left and right states, is time independent, but any numerical error accumulates over the course of the time evolution and eventually causes the procedure to break down.
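The bookkeeping of decaying right states and growing left states can be made concrete with a few lines of Python. The sketch below uses the narrowest resonance quoted in Sect. 3.6, ε − iΓ/2 = 0.421 − 0.00138i, and tracks only the time-dependent phase factors of a single eigenstate; the variable and function names are illustrative, and the spatial part of the states is left out.

```python
import numpy as np

eps = 0.421 - 0.00138j            # resonance energy eps - i*Gamma/2 from Sect. 3.6
gamma = -2.0 * eps.imag           # full width Gamma
lifetime = 1.0 / gamma            # time after which the right-state norm drops by 1/e

def right_factor(t):
    """Time-dependent factor of the right eigenstate of H_theta."""
    return np.exp(-1j * eps * t)

def left_factor(t):
    """Factor of psi_{-theta}: H_{-theta} has the complex-conjugate eigenvalue."""
    return np.exp(-1j * np.conj(eps) * t)

t = lifetime
survival = abs(right_factor(t)) ** 2        # decays as exp(-Gamma * t)
growth = abs(left_factor(t)) ** 2           # grows as exp(+Gamma * t)
# The left state enters as the complex conjugate of psi_{-theta}.
c_norm = np.conj(left_factor(t)) * right_factor(t)

print(lifetime, survival, growth, c_norm)
```

The product of left and right factors stays equal to one at all times, whereas each factor on its own changes exponentially. In floating-point arithmetic the growing factor amplifies errors, which is the breakdown mechanism described above.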

Although Bengtsson and co-workers have demonstrated that a complex time propagation path can be used to stabilize the time evolution [58], most applications of complex scaling with time evolution have been handled differently. The typical approach is to use exterior complex scaling and time evolve only the right states, then calculate all physical quantities using only the right states although this in general is not formally justified. This approach is discussed further in Sect. 5.6.

5 Open Boundary Conditions

In the previous sections we showed how it is possible to capture intrinsically time-dependent properties such as the lifetime of a resonance using a static, time-independent approach. Now we turn instead to the class of problems where the explicit time-dependence must be taken into account. As we see, the concepts introduced in the previous sections reemerge in the description of physical processes where the total number of particles is no longer a conserved quantity. In particular, insistence on describing an infinitely extended problem in a bounded domain naturally results in dynamics governed by a non-Hermitian Hamiltonian.

Let us divide space into two parts as in Fig. 8 where we have a bounded region we call A and its complement B. We want to solve the equations of motion in A without having to describe explicitly the environment in B. In other words, the problem we have is finding the appropriate boundary conditions for the equations in A, such that the localized solution Ψ A (t) is equal to the full solution Ψ(t) evaluated in A at all times t.

The class of processes which can be described by the scheme in Fig. 8 includes all the scattering problems where electrons enter A from one side and escape after having interacted with the system. This encompasses, for instance, electron diffraction or molecular transport. It also includes scattering problems where electrons are scattered by other kinds of particles such as photons or protons, thus leading to photoionization or proton impact ionization. This last class of processes is sometimes called half-scattering processes because, from the point of view of the electron, the scattering happens with another kind of particle. The main difference between scattering and half-scattering processes is that the boundary conditions for describing the half process are simpler because there is no need to inject charge but only to absorb it. We must, however, note that if nonlinear effects are dominant, for instance when strong laser fields are involved, this distinction is less clear and one may also need to account for incoming electrons for half-scattering problems.

Below we review some of the most notable methods in the literature that have been employed to address this problem. We anticipate that, in all the approaches we discuss, the boundary conditions are implemented by modifying the Hamiltonian with the addition of a complex term that explicitly breaks Hermiticity.

5.1 Transparent Boundary Conditions Using Green Functions

Transparent boundary conditions include, by definition, all boundary conditions that allow an exact solution of the open boundary problem. As such, they allow electrons to move back and forth between A and B without reflection. We examine below the class of boundary conditions that can be defined in terms of Green functions. This is not the only possible solution, and other instances of transparent boundaries can be constructed, for example, by using time-dependent exterior complex scaling or split propagation schemes as we show in Sects. 5.6 and 6.3, respectively. So-called decimation techniques have also been employed to describe transparent boundaries; see, for instance, García-Moliner and Flores [60] and Kudrnovský et al. [61].

Green function boundary conditions are based on the idea of matching the inner solution Ψ A of the Schrödinger equation with the outer one Ψ B expressed in terms of Green functions. Underlying this strategy is the hypothesis that the Hamiltonian describing the system in B is easier to handle than the one describing the system in A. In general, the problem of finding the Green function for an arbitrary system is hard to solve. However, including in A most of the atomic and molecular structure leaves us in B with a problem which, in many cases, can be easily solved.

The simplest case consists of choosing B to represent empty space, and the method then lends itself to the description of scattering or ionization [62, 63]. On a more advanced level, one may choose B to represent a bulk system and, in conjunction with a time-dependent potential, create a base model for electron transport [64, 65]. Alternatively, by mixing both bulk and empty space Green functions, the framework can be adapted to the description of ionization from surfaces [66, 67].

The approach is adaptable to a large variety of situations. This versatility comes at a price, however: discretizing the otherwise exact equations often leads to computationally demanding implementations with limited application. On the practical level, either one introduces an approximation which affects the quality of the results, or one just uses a simple time propagation of a full-dimensional system, which represents a challenging task [68].

In spite of the technical limitations, the approach provides a fundamental and illustrative description of the open boundary problem. Below we discuss two of the most notable derivations present in the literature.

5.2 Time-Dependent Embedding

The original Green function embedding was developed in the context of surface and solid state physics for the static Schrödinger equation by Inglesfield [69]. The same author subsequently extended it to the time-dependent case [67, 70], but similar derivations had been proposed earlier in different fields, for instance, to describe the interaction of a strong laser with atoms in Boucke et al. [62] and Ermolaev et al. [63], and for electron transport in Hellums and Frensley [64].

Below we introduce the theory following an approach similar to the one used to describe molecular transport with TDDFT by Kurth [65] (see footnote 4). We first restrict ourselves to the single-electron case and then discuss the extension to the many-electron one with TDDFT.

Let us consider the case of a system in contact with a reservoir as shown in Fig. 9. We want to find a closed set of conditions that have to be imposed on the equations for a wavefunction in A such that it correctly matches its outer part in B for all times. Following the division in the figure, we can write the time-dependent Schrödinger equation for the system A coupled with a reservoir/environment B using a block matrix representation:

where \( {\psi}_A\left(\mathbf{r},t\right) \) and \( {\psi}_B\left(\mathbf{r},t\right) \) are the wavefunctions projected onto each separate region. Here we consider the general case where the Hamiltonian is time-dependent, and its components include two diagonal terms \( {\widehat{H}}_{A,A}(t) \) and \( {\widehat{H}}_{B,B}(t) \) operating within each separate region and two coupling terms \( {\widehat{H}}_{A,B}(t) \) and \( {\widehat{H}}_{B,A}(t) \) connecting the environment to the system.

Fig. 9 Time-dependent embedding. Embedding consists in modifying the Hamiltonian in A in such a way that solving the associated time-dependent Schrödinger equation in A only automatically imposes the matching of \( {\psi}_A\left(\mathbf{r},t\right) \) with \( {\psi}_B\left(\mathbf{r},t\right) \) for all t. The modification is made using an embedding operator derived in terms of the Green function \( {G}_0\left(\mathbf{r},{\mathbf{r}}^{\prime },t,{t}^{\prime}\right) \) of the environment B

To derive the embedded time-dependent equations we introduce the retarded Green function G 0 for the reservoir, defined as

with boundary conditions \( {G}_0\left(\mathbf{r},{\mathbf{r}}^{\prime },{t}^{+},t\right)=-i \), \( {G}_0\left(\mathbf{r},{\mathbf{r}}^{\prime },t,{t}^{+}\right)=0 \), and where \( {t}^{+} \) represents a time approaching t from above. Because of the explicit time dependence of \( {\widehat{H}}_{B,B}(t) \), \( {G}_0 \) generally depends on both the time variables t and t′. We note, however, that the solution greatly simplifies if we consider B to represent empty space. In this case, \( {G}_0\left(\mathbf{r},{\mathbf{r}}^{\prime },t,{t}^{\prime}\right) \) is the free propagator, which depends only on the time difference t − t′ and is known analytically.
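For orientation, the textbook form of the retarded free-particle propagator in unbounded three-dimensional space, in atomic units and without any external field, is given below. It is added here as an illustration under those assumptions; the treatment of the boundary surface of B and the normalization convention of the boundary conditions quoted above are not addressed by this expression.

\[
{G}_0\left(\mathbf{r},{\mathbf{r}}^{\prime },t-{t}^{\prime}\right)=-i\,\Theta \left(t-{t}^{\prime}\right){\left[\frac{1}{2\pi i\left(t-{t}^{\prime}\right)}\right]}^{3/2}\exp \left[\frac{i{\left|\mathbf{r}-{\mathbf{r}}^{\prime}\right|}^2}{2\left(t-{t}^{\prime}\right)}\right]
\]

Here Θ is the Heaviside step function, which enforces the retarded character of the propagator.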

Using \( {G}_0\left(\mathbf{r},{\mathbf{r}}^{\prime },t,{t}^{\prime}\right) \) we can directly build the solution of the differential equations in B. This corresponds to considering only the second row in (56), and results in (see footnote 5)

The final equation governing the time evolution for ψ A (t) can be written in a closed form simply by plugging (58) into the first row of (56). After that we obtain

with

In this equation, \( \widehat{\Sigma}\left(t,{t}^{\prime}\right)={\widehat{H}}_{A,B}(t){\widehat{G}}_0\left(t,{t}^{\prime}\right){\widehat{H}}_{B,A}\left({t}^{\prime}\right) \) can be identified with the self-energy responsible for the hopping in and out of the system, whereas the last term is responsible for imposing the initial conditions in the reservoir. It is zero if the wavefunction is completely localized in A at t = 0. The time evolution of \( {\psi}_A(t) \) is thus governed by a modified Hamiltonian containing an additional time-dependent embedding operator \( {\widehat{H}}_{\Sigma}\left[{\psi}_A\right](t) \). The dependence on the wavefunction is written in square brackets to stress the fact that \( {\widehat{H}}_{\Sigma}\left[{\psi}_A\right](t) \) is not just a simple local potential but involves a more general non-local action.

The kernel \( \widehat{\Sigma}\left(t,{t}^{\prime}\right) \) of the time integral in (60) is, in the most general case, an explicit function of t and t′. This is the case, for instance, when one wants to apply this method to model molecular transport and B represents an electrode with a time-dependent voltage bias. Evaluating (60) thus requires one to keep track of \( {\psi}_A(t) \) for all times up to t. This is one of the biggest drawbacks of the approach as it restricts the propagation to short times because of storage limitations. Direct approximations of the kernel intended to mitigate this problem have to face the fact that the kernel is often non-analytical and highly oscillating, especially for t → t′ [65]. However, we note that when the Hamiltonian in B is not explicitly time-dependent, \( \widehat{\Sigma}\left(t,{t}^{\prime}\right) \) depends only on the time difference t − t′ and we are left with a much easier convolution integral.
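When the Hamiltonian in B is time-independent, the convolution becomes a simple product in the energy domain, and the block structure of (56) can be checked with plain linear algebra. The sketch below uses a small tight-binding chain as a toy stand-in for the system and a finite reservoir; the chain, the hopping value, the block sizes, and the complex energy are all illustrative assumptions. It verifies that adding the energy-domain self-energy \( {\widehat{H}}_{A,B}{\widehat{G}}_0{\widehat{H}}_{B,A} \) to the Hamiltonian of A reproduces the A block of the full Green function.

```python
import numpy as np

def chain_hamiltonian(n_sites, hopping=-0.5):
    """Nearest-neighbour tight-binding chain (toy model, not from the text)."""
    H = np.zeros((n_sites, n_sites))
    idx = np.arange(n_sites - 1)
    H[idx, idx + 1] = hopping
    H[idx + 1, idx] = hopping
    return H

n_A, n_B = 6, 40
H = chain_hamiltonian(n_A + n_B)
H_AA, H_AB = H[:n_A, :n_A], H[:n_A, n_A:]
H_BA, H_BB = H[n_A:, :n_A], H[n_A:, n_A:]

# Energy with a small positive imaginary part, selecting the retarded Green function.
E = 0.3 + 0.05j

G0 = np.linalg.inv(E * np.eye(n_B) - H_BB)      # Green function of the isolated reservoir
Sigma = H_AB @ G0 @ H_BA                        # energy-domain self-energy acting in A
G_embedded = np.linalg.inv(E * np.eye(n_A) - H_AA - Sigma)
G_full = np.linalg.inv(E * np.eye(n_A + n_B) - H)

print(np.allclose(G_embedded, G_full[:n_A, :n_A]))
```

The self-energy is non-zero only on the site of A that couples to B and has a negative imaginary part, so the effective Hamiltonian of A is non-Hermitian even though the full Hamiltonian is Hermitian.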

In this last case, i.e., when the Hamiltonian in B is time-independent, an alternative but equivalent form for the embedding operator can be obtained following the derivation of Inglesfield [67]. In this approach we are given two wavefunctions ψ A (r,t) and ψ B (r,t) which have equal amplitude on the surface S separating A and B, but arbitrary derivative as illustrated in Fig. 9. Assuming that ψ B (r,t) is a solution of the time-dependent Schrödinger equation in B, we need to find a closed set of equations for ψ A (r,t) to connect perfectly to ψ B (r,t) on S for all t.

The problem is solved with the use of what in the field of partial differential equations goes under the name of Dirichlet-to-Neumann and its inverse Neumann-to-Dirichlet maps [68, 71, 72]. These maps allow one to transform Dirichlet boundary conditions, fixing the value of a function on a surface, into Neumann boundary conditions, fixing the normal derivative over a surface, and vice versa. The resulting time-dependent equations for ψ A (r,t) can be written in the same way as (59) with an embedding operator defined as [67, 70]

where ∂/∂n s denotes the directional derivative out of A and perpendicular to S, and

Here \( {G}_0^{-1}\left({\mathbf{r}}_S,{\mathbf{r}}_S^{\prime },\varepsilon \right) \) is the inverse of the Green function defined by (57) evaluated on the boundary surface S with \( {\mathbf{r}}_S,{\mathbf{r}}_S^{\prime}\in S \). Because \( {G}_0\left(\mathbf{r},{\mathbf{r}}^{\prime },t-{t}^{\prime}\right) \) depends only on time differences, it is conveniently expressed in the energy domain ε with a Fourier transform over the time domain. Because of the presence of \( \delta \left(\mathbf{r}-{\mathbf{r}}_S\right) \), the embedding operator (61) is non-zero only on the boundary surface and involves normal and time derivatives of \( {\psi}_A\left(\mathbf{r},t\right) \) over that surface.

Because of the equivalence of \( {\widehat{H}}_{\Sigma } \) and \( {\widehat{H}}_{\mathrm{\mathcal{E}}} \) defined in (60) and (61), respectively, we refer in the following to an embedding operator with the symbol \( \widehat{\mathrm{\mathcal{E}}}\left[{\psi}_A\right](t) \) for simplicity. We are now in a position to comment on the most characteristic features of \( \widehat{\mathrm{\mathcal{E}}}\left[{\psi}_A\right](t) \). In general, it involves complex quantities which make it an explicitly non-Hermitian operator. This fact implies that the total number of electrons is no longer conserved during the propagation. Furthermore, it contains a memory term in the form of a time integral. In Frensley [73] it was postulated that transparent boundary conditions should break time reversal symmetry. The presence of a memory term in (59) turns the time propagation into a non-Markovian process and precisely breaks this symmetry.

The extension to the many-electron case is straightforward using the same 2 × 2 block structure of (56) with the difference that the entries must be interpreted as operators acting on the N-body Hilbert space. The previous steps of the derivation hold in a completely equivalent way up to (59) and (60) provided the interacting many-body Green function G is used in place of G 0.

In the language of TDDFT, OQS-TDDFT establishes a one-to-one connection between potential and density for non-unitary dynamics [5–7]. The evolution from an initial state is uniquely defined if we find a way to write the coupling with the environment as a functional ν B[n] of the total density n. Once again the equations retain the block structure of (56) with entries interpreted as multi-index tensors, each index being associated with a Kohn–Sham orbital. The result is a set of equations equivalent to (59) for each orbital, where the exact embedding operator \( \widehat{\mathrm{\mathcal{E}}}\left[n\right] \) depends on the total density of the system (i.e., in A∪B) through each orbital and the full many-body Green function G[n]. The total embedding operator can thus be interpreted as the coupling functional ν B[n] with the environment. Obviously, this connection involving the full many-body Green function is of little use in practical situations, but it provides a clear starting point for further approximations.

5.3 Absorbing Boundaries

Describing charge transfer between a system and its environment implies a modification of the isolated Hamiltonian. In the previous section we showed how the exact condition requires the addition of an embedding operator \( \widehat{\mathrm{\mathcal{E}}}\left[{\psi}_A\right](t) \) that turns the Hamiltonian non-Hermitian. The evaluation of such an operator can, however, be very demanding and one needs to resort to simpler strategies.

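Absorbing boundaries (ABs), or boundary absorbers, are cheaper options. They can be defined as any approximation of the form

\[ {\widehat{H}}^{\mathrm{AB}}(t)\approx \widehat{\mathrm{\mathcal{E}}}\left[{\psi}_A\right](t) \]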

to an embedding operator such as the one given by (60) or (61). This approximation is specific to the case where B represents the empty space and we only have to absorb outgoing electrons. We know that \( \widehat{\mathrm{\mathcal{E}}}\left[{\psi}_A\right](t) \) can be spatially localized on the boundary surface. The absorbing boundary operator is instead generally allowed to act on the wavefunctions over a larger region close to the boundaries, as illustrated in Fig. 10. In the large majority of approximations, this operator is taken to be a local potential:

Fig. 10 Absorbing boundaries. An absorbing boundary Hamiltonian \( {\widehat{H}}^{\mathrm{AB}}(t) \) acting on the striped region is added to the original one \( \widehat{H}(t) \) to prevent reflections from the boundaries during time propagation. The perfect absorber is the one that matches the full solution \( \psi (t) \) with \( {\psi}_A(t) \) in the inner (non-striped) region for all times t.

Its purpose is to absorb completely any outgoing wave packet entering the region (striped in the figure) of its support. The main goal here is to apply the absorber that best simulates the exact embedding operator with the minimum computational cost.

From a TDDFT perspective, when we apply \( {\widehat{H}}^{\mathrm{AB}} \) to each Kohn–Sham orbital, on top of all the approximations which might be involved in the description of the embedding operator, we are also approximating the interaction between the system and the environment by setting it to zero.

The absorbing properties of a boundary depend strongly on the numerical implementation. We do not go into any specific implementation here but just point out that none of the absorbers presented in the literature is completely free from reflections. We refer to De Giovannini et al. [74] for a recent review of the reflection properties of members of each boundary family.

We discuss below two of the most popular families of absorbing boundaries: the complex absorbing potentials (CAPs) and the mask function absorbers (MFAs). These families are essentially phenomenological approximations to the open boundary problem, whose main attraction lies in their simplicity of implementation and limited computational cost.

5.4 Complex Absorbing Potentials (CAPs)

We already noted above that the exact embedding potential has to be a complex quantity to turn the Hamiltonian non-Hermitian, and the fundamental mechanism of CAPs is precisely based on this observation. The idea was originally introduced from a different standpoint by Neuhauser and Baer [75, 76] with the use of negative imaginary potentials for the Schrödinger equation. This was in connection with the so-called optical potentials or perfectly matched layers developed for electromagnetic waves [77].

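The effect of a CAP can be easily understood by observing the action of the infinitesimal time evolution operator on a wavefunction

\[ \psi \left(\mathbf{r},t+dt\right)={e}^{-i\left(\widehat{H}+{\widehat{V}}_{\mathrm{CAP}}\right)dt}\psi \left(\mathbf{r},t\right) \tag{65} \]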

when \( {\widehat{V}}_{\mathrm{CAP}} \) is a negative imaginary potential with support on a region close to the boundaries of A. In this case, the effect is simply an exponential suppression of the wavefunction in the absorbing region. In other words, the time evolution operator associated with the non-Hermitian Hamiltonian modified with \( {\widehat{V}}_{\mathrm{CAP}} \) is non-unitary and no longer conserves the wavefunction norm. The norm decreases if the imaginary part of \( {\widehat{V}}_{\mathrm{CAP}} \) is negative and increases if it is positive. In the latter case it becomes possible to simulate charge injection, and this fact has been used to mimic reservoirs acting as sinks or sources in the attempt to simulate electron transport [78–80].
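The exponential suppression described above can be made concrete with a small numerical sketch. The example below is only an illustration under stated assumptions: a one-dimensional free particle in atomic units, a periodic FFT grid, a symmetric split-operator approximation to the infinitesimal propagator, and a quadratic negative imaginary CAP. The function names `quadratic_cap` and `propagate_with_cap`, the CAP shape, and all parameter values are hypothetical choices and are not taken from the references.

```python
import numpy as np

def quadratic_cap(x, x_abs, eta):
    """Hypothetical CAP: -i*eta*d^2, with d the depth beyond |x| = x_abs."""
    depth = np.clip(np.abs(x) - x_abs, 0.0, None)
    return -1j * eta * depth**2

def propagate_with_cap(psi, x, v_cap, dt, n_steps):
    """Free particle plus CAP with symmetric split-operator steps (atomic units)."""
    dx = x[1] - x[0]
    k = 2.0 * np.pi * np.fft.fftfreq(x.size, d=dx)
    kinetic = np.exp(-0.5j * k**2 * dt)      # exp(-i T dt), applied in k-space
    half_cap = np.exp(-0.5j * v_cap * dt)    # exp(-i V_CAP dt / 2), modulus <= 1
    norms = np.empty(n_steps)
    for n in range(n_steps):
        psi = half_cap * np.fft.ifft(kinetic * np.fft.fft(half_cap * psi))
        norms[n] = np.sum(np.abs(psi)**2) * dx
    return psi, norms

# Assumed setup: box [-40, 40), absorber switched on for |x| > 25.
x = np.linspace(-40.0, 40.0, 1024, endpoint=False)
dx = x[1] - x[0]
psi0 = np.exp(-x**2 / 4.0 + 2.0j * x)        # Gaussian with mean momentum k0 = 2
psi0 /= np.sqrt(np.sum(np.abs(psi0)**2) * dx)
v_cap = quadratic_cap(x, x_abs=25.0, eta=0.05)
psi_t, norms = propagate_with_cap(psi0, x, v_cap, dt=0.01, n_steps=4000)
print(f"norm after first step: {norms[0]:.6f}, final norm: {norms[-1]:.3e}")
```

Because the CAP factor has modulus at most one and the kinetic step is unitary, the recorded norm can only decrease, which is the non-unitary behavior discussed in the text. Flipping the sign of `eta` in this sketch would instead make the norm grow.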

CAPs are by no means restricted to purely imaginary potentials, and there is a large body of literature describing their different forms and variants [81]. We stress that their properties depend strongly both on their mathematical form and on the specific implementation and that, without exception, they all reflect in some energy range [74]. For practical purposes it is thus very important to ensure that the CAP chosen for a calculation has good absorption properties in the energy range of interest.

As an example, in Fig. 11 we show the absorption cross-sections for argon and neon in the continuum, above the first ionization threshold, calculated in linear response with TDDFT and a CAP. The CAP is chosen to minimize reflections around E = 93 eV for neon and E = 105 eV for argon. The spectra are in good agreement with the experimental ones in a fairly large range around those energies and reflections appear as oscillations.

Fig. 11 Neon and argon atom absorption cross-sections above the first ionization threshold calculated with TDDFT and different exchange and correlation functionals: LDA, CXD-LDA [82], PBE [83], and LB94 [54]. A CAP is introduced to reduce reflections in an energy window centered around E = 93 eV (Ne) and E = 105 eV (Ar). Adapted from Crawford-Uranga et al. [84].

What is interesting about this result is that we are able to calculate a quantity involving transitions to infinitely extended continuum states just by performing a time propagation in a bounded volume. Although this might at first seem counterintuitive, the explanation is quite simple. We are calculating a quantity involving the dipole matrix element \( \left\langle {\Psi}_0\right|\widehat{d}\left|{\Psi}_{E>0}\right\rangle \) between an initial state, the ground state of our system \( {\Psi}_0 \), and a final continuum state \( {\Psi}_{E>0} \). The main contribution to this matrix element comes from an integration over the overlap region between the two wavefunctions and, because the ground state is bound and therefore localized, this region is safely included in A. The extent to which we manage to remove reflections thus directly determines the quality with which we calculate this integral and, eventually, the quality of the absorption cross-section.

5.5 Mask Function Absorbers (MFAs)

MFAs are an alternative formulation of CAPs. They have been employed to study a variety of phenomena including high harmonic generation [85], electron and proton emission [86], and above-threshold ionization [87].

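They are defined by directly modifying the infinitesimal time evolution operator \( U\left(t+dt,t\right) \) with a mask function \( M\left(\mathbf{r}\right) \) as follows:

\[ {U}_M\left(t+dt,t\right)=M\left(\mathbf{r}\right)U\left(t+dt,t\right) \]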

The effect of this modification can be easily understood by choosing \( M\left(\mathbf{r}\right) \) to be a real function equal to 1 in the inner part of A and smoothly decaying to zero close to the boundaries. With this choice, recursive application of \( {U}_M\left(t+dt,t\right) \) to \( {\psi}_A(t) \) directly suppresses the part of the wavefunction in the decay region.
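A minimal sketch of this recursive masking is given below. It assumes that each masked step consists of an ordinary propagation step followed by a pointwise multiplication by M, and it uses a one-dimensional free particle in atomic units on a periodic FFT grid. The cos² mask shape, the function names `cos2_mask` and `propagate_with_mask`, and the parameter values are hypothetical choices made for illustration only.

```python
import numpy as np

def cos2_mask(x, x_abs, x_max):
    """Hypothetical real mask: 1 for |x| <= x_abs, cos^2 decay to 0 at |x| = x_max."""
    s = np.clip((np.abs(x) - x_abs) / (x_max - x_abs), 0.0, 1.0)
    return np.cos(0.5 * np.pi * s)**2

def propagate_with_mask(psi, x, mask, dt, n_steps):
    """Repeatedly apply the masked step psi -> M * (U psi) for a free particle."""
    dx = x[1] - x[0]
    k = 2.0 * np.pi * np.fft.fftfreq(x.size, d=dx)
    kinetic = np.exp(-0.5j * k**2 * dt)       # free propagator U(t + dt, t) in k-space
    norms = np.empty(n_steps)
    for n in range(n_steps):
        psi = mask * np.fft.ifft(kinetic * np.fft.fft(psi))   # masked step M U
        norms[n] = np.sum(np.abs(psi)**2) * dx
    return psi, norms

# Assumed setup: box [-40, 40), mask equal to 1 for |x| <= 25.
x = np.linspace(-40.0, 40.0, 1024, endpoint=False)
dx = x[1] - x[0]
psi0 = np.exp(-x**2 / 4.0 + 2.0j * x)         # Gaussian with mean momentum k0 = 2
psi0 /= np.sqrt(np.sum(np.abs(psi0)**2) * dx)
mask = cos2_mask(x, x_abs=25.0, x_max=40.0)
psi_t, norms = propagate_with_mask(psi0, x, mask, dt=0.05, n_steps=800)
print(f"norm after first step: {norms[0]:.6f}, final norm: {norms[-1]:.3e}")
```

Note that in this sketch the strength of the absorption depends on the time step, since the mask is applied once per step: halving `dt` while keeping the same mask roughly doubles the absorption per unit time.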

This is only one of the possible choices of MFA and, in general, \( M\left(\mathbf{r}\right) \) can be a complex function. We illustrate the effect of using a complex \( M\left(\mathbf{r}\right) \) by showing the equivalence between MFAs and CAPs. In fact, given a \( {\widehat{V}}_{\mathrm{CAP}} \), we can obtain the corresponding \( {M}_{\mathrm{CAP}}\left(\mathbf{r}\right) \) straightforwardly by expanding the exponential in (65). To first order in dt the MFA \( {M}_{\mathrm{CAP}}^{(1)} \) associated with \( {\widehat{V}}_{\mathrm{CAP}} \) is

\[ {M}_{\mathrm{CAP}}^{(1)}\left(\mathbf{r}\right)=1-i{V}_{\mathrm{CAP}}\left(\mathbf{r}\right)dt \]

The mask function can thus be a complex function, and becomes real when \( {\widehat{V}}_{\mathrm{CAP}} \) is purely imaginary. The inverse relation can be obtained in a similar way, and to first order it reduces to

\[ {V}_{\mathrm{MFA}}^{(1)}\left(\mathbf{r}\right)=i\frac{M\left(\mathbf{r}\right)-1}{dt} \]

In De Giovannini et al. [74] it was shown that the first-order relations above, for a given pair of CAP and MFA, yield reflection properties in excellent agreement with each other.
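The first-order correspondence can be checked numerically in a few lines. The sketch below assumes atomic units and the first-order relations obtained by expanding the CAP exponential, namely M = 1 − i V dt and V = i (M − 1)/dt. The helper names `mask_from_cap` and `cap_from_mask` and the quadratic test potential are hypothetical.

```python
import numpy as np

def mask_from_cap(v_cap, dt):
    """First-order mask associated with a CAP: M = 1 - i V dt."""
    return 1.0 - 1j * v_cap * dt

def cap_from_mask(mask, dt):
    """First-order CAP associated with a mask: V = i (M - 1) / dt."""
    return 1j * (mask - 1.0) / dt

# Hypothetical purely imaginary test potential on a short grid.
x = np.linspace(0.0, 10.0, 201)
v_cap = -1j * 0.1 * x**2

errors = {}
for dt in (1e-2, 1e-3):
    exact_factor = np.exp(-1j * v_cap * dt)       # CAP factor of one time step
    first_order = mask_from_cap(v_cap, dt)        # its first-order mask
    errors[dt] = np.max(np.abs(exact_factor - first_order))

ratio = errors[1e-2] / errors[1e-3]               # close to 100 for an O(dt^2) error
round_trip = cap_from_mask(mask_from_cap(v_cap, 1e-2), 1e-2)
mask_is_real = np.allclose(mask_from_cap(v_cap, 1e-2).imag, 0.0)
print(errors, ratio, mask_is_real)
```

The per-step difference between the exponential and its first-order mask shrinks quadratically with dt, and the mask comes out real for the purely imaginary potential used here, in line with the statements above.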

One important feature of the MFA approach is that, by multiplying a wavefunction by \( M\left(\mathbf{r}\right) \) and by \( 1-M\left(\mathbf{r}\right) \), it is possible to split its propagation into two components moving in separate regions. This property is fundamental for the split-domain propagation schemes initially derived in Chelkowski et al. [88] and Grobe et al. [89] and later extended to the study of electron photoemission with TDDFT in De Giovannini et al. [90]. We return to this point in Sect. 6.3.
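The bookkeeping behind such a splitting can be sketched as follows. This is a deliberately simplified illustration and not the scheme of the cited works: as a simplifying assumption, both components evolve under the same free-particle propagator on the same periodic grid (atomic units), so that their sum reproduces the unsplit propagation exactly. The function name `split_step`, the cos² mask, and all parameters are hypothetical.

```python
import numpy as np

def split_step(psi_a, psi_b, mask, kinetic):
    """Propagate both parts one step, then move (1 - M) U psi_A into psi_B."""
    u_psi_a = np.fft.ifft(kinetic * np.fft.fft(psi_a))
    u_psi_b = np.fft.ifft(kinetic * np.fft.fft(psi_b))
    return mask * u_psi_a, u_psi_b + (1.0 - mask) * u_psi_a

# Assumed setup: box [-40, 40), mask equal to 1 for |x| <= 25.
x = np.linspace(-40.0, 40.0, 1024, endpoint=False)
dx = x[1] - x[0]
dt = 0.05
k = 2.0 * np.pi * np.fft.fftfreq(x.size, d=dx)
kinetic = np.exp(-0.5j * k**2 * dt)               # free propagator in k-space
s = np.clip((np.abs(x) - 25.0) / 15.0, 0.0, 1.0)
mask = np.cos(0.5 * np.pi * s)**2

psi0 = np.exp(-x**2 / 4.0 + 2.0j * x)             # Gaussian with mean momentum k0 = 2
psi0 /= np.sqrt(np.sum(np.abs(psi0)**2) * dx)

psi_a, psi_b = psi0.copy(), np.zeros_like(psi0)   # inner and outer components
psi_free = psi0.copy()                            # reference: unsplit propagation
for _ in range(800):
    psi_a, psi_b = split_step(psi_a, psi_b, mask, kinetic)
    psi_free = np.fft.ifft(kinetic * np.fft.fft(psi_free))

norm_a = np.sum(np.abs(psi_a)**2) * dx            # what is left in the inner part
max_mismatch = np.max(np.abs(psi_a + psi_b - psi_free))
print(f"inner norm: {norm_a:.3e}, mismatch with unsplit run: {max_mismatch:.2e}")
```

The inner component follows the masked propagation, while the outer component collects everything removed by the mask. This sketch ignores any flow from the outer component back into the inner one.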

5.6 Time-Dependent Exterior Complex Scaling

In Sect. 3.5 we introduced exterior complex scaling as an extension of complex scaling where the transformation is only applied outside a certain region. It was noted that it shares an important feature with the global transformation: it naturally imposes outgoing boundary conditions on the Schrödinger equation. We discuss here to what extent this property applies to the time-dependent case.

Let us consider a scaling transformation similar to those illustrated in Fig. 3. We select a path that lies on the real axis deep inside region A, departs into the complex plane at some point close to the boundary, and eventually reaches the asymptotic form \( r\to r{e}^{i\theta } \). Following this scaling transformation, the time-dependent Schrödinger equation can be formally cast into a set of equations:

for left \( {\overline{\psi}}_{\theta}\left(\mathbf{r},t\right) \) and right \( {\psi}_{\theta}\left(\mathbf{r},t\right) \) states, where \( {\widehat{H}}_{\theta}^{\mathrm{ECS}}(t) \) represents the scaled Hamiltonian. Extrapolating from the discussion in Sect. 4.4 we can interpret (69) as imposing purely outgoing boundary conditions and (70) as the incoming counterpart.

In the theory of complex scaling, the calculation of the expectation value of an observable \( {\widehat{O}}_{\theta } \) on the scaled path as in (10) involves left and right states on an equal footing. This extends to the time-dependent case, with the requirement of having both left and right states at the same time to calculate \( {\widehat{O}}_{\theta } \). Hence, we need, in principle, to solve (69) and (70) simultaneously.

The fact that the scaling path lies exactly on the real axis in a certain region simplifies the equations. In fact, on the real axis, left and right states are complex conjugates: \( {\overline{\psi}}_{\theta}\left(\mathbf{r},t\right)\equiv {\left[{\psi}_{-\theta}\left(\mathbf{r},t\right)\right]}^{*}={\psi}_{\theta }{\left(\mathbf{r},t\right)}^{*} \) for r in the interior region. This holds in particular when the system contains only a local potential and the propagation is initialized with a state localized in the unscaled region at t = 0 and propagating outward. If we restrict ourselves to observables in the unscaled region and want to describe a purely outgoing process, we can resort to using the right state \( {\psi}_{\theta}\left(\mathbf{r},t\right) \) only. This state can be obtained by propagating (69), which involves only right states [38]. For this reason, most applications of exterior complex scaling are limited to the decaying right states and to observables evaluated in the unscaled region.

Equation (69) exactly describes problems where imposing purely outgoing boundary conditions is an exact condition, as, for example, in ionization processes. In those cases it can be regarded as equivalent to a transparent boundary condition described with a Green function. Since we are dealing with purely outgoing conditions, the term perfect absorber is more appropriate than transparent boundary, because electrons can flow in only one direction.

However, we note an important difference between the two approaches. Whereas the Green function embedding defines the exact matching conditions at the boundary of a finite volume A, the scaled equation (69) acts on a wavefunction defined in the full space A∪B. This makes the finite size of the simulation box a weakness in numerical simulations if a wave is capable of reaching the end of the box. The scaling transformation imposes an asymptotic form which can be efficiently captured by exponential functions \( {e}^{-\alpha r} \). By employing a finite element approach with an element at infinity which captures the exponential tail, Scrinzi [38] showed numerically that exterior complex scaling indeed provides perfectly absorbing conditions to within numerical precision.
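The exponential asymptotic form can be illustrated by evaluating plane waves on a scaled contour. The sketch below assumes the simplest sharp exterior scaling, z = r for r ≤ r0 and z = r0 + (r − r0) e^{iθ} beyond, rather than the smooth paths mentioned elsewhere in the text. The function name `ecs_contour` and the values of `r0`, `theta`, and `k` are hypothetical.

```python
import numpy as np

def ecs_contour(r, r0, theta):
    """Sharp exterior scaling: real axis up to r0, rotated by theta beyond it."""
    r = np.asarray(r, dtype=float)
    return np.where(r <= r0, r, r0 + (r - r0) * np.exp(1j * theta))

r0, theta, k = 30.0, 0.5, 1.5                  # assumed scaling radius, angle, momentum
r = np.linspace(0.0, 60.0, 601)
z = ecs_contour(r, r0, theta)

outgoing = np.exp(1j * k * z)                  # e^{+ikz}: decays in the scaled region
incoming = np.exp(-1j * k * z)                 # e^{-ikz}: grows in the scaled region

# Analytic modulus of the outgoing wave: exp(-k (r - r0) sin(theta)) for r > r0.
alpha = k * np.sin(theta)
expected = np.where(r <= r0, 1.0, np.exp(-alpha * (r - r0)))
print(np.max(np.abs(np.abs(outgoing) - expected)))
```

On this contour an outgoing wave is unchanged in the interior and acquires a decaying envelope with α = k sin θ in the scaled region, whereas an incoming wave grows exponentially and is therefore excluded from any square-integrable representation.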

Restricting (69) to A, on the other hand, implies a truncation which irrevocably breaks its perfect absorption properties. In this case the scaling transformation reduces to an absorbing boundary which can be regarded as a simple CAP and, as such, presents reflections [74, 91]. We should mention that the use of (69) restricted to A in combination with a smooth exterior complex scaling goes in the literature under the misleading name of reflection-free CAP, in spite of presenting a certain degree of reflection [39, 91–93].

In the context of TDDFT, exterior complex scaling has been applied purely as an absorbing boundary [53, 94].

6 Electron Photoemission

We focus here on the approaches that can be employed in the description of multi-electron ionization initiated by external electromagnetic fields within TDDFT. As in previous sections, we are interested only in electronic processes, neglecting any ionic motion, and we restrict ourselves to the class of methods that requires knowledge of the wavefunctions only on a bounded region of space A much as in Fig. 8.

We are interested in the family of problems characterized by time-dependent electronic Hamiltonians with the structure

where \( {\nu}_{ee} \) is the electron–electron Coulomb interaction, \( {\nu}_{\mathrm{ext}} \) is the external potential, which generally consists of a static potential produced by the nuclei, \( \mathbf{\mathcal{A}}(t) \) is the vector potential of the external field, and c is the speed of light. In writing (71) we implied the choice of the velocity gauge to describe the action of the field. The associated electric field can easily be obtained as a time derivative: \( \mathbf{\mathcal{E}}(t) = -{\partial}_t\mathbf{\mathcal{A}}(t)/c \). Typically, one chooses a vector potential representing one or more laser pulses and then investigates the induced dynamics.

Ionization takes place whenever the field is capable of inducing a bound-to-continuum transition, resulting in electrons escaping with a given kinetic energy. Calculation of observables characterizing these ionized electrons is at the center of our interest here.

To some extent we already approached this problem in Sect. 5. In fact, total ionization can be naturally described using only information contained in a bounded volume A surrounding our system. The total number of electrons contained in A can be simply calculated from the knowledge of the time-dependent density as

\[ N(t)={\int}_A d\mathbf{r}\;n\left(\mathbf{r},t\right) \tag{72} \]

Combined with the use of one of the boundary conditions described above, (72) implements a practical strategy for the calculation of N(t). The total ionization probability, i.e., the probability of ejecting an electron in the long-time limit, is thus naturally obtained using only quantities defined in A as