Abstract

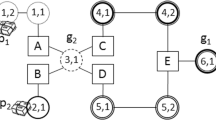

For a large group of cooperative agents, the choice of coordination or conflict resolution strategy can significantly affect performance (e.g., speed of convergence). Performance models of such strategies could aid in selecting the right strategy for a given domain, yet such models remain largely uninvestigated in the multiagent literature. This chapter takes a step towards applying recently emerging distributed POMDP (partially observable Markov decision process) frameworks, such as the MTDP (Markov team decision process), to build such performance models. Each strategy is mapped onto an MTDP policy, and strategies are compared by evaluating their corresponding policies. To address the problem of scaling up distributed POMDP-based models, we use small-scale models, called building blocks, that represent the local interaction among a small group of agents. We discuss several ways to combine building blocks to predict the performance of a larger-scale multiagent system.
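As a rough illustration of "comparing strategies by evaluating their policies", the sketch below assumes that a strategy's joint policy has already been reduced to a transition matrix over a few abstract states (a hypothetical simplification: a real MTDP also has observations and per-agent policies). With the policy fixed, the model becomes an absorbing Markov chain, and the expected number of cycles until the "solved" state follows from the standard identity (I - Q) t = 1. The state names, transition probabilities, and function names are made up for illustration and are not taken from the chapter.

```python
# Hypothetical sketch: evaluate a fixed strategy as an absorbing Markov chain.
# Assumes the strategy's joint policy has already been compiled into a
# state-transition matrix P over abstract states (a simplification of an MTDP,
# which also models observations and per-agent policies).

def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def expected_cycles_to_solution(P, absorbing):
    """Expected number of cycles from each transient state until an absorbing
    (e.g. all-constraints-satisfied) state is reached.

    Uses the absorbing-chain identity (I - Q) t = 1, where Q is P
    restricted to the transient states.
    """
    absorbing = set(absorbing)
    transient = [i for i in range(len(P)) if i not in absorbing]
    i_minus_q = [[(1.0 if r == c else 0.0) - P[r][c] for c in transient]
                 for r in transient]
    t = solve_linear(i_minus_q, [1.0] * len(transient))
    return dict(zip(transient, t))


# Illustrative (made-up) chains: state 0 = many conflicts,
# state 1 = few conflicts, state 2 = solved (absorbing).
strategy_a = [[0.5, 0.5, 0.0],
              [0.2, 0.3, 0.5],
              [0.0, 0.0, 1.0]]
strategy_b = [[0.8, 0.1, 0.1],
              [0.1, 0.1, 0.8],
              [0.0, 0.0, 1.0]]

times_a = expected_cycles_to_solution(strategy_a, absorbing=[2])
times_b = expected_cycles_to_solution(strategy_b, absorbing=[2])
```

In this toy example, strategy A needs 4.8 expected cycles from state 0 versus about 5.88 for B, while from state 1 B needs about 1.76 versus 2.8 for A. So even a tiny model can show that which strategy is preferable depends on the starting conditions.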

We present our approach in the context of DCSPs (distributed constraint satisfaction problems), where we first show that there is a large bank of conflict resolution strategies and that no strategy dominates all others across different domains. By modeling and combining building blocks, we are able to predict the performance of five different DCSP strategies in four different domain settings for a large-scale multiagent system. Our approach to modeling the performance of conflict resolution strategies thus points the way to new tools for strategy analysis and performance modeling in multiagent systems in general.
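The abstract does not spell out how building blocks are combined, so the following is only a hypothetical sketch of the general idea: each building block yields a predicted cost (for example, expected cycles) for one local group of agents, a composition rule turns those into a system-level prediction, and the strategy with the lowest prediction is selected for that domain setting. The two rules (`mean` and `max`), the strategy names `S1`/`S2`, and all numbers are illustrative assumptions, not the chapter's combination methods or results.

```python
from statistics import mean

# Hypothetical composition rules for turning per-building-block predictions
# into a system-level prediction. These are illustrative assumptions, not
# necessarily the combination methods discussed in the chapter.
COMBINE_RULES = {
    "mean": mean,  # treat every local group as contributing equally
    "max": max,    # treat the slowest local group as the bottleneck
}


def predict_system_cost(block_costs, rule):
    """Combine predicted costs (e.g. expected cycles) of building blocks."""
    return COMBINE_RULES[rule](block_costs)


def best_strategy(costs_by_strategy, rule):
    """Return the strategy with the lowest predicted system cost, plus all predictions."""
    predictions = {name: predict_system_cost(costs, rule)
                   for name, costs in costs_by_strategy.items()}
    return min(predictions, key=predictions.get), predictions


# Made-up building-block predictions for two hypothetical strategies
# in one domain setting (three local groups each).
blocks = {
    "S1": [4.0, 5.0, 9.0],
    "S2": [6.0, 6.5, 7.0],
}

choice_mean, _ = best_strategy(blocks, "mean")  # S1: mean 6.0 vs 6.5
choice_max, _ = best_strategy(blocks, "max")    # S2: max 9.0 vs 7.0
```

The two rules pick different strategies from the same block-level predictions, which is one reason the choice of combination method matters when scaling building-block models up to a large system.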

Copyright information

© 2004 Springer Science + Business Media, Inc.

About this chapter

Cite this chapter

Jung, H., Tambe, M. (2004). Performance Models for Large Scale Multi-Agent System: A Distributed POMDP-Based Approach. In: Wagner, T.A. (eds) An Application Science for Multi-Agent Systems. Multiagent Systems, Artificial Societies, and Simulated Organizations, vol 10. Springer, Boston, MA. https://doi.org/10.1007/1-4020-7868-4_12

DOI: https://doi.org/10.1007/1-4020-7868-4_12

Publisher Name: Springer, Boston, MA

Print ISBN: 978-1-4020-7867-5

Online ISBN: 978-1-4020-7868-2

eBook Packages: Springer Book Archive