Abstract

This paper proposes a novel architecture, termed multiscale principle of relevant information (MPRI), to learn discriminative spectral-spatial features for hyperspectral image classification. MPRI inherits the merits of the principle of relevant information (PRI) to effectively extract multiscale information embedded in the given data, and also takes advantage of the multilayer structure to learn representations in a coarse-to-fine manner. Specifically, MPRI performs spectral-spatial pixel characterization (using PRI) and feature dimensionality reduction (using regularized linear discriminant analysis) iteratively and successively. Extensive experiments on three benchmark data sets demonstrate that MPRI outperforms existing state-of-the-art methods (including deep learning based ones) qualitatively and quantitatively, especially in the scenario of limited training samples. Code of MPRI is available at http://bit.ly/MPRI_HSI.

1 Introduction

With the rapid development of hyperspectral imaging techniques, current sensors typically offer high spectral and spatial resolution (He et al., 2018). For example, the ROSIS sensor can cover spectral resolution higher than 10 nm, reaching 1 m per pixel spatial resolution (Cao et al., 2018; Zare et al., 2018). The increased spectral and spatial resolution enables us to accurately discriminate diverse materials of interest. As a result, hyperspectral images (HSIs) have been widely used in many practical applications, such as precision agriculture, environmental management, mining and mineralogy (He et al., 2018). Among them, HSI classification, which aims to assign each pixel of an HSI to a unique class label, has attracted increasing attention in recent years. However, the unfortunate combination of high-dimensional spectral features and limited ground truth samples, together with varying atmospheric scattering conditions, makes HSI data inherently highly nonlinear and difficult to categorize (Ghamisi et al., 2017).

Early HSI classification methods straightforwardly apply conventional dimensionality reduction techniques, such as principal component analysis (PCA) and linear discriminant analysis (LDA), in the spectral domain to learn discriminative spectral features. Although these methods are conceptually simple and easy to implement, they neglect spatial information, a complement to spectral behavior that has been shown to improve HSI classification performance (He et al., 2018; Ghamisi et al., 2015). To address this limitation, Chen et al. (2011) proposed the joint sparse representation (JSR) to incorporate spatial neighborhood information of pixels. Soltani-Farani et al. (2015) designed spatial aware dictionary learning (SADL) by using a structured dictionary learning model to incorporate both spectral and spatial information. Kang et al. suggested using an edge-preserving filter (EPF) to improve the spatial structure of HSI (Kang et al., 2014) and also introduced PCA to encourage the separability of new representations (Kang et al., 2017). A similar idea appears in Pan et al. (2017), in which EPF is substituted with a hierarchical guidance filter. Although these methods perform well, the discriminative power of their extracted spectral-spatial features is far from satisfactory when tested on challenging land covers.

A recent trend is to use deep neural networks (DNN), such as autoencoders (AE) (Ma et al., 2016) and convolutional neural networks (CNN) (Chen et al., 2016), to learn discriminative spectral-spatial features (Zhong et al., 2018). Although deep features often demonstrate greater discriminative power than hand-crafted features in different computer vision or image processing tasks, existing DNN based HSI classification methods either improve the performance marginally or require significantly more labeled data (Yang et al., 2018). On the other hand, collecting labeled data is difficult and expensive in the remote sensing community (Zare et al., 2018). Although transfer learning has the potential to alleviate the problem of limited labeled data, it remains an open problem to construct a reliable relevance between the target domain and the source domain, due to the large variations between HSIs obtained by different sensors with unmatched imaging bands and resolutions (Zhu et al., 2017).

Different from previous work, this paper presents a novel architecture, termed multiscale principle of relevant information (MPRI), to learn discriminative spectral-spatial features for HSI classification. MPRI inherits the merits of the principle of relevant information (PRI) (Chapter 8, Principe 2010) (Chapter 3, Rao 2008) to effectively extract multiscale information from given data, and also takes advantage of the multilayer structure to learn representations in a coarse-to-fine manner. To summarize, the major contributions of this work are threefold.

- We demonstrate the capability of PRI, originated from the information theoretic learning (ITL) (Principe 2010), to characterize 3D pictorial structures in HSI data.

- We generalize PRI into a multilayer structure to extract hierarchical representations for HSI classification. A multiscale scheme is also incorporated to model both local and global structures.

- MPRI outperforms state-of-the-art HSI classification methods based on classical machine learning models (e.g., PCA-EPF Kang et al., 2017 and HIFI Pan et al., 2017) by a large margin. Using significantly fewer labeled data, MPRI also achieves almost the same classification accuracy compared to existing deep learning techniques (e.g., SAE-LR Chen et al., 2014 and 3D-CNN Li et al., 2017).

The remainder of this paper is organized as follows. Section 2 reviews the basic objective of PRI and formulates PRI under the ITL framework. The architecture and optimization of our proposed MPRI are elaborated in Sect. 3. Section 4 shows experimental results on three popular HSI data sets. Finally, Sect. 5 draws the conclusion.

2 Elements of Renyi’s \(\alpha \)-entropy and the principle of relevant information

Before presenting our method, we start with a brief review of the general idea and the objective of PRI, and then formulate this objective under the ITL framework.

2.1 PRI: the general idea and its objective

Suppose we are given a random variable \(\mathbf{X}\) with a known probability density function (PDF) g, from which we want to learn a reduced statistical representation characterized by a random variable \(\mathbf{Y}\) with PDF f. The PRI (Chapter 8, Principe, 2010) (Chapter 3, Rao, 2008) casts this problem as a trade-off between the entropy H(f) of \(\mathbf{Y}\) and its descriptive power about \(\mathbf{X}\) in terms of their divergence \(D(f\Vert g)\). Therefore, for a fixed PDF g, the objective of PRI is given by:

\[ J(f)=\min _f H(f)+\beta D(f\Vert g), \tag{1} \]

where \(\beta \) is a hyper-parameter controlling the amount of relevant information that \(\mathbf{Y}\) can extract from \(\mathbf{X}\). Note that the minimization of entropy can be viewed as a means of finding the statistical regularities in the outcomes of a process, whereas the minimization of an information theoretic divergence, such as the Kullback-Leibler divergence (Kullback & Leibler, 1951) or the Chernoff divergence (Chernoff, 1952), ensures that the regularities are closely related to \(\mathbf{X}\). The PRI is similar in spirit to the Information Bottleneck (IB) method (Tishby et al., 2000), but the formulation is different because PRI does not require an observed relevant (or auxiliary) variable and the optimization is done directly on the random variable \(\mathbf{X}\), which provides a set of solutions that are related to the principal curves (Hastie & Stuetzle, 1989) of g, as will be demonstrated below.

2.2 Formulation of PRI using Renyi’s entropy functional

In information theory, a natural extension of the well-known Shannon’s entropy is Renyi’s \(\alpha \)-entropy (Rényi et al., 1961). For a random variable \(\mathbf {X}\) with PDF f(x) in a finite set \(\mathcal {X}\), the \(\alpha \)-entropy \(H_\alpha (\mathbf {X})\) is defined as:

\[ H_\alpha (\mathbf {X})=\frac{1}{1-\alpha }\log \int _{\mathcal {X}}f^\alpha (x)\,\hbox {d}x. \tag{2} \]

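On the other hand, motivated by the famed Cauchy–Schwarz (CS) inequality:

\[ \left| \int f(x)g(x)\,\hbox {d}x\right| ^2\le \int |f(x)|^2\,\hbox {d}x\int |g(x)|^2\,\hbox {d}x, \tag{3} \]

with equality if and only if f(x) and g(x) are linearly dependent (e.g., f(x) is just a scaled version of g(x)), a measure of the “distance” between the PDFs can be defined, which was named the CS divergence (Jenssen et al., 2006), with:

\[ D_{cs}(f\Vert g)=-\log \frac{\left( \int f(x)g(x)\,\hbox {d}x\right) ^2}{\int f^2(x)\,\hbox {d}x\int g^2(x)\,\hbox {d}x}=2H_2(f;g)-H_2(f)-H_2(g), \tag{4} \]

where \(H_2(f)=-\log \int f^2(x)\hbox {d}x\) is the 2-order Renyi’s entropy of f, and the term \(H_2(f;g)=-\log \int f(x)g(x)\hbox {d}x\) is also called the quadratic cross entropy (Principe, 2010).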

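Combining Eqs. (2) and (4), the PRI under the 2-order Renyi’s entropy can be formulated as:

\[ \begin{aligned} J(f)&=\min _f H_2(f)+\beta \left( 2H_2(f;g)-H_2(f)-H_2(g)\right) \\ &\equiv \min _f (1-\beta )H_2(f)+2\beta H_2(f;g), \end{aligned} \tag{5} \]

where the second equation holds because the extra term \(\beta H_2(g)\) is a constant with respect to f.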

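Suppose that \(\mathbf {X}=\{\mathbf {x}_i\}_{i=1}^N\) and \(\mathbf {Y}=\{\mathbf {y}_i\}_{i=1}^N\), both in \(\mathbb {R}^p\), are drawn i.i.d. from g and f, respectively. Using the Parzen-window density estimation (Parzen, 1962) with Gaussian kernel \(G_{\delta }(\cdot )=\exp (-\frac{\Vert \cdot \Vert ^2}{2\delta ^2})\), Eq. (5) can be simplified as (Rao, 2008):

\[ \mathbf {Y}_{opt}=\arg \min _{\mathbf {Y}}\left[ -(1-\beta )\log \left( \frac{1}{N^2}\sum _{i,j=1}^{N}G_\delta (\mathbf {y}_i-\mathbf {y}_j)\right) -2\beta \log \left( \frac{1}{N^2}\sum _{i,j=1}^{N}G_\delta (\mathbf {y}_j-\mathbf {x}_i)\right) \right] . \tag{6} \]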

It turns out that the value of \(\beta \) defines various levels of information reduction, ranging from data mean value (\(\beta =0\)), clustering (\(\beta =1\)), principal curves (Hastie and Stuetzle, 1989) extraction at different dimensions, and vector quantization obtaining back the initial data when \(\beta \rightarrow \infty \) (Principe, 2010; Rao, 2008). Hence, the PRI achieves similar effects to a moment decomposition of the PDF controlled by a single parameter \(\beta \), using a data driven optimization approach. See Fig. 1 for an example. From this figure we can see that the self organizing decomposition provides a set of hierarchical features of the input data beyond cluster centers, that may yield more robust features. Note that, despite its strategic flexibility to find reduced structure of given data, the PRI is mostly unknown to practitioners.
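As a minimal sketch of how the sample-based PRI cost can be evaluated, the Python snippet below estimates the quadratic entropy and the quadratic cross entropy by averaging pairwise Gaussian kernels, and combines them as \((1-\beta )H_2(\mathbf {Y})+2\beta H_2(\mathbf {Y};\mathbf {X})\). The function names (`cross_potential`, `pri_cost`, `cs_divergence`) are illustrative and are not taken from the released MPRI code. Kernel normalization constants are dropped and the kernel width \(\delta \) is used as is; both are simplifying assumptions.

```python
import math

def gaussian(u, v, delta):
    """Unnormalized Gaussian kernel exp(-||u - v||^2 / (2 * delta^2))."""
    sq = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-sq / (2.0 * delta ** 2))

def cross_potential(A, B, delta):
    """Mean pairwise kernel between two samples (Parzen-style estimate)."""
    return sum(gaussian(a, b, delta) for a in A for b in B) / (len(A) * len(B))

def pri_cost(Y, X, beta, delta):
    """(1 - beta) * H_2(Y) + 2 * beta * H_2(Y; X), with H_2 = -log(potential)."""
    h2_y = -math.log(cross_potential(Y, Y, delta))
    h2_yx = -math.log(cross_potential(Y, X, delta))
    return (1.0 - beta) * h2_y + 2.0 * beta * h2_yx

def cs_divergence(Y, X, delta):
    """Sample estimate of 2 * H_2(Y; X) - H_2(Y) - H_2(X)."""
    return (-2.0 * math.log(cross_potential(Y, X, delta))
            + math.log(cross_potential(Y, Y, delta))
            + math.log(cross_potential(X, X, delta)))

# Toy data: three 2D points, and a candidate solution collapsed onto their mean.
X = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
Y_mean = [(1.0 / 3.0, 1.0 / 3.0)] * 3
print(pri_cost(Y_mean, X, beta=0.0, delta=0.5))  # entropy term only
print(cs_divergence(Y_mean, X, delta=0.5))       # positive: Y differs from X
```

With \(\beta =0\) only the entropy term remains, so a sample collapsed onto a single point attains zero cost in this estimate, in line with the single-point solution described above.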

Illustration of the structures revealed by the PRI for an Intersect data set. As the values of \(\beta \) increase, the solution passes through (b) a single point, (c) modes, (d) and (e) principal curves at different dimensions, and in the extreme case of (f) \(\beta \rightarrow \infty \) we get back the data themselves as the solution

3 Multiscale principle of relevant information (MPRI) for HSI classification

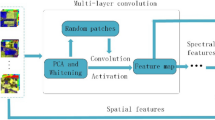

In this section, we present MPRI for HSI classification. MPRI stacks multiple spectral-spatial feature learning units, in which each unit consists of multiscale PRI and a regularized LDA (Bandos et al., 2009). The architecture of MPRI is shown in Fig. 2.

The architecture of multiscale principle of relevant information (MPRI) for HSI classification. The spectral-spatial feature learning unit is marked with red dashed rectangle. The spectral-spatial features are extracted by performing PRI (in multiple scales) and LDA iteratively and successively on HSI data cube (after normalization). Finally, features from each unit are concatenated and fed into a k-nearest neighbors (KNN) classifier to predict pixel labels. This plot only demonstrates a 3-layer MPRI, but the number of layers can be increased or decreased flexibly

To the best of our knowledge, apart from performing band selection (e.g., Feng et al., 2015; Yu et al., 2019) or measuring spectral variability (e.g., Chang, 2000), information theoretic principles have seldom been investigated to learn discriminative spectral-spatial features for HSI classification. The most similar work to ours is Kamandar and Ghassemian (2013), in which the authors use the criterion of minimum redundancy maximum relevance (MRMR) (Peng et al., 2005) to extract linear features. However, owing to the poor approximation of multivariate mutual information, the performance of Kamandar and Ghassemian (2013) is only slightly better than the basic linear discriminant analysis (LDA) (Du, 2007).

3.1 Spectral-spatial feature learning unit

Let \(\mathbf {T}\in \mathbb {R}^{m\times n \times d}\) be the raw 3D HSI data cube, where m and n are the spatial dimensions and d is the number of spectral bands. For a target spectral vector \(\mathbf {t}_\star \in \mathbb {R}^d\), we extract a local cube (denoted \(\hat{\mathbf{X}}\)) from \(\mathbf {T}\) using a sliding window of width \(\hat{n}\) centered at \(\mathbf {t}_\star \), i.e., \(\hat{\mathbf{X}}=\{\hat{\mathbf{x}}_1, \hat{\mathbf{x}}_2, \cdots , \hat{\mathbf{x}}_{\hat{N}}\}\in \mathbb {R}^{\hat{N}\times d}\), \(\hat{n}\times \hat{n}=\hat{N}\), and \(\mathbf {t}_\star =\hat{\mathbf{x}}_{\lfloor \hat{n}/2 \rceil +1,\lfloor \hat{n}/2 \rceil +1}\), where \(\lfloor \cdot \rceil \) is the nearest integer function. We obtain the spectral-spatial characterization \(\hat{\mathbf{Y}}=\{\hat{\mathbf{y}}_1, \hat{\mathbf{y}}_2, \cdots , \hat{\mathbf{y}}_{\hat{N}}\}\in \mathbb {R}^{\hat{N}\times d}\) from \(\hat{\mathbf{X}}\) using PRI via the following objective:

\[ \hat{\mathbf{Y}}_{opt}=\arg \min _{\hat{\mathbf{Y}}}\left[ -(1-\beta )\log \left( \frac{1}{\hat{N}^2}\sum _{i,j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_i-\hat{\mathbf{y}}_j)\right) -2\beta \log \left( \frac{1}{\hat{N}^2}\sum _{i,j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_j-\hat{\mathbf{x}}_i)\right) \right] . \tag{7} \]

We finally use the center vector of \(\hat{\mathbf{Y}}\), i.e., \(\hat{\mathbf{y}}_{\lfloor \hat{n}/2 \rceil +1,\lfloor \hat{n}/2 \rceil +1}\), as the new representation of \(\mathbf {t}_\star \). We scan the whole 3D cube with a sliding window of width \(\hat{n}\) targeted at each pixel to get the new spectral-spatial representation. The procedure is depicted in Fig. 3.
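The window extraction step can be sketched as follows. This is a minimal illustration under stated assumptions: the data cube is a nested list `T[row][col]` of spectral vectors, indices are 0-based, the window width is odd, and pixels outside the image are handled by replicating the nearest border pixel. The border rule and the function name `local_cube` are assumptions made here; the text above does not specify how borders are treated.

```python
def local_cube(T, row, col, width):
    """Collect the width x width window centered at (row, col).

    Returns (pixels, center_index): `pixels` is a list of width * width
    spectral vectors in row-major order, and pixels[center_index] is the
    target pixel T[row][col].
    """
    half = width // 2
    m, n = len(T), len(T[0])
    pixels, center_index = [], None
    for r in range(row - half, row + half + 1):
        for c in range(col - half, col + half + 1):
            # Assumption: replicate border pixels for out-of-range positions.
            rr = min(max(r, 0), m - 1)
            cc = min(max(c, 0), n - 1)
            if r == row and c == col:
                center_index = len(pixels)
            pixels.append(T[rr][cc])
    return pixels, center_index

# Toy 4 x 4 cube with a single band whose value encodes the pixel position.
T = [[[4 * r + c] for c in range(4)] for r in range(4)]
window, center = local_cube(T, 1, 1, 3)
print(len(window), window[center])
```

Calling `local_cube` once per pixel and per window width yields the local sets on which PRI is run at each scale.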

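Equation (7) is optimized iteratively. Specifically, denote \(V(\hat{\mathbf{Y}})=\frac{1}{{\hat{N}}^2}\sum _{i,j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_i-\hat{\mathbf{y}}_j)\) and \(V(\hat{\mathbf{Y}}; \hat{\mathbf{X}})=\frac{1}{\hat{N}^2}\sum _{i,j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_j-\hat{\mathbf{x}}_i)\). Taking the derivative of Eq. (7) with respect to \(\hat{\mathbf{y}}_\star \) and equating it to zero, we have (up to a common multiplicative factor):

\[ \frac{1-\beta }{V(\hat{\mathbf{Y}})}\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star -\hat{\mathbf{y}}_j)(\hat{\mathbf{y}}_j-\hat{\mathbf{y}}_\star )+\frac{\beta }{V(\hat{\mathbf{Y}};\hat{\mathbf{X}})}\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star -\hat{\mathbf{x}}_j)(\hat{\mathbf{x}}_j-\hat{\mathbf{y}}_\star )=0. \tag{8} \]

Rearranging Eq. (8), we have:

\[ \frac{\beta }{V(\hat{\mathbf{Y}};\hat{\mathbf{X}})}\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star -\hat{\mathbf{x}}_j)\,\hat{\mathbf{y}}_\star =\frac{1-\beta }{V(\hat{\mathbf{Y}})}\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star -\hat{\mathbf{y}}_j)\hat{\mathbf{y}}_j-\frac{1-\beta }{V(\hat{\mathbf{Y}})}\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star -\hat{\mathbf{y}}_j)\,\hat{\mathbf{y}}_\star +\frac{\beta }{V(\hat{\mathbf{Y}};\hat{\mathbf{X}})}\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star -\hat{\mathbf{x}}_j)\hat{\mathbf{x}}_j. \tag{9} \]

Dividing both sides of Eq. (9) by

\[ \frac{\beta }{V(\hat{\mathbf{Y}};\hat{\mathbf{X}})}\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star -\hat{\mathbf{x}}_j), \tag{10} \]

and letting

\[ c=\frac{V(\hat{\mathbf{Y}};\hat{\mathbf{X}})}{V(\hat{\mathbf{Y}})}, \tag{11} \]

we obtain the fixed point update rule for \(\hat{\mathbf{y}}_\star \):

\[ \hat{\mathbf{y}}_\star (\tau +1)=c\frac{1-\beta }{\beta }\frac{\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star (\tau )-\hat{\mathbf{y}}_j(\tau ))\hat{\mathbf{y}}_j(\tau )}{\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star (\tau )-\hat{\mathbf{x}}_j)}+\frac{\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star (\tau )-\hat{\mathbf{x}}_j)\hat{\mathbf{x}}_j}{\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star (\tau )-\hat{\mathbf{x}}_j)}-c\frac{1-\beta }{\beta }\frac{\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star (\tau )-\hat{\mathbf{y}}_j(\tau ))}{\sum _{j=1}^{\hat{N}}G_\delta (\hat{\mathbf{y}}_\star (\tau )-\hat{\mathbf{x}}_j)}\hat{\mathbf{y}}_\star (\tau ), \tag{12} \]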

where \(\tau \) is the iteration number. We move the sliding window pixel by pixel, and only update the representation of the center target pixel, as shown in Fig. 3.
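The fixed-point rule for the center pixel can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the released MPRI code: \(\hat{\mathbf{Y}}\) is initialized as a copy of \(\hat{\mathbf{X}}\), only the center vector (index `k`) is updated while the other vectors of the window stay fixed, and \(\beta \ne 0\). The names `update_center` and `pri_center` are hypothetical.

```python
import math

def kernel(u, v, delta):
    """Gaussian kernel exp(-||u - v||^2 / (2 * delta^2))."""
    return math.exp(-sum((a - b) ** 2 for a, b in zip(u, v)) / (2.0 * delta ** 2))

def potential(A, B, delta):
    """Mean pairwise kernel between samples A and B."""
    return sum(kernel(a, b, delta) for a in A for b in B) / (len(A) * len(B))

def update_center(Y, X, k, beta, delta):
    """One fixed-point step for Y[k]:
    y_new = c*(1-beta)/beta * (sum_j Gy_j*y_j - (sum_j Gy_j)*y) / sum_j Gx_j
            + (sum_j Gx_j*x_j) / sum_j Gx_j,
    with Gy_j = G(y - y_j), Gx_j = G(y - x_j) and c = V(Y;X) / V(Y).
    """
    y = Y[k]
    c = potential(Y, X, delta) / potential(Y, Y, delta)
    wy = [kernel(y, yj, delta) for yj in Y]
    wx = [kernel(y, xj, delta) for xj in X]
    sy, sx = sum(wy), sum(wx)
    ratio = c * (1.0 - beta) / beta   # requires beta != 0
    new = []
    for d in range(len(y)):
        num_y = sum(w * yj[d] for w, yj in zip(wy, Y))
        num_x = sum(w * xj[d] for w, xj in zip(wx, X))
        new.append(ratio * (num_y - sy * y[d]) / sx + num_x / sx)
    return new

def pri_center(X, k, beta, delta, iters=3):
    """Run `iters` fixed-point steps on the center vector of one window."""
    Y = [list(x) for x in X]          # assumption: initialize Y as X
    for _ in range(iters):
        Y[k] = update_center(Y, X, k, beta, delta)
    return Y[k]

# With beta = 1 the rule reduces to a kernel-weighted mean of the window.
print(pri_center([[0.0], [1.0]], k=0, beta=1.0, delta=1.0, iters=1))
```

For \(\beta =1\) the first and third terms vanish and the step is a mean-shift style weighted average of the window pixels, which matches the clustering regime mentioned in Sect. 2.2.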

We also introduce two modifications to increase the discriminative power of the new representation. First, different values of \(\hat{n}\) (3, 5, 7, 9, 11, 13 in this work) are used to model both local and global structures. Second, to reduce the redundancy of raw features constructed by concatenating PRI representations in multiple scales, we further perform a regularized LDA (Bandos et al., 2009).

Note that, the hyper-parameter \(\beta \) and different values of \(\hat{n}\) play different roles in MPRI. Specifically, \(\beta \) in PRI balances the trade-off between the regularity of extracted representation and its discriminative power to the given data. Therefore, it should be set in a reasonable range to avoid over-smoothing effect of the resulting image and unsatisfactory classification performance. A deeper discussion is shown in Sect. 4.1.1. By contrast, \(\hat{n}\) controls the spatial scale of the learned representation. The motivation is that the discriminative information of different categories may not be easily characterized by a sliding window of a fixed size (i.e., \(\hat{n}\times \hat{n}\)). Thus, it would be favorable if one can incorporate discriminative information from different scales, by changing the value of \(\hat{n}\).

3.2 Stacking multiple units

In order to characterize spectral-spatial structures in a coarse-to-fine manner, we stack multiple spectral-spatial feature learning units described in Sect. 3.1 to constitute a multilayer structure and concatenate representations from each layer to form the final spectral-spatial representation. We finally feed this representation into a standard k-nearest neighbors (KNN) for classification.
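The concatenation and classification step can be illustrated with a short sketch. It assumes the per-layer features are already computed and stored as `layer_features[l][p]` (layer `l`, pixel `p`); the helper names `concat_layers` and `predict_1nn` are hypothetical, and the nearest-neighbor rule uses squared Euclidean distance with \(k=1\), which is one simple choice of KNN.

```python
def concat_layers(layer_features):
    """Concatenate, pixel by pixel, the feature vectors of all layers."""
    n_pixels = len(layer_features[0])
    return [[v for layer in layer_features for v in layer[p]]
            for p in range(n_pixels)]

def predict_1nn(train_features, train_labels, query):
    """Label of the training vector closest to `query` (squared Euclidean)."""
    def sq_dist(i):
        return sum((a - b) ** 2 for a, b in zip(train_features[i], query))
    best = min(range(len(train_features)), key=sq_dist)
    return train_labels[best]

# Two layers, three pixels; pixels 0 and 1 are labeled, pixel 2 is queried.
layers = [
    [[0.0], [1.0], [0.9]],                  # layer 1: one feature per pixel
    [[0.0, 0.1], [1.0, 1.1], [0.8, 1.0]],   # layer 2: two features per pixel
]
features = concat_layers(layers)
print(predict_1nn(features[:2], ["a", "b"], features[2]))
```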

Different from existing DNNs that are typically trained with error backpropagation or the combination of a greedy layer-wise pretraining and a fine-tuning stage, our multilayer structure is trained successively from the bottom layer to the top layer without error backpropagation. For the ith layer, the input of PRI is the representation learned from the previous layer (denoted \(T_{i-1}\)). We then learn the new representation \(T_i\) by iteratively updating \(T_{i-1}\) with Eq. (12), followed by a dimensionality reduction step with LDA at the end of the iterations. As for the multiscale PRI, it can be trained in parallel with respect to different sliding window sizes.

The interpretation of DNN as a way of creating successively better representations of the data has already been suggested and explored by many (e.g., Achille and Soatto, 2018). Most recently, Shwartz-Ziv and Tishby (2017) put forth an interpretation of DNN as creating sufficient representations of the data that are increasingly minimal. For our deep architecture, in order to gain an intuitive understanding of its inner mechanism, we plot the 2D projection (after 1000 iterations of t-SNE; Maaten and Hinton, 2008) of features learned from different layers in Fig. 4. Similar to DNN, MPRI creates successively more faithful and separable representations in deeper layers. Moreover, the deeper features can discriminate the within-class samples in different geographic regions, even though we do not manually incorporate geographic information in the training.

2D projection of features learned by MPRI in different layers on Indian Pines data set. Features of “Woods” in the 1st layer, the 2nd layer, and the 3rd layer are marked with red rectangle in a–c. Similarly, features of “Grass-pasture” are marked with magenta ellipses in d–f. g The locations of “Region 1” and “Region 2”. h shows the locations of “Region 3”, “Region 4” and “Region 5”. i shows class legend

4 Experimental results

We conduct three groups of experiments to demonstrate the effectiveness and superiority of the MPRI. Specifically, we first perform a simple test to determine a reliable range for the value of \(\beta \) in PRI and the number of layers in MPRI. Then, we implement MPRI and several of its degraded variants to analyze and evaluate component-wise contributions to performance gain. Finally, we evaluate MPRI against state-of-the-art methods on benchmark data sets using both visual and quantitative evaluations.

Three popular data sets, namely the Indian Pines (Baumgardner et al., 2015), the Pavia University and the Pavia Center, are selected in this work. We summarize the properties of each data set in Table 1.

1. The first image, displayed in Fig. 5a, is called Indian Pines. It was gathered by the airborne visible/infrared imaging spectrometer (AVIRIS) sensor over the agricultural Indian Pines test site in northwestern Indiana, United States. The size of this image is \(145\times 145\) pixels with a spatial resolution of 20 m. The low spatial resolution leads to the presence of highly mixed pixels (Ghamisi et al., 2014). A three-band false color image and the ground-truth map are shown in Fig. 5a, b, where there are 16 classes of interest. The name and quantity of each class are reported in Fig. 5c. The number of bands has been reduced to 200 by removing 20 bands covering the region of water absorption. This scene constitutes a challenging classification problem due to the significant presence of mixed pixels in all available classes and the unbalanced number of available labeled pixels per class (Li et al., 2013).

2. The second image is the Pavia University, which was recorded by the reflective optics spectrographic imaging system (ROSIS) sensor during a flight campaign over Pavia, northern Italy. This scene has \(610\times 340\) pixels with a spatial resolution of 1.3 m (covering the wavelength range from 0.4 to 0.9 \(\upmu \)m). There are 9 ground-truth classes, including trees, asphalt, bitumen, gravel, metal sheet, shadow, bricks, meadow, and soil. In our experiments, 12 noisy bands have been removed and finally 103 out of the 115 bands were used. The class descriptions and sample distributions for this image are given in Fig. 6c. As can be seen, the total number of labeled samples in this image is 43,923. A three-band false color image and the ground-truth map are also shown in Fig. 6.

3. The third data set is Pavia Center. It was acquired by the ROSIS-3 sensor in 2003, with a spatial resolution of 1.3 m and 102 spectral bands (some bands have been removed due to noise). A three-band false color image and the ground-truth map are also shown in Fig. 7a, b. The number of ground truth classes is 9 (see Fig. 7) and the image consists of \(1096\times 492\) pixels. The number of samples of each class ranges from 2108 to 65,278 (Fig. 7e). There are 5536 training samples and 98,015 testing samples (Fig. 7c, d). Note that these training samples are not included in the testing samples.

Three metrics are used for quantitative evaluation (Cao et al., 2018): overall accuracy (OA), average accuracy (AA) and the kappa coefficient \(\kappa \). OA is computed as the percentage of correctly classified test pixels, AA is the mean of the percentage of correctly classified pixels for each class, and \(\kappa \) involves both omission and commission errors and gives a good representation of the overall performance of the classifier.
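As a concrete illustration, the three metrics can be computed from a confusion matrix as sketched below. The function name `classification_metrics` is hypothetical, and the sketch assumes every class has at least one test pixel (otherwise the per-class accuracy is undefined). The kappa coefficient is taken in its usual Cohen form, \(\kappa =(p_o-p_e)/(1-p_e)\), where \(p_o\) is OA and \(p_e\) is the agreement expected by chance.

```python
def classification_metrics(confusion):
    """Return (OA, AA, kappa) from a square confusion matrix.

    confusion[i][j] = number of test pixels of true class i predicted as j.
    """
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(row[j] for row in confusion) for j in range(k)]
    correct = sum(confusion[i][i] for i in range(k))

    oa = correct / total
    aa = sum(confusion[i][i] / row_sums[i] for i in range(k)) / k
    # Chance agreement from the marginals of the confusion matrix.
    expected = sum(row_sums[i] * col_sums[i] for i in range(k)) / total ** 2
    kappa = (oa - expected) / (1.0 - expected)
    return oa, aa, kappa

# Two classes with 10 test pixels each.
print(classification_metrics([[8, 2], [1, 9]]))
```

Because AA averages per-class accuracies with equal weight, it is more sensitive than OA to errors on classes with few samples.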

For our method, the values of the kernel width \(\delta \) in PRI were tuned around the multivariate Silverman’s rule-of-thumb (Silverman, 1986): \((\frac{4}{d+2})^{\frac{1}{d+4}} s^{\frac{-1}{4+d}}\sigma _1\le \delta \le (\frac{4}{d+2})^{\frac{1}{d+4}} s^{\frac{-1}{4+d}} \sigma _2\), where s is the sample size, d is the variable dimensionality, \(\sigma _1\) and \(\sigma _2\) are respectively the smallest and the largest standard deviation among each dimension of the variable. For example, in Indian Pines data set, the estimated range in the 5th layer corresponds to [0.05, 0.51], and we set kernel width to 0.4. On the other hand, the PRI in each layer is optimized with \(\tau =3\) iterations, which has been observed to be sufficient to provide desirable performance.
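The search range for the kernel width can be computed as in the sketch below. It follows the stated rule with s the number of samples and d the number of feature dimensions; using the sample standard deviation (`statistics.stdev`, with the n-1 denominator) is an assumption, and the function name `silverman_range` is hypothetical.

```python
import statistics

def silverman_range(samples):
    """Lower and upper bounds for delta from Silverman's rule-of-thumb.

    samples: list of s feature vectors, each of dimension d.
    """
    s = len(samples)
    d = len(samples[0])
    factor = (4.0 / (d + 2)) ** (1.0 / (d + 4)) * s ** (-1.0 / (d + 4))
    # Per-dimension standard deviations (assumption: sample std, n - 1).
    stds = [statistics.stdev(column) for column in zip(*samples)]
    return factor * min(stds), factor * max(stds)

# Three 2D samples whose dimensions have standard deviations 1 and 2.
low, high = silverman_range([(0.0, 0.0), (1.0, 2.0), (2.0, 4.0)])
print(low, high)
```

A kernel width is then picked inside `[low, high]`, for instance by a small grid search on validation accuracy.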

4.1 Parameter analysis

4.1.1 Effects of parameter \(\beta \) in PRI

The parameter \(\beta \) in PRI balances the trade-off between the regularity of the extracted representation and its discriminative power to the given data. We illustrate the values of OA, AA, and \(\kappa \) for MPRI with respect to different values of \(\beta \) in Fig. 8a. As can be seen, these quantitative values are initially stable, but decrease when \(\beta \ge 3\). Moreover, the value of AA drops more drastically than that of OA or \(\kappa \). A likely interpretation is that when training samples are limited, many classes have only a few labeled samples (\(\sim 1\) for minority classes, such as Oats, Grass-pasture-mowed, and Alfalfa). An unreasonable value of \(\beta \) may severely influence the classification accuracy in these classes, thereby decreasing AA first.

The corresponding classification maps are shown in Fig. 9. Clearly, the smaller the \(\beta \), the smoother the results achieved by MPRI. This is because a large \(\beta \) encourages a small divergence between the extracted representation and the original HSI data. For example, in the scenario of \(\beta =0\), PRI clusters both spectral and spatial structures into a single point (the data mean) that has no discriminative power. By contrast, in the scenario of \(\beta \rightarrow \infty \), the extracted representation gets back to the HSI data itself (to minimize their divergence) such that PRI will fit all noisy and irregular structures.

From the above analysis, extremely large and extremely small values of \(\beta \) are not of interest for HSI classification. Moreover, the results also suggest that \(\beta \in [2, 4]\) strikes a good trade-off between preserving relevant spatial information (such as edges in classification maps) and filtering out unnecessary information. Unless otherwise specified, the PRI mentioned in the following experiments uses three different values of \(\beta \), i.e., \(\beta =2\), \(\beta =3\), and \(\beta =4\). The final representation of PRI is formed by concatenating representations obtained from each \(\beta \).

4.1.2 Effects of the number of layers

We then illustrate the values of OA, AA and \(\kappa \) for MPRI with respect to different number of layers in Fig. 8b. The corresponding classification maps are shown in Fig. 10. Similar to existing deep architectures, stacking more layers (in a reasonable range) can increase performance. If we keep the input data size the same, more layers (beyond a certain layer number) will not increase the performance anymore and the classification maps become over-smooth. This work uses a 5-layer MPRI because it provides favorable visual and quantitative results.

4.1.3 Effects of the classifier

MPRI uses the basic KNN for classification and sets \(k=1\) throughout this work. The purpose is to validate the discriminative power of the spectral-spatial features extracted by multiple layers of PRI. To further confirm that the superiority of MPRI is independent of the classifier used, we also evaluate the performance of MPRI and three other feature extraction methods for HSI classification (EPF Kang et al., 2014, SADL Soltani-Farani et al., 2015, PCA-EPF Kang et al., 2017) with a kernel SVM as the baseline classifier. The kernel size \(\sigma \) is tuned by cross-validation from the set \(\{0.0001, 0.001, 0.01, 0.1, 1, 10, 100\}\). The best performance is summarized in Table 2. As can be seen, KNN and SVM always lead to comparable results. Our MPRI is consistently better than other competitors, regardless of the classifier used.

4.2 Evaluation on component-wise contributions

Before systematically evaluating the performance of MPRI, we first compare it with its degraded baseline variants to demonstrate the component-wise contributions to the performance gain. The results are summarized in Table 3. As can be seen, models that only consider one attribute (i.e., multi-layer, multi-scale or multi-\(\beta \)) improve the performance marginally. Moreover, it is interesting to find that multi-layer and multi-scale play more significant roles than multi-\(\beta \). One possible reason is that the representations learned from different \(\beta \) contain redundant information with respect to class labels. However, either the combination of multi-layer and multi-\(\beta \) or the combination of multi-scale and multi-\(\beta \) can obtain remarkable improvements. Our MPRI performs the best as expected. This result indicates that multi-layer, multi-scale and multi-\(\beta \) are all important for the problem of HSI classification.

4.3 Comparison with state-of-the-art methods

Having illustrated component-wise contributions of MPRI, we compare it with several state-of-the-art methods, including EPF (Kang et al., 2014), MPM-LBP (Li et al., 2013), SADL (Soltani-Farani et al., 2015), MFL (Li et al., 2015), PCA-EPF (Kang et al., 2017), HIFI (Pan et al., 2017), hybrid spectral convolutional neural network (HybridSN) (Roy et al., 2020), similarity-preserving deep features (SPDF) (Fang et al., 2019), convolutional neural network with Markov random fields (CNN-MRF) (Cao et al., 2018), local covariance matrix representation (LCMR) (Fang et al., 2018), and random patches network (RPNet) (Xu et al., 2018).

Tables 4, 5 and 6 summarize the quantitative evaluation results of different methods. For each method, we report its classification accuracy in each land cover category as well as the overall OA, AA and \(\kappa \) values across all categories. To avoid biased evaluation, we average the results from 10 independent runs (except for the Pavia Center data set, in which the training and testing samples are fixed). MPRI achieves the best or the second best performance in most items. These results suggest that MPRI is able to learn more discriminative spectral-spatial features than its counterparts using classical machine learning models.

The classification maps of different methods on the three data sets are shown in Figs. 11, 12 and 13, which further corroborate the above quantitative evaluations. The results of EPF and MPM-LBP are omitted due to their relatively lower quantitative evaluations. It is easy to observe that our proposed MPRI significantly improves the region uniformity (see the small region marked with dashed border) and the edge preservation (see the small region marked by solid line rectangles); both criteria are critical for evaluating classification maps (Kang et al., 2017). By contrast, other methods either fail to preserve local details (such as edges) of different classes (e.g., MFL) or generate noise in the uniform regions (e.g., SADL, PCA-EPF and HIFI).

To evaluate the robustness of our method with respect to the number of training samples, we demonstrate, in Fig. 14, the OA values of different methods in a range of the percentage of training samples per class. As can be expected, the more training samples, the better classification performance. However, MPRI is consistently superior to its counterparts, especially when the training samples are limited.

4.4 Computational complexity analysis

We finally investigate the computational complexity of different sliding window filtering based HSI classification methods. Note that, PRI can also be interpreted as a special kind of filtering, as the center pixel representation is determined by its surrounding pixels with a Gaussian weight [see Eq. (12)].

The computational complexity and the averaged running time on each pixel (in s) of different methods are summarized in Table 7. For PCA-EPF, \({\tilde{d}}\) is the dimension of averaged images, \({\hat{S}}\) is the number of different filter parameter settings, \({\hat{T}}\) is the number of iterations. For HIFI, d is the number of hyperspectral bands, \(\hat{H}\) is the number of the hierarchies. For MPRI, L, S, and B are respectively the numbers of layers, scales and betas. Usually, \({\tilde{d}}\) is set to 16, \({\hat{S}}\) is set to 3, and \({\hat{T}}\) is set to 3, which makes PCA-EPF very fast.

According to Eq. (7), the computational complexity of PRI grows quadratically with data size (i.e., \(\hat{N}\)). Although one can simply apply a rank-deficient approximation to the Gram matrix for efficient computation of PRI, this strategy is preferable only when \(l\ll \hat{N}\), where l is the square of the number of subsamples used to approximate the original Gram matrix (Sánchez Giraldo and Príncipe, 2011). In our application, \(\hat{N}\) is at most a few hundred (\(\sim 169\) at most), whereas we always need to set \(l\ge 25\) to guarantee a non-decreasing accuracy. From Table 7, the reduction in computational cost achieved by the Gram matrix approximation is marginal. Moreover, as shown in Fig. 15, such an approximation is prone to cause an over-smoothing effect.

Finally, one should note that, although MPRI takes more time than its sliding window filtering based counterparts, it is still much less time-consuming than prevalent DNN based methods. For example, CNN-MRF takes more than 6,000 s (on a PC equipped with a single 1080 Ti GPU, i7 8700k CPU and 64 GB RAM) to train a CNN model using \(2\%\) labeled data on the Indian Pines data set with 10x data augmentation.

5 Conclusions

This paper proposes the multiscale principle of relevant information (MPRI) for hyperspectral image (HSI) classification. MPRI uses PRI, an unsupervised information-theoretic learning principle that aims to perform mode decomposition of a random variable X with a known (and fixed) probability distribution g by a hyperparameter \(\beta \), as the basic building block. It integrates multiple such blocks into a multiscale (by using sliding windows of different sizes) and multilayer (by stacking PRI successively) structure to extract spectral-spatial features of HSI data in a coarse-to-fine manner. Different from existing deep neural networks, MPRI can be efficiently trained in a greedy layer-wise manner without error backpropagation. Empirical evidence indicates that \(\beta \in [2,4]\) in PRI is able to balance the trade-off between the regularity of the extracted representation and its discriminative power to HSI data. Comparative studies on three benchmark data sets demonstrate that MPRI is able to learn discriminative representations from 3D spatial-spectral data, with significantly fewer training samples. Moreover, MPRI enjoys an intuitive geometric interpretation, and it also promotes the region uniformity and edge preservation of classification maps. In the future, we intend to speed up the optimization of PRI. In this line of research, random Fourier features (Rahimi & Recht, 2008) seem to be a promising avenue.

References

Achille, A., & Soatto, S. (2018). Information dropout: Learning optimal representations through noisy computation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(12), 2897–2905.

Bandos, T., Bruzzone, L., & Camps-Valls, G. (2009). Classification of hyperspectral images with regularized linear discriminant analysis. IEEE Transactions on Geoscience and Remote Sensing, 47(3), 862–873.

Baumgardner, M. F., Biehl, L. L., & Landgrebe, D. A. (2015). 220 band AVIRIS hyperspectral image data set: June 12, 1992 Indian Pine Test Site 3. https://doi.org/10.4231/R7RX991C

Cao, X., Zhou, F., Xu, L., Meng, D., Xu, Z., & Paisley, J. (2018). Hyperspectral image classification with Markov random fields and a convolutional neural network. IEEE Transactions on Image Processing, 27(5), 2354–2367.

Chang, C. I. (2000). An information-theoretic approach to spectral variability, similarity, and discrimination for hyperspectral image analysis. IEEE Transactions on Information Theory, 46(5), 1927–1932.

Chen, Y., Jiang, H., Li, C., Jia, X., & Ghamisi, P. (2016). Deep feature extraction and classification of hyperspectral images based on convolutional neural networks. IEEE Transactions on Geoscience and Remote Sensing, 54(10), 6232–6251.

Chen, Y., Lin, Z., Zhao, X., Wang, G., & Gu, Y. (2014). Deep learning-based classification of hyperspectral data. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 7(6), 2094–2107.

Chen, Y., Nasrabadi, N. M., & Tran, T. D. (2011). Hyperspectral image classification using dictionary-based sparse representation. IEEE Transactions on Geoscience and Remote Sensing, 49(10), 3973–3985.

Chernoff, H. (1952). A measure of asymptotic efficiency for tests of a hypothesis based on the sum of observations. The Annals of Mathematical Statistics, 23(4), 493–507.

Du, Q. (2007). Modified Fisher’s linear discriminant analysis for hyperspectral imagery. IEEE Geoscience and Remote Sensing Letters, 4(4), 503–507.

Fang, L., He, N., Li, S., Plaza, A. J., & Plaza, J. (2018). A new spatial-spectral feature extraction method for hyperspectral images using local covariance matrix representation. IEEE Transactions on Geoscience and Remote Sensing, 56(6), 3534–3546.

Fang, L., Liu, Z., & Song, W. (2019). Deep hashing neural networks for hyperspectral image feature extraction. IEEE Geoscience and Remote Sensing Letters, 16(9), 1412–1416.

Feng, J., Jiao, L., Liu, F., Sun, T., & Zhang, X. (2015). Mutual-information-based semi-supervised hyperspectral band selection with high discrimination, high information, and low redundancy. IEEE Transactions on Geoscience and Remote Sensing, 53(5), 2956–2969.

Ghamisi, P., Benediktsson, J. A., Cavallaro, G., & Plaza, A. (2014). Automatic framework for spectral-spatial classification based on supervised feature extraction and morphological attribute profiles. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 7(6), 2147–2160.

Ghamisi, P., Dalla Mura, M., & Benediktsson, J. A. (2015). A survey on spectral-spatial classification techniques based on attribute profiles. IEEE Transactions on Geoscience and Remote Sensing, 53(5), 2335–2353.

Ghamisi, P., Yokoya, N., Li, J., Liao, W., Liu, S., Plaza, J., et al. (2017). Advances in hyperspectral image and signal processing: A comprehensive overview of the state of the art. IEEE Geoscience and Remote Sensing Magazine, 5(4), 37–78.

Hastie, T., & Stuetzle, W. (1989). Principal curves. Journal of the American Statistical Association, 84(406), 502–516.

He, L., Li, J., Liu, C., & Li, S. (2018). Recent advances on spectral-spatial hyperspectral image classification: An overview and new guidelines. IEEE Transactions on Geoscience and Remote Sensing, 56(3), 1579–1597.

Jenssen, R., Principe, J. C., Erdogmus, D., & Eltoft, T. (2006). The Cauchy-Schwarz divergence and Parzen windowing: Connections to graph theory and Mercer kernels. Journal of the Franklin Institute, 343(6), 614–629.

Kamandar, M., & Ghassemian, H. (2013). Linear feature extraction for hyperspectral images based on information theoretic learning. IEEE Geoscience and Remote Sensing Letters, 10(4), 702–706.

Kang, X., Li, S., & Benediktsson, J. A. (2014). Spectral-spatial hyperspectral image classification with edge-preserving filtering. IEEE Transactions on Geoscience and Remote Sensing, 52(5), 2666–2677.

Kang, X., Xiang, X., Li, S., & Benediktsson, J. A. (2017). PCA-based edge-preserving features for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 55(12), 7140–7151.

Kullback, S., & Leibler, R. A. (1951). On information and sufficiency. The Annals of Mathematical Statistics, 22(1), 79–86.

Li, J., Bioucas-Dias, J. M., & Plaza, A. (2013). Spectral-spatial classification of hyperspectral data using loopy belief propagation and active learning. IEEE Transactions on Geoscience and Remote Sensing, 51(2), 844–856.

Li, J., Huang, X., Gamba, P., Bioucas-Dias, J. M., Zhang, L., Benediktsson, J. A., & Plaza, A. (2015). Multiple feature learning for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 53(3), 1592–1606.

Li, Y., Zhang, H., & Shen, Q. (2017). Spectral-spatial classification of hyperspectral imagery with 3D convolutional neural network. Remote Sensing, 9(1), 67.

Ma, X., Wang, H., & Geng, J. (2016). Spectral-spatial classification of hyperspectral image based on deep auto-encoder. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 9(9), 4073–4085.

Maaten, L. V. D., & Hinton, G. (2008). Visualizing data using t-SNE. Journal of Machine Learning Research, 9, 2579–2605.

Pan, B., Shi, Z., & Xu, X. (2017). Hierarchical guidance filtering-based ensemble classification for hyperspectral images. IEEE Transactions on Geoscience and Remote Sensing, 55(7), 4177–4189.

Parzen, E. (1962). On estimation of a probability density function and mode. The Annals of Mathematical Statistics, 33(3), 1065–1076.

Peng, H., Long, F., & Ding, C. (2005). Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Transactions on Pattern Analysis and Machine Intelligence, 27(8), 1226–1238.

Principe, J. C. (2010). Information theoretic learning: Renyi’s entropy and kernel perspectives. New York: Springer.

Rahimi, A., & Recht, B. (2008). Random features for large-scale kernel machines. In Advances in neural information processing systems (pp. 1177–1184).

Rao, S. M. (2008). Unsupervised learning: An information theoretic framework. Ph.D. thesis, University of Florida.

Rényi, A. (1961). On measures of entropy and information. In Proceedings of the fourth Berkeley symposium on mathematical statistics and probability, volume 1: Contributions to the theory of statistics. The Regents of the University of California.

Roy, S. K., Krishna, G., Dubey, S. R., & Chaudhuri, B. B. (2020). HybridSN: Exploring 3-D–2-D CNN feature hierarchy for hyperspectral image classification. IEEE Geoscience and Remote Sensing Letters, 17(2), 277–281.

Sánchez Giraldo, L. G., & Príncipe, J. C. (2011). An efficient rank-deficient computation of the principle of relevant information. In 2011 IEEE international conference on acoustics, speech and signal processing (ICASSP) (pp. 2176–2179). IEEE.

Shwartz-Ziv, R., & Tishby, N. (2017). Opening the black box of deep neural networks via information. arXiv preprint arXiv:1703.00810.

Silverman, B. W. (1986). Density estimation for statistics and data analysis. London: Chapman & Hall.

Soltani-Farani, A., Rabiee, H. R., & Hosseini, S. A. (2015). Spatial-aware dictionary learning for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 53(1), 527–541.

Tishby, N., et al. (2000). The information bottleneck method. arXiv preprint arXiv:physics/0004057.

Xu, Y., Du, B., Zhang, F., & Zhang, L. (2018). Hyperspectral image classification via a random patches network. ISPRS Journal of Photogrammetry and Remote Sensing, 142, 344–357.

Yang, X., Ye, Y., Li, X., Lau, R. Y., Zhang, X., & Huang, X. (2018). Hyperspectral image classification with deep learning models. IEEE Transactions on Geoscience and Remote Sensing, 56(9), 5408–5423.

Yu, S., Sanchez Giraldo, L. G., Jenssen, R., & Principe, J. C. (2019). Multivariate extension of matrix-based Renyi’s \(\alpha \)-order entropy functional. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(11), 2960–2966.

Zare, A., Jiao, C., & Glenn, T. (2018). Discriminative multiple instance hyperspectral target characterization. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(10), 2342–2354.

Zhong, Z., Li, J., Luo, Z., & Chapman, M. (2018). Spectral-spatial residual network for hyperspectral image classification: A 3-d deep learning framework. IEEE Transactions on Geoscience and Remote Sensing, 56(2), 847–858.

Zhu, X. X., Tuia, D., Mou, L., Xia, G. S., Zhang, L., Xu, F., & Fraundorfer, F. (2017). Deep learning in remote sensing: a comprehensive review and list of resources. IEEE Geoscience and Remote Sensing Magazine, 5(4), 8–36.

Acknowledgements

This research was funded by the National Natural Science Foundation of China under Grant 61502195, the Humanities and Social Sciences Foundation of the Ministry of Education under Grant 20YJC880100, the Natural Science Foundation of Hubei Province under Grant 2018CFB691, the Fundamental Research Funds for the Central Universities under Grant CCNU20TD005, and the Open Fund of Hubei Research Center for Educational Informationization, Central China Normal University under Grant HRCEI2020F0101.

Editors: Dino Ienco, Thomas Corpetti, Minh-Tan Pham, Sebastien Lefevre, Roberto Interdonato.

Cite this article

Wei, Y., Yu, S., Giraldo, L.S. et al. Multiscale principle of relevant information for hyperspectral image classification. Mach Learn 112, 1227–1252 (2023). https://doi.org/10.1007/s10994-021-06011-9

DOI: https://doi.org/10.1007/s10994-021-06011-9