Abstract

In many linear regression problems, including ill-posed inverse problems in image restoration, the data exhibit some sparse structures that can be used to regularize the inversion. To this end, a classical path is to use \(\ell _{12}\) block-based regularization. While efficient at retrieving the inherent sparsity patterns of the data—the support—the estimated solutions are known to suffer from a systematical bias. We propose a general framework for removing this artifact by refitting the solution toward the data while preserving key features of its structure such as the support. This is done through the use of refitting block penalties that only act on the support of the estimated solution. Based on an analysis of related works in the literature, we introduce a new penalty that is well suited for refitting purposes. We also present a new algorithm to obtain the refitted solution along with the original (biased) solution for any convex refitting block penalty. Experiments illustrate the good behavior of the proposed block penalty for refitting solutions of total variation and total generalized variation models.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider linear inverse problems of the form \(y = \varPhi x + w\), where \(y \in {\mathbb {R}}^p\) is an observed degraded image, \(x \in {\mathbb {R}}^n\) the unknown clean image, \(\varPhi : {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}^p\) a linear operator and \(w \in {\mathbb {R}}^p\) a noise component, typically a zero-mean white Gaussian random vector with standard deviation \(\sigma > 0\). To reduce the effect of noise and the potential ill-conditioning of \(\varPhi \), we consider a regularized least squares problem with a sparse analysis regularization term based on an \(\ell _{12}\) block penalty of the form

where \(\lambda > 0\) is a regularization parameter, \(\varGamma : {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}^{m \times b}\) is a linear analysis operator mapping an image over m blocks of size b, and for \(z \in {\mathbb {R}}^{m \times b}\)

with \(z_i=(z_{i,j})_{j=1}^b\in {\mathbb {R}}^b\). Note that, the terminology sparse analysis is used here by opposition to sparse synthesis models as discussed in the seminal work of [27]. The first term in (1) is a data fidelity term enforcing x to be close to y through \(\varPhi \), while the second term enforces the so-called group sparsity on x (sometimes referred to as joint sparsity, block sparsity or structured sparsity) capturing the organization of the data as encoded by \(\varGamma \), see for instance [1].

1.1 Related Examples

A typical example is the Lasso (Least absolute shrinkage and selection operator) [48]. The Lasso is a statistical procedure used for variable selection and relying on regularized linear least square regression as expressed in eq. (1) in which \(\varGamma = {\mathrm {Id}}\), \(m = n\) and \(b = 1\). The Lasso is known to promote sparse solutions, i.e., such that \({\hat{x}}_k = 0\) for most indices \(1 \le k \le n\). Since blocks are of size \(b=1\), the regularization term boils down to the classical \(\ell _1\) sparsity term that is unstructured as no interactions between the elements of \({\hat{x}}\) are considered. In this paper, we will focus instead on cases of block penalties where \(b > 1\). The group Lasso [32, 56] is one of them, for which \(\varGamma \) is designed to reorganize the elements \({\hat{x}}_k\) into groups \({\hat{z}}_i = (\varGamma {\hat{x}})_i\) supposedly meaningful according to some prior knowledge on the data. The group Lasso is known to promote block sparse solutions, i.e., such that \({\hat{z}}_i = 0_b\) for most of the groups \(1 \le i \le m\). Note that elements within a nonzero group \({\hat{z}}_i \ne 0_b\) are not required to be sparse.

Regarding image restoration applications, the authors of [38] use an \(\ell _{12}\) regularization term where \(\varGamma \) extracts \(m=n\) overlapping blocks of wavelet coefficients of size b or where \(\varGamma \) use a dyadic decomposition of the wavelet coefficients into blocks of variable size but non-overlapping [39]. Such strategy was also used in audio processing [55] for denoising. Another example that we will investigate here is one of the total-variations (TVs) [43]. We can distinguish two different forms of TV models. Anisotropic total-variation (TVaniso) [28], considers \(\varGamma \) the operator which concatenates the vertical and horizontal components of the discrete gradients into a vector of size \(m=2n\), hence \(b=1\). Isotropic total-variation (TViso) considers instead \(\varGamma = \nabla \) being the operator which extracts \(m=n\) discrete image gradient vectors of size \(b = 2\). Unlike TVaniso, TViso jointly enforces vertical and horizontal components of the gradient to be simultaneously zero. Since TVaniso does not take into account interactions between both directions, it over favors vertical and horizontal structures while TViso behaves similarly in all directions, hence their name [28]. Both models promote sparsity of the discrete gradient field of the image, and, as a result, their solutions are piece-wise constant. A major difference is that TVaniso favors constant regions that are rectangular-like shaped and separated by sharp edges. On the other hand, TViso favors constant regions that are round-like shaped, as it has been shown by studying the properties of TV reconstructions in terms of extreme points of the level sets of the regularization functional [4, 5, 12]. In practice, when considering classical discretizations, constant areas of TViso solutions are separated by fast but gradual transitions. These smooth transitions only appear in particular directions that depend on the considered discretization scheme on the regular image grid (see [11] for a detailed analysis). These numerical artifacts can be solved with more advanced schemes [11, 14, 19].

Nevertheless, as TV is designed to promote piece-wise constant solutions, it is known to produce staircasing artifacts that are all the more harmful as the images contain shaded objects [15, 24]. To reduce this effect, the authors of [34] suggested using an \(\ell _{12}\) block sparsity term not only by grouping vertical and horizontal components of the gradient, but by grouping neighboring gradients in overlapping patches. An alternative to reduce staircasing that we will also investigate here is the second-order Total Generalized Variation (TGV) model [6] that promotes piece-wise affine solutions. As we will see, TGV is another example of models that falls into this type of least squares problems regularized with a sparse analysis term based on \(\ell _{12}\) block penalties. Sparsity in that case encodes that sought images are composed of few shaded regions with few variations of slopes and separated by edges.

1.2 Support of the Solution

Solutions of the sparse analysis regularization model in (1) are known to be sparse [27, 36], i.e., such that \((\varGamma x)_i = 0_b\) for most blocks \(1 \le i \le m\). It results that a key notion, central to all of these estimators, is the one of support, i.e., the set of nonzero blocks in \(\varGamma {\hat{x}}\), defined as

For the group Lasso, the support is typically used to identify groups of covariates (columns of \(\varPhi \)) being explanatory variables for the dependent variable y (i.e., significantly correlated with y). For TViso, the support is the set of pixel indices where transitions occur in the restored image. In general, the support plays an important role as it captures the intrinsic structural information underlying the data. While being biased, in practice, the estimate \(\hat{x}\) obtained by sparse analysis regularization (1) recover quite correctly the support \({{\,\mathrm{supp}\,}}(\varGamma x)\) of the underlying sparse signal x. Under some additional assumptions, support recovery is even proven to be exact as proved in [52] for \(b=1\) (anisotropic case) and [53] for \(b \ge 1\).

1.3 Bias of the Solution

Though the support \({\hat{{\mathcal {I}}}}\) of \(\hat{x}\) can be very close to the one of the sought image x, the estimated amplitudes \(\hat{x}_i\) suffers from a systematical bias. When \(\varPhi = {\mathrm {Id}}\), the Lasso corresponds to the soft-thresholding (ST) operator

for which all nonzero elements of the solutions are shrinked toward 0 by a shift \(\pm \lambda \) resulting to under- and over-estimated values. With TViso, this bias is reflected by a loss of contrast in the image since the amplitudes of some regions are regressed toward the mean of the image [45, 50, 51]. In TGV, not only a loss of contrast results from this bias, but we observe that the slopes in areas of transitions are often mis-estimated [30].

1.4 Boosting Approaches

Given the artifacts induced by the \(\ell _{12}\) sparse regularization, many approaches have been developed to re-enhance the quality of the solutions, e.g., to reduce the loss of contrast and staircasing of TViso. We refer to these approaches as boosting. Most of them consist in solving (1) iteratively based on the residue \(\varPhi \hat{x} - y\), or a related quantity, obtained during the previous iterations. Among them, the well-known Bregman iterations [37] is often considered to recover part of the loss of contrast for TViso. Other related procedures are twicing [49], boosting with the \(\ell _2\) loss [8], unsharp residual iteration [16], SAIF-boosting [35, 47], ideal spectral filtering in the analysis sense [29] and SOS-boosting [42]. While these approaches reduce the bias in the estimated amplitudes, the support \({\hat{{\mathcal {I}}}}\) of the original solution is not guaranteed to be preserved in the boosted solution, even though this one may correspond to the support of the sought image x.

1.5 Projection on the Support

Given the key role of the support of solutions of (1), we believe that it is of main importance that a re-enhanced solution \({\tilde{x}}\) preserves it, i.e., such that \({{\,\mathrm{supp}\,}}(\varGamma x) \subseteq \hat{{\mathcal {I}}}\). For this reason, we focus on refitting strategies that, unlike boosting, reduce the bias while preserving the support of the original solution. In the Lasso (\(\varGamma ={\mathrm {Id}}\), \(m=n\) and \(b=1\)) [48], a well-known refitting scheme consists in performing a posteriori a least-square re-estimation of the nonzero coefficients of the solution. This post-refitting technique became popular under various names in the statistical literature: Hybrid Lasso [26], Lasso-Gauss [41], OLS post-Lasso [3], Debiased Lasso (see [3, 31] for extensive details on the subject). Such approaches consists in approximating y through \(\varPhi \) by an image sharing the same support as \(\hat{x}\):

where \(\hat{{\mathcal {I}}} = {{\,\mathrm{supp}\,}}(\varGamma \hat{x})\). While this strategy works well for blocks of size \(b=1\), e.g., for the Lasso or TVaniso, it suffers from an excessive increase in variance whenever \(b\ge 2\) (see [22] for illustrations on TViso). This is due to the fact that solutions do not only present sharp edges, but may involve larger regions of gradual transitions where the debiasing may be too strong by re-introducing too much noise. To cope with this issue, additional features of \({\hat{x}}\) than its support must be also preserved by a refitting procedure.

1.6 Advanced Refitting Strategies

For the Lasso, it has been observed that a pointwise preservation of the sign of \({\hat{x}}_i\) onto the support improves the numerical performances of the refitting [17]. For \(b=2\) and TViso like models, the joint projection on the support with conservation of the direction (or orientation) of \((\varGamma {\hat{x}})_i\) has been proposed in [7]. Extension to second-order regularization such as TGV [6] are investigated in [10] in the context of partially order spaces and approximate operators \(\varPhi \). In a parallel line of research, it has been proposed in [54] to respect the inclusion of the level lines of \({\hat{x}}\) in the refitting by solving an isotonic regression problem. All these models are constrained to respect exactly the orientation \((\varGamma {\hat{x}})_i\) of the biased solution on elements of the support, i.e., when \(i \in {\hat{{\mathcal {I}}}}\). In [22, 40], an alternative approach, based on the preservation of covariant information between \({\hat{x}}\) and y, aims only at preserving the orientation \((\varGamma {\hat{x}})_i\) to some extent. While also respecting the support of \({\hat{x}}\), this gives more flexibility for the refitted solution to correct \({\hat{x}}\) and adapt to the data content y. This model is nevertheless insensitive to the direction, and it involves a quadratic penalty that tends to promote over-smoothed refitting.

1.7 Outline and Contributions

In Sect. 2, we present a general framework for refitting solutions promoted by \(\ell _{12}\) sparse regularization (1) that extends a preliminary version of this work [23]. Our variational refitting method relies on the use of block penalty functions that act on the support of the biased solution \({\hat{x}}\). We introduce the Soft-penalized Direction model (SD), while discussing suitable properties a refitting block penalty should satisfy.

In Sect. 3, we propose stable algorithms to compute our refitting strategy for any convex refitting block penalty.

We show in Sect. 4 how our model relates and inherits the advantages of other methods such as Bregman iterations [37] or de-biasing approaches [7, 22].

Experiments in Sect. 5 exhibit the practical benefits for the SD refitting for imaging problems involving TViso, a variant of TViso for color images and TGV-based regularization.

With respect to the short paper [23], the contributions are as follows. We propose new block penalties and a deep analysis of their properties. While providing technical information on the implementation of particular block penalties, we also detail how the process can be generalized to arbitrary convex functions. The refitting scheme has been extended to another optimization algorithm, and a TGV-based regularization model is finally proposed (5.4) and experimented (Fig. 7).

2 Refitting with Block Penalties

The refitting procedure of a biased solution \({\hat{x}}\) of (1) is expressed in the following general framework

where \(\phi : {\mathbb {R}}^b \times {\mathbb {R}}^b \rightarrow {\mathbb {R}}\) is a block penalty (\(b \ge 1\) is the size of the blocks) promoting \(\varGamma x\) to share information with \(\varGamma \hat{x}\) in some sense to be specified. To compute global optimum of the refitting model (6), we only consider in this paper refitting block penalties such that \(z \mapsto \phi (z, \hat{z})\) is convex.

To refer to some features of the vector \(\varGamma \hat{x}\), let us first define properly the notions of relative orientation, direction and projection between two vectors.

Definition 1

Let z and \({\hat{z}}\) be vectors in \({\mathbb {R}}^b\), we define

where \(P_{{\hat{z}}}(z)\) is the orthogonal projection of z onto \({{\,\mathrm{Span}\,}}({\hat{z}})\) (i.e., the orientation axis of \({\hat{z}}\)). We say that z and \({\hat{z}}\) share the same orientation (resp. direction), if \(|\cos (z, \hat{z}) | = 1\) (resp. \(\cos (z, \hat{z}) = 1\)). We also consider that \( \cos (z, \hat{z})=1\) in case of null vectors \(z=0_b\) and/or \({\hat{z}}=0_b\).

Thanks to Definition 1, we can now introduce our refitting block penalty designed to preserve the desired features of \(\hat{z}=\varGamma {\hat{x}}\) in a simple way. We call our block penalty the Soft-penalized Direction (SD) penalty which reads as

We also introduce five other alternatives, the Hard-constrained Orientation (HO) penalty

where \(\iota _{\mathcal {C}}\) is the 0/\(+\infty \) indicator function of a set \(\mathcal {C}\), the Hard-constrained Direction (HD) penalty

the Quadratic penalized Orientation (QO) penalty

the Quadratic penalized Direction (QD) penalty

and the Soft-constrained Orientation (SO) penalty

We will see in Sect. 4 that HD, HO and QO lead us to retrieve existing refitting models known, respectively, in the literature as ICB (Infimal Convolution between Bregman distances) debiasing [7], Bregman debiasing [7], and CLEAR (Covariant LEAst square Refitting) [22].

2.1 Desired Properties of Refitting Block Penalties

We now introduce properties a block penalty \(\phi \) should satisfy for refitting purposes, for any \(\hat{z}\):

-

(P1)

\(\phi \) is convex, nonnegative and \(\phi (z, \hat{z}) = 0\), if \(\cos (z, {\hat{z}}) = 1\) or \(\Vert z \Vert _2=0\),

-

(P2)

\(\phi (z', \hat{z}) \ge \phi (z'', \hat{z})\) if \(\Vert z' \Vert _2 = \Vert z'' \Vert _2\) and \(\cos (z'', \hat{z}) \ge \cos (z', \hat{z})\),

-

(P3)

\(z \mapsto \phi (z, \hat{z})\) is continuous,

-

(P4)

\( \phi (z, \hat{z}) \le C \Vert z \Vert _2, \text { for } C > 0. \)

Property (P1) stipulates that no configuration can be more favorable than z and \({\hat{z}}\) having the same direction. Hence, the direction of the refitted solution should be encouraged to follow the one of the biased solution. Property (P2) imposes that for a fixed amplitude, the penalty should be increasing w.r.t. the angle formed with \({\hat{z}}\). Property (P3) enforces refitting that can continuously adapt to the data and be robust to small perturbations. Property (P4) claims that a refitting block penalty should not penalize more some configurations than the original penalty \(\Vert . \Vert _{1,2}\), at least up to some multiplicative factor \(C > 0\).

Proposition 1

Properties of block penalties lead to the following implications.

-

(a)

(P1) \(\Rightarrow \Vert \varPhi \tilde{x}^{\phi } - y \Vert ^2_2\le \Vert \varPhi {\hat{x}} - y \Vert ^2_2\).

-

(b)

(P1) \(\Rightarrow \phi \) is non-decreasing with respect to \(\Vert z \Vert _2\) for a fixed angle \((z,{\hat{z}})\),

-

(c)

(P2) \(\Rightarrow \) \(\phi \) is symmetric with respect to the orientation axis induced by \({\hat{z}}\),

-

(d)

(P1) + (P2) \(\Rightarrow \phi (z', \hat{z}) \ge \phi (z, \hat{z})\), if \(\Vert z' \Vert _2 \ge \Vert z \Vert _2\) and \(\cos (z, \hat{z}) =\cos (z', \hat{z})\),

-

(e)

(P1) + (P3) \(\Rightarrow \phi (z,{\hat{z}})\rightarrow 0\) when \(\cos (z, \hat{z})\rightarrow 1\).

Proof

-

(a) As \({\tilde{x}}^\phi \) is solution of (6), the relation is obtained by observing that \(\phi ({\hat{z}},{\hat{z}})=0\) with (P1).

-

(b) Looking at a ray [0, z) for any vector z, this is a consequence of the convexity of \(\phi \) and the fact that \(\phi (z,{\hat{z}})=0\) for \(\Vert z \Vert _2=0\).

-

(c) Since \(\cos (z,{\hat{z}})=\cos (-z,{\hat{z}})\), one can combine \((z',z'')=(z,-z)\) and \((z',z'')=(-z,z)\) in (P2) to obtain (c).

-

(d) Direct consequence of points (b) and (c).

-

(e) This is due to the continuity of the function \(\phi (.,{\hat{z}})\) that is 0 on the ray \([0,{\hat{z}})\) from (P1). \(\square \)

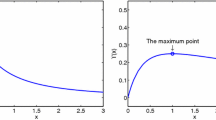

2.2 Properties of Considered Block Penalties

The properties of the previously introduced refitting block penalties are synthesized in Table 1. The proposed SD model is the only one satisfying all the desired properties. Figure 1 gives an illustration of the evolution of the different refitting block penalties as a function of its arguments (first column). It also exhibits the influence of the angle between z and \({\hat{z}}\) (second column) and the norm of z (third column) on the penalization value. From this figure, SD is shown to be a continuous penalization that increases continuously with respect to the absolute angle between z and \({\hat{z}}\). In addition of satisfying the desired properties, one can also observe from this figure that unlike some other alternatives, SD has a unique minimizer as a function of the angle and the amplitude of z.

Other block penalties are insensitive to directions (HO, QO and SO), completely intolerant (HD and HO) or too tolerant (QD) to small changes of orientations, hence not satisfying. These drawbacks will be illustrated in our experiments conducted in Sect. 5.

When \(b=1\), the orientation-based penalties (QO, HO and SO) have absolutely no effect while the direction-based penalties HD and QD preserve the sign of \((\varGamma {\hat{x}})_i\). In this paper, when \(b \ge 1\), we argue that the direction of the block \((\varGamma {\hat{x}})_i\) carries important information that is worth preserving when refitting, at least to some extent.

Illustration of block penalties: (left) 2D level lines of \(\phi \) for \(z=(z_1,z_2) = A \left( \begin{array}{ll} \cos \theta &{}-\sin \theta \\ \sin \theta &{}\cos \theta \end{array}\right) \hat{z}\), (middle) evolution regarding the angle \(\theta \) between z and \({\hat{z}}\) and (right) evolution with respect to the modulus A of z. HO and HD penalties have discontinuities while the ones on the four last rows are continuous and vary according to A. The penalties acting on directions (HD, QD and SD) are increasing w.r.t the amplitude of the angle \(\theta \). In Fig. 1: \(\varphi \) and \(\phi \) mismatch in legends

3 Refitting in Practice

We now introduce a general algorithm aiming to jointly solve the original problem (1) and the refitting one (6) for any refitting block penalty \(\phi \). This framework has been extended from the stable projection onto the support developed in [21] and later adapted to refitting with the Quadratic Orientation penalty in [22].

Given \({\hat{x}}\) solution of (1), a posterior refitting can be obtained by solving (6) for any refitting block penalty \(\phi \). To that end, we write the characteristic function of support preservation as \(\sum _{i\in \hat{{\mathcal {I}}}^c} \iota _{\{0\}}(\xi _i),\) where \(\iota _{\{0\}}(z)=0\) if \(z=0\) and \(+\infty \) otherwise. By introducing the convex function

the general refitting problem (6) can be expressed as

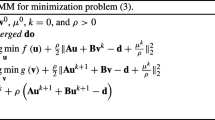

We now describe two iterative algorithms that can be used for the joint computation of \({\hat{x}}\) and \({\tilde{x}}\).

3.1 Primal-Dual Formulation

We first consider the primal dual formulation of the problems (1) and (16) that reads

where \(\iota _{B_2^\lambda }\) is the indicator function of the \(\ell _2\) ball of radius \(\lambda \) (that is 0 if \(\Vert z_i \Vert _2\le \lambda \) for all \(i\in [m]\) and \(+\infty \) otherwise) and

is the convex conjugate, with respect to the first argument of \(\omega _\phi (\cdot ,\varGamma {\hat{x}},\hat{{\mathcal {I}}})\).

Two iterative primal-dual algorithms are used to solve these problems. They involve the biased variables \(({\hat{z}}^k,{\hat{x}}^k)\) and the refitted ones \(({\tilde{z}}^k,{\tilde{x}}^k)\). Let us now present the whole algorithm, defined for parameters \(\kappa > 0\), \(\tau > 0\) and \(\theta \in [0, 1]\) as:

with the operator \(\varPhi _\tau ^{-}=({\mathrm {Id}}+\tau \varPhi ^t\varPhi )^{-1}\). The function \(\varPsi \) is considered to approximate the value of \(\varGamma {\hat{x}}\) from the current numerical variables and will be specifed in the next paragraph on Online direction and norm identification, while the functions \(\varPi \) and \(\mathrm {prox}_{\kappa \omega _\phi ^*}\) are detailed below. As we will see in Proposition 2, the quantity \(\beta > 0\) is used to guarantee that we estimate the support of \(\hat{x}\) correctly. In practice, we choose \(\beta \) as the smallest available nonzero floating number. The process for the biased variable involves the orthogonal projection on the \(\ell _2\) ball of radius \(\lambda \) in \({\mathbb {R}}^b\)

whereas the refitting algorithm relies on the proximal operator of \(\omega ^*_\phi \). We recall that the proximal operator of a convex function \(\omega \) at point \(\xi _0\) reads

From the block structure of the function \(\omega _\phi \) defined in (15), the computation of its proximal operator may be realized pointwise. Since \(\iota _{\{0\}}(\xi )^*=0\), we have

Table 2 gives the expressions of the dual functions \(\phi ^*\) with respect to their first variable and their related proximal operators \(\mathrm {prox}_{\kappa \phi ^*}\) for the refitting block penalties considered in this paper. All details are given in Appendix A.

Following [13], for any positive scalars \(\tau \) and \(\kappa \) satisfying \(\tau \kappa \Vert \varGamma ^t\varGamma \Vert _2<1\) and \(\theta \in [0,1]\), the estimates \(({\hat{\xi }}^k,{\hat{x}}^k,{\hat{v}}^k)\) of the biased solution converge to \(({\hat{\xi }}, {\hat{x}},{\hat{x}})\), where \(({\hat{\xi }},{\hat{x}})\) is a saddle point of (17). When the last arguments of the function \(\omega ^*_\phi \) are the converged \(\varGamma {\hat{x}}\) and its support \({\hat{{\mathcal {I}}}}\), the refitted variables converge to a saddle point of (18). However, we did not succeed to show convergence for the refitting process, since the quantities \(\varGamma {\hat{x}}\) and \({\hat{{\mathcal {I}}}}\) are only estimated from the biased variables at the current iteration, as explained in the next paragraphs.

Online support identification. Estimating \({{\,\mathrm{supp}\,}}(\varGamma {\hat{x}})\) from an estimation \({\hat{x}}^k\) is not stable numerically: the support \({{\,\mathrm{supp}\,}}(\varGamma {\hat{x}}^k)\) can be far from \({{\,\mathrm{supp}\,}}(\varGamma {\hat{x}})\) even though \({\hat{x}}^k\) is arbitrarily close to \({\hat{x}}\). As in [21], we rather consider the dual variable \({\hat{\xi }}^k\) to estimate the support. We indeed expect (see for instance [9]) at convergence \({\hat{\xi }}^k\) to saturate on the support of \(\varGamma {\hat{x}}\) and to satisfy the optimality condition \({\hat{\xi }}_i^k=\lambda \tfrac{(\varGamma {\hat{x}})_i}{\Vert (\varGamma {\hat{x}})_i \Vert _2}\). In practice, the norm of the dual variable \({\hat{\xi }}^k_i\) saturates to \(\lambda \) relatively fast onto \(\hat{{\mathcal {I}}}\). As a consequence, it is far more stable to detect the support of \(\varGamma {\hat{x}}\) with the dual variable \({\hat{\xi }}^k\) than with the vector \(\varGamma {\hat{x}}^k\) itself. The next proposition, adapted from [21], shows that this approach can indeed converge toward the support \({\hat{{\mathcal {I}}}}\).

Proposition 2

Let \(\alpha >0\) be the minimum nonzero value of \(\Vert (\varGamma {\hat{x}})_i \Vert _2\), and choose \(\beta \) such that \(\alpha \kappa>\beta >0\). Then, denoting \({\hat{\nu }}^{k+1}={\hat{\xi }}^k+\kappa \varGamma {\hat{v}}^k\), \({\hat{{\mathcal {I}}}}^{k+1}= \left\{ i \in [m] \;:\; \Vert {\hat{\nu }}_i^{k+1} \Vert _2>\lambda +\beta \right\} \) in Algorithm (20) converges in finite time to the true support \(\hat{{\mathcal {I}}} = {{\,\mathrm{supp}\,}}(\varGamma \hat{x})\) of the biased solution.Footnote 1

Proof

We just give a sketch of the proof. More details can be found in [21]. On the support, one has \(({\hat{\xi }}^k_i,{\hat{x}}^k_i,{\hat{v}}^k_i)\rightarrow (\lambda \varGamma {\hat{x}}^k_i/\Vert (\varGamma {\hat{x}}^k)_i \Vert _2,{\hat{x}}^k_i,{\hat{x}}^k_i)\). Then for k sufficiently large, \(\Vert {\hat{\xi }}_i^k+\kappa (\varGamma {\hat{v}}^k)_i \Vert \le \lambda +\beta \) if and only if \(i\in {\mathcal {I}}^c\). \(\square \)

Online direction and norm identification. In algorithm (20), the function \(\varPsi ({\hat{\nu }}^{k+1})\) aims at approximating \(\varGamma {\hat{x}}\) . As for the estimation of the support, instead of directly considering \(\varGamma {\hat{x}}^k\), we rather rely on \({\hat{\nu }}^{k+1}={\hat{\xi }}^k+\kappa \varGamma {\hat{v}}^k \) to obtain a stable estimation \({\hat{z}}\) of the vector \(\varGamma {\hat{x}}\).

Table 2 shows that for all the considered block penalties, the computation of the proximal operator of \(\phi ^*\) involves the normalized vector \({\hat{z}}_i/\Vert {\hat{z}}_i \Vert _2\) on the support \({\hat{{\mathcal {I}}}}\). This direction is approximated at each iteration by normalizing \({\hat{\nu }}_i^{k+1}\). The amplitude \(\Vert {\hat{z}}_i \Vert _2\) is just required for the models QO and QD.

The method in [22] relies on the QO block penalty (see Sect. 4.3 for more details) and considers the algorithmic differentiation of the biased process to obtain a refitting algorithm. Given its good numerical performance, we leverage this approach to define the function \(\varPsi \) as shown in the next proposition.

Proposition 3

The approach of [22] corresponds to refit with the QO block penalty and the following convergent estimation \(\varPsi ({\hat{\nu }}^{k+1}_i)\) of \((\varGamma {\hat{x}})_i\).

Proof

The method in [22] realizes the refitting through an algorithmic differentiation of the projection of the biased process. This leads to Algorithm (20) except for the update of the dual variable \({\tilde{\xi }}_i\) on the support \(i\in {\hat{{\mathcal {I}}}}^{k+1}\) that reads in [22]:

instead of

in Algorithm (20). Using the proximal operator given by the QO block penalty in Table 2 gives : \({\Vert {\hat{\nu }}^{k+1}_i \Vert _2}={\lambda +\kappa \Vert \varPsi (\nu ^{k+1}_i) \Vert _2}\) which leads to the function (23) for estimating \({\hat{z}}^k_i\).

We deduce that \(\varPsi ({\hat{\nu }}^{k+1}_i)=\varPsi ({\hat{\xi }}^k+\kappa \varGamma {\hat{v}}^k)\rightarrow (\varGamma {\hat{x}})_i\) from the convergence of the biased variables \(({\hat{\xi }}^k_i,{\hat{x}}^k_i,{\hat{v}}^k_i)\rightarrow (\lambda \varGamma {\hat{x}}^k_i/\Vert (\varGamma {\hat{x}}^k)_i \Vert _2,{\hat{x}}^k_i,{\hat{x}}^k_i)\). \(\square \)

Discussion. This joint-estimation considers at every iteration k different refitting functions \(\omega _\phi ^*(.,\varPsi ({\hat{\nu }}^{k+1}),\hat{{\mathcal {I}}}^{k+1})\) in (16). Then, unless \(b=1\) (see [21]), we cannot show the convergence of the refitting scheme. As in [22], we nevertheless observe convergence and a stable behavior for this algorithm.

In addition to its better numerical stability, the running time of joint-refitting is more interesting than the posterior approach. In Algorithm (20), the refitted variables at iteration k require the biased variables at the same iteration, and the whole process can be realized in parallel without significantly affecting the running time of the original biased process. On the other hand, posterior refitting is necessarily sequential and the running time is doubled in general.

3.2 Douglas–Rachford Formulation

An alternative to obtain solutions \({\hat{x}}\) of (1) and \({\tilde{x}}\) of (16) is to consider the splitting TViso reformulation, as proposed in [18], and given by

This problem can be solved with the Douglas–Rachford algorithm [25, 33]. Introducing the parameters \(\alpha \in (0,2)\) and \(\tau >0\), the iterates read

with \(\varGamma ^{-}=({\mathrm {Id}}+ \varGamma ^t\varGamma )^{-1} \), the block Soft Thresholding (ST) operator

and the proximal operator of \(\omega _\phi \) that can be written pointwise as

The estimates \(({\hat{x}}^k,{\hat{\xi }}^k,{\hat{\zeta }}^k)\) of the biased solution converge to \(({\hat{x}},{\hat{\xi }},{\hat{\zeta }})=({\hat{x}},\varGamma {\hat{x}},(1+\tau \lambda )(\varGamma {\hat{x}}))\), from the optimality conditions of problem (24). On the other hand, there is again no convergence guarantee for the refitted variables since the last two arguments of the function \(\omega _\phi \) are potentially modified at each iteration.

The support of \(\varGamma {\hat{x}}\) is estimated in line from auxiliary variables as \({\hat{{\mathcal {I}}}}^{k+1}= \left\{ i \in [m] \;:\; \Vert {\hat{\zeta }}_i^{k+1} \Vert _2>\tau \lambda +\beta \right\} \), where, as shown in [20], \(\beta \) has to be taken such that \(0<\beta <\lambda \min _{i\in {\hat{{\mathcal {I}}}}}\Vert (\varGamma {\hat{x}})_i \Vert _2\) to have convergence in finite time of \({\hat{{\mathcal {I}}}}^{k+1}\) to the true support \(\hat{{\mathcal {I}}} = {{\,\mathrm{supp}\,}}(\varGamma \hat{x})\). Again the quantity \(\beta > 0\) is chosen in practice as being the smallest available nonzero floating number.

Considering the stable algorithmic differentiation strategy of [22] suggested in the previous subsection, the vector \(\varGamma {\hat{x}}\) is approximated on the support \({\hat{{\mathcal {I}}}}^{k+1}\) at each iteration with the function \(\varUpsilon ({\hat{\zeta }})=\frac{\Vert {\hat{\zeta }} \Vert _2-\lambda \tau }{\Vert {\hat{\zeta }} \Vert _2}{\hat{\zeta }}\).

4 Related Refitting Works

We now review some other related refitting methods. First, we will discuss of refitting methods based on Bregman divergences, next the refitting approsch developed in [22], and then we will see how these techniques are related to the block penalties introduced in Sect. 2.

4.1 Bregman-Based Refitting

4.1.1 Bregman Divergence of \(\ell _{12}\) Structured Regularizers

In the literature [7, 37], Bregman divergences have proven to be well suited to measure the discrepancy between the biased solution \({\hat{x}}\) and its refitting \({\tilde{x}}\). We recall that for a convex, proper and lower semicontinuous function \(\psi \), the associated (generalized) Bregman divergence between x and \({\hat{x}}\) is, for any subgradient \({\hat{p}}\in \partial \psi ({\hat{x}})\):

If \(\psi \) is an absolutely 1-homogeneous function, i.e., \(\psi (\alpha x)=|\alpha | \psi (x)\), \(\forall \alpha \in {\mathbb {R}}\), then

and the Bregman divergence simplifies into

As an example, let us consider \(\psi (x)=\Vert x \Vert _2\). Since \(\psi \) is 1-homogeneous, it follows that

For regularizers of the form \(\psi (x)=\Vert \varGamma x \Vert _{1,2}\), we introduce the following notations

For all \({\hat{p}} \in {\mathbb {R}}^n\), we have [9]:

or in short \(\varOmega ({\hat{x}}) = \varGamma ^t \varDelta ({\hat{x}})\). Interestingly, \(\varDelta (x)\) enjoys a separability property in terms of all subgradients associated with the \(\ell _2\) norms of the blocks

Additionally, the next proposition shows that there is a similar separability property for the Bregman divergence.

Proposition 4

Let \(\hat{\eta } \in \varDelta ({\hat{x}})\) and \({\hat{p}} = \varGamma ^t \hat{\eta }\). Then,

Proof

The proof is obtained with straigthforward computations:

\(\square \)

In the following, we consider \(\hat{x}\) and its support \(\hat{{\mathcal {I}}}\) to be fixed, and we denote by \(D^{\hat{\eta }}_i(\varGamma x)\) the following

The next proposition shows that such a divergence measures the fit of directions between \((\varGamma x)_i\) and \((\varGamma {\hat{x}})_i\), but also partially captures the support \({\hat{{\mathcal {I}}}}\).

Proposition 5

Let \(\hat{\eta } \in \varDelta (\hat{x})\). We have for \(i\in \hat{{\mathcal {I}}}\)

and we have for \(i\in \hat{{\mathcal {I}}}^c\)

Proof

-

For \(i\in \hat{{\mathcal {I}}}\), i.e. \(\Vert (\varGamma x)_i \Vert _2>0\), we get \( \hat{\eta }_i = \frac{(\varGamma {\hat{x}})_i}{\Vert (\varGamma {\hat{x}})_i \Vert _2}\) from (36). We conclude from the definition \(D_i^{\hat{\eta }}(\varGamma x)=\Vert (\varGamma x)_i \Vert _2-\langle {\hat{\eta }}_i,(\varGamma x)_i\rangle =0\).

-

For \(i\in \hat{{\mathcal {I}}}^c\) and using (36), we distinguish two cases from (31). If \(\Vert {\hat{\eta }}_i \Vert _2<1\), then \(D_i^{\hat{\eta }}(\varGamma x)=0\) iff \({(\varGamma x)_i}=0_b\). Otherwise, one necessarily gets \( \hat{\eta }_i = \frac{(\varGamma x)_i}{\Vert (\varGamma x)_i \Vert _2}\).

\(\square \)

As noticed in the image fusion model in [2], minimizing \(D_i^{\hat{\eta }}(\varGamma x)\) enforces the alignment of the direction \((\varGamma x)_i\) with \({\hat{\eta }}_i\), and it is an efficient way to avoid contrast inversion.

In the following sections, unless stated otherwise, we will always consider \(\hat{\eta } \in \varDelta ({\hat{x}})\) and \({\hat{p}} = \varGamma ^t \hat{\eta } \in \varOmega ({\hat{x}})\).

4.1.2 Iterative Bregman Regularization

The Bregman process [37] reduces the bias of solutions of (1) by successively solving problems of the form

with \(\tilde{p}_l \in \varOmega (\tilde{x}_l)\). We consider a fixed \(\lambda \), but different strategies can be considered with decreasing parameters \(\lambda _l\) as in [44, 46]. For \(l=0\), setting \(\tilde{x}_{0}=0_n\), and taking \(\tilde{p}_0=0_n\in \varOmega (\tilde{x}_{0})\) so that \(D^{\tilde{p}_0}_{\Vert \varGamma \cdot \Vert _{1,2}} (x,\tilde{x}_{0})= \Vert \varGamma x \Vert _{1,2}\), the first step exactly gives the biased solution of (1) with \(\tilde{x}_{1}={\hat{x}}\). We denote by \(\tilde{x}^{\mathrm {IB}({\hat{p}})}=\tilde{x}_{2}\) the solution obtained after 2 steps of the Iterative Bregman (IB) procedure (44):

As underlined in relation (42), by minimizing \(D^{\hat{p}}_{\Vert \varGamma \cdot \Vert _{1,2}} (x,{\hat{x}}) = \sum _{i=1}^m D_i^{\hat{\eta }}(\varGamma x)\), one aims at preserving the direction of \(\varGamma {\hat{x}}\) on the support \(\hat{{\mathcal {I}}}\), without ensuring \({{\,\mathrm{supp}\,}}(\varGamma {\tilde{x}}^{\text {IB}(\hat{p})})\subseteq \hat{{\mathcal {I}}}\).

For the iterative framework, the support of the previous solution may indeed not be preserved (\(\Vert (\varGamma {\tilde{x}}_l)_i \Vert _2=0 \nRightarrow \Vert (\varGamma {\tilde{x}}_{l+1})_i \Vert _2=0\)) and can hence grow. The support of \(\varGamma x_0\) for \(\tilde{x}_{0}=0_n\) is for instance totally empty whereas the one of \({\hat{x}}= \tilde{x}_{1}\) may not (and should not) be empty. For \(l\rightarrow \infty \), the process actually converges to some x such that \(\varPhi x=y\). Because the IB procedure does not preserve the support of the solution, it cannot be considered as a refitting procedure and is more related to boosting approaches as discussed in Sect. 1.

4.1.3 Bregman-Based Refitting

In order to respect the support of the biased solution \({\hat{x}}\) and to keep track of the direction \(\varGamma {\hat{x}}\) during the refitting, the authors of [7] proposed the following model:

This model enforces the Bregman divergence to be 0, since, from Eq. (29), we have:

We see from (42) that for \(i\in \hat{{\mathcal {I}}}\), the direction of \((\varGamma {\hat{x}})_i\) is preserved in the refitted solution. From (43), we also observe that the absence of support is also preserved for any \(i\in \hat{{\mathcal {I}}}^c\) where \(\Vert \hat{\eta }_i \Vert _2<1\). Note that extra elements in the support \(\varGamma {\tilde{x}}^{\text {B}({\hat{p}})}\) may be added at coordinates \(i\in \hat{{\mathcal {I}}}^c\) where \(\Vert \hat{\eta }_i \Vert _2=1\).

4.1.4 Infimal Convolutions of Bregman (ICB) Distance-Based Refitting

To get rid of the direction dependency, the ICB (Infimal Convolutions of Bregman distances) model is also proposed in [7]:

The orientation model may nevertheless involve contrast inversions between biased and refitted solutions. In practice, relaxations are used in [7] by solving, for a large value \(\gamma >0\),

The main advantage of this refitting strategy is that no support identification is required since everything is implicitly encoded in the subgradient \({\hat{p}}\). This makes the process stable even if the estimation of \({\hat{x}}\) is not highly accurate. The support of \(\varGamma {\hat{x}}\) is nevertheless only approximately preserved, since the constraint \(D^{{\hat{p}}}_{\Vert \varGamma \cdot \Vert _{1,2}} (x,{\hat{x}})=0\) can never be ensured numerically with a finite value of \(\gamma \).

4.1.5 The Best of Both Bregman Worlds

From relations (37) and (41), the refitting models given in (45) and (46) can be reexpressed as a function of \(\hat{\eta }\)

Alternatively, we now introduce a mixed model that we coin Best of Both Bregman (BBB), as

With such reformulations, connections between refitting models (50), (51) and (52) can be clarified. The solution \(\tilde{x}^{\mathrm {IB}}\) [37] is too relaxed, as it only penalizes the directions \((\varGamma x)_i\) using \((\varGamma {\hat{x}})_i\), without aiming at preserving the support of \({\hat{x}}\). The solution \(\tilde{x}^{\mathrm {B}}\) [7] is too constrained: the direction within the support is required to be preserved exactly. The proposed refitting \(\tilde{x}^{\mathrm {BBB}}\) lies in-between: it preserves the support, while authorizing some directional flexibility, as illustrated by the sharper square edges in Fig. 1g.

An important difference with BBB is that we consider local inclusions of subgradients of the function \(\lambda \Vert \cdot \Vert _{1,2}\) at point \((\varGamma x)_i\) instead of the global inclusion of subgradients of the function \(\lambda \Vert \varGamma \cdot \Vert _{1,2}\) at point x as in (46) and (48). Such a change of paradigm allows to adapt the refitting locally by preserving the support while including the flexibility of the original Bregman approach [37].

4.2 Covariant LEAst Square Refitting (CLEAR)

We now describe an alternative way for performing variational refitting. When specialized to \(\ell _{1,2}\) sparse analysis regularization, CLEAR, a general refitting framework [22], consists in computing

where we recall that \(P_{(\varGamma {\hat{x}})_i}(.)\) is the orthogonal projection onto \({{\,\mathrm{Span}\,}}(\varGamma {\hat{x}})_i\). This model promotes refitted solutions preserving to some extent the orientation \(\varGamma {\hat{x}}\) of the biased solution. It also shrinks the amplitude of \(\varGamma x\) all the more that the amplitude of \(\varGamma {\hat{x}}\) are small. This penalty does not promote any kind of direction preservation, and as for the ICB model, contrast inversions may be observed between biased and refitted solutions. The quadratic term also over-penalizes large changes of orientation.

4.3 Equivalence

In the next proposition we show that, for a given vector \({\bar{\eta }}\), the concurrent Bregman-based refitting techniques coincides with two of the refitting block penalties introduced in Sect. 2, namely HD, HO, and that SD is nothing else than the proposed Best of Both Bregman-based penalty.

Proposition 6

Let \({\bar{\eta }}\in {\mathbb {R}}^m\) be defined as

Then, for any y, we have the following equalities

-

1.

\(\tilde{x}^{\mathrm{B}({\bar{\eta }})} = \tilde{x}^{\mathrm{HD}}\) ,

-

2.

\(\tilde{x}^{\mathrm{ICB}({\bar{\eta }})} = \tilde{x}^{\mathrm{HO}}\) ,

-

3.

\(\tilde{x}^{\mathrm{BBB}({\bar{\eta }})} = \tilde{x}^{\mathrm{SD}}\) .

where the equalities have to be understood as an equality of the corresponding sets of minimizers.

Proof

(Proposition 6) First notice that from (31), \( {\bar{\eta }}\) can be written explicitly as

-

[1.] This is a direct consequence of relation (47) and Proposition 5. Using (42), we have for \( i\in {\hat{{\mathcal {I}}}}\) that \(D_i^{{\bar{\eta }}}((\varGamma x)_i)=0\Leftrightarrow \cos ((\varGamma x)_i, (\varGamma {\hat{x}})_i)=1 \Leftrightarrow \cos ((\varGamma x)_i, {\bar{\eta }}_i)=1\). This is also valid for potential vanishing components \((\varGamma x)_i\) with the considered convention \(\cos (0_b,{\hat{z}})=0\). We thus recover the penalty function HD in(11). Next, for all \(i \in {\hat{{\mathcal {I}}}}^c\), we have \({\bar{\eta }}_i = 0\) by assumption, hence according to eq. (43):

$$\begin{aligned} D_i^{\hat{\eta }}(\varGamma x)=0 \Leftrightarrow (\varGamma x)_i = 0_b \Leftrightarrow {{\,\mathrm{supp}\,}}(\varGamma x) \subseteq {\hat{{\mathcal {I}}}} . \end{aligned}$$(57)It follows that \(\tilde{x}^{\mathrm{B}({\bar{\eta }})} = \tilde{x}^{\mathrm{HD}}\). This case \( {\hat{{\mathcal {I}}}}^c\) is the same for all next points.

-

[2.] With the \({\mathrm{ICB}}\) model, we have \(\pm {\hat{p}} \in \varOmega (x)\). For \(i\in {\hat{{\mathcal {I}}}}\), it gives with (42) that \(D_i^{\pm {\bar{\eta }}}(\varGamma x)=0\Leftrightarrow \Vert (\varGamma x)_i) \Vert _2=\pm \langle (\varGamma x)_i),{\hat{\eta }}_i\rangle \). This is equivalent to \(|\cos ((\varGamma x)_i, (\varGamma {\hat{x}})_i) |=1\) that corresponds to the penalty function HO in (10).

-

[3.] For all \(i \in {\hat{{\mathcal {I}}}}\), we have

$$\begin{aligned} D_i^{\hat{{\bar{\eta }}}}((\varGamma x)_i)&= \Vert (\varGamma x)_i \Vert _2 - \left\langle \frac{(\varGamma {\hat{x}})_i}{\Vert (\varGamma {\hat{x}})_i \Vert _2},\,(\varGamma x)_i \right\rangle \end{aligned}$$(58)$$\begin{aligned}&= \Vert (\varGamma x)_i \Vert _2(1 - \cos ((\varGamma x)_i, (\varGamma {\hat{x}})_i))~, \end{aligned}$$(59)that gives the penalty function SD in (9). We then get that \(\tilde{x}^{\mathrm{BBB}({\bar{\eta }})} = \tilde{x}^{\mathrm{SD}}\).

\(\square \)

Additionally, we can show that CLEAR corresponds to the QO refitting block penalty also introduced in Sect. 2.

Proposition 7

For any y, we have \(\tilde{x}^{\mathrm{CLEAR}} = \tilde{x}^{\mathrm{QO}}\) where the equality has to be understood as an equality of the sets of minimizers.

Proof

The case \({\hat{{\mathcal {I}}}}^c\) is treated in Proposition 6. For \(i\in {\hat{{\mathcal {I}}}}\) with the CLEAR model, we just have to observe that \( \left\| (\varGamma x)_i-P_{(\varGamma {\hat{x}})_i}((\varGamma x)_i)\right\| ^2= \Vert (\varGamma x)_i \Vert ^2_2(1- \cos ^2((\varGamma x)_i,(\varGamma {\hat{x}})_i)),\) which corresponds to the penalty function QO in (12).

\(\square \)

5 Experiments and Results

5.1 Toy Experiments with TViso

We first consider TViso regularization of gray-scale images on denoising problems of the form \(y = x + w\) where w is an additive white Gaussian noise with standard deviation \(\sigma \). We start with a simple toy example of a \(128 \times 128\) imageFootnote 2 composed of elementary geometric shapes, and we chose \(\sigma = 50\). The corresponding image and its noisy version are given in Fig. 2a, b, respectively. To highlight the different behaviors between the six refitting block penalties, we chose to set the regularization parameter of TViso to a large value \(\lambda = 750\) leading to a strong bias in the solution. Figure 2c depicts this solution in which not only some structures are lost (the darkest disk), but a large loss of contrast can be observed (the white large square became gray). Unlike boosting, the purpose of refitting is not to recover the lost structures, but only to recover the correct amplitudes of the reconstructed objects without changing their geometrical aspect. In a second scenario, we consider the standard cameraman image of size \(256 \times 256\), and we set \(\sigma = 20\) and \(\lambda = 36\). The corresponding images are given in Fig. 3a–c.

We applied the iterative primal-dual algorithm with our joint-refitting (Algorithm (20)) for 4, 000 iterations, with \(\tau = 1/\Vert \nabla \Vert _2\), \(\kappa = 1/\Vert \nabla \Vert _2\) and \(\theta = 1\), and where \(\Vert \nabla \Vert _2 = 2 \sqrt{2}\). The results of refitting with HO, HD, QO, QD, SO and SD are given for the two scenarios on Figs. 2d–i and 3d–i, respectively. Numerically, the difference when changing the block penalty comes from the computation of the proximal operator, presented in Table 2, that is only used in the fifth (resp. last) step of Algorithm 20 (resp. Algorithm 26). As a consequence, the overall computational cost is stable for all considered block penalties.

As a quantitative measure of refitting between an estimate \(\hat{x}\) and the underlying image x, we consider the peak signal-to-noise ratio (PSNR) defined in this case as \( \mathrm{PSNR} = -10 \log _{10} \tfrac{1}{n} \Vert x - \hat{x} \Vert _2^2\). The higher the PSNR, the better the refitting is supposed to be. In Fig. 2, we first observe that block penalties that are only based on orientation (HO, QO and SO) lead to refitted solution with contrast inversions compared to the original solution of TViso (see the banana dark shapes at the interface between the two squares). In that scenario, HD, QD and SD provides satisfying visual quality, the latest achieving highest PSNR. In the context of Fig. 3, the image being more complex, the TViso solution presents more level lines than in the previous scenario. The block penalties HO and HD must preserve exactly these level lines. The refitted problem becomes too constrained, and this lack of flexibility prevents HO and HD to deviate much from TViso. Their refitted solution remains significantly biased. Comparing now the quadratic penalties (QO and QD) against the soft ones (SO and SD) reveals that quadratic penalties does not allow to re-enhance as much the contrast of some objects (see, e.g., the second tower on the right of the image). Again SD achieved the highest PSNR in that scenario.

5.2 Comparison Between Sequential and Joint Refitting

The joint refitting algorithm is more stable with respect to the threshold \(\beta \) considered for detecting the support:

We illustrate this property in Fig. 4, where the Douglas–Rachford solver with the SD block penalty has been tested both sequentially and jointly, with the same iteration budget and the same parameter \(\beta \) in (20) and (60). In this experiment, we consider a \(256\times 256\) image with \(\sigma = 30\) and \(\lambda =50\). For the parameters of the algorithm 26, we set \(\alpha =0.5\) and \(\tau = 0.01\).

When considering a large threshold \(\beta =10^{-4}\) to detect the support of the solution, both approaches leads to visually fine results. The support of the TViso solution is nevertheless underestimated. On the other hand, with a low threshold \(\beta =10^{-8}\), the detection of the support is not stable with the sequential approach. It leads to noisy refitted solutions, whereas the joint approach still gives clean refittings. This stable behavior is also underlined by looking at the PSNR values with respect to the number of iterations: the sequential approach (dotted curve) presents fluctuating PSNR values with a low (and thus accurate) threshold.

5.3 Experiments with color TViso

We now consider TViso regularization of degraded color images with three channels Red, Green, Blue (RGB). We defined blocks obtained by applying \(\varGamma =(\nabla ^1_R,\nabla ^2_R,\nabla ^1_G,\nabla ^2_G, \nabla ^1_B,\nabla ^2_B)\), where \(m=n\), \(b=6\), and \(\nabla _C^d\) denotes the forward discrete gradient in the direction \(d \in \{ 1, 2 \}\) for the color channel \(C \in \{ R, G, B \}\).

We first focused on a denoising problem \(y = x + w\) where x is a color image and w is an additive white Gaussian noise with standard deviation \(\sigma =20\). We next focused on a deblurring problem \(y = \varPhi x + w\) where x is a color image, \(\varPhi \) is a convolution simulating a directional blur, and w is an additive white Gaussian noise with standard deviation \(\sigma =2\). In both cases, we chose \(\lambda =4.3 \sigma \). We apply the iterative primal-dual algorithm with our joint-refitting (Algorithm (20)) for 1, 000 iterations, with \(\theta = 1\), \(\tau = 1/\Vert \varGamma \Vert _2\) and \(\kappa = 1/\Vert \varGamma \Vert _2\), where, as for the gray-scale case, \(\Vert \varGamma \Vert _2 = 2 \sqrt{2}\). Note that in order to use the primal-dual algorithm, we have to implement \(\varGamma ^t\), defined for \(z = (z_R, z_G, z_B) \in {\mathbb {R}}^{n \times 6}\) where \(z_R\), \(z_G\), \(z_B\) are 2d vector fields, as given by

The results are provided on Figs. 5 and 6. Comparisons of refitting with our proposed SD block penalty, HO, HD, QO, QD and SO are provided. Using our proposed SD block penalty offers the best refitting performances in terms of both visual and quantitative measures. The loss of contrast of TViso is well corrected, amplitudes are enhanced while smoothness and sharpness of TViso is preserved. Meanwhile, the approach does not create artifacts, invert contrasts or reintroduce information that were not recovered by TViso.

5.4 Experiments with Second-Order TGV

We finally consider the second-order Total Generalized Variation (TGV) regularization [6] of gray-scale images that can be expressed, for an image \(x \in {\mathbb {R}}^n\) and regularization parameters \(\lambda > 0\) and \(\zeta \ge 0\), as

where \(\nabla = (\nabla ^1, \nabla ^2) : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^{n \times 2}\), \(\nabla ^d\) denotes the forward discrete gradient in the direction \(d \in \{ 1, 2 \}\), and \({\mathcal {E}}: {\mathbb {R}}^{n \times 2} \rightarrow {\mathbb {R}}^{n \times 2 \times 2}\) is a symmetric tensor field operator defined for a 2d vector field \(z = (z^1, z^2)\) as

where \({\bar{\nabla }}^d\) denotes the backward discrete gradient in the direction \(d \in \{ 1, 2 \}\), and \(\Vert \cdot \Vert _{1,F}\) is the pointwise sum of the Frobenius norm of all matrices of a field. One can observe that for \(\zeta = 0\), this model is equivalent to TViso. Interestingly, the solution of the second-order TGV can be obtained by solving a regularized least square problem with an \(\ell _{12}\) sparse analysis term as

where for an image \(x \in {\mathbb {R}}^n\) and a vector field \(z \in {\mathbb {R}}^{n \times 2}\), we consider \( X = \begin{pmatrix} x, z \end{pmatrix} \in {\mathbb {R}}^{n \times 3}\) and \( \varXi : (x, z) \rightarrow \varPhi x \), and we define \(\varGamma : {\mathbb {R}}^{n \times 3} \mapsto {\mathbb {R}}^{2 n \times 3}\) as

After solving (64), the solution of (62) is obtained as the first component of \(\hat{X}=(\hat{x},\hat{z})\). We use Chambolle-Pock algorithm [13] for which we also need to implement the adjoint \(\varGamma ^t : {\mathbb {R}}^{2 n \times 3} \rightarrow {\mathbb {R}}^{n \times 3}\) of \(\varGamma \) given for fields \(z \in {\mathbb {R}}^{n \times 2}\) and \(e \in {\mathbb {R}}^{n \times 3}\) by

We focused on a denoising problem \(y = x + w\) where x is a simulated elevation profile, ranging in [0, 255], and w is an additive white Gaussian noise with standard deviation \(\sigma =2\). We chose \(\lambda =15\) and \(\zeta = 0.45\). We applied the iterative primal-dual algorithm with our joint-refitting (Algorithm (20)) for 4, 000 iterations, with \(\tau = 1/\Vert \varGamma \Vert _2\), \(\kappa = 1/\Vert \varGamma \Vert _2\) and \(\theta = 1\), and where we estimated \(\Vert \varGamma \Vert _2 \approx 3.05\) by power iteration (note that the quantity \(\Vert \varGamma \Vert _2\) depends on the value of \(\zeta \)).

Refitting comparisons between our proposed SD block penalty, and the five other alternatives are provided on Fig. 7. Using the SD model, it offers the best refitting performances in terms of both visual and quantitative measures. The bias of TGV is well corrected, elevations (see the chimneys) and slopes (see the sidewalks) are enhanced while smoothness and sharpness of TGV is preserved. Meanwhile, the approach does not create artifacts, invert contrasts or reintroduce information that was not recovered by TGV.

6 Conclusion

We have presented a block penalty formulation for the refitting of solutions obtained with \(\ell _{12}\) sparse regularization. Through this framework, desirable properties of refitted solutions can easily be promoted. Different block penalty functions have been proposed, and their properties are discussed. We have namely introduced the SD block penalty that interpolates between Bregman iterations [37] and direction preservation [7].

Based on standard optimization schemes, we have also defined stable numerical strategies to jointly estimate a biased solution and its refitting. In order to take advantage of our efficient joint-refitting algorithm, we underline that it is important to consider simple block penalty functions, which proximal operator can be computed explicitly. This is the case for all the presented block penalties, more complex ones having been discarded from this paper for this reason.

Initially designed for TViso regularization, the approach has finally been extended to a generalized TGV model. Experiments show how the block penalties are able to preserve different structures of the biased solutions, while recovering the correct signal amplitude.

The refitting strategy is also robust to the numerical artifacts due to classical TViso discretization, and it allows to recover sharp discontinuities in any direction (see the circle in Fig. 2), without considering advanced schemes [11, 14, 19].

For the future, a challenging problem would be to define similar simple numerical schemes able to consider global properties on the refitting, such as the preservation of the tree of shapes [54] of the biased solution.

Notes

As in [7], the extended support \(\Vert {\hat{\xi }}_i \Vert _2=\lambda \) can be tackled by testing \(\Vert {\hat{\nu }}^{k+1}_i \Vert \ge \lambda \).

In this paper, we always consider images whose values are in the range [0, 255].

References

Bach, F., Jenatton, R., Mairal, J., Obozinski, G., et al.: Optimization with sparsity-inducing penalties. Found. Trends® in Mach. Learn. 4(1), 1–106 (2012)

Ballester, C., Caselles, V., Igual, L., Verdera, J., Rougé, B.: A variational model for p+ xs image fusion. Int. J. Comput. Vision 69(1), 43–58 (2006)

Belloni, A., Chernozhukov, V.: Least squares after model selection in high-dimensional sparse models. Bernoulli 19(2), 521–547 (2013)

Boyer, C., Chambolle, A., Castro, Y.D., Duval, V., De Gournay, F., Weiss, P.: On representer theorems and convex regularization. SIAM J. Optim. 29(2), 1260–1281 (2019)

Bredies, K., Carioni, M.: Sparsity of solutions for variational inverse problems with finite-dimensional data. Calc. Var. Partial. Differ. Equ. 59(1), 14 (2020)

Bredies, K., Kunisch, K., Pock, T.: Total generalized variation. SIAM J. Imaging Sci. 3(3), 492–526 (2010)

Brinkmann, E.M., Burger, M., Rasch, J., Sutour, C.: Bias-reduction in variational regularization. J. Math. Imaging Vis. 59(3), 534–566 (2017)

Bühlmann, P., Yu, B.: Boosting with the L2 loss: regression and classification. J. Am. Stat. Assoc. 98(462), 324–339 (2003)

Burger, M., Gilboa, G., Moeller, M., Eckardt, L., Cremers, D.: Spectral decompositions using one-homogeneous functionals. SIAM J. Imaging Sci. 9(3), 1374–1408 (2016)

Burger, M., Korolev, Y., Rasch, J.: Convergence rates and structure of solutions of inverse problems with imperfect forward models. Inverse Prob. 35(2), 024006 (2019)

Caillaud, C.: Asymptotical estimates for some algorithms for data and image processing: a study of the sinkhorn algorithm and a numerical analysis of total variation minimization. Ph.D. thesis, Institut Polytechnique de Paris (2020)

Chambolle, A., Caselles, V., Cremers, D., Novaga, M., Pock, T.: An introduction to total variation for image analysis. Theor. Found Numer Methods Sparse Recov. 9(263–340), 227 (2010)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40, 120–145 (2011)

Chambolle, A., Pock, T.: Crouzeix-raviart approximation of the total variation on simplicial meshes. J. Math. Imaging Vis. 62, 872–899 (2020)

Chan, T., Marquina, A., Mulet, P.: High-order total variation-based image restoration. SIAM J. Sci. Comput. 22(2), 503–516 (2000)

Charest, M.R., Milanfar, P.: On iterative regularization and its application. IEEE Trans. Circuits Syst. Video Technol. 18(3), 406–411 (2008)

Chzhen, E., Hebiri, M., Salmon, J.: On lasso refitting strategies. Bernoulli 25(4A), 3175–3200 (2019)

Combettes, P.L., Pesquet, J.C.: A douglas-rachford splitting approach to nonsmooth convex variational signal recovery. IEEE J. Sel. Top. Signal Process. 1(4), 564–574 (2007)

Condat, L.: Discrete total variation: new definition and minimization. SIAM J. Imaging Sci. 10(3), 1258–1290 (2017)

Deledalle, C.A., Papadakis, N., Salmon, J.: Contrast re-enhancement of total-variation regularization jointly with the douglas-rachford iterations. In: Signal Processing with Adaptive Sparse Structured Representations (2015)

Deledalle, C.A., Papadakis, N., Salmon, J.: On debiasing restoration algorithms: applications to total-variation and nonlocal-means. In: International Conference on Scale Space and Variational Methods in Computer Vision, pp. 129–141. Springer (2015)

Deledalle, C.A., Papadakis, N., Salmon, J., Vaiter, S.: CLEAR: covariant least-square re-fitting with applications to image restoration. SIAM J. Imaging Sci. 10(1), 243–284 (2017)

Deledalle, C.A., Papadakis, N., Salmon, J., Vaiter, S.: Refitting solutions promoted by \(\ell _{12}\) sparse analysis regularizations with block penalties. In: International Conference on Scale Space and Variational Methods in Computer Vision, pp. 131–143. Springer (2019)

Dobson, D.C., Santosa, F.: Recovery of blocky images from noisy and blurred data. SIAM J. Appl. Math. 56(4), 1181–1198 (1996)

Douglas, J., Rachford, H.: On the numerical solution of heat conduction problems in two and three space variables. Trans. Am. Math. Soc. 82(2), 421–439 (1956)

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R.: Least angle regression. Ann. Stat. 32(2), 407–499 (2004)

Elad, M., Milanfar, P., Rubinstein, R.: Analysis versus synthesis in signal priors. Inverse Prob. 23(3), 947–968 (2007)

Esedo\(\bar{\text{g}}\)lu, S., Osher, S.J.: Decomposition of images by the anisotropic rudin-osher-fatemi model. Commun. Pure Appl. Math. 57(12), 1609–1626 (2004)

Gilboa, G.: A total variation spectral framework for scale and texture analysis. SIAM J. Imaging Sci. 7(4), 1937–1961 (2014)

Kongskov, R.D., Dong, Y., Knudsen, K.: Directional total generalized variation regularization. BIT Numer. Math. 49(4), 903–928 (2019)

Lederer, J.: Trust, but verify: benefits and pitfalls of least-squares refitting in high dimensions. arXiv preprint arXiv:1306.0113 (2013)

Lin, Y., Zhang, H.H.: Component selection and smoothing in multivariate nonparametric regression. Ann. Stat. 34(5), 2272–2297 (2006)

Lions, P.L., Mercier, B.: Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16(6), 964–979 (1979)

Liu, J., Huang, T.Z., Selesnick, I.W., Lv, X.G., Chen, P.Y.: Image restoration using total variation with overlapping group sparsity. Inf. Sci. 295, 232–246 (2015)

Milanfar, P.: A tour of modern image filtering: New insights and methods, both practical and theoretical. IEEE Signal Process. Mag. 30(1), 106–128 (2013)

Nam, S., Davies, M., Elad, M., Gribonval, R.: The cosparse analysis model and algorithms. Appl. Comput. Harmon. Anal. 34, 30–56 (2013)

Osher, S., Burger, M., Goldfarb, D., Xu, J., Yin, W.: An iterative regularization method for total variation-based image restoration. Multiscale Model. Simul. 4(2), 460–489 (2005)

Peyré, G., Fadili, J.: Group sparsity with overlapping partition functions. In: 2011 19th European Signal Processing Conference, pp. 303–307. IEEE (2011)

Peyré, G., Fadili, J., Chesneau, C.: Adaptive structured block sparsity via dyadic partitioning. Proc. EUSIPCO 2011, 1455–1459 (2011)

Pierre, F., Aujol, J.F., Deledalle, C.A., Papadakis, N.: Luminance-guided chrominance denoising with debiased coupled total variation. In: International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition, pp. 235–248. Springer (2017)

Rigollet, P., Tsybakov, A.B.: Exponential screening and optimal rates of sparse estimation. Ann. Stat. 39(2), 731–771 (2011)

Romano, Y., Elad, M.: Boosting of image denoising algorithms. SIAM J. Imaging Sci. 8(2), 1187–1219 (2015)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60(1–4), 259–268 (1992)

Scherzer, O., Groetsch, C.: Inverse scale space theory for inverse problems. In: Kerckhove, M. (ed.) Scale-Space and Morphology in Computer Vision, pp. 317–325. Springer, Berlin (2001)

Strong, D., Chan, T.: Edge-preserving and scale-dependent properties of total variation regularization. Inverse Prob. 19(6), 165–187 (2003)

Tadmor, E., Nezzar, S., Vese, L.: A multiscale image representation using hierarchical (BV, L2) decompositions. Multiscale Model. Simul. 2(4), 554–579 (2004)

Talebi, H., Zhu, X., Milanfar, P.: How to saif-ly boost denoising performance. IEEE Trans. Image Process. 22(4), 1470–1485 (2013)

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B Stat. Methodol. 58(1), 267–288 (1996)

Tukey, J.W.: Exploratory data analysis. Reading, MA (1977)

Vaiter, S., Deledalle, C., Fadili, J., Peyré, G., Dossal, C.: The degrees of freedom of partly smooth regularizers. Ann. Inst. Stat. Math. 69(4), 791–832 (2017)

Vaiter, S., Deledalle, C.A., Peyré, G., Dossal, C., Fadili, J.: Local behavior of sparse analysis regularization: applications to risk estimation. Appl. Comput. Harmon. Anal. 35(3), 433–451 (2013)

Vaiter, S., Peyré, G., Dossal, C., Fadili, J.: Robust sparse analysis regularization. IEEE Trans. Inf. Theory 59(4), 2001–2016 (2012)

Vaiter, S., Peyré, G., Fadili, J.: Model consistency of partly smooth regularizers. IEEE Trans. Inf. Theory 64(3), 1725–1737 (2017)

Weiss, P., Escande, P., Bathie, G., Dong, Y.: Contrast invariant SNR and isotonic regressions. Int. J. Comput. Vis. 127(8), 1144–1161 (2019)

Yu, G., Mallat, S., Bacry, E.: Audio denoising by time-frequency block thresholding. IEEE Trans. Signal Process. 56(5), 1830–1839 (2008)

Yuan, M., Lin, Y.: Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B Stat. Methodol. 68(1), 49–67 (2006)

Acknowledgements

This work was supported by the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie Grant Agreement No 777826.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Proximity Operators of Block Penalties

Proximity Operators of Block Penalties

1.1 Convex Conjugates \(\phi ^*\)

We here compute the convex conjugate \(\phi ^*\) of the different block penalties \(\phi (z,{\hat{z}})\) that only depends on \(z\in {\mathbb {R}}^b\) and where \({\hat{z}}\in {\mathbb {R}}^b\) is a given fixed non-null vector. We consider the following representation of the vectors z with respect to the \({\hat{z}}\) axis: \(z=\xi \frac{{\hat{z}}}{\Vert {\hat{z}} \Vert _2}+\zeta \frac{{\hat{z}}^\perp }{\Vert {\hat{z}} \Vert _2}\). This expression is valid for the case \(b=2\). If \(b=1\) then z is only parameterized by \(\xi \). When \(b>2\), \({\hat{z}}^\perp \) must be understood as a subspace S of dimension \(b-1\) and \(\zeta \) as a vector of \(b-1\) components corresponding to each dimension of S.

With this change of variables, we have \(\Vert z \Vert ^2_2=\xi ^2+\Vert \zeta \Vert ^2_2\) (since \(\zeta \) is of dimension \(b-1\)), \(\cos (z,{\hat{z}})=\xi /\sqrt{\xi ^2+\Vert \zeta \Vert ^2_2}\), \(P_{{\hat{z}}}(z)=\xi {\hat{z}}/\Vert {\hat{z}} \Vert _2\) and \(z-P_{{\hat{z}}}(z)=\zeta {\hat{z}}^\perp /\Vert {\hat{z}} \Vert _2\). We also observe for instance that \(|\cos (z,{\hat{z}}) |=1\Leftrightarrow \zeta =0_{b-1}\). All the block penalties \(\phi (z, {\hat{z}})\) can thus be expressed as \(\phi (\xi ,\zeta )\). The convex conjugate reads

-

[HO] It results that the convex conjugate \(\phi _{\mathrm{HO}}^*(\xi _0,\zeta _0)\) is

$$\begin{aligned} \sup _{\xi ,\zeta } \; \xi _0 \xi +\left\langle \zeta _0,\,\zeta \right\rangle - \iota _{\{ 0_{b-1} \}}(\zeta ) = \iota _{\{ 0 \}}(\xi _0)~. \end{aligned}$$(68) -

[HD] The convex conjugate \(\phi _{\mathrm{HD}}^*(\xi _0,\zeta _0)\) is

$$\begin{aligned} \sup _{\xi ,\zeta } \; \xi _0 \xi +\left\langle \zeta _0,\,\zeta \right\rangle - \iota _{{\mathbb {R}}_+^* \times \{ 0_{b-1} \}}(\xi , \zeta ) = \iota _{\mathbb {R}_{-}}(\xi _0)~. \end{aligned}$$(69) -

[QO] The convex conjugate \(\phi _{\mathrm{QO}}^*(\xi _0,\zeta _0)\) is

$$\begin{aligned}&\sup _{\xi ,\zeta } \; \xi _0 \xi +\left\langle \zeta _0,\,\zeta \right\rangle - \frac{\lambda }{2} \frac{\xi ^2+\Vert \zeta \Vert ^2_2}{\Vert \hat{z} \Vert _2}\left( 1- \frac{\xi ^2}{\xi ^2+\Vert \zeta \Vert ^2_2}\right) \end{aligned}$$(70)$$\begin{aligned}&\quad = \sup _{\xi ,\zeta } \; \xi _0 \xi +\left\langle \zeta _0,\,\zeta \right\rangle - \frac{\lambda }{2} \frac{\Vert \zeta \Vert ^2_2}{\Vert \hat{z} \Vert _2} \end{aligned}$$(71)$$\begin{aligned}&\quad = \left\{ \begin{array}{ll} \frac{\Vert {\hat{z}} \Vert _2\Vert \zeta _0 \Vert ^2_2}{2\lambda } &{} {\text {if} \quad }\xi _0 = 0 ~,\\ + \infty &{} {\text {otherwise}}~, \end{array} \right. \end{aligned}$$(72)since the optimality condition on \(\zeta \) gives us \(\zeta ^\star =\frac{\zeta _0\Vert {\hat{z}} \Vert _2}{\lambda }\).

-

[QD] The convex conjugate \(\phi _{\mathrm{QD}}^*(\xi _0,\zeta _0)\) is

$$\begin{aligned}&\sup _{\xi ,\zeta } \; \xi _0 \xi +\left\langle \zeta _0,\,\zeta \right\rangle - \frac{\lambda }{2} \frac{\Vert \zeta \Vert ^2_2}{\Vert \hat{z} \Vert _2}- \left\{ \begin{array}{ll} \frac{\lambda }{2} \frac{\xi ^2}{\Vert \hat{z} \Vert _2} &{} {\text {if} \quad }\xi \le 0\\ 0&{}{\text {otherwise}}. \end{array} \right. \end{aligned}$$(73)$$\begin{aligned}&= \left\{ \begin{array}{ll} \frac{\Vert {\hat{z}} \Vert _2(\xi _0^2+\Vert \zeta _0 \Vert ^2_2)}{2\lambda } &{} {\text {if} \quad }\xi _0\le 0 ~,\\ + \infty &{} {\text {otherwise}}~, \end{array} \right. \end{aligned}$$(74)where we used that if \(\xi _0>0\), taking \(\zeta =0\) and letting \(\xi \rightarrow \infty \) leads to \(\phi ^*_{\mathrm{QD}}(\xi _0,\zeta _0)=+\infty \), otherwise, we used the optimality conditions on \(\zeta \) and \(\xi \) giving us \(\zeta ^\star =\frac{\zeta _0\Vert {\hat{z}} \Vert _2}{\lambda }\) and \(\xi ^\star =\frac{\xi _0\Vert {\hat{z}} \Vert _2}{\lambda }\).

-

[SO] The convex conjugate \(\phi _{\mathrm{SO}}^*(\xi _0,\zeta _0)\) is

$$\begin{aligned}&\sup _{\xi ,\zeta } \; \xi _0 \xi +\left\langle \zeta _0,\,\zeta \right\rangle - \lambda \Vert \zeta \Vert _2 = \iota _{\{ 0 \} \times B_2^\lambda }(\xi _0, \zeta _0)~, \end{aligned}$$(75)since the optimality condition on \(\zeta \) gives us \(\Vert \zeta _0 \Vert _2 \le \lambda \) if \(\zeta ^\star = 0\), and \(\zeta _0 = \lambda \frac{\zeta ^\star }{\Vert \zeta ^\star \Vert _2}\) otherwise.

-

[SD] The convex conjugate \(\phi _{\mathrm{SD}}^*(\xi _0,\zeta _0)\) is

$$\begin{aligned}&\sup _{\xi ,\zeta } \; \xi _0 \xi +\left\langle \zeta _0,\,\zeta \right\rangle - \frac{\lambda }{2} \left( \sqrt{\xi ^2+\Vert \zeta \Vert _2^2 }-\xi \right) \end{aligned}$$(76)$$\begin{aligned}&=\sup _{\xi ,\zeta } \; (\xi _0+\lambda /2) \xi +\left\langle \zeta _0,\,\zeta \right\rangle - \frac{\lambda }{2} \sqrt{\xi ^2+\Vert \zeta \Vert _2^2 } \end{aligned}$$(77)$$\begin{aligned}&= \left\{ \begin{array}{ll} 0&{} {\text {if} \quad }\sqrt{\Vert \zeta _0 \Vert ^2_2+(\xi _0+\lambda /2)^2}\le \lambda /2~,\\ + \infty &{} {\text {otherwise}}~, \end{array} \right. \end{aligned}$$(78)since if \(\sqrt{\Vert \zeta _0 \Vert ^2_2+(\xi _0+\lambda /2)^2}>\lambda /2\), then letting \(\xi \rightarrow {{\,\mathrm{sign}\,}}{(\xi _0+\lambda /2)}\times \infty \) and \(\zeta \rightarrow {{\,\mathrm{sign}\,}}{\zeta _0}\times \infty \) leads to \(\phi _{\mathrm{SD}}^*(\xi _0,\zeta _0)=+\infty \).

1.2 Computing \(\mathrm {prox}_{\kappa \phi ^*}\)

We here give the computation of the proximal operator \(\mathrm {prox}_{\kappa \phi ^*}(\xi _0,\zeta _0)\) of the different \(\phi ^*\) that is given at point \((\xi _0,\zeta _0)\) by

-

[HO] The proximal operator \(\mathrm {prox}_{\kappa \phi _{HO}^*}(\xi _0,\zeta _0)\) is given by

$$\begin{aligned} \underset{\xi ,\zeta }{{{\,\mathrm{argmin}\,}}}\; \frac{1}{2\kappa } \Big |\Big | \begin{pmatrix}\xi \\ \zeta \end{pmatrix} - \begin{pmatrix}\xi _0\\ \zeta _0 \end{pmatrix} \Big |\Big |^2_2+ \iota _{\{ 0 \}}(\xi ) =(0,\zeta _0). \end{aligned}$$(80) -

[HD] The proximal operator \(\mathrm {prox}_{\kappa \phi _{HD}^*}(\xi _0,\zeta _0)\) is given by

$$\begin{aligned} \underset{\xi ,\zeta }{{{\,\mathrm{argmin}\,}}}\; \frac{1}{2\kappa } \Big |\Big | \begin{pmatrix}\xi \\ \zeta \end{pmatrix} - \begin{pmatrix}\xi _0\\ \zeta _0 \end{pmatrix} \Big |\Big |^2_2+ \iota _{{\mathbb {R}}_{-}}(\xi ) =(\min (0,\xi _0),\zeta _0). \end{aligned}$$(81) -

[QO] The proximal operator \(\mathrm {prox}_{\kappa \phi _{QO}^*}(\xi _0,\zeta _0)\) is given by

$$\begin{aligned}&\underset{\xi ,\zeta }{{{\,\mathrm{argmin}\,}}}\; \frac{1}{2\kappa } \Big |\Big | \begin{pmatrix}\xi \\ \zeta \end{pmatrix} - \begin{pmatrix}\xi _0\\ \zeta _0 \end{pmatrix} \Big |\Big |^2_2 + \left\{ \begin{array}{ll} \frac{\Vert {\hat{z}} \Vert _2\Vert \zeta \Vert ^2_2}{2\lambda } &{} {\text {if} \quad }\xi = 0 ~,\\ + \infty &{} {\text {otherwise}}~. \end{array} \right. \end{aligned}$$(82)$$\begin{aligned}&=\frac{\lambda }{\lambda +\kappa \Vert {\hat{z}} \Vert _2}\left( 0,\zeta _0\right) , \end{aligned}$$(83)since the optimality condition gives \(\lambda (\zeta ^\star -\zeta _0)+\kappa \Vert {\hat{z}} \Vert _2 \zeta ^\star =0\).

-

[QD] The proximal operator \(\mathrm {prox}_{\kappa \phi _{QD}^*}(\xi _0,\zeta _0)\) is given by

$$\begin{aligned}&\underset{\xi ,\zeta }{{{\,\mathrm{argmin}\,}}}\; \frac{1}{2\kappa } \Big |\Big | \begin{pmatrix}\xi \\ \zeta \end{pmatrix} - \begin{pmatrix}\xi _0\\ \zeta _0 \end{pmatrix} \Big |\Big |^2_2 + \left\{ \begin{array}{ll} \frac{\Vert {\hat{z}} \Vert _2(\xi ^2+\Vert \zeta \Vert ^2_2)}{2\lambda } &{} {\text {if} \quad }\xi \le 0 ~,\\ + \infty &{} {\text {otherwise}}~. \end{array} \right. \nonumber \\&=\frac{\lambda }{\lambda +\kappa \Vert {\hat{z}} \Vert _2}\left( \min (0,\xi _0),\zeta _0\right) . \end{aligned}$$(84) -

[SO] The proximal operator \(\mathrm {prox}_{\kappa \phi _{QD}^*}(\xi _0,\zeta _0)\) is given by

$$\begin{aligned}&\underset{\xi ,\zeta }{{{\,\mathrm{argmin}\,}}}\; \frac{1}{2\kappa } \Big |\Big | \begin{pmatrix}\xi \\ \zeta \end{pmatrix} - \begin{pmatrix}\xi _0\\ \zeta _0 \end{pmatrix} \Big |\Big |^2_2 + \iota _{\{ 0 \} \times B_2^\lambda }(\xi _0, \zeta _0) \nonumber \\&= \frac{\lambda }{\max (\lambda , \Vert \zeta _0 \Vert _2)} (0, \zeta _0)~. \end{aligned}$$(85) -

[SD] The proximal operator \(\mathrm {prox}_{\kappa \phi _{QD}^*}(\xi _0,\zeta _0)\) is given by

$$\begin{aligned}&\underset{\xi ,\zeta }{{{\,\mathrm{argmin}\,}}}\; \frac{1}{2\kappa } \Big |\Big | \begin{pmatrix}\xi \\ \zeta \end{pmatrix} - \begin{pmatrix}\xi _0\\ \zeta _0 \end{pmatrix} \Big |\Big |^2_2 \nonumber \\&\quad + \left\{ \begin{array}{ll} 0&{} {\text {if} \quad }\sqrt{\Vert \zeta \Vert ^2_2+(\xi +\lambda /2)^2}\le \lambda /2~,\\ + \infty &{} {\text {otherwise}}~. \end{array} \right. \end{aligned}$$(86)$$\begin{aligned}&= \frac{\lambda }{2}\frac{(\xi _0+\lambda /2,\zeta _0) }{\max (\lambda /2,\sqrt{\Vert \zeta _0 \Vert ^2_2+(\xi _0+\lambda /2)^2})} -\left( \lambda /{2},0\right) , \end{aligned}$$(87)which just corresponds to the projection of the \(\ell _2\) ball of \({\mathbb {R}}^b\) of radius \(\lambda /2\) and center \((-\lambda /2,0)\).

Rights and permissions

About this article

Cite this article

Deledalle, CA., Papadakis, N., Salmon, J. et al. Block-Based Refitting in \(\ell _{12}\) Sparse Regularization. J Math Imaging Vis 63, 216–236 (2021). https://doi.org/10.1007/s10851-020-00993-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10851-020-00993-2