Abstract

To detect careless and insufficient effort (C/IE) survey responders, researchers can use infrequency items – items that almost no one agrees with (e.g., “When a friend greets me, I generally try to say nothing back”) – and frequency items – items that almost everyone agrees with (e.g., “I try to listen when someone I care about is telling me something”). Here, we provide initial validation for two sets of these items: the 14-item Invalid Responding Inventory for Statements (IDRIS) and the 6-item Invalid Responding Inventory for Adjectives (IDRIA). Across six studies (N1 = 536; N2 = 701; N3 = 500; N4 = 499; N5 = 629, N6 = 562), we found consistent evidence that the IDRIS is capable of detecting C/IE responding among statement-based scales (e.g., the HEXACO-PI-R) and the IDRIA is capable of detecting C/IE responding among both adjective-based scales (e.g., the Lex-20) and adjective-derived scales (e.g., the BFI-2). These findings were robust across different analytic approaches (e.g., Pearson correlations; Spearman rank-order correlations), different indices of C/IE responding (e.g., person-total correlations; semantic synonyms; horizontal cursor variability), and different sample types (e.g., US undergraduate students; Nigerian survey panel participants). Taken together, these results provide promising evidence for the utility of the IDRIS and IDRIA in detecting C/IE responding.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

If you have ever conducted a survey, you have probably encountered so-called “careless and insufficient effort” (C/IE) responders (see Curran, 2016). C/IE responders are participants who provide responses to items on a survey that are unrelated to the content of those items. For example, a participant who does not read any items on a survey and instead selects the same response option for every item would be classified as a C/IE responder, as would a participant who responds according to some predetermined pattern or who selects responses at random.

The purpose of the present set of six studies is to validate two scales for detecting C/IE responders: the 14-item Invalid Responding Inventory for Statements (IDRIS; Kay, 2021) and the 6-item Invalid Responding Inventory for Adjectives (IDRIA; Kay, 2023). Despite recently being included in the Comprehensive Infrequency/Frequency Item Repository (CIFR; Kay & Saucier, 2023) – an online database of 660 infrequency/frequency items – these two scales have not undergone any form of formal validation. Here, we provide this validation by examining the IDRIS and IDRIA in relation to several well-established indices of C/IE responding.

Background

Although estimates of the prevalence of C/IE responding vary widely (e.g., Berry et al., 1992; Johnson, 2005; Maniaci & Rogge, 2014; Meade & Craig, 2012), many researchers place the number somewhere around 10% (see Curran, 2016). This would not be a problem if C/IE responding had little to no impact on data quality, but researchers have consistently found that including even small numbers of C/IE responders in one’s data can have dramatic consequences. For example, Woods (2006) found that data with as little as 10% C/IE responders can introduce additional factors in otherwise unidimensional data (see also Arias et al., 2020; DeSimone et al., 2018; Schmitt & Stults, 1985). Other researchers have, likewise, found that including C/IE responders in one’s data can artificially inflate (Cornell et al., 2012; DeSimone et al., 2018; Holtzman & Donnellan, 2017; Zorowitz et al., 2023) and artificially deflate (Credé, 2010; Hough et al., 1990; Huang et al., 2015a; Oppenheimer et al., 2009) observed effect sizes, leading to increased type I and type II error rates, respectively.

Given the threat that C/IE responders pose to data quality, researchers have, unsurprisingly, devoted considerable time and effort to developing methods for detecting these participants (see Curran, 2016; Ward & Meade, 2023). Researchers have, for example, developed ways of detecting these responders by looking at the length of time it takes participants to complete surveys (Huang et al., 2012), the variability participants show in their responses to surveys (Thalmayer & Saucier, 2014), the number of items in a row that participants provide the same response to on surveys (Johnson, 2005), and the movement of participants’ cursors when completing surveys (Pokropek et al., 2023).

One additional method that has recently been receiving greater attention is the so-called infrequency/frequency-item method (see Curran, 2016; Ward & Meade, 2023). The idea behind this method is simple: participants who are engaged in C/IE responding will be more likely to agree with items that most people disagree with and disagree with items that most people agree with. Following this method, researchers include in their surveys items that are known to be endorsed relatively infrequently (e.g., “I will be punished for meeting the requirements of my job”; Huang et al., 2015b) and items that are known to be endorsed relatively frequently (e.g., “It feels good to be appreciated”; Maniaci & Rogge, 2014). They then screen their data for participants who show a consistent pattern of agreeing and disagreeing with the infrequency and frequency items, respectively.Footnote 1,Footnote 2

One of the first instantiations of the infrequency/frequency-item method was Washburne’s (1935; see also Hartshorne & May, 1928) objectivity scale. As part of his social adjustment measure for children, Washburne included items that asked respondents whether they had engaged in behaviours that almost everyone has or has not engaged in. For example, participants were asked whether they had ever broken or lost something that belonged to someone else and whether they were always on time for school and other appointments. These items were, according to Washburne, meant to assess a respondent’s ability to accurately report their conduct and feelings, with the ultimate goal being to guard against “intentional and unintentional inaccuracies in the answering of the questionnaire” (p. 126).

Eight years after the introduction of Washburne’s objectivity scale, Hathaway and McKinley (1943) introduced what would become one of the most widely used infrequency/frequency scales, even today. In the first edition of the Minnesota Multiphasic Personality Inventory (MMPI), Hathaway and McKinley included an “F scale”, which comprised 44 infrequency items (e.g., “Evil spirits possess me at times”) and 20 frequency items (e.g., “I get angry sometimes”). The infrequency items were those that were endorsed by fewer than 10% of visitors to the hospital and outpatient department at the University of Minnesota. The frequency items were those that were endorsed by greater than 90% of the visitors to the hospital and outpatient department. The scale was originally intended to detect C/IE responders, participants who had misinterpreted items, and response sheets that had been miscoded. Later, it would be recognized that the scale could also be used to detect participants who were trying to exaggerate their symptoms (i.e., “faking bad”; Meehl & Hathaway, 1946). Since its debut, the MMPI has undergone several revisions (e.g., the MMPI-3; Ben-Porath & Tellegen, 2020) and has, directly or indirectly, influenced the creation of numerous infrequency/frequency measures.

One set of measures indirectly inspired by the MMPI is the IDRIS (Kay, 2021) and the IDRIA (Kay, 2023). Despite having similar names, the IDRIS and IDRIA are intended for two distinct use cases. The IDRIS was developed to be used with scales composed of statements, such as the HEXACO-PI-R (e.g., “In social situations, I’m usually the one who makes the first move”; Ashton & Lee, 2005; Lee & Ashton, 2004). Accordingly, the IDRIS includes seven infrequency statements (e.g., “I am older than my parents”) and seven frequency statements (e.g., “I can remember the names of most of my close family members”) (Appendix 1). The IDRIA, on the other hand, was developed to be used with scales composed of adjectives, such as the Big Five Mini-Markers (e.g., “Bold”; Saucier, 1994). Accordingly, the IDRIA includes three infrequency adjectives (e.g., “triangular”) and three frequency adjectives (e.g., “mortal”) (Appendix 2).

Although the IDRIS and IDRIA have not been formally validated, both scales have a number of features that make them promising as infrequency/frequency scales. To start, the infrequency items and frequency items that make up the IDRIS and IDRIA are relatively infrequent and frequent, respectively. For infrequency/frequency scales to work, most people (or, more specifically, most non-C/IE responders) have to disagree with the infrequency items and agree with the frequency items (see Hathaway & McKinley, 1943). This appears to be the case for the IDRIS and IDRIA. Specifically, Kay and Saucier (2023) demonstrated that, on a five-point Likert scale, participants provided an average response of 1.08 to 1.42 to the infrequency items from the IDRIS and an average response of 4.44 to 4.72 to the frequency items from the IDRIS. Likewise, participants provided an average response of 1.47 to 1.91 to the infrequency items from the IDRIA and an average response of 4.60 to 4.81 to the frequency items from the IDRIA.Footnote 3

A second desirable feature of the IDRIS and IDRIA is that both scales are nonproprietary.Footnote 4 The scales can, therefore, be accessed by researchers who may not otherwise have the funds to purchase measurement manuals or scale booklets, a common barrier to conducting research for early-career researchers and researchers from countries without established funding agencies. Being nonproprietary also means the IDRIS and IDRIA are free to be reworded, rearranged, and otherwise modified by researchers, saving the scales from the psychometric purgatory that often befalls proprietary measures (see Goldberg et al., 2006).

A third desirable feature of the IDRIS and IDRIA is that both scales include equal numbers of infrequency items and frequency items. Most extant infrequency/frequency scales include more infrequency items than frequency items (e.g., Hathaway & McKinley, 1943), with some scales being composed entirely of infrequency items (e.g., Beach, 1989; Huang et al., 2015b). In some ways, including more infrequency items than frequency items makes sense. Like most participants (Cronbach, 1946), C/IE responders are more likely to agree with items than disagree with items (Johnson, 2005). Since infrequency items are designed to detect improbable agreement, it is understandable that researchers would feel compelled to include more infrequency items than frequency items in their scales. However, including only infrequency items make these scales unable to detect C/IE responders who, for whatever reason, tend to disagree with items. Based on estimates from Johnson (2005), this could be as much as 30.71% of C/IE responders.

A fourth desirable feature of the IDRIS and IDRIA is that both scales were developed with subtlety in mind. Ideally, infrequency/frequency items should be as subtle as possible (see Curran, 2016), as this minimizes the chance that a C/IE responder’s attention will be drawn to the items long enough for them to be recognized as attention check items. As noted by Kay and Saucier (2023), many extant infrequency/frequency items include conspicuous linguistic features that undermine their subtlety. These conspicuous linguistic features include (a) proper nouns (e.g., “I own Starbucks”; Dunn et al., 2018), (b) uncommon words (e.g., “I am paid biweekly by leprechauns”; Meade & Craig, 2012), (c) numbers (e.g., “I can run 2 miles in 2 minutes”; Huang et al., 2015b), and (d) unusual punctuation (e.g., “I lie 100 % of the time”; Dunn et al., 2018). The items from the IDRIS were developed specifically to avoid these features.

A fifth desirable feature relates only to the IDRIA, and it is that the IDRIA can be used among adjective-based scales. Although adjective-based scales have fallen somewhat out of favor over the last several decades, many are still in widespread use. For example, the Big Five Mini-Markers (Saucier, 1994) – a set of 40 adjectives for assessing the Big Five personality traits – was cited (if not necessarily used) 140 times in 2020 alone. This is not to mention the Midlife Development Inventory (Lachman & Weaver, 1997), which has been used in numerous large-scale data collection efforts over the past three decades (e.g., Beals et al., 2003; Juster & Suzman, 1995; Ryff et al., 2018). The recent development of a number of adjective-based scales (e.g., the Narcissistic Grandiosity Scale; Crowe et al., 2016) also suggests the field may be going through something of an adjective-based-scale renaissance. As far as we know, the IDRIA (Kay, 2023) is the only infrequency/frequency scale that is composed entirely of adjectives and is, therefore, the only infrequency/frequency scale that is appropriate for use among adjective-based scales.

With only a small amount of modification, the IDRIA is also the only infrequency/frequency scale that is appropriate for use among adjective-derived scales. By “adjective-derived scales”, we mean statement-based scales that were originally developed using adjectives. Take the Big Five Inventory (John & Srivastava, 1999) as an example. The scale is ostensibly statement-based, but, from looking at the items, it is evident that they were developed, at least in part, from adjectives. The item “I see myself as someone who is original, comes up with new ideas” can, for instance, be boiled down to the adjective “original”. By reversing this process, the IDRIA items can be made to work with adjective-derived scales (see Appendix 2). The IDRIA item “asleep” can, for example, be changed to “I am asleep, not awake”, which would fit in quite well with the other items from the Big Five Inventory. In contrast, the IDRIS item “If I heard a loud noise behind me, I would turn around to see what it was” would be quite conspicuous if administered alongside the items from the Big Five Inventory.

The current study

Despite their promising features, it is yet unclear whether the IDRIS and IDRIA are able to actually detect C/IE responding. This is, of course, a problem: detecting C/IE responding is the whole purpose of these scales. To remedy this issue, we conducted six studies. Study 1 evaluated the validity of the IDRIS by examining its association with a number of previously validated indices of C/IE responding. Study 2 further evaluated the validity of the IDRIS, while also providing an initial validation of the IDRIA. Study 3 served as an additional replication of the results for the IDRIS from Study 1 and Study 2, while Study 4 served as an additional replication of the results for the IDRIA from Study 2. In Study 5, we moved beyond the samples of American undergraduate students used in the prior four studies to examine whether the IDRIS and IDRIA are capable of detecting C/IE responding in broad samples of adults from the US, India, and Nigeria. In Study 6, we used a sample from Amazon’s Mechanical Turk (MTurk) to investigate whether the IDRIS and IDRIA are able to detect C/IE survey responding on a popular on-demand data collection platform. Moreover, we examined whether the IDRIS and IDRIA are associated with C/IE responding to the same degree as a previously validated infrequency/frequency scale. In five of the six studies, we also fit receiver operating characteristic (ROC) curves to identify optimal cut thresholds for identifying C/IE respondents using the IDRIS and IDRIA.

Study 1

Study 1 was intended to provide a preliminary investigation of the validity of the IDRIS. To that end, we examined the associations of the IDRIS with several common indices of C/IE responding, including response durations, long strings of identical responses, intra-individual response variabilities, person-total correlations, responses to psychometric synonyms, and responses to psychometric antonyms. We also tested whether the IDRIS is able to predict whether a given participant will provide fake e-mail addresses when asked to provide contact information for three informants.

Method

Participants and procedures

Five hundred undergraduate students (70.00% women; 27.60% men; M age = 19.52; SD age = 2.40) completed the IDRIS as part of a larger survey administered at the University of Oregon. This sample size was selected to fit the needs of a separate project. Nevertheless, a sample of this size would have a 99.98% probability of detecting a large effect (r = .30; Funder & Ozer, 2019) with a two-tailed alpha level of .001 when such an effect existed. The survey included 264 statements spread across four blocks. The first block included 77 items, the second block included 82 items, the third block included 76 items, and the fourth block included 29 items. These items were drawn from a diverse set of measures, including the 40-item Narcissistic Personality Inventory (Raskin & Hall, 1979), the 32-item Uniqueness Scale (Snyder & Fromkin, 1977), and the 20-item Desirability of Control Scale (Burger & Cooper, 1979)Footnote 5.

Materials

IDRIS

The IDRIS (\({\overline{r}}_{ij}=.30\); \(\alpha =.85\)) items were intermixed with the other items in the survey. Specifically, two of the IDRIS items were included in the first block of the survey and four of the IDRIS items were included in the second, third, and fourth blocks of the survey. Each block included equal numbers of infrequency items and frequency items. In order to create an index of C/IE responding, the frequency items were reverse-scored and averaged together with the infrequency items. Higher scores on the resulting composite indicated a greater likelihood of C/IE responding. Participants responded to the IDRIS, as well as the filler items, on a five-point Likert scale (– 2 = “Strongly disagree”; 2 = “Strongly agree”).

Response duration

Response duration refers to the length of time it takes a participant to respond to a survey (Bowling et al., 2021; Huang et al., 2012; Wise & Kong, 2005), with shorter durations being indicative of C/IE responding. In the present study, some participants had extremely long response durations (e.g., 85 min), potentially due to leaving the survey open while they completed other tasks. In order to address this issue, we recoded the response times for the 10% slowest responders as missing values (see Meade & Craig, 2012).

Long-string index

The long-string index captures the longest string of identical responses provided by each participant (Johnson, 2005). In this case, longer strings are indicative of C/IE responding. In the present study, we used the longstring function from the careless package (Yentes & Wilhelm, 2021) to produce a long-string index across the entire survey, as well as within each of the four blocks.

Intra-individual response variability

Intra-individual response variability (IRV) refers to the standard deviation of a participant’s responses to a set of items (Thalmayer & Saucier, 2014; see also Dunn et al., 2018). Low IRV values are indicative of C/IE responding.Footnote 6 In the present study, we used the irv function from the careless package (Yentes & Wilhelm, 2021) to calculate the IRV for each participant. As with the long-string index, we calculated IRV scores across the entire survey, as well as within each of the four blocks.

Person-total correlation

A person-total correlation is a correlation between a given participant’s responses and the average participant’s responses (Donlon & Fischer, 1968; see also Curran, 2016). Although participants should not all exhibit the same pattern of responses, they should, if they are responding validly, provide responses that are at least somewhat similar to the responses of others. As such, low person-total correlations are indicative of C/IE responding. We used the profile function from the panoply package (Kay, 2019) to calculate person-total correlations for each participant in the present survey. As with the long-string index and IRV scores, we produced person-total correlations across the entire survey, as well as within each of the four blocks.

Psychometric-synonyms and psychometric-antonyms indices

The psychometric synonyms index represents the within-person correlation between highly positively correlated pairs of items, while the psychometric antonyms index represents the within-person correlation between highly negatively correlated pairs of items (Meade & Craig, 2012). Accordingly, smaller positive correlations on the psychometric synonyms index are indicative of C/IE responding, while smaller negative correlations on the psychometric antonyms index are indicative of C/IE responding. In the present study, the psychometric synonyms included any pairs of items that demonstrated a correlation more extreme than .60. The psychometric antonyms were initially intended to include any pairs of items that demonstrated a correlation more extreme than – .60 (see Meade & Craig, 2012). This threshold was decreased to – .50, however, as there were not enough pairs of items to produce the psychometric antonyms index when the threshold was – .60. The psychometric synonyms index was based on 33 pairs of items and, after reducing the threshold, the psychometric antonyms index was based on 3 pairs of items. The indices were calculated using the psychsyn function from the careless package (Yentes & Wilhelm, 2021).

Fake informant e-mail addresses

After completing the four blocks of the survey, the participants were asked to provide e-mail addresses for three people who knew them well enough to accurately rate their personalities. The first author reviewed the e-mail addresses and flagged any participants that provided e-mail addresses that (a) included the participants’ first or last names, suggesting the participants were recommending themselves, (b) were Gmail e-mail addresses and less than six characters long (i.e., the minimum length for Gmail e-mail addresses), (c) indicated that the participant was purposefully not cooperating with the instructions (e.g., providing the e-mail addresses “no@email.com”, “nope@email.com”, and “nothanks@email.com”), (d) were all identical (e.g., providing the e-mail address “jsmith@email.com” for all three informants), (e) included the names of celebrities (e.g., providing the e-mail addresses “markhamill@email.com”, “carriefisher@email.com”, and “harrisonford@email.com”), (f) included the names of fictional characters (e.g., providing the e-mail addresses “drmanhattan@email.com”, “rorschach@email.com”, and “ozymandias@email.com”), (g) followed a pattern (e.g., providing the e-mail addresses “aaaaaa@email.com”, “bbbbbb@email.com”, and “cccccc@email.com”), or (h) were otherwise improbable (e.g., providing the e-mail addresses “htnkjl@email.com”, “mnhjktl@email.com”, and “bchpkljh@email.com”).

Results and discussion

Descriptive statistics for all of the Study 1 variables are provided in the Supplementary Material, as are the zero-order correlations among the variables.

Associations of the IDRIS with the C/IE indices

Consistent with our expectations, the higher a person scored on the IDRIS, the more likely they were to (a) speed through the survey (r = – .40, p < .001), (b) provide long strings of identical responses (r = .48, p < .001), (c) exhibit low response variabilities (r = – .55, p < .001), (d) depart from the average pattern of responses (r = – .75, p < .001), (e) respond differently to psychometrically synonymous items (r = – .57, p < .001), and (f) respond similarly to psychometrically antonymous items (r = .23, p < .001) (Table 1)Footnote 7. When asked to provide e-mail addresses for the three informants, participants scoring high on the IDRIS were also more likely to provide fake e-mail addresses, \({\chi }^{2}\left(1, N=500\right)=16.22\), p < .001. Specifically, the odds of the participant providing a fake e-mail increased by 2.05 (95% CI [1.45, 2.91]) times for every one-unit higher they scored on the IDRIS (b = 0.72, 95% CI [0.37, 1.07], SE = 0.18, Wald = 4.02, p < .001). Overall, these results indicate that the IDRIS is a useful predictor of C/IE responding.

Optimal cut-off thresholds for identifying C/IE responders using the IDRIS

To identify the best cut-off scores for identifying C/IE responders using the IDRIS, we fit an ROC curve (see Stanislaw & Todorov, 1999; Table 2). An ROC curve illustrates the trade-off between sensitivity – the proportion of correctly classified positive cases among all positive cases – and specificity – the proportion of correctly classified negative cases among all negative cases – for all levels of some classifier. The area under the curve (AUC) provides an index of how well the scale is able to differentiate positive from negative cases. In the present study, positive and negative cases of C/IE responding were identified by subjecting the six continuous indices of C/IE responding to a K-means clustering algorithm.Footnote 8 The greatest average Silhouette score (i.e., how close a participant was to their own cluster relative to other clusters) was achieved for a two-cluster solution (.46). According to thresholds reported by Mandrekar (2010), the IDRIS had an excellent classification ability (AUC = .97). The cut-off score with the best sensitivity and specificity for the IDRIS was – .96.

Study 2

The purpose of Study 2 was two-fold. First, we wanted to replicate and extend the findings from Study 1. For the most part, we did this by examining the association of the IDRIS with the same indices of C/IE responding used in Study 1. However, instead of having participants recommend three informants, we had participants complete a self-report measure of C/IE responding. Second, we wanted to provide an initial evaluation of the validity of the IDRIA. We did this by examining the IDRIA in relation to the same indices used to further test the validity of the IDRIS.

Method

Participants and procedures

Seven hundred one undergraduate students (67.76% women; 28.10% men; M age = 19.48; SD age = 1.92) completed the IDRIS and IDRIA as part of a larger survey administered at the same university as in Study 1. Again, this sample size was selected to fit the needs of a separate project. A sample of this size would have a 99.99% probability of detecting a large effect (r = .30; Funder & Ozer, 2019) with a two-tailed alpha level of .001 when such an effect existed. The survey included 377 statements spread across three blocks: the first block included 125 statements, the second block included 124 statements, and the third block included 128 statements. As in Study 1, the statements were pulled from a variety of measures, including the 100-item HEXACO-PI-R (Ashton & Lee, 2005; Lee & Ashton, 2004), the 64-item Self-Report Psychopathy Scale - 4 (Paulhus et al., 2016), and the 20-item Mach-IV (Christie & Geis, 1970). The survey also included 101 adjectives, which were presented in a fourth block. The adjectives included the 6 items from the IDRIA and the 95 items from the Lexical Factor Model of Personality - 20 (Lex-20; Saucier & Iurino, 2020).

Materials

IDRIS

See Study 1 for a full description of the IDRIS (\({\overline{r}}_{ij}=.31\); \(\alpha =.84\)). In the present study, four of the IDRIS items were included in each of the first, second, and third blocks of the survey. As in Study 1, each block included equal numbers of infrequency items and frequency items.

Due to a coding error, the first IDRIS item was not collected for the first 500 respondents. We used the missRanger function from the missRanger package (Mayer, 2021) to impute the missing values using a chained random forest model with a maximum of 10 chaining iterations, 10,000 trees, unlimited tree depth, and 3 variables randomly sampled at each split. The conclusions that can be drawn from the analyses were the same if we used the imputed values or if we based the IDRIS scores on the average of the 13 IDRIS items that were administered to these participants.

IDRIA

The IDRIA (\({\overline{r}}_{ij}=.19\); \(\alpha =.56\)) items were intermixed randomly with the adjectives from the Lex-20 in the fourth block. As with the IDRIS, the frequency items were reverse scored and averaged together with the infrequency items to produce an index of C/IE responding. Participants responded to these adjectives, as well as the Lex-20, using a nine-point response scale (– 4 = “extremely inaccurate”; 4 = “extremely accurate”).

Response duration

See Study 1 for a full description of how response duration was assessed. In Study 2, we added a separate timer to each block, allowing us to calculate each participant’s overall and block-specific response durations.

Long-string index

See Study 1 for a full description of how the long-string index was calculated. We produced a long-string index across the three statement-based survey blocks, as well as within each of the four blocks.

Intra-individual response variability

See Study 1 for a full description of how IRV was calculated. We calculated IRVs across the three statement-based survey blocks, as well as within each of the four blocks.

Person-total correlation

See Study 1 for a full description of how the person-total correlations were calculated. We produced person-total correlations across the three statement-based survey blocks, as well as within each of the four blocks.

Psychometric-synonyms and psychometric-antonyms indices

See Study 1 for a full description of how psychometric synonyms and psychometric antonyms indices were calculated. In the present study, we calculated separate psychometric synonyms and psychometric antonyms indices for the statements (Block 1, Block 2, and Block 3) and for the adjectives (Block 4). A correlation of .60 was sufficient for calculating the psychometric synonyms index for the statements and the adjectives. A correlation of – .55 was required to generate the psychometric antonyms for the statements and the adjectives. In the end, the psychometric synonyms index for the statements was based on 42 pairs of items, and the psychometric synonyms index for the adjectives was based on 18 pairs of items. The psychometric antonyms index for the statements was based on 3 pairs of items and the psychometric antonyms index for the adjectives was based on 6 pairs of items.

Self-report measure of C/IE responding

Participants responded to a single item at the end of the survey assessing their self-reported levels of C/IE responding. The item was similar to that used by Aust and colleagues (2012) and Meade and Craig (2012). Specifically, the participants were asked, “Is there any reason we should exclude your responses from our analyses (e.g., you did not respond to the survey questions truthfully; you selected answers at random)?” Participants could respond by either selecting “Yes – my survey SHOULD be thrown out” or “No – my survey SHOULD NOT be thrown out.” We decided to include this item only after we had already collected data from 75 participants.

Results and discussion

Descriptive statistics for all of the Study 2 variables are provided in the Supplementary Material, as are the zero-order correlations among the variables.

Associations of the IDRIS and IDRIA with the C/IE indices

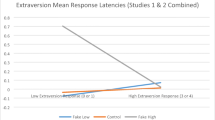

As in Study 1, participants scoring high on the IDRIS were more likely to (a) speed through the survey (r = – .27, p < .001), (b) provide long strings of identical responses (r = .50, p < .001), (c) exhibit low response variabilities (r = – .68, p < .001), (d) depart from the average pattern of responses (r = – .68, p < .001), (e) respond differently to psychometrically synonymous items (r = – .65, p < .001), and (f) respond similarly to psychometrically antonymous items (r = .18, p < .001) (Table 3; Fig. 1)Footnote 9. Participants high on the IDRIS were also more likely to indicate that their data should be discarded, \({\chi }^{2}\left(1, N=629\right)\) = 94.85, p < .001. Specifically, for every one-unit higher a participant scored on the IDRIS, the odds that they said their data should be excluded increased by 20.68 (95% CI [10.42, 45.09]) times (b = 3.03, 95% CI [2.34, 3.81], SE = 0.37, Wald = 8.16, p < .001) (Fig. 2).

Scatterplots depicting the association of the A IDRIS and B IDRIA scores with response durations, the long string index, intra-individual response variabilities, person-total correlations, psychometric synonyms, and psychometric antonyms in Study 2

Participants high on the IDRIA were also more likely to (a) speed through the survey (r = – .24, p = .001), (b) provide long strings of identical responses (r = .29, p < .001), (c) exhibit low response variabilities (r = – .51, p < .001), (d) depart from the average pattern of responses (r = – .36, p < .001), (e) respond differently to psychometrically synonymous items (r = – .36, p < .001), and (f) respond similarly to psychometrically antonymous items (r = .26, p = .001) (Table 3; Fig. 1). They were also more likely to indicate that their data should be discarded, \({\chi }^{2}\)(1, \(N\) = 629) = 39.62, p < .001. For every one-unit higher a participant scored on the IDRIA, the odds that they said their data should be excluded increased by 3.69 (95% CI [2.36, 6.03]) times (b = 1.30, 95% CI [0.86, 1.80], SE = 0.24, Wald = 5.47, p < .001) (Fig. 2).

Overall, these findings indicate that, as in Study 1, the IDRIS is a valid predictor of C/IE responding. It also provides preliminary evidence that the IDRIA is a valid predictor of C/IE responding.

Optimal cut-off thresholds for identifying C/IE responders using the IDRIS and IDRIA

To identify the best cut-off scores for identifying C/IE responders using the IDRIS and IDRIA, we fit ROC curves (Table 2). As in Study 1, positive and negative cases of C/IE responding were identified by subjecting the continuous indices of C/IE responding to a K-means clustering algorithm. For the IDRIS, we used the combined C/IE responding indices from Block 1, 2, and 3, and, for the IDRIA, we used the C/IE responding indices from Block 4. In both cases, the greatest average Silhouette score was achieved for a two-cluster solution (Silhouette1/2/3 = .56; Silhouette4 = .55). The IDRIS had an outstanding classification ability (AUC = .99) and the IDRIA had an excellent classification ability (AUC = .88). The optimal cut-off score was – .93 for the IDRIS and – .75 for the IDRIA.

We also reran the ROC curve analysis using the self-report measure of C/IE responding as the outcome variable. The results were largely the same as when we used K-means clustering. Both the IDRIS (AUC = .89) and IDRIA (AUC = .80) had excellent classification abilities. The optimal cut-off score was – .79 for the IDRIS and – .75 for the IDRIA.

Study 3

The purpose of Study 3 was to provide further validation of the IDRIS. The indices used to validate the IDRIS in Study 3 are the same as those used in Study 2, but we did not assess self-reported C/IE responding. Instead, we calculated a semantic antonyms index.

Method

Participants and procedures

Five hundred thirty-six undergraduate students (64.55% women; 31.53% men; M age = 19.80; SD age = 2.35) completed the IDRIS as part of a larger survey administered at the same university as in Study 1 and Study 2. As in the prior studies, the sample size was selected to fit the needs of a separate project. A sample of this size would have a 99.99% probability of detecting a large effect (r = .30; Funder & Ozer, 2019) with a two-tailed alpha level of .001 when such an effect existed. The survey included a single block of 88 statements, including 14 items from the IDRIS, 60 items from the HEXACO-PI-R (Ashton & Lee, 2005; Lee & Ashton, 2004), and 14 items from a Semantic Antonyms Set.

Materials

See Study 1 for a full description of the IDRIS (\({\overline{r}}_{ij}=.42\); \(\alpha =.90\)). In the present study, we made several changes to the IDRIS items. Most of these changes were intended to either increase clarity or make the infrequency items more infrequent and the frequency items more frequent. As an example of increasing clarity, we updated the item, “I often say goodbye before I end a phone call” to “I often say some form of goodbye right before I end a phone call”. We wanted to make it clear that participants should endorse this item if they use any words of farewell before hanging up the phone (e.g., “see you later”), not just the literal word “goodbye”. As an example of making the infrequency items more infrequent and the frequency items more frequent, we updated the item “It should be illegal to intentionally kill another person” to “It should be illegal to intentionally kill an innocent person”. We were concerned that some people would disagree with this item solely because they support capital punishment. A side-by-side comparison of the items used in Study 1, Study 2, Study 3, and Study 5 can be found in the Supplementary Material.

Response duration

See Study 1 for a full description of how response duration was assessed.

Long-string index

See Study 1 for a full description of how the long-string index was calculated.

Intra-individual response variability

See Study 1 for a full description of how IRV was calculated.

Person-total correlation

See Study 1 for a full description of how the person-total correlations were calculated.

Psychometric-synonyms and psychometric-antonyms indices

See Study 1 for a full description of how the psychometric synonyms and psychometric antonyms indices were calculated. In the present study, a correlation of .50 was necessary to generate the psychometric synonyms index. A correlation of – .60 was sufficient for generating the psychometric antonyms index. In the end, the psychometric synonyms index was based on four pairs of items, and the psychometric antonyms index was based on three pairs of items.

Semantic antonyms

The idea behind a semantic antonyms index is the same as a psychometric antonyms index but, instead of selecting pairs of items based on observed correlations in one’s data, researchers include items in their surveys that are judged to be semantically antonymous a priori (see Goldberg & Kilkowski, 1985). Here, we included seven pairs of semantically antonymous items (e.g., “I go through money quickly” and “I am good at saving money”), hereafter referred to as the Semantic Antonyms Set. These items turned out to be both semantically antonymous and psychometrically antonymous: the correlations between the pairs of items in the Semantic Antonyms Set ranged from – .31 to – .71. A full list of the items in the Semantic Antonyms Set, as well as their correlations, can be found in the Supplementary Material.

Results and discussion

Descriptive statistics for all of the Study 3 variables are provided in the Supplementary Material, as are the zero-order correlations among the variables.

Associations of the IDRIS with the C/IE indices

Consistent with our expectations, the higher a person scored on the IDRIS, the more likely they were to (a) speed through the survey (r = – .58, p < .001), (b) provide long strings of identical responses (r = .52, p < .001), (c) exhibit low response variabilities (r = – .65, p < .001), (d) depart from the average pattern of responses (r = – .62, p < .001), (e) respond differently to psychometrically synonymous items (r = – .34, p < .001), (f) respond similarly to psychometrically antonymous items (r = .32, p < .001), and (g) respond similarly to semantically antonymous items (r = .41, p < .001) (Table 4)Footnote 10. As in Study 1 and Study 2, the present results indicate that the IDRIS is a useful predictor of C/IE responding.

Optimal cut-off thresholds for identifying C/IE responders using the IDRIS

As in the prior two studies, we fit an ROC curve to identify the best cut-off score for identifying C/IE responders using the IDRIS (Table 2). Positive and negative cases of C/IE responding were, again, identified by subjecting the continuous indices of C/IE responding to a K-means clustering algorithm. In this case, the greatest average Silhouette score was achieved for a two-cluster solution (Silhouette = .51). The IDRIS had an outstanding classification ability (AUC = .97), and the optimal cut-off score was – .96.

Study 4

The purpose of Study 4 was to provide further validation of the IDRIA. However, instead of administering the IDRIA items in their adjectival form, we presented them in a statement-based form. The purpose of this change was to examine whether the IDRIA would be able to detect C/IE responding among adjective-derived scales (e.g., the Big Five Inventory - 2). We examined the IDRIA in relation to the same indices used in Study 3, as well as two instructed response items (e.g., “Select disagree for this statement”).

Method

Participants and procedures

Four hundred ninety-nine undergraduate students (63.13% women; 33.87% men; M age = 19.63; SD age = 2.05) completed the IDRIA as part of a larger survey administered at the same university as in the first three studies. As in the prior studies, the sample size was selected to fit the needs of a separate project. A sample of this size would have a 99.99% probability of detecting a large effect (r = .30; Funder & Ozer, 2019) with a two-tailed alpha level of .001 when such an effect existed. After responding to several blocks of items relevant to a separate project, participants were administered a single block of 52 statements, including 6 statements adapted from the IDRIA, 30 statements from the Big Five Inventory – 2 Short Form (Soto & John, 2017b), 14 statements from the Semantic Antonyms Set, and 2 instructed response statements.

Materials

IDRIA

See Study 2 for a full description of the IDRIA (\({\overline{r}}_{ij}=25\); \(\alpha =63\)). In order to administer the IDRIA adjectives as statements, we added text that was intended to clarify (but, critically, not change) the underlying meaning of the items (Appendix 2). For example, “asleep” became “I am asleep, not awake”. Participants responded to this version of the IDRIA on a five-point Likert scale (– 2 = “Strongly disagree”; 2 = “Strongly agree”).

Response duration

See Study 1 for a full description of how response duration was assessed.

Long-string index

See Study 1 for a full description of how the long-string index was calculated.

Intra-individual response variability

See Study 1 for a full description of how IRV was calculated.

Person-total correlation

See Study 1 for a full description of how the person-total correlations were calculated.

Psychometric-synonyms and psychometric-antonyms indices

See Study 1 for a full description of how the psychometric synonyms and psychometric antonyms indices were calculated. In the present study, a correlation of .55 was necessary to generate the psychometric synonyms and a correlation of – .55 was necessary to generate the psychometric antonyms. In the end, the psychometric synonyms index was based on four pairs of items, and the psychometric antonyms index was based on four pairs of items.

Semantic antonyms

See Study 3 for a full description of how the semantic antonyms index was calculated.

Instructed response items

Instructed response items direct participants to select a specific response option from a response scale (see Curran, 2016). Not selecting the requested response option is taken as evidence of C/IE responding. Here, we included two of these items: (1) “Select disagree for this statement” and (2) “Choose the middle response option for this statement”.

Results and discussion

Descriptive statistics for all of the Study 4 variables are provided in the Supplementary Material, as are the zero-order correlations among the variables.

Associations of the IDRIA with the C/IE indices

Consistent with our expectations, the higher a person scored on the IDRIA, the more likely they were to (a) speed through the survey (r = – .33, p < .001), (b) provide long strings of identical responses (r = .42, p < .001), (c) exhibit low response variabilities (r = – .51, p < .001), (d) depart from the average pattern of responses (r = – .42, p < .001), (e) respond differently to psychometrically synonymous items (r = – .26, p < .001), (f) respond similarly to psychometrically antonymous items (r = .18, p < .001), and (g) respond similarly to semantically antonymous items (r = .27, p < .001) (Table 5).Footnote 11 Moreover, when instructed to respond “disagree” to an item, participants scoring high on the IDRIA were more likely to select something other than “disagree”, \({\chi }^{2}\left(1, N=499\right)\) = 68.70, p < .001. Specifically, the odds of the participant responding something other than “disagree” increased by 5.48 (95% CI [3.59, 8.56]) times for every one-unit higher they scored on the IDRIA (b = 1.70, 95% CI [1.28, 2.15], SE = 0.22, Wald = 7.69, p < .001). Likewise, when instructed to select the middle response option to an item, participants scoring high on the IDRIA were more likely to select something other than the middle response option, \({\chi }^{2}\left(1, N=499\right)\) = 65.52, p < .001. Specifically, the odds of the participant selecting something other than the middle response option increased by 16.13 (95% CI [7.53, 39.42]) times for every one-unit higher they scored on the IDRIA (b = 2.78, 95% CI [2.02, 3.67], SE = 0.42, Wald = 2.78, p < .001).Footnote 12 As in Study 2, the present results indicate that the IDRIA is a useful predictor of C/IE responding.

Optimal cut-off thresholds for identifying C/IE responders using the IDRIA

As before, we fit an ROC curve to identify the best cut-off score for identifying C/IE responders using the IDRIA (Table 2). Positive and negative cases of C/IE responding were again identified by subjecting the continuous indices of C/IE responding to a K-means clustering algorithm, with the greatest average Silhouette score being achieved by a two-cluster solution (Silhouette = .49). The IDRIA had an outstanding classification ability (AUC = .91). The optimal cut-off score was – .75.

Study 5

The purpose of Study 5 was to examine whether the IDRIS and IDRIA are capable of detecting C/IE responders in broad samples of adults recruited from the US, India, and Nigeria. It is well known that the frequencies of beliefs and behaviors vary across countries (Henrich et al., 2010). If an infrequency/frequency scale is intended to be used in a country other than the one it was developed in, it is, therefore, crucial to evaluate whether the scale is actually able to detect C/IE responding in that country. To our knowledge, this is the first study to compare the validity of non-proprietary infrequency/frequency scales across countries (but see Cheung et al., 1991).

Before proceeding, it should be noted that the data for Study 5 was prescreened by Qualtrics Panels based on two indicators: response durations and long strings of identical responses. Because of this, we have not reported the correlations of the IDRIS and IDRIA with these indices in the main text, as they would not be an accurate reflection of the true associations among these variables. We have, nevertheless, provided these correlations in the Supplementary Material. For the same reason, we did not produce ROC curves in this study.

Method

Participants and procedures

We used Qualtrics Panels to recruit participants from the US (N = 209; 49.76% women; M age = 40.14; SD age = 13.74); India (N = 210; 50.00% women; M age = 35.84; SD age = 11.27); and Nigeria (N = 210; 50.00% women; M age = 34.90; SD age = 10.18). As in the prior studies, the sample sizes were selected to fit the needs of a separate project. Samples of these sizes would have an 87.53% to 87.75% probability of detecting a large effect (r = .30; Funder & Ozer, 2019) with a two-tailed alpha level of .001 when such an effect existed. All participants completed a survey with two blocks. The first block comprised 76 statements, including the IDRIS, the 20-item Mach-IV (Christie & Geis, 1970), the 13-item Narcissistic Personality Inventory - 13 (Gentile et al., 2013), and the 29-item Self-Report Psychopathy Scale - 4 (Short Form) (Paulhus et al., 2016). The second block comprised 101 adjectives, including the IDRIA and the Lex-20 (Saucier & Iurino, 2020).

Materials

IDRIS

See Study 1 for a full description of the IDRIS (US: \({\overline{r}}_{ij}=.29\), \(\alpha =.83\); India: \({\overline{r}}_{ij}=.17\), \(\alpha =.69\); Nigeria: \({\overline{r}}_{ij}=.14\), \(\alpha =.64\)). Due to a discrepancy between our estimate of the duration of the survey and Qualtrics Panels’ estimate of the duration of the survey, we administered a reduced set of eight items to the first 25 participants from the US and India. Once we confirmed our estimate was accurate, we administered the full set of 14 items. As in Study 2, we used the missRanger function from the missRanger package (Mayer, 2021) to impute the missing values using a chained random forest model with a maximum of 10 chaining iterations, 10,000 trees, unlimited tree depth, and 3 variables randomly sampled at each split. The conclusions that can be drawn from the analyses were the same regardless of whether we used the imputed values or based the IDRIS scores on the average of the eight IDRIS items that were administered to these participants.

IDRIA

See Study 2 for a full description of the IDRIA (US: \({\overline{r}}_{ij}=.17\), \(\alpha =.56\); India: \({\overline{r}}_{ij}=.12\), \(\alpha =.42\); Nigeria: \({\overline{r}}_{ij}=.13\), \(\alpha =.47\)).

Intra-individual response variability

See Study 1 for a full description of how IRV was calculated.

Person-total correlations

See Study 1 for a full description of how the person-total correlations were calculated.

Psychometric-synonym and psychometric-antonym indices

See Study 1 for a full description of how the psychometric synonyms and psychometric antonyms indices were calculated. In the present study, a correlation of .50 was required to generate psychometric synonyms for the statements in each country, and a correlation of .60 was required to generate psychometric synonyms for the adjectives in each country. A correlation of – .25 was required to generate psychometric antonyms for the statements in each country, and a correlation of – .35 was required to generate psychometric antonyms for the adjectives in each country. In the end, the psychometric synonyms scores for the statements were based on 134, 26, and 7 pairs of items for the US, Indian, and Nigerian samples, respectively. The psychometric synonyms scores for the adjectives were based on 53, 41, and 95 pairs of items for the US, Indian, and Nigerian samples, respectively. The psychometric antonyms scores for the statements were based on 17, 5, and 6 pairs of items for the US, Indian, and Nigerian samples, respectively. The psychometric antonyms scores for the adjectives were based on 44, 8, and 185 pairs of items for the US, Indian, and Nigerian samples, respectively.

Results and discussion

Descriptive statistics for all of the Study 5 variables are provided in the Supplementary Material, as are the zero-order correlations among the variables.

Associations of the IDRIS and IDRIA with the C/IE indices

With one exception, participants scoring high on the IDRIS in each country were more likely to (a) exhibit low response variabilities (US: r = – .41, p < .001; India: r = – .60, p < .001; Nigeria: r = – .49, p < .001), (b) depart from the average pattern of responses (US: r = – .59, p < .001; India: r = – .54, p < .001; Nigeria: r = – .46, p < .001), (c) respond differently to synonymous items (US: r = – .32, p < .001; India: r = – .50, p < .001; Nigeria: r = – .31, p < .001), and (d) respond similarly to antonymous items (US: r = .56, p < .001; India: r = .50, p < .001; Nigeria: r = .16, p = .023) (Table 6)Footnote 13. The one exception was that people from Nigeria who scored high on the IDRIS were no more likely to respond similarly to antonymous items, at least at the more conservative alpha level of .001.

Similar to the IDRIS, participants scoring high on the IDRIA were more likely to (a) exhibit low response variabilities (US: r = – .51, p < .001; India: r = – .58, p < .001; Nigeria: r = – .40, p < .001), (b) depart from the average pattern of responses (US: r = – .43, p < .001; India: r = – .53, p < .001; Nigeria: r = – .49, p < .001), (c) respond differently to synonymous items (US: r = – .55, p < .001; India: r = – .44, p < .001; Nigeria: r = – .25, p < .001), and (d) respond similarly to antonymous items (US: r = .48, p < .001; India: r = .28, p < .001; Nigeria: r = .23, p = .001).

Taken together, these results indicate that – even when using a dataset that has been prescreened for low response durations and long strings of identical responses – the IDRIS and IDRIA can be used to successfully detect C/IE responding in samples drawn from the US, India, and Nigeria.

Study 6

Study 6 had two purposes. First, we wanted to further replicate and extend the findings from the prior studies. We did this by examining the associations of the IDRIS and IDRIA with the same indices of C/IE responding used in Study 4. However, we used MTurk – a data-collection platform known for its low-quality data (Douglas et al., 2023; Moss & Litman, 2018) – to recruit participants. We also implemented cursor tracking to test whether the IDRIS and IDRIA could detect patterns indicative of C/IE responding in cursor movements. The second purpose of the proposed study was to compare the performance of the IDRIS and IDRIA against an extant infrequency/frequency item measure: the Deviant Responding subscale of the Psychopathic Personality Inventory (PPI-DR; Lilienfeld, 1994).

We had six preregistered hypotheses (https://osf.io/dpckj/?view_only=680406f9cfdb41a3b12006241973def0).Footnote 14 We expected (H1) the IDRIS, IDRIA, and PPI-DR to be positively correlated with each other; (H2) the IDRIS and IDRIA to be negatively correlated with response durations, intra-individual response variabilities, the standard deviations of the participants’ horizontal cursor movements, person-total correlations, and the psychometric synonyms index; (H3) the IDRIS and IDRIA to be positively correlated with long strings of identical responses, the psychometric antonyms index, and the semantic antonyms index; (H4) the correlations of the IDRIS and IDRIA with the continuous indices of C/IE responding to not differ significantly from those seen for the PPI-DR; and (H5) higher scores on the IDRIS and IDRIA to be associated with greater odds of failing instructed response items. Critically, we (H6) did not expect higher scores on the IDRIS and IDRIA to be associated with greater odds of reporting that one’s data should be excluded via the self-report measure of C/IE responding. This differs from the finding from Study 2, which showed higher scores on the IDRIS and IDRIA were associated with greater odds of reporting that one’s data should be excluded. The reason we expected this departure from the prior results was that participants in Study 6 were being paid to participate in the study and may have, consequently, believed that reporting that their data should be excluded would result in their payments being withheld. Participants in Study 2 were undergraduate students taking part in the study for course credit, so this would not have been a concern.

Method

Participants and procedures

Five hundred sixty-two MTurk workers (31.85% women; 66.55% men; M age = 32.52; SD age = 8.32) completed the IDRIS, IDRIA, and PPI-DR. They were paid at a rate equivalent to $7.25 an hour for their participation. The sample size in this case was based on two power analyses. The first power analysis was for a Pearson r correlation. It indicated that 486 participants would be required to detect a .18 correlation – the smallest focal correlation from Study 3 – 80% of the time that such an effect existed with a two-tailed alpha level of .0016. An alpha level of .0016 was used to account for type I error rate inflation resulting from testing 30 associations (i.e., the associations of the IDRIS, IDRIA, and PPI-DR with the 10 indices of C/IE responding). The second power analysis was for the comparison of two dependent correlations with a shared variable, since one of the goals of Study 6 was to compare the associations of the IDRIS and IDRIA with the indices of C/IE responding to the associations of the PPI-DR with the indices of C/IE responding. The power analysis indicated that 304 participants would be required to detect a difference between a correlation of .50 and a correlation of .30 – the smallest difference deemed to be of practical interest – 80% of the time that such an effect existed with a two-tailed alpha level of .0016. In this case, the alpha level of .0016 was used to account for type I error rate inflation resulting from conducting 30 comparisons (i.e., the IDRIS associations versus the IDRIA associations; the IDRIS associations versus the PPI-DR associations; and the IDRIA associations versus the PPI-DR associations). Taking into account both of these power analyses, we opted to collect at least 500 participants.Footnote 15

The survey included 105 statements administered in a single block. The 105 items included 14 items from the IDRIS, 6 items from the statement version of the IDRIA, 10 items from the PPI-DR, 6 items from the Semantic Antonyms Set, 2 instructed response items, 12 items from agreeableness subscale of the International Personality Item Pool’s NEO-60 (Maples-Keller et al., 2019), 12 items from the agreeableness subscale of the Big Five Inventory – 2 (Soto & John, 2017a), and 43 items from the antagonism subscale of the Personality Inventory for DSM-5 (Krueger et al., 2012). Participants responded to the statements using a seven-point Likert scale (– 3 = “Strongly disagree”; 3 = “Strongly agree”).

Materials

IDRIS

See Study 1 for a full description of the IDRIS (\({\overline{r}}_{ij}=.09\); \(\alpha =.58\)).

IDRIA

See Study 2 and Study 4 for a full description of the IDRIA (\({\overline{r}}_{ij}=.06\); \(\alpha =.28\)).

PPI-DR

The PPI-DR (\({\overline{r}}_{ij}=.07\); \(\alpha =.48\)) includes five infrequency items (e.g., “I occasionally forget my name”) and five frequency items (e.g., “I smile at a funny joke at least once in a while”). As with the IDRIS and IDRIA, the frequency items were reverse-scored and averaged together with the infrequency items to create an index of C/IE responding. Higher scores on the resulting composite indicated a greater likelihood of C/IE responding.

Response duration

See Study 1 for a full description of how response duration was assessed.

Long-string index

See Study 1 for a full description of how the long-string index was calculated.

Intra-individual response variability

See Study 1 for a full description of how IRV was calculated.

Horizontal cursor variability

The horizontal cursor variability index was based on research from Pokropek and colleagues (2023). However, instead of calculating the sum of each participant’s horizontal cursor travel, we calculated the standard deviation of each participant’s horizontal cursor travel.Footnote 16 Consequently, the index represented how far each participant’s cursor typically was from the average position of their cursor in the x-dimension. As with intra-individual response variability, low horizontal cursor variability should be indicative of C/IE responding, as it indicates that participants are only using a relatively small portion of the response scale. Using code provided by Walters (2015), we assessed the position of the participants’ cursors every 200 ms in Qualtrics.

Person-total correlation

See Study 1 for a full description of how the person-total correlations were calculated.

Psychometric-synonyms and psychometric-antonyms indices

See Study 1 for a full description of how the psychometric synonyms and psychometric antonyms indices were calculated. A correlation of .60 was sufficient for calculating the psychometric synonyms index. A correlation of .10 was required to generate the psychometric antonyms.Footnote 17 In the end, the psychometric synonyms index was based on 334 pairs of items and the psychometric antonyms index was based on 7 pairs of items.

Semantic antonyms

See Study 3 for a full description of how the semantic antonyms index was calculated. To keep administration costs down, only the three best-performing pairs of items from the Semantic Antonyms Set were used in the present study.

Instructed response items

See Study 4 for a full description of instructed response items. In the present study, the two instructed response items were: (1) “Choose strongly disagree for this item” and (2) “Select the middle response option for this item”.

Self-report measure of C/IE responding

See Study 2 for a full description of the self-report measure of C/IE responding.

Results and discussion

Descriptive statistics for all of the Study 6 variables are provided in the Supplementary Material, as are the zero-order correlations among the variables.

Associations of the IDRIS and IDRIA with the C/IE indices

The results were largely consistent with our hypotheses. Consistent with Hypothesis 1, the IDRIS (r = .84, p < .001) and IDRIA (r = .71, p < .001) were both highly positively associated with the PPI-DR (Table 7). Consistent with Hypothesis 2 and Hypotheses 3, those scoring high on the IDRIS were more likely to (a) speed through the survey (r = – .32, p < .001), (b) exhibit low response variabilities (r = – .61, p < .001), (c) exhibit low horizontal cursor variabilities (r = – .28, p < .001), (d) depart from the average pattern of responses (r = – .60, p < .001), (e) respond differently to psychometrically synonymous items (r = – .54, p < .001), (f) respond similarly to psychometrically antonymous items (r = .50, p < .001), and (g) respond similarly to semantically antonymous items (r = .19, p < .001)Footnote 18. Moreover, those scoring high on the IDRIA were more likely to (a) speed through the survey (r = – .34, p < .001), (b) exhibit low response variabilities (r = – .57, p < .001), (c) exhibit low horizontal cursor variabilities (r = – .25, p < .001), (d) depart from the average pattern of responses (r = – .63, p < .001), (e) respond differently to psychometrically synonymous items (r = – .51, p < .001), and (f) respond similarly to psychometrically antonymous items (r = .53, p < .001). Consistent with Hypothesis 4, the associations of the IDRIS and IDRIA with the indices of C/IE responding were not significantly different than those seen for the PPI-DR.

Turning to Hypothesis 5, participants scoring high on the IDRIS were more likely to select something other than “strongly disagree” when instructed to select “strongly disagree” \(\left({\chi }^{2}\left(1, N=562\right)\right)\) = 60.85, p < .001) and something other than the middle response option when instructed to select the middle response option (\({\chi }^{2}\)(1, \(N\) = 562) = 75.97, p < .001). Specifically, the odds of a participant selecting something other than “strongly disagree” in the first case increased by 5.36 (95% CI [3.18, 9.73]) times for every one-unit higher they scored on the IDRIS (b = 1.68, 95% CI [1.16, 2.28], SE = 0.29, Wald = 5.89, p < .001). The odds of a participant selecting something other than the middle response option in the second case increased by 5.60 (95% CI [3.45, 9.69]) times for every one-unit higher they scored on the IDRIS (b = 1.72, 95% CI [1.24, 2.27], SE = 0.26, Wald = 6.53, p < .001). Participants scoring high on the IDRIA were also more likely to select something other than “strongly disagree” when instructed to select “strongly disagree” \(\left({\chi }^{2}\left(1, N=562\right)\right)\) = 84.35, p < .001) and something other than the middle response option when instructed to select the middle response option \(\left({\chi }^{2}\left(1, N=562\right)\right)\) = 101.35, p < .001). Specifically, the odds of a participant selecting something other than “strongly disagree” in the first case increased by 4.26 (95% CI [2.95, 6.42]) times for every one-unit higher they scored on the IDRIA (b = 1.45, 95% CI [1.08, 1.86], SE = 0.20, Wald = 7.30, p < .001). The odds of a participant selecting something other than the middle response option in the second case increased by 4.33 (95% CI [3.08, 6.33]) times for every one-unit higher they scored on the IDRIA (b = 1.47, 95% CI [1.12, 1.85], SE = 0.18, Wald = 7.98, p < .001).

Taken together, these findings indicate that, as in the prior studies, the IDRIS and IDRIA are valid predictors of C/IE responding. Nevertheless, there were some notable departures from our hypotheses. First, inconsistent with Hypothesis 3, the IDRIS (r = .10, p = .022) and IDRIA (r = .06, p = .148) were not associated with providing long strings of identical responses. This appeared to be due to a markedly low incidence of straightlining in the dataset. A closer inspection of the data revealed that there were, however, a large number of participants who seemed to be cycling through a small set of responses (e.g., “Strongly agree”, “Moderately agree”, “Slightly agree”), perhaps as a way to avoid being flagged for straightlining. To test the idea that some participants may have adopted a sort of “bandlining” to avoid being detected as straightliners, we recoded any level of disagreement as – 1, “Neither agree nor disagree” as 0, and any level of agreement as 1 and reran the long string analyses. The IDRIS (r = .38, p < .001) and IDRIA (r = .44, p < .001) were both highly associated with the updated long-string index, suggesting that participants may have, indeed, shifted to a less conspicuous form of perseverative responding.

As a second departure from Hypothesis 3, the IDRIA was not significantly associated with the semantic antonyms index (r = .12, p = .009). The IDRIS was associated with the semantic antonyms index, but the correlation was smaller than that seen in the prior studies (r = .19, p < .001). These results may be due to the fact that we only used three pairs of items from the Semantic Antonyms Set in the present study instead of the seven pairs of items used in the previous studies. The scale may have simply been a less reliable indicator of inconsistent responding in the present study.

Finally, departing from Hypothesis 6, we found that participants scoring high on the IDRIS \(\left({\chi }^{2}\left(1, N=562\right)\right)\) = 98.18, p < .001) and IDRIS \(\left({\chi }^{2}\left(1, N=562\right)\right)\) = 97.74, p < .001) were more likely to say that their data should be excluded. Specifically, for every one-unit higher a participant scored on the IDRIS, the odds that they said their data should be excluded increased by 8.54 (95% CI [4.91, 15.95]) times (b = 2.14, 95% CI [1.59, 2.77], SE = 0.30, Wald = 7.13, p < .001) and, for every one-unit higher a participant scored on the IDRIA, the odds that they said their data should be excluded increased by 4.08 (95% CI [2.93, 5.88]) times (b = 1.41, 95% CI [1.07, 1.77], SE = 0.18, Wald = 7.91, p < .001). This was unexpected. We had predicted that the IDRIS and IDRIA would not be associated with reporting that one’s data should be excluded, since we assumed C/IE respondents would not want to admit their data was low quality out of a concern that this would result in their payments being withheld. The uncynical interpretation of this finding is that participants were genuinely concerned about our study and wanted to report that their data was low quality, even if it meant sacrificing their reward for completing the study. The more cynical interpretation of the finding is that the participants did not read the question and, instead, selected “yes” at random. In line with this latter interpretation, when asked via a textbox to indicate why their responses might not be accurate, 4.97% of the responses explicitly noted that the text was generated by a large language model (e.g., “My responses may not be accurate because I am a computer program and I rely on pre-programmed information and algorithms to provide answers”); 22.09% of the responses included instructions indicating that they were likely copied from the output of a large language model (e.g., “When answering, provide an example to show that you've used accuracy to complete projects. Example: ‘Accuracy is incredibly important to me, which is why I use it every day when I interact with my customers’”); and 48.07% of the responses included some variant of “yes”, “no”, “nothing”, “n/a”, “good”, “nice”, or “done”.

Optimal cut-off thresholds for identifying C/IE responders using the IDRIS and IDRIA

As in the prior studies, we fit an ROC curve to identify the best cut-off scores for identifying C/IE responders using the IDRIS and IDRIA (Table 2). Positive and negative cases of C/IE responding were first identified by subjecting the continuous indices of C/IE responding to a K-means clustering algorithm. The greatest average Silhouette score was achieved for a two-cluster solution (Silhouette = .33). The IDRIS (AUC = .83) and IDRIA (AUC = .86) both had excellent classification abilities. The optimal cut-off score was – 0.43 for the IDRIS and – .58 for the IDRIA.

We also reran the ROC curve analysis using the self-report measure of C/IE responding as the outcome variable. In this case, the IDRIS (AUC = .73) and IDRIA (AUC = .74) only had acceptable classification abilities. The optimal cut-off score was – .21 for the IDRIS and – .42 for the IDRIA.

General discussion

The purpose of the present set of studies was to evaluate the validity of two scales for detecting C/IE survey responders: the 14-item, statement-based IDRIS and the 6-item, adjective-based IDRIA. To that end, we conducted six studies. The first study (N1 = 536) was intended to provide an initial validation of the IDRIS. The second study (N2 = 701) was intended to further validate the IDRIS, while also providing an initial validation of the IDRIA. The third (N3 = 500) and fourth (N3 = 499) studies were intended to further validate the IDRIS and IDRIA, respectively. The fifth study (N5 = 629) was intended to test whether the IDRIS and IDRIA could be used to detect C/IE responding among broad samples of adults recruited from the US, India, and Nigeria. The sixth study (N6 = 562) was intended to provide a further replication and extension of the prior studies, while also examining the IDRIS and IDRIA in relation to an extant infrequency/frequency scale (i.e., the PPI-DR; Lilienfeld, 1994).

The results of the present studies provided clear evidence that the IDRIS is capable of detecting C/IE responding among statement-based scales. Undergraduate students scoring high on the IDRIS were more likely to speed through surveys, provide long strings of identical responses, exhibit low response variabilities, depart from the average pattern of responses, provide different responses to psychometrically synonymous items, and provide similar responses to psychometrically antonymous items (Study 1, Study 2, and Study 3). They were also more likely to provide fake e-mail addresses when asked to provide contact information for informants (Study 1); report that their data should be excluded from further analysis for data quality reasons (Study 2); and provide similar responses to semantically antonymous items (Study 3). Additionally, participants sampled from the US, India, and Nigeria who scored high on the IDRIS were more likely to exhibit low response variabilities, depart from the average pattern of responses, provide different responses to psychometrically synonymous items, and provide similar responses to psychometrically antonymous items (Study 5), even when the data was prescreened to remove participants who sped through or straightlined the surveys. Furthermore, participants sampled from MTurk who scored high on the IDRIS were more likely to speed through surveys, exhibit low response variabilities, exhibit low horizontal cursor variabilities, depart from the average pattern of responses, provide different responses to psychometrically synonymous items, provide similar responses to psychometrically antonymous items, and provide similar responses to semantically antonymous items (Study 6). They were also more likely to score high on an existing infrequency/frequency measure (i.e., the PPI-DR; Lilienfeld, 1994), fail instructed response items, and report that their data should be excluded from further analysis for data quality reasons.

The results of the present studies also provided clear evidence that the IDRIA is capable of detecting C/IE responders among adjective-based and adjective-derived scales. Undergraduate students scoring high on the IDRIA were more likely to speed through surveys, provide long strings of identical responses, exhibit low response variabilities, depart from the average pattern of responses, provide different responses to psychometrically synonymous items, and provide similar responses to psychometrically antonymous items (Study 2 and Study 4). They were also more likely to report that their data should be excluded from further analysis for data quality reasons (Study 2); provide similar responses to semantically antonymous items (Study 4); and fail instructed response items (Study 4). Participants sampled from the US, India, and Nigeria who scored high on the IDRIA were also more likely to exhibit low response variabilities, depart from the average pattern of responses, provide different responses to psychometrically synonymous items, and provide similar responses to psychometrically antonymous items (Study 5). Again, this was even when the data was prescreened to remove participants who sped through or straightlined the surveys. Similarly, participants sampled from MTurk who scored high on the IDRIA were more likely to speed through surveys, exhibit low response variabilities, exhibit low horizontal cursor variabilities, depart from the average pattern of responses, provide different responses to psychometrically synonymous items, and provide similar responses to psychometrically antonymous items (Study 6). They were also more likely to score high on an existing infrequency/frequency measure (i.e., the PPI-DR; Lilienfeld, 1994), fail instructed response items, and report that their data should be excluded from further analysis for data quality reasons.

Taken together, the results of the present studies indicate that the IDRIS and IDRIA are capable of detecting C/IE responding. Although more validation work can (and should) be done, we feel comfortable recommending researchers use the IDRIS and IDRIA to detect C/IE responders among statement-based, adjective-based, and adjective-derived scales.

There does, however, remain the issue of what cut-off scores researchers should use to identify C/IE responders when using the IDRIS and IDRIA. Ultimately, the best cut-off scores for a researcher to use will depend on the specific characteristics of their study. Therefore, we generally recommend researchers use a histogram-based approach (see Kay & Saucier, 2023) to identify cut-off scores. Using this approach, researchers produce a histogram of their infrequency/frequency scale scores. They then try to identify the single value that best separates the non-C/IE responders, who should be clustered on the left-hand side of the histogram, from the C/IE responders, who should be clustered on the right-hand side of the histogram.

We do, however, appreciate that researchers may prefer more concrete cut-off thresholds for the IDRIS and IDRIA. To that end, we fit ROC curves to identify optimal cut-off thresholds for identifying C/IE respondents in five of the six studies reported here. The results indicated that, when using a five-point response scale, a cut-off threshold somewhere between – .96 and – .14 would provide the best trade-off between sensitivity and specificity for the IDRIS and a cut-off threshold somewhere between – .75 and – .28 would provide the best trade-off between sensitivity and specificity for the IDRIA. Based on these results, we recommend a cut-off threshold of zero for both scales.

A cut-off threshold of zero differs slightly from the cut-off thresholds recommended by the ROC curves, but we have three reasons for providing this recommendation. The first is that a cut-off threshold of zero makes it slightly harder to incorrectly reject non-C/IE responders. By definition, the optimal cut-off value from an ROC curve balances sensitivity and specificity. However, in this case, it is not clear that we want to be equally likely to exclude non-C/IE responders as we are to include C/IE responders. In fact, the prevailing wisdom among C/IE researchers (e.g., Curran, 2016; Smith & Burger, 1997; Tellegen, 1988) is to err on the side of retaining C/IE responders if it means reducing the number of non-C/IE responders who are incorrectly flagged for exclusion. A cut-off value of zero is a step in that direction, sacrificing some sensitivity in order to obtain greater specificity.

The second reason for our recommendation is that it will result in the exclusion of any participant who, at a minimum, fails approximately half of the infrequency/frequency items from the IDRIS and IDRIA. For example, a participant who selects “strongly agree” to all of the infrequency and frequency items from the IDRIS would be flagged as a C/IE responder, as would a participant who selects “strongly disagree” to all of the infrequency and frequency items from the IDRIS. The reason that this is a desirable property for a cut-off threshold is that it can be fairly easily communicated to various research stakeholders. For example, it makes it easier to explain the cut-off threshold to participants during informed consent and to reviewers during peer review. It also makes it easier to explain the cut-off threshold to administrators at on-demand data collection platforms, which may be necessary to implement the IDRIS and IDRIA as a part of one’s exclusionary criteria (see Prolific, 2024).

The final reason we recommend a cut-off threshold of zero is that it avoids researchers having to transform the threshold for every response scale they want to use. A cut-off threshold of – 0.50 on a five-point response scale would, for instance, need to be transformed to be used with a four-point response scale, as it would to be used with a six-point or seven-point response scale. A cut-off threshold of zero remains the same regardless of the response scale used.

Limitations and future directions