Abstract

For decades, researchers across the social sciences have sought to document and explain the worldwide variation in social group attitudes (evaluative representations, e.g., young–good/old–bad) and stereotypes (attribute representations, e.g., male–science/female–arts). Indeed, uncovering such country-level variation can provide key insights into questions ranging from how attitudes and stereotypes are clustered across places to why places vary in attitudes and stereotypes (including ecological and social correlates). Here, we introduce the Project Implicit:International (PI:International) dataset that has the potential to propel such research by offering the first cross-country dataset of both implicit (indirectly measured) and explicit (directly measured) attitudes and stereotypes across multiple topics and years. PI:International comprises 2.3 million tests for seven topics (race, sexual orientation, age, body weight, nationality, and skin-tone attitudes, as well as men/women–science/arts stereotypes) using both indirect (Implicit Association Test; IAT) and direct (self-report) measures collected continuously from 2009 to 2019 from 34 countries in each country’s native language(s). We show that the IAT data from PI:International have adequate internal consistency (split-half reliability), convergent validity (implicit–explicit correlations), and known groups validity. Given such reliability and validity, we summarize basic descriptive statistics on the overall strength and variability of implicit and explicit attitudes and stereotypes around the world. The PI:International dataset, including both summary data and trial-level data from the IAT, is provided openly to facilitate wide access and novel discoveries on the global nature of implicit and explicit attitudes and stereotypes.

It is nearly impossible to imagine a world without social group attitudes (i.e., evaluative representations, such as young–good/old–bad; Eagly & Chaiken, 1998) and stereotypes (i.e., attribute representations not reducible to valence, such as female–arts/male–science). After all, attitudes and stereotypes are, in large part, the driving force behind consequential social behaviors (Ajzen & Fishbein, 1977, 2005), helping to guide who we approach or avoid, who is hired or promoted (e.g., Moss-Racusin et al., 2012), and even who receives quality healthcare (e.g., Penner et al., 2010). It has become almost clichéd at this point to quote Allport (1935) in asserting that attitudes are the most indispensable construct in social psychology; yet, the continued presence of research on these topics shows that attitudes and stereotypes indeed continue to be indispensable (Banaji & Heiphetz, 2010).

Research on attitudes and stereotypes began with the use of direct measures, such as Likert scales and other forms of self-report, to reveal relatively explicit attitudes and stereotypes (Allport, 1935). These direct measures of attitudes and stereotypes have helped uncover insights into the basic organization of social group knowledge, its antecedents and consequences, as well as its variability across individuals and in response to contextual variations (e.g., Albarracín et al., 2005; McGuire, 1969; Petty et al., 1997; Wood, 2000). Research from recent decades, however, has revealed that much of social cognition is not exclusively explicit or deliberative, but rather can also occur rapidly and with relatively little introspection or control (Bargh, 1989; Devine, 1989; Fazio et al., 1986; Greenwald & Banaji, 1995, 2017). That is, attitudes and stereotypes can be relatively implicit and indexed using indirect measures, such as the Evaluative Priming Task (Fazio et al., 1986), the Implicit Association Test (IAT; Greenwald et al., 1998), and the Affect Misattribution Procedure (Payne et al., 2005).Footnote 1 It is now well established that the indirect measurement of attitudes and stereotypes can reveal unique patterns – different from those captured through direct measurement alone – whether in terms of demographic correlates (Nosek et al., 2007), correlations with consequential behaviors (Kurdi et al., 2019), or patterns of malleability and change (Charlesworth & Banaji, 2019; Gawronski & Bodenhausen, 2006). As such, any study of attitudes and stereotypes is most comprehensive when it considers both direct and indirect measures.

To date, research on implicit and explicit attitudes and stereotypes has been largely conducted at the level of the individual. A typical study may be aimed at identifying what makes an individual reveal stronger or weaker attitudes, such as the individual’s attitude structure (e.g., the other attitudes they hold; Eagly & Chaiken, 1998) or the individual’s current experimental context (e.g., the presence of a Black experimenter; Lowery et al., 2001). More recently, however, the increased availability of big data archives of attitude and stereotype measures has made it possible to also examine these constructs at the societal level. That is, one can aggregate measures of attitudes and stereotypes across thousands or even millions of respondents to estimate how a given culture, on average, represents a given social group (Charlesworth & Banaji, in press-b; Hehman et al., 2019).

Studying societal-level attitudes and stereotypes is crucial for understanding the nature of culture: Cultures are distinguishable from one another, in part, because they differ in how they feel and what they think about the social groups that make up their societies (North & Fiske, 2015; Segall et al., 1998; Spencer-Rodgers et al., 2012). Additionally, studying societal-level attitudes and stereotypes holds the potential for deepening our understanding of the fundamental nature of attitudes and stereotypes, including the types of societal experiences and phenomena that they reflect (e.g., historical legacies of slavery; Payne et al., 2019).

Here, we contribute to this new direction of research on societal-level implicit and explicit attitudes and stereotypes by introducing the PI:International dataset – a dataset that will facilitate comprehensive studies across multiple cultures and multiple years. The PI:International dataset comprises (a) a sample of more than 2.3 million participants, drawn from 34 countries, (b) assessing seven different social group topics (attitudes toward race, sexual orientation, age, body weight, nationality, and skin tone, as well as gender stereotypes associating men with science and women with arts), (c) collected continuously for 11 years between 2009 and 2019, (d) with both direct (self-report) measures and indirect measures (IATs) administered (e) in the country’s native language(s). Additionally, the dataset uniquely includes trial-level data from the IAT to facilitate analyses of measurement reliability and the use of process dissociation models (Conrey et al., 2005). Finally, the PI:International dataset is freely and openly available online through the Open Science Framework in a user-friendly cleaned format, with detailed codebooks and companion R scripts to facilitate research on the global nature of attitudes and stereotypes.

Past studies of cross-cultural variation in social group attitudes and stereotypes

The study of attitudes and stereotypes has been at the center of social psychological research for decades (Banaji & Heiphetz, 2010) and thus, it will come as no surprise that there are now tens of thousands of studies and datasets that investigate questions of attitude and stereotype magnitude and variation. Characterizing this wealth of research is no easy task. However, from the perspective of the present work, we classify past studies and datasets into one of four profiles, each with its own contributions: (a) the simultaneous study of both explicit and implicit attitudes and stereotypes; (b) the study of cross-country differences; (c) the study of longitudinal variation in attitudes and stereotypes; and (d) the study of the intersection of these previous features (e.g., both implicit and explicit attitudes compared across countries).

The first, and probably largest, set of studies includes those that investigate both explicit and implicit attitudes or stereotypes, but only in a single country sample and at a single moment in time (for a recent review, see Kurdi & Banaji, 2021). Such studies are typically focused on understanding the nature of individual-level attitudes and stereotypes, as discussed above, revealing insights into topics such as the unique relationships between implicit and explicit attitudes and behaviors (Kurdi et al., 2019) or the unique malleability of implicit and explicit attitudes (Blair, 2002; Gawronski & Bodenhausen, 2006).

A second set of studies includes those that survey multiple countries, but only investigate explicit attitudes and only at a single moment in time. This group includes many one-off social surveys and public polls that seek to characterize how societies differ on explicit social opinions, such as their endorsement of gay rights (e.g., Poushter & Fetterolf, 2019) or support for immigration (Gonzalez-Barrera & Connor, 2019). These studies have made a substantial contribution to our understanding of cross-cultural variation in explicit attitudes.

A third set of studies includes those that survey attitudes over multiple years, but in a single country and only for explicit attitudes. Many country-specific social surveys (such as the General Social Survey in the United States) fall into this group and have provided important insights into societal attitude change, such as increases in US support for gay marriage (e.g., Gallup, 2013; McCarthy, 2020). In short, these first three sets of studies largely investigate one feature in isolation: either implicit and explicit attitudes, or multiple countries, or multiple years of data.

A fourth, and considerably smaller, set of studies includes those that tackle the two-way intersections of these three features (implicit/explicit, multiple countries, multiple years). For instance, a handful of studies have measured both implicit and explicit attitudes across a small set of countries (e.g., China, Canada, Cameroon), revealing systematic patterns of implicit ingroup preferences across multiple cultures (Qian et al., 2016; Steele et al., 2018). However, such studies include data from only a single moment in time. On the other hand, large-scale opinion polls such as the World Values Survey or the European Values Study examine social opinions across multiple countries over multiple years, revealing discoveries such as the widespread, cross-country decrease in religiosity (Abramson & Inglehart, 1995; Li & Bond, 2010); yet these surveys, too, remain limited to explicit social attitudes.

Finally, the US Project Implicit website dataset (https://implicit.harvard.edu), hereafter referred to as PI:US (reviewed in Nosek et al., 2007; Ratliff et al., 2021), provides data on both implicit and explicit attitudes and stereotypes collected over multiple years, but it is limited in its focus on a single country, with the majority of PI:US data coming from English-speaking participants residing in the United States. PI:US also includes a small set of international participants, which has been helpful for initial studies of the correlates of implicit and explicit attitudes and stereotypes across cultures (Ackerman & Chopik, 2021; Lewis & Lupyan, 2020; Nosek et al., 2009). Nevertheless, the international samples included in PI:US are relatively small and biased toward international citizens who speak English and are self-selecting into a US-centric website.Footnote 2

Unique advantages of the PI:International dataset and future questions

Ultimately, what remains needed for comprehensive studies of societal attitudes is a dataset that sits at the intersection of three data features: (1) both indirect and direct measures of attitudes and stereotypes, given that such measures are known to have unique relations to behaviors, patterns of malleability, and more; (2) across multiple countries, given that countries are known to vary in attitudes and stereotypes; and (3) across multiple years, given that attitudes and stereotypes are known to be capable of change over time. As described above, the PI:International dataset uniquely satisfies all three criteria, with both direct and indirect measures of attitudes and stereotypes from 34 countries collected continuously over 11 years. The intersection of these data will, for the first time, equip researchers to investigate (or control for) the interaction of attitude and stereotype measurement type, country, and time.

Although we leave elaboration on avenues for future research to the General discussion, we highlight here a few questions newly facilitated by the PI:International dataset. For instance, with PI:International data researchers could test whether some clusters of countries reveal systematically higher (or lower) mean levels in attitudes (Bergh & Akrami, 2016; Meeusen & Kern, 2016); whether those spatial clusters of “generalized bias” are similar for both implicit and explicit attitudes; and even whether the countries in those clusters have changed over time. Additionally, researchers could investigate how the variability within countries (such as the variability across states or counties in a country; e.g., Green et al., 2005; Hehman et al., 2021; Hester et al., 2021) compares to the variability across countries and, again, whether such within- versus across-country variability differs depending on the type of measurement. Finally, researchers may also be interested in explaining the patterns of change across time for implicit versus explicit attitudes (Charlesworth & Banaji, 2019) by investigating how change differs across countries and whether such country-level differences in change can be predicted by ecological and social factors (Jackson et al., 2019). In short, the PI:International dataset meets the evolving data demands for contemporary research on implicit and explicit attitudes and stereotypes across place and time.

Limitations of the PI:International dataset and potential remedies

Despite these potential contributions, PI:International remains limited in at least three ways. First, the PI:International data are obtained from a non-random sample of volunteer participants who either are instructed to visit the website (e.g., for school or work requirements) or arrive at the website through self-directed searches and word-of-mouth. This largely self-selected convenience sample is therefore not representative of each country's population and is often skewed toward younger, more liberal, and more female respondents than the population (see Sample Demographics, below). Moreover, the representativeness of samples may differ across countries: Countries that have contributed larger amounts of data (e.g., the UK and Canada) may have relatively more representative samples (or, at least, samples that can be corrected for non-representativeness; see SM) than countries that have contributed smaller amounts of data (e.g., Romania and Serbia).

Second, representativeness may be further undermined by country-level differences in Internet access (e.g., in 2014, 96% of individuals in Denmark used the Internet, but only 49% in China did; Roser et al., 2015). High Internet-use countries may be more likely to have relatively representative samples visiting the PI:International websites, whereas low Internet-use countries may have samples biased toward more affluent, urban, or educated respondents. We therefore suggest that researchers interpret the results with caution regarding non-representativeness and, preferably, use methods such as raking and weighting to statistically correct their specific country samples of interest.

To promote the use of these methods, we provide sample code and results for one country (United Kingdom) to illustrate how such raking and weighting can be implemented (see SM). The re-weighted results show that re-weighting to the true population demographics slightly increases the mean estimates of implicit and explicit attitudes (e.g., the mean IAT D score increases from D = 0.34 to D = 0.36), but the direction and significance of results remain consistent. Thus, while re-weighting will help guard against concerns about non-representativeness, these initial investigations provide some confidence in the robustness of the current manuscript's conclusions.
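The SM contains the authors' own UK raking code, which is not reproduced here. Purely as an illustration of the general technique, the sketch below implements generic raking (iterative proportional fitting) in Python: weights are repeatedly rescaled so that the weighted sample matches known population shares on each demographic variable in turn. The variable names (`gender`, `age_group`), the population shares, and the D scores are invented placeholders, not PI:International data or census figures.

```python
from collections import defaultdict


def rake(records, targets, max_iter=100, tol=1e-8):
    """Return one weight per record so that weighted sample shares match
    the population shares in `targets` for every listed variable.

    Assumes every target category occurs in the sample and that each
    variable's shares sum to 1.
    """
    weights = [1.0] * len(records)
    for _ in range(max_iter):
        max_change = 0.0
        for var, shares in targets.items():
            # Current weighted total for each category of this variable.
            totals = defaultdict(float)
            for rec, w in zip(records, weights):
                totals[rec[var]] += w
            grand = sum(totals.values())
            # Rescale records so each category reaches its target share.
            for i, rec in enumerate(records):
                factor = shares[rec[var]] * grand / totals[rec[var]]
                new_w = weights[i] * factor
                max_change = max(max_change, abs(new_w - weights[i]))
                weights[i] = new_w
        if max_change < tol:  # weights have stabilized
            break
    return weights


def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical respondents and hypothetical population margins.
sample = [
    {"gender": "female", "age_group": "18-29", "d": 0.30},
    {"gender": "female", "age_group": "18-29", "d": 0.25},
    {"gender": "female", "age_group": "30+", "d": 0.40},
    {"gender": "male", "age_group": "18-29", "d": 0.35},
    {"gender": "male", "age_group": "30+", "d": 0.50},
]
targets = {
    "gender": {"female": 0.5, "male": 0.5},
    "age_group": {"18-29": 0.3, "30+": 0.7},
}

w = rake(sample, targets)
print("unweighted:", round(sum(r["d"] for r in sample) / len(sample), 3))
print("raked:", round(weighted_mean([r["d"] for r in sample], w), 3))
```

Here the hypothetical sample over-represents young women, so raking shifts weight toward older and male respondents; with real data, the targets would come from official population statistics for the country of interest.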

Third and finally, the PI:International dataset, although capturing a large number of countries and languages across nearly all continents, is far from providing truly global coverage. The most glaring gap is that the dataset includes only one African country (South Africa). Given the insights that can be gained from studying a wide diversity of countries beyond the typical WEIRD samples of psychology (Henrich et al., 2010), future work would benefit from generating collaborations across these missing countries to create PI:International websites in many more local languages and cultures.

The remainder of this paper is organized as follows. First, we describe the Project Implicit:International websites in greater detail, including the data source, stimuli, and materials for each of the seven included tasks, as well as data archiving procedures. Second, we report the characteristics of the PI:International data sample, including sample sizes and demographics across tasks and countries. Third, we examine the reliability and validity of the key measures, including internal consistency (split-half reliability), convergent validity (explicit–implicit correlations), and known groups validity of the IAT. In this section, we also provide an initial descriptive report of the data, including the means and geographic variation of implicit and explicit attitudes and stereotypes across countries and tasks. We close with a deeper discussion of the future research directions uniquely facilitated by this new dataset.

Method

Data source

Data were drawn from 34 individual demonstration websites of Project Implicit (PI), with two websites (Canada and Switzerland) offering tests in two languages (English/French, and French/German, respectively), thus resulting in 36 unique country/language sources. Each country’s data were collected on its unique website, written in that country’s language(s). These websites can be accessed from a drop-down list at the main landing page of https://implicit.harvard.edu.Footnote 3

All data were collected between January 1, 2009 and December 31, 2019, a timeframe chosen to ensure that all key measures were consistent across countries (before 2009, direct attitude and stereotype measures as well as demographic measures had frequently changed in coding schemes) and that the maximum number of countries could be retained with consistent data (after 2019 some low-activity websites were taken down).Footnote 4 The websites were created between the years 2007 and 2009 and have since been continuously maintained by international collaborators from each of the countries and by Project Implicit staff.

Visitors to the websites can choose a topic from a list of seven main tasks: six attitude tests of valenced associations – race (White/Black–good/bad), age (Young/Old–good/bad), sexuality (Straight/Gay or Straight/Lesbian–good/bad), skin tone (Light skin/Dark skin–good/bad), body weight (Thin/Fat–good/bad), and nationality (Own country/USA–good/bad) – as well as one stereotype test of gender–science associations (Male/Female–science/humanities). Some countries have additional tasks unique to them, such as an ethnicity task in Israel (attitudes toward Ashkenazi relative to Sephardi Jews), a region task in Germany (attitudes toward West Germany relative to East Germany), and a caste task in India (attitudes toward the Forward Caste relative to the Scheduled Castes). However, to facilitate consistent cross-country comparisons, the PI:International dataset focuses only on the seven main tasks listed above. Thus, the full set comprises 252 task-by-country datasets (i.e., seven tasks across 36 country/language sources).

Data collection was approved by the Institutional Review Board for Social and Behavioral Sciences at the University of Virginia (protocol number: 2186). All participants provided informed consent upon visiting the website. Raw data were de-identified (i.e., postal codes and IP addresses were removed) before pre-processing and analyses; all results reported in the current manuscript constitute secondary analyses of de-identified data.

Measures

Implicit attitudes and stereotypes

Implicit attitudes and stereotypes were measured using the Implicit Association Test (IAT; Greenwald et al., 1998). The IAT remains the most common indirect measure of attitudes and stereotypes (Kurdi & Banaji, 2021). In the IAT, participants use two response keys to sort two sets of category stimuli (e.g., White people and Black people) and two sets of attribute stimuli (e.g., "good" and "bad" on attitude tests) to the left or right. All IATs in the PI:International dataset follow the standard seven-block design (Greenwald et al., 1998).

In the first block (20 trials), participants practice categorizing a single set of category stimuli (e.g., White people to the left, Black people to the right), and in the second block (20 trials), participants practice categorizing a single set of attribute stimuli (e.g., good words to the left, bad words to the right). In the third (20 trials) and fourth (40 trials) blocks, participants complete a paired sorting of both category and attribute stimuli (e.g., White + Good to the left, Black + Bad to the right). In the fifth block (40 trials), the location of the categories is reversed (e.g., now White people are sorted to the right, Black people to the left) and participants practice this new location. Finally, in the sixth (20 trials) and seventh (40 trials) blocks, participants complete the contrasting paired sorting of category and attribute stimuli (e.g., White + Bad to the left, Black + Good to the right). On each trial, participants who give an incorrect response see a red X and must press the other (i.e., the correct) response key to move on to the next trial.

The dependent variable is the reaction time (and accuracy) for participants to categorize stimuli in the congruent block in which the pairings are in line with prevalent social attitudes or stereotypes (e.g., White + good/Black + bad) versus the incongruent block in which the pairings are reversed (e.g., White + bad/Black + good). The assumption is that categorizations should be easier, and hence faster and more accurate, when the category and attribute share an association in participants’ memory. The order of the two blocks (congruent first vs. incongruent first) and the location of the categories and attributes (left vs. right) are randomized across participants.
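To make the block layout above concrete, the following Python sketch encodes the seven blocks and their trial counts as given in the text, and randomizes, per participant, whether the congruent pairing occupies Blocks 3–4 or Blocks 6–7. The data layout and the function name `assign_condition` are illustrative assumptions, not Project Implicit's software; randomization of left/right key assignment is omitted for brevity.

```python
import random

# (block number, number of trials, what is sorted), per the text above.
SEVEN_BLOCKS = [
    (1, 20, "category practice"),
    (2, 20, "attribute practice"),
    (3, 20, "combined sorting, first pairing (practice)"),
    (4, 40, "combined sorting, first pairing (test)"),
    (5, 40, "category practice, sides reversed"),
    (6, 20, "combined sorting, second pairing (practice)"),
    (7, 40, "combined sorting, second pairing (test)"),
]

# Blocks whose latencies enter the congruent vs. incongruent comparison.
CRITICAL_BLOCKS = (3, 4, 6, 7)


def assign_condition(rng):
    """Randomly decide whether the congruent pairing
    (e.g., White + good / Black + bad) comes first or second."""
    congruent_first = rng.random() < 0.5
    congruent = (3, 4) if congruent_first else (6, 7)
    incongruent = (6, 7) if congruent_first else (3, 4)
    return {"congruent_blocks": congruent, "incongruent_blocks": incongruent}


total_trials = sum(n for _, n, _ in SEVEN_BLOCKS)
critical_trials = sum(n for b, n, _ in SEVEN_BLOCKS if b in CRITICAL_BLOCKS)
print(total_trials, critical_trials)  # 200 120
print(assign_condition(random.Random(1)))
```

Under this layout, each participant sorts 200 stimuli, 120 of which fall in the critical paired blocks.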

Table 1 provides example stimuli from the Italy website; all stimuli for specific countries (and in all languages) are available on the PI:International OSF archive, and a table of hyperlinks to the stimuli folders for each of the 252 country-by-task datasets is provided in Supplemental Materials. Across all six attitude IATs, the stimuli for the attributes were positively valenced words and negatively valenced words; for the gender–science stereotype IAT, the attribute stimuli were words related to science and humanities. The stimuli for the categories were: faces of people from the two categories (for the Race, Age, Skin tone, and Body WeightFootnote 5 tasks); words and images referring to straight and gay or lesbian couples (for the Sexuality task), with gay or lesbian stimuli randomized between participantsFootnote 6; images related to the participant’s home country and to USA (for the Nationality task); and words related to men (e.g., man) and women (e.g., woman) (for the Gender–Science task).

Explicit attitudes

Explicit attitudes for the six attitude tasks (i.e., all tasks except the Gender–Science task) were measured with two types of direct (self-report) measures: a seven-point Likert item and two 11-point feeling thermometers. For the Likert item, participants were asked to report their preference between the two categories as follows: Which statement best describes you?, on a scale from 1 (I strongly prefer NAME OF STIGMATIZED CATEGORY [e.g., Black people] to NAME OF DOMINANT CATEGORY [e.g., White people]) to 7 (I strongly prefer NAME OF DOMINANT CATEGORY to NAME OF STIGMATIZED CATEGORY).

Participants were also asked to answer two 11-point feeling thermometers (one for each of the group categories) with the wording as follows: Please rate how warm or cold you feel toward the following groups, with the scale anchored at −5 (very cold), 0 (neutral), and +5 (very warm). To combine the two 11-point scales, we reverse-coded one of the two scales by flipping its sign (e.g., the Black feeling thermometer was reverse-coded such that +5 now indicated very cold feelings toward Black people and −5 now indicated very warm feelings toward Black people). We then summed the two scales to create a 21-point relative feeling thermometer, ranging from −10 (e.g., very cold to White people and very warm to Black people) to 0 (e.g., neutral to both) to +10 (e.g., very warm to White people and very cold to Black people). In short, on both the single Likert item and the combined feeling thermometer, higher scores indicate stronger relative self-reported preferences for the typically preferred (dominant) group (e.g., White, young, straight) over the typically dispreferred (stigmatized) group (e.g., Black, old, gay).
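The reverse-code-and-sum step can be written in a few lines. The following Python sketch assumes both thermometer ratings are already numeric on the −5 to +5 scale; the function name `relative_thermometer` is hypothetical and not taken from the project's processing scripts.

```python
def relative_thermometer(dominant_warmth, stigmatized_warmth):
    """Combine two 11-point thermometers (-5 very cold ... +5 very warm)
    into the 21-point relative score (-10 ... +10) described above.

    The stigmatized-group rating is reverse-coded (sign flipped) and added
    to the dominant-group rating, so higher scores mean relatively warmer
    feelings toward the dominant group.
    """
    for rating in (dominant_warmth, stigmatized_warmth):
        if not -5 <= rating <= 5:
            raise ValueError("thermometer ratings must lie in [-5, 5]")
    return dominant_warmth + (-stigmatized_warmth)


print(relative_thermometer(5, -5))  # 10: very warm to White, very cold to Black
print(relative_thermometer(0, 0))   # 0: neutral to both groups
print(relative_thermometer(2, 4))   # -2: somewhat warmer toward Black people
```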

Explicit attitudes were also collected for the Gender–Science stereotype task, but participants were asked to report their attitudes toward the attributes science and humanities on two separate five-point Likert scales anchored with −2 (Strongly dislike), 0 (neutral), and +2 (Strongly like). These two five-point scales were combined using the same reverse-coded summing process as above. That is, we reverse-coded one of the scales (i.e., +2 indicated strongly dislike humanities, and −2 indicated strongly like humanities) and then combined the two scales to create a nine-point relative self-reported attitude score, ranging from −4 (i.e., strongly like humanities and strongly dislike science) to +4 (i.e., strongly dislike humanities and strongly like science). Thus, higher scores indicate greater relative preference for science over humanities.

Explicit stereotypes

Participants who completed the Gender–Science stereotype task were asked to report (on two separate seven-point Likert scales) how much they associated science and humanities with masculinity and femininity (e.g., Please rate how much you associate the following domains with males or females: Science [Humanities]), on a scale ranging from −3 (Strongly female) to +3 (Strongly male). As above, the scales were combined by reverse-coding and summing: the humanities scale was reverse-coded such that −3 indicated a strong male–humanities association and +3 indicated a strong female–humanities association; the two scales were then combined to create a 13-point relative self-reported stereotype score, ranging from −6 (i.e., strong male–humanities/female–science association) to +6 (i.e., strong female–humanities/male–science association). Thus, higher scores indicate stronger self-reported beliefs that science is relatively more male and the humanities are relatively more female.
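Both Gender–Science composites described in the two preceding paragraphs follow the same reverse-code-and-sum logic, only on different scale widths. The Python sketch below is a minimal illustration under the assumption that responses are already numeric on symmetric scales; the helper `reverse_and_sum` and the two wrapper names are hypothetical.

```python
def reverse_and_sum(keep, reverse, half_range):
    """Reverse-code `reverse` on a symmetric scale [-half_range, half_range]
    and add it to `keep`; the result lies in [-2*half_range, 2*half_range]."""
    for rating in (keep, reverse):
        if not -half_range <= rating <= half_range:
            raise ValueError(f"ratings must lie in [{-half_range}, {half_range}]")
    return keep - reverse


def science_attitude(science_liking, humanities_liking):
    # Five-point liking scales (-2 strongly dislike ... +2 strongly like);
    # result runs from -4 to +4, higher = relative preference for science.
    return reverse_and_sum(science_liking, humanities_liking, 2)


def gender_science_stereotype(science_gender, humanities_gender):
    # Seven-point scales (-3 strongly female ... +3 strongly male);
    # result runs from -6 to +6, higher = male-science/female-humanities.
    return reverse_and_sum(science_gender, humanities_gender, 3)


print(science_attitude(2, -2))            # 4: likes science, dislikes humanities
print(gender_science_stereotype(3, -3))   # 6: science male, humanities female
print(gender_science_stereotype(1, 1))    # 0: both domains rated equally male
```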

Additional measures

Each of the seven tasks also included some unique self-report measures of attitudes, general beliefs, and demographic items. For example, participants completing the Race and Skin tone tasks also responded to shortened versions of the Social Dominance Orientation scale (Pratto et al., 1994) and the Right-Wing Authoritarianism scale (Altemeyer, 1981), and those completing the Age task also responded to belief questions such as "If you could choose, what age would you be?" and "How old do you feel?" To maintain consistency in comparisons across tasks and countries, we do not report on those additional measures here. However, all measures are available in the cleaned data on the OSF archive.

Procedure

All participants were volunteers who navigated to the Project Implicit demonstration website through self-directed searches and word-of-mouth, or from assignments for work or school. Participants arrived at their country-specific website either by selecting their country from the drop-down list at the main Project Implicit landing page (http://implicit.harvard.edu) or by following a direct link. After consenting to participate, they selected one of the seven included tasks (Race, Skin tone, Age, Sexuality, Nationality, Body Weight, or Gender–Science, with the task labels translated into the country's native language). Participants then completed the measures of explicit attitudes or stereotypes (Likert items and feeling thermometers), the measure of implicit attitudes or stereotypes (Implicit Association Test; IAT), and a set of demographic items. The order of the three sets of measures was randomized. Finally, participants were debriefed about the purpose and design of the IAT and received feedback on their approximate IAT score.

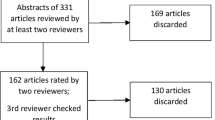

Data preparation

Data from the 34 countries (36 country/language sources) were divided among four of the authors. Each author processed the raw data from nine sources using a generic processing script (available on OSF) to clean the data and calculate the IAT D scores (see below for additional details), the combined self-report measures (described above), and the demographic variables (see below). The processed data include (1) a wide-format file, with a single row of summary data for each participant, and (2) a long-format file, with the trial-level IAT data from each participant. All codebooks and data were then archived on OSF using an automated archiving process.

IAT D score preparation

Following the recommendations of Greenwald, Nosek, and Banaji (2003), we excluded data from participants with incomplete IAT data (i.e., those who did not complete all trials), as well as from participants with more than 10% of fast trials (< 300 ms) on the IAT. Raw IAT data were then processed to produce IAT D scores using both the D2 and D6 algorithms (Greenwald et al., 2003), implemented in the cleanIAT function in the IAT R package (version 0.3; Martin, 2016).
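The two participant-level exclusion rules above can be expressed as a simple filter. The authors applied them through the IAT R package; the Python function below is only an illustrative re-expression, not that package's code. `n_expected` (the number of trials in a complete IAT) is a hypothetical parameter, and which trials are passed in for the fast-trial count is left to the caller.

```python
def keep_participant(latencies_ms, n_expected, fast_ms=300, max_fast_share=0.10):
    """Return True if a participant passes both exclusion rules:
    (1) the IAT is complete, and
    (2) no more than 10% of trials are faster than 300 ms.

    `latencies_ms` holds one latency (in ms) per completed trial.
    """
    if len(latencies_ms) < n_expected:  # incomplete IAT
        return False
    n_fast = sum(1 for rt in latencies_ms if rt < fast_ms)
    return n_fast / len(latencies_ms) <= max_fast_share


print(keep_participant([650] * 120, n_expected=120))               # True
print(keep_participant([250] * 13 + [650] * 107, n_expected=120))  # False: ~11% fast
print(keep_participant([650] * 100, n_expected=120))               # False: incomplete
```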

As discussed briefly above, the IAT D score is computed by subtracting the mean latency (reaction time) of trials in the congruent blocks from the mean latency of trials in the incongruent blocks and dividing this difference by the pooled (inclusive) standard deviation of all trials in those critical blocks. In the Greenwald et al. (2003) algorithm, this ratio is computed separately for the practice (Blocks 3 and 6) and test (Blocks 4 and 7) block pairs, and the two ratios are averaged. The main results reported in this paper rely on the D2 algorithm, which uses mean latencies from all trials (regardless of whether participants made an error)Footnote 7 and excludes trials faster than 400 ms and slower than 10,000 ms (in accordance with the algorithms).Footnote 8 Positive IAT D scores reflect the socially typical association, that is, an association of positivity with the dominant/higher-status social group and an association of negativity with the stigmatized/lower-status social group (e.g., White people + Good/Black people + Bad).
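As a rough illustration of the D2 computation, the Python sketch below follows the published Greenwald et al. (2003) steps: drop trials outside 400–10,000 ms, compute the difference of block means separately for the practice and test block pairs, divide each difference by the inclusive SD of that pair, and average the two ratios. It is not the `cleanIAT` function the authors used. The input format, a list of `(block, latency_ms, congruent)` tuples, is an assumption, and latencies are taken to already include error-correction time (no error penalty, as in D2).

```python
from statistics import mean, stdev


def d2_score(trials):
    """D2 score from (block, latency_ms, congruent) tuples in Blocks 3, 4, 6, 7.
    Positive values mean faster responding in the congruent pairing."""
    # D2 trial exclusions: drop latencies below 400 ms or above 10,000 ms.
    kept = [(b, rt, c) for b, rt, c in trials if 400 <= rt <= 10_000]
    ratios = []
    for pair in ((3, 6), (4, 7)):  # practice pair, then test pair
        in_pair = [(rt, c) for b, rt, c in kept if b in pair]
        congruent = [rt for rt, c in in_pair if c]
        incongruent = [rt for rt, c in in_pair if not c]
        pooled_sd = stdev([rt for rt, _ in in_pair])  # inclusive SD of the pair
        ratios.append((mean(incongruent) - mean(congruent)) / pooled_sd)
    return mean(ratios)


# Synthetic participant: congruent pairing in Blocks 3-4, incongruent in 6-7.
demo = (
    [(3, rt, True) for rt in [550, 650] * 10]
    + [(6, rt, False) for rt in [750, 850] * 10]
    + [(4, rt, True) for rt in [550, 650] * 20]
    + [(7, rt, False) for rt in [750, 850] * 20]
    + [(4, 250, True)]  # faster than 400 ms, so dropped before scoring
)
print(round(d2_score(demo), 2))  # 1.77
```

Swapping which pairing is labeled congruent flips the sign of the score, consistent with the convention that positive D reflects the socially typical association.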

Demographic variable preparation

All websites recorded the participants’ age, number of previously taken IATs, gender, ethnicity, country of citizenship, country of residence, education level, education major, occupation, political identity (conservative/liberal), religious affiliation, religiosity, and, in some cases, participants’ race. The responses to these questions, if not numeric, were replaced with labels written in English at the stage of data coding to facilitate cross-country comparisons. However, we emphasize that the responses given by participants were always in their country’s native language (all language-specific response options are listed in the codebooks on the OSF).

In some cases (e.g., for the race and ethnicity variables), the number of factor levels and the factor labels for demographic variables vary between countries because different groups and labels are relevant to the local cultural context. For instance, in the Netherlands, participants were able to select from among seven racial/ethnic groups including, for example, “Nederlands,” “Turks,” “Surinaams,” and “Antilliaans” (roughly translated as Dutch, Turkish, Surinamese, and Antillean). In contrast, in Hungary, participants were able to select from among six racial groups including, for example, “Európai,” “Ázsiai,” “Negrid,” and “Mulatt” (European, Asian, African, and Mixed).

Analysis strategy for data quality (internal consistency, convergent validity, known groups validity)

Internal consistency (split-half reliability)

Split-half reliability was computed as a measure of data quality and internal consistency for the IAT D scores.Footnote 9 Conventionally, split-half reliability is deemed “acceptable” at values of .60 to .70, “good” at values of .70 to .80, and “very good” at .80 or above (Hulin et al., 2001). Here, we calculate split-half reliability using the trial-level IAT data (available on OSF), which provide the raw latencies for each trial (e.g., each categorization of an image/word to the left or right). Due to the large size of the trial-level data when analyzed across all 252 task-by-country samples, we randomly selected a subset of 500 participants for each task-by-country dataset or, if the dataset contained fewer than 500 participants, we used the entire task-by-country dataset. For this subset, we then used the D2 algorithm to calculate each participant’s (1) IAT D score for odd-numbered trials in congruent vs. incongruent blocks, and (2) IAT D score for even-numbered trials in congruent vs. incongruent blocks. Split-half reliability was computed as the correlation between the two IAT D scores (i.e., the correlation between odd IAT D scores and even IAT D scores).
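The odd/even procedure can be sketched as follows. This is a minimal illustration, not the authors' script. It assumes that trials are numbered separately within each block type (so odd-numbered trials sit at list indices 0, 2, 4, ...) and it uses the same simplified single-ratio D score sketched earlier rather than the full D2 algorithm. No Spearman–Brown correction is applied, because the text describes the reliability as the raw correlation. All function names are hypothetical.

```python
import random
from statistics import mean, stdev
from typing import List, Sequence, Tuple

Participant = Tuple[List[float], List[float]]  # (congruent latencies, incongruent latencies)


def d_score(con: Sequence[float], inc: Sequence[float]) -> float:
    """Simplified D: keep 400-10,000 ms trials, divide mean difference by combined SD."""
    kept_con = [rt for rt in con if 400 <= rt <= 10_000]
    kept_inc = [rt for rt in inc if 400 <= rt <= 10_000]
    return (mean(kept_inc) - mean(kept_con)) / stdev(kept_con + kept_inc)


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def subsample(participants: List[Participant], max_n: int = 500, seed: int = 0) -> List[Participant]:
    """Random subset of up to max_n participants (all of them if there are fewer)."""
    if len(participants) <= max_n:
        return list(participants)
    return random.Random(seed).sample(participants, max_n)


def split_half_reliability(participants: List[Participant]) -> float:
    odd, even = [], []
    for con, inc in participants:
        # Odd-numbered trials (1, 3, 5, ...) are at indices 0, 2, 4, ...
        odd.append(d_score(con[0::2], inc[0::2]))
        even.append(d_score(con[1::2], inc[1::2]))
    return pearson_r(odd, even)


# Hypothetical simulated data: 800 participants with varying true effects
rng = random.Random(1)
sim = []
for _ in range(800):
    effect = rng.uniform(0, 300)  # hypothetical per-person effect in ms
    sim.append(([rng.gauss(700, 120) for _ in range(40)],
                [rng.gauss(700 + effect, 120) for _ in range(40)]))
print(round(split_half_reliability(subsample(sim)), 2))
```

Subsampling before scoring mirrors the 500-participant cap described above and keeps the computation tractable when repeated over hundreds of datasets.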

Convergent validity

As a second test of data quality, we examined whether the current data reveal the expected convergent validity by calculating correlations between implicit and explicit measures (Nosek et al., 2005). Since the introduction of implicit measures, mounting evidence has shown that implicit and explicit attitudes/stereotypes are separate but related constructs (Bar-Anan & Nosek, 2014; Bar-Anan & Vianello, 2018; Cunningham et al., 2001). For instance, early multitrait–multimethod investigations of explicit and implicit measures found that a correlated two-factor solution provided the best fit to data, indicating that the measures share some variance (i.e., measure overlapping constructs) but are not redundant with one another (Cunningham et al., 2001).Footnote 10 Given this evidence, if the current data are indeed valid, we expect to observe significant positive implicit–explicit correlations across all country and task datasets.

Known groups validity

As a final investigation of data quality, we examine known groups validity, a form of construct validation in which the measurement instrument reveals expected differences between certain groups (Cronbach & Meehl, 1955; Hattie & Cooksey, 1984). Ample research using the IAT has found theoretically meaningful differences between social groups in their IAT scores (Banse, 2001; Charlesworth & Banaji, 2019; Greenwald et al., 1998). For instance, straight participants tend to show straight–good/gay–bad attitudes on a Sexuality IAT, while gay/lesbian participants show straight–bad/gay–good attitudes (Banse, 2001). Similarly, White Americans tend to show White–good/Black–bad attitudes on a Race IAT, while Black Americans show White–bad/Black–good attitudes (Charlesworth & Banaji, 2019).

Here, we draw on past literature on group differences in IAT scores to establish a priori expectations of known groups validity. For each comparison, we expect participants from the higher status group (e.g., straight) to show higher IAT scores relative to participants from the lower status group (e.g., gay/lesbian; Axt et al., 2014; Dasgupta, 2004; Stern & Axt, 2019). Put another way, we anticipate that the lower status groups will exhibit scores that are lower in bias than the scores of the higher status group, but note that we do not necessarily expect that the lower status group will show pro-ingroup preferences (e.g., pro-gay/anti-straight preferences). The finding of lower, but not necessarily pro-ingroup, IAT scores among lower-status groups is expected because their IAT scores reflect the operation of two opposing forces – on the one hand, positive attitudes toward the participants’ ingroup arise from widespread ingroup preference, but, on the other hand, positive attitudes toward the participants’ outgroup arise from culturally reinforced positive associations with the socially dominant and powerful group. By contrast, ingroup preference and preference for the dominant group work together to yield higher IAT scores among members of high-status groups.

We test known group differences for demographic variables that were consistently collected across countries (i.e., demographics that use the same coding schemes across countries). Specifically, we examined the following five known-groups differences in implicit attitudes and stereotypes: (1) straight versus gay/lesbian respondents for the Sexuality IAT (with straight respondents expected to show higher IAT scores); (2) light-skin versus dark-skin respondents for the Skin tone IAT (with light-skin respondents expected to show higher IAT scores); (3) underweight versus overweight respondents for the Body weight task (with underweight respondents expected to show higher IAT scores); (4) male versus female respondents for the Gender–Science task (with male respondents expected to show higher IAT scores); and (5) younger versus older respondents for the Age task (with both groups expected to show similar magnitudes of positive IAT scores). The latter expectation – of no differences between younger and older respondents – may initially appear surprising in light of the above discussion on relative status. However, similar patterns of pro-young/anti-old implicit attitudes across all age groups are the most common pattern previously documented in large online samples (Nosek et al., 2007) and therefore formed the basis for our a priori expectations (but see Chopik & Giasson, 2017; Gonsalkorale et al., 2009).

Respondent race was not included among the tests of known group validity for a number of reasons. First, the coding of race was inconsistent across countries: some countries omitted recording respondent race altogether, while other countries used varying scales and labels to reflect the racial groups in their respective populations (see above for a comparison of Netherlands versus Hungary). Moreover, even if the labels used across different countries were consistent, we note that the meaning of racial group memberships is highly culture-specific (Appiah, 2018; Sidanius & Pratto, 1999), thus making simple cross-country comparisons difficult to interpret. Nevertheless, we did include a test of participants from different skin tone groups given that this variable was uniformly coded across countries and the meaning and importance of light skin versus dark skin is relatively more consistent across countries (e.g., Charles, 2003; Noe-Bustamante et al., 2021) compared to the variables of race and ethnicity.

Overview of data archive structure on OSF

The processed data, together with a codebook, are available on OSF https://osf.io/26pkd/. The data on OSF are organized first by task (seven sub-projects within the main OSF project) and then by country (36 country- and language-specific website sub-projects within each task). Each task-by-country project contains two folders. First, the folder Datasets and codebooks contains a zipped folder (data.zip) with both the wide processed data and the trial-level data, as well as a codebook listing all included variables. Second, the folder Experiment files contains the original study files and stimuli used to run the task on the PI:International website. In addition to the task-by-country projects, the main project also contains two summary folders named Data preprocessing and Data analyses, which contain the necessary R scripts and comma-separated values (CSV) file outputs to process the data and to analyze key variables for this manuscript. To ease the readers’ access to this information, the Supplemental Materials also provide a table with links to each task-by-country project on OSF.

Results

Descriptive statistics and demographic variables

Sample size

The Project Implicit International (PI:International) dataset includes 34 countries (two with bilingual data, for a total of 36 samples) and seven tasks (race, age, sexuality, skin tone, body weight, gender–science, and nationality), yielding 252 task-by-country datasets, collected continuously across 11 years (2009–2019) in the country’s native language(s). The total sample size across all tasks and countries is 2,386,123 respondents. The largest tasks are Sexuality and Race, and the smallest are Skin tone and Nationality (see Table 2). Additionally, by far the largest countries represented are the United Kingdom (total N = 386,600; see Table 3, Fig. 1) and Canada (English site, total N = 323,754), while the smallest are Romania (total N = 4641) and Serbia (total N = 7442). Finally, when sub-setting the data into each of the 252 task-by-country datasets (see OSF archive), the Ns ranged from a minimum of 426 total respondents (Romania Skin-tone task data) to a maximum of 91,624 total respondents (United Kingdom Race data), with an average of 9204 respondents per task-by-country dataset.

Sample demographics

In terms of demographics, the overall dataset is generally young (mean age = 29 years), female (58%), and liberal (42%) or politically neutral (33%; see Table 4), roughly approximating the sample from the Project Implicit US (PI:US) dataset (Charlesworth & Banaji, in press-a). Further demographics (e.g., ethnicity, education level) differed in whether and how they were recorded across countries and thus are not reported in this summary but are available for each country on OSF.

Within each task, the demographic composition followed that seen in the full sample (Table 4): Most tasks revealed samples that were predominantly liberal or politically neutral, young, and female. Nevertheless, when inspecting the individual task-by-country samples, there was more variability across key demographics (e.g., mean age ranged from 22.47 years for the Sexuality test in China to 37.03 years for the Age test in the United Kingdom; female participation ranged from 31.81% for the Nationality test in India to 84.12% for the Sexuality test in Korea; see Table 5). Demographics for the 252 task-by-country samples are available in the summary comma-separated values (CSV) file on OSF.

Although the dominant pattern of a young, liberal, and female sample remained largely consistent across task-by-country samples, future work could benefit from a deeper inspection of differences in sample demographics across countries and across task-by-country samples (e.g., overall participation rates, female participation rates, conservative participation rates) and of the possible reasons for these apparent differences. For instance, differences in the relative participation of older versus younger (or female versus male) respondents could indicate that a given social attitude topic is being more widely attended to and discussed in certain demographic circles (e.g., young social media channels). As such, these differences may be helpful in identifying the demographic groups most attentive to certain social attitudes and thus in anticipating where we might expect greater social change.

As shown in Table 4, all tasks also had a high percentage of respondents reporting residency (and citizenship) of the country in which the website was hosted. Specifically, on average, approximately 71% of respondents who reported their residency were residents of the target country (i.e., the country of the website they visited), 84% of respondents who reported their citizenship were citizens of the target country, and 80% of respondents who reported both their residency and citizenship were indeed both residents and citizens of the target country.Footnote 11 Such high average percentages of residents and citizens suggest that the samples can provide meaningful insights into the attitudes and stereotypes that are embedded in the respective cultural environments.

Data quality: Internal consistency, convergent validity, and known groups validity

Internal consistency (split-half reliability)

In general, the average split-half reliability across all 252 task-by-country datasets was acceptable at r = .68 [range = .52; .80]. The task with the highest split-half reliability was the Sexuality task (Table 6) at r = .76, whereas the Skin tone task had the lowest reliability at r = .63, although even this task showed acceptable internal consistency by the typical standards (see Methods).

Convergent validity (implicit–explicit correlations)

All tasks showed the expected significant and positive correlations between implicit measures (IAT D scores) and explicit measures (self-report Likert items or self-report thermometers, or, in the case of Gender–Science, self-reported stereotype difference scores; Table 6). Additionally, the magnitudes of the implicit–explicit correlations were in line with data from the US website, with the largest correlations observed for the Sexuality task (r = .34 and .40 for thermometers and Likert scales, respectively; Table 6) and the smallest for the Age (r = .11 and .12) and Body Weight tasks (r = .15 and .17); similar variation in correlations is found for the same tasks in the PI:US data (Charlesworth & Banaji, 2019). Notably, positive implicit–explicit correlations were also generally consistent across all 252 task-by-country datasets (see OSF archive for task-by-country summary data). Thus, the PI:International datasets appear to be of sufficient and consistent quality to capture the expected convergent relationships between explicit and implicit attitudes and stereotypes.Footnote 12

Known groups validity

In line with expectations, the Sexuality task revealed the expected known group differences in all countries, with straight respondents showing significantly stronger implicit pro-straight/anti-gay attitudes than gay/lesbian respondents (average between-group Cohen’s d = 1.10; Table 7). Similarly, for the Skin tone task, 31 out of the 36 website samples showed the expected significant differences between light-skinned and dark-skinned respondents (average d = 0.39); and, for the Body Weight task, 25 out of 36 website samples showed the expected differences between underweight and overweight respondents (average d = 0.19; Table 7). That most countries produced results in line with expectations can be taken as an indication of both the data quality and the cross-country generalizability of known demographic differences by sexuality, skin tone, and body weight.
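The between-group effect sizes reported here can be illustrated with the standard pooled-standard-deviation form of Cohen's d. This is a sketch under an assumption: the text does not state which variant of d was used, so the pooled-SD formula below is one common choice rather than the authors' confirmed computation. The function name is hypothetical.

```python
from statistics import mean, variance
from typing import Sequence


def cohens_d_independent(higher: Sequence[float], lower: Sequence[float]) -> float:
    """Cohen's d with a pooled SD: (mean of `higher` - mean of `lower`) / pooled SD.

    `higher` is the group expected to score higher (e.g., straight respondents),
    so a positive d indicates a difference in the expected direction.
    """
    n1, n2 = len(higher), len(lower)
    # Pooled variance weights each group's sample variance by its degrees of freedom
    pooled_var = ((n1 - 1) * variance(higher) + (n2 - 1) * variance(lower)) / (n1 + n2 - 2)
    return (mean(higher) - mean(lower)) / pooled_var ** 0.5


# Hypothetical IAT D scores for two small groups
print(cohens_d_independent([0.6, 0.5, 0.7, 0.4], [0.2, 0.1, 0.3, 0.0]))
```

Under this ordering convention, a negative d means the group expected to score higher actually scored lower. This appears consistent with the negative average values reported below for the Age (younger minus older) and Gender–Science (male minus female) comparisons, although the paper does not spell out its sign convention.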

In contrast, less consistent demographic differences were observed for the Age task, where we found the expected null difference in implicit attitudes between the younger sample (< 20 years of age) and the middle-to-older sample (> 35 years of age)Footnote 13 for only 10 out of 36 website samples (Table 7). Interestingly, all remaining 26 website samples showed significant effects that reflected stronger pro-young/anti-old implicit attitudes among the relatively older sample, with an average Cohen’s d = −0.21 (see also Chopik & Giasson, 2017 for similar findings). This pro-young/anti-old preference among older respondents may reflect a pervasive internalized anti-elderly bias that becomes activated as participants face reminders of their own aging (Levy & Banaji, 2002). However, we also note that stronger biases among older respondents could be due, in part, to age-related differences in executive functions that affect IAT performance (e.g., by limiting the ability of older respondents to inhibit the expression of bias; Gonsalkorale et al., 2009). The inclusion of trial-level data in PI:International will newly enable researchers to test such competing explanations using process modeling.

Finally, the Gender–Science task showed the expected effects (higher IAT scores among male respondents versus female respondents) in only 6 out of 36 website samples (Table 7). Instead, 17 countries showed a significant difference in the opposite direction, with female respondents revealing stronger implicit male–science/female–arts stereotypes than male respondents, and 13 countries showed no overall gender difference, resulting in an average Cohen’s d = −0.13 across countries. Although this unexpected result could signal lower quality data, we argue instead that, given the adequate split-half reliability scores and convergent validity, it is more likely that such unexpected gender differences are real and meaningful effects worth explaining in future work. Indeed, while accounting for country-level mean differences (from PI:US data) has already been tackled in past work (Lewis & Lupyan, 2020; Nosek et al., 2009), the current results motivate future examinations and explanations not only of average differences across countries but also of the within-country variation revealed through such heterogeneous gender differences.

Though most of the hypothesized group differences emerged as expected, we again caution researchers about sample non-representativeness. Specifically, in the current case, there is some ambiguity regarding the demographic (and non-demographic) characteristics of the participants from the higher and lower status groups who decided to complete each of the tasks. Selection biases may impact the two groups in different ways (e.g., in some countries, female participants may skew even more liberal than male participants, and/or female participants may have different motivations for arriving at the website than male participants). Weighting and raking approaches that adjust the data for representativeness across the intersection of demographic variables (e.g., both politics and gender) will help to remedy some of these concerns. As discussed in the Introduction above, we provide an illustration of such a weighting and raking approach for future researchers in the Supplemental Materials. We also emphasize that, at least for these early investigations, the interpretation of results is generally consistent across both weighted and unweighted data, thus providing confidence in the current conclusions.
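Raking (iterative proportional fitting) repeatedly rescales respondent weights so that the weighted sample matches a set of target marginal distributions, one variable at a time, until the weights stabilize. The sketch below illustrates the general technique only. It is not the procedure from the Supplemental Materials. The target proportions are hypothetical placeholders (in practice they would come from population benchmarks such as census data), and the function names are illustrative.

```python
from typing import Dict, List


def rake(rows: List[Dict[str, str]], targets: Dict[str, Dict[str, float]],
         n_iter: int = 100, tol: float = 1e-10) -> List[float]:
    """Iterative proportional fitting of one weight per respondent.

    `targets` maps each variable to its target category proportions (summing to 1).
    Every category observed in `rows` must appear in `targets`.
    """
    weights = [1.0] * len(rows)
    for _ in range(n_iter):
        max_change = 0.0
        for var, target_props in targets.items():
            total = sum(weights)
            # Current weighted total of each category for this variable
            current = {cat: 0.0 for cat in target_props}
            for w, row in zip(weights, rows):
                current[row[var]] += w
            # Rescale so this variable's weighted shares match the targets
            for i, row in enumerate(rows):
                cat = row[var]
                new_w = weights[i] * target_props[cat] * total / current[cat]
                max_change = max(max_change, abs(new_w - weights[i]))
                weights[i] = new_w
        if max_change < tol:  # stop once a full pass barely changes the weights
            break
    return weights


def weighted_share(rows: List[Dict[str, str]], weights: List[float], var: str, cat: str) -> float:
    return sum(w for w, r in zip(weights, rows) if r[var] == cat) / sum(weights)


# Hypothetical sample that over-represents liberal women
sample = (
    [{"gender": "female", "politics": "liberal"}] * 6
    + [{"gender": "female", "politics": "conservative"}] * 2
    + [{"gender": "male", "politics": "liberal"}]
    + [{"gender": "male", "politics": "conservative"}]
)
hypothetical_targets = {
    "gender": {"female": 0.5, "male": 0.5},
    "politics": {"liberal": 0.5, "conservative": 0.5},
}
w = rake(sample, hypothetical_targets)
print(round(weighted_share(sample, w, "gender", "female"), 3))
```

Raking matches each margin but not the full joint distribution, which is why it is convenient when only marginal benchmarks (e.g., separate gender and politics distributions) are available.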

Descriptive results of country-level variation in implicit and explicit attitudes and stereotypes

Having established that the data in PI:International are of sufficient quality to yield the expected internal consistency, convergent validity, and known groups validity, we next summarize the key dependent variables: (1) the overall results for implicit attitudes and stereotypes in each task (combining all countries), together with the countries showing the minimum and maximum implicit (IAT) scores; and (2) the corresponding overall results for explicit attitudes and stereotypes, together with the countries showing the minimum and maximum explicit (self-reported) scores.

Implicit attitudes and stereotypes

All countries showed significant positive IAT D scores on nearly every task. Given that these attitudes and stereotypes were assessed in each country’s native language(s), with samples that were predominantly citizens and residents of the countries, these results provide a particularly strong test of the pervasiveness of implicit attitudes and stereotypes across countries, compared to previous tests using only US-based data (e.g., Nosek et al., 2007). On average, across countries, the strongest implicit effects were observed on the Age IAT, followed, in order, by the Nationality IAT, Body Weight IAT, Gender–Science IAT, Skin tone IAT, Race IAT, and, lastly, the Sexuality IAT (Table 8).

Despite the consistent presence of positive IAT D scores, there was nevertheless variation in the magnitude of implicit attitudes and stereotypes across countries (Fig. 2). The largest ranges were observed on the Sexuality IAT (range = 0.60 IAT D score points) and the Body Weight IAT (range = 0.50 points), and the smallest ranges were observed on the Age (range = 0.19) and Race tasks (range = 0.22). Such differences in country-level variability across tasks may suggest that implicit sexuality and body weight attitudes are more affected by local cultural norms (e.g., the cross-country variation in same-gender marriage laws; Poushter & Kent, 2020); in contrast, implicit race and age attitudes may be more shaped by widely and cross-culturally shared preferences for the (socially dominant) groups of White and young people.

Fig. 2. Country differences in implicit attitudes across six IAT tasks. Y-axes represent Cohen’s d effect sizes from one-sample tests against μ = 0. X-axes list the countries, ranked from left to right in order from strongest to weakest IAT D scores. Error bars represent 95% confidence intervals around Cohen’s d estimates.

However, we also note the caveat that some variation in the magnitude of attitudes between countries could reflect more extreme, outlier estimations for smaller sample-size countries (e.g., Romania, which often appears as the country with either the minimum or maximum estimated attitude). Nevertheless, inspecting the confidence intervals around the Cohen’s d estimates across countries (e.g., Fig. 2) shows that it is not always the country with the largest variance (and smallest sample size) that anchors an extreme end. Moreover, the confidence intervals show that, even in the countries with the smallest amounts of data, the mean appears to be estimated with adequate precision (the CIs do not span more than a few decimal points). Thus, despite variability in sample sizes, it appears possible to interpret the magnitude ranges across countries with some confidence.
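The relationship between sample size and the precision of a one-sample Cohen's d (tested against μ = 0) can be sketched with a common large-sample approximation to its standard error, SE ≈ √(1/n + d²/(2n)). This is an assumption for illustration: the text does not state how its confidence intervals were computed, and exact noncentral-t intervals would differ slightly for small samples. The function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def approx_se_d(d: float, n: int) -> float:
    """Large-sample approximation to the standard error of a one-sample d."""
    return sqrt(1 / n + d ** 2 / (2 * n))


def one_sample_d_ci(scores: Sequence[float], z: float = 1.96) -> Tuple[float, float, float]:
    """Cohen's d against mu = 0 (mean / SD) with an approximate 95% CI."""
    d = mean(scores) / stdev(scores)
    se = approx_se_d(d, len(scores))
    return d, d - z * se, d + z * se


# Half-width of the approximate 95% CI for a hypothetical d = 1.0
for n in (426, 9204, 91_624):
    print(n, round(1.96 * approx_se_d(1.0, n), 3))
```

Because the standard error shrinks with √n, quadrupling a sample halves the interval width. For example, with n = 426 (the smallest task-by-country sample reported above) and a hypothetical d = 1.0, this approximation gives a 95% half-width of about 0.12.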

Explicit attitudes and stereotypes

Having discussed the patterns of variation in implicit attitudes and stereotypes, we next turn to whether similar patterns emerge for explicit attitudes and stereotypes on the same topics. As described in the Method section above, explicit attitudes were assessed using two direct (self-report) measures: (1) a seven-point relative Likert scale and (2) two 11-point (from −5 to +5) feeling thermometers (combined into a 21-point relative preference scale, from −10 to +10). Across task-by-country datasets for the six attitude domains, results on the two direct measures were significantly and positively correlated, r = .73, t(214) = 15.53, p < .001. However, the pattern of results from each direct measure reveals its own nuances across countries and, as such, we report the Likert and thermometer results separately below. Additionally, the results for the one explicit stereotype task (gender–science) are reported separately at the end of the section because they were obtained using entirely different scales.
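The reported test statistic can be checked with the standard t test for a Pearson correlation, t = r√df / √(1 − r²) with df = n − 2. This sketch assumes that the correlation was computed across the 216 task-by-country datasets for the six attitude domains (36 website samples × 6), which is consistent with the reported df = 214 but is not stated explicitly in the text. The function names are illustrative.

```python
from math import sqrt


def t_from_r(r: float, df: int) -> float:
    """t statistic for testing a Pearson correlation against zero (df = n - 2)."""
    return r * sqrt(df) / sqrt(1 - r ** 2)


def r_from_t(t: float, df: int) -> float:
    """Invert t_from_r to recover the correlation implied by a reported t."""
    return t / sqrt(t ** 2 + df)


n_datasets = 36 * 6   # 36 website samples x 6 attitude domains (assumed unit of analysis)
df = n_datasets - 2   # 214, matching the reported t(214)
print(round(t_from_r(0.73, df), 1))
print(round(r_from_t(15.53, df), 3))
```

With the rounded r = .73, the formula gives t ≈ 15.6; conversely, the reported t = 15.53 implies r ≈ .728, which rounds to .73, so the small gap is consistent with rounding of r to two decimals.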

First, for explicit attitudes assessed using seven-point Likert scales, all countries showed significant, positive explicit attitudes for the typically preferred group (e.g., straight, White, young, own country) across every task (see Fig. 3). This result suggests that, much like implicit attitudes, relative explicit attitudes in favor of culturally dominant groups are widespread across countries. There was nevertheless variation across tasks in explicit attitude magnitude: the strongest effects were observed on the Nationality task, followed, in order, by the Body Weight task, Race task, Age task, Skin tone task, and Sexuality task (Table 8). Although this ordering is broadly similar to that observed for implicit attitudes, one topic, age attitudes, showed a notable discrepancy: it revealed the strongest implicit attitudes but only the third-weakest explicit attitudes.

Fig. 3. Country differences in explicit attitudes across six tasks (Likert measures). Y-axes represent Cohen’s d effect sizes from one-sample tests against μ = 0, using seven-point Likert scales (with 0 indicating neutral attitudes). X-axes list the countries, ranked from left to right in order from strongest to weakest explicit attitudes. Error bars represent 95% confidence interval limits around Cohen’s d estimates.

Turning next to the thermometer scales, the strongest effects were again observed in the Nationality task, followed by the Body Weight task, Sexuality task, Skin tone task, Race task, and, lastly, the Age task. Here again, the most notable difference between implicit and explicit attitudes was on the Age task, perhaps suggesting that age attitudes are characterized by a particularly strong dissociation between direct and indirect measures. The thermometer scales also revealed another unique finding: Unlike the IAT scores or self-report Likert scales, most tasks had at least a handful of countries that expressed warmth in favor of the typically negatively evaluated group (e.g., eight countries indicated greater relative warmth toward older people over younger people, and four countries indicated greater relative warmth toward Black people over White people; Fig. 4).

Fig. 4. Country differences in explicit attitudes across six tasks (thermometer measures). Y-axes represent Cohen’s d effect sizes from one-sample tests against μ = 0, from 21-point combined thermometer scales (with 0 indicating neutral warmth/coldness toward both groups). X-axes list the countries, ranked from left to right in order from strongest to weakest explicit attitudes. Error bars represent 95% confidence interval limits around Cohen’s d estimates.

Thermometers also showed overall lower average effect sizes than the other measures (mean Cohen’s d = 0.99 for the IAT, 0.68 for the Likert scale, and 0.39 for the thermometer scales). As has been argued elsewhere, non-relative (or exemplar-based) measures, such as the thermometer scales used here, may be less likely to reveal strong attitudes (e.g., Williams & Steele, 2017). Whether the true degree of attitudes is underestimated by the non-relative measures or overestimated by the relative measures remains an open question for future research. Alternatively, it is conceivable that the two types of measures capture related, but not fully identical, constructs that genuinely differ in their mean levels in the population.

Finally, for the Gender–Science task, explicit stereotypes were assessed using two measures: a combined Likert measure indexing the respondent’s stereotypes about the associations of science with male and humanities with female; and a combined Likert measure probing the respondent’s attitudes toward science relative to humanities. Results from the direct stereotype measure indicated that all countries showed a significant explicit association of science with male and humanities with female. In contrast, results from the direct attitude measure revealed that half of the countries showed a preference for humanities over science (indicated by negative scores; Fig. 5), while the other half of countries showed a preference for science over humanities (indicated by positive scores). Thus, as would be expected, results from the direct and indirect measures of gender–science stereotypes are more closely aligned than the results from a direct measure of attitudes and an indirect measure of stereotypes (Fig. 5).

Fig. 5. Country differences in implicit and explicit Gender–Science stereotypes. Y-axes represent Cohen’s d effect sizes from one-sample tests against μ = 0 for Implicit Association Test D scores (panel 1), explicit attitudes toward science and humanities measured from ten-point combined Likert scales (with 0 indicating neutral attitudes toward both domains; panel 2), and explicit stereotypes associating science with men and arts with women from 14-point combined Likert scales (with 0 indicating neutral stereotypes; panel 3). X-axes list the countries, ranked from left to right in order from strongest to weakest attitudes and stereotypes. Error bars represent 95% confidence interval limits around Cohen’s d estimates.

General discussion

In this paper, we introduced the PI:International dataset, with over 2.3 million tests of explicit and implicit social group attitudes and stereotypes toward seven social group domains (race, skin tone, body weight, sexuality, age, nationality, and gender–science), collected continuously over 11 years (2009–2019) from 34 countries (using 36 country-specific websites in the country’s native languages). PI:International is distinct from past research in providing an intersection of three key data features: (1) both direct and indirect measures of seven attitudes and stereotypes, (2) measured across multiple countries, and (3) measured continuously across 11 years. Given the known differences in attitudes and stereotypes across measurement types (e.g., Kurdi & Banaji, 2021), countries (e.g., Poushter & Kent, 2020), and time (Charlesworth & Banaji, 2019), a dataset that enables researchers to comprehensively examine (or control for) the interaction of these features will offer unique benefits.

The analyses reported above suggest that the PI:International dataset performs well on tests of data quality, supporting its usefulness for future research. Internal consistency of implicit attitude and stereotype scores was acceptable both overall and within each task. Satisfactory validity was also evident from tests of convergent validity (implicit–explicit correlations), with significant positive correlations found both overall in each task and in each of the 252 task-by-country datasets.

We also investigated known groups validity for five group comparisons (sexual orientation, skin tone, body weight, age, and gender), with some comparisons revealing the anticipated patterns and others providing more nuanced results. Specifically, expected group differences were largely observed on the Sexuality, Skin tone, and Body Weight tasks, such that members of typically stigmatized groups (i.e., self-identified gay, dark-skinned, and fat participants) exhibited lower levels of bias than members of socially dominant groups (i.e., self-identified straight, light-skinned, and thin participants). However, both the Age and Gender–Science tasks diverged from expected known groups effects. Younger and older respondents differed in their implicit anti-old/pro-young attitudes in most countries (unlike Nosek et al., 2007), and women had stronger implicit gender–science stereotypes than men in nearly half of the website samples (17 of 36), unlike in the United States (Charlesworth & Banaji, 2022). Ultimately, such results call for future research to explain why younger and older respondents may have similar implicit anti-old/pro-young attitudes in the US (Nosek et al., 2007) but not in other countries, as well as why women in some countries (but not all) may have stronger gender–science stereotypes than men.

Having established adequate data quality across various metrics, we next provided a descriptive summary of implicit and explicit attitudes and stereotypes across tasks and countries. Across nearly all countries and tasks, we found evidence for significant implicit and explicit attitudes and stereotypes in favor of the societally dominant group over the societally stigmatized group, thereby attesting to the pervasiveness of such social group representations across cultures and languages. It is remarkable that, despite the vast differences in country-level contexts and histories, all 36 website samples revealed, on average, implicit and explicit attitudes and stereotypes that favored the same high-status groups (e.g., White, light-skin, thin, young, straight, men) relative to the same low-status groups (e.g., Black, dark-skin, fat, old, gay, women).

Nonetheless, despite this impressive consistency in the direction of attitudes and stereotypes, we observed considerable variation in the magnitude of attitudes and stereotypes across domains and countries. For instance, on implicit sexuality attitudes – the task that showed the largest country-level range in magnitude – countries ranged from a weak pro-gay/anti-straight mean IAT score in Taiwan (Cohen’s d = −0.32) to a strong pro-straight/anti-gay mean IAT score in Argentina (Cohen’s d = 1.03). Explaining why such variation exists is a primary future research direction that is now uniquely facilitated by the current dataset.
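The country-level effect sizes above describe mean IAT scores in standard-deviation units. A minimal Python sketch of one common convention, assuming a one-sample Cohen’s d computed as the mean IAT D score divided by the sample standard deviation of D scores within a country (positive values favoring the dominant group). The function name and the scores are hypothetical and are not drawn from PI:International.

```python
from statistics import mean, stdev


def one_sample_d(d_scores):
    """One-sample Cohen's d for IAT D scores tested against zero (no preference).

    Assumes d = mean(D) / SD(D), with SD the sample standard deviation.
    """
    return mean(d_scores) / stdev(d_scores)


# Hypothetical D scores for a single country (illustrative values only)
scores = [0.2, 0.5, 0.8, 0.5]
print(round(one_sample_d(scores), 2))
```

Under this convention a negative d, as reported for Taiwan, simply means the average participant’s D score fell on the pro-gay/anti-straight side of zero.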

In short, the PI:International data will accelerate empirical and theoretical work on the patterns of implicit and explicit attitudes and stereotypes across time and space. Below, we highlight what we see as three exciting avenues for future research: (1) the effect of varying degrees of cultural immersion (e.g., language, citizenship, residency) on implicit and explicit attitudes and stereotypes; (2) the clustering of biases across topics and places; and (3) the patterns and sources of attitude and stereotype change across countries. Beyond these initial ideas that we are currently pursuing, we hope that the open data and code at the Open Science Framework will spur even more innovation and discoveries on the nature and variation of social attitudes and stereotypes.

The effect of cultural immersion on implicit and explicit attitudes and stereotypes

Cues to our cultural context (where we currently live, i.e., residency; our national identity, i.e., citizenship; and the language we tend to speak) shape the knowledge structures activated in our minds. For instance, Ogunnaike et al. (2010) showed that bilingual participants had higher pro-Moroccan IAT D scores on a Moroccan–good/French–bad IAT when completing the measure in Arabic rather than in French. Such results are in line with the broader notion that language serves as a cue to one’s current cultural frame of mind, in combination with many other contextual cues that immerse a participant in their culture (e.g., pictures of a country’s flag or natural landscapes). Indeed, an emerging body of observational research using aggregated IAT scores across geography also suggests a role for one’s physical culture in activating and maintaining implicit attitudes. For example, aggregate scores on the IAT are stronger in U.S. counties with more reminders of slavery (e.g., Confederate monuments) and larger historical enslaved populations (Payne et al., 2019). Presumably, such results reflect a dynamic and mutually reinforcing process between the presence of cultural cues that emphasize group differences and the activation of strong social group attitudes (i.e., cultural cues increase the activation of attitudes, which in turn help maintain the cultural cues).

The PI:International dataset offers an exciting new opportunity to explore these dynamic relationships between culture and attitudes by examining how variation in the degree of cultural immersion (cultural cues) may affect the magnitude of implicit and explicit attitudes and stereotypes. That is, when coupled with the PI:US data, the combined datasets can now span the full range of participants immersed in a given culture as a function of their citizenship, residency, and language of assessment. For example, imagine a researcher interested in the influence of Brazilian culture on the Race IAT; they would be able to compare the IAT scores of Brazilian citizens who are residents of the US, speaking English, and taking the English-language race task on the US website (i.e., participants who only have one cultural cue of citizenship) to the IAT scores of Brazilian citizens, who are residents of Brazil, speaking Portuguese, and taking the Portuguese race task on the Brazil website (i.e., participants who have all cultural cues of citizenship, residency, and language), and all participants in between. Although the dataset does not currently include a variable on the length of residency in a participant’s current country (a factor typically included in research on acculturation), we emphasize that the existing variation in cues to cultural immersion (language, citizenship, residency) can provide a fruitful first step toward understanding the coupling between societal contexts and implicit and explicit attitudes (Payne et al., 2017).
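The comparison described above can be sketched by counting, for each participant, how many of the three cultural cues (citizenship, residency, and language of assessment) match the target culture, then averaging IAT D scores within each cue count. This is a minimal sketch under assumptions: the field names (`citizenship`, `residence`, `test_language`, `d_score`), the country and language codes, and the data are hypothetical and do not reflect the actual PI:International variable names.

```python
from collections import defaultdict
from statistics import mean


def cue_count(participant, country, language):
    """Number of cultural cues (0 to 3) that match the target culture (hypothetical fields)."""
    return (
        (participant["citizenship"] == country)
        + (participant["residence"] == country)
        + (participant["test_language"] == language)
    )


def mean_d_by_cue_count(participants, country, language):
    """Average IAT D score for each number of matching cues, ordered by cue count."""
    groups = defaultdict(list)
    for p in participants:
        groups[cue_count(p, country, language)].append(p["d_score"])
    return {k: mean(v) for k, v in sorted(groups.items())}


# Hypothetical Brazilian citizens who differ in residency and test language
participants = [
    {"citizenship": "BR", "residence": "US", "test_language": "en", "d_score": 0.10},
    {"citizenship": "BR", "residence": "BR", "test_language": "en", "d_score": 0.30},
    {"citizenship": "BR", "residence": "BR", "test_language": "pt", "d_score": 0.40},
    {"citizenship": "BR", "residence": "BR", "test_language": "pt", "d_score": 0.60},
]
print(mean_d_by_cue_count(participants, country="BR", language="pt"))
```

A monotonic increase in mean D across cue counts would be consistent with, though not proof of, an immersion effect, because participants who differ in cues are also self-selected in ways the cue count does not capture.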

Clustering of attitudes and stereotypes across topics and across countries

Early on in the study of social attitudes and stereotypes, Allport (1954) demonstrated that different social biases (e.g., evaluations of immigrants, religious minorities, and people with disabilities) are often highly correlated within an individual respondent. That is, respondents who score high on one bias also tend to score high on other biases, revealing a pattern of so-called “generalized prejudice” within individuals (Akrami et al., 2011; Bergh & Akrami, 2016). Researchers have now begun to explore similar patterns in explicit attitudes across nations as well (Meeusen & Kern, 2016), identifying which explicit attitudes are most strongly coupled together. Until now, however, no work to our knowledge has examined such generalized patterns of implicit attitudes across tasks (e.g., whether the coupling between implicit race and sexuality attitudes is stronger than the coupling between implicit race and age attitudes), nor has research examined how explicit and implicit measures may differ in the degree or type of “generalized prejudice.”

Beyond examining the clustering of attitudes and stereotypes across tasks, it is now also possible to examine the clustering across countries. That is, by using data from all seven tasks, researchers could identify which countries score systematically lower or higher on the set of implicit and explicit attitudes and stereotypes. For instance, given well-known patterns of spatial autocorrelations or dependencies (Tobler, 1970), adjacent countries may cluster together (i.e., be more similar in their attitudes and stereotypes than non-adjacent countries), perhaps implying that biases in judgment “bleed” across geographic boundaries through shared norms, media, or patterns of immigration.
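Spatial clustering of this kind is commonly summarized with Moran’s I, a standard index of spatial autocorrelation. A minimal sketch, assuming binary weights in which each country is linked to the countries it borders; the adjacency list and scores below are hypothetical. Values above the null expectation of −1/(N − 1) suggest that neighboring countries are more similar than chance would predict, and values below it suggest the opposite.

```python
from statistics import mean


def morans_i(values, neighbors):
    """Moran's I with binary contiguity weights.

    values: country -> mean score.
    neighbors: country -> set of bordering countries (should be symmetric).
    Raises ZeroDivisionError if no country has a neighbor in `values`
    or if all values are identical.
    """
    names = list(values)
    xbar = mean(list(values.values()))
    dev = {c: values[c] - xbar for c in names}
    cross = 0.0  # sum over neighbor links of dev_i * dev_j
    w_total = 0  # sum of all weights (each directed neighbor link counts 1)
    for i in names:
        for j in neighbors.get(i, ()):
            if j in values and j != i:
                cross += dev[i] * dev[j]
                w_total += 1
    squares = sum(d * d for d in dev.values())
    return (len(names) / w_total) * (cross / squares)


# Hypothetical chain of four bordering countries: A borders B, B borders C, C borders D
neighbors = {"A": {"B"}, "B": {"A", "C"}, "C": {"B", "D"}, "D": {"C"}}
clustered = {"A": 0.6, "B": 0.6, "C": 0.2, "D": 0.2}
print(round(morans_i(clustered, neighbors), 3))
```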

A related question in this line of work concerns how to decompose the variability across versus within countries and then to quantify which factors best explain each of these components of variability in implicit and explicit attitudes and stereotypes. For instance, one can compare the contribution of a societal-level variable, such as country of residence (or citizenship), against the contribution of more individual-level variables, such as a respondent’s demographic group or personality scale scores. Whether the variability in the data is largely attributable to one’s country and context or to individual factors will inform ongoing discussions on whether implicit and explicit attitudes and stereotypes are best understood as individual, societal, or both (Connor & Evers, 2020; Payne et al., 2017, 2022).
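One standard way to express the across-versus-within split is the intraclass correlation ICC(1), computed from a one-way ANOVA with country as the grouping factor. The sketch below is a generic implementation (including the usual adjusted average group size for unequal country samples), not an analysis reported in this paper; the data are hypothetical. An ICC near 0 means most variance lies within countries, while larger values mean country membership accounts for more of it. Comparing specific country-level and individual-level predictors would additionally require a multilevel model with covariates.

```python
from statistics import mean


def icc1(groups):
    """ICC(1) from a one-way ANOVA; groups maps country -> list of individual scores."""
    sizes = {c: len(xs) for c, xs in groups.items()}
    means = {c: mean(xs) for c, xs in groups.items()}
    n_total = sum(sizes.values())
    n_groups = len(groups)
    grand = sum(sum(xs) for xs in groups.values()) / n_total
    ss_between = sum(sizes[c] * (means[c] - grand) ** 2 for c in groups)
    ss_within = sum((x - means[c]) ** 2 for c, xs in groups.items() for x in xs)
    ms_between = ss_between / (n_groups - 1)
    ms_within = ss_within / (n_total - n_groups)
    # Adjusted average group size, which equals the common n when groups are balanced
    k0 = (n_total - sum(n ** 2 for n in sizes.values()) / n_total) / (n_groups - 1)
    return (ms_between - ms_within) / (ms_between + (k0 - 1) * ms_within)


# Hypothetical individual IAT D scores nested in three countries
groups = {
    "Country A": [0.2, 0.4, 0.5, 0.3],
    "Country B": [0.5, 0.7, 0.6],
    "Country C": [0.1, 0.3, 0.2, 0.4, 0.0],
}
print(round(icc1(groups), 3))
```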

Finally, after identifying how attitudes and stereotypes cluster across countries, the current data can also advance empirical and theoretical arguments on why that clustering happens by identifying the correlated ecological (e.g., rivers, mountains, pathogen threats) and social factors (e.g., demography, income, availability of health resources; Jackson et al., 2019). Recently, Hehman and colleagues (2020) employed statistical learning techniques (specifically, elastic net regularization) to generate bottom-up discoveries of the correlates of within-nation variation in implicit and explicit attitudes and stereotypes, revealing that higher regional biases in the US were most strongly predicted by sociodemographic variables (e.g., a lower percentage of mental health providers and higher rates of premature death). Similar statistical learning approaches could now be applied to explain cross-national variation. In addition to such a bottom-up approach, future work can test top-down theoretical hypotheses on which correlates should be the strongest predictors of specific attitude domains (e.g., pathogen threats may predict anti-gay bias but not anti-Black bias; Murray & Schaller, 2016), versus which correlates may be the strongest predictors of the aforementioned “generalized” bias (e.g., GDP may predict bias across many topics).
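For readers unfamiliar with elastic net regularization, the sketch below fits it by cyclic coordinate descent, minimizing (1/(2n))‖y − Xβ‖² + α·ρ‖β‖₁ + (α(1 − ρ)/2)‖β‖², the parameterization also used by scikit-learn’s `ElasticNet` (with `l1_ratio` = ρ). It is a generic, dependency-free illustration, not the pipeline of Hehman and colleagues; rows of `X` would hypothetically be countries and columns ecological or social correlates. Because the penalty is scale-dependent, predictors should be standardized before fitting.

```python
def soft_threshold(z, gamma):
    """Shrink z toward zero by gamma (the proximal operator of the L1 penalty)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def elastic_net(X, y, alpha=0.1, l1_ratio=0.5, max_iter=1000, tol=1e-8):
    """Fit an elastic net by cyclic coordinate descent.

    Minimizes (1/(2n))||y - X b||^2 + alpha*l1_ratio*||b||_1
    + 0.5*alpha*(1 - l1_ratio)*||b||^2 with an unpenalized intercept.
    X is a list of rows (lists of floats); returns (intercept, coefficients).
    """
    n, p = len(X), len(X[0])
    x_means = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    # Center predictors and outcome so the intercept can be recovered at the end
    Xc = [[row[j] - x_means[j] for j in range(p)] for row in X]
    yc = [v - y_mean for v in y]
    z = [sum(row[j] ** 2 for row in Xc) / n for j in range(p)]
    beta = [0.0] * p
    resid = yc[:]  # current residual y - X b (b starts at zero)
    for _ in range(max_iter):
        max_step = 0.0
        for j in range(p):
            if z[j] == 0.0:
                continue  # constant predictor: coefficient stays at zero
            # Correlation of predictor j with the partial residual that excludes j
            rho = sum(Xc[i][j] * (resid[i] + Xc[i][j] * beta[j]) for i in range(n)) / n
            new = soft_threshold(rho, alpha * l1_ratio) / (z[j] + alpha * (1 - l1_ratio))
            step = new - beta[j]
            if step != 0.0:
                for i in range(n):
                    resid[i] -= Xc[i][j] * step
                beta[j] = new
                max_step = max(max_step, abs(step))
        if max_step < tol:
            break
    intercept = y_mean - sum(m * b for m, b in zip(x_means, beta))
    return intercept, beta


# Hypothetical data: the outcome depends on the first predictor only
X = [[0.0, 1.0], [1.0, 0.0], [2.0, 1.0], [3.0, 0.0]]
y = [1.0, 3.0, 5.0, 7.0]
print(elastic_net(X, y, alpha=0.5, l1_ratio=0.5))
```

Predictors whose coefficients remain nonzero at a given penalty are the candidate correlates; in practice α and ρ would be chosen by cross-validation.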

Patterns of change in implicit and explicit social attitudes and stereotypes

For decades, the dominant theoretical assumption was that implicit social cognition, being less deliberate and more automatic, would be difficult (if not impossible) to change durably over time (e.g., Bargh, 1999). Over the past decade, however, this view of stability has evolved considerably. Initially, such strict notions of stability were challenged by experimental studies demonstrating that individuals’ implicit attitudes and stereotypes could be shifted temporarily and, under some carefully created experimental conditions, even changed beyond a single experimental session (for reviews, see Cone et al., 2017; De Houwer et al., 2020; Kurdi & Dunham, 2020). Whether and when such within-individual changes translate to changes in explicit attitudes, changes in behavior, or changes that persist over time spans of multiple years is ripe for further exploration.

Notably, recent analyses using the PI:US dataset have also shown attitude and stereotype change at the societal level, with durable transformations over a span of 14 years. At least in the United States, societal-level implicit attitudes have changed by as much as 65% (implicit sexuality attitudes) from 2007 to 2020, and explicit attitudes have dropped by as much as 98% (explicit race attitudes; Charlesworth & Banaji, in press-a, 2019, 2022). Moreover, this change was widespread within the US, occurring across demographic groups (e.g., men/women, educated/non-educated, religious/non-religious) and geographic locations (Charlesworth & Banaji, 2021). Yet no study, to our knowledge, has systematically explored whether attitude and stereotype change across multiple social topics has also been consistent across countries.

Given the practical and theoretical importance of understanding whether, to what extent, and why reductions in social biases occur, the PI:International dataset will be instrumental in expanding our knowledge of long-term change across countries. At the same time, we caution future users of the dataset that low sample sizes may make it impossible to meaningfully include all countries in analyses of change over time. Specifically, 65 task-by-country datasets (out of 252, or about 26%) included in PI:International have a minimum yearly sample of fewer than 50 participants, and 19 task-by-country datasets (out of 252, or about 8%) have a median yearly sample of fewer than 50 participants. However, even analyses restricted to countries with sufficient data beyond a given threshold will provide opportunities for new insights into cross-country patterns of change.
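The screening step suggested above can be sketched as a filter over task-by-country datasets: keep a dataset only if its minimum (stricter) or median (more lenient) yearly sample size reaches a threshold. The dictionary layout, the task and country keys, and the data are hypothetical; the default threshold of 50 mirrors the cutoff used in the counts above.

```python
from statistics import median


def eligible_datasets(yearly_n, threshold=50, rule="median"):
    """Return sorted (task, country) keys whose yearly sample sizes meet the threshold.

    yearly_n maps (task, country) -> list of yearly sample sizes.
    rule="min" requires every year to reach the threshold (strict);
    rule="median" requires a typical year to reach it (lenient).
    """
    summarize = {"min": min, "median": median}[rule]
    return sorted(key for key, ns in yearly_n.items() if summarize(ns) >= threshold)


# Hypothetical yearly sample sizes
yearly_n = {
    ("Race", "Country A"): [40, 60, 80],
    ("Age", "Country B"): [30, 45, 70],
    ("Race", "Country C"): [100, 120],
}
print(eligible_datasets(yearly_n, rule="min"))
print(eligible_datasets(yearly_n, rule="median"))
```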

For instance, the vast cross-country variation in everything from norms to demography to climate provides a strong test for consistency in long-term implicit and explicit attitude and stereotype change. On the one hand, it is possible that the widespread trends observed in US data across most demographic groups (Charlesworth & Banaji, 2021) reflect truly global, societal transformations such that the same trends may be found across multiple cultures. If so, the results would reinforce and extend conclusions that the sources of implicit and explicit attitude and stereotype change are likely to be events that cut across cultures at the most global, macro-level of society and affect not only multiple demographic groups but also multiple countries in similar ways (e.g., the global COVID-19 pandemic or international movements such as Black Lives Matter; see Charlesworth & Banaji, in press-a, for a recent discussion).

On the other hand, there may be more variation in change across countries than within countries. For instance, countries that have witnessed legislative changes around same-sex marriage and large increases in positive LGBTQ+ media representation may also show rapid change in sexuality attitudes, while those countries that have had no such legislation or positive media representation may show no such change. Cross-country variability in trends – if it exists – will also provide the necessary methodological setting for identifying quasi-experimental causal impacts (Abadie & Cattaneo, 2018; Charlesworth & Banaji, in press-a). That is, one could test how variation in exposure to events across countries (e.g., the timing of legislation, elections, or media campaigns) predicts variation in the trends of change across countries. Until now, the consistency of trends in the PI:US data has made it difficult to tease apart and identify causal sources of change; the potential for greater variation across countries provides a promising opportunity to better understand the societal correlates of implicit and explicit attitude and stereotype change.
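The quasi-experimental logic described above is often implemented as a difference-in-differences comparison: the pre-to-post change in countries exposed to an event minus the change in unexposed countries over the same years. A minimal sketch, assuming a single shared event year and yearly country means as input; the country labels, event, and values are hypothetical. The estimate is interpretable as causal only under the parallel-trends assumption, and events with staggered timing across countries would call for more elaborate estimators.

```python
from statistics import mean


def diff_in_diff(yearly_means, treated, event_year):
    """Difference-in-differences estimate from yearly country means.

    yearly_means: country -> {year: mean score}; treated: set of exposed countries.
    Each country needs at least one year before and one year on/after event_year.
    """
    def change(country):
        series = yearly_means[country]
        pre = [v for year, v in series.items() if year < event_year]
        post = [v for year, v in series.items() if year >= event_year]
        return mean(post) - mean(pre)

    treated_change = mean([change(c) for c in yearly_means if c in treated])
    control_change = mean([change(c) for c in yearly_means if c not in treated])
    return treated_change - control_change


# Hypothetical yearly mean sexuality IAT D scores; Country A is exposed to an event in 2012
yearly_means = {
    "Country A": {2010: 0.60, 2011: 0.60, 2012: 0.40, 2013: 0.40},
    "Country B": {2010: 0.60, 2011: 0.60, 2012: 0.55, 2013: 0.55},
}
print(round(diff_in_diff(yearly_means, treated={"Country A"}, event_year=2012), 2))
```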

Final words of contribution and caution

As mentioned in the Introduction, despite the unique advantages of the PI:International dataset, enthusiasm must be tempered by inherent limitations of the data. Here we caution again that the data were obtained from non-representative samples, with distorted coverage of the world’s countries (missing nearly all countries in Africa) and possible biases resulting from country differences in Internet access (and the characteristics of those with versus without access). Researchers using these data are encouraged to interpret all results in the context of these limitations and to correct for such sampling biases to the extent possible (e.g., using the provided weighting scripts). From this place of both caution and optimism, we look forward to the many unique methodological, empirical, and theoretical contributions that can be spurred by the PI:International dataset. All data are available in a user-friendly format, archived on the Open Science Framework with R code to easily analyze the cleaned country datasets, thereby facilitating the rapid growth of understanding of the global distribution of implicit and explicit attitudes and stereotypes.
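One generic way to correct for sampling imbalance is post-stratification: each participant receives a weight equal to the population share of their demographic cell divided by that cell’s share in the sample. The sketch below illustrates the idea only; it is not the weighting scripts distributed with the dataset, and the cell labels, population shares, and scores are hypothetical. Cells absent from the sample cannot be reweighted, which is a real concern for under-covered regions.

```python
from collections import Counter


def poststratification_weights(cells, population_shares):
    """Weight each participant by population share / sample share of their cell."""
    counts = Counter(cells)
    n = len(cells)
    return [population_shares[c] / (counts[c] / n) for c in cells]


def weighted_mean(values, weights):
    """Weighted average of values."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical sample that over-represents women relative to a 50/50 population
cells = ["woman", "woman", "woman", "man"]
scores = [0.2, 0.2, 0.2, 0.6]
weights = poststratification_weights(cells, {"woman": 0.5, "man": 0.5})
print(round(weighted_mean(scores, weights), 2))
```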

Notes

The present paper remains agnostic regarding the existence of separate explicit and implicit mental representations in memory. Rather, we use the short-hand terms “explicit” and “implicit” attitudes or stereotypes to refer to the outcomes of direct and indirect measurement procedures, respectively.