Abstract

For decades, research on metacomprehension has demonstrated that many learners struggle to accurately discriminate their comprehension of texts. Reviews of experimental studies on relative metacomprehension accuracy have found average intra-individual correlations between predictions and performance of around .27 for adult readers, but in some contexts even lower, near-zero levels of accuracy have been reported. One possible explanation for these strikingly low levels of accuracy is a high degree of conceptual overlap among the topics of the texts. To test this hypothesis, in the present work, participants were randomly assigned to read one of two text sets that differed in their degree of conceptual overlap. Participants judged their understanding and completed an inference test for each topic. Across two studies, mean relative accuracy matched typical baseline levels for the low-overlap text sets and was significantly lower for the high-overlap text sets. The results suggest that text similarity is an important factor affecting the accuracy of comprehension monitoring, and that it may have contributed to the variable and sometimes inconsistent results reported in the metacomprehension literature.


Introduction

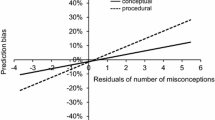

Metacognition includes a learner’s ability to accurately monitor and judge their learning so that they can effectively regulate their studying (Dunlosky & Metcalfe, 2009). Monitoring accuracy has been assessed in terms of a learner’s confidence in their performance by computing a difference score between judgments of learning and actual test performance. This confidence bias measure reflects whether learners’ judgments are systematically biased in one direction (interpreted as over- vs. under-confidence). A related measure, absolute accuracy or absolute error, reflects the total amount of error in a learner’s predictions regardless of its direction. Both of these calibration measures depend directly on actual task performance (Maki & McGuire, 2002; Nelson, 1984). They may also be influenced by a priori heuristics such as perceived domain knowledge and self-efficacy, or by other general learner characteristics that are insensitive to text-specific variations in comprehension (Griffin et al., 2009; Kwon & Linderholm, 2014; Zhao & Linderholm, 2011). A third commonly assessed measure is relative accuracy, computed as the intra-individual correlation between predicted and actual performance across a set of materials. Relative accuracy reflects how well learners can discriminate their learning across the set, with a stronger positive correlation indicating greater monitoring accuracy. Because relative accuracy is independent of the learner’s absolute performance or skill in the primary task, it is generally considered a more direct reflection of true moment-to-moment meta-experiences (Griffin, Mielicki, & Wiley, 2019; Nelson, 1984), which are the defining feature of “metacognitive monitoring” as originally conceived (Flavell, 1979). Both calibration and relative accuracy are needed for effective self-regulated study (Dunlosky & Rawson, 2012): absolute accuracy motivates the learner to continue studying, while relative accuracy provides direction about what to study.

Within the literature on monitoring accuracy, much work has focused on the monitoring of memory performance, for example, learning word pairs (vocabulary or novel associates) or definitions of key terms, and has demonstrated conditions under which near-perfect levels of relative accuracy can be observed (Nelson & Dunlosky, 1991; Rhodes & Tauber, 2011). In contrast, monitoring of understanding from texts, or metacomprehension, has been shown to be much less accurate and harder to improve than metamemory (Dunlosky & Lipko, 2007; Griffin, Mielicki, & Wiley, 2019; Maki, 1998b; Wiley, Thiede, & Griffin, 2016). Reviews of metacomprehension results have found average intra-individual correlations between predictions and performance of around .27 for adult readers (Dunlosky & Lipko, 2007; Fukaya, 2010; Prinz et al., 2020; Thiede et al., 2009; Yang et al., 2023). This draws attention to an important distinction between metamemory and metacomprehension monitoring processes. Metamemory accuracy for a list of items can be quite high because part of the item is generally provided as the cue for the judgment. Each item or item pair is stored as a separate instance that is cued and retrieved at the time of monitoring, giving the learner a direct way to self-test whether the item is in memory. In contrast, comprehending a text requires integrating ideas from the text with prior knowledge as the learner constructs a situation model of what the text is about (Kintsch, 1994; Wiley et al., 2005). Comprehension is generally assessed with inference items that require extending the logic of the text to novel situations. Monitoring comprehension is therefore far less bounded than monitoring memory for discrete pieces of information, because the set of potential questions that could be used to assess comprehension is much larger and less well defined.

In many educational contexts, assigned readings will come from the same textbook and will be on similar concepts. Yet, most research on metacomprehension has been conducted using sets of texts on different topics. Studies conducted using sets of texts from the same textbook, or sections of a longer passage, have observed strikingly low levels of relative accuracy, with intra-individual correlations between predictions and performance on comprehension tests approaching zero (Griffin et al., 2009; Guerrero et al., 2022, 2023; Maki, 1998a; Maki et al., 1994; Ozuru et al., 2012; Wiley, Griffin, et al., 2016). These results suggest that the conceptual overlap present among a set of texts might affect relative metacomprehension accuracy, with greater overlap being linked to poorer comprehension monitoring.

One prior study (Griffin et al., 2009) actually employed two text sets. One set included only texts about baseball topics (bunting, curveballs, corked bats, batting averages, the crack of the bat). The other set was on highly disparate topics (the potato famine, dinosaur extinction, heart disease, electric cars, cell division). A single group of participants read, judged, and took tests on both text sets. The purpose of the Griffin et al. study was not to test for differences between the text sets, but to test for the effect of domain expertise on metacomprehension accuracy within a particular domain (with the diverse set serving as a control measure). However, the authors noted that accuracy for the baseball set was quite low (M = .12, SD = .53). In contrast, the relative accuracy for the diverse set was more typical (M = .32, SD = .47). Although Griffin et al. did not test for a difference between text sets, a post hoc computation indicates monitoring accuracy for the diverse set was significantly higher, t(200) = 2.84, p = .005; d = .40. This result is suggestive, but because Griffin et al. was not designed to test whether the similarity in topics among texts might be a critical factor, a number of issues prevent these data from providing clear support for the conceptual-overlap hypothesis. The two text sets were not intended to be equivalent and they differed on a number of dimensions. This means there are other possible explanations for the observed differences in relative accuracy, and that a clean test of the hypothesis is still needed to determine if conceptual overlap might impact comprehension monitoring.
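As a quick arithmetic check of the post hoc effect size, the sketch below recomputes d from the reported means and SDs. It assumes d was standardized by the root mean square of the two SDs (an equal-n pooled SD); the text does not state which standardizer was used. The t value is not rechecked here, because it also depends on the sample size and on whether the two sets were treated as paired.

```python
import math


def cohens_d_from_summary(m1, sd1, m2, sd2):
    """Standardized mean difference using the root mean square of two SDs.

    Assumption: the two SDs are weighted equally (appropriate for equal n).
    """
    pooled_sd = math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return (m1 - m2) / pooled_sd


# Griffin et al. (2009) relative accuracy: diverse set vs. baseball set
d = cohens_d_from_summary(0.32, 0.47, 0.12, 0.53)
print(round(d, 2))  # 0.4
```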

A possible theoretical explanation for lower levels of accuracy is related to the distinctiveness of the materials between which learners are being asked to discriminate. When to-be-learned materials are too similar, the content presented across texts may seem to “blur” together. In these instances, learners may be less sensitive to text-to-text variations in comprehension, making it especially difficult to decide which texts they have learned better or worse and thereby reducing relative accuracy (Guerrero et al., 2022, 2023; Hildenbrand & Sanchez, 2022; Wiley et al., 2005).

Experiment 1

This study tested whether strikingly low levels of metacomprehension accuracy could be produced by manipulating the conceptual overlap among sets of texts. The text sets were designed to be comparable in difficulty and text structure. No differences were therefore expected in average comprehension, in the average magnitude of judgments of comprehension, or, consequently, in measures of calibration (absolute error, confidence bias). In contrast, the conceptual-overlap manipulation was expected to affect relative accuracy.

Method

Participants

A medium effect size was used in a priori sample size projections. This choice was based on past work that has found medium-sized effects of between-subjects manipulations on relative accuracy with adult readers (Griffin et al., 2008; Griffin, Wiley, & Thiede, 2019; Rawson et al., 2000; Shiu & Chen, 2013; Thiede & Anderson, 2003; Thiede et al., 2003; Waldeyer & Roelle, 2020; Wiley, Griffin, et al., 2016), and on a recent meta-analysis reporting an average Hedges’ g of .46 between conditions (Prinz et al., 2020), which is likely an underestimate for adult readers because it included both younger and adult readers. Assuming d = .5, the minimum sample size required for 80% power on a two-tailed test was N = 128 (64 per group). Data were collected from a total of N = 178 participants, who were undergraduate students at the University of Illinois at Chicago and received course credit through an Introduction to Psychology subject pool. However, relative accuracy cannot be computed for participants whose judgments show no variance across texts, and 18 participants were dropped on this criterion (Footnote 1). Thus, the final sample included N = 160 participants (mean age = 19.51 years, 63% female; n = 87 in the low-overlap condition, n = 73 in the high-overlap condition), who identified as 27% Asian, 6% Black or African American, 35% Hispanic or Latina/o, and 24% White. The remaining 8% of participants declined to provide this demographic information.
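The per-group sample sizes can be approximated with a closed-form formula. The sketch below is an assumption, not the authors’ procedure (the software used is not stated): it applies the normal-approximation sample-size formula for a two-sample t test and adds a common small-sample correction term, z²/4, instead of performing the exact noncentral-t calculation that dedicated power software uses. With α = .05 and power = .80, it gives the per-group values reported for both experiments.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate per-group n for a two-tailed, two-sample t test.

    Normal-approximation formula 2 * (z_a + z_b)**2 / d**2 plus the
    small-sample correction term z_a**2 / 4, rounded up.
    """
    std_normal = NormalDist()  # mean 0, SD 1
    z_a = std_normal.inv_cdf(1 - alpha / 2)  # two-tailed critical value
    z_b = std_normal.inv_cdf(power)
    n = 2 * (z_a + z_b) ** 2 / d ** 2 + z_a ** 2 / 4
    return ceil(n)


print(n_per_group(0.5))   # 64 per group, N = 128 (Experiment 1)
print(n_per_group(0.39))  # 105 per group, N = 210 (Experiment 2)
```

An exact noncentral-t computation would be the more rigorous choice; the closed form is used here only because it needs nothing beyond the standard library.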

Research design

Using a between-subjects design, metacomprehension accuracy was compared for two sets of texts that differed in their degree of conceptual overlap. Specifically, the texts were taken from either the same (high-overlap condition) or disparate (low-overlap condition) sub-fields of psychology as listed in an Introduction to Psychology textbook. Participants were randomly assigned to condition.

Materials

Texts

Text materials for this study were based on those used in Guerrero et al. (2022) and consisted of excerpts from an Introduction to Psychology textbook. The six texts in the high-overlap text set were excerpts from chapters covering topics that typically fall within the sub-field of Social/Personality Psychology: Twin Studies, Conformity, Fundamental Attribution Error, Cognitive Dissonance, Biased and Representative Samples, and the Placebo Effect (the latter two are more central to the methods used in Social Psychology than to those of some other areas of psychology). As one way to validate the overlap among these topics, we found that the core concepts from each of these texts were listed in the index of the open-source online textbook Principles of Social Psychology (Daffin & Lane, 2021). In contrast, the low-overlap text set included excerpts from multiple sub-fields. Three of its texts were the same as in the high-overlap set (Biased and Representative Samples, Conformity, Fundamental Attribution Error), and the other three covered topics not included in the Social Psychology textbook index (Broca’s and Wernicke’s Aphasia, Classical Conditioning, and Intelligence and Testing).

Automated measures of semantic overlap were obtained for both sets of texts using latent semantic analysis (LSA). Semantic overlap was computed with a pairwise, document-to-document analysis in the “general reading up to first-year college” semantic space, with the maximum number of factors selected. The name of this space refers to the corpus used to construct it: the space was derived from word co-occurrence patterns in a collection of texts of the kind typically encountered in general reading up to the level of a first-year college course. The resulting space captures latent semantic relationships among words in this kind of corpus and can be used to analyze the meaning of new texts that fall within this domain. Each pairwise comparison yielded a coefficient describing the degree of semantic overlap, with values closer to 1 indicating greater overlap. Pairwise document-to-document comparisons across all texts are shown in Table 1.
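In LSA, each document is represented as a vector in the reduced semantic space, and document-to-document overlap is expressed as the cosine between those vectors. The sketch below shows only that final pairwise step, assuming the document vectors have already been obtained from the semantic space; it does not reproduce the LSA space itself. The three-dimensional vectors and the text names are hypothetical (real LSA vectors have many more dimensions). A six-text set yields 15 unordered pairs.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def pairwise_overlap(doc_vectors):
    """Map every unordered pair of document names to their cosine."""
    return {
        (a, b): cosine(doc_vectors[a], doc_vectors[b])
        for a, b in combinations(doc_vectors, 2)
    }


# Hypothetical vectors for illustration only, not real LSA output
toy_vectors = {
    "Text A": [0.9, 0.3, 0.1],
    "Text B": [0.8, 0.4, 0.2],
    "Text C": [0.1, 0.2, 0.9],
}
for (a, b), value in pairwise_overlap(toy_vectors).items():
    print(f"{a} vs. {b}: {value:.2f}")
```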

The first set of coefficients reflects the overlap among the three texts that appear in both sets (see Table 1, leftmost columns). Because these texts are all from the same sub-area, their coefficients are relatively high (.42 to .60). The middle columns show the 12 coefficients for the high-overlap text set, which were also relatively high (M = 0.49, SD = 0.11). The rightmost columns show the coefficients for the low-overlap set (M = 0.32, SD = 0.08), which were significantly lower than those for the high-overlap set, t(10) = 4.46, p < .001, d = 1.79. None of the coefficients for the low-overlap text set were above .42, compared to 9 of 12 for the high-overlap text set. Because LSA semantic overlap estimates have been shown to track human judgments of similarity (Landauer et al., 1998), these analyses provide evidence for the validity of the conceptual-overlap manipulation.

Across both sets, all texts followed the same basic structure. The first paragraph presented an accessible real-life example of the theory or concept being discussed, followed by its formal definition. Subsequent paragraphs described the results of empirical studies supporting the theory or concept. The texts had an average Flesch-Kincaid Grade Level of 12.8 and were approximately 800 words long.
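The Flesch-Kincaid Grade Level is a fixed formula over three counts: 0.39 × (words/sentences) + 11.8 × (syllables/words) − 15.59. The sketch below applies it to raw counts. The counts in the example are hypothetical, chosen to show how an 800-word text can land near the reported average. The text does not say which tool produced the reported values, and because readability tools count syllables differently, the same text can receive slightly different scores from different tools.

```python
def flesch_kincaid_grade(words, sentences, syllables):
    """Flesch-Kincaid Grade Level computed from raw text counts."""
    words_per_sentence = words / sentences
    syllables_per_word = syllables / words
    return 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59


# Hypothetical counts for an 800-word text with 40 sentences
print(round(flesch_kincaid_grade(800, 40, 1400), 2))  # 12.86
```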

Tests

For each text, the comprehension assessment consisted of five multiple-choice inference questions (based on Guerrero et al., 2022). These questions were designed to test learners’ understanding of the text, not just their memory for it (Guerrero et al., 2022; Guerrero & Wiley, 2018; Wiley et al., 2005; Wiley, Thiede, & Griffin, 2016). As such, the questions required learners to make connections between ideas presented in the text or to apply their understanding to a new context. None of the answers were explicitly stated in the text.

Procedure

The experiment was fully computerized and administered remotely online through Qualtrics. Participants were required to complete the study on a desktop or laptop computer. In accordance with Institutional Review Board requirements, all participants agreed to participate before beginning the study. The procedure was the same across the two conditions: first, participants read all six texts for understanding. They were instructed:

You should study these in the same way you would usually study for a class. After reading you will be asked to answer questions about those texts.

All texts were always read in the same order: Biased and Representative Samples, Classical Conditioning, Conformity, Broca’s and Wernicke’s Aphasia, Fundamental Attribution Error, and Intelligence and Testing (for the low-overlap set) or Biased and Representative Samples, the Placebo Effect, Twin Studies, Conformity, Fundamental Attribution Error, and Cognitive Dissonance (for the high-overlap set). After reading all texts, participants were prompted to make judgments of comprehension (JOCs) for all six texts:

You will take a multiple-choice test on each of these texts. How many questions out of five do you think you will get correct?

As shown in Fig. 1, all six JOC prompts were presented on one page, in the same order in which participants had read the texts, and each text was identified by its title.

Fig. 1. Judgment of comprehension (JOC) rating prompt in Experiment 1.

Following the JOCs, participants completed a five-item inference test on each of the six texts, also in the order in which the texts had originally been read. For each text, all five questions were displayed on the same page. The reading, judgment, and testing portions of the experiment were all self-paced. Texts were available only during the reading phase, and JOCs were collected only after the reading phase had concluded and before any testing. Demographic information, including age, gender, race, and self-reported SAT score, was collected in an exit survey at the end of the experiment. When participants reported ACT scores but no SAT scores, the ACT scores were converted using the 2018 version of the ACT/SAT concordance table. These scores served as a simple check for non-random assignment. As shown in Table 2, no significant differences were seen between conditions (t < 1; Bayes factor 8.20 in favor of no differences between conditions). The study took under 90 min to complete.

Metacomprehension measures

Relative accuracy is computed as the intra-individual correlation between each participant’s predicted and actual performance across the set of texts. Both Pearson and Gamma correlations are reported. Gamma correlations were the original metric in metamemory research because both predictions and performance are dichotomous variables in that context; Pearson correlations have increasingly been used in metacomprehension research because both predictions and performance are continuous measures (in this case, ranging from 0 to 5). Higher intra-individual correlations (closer to 1) indicate better discrimination and thus better monitoring accuracy.
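A minimal sketch of the per-participant computation, assuming each participant contributes six JOCs and six test scores (each 0 to 5). Pearson’s r is computed directly. Gamma is the Goodman-Kruskal statistic, computed from concordant and discordant pairs of texts with tied pairs ignored; this tie handling is an assumption, and implementations in the literature may differ. Both functions return None when a participant’s judgments show no variance, which corresponds to the exclusion criterion described under Participants. The example data are invented.

```python
import math
from itertools import combinations


def pearson_r(x, y):
    """Pearson correlation; None if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None  # undefined, e.g. identical JOCs for every text
    return sxy / math.sqrt(sxx * syy)


def goodman_kruskal_gamma(x, y):
    """Goodman-Kruskal gamma; tied pairs are ignored."""
    concordant = discordant = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        product = (x1 - x2) * (y1 - y2)
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    if concordant + discordant == 0:
        return None  # every pair tied: gamma is undefined
    return (concordant - discordant) / (concordant + discordant)


# Invented data for one participant: six JOCs and six test scores (0-5)
jocs = [4, 3, 5, 2, 3, 4]
scores = [3, 2, 4, 3, 1, 4]
print(round(pearson_r(jocs, scores), 3))              # 0.571
print(round(goodman_kruskal_gamma(jocs, scores), 3))  # 0.636
```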

Confidence bias is computed as the average signed difference between predicted performance (JOCs) and actual performance (comprehension scores) across texts. The closer a participant’s score is to zero, the better calibrated their JOCs are; positive values are interpreted as over-confidence and negative values as under-confidence. Absolute error is computed as the average absolute difference between predicted and actual performance across texts, regardless of direction; scores further from zero indicate greater miscalibration. For graphing purposes, JOCs and comprehension test scores are reported as proportions (each out of a maximum of 5), and confidence bias and absolute error are computed from these proportions.
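The two calibration measures can be sketched as follows, assuming JOCs and test scores are raw counts out of 5 that are converted to proportions before differencing, as described above. The function name and the example data are illustrative, not taken from the study materials.

```python
def calibration(jocs, scores, max_score=5):
    """Return (confidence bias, absolute error) on the proportion scale."""
    predicted = [j / max_score for j in jocs]
    actual = [s / max_score for s in scores]
    n = len(predicted)
    # Signed mean difference: positive = over-confidence
    bias = sum(p - a for p, a in zip(predicted, actual)) / n
    # Unsigned mean difference: total miscalibration, direction ignored
    abs_error = sum(abs(p - a) for p, a in zip(predicted, actual)) / n
    return bias, abs_error


# Invented data for one participant
bias, abs_error = calibration([4, 3, 5, 2, 3, 4], [3, 2, 4, 3, 1, 4])
print(round(bias, 3), round(abs_error, 3))  # 0.133 0.2
```

Absolute error is always at least as large as the magnitude of the bias, because opposite-signed errors cancel in the bias but not in the absolute error.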

Results

Judgments of comprehension (JOCs) and comprehension test scores

As shown in Table 2, no significant differences were seen in average JOCs, t(158) = 0.68, p = 0.50, d = 0.11, or comprehension, t(158) = 0.03, p = .97, d = 0.01 (Footnote 2). These analyses suggest that the two sets were similar in terms of both perceived and actual difficulty.

Relative accuracy

Relative accuracy was analyzed using intra-individual Pearson correlations between participants’ predicted and actual performance across texts. As shown in Fig. 2, mean relative accuracy was similar to typical levels for the low-overlap set but significantly lower for the high-overlap set, t(158) = 2.48, p = .01, d = 0.39. Mean relative accuracy was significantly different from zero for the low-overlap set, t(86) = 5.77, p < .001, d = 0.62, but not for the high-overlap set, t(72) = 1.66, p = .10, d = 0.20 (Footnote 3). This suggests that conceptual overlap among the topics impaired participants’ ability to discriminate their comprehension across the texts.
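The effect sizes reported alongside the t tests can be checked with two standard conversions. This assumes (the text does not say so) that d for a between-condition comparison uses the pooled SD, d = t√(1/n₁ + 1/n₂), and that d for a one-sample test against zero is the mean divided by the SD, d = t/√n. With the sample sizes given under Participants, these conversions reproduce the reported values to two decimals.

```python
import math


def d_from_independent_t(t, n1, n2):
    """Cohen's d implied by a pooled-variance independent-samples t."""
    return t * math.sqrt(1 / n1 + 1 / n2)


def d_from_one_sample_t(t, n):
    """Cohen's d (mean / SD) implied by a one-sample t against zero."""
    return t / math.sqrt(n)


# Experiment 1: n = 87 (low overlap), n = 73 (high overlap)
print(round(d_from_independent_t(2.48, 87, 73), 2))  # 0.39
print(round(d_from_one_sample_t(5.77, 87), 2))        # 0.62
# Experiment 2: n = 101 (low overlap), n = 102 (high overlap)
print(round(d_from_independent_t(4.23, 101, 102), 2))  # 0.59
```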

Fig. 2. Experiment 1: Metacomprehension outcomes by overlap condition. Note. Relative accuracy was computed as Pearson’s r, and the mean r for each condition is reported. Absolute error and confidence bias were computed using proportion scores. Higher relative accuracy indicates better discrimination. Lower absolute error and confidence bias closer to zero indicate better calibration. Error bars represent standard errors of the means.

Low relative accuracy could also arise if test performance varied less within participants in one condition than in the other, because a restricted range of scores attenuates correlations. Contrary to this alternative hypothesis, within-participant SDs for comprehension test performance did not differ significantly between the two conditions, t(158) = 0.90, p = 0.37, d = 0.14 (Footnote 4). Thus, lower relative accuracy for the high-overlap set was not an artifact of a lack of within-individual variance in test scores.

Absolute error and confidence bias

Although not the primary focus, two measures of learner calibration were also computed: confidence bias and absolute error. As shown in Fig. 2, no significant differences between conditions were seen for either absolute error, t(158) = 0.81, p = .42, d = 0.13, or confidence bias, t(158) = 0.60, p = .55, d = 0.10 (Footnote 5). The mean difference between JOCs and actual performance was positive and significantly different from zero for both the high-overlap, t(72) = 4.97, p < .001, d = 0.58, and low-overlap, t(86) = 4.76, p < .001, d = 0.51, conditions. The majority of participants were overconfident in their ability to answer questions about the texts (high-overlap condition, 67.1%; low-overlap condition, 66.7%).

Discussion

These results support the hypothesis that conceptual overlap among texts constrains relative metacomprehension accuracy. Whereas mean relative accuracy for the low-overlap text set was similar to typical levels reported in the literature, relative accuracy was significantly lower for the high-overlap text set. The text sets did not differ in average performance, perceived difficulty, or within-participant variation in test performance. Therefore, the differences in relative accuracy cannot be explained as a simple byproduct of differences in overall text difficulty or actual comprehension, or of more similar levels of performance across texts.

Experiment 2

The main goal of Experiment 2 was to extend the results of Experiment 1 to new text materials and to test a possible alternative explanation for the low relative accuracy in the high-overlap condition. In Experiment 1, JOCs were collected after reading all six texts, and each text was identified only by its title. Under these conditions, relative accuracy might have been lower for the high-overlap set because the titles provided only weak cues to the individual texts, whereas each title in the low-overlap set may have provided a stronger cue. In Experiment 2, the procedure for the high-overlap set was therefore modified to clarify which text participants should be judging. As in Experiment 1, participants were asked to judge their understanding of the different topics after reading all six texts, but in the high-overlap condition, JOCs were also collected immediately after reading each text.

Method

Participants

An a priori power analysis was conducted based on the effect size observed in Experiment 1. Assuming d = .39, the minimum sample size required for 80% power on a two-tailed test was N = 210 (105 per group). Data were collected from a total of N = 216 participants, who received course credit through the undergraduate Introduction to Psychology subject pool at the University of Illinois at Chicago. A total of 13 participants were dropped because their judgments showed no variance (Footnote 6). Thus, the final sample included N = 203 participants (mean age = 19.20 years, 68% female; n = 101 in the low-overlap condition, n = 102 in the high-overlap condition), who identified as 25% Asian, 14% Black or African American, 35% Hispanic or Latina/o, and 22% White. One participant identified as Native American. The remaining 4% of participants declined to provide this demographic information.

Research design

The overall study design was similar to Experiment 1. Using a between-subjects design, metacomprehension accuracy was compared for two sets of texts that differed in their degree of conceptual overlap. The low-overlap text set described different phenomena across the social and natural sciences. The high-overlap text set consisted of a series of excerpts taken from an Introduction to Biology textbook. Participants were randomly assigned to condition.

Materials

Texts

Two new sets of text materials were used for Experiment 2. The low-overlap text set was taken from Griffin, Wiley, and Thiede (2019), and described different phenomena across the social and natural sciences (Volcanoes, Evolution, Ice Ages, Antibiotics, Intelligence Testing, Monetary Policy). These texts have been used across several prior studies testing the effects of different manipulations on relative accuracy (Griffin et al., 2008; Jaeger & Wiley, 2014; Thiede et al., 2011). The high-overlap text set consisted of a series of excerpts taken from an Introduction to Biology textbook (How are proteins absorbed? How does natural selection work? How does body size affect animal physiology? How do nutrients cycle through ecosystems? How does chlorophyll capture light energy? and How do fungi participate in mutualistic relationships?). As in Experiment 1 and consistent across conditions, all texts followed the same basic structure. The texts in the low-overlap set varied from 650 to 900 words in length and had Flesch-Kincaid grade levels of 11–12. The texts in the high-overlap set varied from 830 to 1,200 words in length and had Flesch-Kincaid grade levels of 11–12. None of the texts from the low-overlap set were the same as those included as part of the high-overlap set. All texts were always read in the same order as listed above.

As in Experiment 1, automated measures of semantic overlap were obtained for each set of texts using LSA. Pairwise document-to-document comparisons for each set are shown in Tables 3 and 4.

Semantic overlap was again computed with a pairwise, document-to-document analysis in the general reading up to first-year college semantic space, with the maximum number of factors selected. As expected, mean semantic overlap was significantly lower for the low-overlap text set (M = 0.21, SD = 0.11) than for the high-overlap text set (M = 0.38, SD = 0.14), t(28) = -3.70, p < .001, d = 1.35.
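Because each six-text set yields 6 × 5 / 2 = 15 pairwise coefficients, the reported df of 28 corresponds to a Student’s (pooled-variance) t test on 15 + 15 coefficients. Assuming that test, the sketch below recovers the reported statistics from the summary values above. Like the reported analysis, it treats the coefficients as independent observations.

```python
import math


def pooled_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Student's t, Cohen's d, and df from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    t = (m1 - m2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    d = (m1 - m2) / math.sqrt(pooled_var)
    return t, d, df


n_pairs = math.comb(6, 2)  # 15 pairwise coefficients per six-text set
# Low-overlap set minus high-overlap set, so t and d are negative
t, d, df = pooled_t_and_d(0.21, 0.11, n_pairs, 0.38, 0.14, n_pairs)
print(df, round(t, 2), round(abs(d), 2))  # 28 -3.7 1.35
```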

Tests

As in Experiment 1, for each text, the comprehension assessment consisted of five multiple-choice inference questions. For the low-overlap text set on diverse social and natural science topics, the questions were the same as those used in Griffin, Wiley, and Thiede (2019). New questions were created for the high-overlap text set on biology topics. As with the psychology assessments used in Experiment 1, both sets of questions were designed to test learners’ understanding of the text, not just their memory for it (Wiley et al., 2005; Wiley, Thiede, & Griffin, 2016). As such, the questions required learners to make connections between ideas presented in the text or to apply their understanding to a new context. None of the answers were explicitly stated in the text.

Procedure

The overall procedure was the same as in Experiment 1, with two exceptions. First, instead of being administered online, the study was conducted in person in the laboratory. Second, to limit confusion in the high-overlap condition, JOCs were collected both immediately after reading each text (using the same prompt as in Experiment 1) and again after reading all six texts. Only participants in the high-overlap condition completed two sets of judgments. Average JOCs were similar at both time points (immediate, M = .49; after reading all, M = .50). Relative accuracy was also similar regardless of which JOCs were used (immediate: Pearson M = .09, Gamma M = .10; after reading all: Pearson M = .06, Gamma M = .08). Because the immediate JOCs provided more clarity about which text was being judged, analyses were conducted using the immediate JOCs. For the low-overlap condition, the procedure was exactly the same as in Experiment 1. Each participant completed the study individually, with up to ten people participating in the same session. The study took under 90 min to complete. As shown in Table 5, a simple check for non-random assignment using self-reported SAT scores provided no evidence of significant differences between conditions (ts < 1.67; Bayes factor 3.23:1 in favor of no differences between conditions).

Results

JOCs and comprehension test scores

As shown in Table 5, significant differences between sets were seen in average JOCs, t(201) = 2.91, p = 0.004, d = 0.41, and performance on comprehension tests, t(201) = 5.26, p < .001, d = 0.74. The high-overlap condition had lower JOCs and test scores than the low-overlap condition.

Relative accuracy

The conceptual-overlap effect fully replicated. Mean relative accuracy (Pearson) was significantly lower for the high-overlap set, t(201) = 4.23, p < .001, d = 0.59 (Footnote 7). Average JOCs and test scores have no inherent relationship with relative accuracy scores, which is a primary reason why relative accuracy has been a preferred measure of monitoring accuracy (Nelson, 1984). Nevertheless, given the differences between conditions in JOCs and test scores, both were included as covariates in ANCOVAs. The results were the same: mean relative accuracy (Pearson) was significantly lower for the high-overlap set, F(1, 199) = 9.34, MSE = 0.15, p = .003, ηp² = 0.05 (Footnote 8). There was no evidence that within-participant variation in performance differed between the two conditions, t(201) = 0.37, p = .72, d = 0.04 (Footnote 9).

Absolute error and confidence bias

As shown in Fig. 3, no differences were seen in absolute error, t(201) = 0.73, p = .47, d = 0.10, or confidence bias, t(201) = 1.04, p = .30, d = 0.15 (Footnote 10). The mean difference between judgments and actual performance was positive and significantly different from zero for both high-overlap, t(101) = 6.62, p < .001, d = 0.66, and low-overlap sets, t(100) = 4.16, p < .001, d = 0.41. The majority of participants were overconfident in their ability to answer questions about the texts (high-overlap condition, 74.5%; low-overlap condition, 62.4%).

Fig. 3. Experiment 2: Metacomprehension outcomes by overlap condition. Note. Relative accuracy was computed as Pearson’s r, and the mean r for each condition is reported. Absolute error and confidence bias were computed using proportion scores. Higher relative accuracy indicates better discrimination. Lower absolute error and confidence bias closer to zero indicate better calibration. Error bars represent standard errors of the means.

Discussion

Experiment 2 replicated the conceptual-overlap effect from Experiment 1. Mean relative accuracy was significantly lower for the high-overlap text set on biology topics than for the low-overlap set on mixed science topics. Although the text sets were not matched in perceived and actual difficulty, differences in relative accuracy remained after controlling for JOCs and test scores. Moreover, relative accuracy for the high-overlap set remained low even when JOCs were collected immediately after reading each text. Thus, the present results suggest that lower resolution in the high-overlap condition is not simply an artifact of a JOC prompt that identifies each text only by its title, which might make it harder for participants to know which text they are judging.

General discussion

The present results suggest that conceptual overlap among texts is an important factor that constrains relative metacomprehension accuracy. Across two experiments, mean relative accuracy was significantly lower for a high conceptual-overlap text set compared to when topics were less similar (low conceptual-overlap).

Several possible explanations for this effect seem unlikely. First, lower relative accuracy for the high-overlap sets was not simply due to more similar levels of performance across topics (less within-participant variation in test performance). Second, relative accuracy could have been lower for the high-overlap set because the titles may not have clearly cued which text participants should be judging, and may have prompted them to think of the wrong text. In contrast, titles may have provided better or stronger cues at the time of judgment for the low-overlap set. To address this concern, and specifically to reduce confusion in the high-overlap set, JOCs in Experiment 2 were collected both immediately after reading each text and again after reading all texts. Results were the same regardless of which set of JOCs (immediate vs. after reading all texts) was used to compute relative accuracy. Even with this methodological change, which removed ambiguity about which text should be judged, relative metacomprehension accuracy was still poorer for the high-overlap set.

In Experiment 1, significant differences in relative accuracy were found even though the low-overlap texts were still drawn from the same general domain and textbook. This highlights the impact that even subjectively small variations in topic overlap may have on learners’ metacomprehension accuracy. Experiment 2 tested whether these results generalize to other materials and topic domains. Effect sizes were larger in Experiment 2, where the low-overlap set had even less topic overlap than in Experiment 1, which is consistent with the hypothesis that the degree of overlap constrains relative accuracy.

From a theoretical perspective, these findings highlight an important distinction that can help explain why metamemory judgments are more likely to be accurate than metacomprehension judgments. For metamemory, when a stimulus cue is provided at the time of judgment to prompt retrieval of the to-be-learned item, conceptual overlap or relatedness among items should be less relevant to judgment accuracy. In contrast, for metacomprehension, what it means to comprehend a text is more abstract and open-ended: the set of possible test questions is far less constrained, and the potential connections with prior knowledge are broad. When texts share conceptual overlap, it may be especially difficult for learners to access their experience with one text independently of the others, making it harder to discriminate how well they are likely to perform on a comprehension test for one text versus another.

Overall, the present results are consistent with the strikingly low levels of relative accuracy that have been found when a set of texts on similar topics, or multiple paragraphs from a single text, are used as stimuli in metacomprehension research (Griffin et al., 2009; Maki, 1998a; Maki et al., 1994; Ozuru et al., 2012). A broader implication is that even the modest average levels of metacomprehension accuracy reported in multiple meta-analyses may be inflated by the low conceptual overlap among the texts used in most prior studies.

In addition, the lack of effects on confidence bias or absolute error highlights the divergence between these calibration measures and relative accuracy. It has been argued that calibration measures do not require sensitivity to text-specific cues reflecting text-to-text variations in comprehension, but instead depend more on a priori heuristics about the domain and one’s general aptitude that apply equally to all texts (Griffin et al., 2009; Griffin, Mielicki, & Wiley, 2019; Griffin, Wiley, & Thiede, 2019). The finding that only relative accuracy was affected by the conceptual-overlap manipulation is consistent with this argument.

The present findings also offer a possible explanation for inconsistent results from manipulations that have sometimes produced sizeable increases in relative metacomprehension accuracy but have failed to replicate under conditions with much greater conceptual overlap between texts. For example, one of the most robust approaches to improving metacomprehension accuracy has been to prompt readers to engage in explanation activities while studying (Griffin, Wiley, & Thiede, 2019; Wiley, Thiede, & Griffin, 2016). However, a recent study found that an explanation activity did not improve relative accuracy for students in an Introductory Psychology course (Guerrero et al., 2022). Although a similar activity was modestly more successful at improving relative accuracy in a Research Methods course (Wiley, Griffin, et al., 2016), overall accuracy on these course-based text sets was still below typical levels. This raises concerns about the generalizability of many lab-based studies to actual classroom contexts: interventions and manipulations that have improved accuracy with readings on a wide variety of topics may not yield benefits when students are trying to monitor their comprehension of readings within an actual course.

Beyond documenting conceptual overlap as a source of monitoring difficulty, the present work does not yet offer a solution for increasing metacomprehension accuracy under these conditions. Different manipulations may be needed to support effective self-regulated learning and accurate monitoring of understanding when learning about similar topics. Explicitly warning learners before reading that they will need to monitor their understanding of different topics, or prompting them to consider both similarities and differences between concepts, might help them better discriminate the topics they understand well from those they understand poorly. Alternatively, it may be possible to introduce variation across texts and make each more distinctive by manipulating text structure or other elements of presentation. It is hoped that this work will serve as a basis for future studies exploring manipulations that clarify and address the different sources of monitoring difficulty that can undermine metacomprehension and effective self-regulated learning.

Data availability

The data are available via the Open Science Framework at https://osf.io/2xk6f/. Materials are available upon request from the authors. The experiments were not preregistered.

Code availability

Not applicable.

Notes

1. Per condition, this number includes n = 8 dropped from the high-overlap condition and n = 10 dropped from the low-overlap condition.

2. Following the computational procedures outlined in Jarosz and Wiley (2014), the data were also examined by estimating a Bayes factor from the Bayesian Information Criterion (BIC; Wagenmakers, 2007), which compares the fit of the data under the null and alternative hypotheses. For JOCs, the estimated Bayes factor (null/alternative) was 10.55:1 in favor of the null hypothesis; that is, the data were more than ten times more likely to occur if there were no difference in average JOCs between the two conditions. For average test scores, the estimated Bayes factor (null/alternative) was 9.22:1 in favor of the null hypothesis; that is, the data were more than nine times more likely to occur if test performance did not differ between the two conditions.
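The BIC approximation can be sketched as follows. Under Wagenmakers (2007), BF01 ≈ exp(ΔBIC10/2), where ΔBIC10 = n·ln(SSE1/SSE0) + (k1 − k0)·ln(n). For a comparison of two independent groups, SSE1/SSE0 = 1 − η² = df/(t² + df), and the alternative model adds one parameter (k1 − k0 = 1). This sketch assumes that setup; the helper name is hypothetical. The t values behind the Bayes factors in this note are not reported, so the illustration uses the Experiment 2 confidence-bias comparison, t(201) = 1.04 with N = 203, whose Bayes factor appears in Note 10.

```python
import math

def bf01_from_t(t: float, df: int, n: int) -> float:
    """BIC-approximated Bayes factor favoring H0 over H1 for a two-group comparison.

    BF01 ~= exp(dBIC10 / 2), where
    dBIC10 = n * ln(SSE1 / SSE0) + (k1 - k0) * ln(n),
    SSE1 / SSE0 = 1 - eta^2 = df / (t^2 + df), and k1 - k0 = 1.
    """
    sse_ratio = df / (t ** 2 + df)  # proportion of variance left unexplained by group
    delta_bic10 = n * math.log(sse_ratio) + math.log(n)  # penalty for the one extra parameter
    return math.exp(delta_bic10 / 2)

# Experiment 2, confidence bias: t(201) = 1.04, N = 203 (values from the text).
print(f"BF01 = {bf01_from_t(1.04, 201, 203):.2f}")
```

This gives roughly 8.3, close to the 8.29:1 reported in Note 10. Small discrepancies are expected because the t value is rounded to two decimals. When t = 0, the approximation reduces to √n, which is the largest support for the null that this method can give at a fixed sample size.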

3. Relative accuracy computed with Pearson correlations was highly correlated with relative accuracy computed with Gamma, r = .95, p < .001. When results are analyzed using Gamma correlations, the same pattern emerges: mean relative accuracy was significantly lower for the high-overlap set than for the low-overlap set, t(158) = 2.29, p = .02, d = 0.36.
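Goodman–Kruskal gamma, the index of relative accuracy recommended by Nelson (1984), compares every pair of texts and counts concordant pairs (C) and discordant pairs (D), ignoring pairs tied on either variable: G = (C − D)/(C + D). A minimal sketch follows; the function name is hypothetical, and tie handling follows the standard definition.

```python
from itertools import combinations
from typing import Optional, Sequence

def goodman_kruskal_gamma(jocs: Sequence[float], scores: Sequence[float]) -> Optional[float]:
    """G = (C - D) / (C + D) over all pairs of texts; tied pairs are skipped."""
    concordant = discordant = 0
    for i, j in combinations(range(len(jocs)), 2):
        product = (jocs[i] - jocs[j]) * (scores[i] - scores[j])
        if product > 0:
            concordant += 1   # JOCs and scores order the two texts the same way
        elif product < 0:
            discordant += 1   # JOCs and scores order the two texts in opposite ways
        # product == 0: tie on JOC or on score, so the pair does not count
    if concordant + discordant == 0:
        return None  # undefined when every pair is tied
    return (concordant - discordant) / (concordant + discordant)

print(goodman_kruskal_gamma([0.8, 0.6, 0.7, 0.5], [0.75, 0.5, 0.25, 0.5]))
```

Take a hypothetical participant with JOCs [0.8, 0.6, 0.7, 0.5] and scores [0.75, 0.5, 0.25, 0.5]. There are three concordant pairs, two discordant pairs, and one pair tied on score, so G = 0.20. Because gamma uses only the ordering of each pair, it can diverge from Pearson's r for an individual participant. Across participants, however, the two indices agreed closely in this study.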

4. The estimated Bayes factor (null/alternative) suggested that the data were 8.39:1 in favor of the null hypothesis, under which no difference between conditions is expected.

5. The estimated Bayes factors (null/alternative) suggested that the data were 17.36:1 and 10.55:1 (absolute error and confidence bias, respectively) in favor of the null hypothesis.

6. Of the 13 participants who were dropped due to invariance in judgments, n = 9 were in the high-overlap condition and n = 4 were in the low-overlap condition.

7. This pattern was the same when relative accuracy was computed using Gamma correlations, t(201) = 3.56, p < .001, d = 0.50.

8. Average test performance was a significant covariate in the ANCOVA model, F(1, 199) = 5.83, MSE = 0.15, p = .02, ηp² = 0.03, but average JOC was not, F(1, 199) = 0.20, MSE = 0.15, p = .71. The results were the same when the ANCOVA was run using Gamma correlations: mean relative accuracy (Gamma) was significantly lower for the high-overlap set, F(1, 199) = 6.52, MSE = 0.24, p = .01, ηp² = 0.03. The covariate for average test performance was F(1, 199) = 3.83, MSE = 0.24, p = .052, ηp² = 0.02, and the covariate for JOC was F(1, 199) = 0.18, MSE = 0.24, p = .67. The results were also the same when relative accuracy for the high-overlap condition was computed using the delayed JOCs collected after reading all texts instead of the immediate ratings. Mean relative accuracy (Pearson) was significantly lower for the high-overlap set than for the low-overlap set, F(1, 199) = 12.25, MSE = 0.16, p < .001, ηp² = 0.06. Average test performance was a significant covariate in the model, F(1, 199) = 4.95, MSE = 0.16, p = .03, ηp² = 0.02, but average JOC was not, F(1, 199) = 0.21, MSE = 0.16, p = .65.

9. Further, the estimated Bayes factor (null/alternative) suggested that the data were at least 13 times more likely to occur if there were indeed no difference in within-participant variation in performance between conditions.

10. The estimated Bayes factors (null/alternative) suggested that the data were 11.06:1 and 8.29:1 (absolute error and confidence bias, respectively) in favor of the null hypothesis (i.e., no difference between conditions).

References

Daffin, L., & Lane, C. (2021). Principles of social psychology (2nd ed.). Washington State University. https://opentext.wsu.edu/social-psychology/

Dunlosky, J., & Lipko, A. R. (2007). Metacomprehension: A brief history and how to improve its accuracy. Current Directions in Psychological Science, 16(4), 228–232. https://doi.org/10.1111/j.1467-8721.2007.00509.x

Dunlosky, J., & Metcalfe, J. (2009). Metacognition. Sage Publications Inc.

Dunlosky, J., & Rawson, K. A. (2012). Overconfidence produces underachievement: Inaccurate self evaluations undermine students’ learning and retention. Learning and Instruction, 22(4), 271–280. https://doi.org/10.1016/j.learninstruc.2011.08.003

Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive–developmental inquiry. American Psychologist, 34(10), 906–911. https://doi.org/10.1037/0003-066X.34.10.906

Fukaya, T. (2010). Factors affecting the accuracy of metacomprehension: A meta-analysis. Japanese Journal of Educational Psychology, 58(2), 236–251. https://doi.org/10.5926/jjep.58.236

Griffin, T. D., Wiley, J., & Thiede, K. W. (2008). Individual differences, rereading, and self-explanation: Concurrent processing and cue validity as constraints on metacomprehension accuracy. Memory & Cognition, 36, 93–103. https://doi.org/10.3758/MC.36.1.93

Griffin, T. D., Jee, B. D., & Wiley, J. (2009). The effects of domain knowledge on metacomprehension accuracy. Memory & Cognition, 37(7), 1001–1013. https://doi.org/10.3758/MC.37.7.1001

Griffin, T. D., Mielicki, M. K., & Wiley, J. (2019). Improving students' metacomprehension accuracy. In J. Dunlosky & K. A. Rawson (Eds.), The Cambridge handbook of cognition and education (pp. 619–646). Cambridge University Press. https://doi.org/10.1017/9781108235631.025

Griffin, T. D., Wiley, J., & Thiede, K. W. (2019). The effects of comprehension-test expectancies on metacomprehension accuracy. Journal of Experimental Psychology: Learning, Memory, and Cognition, 45(6), 1066–1092. https://doi.org/10.1037/xlm0000634

Guerrero, T. A., & Wiley, J. (2018). Effects of text availability and reasoning processes on test performance. Proceedings of the 40th Annual Conference of the Cognitive Science Society (pp. 1745–1750). Cognitive Science Society. https://cogsci.mindmodeling.org/2018/papers/0336/0336.pdf

Guerrero, T. A., Griffin, T. D., & Wiley, J. (2022). I think I was wrong: The effect of making experimental predictions on learning about theories from psychology textbook excerpts. Metacognition and Learning, 17, 337–373. https://doi.org/10.1007/s11409-021-09276-6

Guerrero, T. A., Griffin, T. D., & Wiley, J. (2023). The effect of generating examples on comprehension and metacomprehension. Journal of Experimental Psychology: Applied. Advance online publication. https://doi.org/10.1037/xap0000490

Hildenbrand, L., & Sanchez, C. A. (2022). Metacognitive accuracy across cognitive and physical task domains. Psychonomic Bulletin & Review, 29(4), 1524–1530. https://doi.org/10.3758/s13423-022-02066-4

Jaeger, A. J., & Wiley, J. (2014). Do illustrations help or harm metacomprehension accuracy? Learning and Instruction, 34, 58–73. https://doi.org/10.1016/j.learninstruc.2014.08.002

Jarosz, A. F., & Wiley, J. (2014). What are the odds? A practical guide to computing and reporting Bayes factors. The Journal of Problem Solving, 7(1), 2–9. https://doi.org/10.7771/1932-6246.1167

Kintsch, W. (1994). Text comprehension, memory, and learning. American Psychologist, 49(4), 294–303. https://doi.org/10.1037//0003-066X.49.4.294

Kwon, H., & Linderholm, T. (2014). Effects of self-perception of reading skill on absolute accuracy of metacomprehension judgements. Current Psychology, 33(1), 73–88. https://doi.org/10.1007/s12144-013-9198-x

Landauer, T. K., Foltz, P. W., & Laham, D. (1998). An introduction to latent semantic analysis. Discourse Processes, 25(2–3), 259–284. https://doi.org/10.1080/01638539809545028

Maki, R. H. (1998a). Predicting performance on text: Delayed versus immediate predictions and tests. Memory & Cognition, 26(5), 959–964. https://doi.org/10.3758/BF03201176

Maki, R. H. (1998b). Test predictions over text material. In D. J. Hacker, J. Dunlosky, & A. C. Graesser (Eds.), Metacognition in educational theory and practice (pp. 117–144). Lawrence Erlbaum.

Maki, R. H., & McGuire, M. J. (2002). Metacognition for text: Findings and implications for education. In T. J. Perfect & B. L. Schwartz (Eds.), Applied metacognition (pp. 39–67). Cambridge University Press. https://doi.org/10.1017/CBO9780511489976.004

Maki, R. H., Jonas, D., & Kallod, M. (1994). The relationship between comprehension and metacomprehension ability. Psychonomic Bulletin & Review, 1, 126–129. https://doi.org/10.3758/BF03200769

Nelson, T. O. (1984). A comparison of current measures of the accuracy of feeling-of-knowing predictions. Psychological Bulletin, 95(1), 109–133. https://doi.org/10.1037/0033-2909.95.1.109

Nelson, T. O., & Dunlosky, J. (1991). When people's judgments of learning (JOLs) are extremely accurate at predicting subsequent recall: The “delayed-JOL effect.” Psychological Science, 2(4), 267–271. https://doi.org/10.1111/j.1467-9280.1991.tb00147.x

Ozuru, Y., Kurby, C. A., & McNamara, D. S. (2012). The effect of metacomprehension judgment task on comprehension monitoring and metacognitive accuracy. Metacognition and Learning, 7(2), 113–131. https://doi.org/10.1007/s11409-012-9087-y

Prinz, A., Golke, S., & Wittwer, J. (2020). How accurately can learners discriminate their comprehension of texts? A comprehensive meta-analysis on relative metacomprehension accuracy and influencing factors. Educational Research Review, 31, 100358. https://doi.org/10.1016/j.edurev.2020.100358

Rawson, K. A., Dunlosky, J., & Thiede, K. W. (2000). The rereading effect: Metacomprehension accuracy improves across reading trials. Memory & Cognition, 28(6), 1004–1010. https://doi.org/10.3758/BF03209348

Rhodes, M. G., & Tauber, S. K. (2011). The influence of delaying judgments of learning on metacognitive accuracy: A meta-analytic review. Psychological Bulletin, 137(1), 131–148. https://doi.org/10.1037/a0021705

Shiu, L. P., & Chen, Q. S. (2013). Self and external monitoring of reading comprehension. Journal of Educational Psychology, 105, 78–88. https://doi.org/10.1037/a0029378

Thiede, K. W., & Anderson, M. C. M. (2003). Summarizing can improve metacomprehension accuracy. Contemporary Educational Psychology, 28(2), 129–160. https://doi.org/10.1016/S0361-476X(02)00011-5

Thiede, K. W., Anderson, M., & Therriault, D. (2003). Accuracy of metacognitive monitoring affects learning of texts. Journal of Educational Psychology, 95(1), 66–73. https://doi.org/10.1037/0022-0663.95.1.66

Thiede, K. W., Griffin, T. D., Wiley, J., & Redford, J. (2009). Metacognitive monitoring during and after reading. In D. J. Hacker, J. Dunlosky, & A. C. Graesser (Eds.), Handbook of metacognition in education (pp. 85–106). Routledge.

Thiede, K. W., Wiley, J., & Griffin, T. D. (2011). Test expectancy affects metacomprehension accuracy. British Journal of Educational Psychology, 81(2), 264–273. https://doi.org/10.1348/135910710X510494

Wagenmakers, E. J. (2007). A practical solution to the pervasive problems of p values. Psychonomic Bulletin & Review, 14(5), 779–804. https://doi.org/10.3758/BF03194105

Waldeyer, J., & Roelle, J. (2020). The keyword effect: A conceptual replication, effects on bias, and an optimization. Metacognition and Learning, 16, 37–56. https://doi.org/10.1007/s11409-020-09235-7

Wiley, J., Griffin, T. D., & Thiede, K. W. (2005). Putting the comprehension in metacomprehension. Journal of General Psychology, 132, 408–428. https://doi.org/10.3200/GENP.132.4.408-428

Wiley, J., Griffin, T. D., Jaeger, A. J., Jarosz, A. F., Cushen, P. J., & Thiede, K. W. (2016). Improving metacomprehension accuracy in an undergraduate course context. Journal of Experimental Psychology: Applied, 22, 393–405. https://doi.org/10.1037/xap0000096

Wiley, J., Thiede, K. W., & Griffin, T. D. (2016). Improving metacomprehension with the situation model approach. In K. Mokhtari (Ed.), Improving reading comprehension through metacognitive reading instruction for first and second language readers (pp. 93–110). Rowman & Littlefield.

Yang, C., Zhao, W., Yuan, B., Luo, L., & Shanks, D. R. (2023). Mind the gap between comprehension and metacomprehension: Meta-analysis of metacomprehension accuracy and intervention effectiveness. Review of Educational Research, 93(2), 143–194. https://doi.org/10.3102/00346543221094083

Zhao, Q., & Linderholm, T. (2011). Anchoring effects on prospective and retrospective metacomprehension judgments as a function of peer performance information. Metacognition and Learning, 6(1), 25–43. https://doi.org/10.1007/s11409-010-9065-1

Acknowledgements

The authors thank Tim George, Tricia A. Guerrero, and Marta K. Mielicki for their contributions and Lamorej Roberts for assistance in data collection.

Funding

This work was supported by the Institute of Education Sciences, US Department of Education under Grant R305A160008, and by the National Science Foundation (NSF) under DUE grant 1535299, to Thomas D. Griffin and Jennifer Wiley at the University of Illinois at Chicago. The opinions expressed are those of the authors and do not represent views of the Institute, the US Department of Education, or the National Science Foundation.

Ethics declarations

Conflicts of interest/Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Ethics approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. The study was approved by the Institutional Review Board of the University of Illinois at Chicago (Protocol # 2000-0676).

Consent to participate

Informed consent was obtained from all individual participants included in the study.

Consent for publication

Not applicable.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hildenbrand, L., Sarmento, D., Griffin, T.D. et al. Conceptual overlap among texts impedes comprehension monitoring. Psychon Bull Rev 31, 750–760 (2024). https://doi.org/10.3758/s13423-023-02349-4

DOI: https://doi.org/10.3758/s13423-023-02349-4