Abstract

Music is a powerful medium that influences our emotions and memories. Neuroscience research has demonstrated music’s ability to engage brain regions associated with emotion, reward, motivation, and autobiographical memory. While music's role in modulating emotions has been explored extensively, our study investigates whether music can alter the emotional content of memories. Building on the theory that memories can be updated upon retrieval, we tested whether introducing emotional music during memory recollection might introduce false emotional elements into the original memory trace. We developed a 3-day episodic memory task with separate encoding, recollection, and retrieval phases. Our primary hypothesis was that emotional music played during memory recollection would increase the likelihood of introducing novel emotional components into the original memory. Behavioral findings revealed two key outcomes: 1) participants exposed to music during memory recollection were more likely to incorporate novel emotional components congruent with the paired music valence, and 2) memories retrieved 1 day later exhibited a stronger emotional tone than the original memory, congruent with the valence of the music paired during the previous day’s recollection. Furthermore, fMRI results revealed altered neural engagement during story recollection with music, including the amygdala, anterior hippocampus, and inferior parietal lobule. Enhanced connectivity between the amygdala and other brain regions, including the frontal and visual cortex, was observed during recollection with music, potentially contributing to more emotionally charged story reconstructions. These findings illuminate the interplay between music, emotion, and memory, offering insights into the consequences of infusing emotional music into memory recollection processes.

Introduction

Music has a profound capacity to characterize and cue past experiences, as well as to convey and influence emotion. In everyday life, music is commonly used to express and elicit emotional responses (Juslin & Sloboda, 2001) in contexts ranging from films (Cohen, 2001) to personal life events, such as weddings and sports competitions, and even poignant moments, such as a romantic break-up (Eerola et al., 2018; Patel et al., 2023). Several psychological mechanisms have been proposed to account for music’s ability to mediate or induce emotion. For example, emotion may be induced via contagion, in which the listener automatically and internally mirrors the emotion perceived in the music (Juslin, 2019; Juslin & Laukka, 2003). Episodic memories evoked by music can also induce emotion: past literature has demonstrated that music can act as a context and retrieval cue that readily triggers associated autobiographical memories (Belfi et al., 2016; Jakubowski & Ghosh, 2021) as well as the emotions associated with those memories (Jäncke, 2008; Mado Proverbio et al., 2015).

Because of music’s influence on emotions, researchers have explored leveraging it for affect regulation in psychological therapy. For example, music therapy has been applied to increase pleasantness and reduce anxiety in populations with posttraumatic stress disorder (Landis-Shack et al., 2017), and it has been used successfully to reduce symptoms of depression (Maratos et al., 2008). Despite evidence that music modulates emotions both behaviorally and neurally (Koelsch, 2009, 2014), a less-explored question is whether emotional music can alter existing episodic memories by inducing emotions. This potential link between emotional music and memory modification is particularly intriguing in cases where exaggeration of the negative components of memories may contribute to conditions such as depression (Joormann et al., 2009). Previous studies have investigated how emotion regulation strategies used during memory encoding influence subsequent memory (Erk et al., 2010; Hayes et al., 2010). For instance, Hayes et al. (2010) demonstrated that cognitive reappraisal improves memory while reducing negative affect. Building on this line of inquiry, our study examined whether music listening could be used to modulate episodic memory by associating the memory with the expressed valence of the music.

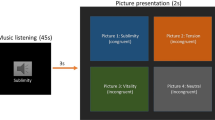

To investigate the impact of emotional music on episodic memory, we designed a multiday experimental protocol. On Day 1, participants experienced and memorized emotionally neutral scenarios. On Day 2, they underwent fMRI scanning while recalling these memories in the presence of positively or negatively valenced music or silence, with emotional lures introduced. On Day 3, participants completed episode recall and word recognition memory tests (see Methods for details). This multiday design allowed us to examine the immediate and delayed effects of music on memory, as well as the neural mechanisms underlying these processes.

Our study's hypotheses are grounded in the literature on memory reconsolidation and the possibility of memory modification during reactivation. Long-term memory formation often involves repeated learning and experiencing, which can strengthen but also change features in a memory trace; this process is sometimes referred to as reconsolidation. Studies have shown that reactivation of memory strengthens it and allows reconsolidation to improve storage of information into long-term memory (Forcato et al., 2007; Hupbach et al., 2007; Squire et al., 2015; Tay, 2018). However, evidence also suggests that memory reactivation may increase the possibility of memory modification, allowing memory updates but also false memory intrusion (Scully et al., 2017). For example, reactivation of a past memory presented with lures may both facilitate old memory and increase subsequent false memory (Gisquet-Verrier & Riccio, 2012; St. Jacques et al., 2013; Wichert et al., 2013). Building on this theoretical background, we investigate whether music during memory reactivation could modulate the occurrence of memory intrusions, particularly focusing on mood congruency between emotional “lures” (plausible, but false, memory content) and the paired music.

Our specific hypotheses are informed by the literature on the importance of mood congruency in memory encoding and integration, such as during music listening or film watching (Boltz, 2004; Cohen, 2001, 2015). Neuroimaging research highlights that music can activate mood-processing regions in the brain, including the amygdala, cingulate gyrus, and insula, potentially affecting subjective feeling and physiological arousal through emotion induction (Koelsch, 2010, p. 20, 2014; Koelsch et al., 2006). Given this evidence, we hypothesized that emotionally evocative music during memory recall would bias attention toward emotionally congruent content (i.e., lures), potentially leading to the integration of false traces into the original memory during reconsolidation, observable both behaviorally and neurally. Additionally, we predicted heightened emotion in subsequent memory recall for episodes reactivated with music.

By synthesizing existing literature from music cognition and psychology of memory, our study probes whether music during memory reactivation introduces false emotional traces. This research not only underscores music's profound impact on emotional perception but also suggests its potential utility in manipulating memory content. A comprehensive analysis of behavioral and neural data offers insights into the possibilities for enhancing everyday memory encoding and potential therapeutic applications of music. Our findings contribute to the growing body of literature on the complex interplay between music, emotion, and memory, and highlight the need for further research in this area.

Materials and methods

Participants

Forty-four healthy adults (24 females, 20 males; mean age: 19.9 years) with normal or corrected-to-normal hearing and vision participated in this experiment. Participants were recruited from the Georgia Tech School of Psychology subject pool and received either course credit or monetary compensation for their participation. Before beginning Day 1 of the study, all participants signed an informed consent form approved by the Georgia Tech Institutional Review Board. All participants also underwent safety screening to exclude magnetic resonance imaging contraindications and major neurological disorders. Five participants took part in a behavioral-only (no MRI) version of the study, resulting in a sample size of 39 for the MRI data. Two participants were later removed from behavioral analyses only (see “Behavioral Preprocessing and Stimulus Validation”), resulting in a sample size of 42 for the behavioral data. Because this study concerns emotion modulation, none of the participants were taking psychiatric medication or receiving psychological treatment.

Stimuli

The experimental tasks (described in Procedure, below) involved the encoding and subsequent recollection of several short, distinct, fictional stories written to resemble autobiographical episodic memories. Because the goal of this study was to measure how musically induced emotion may influence the recollection of originally neutral memories, we wrote the story stimuli to be as emotionally neutral as possible. Each story was built around five “keywords”: objects or descriptors that we considered central to each story and predicted would be at the core of participants’ memories. We selected these keywords from the Affective Norms for English Words (ANEW) database (Bradley & Lang, 1999). Members of the research team independently selected five words (keywords) from the ANEW database with a valence rating between 4 and 6 (ratings are on a 9-point Likert scale) and composed an emotionally neutral scenario around these five words. A total of 24 stories were originally written in this manner, and the 15 most neutral and distinct stories were selected for this experiment. This number was determined based on pilot testing to ensure that all stories could be memorized and recalled in sufficient detail for analysis. All stories were 80–120 words long and written in the first person. An example of one of these stimuli is provided below, with keywords in bold text.

To discourage participants from anticipating the nature of the experiment, five additional stories were included in the Day 1 procedures (see Procedure, below), which were either positively or negatively valenced (three negative, two positive). The keywords for these stories were chosen in the same manner described above (i.e., neutrally valenced). However, unlike the neutral stories, the scenario composed around the keywords was either positively or negatively valenced (e.g., by explicitly referencing the protagonist’s/participant’s emotional state). None of the stories (emotional or neutral) shared any keywords.
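The keyword-selection rule (ANEW valence between 4 and 6) and the constraint that no two stories share a keyword can be expressed as a small check. This is a minimal sketch: the lexicon entries and their valence values are hypothetical placeholders, not actual ANEW norms, and the function names are illustrative rather than part of any published tool.

```python
def select_neutral_keywords(lexicon, low=4.0, high=6.0):
    """Return words whose mean valence (9-point scale) lies in [low, high]."""
    return [word for word, valence in lexicon.items() if low <= valence <= high]


def keywords_are_disjoint(stories):
    """True if no keyword appears in more than one story.

    stories: mapping of story id -> list of that story's keywords.
    """
    seen = set()
    for keywords in stories.values():
        for word in set(keywords):  # ignore accidental duplicates within a story
            if word in seen:
                return False
            seen.add(word)
    return True


# Hypothetical placeholder entries, NOT real ANEW ratings.
example_lexicon = {"word_a": 3.2, "word_b": 4.0, "word_c": 5.1, "word_d": 6.0, "word_e": 7.4}
print(select_neutral_keywords(example_lexicon))  # ['word_b', 'word_c', 'word_d']
```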

Music excerpts presented during the experiment were taken from Set 2 of the “Film Soundtracks” dataset (Eerola & Vuoskoski, 2011). Film music is frequently used in studies of musically induced emotion and memory (Boltz, 2004; Cohen, 2001, 2015; Eschrich et al., 2008; Marshall & Cohen, 1988; Tan et al., 2007). It is composed to function as a backdrop to narrative with strong emotional cues (Cohen, 2001; Eerola & Vuoskoski, 2011), which fits the goals and methods of this study. The “Film Soundtracks” dataset provides a large set of validated, instrumental, unfamiliar soundtrack excerpts with affective ratings. Using unfamiliar stimuli was necessary to avoid participants having preexisting associations with the music (i.e., personal memories, nostalgic emotions) that could influence their behavior. Using instrumental music avoids the possibility of lyrical messages, rather than the music itself, influencing participants’ behavior and memory during the task.

We carefully selected a subset of excerpts (Table 1) with more extreme (either positive or negative valence) affective ratings and avoided those conveying mixed emotions. Although we intended the musical stimuli in the two target groups to differ significantly only in valence, it is important to note that they also differed in tension and, to a lesser degree, in energy. Despite our attempts to balance these dimensions during stimulus selection, valence and tension ratings are correlated and therefore difficult to fully decouple (Eerola & Vuoskoski, 2011). This limitation is considered further in the Discussion. We used the audio editing software Audacity (version 3.3.3) to normalize the loudness of all excerpts and to either truncate or loop the provided clips to a duration of 30 s with a 1-s fade-out. On Day 2 of the experiment, music stimuli were paired with the 15 neutral story stimuli described above (see Procedure for more information on the Day 2 task). Pairings were made pseudorandomly for each participant, such that the same story was not always associated with the same music across participants.
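The excerpt preparation (loudness normalization, truncating or looping to 30 s, and a 1-s fade-out) was done in Audacity; the sketch below only illustrates the same three operations on a mono list of float samples. It is an approximation under stated assumptions: RMS scaling to a hypothetical target level stands in for Audacity’s loudness normalization, and the fade is assumed to be linear.

```python
import math


def normalize_rms(samples, target_rms=0.1):
    """Scale a clip so its RMS level equals target_rms (stand-in for loudness normalization)."""
    if not samples:
        raise ValueError("empty clip")
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    if rms == 0.0:
        return list(samples)  # silent clip: nothing to scale
    scale = target_rms / rms
    return [x * scale for x in samples]


def fit_duration(samples, sample_rate, target_s=30.0):
    """Truncate a long clip, or loop a short one from its start, to exactly target_s seconds."""
    if not samples:
        raise ValueError("empty clip")
    n_target = int(round(target_s * sample_rate))
    out = []
    while len(out) < n_target:
        out.extend(samples)
    return out[:n_target]


def apply_fade_out(samples, sample_rate, fade_s=1.0):
    """Linearly ramp the last fade_s seconds down so the final sample is silent."""
    out = list(samples)
    n_fade = min(len(out), int(round(fade_s * sample_rate)))
    start = len(out) - n_fade
    for i in range(n_fade):
        out[start + i] *= 1.0 - (i + 1) / n_fade
    return out


def prepare_excerpt(samples, sample_rate, target_s=30.0, fade_s=1.0):
    """Normalize, then fit to target_s seconds, then fade out."""
    clip = fit_duration(normalize_rms(samples), sample_rate, target_s)
    return apply_fade_out(clip, sample_rate, fade_s)
```

Normalizing before looping keeps every repetition of a short clip at the same level; the fade is applied last so it always falls on the final second of the 30-s excerpt.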

Procedure

The experiment took place over three consecutive days (Fig. 1). The multiday structure of the experimental protocol was adapted from previous memory reconsolidation studies (Forcato et al., 2007; Hupbach et al., 2007; Tay, 2018) and designed such that memories encoded during each task could then be consolidated during overnight sleep (Rasch & Born, 2013). Given the imagination and internalization demands of the task, all participants completed the Vividness of Visual Imagery Questionnaire (VVIQ), a commonly used and validated measure of mental imagery ability (Marks, 1973), before beginning the experiment. Furthermore, because this study measures emotional changes within memories, participants’ mood state was assessed with the Positive and Negative Affect Schedule (PANAS), a validated self-report mood questionnaire (Watson et al., 1988), at the start of both Day 1 and Day 2 to check for outliers in baseline mood.

Fig. 1 Experiment protocol over three consecutive days. Note. The combined task time over the three days was approximately 2 h. Participants experience and memorize 20 (15 neutral, 5 emotional) short fictional stories on Day 1 (left, top). Measures of their memory and subjective experience of each story are also collected (left, bottom). On Day 2, they complete an interactive story recollection task for the 15 neutral stories, paired with positive-valence music, negative-valence music, or silence (middle), while functional MRI data are recorded. On Day 3, participants complete cued recall (right, top) and forced-choice word recognition (right, bottom) tests for the 15 neutral stories presented on Day 1

On Day 1, participants experienced and memorized the 20 short, fictional stories (15 neutral-valence, 5 emotional; see Stimuli, above). Each story was presented one at a time as simultaneous text and audio narration, with a 10-s break between stories (Fig. 1, top left). Participants were instructed to imagine themselves in each story as if they were experiencing the described scenario. Each story was presented twice in random order. Participants then completed an untimed cued recall task in which they typed out each scenario as they had imagined it, in as much detail as possible. Participants also rated their felt valence, arousal, and vividness for each scenario on a 9-point Likert scale (Fig. 1, bottom left). In all, the Day 1 task resulted in the formation of true (laboratory-based) memories with a degree of internalization and autobiographical attachment in the participant (akin to memories formed from a movie or a book). The Day 1 task took approximately 1 h. Stimulus presentation and response recording were coded in PsychoPy3.

On Day 2, participants completed a word selection activity inside the MRI scanner (Fig. 1, middle). During each trial, ten words were displayed on the screen in randomized positions. Four of these words were keywords from one of the Day 1 stories. The other six words were emotional “lures”: three positively valenced words and three negatively valenced words. We selected lure words that were semantically relevant to each story but did not appear in that story on Day 1, and no lure words were repeated across stories. Participants had 30 s to read the ten words on the screen, determine (from the keywords) which story was being referenced, recall that story, and then select all the words that they felt best fit their experience of that story. Participants made their word selections with an MRI-compatible hand-held controller. Trials were separated by a 10-s rest period. Because this task referenced only the 15 neutral-valence stories from Day 1, each run consisted of 15 trials (one per story) in random order.
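The Day 2 trial structure (4 keywords plus 3 positive and 3 negative lures in shuffled positions, a 30-s response window, and 10-s rest) can be sketched as follows. This is an illustrative reconstruction, not the authors’ PsychoPy3 code: the word strings are hypothetical placeholders, which four of a story’s five keywords are shown is left to the caller, and the onset schedule assumes a run begins with a trial at t = 0.

```python
import random

TRIAL_S = 30.0  # word display / recollection window per trial (s)
REST_S = 10.0   # rest period between trials (s)


def build_trial(keywords, positive_lures, negative_lures, rng):
    """Return the 10 on-screen words, tagged with their category, in shuffled order."""
    if (len(keywords), len(positive_lures), len(negative_lures)) != (4, 3, 3):
        raise ValueError("expected 4 keywords, 3 positive lures, 3 negative lures")
    words = [(w, "keyword") for w in keywords]
    words += [(w, "positive_lure") for w in positive_lures]
    words += [(w, "negative_lure") for w in negative_lures]
    rng.shuffle(words)  # randomize screen positions
    return words


def trial_onsets(n_trials=15):
    """Onset (s) of each trial, assuming the run starts with a trial at t = 0."""
    return [k * (TRIAL_S + REST_S) for k in range(n_trials)]


# Hypothetical placeholder words for one story.
trial = build_trial(["k1", "k2", "k3", "k4"], ["p1", "p2", "p3"], ["n1", "n2", "n3"],
                    random.Random(0))
```

Under these assumptions a 15-trial run places its last trial onset at 560 s.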

Furthermore, the trials were pseudo-randomly and equally divided among three within-subject conditions: positively valenced music, negatively valenced music, or silence. In trials of either music condition, music played while participants completed the word selection task, whereas trials in the silence condition had no music. We chose silence as the control condition, rather than an auditory stimulus (e.g., noise, a constant tone, or neutral music), to reduce the possibility that the control condition itself would induce a subjective emotional experience. Participants were told to attend to the music they heard; however, they were not instructed how to use the music during the task. Music was pseudo-randomly paired with the stories before the start of the task (i.e., music-story pairings differed across participants but remained fixed across each participant’s two repeated runs of the task). Total scanning and task time was approximately 30 min. Stimulus presentation and response recording were coded in PsychoPy3.
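The assignment scheme described above (15 stories split 5/5/5 across conditions, different for each participant but fixed across that participant’s runs) can be sketched with a per-participant seeded generator. This is a minimal sketch under assumptions: the seeding strategy, the excerpt IDs, and the rule that no excerpt is reused within a condition are illustrative choices, not details reported in the study.

```python
import random

CONDITIONS = ("positive", "negative", "silence")


def assign_conditions(story_ids, participant_seed):
    """Split stories equally and pseudo-randomly across the three conditions.

    Seeding per participant makes the assignment differ between participants
    while staying identical for that participant's repeated runs.
    """
    stories = list(story_ids)
    if len(stories) % len(CONDITIONS) != 0:
        raise ValueError("story count must be divisible by the number of conditions")
    rng = random.Random(participant_seed)
    rng.shuffle(stories)
    per_condition = len(stories) // len(CONDITIONS)
    return {story: CONDITIONS[i // per_condition] for i, story in enumerate(stories)}


def pair_music(assignment, pools, seed):
    """Give each music-condition story an excerpt from that condition's pool.

    pools: mapping of condition -> list of excerpt ids (hypothetical ids here).
    Silence stories receive no excerpt.
    """
    rng = random.Random(seed)
    pairing = {}
    for condition, pool in pools.items():
        stories = sorted(s for s, c in assignment.items() if c == condition)
        # Assumption: no excerpt is reused for two stories in the same condition.
        pairing.update(zip(stories, rng.sample(list(pool), len(stories))))
    return pairing


# Example: one participant, hypothetical excerpt ids.
assignment = assign_conditions(range(15), participant_seed=7)
pools = {"positive": [f"P{i}" for i in range(1, 7)], "negative": [f"N{i}" for i in range(1, 7)]}
pairing = pair_music(assignment, pools, seed=7)
```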

On Day 3, participants completed two memory tests for the 15 neutral-valence stories (Fig. 1, right). The first was an untimed cued recall task identical to the one they completed on Day 1. The second was a forced-choice word recognition task using a subset of the keywords and lures. One at a time and in random order, participants were shown these words from the 15 stories and asked whether each word had appeared on Day 1. To increase difficulty, we also included new words that had appeared on neither Day 1 nor Day 2. All responses were recorded using Qualtrics, and the task took approximately 30 min.

Behavioral preprocessing and stimulus validation

We reviewed participants’ recalled episodes following Day 1 to ensure each story had been memorized well enough for subsequent memory effects to be measurable (i.e., if participants learned nothing on Day 1, we would not be able to detect meaningful memory alterations as a function of reconsolidation). The percent difference in word count was calculated between each original story and its Day 1 recall. To emphasize relative change within an individual over inter-individual variability, percent differences across all Day 1 trials were z-scored. The lowest 5% of trials were removed (corresponding to recalls with fewer than 29% as many words as the original story); these stories were unlikely to reveal true versus false memory discriminability in later stages of the study. Upon reviewing participants’ responses, it also became clear that two of the neutral stories were repeatedly confused and intertwined with each other (words from one were often misattributed to the other). Because we intended each story to simulate a unique autobiographical episode (i.e., for trials to be independent of each other), all trials for both stories across all participants were removed before subsequent analyses.
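The word-count screening can be sketched as below: compute each recall’s percent difference from the original story length (a recall with 29% as many words as the original gives −71%), z-score, and drop the lowest 5% of trials. This is an interpretation, not the authors’ script: we assume z-scoring is done within each participant (matching the stated aim of emphasizing within-individual change) before the pooled 5% cut, and the trial dictionary fields are hypothetical.

```python
import statistics
from collections import defaultdict


def percent_difference(original_wc, recall_wc):
    """Percent change in word count from the original story to the recall."""
    return 100.0 * (recall_wc - original_wc) / original_wc


def zscore(values):
    """Population z-scores; all zeros if the values do not vary."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)
    return [0.0] * len(values) if sd == 0 else [(v - mean) / sd for v in values]


def exclude_low_recall(trials, drop_fraction=0.05):
    """Drop the lowest drop_fraction of trials by within-participant z-score.

    trials: list of dicts with 'participant', 'original_wc', 'recall_wc' keys
    (hypothetical field names).
    """
    by_participant = defaultdict(list)
    for trial in trials:
        by_participant[trial["participant"]].append(trial)
    scored = []
    for p_trials in by_participant.values():
        diffs = [percent_difference(t["original_wc"], t["recall_wc"]) for t in p_trials]
        scored.extend(zip(zscore(diffs), p_trials))
    scored.sort(key=lambda pair: pair[0])  # lowest (most truncated) recalls first
    n_drop = int(drop_fraction * len(scored))
    return [trial for _, trial in scored[n_drop:]]
```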

We also reviewed participants’ subjective valence, arousal, and vividness ratings for each story after Day 1 to ensure that participants used these rating scales as instructed and that the 15 neutral-valence stories were perceived as less emotional than the five added emotional stories. Two participants were removed from subsequent analyses because their valence and arousal ratings showed almost no variability across trials in any condition (resulting in a final N = 42). VVIQ and PANAS scores were also reviewed at this point, and no participants were outliers in visual imagery ability or reported mood (no additional participants were removed). Ratings for each type of story stimulus after within-subject normalization (z-score) are shown in Table 2 below.

Behavioral analysis

During the in-scanner word selection task on Day 2, we examined participants' word selections while recalling their episodes. We calculated the average frequency of word selections across different word categories (neutral keywords, positive lures, and negative lures) in each music condition (positive valence music, negative valence music, and silence). We utilized repeated-measures ANOVA treating subjects as a random effect and music condition and word valence as fixed effects to identify differences in word selection frequency among conditions.
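The per-participant cell values entering this repeated-measures ANOVA can be computed as below. As an assumption, we take “frequency” to mean the proportion of displayed words of a given category that a participant selected within a music condition; the record layout is hypothetical, and the ANOVA itself would be run on these cell values with a statistics package.

```python
from collections import defaultdict


def selection_frequencies(records):
    """Proportion of displayed words selected, per participant x condition x category.

    records: iterable of (participant, condition, category, selected), one per
    displayed word; category is 'keyword', 'positive_lure', or 'negative_lure'.
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [n_selected, n_shown]
    for participant, condition, category, selected in records:
        cell = counts[(participant, condition, category)]
        cell[0] += int(selected)
        cell[1] += 1
    return {key: n_sel / n_shown for key, (n_sel, n_shown) in counts.items()}


def condition_means(freqs):
    """Average the per-participant frequencies for each condition x category cell."""
    by_cell = defaultdict(list)
    for (_participant, condition, category), freq in freqs.items():
        by_cell[(condition, category)].append(freq)
    return {cell: sum(vals) / len(vals) for cell, vals in by_cell.items()}
```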

For the story recall test on Day 3, following a similar method to the Day 1 recall data, we reviewed participants’ recalled episodes to ensure each story had been sufficiently recalled and that there would be enough text to subsequently analyze. The same word-count threshold was applied, resulting in approximately 2.5% of trials removed. An initial count of lure words used in participants’ Day 3 recall revealed that the average number of lure words included verbatim was close to zero for positive-valence music (mean 0.121; SD 0.407), negative-valence music (mean 0.213; SD 0.424), and silence (mean 0.192; SD 0.436) trials. We therefore decided to analyze the change in recalled episode affect by manually annotating each paired Day 1 and Day 3 response for all emotionally charged words or phrases (not just experimenter-defined lure words). In other words, we annotated the Day 1 to Day 3 change in valence for each story, for each participant. Various algorithmic alternatives to manual scoring were initially explored; however, we found that none were able to effectively capture the nuanced changes in sentiment.

Manual annotation of recalled episodes was accomplished by tagging and counting positively and negatively valenced words or phrases separately. Each negatively valenced word or phrase received −1 point, and each positively valenced word or phrase received +1 point. An additional point (negative or positive, respectively) was given if the word or phrase included an emphasizing modifier (“very,” “really,” “extremely,” etc.). Each trial’s final score was the sum of its positive and negative word/phrase scores. This tagging and scoring process was completed for each Day 1 and Day 3 recall trial by two annotators blinded to the study conditions. Trials were first split evenly between the two annotators for initial scoring and then cross-checked by the other annotator for agreement. For analysis, we treated participants’ Day 1 recall scores for each story as a baseline to assess the Day 1 to Day 3 change in valence. We used a one-way repeated-measures ANOVA to test whether this change in valence differed by music condition, treating subjects as a random effect in the model. Following a significant omnibus effect, we used Tukey HSD post-hoc pairwise comparisons to identify the source of the differences.
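The scoring rule and the omnibus test can be made concrete with a short sketch. The annotation format (one `(polarity, emphasized)` pair per tagged word or phrase) is a hypothetical encoding of the manual tags, and the ANOVA below is the classic one-way within-subject computation on per-subject condition means; the study’s own model may have been fit differently in statistical software. The p-value would come from the F distribution, e.g., `scipy.stats.f.sf(F, df_effect, df_error)`.

```python
def valence_score(annotations):
    """Sum of tagged items: +1/-1 each, doubled when an emphasizing modifier is present.

    annotations: iterable of (polarity, emphasized), polarity in {+1, -1}.
    """
    score = 0
    for polarity, emphasized in annotations:
        if polarity not in (1, -1):
            raise ValueError("polarity must be +1 or -1")
        score += polarity * (2 if emphasized else 1)
    return score


def valence_change(day1, day3):
    """Day 1 to Day 3 change, using Day 1 recall as the baseline."""
    return valence_score(day3) - valence_score(day1)


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: one row per subject, one value per condition (complete cases), e.g.
    each subject's mean valence change per music condition.
    Returns (F, df_effect, df_error, generalized eta squared).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    eta_g = ss_cond / (ss_cond + ss_subj + ss_error)
    return f_value, df_cond, df_error, eta_g
```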

Finally, we analyzed the results of the word recognition test. We categorized each of participants’ answers as “correct” (a “hit” for a correctly identified original keyword or a “correct rejection” for a correctly rejected lure word) or “incorrect” (a “miss” for an unrecognized original keyword or a “false alarm” for a lure word mistaken for an original keyword). We calculated recognition accuracy rates for each word type (keyword, positive lure, negative lure) and for each story, with each story assigned to a particular music condition (positive valence, negative valence, silence); for example, if a subject correctly recognized 2 of 3 neutral keywords for a particular story, the accuracy rate would be 66.7%. Repeated-measures ANOVA was again employed to assess the impact of the two main factors (music valence condition and word valence) on final recognition performance.
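The response coding and per-story accuracy described above can be sketched as follows. The tuple layout of a response and the word-type labels are hypothetical; any non-keyword type (lure or new word) is treated as a word that should be rejected.

```python
from collections import defaultdict


def classify_response(word_type, said_old):
    """Signal-detection label for one recognition response.

    word_type: 'keyword' (studied on Day 1) or a lure/new-word type.
    said_old: True if the participant judged the word as having appeared on Day 1.
    """
    if word_type == "keyword":
        return "hit" if said_old else "miss"
    return "false_alarm" if said_old else "correct_rejection"


def accuracy_by_story_and_type(responses):
    """Proportion correct per (story, word_type).

    responses: iterable of (story, word_type, said_old).
    """
    tallies = defaultdict(lambda: [0, 0])  # (story, word_type) -> [n_correct, n_total]
    for story, word_type, said_old in responses:
        outcome = classify_response(word_type, said_old)
        tally = tallies[(story, word_type)]
        tally[0] += int(outcome in ("hit", "correct_rejection"))
        tally[1] += 1
    return {key: correct / total for key, (correct, total) in tallies.items()}
```

With two of three keywords recognized for a story, the keyword accuracy for that story is 2/3, matching the 66.7% example in the text.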

fMRI acquisition

Brain scans were collected using a 3 T Siemens Prisma scanner with a 32-channel head coil at the GSU/GT Center for Advanced Brain Imaging. High-resolution T1-weighted structural images were obtained using a magnetization-prepared rapid gradient echo (MPRAGE) sequence (TR = 2500 ms; TE = 2.22 ms; FOV = 240 × 256 mm; voxel size = 0.8-mm isotropic; flip angle = 7°). The functional T2*-weighted data and localizer data were collected using echo planar imaging (EPI) sequences (TR = 750 ms; TE = 32 ms; FOV = 1760 × 1760 mm; voxel size = 2.5-mm isotropic; flip angle = 52°). The T2*-weighted images provided whole-brain coverage and were acquired with slices oriented parallel to the long axis of the hippocampus. All participants wore foam earplugs and a noise-canceling MRI-compatible headset, which allowed them to talk to the experimenter between runs and to hear the music better during the task.

fMRI preprocessing

MRI data preprocessing was performed in the Analysis of Functional NeuroImages software (AFNI: http://afni.nimh.nih.gov/afni/) (Cox, 1996, p. 199). The preprocessing steps followed AFNI’s standardized pipeline (afni_proc.py). These steps included 1) despiking (3dDespike) to reduce extreme values caused by motion or other factors; 2) slice timing correction (3dTshift) to correct for differences in slice acquisition time; 3) volume registration and alignment (3dvolreg) to the volume with the minimal outlier fraction; and 4) scaling of each time series to percent signal change. We used a motion censoring threshold of 0.3 mm to remove outlier TRs. All EPI images were warped to MNI template space using nonlinear transformations computed with AFNI’s @SSwarper. We smoothed all BOLD images with a 4-mm full-width at half-maximum (FWHM) Gaussian kernel.
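The 0.3-mm censoring step can be illustrated as follows. This is our understanding of the approach afni_proc.py takes with a motion censor limit, not AFNI code: the Euclidean norm of the TR-to-TR change in the six motion parameters (rotations and translations combined directly) is compared with the limit, and by default the preceding TR is censored as well. The `censor_prev` option mirrors that default and should be treated as an assumption about this study’s settings.

```python
import math


def motion_enorm(params):
    """Euclidean norm of the backward difference of per-TR motion parameters.

    params: list of 6-tuples (3 rotations, 3 translations) per TR.
    The first TR has no predecessor, so its value is 0.
    """
    enorm = [0.0]
    for prev, cur in zip(params, params[1:]):
        enorm.append(math.sqrt(sum((c - p) ** 2 for c, p in zip(cur, prev))))
    return enorm


def censor_mask(params, limit=0.3, censor_prev=True):
    """Return 1 for TRs kept and 0 for TRs censored."""
    enorm = motion_enorm(params)
    keep = [1] * len(enorm)
    for t, value in enumerate(enorm):
        if value > limit:
            keep[t] = 0
            if censor_prev and t > 0:
                keep[t - 1] = 0  # also drop the TR before the jump
    return keep
```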

fMRI data analysis

We ran a whole-brain voxel-wise general linear model (3dDeconvolve) for each subject to detect BOLD activity patterns during memory recollection under the different music conditions. In addition to six nuisance regressors accounting for head motion (translational and rotational) and three accounting for scanner linear drift, the model included three task-related regressors for the music conditions (control/silence, negative music, positive music). For group-level analysis, we used a linear mixed-effects model (3dLME) to compare neural responses during memory recollection among the three music conditions, treating subject as a random effect to account for individual differences in music, emotion, or memory processing. To correct for multiple comparisons, we used a nonparametric implementation of AFNI’s Monte Carlo simulation program (3dClustSim), with smoothness estimates derived from first-level model residuals (3dFWHMx), to estimate the probability of false-positive clusters at a voxel-level threshold of p < 0.001. From this we derived a cluster-size cutoff corresponding to a cluster-level threshold of p < 0.005, using 3dClustSim’s second-nearest-neighbor clustering option (voxels are connected if their faces or edges touch) and yielding a minimum cluster size of 16 voxels. See Tables 3 and 4 for clusters showing different neural responses in pairwise comparisons between task conditions.
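Once 3dClustSim supplies the cutoff, applying it amounts to grouping suprathreshold voxels into clusters under second-nearest-neighbor connectivity (shared faces or edges, 18 neighbors; corner-only contact does not connect) and discarding clusters smaller than the cutoff. The sketch below illustrates only that final step on a set of voxel indices assumed to have already passed the voxel-level p < 0.001 threshold; it does not reproduce the 3dClustSim simulation.

```python
from collections import deque
from itertools import product

# NN2 neighborhood: voxels sharing a face (6) or an edge (12), 18 in total.
NN2_OFFSETS = [d for d in product((-1, 0, 1), repeat=3) if 0 < sum(abs(c) for c in d) <= 2]


def find_clusters(suprathreshold):
    """Group a set of (i, j, k) voxel indices into NN2-connected clusters."""
    remaining = set(suprathreshold)
    clusters = []
    while remaining:
        seed = remaining.pop()
        cluster, queue = [seed], deque([seed])
        while queue:  # breadth-first flood fill
            i, j, k = queue.popleft()
            for di, dj, dk in NN2_OFFSETS:
                nb = (i + di, j + dj, k + dk)
                if nb in remaining:
                    remaining.remove(nb)
                    cluster.append(nb)
                    queue.append(nb)
        clusters.append(cluster)
    return clusters


def surviving_clusters(suprathreshold, min_size=16):
    """Keep only clusters with at least min_size voxels."""
    return [c for c in find_clusters(suprathreshold) if len(c) >= min_size]
```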

One research question of particular interest was whether the amygdala, an area important for encoding emotional episodic memory, shows distinct activity and modulated connectivity with other brain areas during memory recollection with background music compared with silence. To address this question, we used seed-based generalized psychophysiological interaction (gPPI) analysis (McLaren et al., 2012) to test context-dependent functional connectivity between the amygdala and the rest of the brain. We created a 5-mm sphere around the peak amygdala coordinate from the univariate results (MNI: −15, 14.2, −14.5). We extracted the average time series of the seed using 3dmaskdump and detrended it using 3dDetrend. The interaction (PPI) terms were then created by multiplying the main task regressors by the seed time series. We then used 3dDeconvolve again, as in the univariate analysis, to identify brain regions whose functional connectivity with the amygdala was modulated by music condition (silence, positive, or negative), as captured by the interaction regressors over and above the main task regressors. At the group level, we again used 3dLME to build a linear mixed-effects model, treating subjects as a random effect and the gPPI terms as the main factor. We applied the same nonparametric multiple comparison correction as above; however, given the exploratory and descriptive nature of the resulting connectivity difference profiles, we used a more liberal voxel-level threshold of p < 0.005 and a cluster-level threshold of p < 0.005 (retaining clusters larger than 28 voxels) to identify clusters showing music-condition-dependent functional connectivity with the amygdala.
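The seed and interaction-term steps can be sketched in plain Python: select voxels within 5 mm of the peak, average their time series, remove a linear trend, and multiply by each condition regressor. This follows the text as written (direct multiplication of task regressors and the detrended seed); AFNI’s commonly recommended gPPI workflow additionally deconvolves the seed to an estimated neural signal before forming interactions, which is omitted here. Function names and data layouts are hypothetical.

```python
import math


def sphere_voxels(voxel_coords_mm, center_mm, radius_mm=5.0):
    """Indices of voxels whose centers lie within radius_mm of center_mm."""
    return [i for i, xyz in enumerate(voxel_coords_mm) if math.dist(xyz, center_mm) <= radius_mm]


def seed_timeseries(data, voxel_indices):
    """Mean time series across the selected voxels; data[v][t] is voxel v at TR t."""
    n_t = len(data[voxel_indices[0]])
    return [sum(data[v][t] for v in voxel_indices) / len(voxel_indices) for t in range(n_t)]


def detrend_linear(ts):
    """Remove the least-squares linear trend from a time series (needs >= 2 points)."""
    n = len(ts)
    t_mean, y_mean = (n - 1) / 2, sum(ts) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(ts)) / sxx
    return [y - (y_mean + slope * (t - t_mean)) for t, y in enumerate(ts)]


def ppi_terms(seed_ts, task_regressors):
    """Interaction regressors: each condition regressor times the seed time series."""
    return {name: [s * r for s, r in zip(seed_ts, reg)] for name, reg in task_regressors.items()}


# Usage with the reported peak (MNI mm): sphere_voxels(coords, (-15.0, 14.2, -14.5))
```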

Results

Behavioral results

We predicted that emotional lure words would be selected more frequently during congruent emotional music conditions (e.g., positive words would be more likely to be chosen if the background music is also positive). During the in-scanner task on Day 2, across participants the data revealed a consistent pattern in word selection behavior, favoring emotional lure words that aligned with the valence of the paired music for the trial. Figure 2 showcases this in qualitative terms using a neutral-valence story mentioned in the Methods section as an example. Due to random assignment, some participants experienced this episode with positive-valence music (Fig. 2, left), others with negative-valence music (Fig. 2, right), and others heard no music (Fig. 2, middle). Across all groups, participants frequently selected the original keywords of the story—"questions," "short," "hotel," and "map," indicating successful recall of the corresponding Day 1 episode during the trial.

Fig. 2 Example of word selection behavior during Day 2 recollection. Note. Relative word selection frequencies for keywords and emotional lures for one of the neutral episodes (see example in Methods, above) during the Day 2 task. The figure displays participants’ average responses, sorted by the paired music condition during the task (left: positively valenced music, middle: silence, right: negatively valenced music). This figure provides a qualitative, illustrative example of Day 2 behavior; it is not intended as quantitative evidence (which is instead captured by our reported inferential statistics and other plots)

As anticipated, participants also tended to select emotionally congruent lure words based on the music's valence: "famous" and "attractive" with positive music, and "creepy" and "stalk" with negative music. This suggests that the music influenced participants' behavior and interaction with the episode during the task. Interestingly, both groups frequently selected the positive lure word "friendly" for this episode. While the positive music group slightly favored "friendly" more than the negative music group, the latter's notable selection of this positive lure word implies a potential nonmusical (possibly semantic) association between the word and the original story, influencing their behavior (more in Discussion).

In quantitative terms, a repeated-measures ANOVA revealed a significant interaction between word valence and music condition (F(2) = 3.433, p = 0.033, ηG² = 0.021). Focusing on comparisons between music conditions, Fig. 3 shows that fewer negative lures were selected during positive music trials than during negative music or silence trials (post-hoc pairwise comparisons: positive < negative: p = 0.019, Cohen's d = 0.374; positive < silence: p = 0.016, Cohen's d = 0.248). The figure also shows the expected pattern of more positive lures selected during positive music trials than during negative music or silence trials, but this difference was not statistically significant (p = 0.237). The repeated-measures ANOVA also revealed a significant main effect of word valence (F(1) = 57.12, p < 0.001, ηG² = 0.176): participants tended to select more positive than negative lure words across all music conditions. This observation is discussed further in the Discussion. Finally, an ANOVA on the correct selection of neutral keywords on Day 2 revealed no difference between music conditions (F(2) = 0.217, p = 0.805).

Fig. 3. In-scanner recollection and word selection. Note. Overall word selection frequencies for neutral keywords (left), negative lure words (middle), and positive lure words (right) during the Day 2 task. Each panel presents the average frequency with which each type of word was selected under each music condition (red: negative valence music, green: positive valence music, gray: silence). For example, the red bar in the middle panel represents the frequency (~27%) of negative lure words selected during episode recollection with paired negative music in the background.
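The selection frequencies plotted in Fig. 3 can be illustrated with a minimal Python sketch. It assumes a hypothetical trial-level record format (participant, Day 2 music condition, word type, whether the word was selected), computes for each participant the proportion of presented words of each type that were selected under each music condition, and then averages those proportions across participants. The record layout, the function name, and the example values are illustrative assumptions, not the authors' analysis code or data, and the repeated-measures ANOVA itself is not shown.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical Day 2 trial records (illustrative only, not study data):
# (participant, music_condition, word_type, selected)
# word_type: "neutral" (original keyword), "positive" or "negative" (lure).
example = [
    ("p1", "negative", "negative", True),
    ("p1", "negative", "negative", False),
    ("p2", "negative", "negative", True),
    ("p2", "negative", "negative", True),
    ("p1", "positive", "negative", False),
    ("p2", "positive", "negative", False),
]


def selection_frequencies(records):
    """Return {(music_condition, word_type): mean proportion selected},
    averaging per-participant proportions across participants."""
    # (participant, music, word_type) -> [number selected, number presented]
    counts = defaultdict(lambda: [0, 0])
    for participant, music, word_type, selected in records:
        cell = counts[(participant, music, word_type)]
        cell[0] += int(selected)
        cell[1] += 1

    # (music, word_type) -> list of per-participant selection proportions
    per_participant = defaultdict(list)
    for (participant, music, word_type), (n_selected, n_presented) in counts.items():
        per_participant[(music, word_type)].append(n_selected / n_presented)

    return {key: mean(props) for key, props in per_participant.items()}


freqs = selection_frequencies(example)
print(freqs[("negative", "negative")])  # p1: 1/2, p2: 2/2 -> 0.75
print(freqs[("positive", "negative")])  # both participants 0/1 -> 0.0
```

Averaging within participants first gives each participant equal weight, which matches the per-participant cell means that a repeated-measures ANOVA takes as input.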

On Day 3, we hypothesized that recalled episodes would show greater emotional modulation for trials in which music was played on Day 2 than for trials with silence. More specifically, we predicted that when recalling episodes on Day 3, participants would incorporate more emotional components into the originally neutral stories if those stories had been revisited with emotional music on Day 2. We further hypothesized that the valence of this emotional modulation would be congruent with the valence of the paired music. Importantly for our theoretical framework, participants' final episode recall on Day 3 included additional emotional words or phrases that aligned with the valence of the music paired on Day 2. Using participants' Day 1 episode recall as the baseline, we computed an overall change in valence for each story (as outlined in "Behavioral Analysis" in the Materials and Methods section). Figure 4a illustrates the average change in recalled episode valence for each music condition: larger positive values denote a shift toward positive valence, and larger negative values denote a shift toward negative valence. A one-way repeated-measures ANOVA revealed a significant effect of Day 2 music condition on the Day 3 change in valence (F(2) = 8.927, p < 0.001, ηG² = 0.074). Tukey HSD post-hoc pairwise comparisons revealed a significant difference in the change in recall valence between the positive and negative music conditions (p < 0.001, estimated mean difference = 0.968, 95% CI [0.427, 1.509]) and a marginal difference between the silence and positive music conditions (p = 0.051, estimated mean difference = 0.555, 95% CI [0, 1.109]).
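The per-condition change in recall valence summarized in Fig. 4a can be sketched as follows. This minimal Python example assumes that each Day 1 and Day 3 recall has already been assigned a numeric valence score (the actual scoring procedure is the one described under "Behavioral Analysis" and is not reproduced here), takes Day 3 minus Day 1 for each story, averages within each participant and Day 2 music condition, and then averages across participants. The field names, function name, and example values are hypothetical, and the ANOVA and Tukey HSD steps are not shown.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical story-level records (illustrative only, not study data). The
# "day1" and "day3" fields are assumed to be precomputed valence scores for
# each recall; the scoring scheme itself is an assumption of this sketch.
example = [
    {"participant": "p1", "music": "positive", "day1": 0.5, "day3": 1.5},
    {"participant": "p1", "music": "positive", "day1": 0.0, "day3": 0.5},
    {"participant": "p2", "music": "positive", "day1": 0.25, "day3": 0.5},
    {"participant": "p1", "music": "negative", "day1": 0.5, "day3": -0.5},
    {"participant": "p2", "music": "negative", "day1": 0.0, "day3": -0.5},
]


def valence_change_by_condition(stories):
    """Mean (Day 3 - Day 1) valence change for each Day 2 music condition.

    Positive values: Day 3 recall more positive than the Day 1 baseline.
    Negative values: Day 3 recall more negative than the Day 1 baseline."""
    # (participant, music) -> story-level changes
    per_cell = defaultdict(list)
    for story in stories:
        change = story["day3"] - story["day1"]
        per_cell[(story["participant"], story["music"])].append(change)

    # music -> per-participant mean changes
    by_condition = defaultdict(list)
    for (participant, music), changes in per_cell.items():
        by_condition[music].append(mean(changes))

    return {music: mean(values) for music, values in by_condition.items()}


changes = valence_change_by_condition(example)
print(changes["positive"])  # p1: mean(1.0, 0.5) = 0.75, p2: 0.25 -> 0.5
print(changes["negative"])  # p1: -1.0, p2: -0.5 -> -0.75
```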

Fig. 4. Day 3 memory tasks. Note. a. Average change in recalled episode valence between Day 1 and Day 3 for each music condition. Positive values indicate more positive valence in Day 3 recall, whereas negative values indicate more negative valence in Day 3 recall. b. Average Day 3 recognition test results for original episode keywords ("neutral") and for positive and negative lure words. X-axis: music condition. Y-axis: recognition accuracy (hit rate for neutral keywords; correct rejection rate for lure words).

For the word recognition test, we predicted that participants would make more hits and correct rejections on silence trials, and more misses and false alarms on music trials (particularly for emotional words congruent with the paired music valence). The average accuracy across subjects in the word recognition task was 80.77% (SD = 39.42%). We used a repeated-measures ANOVA to test whether word type/valence and Day 2 music condition affected Day 3 recognition performance. Recognition accuracy differed significantly by word valence (Fig. 4b, F(2) = 10.726, p < 0.001, ηG² = 0.205), with neutral words (story keywords) recognized most accurately overall. No interaction was found between music valence and word valence (F(4) = 0.178, p = 0.948). Interestingly, when comparing the lures specifically, we found a higher correct rejection rate for negative than for positive lure words across music conditions (F(1) = 5.421, p = 0.026, ηG² = 0.06). This observation aligns with the Day 2 word selection results, in which participants selected more positive lures overall. However, the ANOVA did not reveal a significant effect of music condition on recognition accuracy.
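The accuracy measure defined for Fig. 4b (hit rate for neutral keywords, correct rejection rate for lures) can be made concrete with a short Python sketch. It assumes a hypothetical response format for one participant in one music condition: each item is a (word type, answered "from Day 1") pair. Because neutral keywords appeared in the Day 1 stories, answering "from Day 1" is a hit; because lures did not, answering "not from Day 1" is a correct rejection. The function name and example values are illustrative, not the authors' code or data.

```python
from collections import defaultdict

# Hypothetical Day 3 recognition responses for one participant and one music
# condition (illustrative only, not study data): (word_type, answered_from_day1)
example = [
    ("neutral", True), ("neutral", True), ("neutral", False), ("neutral", True),
    ("positive", True), ("positive", False),
    ("negative", False), ("negative", False),
]


def recognition_accuracy(responses):
    """Hit rate for neutral keywords and correct rejection rate for lures."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for word_type, answered_from_day1 in responses:
        if word_type == "neutral":
            is_correct = answered_from_day1      # hit: keyword was in the Day 1 story
        else:
            is_correct = not answered_from_day1  # correct rejection: lure was not
        correct[word_type] += int(is_correct)
        total[word_type] += 1
    return {word_type: correct[word_type] / total[word_type] for word_type in total}


accuracy = recognition_accuracy(example)
print(accuracy)  # {'neutral': 0.75, 'positive': 0.5, 'negative': 1.0}
```

Scoring both item types on a single "proportion correct" scale is what allows keywords and lures to share one y-axis in Fig. 4b, even though a hit and a correct rejection reflect different responses.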

fMRI results

Whole-brain GLM results

We addressed two main univariate fMRI questions about brain activity while participants recollected stories and recognized words on Day 2. The first question was how the silence condition differed from the background music conditions during episode retrieval. We hypothesized that the music conditions, compared with silence, would show higher BOLD activity in areas traditionally associated with music in the literature, including the auditory cortex and inferior frontal gyrus. Additionally, because both music conditions were valenced, we expected increased engagement of emotion-processing regions, including the amygdala, hippocampus, cingulate cortex, and orbitofrontal cortex, during music conditions relative to silence. The second question, how retrieval with positive and negative music differed, was exploratory, and we had no specific hypothesis for it. Consistent with our predictions, the music conditions showed higher activity in auditory-related areas, including the superior temporal gyrus, the inferior frontal gyrus, and the cerebellum (Fig. 5; voxel-wise p < 0.001 for all univariate results). Importantly, retrieval with music also showed stronger activation of the amygdala and anterior hippocampus. Conversely, the silence condition elicited increased activity in various cortical and subcortical regions, notably the supramarginal gyrus, superior frontal gyrus, insula, inferior frontal gyrus, and middle cingulate gyrus. We discuss these outcomes below, but these differences show that background music both increases and decreases the engagement of functional network subregions that collectively have the capacity to modulate memory. For a comprehensive breakdown of clusters showing differential activation during memory recollection with music versus silence, see Table 3.

Fig. 5. Whole-brain univariate analysis comparing music vs. silence. Note. Whole-brain univariate analysis results comparing brain activity during memory recollection and recognition with background music versus silence. Group-level results are presented on the MNI template in neurological orientation (participants' left hemisphere is shown on the left of each image). Yellow: silence > music. Blue: music > silence. Selected regions of interest were extracted for ROI analysis, and the bar plots show the average percent signal change in each area, with error bars, for the music and silence conditions during memory recollection.

Contrasting neural activation between the music conditions revealed distinct patterns during story retrieval with positive and negative music (Fig. 6). Recollecting stories with positive music was associated with stronger activation in the superior temporal gyrus. In contrast, retrieval with negative music showed heightened activity in a diverse set of brain regions, including the caudate, superior orbital gyrus, inferior parietal lobule, superior parietal lobule, posterior cingulate gyrus, and cerebellum. These regions belong to networks associated with emotion regulation, attention, and sensorimotor processing. Thus, retrieval with differently valenced music produced divergent profiles of brain activity. For a detailed overview of clusters showing differential activity for positive versus negative music during story recollection, see Table 4.

Fig. 6. Whole-brain univariate analysis comparing negative vs. positive music. Note. Whole-brain univariate analysis results comparing negative and positive background music during memory recollection. Group-level results are presented on the MNI template. Yellow: negative music > positive music. Selected regions of interest were extracted for ROI analysis, and the bar plots show the average percent signal change in each area, with error bars, for the positive music, negative music, and silence conditions during memory recollection.

Functional connectivity (gPPI)

We were particularly interested in testing for shifts in amygdala connectivity across the task conditions, given the amygdala's functional roles in both 1) music emotion processing and 2) regulating general episodic memory encoding and consolidation. Past literature has suggested that music improves imagery vividness during memory recall (Herff et al., 2022). We therefore hypothesized that we would see corresponding neural evidence: increased activity in areas engaged by imagery, including the visual cortex and cingulate cortex, as well as stronger connectivity between the amygdala and story memory-related areas, as would be expected if musically induced emotion interacted with the reencoding of the story.

Using the amygdala as the seed region for gPPI, we first compared silence and music. As shown in Fig. 7, the amygdala had stronger functional connectivity with the inferior occipital gyrus (voxel extent = 57, MNI = [−20, −91.8, 7]) during music than during silence. When comparing positive and negative music (Fig. 7, bottom), the amygdala showed stronger connectivity in the negative music condition with the inferior frontal gyrus (voxel extent = 39, MNI = [−50, 25.8, −4.5]), the middle temporal gyrus (voxel extent = 32, MNI = [−67.5, −49.2, −4.5]), and the anterior cingulate cortex (voxel extent = 30, MNI = [0, 40.8, 20.5]). Conversely, we did not observe any clusters significantly more strongly connected with the amygdala during positive music.

Fig. 7. Functional connectivity of the amygdala. Note. Generalized psychophysiological interaction (gPPI) analysis using the amygdala as the seed region. Compared with silence, the music conditions showed stronger amygdala-visual cortex connectivity. Contrasting negative and positive music showed stronger amygdala connectivity with the inferior frontal gyrus, middle temporal cortex, and anterior cingulate cortex in the negative music condition during memory recollection. Brain images are presented on the MNI template in neurological orientation.

Discussion

The ability of music to influence emotions, both in everyday life and in therapeutic contexts, is a subject of keen interest within the field of music cognition. Research has shown that mood-related disorders, such as major depressive disorder and bipolar disorder, often involve emotional biases. These biases may manifest as tendencies to perceive or experience emotions more intensely (Bouhuys et al., 1999; Gilboa‐Schechtman et al., 2004; Matt et al., 1992; Panchal et al., 2019; Williams et al., 1997). Given this, understanding how music affects the way people encode and recall memories could have practical applications, particularly in managing stressful memories. Informed by research highlighting the role of memory reactivation not only in strengthening memories (Staresina et al., 2013; Wichert et al., 2013) but also in modifying them, for example, through retrieval-enhanced suggestibility (Loftus et al., 1978), our study employed a 3-day episodic memory paradigm. We investigated how emotionally charged music affects the reactivation and subsequent recall of episodic memories. Specifically, we examined whether such music could lead participants to update their memories of fictional events that they had memorized from a first-person perspective at the start of the experiment, potentially incorporating false memories.

Participants' behavior during 1) story reactivation on Day 2 and 2) subsequent story recall on Day 3 supported our hypothesis. We found that emotional music (especially positive music) played in the background during memory reactivation could alter the emotional tone of complex memories. Notably, on Day 2, participants tended to choose more positive than negative words across all conditions; we explore the reasons for this preference later in the Discussion. Focusing on the influence of music, however, participants selected negative lures less frequently when the background music was positive than when it was negative or absent. This suggests that susceptibility to lures was modulated by their alignment with the mood of the music, especially positive music, affecting how participants re-encoded and later retrieved the episodes. Given that participants were instructed to choose words that best reflected their retrieval of the episode, this finding implies that the valence of the accompanying music directed their attention and influenced their association with lures of the same emotional tone. This phenomenon of mood congruency and attention direction through music is consistent with previous studies in the domains of music and film, which have demonstrated that soundtracks play a crucial role in providing emotional context, enhancing the prominence of certain elements, and steering attention toward mood-congruent associations (Marshall & Cohen, 1988; Millet et al., 2021; Tan et al., 2007).

The behavioral results from memory recall on Day 3 further support the Day 2 findings, demonstrating changes in the emotional expression of the laboratory-created declarative memories. Specifically, when participants recalled the episodes, we noted marked differences in emotional valence between stories reactivated with negative music on Day 2 and those reactivated with positive music. Stories paired with positive music during reactivation also showed a marginally more positive emotional tone in Day 3 recall than stories in the silence condition. This finding suggests that music can influence the emotional context and semantic interpretation of episodic memories, even in a controlled experimental setting involving fictional stories. Some recent studies have provided similar evidence. One study of music-cued episodic memory found that memories cued by music, compared with sounds, were evoked more positively (Jakubowski et al., 2023). Furthermore, the memories were retrieved less accurately in the music cue condition. This might imply that music influences memory at the emotional level, a pattern that our data also indicate. Other studies have highlighted the importance of congruency between music's arousal/valence and the cued memories during retrieval for music's influence on memory (Jeong et al., 2011; Sheldon & Donahue, 2017). Our results align with these findings, demonstrating that the congruency between music valence and memory traces can modulate the emotional content of reactivated memories. Specifically, positive music had a clearer effect in biasing memory toward positive valence than negative music had in biasing memory toward negative valence.

Interestingly, the shift in valence in Day 3 recall was not directly linked to the number of experimenter-defined lure words (words presented on Day 2 to potentially influence memory), as most stories recalled on Day 3 contained none of the lures verbatim. Instead, participants added different words that were semantically related to the story and aligned in mood with the Day 2 background music. This might suggest that 1) the music did not directly add the congruent lures to the memory during reactivation, but rather modulated the emotional perception and understanding of the stories at a higher level; and 2) because participants were explicitly instructed to retrieve the stories they had learned on the first day, they might have consciously avoided using the lure words verbatim, perhaps showing some awareness that the lures were introduced on Day 2. Indeed, the word recognition task revealed a consistent pattern: participants showed no difference in rejecting lures between the music and silence conditions. Interestingly, Fig. 4b shows a slightly higher, though not statistically significant, rejection accuracy for lures that were congruent with the music. Because the task spanned three consecutive days, participants might have retained memories from Day 2 to Day 3, enabling them to explicitly reject the lures when the recognition task asked them to determine whether each word was from Day 1. Consequently, participants might have better remembered seeing the lures on Day 2 when they were congruent with the music, consistent with the idea that music directed participants' attention to congruent lures during reactivation.
Importantly, despite this hint that participants may have been aware of the nature of the task (specifically, their rejection of valenced lures in the recognition task and the absence of lures in their story recall), the emotional tone of Day 3 recall was still affected, as reflected in valenced false memory errors. This observation points to a potential destabilization and alteration of memories at the emotional level, affecting the tone of confabulations rather than acting solely at the level of specific new details encoded on Day 2. Previous research has demonstrated the immediate impact of music as an affective primer, influencing subsequent emotion perception and interpretation (Steinbeis & Koelsch, 2011; Tan et al., 2007; Tay & Ng, 2019). Our study extends this understanding by providing evidence that these effects of music can persist in long-term memory and influence subsequent recall even when the music is no longer present.

Our behavioral results indicated that background music during memory reactivation could increase the likelihood of selecting and incorporating lures at an emotional and semantic level. During this reactivation phase, the music conditions showed stronger engagement of brain regions including the superior temporal gyrus, cerebellum, inferior parietal lobule, amygdala, and anterior hippocampus. While the superior temporal gyrus and cerebellum are known for their roles in processing music and rhythm (Alluri et al., 2012; Bellier et al., 2023; Evers, 2023; Toiviainen et al., 2014), previous studies have linked medial temporal lobe and inferior parietal lobule engagement with memory intrusion during lure-involved reactivation (Gisquet-Verrier & Riccio, 2012; St. Jacques et al., 2013). Additionally, the finding that memory changes were predominantly valence-congruent suggests that emotional processing plays a critical role in memory modification with music. Consistent with this, participants exhibited elevated activity not only in the anterior hippocampus but also in the amygdala when experiencing the stories on Day 2 with emotionally charged music. The anterior hippocampus and amygdala share intimate anatomical connections and are key components of the limbic system involved in emotional memory encoding (Richardson et al., 2004). Indeed, increased activity in the amygdala and hippocampus during music listening has been associated with emotional engagement and reward (Alluri et al., 2012; Gold et al., 2023; Koelsch, 2014; Toiviainen et al., 2014; Trost et al., 2012), and their combined engagement might support enhanced emotional processing and more robust reencoding of emotional memories in our music conditions, as seen in our behavioral data.

Furthermore, neuroscientific studies have suggested that amygdala stimulation during memory encoding can strengthen subsequent declarative memory, particularly through consolidation processes (Inman et al., 2018). This aligns with our observation of an increased tendency for participants to incorporate emotional content during subsequent recall of the original stories. We interpret the heightened amygdala and anterior hippocampus activity observed in the music conditions as reflecting a tighter association between emotional processing and memory encoding, facilitated by the presence of music. Consequently, we propose that the emotions induced by music in our study might have stimulated the limbic system, strengthening the memory trace for the emotional content presented during story reactivation on Day 2.

In our study, participants were instructed to imagine themselves in the stories during both the encoding and reactivation phases. We considered whether the increased intrusion of lures and heightened activity in areas like the amygdala and anterior hippocampus during music conditions (as opposed to silence) might instead be attributable to enhanced semantic and visual imagery processing associated with this self-projection task demand. However, this basic demand was equally present in the silence condition and would not fully account for the specific emotional modulation or the observed emotional congruency effects, given that neutral keyword selection was unaffected by music condition. We propose that music provides an emotional backdrop that helps participants "place" themselves into the fictional scenarios more vividly and emotionally. As a result, stories would be encoded with new or altered emotional details, in a manner sensitive to the emotional context provided by the music. Past studies have shown greater amygdala and anterior hippocampus activation when emotional music is paired with neutral visual stimuli (Eldar et al., 2007) and when participants listen to music with their eyes closed, indicating heightened emotional processing during imagination (Lerner et al., 2009). In our data, the higher selection of music-congruent lures on Day 2 suggests that music helped participants integrate both neutral words from Day 1 and lures, enabling an emotionally altered reconstruction of the imagined episodes.
When considered in the broader context of the nonmusic literature, which emphasizes a role for the anterior hippocampus in episodic memory recall and imagination (Zeidman & Maguire, 2016), we suggest that hippocampal and amygdala activation in our study could reflect scene or story imagination, and that this activation was enhanced in the music conditions relative to silence by the added contextual and emotionally charged backdrop of the music (and its congruence with the provided narrative details and lures).

Consistent with that view, our functional connectivity analysis revealed a stronger connection between the amygdala and the inferior visual cortex/fusiform gyrus during memory reactivation with music. The fusiform gyrus supports visual word processing, and its activity is thought to be enhanced by emotional and communicative contexts (Schindler et al., 2015). It is also implicated in imagining emotional experiences, a key aspect of our study's design (Preston et al., 2007). Previous research has shown that the amygdala-fusiform gyrus network is particularly active when processing emotional information, such as faces or emotionally charged objects (Frank et al., 2019; Herrington et al., 2011), suggesting a role for this circuit in integrating visual and emotional information. In light of this, we postulate that the increased connectivity between the amygdala and the ventral visual stream observed in our study reflects and facilitates the association between the emotional content/lures and the retrieved stories during visualization and imagination. Specifically, the presence of music during story reactivation on Day 2 might have facilitated the integration of emotional elements when participants revisited and reencoded the original memory traces, leading to a more emotionally laden representation of the original stories. The behavioral results suggest such enhanced integration: participants selected more lures congruent with the mood of the paired music, thereby adding new emotional content and details to the story and affecting the emotional tone of their subsequent recall on Day 3.

In contrast to the music conditions, the silence condition during story reactivation was associated with stronger activation in several brain areas, including the inferior temporal gyrus, supramarginal gyrus, rolandic operculum, insula, middle cingulate gyrus, supplementary motor cortex, superior frontal gyrus, and inferior frontal gyrus (IFG). Treating the silence condition as a "baseline," we propose that the increased activity in these regions during silence could indicate a higher cognitive workload for imagining and engaging with the story without the facilitation provided by music. For instance, the supramarginal gyrus (part of the inferior parietal lobule) and the inferior temporal lobe are closely associated with cognitive empathy, which involves understanding others' minds, moods, and motivations (Hooker et al., 2010; Kogler et al., 2020). Similarly, the IFG has been linked to cognitive and affective empathy, which together involve the ability to understand and share others' emotions (Hooker et al., 2010; Kogler et al., 2020; Shamay-Tsoory et al., 2009), to emotion perception and semantic comprehension during music listening (Tabei, 2015), and to self-related processing during inner speech (Morin & Michaud, 2007). While other studies have reported increased IFG activity during music listening and recognition (Ding et al., 2019; Watanabe et al., 2008), our finding of higher IFG activation in the silence condition may suggest an alternative role for the IFG in our task, beyond music processing per se. One interpretation is that music facilitates self-related processing and imagination of the story stimuli, thus reducing the processing demands on these functions. Music may also provide additional context for emotion perception and semantic processing while participants interact with the stories, reducing the demands on the IFG for imagining the stories and determining their semantic-emotional content.
Additionally, regions like the insula and cingulate cortex, typically linked with cognitive challenges (Gasquoine, 2014) and known for supporting memory integration (Shah et al., 2013), emotion contextualization (Posner et al., 2009), and emotion induction during visual imagery and visual recall (Phan et al., 2002), also showed reduced engagement in the music conditions compared with silence. Together, this reduced engagement (relative to silence) suggests that music might facilitate visualizing emotional experiences and integrating single-word stimuli into a coherent narrative. However, we acknowledge that this interpretation of the IFG findings is speculative. The IFG is also a core area for language processing (along with related processes, e.g., cognitive control; Jiang et al., 2018). Higher IFG activity during story reactivation in the silence condition might simply reflect increased verbal processing and recall demands when no music was present. In contrast, in the music conditions, music might have occupied attentional resources, resulting in less engagement in word recall and processing.

The role of attention in music's impact on lure selection is another aspect we considered. During the Day 2 episode reactivation task, participants were more likely to select lures that matched the mood of the background music, suggesting that music might steer attention toward certain lures. At the neural level, we observed differences in the superior frontal gyrus (particularly its anterior medial region) and the middle cingulate cortex. These regions are part of the cognitive control and default mode networks (Li et al., 2013), which are associated with working memory and attention performance. To the extent that attention to lures was modulated by music's emotional influence in a congruent manner, one would expect differences in such subsystems. Although their specific contribution to our results cannot be determined in this design, this speculation could be tested in follow-up work.

One interesting observation in the behavioral data, noted above, was a stronger tendency for participants to modulate the neutral episodes toward a more positive valence than negative valence. This was progressively evident across all 3 days of the study. Our Day 1 analyses suggest the episodes were initially perceived and memorized by participants with a slight positive skew. One explanation could lie in the challenge of creating truly “neutral” story stimuli, although we took a deliberate, quantitative approach (see “Stimuli”). Alternatively, this could also be the result of a general “positivity bias” in our participant population, especially given that we excluded participants with mood-related psychopathologies (Dodds et al., 2015; Mezulis et al., 2004). This initial bias may explain why positive lure words were selected at high rates across all music conditions on Day 2. This pattern is nevertheless interesting when viewed through our proposed theoretical lens, because it could help to explain why paired positive music, compared with negative music, appeared to have a greater memory-modulating effect when analyzing the Day 3 episode recall. One possibility relates to the fact that negative music often evokes mixed emotional responses, and sometimes even positive responses (Eerola et al., 2018; Thompson et al., 2019). Thus, compared with positive music, negative music may be less effective in modulating memory toward one particular emotion direction. This interpretation is further supported by neural evidence suggesting that the brain processes and produces emotional reactions in different neural networks for positive and negative music (Fernandez et al., 2019; Koelsch et al., 2006). Indeed, our Day 2 brain univariate results showed that negative music during episode reactivation recruited higher activity in the caudate, an area tightly associated with music pleasantness and music-induced reward (Koelsch et al., 2006). 
Meanwhile, positive music trials had lower activity than negative music trials in areas including the inferior and superior parietal lobule and posterior cingulate cortex. We speculate that this may reflect less effort needed to imagine and reexperience the episode in the positive music condition, because the narrative memory was already perceived with a positive valence. Positive music trials were also characterized by stronger superior temporal gyrus activity than negative music trials. This region encompasses the auditory cortex and is generally recruited during music processing, but greater activity can reflect better sensory integration (Pehrs et al., 2014), which we would expect in a condition whose processing is facilitated by affective congruency (here, apparently the positive music trials).

Related to the positivity skew, a limitation of our study was an imbalance in arousal levels between the positive and negative valence music stimuli (see "Stimuli" and Table 1): negative valence stimuli had greater overall tension ratings than positive valence stimuli. Therefore, some of the behavioral and neural differences observed in the direct contrast between positive and negative valence music may have been influenced by differences in tension/arousal or other valence-related properties of the stimuli. For example, previous research has found increased superior temporal gyrus activation for positive (vs. negative) valence music stimuli in a multimedia task, similar to our results (Pehrs et al., 2014). In follow-up analyses (see Supplementary Material), we included music arousal as a separate factor in our models. Interestingly, arousal significantly modulated word selection on Day 2: higher music arousal was associated with decreased word selection, especially of positive and neutral words. In the music dataset, positive music generally had lower arousal than negative music. Taken together, the arousal effect aligns with the valence effect we observed, suggesting that music with high valence and low arousal had a more pronounced influence on word selection during memory reactivation. However, arousal did not show significant effects on Day 3 memory. This pattern suggests that while the emotional valence of music influenced both memory reactivation (Day 2) and subsequent retrieval (Day 3), music arousal may primarily affect online selection during reactivation, when participants are actively experiencing the music. These additional analyses provide insights into the potentially distinct roles of the valence and arousal dimensions in music's influence on memory processes.
The findings reinforce the need for future studies to disentangle carefully and examine the separate contributions of valence and arousal when investigating how music modulates memory and cognition.

Another limitation relates to the recognition task: average recognition accuracy was high across participants, suggesting a potential ceiling effect. We expected a high recognition rate for neutral words, because on Day 1 we aimed to ensure that participants formed a moderate to good memory of the original stories, which was necessary for them to effectively reactivate those memories using the cue words on Day 2. The high lure rejection rate may be attributable to the small number of lures (six per story) presented on Day 2, which made it easier for participants to remember whether they had encountered those words. To address this limitation in future studies, we will consider presenting synonyms of the original words and lures during recognition, which would reduce reliance on verbatim memory and increase task difficulty. We could also increase the number of lures presented on Day 2, making it more challenging to distinguish studied from unstudied words during the recognition test.
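To make the ceiling concern concrete, the Python sketch below summarizes recognition performance for one hypothetical participant using a standard signal-detection approach. It is an illustration under stated assumptions, not the analysis reported in this study: the function names, the count of 12 studied words, and the use of d′ with a log-linear correction are assumptions made for illustration. It shows that with six lures per story the correct-rejection rate can only change in steps of 1/6, and that a perfect rejection rate must be corrected before it can be converted to a z-score.

```python
from statistics import NormalDist


def possible_cr_rates(n_lures):
    """All correct-rejection rates attainable with n_lures lure items."""
    return [1 - k / n_lures for k in range(n_lures + 1)]


def recognition_summary(hits, n_old, false_alarms, n_lures):
    """Raw hit and correct-rejection rates plus a log-linear-corrected d'.

    Illustrative assumption: the correction adds 0.5 to each count and 1 to
    each total so the z-transform stays finite when a raw rate is 0 or 1.
    """
    hit_rate = hits / n_old
    cr_rate = 1 - false_alarms / n_lures
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hr_adj = (hits + 0.5) / (n_old + 1)
    fa_adj = (false_alarms + 0.5) / (n_lures + 1)
    return hit_rate, cr_rate, z(hr_adj) - z(fa_adj)


# Hypothetical participant: 11 of 12 studied words recognized, all 6 lures rejected.
hit_rate, cr_rate, d_prime = recognition_summary(11, 12, 0, 6)
print(f"hit rate = {hit_rate:.2f}, CR rate = {cr_rate:.2f}, d' = {d_prime:.2f}")

# With 6 lures, one false alarm lowers the CR rate by 1/6 (about 17 points);
# with 24 lures, the same single error would cost only 1/24 (about 4 points).
print([round(r, 3) for r in possible_cr_rates(6)])
```

The log-linear correction is only one option; other corrections for extreme rates exist, and with so few lure trials the choice of correction can shift d′ noticeably, which is another reason a larger lure set would yield a more sensitive measure.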

In summary, this study examines the interaction between emotional music and episodic memory recall, showing how these two realms of cognition might together reshape our memories and perceptions. We present neuroimaging results that support the hypothesis that these two cognitive processes may interact to modulate the emotional content of previously formed episodic memories. Our data suggest that these effects operate through reconsolidation mechanisms and, at the neural level, may be closely linked to amygdala and medial temporal lobe function, as well as to diffuse differences in the engagement of networks associated with imagery, perspective taking, attention, and control. This study provides a novel perspective on, and evidence for, using music during episodic memory retrieval to modify the content and emotional tone of memories. We propose that music can enrich the reexperiencing of episodes, making memories more vivid and emotionally resonant by integrating emotionally congruent elements. This insight opens up possibilities for applying music in everyday settings, such as enhancing the emotional depth of film storytelling, and potentially extends to therapeutic applications, such as new approaches to treating mood-related disorders.

Data availability

The data and materials for the experiment are available at https://osf.io/yr5em.

Code availability

The code and scripts of the experiment can be found at https://osf.io/yr5em.

References

Alluri, V., Toiviainen, P., Jääskeläinen, I. P., Glerean, E., Sams, M., & Brattico, E. (2012). Large-scale brain networks emerge from dynamic processing of musical timbre, key and rhythm. NeuroImage, 59(4), 3677–3689. https://doi.org/10.1016/j.neuroimage.2011.11.019

Belfi, A. M., Karlan, B., & Tranel, D. (2016). Music evokes vivid autobiographical memories. Memory, 24(7), 979–989. https://doi.org/10.1080/09658211.2015.1061012

Bellier, L., Llorens, A., Marciano, D., Gunduz, A., Schalk, G., Brunner, P., & Knight, R. T. (2023). Music can be reconstructed from human auditory cortex activity using nonlinear decoding models. PLOS Biology, 21(8), e3002176. https://doi.org/10.1371/journal.pbio.3002176

Boltz, M. G. (2004). The cognitive processing of film and musical soundtracks. Memory & Cognition, 32(7), 1194–1205. https://doi.org/10.3758/BF03196892

Bouhuys, A. L., Geerts, E., & Gordijn, M. C. M. (1999). Depressed Patients’ Perceptions of Facial Emotions in Depressed and Remitted States Are Associated with Relapse: A Longitudinal Study. The Journal of Nervous and Mental Disease, 187(10), 595–602. https://doi.org/10.1097/00005053-199910000-00002

Bradley, M. M., & Lang, P. J. (1999). Affective Norms for English Words (ANEW): Instruction Manual and Affective Ratings. Technical Report C-1, The Center for Research in Psychophysiology, University of Florida, 49.

Cohen, A. J. (2001). Music as a source of emotion in film. In P. N. Juslin & J. A. Sloboda (Eds.), Music and emotion: Theory and research (pp. 249–272). Oxford University Press.

Cohen, A. J. (2015). Congruence-Association Model and Experiments in Film Music: Toward Interdisciplinary Collaboration. Music and the Moving Image, 8(2), 5–24. https://doi.org/10.5406/musimoviimag.8.2.0005

Cox, R. W. (1996). AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research, 29(3), 162–173.

Ding, Y., Zhang, Y., Zhou, W., Ling, Z., Huang, J., Hong, B., & Wang, X. (2019). Neural correlates of music listening and recall in the human brain. Journal of Neuroscience, 39(41), 8112–8123. https://doi.org/10.1523/JNEUROSCI.1468-18.2019

Dodds, P. S., Clark, E. M., Desu, S., Frank, M. R., Reagan, A. J., Williams, J. R., Mitchell, L., Harris, K. D., Kloumann, I. M., Bagrow, J. P., Megerdoomian, K., McMahon, M. T., Tivnan, B. F., & Danforth, C. M. (2015). Human language reveals a universal positivity bias. Proceedings of the National Academy of Sciences, 112(8), 2389–2394. https://doi.org/10.1073/pnas.1411678112

Eerola, T., & Vuoskoski, J. (2011). A comparison of the discrete and dimensional models of emotion in music. Psychology of Music, 39(1), 18–49. https://doi.org/10.1177/0305735610362821

Eerola, T., Vuoskoski, J. K., Peltola, H.-R., Putkinen, V., & Schäfer, K. (2018). An integrative review of the enjoyment of sadness associated with music. Physics of Life Reviews, 25, 100–121. https://doi.org/10.1016/j.plrev.2017.11.016

Eldar, E., Ganor, O., Admon, R., Bleich, A., & Hendler, T. (2007). Feeling the real world: Limbic response to music depends on related content. Cerebral Cortex, 17(12), 2828–2840. https://doi.org/10.1093/cercor/bhm011

Erk, S., von Kalckreuth, A., & Walter, H. (2010). Neural long-term effects of emotion regulation on episodic memory processes. Neuropsychologia, 48(4), 989–996. https://doi.org/10.1016/j.neuropsychologia.2009.11.022

Eschrich, S., Münte, T. F., & Altenmüller, E. O. (2008). Unforgettable film music: The role of emotion in episodic long-term memory for music. BMC Neuroscience, 9, 48. https://doi.org/10.1186/1471-2202-9-48

Evers, S. (2023). The Cerebellum in Musicology: A Narrative Review. The Cerebellum. https://doi.org/10.1007/s12311-023-01594-6

Fernandez, N. B., Trost, W. J., & Vuilleumier, P. (2019). Brain networks mediating the influence of background music on selective attention. Social Cognitive and Affective Neuroscience, 14(12), 1441–1452. https://doi.org/10.1093/scan/nsaa004

Forcato, C., Burgos, V. L., Argibay, P. F., Molina, V. A., Pedreira, M. E., & Maldonado, H. (2007). Reconsolidation of declarative memory in humans. Learning & Memory, 14(4), 295–303. https://doi.org/10.1101/lm.486107

Frank, D. W., Costa, V. D., Averbeck, B. B., & Sabatinelli, D. (2019). Directional interconnectivity of the human amygdala, fusiform gyrus, and orbitofrontal cortex in emotional scene perception. Journal of Neurophysiology, 122(4), 1530–1537. https://doi.org/10.1152/jn.00780.2018

Gasquoine, P. G. (2014). Contributions of the Insula to Cognition and Emotion. Neuropsychology Review, 24(2), 77–87. https://doi.org/10.1007/s11065-014-9246-9

Gilboa-Schechtman, E., Ben-Artzi, E., Jeczemien, P., Marom, S., & Hermesh, H. (2004). Depression impairs the ability to ignore the emotional aspects of facial expressions: Evidence from the Garner task. Cognition and Emotion, 18(2), 209–231. https://doi.org/10.1080/02699930341000176a

Gisquet-Verrier, P., & Riccio, D. C. (2012). Memory reactivation effects independent of reconsolidation. Learning & Memory, 19(9), 401–409. https://doi.org/10.1101/lm.026054.112

Gold, B. P., Pearce, M. T., McIntosh, A. R., Chang, C., Dagher, A., & Zatorre, R. J. (2023). Auditory and reward structures reflect the pleasure of musical expectancies during naturalistic listening. Frontiers in Neuroscience, 17. https://doi.org/10.3389/fnins.2023.1209398

Hayes, J. P., Morey, R. A., Petty, C. M., Seth, S., Smoski, M. J., McCarthy, G., & LaBar, K. S. (2010). Staying Cool when Things Get Hot: Emotion Regulation Modulates Neural Mechanisms of Memory Encoding. Frontiers in Human Neuroscience, 4. https://doi.org/10.3389/fnhum.2010.00230

Herff, S. A., McConnell, S., Ji, J. L., & Prince, J. B. (2022). Eye Closure Interacts with Music to Influence Vividness and Content of Directed Imagery. Music & Science, 5, 20592043221142710. https://doi.org/10.1177/20592043221142711

Herrington, J. D., Taylor, J. M., Grupe, D. W., Curby, K. M., & Schultz, R. T. (2011). Bidirectional communication between amygdala and fusiform gyrus during facial recognition. NeuroImage, 56(4), 2348–2355. https://doi.org/10.1016/j.neuroimage.2011.03.072

Hooker, C. I., Verosky, S. C., Germine, L. T., Knight, R. T., & D’Esposito, M. (2010). Neural activity during social signal perception correlates with self-reported empathy. Brain Research, 1308, 100–113. https://doi.org/10.1016/j.brainres.2009.10.006

Hupbach, A., Gomez, R., Hardt, O., & Nadel, L. (2007). Reconsolidation of episodic memories: A subtle reminder triggers integration of new information. Learning & Memory, 14(1–2), 47–53. https://doi.org/10.1101/lm.365707

Inman, C. S., Manns, J. R., Bijanki, K. R., Bass, D. I., Hamann, S., Drane, D. L., Fasano, R. E., Kovach, C. K., Gross, R. E., & Willie, J. T. (2018). Direct electrical stimulation of the amygdala enhances declarative memory in humans. Proceedings of the National Academy of Sciences, 115(1), 98–103. https://doi.org/10.1073/pnas.1714058114

Jakubowski, K., & Ghosh, A. (2021). Music-evoked autobiographical memories in everyday life. Psychology of Music, 49(3), 649–666. https://doi.org/10.1177/0305735619888803

Jakubowski, K., Walker, D., & Wang, H. (2023). Music cues impact the emotionality but not richness of episodic memory retrieval. Memory, 31(10), 1259–1268. https://doi.org/10.1080/09658211.2023.2256055

Jäncke, L. (2008). Music, memory and emotion. Journal of Biology, 7(6), 21. https://doi.org/10.1186/jbiol82

Jiang, J., Wagner, A. D., & Egner, T. (2018). Integrated externally and internally generated task predictions jointly guide cognitive control in prefrontal cortex. eLife, 7, e39497. https://doi.org/10.7554/eLife.39497

Joormann, J., Teachman, B. A., & Gotlib, I. H. (2009). Sadder and less accurate? False memory for negative material in depression. Journal of Abnormal Psychology, 118(2), 412–417. https://doi.org/10.1037/a0015621

Juslin, P. N. (2019). Musical Emotions Explained: Unlocking the Secrets of Musical Affect. Oxford University Press.

Juslin, P. N., & Laukka, P. (2003). Communication of emotions in vocal expression and music performance: Different channels, same code? Psychological Bulletin, 129(5), 770–814. https://doi.org/10.1037/0033-2909.129.5.770

Juslin, P. N., & Sloboda, J. A. (Eds.). (2001). Music and emotion: Theory and research. Oxford University Press.

Koelsch, S. (2009). A Neuroscientific Perspective on Music Therapy. Annals of the New York Academy of Sciences, 1169(1), 374–384. https://doi.org/10.1111/j.1749-6632.2009.04592.x

Koelsch, S. (2010). Towards a neural basis of music-evoked emotions. Trends in Cognitive Sciences, 14(3), 131–137. https://doi.org/10.1016/j.tics.2010.01.002

Koelsch, S. (2014). Brain correlates of music-evoked emotions. Nature Reviews Neuroscience, 15(3), 170–180. https://doi.org/10.1038/nrn3666

Koelsch, S., Fritz, T., v. Cramon, D. Y., Müller, K., & Friederici, A. D. (2006). Investigating emotion with music: An fMRI study. Human Brain Mapping, 27(3), 239–250. https://doi.org/10.1002/hbm.20180

Kogler, L., Müller, V. I., Werminghausen, E., Eickhoff, S. B., & Derntl, B. (2020). Do I feel or do I know? Neuroimaging meta-analyses on the multiple facets of empathy. Cortex, 129, 341–355. https://doi.org/10.1016/j.cortex.2020.04.031

Landis-Shack, N., Heinz, A. J., & Bonn-Miller, M. O. (2017). Music therapy for posttraumatic stress in adults: A theoretical review. Psychomusicology: Music, Mind, and Brain, 27(4), 334–342. https://doi.org/10.1037/pmu0000192

Lerner, Y., Papo, D., Zhdanov, A., Belozersky, L., & Hendler, T. (2009). Eyes wide shut: Amygdala mediates eyes-closed effect on emotional experience with music. PLoS ONE, 4(7), e6230.

Li, W., Qin, W., Liu, H., Fan, L., Wang, J., Jiang, T., & Yu, C. (2013). Subregions of the human superior frontal gyrus and their connections. NeuroImage, 78, 46–58. https://doi.org/10.1016/j.neuroimage.2013.04.011

Loftus, E. F., Miller, D. G., & Burns, H. J. (1978). Semantic integration of verbal information into a visual memory. Journal of Experimental Psychology: Human Learning and Memory, 4(1), 19–31. https://doi.org/10.1037/0278-7393.4.1.19

Mado Proverbio, A., Lozano Nasi, V., Alessandra Arcari, L., De Benedetto, F., Guardamagna, M., Gazzola, M., & Zani, A. (2015). The effect of background music on episodic memory and autonomic responses: Listening to emotionally touching music enhances facial memory capacity. Scientific Reports, 5, 15219. https://doi.org/10.1038/srep15219