Abstract

Visual working memory is a volatile, limited-capacity memory that appears to play an important role in our impression of a visual world that is continuous in time. It also mediates between the contents of the mind and the contents of that visual world. Research on visual working memory has become increasingly prominent in recent years. The articles in this special issue of Attention, Perception, & Psychophysics describe new empirical findings and theoretical understandings of the topic.

In May of 2013, a well-attended symposium was held on “The Structure of Visual Working Memory” at the annual meeting of the Vision Sciences Society. On the basis of the interest shown during that session, we decided to organize a special issue on Visual Working Memory in Attention, Perception, & Psychophysics. I am now happy to offer this special issue to the readers of AP&P.

As Luck and Vogel (2013) say in their useful review of the topic, “the term working memory is used in many different ways” (p. 391). However, those multiple uses of the term do have a common core. Visual working memory (VWM) is generally taken to be a volatile, limited-capacity memory that serves a current task. The tasks might include the integration of information across saccades (e.g., Hollingworth, Richard, & Luck, 2008), detection of change between two stimuli separated in time (e.g., Wolfe, Reinecke, & Brawn, 2006), or holding the target “template” in a visual search task (e.g., Soto, Humphreys, & Heinke, 2006).

Although a rich set of roles has been proposed for VWM, a very substantial proportion of the data on the topic have come from a rather impoverished set of stimuli. In a world laden with items to hold in VWM, much of what we know has come from experiments in which observers are asked to remember some small set of colored squares. In the paradigm first popularized in Luck and Vogel’s (1997) influential article, observers are shown an initial, memorization array, as in Fig. 1a. Then, following a blank retention period (1b), typically of about a second, those observers are asked in any of a number of ways to determine whether the test stimulus (1c) is the same as the initial, memorized stimulus. Later, Zhang and Luck (2008) introduced a method in which, after memorizing (1d) and retaining (1e) an array of items, observers indicate the color of one of those items by adjusting a cursor on a color wheel (1f). In this way, the precision of the memory representation can also be ascertained.
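To make the color-wheel measure concrete, the sketch below computes the signed response error on a circular color space and summarizes the spread of those errors across trials. It is only an illustration: the 360-degree wheel, the function names, and the choice of a circular standard deviation as the precision summary are assumptions made for this example, not the analysis reported by Zhang and Luck (2008).

```python
import math

def circular_error(reported_deg, target_deg):
    """Signed error on a 360-degree wheel, wrapped to [-180, 180)."""
    return (reported_deg - target_deg + 180.0) % 360.0 - 180.0

def circular_sd(errors_deg):
    """Circular standard deviation (in degrees) of a list of errors.

    Smaller values mean responses cluster tightly around the target,
    that is, a more precise memory (hypothetical summary measure).
    """
    n = len(errors_deg)
    c = sum(math.cos(math.radians(e)) for e in errors_deg) / n
    s = sum(math.sin(math.radians(e)) for e in errors_deg) / n
    r = min(1.0, math.hypot(c, s))  # mean resultant length, clamped for rounding
    if r == 0.0:
        return math.inf  # errors spread evenly around the wheel
    return math.degrees(math.sqrt(-2.0 * math.log(r)))

# Hypothetical (reported, target) hue angles from three trials.
trials = [(350, 10), (10, 350), (95, 90)]
errors = [circular_error(rep, tgt) for rep, tgt in trials]
print(errors, circular_sd(errors))
```

The wrapping step matters because hues of 350 and 10 degrees are only 20 degrees apart on the wheel, not 340.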

In the context of tasks like those just described, VWM seems clearly defined. However, when we think about what VWM might be used for in the world, as was mentioned earlier, things become less clear. Consider the role of VWM in visual search: It is clear that the contents of VWM can influence search (Cosman & Vecera, 2011; Soto et al., 2006). Recent evidence has indicated that only one item in VWM can capture visual attention (van Moorselaar, Theeuwes, & Olivers, 2014; but see Gilchrist & Cowan, 2011). On the basis of work of this sort, it has been suggested that VWM contains the representation of the current target of search—the search “template” (Olivers, Peters, Houtkamp, & Roelfsema, 2011). However, it is a bit too simple to think that the current instruction to your search engine is simply deposited in VWM, from whence it guides your search. For example, in search for specific real-world objects (find this cat, that toaster, etc.), it is possible to have observers search for any of, literally, hundreds of distinct possible targets at the same time, in a task known as “hybrid search” (Cunningham & Wolfe, 2012; Wolfe, 2012). The sheer number of these potential targets takes the storage and maintenance of this target set out of the realm of VWM. Moreover, the memory representations of these targets can last for many minutes or more, violating the temporal limits on VWM that are usually defined. Finally, it is possible to load VWM with colored boxes, as in Fig. 1a, without disrupting this multiple-target visual search (Drew, Boettcher, & Wolfe, 2013), even though other kinds of searches can interact antagonistically with VWM (e.g., Cain & Mitroff, 2013; Emrich, Al-Aidroos, Pratt, & Ferber, 2010).

It appears that a large set of possible target items can live in some “activated long-term memory,” or ALTM (Cowan, 1995). VWM may be a short-term buffer between ALTM and the search task. This is implied in Oberauer’s “three-embedded-components model” (Oberauer & Hein, 2012), but it remains for the future to reveal the details of how LTM, ALTM, VWM, and search work together. In any case, on the one hand VWM seems intimately, perhaps mandatorily, involved in tasks like visual search, but on the other hand, in some situations those tasks seem able to avoid the limitations of VWM.

This points to the fact that it is not always trivial to discriminate the VWM representation of a stimulus from other representations. For instance, in Wolfe et al. (2006), our observers looked at a set of 20 colored dots. At some point, while they were looking at the dots, one dot was cued by an increase in brightness. It could also change color at that moment. Observers were asked to say whether the cued dot had or had not changed color, and their detection of the change was very close to chance. This poor performance was consistent with an ability to monitor the color of no more than four of the 20 items, in spite of the fact that the dots were fully visible the whole time. This capacity limit on the ability to keep track of clearly visible items is very similar to the capacity limits seen in VWM experiments. A similar point has been made about the limits on the ability to track clearly visible, moving items in the multiple-object tracking paradigm (Alvarez & Cavanagh, 2005). Although monitoring a set of visible stimuli would not normally be thought of as a memory task, it might be that VWM limits also serve as a limit on our ability to attend to visible stimuli. This blurs the line between remembered and visible stimuli. Other experiments blur the line between VWM and LTM. The transitions and relations between VWM and a longer-term storage continue to be topics of active study (Endress & Potter, 2014).

These complexities notwithstanding, visual working memory is a booming topic in the field of visual cognition. This can be seen if we plot the number of articles on the topic, as registered by the Web of Science (Fig. 2).

As we know, the volume of scientific publication is increasing overall, so it could be that nothing is special about the rise in articles on VWM; maybe every topic is booming in the same way. As something of a test of the hypothesis that VWM is, indeed, a trending topic in the field, I inquired about other topics that have been important in my own work over the years. I looked for topics with roughly comparable numbers of total papers (1,000–1,500). For purposes of illustration, I have plotted the results for visual aftereffects and for a combination of change blindness (Simons & Rensink, 2005) and inattentional blindness (Mack & Rock, 1998). Visual aftereffects were a hot topic in the 1970s and have settled down to a rate of 30–40 articles per year over the last 20 years. Change and inattentional blindness are interesting because they came on the scene as research topics at about the same time as VWM, but although the blindness topics rose at about the same rate as VWM for about 15 years, they may have peaked a few years back, whereas the VWM output keeps increasing.

This special issue adds to that tally. What else, beyond mere numbers, will this issue contribute? I will say a word about each of the articles, with the intention to whet your appetite for more extended reading. Some of them add to our understanding of the properties of VWM. Others are directed at theoretical issues, usually trying to decide between “slot” and “resource” models. Slot models are those that propose that WM capacity is limited because of a limit on the number of discrete representations that can be held in WM. The usual estimate is that three to five slots are available to hold these representations. Resource models propose that WM is more continuous: Some amount of WM is available, but it can be allocated flexibly, with the quality of the representation varying as a function of the amount of resource devoted to that representation. Some studies serve both empirical and theoretical functions. The articles are roughly organized to place those concerned with the properties of VWM toward the beginning, with the theoretical pieces appearing toward the end of the issue.

Properties of working memory

Olivers and Schreij (2014) deal with the relationship of color and location in the classic VWM task, trying to connect the color memory task of Fig. 1 more closely to situations in the real world. They note that stimuli do not usually appear and disappear in a flash as they do in the standard VWM memorization phase. In the world, you turn a corner and see something new or the dynamic scene in front of you evolves, revealing new stimuli. As a step toward situations of that sort, Olivers and Schreij had their colored patches appear and disappear behind a virtual brick wall. They found that, as long as performance was off the ceiling and off the floor, their observers did better if the test display emerged in the same way that the original display had emerged. Thus, memory for the mode of appearance had an influence on the standard measures of VWM capacity.

In a different modification of the standard paradigm, Kool, Conway, and Turk-Browne (2014) asked what would happen in the color patch paradigm, illustrated in Figs. 1d–f, if the color patches were presented sequentially. This turns the classic VWM experiment into something more like the even more classic digit span or word list methods that have long been used for investigating short-term memory. Kool et al. report that spreading the memorization stage (Fig. 1d) over time produces strong recency effects, at least when the set size gets larger than the standard VWM capacity of three or four items. If, instead of presenting five colored squares in a single flash, they presented them one after another, each in its own location, the last item presented was the one most accurately recalled when its location was probed. Primacy effects are much less marked; the first item presented got only a modest boost. These results look a bit like a push-down stack: The item added to the top of the stack is remembered best, and the earlier items get pushed out by the more recent ones. One perhaps surprising aspect of the results of Kool et al. is that the precision of the memory, as measured with the color wheel method shown in Fig. 1f, does not show much change with serial position. There is more evidence for swaps between the earlier items on the list: You are cued for the item at location A, but you erroneously report the color of the item that was presented at location B. These results have a rather discrete, slot-like feel to them, but versions of resource models actually fit the data about as well.

Continuing with tasks based on particular colors in particular locations, Rajsic and Wilson (2014) document an interesting asymmetry. In many VWM tasks, observers are asked about the color of an item in a specific location or the location of an item of a specific color. These are not equivalent tasks: You do better if you are asked where the green item had been located than if you are asked which item had been green. Pertzov and Husain (2014) make a related point in their contribution. They found that previous objects in a location interfere with memory for the current object in that location, whereas previous objects sharing features other than location, such as color and orientation, do not. These studies are reminiscent of an earlier visual attention literature on the status of location. Is location a “feature” like color or orientation, or is it somehow different? As in the present VWM articles, the general conclusion of the older literature was that location is a different sort of feature (Kwak & Egeth, 1992; Nissen, 1985; Tsal & Lavie, 1988), though opinion has not been unanimous on this point (Bundesen, 1991).

Location may be a special sort of feature, but that does not mean that all other features form a homogeneous family. This has been a mistake in the study of visual attention. Although it is true that some rules do apply across features (e.g., Duncan & Humphreys, 1989), in other cases, a rule that applies, for example, to color might not apply to size (e.g., Bilsky & Wolfe, 1995). A similar situation arises in VWM. Just because you understand how things work with stimuli defined by one property does not mean that you understand how the findings apply to another property. Xing, Ledgeway, McGraw, and Schluppeck (2014) report new findings about the representation of contrast in VWM. The representation of some features, such as spatial frequency, seems to be stable over fairly long periods of time (see Magnussen & Dyrnes, 1994), but the precision of other representations degrades more rapidly. Contrast turns out to be a feature that degrades. Xing et al. were interested in whether the representation of contrast is abstract or is stored as the contrast of a specific spatial pattern. They found that VWM is more robust when the spatial arrangements do not change between exposure and test, suggesting that the representation is fairly specific.

Clevenger and Hummel (2014) expand the question of encoded features to include relationships between items in VWM. Suppose that you encode a big red square and a small blue triangle. What do you know about their relationship to each other? Do you know that the square is to the left of the triangle? Do you know how much bigger the square is? If you know these things, does each of these added bits of information take up space in VWM in some fashion? The core of the Clevenger and Hummel argument is that “encoding a pair of objects in WM entails encoding all the relations between that pair at no additional cost” (p. xxx). This claim is similar to the original Luck and Vogel (1997) claims about features in VWM. They held that you encode discrete objects and that you get all of the features of an encoded object as part of a package deal. That is, a big red vertical object is one object in VWM, not three features. Others have challenged the strong form of that claim (Cowan, Blume, & Saults, 2013). Similarly, it might turn out that there are limits on the “free” encoding of relationships between objects, but it is important to recognize that a limited-capacity VWM contains more than just the identities of three or four objects.

We tend to think of VWM as a single construct whose properties we are trying to unravel. At any moment, VWM might store four colored patches, or four of more complex conjunctions of features, as in the Clevenger and Hummel work, or, perhaps, four real-world objects. The burden of a literature review by Bancroft, Hockley, and Servos (2014) is that those stimuli might be represented in different neural substrates (cf. Xu & Chun, 2006). FMRI and electroencephalography experiments have localized evidence for VWM storage in prefrontal cortex and in sensory/task-specific cortex. Bancroft et al. argue that stimulus complexity is one (but not the only) factor that determines which part of the brain will hold any specific set of VWM stimuli. What goes where? We are used to thinking of a hierarchy in which simple visual properties are represented at early stages of cortical processing, with the frontal lobes handling more complex material. Here, however, the results point in the opposite direction. Simple VWM stimuli seem to be preferentially held in prefrontal cortex, with more complex stimuli apparently requiring the more specialized work of sensory/task-specific cortex. That said, behavioral data do not support the existence of independent VWM stores for complex and simple stimuli: We cannot increase WM capacity to eight by storing four color patches and four complex conjunctions. The distinct geography of simple and complex VWM described here must still be subject to some common capacity limitation.

There is more of an argument to be made for modality-specific WM stores (e.g., Baddeley & Hitch’s, 1974, phonological loop and visuospatial sketchpad). VWM is, by definition, “visual,” but of course, what gets encoded into memory need not be restricted to the visual modality. Salmela, Moisala, and Alho (2014) try to determine whether auditory and visual items are held in the same WM or in separate auditory and visual WM stores, as in the Baddeley and Hitch (1974) formulation. Their method involves looking at the precision of memory as a function of the number of items held there. Holding two visual spatial frequencies or two auditory tones in memory reduces the precision of memory, relative to just holding a single item. Salmela et al. report that holding one spatial frequency and one auditory tone at the same time exacts the same cost as holding two auditory or two visual stimuli, and from this result argue that WM is a cross-modal resource. Like other debates in this field (parallel vs. serial visual search, slots vs. resources in WM), this question will not be settled by any single experiment. Indeed, it seems likely, as Fougnie and Marois (2011) suggested, that WM is limited by both modality-specific and cross-modality factors, and that the pattern of results will depend on the specific methods used.

It is worth noting that, whereas precision and capacity are each used as measures of VWM, they are not measures of exactly the same thing. Lorenc, Pratte, Angeloni, and Tong (2014) use memory for faces to make this point. Their observers saw a sequence of faces, each in its own specific location, and were then probed for one of those faces. The number of faces in the sequence could vary. This method allowed the authors to get a measure of how many faces were held in memory, and it allowed them to measure the precision of the memory. They performed this experiment with upright and inverted faces. This revealed a dissociation between capacity and precision, because the number of faces held in memory did not change as a function of inversion, but the precision was lower for the inverted faces.

In their contribution, Konstantinou, Beal, King, and Lavie (2014) use WM as a way to test the predictions of load theory (Lavie, 2005). Load effects are often measured with flanker tasks in which a target letter is mapped to one response key. How much interference do you then get from a distractor that is mapped to another response key? The classic load theory finding is that increasing task difficulty reduces the interference from the distractor as more attentional resources are devoted to the target. In the Konstantinou et al. experiments, this is the pattern of results that you get if you impose a WM encoding load at the same time as the flanker task. Interestingly, you get the opposite result if the flanker task comes during WM maintenance. In this version, the WM load (e.g., the set of colored boxes) is presented well before the flanker task. The act of holding the items in WM makes observers more subject to the interfering effects of the distractor in the flanker task.

Two of the special issue articles deal with individual differences. The first, by Mall, Morey, Wolff, and Lehnert (2014), is a good example of a useful null result. As will be seen later in this issue, lots of evidence supports a close relationship between VWM and visual attention. Attention can be drawn to items held in VWM (Downing & Dodds, 2004; Soto, Hodsoll, Rotshtein, & Humphreys, 2008). There are strong claims that the attentional template for tasks such as visual search resides within WM (Olivers et al., 2011). Thus, it might seem reasonable that WM capacity would be related to tasks, like change detection, that make significant demands on visual attention. Mall et al. looked for evidence that WM capacity is related to the ability to filter out information that is not relevant to the current task, but they found no evidence for this. Models without a WM component fit the data better than do models that include WM. The other article dealing with individual differences, by Ko et al. (2014), concerns behavioral and neurophysiological (event-related potential) measures of the effects of aging on change detection. They measured evoked potentials while their participants did a version of the usual color patch paradigm. Perhaps the most interesting aspect of the Ko et al. results is a dissociation between contralateral delay activity (CDA) and WM capacity measures in older adults. The CDA signal has been taken as an electrophysiological measure of the current contents of WM and of its total capacity (Vogel & Machizawa, 2004), so it is interesting that the authors report that they “found a clear dissociation between the behavioral capacity estimates and the CDA” (Ko et al., this issue). Even though behavioral capacity was lower in the older observers, the CDA was not. Ko et al. speculate that the difference between younger and older observers may lie in the quality, and not the quantity, of VWM representations.

Gilchrist and Cowan (2014) report a result that points to an interesting parallel between search in VWM and search through visual stimuli (though, it should be noted, this is not the main point that they make in the article). Their observers performed a change detection task in which they saw a collection of colored patches and had to subsequently decide whether a test probe was or was not present in the display. As the memory set size varied, the RT × Set Size function was shallower for new probes (novel colors) than for old probes. Gilchrist and Cowan (2014) argue that this runs counter to models of WM. Interestingly, the result is very similar to what might be seen in a visual search experiment. If we take the novel colors to be the search target, then this follows the common rule that target-present RTs are generally faster than target-absent RTs, and that target-present RT × Set Size slopes are shallower. At small set sizes, Gilchrist and Cowan found that the new probes produced slopes near 0 ms/item, whereas the old probes produced relatively steep slopes. This is a pattern that is seen with some simple feature searches in visual search (searches in which the target is defined by a unique feature; e.g., color). If the feature search is not very easy, the target-present slopes are often near zero, but observers appear to do something more like a serial search for the target-absent trials (Wolfe, 1998). We can speculate that in these cases observers do not quite trust the signal that guides them to targets, so when there is no signal, they laboriously scrutinize the display to be sure that no target is present.

Pan, Lin, Zhao, and Soto (2014) asked whether WM could have an influence on perception outside of awareness. To examine this question, they used the continuous flash suppression (CFS) methodology, in which a salient, dynamic pattern in one eye effectively suppresses awareness of any stimulus in the other eye (Tsuchiya & Koch, 2005). They presented a face to observers for a later memory test. Then they started the CFS and slowly ramped up the contrast of a face in the suppressed eye. The face could be the old one or a new one, and the critical measure was the time until the face broke through the suppression to reach awareness. The face held in VWM broke through faster than a new face. This turned out to be the case even if the first presentation of the face was masked. Thus, a face that was unseen was coded into VWM and shortened the time required to break CFS. Importantly, the effect did not appear if the initial face was not memorized for a later memory test. Thus, VWM can operate below the radar of visual awareness. This makes a degree of intuitive sense, since if you are looking for an object—say, a pepper grinder—you are typically not aware of any visible template of that object. Indeed, you might have forgotten that you were explicitly looking for the item, realizing it only once you have found it.

Given that VWM stimuli influence other processes and are being influenced, in their turn, outside of awareness, one might be forgiven for feeling that the contents of VWM are promiscuously interacting with everything, while the conscious “owner” of that VWM is occupied elsewhere. Quak, Pecher, and Zeelenberg (2014) provide a useful negative finding to undercut this completely undifferentiated view of what is going on “under the hood.” They had individuals remembering objects that would require either a “precision grip” (like picking up a paperclip) or a “power grip” (like wielding a hammer). They also included objects that were never going to be picked up at all (like a bridge). Concurrent with the VWM task, they had observers engage in precision or power motor tasks in order to see whether the motor task interacted with VWM in an object-specific manner. The short answer is that, over a couple of experiments, doing a precision task showed no sign of interacting with memory for a paperclip any more than with memory for a bridge or hammer. Of course, this is a negative finding, but they make an argument that the studies were sufficiently powered to show an effect, if it were present. Quak et al. conclude that VWM does not make use of motor affordances when storing manipulable objects. Thus, some input streams do not seem to interact with VWM.

Theoretical matters: Slots, resources, and other models

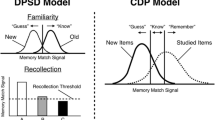

Turning to theories of VWM, it is clear that it is limited in its capacity, but how should we think about those limits? The theoretical landscape has been defined by two apparently opposing views. Luck and Vogel (1997) imagined that there are about four discrete “slots” into which one can place items to be held in VWM. Much of the work in the field has been framed by an assumption that there is a “magical number” of about four somethings (Cowan, 2001). Others—for example, Alvarez and Cavanagh (2004) and Wilken and Ma (2004)—have argued for a continuous “resource” that can be divided over an arbitrary number of objects in VWM. As you give less and less resource to an item, you get a poorer and poorer representation of that item in memory.

Ten years (or so) later, this debate has taken on something of the feel of other seemingly dichotomous debates, both in our field (e.g., serial vs. parallel arguments in visual search) and more broadly (e.g., nature vs. nurture). Over time, the models tend to become more nuanced, and the truth, as best we can see it, seems to incorporate aspects of both. This does not mean that the topic is either settled or uninteresting. It means that spectators in the VWM debate should not expect a knock-out blow that will leave either slots or resources standing victorious.

The more theoretically focused articles in this special issue illustrate some of the richness of the current intellectual landscape. Suchow, Fougnie, Brady, and Alvarez (2014) get us away from simple-minded slots-versus-resources dichotomies by offering a taxonomy based on “7 ± 2 core questions” (7 ± 2 equals 8, in this case). Some of these questions are largely orthogonal to the slots-versus-resources issue. Others illustrate how the slot and resource approaches could blend into one another. For example, suppose that you proposed some level of discrete quantization in VWM. For the sake of argument, let’s say that there are 24 VWM quanta. If you can assign those at will to different items, this will look a lot like a resource model; methods available to us are unlikely to tell the difference between a 24-quanta story and a completely continuous resource. If we suppose that assigning just one or two quanta to an item does not produce a reliable VWM representation, however, we will rapidly get to something that looks slot-like. Suppose that a reliable VWM representation requires four quanta. If you were very precise, you could squeeze six items into VWM, but if some items grabbed five or six quanta, you would produce something resembling a slot-like four-item limit. The preceding example is derived from Fig. 1 and Question 2 of Suchow et al. and is not intended as a real model. It merely illustrates that it would not be that hard to have an underlying structure that appeared like slots in one experiment and resources in another.
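The arithmetic of the quanta example can be sketched in a few lines. Like the example itself, this is not intended as a real model: the function name, the greedy order in which items claim quanta, and the rule that an item counts as stored only if it receives at least the minimum number of quanta are all assumptions made for illustration.

```python
def items_stored(total_quanta, demands, min_quanta=4):
    """Count the items that end up with a reliable representation.

    Items claim quanta one after another (a hypothetical greedy rule).
    An item is stored only if at least `min_quanta` can be granted to it.
    """
    remaining = total_quanta
    stored = 0
    for demand in demands:
        grant = min(demand, remaining)
        if grant < min_quanta:
            break  # not enough left for a reliable representation
        remaining -= grant
        stored += 1
    return stored

# Eight items compete for 24 quanta under three allocation patterns.
print(items_stored(24, [4] * 8))                    # 6: every item takes exactly four
print(items_stored(24, [6] * 8))                    # 4: every item takes six
print(items_stored(24, [5, 6, 5, 6, 5, 6, 5, 6]))   # 4: items take five or six
```

With perfectly economical allocation, the same pool supports six items; once items take more than the minimum, the count drops to about four, which is the slot-like limit described above.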

The article by Souza, Rerko, Lin, and Oberauer (2014) can serve as an illustration of the theoretical questions that hide within the dichotomous slots/resources debate. In the standard color patch experiment, suppose that an observer is holding a reddish item and a bluish item in VWM. If a probe is presented at one of the two locations, the observer can be asked whether the item at that location was the red or the blue one. The observer can also be asked to use a color wheel or other method to attempt to reproduce the exact shade of red (Fig. 1f). The memories supporting those two outputs could be dissociable. One might remember the red quite exactly but mislocalize it to the other location, or one might know that this location held the red item but be very imprecise about the exact hue. Souza et al. demonstrate this dissociation by lengthening the delay between the probe and the report. Once a probe has been presented, the chance of a transposition error during a subsequent delay is reduced, but the precision of the color judgment continues to decline. The authors’ modeling work argues that either slot or resource models need to represent the precision of location separately from the precision of the color representation. In this case, the data do not decide between the models, but do show that both classes of models can accommodate a richer representation in VWM.

One approach to attempting to choose between discrete slot and continuous resource styles of modeling of VWM is to seek improved methods for distinguishing between the predictions of those models. Donkin, Tran, and Nosofsky (2014) make use of “landscaping analysis of the ROC predictions” from slot and resource models. The work is an extension of Rouder et al. (2008), who argued in favor of discrete slots. The present article “did not point to a clear-cut winning model.” Donkin et al. report on four different experiments. Three of them pointed toward slots, but one strongly supported their continuous alternative. One conclusion that seems to follow is that the details of the experimental methods can be very important when trying to pick the best model in this area.

Another illustration of the difficulty in distinguishing between slots and resources comes from van den Berg and Ma’s (2014) article in this issue. “Plateau” methods are one of the standards in VWM studies. A measure of how many items are remembered is plotted against memory set size. For small memory set sizes, the amount remembered rises with the set size. At some point, that rise levels off to a plateau that is taken as a measure of VWM capacity (perhaps with some correction for guessing, and so forth). The presence of an inflection point between the rising and plateau portions of the curve is often cited as slot-friendly evidence. Resources, it is reasoned, should not yield a two-part curve. Using simulated data from slotted and slotless models, van den Berg and Ma argue that this logic is wrong. Again, the argument is reminiscent of earlier debates in the visual search literature in which RT × Set Size patterns that were thought to be clear evidence for “serial” models turned out to be ambiguous (Thornton & Gilden, 2007; Townsend & Wenger, 2004).
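The plateau logic can be made concrete with a small sketch. It assumes an idealized slot observer in a single-probe change detection task and uses the commonly cited guessing correction K = N × (hit rate − false alarm rate), often attributed to Cowan (2001). The capacity of four, the guessing rate, and the function names are assumptions for illustration. The sketch shows only the two-part curve that a pure slot observer would produce; it does not implement the slotless models of van den Berg and Ma and says nothing about whether such models yield a similar curve.

```python
def slot_observer_rates(set_size, capacity=4, guess_change=0.5):
    """Hit and false alarm rates for a hypothetical pure slot observer.

    The probed item is in memory with probability min(capacity / N, 1).
    If it is, the response is correct; otherwise the observer guesses
    "change" with probability `guess_change`.
    """
    p_in_memory = min(capacity / set_size, 1.0)
    hit = p_in_memory + (1.0 - p_in_memory) * guess_change
    false_alarm = (1.0 - p_in_memory) * guess_change
    return hit, false_alarm

def capacity_estimate(set_size, hit, false_alarm):
    """Guessing-corrected estimate of the number of items remembered."""
    return set_size * (hit - false_alarm)

# Plot-ready values: estimated K as a function of memory set size.
for n in range(1, 9):
    h, fa = slot_observer_rates(n)
    print(n, round(capacity_estimate(n, h, fa), 2))
```

For this idealized observer, the estimate rises one for one with set size up to the assumed capacity and is flat thereafter, which is the inflection that has often been read as slot-friendly evidence.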

In the end, slots and resources are metaphors. No one would expect to open up the brain and find slots, like parking spots, in some specific location. One approach to modeling in this area is to drop the terms and formulate a different class of model. That is the choice of Johnson, Simmering, and Buss (2014). In the place of slot and resource metaphors for VWM representations, they offer a model based on neural dynamics. Swan and Wyble’s (2014) article can be seen as a different effort in the same spirit. At the heart of their model is a neural network dubbed the “binding pool” that links “types” of specific features (red, oblique, etc.) to specific object “tokens.” The tokens have a slot-like feel to them, whereas the pool is more like a continuous resource. The bulk of the article illustrates how this architecture can produce the fundamental phenomena of the VWM field.

At the beginning of this introduction, I called the standard colored-box VWM stimuli “impoverished.” It is fitting to end the special issue with Orhan and Jacobs’s (2014) article making this point with much more depth and rigor. They argue that we are not really that interested in explaining memory for a few isolated items; what we want to know is how people operate in the real world. In the end, they remind us, this will require ecologically valid stimuli. In our daily life, we operate in a world of rich, continuous scenes in which the set size cannot actually be counted (Neider & Zelinsky, 2008; Wolfe, Alvarez, Rosenholtz, Kuzmova, & Sherman, 2011; Yu, Samaras, & Zelinsky, 2014). They argue that the standard laboratory paradigms for studying VWM may significantly underestimate its capacity because, in the real world, our understanding of natural scene statistics allows us to do much more than we can do with four colored boxes, or even four isolated objects. They set forth a program that can move us closer to an understanding of VWM in the wild.

Finally, we have to confess to a bureaucratic embarrassment. The Johnson, Simmering, and Buss (2014) article, mentioned above, was inadvertently published in the previous issue. In an excess of efficiency, we also inadvertently published a couple of Short Reports that were intended to be printed in this special issue. We reproduce these abstracts below and invite readers to seek out the whole articles elsewhere.

Töllner, Eschmann, Rusch, and Müller (2014) were interested in the question of whether or not each item in WM is coded independently. They had observers memorize sets of singleton items. These could all be singletons in the same dimension (e.g., red, yellow, and green items amidst blue distractors). Alternatively, each singleton could be defined by a unique value on a different dimension: the red item, the big item, and the tilted item, for example. Töllner and colleagues found that the task was a little more difficult if the items varied across dimensions. This is interesting, because it suggests that the memory for any one item depends on the other items in the set and is not entirely independent. Töllner et al. investigated some of the neural underpinnings of this effect using the CDA measure that has been so useful in VWM research.

The second Short Report that we published in advance also concerns the independence of the representation of items in VWM. As in the Töllner article, its conclusion is that items are not coded in isolation. Papenmeier and Huff (2014) addressed this question in a study of the role of spatial context in VWM (cf. Olivers & Schreij, 2014, discussed earlier). Papenmeier and Huff have long been interested in the viewpoint dependence/independence of visual representations (Huff, Meyerhoff, Papenmeier, & Jahn, 2010). Here, their observers memorized arrays of objects sitting on a slanted plane. When the view of that plane was changed, performance declined even though the objects were all still visible and recognizable. As in some of the studies in this special issue, an important takeaway message is that VWM items are stored in a context, not in splendid isolation (see also Brady & Tenenbaum, 2013).

We hope you find the special issue stimulating and useful. If you do, keep an eye out for another special issue on working memory due to be published in a few months in our sister journal, Memory & Cognition.

References

Alvarez, G. A., & Cavanagh, P. (2004). The capacity of visual short-term memory is set both by visual information load and by number of objects. Psychological Science, 15, 106–111. doi:10.1111/j.0963-7214.2004.01502006.x

Alvarez, G. A., & Cavanagh, P. (2005). Independent resources for attentional tracking in the left and right visual hemifields. Psychological Science, 16, 637–643.

Baddeley, A. D., & Hitch, G. (1974). Working memory. In G. H. Bower (Ed.), The psychology of learning and motivation (Vol. 8, pp. 47–89). New York, NY: Academic Press.

Bancroft, T. D., Hockley, W. E., & Servos, P. (2014). Does stimulus complexity determine whether working memory storage relies on prefrontal or sensory cortex? Attention, Perception, & Psychophysics, 76, 1–8. doi:10.3758/s13414-013-0604-0

Bilsky, A. A., & Wolfe, J. M. (1995). Part–whole information is useful in visual search for size × size but not orientation × orientation conjunctions. Perception & Psychophysics, 57, 749–760. doi:10.3758/BF03206791

Brady, T. F., & Tenenbaum, J. B. (2013). A probabilistic model of visual working memory: Incorporating higher order regularities into working memory capacity estimates. Psychological Review, 120, 85–109. doi:10.1037/a0030779

Bundesen, C. (1991). Visual selection of features and objects: Is location special? A reinterpretation of Nissen’s (1985) findings. Perception & Psychophysics, 50, 87–89.

Cain, M. S., & Mitroff, S. R. (2013). Memory for found targets interferes with subsequent performance in multiple-target visual search. Journal of Experimental Psychology: Human Perception and Performance, 39, 1398–1408.

Clevenger, P. E., & Hummel, J. E. (2014). Working memory for relations among objects. Attention, Perception, & Psychophysics, 76, 1–21. doi:10.3758/s13414-013-0601-3

Cosman, J., & Vecera, S. (2011). The contents of visual working memory reduce uncertainty during visual search. Attention, Perception, & Psychophysics, 73, 996–1002. doi:10.3758/s13414-011-0093-y

Cowan, N. (1995). Attention and memory: An integrated framework. New York, NY: Oxford University Press.

Cowan, N. (2001). The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behavioral and Brain Sciences, 24, 87–114 (discussion 114–185). doi:10.1017/S0140525X01003922

Cowan, N., Blume, C. L., & Saults, J. S. (2013). Attention to attributes and objects in working memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39, 731–747.

Cunningham, C. A., & Wolfe, J. M. (2012). Extending “hybrid” Visual × Memory search to very large memory sets and to category search. Paper presented at the annual meeting of the Psychonomic Society, St. Louis, Missouri.

Donkin, C., Tran, S. C., & Nosofsky, R. (2014). Landscaping analyses of the ROC predictions of discrete-slots and signal-detection models of visual working memory. Attention, Perception, & Psychophysics, 76, 1–14. doi:10.3758/s13414-013-0561-7

Downing, P., & Dodds, C. (2004). Competition in visual working memory for control of search. Visual Cognition, 11, 689–703.

Drew, T., Boettcher, S., & Wolfe, J. M. (2013). Hybrid visual and memory search remains efficient when visual working memory is full. Paper presented at the Annual Meeting of the Psychonomic Society, Toronto, Ontario.

Duncan, J., & Humphreys, G. W. (1989). Visual search and stimulus similarity. Psychological Review, 96, 433–458. doi:10.1037/0033-295X.96.3.433

Emrich, S. M., Al-Aidroos, N., Pratt, J., & Ferber, S. (2010). Finding memory in search: The effect of visual working memory load on visual search. Quarterly Journal of Experimental Psychology, 63, 1457–1466. doi:10.1080/17470218.2010.483768

Endress, A. D., & Potter, M. C. (2014). Something from (almost) nothing: Buildup of object memory from forgettable single fixations. Attention, Perception, & Psychophysics. doi:10.3758/s13414-014-0706-3

Fougnie, D., & Marois, R. (2011). What limits working memory capacity? Evidence for modality-specific sources to the simultaneous storage of visual and auditory arrays. Journal of Experimental Psychology: Learning, Memory, and Cognition, 37, 1329–1341. doi:10.1037/a0024834

Gilchrist, A. L., & Cowan, N. (2011). Can the focus of attention accommodate multiple, separate items? Journal of Experimental Psychology: Learning, Memory, and Cognition, 37, 1484–1502. doi:10.1037/a0024352

Gilchrist, A. L., & Cowan, N. (2014). A two-stage search of visual working memory: Investigating speed in the change-detection paradigm. Attention, Perception, & Psychophysics, 76, 1–20. doi:10.3758/s13414-014-0704-5

Hollingworth, A., Richard, A. M., & Luck, S. J. (2008). Understanding the function of visual short-term memory: Transsaccadic memory, object correspondence, and gaze correction. Journal of Experimental Psychology: General, 137, 163–181. doi:10.1037/0096-3445.137.1.163

Huff, M., Meyerhoff, H., Papenmeier, F., & Jahn, G. (2010). Spatial updating of dynamic scenes: Tracking multiple invisible objects across viewpoint changes. Attention, Perception, & Psychophysics, 72, 628–636. doi:10.3758/APP.72.3.628

Johnson, J. S., Simmering, V. R., & Buss, A. T. (2014). Beyond slots and resources: Grounding cognitive concepts in neural dynamics. Attention, Perception, & Psychophysics, 76, 1–25. doi:10.3758/s13414-013-0596-9

Ko, P. C., Duda, B., Hussey, E., Mason, E., Molitor, R. J., Woodman, G. F., & Ally, B. A. (2014). Understanding age-related reductions in visual working memory capacity: Examining the stages of change detection. Attention, Perception, & Psychophysics, 76, 1–16. doi:10.3758/s13414-013-0585-z

Konstantinou, N., Beal, E., King, J.-R., & Lavie, N. (2014). Working memory load and distraction: Dissociable effects of visual maintenance and cognitive control. Attention, Perception, & Psychophysics, 76, 1–13. doi:10.3758/s13414-014-0742-z

Kool, W., Conway, A. R. A., & Turk-Browne, N. B. (2014). Sequential dynamics in visual short-term memory. Attention, Perception, & Psychophysics, 76, 1–17. doi:10.3758/s13414-014-0755-7

Kwak, H.-W., & Egeth, H. (1992). Consequences of allocating attention to locations and to other attributes. Perception & Psychophysics, 51, 455–464.

Lavie, N. (2005). Distracted and confused? Selective attention under load. Trends in Cognitive Sciences, 9, 75–82. doi:10.1016/j.tics.2004.12.004

Lorenc, E. S., Pratte, M. S., Angeloni, C. F., & Tong, F. (2014). Expertise for upright faces improves the precision but not the capacity of visual working memory. Attention, Perception, & Psychophysics, 76, 1–10. doi:10.3758/s13414-014-0653-z

Luck, S. J., & Vogel, E. K. (1997). The capacity of visual working memory for features and conjunctions. Nature, 390, 279–281. doi:10.1038/36846

Luck, S. J., & Vogel, E. K. (2013). Visual working memory capacity: From psychophysics and neurobiology to individual differences. Trends in Cognitive Sciences, 17, 391–400. doi:10.1016/j.tics.2013.06.006

Mack, A., & Rock, I. (1998). Inattentional blindness. Cambridge, MA: MIT Press.

Magnussen, S., & Dyrnes, S. (1994). High-fidelity perceptual long-term memory. Psychological Science, 5, 99–102.

Mall, J. T., Morey, C. C., Wolff, M. J., & Lehnert, F. (2014). Visual selective attention is equally functional for individuals with low and high working memory capacity: Evidence from accuracy and eye movements. Attention, Perception, & Psychophysics, 76, 1–17. doi:10.3758/s13414-013-0610-2

Neider, M. B., & Zelinsky, G. J. (2008). Exploring set size effects in scenes: Identifying the objects of search. Visual Cognition, 16, 1–10. doi:10.1080/13506280701381691

Nissen, M. J. (1985). Accessing features and objects: Is location special? In M. I. Posner & O. S. M. Marin (Eds.), Attention and performance XI (pp. 205–219). Hillsdale, NJ: Erlbaum.

Oberauer, K., & Hein, L. (2012). Attention to information in working memory. Current Directions in Psychological Science, 21, 164–169.

Olivers, C. N. L., Peters, J., Houtkamp, R., & Roelfsema, P. R. (2011). Different states in visual working memory: When it guides attention and when it does not. Trends in Cognitive Sciences, 15, 327–334. doi:10.1016/j.tics.2011.05.004

Olivers, C. N. L., & Schreij, D. (2014). Visual memory performance for color depends on spatiotemporal context. Attention, Perception, & Psychophysics, 76, 1–12. doi:10.3758/s13414-014-0741-0

Orhan, A. E., & Jacobs, R. A. (2014). Toward ecologically realistic theories in visual short-term memory research. Attention, Perception, & Psychophysics, 76, 1–13. doi:10.3758/s13414-014-0649-8

Pan, Y., Lin, B., Zhao, Y., & Soto, D. (2014). Working memory biasing of visual perception without awareness. Attention, Perception, & Psychophysics, 76, 1–12. doi:10.3758/s13414-013-0566-2

Papenmeier, F., & Huff, M. (2014). Viewpoint-dependent representation of contextual information in visual working memory. Attention, Perception, & Psychophysics, 76, 663–668. doi:10.3758/s13414-014-0632-4

Pertzov, Y., & Husain, M. (2014). The privileged role of location in visual working memory. Attention, Perception, & Psychophysics, 76, 1–11. doi:10.3758/s13414-013-0541-y

Quak, M., Pecher, D., & Zeelenberg, R. (2014). Effects of motor congruence on visual working memory. Attention, Perception, & Psychophysics, 76, 1–8. doi:10.3758/s13414-014-0654-y

Rajsic, J., & Wilson, D. E. (2014). Asymmetrical access to color and location in visual working memory. Attention, Perception, & Psychophysics, 76, 1–12. doi:10.3758/s13423-013-0502-4

Rouder, J. N., Morey, R. D., Cowan, N., Zwilling, C. E., Morey, C. C., & Pratte, M. S. (2008). An assessment of fixed-capacity models of visual working memory. Proceedings of the National Academy of Sciences, 105, 5975–5979. doi:10.1073/pnas.0711295105

Salmela, V. R., Moisala, M., & Alho, K. (2014). Working memory resources are shared across sensory modalities. Attention, Perception, & Psychophysics, 76, 1–13. doi:10.3758/s13414-014-0714-3

Simons, D. J., & Rensink, R. A. (2005). Change blindness: Past, present, and future. Trends in Cognitive Sciences, 9, 16–20. doi:10.1016/j.tics.2004.11.006

Soto, D., Hodsoll, J., Rotshtein, P., & Humphreys, G. W. (2008). Automatic guidance of attention from working memory. Trends in Cognitive Sciences, 12, 342–348. doi:10.1016/j.tics.2008.05.007

Soto, D., Humphreys, G. W., & Heinke, D. (2006). Working memory can guide pop-out search. Vision Research, 46, 1010–1018.

Souza, A. S., Rerko, L., Lin, H.-Y., & Oberauer, K. (2014). Focused attention improves working memory: Implications for flexible-resource and discrete-capacity models. Attention, Perception, & Psychophysics, 76, 1–23. doi:10.3758/s13414-014-0687-2

Suchow, J. W., Fougnie, D., Brady, T. F., & Alvarez, G. A. (2014). Terms of the debate on the format and structure of visual memory. Attention, Perception, & Psychophysics, 76, 1–9. doi:10.3758/s13414-014-0690-7

Swan, G., & Wyble, B. (2014). The binding pool: A model of shared neural resources for distinct items in visual working memory. Attention, Perception, & Psychophysics, 76, 1–22. doi:10.3758/s13414-014-0633-3

Thornton, T. L., & Gilden, D. L. (2007). Parallel and serial processes in visual search. Psychological Review, 114, 71–103.

Töllner, T., Eschmann, K. C. J., Rusch, T., & Müller, H. J. (2014). Contralateral delay activity reveals dimension-based attentional orienting to locations in visual working memory. Attention, Perception, & Psychophysics, 76, 655–662. doi:10.3758/s13414-014-0636-0

Townsend, J. T., & Wenger, M. J. (2004). The serial–parallel dilemma: A case study in a linkage of theory and method. Psychonomic Bulletin & Review, 11, 391–418. doi:10.3758/BF03196588

Tsal, Y., & Lavie, N. (1988). Attending to color and shape: The special role of location in selective visual processing. Perception & Psychophysics, 44, 15–21. doi:10.3758/BF03207469

Tsuchiya, N., & Koch, C. (2005). Continuous flash suppression reduces negative afterimages. Nature Neuroscience, 8, 1096–1101.

van den Berg, R., & Ma, W. J. (2014). “Plateau”-related summary statistics are uninformative for comparing working memory models. Attention, Perception, & Psychophysics, 76, 1–19. doi:10.3758/s13414-013-0618-7

van Moorselaar, D., Theeuwes, J., & Olivers, C. N. L. (2014). In competition for the attentional template: Can multiple items within visual working memory guide attention? Journal of Experimental Psychology: Human Perception and Performance, 40, 1450–1464. doi:10.1037/a0036229

Vogel, E. K., & Machizawa, M. G. (2004). Neural activity predicts individual differences in visual working memory capacity. Nature, 428, 748–751. doi:10.1038/nature02447

Wilken, P., & Ma, W. J. (2004). A detection theory account of change detection. Journal of Vision, 4(12), 1120–1135. doi:10.1167/4.12.11

Wolfe, J. M. (1998). What can 1 million trials tell us about visual search? Psychological Science, 9, 33–39. doi:10.1111/1467-9280.00006

Wolfe, J. M. (2012). Saved by a log: How do humans perform hybrid visual and memory search? Psychological Science, 23, 698–703. doi:10.1177/0956797612443968

Wolfe, J. M., Alvarez, G. A., Rosenholtz, R., Kuzmova, Y. I., & Sherman, A. M. (2011). Visual search for arbitrary objects in real scenes. Attention, Perception, & Psychophysics, 73, 1650–1671. doi:10.3758/s13414-011-0153-3

Wolfe, J. M., Reinecke, A., & Brawn, P. (2006). Why don’t we see changes? The role of attentional bottlenecks and limited visual memory. Visual Cognition, 14, 749–780. doi:10.1080/13506280500195292

Xing, Y., Ledgeway, T., McGraw, P., & Schluppeck, D. (2014). The influence of spatial pattern on visual short-term memory for contrast. Attention, Perception, & Psychophysics, 76, 1–8. doi:10.3758/s13414-014-0671-x

Xu, Y., & Chun, M. M. (2006). Dissociable neural mechanisms supporting visual short-term memory for objects. Nature, 440, 91–95. doi:10.1038/nature04262

Yu, C. P., Samaras, D., & Zelinsky, G. J. (2014). Modeling visual clutter perception using proto-object segmentation. Journal of Vision, 14(7), 4. doi:10.1167/14.7.4

Zhang, W., & Luck, S. J. (2008). Discrete fixed-resolution representations in visual working memory. Nature, 453, 233–235. doi:10.1038/nature06860

Acknowledgments

This work was supported by Office of Naval Research (ONR) Grant N000141010278, National Institutes of Health (NIH) Grant EY017001, National Geospatial Agency (HM0177-13-1-0001_P00001), and the NSF CELEST Science of Learning Center.

Abstracts of previously published papers

Töllner, T., Eschmann, K. C. J., Rusch, T., & Müller, H. J. (2014). Contralateral delay activity reveals dimension-based attentional orienting to locations in visual working memory. Attention, Perception, & Psychophysics, 76, 655–662. doi:10.3758/s13414-014-0636-0

Abstract In research on visual working memory (WM), a contentiously debated issue concerns whether or not items are stored independently of one another in WM. Here we addressed this issue by exploring the role of the physical context that surrounds a given item in the memory display in the formation of WM representations. In particular, we employed bilateral memory displays that contained two or three lateralized singleton items (together with six or five distractor items), defined either within the same or in different visual feature dimensions. After a variable interval, a retro-cue was presented centrally, requiring participants to discern the presence (vs. the absence) of this item in the previously shown memory array. Our results show that search for targets in visual WM is determined interactively by dimensional context and set size: For larger, but not smaller, set sizes, memory search slowed down when targets were defined across rather than within dimensions. This dimension-specific cost manifested in a stronger contralateral delay activity component, an established neural marker of the access to WM representations. Overall, our findings provide electrophysiological evidence for the hierarchically structured nature of WM representations, and they appear inconsistent with the view that WM items are encoded in isolation.

Papenmeier, F., & Huff, M. (2014). Viewpoint-dependent representation of contextual information in visual working memory. Attention, Perception, & Psychophysics, 76, 663–668. doi:10.3758/s13414-014-0632-4

Johnson, J. S., Simmering, V. R., & Buss, A. T. (2014). Beyond slots and resources: Grounding cognitive concepts in neural dynamics. Attention, Perception, & Psychophysics, 76(6), 1630–1654. doi:10.3758/s13414-013-0596-9

Abstract Research over the past decade has suggested that the ability to hold information in visual working memory (VWM) may be limited to as few as three to four items. However, the precise nature and source of these capacity limits remains hotly debated. Most commonly, capacity limits have been inferred from studies of visual change detection, in which performance declines systematically as a function of the number of items that participants must remember. According to one view, such declines indicate that a limited number of fixed-resolution representations are held in independent memory “slots.” Another view suggests that such capacity limits are more apparent than real, but emerge as limited memory resources are distributed across more to-be-remembered items. Here we argue that, although both perspectives have merit and have generated and explained impressive amounts of empirical data, their central focus on the representations—rather than processes—underlying VWM may ultimately limit continuing progress in this area. As an alternative, we describe a neurally grounded, process-based approach to VWM: the dynamic field theory. Simulations demonstrate that this model can account for key aspects of behavioral performance in change detection, in addition to generating novel behavioral predictions that have been confirmed experimentally. Furthermore, we describe extensions of the model to recall tasks, the integration of visual features, cognitive development, individual differences, and functional imaging studies of VWM. We conclude by discussing the importance of grounding psychological concepts in neural dynamics, as a first step toward understanding the link between brain and behavior.

Cite this article

Wolfe, J.M. Introduction to the special issue on visual working memory. Atten Percept Psychophys 76, 1861–1870 (2014). https://doi.org/10.3758/s13414-014-0783-3

DOI: https://doi.org/10.3758/s13414-014-0783-3