Abstract

In this paper, we present novel semi-blind channel estimation schemes for Rayleigh fading Multi-Input Multi-Output (MIMO) channels. The channel matrix H is decomposed into an upper triangular matrix R, which is estimated blindly using the Householder transformation based QR decomposition of the received output covariance matrix, and a matrix Q, which is estimated using the Tikhonov regularization-based MAP (maximum a posteriori) and MMSE (minimum mean square error) techniques with the help of singular value decomposed orthogonal pilot symbols. Simulation results in terms of BER performance are obtained for BPSK and 4-PSK data modulation schemes using Alamouti coded 2×6 (2 transmitter and 6 receiver antennas) and 2×8 (2 transmitter and 8 receiver antennas) cases for different values of the regularization parameter λ. An appropriate regularization parameter, which gives better BER performance, can be calculated using the discrepancy principle. The results indicate that the proposed semi-blind approach, which combines Householder QR decomposition based blind estimation of R with Tikhonov regularized MMSE and MAP pilot-based estimation of Q, yields good channel estimation performance.


1. Introduction

In this modern and high-tech world of communication, there is a requirement to deploy a robust and efficient wireless system that supports high data rates with fewer errors. MIMO wireless communications have become an integral part of most forthcoming commercial and next generation wireless data communication systems. These systems offer an increase in data throughput and link robustness without any need for additional bandwidth or transmit power [1]–[4].

MIMO antenna technology achieves this objective by spreading the same total transmit power over several antennas to obtain either an array gain that improves the spectral efficiency (more bits per second per Hertz of bandwidth) or a diversity gain that increases the link reliability by reducing the effect of fading [5]–[8].

Many techniques, such as pilot-based, blind, and semi-blind methods, have been explored for channel estimation, since a robust channel estimation method plays a crucial role in the performance of a MIMO system. Pilot-based algorithms are at a disadvantage compared to blind channel estimation methods in terms of spectral efficiency, since blind methods use only the received data statistics and do not require pilot symbols [9], [10]. Hence, as a trade-off between the two techniques, semi-blind methods have been thoroughly studied.

Semi-blind channel estimation approaches combine a few pilot symbols with blind statistical information, which makes it possible to overcome the poor convergence speed and high complexity of blind estimators [11]–[13].

Here we propose and present a novel semi-blind channel estimation scheme. The main steps of this novel scheme are as follows:

Step 1. The matrix R is estimated blindly from the received output data covariance matrix using the Householder transformation based QR decomposition method.

Step 2. The matrix Q is estimated from the orthogonal pilot symbols using the Tikhonov regularization-based MAP and MMSE techniques, with the help of the singular value decomposition of the orthogonal pilots and with an appropriate regularization parameter λ chosen by the discrepancy principle [4], [14]–[16].

The final channel estimate is then calculated as \( \hat{\mathbf{H}} = \mathbf{R}{\hat{\mathbf{Q}}}^{\rm H} \).

Tikhonov’s regularization is a method that works well for ill-conditioned inverse problems [4]. Known in statistics as ridge regression, it is usually helpful in mitigating multicollinearity in linear regression. This paper explores the use of the Tikhonov regularized MMSE and MAP estimators, both training-based methods, to find the unitary matrix Q [17]–[20].

2. System Model

The MIMO system exploits the advantages of spatial diversity through the choice of the number of transmitting and receiving antennas.

The basic model of a spatially multiplexed MIMO system (Fig. 1) has Tx transmitting antennas and Rx receiving antennas, with Rx ≥ Tx. The general equation for the received signal matrix Y is given as

\[ \mathbf{Y} = \mathbf{H}\mathbf{X} + \mathbf{n}, \tag{1} \]

where X is the input signal matrix, H is the channel matrix and n is the noise matrix.

Fig. 1. Basic block diagram of the MIMO system [6].

The channel is modelled by the matrix H. A flat fading scenario is considered, in which the channel characteristics remain constant over a block (frame) of symbols.
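As an illustration of the block-fading model above, the following NumPy sketch draws one Rayleigh channel realization and passes a block of symbols through it, assuming the usual linear form Y = HX + n. The function names `rayleigh_channel` and `transmit`, the unit-variance channel entries, and the chosen noise variance are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def rayleigh_channel(n_rx, n_tx, rng):
    """Draw an n_rx x n_tx flat Rayleigh-fading channel with CN(0, 1) entries."""
    return (rng.standard_normal((n_rx, n_tx))
            + 1j * rng.standard_normal((n_rx, n_tx))) / np.sqrt(2)

def transmit(H, X, noise_var, rng):
    """Return Y = H X + n, where n has i.i.d. CN(0, noise_var) entries."""
    n_rx, n_sym = H.shape[0], X.shape[1]
    noise = np.sqrt(noise_var / 2) * (rng.standard_normal((n_rx, n_sym))
                                      + 1j * rng.standard_normal((n_rx, n_sym)))
    return H @ X + noise

rng = np.random.default_rng(0)
n_tx, n_rx, n_sym = 2, 6, 100            # 2x6 case, one block of 100 symbols
H = rayleigh_channel(n_rx, n_tx, rng)    # held constant over the whole block
X = rng.choice([-1.0, 1.0], size=(n_tx, n_sym)).astype(complex)  # BPSK symbols
Y = transmit(H, X, noise_var=0.1, rng=rng)
```

Because H is drawn once and reused for all columns of X, the sketch reflects the flat, block-constant fading assumption; a new call to `rayleigh_channel` would model the next block.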

3. Proposed Semi-Blind Channel Estimation Technique

3.1. Householder QR Decomposition Based Blind Matrix Calculation

The MIMO channel matrix H can be decomposed as the product \( \hat{\mathbf{H }} = \mathbf{R}{\hat{\mathbf{Q}}}^{\rm H} \) , where R denotes the upper triangular matrix, which is estimated blindly using the Householder decomposition of the output covariance matrix, and Q is estimated using the Tikhonov regularized MAP and MMSE pilot-based methods.

Now using (1), we can write output covariance matrix \( {{\mathbf{R}}_{\mathbf{YY}}} \) as

where \( {{\mathbf{Y}}_{{b}}} \) are the received output data, \( {{\mathbf{X}}_{{b}}} \) are the blind input data, \( \sigma _{n}^{2} \) is the noise power, and \( (\,)^{\rm H} \) denotes the Hermitian (conjugate) transpose.

Next, we can write output covariance matrix \( {{\mathbf{R}}_{\mathbf{YY}}} \) as

Now using the Householder QR transformation of the output covariance matrix, a series of reflection matrices is applied to matrix \( {{\mathbf{R}}_{\mathbf{YY}}} \) column by column to annihilate the lower triangular elements. The orthonormal reflection transformations can be written as follows:

where g is the Householder vector and \( \beta =-2/\left\| \mathbf{g} \right\|_{2}^{2} \) .

To annihilate the lower elements of the K-th column of matrix \( {{\mathbf{R}}_{\mathbf{YY}}} \) , matrix \( {{\mathbf{A}}_{{K}}} \) is constructed as follows:

1. Let g be the K-th column of \( {{\mathbf{R}}_{\mathbf{YY}}} \) .

2. Update the g value by using expression

where \( \boldsymbol{\psi }={{[1,\,0,\,0,\,0]}^{\rm T}} \) .

3. Set \( \beta \) equal to \( \beta =-2/\left\| \mathbf{g} \right\|_{2}^{2} \) .

4. Calculate A as \( \mathbf{A}=\mathbf{I}+\beta \mathbf{g}{{\mathbf{g}}^{{\rm H}}} \) , where I is the identity matrix.

The resulting reflection matrices are then applied to \( {{\mathbf{R}}_{\mathbf{YY}}} \) sequentially by pre-multiplication as follows:

where R is the \( n\times n \) upper triangular matrix and 0 is the null matrix.

So, we can estimate R blindly using the Householder transformation based QR decomposition method.
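The column-by-column reduction described in this subsection can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the function name `householder_qr` is hypothetical, the sample covariance is formed from synthetic data, the Householder vector is updated with the common phase-aligned rule g ← g + e^{jθ}‖g‖₂ψ (a standard numerical choice, not confirmed by the text), and β uses the normalization −2/‖g‖₂², for which each reflection A = I + βgg^H is unitary.

```python
import numpy as np

def householder_qr(M):
    """Reduce a square matrix M to upper triangular form by Householder reflections.

    Returns (Q, R) with M = Q @ R, Q unitary and R upper triangular.
    """
    R = np.array(M, dtype=complex)
    n = R.shape[0]
    Q = np.eye(n, dtype=complex)
    for k in range(n - 1):
        g = R[k:, k].copy()                 # K-th column, from the diagonal down
        norm_g = np.linalg.norm(g)
        if norm_g == 0.0:
            continue                        # nothing to annihilate in this column
        # Assumed update rule: align the added term with the phase of g[0]
        # to avoid cancellation (psi is the first unit vector).
        phase = g[0] / abs(g[0]) if g[0] != 0 else 1.0
        g[0] += phase * norm_g
        beta = -2.0 / np.vdot(g, g).real    # beta = -2 / ||g||^2
        A = np.eye(n, dtype=complex)
        A[k:, k:] += beta * np.outer(g, g.conj())   # A = I + beta g g^H
        R = A @ R                           # pre-multiply by the reflection
        Q = Q @ A.conj().T                  # accumulate the unitary factor
    return Q, np.triu(R)                    # triu removes rounding residue

# Sample output covariance from synthetic received data (6 receive antennas).
rng = np.random.default_rng(1)
Yb = rng.standard_normal((6, 200)) + 1j * rng.standard_normal((6, 200))
R_yy = Yb @ Yb.conj().T / Yb.shape[1]
Q, R = householder_qr(R_yy)
```

In practice `numpy.linalg.qr`, which relies on LAPACK's Householder-based routines, would normally be preferred; the explicit loop is shown only to mirror the four construction steps listed above.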

Using the QR decomposition based semi-blind method, the MIMO channel can then be determined as \( \hat{\mathbf{H }} = \mathbf{R}{\hat{\mathbf{Q}}}^{\rm H} \) [17].

3.2. Tikhonov’s Regularization-based Cost Function for Pilot-based Channel Estimation

When using the Tikhonov regularization based constrained ML estimate to find matrix Q, the cost function is given as follows:

where \( ||\;||_F \) denotes the Frobenius norm, λ is the regularization parameter, \( {\mathbf X}_p \) is the matrix of orthogonal pilot symbols and \( {\mathbf Y}_p \) is the output matrix of pilot symbols. Hence, using (5) we can write:

3.2.1. Tikhonov regularized MAP algorithm

Using the MAP algorithm [7] in conjunction with the Tikhonov regularization method, the channel matrix can be calculated as follows

where \( {\mathbf R}_{hh} \) is the channel covariance matrix and \( {\mathbf R}_{nn} \) is the noise covariance matrix.

Using MAP analysis we can write equation of channel matrix as

where \( {{{\mathbf X}}_{p}^{\ne }} \) is the regularized inverse matrix given as follows

Now we can take SVD of \( {\mathbf X}_p \) and write the following equations

where \( \sigma_i \) are the singular values on the diagonal of matrix \( {\mathbf \Sigma }_x \) , and \( u_i \) and \( v_i \) are the columns (singular vectors) of the orthonormal matrices \( {\mathbf U}_x \) and \( {\mathbf V}_x \) .

Using Tikhonov filter factors

we obtain

Now we can write

then Q can be estimated as follows:

where \( (\,)^{\dagger } \) denotes the pseudo-inverse (Moore-Penrose inverse).
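The SVD-based regularized inverse with Tikhonov filter factors can be sketched numerically as below. Several points are assumptions made for illustration: the penalty is taken as λ²‖·‖_F² (so the filter factors are f_i = σ_i²/(σ_i² + λ²)), the pilot matrix is arranged so that Y_p ≈ X_p W for an unknown matrix W, and the names `tikhonov_inverse`, `W_true`, and `W_hat` are hypothetical. The paper's own scaling of λ and its row/column convention may differ.

```python
import numpy as np

def tikhonov_inverse(Xp, lam):
    """Regularized inverse of Xp from its SVD and Tikhonov filter factors.

    Under the assumed penalty lam**2 * ||.||_F**2 the result equals
    (Xp^H Xp + lam^2 I)^{-1} Xp^H.
    """
    U, s, Vh = np.linalg.svd(Xp, full_matrices=False)
    # f_i / sigma_i with f_i = sigma_i^2 / (sigma_i^2 + lam^2), written as
    # sigma_i / (sigma_i^2 + lam^2) so that lam > 0 never divides by zero.
    gains = s / (s**2 + lam**2)
    # sum_i gains_i * v_i u_i^H
    return (Vh.conj().T * gains) @ U.conj().T

rng = np.random.default_rng(2)
# Synthetic stand-ins for the pilot block: 100 pilot symbols, 2 inputs, 6 outputs.
Xp = rng.standard_normal((100, 2)) + 1j * rng.standard_normal((100, 2))
W_true = rng.standard_normal((2, 6)) + 1j * rng.standard_normal((2, 6))
noise = 0.1 * (rng.standard_normal((100, 6)) + 1j * rng.standard_normal((100, 6)))
Yp = Xp @ W_true + noise
W_hat = tikhonov_inverse(Xp, lam=0.1) @ Yp   # regularized pilot-based estimate
```

As λ tends to zero the filter factors tend to one and the regularized inverse reduces to the Moore-Penrose pseudo-inverse, while larger λ damps the contribution of small singular values.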

3.2.2. Tikhonov regularized MMSE algorithm

Using MMSE algorithm [7] in conjunction with Tikhonov’s method, the channel matrix can be calculated as follows:

Using the same analysis that was applied for MAP, we can write:

The choice of the regularization parameter λ is one of the important points and is discussed in the next section.

3.2.3. Choosing the regularization parameter λ using the discrepancy principle

We can write the solution norm in the form:

where \( ||e||_2\le\delta_e \) , \( {{{\mathbf H}}^{{\lambda }}} \) is the estimated channel for a particular regularization value λ, and \( \delta_e \) is the bound on the norm of the error for the solution that minimizes the cost function. The estimation error can be found as

For the MAP method we can write the equation in the form

Next for the MMSE method, we can write the equation in the form

Now λ is chosen in such a way that

where \( {{{\mathbf H}}^{{\lambda }}}={\mathbf X}_{{p}}^{\ne }{{{\mathbf Y}}_{{p}}} \) and \( {{\alpha }^{2}}=\operatorname{cov}({{{\mathbf Y}}_{{p}}}) \) is the covariance of \( {\mathbf Y}_p \) .

Next, using the generalized cross validation (GCV), we select the parameter λ that minimizes the function \( G(\lambda ) \)
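The two selection rules can be illustrated with a simple grid search. This sketch rests on assumptions that are not taken from the paper: the discrepancy principle is applied in its textbook form (pick the largest λ whose residual norm stays within a bound δ), G(λ) is taken as the standard GCV function ‖AW_λ − B‖² / trace(I − AA_λ^#)², the penalty is λ²‖·‖_F², and all function and variable names are hypothetical.

```python
import numpy as np

def residual_and_trace(A, B, lam):
    """Residual norm ||A W_lam - B||_F and trace(I - A A_lam^#) for a given lam."""
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    f = s**2 / (s**2 + lam**2)              # Tikhonov filter factors
    C = U.conj().T @ B
    # Residual energy inside range(A) (filtered) plus energy outside it.
    inside = np.linalg.norm((1.0 - f)[:, None] * C) ** 2
    outside = max(np.linalg.norm(B) ** 2 - np.linalg.norm(C) ** 2, 0.0)
    return np.sqrt(inside + outside), A.shape[0] - f.sum()

def discrepancy_lambda(A, B, delta, grid):
    """Largest lam on the grid whose residual norm does not exceed delta."""
    ok = [lam for lam in grid if residual_and_trace(A, B, lam)[0] <= delta]
    return max(ok) if ok else min(grid)

def gcv_lambda(A, B, grid):
    """Grid point minimizing the assumed GCV function G(lam)."""
    scores = [r**2 / t**2
              for r, t in (residual_and_trace(A, B, lam) for lam in grid)]
    return grid[int(np.argmin(scores))]

rng = np.random.default_rng(3)
m, n, k, noise_var = 100, 2, 6, 0.05        # synthetic pilot block sizes
A = rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))
W = rng.standard_normal((n, k)) + 1j * rng.standard_normal((n, k))
N = np.sqrt(noise_var / 2) * (rng.standard_normal((m, k))
                              + 1j * rng.standard_normal((m, k)))
B = A @ W + N
grid = np.logspace(-3, 1, 41)               # candidate values of lam
delta = 1.1 * np.sqrt(noise_var * m * k)    # assumed bound on the noise norm
lam_dp = discrepancy_lambda(A, B, delta, grid)
lam_gcv = gcv_lambda(A, B, grid)
```

The residual norm grows monotonically with λ, which is why the discrepancy rule can be implemented as a search for the largest admissible value; GCV needs no noise-level estimate but requires more rows than columns in A so that the trace term stays away from zero.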

4. Simulation Results

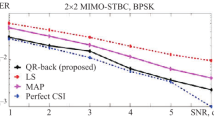

Extensive simulation has been conducted to demonstrate the bit error rate (BER) performance of the proposed novel semi-blind channel estimation techniques.

Results are obtained for BPSK and 4-PSK data modulation schemes with 100 orthogonal pilot symbols for the Alamouti coded cases of 2 transmitter antennas and 6 or 8 receiver antennas, with values of the regularization parameter λ equal to 0.5 and 0.1 derived using the discrepancy principle.

Figures 2 and 3 demonstrate the performance of the Tikhonov regularized MMSE channel estimation technique using the 4-PSK modulation scheme for 6 and 8 receiver antennas, with and without noise. The performance improves by 1 dB for a regularization factor of 0.5 compared to 1 in both cases, and is better still with 8 receiver antennas. Figures 4 and 5 present the same analysis for the BPSK modulation scheme, which performs better than the 4-PSK scheme, with an overall improvement of about 2 to 3 dB compared to the previous case.

Figures 6 to 9 demonstrate the performance of the Tikhonov regularized MAP channel estimation technique using 4-PSK and BPSK for 6 and 8 receiver antennas. Better results, with an improvement of 1.5 dB, are observed for the BPSK scheme with 8 receiver antennas compared to the 4-PSK scheme with 6 receiver antennas, and for a regularization factor of 0.1 compared to 0.5.

A Rayleigh fading MIMO channel has been considered in the simulations, both with noise (the practical case) and without noise.

5. Conclusions

The results have shown that values of the parameter λ equal to 0.1 and less yield the best results in terms of the BER vs SNR relationship. When the number of receive antennas is increased, the results improve due to the MIMO advantage related to spatial diversity.

The BPSK modulation scheme has given better results, since the complexity involved in higher-order modulation schemes is avoided. However, with the demands that the evolving technology puts on MIMO, higher-order modulation schemes will be a necessity for supporting higher data rates with less interference and robust link coverage.

The comparison with the perfect channel showed that the results of the simulations run without noise were close to, and at times the same as, those for the perfect channel case. However, in practical cases, noise will be present no matter how good the environment conditions are. Hence, the simulations with noise should be considered when studying schemes proposed to obtain better channel estimation methods.

This paper proposes a novel semi-blind channel estimation scheme that combines Householder QR decomposition based blind estimation of matrix R with Tikhonov regularized MMSE and MAP algorithms that use pilot symbols for the estimation of matrix Q. The scheme yields good results among channel estimation methods.

References

[1] M. A. M. Moqbel, W. Wangdong, A. Z. Ali, "MIMO channel estimation using the LS and MMSE algorithm," IOSR J. Electron. Commun. Eng., v.12, n.01, p.13 (2017). DOI: https://doi.org/10.9790/2834-1201021322.

[2] M. Biguesh, A. B. Gershman, "Training-based MIMO channel estimation: a study of estimator tradeoffs and optimal training signals," IEEE Trans. Signal Process., v.54, n.3, p.884 (2006). DOI: https://doi.org/10.1109/TSP.2005.863008.

[3] A. K. Jagannatham, B. D. Rao, "Whitening-rotation-based semi-blind MIMO channel estimation," IEEE Trans. Signal Process., v.54, n.3, p.861 (2006). DOI: https://doi.org/10.1109/TSP.2005.862908.

[4] S. Konstantinidis, S. Freear, "Performance analysis of Tikhonov regularized LS channel estimation for MIMO OFDM systems with virtual carriers," Wirel. Pers. Commun., v.64, n.4, p.703 (2012). DOI: https://doi.org/10.1007/s11277-010-0214-2.

[5] F. Wan, W.-P. Zhu, M. N. S. Swamy, "Perturbation analysis of subspace-based semi-blind MIMO channel estimation approaches," in 2008 IEEE International Symposium on Circuits and Systems (IEEE, 2008). DOI: https://doi.org/10.1109/ISCAS.2008.4541371.

[6] P. Meerasri, P. Uthansakul, M. Uthansakul, "Self-interference cancellation-based mutual-coupling model for full-duplex single-channel MIMO systems," Int. J. Antennas Propag., v.2014, p.1 (2014). DOI: https://doi.org/10.1155/2014/405487.

[7] J. Gao, H. Liu, "Low-complexity MAP channel estimation for mobile MIMO-OFDM systems," IEEE Trans. Wirel. Commun., v.7, n.3, p.774 (2008). DOI: https://doi.org/10.1109/TWC.2008.051072.

[8] J. Bhalani, R. Patel, S. Patel, "Performance analysis and implementation of different modulation techniques in Almouti MIMO scheme with Rayleigh channel," in International Conference on Recent Trends in Computing and Communication Engineering (MIT Group of Institutions, Hamirpur, 2013). URI: https://www.seekdl.org/conferences/paper/details/1323.html.

[9] K. B. Petersen, M. S. Pedersen, The Matrix Cookbook (Technical University of Denmark, Lyngby, 2012). URI: https://www.math.uwaterloo.ca/~hwolkowi/matrixcookbook.pdf.

[10] J. Proakis, M. Salehi, Digital Communications (McGraw-Hill Science/Engineering/Math, New York, 2007). URI: http://www.amazon.com/Digital-Communications-Edition-John-Proakis/dp/0072957166.

[11] D. Pal, "Fractionally spaced semi-blind equalization of wireless channels," in [1992] Conference Record of the Twenty-Sixth Asilomar Conference on Signals, Systems & Computers (IEEE Comput. Soc. Press, Washington, 1992). DOI: https://doi.org/10.1109/ACSSC.1992.269117.

[12] E. de Carvalho, D. T. M. Slock, "Asymptotic performance of ML methods for semi-blind channel estimation," in Conference Record of the Thirty-First Asilomar Conference on Signals, Systems and Computers (Cat. No.97CB36136) (IEEE Comput. Soc, Washington, 1998). DOI: https://doi.org/10.1109/ACSSC.1997.679177.

[13] A. Medles, D. T. M. Slock, E. De Carvalho, "Linear prediction based semi-blind estimation of MIMO FIR channels," in 2001 IEEE Third Workshop on Signal Processing Advances in Wireless Communications (SPAWC’01). Workshop Proceedings (Cat. No.01EX471) (IEEE, Washington, 2001). DOI: https://doi.org/10.1109/SPAWC.2001.923843.

[14] A. Jagannatham, B. D. Rao, "A semi-blind technique for MIMO channel matrix estimation," in 2003 4th IEEE Workshop on Signal Processing Advances in Wireless Communications - SPAWC 2003 (IEEE Cat. No.03EX689) (IEEE, Washington, 2004). DOI: https://doi.org/10.1109/SPAWC.2003.1318971.

[15] X. Liu, F. Wang, M. E. Bialkowski, "Investigation into a whitening-rotation-based semi-blind MIMO channel estimation for correlated channels," in 2008 2nd International Conference on Signal Processing and Communication Systems (IEEE, Washington, 2008). DOI: https://doi.org/10.1109/ICSPCS.2008.4813728.

[16] Q. Zhang, W.-P. Zhu, Q. Meng, "Whitening-rotation-based semi-blind estimation of MIMO FIR channels," in 2009 International Conference on Wireless Communications & Signal Processing (IEEE, Washington, 2009). DOI: https://doi.org/10.1109/WCSP.2009.5371541.

[17] J. Bhalani, D. Chauhan, Y. P. Kosta, A. I. Trivedi, "Novel semi-blind channel estimation schemes for Rician fading MIMO channel," Radioelectron. Commun. Syst., v.55, n.4, p.149 (2012). DOI: https://doi.org/10.3103/S0735272712040012.

[18] S. B. Patel, J. Bhalani, Y. N. Trivedi, "Performance of full rate non-orthogonal STBC in spatially correlated MIMO systems," Radioelectron. Commun. Syst., v.63, n.2, p.88 (2020). DOI: https://doi.org/10.3103/S0735272720020041.

[19] P. Sagar, B. Jaymin, "Near optimal receive antenna selection scheme for MIMO system under spatially correlated channel," Int. J. Electr. Comput. Eng., v.8, n.5, p.3732 (2018). DOI: https://doi.org/10.11591/ijece.v8i5.pp3732-3739.

[20] K. C. Jomon, S. Prasanth, "Joint channel estimation and data detection in MIMO-OFDM using distributed compressive sensing," Radioelectron. Commun. Syst., v.60, n.2, p.80 (2017). DOI: https://doi.org/10.3103/S0735272717020029.

Author information

Payal Saxena, Sagar B. Patel, and Jaymin K. Bhalani

Ethics declarations

The authors declare that they have no conflict of interest.

The initial version of this paper in Russian is published in the journal “Izvestiya Vysshikh Uchebnykh Zavedenii. Radioelektronika,” ISSN 2307-6011 (Online), ISSN 0021-3470 (Print) on the link http://radio.kpi.ua/article/view/S0021347021040014 with DOI: https://doi.org/10.20535/S0021347021040014

Additional information

Translated from Izvestiya Vysshikh Uchebnykh Zavedenii. Radioelektronika, No. 4, pp. 195-203, April, 2021 https://doi.org/10.20535/S0021347021040014 .

Cite this article

Saxena, P., Patel, S.B. & Bhalani, J.K. MIMO Semi-blind Channel Estimation by using Tikhonov Regularized MMSE and MAP Algorithms with Householder Transformation based QR Decomposition. Radioelectron.Commun.Syst. 64, 165–173 (2021). https://doi.org/10.3103/S0735272721040014


DOI: https://doi.org/10.3103/S0735272721040014