Abstract

Epileptic seizure (ES) detection is an active research area that aims at patient-specific ES detection with high accuracy from electroencephalogram (EEG) signals. Early detection of seizures is crucial for timely medical intervention and for preventing further injury to patients. This work proposes a robust deep learning framework called HyEpiSeiD that extracts self-trained features from pre-processed EEG signals using a hybrid combination of a convolutional neural network followed by two gated recurrent unit layers, and performs prediction based on those extracted features. The proposed HyEpiSeiD framework is evaluated on two public datasets, the UCI Epilepsy and Mendeley datasets. It achieved 99.01% and 97.50% classification accuracy, respectively, outperforming most state-of-the-art methods in the epilepsy detection domain.

1 Introduction

Epilepsy is a brain disorder, caused by various factors, that affects many people all over the world. It causes frequent and unpredictable disruptions to normal brain activity, leading to symptoms such as uncontrollable jerking and loss of consciousness. An epileptic seizure, in turn, is a sudden, uncontrolled, and abnormal electrical disturbance in the brain that leads to a wide range of symptoms or behaviours. According to the World Health Organization (WHO), over 50 million people across different age groups and residential backgrounds are affected by epilepsy [1,2,3], and many of them die for lack of proper treatment. A report shows that there is a significant shortage of neurologists [4] who can treat this kind of disease [5,6,7]. Therefore, automating epileptic seizure detection can aid neurologists and allied health providers in the treatment of such diseases.

Electroencephalography (EEG) [8] is a method that measures the electrical activity of different regions of the human brain [9]. It was first introduced by Hans Berger, who aimed to study the human brain. EEG is beneficial in the diagnosis of different kinds of brain disorders. During epileptic seizures, the EEG data of a patient's brain behaves differently from that recorded under normal brain conditions. A detailed study of the EEG signals of different patients during epileptic seizures helps identify the specific characteristics of those signals that occur only during seizures. In this work, we propose a deep learning framework that learns features from the EEG data of epilepsy patients by itself and predicts the epileptic activity of the brain from unseen EEG data. There are many physiological methods [10,11,12] other than EEG for obtaining information about epilepsy. However, EEG provides a noninvasive biophysical examination method for medical experts to study the characteristics of epilepsy, and most of the other methods cannot offer as much detailed information about a patient's epilepsy condition as EEG can. Therefore, we chose EEG recordings of patients' brain activity for our epilepsy detection task.

Lately, Artificial Intelligence (AI), notably machine learning (ML) and deep learning (DL), has drawn considerable attention from researchers, spurring contributions across various fields and demanding research tasks including anomaly detection [13,14,15], signal analysis [16,17,18,19,20,21,22,23,24,25,26,27,28], neurodevelopmental disorder assessment and classification focusing on autism [29,30,31,32,33,34,35,36,37], neurological disorder detection and management [38,39,40,41,42,43,44], supporting the detection and management of the COVID-19 pandemic [45,46,47,48,49,50,51,52], elderly monitoring and care [53], cyber security and trust management [54,55,56,57,58,59], ultrasound imaging [60], various disease detection and management [61,62,63,64,65,66,67,68], smart healthcare service delivery [69,70,71], text and social media mining [72,73,74], understanding student engagement [75, 76], etc. The epilepsy detection problem has been tackled mainly by traditional machine learning approaches [77,78,79], which consist of extracting quality features and then classifying the feature set as epileptic or not. Unlike deep learning, these approaches require manual feature engineering, which is subject to several rounds of trial and error before optimal performance is reached. The performance of these methods also varies across datasets.

Therefore, we have proposed a deep learning framework [80, 81] for robust classification of EEG datasets. We have chosen the deep learning paradigm since it eliminates the trouble of hand-crafted feature extraction and automatically generates all informative self-learned feature sets [25, 82].

We have chosen one-dimensional convolutional neural networks (CNN) and a special kind of recurrent neural network (RNN) for our study. Our model framework consists of four 1D convolutional layers, a fully connected layer, and two Gated Recurrent Unit (GRU) layers [83]. The last layer is a Softmax layer, which performs the final prediction. Our model pipeline is evaluated on two public datasets, i.e. (i) the UCI Epilepsy dataset [84] and (ii) the Mendeley dataset by Renuka Khati [85], on which it outperforms most state-of-the-art methods. Initially, we ran our model without the GRU component. Including GRU in our model pipeline improved the performance metrics by a significant margin. The results of both model pipelines (with and without GRU) are compared in the Results section.

2 Related work

Several studies have focused on epileptic seizure recognition from EEG signals, employing different techniques and approaches for accurately identifying and classifying seizures into their respective classes. This section reviews some recent state-of-the-art methods in this field. The problem of EEG-based epileptic seizure recognition has been broadly investigated over the past three decades. Initially, only traditional machine learning algorithms were used in epilepsy detection [77,78,79]. Later, deep learning methods also came into the picture. Most deep learning state-of-the-art methods [86,87,88] give more robust classification results than traditional ones. Some such methods are reviewed and summarised here.

Chandaka et al. [89] proposed a method in which three statistical features were computed from EEG cross-correlation coefficients and presented as a feature vector to a support vector machine (SVM). This method achieved an accuracy of 95.96% in detecting epileptic seizures from EEG data. Aarabi et al. [90] proposed a method in which features are extracted from the time, frequency, and wavelet domains and fed into a back-propagation neural network; it achieved a classification accuracy of 93.00%. Yuan et al. [91] used the Hurst exponent and entropy as a set of non-linear features with an extreme learning machine (ELM) classifier, which led to good accuracy in epilepsy detection. Many authors have used the wavelet transform to extract representative features from EEG data. Subasi et al. [92] used spectral components derived from the wavelet transform as input to a mixture of experts, achieving a classification accuracy of 94.50%. Khan et al. [93] also used the wavelet transform, processing wavelet coefficients in the lower frequency range to compute representative features from EEG signals; this method gives around 91.80% classification accuracy. Kumar et al. [94] proposed another wavelet-based method in which EEG signals are divided into five frequency bands, a set of non-linear features is extracted, and the features are fed into an SVM classifier. This method achieved a classification accuracy of 97.50%. Nicolaou et al. [95] used permutation entropy as a feature and employed an SVM, obtaining an average classification accuracy of 93.28%. Song et al. [96] employed weighted permutation entropy features along with an SVM to obtain a better classification result, around 97.25% accuracy.

Some of the most recent works in this domain combine different models or statistical feature selection methods to obtain robust classification results. Rohan et al. [97] combined an artificial neural network with an XGBoost model for classification, reaching around 98.26% accuracy. Shankar et al. [98] combined an artificial neural network with principal component analysis (PCA) and achieved around 97.55% accuracy on EEG data. Similarly, Rahman et al. [99] proposed a combination of an SVM and a multi-layer perceptron classifier, achieving around 97.27% accuracy, 96.93% precision, and 94.53% recall. Prakash et al. [100] introduced gated recurrent units in their method, achieving around 98.84% classification accuracy. Raibag et al. [101] first performed PCA for dimensionality reduction of the EEG data and then employed an SVM with a radial basis function (RBF) kernel, giving around 96% classification accuracy. Osman et al. [102] proposed a self-organizing map (SOM) together with an RBF neural network. The SOM reduces the feature dimensionality of the raw EEG data by converting the high-dimensional data into a two-dimensional map, and the RBF neural network then performs the classification; this method gives around 97.47% classification accuracy. Upadhyaya et al. [103] introduced the BAT algorithm for optimal feature selection from EEG data, giving around 96.78% classification accuracy. Woodbright et al. [104] used convolutional neural networks with pooling layers to capture spatial and temporal feature characteristics of EEG data, giving around 98.65% classification accuracy. Wang et al. [105] introduced a rule-based classifier: noise reduction and signal normalization are first performed to pre-process the EEG data, followed by a rule-based classifier such as Random Forest. Guha et al. [106] used an artificial neural network for epilepsy detection.

We can see that most state-of-the-art methods in the epilepsy recognition domain use either traditional machine learning algorithms or simple deep learning methods. Very few recent works use a hybrid combination of models or statistical feature selection methods. Most state-of-the-art methods focus mainly on robust pre-processing of raw EEG data rather than on model selection. In this study, we focus instead on finding a suitable combination of models for our pipeline so that it performs well across datasets. Since EEG signal data is a special type of time-series data, we have included a 1D convolutional neural network in our model pipeline. RNN models generally perform well on sequential data, and EEG signals are sequential, so we have also included an RNN in our model architecture. After several experiments, we have developed a hybrid CNN+GRU model that gives robust classification results on the EEG datasets we evaluated. We have benchmarked the performance of our model framework against some of the most recent state-of-the-art methods.

2.1 Motivation behind using this framework

In our model framework, we have used a hybrid 1D-CNN and GRU combination for epilepsy detection from EEG data. We considered several other approaches to this problem before settling on this framework. Most of the work carried out in the field of epilepsy detection revolves around traditional machine learning algorithms, wavelet transformations, statistical feature analysis, etc. Here, we take a different approach that gives more robust classification results. Moreover, many state-of-the-art methods were evaluated on only one dataset. Although those methods perform very well on that particular dataset, their ability to generalize remains an open question. Therefore, we have evaluated our model framework on more than one dataset to support its wider applicability.

EEG data is a special kind of time-series data. We therefore looked for models that give good classification results on time-series data. We experimented with several such models and finally decided to use a 1D CNN as part of our overall model framework.

We experimented with many models and then decided to use a hybrid combination of the models that performed best on the datasets. The result is our model framework, named HyEpiSeiD, a hybrid combination of CNN and GRU. The implementation of our model pipeline is described in more detail in Sect. 3.

3 Proposed HyEpiSeiD framework

This section provides a detailed overview of our model pipeline for epilepsy detection from EEG signal data. Figure 1 gives an abstract overview of the different stages of our model pipeline and how the stages interact with one another.

Our HyEpiSeiD model pipeline architecture consists of the following stages.

- Feeding the time-series data into four consecutive 1D CNN layers.
- Self-learned features from the 1D convolutional layers are then passed through a pooling layer.
- Further, a dropout layer is added after the pooling layer to prevent redundant features from propagating further.
- To obtain better accuracy on our datasets, we have introduced GRU layers, a special type of RNN, which further improve our model's performance.
- Finally, a Softmax function is applied at the last layer of our model pipeline for the final classification.

In our study, we have performed two types of classification: (i) 2-class classification, where we aim to check whether an EEG signal is epileptic, and (ii) 5-class classification, where the non-epileptic EEG signals are further divided into different classes, bringing the total number of classes to 5. We aim to classify all EEG signals into their respective classes.

Here, the datasets used in our study are already pre-processed and converted to time-series data. We are directly feeding them in our model architecture.

The stages of our model pipeline mentioned above are explained in detail in the following subsections.

3.1 Feed time series data into 1D convolution

In our study, the datasets we have used are already pre-processed and converted into time-series data. We have used those time-series data directly as input to our model pipeline. The first stage of our model architecture consists of four 1D convolutional layers. These layers extract self-learned features from the input vector, which are then used for classification. The architecture of each of the four layers is explained below.

We have used four 1D CNNs in our model pipeline, all with different architectures, shown in Fig. 2. The input vector of the time-series dataset is directly fed into the first 1D CNN of our model architecture. The first 1D CNN consists of 64 kernels, each with a size of 3\(\times\)1. This convolutional layer is followed by a Rectified Linear Unit (ReLU) activation function, which introduces non-linearity to our model pipeline. The mathematical definition of our 1D convolutional network is explained below.

\[y_j^{l} = \text{ReLU}\left(\sum_{i=1}^{N_{l-1}} \text{conv1D}\left(w_{i,j}^{l},\, x_i^{l-1}\right) + b_j^{l}\right) \tag{1}\]

where \(x_i^{l-1}\) represents the \(i^{\text {th}}\) feature map in the model's \((l-1)^{\text {th}}\) convolutional layer, \(y_j^{l}\) represents the \(j^{\text {th}}\) feature map in the model's \(l^{\text {th}}\) convolutional layer, \(N_{l-1}\) represents the total number of feature maps in the \((l-1)^{\text {th}}\) layer, \(w_{i,j}^l\) denotes the weights of the convolutional kernel connecting the two feature maps, and \(b_j^{l}\) denotes the bias of the \(j^{\text {th}}\) feature map in the model's \(l^{\text {th}}\) layer. In Eq. (1), conv1D() represents the one-dimensional convolution operation on the trainable parameters, and ReLU represents the ReLU operation, which introduces non-linearity in our model. The ReLU activation function is defined as follows.

\[\text{ReLU}(x) = \max(0, x)\]

The ReLU function passes its input through unchanged when the input is greater than 0; otherwise, it returns 0. Thus, it introduces non-linearity in our model and allows it to capture the dataset's non-linear patterns. After the convolution operation, the layer outputs 64 feature maps, which are passed to the next CNN layer.
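To make Eq. (1) and the ReLU step concrete, the following is a minimal pure-Python sketch of one 1D convolutional layer followed by ReLU. It is illustrative only and is not the TensorFlow implementation used in this work. The function names `relu` and `conv1d_relu`, the stride of 1, and the absence of padding are assumptions, since the text does not state them. As in most deep learning toolboxes, the kernel is slid over the input without flipping (cross-correlation).

```python
def relu(x):
    """ReLU(x) = max(0, x)."""
    return x if x > 0.0 else 0.0


def conv1d_relu(feature_maps, kernels, biases):
    """One 1D convolutional layer followed by ReLU (sketch of Eq. (1)).

    feature_maps: list of N_{l-1} input maps x_i^{l-1}, each a list of numbers
    kernels:      kernels[j][i] is the kernel w_{i,j}^l linking input map i
                  to output map j (a list of numbers)
    biases:       biases[j] is the bias b_j^l of output map j

    Assumption: stride 1 and no padding, so each output map is shorter
    than the input by (kernel_size - 1).
    """
    in_len = len(feature_maps[0])
    outputs = []
    for j, bias in enumerate(biases):
        k = len(kernels[j][0])
        out_len = in_len - k + 1
        y_j = []
        for t in range(out_len):
            total = bias
            # Sum the responses over all input feature maps.
            for i, x in enumerate(feature_maps):
                w = kernels[j][i]
                total += sum(w[m] * x[t + m] for m in range(k))
            y_j.append(relu(total))
        outputs.append(y_j)
    return outputs
```

For example, `conv1d_relu([[1, -2, 3, -4, 5]], [[[1, 0, -1]]], [0.0])` yields a single feature map `[0.0, 2.0, 0.0]`: the negative responses at the first and last positions are clipped to zero by ReLU.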

The second 1D convolutional layer takes these 64 feature maps as input and performs the feature learning process again as the previous convolution layer. The second 1D convolution layer consists of 64 kernels, each with a size of 3\(\times\)1. As before, the CNN layer is followed by a ReLU activation function. This layer outputs 64 feature maps, further fed into the next convolutional layer.

The third convolutional layer takes 64 feature maps as input from the previous layer and performs the same operation as the previous one. This 1D CNN layer consists of 64 kernels, each kernel of size 3\(\times\)1. This layer extracts useful features from input and outputs 64 feature maps. As with other previous CNN layers, this CNN layer is also followed by a ReLU activation function. This ReLU portion helps to identify non-linear patterns of data, which leads to more accurate classification. The outputted feature maps are again fed into the next CNN layer.

The fourth CNN layer consists of 64 kernels, each with a size of 3\(\times\)1. This is the last convolution layer used in our model framework. It takes 64 feature maps as input and outputs 64 feature maps. This layer is also followed by a ReLU activation function.

These are the model architectures of all 1D convolutional layers used in our framework. Using four 1D CNNs leads our model to extract the most insightful features, training them properly and propagating them throughout the rest of the layers of our model. Since we have converted our dataset into time-series data, we have decided to use 1D CNN since it generally gives good accuracy performance measures on time-series data. Finally, 64 feature maps, which the last CNN layers have outputted, are passed to the pooling layer for further operation.
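The effect of stacking four such layers on the tensor shape and parameter count can be traced with a few lines of arithmetic. The sketch below assumes a single-channel input of 178 samples (one row of the pre-processed UCI file), stride 1, and no padding; none of these are stated in the text, and the helper names are illustrative. With "same" padding, the length would stay at 178 instead.

```python
def conv1d_output_length(length, kernel_size, stride=1):
    """Output length of a 1D convolution without padding."""
    return (length - kernel_size) // stride + 1


def conv1d_param_count(in_channels, out_channels, kernel_size):
    """Weights (kernel_size * in * out) plus one bias per output map."""
    return kernel_size * in_channels * out_channels + out_channels


def trace_conv_stack(input_length=178, input_channels=1,
                     n_layers=4, n_kernels=64, kernel_size=3):
    """Return (final_length, final_channels, total_params) for the stack."""
    length, channels, total_params = input_length, input_channels, 0
    for _ in range(n_layers):
        total_params += conv1d_param_count(channels, n_kernels, kernel_size)
        length = conv1d_output_length(length, kernel_size)
        channels = n_kernels
    return length, channels, total_params


if __name__ == "__main__":
    print(trace_conv_stack())
```

Under these assumptions the four layers reduce the length from 178 to 170, produce 64 feature maps, and hold 256 + 3 × 12,352 = 37,312 trainable parameters.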

3.2 Feed CNN layer output into pooling layer

Finally, after four 1D CNN layers, the features are fed into the pooling layer. In our model framework, we have only used one pooling layer, which uses the Max pooling method. The 64 features from the last one-dimensional convolutional layer are directly passed into this pooling layer. The mathematical explanation of max pooling can be defined as below.

\[p_i^{a} = \max\left\{\, p_i^{a_1} : a \le a_1 < a + s \,\right\}\]

where \(p_i^a\) denotes the value of the \(a^{\text {th}}\) neuron in the \(i^{\text {th}}\) feature map after the max pooling operation, \(p_i^{a_1}\) denotes the value of the \(a_1^{\text {th}}\) neuron in the \(i^{\text {th}}\) feature map obtained from the previous layer, max() returns the maximum neuron value within the pooling window, and s is the size of the pooling window. In our model, the size of the pooling window is set to 2, and the stride of the pooling window is also set to 2. This layer reduces the dimensionality of the feature maps (and hence the number of parameters in subsequent layers), accelerates the training process, and reduces the probability of overfitting.

After the Maxpooling operation, 64 feature mappings are outputted with reduced dimensions and further propagated throughout the model pipeline.
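As a small illustration of the max pooling step with a window of 2 and a stride of 2, the sketch below pools a single feature map. The function name `max_pool_1d` is illustrative, and dropping an incomplete trailing window is an assumption (the behaviour for odd-length inputs is not described in the text).

```python
def max_pool_1d(feature_map, window=2, stride=2):
    """Max pooling over one feature map.

    Each output value is the maximum of `window` consecutive inputs;
    windows start every `stride` positions. A trailing window that
    would run past the end of the input is dropped (assumption).
    """
    last_start = len(feature_map) - window
    return [max(feature_map[a:a + window])
            for a in range(0, last_start + 1, stride)]
```

With these settings the length of each feature map is halved: a map of 170 values becomes a map of 85 values, while the number of feature maps (64) is unchanged.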

3.3 Feed output features into a fully connected layer and dropout layer

After max pooling, we obtain 64 feature maps as output. These are passed through a fully connected (FC) layer followed by a dropout layer. The FC layer has 128 nodes, each with a ReLU activation function, and forwards its output to the next layer. The dropout layer uses a dropout rate of 50%: during training, each activation is set to zero at random with probability 0.5, so on average half of the activations are not propagated further in a given training step. This discourages the model from relying on redundant features and reduces the risk of overfitting. The remaining activations are propagated to the next layer.
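The behaviour of a dropout layer with a 50% rate can be sketched as follows. This is the common "inverted dropout" formulation, in which surviving activations are rescaled during training and the layer does nothing at inference time; it is shown as an assumption about how the layer behaves, not as a description of the exact code used in this work. The function name and the `rng` parameter are illustrative.

```python
import random


def dropout(activations, rate=0.5, training=True, rng=None):
    """Inverted dropout on a list of activations.

    During training, each activation is zeroed with probability `rate`
    and the survivors are divided by (1 - rate) so that the expected
    value is unchanged. At inference time the input is returned as is.
    """
    if not training or rate == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Because the mask is random, a different subset of activations is dropped in every training step; no feature is discarded permanently.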

3.4 Feed output features into gated recurrent units

After the dropout layer, the features are fed into two GRU layers. We have integrated an RNN [107] with the CNN in our model framework: since both RNNs and CNNs perform well on time-series data, we combine them to make use of the strengths of both. Here, we have used a special type of RNN, the GRU. Two GRU layers follow the dropout layer in our model framework. The architecture of a GRU network is presented in Fig. 3. The first GRU layer consists of 64 nodes and returns the full sequence of outputs, one for each time step of the time-series input. The second GRU layer has 128 nodes and also returns the full sequence of outputs for each time step. An RNN stores its previously generated output in its hidden state and reuses it as input at the next step, allowing the network to capture sequential dependencies. The GRU is a type of RNN designed to perform well on sequential data such as text and time series; it uses gating mechanisms that selectively control the flow of information into and out of the hidden state at each time step. The GRU consists of three gating mechanisms: (i) reset gate, (ii) update gate, and (iii) current memory gate.

The reset gate checks how much of the past data, stored in the hidden state, needs to be discarded in the next iteration. This gate discards all the unnecessary past information stored in the hidden state memory.

The update gate checks how much past data needs to be reused in the next iteration. It also updates the hidden state with new input data. This gate finally determines the output of GRU.

The current memory gate is often described as a sub-part of the reset gate. It applies a tanh non-linearity to the input, which makes the transformed input zero-centred.

The mathematical representation for each gate of GRU is given below.

3.4.1 Reset gate

\[r_t = \text{sig}\left(w_r \cdot [h_{t-1}, x_t]\right)\]

where \(r_t\) denotes the state after the reset gate operation, \(w_r\) denotes the learnable weight matrix, \(x_t\) represents the input at timestamp t, and \(h_{t-1}\) represents the hidden state value at timestamp \((t-1)\).

3.4.2 Update gate

\[z_t = \text{sig}\left(w_z \cdot [h_{t-1}, x_t]\right)\]

where \(z_t\) denotes the state after the update gate operation, \(w_z\) denotes the learnable weight matrix, \(x_t\) represents the input value at timestamp t, and \(h_{t-1}\) represents the hidden state value at timestamp \((t-1)\).

3.4.3 Candidate hidden state

\[\tilde{h}_t = \tanh\left(w_h \cdot [r_t \odot h_{t-1}, x_t]\right)\]

where \(\tilde{h}_t\) represents the candidate hidden state, \(w_h\) represents the learnable weight matrix, \(x_t\) represents the input at timestamp t, \(h_{t-1}\) represents the hidden state at timestamp \((t-1)\), \(r_t\) represents the state after the reset gate operation, and \(\odot\) denotes element-wise multiplication.

3.4.4 Hidden state

\[h_t = z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t\]

where \(h_t\) represents the hidden state at timestamp t, \(\tilde{h}_t\) represents the candidate hidden state, \(h_{t-1}\) represents the hidden state at timestamp \((t-1)\), \(z_t\) represents the state after the update gate operation, and \(\odot\) denotes element-wise multiplication. In all the above equations, sig() denotes the sigmoid activation function and tanh() denotes the hyperbolic tangent activation function.
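The four gate operations can be combined into a single GRU time step. The pure-Python sketch below assumes the standard GRU formulation without bias terms, with each weight matrix acting on the concatenation of the previous hidden state and the current input, and with the update gate weighting the previous hidden state (toolboxes differ on this last convention). All function names are illustrative, and this is not the TensorFlow layer used in this work.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def gru_step(x_t, h_prev, w_r, w_z, w_h):
    """One GRU time step.

    x_t:    input vector at time t
    h_prev: hidden state at time t-1
    w_r, w_z, w_h: weight matrices of shape
                   (hidden_size, hidden_size + input_size)
    """
    concat = h_prev + x_t                       # [h_{t-1}, x_t]
    r_t = [sigmoid(v) for v in matvec(w_r, concat)]   # reset gate
    z_t = [sigmoid(v) for v in matvec(w_z, concat)]   # update gate
    # Candidate state uses the reset-gated previous hidden state.
    gated = [r * h for r, h in zip(r_t, h_prev)] + x_t
    h_cand = [math.tanh(v) for v in matvec(w_h, gated)]
    # Blend the previous state and the candidate with the update gate.
    return [z * h + (1.0 - z) * c
            for z, h, c in zip(z_t, h_prev, h_cand)]
```

Returning the full sequence, as both GRU layers in the framework do, corresponds to calling `gru_step` once per time step and collecting every intermediate hidden state rather than only the last one.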

3.5 Final classification

The outputs obtained from the two GRU layers are passed into three fully connected layers and a dropout layer, followed by a softmax activation function that performs the final classification. The architectures of the FC layers are as follows. The first FC layer consists of 64 nodes, each with a ReLU activation function, and forwards its features to the next FC layer. The second FC layer also consists of 64 nodes, each with ReLU as its activation function; it takes input from the previous FC layer and forwards it to the next FC layer. The third and final FC layer consists of 128 nodes with a ReLU activation function. The 128 features from this layer are fed into a dropout layer with a rate of 50%, which randomly zeroes half of the activations on average during training to reduce overfitting. Finally, the resulting features are passed into the Softmax activation function, which performs the final classification.
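The softmax function used for the final classification converts the raw scores of the last layer into class probabilities. A minimal sketch is given below; the function name is illustrative, and subtracting the maximum score before exponentiation is a standard numerical-stability step rather than something described in the text.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)                      # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(scores):
    """Index of the most probable class."""
    probs = softmax(scores)
    return probs.index(max(probs))
```

For 2-class classification the output has two probabilities, and for 5-class classification it has five; in both cases the predicted class is the one with the highest probability.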

4 Experimental design

Here, we explain the experimental design of our pipeline, the datasets that we used to evaluate our proposed model framework, and also the performance results we obtained on those datasets while evaluating our model. Finally, we list some comparisons of our model framework with existing state-of-the-art methods in epilepsy detection.

4.1 Datasets

Our proposed HyEpiSeiD framework is evaluated on two publicly available datasets. The two datasets used in our model evaluation are listed below.

1. UCI Epilepsy dataset
2. Mendeley dataset by Renuka Khati

4.1.1 UCI Epilepsy dataset

This dataset consists of 5 folders, each containing 100 files. Each file holds a recording of the brain activity of one person over 23.6 s, sampled into 4097 data points. These raw data files have already been pre-processed for our study: each recording is divided into segments of 178 data points, each segment corresponding to one second of the recording. Hence, the pre-processed .csv file has 178 columns, each representing the EEG value at a specific time point within the one-second segment. This dataset has five classes in total. The meaning of each class label is given below.

Class 5—Label 5 indicates that the person’s eyes are open, meaning the patient had their eyes open when the brain’s EEG signal was being recorded.

Class 4—Label 4 indicates that the person’s eyes are closed, which means the patient had their eyes closed when the EEG signal had been recorded.

Class 3—Label 3 indicates that the region of the tumour in the brain was identified after recording the EEG activity from the healthy brain area.

Class 2—Label 2 indicates that the EEG signal was recorded from where the tumour was located.

Class 1—This label indicates the recording of seizure activity.
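The segmentation of each recording described at the start of this subsection can be sketched as follows. The figures 4097 and 178 come from the text; the number of segments per recording (23) follows from integer division, and dropping the few leftover samples is an assumption, since the handling of the remainder is not described here. The function name is illustrative.

```python
def split_into_segments(signal, segment_length=178):
    """Split one recording into consecutive, non-overlapping segments.

    Samples left over after the last full segment are dropped (assumption).
    """
    n_segments = len(signal) // segment_length
    return [signal[i * segment_length:(i + 1) * segment_length]
            for i in range(n_segments)]


if __name__ == "__main__":
    recording = list(range(4097))        # stand-in for one EEG recording
    segments = split_into_segments(recording)
    print(len(segments), len(segments[0]))
```

Under this reading, each of the 5 × 100 recordings contributes 23 rows of 178 values, giving 11,500 rows in total.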

Class-wise data distribution in this UCI Epilepsy dataset is tabulated in Table 1. Here, we have performed two kinds of classification:

2-class classification—In our UCI epilepsy dataset, only class 1 represents epilepsy seizure, and the rest do not. Therefore, in the 2-class classification, we have only classified whether a set of data points at a particular timestamp represents an epileptic seizure. Hence, classes 2,3,4,5 of the original dataset are merged here and transformed into one class. Class-wise distribution of data in this UCI Epilepsy dataset for 2 class classification is tabulated in Table 1.

5-class classification—Here, the number of classes for the classification task is 5. We are not only limited to performing the classification of EEG signals into epileptic and non-epileptic. Here, non-epileptic EEG signal data are further classified into four classes. Class-wise distribution of data in this UCI Epilepsy dataset for 5-class classification is tabulated in Table 2.
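The relabelling used for the 2-class task, in which class 1 is kept as the seizure class and classes 2 to 5 are merged, can be sketched as below. The encoding of the merged class as 0 and the function name are assumptions made for illustration.

```python
from collections import Counter


def to_binary_label(label):
    """Map the original labels 1..5 to 1 (seizure) or 0 (non-seizure)."""
    if label not in (1, 2, 3, 4, 5):
        raise ValueError(f"unexpected class label: {label}")
    return 1 if label == 1 else 0


if __name__ == "__main__":
    labels = [1, 2, 3, 4, 5, 1, 5, 3]    # toy example, not real data
    print(Counter(to_binary_label(y) for y in labels))
```

One consequence of this merging is class imbalance: when the five original classes are equally represented, the non-seizure class is four times as large as the seizure class.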

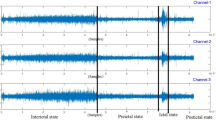

4.1.2 Mendeley dataset by Renuka Khati

This dataset consists of 3 folders named (i) set b, (ii) set d, and (iii) set e. Each folder contains text (.txt) files with EEG time-series data for different persons. The set b folder contains normal EEG signal data, the set d folder contains preictal EEG signal data, and the set e folder contains epileptic EEG signal data. This dataset has been pre-processed and converted into a comma-separated values (CSV) file. Each row of the CSV file gives the data point at a particular timestamp, and the file contains 11 features. Unlike the UCI Epilepsy dataset, the pre-processed version of the Mendeley dataset has only two classes. Therefore, we have performed only 2-class classification for this dataset.

4.2 Hyperparameters values and train-test split details of our model framework

Our proposed HyEpiSeiD model pipeline has been implemented using the TensorFlow toolbox [108] in Python. The UCI Epilepsy dataset is split into training and test sets with an 80:20 ratio. Our model has been trained for 20 epochs on this dataset with a mini-batch gradient descent optimiser, a learning rate of 0.001, and a batch size of 32.

Like the UCI Epilepsy dataset, Renuka Khati's Mendeley dataset is also split into training and test sets with an 80:20 ratio. Our model has been trained for 20 epochs on this dataset with a mini-batch gradient descent optimiser, a learning rate of 0.001, and a batch size of 32.
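To give a feel for what these settings mean in terms of sample counts, the sketch below works through the split arithmetic. It assumes the pre-processed UCI file has 11,500 rows (500 recordings of 23 one-second segments each), which is not stated explicitly in the text, and it applies the 20% validation fraction reported with the results to the training portion. The function names are illustrative.

```python
def split_sizes(n_samples, test_percent=20, val_percent=20):
    """Return (n_train, n_val, n_test) for a train/validation/test split.

    The test set is taken from all samples; the validation set is then
    taken from the remaining training portion. Integer arithmetic is
    used to avoid floating-point rounding.
    """
    n_test = n_samples * test_percent // 100
    n_train_full = n_samples - n_test
    n_val = n_train_full * val_percent // 100
    return n_train_full - n_val, n_val, n_test


def steps_per_epoch(n_train, batch_size=32):
    """Number of mini-batches per epoch (the last batch may be smaller)."""
    return -(-n_train // batch_size)     # ceiling division


if __name__ == "__main__":
    n_train, n_val, n_test = split_sizes(11500)
    print(n_train, n_val, n_test, steps_per_epoch(n_train))
```

Under these assumptions the model sees 7,360 training rows, 1,840 validation rows, and 2,300 test rows, and each epoch consists of 230 mini-batches of 32 rows.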

4.3 Performance evaluation metrics

Here, four metrics are used to determine the evaluation performance of our model framework on these datasets. These metrics are (i) Accuracy, (ii) Precision, (iii) Recall, and (iv) F1-score, respectively. Mathematical representations of these evaluation metrics are mentioned below.

where, N denotes the total number of classes in the dataset, and \(C_{ij}\) denotes the matching value of \(i^{\text {th}}\) datapoint, which belongs to the \(j^{\text {th}}\) class. We have evaluated all these metric values of our proposed model on these datasets.
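The four metrics can be computed from an N × N confusion matrix, as sketched below. The sketch assumes that rows index the true class and columns the predicted class, and that precision, recall, and F1-score are macro-averaged over the classes; the averaging scheme is an assumption, since it is not specified in the text. The function name is illustrative.

```python
def classification_metrics(confusion):
    """Accuracy and macro-averaged precision, recall, and F1-score.

    confusion[i][j] is the number of samples of true class i that
    were predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[i][i] for i in range(n)) / total

    precisions, recalls, f1s = [], [], []
    for j in range(n):
        tp = confusion[j][j]
        predicted_j = sum(confusion[i][j] for i in range(n))  # column sum
        actual_j = sum(confusion[j])                          # row sum
        p = tp / predicted_j if predicted_j else 0.0
        r = tp / actual_j if actual_j else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)

    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }
```

For a 2-class task the confusion matrix is 2 × 2 and its entries are the true negative, false positive, false negative, and true positive counts; the same function applies unchanged to the 5 × 5 matrix of the 5-class task.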

5 Results and discussion

We have evaluated our proposed HyEpiSeiD framework on two publicly available datasets, namely UCI Epilepsy and the Mendeley dataset by Renuka Khati. We have calculated all of the evaluation metrics, i.e., accuracy, precision, recall, and f1-score on these datasets. Later, we compared these results with some existing state-of-the-art results. The results we have obtained on these two datasets are briefly explained in the following two sections.

5.1 Results on UCI epilepsy dataset

Our HyEpiSeiD model framework has been run on this dataset for 30 epochs with an 80:20 train-test split ratio. We have performed the two types of classification described in Sect. 4.1.1 on this dataset. The evaluation results for both kinds of classification are tabulated in Table 3.

In our model framework, we have integrated GRU layers with a 1D convolutional neural network. To understand whether the GRU layers improve the model's performance, we have compared the results of our model with and without them. That is, we first evaluated our model on this dataset using the GRU layers along with the 1D CNN layers, and then removed the GRU layers from our model pipeline and evaluated the model again on the same dataset.

The results obtained from both cases are compared in Table 4. While evaluating our model, we set aside 20% of the training data as validation data. For 2-class classification, our model framework shows 99.05% accuracy on the training data and 98.87% accuracy on the validation data. On the test data, our proposed framework shows 99.01% accuracy, 99.01% precision, 99.04% recall, and 99.02% f1-score in 2-class classification. The confusion matrix summarises the overall performance of our proposed framework on the dataset by showing the model's true positive, true negative, false positive, and false negative counts. For 2-class classification, the true positive and true negative counts are very high, which is reflected in the high precision, recall, f1-score, and accuracy.

Figure 4 shows the confusion matrix obtained for 2-class classification, whereas Fig. 5 shows the confusion matrix obtained for 5-class classification. For 5-class classification, the training accuracy is 79.57% and the validation accuracy is 77.39%. On the test data, the framework gives 78.74% accuracy, 79.34% precision, 78.73% recall, and 78.52% f1-score. Figure 6 shows the loss versus epoch and accuracy versus epoch curves during training for 2-class classification on the UCI Epilepsy dataset, and Fig. 7 shows the same curves for 5-class classification. The training curves in Figs. 6 and 7 converge as the number of epochs increases, and the training and validation accuracies stay close to each other. We therefore conclude that the trained model does not suffer from overfitting in either setting.
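One simple way to quantify the overfitting argument is the gap between training and validation accuracy. The sketch below applies this to the accuracies reported above; the helper name `generalization_gap` is hypothetical, and what size of gap should count as overfitting is a judgment call that this sketch does not settle.

```python
def generalization_gap(train_acc, val_acc):
    """Difference between training and validation accuracy, in percentage points."""
    return round(train_acc - val_acc, 2)


# Accuracies (in %) reported for the UCI Epilepsy dataset.
gap_2class = generalization_gap(99.05, 98.87)
gap_5class = generalization_gap(79.57, 77.39)
print(gap_2class, gap_5class)
```

A small gap is consistent with, but does not by itself prove, the absence of overfitting; the curves in Figs. 6 and 7 remain the primary evidence.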

As described above, we analysed whether the GRU layers give any extra performance benefit by removing them from the pipeline and evaluating the model again on the same dataset. The two sets of results are compared in Table 4. All performance metrics improve when the GRU layers are included. Without GRU layers, the pipeline gives 98.00% accuracy in 2-class classification and 73.13% accuracy in 5-class classification, compared with 99.01% and 78.74%, respectively, with GRU layers. The improvement is therefore modest for the 2-class task and considerably larger for the 5-class task.
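The size of the GRU contribution can be read off the reported accuracies with a short calculation. The sketch below computes the absolute gain in percentage points and, as an additional view that is our own assumption rather than a metric used in the paper, the relative reduction in error rate. The function name `ablation_gain` is hypothetical.

```python
def ablation_gain(acc_with, acc_without):
    """Return (absolute gain in points, relative error-rate reduction)."""
    gain = acc_with - acc_without
    err_without = 100.0 - acc_without
    # Fraction of the remaining errors that the added layers remove.
    rel_err_reduction = gain / err_without if err_without else 0.0
    return round(gain, 2), round(rel_err_reduction, 3)


# Test accuracies (in %) reported for the UCI Epilepsy dataset.
gain_2class = ablation_gain(99.01, 98.00)
gain_5class = ablation_gain(78.74, 73.13)
print(gain_2class, gain_5class)
```

Reporting the gain in percentage points avoids the ambiguity of a bare "%" improvement, which could otherwise be read as a relative change.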

Figures 8 and 9 show the class-wise evaluation metric scores for 2-class and 5-class classification on the UCI Epilepsy dataset, respectively. For 2-class classification, all metric values, i.e. precision, recall, f1-score, and accuracy, lie between about 98% and 99% for each class. This means that the HyEpiSeiD model is not biased towards a particular class. For 5-class classification, the metric values of class ’1’ are the highest, i.e. the model predicts class ’1’ most accurately. The metric values for the remaining classes ’2’, ’3’, ’4’ and ’5’ are reasonable but lower than those of class ’1’ by a significant margin. Overall, the framework performs very well on the 2-class task of this dataset and moderately well on the 5-class task.
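Class-wise scores of the kind plotted in Figs. 8 and 9 are typically obtained by treating each class in turn as the positive class (one-vs-rest) in the multi-class confusion matrix. The following sketch illustrates this under that assumption; the function name `per_class_metrics` and the 3-class matrix are hypothetical and do not reproduce the paper's data.

```python
def per_class_metrics(cm):
    """One-vs-rest precision, recall and F1 per class.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # class k predicted as something else
        fp = sum(cm[i][k] for i in range(n)) - tp   # other classes predicted as k
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if (precision + recall) else 0.0)
        scores.append({"precision": precision, "recall": recall, "f1": f1})
    return scores


# Hypothetical 3-class confusion matrix, for illustration only.
cm = [[8, 1, 1],
      [2, 6, 2],
      [0, 1, 9]]
scores = per_class_metrics(cm)
for label, s in enumerate(scores, start=1):
    print(label, {name: round(value, 3) for name, value in s.items()})
```

Comparing the per-class rows in this way is what reveals whether one class, such as class ’1’ above, is recognised markedly better than the others.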

5.1.1 Results on Mendeley dataset (Renuka Khati)

We also evaluated the proposed HyEpiSeiD pipeline on the Mendeley dataset (Renuka Khati). The dataset was split into training and test sets with an 80:20 ratio, and the model was trained for 20 epochs with a batch size of 32. As before, 20% of the training data was kept for validation. Unlike the UCI Epilepsy dataset, this dataset contains only two classes, healthy and seizure, so only 2-class classification was performed.
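The nested split described above leaves roughly 64% of the samples for training, 16% for validation and 20% for testing. The sketch below works this out, together with the number of mini-batches per epoch for a batch size of 32. The function name `split_sizes` and the sample count of 1000 are hypothetical; the actual size of the Mendeley dataset is not assumed here.

```python
import math


def split_sizes(n_samples, test_frac=0.2, val_frac=0.2, batch_size=32):
    """Sizes of the train/validation/test partitions and batches per epoch."""
    n_test = round(n_samples * test_frac)
    n_train_full = n_samples - n_test
    # The validation set is carved out of the training portion, not the whole set.
    n_val = round(n_train_full * val_frac)
    n_train = n_train_full - n_val
    steps_per_epoch = math.ceil(n_train / batch_size)
    return {"train": n_train, "val": n_val, "test": n_test,
            "steps_per_epoch": steps_per_epoch}


# Hypothetical dataset of 1000 samples.
sizes = split_sizes(1000)
print(sizes)
```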

The model achieves 99.22% training accuracy and 99.97% validation accuracy. On the test data, it gives 97.50% accuracy, 97.61% precision, 97.50% recall, and 97.49% f1-score. These results are shown in Table 5. The confusion matrix summarises the overall performance of the framework by showing the model’s true positive, true negative, false positive and false negative counts. The counts of true positive and true negative entries are much higher than those of false positive and false negative entries, which indicates that the model performs very well on all evaluation metrics, i.e. precision, recall, f1-score, and accuracy. Figure 10 shows the confusion matrix obtained on the Mendeley dataset. Both training curves in Fig. 11, i.e. loss vs epoch and accuracy vs epoch, converge by the end of training, which suggests that the model does not suffer from overfitting.

As for the UCI Epilepsy dataset, we examined whether the GRU layers enhance the model’s performance on the Mendeley dataset (Renuka Khati). We first evaluated the model with the GRU layers, then removed the GRU layers from the pipeline and evaluated it again on the same dataset. The comparison is shown in Table 6. The GRU layers improve the model’s accuracy by 1.1%, precision by 1%, recall by 1.12% and f1-score by 0.98%. The GRU layers therefore improve the model’s performance on both datasets. Finally, we performed a class-wise metric evaluation on the Mendeley dataset, which is visualised in Fig. 12. The values of each evaluation metric, i.e. precision, recall, accuracy, and f1-score, lie between 95% and 100% for each class. Every metric value is high, indicating that the trained model has no strong bias towards a particular class. On this basis, we claim that the proposed HyEpiSeiD model is robust and should generalise well to unseen datasets.

5.2 Comparison with existing methods

After evaluating the HyEpiSeiD framework on the two publicly available datasets, i.e. the UCI Epilepsy dataset and the Mendeley dataset (Renuka Khati), we compared it with state-of-the-art methods used for epilepsy recognition. The comparisons with popular existing methods are given in Tables 7 and 8.

Table 7 includes only those articles in which 2-class classification was performed on the UCI Epilepsy dataset, and is compared against the 2-class results of our model. Similarly, Table 8 includes only those articles in which 5-class classification was performed on the UCI Epilepsy dataset, and is compared against the 5-class results of our model. The comparison values for GoogleNet, AlexNet, and VGG16 are taken from [115].

To the best of our knowledge, no comparable epilepsy detection work is currently available for the Mendeley dataset (Renuka Khati). Therefore, we could not provide a comparison table for the Mendeley dataset as we did for the UCI Epilepsy dataset. As a recent work on this dataset, our model performs well, reaching 97.50% classification accuracy.

6 Conclusion

In this study, we have proposed a 1D CNN-GRU hybrid deep learning methodology, named HyEpiSeiD, for automatically recognising epileptic seizures from EEG data. We evaluated the proposed framework on (i) the UCI Epilepsy dataset and (ii) the Mendeley dataset by Renuka Khati. The hybrid model gives an overall 99.01% accuracy, 99.01% precision, 99.04% recall and 99.02% f1-score for the 2-class classification of the UCI dataset. For the 5-class classification of the UCI dataset, it gives 78.74% accuracy, 79.34% precision, 78.73% recall, and 78.52% f1-score. We therefore conclude that the proposed framework gives robust classification results on the UCI dataset. The class-wise evaluation metric scores for the 2-class task show that the model is not biased towards a particular class. On the Mendeley dataset, the model gives 97.50% classification accuracy, and all class-wise evaluation metric scores are also very high, so the model is again not biased towards a particular class. From the results obtained on these two datasets, we conclude that the framework has a robust classification ability for epilepsy datasets of this kind and no strong bias towards any particular class, making it well suited to epilepsy classification. For the benefit of the research community, the source code of the proposed model is publicly available at https://github.com/rajcodex/Epilepsy-Detection.

In this study, we focused on selecting a combination of models rather than on the pre-processing of raw EEG signal data. The datasets used in this study are already pre-processed. Therefore, in future, we aim to work on a robust pre-processing framework for raw EEG signals. A robust and optimal pre-processing methodology can help to extract proper features, which can then be used in any model for the epilepsy classification task. We can further explore recent state-of-the-art computer vision models for epilepsy detection tasks. In that case, the EEG time-series data first have to be converted into images before a computer vision model is employed.

Availability of data and materials

The data that support the findings of this study are openly available at: 1. https://archive.ics.uci.edu/dataset/388/epileptic+seizure+recognition 2. https://data.mendeley.com/datasets/k2mzn5zvyg/1.

References

Abraira L, Gramegna LL, Quintana M, Santamarina E, Salas-Puig J, Sarria S, Rovira A, Toledo M (2019) Cerebrovascular disease burden in late-onset non-lesional focal epilepsy. Seizure 66:31–35

Galanopoulou AS, Buckmaster PS, Staley KJ, Moshé SL, Perucca E, Engel J Jr, Löscher W, Noebels JL, Pitkänen A, Stables J et al (2012) Identification of new epilepsy treatments: issues in preclinical methodology. Epilepsia 53(3):571–582

San-Segundo R, Gil-Martín M, D’Haro-Enríquez LF, Pardo JM (2019) Classification of epileptic EEG recordings using signal transforms and convolutional neural networks. Comput Biol Med 109:148–158

Banerjee A, Sarkar A, Roy S, Singh PK, Sarkar R (2022) Covid-19 chest x-ray detection through blending ensemble of CNN snapshots. Biomed Signal Process Control 78:104000

Nashef L, Fish D, Garner S, Sander J, Shorvon S (1995) Sudden death in epilepsy: a study of incidence in a young cohort with epilepsy and learning difficulty. Epilepsia 36(12):1187–1194

Thurman DJ, Hesdorffer DC, French JA (2014) Sudden unexpected death in epilepsy: assessing the public health burden. Epilepsia 55(10):1479–1485

Hauser WA, Annegers JF, Elveback LR (1980) Mortality in patients with epilepsy. Epilepsia 21(4):399–412

Cooper R, Osselton JW, Shaw JC (2014) EEG Technology. Butterworth-Heinemann, Oxford

Gloor P (1969) Hans Berger on electroencephalography. Am J EEG Technol 9(1):1–8

Kananen J, Tuovinen T, Ansakorpi H, Rytky S, Helakari H, Huotari N, Raitamaa L, Raatikainen V, Rasila A, Borchardt V et al (2018) Altered physiological brain variation in drug-resistant epilepsy. Brain Behav 8(9):01090

Yaffe RB, Borger P, Megevand P, Groppe DM, Kramer MA, Chu CJ, Santaniello S, Meisel C, Mehta AD, Sarma SV (2015) Physiology of functional and effective networks in epilepsy. Clin Neurophysiol 126(2):227–236

Trevelyan AJ, Bruns W, Mann EO, Crepel V, Scanziani M (2013) The information content of physiological and epileptic brain activity. J Physiol 591(4):799–805

Yahaya SW, Lotfi A, Mahmud M (2020) Towards the development of an adaptive system for detecting anomaly in human activities. Piscataway, IEEE, pp 534–541

Yahaya SW, Lotfi A, Mahmud M (2021) Towards a data-driven adaptive anomaly detection system for human activity. Pattern Recognit Lett 145:200–207

Lalotra GS, Kumar V, Bhatt A, Chen T, Mahmud M (2022) Iretads: an intelligent real-time anomaly detection system for cloud communications using temporal data summarization and neural network. Secur Commun Netw 2022(1):9149164

Fabietti M et al (2020) Adaptation of convolutional neural networks for multi-channel artifact detection in chronically recorded local field potentials. Proc SSCI. https://doi.org/10.1109/SSCI47803.2020.9308165

Fabietti M et al (2020) Neural network-based artifact detection in local field potentials recorded from chronically implanted neural probes. Proc IJCNN. https://doi.org/10.1109/IJCNN48605.2020.9207320

Fabietti M et al (2020) Artifact detection in chronically recorded local field potentials using long-short term memory neural network. Proc AICT. https://doi.org/10.1109/AICT50176.2020.9368638

Fabietti M, Mahmud M, Lotfi A (2022) Artefact detection in chronically recorded local field potentials: an explainable machine learning-based approach. IEEE, Piscataway, pp 1–7

Fabietti M, Mahmud M, Lotfi A (2020) Machine learning in analysing invasively recorded neuronal signals: available open access data sources. Springer International Publishing, Cham, pp 151–162

Rahman S, Sharma T, Mahmud M (2020) Improving alcoholism diagnosis: comparing instance-based classifiers against neural networks for classifying EEG signal. Springer International Publishing, Cham, pp 239–250

Tahura S, Hasnat Samiul S, Shamim Kaiser M, Mahmud M (2021) Anomaly detection in electroencephalography signal using deep learning model. Springer Singapore, Singapore, pp 205–217

Wadhera T, Mahmud M (2022) Computing hierarchical complexity of the brain from electroencephalogram signals: a graph convolutional network-based approach. IEEE, Piscataway, pp 1–6

Fabietti MI et al (2022) Detection of healthy and unhealthy brain states from local field potentials using machine learning. Springer International Publishing, Cham, pp 27–39

Dhara T, Singh PK, Mahmud M (2023) A fuzzy ensemble-based deep learning model for EEG-based emotion recognition. Cognit Computat. https://doi.org/10.1007/s12559-023-10171-2

Shahriar MF, Arnab MSA, Khan MS, Rahman SS, Mahmud M, Kaiser MS (2023) Towards machine learning-based emotion recognition from multimodal data. Front ICT Healthcare Proc EAIT 2022. https://doi.org/10.1007/978-981-19-5191-6_9

Zawad MRS, Rony CSA, Haque MY, Banna MHA, Mahmud M, Kaiser MS (2023) A hybrid approach for stress prediction from heart rate variability. Front ICT Healthcare Proc EAIT 2022. https://doi.org/10.1007/978-981-19-5191-6_10

Bhagat D, Ray A, Sarda A, Dutta Roy N, Mahmud M, De D (2023) Improving mental health through multimodal emotion detection from speech and text data using long-short term memory. Front ICT Healthcare Proc EAIT 2022. https://doi.org/10.1007/978-981-19-5191-6_2

Sumi AI et al (2018) fassert: a fuzzy assistive system for children with autism using internet of things. Proc Brain Inform. https://doi.org/10.1007/978-3-030-05587-5_38

Al Banna M et al (2020) A monitoring system for patients of autism spectrum disorder using artificial intelligence. Proc Brain Inform. https://doi.org/10.1007/978-3-030-59277-6_23

Akter T et al (2021) Towards autism subtype detection through identification of discriminatory factors using machine learning. Proc Brain Inform. https://doi.org/10.1007/978-3-030-86993-9_36

Biswas M, Kaiser MS, Mahmud M, Al Mamun S, Hossain M, Rahman MA et al (2021) An XAI based autism detection: the context behind the detection. Proc Brain Inform. https://doi.org/10.1007/978-3-030-86993-9_40

Ghosh T et al (2021) Artificial intelligence and internet of things in screening and management of autism spectrum disorder. Sustain Cities Soc 74:103189

Ahmed S et al (2022) Toward machine learning-based psychological assessment of autism spectrum disorders in school and community. Proc TEHI. https://doi.org/10.1007/978-981-16-8826-3_13

Mahmud M et al (2022) Towards explainable and privacy-preserving artificial intelligence for personalisation in autism spectrum disorder. Proc HCII. https://doi.org/10.1007/978-3-031-05039-8_26

Wadhera T, Mahmud M (2022) Influences of social learning in individual perception and decision making in people with autism: a computational approach. Proc Brain Inform. https://doi.org/10.1007/978-3-031-15037-1_5

Wadhera T, Mahmud M (2023) Computational model of functional connectivity distance predicts neural alterations. IEEE Trans Cognit Dev Syst. https://doi.org/10.1109/TCDS.2023.3320243

Akhund NU et al (2018) Adeptness: Alzheimer’s disease patient management system using pervasive sensors-early prototype and preliminary results. Proc Brain Inform. https://doi.org/10.1007/978-3-030-05587-5_39

Jesmin S, Kaiser MS, Mahmud M (2020) Towards artificial intelligence driven stress monitoring for mental wellbeing tracking during covid-19. Proc WI-IAT. https://doi.org/10.1109/WIIAT50758.2020.00130

Al Mamun S, Kaiser MS, Mahmud M (2021) An artificial intelligence based approach towards inclusive healthcare provisioning in society 5.0: a perspective on brain disorder. Proc Brain Inform. https://doi.org/10.1007/978-3-030-86993-9_15

Biswas M, Rahman A, Kaiser MS, Al Mamun S, Ebne Mizan KS, Islam MS, Mahmud M (2021) Indoor navigation support system for patients with neurodegenerative diseases. Proc Brain Inform. https://doi.org/10.1007/978-3-030-86993-9_37

Shaffi N, Hajamohideen F, Mahmud M, Abdesselam A, Subramanian K, Sariri AA (2022) Triplet-loss based Siamese convolutional neural network for 4-way classification of Alzheimer’s disease. Springer International Publishing, Cham, pp 277–287

Haque Y, Zawad RS, Rony CSA, Banna HA, Ghosh T, Kaiser MS, Mahmud M (2024) State-of-the-art of stress prediction from heart rate variability using artificial intelligence. Cognit Computat 16(2):455–481

Javed AR, Saadia A, Mughal H, Gadekallu TR, Rizwan M, Maddikunta PKR, Mahmud M, Liyanage M, Hussain A (2023) Artificial intelligence for cognitive health assessment: state-of-the-art, open challenges and future directions. Cognit Computat 15:1767–1812

Jesmin S, Kaiser MS, Mahmud M (2020) Artificial and internet of healthcare things based Alzheimer care during COVID 19. Proc Brain Inform. https://doi.org/10.1007/978-3-030-59277-6_24

Satu MS et al (2021) Short-term prediction of covid-19 cases using machine learning models. Appl Sci 11(9):4266

Bhapkar HR, Mahalle PN, Shinde GR, Mahmud M (2021) Rough sets in covid-19 to predict symptomatic cases. In: COVID-19: prediction, decision-making, and its impacts. 57–68

Kumar S, Viral R, Deep V, Sharma P, Kumar M, Mahmud M, Stephan T (2021) Forecasting major impacts of COVID-19 pandemic on country-driven sectors: challenges, lessons, and future roadmap. Pers Ubiquitous Comput 27:807–830

Mahmud M, Kaiser MS (2021) Machine learning in fighting pandemics: a covid-19 case study. In: COVID-19: Prediction, Decision-making, and Its Impacts.

Prakash N, Murugappan M, Hemalakshmi G, Jayalakshmi M, Mahmud M (2021) Deep transfer learning for covid-19 detection and infection localization with superpixel based segmentation. Sustain Cities Soc 75:103252

Paul A, Basu A, Mahmud M, Kaiser MS, Sarkar R (2022) Inverted bell-curve-based ensemble of deep learning models for detection of COVID-19 from chest X-rays. Neural Comput Appl 35:16113

Banna MHA, Ghosh T, Nahian MJA, Kaiser MS, Mahmud M, Taher KA, Hossain MS, Andersson K (2023) A hybrid deep learning model to predict the impact of covid-19 on mental health from social media big data. IEEE Access 11:77009–77022

Nahiduzzaman M, Tasnim M, Newaz NT, Kaiser MS, Mahmud M (2020) Machine learning based early fall detection for elderly people with neurological disorder using multimodal data fusion. Proc Brain Inform. https://doi.org/10.1007/978-3-030-59277-6_19

Farhin F, Kaiser MS, Mahmud M (2020) Towards secured service provisioning for the internet of healthcare things. Proc AICT. https://doi.org/10.1109/AICT50176.2020.9368580

Farhin F, Sultana I, Islam N, Kaiser MS, Rahman MS, Mahmud M (2020) Attack detection in internet of things using software defined network and fuzzy neural network. Proc ICIEV and icIVPR. https://doi.org/10.1109/ICIEVicIVPR48672.2020.9306666

Ahmed S et al (2021) Artificial intelligence and machine learning for ensuring security in smart cities. Data-Driven Min Learn Anal Secured Smart Cities. https://doi.org/10.1007/978-3-030-72139-8_2

Islam N et al (2021) Towards machine learning based intrusion detection in IoT networks. Comput Mater Contin 69(2):1801–1821

Esha NH et al (2021) Trust IoHT: A trust management model for internet of healthcare things. Proc ICDSA. https://doi.org/10.1007/978-981-15-7561-7_3

Zaman S et al (2021) Security threats and artificial intelligence based countermeasures for internet of things networks: a comprehensive survey. IEEE Access 9:94668–94690

Singh R, Mahmud M, Yovera L (2021) Classification of first trimester ultrasound images using deep convolutional neural network. Proc AII. https://doi.org/10.1007/978-3-030-82269-9_8

Zohora MF, Tania MH, Kaiser MS, Mahmud M (2020) Forecasting the risk of type II diabetes using reinforcement learning. Proc ICIEV icIVPR. https://doi.org/10.1109/ICIEVicIVPR48672.2020.9306653

Mukherjee H et al (2021) Automatic lung health screening using respiratory sounds. J Med Syst 45(2):1–9

Deepa B, Murugappan M, Sumithra M, Mahmud M, Al-Rakhami MS (2021) Pattern descriptors orientation and map firefly algorithm based brain pathology classification using hybridized machine learning algorithm. IEEE Access 10:3848–3863

Mammoottil MJ et al (2022) Detection of breast cancer from five-view thermal images using convolutional neural networks. J Healthc Eng. https://doi.org/10.1155/2022/4295221

Chen T et al (2022) A dominant set-informed interpretable fuzzy system for automated diagnosis of dementia. Front Neurosci 16:86766

Kumar I et al (2022) Dense tissue pattern characterization using deep neural network. Cogn Comput 14(5):1728–1751

Mukherjee P et al (2021) iConDet: an intelligent portable healthcare app for the detection of conjunctivitis. Proc AII. https://doi.org/10.1007/978-3-030-82269-9_3

Rai T, Shen Y, Kaur J, He J, Mahmud M, Brown DJ, Baldwin DR, O’Dowd E, Hubbard R (2023) Decision tree approaches to select high risk patients for lung cancer screening based on the UK primary care data. Springer Nature Switzerland, Cham, pp 35–39

Farhin F, Kaiser MS, Mahmud M (2021) Secured smart healthcare system: blockchain and Bayesian inference based approach. Proc TCCE. https://doi.org/10.1007/978-981-33-4673-4_36

Kaiser MS et al (2021) 6G access network for intelligent internet of healthcare things: opportunity, challenges, and research directions. Proc TCCE. https://doi.org/10.1007/978-981-33-4673-4_25

Biswas M et al (2021) Accu3rate: a mobile health application rating scale based on user reviews. PloS ONE 16(12):0258050

Adiba FI, Islam T, Kaiser MS, Mahmud M, Rahman MA (2020) Effect of corpora on classification of fake news using naive bayes classifier. Int J Autom Artif Intell Mach Learn 1(1):80–92

Rabby G et al (2018) A flexible keyphrase extraction technique for academic literature. Procedia Comput Sci 135:553–563

Ghosh T et al (2021) An attention-based mood controlling framework for social media users. Proc Brain Inform. https://doi.org/10.1007/978-3-030-86993-9_23

Rahman MA et al (2022) Explainable multimodal machine learning for engagement analysis by continuous performance test. Proc HCII. https://doi.org/10.1007/978-3-031-05039-8_28

Ahuja NJ et al (2021) An investigative study on the effects of pedagogical agents on intrinsic, extraneous and germane cognitive load: experimental findings with dyscalculia and non-dyscalculia learners. IEEE Access 10:3904–3922

Mursalin M, Zhang Y, Chen Y, Chawla NV (2017) Automated epileptic seizure detection using improved correlation-based feature selection with random forest classifier. Neurocomputing 241:204–214

Alkan A, Koklukaya E, Subasi A (2005) Automatic seizure detection in EEG using logistic regression and artificial neural network. J Neurosci Methods 148(2):167–176

Ibrahim SW, Djemal R, Alsuwailem A, Gannouni S (2017) Electroencephalography (EEG)-based epileptic seizure prediction using entropy and k-nearest neighbor (KNN). Commun Sci Technol. https://doi.org/10.21924/cst.2.1.2017.44

Shoeibi A, Khodatars M, Ghassemi N, Jafari M, Moridian P, Alizadehsani R, Panahiazar M, Khozeimeh F, Zare A, Hosseini-Nejad H et al (2021) Epileptic seizures detection using deep learning techniques: a review. Int J Environ Res Public Health 18(11):5780

Usman SM, Khalid S, Aslam MH (2020) Epileptic seizures prediction using deep learning techniques. IEEE Access 8:39998–40007

Dhara T, Singh PK (2023) Emotion recognition from EEG data using hybrid deep learning approach. In: frontiers of ICT in healthcare: proceedings of EAIT 2022. Springer Nature Singapore, Singapore, pp 179–189

Golmohammadi M, Ziyabari S, Shah V, Von Weltin E, Campbell C, Obeid I, Picone J (2017) Gated recurrent networks for seizure detection. In: 2017 IEEE signal processing in medicine and biology symposium (SPMB). IEEE, Piscataway, pp 1–5

Andrzejak RG, Lehnertz K, Mormann F, Rieke C, David P, Elger CE (2001) Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: dependence on recording region and brain state. Phys Rev E 64(6):061907

Khati R (2020) Epileptic seizure detection using machine learning techniques. https://data.mendeley.com/datasets/k2mzn5zvyg/1. Accessed 11 Nov 2023.

Dubey AK, Chabert GL, Carriero A, Pasche A, Danna PS, Agarwal S, Mohanty L, Nillmani Sharma N, Yadav S et al (2023) Ensemble deep learning derived from transfer learning for classification of COVID-19 patients on hybrid deep-learning-based lung segmentation: a data augmentation and balancing framework. Diagnostics 13(11):1954

Chattopadhyay S, Dey A, Singh PK, Sarkar R (2022) DRDA-net: Dense residual dual-shuffle attention network for breast cancer classification using histopathological images. Comput Biol Med 145:105437

Chattopadhyay S, Dey A, Singh PK, Geem ZW, Sarkar R (2021) COVID-19 detection by optimizing deep residual features with improved clustering-based golden ratio optimizer. Diagnostics 11(2):315

Chandaka S, Chatterjee A, Munshi S (2009) Cross-correlation aided support vector machine classifier for classification of EEG signals. Expert Syst Appl 36(2):1329–1336

Aarabi A, Wallois F, Grebe R (2006) Automated neonatal seizure detection: a multistage classification system through feature selection based on relevance and redundancy analysis. Clin Neurophysiol 117(2):328–340

Yuan Q, Zhou W, Li S, Cai D (2011) Epileptic EEG classification based on extreme learning machine and nonlinear features. Epilepsy Res 96(1–2):29–38

Subasi A (2007) EEG signal classification using wavelet feature extraction and a mixture of expert model. Expert Syst Appl 32(4):1084–1093

Khan YU, Rafiuddin N, Farooq O (2012) Automated seizure detection in scalp EEG using multiple wavelet scales. IEEE Int Conf Signal Process Comput Control. https://doi.org/10.1109/ISPCC.2012.6224361

Kumar A, Kolekar MH (2014) Machine learning approach for epileptic seizure detection using wavelet analysis of EEG signals. Int Conf Med Imag m-Health Emerg Commun Syst. https://doi.org/10.1109/MedCom.2014.7006043

Nicolaou N, Georgiou J (2012) Detection of epileptic electroencephalogram based on permutation entropy and support vector machines. Expert Syst Appl 39(1):202–209

Song Z, Wang J, Cai L, Deng B, Qin Y (2016) Epileptic seizure detection of electroencephalogram based on weighted-permutation entropy. 12th World Congress Intell Control Automat. https://doi.org/10.1109/WCICA.2016.7578764

Rohan TI, Yusuf MSU, Islam M, Roy S (2020) Efficient approach to detect epileptic seizure using machine learning models for modern healthcare system. IEEE Region 10 Symposium (TENSYMP). https://doi.org/10.1109/TENSYMP50017.2020.9230731

Shankar RS, Raminaidu C, Raju VS, Rajanikanth J (2021) Detection of epilepsy based on EEG signals using PCA with ANN model. J Phys Conf Series 2070:012145

Rahman AA, Faisal F, Nishat MM, Siraji MI, Khalid LI, Khan MRH, Reza MT (2021) Detection of epileptic seizure from EEG signal data by employing machine learning algorithms with hyperparameter optimization. 4th Int Conf Bio-Eng Smart Technol (BioSMART). https://doi.org/10.1109/BioSMART54244.2021.9677770

Prakash V, Kumar D (2023) A modified gated recurrent unit approach for epileptic electroencephalography classification. J Inform Commun Technol 22(4):587–617

Raibag MA, Franklin JV (2021) PCA and SVM technique for epileptic seizure classification. IEEE Int Conf Distribut Comput VLSI Electrical Circuits Robotics (DISCOVER). https://doi.org/10.1109/DISCOVER52564.2021.9663616

Osman AH, Alzahrani AA (2018) New approach for automated epileptic disease diagnosis using an integrated self-organization map and radial basis function neural network algorithm. IEEE Access 7:4741–4747

Upadhyaya Y, Sachin S, Tripathi A, Jain R (2018) Comparative analysis of feature selection in epilepsy seizure recognition using cuckoo, gravitational search and bat algorithm. Int J Inform Syst Manag Sci 1:8

Woodbright M, Verma B, Haidar A (2021) Autonomous deep feature extraction based method for epileptic EEG brain seizure classification. Neurocomputing 444:30–37

Wang G, Deng Z, Choi K-S (2017) Detection of epilepsy with electroencephalogram using rule-based classifiers. Neurocomputing 228:283–290

Guha A, Ghosh S, Roy A, Chatterjee S (2020) Epileptic seizure recognition using deep neural network. Emerg Technol Modell Graphics Proc IEM Graph 2018. https://doi.org/10.1007/978-981-13-7403-6_3

Medsker LR, Jain L et al (2001) Recurrent neural networks. Design Appl 5(64–67):2

Singh P, Manure A (2020) Introduction to TensorFlow 2.0. In: Learn TensorFlow 2.0: implement machine learning and deep learning models with Python. https://doi.org/10.1007/978-1-4842-5558-2

Xu G, Ren T, Chen Y, Che W (2020) A one-dimensional CNN-LSTM model for epileptic seizure recognition using EEG signal analysis. Front Neurosci 14:578126

Polat K, Nour M (2020) Epileptic seizure detection based on new hybrid models with electroencephalogram signals. Irbm 41(6):331–353

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. Proc IEEE Conf Comput Vision Pattern Recognit. https://doi.org/10.48550/arXiv.1409.4842

Iandola FN, Han S, Moskewicz MW, Ashraf K, Dally WJ, Keutzer K (2016) SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv. https://doi.org/10.48550/arXiv.1602.07360

Qassim H, Verma A, Feinzimer D (2018) Compressed residual-vgg16 CNN model for big data places image recognition. Annual Comput Commun Workshop Conf (CCWC). https://doi.org/10.1109/CCWC.2018.8301729

Mao W, Fathurrahman H, Lee Y, Chang T (2020) EEG dataset classification using CNN method. J Phys Conf Series 1456:012017

Naseem S, Javed K, Khan MJ, Rubab S, Khan MA, Nam Y (2021) Integrated cwt-CNN for epilepsy detection using multiclass EEG dataset. Comput Mater Continua 69(1):471–486

Acknowledgements

The authors would like to express their heartfelt gratitude to the scientists who had kindly released the data from their experiments.

Funding

This work is supported by UKRI through the Horizon Europe Guarantee Scheme (project number: 10078953).

Author information

Contributions

This work was done in close collaboration among the authors. P.K.S. and M.M. conceived the idea and designed the app’s initial prototype; R.B. and P.K.S. refined the app, performed the analysis, and wrote the paper. All authors have contributed to, seen and approved the paper.

Ethics declarations

Ethics approval and consent to participate

This work is based on secondary datasets available online. Hence, ethical approval was not necessary.

Consent for publication

All authors have seen and approved the current version of the paper.

Competing interests

Mufti Mahmud is an editorial board member of the Brain Informatics journal and was not involved in the editorial review or the decision to publish this article. The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bhadra, R., Singh, P.K. & Mahmud, M. HyEpiSeiD: a hybrid convolutional neural network and gated recurrent unit model for epileptic seizure detection from electroencephalogram signals. Brain Inf. 11, 21 (2024). https://doi.org/10.1186/s40708-024-00234-x

DOI: https://doi.org/10.1186/s40708-024-00234-x