Abstract

Background

Bipolar disorder type-I (BD-I) patients are known to show emotion regulation abnormalities. In a previous fMRI study using an explicit emotion regulation paradigm, we compared responses from 19 BD-I patients and 17 matched healthy controls (HC). A standard general linear model-based univariate analysis revealed that BD patients showed increased activations in inferior frontal gyrus when instructed to decrease their emotional response as elicited by neutral images. We implemented multivariate pattern recognition analyses on the same data to examine if we could classify conditions within-group as well as HC versus BD.

Methods

We reanalyzed explicit emotion regulation data using a multivariate pattern recognition approach, as implemented in the PRoNTo software. The original experimental paradigm consisted of a full 2 × 2 factorial design, with valence (Negative/Neutral) and instruction (Look/Decrease) as within-subject factors.

Results

The multivariate models were able to accurately classify different task conditions when HC and BD were analyzed separately (63.24%–75.00%, p = 0.001–0.012). In addition, the models were able to correctly classify HC versus BD with significant accuracy in conditions where subjects were instructed to downregulate their felt emotion (59.60%–60.84%, p = 0.014–0.018). The results for HC versus BD classification demonstrated contributions from the salience network, several occipital and frontal regions, inferior parietal lobes, as well as other cortical regions, to achieve above-chance classifications.

Conclusions

Our multivariate analysis successfully reproduced some of the main results obtained in the previous univariate analysis, confirming that these findings are not dependent on the analysis approach. In particular, both types of analyses suggest that there is a significant difference of neural patterns between conditions within each subject group. The multivariate approach also revealed that reappraisal conditions provide the most informative activity for differentiating HC versus BD, irrespective of emotional valence (negative or neutral). The current results illustrate the importance of investigating the cognitive control of emotion in BD. We also propose a set of candidate regions for further study of emotional control in BD.

Background

Bipolar disorder type I (BD-I) is characterized by fluctuating affective states, which can be attributed, at least in part, to impaired emotion regulation (ER). For instance, studies have identified the overuse of maladaptive ER strategies and the reduced use of adaptive cognitive techniques in BD-I (Dodd et al. 2019; Green et al. 2007, 2011; Wolkenstein et al. 2014). It has been suggested that the abnormal behaviour such as altered ER observed in BD patients is a result of the ineffective management of attentional and inhibitory resources (Picó-Pérez et al. 2017; Morris et al. 2012; Zhang et al. 2020). The functional and structural neuroimaging literature has identified the possible dysfunction of the inferior frontal gyrus (IFG), a region implicated in voluntary ER (Li et al. 2021; Ochsner and Gross 2005; Goldin et al. 2008), as a possible candidate brain region involved in this behavioural impairment. For instance, contrary to that of healthy controls (HC), BD patients’ lateral prefrontal cortex (PFC) grey matter volume did not correlate with measures of inhibitory mechanisms (Haldane et al. 2008) and structural alteration of PFC is linked to cognitive deficits in BD patients (Ajilore et al. 2015; Cipriani et al. 2017; Oertel-Knöchel et al. 2015). Also, although explicitly controlled ER activates IFG in HC (Braunstein et al. 2017), studies of ER in BD often report a hypoactive PFC, a hyperactive amygdala, and a disconnection between these two structures (Townsend and Altshuler 2012; Zhang et al. 2018; Kjærstad et al. 2021; Kanske et al. 2015; Morris et al. 2012). The hypoactivity of the PFC and the hyperactivity of the amygdala have been shown to enhance emotional response and impair regulation (Koenigsberg et al. 2010; McRae et al. 2010; Townsend et al. 2013). These findings support the characterization of BD as a condition that involves the PFC’s ineffective control over limbic regions, such as the amygdala, during ER.

In contrast to several aforementioned studies (Li et al. 2021; Townsend et al. 2013; Zhang et al. 2018; Kjærstad et al. 2021; Goldin et al. 2008; McRae et al. 2010; Kanske et al. 2015; Morris et al. 2012), our previous functional magnetic resonance imaging (fMRI) study (Corbalán et al. 2015) used a full-factorial ER paradigm (Ochsner et al. 2004), independently manipulating emotions and instructions. In doing so, we observed that BD-I patients could downregulate emotion when instructed, engaging largely the same regions as controls, particularly the left IFG. However, unlike HC, BD-I patients also activated these regions during the control condition, namely, when subjects were instructed to downregulate emotional responses associated with neutral images (for details, see Corbalán et al. 2015). These results reflected the patients’ responses when they were told to regulate their emotions, regardless of whether it was necessary (i.e. when presented with negatively valenced stimuli) or not (i.e. in the presence of emotionally neutral images). This is consistent with the observation that BD patients show greater effort but are less successful in spontaneously regulating their emotions (Gruber et al. 2012). Additionally, while amygdala activity in HC decreased during ER, it was high in BD patients when exposed to negative stimuli regardless of instructions, which is similar to results found by Kanske et al. (2015). This suggests that BD patients demonstrated an exaggerated response to emotional stimuli, even when performing voluntary emotion downregulation.

Despite the important role of IFG, ER engages a network of frontal and parietal regions, likely acting in concert. For instance, past studies that used comparable ER paradigms observed the activation of parietal regions in addition to prefrontal areas during ER conditions (e.g. Ochsner et al. 2002; Townsend et al. 2013; Buhle et al. 2014; Diekhof et al. 2011). This was also demonstrated in our previous study, in which we observed inferior parietal lobe (IPL), middle frontal gyrus, cuneus, and lateral occipital complex activation, in addition to IFG, during ER conditions compared to control conditions (Corbalán et al. 2015). Beyond ventral frontal regions, response inhibition is accomplished through a distributed cortical network, including middle/inferior frontal gyri, limbic areas, insulae, and IPLs (Garavan et al. 1999). ER strategies also involve more general information processing systems, such as working memory and cognitive control, which recruit regions of the lateral frontal, inferior/superior parietal, and lateral occipital cortices (Kohn et al. 2014; Pozzi et al. 2021; Morawetz et al. 2017; Stephanou et al. 2016). Reappraisal has been shown to activate the lateral occipital cortex and IPL, including the supramarginal gyrus (Picó-Pérez et al. 2017; Ochsner et al. 2012; Buhle et al. 2014), regions associated with attentional selection and the storage of information for working memory (Culham and Kanwisher 2001). Notably, the parietal region acts as an association region that manages different functions, such as attention, working memory, and the guidance of actions, through activity modulation in the visual system. Additionally, the lateral PFC demonstrates strong connections to various secondary cortical association areas, including temporal, parietal, and occipital areas (Phillips et al. 2008; Moratti et al. 2011; Nguyen et al. 2019).

While a traditional univariate fMRI analysis can identify regions that significantly differ in activity between conditions and/or groups, it is less sensitive to small-magnitude differences in distributed networks (Lewis-Peacock and Norman 2013). In such a case, multivariate approaches, such as a multivariate pattern analysis (MVPA), may be better suited, through the computation of relative differences in the activation patterns and combining information from multiple voxels, leading to the detection of small pattern differences (Norman et al. 2006). Moreover, while a univariate General Linear Model (GLM) analysis reveals neural regions that are more active in response to one condition or group versus another, MVPA can detect differences in neural activation patterns across the brain and test whether different conditions or groups may be distinguished based on those distinct patterns. Thus, these analyses address two different yet complementary questions and can thus provide greater insight on brain functions, especially when investigating possible differences between psychiatric patients and HC.

Indeed, MVPA has been proposed as a potentially powerful tool for detecting certain mental illnesses (Schrouff et al. 2013a). In recent years, this approach has been applied to fMRI data in the study of psychiatric disorders, such as major depressive disorder (MDD), schizophrenia, and autism (Sundermann et al. 2014; Wolfers et al. 2015). The use of MVPA with fMRI data has been shown to be a promising tool for the early identification of psychiatric disorders, as models derived from previously acquired data can be used to predict the diagnostic outcome of a new sample (Mourão-Miranda et al. 2012). For example, Fu et al. (2008) demonstrated the potential of linear support-vector machines (SVM; Cortes and Vapnik 1995) for neuroimaging-based prediction of depression. In fact, machine learning has been applied in psychiatric fields using different imaging modalities including structural, functional, and diffusion MRI as a potential application to diagnose psychiatric disorders such as schizophrenia (see Steardo et al. 2020 for a review), MDD (Patel et al. 2016; Bhadra and Kumar 2022 for a review), and BD (Achalia et al. 2020; Li et al. 2020; Jan et al. 2021; Claude et al. 2020 for a review). MVPA has also been used for distinguishing between different psychiatric disorders (Sundermann et al. 2014), for example, between unipolar (major depressive disorder, MDD) and bipolar depression (Han et al. 2019; Grotegerd et al. 2014; Bürger et al. 2017; Rubin-Falcone et al. 2018). Moreover, it has also been applied to predict the risk of depression (Zhong et al. 2022) and BD (Hajek et al. 2015), as well as to identify clinical phenotypes of BD (Wu et al. 2017), brain volume changes in BD (Sartori et al. 2018), and to differentiate treatment response to electroconvulsive therapy in MDD (Redlich et al. 2016). Taken together, these studies confirm the potential of machine learning techniques in clinical settings, particularly to discover novel biomarkers.
However, many of these studies used structural MRI, diffusion-weighted imaging, resting-state or simple facial emotion processing fMRI tasks, and therefore the potential contributions of MVPA to a better understanding of the neural correlates of more complex cognitive and emotional processes, such as ER, remain less explored. This is particularly relevant, given that regulation of emotion involves, as mentioned above, the interaction between affective processing and executive functions, engaging a large network of cortical and subcortical regions. Because of this, multivariate approaches are ideally suited for investigating the neural correlates of such a process, and for characterizing the extent to which they may be affected in BD.

To address this issue, we conducted a new analysis of previously acquired fMRI data (Corbalán et al. 2015) using MVPA to determine whether this approach, when paired with an ER paradigm, may be used to distinguish between BD patients and HCs and determine whether the classification depends on the tasks performed by the subjects. Specifically, we assessed whether different experimental conditions could be accurately classified as a function of valence (negative vs. neutral) and instruction (passive viewing [PV] vs. downregulation of emotion [ER]) within each group and whether HC and BD individuals could be distinguished based on neural patterns of activity for each condition. We first conducted MVPA for four within-group classifications to assess whether the models differed in terms of performance and/or contributing regions between groups. We then conducted an MVPA with all subjects to determine if the model could classify individuals to their appropriate group using each condition of the ER paradigm and, if so, which brain regions contributed most to that classification. We hypothesized that the models would successfully classify different instruction (Look/Decrease) conditions, especially in the case of negative images. Moreover, we expected that the group classification model would perform better for the active ER conditions (i.e. downregulation) than for the passive viewing ones.

Methods

The current study consisted of a novel analysis of the experiment described in Corbalán et al. (2015). A brief description of the participants, protocol and data acquisition is presented below. Further details can be found in the original publication.

Participants

Nineteen euthymic BD-I patients (mean age: 41.0 ± 12.5 years) from the Bipolar Disorders Program at Douglas Mental Health Institute and seventeen healthy controls (mean age: 41.4 ± 13.3 years) participated in the study. The Faculty of Medicine Research Ethics Board of McGill University approved the study protocol. The study complied with the Helsinki Declaration of 1975, as revised in 2008. Written informed consent was provided by all participants. Diagnosis was carried out using the Structured Clinical Interview for Psychiatric Disorders of Axis I and II of the Diagnostic and Statistical Manual of the American Psychiatric Association (SCID-I/II DSM-IV; First et al., 1997a, b), as well as a documented manic phase. The Hamilton Depression Rating Scale (HAMD-29; Hamilton 1960), Montgomery–Asberg Depression Rating Scale (MADRS; Montgomery and Åsberg 1979), and the Young Mania Rating Scale (YMRS; Young et al. 1978) were used to confirm that patients were in a euthymic state at the time of the study. Exclusion criteria included the presence of any major Axis I psychiatric disorder for the HC group and the presence of an active co-morbid disorder associated with the diagnosis of bipolar-I disorder for the BD-I group. For both groups, exclusion criteria included a history of alcohol or drug abuse during the last year and use of benzodiazepines, as well as MR contraindications.

Materials and procedure

The paradigm was adapted from Ochsner et al. (2004). Each trial started with a cue instructing participants to either look at the upcoming picture letting their emotions flow spontaneously (Look condition), or to decrease the negative affect induced by it through the previously practiced technique of reappraisal (Decrease condition). A picture selected from the International Affective Picture System (IAPS; Lang et al., 2008), showing either a negative or neutral scene, was then presented for 10 s, after which subjects rated their negative affect using a 7-point scale. Trials were separated by a fixation cross lasting between 4 and 10 s. Thus, the experiment was a full 2 × 2 factorial event-related design, with the valence (Negative/Neutral) of images and instructions (Look/Decrease) as the within-subject orthogonal factors, resulting in four event types (Neutral Look, Neutral Decrease, Negative Look and Negative Decrease). Each category contained sixteen images, presented in a pseudo-random order. The experiment was divided into two equivalent runs, each containing 32 trials and lasting about 15 min. A schematic of the experimental design is shown in Fig. 1.
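
As an illustration only, the trial structure described above can be sketched in a few lines of Python. The even split of each category across the two runs (8 images per category per run), the uniform distribution of fixation durations, and the function name `build_runs` are assumptions made for this sketch; they are not taken from the original protocol.

```python
import random

VALENCES = ("Negative", "Neutral")
INSTRUCTIONS = ("Look", "Decrease")
IMAGES_PER_CONDITION = 16    # stated in the text
N_RUNS = 2                   # stated in the text
ITI_RANGE_S = (4.0, 10.0)    # fixation cross duration, stated in the text


def build_runs(seed=0):
    """Return N_RUNS lists of trials, each in a shuffled order.

    Assumptions (not from the source): each condition is split evenly
    across runs, and fixation durations are drawn uniformly.
    """
    rng = random.Random(seed)
    per_run = IMAGES_PER_CONDITION // N_RUNS
    runs = []
    for _run_index in range(N_RUNS):
        trials = [
            {"instruction": instruction, "valence": valence}
            for instruction in INSTRUCTIONS
            for valence in VALENCES
            for _repeat in range(per_run)
        ]
        rng.shuffle(trials)
        for trial in trials:
            trial["iti_s"] = rng.uniform(*ITI_RANGE_S)
        runs.append(trials)
    return runs
```

Calling `build_runs()` yields two runs of 32 trials, with 16 trials per event type overall, which matches the counts given in the paragraph above.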

Schematic of the experimental paradigm. The experiment, in a full factorial, event-related design, consisted of 4 trial types, depending on the valence of the image (Negative/Neutral) and the instruction (Look/Decrease). Following each image, subjects rated how much negative affect they felt when viewing the picture. Trials were separated by a fixation cross lasting 4–10 s. For more details, see Methods and Corbalán et al. (2015)

fMRI acquisition and analysis

Acquisition

A total of 820 functional images (410 per run) were acquired using a 3 T Siemens Trio scanner with a 12-channel head coil at the Montreal Neurological Institute (MNI) in an interleaved fashion parallel to the anterior–posterior commissures, covering the whole brain except the cerebellum (TE/TR: 30/2020 ms; flip angle: 90°; FOV: 256 × 256 mm²; matrix size: 64 × 64; voxel size: 4 × 4 × 4 mm³). A high-resolution T1-weighted scan (176 sagittal slices, voxel size: 1 mm³, TE/TR: 2.98/2300 ms) was obtained for spatial normalization of the functional images to a common template.

Preprocessing and univariate analysis

Image preprocessing was done using SPM12 (v. 7771; Wellcome Trust Centre for Neuroimaging, UK), following the same approach as in our original analysis (Corbalán et al. 2015), but using a smaller smoothing kernel, as recommended for multivariate analyses (Gardumi et al. 2016). Briefly, the preprocessing steps included (1) slice timing correction (with the slice acquired at TR/2 as reference), (2) realignment to the first image of the first run, (3) co-registration to the participant’s T1, (4) normalization to the MNI template using the deformation field obtained in the T1 segmentation and normalization (final voxel size: 2 mm isotropic, bounding box: [−78:78 −112:76 −70:85]), and (5) spatial smoothing with a 4 mm full-width half-maximum Gaussian kernel. Mean and variance images were constructed for each subject and run after every preprocessing step and inspected for possible artifacts. Based on the analysis of the six movement parameters obtained in the realignment step and the voxelwise inter-volume signal differences (see Additional file 1), there were no significant differences in motion during scanning between groups (Additional file 1: Table S1).

The univariate data analysis was also performed in SPM12, in the context of the GLM. Four event types representing the experimental categories (Neutral Look, Neutral Decrease, Negative Look, Negative Decrease) were used to build first-level design matrices for each run and participant. To account for possible temporal differences in evoked responses during the 10 s presentation of the images (Ochsner et al. 2002, 2004), the first (0–5 s) and second half (5–10 s) of the stimulus presentation were modeled separately by two adjacent 5-s boxcars convolved with the canonical hemodynamic response function (for more details, see Penny et al. 2007). That is, the beginning of the first boxcar coincided with the onset of the image, whereas the second started 5 s later. In addition, the six movement parameters from the realignment process were included as covariates. For the univariate second-level analysis, the parameter estimates (betas) for each subject and run were entered in a linear mixed-effects model with image time (Early/Late), instruction (Look/Decrease) and valence (Negative/Neutral) as the within-subject factors and group (BD/HC) as the between-subjects factor, with Run as a factor of no interest (and thus averaged when computing contrasts). Statistical significance was determined using a voxel threshold of p = 0.001, with a cluster-based familywise error rate (FWE) correction for multiple comparisons of p < 0.05 (k = 248) as implemented in AFNI’s 3dClustSim (AFNI version 19.0.17).
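
To make the early/late modelling concrete, the following Python sketch builds two adjacent 5-s boxcars for each image onset and convolves them with a double-gamma hemodynamic response function. It is a simplified illustration and not the SPM12 implementation: the HRF parameter values (gamma shapes of 6 and 16, undershoot ratio of 1/6, 32-s kernel), the 0.1-s sampling grid, and all function names are assumptions chosen for the example.

```python
import math


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma HRF at time t (seconds). Parameter values are assumptions."""
    if t <= 0.0:
        return 0.0
    peak = t ** (peak_shape - 1.0) * math.exp(-t) / math.gamma(peak_shape)
    undershoot = (t ** (undershoot_shape - 1.0) * math.exp(-t)
                  / math.gamma(undershoot_shape))
    return peak - ratio * undershoot


def convolve(signal, kernel, dt):
    """Discrete convolution, truncated to the length of the input signal."""
    out = [0.0] * len(signal)
    for i, value in enumerate(signal):
        if value == 0.0:
            continue
        for j, weight in enumerate(kernel):
            if i + j >= len(out):
                break
            out[i + j] += value * weight * dt
    return out


def early_late_regressors(image_onsets_s, n_samples, dt=0.1,
                          half_s=5.0, hrf_length_s=32.0):
    """Return (early, late) regressors for one event type.

    The early boxcar covers 0-5 s after image onset and the late boxcar
    covers 5-10 s, as described in the text.
    """
    kernel = [double_gamma_hrf(j * dt) for j in range(int(round(hrf_length_s / dt)))]
    half = int(round(half_s / dt))
    early = [0.0] * n_samples
    late = [0.0] * n_samples
    for onset_s in image_onsets_s:
        start = int(round(onset_s / dt))
        for i in range(start, min(start + half, n_samples)):
            early[i] = 1.0
        for i in range(start + half, min(start + 2 * half, n_samples)):
            late[i] = 1.0
    return convolve(early, kernel, dt), convolve(late, kernel, dt)
```

For a single image shown at 10 s, `early_late_regressors([10.0], n_samples=600)` returns two regressors of identical shape, the second delayed by 5 s relative to the first. In an actual analysis these regressors would be resampled to the acquisition times before entering the design matrix.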

MVPA analysis

Beta images specific to each condition, run, and subject obtained from the univariate GLM were used in the MVPA as implemented in PRoNTo v2.1.3 (Pattern Recognition Neuroimaging Toolbox; Schrouff et al. 2013b, 2016). A kernel classifier was trained to identify voxel activation patterns in beta images using a binary linear SVM (Cortes and Vapnik 1995). The SVM calculates a hyperplane, which optimally separates the two conditions of interest (Grotegerd et al. 2013) and identifies the boundary that maximizes the overall classification accuracy of each new sample (Costafreda et al. 2011). Classifiers were trained to detect voxel activation patterns of the beta images using a leave-one-subject-out (LOSO) cross-validation, in which the model is trained using all subjects minus one. Then, the remaining subject is used to test the accuracy level of the prediction. During training, the classifier assigns a weight to each voxel, and then uses these weights to calculate the summed weight of activity during testing. This information is used to determine whether the pattern of activity of each new subject falls on the predicted side of the decision boundary or not (Lewis-Peacock and Norman 2013). From this model binary classification analyses were conducted across two conditions or subject groups at a time (beta images from both runs were combined). Model performance was assessed in terms of the balanced accuracy as well as the sensitivity and specificity, i.e. the accuracies representative of each condition or group, with Decrease and BD assigned as the target (“positive”) categories, respectively. The balanced accuracy accounts for the number of samples in each class and in doing so, distributes equal weight to the classes. Significance of the classifier’s performance was set at p = 0.05, calculated through random permutations of the training labels, in which the classification model is retrained 1000 times. 
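
The cross-validation and permutation logic described above can be sketched as follows. This is a minimal, library-free illustration rather than the PRoNTo implementation: a nearest-class-mean rule stands in for the linear SVM, labels are permuted across all samples, and the p-value uses the (count + 1)/(permutations + 1) convention. All of these choices, and every function name, are assumptions made for the example.

```python
import random


def fit_class_means(X, y):
    """Stand-in linear classifier (NOT an SVM): mean pattern per class."""
    means = {}
    for label in sorted(set(y)):
        rows = [x for x, lab in zip(X, y) if lab == label]
        means[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return means


def predict(means, x):
    """Assign x to the class with the closest mean pattern (Euclidean)."""
    def sq_dist(label):
        return sum((a - b) ** 2 for a, b in zip(x, means[label]))
    return min(sorted(means), key=sq_dist)


def loso_predictions(X, y, subjects):
    """Leave-one-subject-out: train on all subjects but one, test on that one."""
    predictions = [None] * len(y)
    for held_out in sorted(set(subjects)):
        train = [i for i, s in enumerate(subjects) if s != held_out]
        model = fit_class_means([X[i] for i in train], [y[i] for i in train])
        for i, s in enumerate(subjects):
            if s == held_out:
                predictions[i] = predict(model, X[i])
    return predictions


def performance(y_true, y_pred, positive):
    """Return (balanced accuracy, sensitivity, specificity)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2.0, sensitivity, specificity


def permutation_p_value(X, y, subjects, positive, n_perm=1000, seed=0):
    """Retrain with shuffled labels n_perm times and compare to the observed score."""
    rng = random.Random(seed)
    observed = performance(y, loso_predictions(X, y, subjects), positive)[0]
    at_least_as_good = 0
    for _ in range(n_perm):
        shuffled = list(y)
        rng.shuffle(shuffled)
        permuted = performance(
            shuffled, loso_predictions(X, shuffled, subjects), positive)[0]
        if permuted >= observed:
            at_least_as_good += 1
    return observed, (at_least_as_good + 1) / (n_perm + 1)
```

Here `X` would hold one vectorized beta image per sample, `y` the condition or group label, and `subjects` the subject identifier used to define the folds. Averaging sensitivity and specificity gives each class equal weight regardless of its sample count, which is the property of the balanced accuracy noted in the text.
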
A mask of all the regions, except the cerebellum, from the Automated Anatomical Labeling atlas v1 (Tzourio‐Mazoyer et al. 2002), as implemented in the WFU Pickatlas Tool v2.4 (Maldjian et al. 2003, 2004), was created in MNI space; only the voxels inside the mask (size: 159,841 voxels) were included for pattern recognition. To compare the prediction accuracy of different classifications, Cohen’s Kappa values were calculated for each classification (Forbes 1995). The Kappa calculation takes into account the estimated and observed proportions, with larger Kappa values indicating better predictions and less error. In addition, a single binomial contrast was calculated for the comparison between proportions of two different confusion matrices (Rodríguez-Avi et al. 2018). The p-values of the binomial analyses indicate whether the prediction accuracy of one classification was significantly different from that of another.
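
For reference, Cohen's Kappa for a binary confusion matrix can be computed as in the short sketch below. The function name and the example counts are hypothetical and chosen only to illustrate the arithmetic; they are not values from this study.

```python
def cohens_kappa(tp, fn, fp, tn):
    """Cohen's Kappa from the four cells of a binary confusion matrix."""
    n = tp + fn + fp + tn
    observed = (tp + tn) / n
    # Agreement expected by chance, from the row and column totals.
    expected = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / (n * n)
    return (observed - expected) / (1.0 - expected)


# Hypothetical counts: 40 samples per class, 30 correct in each.
kappa = cohens_kappa(tp=30, fn=10, fp=10, tn=30)
```

When the two true classes contain the same number of samples, the chance agreement is 0.5 and Kappa reduces to 2 × accuracy − 1, so an accuracy of 75% corresponds to a Kappa of 0.50 in the hypothetical example above.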

Weight map

Results from the classification analysis are typically presented as a weight map, which illustrates the areas that contribute most to discriminating between the different groups/conditions. Importantly, a high weight value of a specific voxel indicates a strong contribution to the discrimination boundary but does not directly mean greater activity in one group versus another within the specified voxel (Mourão-Miranda et al. 2012). To identify the regions that most contributed to the classification in each model, clusters corresponding to the top 5% of the positive and negative weights, collectively, having a minimum cluster size of 5 voxels, were extracted. The p-value associated with the weight for each voxel was obtained from the permutation test (see previous section). Threshold of significance was set at p = 0.01.
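
One way to read the cluster extraction step is sketched below. It is an interpretation under stated assumptions, not the procedure used to produce the published maps: "top 5% collectively" is taken to mean the 5% of voxels with the largest absolute weight, clusters are defined by face-adjacent (6-neighbour) connectivity, and positive and negative voxels may share a cluster. The function name and the dictionary-based voxel representation are hypothetical.

```python
from collections import deque

# Face-adjacent neighbours on a 3D voxel grid (an assumed connectivity).
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def top_weight_clusters(weights, fraction=0.05, min_size=5):
    """Return clusters of the highest-|weight| voxels.

    weights: dict mapping (x, y, z) voxel indices to classifier weights.
    """
    n_keep = max(1, int(round(fraction * len(weights))))
    ranked = sorted(weights, key=lambda voxel: abs(weights[voxel]), reverse=True)
    kept = set(ranked[:n_keep])
    seen = set()
    clusters = []
    for start in ranked[:n_keep]:
        if start in seen:
            continue
        # Breadth-first search over retained, connected voxels.
        seen.add(start)
        queue = deque([start])
        cluster = [start]
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOURS:
                neighbour = (x + dx, y + dy, z + dz)
                if neighbour in kept and neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
                    cluster.append(neighbour)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters
```

Isolated high-weight voxels are discarded by the `min_size` criterion, so only spatially contiguous groups of at least five voxels are reported.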

Results

Behavioral and univariate results

As described in more detail in Corbalán et al. (2015), subjective ratings for the intensity of the negative emotional experience revealed that both groups considered the Negative Decrease condition significantly less intense than the Negative Look condition, with no significant differences between groups. In terms of the fMRI analysis, results obtained in the analysis conducted here were qualitatively similar, in terms of the brain regions significantly activated in the different contrasts of interest, with those described in the original paper (Corbalán et al. 2015), as shown in Additional file 1: Fig. S1 and Additional file 1: Table S2. Briefly, a conjunction analysis (HC and BD) of the contrast Negative Decrease minus Negative Look confirmed that both groups showed comparable activations in areas previously associated with emotion regulation, including the left IFG, when asked to downregulate their emotional reaction to negative pictures, with no significant differences between groups. On the other hand, in the case of the Neutral Decrease minus Neutral Look contrast, a significant group difference was observed in the left IFG, driven by a significant activation for this contrast only in the BD group.

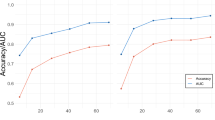

Multivariate analysis

Classifications were first conducted within group, in which the model was trained to classify the two conditions: Neutral Decrease versus Neutral Look, or Negative Decrease versus Negative Look. Classifier model performance reported in Tables 1 and 2 is expressed in terms of classification accuracy and Kappa scores. The multivariate approach produced statistically significant accurate classifications across task conditions (Decrease and Look) when HC and BD were analyzed separately, for both Negative (HC: 64.7%; BD: 71.1%) and Neutral (HC: 63.2%; BD: 75.0%) conditions. That is, above-chance accuracies were obtained in each within-group classification, with slightly higher levels of both sensitivity (i.e. correctly classified Decrease trials) and specificity (i.e. correctly classified Look trials) for the BD than the HC group (Table 1). Likewise, Kappa values were higher in BD compared to HC in each classification; Neutral Decrease vs. Neutral Look (BD: 0.50, HC: 0.26), and Negative Decrease vs. Negative Look (BD: 0.42, HC: 0.29) (Table 2). Finally, a single binomial contrast suggested that confusion matrices for the classification Neutral Decrease versus Neutral Look were significantly different between HC and BD (p = 0.03), while confusion matrices for Negative Decrease versus Negative Look did not significantly differ between groups (p = 0.25) (Table 3A).

Subsequently, the model was trained to distinguish HC and BD individuals for each of the four different conditions. The classification of HC and BD was above chance for the Negative and Neutral Decrease conditions (60.8% and 59.6%), but not for Negative or Neutral Look, which yielded accuracies of only 48.1% and 42.4%, respectively. For the HC versus BD classifications, the average sensitivity (correctly classified BD) was 59.5% (significant models alone = 63.8%) and the average specificity (correctly classified HC) was 46.0% (significant models alone = 56.6%), suggesting that the classifier was better at identifying the BD subjects, while HC were more often misclassified as BD. Kappa values revealed that the Negative Decrease condition produced the most successful prediction (Kappa = 0.22), followed by Neutral Decrease (Kappa = 0.19), indicating that, consistent with the prediction accuracy rates described above, the two groups could be better classified in the Decrease conditions than in the Look conditions (Table 2). This was confirmed by a single binomial contrast revealing that HC and BD subjects were significantly more accurately classified for the Neutral Decrease condition than for the Neutral Look condition (p = 0.005), and for Negative Decrease than for Negative Look (p = 0.03) (Table 3B). The ranking of balanced accuracy for the significant classifications is shown in Table 4.

Brain regions

Weight maps showing the top 5% of voxels that contributed most to the classification (see Methods) are shown in Fig. 2 for the between-group classifications and Additional file 1: Fig. S2 for the within-group classifications. Discrimination between conditions engaged, in both groups, a number of regions spanning the entire brain, several of them within the frontal lobes, including those considered part of the Salience Network (SN), such as the ACC and insula, as well as occipital, visual-related areas. No obvious difference in the weight patterns appeared to exist between image valence (Negative/Neutral) or group (HC/BD), suggesting that in all cases, discrimination of conditions as a function of Instruction (Look/Decrease) engaged a large distributed network of cortical and subcortical regions.

Weight map of positive and negative voxel-wise weight contribution in the classification of HC versus BD. A: Negative Decrease HC versus BD. B: Neutral Decrease HC versus BD. Highlighted region depicts contribution of the top 5% positive (red) and negative (blue) weights, with a minimum extent of 5 voxels. Abbreviations are as follows: a.u. = arbitrary units; Mid Occ = middle occipital cortex; Sup Occ = superior occipital cortex; IFG = inferior frontal gyrus; IPL = inferior parietal lobe; ACC = anterior cingulate cortex; Mid frontal = middle frontal; Sup Medial = superior medial; Sup Frontal = superior frontal. Images are shown in neurological convention (i.e. right hemisphere is on the right).

For the group classification of HC versus BD using Negative Decrease (Fig. 2A; Additional file 1: Table S3A), the clusters corresponding to the top 5% of voxels were located in the right insula and right putamen, in visual processing regions (left middle occipital, right cuneus, left superior occipital, left calcarine), and in cognitive control regions (left subgenual ACC/caudate, bilateral IPL, superior parietal, right IFG). Regions that contributed the most to discriminating HC from BD for the Neutral Decrease condition (Fig. 2B; Additional file 1: Table S3B) included visual processing regions (left middle/superior occipital), cognitive control regions (right superior medial, left middle/superior frontal, right supramarginal gyrus), and the right insula. Hence, for the significant classifications of HC versus BD, the most discriminative voxels were located primarily in occipital regions, as well as in prefrontal areas, the insula, and parietal regions such as the IPL/supramarginal gyrus.

Discussion

The current study implemented a multivariate classification analysis in an emotion regulation fMRI task to identify differences in whole-brain patterns across conditions (regulation vs. passive viewing) and subject populations (HC vs. BD patients). The results suggest that reappraisal conditions provide the most informative activity for differentiating HC versus BD, irrespective of emotional valence (Negative or Neutral). In contrast, when no regulation instruction was given, the whole-brain patterns were relatively similar across groups, which may be due to the lower cognitive demands of passive viewing compared to the higher cognitive demands of the Decrease conditions (i.e. regulation). It has been reported that when tasks only require simple emotion reactivity by bottom-up processing, in this case passive viewing, euthymic BD patients tend to exhibit similar activation as HC (Townsend et al. 2013; Foland-Ross et al. 2012; Hassel et al. 2009), where both groups demonstrate amygdala activation and no difference in frontolimbic functioning is present.

Interestingly, contrary to the significant classifications by the current multivariate model, neither Decrease condition (Negative Decrease, NGD; Neutral Decrease, NED) demonstrated group differences behaviourally in terms of negative emotional experience (Corbalán et al. 2015). Moreover, there was no group (HC vs. BD) difference for the univariate contrast of NGD versus Negative Look (NGL), but the MVPA demonstrated the highest group classification accuracy for NGD, while there was below-chance level classification for NGL. As BD performed as well as HC behaviourally, distinct neural patterns between HC vs. BD in NGD may represent compensatory processing in BD to achieve the same results as HC while performing emotion regulation, which was not observed in the univariate analysis. This process likely occurs to compensate for interference from autonomic emotional reaction (Strakowski et al. 2005; Picó-Pérez et al. 2017) to maintain emotion regulation performance, which can be seen in the high negative weight of subgenual ACC and IFG in the group classification of NGD, as both regions are involved in modulation of amygdala processing toward emotional stimuli in patients with mood disorders (Campbell-Sills et al. 2011; Drevets et al. 2008). The development of such compensatory mechanisms may be the result of repeated trial-and-error experiences with this disorder, when individuals with BD-I encounter situations requiring emotion regulation (Kollmann et al. 2017).

As for the NED condition, univariate analysis showed a significant group difference in the contrast NED–NEL (see Additional file 1: Table S2 and Additional file 1: Fig. S1), and the current MVPA demonstrated significant group classification accuracy for NED, but not for NEL. These results confirm that the group differences in underlying neural patterns lie in the NED condition itself, and not in NEL. Taken together with the univariate results, this points to heightened neural processing in BD after a situation-incongruent (i.e. unnecessary) emotion regulation instruction. Heightened neural processing during NED in BD can also be inferred from the within-group classifications of NED vs. NEL, where the classification accuracy for BD was significantly higher than that for HC (Tables 2, 3A). This confirms the existence of distinct neural processing elicited by emotion regulation instructions in HC vs. BD, even with neutral images. We speculate that this discrepancy may reflect euthymic BD patients’ elevated impulsivity (Ramírez-Martín et al. 2020; Mason et al. 2014) and impaired decision-making towards environmental stimuli (Bhatia et al. 2018; Adida et al. 2011; Martino et al. 2011); in our case, a combination of the neutral images and emotion regulation instruction.

Contrary to past MVPA studies involving patients with BD (Claude et al. 2020; Doan et al. 2017; Costafreda et al. 2011), in the current study the model yielded fewer misclassifications of BD patients than of the HC group, especially in the NGD condition (Table 1). It is known that differences in classification accuracy between groups are determined by group differences in variance (Koch et al. 2015). Although emotion regulation shows large individual differences (Dickie and Armony 2008), the influence of these differences should be reduced (Drucaroff et al. 2020; Calhoun et al. 2008) in both groups because MVPA is robust to between-subject variability in mean activation (Weaverdyck et al. 2020; Davis et al. 2014; Kryklywy et al. 2018). Given the clinical heterogeneity of BD patients, the higher accuracy for BD may be due to an overall smaller variance in HC, which would cause the classification models to assign outliers in the HC group to the BD group. Our results suggest that tasks with explicit ER may be more discriminative for BD than for HC.
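The asymmetry between groups discussed above is easiest to see when accuracy is reported per class rather than overall. The following is a minimal sketch in Python, not the procedure used in the study: the function name `class_accuracies` and the predicted labels are hypothetical and chosen only for illustration, and only the group sizes (17 HC, 19 BD) are taken from the sample description.

```python
def class_accuracies(true_labels, predicted_labels, classes=("HC", "BD")):
    """Return per-class accuracy, balanced accuracy, and overall accuracy."""
    per_class = {}
    for c in classes:
        # Indices of all cases that truly belong to class c
        idx = [i for i, t in enumerate(true_labels) if t == c]
        correct = sum(1 for i in idx if predicted_labels[i] == c)
        per_class[c] = correct / len(idx)
    # Balanced accuracy is the unweighted mean of the class-specific accuracies
    balanced = sum(per_class.values()) / len(classes)
    overall = sum(t == p for t, p in zip(true_labels, predicted_labels)) / len(true_labels)
    return per_class, balanced, overall


# Hypothetical example: 17 HC and 19 BD cases; the predictions are invented
# for illustration and are NOT the results reported in Table 1.
true_labels = ["HC"] * 17 + ["BD"] * 19
predicted_labels = ["HC"] * 9 + ["BD"] * 8 + ["BD"] * 15 + ["HC"] * 4

per_class, balanced, overall = class_accuracies(true_labels, predicted_labels)
print(per_class, round(balanced, 3), round(overall, 3))
```

In this invented case more HC than BD cases are mis-assigned, so the class-specific accuracies diverge even though a single overall accuracy would hide the difference.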

Brain networks

As shown in the Tables (Additional file 1: Table S3) and figures (Additional file 1: Fig. S2), and mentioned in the Results, accurate discrimination between conditions relied on a large number of cortical and subcortical regions. This is consistent with the notion that emotion regulation involves several cognitive and affective processes, such as emotional evaluation, attention and cognitive control (Gross 2015; Thompson et al. 2019; Pruessner et al. 2020), which are effected by different, yet possibly overlapping, regions and networks, such as the Salience and Fronto-Parietal/Central Executive ones (Pan et al. 2018; Li et al. 2021; Morawetz et al. 2020; Phan et al. 2005; Kalisch 2009; Menon and Uddin 2010; Peters et al. 2016). These findings also highlight the relevance of using multivariate approaches to study this process. Indeed, whereas univariate analysis typically reveals a few regions where the difference in activity between conditions is maximal (Additional file 1: Table S2 and Additional file 1: Fig. S1), MVPA can capture the distributed pattern of activity, including voxels with relatively small signal, actually involved in the different processes (Jimura and Poldrack 2012; Norman et al. 2006; Davis et al. 2014).
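The contrast between voxel-wise and pattern-based analysis described above can be illustrated with a toy simulation. The sketch below is an assumption-laden illustration, not the analysis pipeline of this study: the data are simulated Gaussian noise with a small mean shift in every voxel, the parameter values (`n_per_group`, `n_voxels`, `effect`) are arbitrary, and a nearest-centroid classifier with leave-one-out cross-validation stands in for the classifier actually used.

```python
import math
import random


def simulate(n_per_group=40, n_voxels=200, effect=0.3, seed=0):
    """Simulate two groups whose voxel means differ by a small, distributed effect."""
    rng = random.Random(seed)
    group_a = [[rng.gauss(0.0, 1.0) for _ in range(n_voxels)] for _ in range(n_per_group)]
    group_b = [[rng.gauss(effect, 1.0) for _ in range(n_voxels)] for _ in range(n_per_group)]
    return group_a, group_b


def voxelwise_t(group_a, group_b):
    """Two-sample t statistic (pooled variance), computed for each voxel separately."""
    n_a, n_b = len(group_a), len(group_b)
    t_values = []
    for v in range(len(group_a[0])):
        a = [row[v] for row in group_a]
        b = [row[v] for row in group_b]
        mean_a, mean_b = sum(a) / n_a, sum(b) / n_b
        ss_a = sum((x - mean_a) ** 2 for x in a)
        ss_b = sum((x - mean_b) ** 2 for x in b)
        pooled_var = (ss_a + ss_b) / (n_a + n_b - 2)
        t_values.append((mean_b - mean_a) / math.sqrt(pooled_var * (1 / n_a + 1 / n_b)))
    return t_values


def centroid(rows):
    """Mean pattern across the given rows (one value per voxel)."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def sq_dist(x, c):
    """Squared Euclidean distance between a pattern and a centroid."""
    return sum((xi - ci) ** 2 for xi, ci in zip(x, c))


def loo_nearest_centroid_accuracy(group_a, group_b):
    """Leave-one-out accuracy of a nearest-centroid classifier using all voxels."""
    data = [(row, 0) for row in group_a] + [(row, 1) for row in group_b]
    correct = 0
    for i, (test_row, test_label) in enumerate(data):
        # Centroids are estimated without the held-out case
        train = data[:i] + data[i + 1:]
        c0 = centroid([r for r, y in train if y == 0])
        c1 = centroid([r for r, y in train if y == 1])
        predicted = 0 if sq_dist(test_row, c0) <= sq_dist(test_row, c1) else 1
        correct += predicted == test_label
    return correct / len(data)


group_a, group_b = simulate()
t_values = voxelwise_t(group_a, group_b)
mean_abs_t = sum(abs(t) for t in t_values) / len(t_values)
accuracy = loo_nearest_centroid_accuracy(group_a, group_b)
print(f"mean |t| per voxel: {mean_abs_t:.2f}")
print(f"leave-one-out accuracy using all voxels: {accuracy:.2f}")
```

Under these assumptions the average single-voxel statistic stays modest while the combined pattern separates the groups well, which is the intuition behind using whole-brain patterns. Real fMRI data have correlated voxels and far fewer observations per feature, so the size of this advantage should not be read off the simulation.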

The observed high contribution of regions in the SN, such as the insula, and occipital visual areas in HC vs. BD classification of NGD (Additional file 1: Table S3A) likely reflects a high contribution of these regions in classifying NGD vs. NGL within HC (Additional file 1: Table S3C). Similarly, the high contribution of the middle occipital cortex and regions in the fronto-parietal network (FPN), including the postcentral/supramarginal and middle/superior frontal regions, in HC vs. BD classification of NED (Additional file 1: Table S3B) likely reflects a high contribution of these regions in classifying NED versus NEL within HC (Additional file 1: Table S3D). Hence, based on the top weighted regions observed in the category and group classifications, it seems that the neural pattern of the primary visual region is distinct following emotion regulation instruction vs. no instruction in HC, and also distinct from that of BD after emotion regulation instruction. This may indicate distinct visual processing in BD compared to HC after emotion regulation instruction, which is consistent with the notion of altered visual information processing in euthymic BD (Maekawa et al. 2013; Morsel et al. 2018; Yeap et al. 2009). Indeed, it has been suggested that disturbances of the visual system could be used as a diagnostic indicator for psychotic diseases that display generalized attentional deficit (Bellani et al. 2020). It would be meaningful to examine the relationship between visual processing and cognitive/neuropsychological functions of BD patients to further understand this population’s potential sensory impairments. Moreover, distinct processing of the insula during NGD may indicate that this region, known for its role in salience detection (Uddin 2015; Menon and Uddin 2010) and assigning salience in response to contextual demands (Jiang et al. 2015; Power and Petersen 2013), is important in executing efficient emotion regulation when it is necessary.
The insula has been reported to be a key part of the cognitive neural system during emotion regulation using reappraisal (Picó-Pérez et al. 2019; Steward et al. 2016). In particular, increased association of the insula with the IFG indicates a better regulation effect of reappraisal (Li et al. 2021). The insula is also known to work together with frontoparietal regions and form a frontoparietal control network (Cauda et al. 2012; Vincent et al. 2008; Molnar-Szakacs and Uddin 2022). This network controls information processing under a variety of strategies to enhance the regulation of emotions (Li et al. 2021; Morawetz et al. 2017; Kanske et al. 2011; Goldin et al. 2008; Moodie et al. 2020; Dörfel et al. 2014). The high contribution of the SN and visual regions in group classification of NGD may imply distinct processing in BD when focusing on the emotionally salient components of the image after the regulation instruction, because directing attention to the appropriate target is the first step in the emotion regulation process (Dickstein and Leibenluft 2006). Furthermore, distinct processing of FPN regions (postcentral/supramarginal, middle/superior frontal) during NED may suggest that this network, known for its role in executing goal-oriented, cognitively demanding tasks (Uddin et al. 2019; Seeley et al. 2007; Vincent et al. 2008; Dosenbach et al. 2008), contributes to accurately judging whether emotion regulation is necessary. The high contribution of regions relating to the salience and frontoparietal attentional networks is consistent with reported deficits in these regions in BD during regulation of cognitive-affective integration tasks (Ellard et al. 2018; Rai et al. 2021).

Comparison with univariate analysis

Although the BD group alone demonstrated significant activity in the univariate analysis for the contrast regulation versus viewing with neutral images (NED vs. NEL) (Additional file 1: Table S2), the classification model significantly classified these two conditions within the HC group as well. This may reflect the ability of MVPA to detect subtle differences of mean responses in a whole-brain network (Coutanche 2013; Kragel et al. 2012) due to the content of the stimuli or the task instructions (Todd et al. 2013). Therefore, in our case, subtle response differences following different task instructions could have been detected by the model in HC as well. That said, this classification (regulation vs. viewing of neutral images) within HC produced the lowest accuracy among all within-group classifications and was significantly lower than the same classification in BD (see Tables 3A, 4).

Surprisingly, the top contributing regions reported from within-group classifications differed from the regions of activity observed in univariate analyses, for example the IFG. In HC, classifying regulation versus viewing conditions with negative images highlighted the ACC and insula, both of which belong to the SN (Seeley 2019). Here, other effects, such as condition-related attentional processing and perception changes under different task conditions, may have been detected by MVPA (Jimura and Poldrack 2012; Kragel et al. 2012; Coutanche 2013; Davis and Poldrack 2013; Ritchie et al. 2019; Todd et al. 2013) rather than emotion regulation or passive viewing processing. Similarly, although the univariate analysis revealed that BD patients did not exhibit the decreased amygdala activation during the NGD condition (Additional file 1: Fig. S3) that was observed in HC, this region did not notably contribute to the group classification. The lack of amygdala contribution is unlikely to be due to the use of multivariate analysis, as our previous work observed such activity when classifying emotion-related conditions (Whitehead and Armony 2019). Rather, it could be explained by the fact that emotion regulation paradigms require engagement of more dispersed regions and broader neural networks than simple emotion-processing paradigms (Braunstein et al. 2017; Townsend and Altshuler 2012).

Limitations and future directions

A limitation of the current study is that medication use was not included in the analysis, although our univariate analysis did not show significant differences with and without the index of medication use as a covariate. Likewise, although some potential confounding factors, such as age, IQ and number of years of formal education, were matched between the two subject groups, and are therefore unlikely to have driven the results, we cannot completely rule out an effect on the classification accuracy. Another important limitation is our relatively small sample size. Because of this, only limited conclusions can be drawn, and the current results must be replicated and validated in larger samples in future studies. Moreover, as our study only investigated one form of cognitive control and one type of emotion regulation, future studies would benefit from including non-emotional cognitive control or other forms of emotion regulation (e.g. suppression) to explore the generalizability of the current findings. In addition, as BD patients have difficulty regulating positive stimuli (Gruber 2011a, 2011b), it would be interesting to include such stimuli in future experiments.

Conclusion

The multivariate approach provided significant contributions to our understanding of dissimilar brain activation patterns in BD compared to HC following emotion regulation instructions, adding to results obtained in the previous univariate analysis. Specifically, although the univariate analysis reported that neural processing in BD is different from HC only when there is an unnecessary emotion regulation instruction (Corbalán et al. 2015), the current MVPA indicates that distinct neural processing exists even when emotion regulation is context-congruent. This implies that the emotion regulation instruction itself elicits distinct processing mechanisms between groups regardless of valence. Comparing these findings with behavioural and univariate results, it can be assumed that there is a compensatory mechanism in BD to achieve successful emotion regulation. Accordingly, we provided a set of candidate regions for further study. Based on the top contributing regions detected by the classifier, namely the insula, visual attention regions, IPL, and IFG, it can be assumed that BD patients exhibit a recruitment of attentional and cognitive control networks that is distinct from that of HC. Moreover, the neural pattern of the middle occipital cortex was distinct between groups in both emotion regulation conditions, indicating this region may contain fundamental information on how cognitive control instructions affect individuals with BD. Similarly, insula and FPN recruitment appear critical for effective use of the external information, specifically efficient emotion regulation when considering cognitive load. This may be a candidate trait marker of unique emotion regulation processing in BD and may reflect a circuit-level etiology of BD. These conclusions could not be drawn from the behavioural or univariate analyses alone, and therefore support the importance of incorporating multivariate methods when studying psychiatric patients.
The current findings also suggest that future research on BD should emphasize the cognitive control of emotion over emotion processing, as the distinct characteristics of BD appear to depend on the former.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- BD: Bipolar disorder patients
- HC: Healthy controls
- MDD: Major depressive disorder
- GLM: General linear model
- MVPA: Multivariate pattern analysis
- SVM: Support vector machine
- ER: Emotion regulation
- PV: Passive viewing
- PFC: Prefrontal cortex
- IFG: Inferior frontal gyrus
- IPL: Inferior parietal lobe
- ACC: Anterior cingulate cortex
- FPN: Fronto-parietal network
- SN: Salience network
- NGD: Negative decrease
- NED: Neutral decrease
- NGL: Negative look
- NEL: Neutral look

References

Achalia R, Sinha A, Jacob A, Achalia G, Kaginalkar V, Venkatasubramanian G, Rao NP. A proof of concept machine learning analysis using multimodal neuroimaging and neurocognitive measures as predictive biomarker in bipolar disorder. Asian J Psychiatry. 2020;1(50): 101984.

Adida M, Jollant F, Clark L, Besnier N, Guillaume S, Kaladjian A, Mazzola-Pomietto P, Jeanningros R, Goodwin GM, Azorin JM, Courtet P. Trait-related decision-making impairment in the three phases of bipolar disorder. Biol Psychiatry. 2011;70(4):357–65.

Ajilore O, Vizueta N, Walshaw P, Zhan L, Leow A, Altshuler LL. Connectome signatures of neurocognitive abnormalities in euthymic bipolar I disorder. J Psychiatr Res. 2015;1(68):37–44.

Anderson JS, Nielsen JA, Froehlich AL, DuBray MB, Druzgal TJ, Cariello AN, Cooperrider JR, Zielinski BA, Ravichandran C, Fletcher PT, Alexander AL. Functional connectivity magnetic resonance imaging classification of autism. Brain. 2011;134(12):3742–54.

Bhadra S, Kumar CJ. An insight into diagnosis of depression using machine learning techniques: a systematic review. Curr Med Res Opin. 2022;38(5):749–71.

Bhatia P, Sidana A, Das S, Bajaj MK. Neuropsychological functioning in euthymic phase of bipolar affective disorder. Indian J Psychol Med. 2018;40(3):213–8.

Braunstein LM, Gross JJ, Ochsner KN. Explicit and implicit emotion regulation: a multi-level framework. Soc Cogn Affect Neurosci. 2017;12(10):1545–57.

Buhle JT, Silvers JA, Wager TD, Lopez R, Onyemekwu C, Kober H, Weber J, Ochsner KN. Cognitive reappraisal of emotion: a meta-analysis of human neuroimaging studies. Cereb Cortex. 2014;24(11):2981–90.

Bürger C, Redlich R, Grotegerd D, Meinert S, Dohm K, Schneider I, Zaremba D, Förster K, Alferink J, Bölte J, Heindel W. Differential abnormal pattern of anterior cingulate gyrus activation in unipolar and bipolar depression: an fMRI and pattern classification approach. Neuropsychopharmacology. 2017;42(7):1399–408.

Burges CJ. A tutorial on support vector machines for pattern recognition. Data Min Knowl Discov. 1998;2(2):121–67.

Calhoun VD, Maciejewski PK, Pearlson GD, Kiehl KA. Temporal lobe and “default” hemodynamic brain modes discriminate between schizophrenia and bipolar disorder. Hum Brain Mapp. 2008;29(11):1265–75.

Campbell-Sills L, Simmons AN, Lovero KL, Rochlin AA, Paulus MP, Stein MB. Functioning of neural systems supporting emotion regulation in anxiety-prone individuals. Neuroimage. 2011;54(1):689–96.

Cauda F, Costa T, Torta DM, Sacco K, D’Agata F, Duca S, Geminiani G, Fox PT, Vercelli A. Meta-analytic clustering of the insular cortex: characterizing the meta-analytic connectivity of the insula when involved in active tasks. Neuroimage. 2012;62(1):343–55.

Cipriani G, Danti S, Carlesi C, Cammisuli DM, Di Fiorino M. Bipolar disorder and cognitive dysfunction: a complex link. J Nerv Ment Dis. 2017;205(10):743–56.

Claude LA, Houenou J, Duchesnay E, Favre P. Will machine learning applied to neuroimaging in bipolar disorder help the clinician? A critical review and methodological suggestions. Bipolar Disord. 2020;22(4):334–55.

Corbalán F, Beaulieu S, Armony JL. Emotion regulation in bipolar disorder type I: an fMRI study. Psychol Med. 2015;45(12):2521–31.

Cortes C, Vapnik V. Support-vector networks. Mach Learn. 1995;20(3):273–97.

Costafreda SG, Fu CH, Picchioni M, Toulopoulou T, McDonald C, Kravariti E, Walshe M, Prata D, Murray RM, McGuire PK. Pattern of neural responses to verbal fluency shows diagnostic specificity for schizophrenia and bipolar disorder. BMC Psychiatry. 2011;11(1):1.

Coutanche MN. Distinguishing multi-voxel patterns and mean activation: why, how, and what does it tell us? Cogn Affect Behav Neurosci. 2013;13(3):667–73.

Culham JC, Kanwisher NG. Neuroimaging of cognitive functions in human parietal cortex. Curr Opin Neurobiol. 2001;11(2):157–63.

Davis T, Poldrack RA. Measuring neural representations with fMRI: practices and pitfalls. Ann N Y Acad Sci. 2013;1296(1):108–34.

Davis T, LaRocque KF, Mumford JA, Norman KA, Wagner AD, Poldrack RA. What do differences between multi-voxel and univariate analysis mean? How subject-, voxel-, and trial-level variance impact fMRI analysis. Neuroimage. 2014;15(97):271–83.

Dickie EW, Armony JL. Amygdala responses to unattended fearful faces: interaction between sex and trait anxiety. Psychiatry Res Neuroimaging. 2008;162(1):51–7.

Dickstein DP, Leibenluft E. Emotion regulation in children and adolescents: boundaries between normalcy and bipolar disorder. Dev Psychopathol. 2006;18(4):1105–31.

Diekhof EK, Geier K, Falkai P, Gruber O. Fear is only as deep as the mind allows: a coordinate-based meta-analysis of neuroimaging studies on the regulation of negative affect. Neuroimage. 2011;58(1):275–85.

Doan NT, Kaufmann T, Bettella F, Jørgensen KN, Brandt CL, Moberget T, Alnæs D, Douaud G, Duff E, Djurovic S, Melle I. Distinct multivariate brain morphological patterns and their added predictive value with cognitive and polygenic risk scores in mental disorders. NeuroImage Clin. 2017;15:719–31.

Dodd A, Lockwood E, Mansell W, Palmier-Claus J. Emotion regulation strategies in bipolar disorder: a systematic and critical review. J Affect Disord. 2019;1(246):262–84.

Dörfel D, Lamke JP, Hummel F, Wagner U, Erk S, Walter H. Common and differential neural networks of emotion regulation by detachment, reinterpretation, distraction, and expressive suppression: a comparative fMRI investigation. Neuroimage. 2014;1(101):298–309.

Dosenbach NU, Fair DA, Cohen AL, Schlaggar BL, Petersen SE. A dual-networks architecture of top-down control. Trends Cogn Sci. 2008;12(3):99–105.

Drevets WC, Savitz J, Trimble M. The subgenual anterior cingulate cortex in mood disorders. CNS Spectr. 2008;13(8):663.

Drucaroff LJ, Fazzito ML, Castro MN, Nemeroff CB, Guinjoan SM, Villarreal MF. Insular functional alterations in emotional processing of schizophrenia patients revealed by multivariate pattern analysis fMRI. J Psychiatr Res. 2020;1(130):128–36.

Ellard KK, Zimmerman JP, Kaur N, Van Dijk KR, Roffman JL, Nierenberg AA, Dougherty DD, Deckersbach T, Camprodon JA. Functional connectivity between anterior insula and key nodes of frontoparietal executive control and salience networks distinguish bipolar depression from unipolar depression and healthy control subjects. Biol Psychiatry Cogn Neurosci Neuroimaging. 2018;3(5):473–84.

First MB, Spitzer RL, Gibbon M, Williams JBW. Structured Clinical Interview for DSM-IV Axis I Disorders (SCID I). New York: State Psychiatric Institute; 1997a.

First MB, Gibbon M, Spitzer RL, Williams JBW, Benjamin LS. Structured Clinical Interview for DSM-IV Axis II Personality Disorders, (SCID-II). Washington, DC: American Psychiatric Press; 1997b.

Foland-Ross LC, Bookheimer SY, Lieberman MD, Sugar CA, Townsend JD, Fischer J, Torrisi S, Penfold C, Madsen SK, Thompson PM, Altshuler LL. Normal amygdala activation but deficient ventrolateral prefrontal activation in adults with bipolar disorder during euthymia. Neuroimage. 2012;59(1):738–44.

Forbes AD. Classification-algorithm evaluation: five performance measures based on confusion matrices. J Clin Monit. 1995;11(3):189–206.

Fu CH, Mourao-Miranda J, Costafreda SG, Khanna A, Marquand AF, Williams SC, Brammer MJ. Pattern classification of sad facial processing: toward the development of neurobiological markers in depression. Biol Psychiatry. 2008;63(7):656–62.

Garavan H, Ross TJ, Stein EA. Right hemispheric dominance of inhibitory control: an event-related functional MRI study. Proc Natl Acad Sci. 1999;96(14):8301–6.

Gardumi A, Ivanov D, Hausfeld L, Valente G, Formisano E, Uludağ K. The effect of spatial resolution on decoding accuracy in fMRI multivariate pattern analysis. Neuroimage. 2016;15(132):32–42.

Goldin PR, McRae K, Ramel W, Gross JJ. The neural bases of emotion regulation: reappraisal and suppression of negative emotion. Biol Psychiat. 2008;63(6):577–86.

Green MJ, Cahill CM, Malhi GS. The cognitive and neurophysiological basis of emotion dysregulation in bipolar disorder. J Affect Disord. 2007;103(1–3):29–42.

Green MJ, Lino BJ, Hwang EJ, Sparks A, James C, Mitchell PB. Cognitive regulation of emotion in bipolar I disorder and unaffected biological relatives. Acta Psychiatr Scand. 2011;124(4):307–16.

Gross JJ. Emotion regulation: current status and future prospects. Psychol Inq. 2015;26(1):1–26.

Grotegerd D, Suslow T, Bauer J, Ohrmann P, Arolt V, Stuhrmann A, Heindel W, Kugel H, Dannlowski U. Discriminating unipolar and bipolar depression by means of fMRI and pattern classification: a pilot study. Eur Arch Psychiatry Clin Neurosci. 2013;263(2):119–31.

Grotegerd D, Stuhrmann A, Kugel H, Schmidt S, Redlich R, Zwanzger P, Rauch AV, Heindel W, Zwitserlood P, Arolt V, Suslow T. Amygdala excitability to subliminally presented emotional faces distinguishes unipolar and bipolar depression: an fMRI and pattern classification study. Hum Brain Mapp. 2014;35(7):2995–3007.

Gruber J. A review and synthesis of positive emotion and reward disturbance in bipolar disorder. Clin Psychol Psychother. 2011;18(5):356–65.

Gruber J. Can feeling too good be bad? Positive emotion persistence (PEP) in bipolar disorder. Curr Dir Psychol Sci. 2011;20(4):217–21.

Gruber J, Harvey AG, Gross JJ. When trying is not enough: emotion regulation and the effort–success gap in bipolar disorder. Emotion. 2012;12(5):997.

Hajek T, Cooke C, Kopecek M, Novak T, Hoschl C, Alda M. Using structural MRI to identify individuals at genetic risk for bipolar disorders: a 2-cohort, machine learning study. J Psychiatry Neurosci. 2015;40(5):316–24.

Haldane M, Cunningham G, Androutsos C, Frangou S. Structural brain correlates of response inhibition in Bipolar Disorder I. J Psychopharmacol. 2008;22(2):138–43.

Hamilton M. A rating scale for depression. J Neurol Neurosurg Psychiatry. 1960;23(1):56.

Han KM, De Berardis D, Fornaro M, Kim YK. Differentiating between bipolar and unipolar depression in functional and structural MRI studies. Prog Neuropsychopharmacol Biol Psychiatry. 2019;20(91):20–7.

Hassel S, Almeida JR, Frank E, Versace A, Nau SA, Klein CR, Kupfer DJ, Phillips ML. Prefrontal cortical and striatal activity to happy and fear faces in bipolar disorder is associated with comorbid substance abuse and eating disorder. J Affect Disord. 2009;118(1–3):19–27.

Jan Z, Ai-Ansari N, Mousa O, Abd-Alrazaq A, Ahmed A, Alam T, Househ M. The role of machine learning in diagnosing bipolar disorder: scoping review. J Med Internet Res. 2021;23(11): e29749.

Jiang J, Beck J, Heller K, Egner T. An insula-frontostriatal network mediates flexible cognitive control by adaptively predicting changing control demands. Nat Commun. 2015;6(1):1–1.

Jimura K, Poldrack RA. Analyses of regional-average activation and multivoxel pattern information tell complementary stories. Neuropsychologia. 2012;50(4):544–52.

Kalisch R. The functional neuroanatomy of reappraisal: time matters. Neurosci Biobehav Rev. 2009;33(8):1215–26.

Kanske P, Heissler J, Schönfelder S, Bongers A, Wessa M. How to regulate emotion? Neural networks for reappraisal and distraction. Cereb Cortex. 2011;21(6):1379–88.

Kanske P, Schönfelder S, Forneck J, Wessa M. Impaired regulation of emotion: neural correlates of reappraisal and distraction in bipolar disorder and unaffected relatives. Transl Psychiatry. 2015;5(1):e497.

Kjærstad HL, Macoveanu J, Knudsen GM, Frangou S, Phan KL, Vinberg M, Kessing LV, Miskowiak KW. Neural responses during down-regulation of negative emotion in patients with recently diagnosed bipolar disorder and their unaffected relatives. Psychol Med. 2021;31:1–2.

Koch SP, Hägele C, Haynes JD, Heinz A, Schlagenhauf F, Sterzer P. Diagnostic classification of schizophrenia patients on the basis of regional reward-related FMRI signal patterns. PLoS ONE. 2015;10(3): e0119089.

Koenigsberg HW, Fan J, Ochsner KN, Liu X, Guise K, Pizzarello S, Dorantes C, Tecuta L, Guerreri S, Goodman M, New A. Neural correlates of using distancing to regulate emotional responses to social situations. Neuropsychologia. 2010;48(6):1813–22.

Kohn N, Eickhoff SB, Scheller M, Laird AR, Fox PT, Habel U. Neural network of cognitive emotion regulation—an ALE meta-analysis and MACM analysis. Neuroimage. 2014;15(87):345–55.

Kollmann B, Scholz V, Linke J, Kirsch P, Wessa M. Reward anticipation revisited-evidence from an fMRI study in euthymic bipolar I patients and healthy first-degree relatives. J Affect Disord. 2017;1(219):178–86.

Kragel PA, Carter RM, Huettel SA. What makes a pattern? Matching decoding methods to data in multivariate pattern analysis. Front Neurosci. 2012;23(6):162.

Kryklywy JH, Macpherson EA, Mitchell DG. Decoding auditory spatial and emotional information encoding using multivariate versus univariate techniques. Exp Brain Res. 2018;236(4):945–53.

Lang PJ, Bradley MM, Cuthbert BN. International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual. 2nd ed. Gainesville, FL: University of Florida; 2008.

Lewis-Peacock JA, Norman KA. Multi-voxel pattern analysis of fMRI data. Cogn Neurosci. 2014;512:911–20.

Li H, Cui L, Cao L, Zhang Y, Liu Y, Deng W, Zhou W. Identification of bipolar disorder using a combination of multimodality magnetic resonance imaging and machine learning techniques. BMC Psychiatry. 2020;20(1):1–2.

Li W, Yang P, Ngetich RK, Zhang J, Jin Z, Li L. Differential involvement of frontoparietal network and insula cortex in emotion regulation. Neuropsychologia. 2021;15(161): 107991.

Maekawa T, Katsuki S, Kishimoto J, Onitsuka T, Ogata K, Yamasaki T, Ueno T, Tobimatsu S, Kanba S. Altered visual information processing systems in bipolar disorder: evidence from visual MMN and P3. Front Hum Neurosci. 2013;26(7):403.

Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage. 2003;19(3):1233–9.

Maldjian JA, Laurienti PJ, Burdette JH. Precentral gyrus discrepancy in electronic versions of the Talairach atlas. Neuroimage. 2004;21(1):450–5.

Martino DJ, Strejilevich SA, Torralva T, Manes F. Decision making in euthymic bipolar I and bipolar II disorders. Psychol Med. 2011;41(6):1319–27.

Mason L, O’Sullivan N, Montaldi D, Bentall RP, El-Deredy W. Decision-making and trait impulsivity in bipolar disorder are associated with reduced prefrontal regulation of striatal reward valuation. Brain. 2014;137(8):2346–55.

McRae K, Hughes B, Chopra S, Gabrieli JD, Gross JJ, Ochsner KN. The neural bases of distraction and reappraisal. J Cogn Neurosci. 2010;22(2):248–62.

Menon V, Uddin LQ. Saliency, switching, attention and control: a network model of insula function. Brain Struct Funct. 2010;214(5):655–67.

Molnar-Szakacs I, Uddin LQ. Anterior insula as a gatekeeper of executive control. Neurosci Biobehav Rev. 2022;11: 104736.

Montgomery SA, Åsberg MA. A new depression scale designed to be sensitive to change. Br J Psychiatry. 1979;134(4):382–9.

Moodie CA, Suri G, Goerlitz DS, Mateen MA, Sheppes G, McRae K, Lakhan-Pal S, Thiruchselvam R, Gross JJ. The neural bases of cognitive emotion regulation: the roles of strategy and intensity. Cogn Affect Behav Neurosci. 2020;20(2):387–407.

Moratti S, Saugar C, Strange BA. Prefrontal-occipitoparietal coupling underlies late latency human neuronal responses to emotion. J Neurosci. 2011;31(47):17278–86.

Morawetz C, Bode S, Derntl B, Heekeren HR. The effect of strategies, goals and stimulus material on the neural mechanisms of emotion regulation: a meta-analysis of fMRI studies. Neurosci Biobehav Rev. 2017;1(72):111–28.

Morawetz C, Riedel MC, Salo T, Berboth S, Eickhoff SB, Laird AR, Kohn N. Multiple large-scale neural networks underlying emotion regulation. Neurosci Biobehav Rev. 2020;1(116):382–95.

Morris RW, Sparks A, Mitchell PB, Weickert CS, Green MJ. Lack of cortico-limbic coupling in bipolar disorder and schizophrenia during emotion regulation. Transl Psychiatry. 2012;2(3):e90.

Morsel AM, Morrens M, Dhar M, Sabbe B. Systematic review of cognitive event related potentials in euthymic bipolar disorder. Clin Neurophysiol. 2018;129(9):1854–65.

Mourão-Miranda J, Oliveira L, Ladouceur CD, Marquand A, Brammer M, Birmaher B, Axelson D, Phillips ML. Pattern recognition and functional neuroimaging help to discriminate healthy adolescents at risk for mood disorders from low risk adolescents. PLoS ONE. 2012;7(2): e29482.

Nguyen T, Zhou T, Potter T, Zou L, Zhang Y. The cortical network of emotion regulation: insights from advanced EEG-fMRI integration analysis. IEEE Trans Med Imaging. 2019;38(10):2423–33.

Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn Sci. 2006;10(9):424–30.

Ochsner KN, Gross JJ. The cognitive control of emotion. Trends Cogn Sci. 2005;9(5):242–9.

Ochsner KN, Bunge SA, Gross JJ, Gabrieli JD. Rethinking feelings: an FMRI study of the cognitive regulation of emotion. J Cogn Neurosci. 2002;14(8):1215–29.

Ochsner KN, Ray RD, Cooper JC, Robertson ER, Chopra S, Gabrieli JD, Gross JJ. For better or for worse: neural systems supporting the cognitive down-and up-regulation of negative emotion. Neuroimage. 2004;23(2):483–99.

Ochsner KN, Silvers JA, Buhle JT. Functional imaging studies of emotion regulation: a synthetic review and evolving model of the cognitive control of emotion. Ann N Y Acad Sci. 2012;1251(1):E1-24.

Oertel-Knöchel V, Reuter J, Reinke B, Marbach K, Feddern R, Alves G, Prvulovic D, Linden DE, Knöchel C. Association between age of disease-onset, cognitive performance and cortical thickness in bipolar disorders. J Affect Disord. 2015;15(174):627–35.

Pan J, Zhan L, Hu C, Yang J, Wang C, Gu LI, Zhong S, Huang Y, Wu Q, Xie X, Chen Q. Emotion regulation and complex brain networks: association between expressive suppression and efficiency in the fronto-parietal network and default-mode network. Front Hum Neurosci. 2018;16(12):70.

Patel MJ, Khalaf A, Aizenstein HJ. Studying depression using imaging and machine learning methods. NeuroImage: Clin. 2016;10:115–23.

Penny WD, Friston KJ, Ashburner JT, Kiebel SJ, Nichols TE, editors. Statistical parametric mapping: the analysis of functional brain images. Amsterdam: Elsevier; 2007.

Peters SK, Dunlop K, Downar J. Cortico-striatal-thalamic loop circuits of the salience network: a central pathway in psychiatric disease and treatment. Front Syst Neurosci. 2016;27(10):104.

Phan KL, Fitzgerald DA, Nathan PJ, Moore GJ, Uhde TW, Tancer ME. Neural substrates for voluntary suppression of negative affect: a functional magnetic resonance imaging study. Biol Psychiat. 2005;57(3):210–9.

Phillips ML, Ladouceur CD, Drevets WC. A neural model of voluntary and automatic emotion regulation: implications for understanding the pathophysiology and neurodevelopment of bipolar disorder. Mol Psychiatry. 2008;13(9):833–57.

Picó-Pérez M, Radua J, Steward T, Menchón JM, Soriano-Mas C. Emotion regulation in mood and anxiety disorders: a meta-analysis of fMRI cognitive reappraisal studies. Prog Neuropsychopharmacol Biol Psychiatry. 2017;3(79):96–104.

Picó-Pérez M, Alemany-Navarro M, Dunsmoor JE, Radua J, Albajes-Eizagirre A, Vervliet B, Cardoner N, Benet O, Harrison BJ, Soriano-Mas C, Fullana MA. Common and distinct neural correlates of fear extinction and cognitive reappraisal: a meta-analysis of fMRI studies. Neurosci Biobehav Rev. 2019;1(104):102–15.

Power JD, Petersen SE. Control-related systems in the human brain. Curr Opin Neurobiol. 2013;23(2):223–8.

Pozzi E, Vijayakumar N, Rakesh D, Whittle S. Neural correlates of emotion regulation in adolescents and emerging adults: a meta-analytic study. Biol Psychiat. 2021;89(2):194–204.

Pruessner L, Barnow S, Holt DV, Joormann J, Schulze K. A cognitive control framework for understanding emotion regulation flexibility. Emotion. 2020;20(1):21.

Rai S, Griffiths K, Breukelaar IA, Barreiros AR, Chen W, Boyce P, Hazell P, Foster S, Malhi GS, Bryant RA, Harris AW. Investigating neural circuits of emotion regulation to distinguish euthymic patients with bipolar disorder and major depressive disorder. Bipolar Disord. 2021;23(3):284–94.

Ramírez-Martín A, Ramos-Martín J, Mayoral-Cleries F, Moreno-Küstner B, Guzman-Parra J. Impulsivity, decision-making and risk-taking behaviour in bipolar disorder: a systematic review and meta-analysis. Psychol Med. 2020;50(13):2141–53.

Redlich R, Opel N, Grotegerd D, Dohm K, Zaremba D, Bürger C, Münker S, Mühlmann L, Wahl P, Heindel W, Arolt V. Prediction of individual response to electroconvulsive therapy via machine learning on structural magnetic resonance imaging data. JAMA Psychiat. 2016;73(6):557–64.

Ritchie JB, Kaplan DM, Klein C. Decoding the brain: neural representation and the limits of multivariate pattern analysis in cognitive neuroscience. Br J Philos Sci. 2019.

Rodríguez-Avi J, Ariza-López FJ, Alba-Fernández V. Methods for comparing two observed confusion matrices. In: Proceedings of the 21st AGILE international conference on geographic information science 2018.

Rubin-Falcone H, Zanderigo F, Thapa-Chhetry B, Lan M, Miller JM, Sublette ME, Oquendo MA, Hellerstein DJ, McGrath PJ, Stewart JW, Mann JJ. Pattern recognition of magnetic resonance imaging-based gray matter volume measurements classifies bipolar disorder and major depressive disorder. J Affect Disord. 2018;1(227):498–505.

Sartori JM, Reckziegel R, Passos IC, Czepielewski LS, Fijtman A, Sodré LA, Massuda R, Goi PD, Vianna-Sulzbach M, de Azevedo CT, Kapczinski F. Volumetric brain magnetic resonance imaging predicts functioning in bipolar disorder: a machine learning approach. J Psychiatr Res. 2018;1(103):237–43.

Schrouff J, Rosa MJ, Rondina JM, Marquand AF, Chu C, Ashburner J, Phillips C, Richiardi J, Mourao-Miranda J. PRoNTo: pattern recognition for neuroimaging toolbox. Neuroinformatics. 2013;11(3):319–37.

Schrouff J, Mourao-Miranda J, Phillips C, Parvizi J. Decoding intracranial EEG data with multiple kernel learning method. J Neurosci Methods. 2016;261:19–28.

Schrouff J, Cremers J, Garraux G, Baldassarre L, Mourão-Miranda J, Phillips C. Localizing and comparing weight maps generated from linear kernel machine learning models. In: 2013 International Workshop on Pattern Recognition in Neuroimaging 2013a (pp. 124–127). IEEE.

Seeley WW, Menon V, Schatzberg AF, Keller J, Glover GH, Kenna H, Reiss AL, Greicius MD. Dissociable intrinsic connectivity networks for salience processing and executive control. J Neurosci. 2007;27(9):2349–56.

Steardo L Jr, Carbone EA, De Filippis R, Pisanu C, Segura-Garcia C, Squassina A, De Fazio P, Steardo L. Application of support vector machine on fMRI data as biomarkers in schizophrenia diagnosis: a systematic review. Front Psych. 2020;23(11):588.

Stephanou K, Davey CG, Kerestes R, Whittle S, Pujol J, Yücel M, Fornito A, López-Solà M, Harrison BJ. Brain functional correlates of emotion regulation across adolescence and young adulthood. Hum Brain Mapp. 2016;37(1):7–19.

Steward T, Pico-Perez M, Mata F, Martinez-Zalacain I, Cano M, Contreras-Rodriguez O, Fernandez-Aranda F, Yucel M, Soriano-Mas C, Verdejo-Garcia A. Emotion regulation and excess weight: impaired affective processing characterized by dysfunctional insula activation and connectivity. PLoS ONE. 2016;11(3): e0152150.

Strakowski SM, Adler CM, Holland SK, Mills NP, DelBello MP, Eliassen JC. Abnormal FMRI brain activation in euthymic bipolar disorder patients during a counting Stroop interference task. Am J Psychiatry. 2005;162(9):1697–705.

Sundermann B, Herr D, Schwindt W, Pfleiderer B. Multivariate classification of blood oxygen level–dependent fMRI data with diagnostic intention: a clinical perspective. Am J Neuroradiol. 2014;35(5):848–55.

Thompson NM, Uusberg A, Gross JJ, Chakrabarti B. Empathy and emotion regulation: an integrative account. Prog Brain Res. 2019;1(247):273–304.

Todd MT, Nystrom LE, Cohen JD. Confounds in multivariate pattern analysis: theory and rule representation case study. Neuroimage. 2013;15(77):157–65.

Townsend J, Altshuler LL. Emotion processing and regulation in bipolar disorder: a review. Bipolar Disord. 2012;14(4):326–39.

Townsend JD, Torrisi SJ, Lieberman MD, Sugar CA, Bookheimer SY, Altshuler LL. Frontal-amygdala connectivity alterations during emotion downregulation in bipolar I disorder. Biol Psychiat. 2013;73(2):127–35.

Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15(1):273–89.

Uddin LQ. Salience processing and insular cortical function and dysfunction. Nat Rev Neurosci. 2015;16(1):55–61.

Uddin LQ, Yeo BT, Spreng RN. Towards a universal taxonomy of macro-scale functional human brain networks. Brain Topogr. 2019;32(6):926–42.

Vincent JL, Kahn I, Snyder AZ, Raichle ME, Buckner RL. Evidence for a frontoparietal control system revealed by intrinsic functional connectivity. J Neurophysiol. 2008;100(6):3328–42.

Weaverdyck ME, Lieberman MD, Parkinson C. Tools of the Trade Multivoxel pattern analysis in fMRI: a practical introduction for social and affective neuroscientists. Soc Cogn Affect Neurosci. 2020;15(4):487–509.

Whitehead JC, Armony JL. Multivariate fMRI pattern analysis of fear perception across modalities. Eur J Neurosci. 2019;49(12):1552–63.

Wolfers T, Buitelaar JK, Beckmann CF, Franke B, Marquand AF. From estimating activation locality to predicting disorder: a review of pattern recognition for neuroimaging-based psychiatric diagnostics. Neurosci Biobehav Rev. 2015;1(57):328–49.

Wolkenstein L, Zwick JC, Hautzinger M, Joormann J. Cognitive emotion regulation in euthymic bipolar disorder. J Affect Disord. 2014;1(160):92–7.

Wu MJ, Mwangi B, Bauer IE, Passos IC, Sanches M, Zunta-Soares GB, Meyer TD, Hasan KM, Soares JC. Identification and individualized prediction of clinical phenotypes in bipolar disorders using neurocognitive data, neuroimaging scans and machine learning. Neuroimage. 2017;15(145):254–64.

Yeap S, Kelly SP, Reilly RB, Thakore JH, Foxe JJ. Visual sensory processing deficits in patients with bipolar disorder revealed through high-density electrical mapping. J Psychiatry Neurosci. 2009;34(6):459–64.

Young RC, Biggs JT, Ziegler VE, Meyer DA. A rating scale for mania: reliability, validity and sensitivity. Br J Psychiatry. 1978;133(5):429–35.

Zhang L, Opmeer EM, van der Meer L, Aleman A, Ćurčić-Blake B, Ruhé HG. Altered frontal-amygdala effective connectivity during effortful emotion regulation in bipolar disorder. Bipolar Disord. 2018;20(4):349–58.

Zhang L, Ai H, Opmeer EM, Marsman JB, van der Meer L, Ruhé HG, Aleman A, Van Tol MJ. Distinct temporal brain dynamics in bipolar disorder and schizophrenia during emotion regulation. Psychol Med. 2020;50(3):413–21.

Zhong M, Zhang H, Yu C, Jiang J, Duan X. Application of machine learning in predicting the risk of postpartum depression: a systematic review. J Affect Disorders. 2022.

Seeley WW. The Salience Network: A Neural System for Perceiving and Responding to Homeostatic Demands The Journal of Neuroscience 2019;39(50):9878–82.

Acknowledgements

Not applicable.

Funding

This research was funded by grants to JLA from the Natural Sciences and Engineering Research Council of Canada (NSERC, RGPIN-2017-05832) and the Canadian Institutes of Health Research (CIHR, MOP-130516).

Author information

Contributions

FK analyzed and interpreted the data and was a major contributor to writing the manuscript. JCW and JLA both contributed to the revision of the manuscript. JLA designed the research. FC and JLA contributed to the data acquisition. SB contributed to the recruitment and clinical supervision of the participants during the study. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The Faculty of Medicine Research Ethics Board of McGill University approved the study protocol. The study complied with the Helsinki Declaration of 1975, as revised in 2008. All participants provided written informed consent.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Supplementary Information

Additional file 1.

Table S1. Movement-related parameters during scanning. Table S2. Coordinates and brain regions from the univariate analysis. Table S3. Clusters corresponding to the top 5% of the weight contribution in significant classifications. Table S4. Top 10 regions contributing to the significant group classifications based on the combined cluster size. Figure S1. Statistical parametric maps of the contrasts Negative Decrease − Negative Look and Neutral Decrease − Neutral Look. Figure S2. Weight map of positive and negative voxel-wise weight contribution in the within-group classifications. Figure S3. Amygdala activation for the contrast Negative Look − Negative Decrease for the HC group (x = 20, y = −4, z = −18).

Rights and permissions

Open Access. This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kondo, F., Whitehead, J.C., Corbalán, F. et al. Emotion regulation in bipolar disorder type-I: multivariate analysis of fMRI data. Int J Bipolar Disord 11, 12 (2023). https://doi.org/10.1186/s40345-023-00292-w
