Abstract

Purpose

Understanding the technical efficiency of health facilities is essential for an optimal allocation of scarce resources to primary health sectors. The COVID-19 pandemic may have further undermined levels of efficiency in low-resource settings. This study takes advantage of 2019 and 2020 data on characteristics of health facilities, health services inputs and output to examine the levels and changes in efficiency of Ghanaian health facilities. The current study by using a panel dataset contributes to existing evidence, which is mostly based on pre-COVID-19 and single-period data.

Methods

The analysis is based on a panel dataset including 151 Ghanaian health facilities. Data Envelopement Analysis (DEA) technique was used to estimate the level and changes in efficiency of health facilities across two years..

Results

The results show a net increase of 26% in inputs, influenced mostly by increases in temporary non-clinical staff (131%) and attrition of temporary clinical staff and permanent non-clinical staff, 40% and 54% respectively. There was also a net reduction in output of 34%, driven by a reduction in in-patient days (37%), immunization (11%), outpatients visits and laboratory test of 9%. Nowithstanding the COVID-19 pandemic, the results indicate that 59 (39%) of sampled health facilities in 2020 were efficient, compared to 48 (32%) in 2019. The results also indicate that smaller-sized health facilities were less likely to be efficient compared to relatively bigger health facilities.

Conclusion

Based on the findings, it will be essential to examine factors that accounted for efficiency improvements in some health facilities, to enable health facilities lagging behind to learn from those on the efficiency frontier. In addition, the findings emphasise the need for CHAG to work with health facility managers to optimise inputs allocation through a redistribution of staff. Most importantly, the findings are suggestive of the resilience of CHAG health facilities in responding to a health shock such as the COVID-19 pandemic.

Similar content being viewed by others

Introduction

Globally, efficiency in health systems is a priority for decision-makers given that healthcare resources are scarce [1]. This is particularly important in regions such as Africa where resource scarcity in the health sector is acute. As such, Africa accounts for only 11% of the world’s population but carries 24% of the global burden of disease, and spends only 6.1% of its Gross Domestic Product (GDP) on health compared to 15% in developed countries [2,3,4]. Such resource scarcity in the midst of rising out-of-pocket expenditure, and the need to achieve financial protection and by extention Universal Coverage (UHC), reiterate the call for the health sector in Africa to be more efficient [5, 6]. Still, inefficient utilisation of healthcare resources persist in many African countries, with the average inefficiency rate estimated to be about 23% [7]. The inefficiency occurs as a result of misuse of health inputs in ways that do not maximise the level of health outputs produced (i.e. technical efficiency), or sub-optimal allocation of resources toward producing health outputs that are not priorities for society (allocative efficiency) [8].

However, given that health facilities consume the highest proportion of total health expenditure in many African countries (estimated to be from 45 to 81% of government health expenditure) [5, 9], they have inevitably become candidates to consider when it comes to efforts to understand and improve the levels of efficiency in the health sector. It has been suggested that efficiency in health systems, especially at the health facility level in Lower Middle Income Countries (LMICs), will not only help to ensure that limited available resources are used optimally, but can also result in savings that can be invested in the health system for improved population health [9, 10].

The existing literature abounds in peer-reviewed journal publications (40 papers identified) that have examined technical efficiency of health facilities in Africa [5]. Whiles existing studies used health facilities across different ownership types (public, mission, quasi public/ government and private) and hierarchy (health centres, hospitals etc.), they were mostly limited to using single-year data. In the specific case of Ghana, existing studies [11,12,13,14,15] are based on single-year data, making it difficult to examine trends and therefore changes in efficiency across years. Additionally, the only existing technical efficiency study in Ghana that includes mission health facilities is based on data collected in 2005 [12] and therefore can hardly be relied upon for policy decisions. The current study takes advantage of the Med4All baseline study, which collected data on inputs and output of Christian Health Association of Ghana (CHAG) health facilities for 2019 and 2020 to examine the level and changes in technical efficiency of CHAG health facilities for the two periods for which data was collected. Beside data availability, examining technical efficiency of CHAG health facilities is crucial given that it is the second largest provider of healthcare services in Ghana beside the Ghana Health Service, contributing an average of 30% of total OPD care from 2016 to 2021 [16]. CHAG facilities also serve majority of Ghana’s rural population. Most importantly, the unique nature of the Med4All data (data collected before and during the COVID-19 pandemic) makes it possible to examine whether CHAG health facilities were resilient enough to withstand shocks emanating from the COVID-19 pandemic. Specifically the paper examines:

-

1.

Level and determinants of technical efficiency among CHAG health facilities

-

2.

Changes in technical efficiency of CHAG health facilities between 2019 and 2020.

The current study is essential in terms of added value in two respects. First, the findings affords us the opportunity to know the level of improvements achieved by CHAG health facilities in technical efficiency compared to when they were last studied using 2005 data [12]. Secondly, a comparison of efficiency scores for 2019 and 2020 makes it possible not only to identify changes that occurred between the two periods, but more importantly, whether the shock arising from the COVID-19 pandemic adversely affected the levels of efficiency of CHAG health facilities or not.

Methods

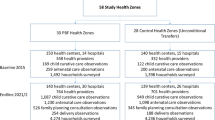

The study used health service input and output data from the Med4All baseline study, details of this project are described elsewhere [17]. The Med4All study, which has completed baseline data collection and awaiting endline data collection in 2024 seeks to examine the impact of a health facility-based medicines supply chain innovation (Med4All system) on the efficiency of participating health facilities. The baseline data was collected from 151 CHAG health facilities out of a universe of 316 yet to use the Med4All system. The sample of 151 health facilities was arrived at first, by calculating a sample size deemed to be large enough to isolate the impact of the implementation of the Med4All system on efficiency of participating health facilities. This was done via power calculations that used a design effect of 7% based on the existing literature [18,19,20] and standard deviation of 23.3% as per Jehu-Appiah etal. [12] for mission hospitals in Ghana, an alpha value of 0.05 and default power of 0.8. The power calculations resulted in a an optimal sample size of 119 health facilities to be used for the Med4All study. However, to take care of sample attrition, the sample size of 119 was increased to 151.

The Med4All baseline data was collected using a digitized data collection instrument via the KoboCollect software. For each sampled health facility, data supervisors worked with enumerators recruited from health facilities to collect and enter data on variables of interest (in the case of the current paper: service input and output and characteristics of health facilities) for the years 2019 and 2020 into a data repository. Data on each health facilitiy entered into the data repository was later sent back to senior officials of respective health facilities for validation. Additionally, data collected on each health facility was validated with a version of the same data submitted by each health facility as part of routine report to CHAG. For the purpose of the current study, specific variables used are captured in Tables 1 and 2 below. Note that there was no attrition of health facilities between 2019 and 2020.

Analysis

A two-stage process was used for the analysis. In the first stage, Data Envelopement Analysis (DEA) technique was used to seperately calculate efficiency scores for the 151 health facilities as well as changes in the scores over the 2 years. The DEA technique was also used to compute output improvements needed for inefficient health facilities to become efficient using the 2020 data. The methods are further explained in the paragraphs below.

Determination of inputs and outputs

The determination of inputs and outputs for a group of decision making units (DMUs) is an important aspect of the DEA technique. In the health sector, the main output for healthcare facilities is improved health status [11]. However, proxies are used in measuring the output of improved healthcare since it is difficult to explicitly measure this variable [11, 12, 21]. In a typical health facility, input categories will include human resources (clinical and non clinical staff), equipments (Number of beds, spending on relevant assets), medicines and supplies (spending on medicines and consumerbles etc.) and Land (floor space). Output however includes categories such as preventive and promotive services (immunisation, antenatal care and health outreach), curative care (outpatients care, inpatients care, deliveries, surgeries etc.) and anxcillary services (laboratory test, imaging etc.). In line with these proxies and availability of data in the Med4All dataset, we selected human resources (number of clinical and non-clinical staff) and capital (number of beds, general expenditure etc.). Information on floor space was however not available. In the case of input, we selected preventive and promotive services (immunisation, antenatal visits), curative services (outpatients, inpatients visits and number of deliveries) and axcillary services ( number of laboratory test) as per Table 1.

Efficiency and the DEA model

Efficiency which measures the relative performance of DMUs to the best performing DMUs on the production possibility frontier can either be technical or allocative [22]. Technical efficiency (focus of this study) is the capacity of a DMU to use the minimum set of inputs to achieve a certain level of output (input-oriented) or produce the maximum set of outputs with a given level of inputs (output-oriented).

Unlike parametric approaches that relies on economic theory, DEA which was first proposed by Farrell [22], measures relative efficiency through a linear programming technique and is guided by data to determine the location and shape of the efficiency frontier. The original model was further developed (i.e. CCR model) to assume constant returns to scale (CRS) and therefore capture sensitivity in measuring technical efficiency [23]. Intuitively, CRS means that a percentage change in inputs results in the same percentage change in outputs. However, this is implausible in real market situations due to imperfect market conditions and regulatory changes. Thus, the DEA model was further developed (i.e. the BCC model) to assume variable returns to scale (VRS).

The CCR model determines the gross efficiency of the DMU by measuring the ability of the DMU to convert its inputs into outputs. On the other hand, the BCC model decomposes technical efficiency into Pure Technical Efficiency (PTE) and Scale Efficiency (SE) [24], which is related to the size of the DMU. There are two types of SEs in an inefficiency situation. These are decreasing returns to scale (DRS), which reflect a situation where the size of a DMU may be too large for its activities and therefore results in diseconomies of scale. Increasing returns to scale (IRS) on the contrary reflect a situation where the DMU is too small for the size of its operations and therefore leads to economies of scale. Under the VRS or CRS approach, relative efficiency can be measured using the input-oriented or output-oriented approach. In DEA, each DMU can choose any combination of inputs and outputs to maximise efficiency, which technically is the ratio of total weighted output to total weighted input. Thus, DMUs that employ less input to produce more outputs are deemed to be on the efficiency frontier’s edge (i.e. efficient) and therefore have an efficiency score of 1 or 100%. On the other hand, DMUs within the production possibility set are regarded as inefficient with a score of less than 1 or 100%. DEA's main advantage is its ability to utilise multiple inputs and outputs and its simplicity in interpretation. For a detailed discussion of the advantages and limitation of the use of DEA refer to Jehu-Appiah et al. [12].

For this study, we consider N health facilities (or DMUs) where each health facility utilises \(x\) inputs and \(y\) outputs, where \({x}_{i}\) represent the \(i\text{th}\) input and \({y}_{j}\) represent the jth output. The output oriented CCR model is described by the following linear programming model:

Subject to

This study uses the BCC output-oriented approach, because inputs are limited and health facility managers are not able to change that in the short-run in lower-middle income countries like Ghana. Thus, it is more reasonable for health facility managers to focus on maximising output given the set of existing inputs. Additionally the BCC model makes it possible to decompose computed efficiency scores into pure technical efficiency and scale efficiency. The variable \({E}_{m}\) represents the virtual output of the health facility \(m,\) which is a linear combination of all the outputs of the health facility, where each output is given the weight \({a}_{j}\). The objective function seeks to choose weights \({a}_{j}\) for health facility \(m\) that maximises its outputs. The output-oriented model seeks to maximise the output given the set of input levels. Thus, the linear programming model constraints the linear combination of inputs for health facility \(m\) to be equal to 1, as shown in the first part of Eq. 1b. The quantity \({b}_{jm}\) represents the weight for input \(j\) for health facility \(m.\) The second part of the constraint indicate that for each DMU, the difference between the weighted sum of all outputs and the weighted sum of inputs of all the DMUs is less than or equal to zero. This constraint allows the respective input and output weights \({a}_{jm}\) and \({b}_{jm}\) to be selected such that the efficiencies of other DMUs are restricted to lie within the closed interval of 0 and 1.

Under the VRS approach, the output oriented CCR multiplier model is modified by introducing the quantity \({z}_{jm}\) to the objective function and constraints. The health facility or DMU exhibits increasing returns to scale if \({z}_{jm}>0\) and decreasing returns to scale if \({z}_{jm}<0\). The health facility experiences a constant returns to scale if \({z}_{jm}=0\). The BCC model is provided by the following equations.

Subject to

It is important to emphasise that traditional DEA approach can be susceptible to potential bias in the estimation of efficiency scores due to outliers and statistical noise. To address this, the study employed the Simar and Wilson bootstrapping method. This approach provides bias corrected scores with an accompanying 95% confidence interval. However, our assessment show that the distribution of the traditional efficiency scores mimics that of the computed bias corrected scores. This is also shown in the Kendall’s rank correlation coefficient which shows a substantial level of association between the ranks for the traditional efficiency scores and the bias corrected scores (See Table AP-2.1 of Appendix 2). Turkson and colleagues [25] explain that a consistent mirroring of traditional and bias corrected efficiency scores provide additional justification that the traditional efficiency scores are not subject to the risk of potential bias and outliers. Secondly, unlike the traditional efficiency scores, it is difficult to decompose the bias corrected scores into respective scale efficiencies and also assess returns to scale under scale efficiency. Thus, given the added value to the study of scale efficiency and returns to scale, the traditional efficiency scores were used. Notwithstanding, the bias corrected scores and respective confidence intervals are provided in Tables AP-2.2 to AP-2.4 in Appendix 2.

In addition to the efficiency scores, The DEA model creates an efficiency frontier where efficient DMUs who achieve the largest possible outputs for their inputs lie on the frontier (under the output-oriented model). Conversely, DMUs, (i.e. health facilities), that are inefficient do not lie on the frontier. Efficient DMUs that share similar input–output characteristic with their inefficient DMUs can act as peers. Thus, certain efficient DMUs could be used as benchmarks for inefficient DMUs. Mathematically, the DEA model determines the peers for each inefficient DMU by solving the dual of the primal envelopment model (See Ramanathan [26] for more information on the mathematical solution of the primal and dual problems). Following from the model properties, this study proceeded to identify the peers for each inefficient DMU in order to estimate the potential improvement in output required for inefficient DMUs to become efficient.

Results

Health facility characteristics

Data was collected among 151 CHAG health facilities, of which half of the sample (50.3%) constituted of lower-level health facilities, slightly less than half (45.7%) were primary care hospitals and the rest (4%) were secondary care hospitals. Sampled health facilities have been in existence for a minimum of 1 year and a maximum of 71 years, with an average of 29 years in 2019 and 30 years as of 2020. In addition, majority of health facility CEOs were males (74%), with majority of them having either a bachelor’s degree (50.3%) or a masters degree or higher (39.7%). Table 2 provides summary characteristics of health facilities studied.

Input and output characteristics

Health facilities surveyed had a minimum of 2 and a maximum of 357 beds, with an average of 56 beds for 2019 and 2020. Total expenditure including salaries increased from GHS 661 million in 2019 to about GHS 727 million in 2020, with the average increasing from GHS4.38 million to GHS4.81 million for the same period. However, average total expenditure exclusive of salaries for 2020 reduced by 9% from the 2019 figure of GHS 1.67 million. In 2019, 122 out of the 151 health facilities sampled had at least one temporary clinical staff, but reduced by 4% to 117 facilities in 2020. Temporary clinical staff reduced by 40% from 1679 in 2019 to 1015 in 2020. The number of permanent clinical staff also dropped by 2% from 13,890 in 2019 to 13,650 in 2020. On the contrary, the number of temporary nonclinical staff increased significantly between 2019 (855) and 2020 (1974). The total number of permanent non-clinical staff on the other hand reduced by 54% between 2019 and 2020.

For output, the average number of inpatient and outpatient visits reduced by 37% and 9% respectively between the 2 years. Average number of ANC visits increased by 2%, while the average number of deliveries per health facility increased by 8%. The average number of children immunized increased by 11%, whereas laboratory tests reduced by 9%. Table 3 presents summary statistics of inputs and output variables used.

Analysis of efficiency scores

The technical efficiency and related decomposed efficiency scores in 2020; pure technical and scale efficiencies, were obtained from the inputs and outputs identified in the DEA model. The results of the DEA analysis (see Table 4) show an average Technical Efficiency (TE) score of 0.73 with a standard deviation of 0.28. Also, 59 health facilities, representing 39% of the sample, were technically efficient, thus having a TE score of 1.

Overall, Scale Efficiency (SE) and Pure Technical Efficiency (PTE) scores after decomposition were 0.92 and 0.79 with standard deviations of 0.11 and 0.27 respectively (see Table 4).

The low dispersion in the distribution of individual SE scores contributed to its high average scores. The SE scores recorded the lowest standard deviation, which shows that most of the SE scores for the health facilities were not highly dispersed away from the mean. Again, Fig. 1 shows that a large proportion of health facilities sampled had higher SE scores, although these were not 1. For instance, 78 health facilities (representing 52%) had SE scores between 0.75 and 0.99, compared with 24 health facilities (representing 16%) and 27 health facilities (representing 18%) with respective PTE and TE scores ranging between 0.75 and 0.99.

Table 4 equally summarises efficiency scores based on the health facility type (Primary Care Hospitals—PCH, Secondary Care Hospitals—SCH, and Other Lower Level Health Facilities—OLHF—i.e. Clinics, CHPS Compounds and Health Centres). SCH recorded the highest average PTE score of 0.96, while PCH followed with an average PTE score of 0.81. OLHF recorded the least PTE score of 0.77, but the highest average SE score of 0.95, followed by SCH with an average SE score of 0.90 and PCH with the least SE score of 0.88. OLHF recorded the second-highest TE score of 0.74, driven mostly by a higher SE score. PCH however recorded the least TE score of 0.72.

Again, Table 4 shows that a substantial proportion of SCH were on the efficiency frontier (i.e. TE score of 1). Wheras 66.7% and 50% of SCH had a PTE and SE score of 1 respectively, only 2 (33.3%) SCH had a TE score of 1. For PCH’s, 31(44.9%) and 22 (31.9%) facilities respectively had PTE and SE scores of 1, with the number of PCH’s having a TE score of 1 being 22 (31.9%). For the OLHF sample, 51.3% (39) and 55.3% (42) had a PTE and SE score of 1 respectively, with 46.1% of the sample recording a TE of 1. This implies that PCHs had a lower proportion of their sample on the efficiency frontier.

Assessment of returns to scale

Table 5 presents the proportions of health facilities that exhibit constant, increasing and decreasing returns to scale according to the type of health facility. The results show that the OLHF had the highest proportion of health facilities exhibiting CRS (46%), followed by SCH (33%) and PCH (32%). Comparatively, PCH had the highest proportion of health facilities exhibiting DRS (65%), whereas OLHF had the highest proportion of health facilities experiencing IRS (36%).

Potential output improvement by the type of health facility

The DEA model was used to compute general efficiency scores of health facilities. Subsequently, it was used to identify possible output improvements needed for inefficient health facilities to be efficient. This section outlines improvements in the various output variables used in computing the efficiency scores.

As per Fig. 2 the highest level of improvement required for efficiency emerged from OLHF, followed closely by PCH. The DEA model recommends that the sampled health facilities within OLHF need to improve the specified outputs by 39% to ensure 100% efficiency. On the other hand, PCH require an improvement of about 33%, while SCH require an improvement of about 7% in their specified outputs. Generally, the biggest improvement required for efficiency is related to the number of immunizations (see Fig. 3). The model required that immunizations must be improved by about 45%, followed by the number of ANC visits which required improvements of 38%. Outputs requiring the least level of improvement were inpatient days (20%), outpatient days (24%) and the number of laboratory tests (26%).

As per Table 6, further analysis was conducted to estimate expected improvement (i.e. by output and type of health facility) required for health facilities to operate at the frontier.

For PCH, immunization emerged as the output requiring the most improvement (50%), followed by deliveries and laboratory tests, requiring 34% and 26% improvement respectively, outpatient visits requiring 25% improvement and average inpatient days, requiring a 21% improvement to ensure that such facilities are placed on the efficiency frontier.

The results also suggest that SCH generally require lower levels of output improvement to be efficient. The number of laboratory tests emerged as the output needing the most improvement (15%). This was followed by the need for 9%, 6%, 5%, 3% and 3% improvements/increase in immunization, outpatient days, deliveries, ANC visits and inpatient days respectively.

The most significant improvement required for lower level health facilities came from the number of immunizations. The model require that lower level health facilities improve their number of immunizations by 46% from the current value of about 2600 to about 3800. The number of inpatient and outpatient days were also to be improved by 42% and 32%, respectively. Lower level health facilities were also required to improve ANC visits, deliveries and laboratory tests by 41%, 35% and 37% respectively. Analysis of output improvement for individual health facilities is provided in Table AP-1.3 in the Appendix.

Changes in efficiency scores of health facilities

As per Table 7, Overall TE improved significantly by 11.1% from 2019 to 2020 \(\left(t=4.14;p=0.00\right).\) The analysis shows that overall TE was generally impacted mainly by changes in SE. The average SE increased significantly by about 11.5% between 2019 and 2020 \((t=6.94;p=0.00)\). On contrary, there was no significant increase in PTE between 2019 and 2020 \((t=0.9,p=0.18)\). Table 8 further indicates an improvement in the number of efficient health facilities. For instance, purely technical efficient (i.e. PTE) and technically efficient (i.e. TE) health facilities increased by 15% and 11% respectively between 2019 and 2020. There was however no substantial change in the number of scale-efficient health facilities.

Table 8 summarises efficiency scores based on health facility type. The average TE score for PCH improved by about 18%, whereas their PTE scores improved by about 4%. SE scores for PCH also improved by 15%, from 0.77 in 2019 to 0.88 in 2020. Secondary Care Hospitals, on the other hand, witnessed a substantial increase in overall TE scores by about 35. This increase was largely contributed by the significant increase in their SE scores (from 0.68 in 2019 to 0.90 in 2020, representing about 32% increase). OLHF improved their TE scores by about 4% but had a marginal reduction in PTE scores by about 3%. SE scores for lower level health facilities also improved by about 7%, from 0.89 in 2019 to 0.95 in 2020.

Table 9 provides changes in returns to scale between 2019 to 2020. The results show that in 2019 about 48 health facilities, representing approximately 32%, were scale efficient, exhibiting constant returns to scale. In 2020, the sample’s proportion of scale-efficient health facilities increased by 23% to 59 health facilities. Conversely, health facilities exhibiting decreasing returns to scale reduced from 80 to 62 in 2020. For these two years, health facilities experiencing DRS formed the majority. Health facilities exhibiting IRS increased by about 30%, from 23 health facilities in 2019 to 30 in 2020.

As per Table 10, the number of PCH that showed CRS increased from 13 to 35 facilities. There was also a reduction in PCH experiencing DRS and IRS. The number of SCH experiencing complete scale efficiency (CRS) increased from 1 to 2 health facilities in 2020. However, the number of SCH experiencing DRS reduced by 2, and those exhibiting IRS increased from 0 to 1 in 2020. The number of OLHF exhibiting CRS increased from 34 to 35, whiles those exhibiting DRS reduced from 22 to 14 and IRS increased from 20 to 27.

Discussion

The study sought to examine the level and changes in efficiency from 2019 to 2020 as well as the determinants of variation in the efficiency of CHAG health facilities. The findings in general indicate that 59 health facilities (39%) out of a total of 151 in 2020 were efficient, with an average TE score of 0.73, compared to 48 health facilities (32%) with an average TE score of 0.67 that were efficient in 2019. The results and their implications for further research and policy are discussed below.

The results indicate that there was a net increase of 26% in inputs, driven mostly by a major increase (131%) in temporary non-clinical staff, and attrition of about 40% and 54% of temporary clinical staff and permanent non-clinical staff respectively. On the contrary, there was a net reduction of 34% in outputs, driven mainly by a reduction in in-patient days (37%), immunization (11%), outpatients visits and laboratory test of 9% respectively. The reason for replacing permanent non-clinical staff with temporal non-clinical staff is not directly apparent. It may well be that the permanent non-clinical staff had transitioned through the normal process (retirement, transfers etc.) and were replaced with temporal hands to help deal with the pressure arising from the COVID-19 pandemic in 2020. Notwithstanding the above explanation, it is important to emphasise that an attrition of 54% of permanent staff in a single year and the need to fill the gap with 131% increase in temporal staff of the same category may also reflect the poor nature of human resource practices and planning among the sampled health facilities. The net reduction in 2020 output may be attributed to the effect of the COVID-19 pandemic. There is evidence to suggest that the COVID-19 pandemic reduced inpatient days and OPD attendance in health facilities even though the situation improved later on [27, 28].

It is however significant to know that inspite of the COVID-19 pandemic, average TE score and sample of health facilities operating at the frontier improved from the 2019 level by 10 and 22 percentage points respectively. More importantly, the average TE score (0.74) and sample of health facilities operating at the frontier (39%) in 2020 constituted an improvement on earlier findings on mission health facilities (i.e. a 2014 paper based on 2005 data and using the same inputs/output), where the average TE score was 0.69 with 21.4% of the sample found to operate at the frontier [12]. The improvements may be related to internal efforts within CHAG and its health facilities to improve care delivery and for that matter efficiency. CHAG for example, has been collaborating with an NGO (PharmAccess) to implement strategic interventions like; Claim-IT, Med4All and recently SafeCare, to respectively improve insurance claims management, pharmaceutical supply chain and quality healthcare delivery [29,30,31]. The aggregate effect of these strategic interventions as well as local efforts may together be responsible for the improvement in average efficiency and the percentage of CHAG health facilities that were found to be efficient in 2020 compared to 2019 and 2014.

Although the overall average TE, PTE and SE scores were relatively high, it is important to point out that it is not the result of a higher number of health facilities being efficient but rather a few health facilities with very high efficiency scores. For instance, the proportion of the sampled health facilities that were efficient from a fully technical (31.8% in 2019 and 39.2% in 2020), pure technical (34.4% in 2019 and 44.4% in 2020) and scale (49% in both years) efficiency perspective were low. This implies that only a small number of health facilities were efficient. This will mean the need for health facility managers to work to improve the levels of efficiency in their health facilities. An option for health facility managers will be to improve outputs in line with the estimates for the different outputs at the different hierarchy of health facility as per the estimates in Table 7, especially for those health facilities that are experiencing increasing returns to scale. However, for most of these health facilities, increasing output may mean efforts to stimulate demand, which may depend on the size of the catchment population and ability of patients to pay especially in the absence of insurance. Given the current national health insurance coverage (54% in 2021) [32], which is likely to be lower among rural dwellers where CHAG health facilities operate, efforts to stimulate demand may end up being counterproductive, especially if the new users are not able to pay for their care.

In the absence of a bigger catchment population and higher health insurance coverage to contribute to the extra demand and also pay for services rendered, health facility managers and policy makers at CHAG can work together to consider the option of input redistribution as suggested by Jehu-Appiah et al. [12]. A typical case is PCH that experienced the lowest average efficiency score. For example, PCH exhibiting CRS had average clinical (153) and non-clinical [27] staff that were lower than average clinical (163) and non-clinical (49) staff for PCH exhibiting DRS. Even more importantly, PCH experiencing DRS had relatively smaller number of beds (88) compared to their counterparts experiencing CRS (106). This clearly indicate that PCH experiencing DRS are over staffed. Given that existing capacity will not support expansion in output in the short-term, redistribution of staff to under staffed PCH will be an option to pursue. In the current case, PCH experiencing IRS seem to be understaffed, with average clinical [20] and non-clinical [17] staff that is much lower than their CRS counterparts. Thus, PCH experiencing IRS could be candidates to receive excess staff from those experiencing DRS.The situation for OLHF seem not to be entirely different as the aggregate of clinical and non-clinical staff (41) for those experiencing CRS is lower than those experiencing DRS (64). In addition, the staff redistribution could also be from PCH to OLHF since PCH have a higher proportion of health facilities experiencing DRS wheras OLHF have a higher proportion of health facilities experiencing IRS.

It is important however, to emphasise that staff reallocation may not be easy to carry out especially if the direction of reallocation is from relatively bigger size and better resourced to smaller size health facilities located in resource-poor settings. There is vast literature on different cadre of health care staff refusing postings to resource-poor settings [33,34,35], resulting in over concentration of healthcare staff in well resourced health facilities in urban centres. Thus, without motivation and compelling incentives, a staff reallocation exercise is unlikely to succeed.

Consistent with the existing literature [11, 12], the findings equally indicate that bigger health facilities (SCH in this study) were more efficient than relatively smaller size health facilities (PCH and OLHF). In many LMICs such as Ghana, bigger hospitals are located in bigger towns and cities, making it possible for them to have access to different cadre of skilled health workforce [33,34,35] and thereby improving decision-making capacity. Additionally, their big size means that they can benefit from economies of scale in several areas of their operations. This suggest that bigger health facilities can take advantage of their decision-making capacity and size to be both technically and scale efficient compared to their relatively small counterparts.

Notwithstanding the uniqueness of the current study in terms of its access to a panel dataset of 151 facilities that covers both pre-and during COVID-19 periods, there are limitations that are worth noting. First, the study uses a sample of health facilities that are not homogeneous and therefore can result in variation in the quality of labour inputs. Also the health facilities were not adjusted for case-mix. This may have implications for changes either to inputs or whole units for purposes of improving efficiency. Finally, we acknowledge that output indcators such as Disability Adjusted-Life Expectancy (DALE) and Quality-Adjusted Life Years (QALY) better capture the objective function of health facilities [15]. However, given their unavailability, proxy indicators that have been used by prior studies [11,12,13,14,15] were used.

Conclusion

The findings of the study indicate that overall average efficiency in CHAG health facilities has improved over the past two decades. Additionally, the fact that CHAG health facilities still improved their level of efficiency even in the midst of COVID-19 suggest the extent to which they have built their levels of resilience to external shocks such as COVID-19 over the years. The results are also in line with existing literature that indicates that bigger health facilities are relatively more efficient than their smaller counterparts.

Nevertheless, it is crucial to emphasise the need for health facility managers and decision-makers at CHAG to examine the factors that promoted efficiency improvements in those health facilities that were efficient so that less efficient health facilities can learn from them to improve on their levels of efficiency. Added to this is also the need to improve health insurance coverage since that will be crucial in ensuring effective additional demand for health services and therefore improvement in output, especially for those health facilities experiencing IRS and will therefore need to improve their outputs. For those experiencing DRS, staff reallocation has been suggested. Thus, decision makers, both in health facilities and CHAG will need to collaborate and carefully plan any staff reallocation exercise in a manner that meets the needs and aspirations of the different stakeholders.Strengthening the human resources planning function will also be important in managing the movement of the different cadre of health staff to avoid adverse incidence such as the 54% attrition of permanent staff in 2020. This will be important in limiting opposition to the reallocation exercise and therefore ensure success.

Availability of data and materials

All data generated and analysed during this study are included in this manuscript.

Abbreviations

- GDP:

-

Gross Domestic Product

- UHC:

-

Universal Health Coverage

- LMICs:

-

Lower Middle Income Countries

- CHAG:

-

Christian Health Association of Ghana

- DMUs:

-

Decision Making Units

- DEA:

-

Data Envelopement Analysis

- CRS:

-

Constant Returns to Scale

- VRS:

-

Variable Returns to Scale

- PTE:

-

Pure Technical Efficiency

- SE:

-

Scale Efficiency

- DRS:

-

Decreasing Returns to Scale

- IRS:

-

Increasing returns to scale

- TE:

-

Technical Efficiency

- SE:

-

Scale Efficiency

- PTE:

-

Pure Technical Efficiency

- PCH:

-

Primary Care Hospitals

- SCH:

-

Secondary Care Hospitals

- OLHF:

-

Other Lower Level Health Facilities

- CHPS:

-

Compounds and Health Centres

- ANC:

-

Antenatal Care

References

Mbau R, Musiega A, Nyawira L, Tsofa B, Mulwa A, Molyneux S, et al. Analysing the efficiency of health systems: a systematic review of the literature. Appl Health Econ Health Policy. 2023;21(2):205–24.

Jakovljevic M, Timofeyev Y, Ekkert NV, Fedorova JV, Skvirskaya G, Bolevich S, et al. The impact of health expenditures on public health in BRICS nations. J Sport Heal Sci. 2019;8(6):516.

Corporation IF. The Business of Health in Africa: Partnering with the private sector to improve people’s lives. Washingt (District Columbia) Int Financ Corp. 2007.

Jakovljevic M, Getzen TE. Growth of global health spending share in low and middle income countries. Front Pharmacol. 2016;7:21.

Babalola TK, Moodley I. Assessing the efficiency of health-care facilities in Sub-Saharan Africa: a systematic review. Heal Serv Res Manag Epidemiol. 2020;7:2333392820919604.

Nassar H, Sakr H, Ezzat A, Fikry P. Technical efficiency of health-care systems in selected middle-income countries: an empirical investigation. Rev Econ Polit Sci. 2020;5(4):267–87.

World Health Organization. Technical efficiency of health systems in the WHO African Region. 2021.

Cylus J, Papanicolas I, Smith PC. A framework for thinking about health system efficiency. Heal Syst Effic. 2016;1:3.

Zere E, Mbeeli T, Shangula K, Mandlhate C, Mutirua K, Tjivambi B, et al. Technical efficiency of district hospitals: evidence from Namibia using data envelopment analysis. Cost Eff Resour Alloc. 2006;4(1):1–9.

Novignon J, Nonvignon J. Improving primary health care facility performance in Ghana: efficiency analysis and fiscal space implications. BMC Health Serv Res. 2017;17:1–8.

Akazili J, Adjuik M, Jehu-Appiah C, Zere E. Using data envelopment analysis to measure the extent of technical efficiency of public health centres in Ghana. BMC Int Health Hum Rights. 2008;8(1):11.

Jehu-Appiah C, Sekidde S, Adjuik M, Akazili J, Almeida SD, Nyonator F, et al. Ownership and technical efficiency of hospitals: evidence from Ghana using data envelopment analysis. Cost Eff Resour Alloc. 2014;12(1):9.

Zere E, Kirigia JM, Duale S, Akazili J. Inequities in maternal and child health outcomes and interventions in Ghana. BMC Public Health. 2012.

Akazili J, Adjuik M, Chatio S, Kanyomse E, Hodgson A, Aikins M, et al. What are the technical and allocative efficiencies of public health centres in Ghana? Ghana Med J. 2008;42(4):149.

Osei D, d’Almeida S, George MO, Kirigia JM, Mensah AO, Kainyu LH. Technical efficiency of public district hospitals and health centres in Ghana: a pilot study. Cost Eff Resour Alloc. 2005;3(1):1–13.

Christian Health Association of Ghanna. Annual Report 2021. Accra, Ghana; 2022.

Abekah-Nkrumah G, Ofori CG, Antwi M, Attachey AY, Wit TFR de, Janssens W, et al. Effect of the Implementation of Med4All Platform on Business Performance of Selected Health Facilities in Ghana: Baseline Report. Accra; 2023.

Verbeke F, Ndabaniwe E, Van Bastelaere S, Ly O, Nyssen M. Evaluating the impact of hospital information systems on the technical efficiency of 8 Central African hospitals using Data Envelopment Analysis. J Heal Inform Afr. 2013;1(1):11–20.

Verbeke F, Karara G, Nyssen M. Evaluating the impact of ICT-tools on health care delivery in sub-Saharan hospitals. Stud Health Technol Inform. 2013;192:520–3.

Lee J, McCullough JS, Town RJ. The impact of health information technology on hospital productivity. RAND J Econ. 2013;44(3):545–68.

Kohl S, Schoenfelder J, Fügener A, Brunner JO. The use of Data Envelopment Analysis (DEA) in healthcare with a focus on hospitals. Health Care Manag Sci. 2019;22(2):245–86.

Farrell MJ. The measurement of productive efficiency. J R Stat Soc Ser A. 1957;120(3):253–81.

Charnes A, Cooper WW, Rhodes E. Measuring the efficiency of decision making units. Eur J Oper Res. 1978;2(6):429–44.

Banker RD, Charnes A, Cooper WW. Some models for estimating technical and scale inefficiencies in data envelopment analysis. Manage Sci. 1984;30(9):1078–92.

Turkson C, Liu W, Acquaye A. A data envelopment analysis based evaluation of sustainable energy generation portfolio scenarios. Appl Energy. 2024;363: 123017.

Ramanathan R. An introduction to data envelopment analysis: a tool for performance measurement. Sage; 2003.

Abekah-Nkrumah G, Abor PA. Engagement of the private health sector in the delivery of Ghana’s COVID-19 Emergency Response. Congo Brazaville; 2022.

Abekah-Nkrumah G, Dumenu MY, Asante K, Armah-Attoh D, Dome MZ, Essima LO, et al. Impact Of Covid-19 on Government’s Reform Programmes in Ghana [Internet]. Accra; 2021. (Prepared by Ghana Center For Democratic Development (CDD-Ghana) and Published by Deutsche Gesellschaft für Internationale Zusammenarbeit (GIZ) GmbH). Available from: https://cddgh.org/wp-content/uploads/2021/12/CDD-GIZ_COVID-19-Studies-Ghana.pdf

Abekah-Nkrumah G, Antwi M, Attachey AY, Janssens W, Rinke de Wit TF. Readiness of Ghanaian health facilities to deploy a health insurance claims management software (CLAIM-it). PLoS ONE. 2022;17(10): e0275493.

CITI News Room. CHAG to make savings from drug procurement through CHAG–PharmAccess Med4All digital platform. 2022. Available from: https://citinewsroom.com/2022/03/chag-to-make-savings-from-drug-procurement-through-chag-pharmaccess-med4all-digital-platform/

Myjoyonline. NHIA commends CHAG, PharmAccess, and HeFRA for promoting quality healthcare in Ghana. 2023. Available from: http://myjoyonline.com/nhia-commends-chag-pharmaccess-and-hefra-for-promoting-quality-healthcare-in-ghana/

National health Insurance Authority (NHIA). NHIA clarifies issues raised by the Ranking Member on the Parliamentary Select Committee on Health | 5/11/2022. 2023. Available from: https://www.nhis.gov.gh/News/nhia-clarifies-issues-raised-by-the-ranking-member-on-the-parliamentary-select-committee-on-health-5391

Zihindulai G, MacGregor RG, Ross AJ. A rural scholarship model addressing the shortage of healthcare workers in rural areas. South Afr Heal Rev. 2018;2018(1):51–7.

Anyangwe SCE, Mtonga C. Inequities in the global health workforce: the greatest impediment to health in sub-Saharan Africa. Int J Environ Res Public Health. 2007;4(2):93–100.

Okyere E, Mwanri L, Ward P. Is task-shifting a solution to the health workers’ shortage in Northern Ghana? PLoS One. 2017;12(3): e0174631.

Acknowledgements

The authors acknowledge the contributions of health personnel in all the facilities where data for the paper were collected. Additionally, the authors acknowledge the contribution of Grace Akua Ogoe-Anderson of the CHAG secretariat in arranging for interviews with health facilities.

Funding

The article is based on a report funded by the PharmAccess Group Ghana. Pharm Access provided support in the form of consulting fees for the corresponding author (GAN), who was mainly responsible for the study design, data collection and analysis, report writing, decision to publish and preparation of the manuscript. Staff members of the funder who are co-authors of this manuscript provided inputs and review comments which are clearly articulated in the contribution section.

Author information

Authors and Affiliations

Contributions

We declare that we are all authors of this manuscript. GAN conceptualized and designed the study with inputs and comments from other authors (MA, AYA, TFRW, WJ, JD, CD GS). GAN conducted the literature review and was reviewed by TFRW and WJ. The study design and methods were carried out by GAN and CGO and reviewed by WJ. Data collection instruments were developed by GAN and reviewed with comments provided by all the remaining authors (CGO, MA, AYA, TFRW, WJ, JD, CD GS) to improve the instruments. Data collection was done by GAN, with cleaning and analysis done by GAN and CGO. The first draft of the manuscript was produced by GAN and reviewed by the remaining Authors to improve the manuscript. Final editing was done by GAN and all authors have approved the final version for submission.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Ethical approval was sought from the Ethics Review Board of the Christian Health Association of Ghana (CHAG), with ethical approval number: CHAG-IRB-10032020. Written informed consent to participate was obtained from all participants. All methods used in the study were carried out in accordance with relevant regulations and guidelines.

Consent for publication

Not applicable.

Competing interests

This paper was developed from a technical report that was funded by PharmAccess Ghana. Thus, authors who work for PharmAccess have a financial competing interest, in that they receive salaries as staff from PharmAccess. Notwithstanding that PharmAccess provided funding for the paper, study design; collection, analysis, and interpretation of data; writing of the paper; and decision to submit the paper for publication was mainly taken by the lead author (researcher) with contributions from co-authors outside of PharmAccess. The role of co-authors from PharmAccess as has been detailed out in the contribution section was providing comments into the study design; collection, analysis, and interpretation of data; writing of the paper to improve the quality of the paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: List of additional tables on efficiency computation

Appendix 2: Traditional and biased corrected efficiency estimates

See Tables 14, 15, 16, and 17.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abekah-Nkrumah, G., Ofori, C.G., Antwi, M. et al. Technical efficiency of ghanaian health facilities before and during the COVID-19 pandemic. Cost Eff Resour Alloc 22, 67 (2024). https://doi.org/10.1186/s12962-024-00575-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12962-024-00575-8