Abstract

We present a family of fifth-order iterative methods for finding multiple roots of nonlinear equations. Numerical examples are considered to check the validity of the theoretical results. It is shown that the new methods are competitive with other methods for finding multiple roots. Basins of attraction for the new methods and some existing methods to observe the dynamics in the complex plane are drawn.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1. INTRODUCTION

In this study, we consider the problem of finding the multiple root \(\alpha\) of multiplicity \(m\), i.e., \(f^{(j)}(\alpha)=0\), \(j=0, 1,2,\ldots, m-1\), and \(f^{m}(\alpha)\neq0\), of a nonlinear equation \(f(x)=0\), where \(f(x)\) is a smooth real or complex function of a single real or complex variable \(x\). An important and basic method for finding multiple roots is the modified Newton method [1] defined by

which converges quadratically and requires the knowledge of the multiplicity \(m\) of the root \(\alpha\).

For improvement of the local order of convergence of scheme (1), some higher-order methods with known multiplicity \(m\) have also been proposed and analyzed. Most of these are of third-order convergence and can be divided into two classes (see [2]). The first class, so called one-point methods, requires evaluation of \(f\), \(f'\), and \(f''\) in each step; examples are the methods by Biazar and Ghanbari [3], Chun and Neta [4], Chun et al. [5], Hansen and Patrick [6], Neta [7], Osada [8], Sharma and Bahl [9], and Sharma and Sharma [10]. The second class is that of multipoint methods, which can be further divided into two subclasses. One subclass uses two evaluations of \(f\) and one evaluation of \(f'\), like the methods developed by Chun et al. [5], Sharma and Sharma [10], Dong [11], Homeier [12], Kumar et al. [13], Li et al. [14], Neta [15], Victory and Neta [16], and Zhou et al. [17]. The other subclass uses one evaluation of \(f\) and two evaluations of \(f'\), like the methods by Kumar et al. [13], Li et al. [14], and Dong [18].

In the multipoint class, some fourth-order methods are also presented. For example, Li et al. [19] have developed six fourth-order methods; four of them use one evaluation of \(f\) and three evaluations of \(f'\), whereas two methods use one evaluation of \(f\) and two evaluations of \(f'\). Li et al. [20], Neta [21], and Neta and Johnson [22] have presented fourth-order methods that require one evaluation of \(f\) and three evaluations of \(f'\).

In this paper, we propose a fifth-order multipoint family of iterative methods for finding the multiple roots of nonlinear equations. In Section 2, the scheme is developed and its convergence properties are investigated. Some particular cases of the family are presented in Section 3. For verification of the theoretical results, some numerical examples are considered in Section 4. A comparison of the new methods with existing methods is also shown in this section. Section 5 considers the basins of attraction for the new methods and some existing methods. Concluding remarks are given in Section 6.

2. DEVELOPMENT OF THE SCHEME

Our aim is to develop an iterative method that increases the convergence order of the basic Newton method. Therefore, we consider Newton’s double scheme for multiple roots:

This is a modified Newton method, two steps of which are grouped together and treated as one step. Consequently, Newton’s double scheme has the fourth order of convergence.

It is clear that scheme (2) requires two evaluations of the function and two evaluations of the derivatives per iteration, and therefore the efficiency index (see [23]) of the scheme is equal to that of basic Newton’s method (1). This means that increase in the order of convergence does not lead to increase in the efficiency of the scheme. So, to improve the efficiency of the scheme by increasing its order, we modify scheme (2) as follows:

where \(u=\sqrt[m]{\frac{f(z_k)}{f(x_k)}}\) and \(H: \mathbb{C}\rightarrow \mathbb{C}\) is an analytic function about a neighborhood of zero.

Through the following theorem we analyze the behavior of scheme (3).

Theorem 1.Let \(f: \mathbb{C}\rightarrow \mathbb{C}\) be an analytic function in a region enclosing a multiple zero \(\alpha\) with multiplicity \(m\). Assume that the initial guess \(x_0\) is sufficiently close to \(\alpha\); then for \(H(0)=1\), \(H'(0)=0\), \(H''(0)=2\), and \(|H'''(0)|<\infty\), the order of convergence of the method defined by \((3)\) is five at least.

Proof. Let \(e_k=x_k-\alpha\). Expanding \(f(x_k)\) and \(f'(x_k)\) at \(\alpha\) into the Taylor series, we have

and

where \(C_j=\frac{m!}{(m+j)!}\frac{f^{(m+j)}(\alpha)}{f^{(m)}(\alpha)}\), and \(D_j=\frac{(m-1)!}{(m+j-1)!}\frac{f^{(m+j)}(\alpha)}{f^{(m)}(\alpha)}\), \(j=1,2,3,\ldots\,\).

Using Eqs. (4) and (5), we can write

Let \(e_{z_k}=z_k-\alpha\). Substituting (6) into the first step of Eq. (3), we get

The Taylor series of \(f(z_k)\) about \(\alpha\) yields

Moreover,

With the help of Eqs. (4) and (8) we get

where

Note that

and therefore, from (10) we have that

After using (11), we can write down the Taylor expansion of \(H(u)\) around zero as follows:

Substituting (8), (9), and (12) into the second step of (3), we get

where

Thus, for us to obtain an iterative method of fifth order, the coefficients of \(e_k^2\), \(e_k^3\), and \(e_k^4\) in error equation (13) should be zero. So, we have the following equations:

solving which gives

Substituting these values into (13), we get the final error equation as

This proves the fifth order of convergence. Hence, Theorem 1 is proved.

3. SOME PARTICULAR CASES

With conditions (14), many special cases of family (3) are possible. Below we present some simple special cases.

Case 1. Suppose \(H(x)=A+Bx+Cx^2\). Then \(H'(x)=B+2Cx\) and \(H''(x)=2C\). Using (14), we get

Thus we have the following new fifth-order method

We denote this method by \(\mbox{NMM}_{5,1}\).

Case 2. Suppose

Then

and

Using conditions (14), we obtain

Subcase 1. Taking \(A=1\), \(B=1\), and \(C=1\) and solving (15), we get \(D=1\), \(E=1\), and \(F=0\). With these values, scheme (3) generates the following fifth-order method:

Let us denote this method by \(\mbox{NMM}_{5,2}\).

Subcase 2. Taking \(A=1\), \(B=0\), and \(C=-1\) and solving (15), we get \(D=1\), \(E=0\), and \(F=-2\). After substitution of these values, the corresponding fifth-order method is given by

We denote this method by \(\mbox{NMM}_{5,3}\).

Remark 1. The computational efficiency of an iterative method is measured by the efficiency index \(EI=p^{1/C}\) (see [23]), where \(p\) is the order of convergence and \(C\) is the computational cost measured as the number of new pieces of information required by the method per iterative step. A ‘piece of information’ typically is any evaluation of the function \(f\) or one of its derivatives. The presented fifth-order methods require four evaluations of the function per iteration, so the efficiency index of the fifth-order methods is \(EI=\sqrt[4]{5}\approx 1.495\) and excels the efficiency indices of the modified Newton method \((EI=\sqrt2\approx 1.414)\), existing third-order methods \((EI=\sqrt[3]{3}\approx 1.442)\), and fourth-order methods (\(EI=\sqrt[4]{4}\approx 1.414\)) presented in [19, 22].

4. NUMERICAL RESULTS

We employ the presented methods \(\mbox{NMM}_{5,1}\), \(\mbox{NMM}_{5,2}\), and \(\mbox{NMM}_{5,3}\) to solve some nonlinear equations and compare their performance with some existing methods. For example, we consider the third-order methods by Dong [11], Neta [15], and Zhou, Chen, and Song [17] and the fourth-order methods by Li, Cheng, and Neta [19] and Li, Liao, and Cheng [20]. These methods are expressed as follows:

Dong Method \((\mbox{DM}_3)\):

Neta Method \((\mbox{NM}_3)\):

Zhou–Chen–Song Method \((\mbox{ZCSM}_3)\):

Li–Cheng–Neta Method \((\mbox{LCNM}_4)\):

where

and

Li–Liao–Cheng Method \((\mbox{LLCM}_4)\):

Table 1 displays the test functions used for the comparison, the multiplicity \(m\), and the root \(\alpha\) correct up to 16 decimal places. All the computations are performed using multiple-precision arithmetic in Mathematica[24]. The computer specifications are as follows: Intel (R) Core (TM) i5-2430M CPU @ 2.40 GHz (32-bit) Microsoft Windows 7 Ultimate 2009. To verify the theoretical order of convergence, we calculate the computational order of convergence \(p_c\) using the formula (see [25])

taking into consideration the last three approximations in the iterative process.

For every method, we record the number of iterations \(k\) needed for convergence to the solution for the stopping criterion

to be satisfied. Tables 2–5 display the numerical results, which include the error \(|x_{k+1}-x_k|\) of approximation for the first three iterations (where \(A(-h)\) denotes \(A \times 10^{-h}\)), the required number of iterations \(k\), the value of \(|f(x_k)|\), and the computational order of convergence \(p_c\), computed by formula (16). The numerical results show that the computational order of convergence is in accordance with the theoretical one. The higher order of convergence of the presented fifth-order methods makes them more accurate than the existing third- and fourth-order methods. We conclude the paper with the remark that many numerical applications require high precision in computations. The results of the numerical experiments show that the high-order efficient methods associated with a multiprecision arithmetic floating point are very useful because they yield a clear reduction in the iterations.

5. DYNAMICS OF METHODS

Study of the dynamical behavior of the rational function associated with an iterative method gives important information about the convergence and stability of the method (see for example [26, 27]). To start with, let us recall some basic dynamical concepts of rational function. Let \(\phi:\mathbb{R}\rightarrow\mathbb{R}\) be a rational function; the orbit of a point \(x_0\in\mathbb{R}\) is defined as the set

of successive images of \(x_0\) by the rational function.

The dynamical behavior of the orbit of a point of \(\mathbb{R}\) can be classified depending on its asymptotic behavior. In this way, a point \(x_0\in\mathbb{R}\) is a fixed point of \(\phi(\alpha)\) if \(\phi(\alpha)=\alpha\) is true for it. Moreover, \(x_0\) is called a periodic point of period \(p > 1\) if it is such that \(\phi^p (x_0) = x_0\) and \(\phi^k (x_0) \neq x_0\) for each \(k < p\). Moreover, a point \(x_0\) is called pre-periodic if it is not periodic but there exists \(k > 0\) such that \(\phi^ k(x_0)\) is periodic. There exist different types of fixed points depending on the associated multiplier \(|\phi'(x_0)|\). Depending on the associated multiplier, a fixed point \(x_0\) is called

\(\bullet\) attractor if \(|\phi'(x_0)|<1\),

\(\bullet\) superattractor if \(|\phi'(x_0)|=0\),

\(\bullet\) repulsor if \(|\phi'(x_0)|>1\), and

\(\bullet\) parabolic if \(|\phi'(x_0)|=1\).

If \(\alpha\) is an attracting fixed point of a rational function \(\phi\), its basin of attraction \(\mathcal{A}(\alpha)\) is defined as the set of pre-images of any order such that

The set of points whose orbits tend to an attracting fixed point \(\alpha\) is referred to as the Fatou set. Its complementary set, called the Julia set, is the closure of the set consisting of repelling fixed points, and establishes the borders between the basins of attraction. That means the basin of attraction of any fixed point belongs to the Fatou set and the boundaries of these basins of attraction belong to the Julia set.

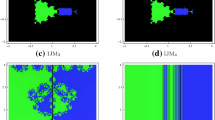

From the dynamical considerations, as the initial point we take \(z_0 \in D\), where \(D\) is a rectangular region in \(\mathbb{C}\) containing all the roots of \(f(z) = 0\). The numerical methods starting at a point \(z_0\) in a rectangle can converge to the zero of the function \(f(z)\) or eventually diverge. We consider the stopping criterion for convergence as \(10^{-3}\) up to a maximum of 25 iterations. If we have not obtained the desired tolerance in 25 iterations, we do not continue and we decide that the iterative method starting at \(z_0\) does not converge to any root. The strategy is as follows. A color is assigned to each starting point \(z_0\) in the basin of attraction of the zero. If the iteration starting from the initial point \(z_0\) converges, then it represents basins of attraction with the particular color assigned to it, and if it fails to converge in 25 iterations, then its color is black.

To study dynamical behavior, we analyze the basins of attraction of the methods on the following two polynomials:

Example 1. In the first example, we consider the polynomial

which has zeros \(\{1,-1\}\) with multiplicity 2. In this case, we used a grid of \(400 \times 400\) points in a rectangle \(D\in\mathbb{C}\) of size \([-2.5,2.5]\times [-2.5,2.5]\). Figure 1 shows the pictures obtained. If we observe the behavior of the methods, we see that the presented new fifth-order method \(\mbox{NMM}_{5,3}\) is more demanding as it possesses larger and brighter basins of attraction. Further, the methods \(\mbox{LCNM}_4\) and \(\mbox{LLCM}_4\) have higher number of divergent points as compared with the other methods.

Example 2. In the second example, let us take the polynomial

having zeros \(\{1, 0.309017\pm 0.951057 i,-0.809017 \pm 0.587785 i\}\). Here, to see the dynamics, we consider a rectangle \(D=[-1.5,1.5]\times [-1.5,1.5]\in\mathbb{C}\) with \(400 \times 400\) grid points. Figure 2 shows basins of attraction for this problem. It can be seen that the method \(\mbox{NMM}_{5,3}\) is the best and the method \(\mbox{LCNM}_4\) contains the highest number of divergent points. Among the rest methods, \(\mbox{LLCM}_4\) has stable basins of attraction.

6. CONCLUSIONS

In this paper, an attempt has been made to develop iterative methods for computing multiple roots. A two-point family of fifth-order methods has been derived in this way, which is based on a modified Newton method and requires two evaluations of \(f\) and two evaluations of \(f'\) per step. Some special cases of the family are presented. The efficiency index of the fifth- order methods is \(E=\sqrt[4]{5}\approx 1.495\), which is better than that of the modified Newton method \((E=\sqrt{2}\approx1.414)\), third- order methods \((E=\sqrt[3]{3} \approx 1.442)\), and fourth-order methods, which require the same number of function evaluations as the presented fifth-order methods. The algorithms are employed to solve some nonlinear equations and are also compared with existing techniques. The numerical results show that the presented algorithms are competitive with methods available in literature. Moreover, the presented basins of attraction have also confirmed better performance of the methods as compared with other established methods in literature. We conclude the paper with the remark that many numerical applications require high precision in computations. The results of the numerical experiments show that the high-order efficient methods associated with a multiprecision arithmetic floating point are very useful because they yield a clear reduction in the iterations.

ACKNOWLEDGMENTS

We are very thankful to the reviewers whose suggestions enabled us to improve the article.

REFERENCES

Schröder, E., Über unendlich viele Algorithmen zur Auflösung der Gleichungen, Math. Ann., 1870, vol. 2, pp. 317–365.

Traub, J.F., Iterative Methods for the Solution of Equations, Englewood Cliffs: Prentice-Hall, 1964.

Biazar, J. and Ghanbari, B., A New Third-Order Family of Nonlinear Solvers for Multiple Roots, Comput. Math. Appl., 2010, vol. 59, no. 10, pp. 3315–3319.

Chun, C. and Neta, B., A Third-Order Modification of Newton’s Method for Multiple Roots, Appl. Math. Comput., 2009, vol. 211, no. 2, pp. 474–479.

Chun, C., Bae, H.J., and Neta, B., New Families of Nonlinear Third-Order Solvers for Finding Multiple Roots, Comput. Math. Appl., 2009, vol. 57, no. 9, pp. 1574–1582.

Hansen, E. and Patrick, M., A Family of Root Finding Methods,Num. Math., 1976, vol. 27, pp. 257–269.

Neta, B., New Third Order Nonlinear Solvers for Multiple Roots,Appl. Math. Comput., 2008, vol. 202, pp. 162–170.

Osada, N., An Optimal Multiple Root-Finding Method of Order Three,J. Comput. Appl. Math., 1994, vol. 51, no. 1, pp. 131–133.

Sharma, R. and Bahl, A., General Family of Third Order Methods for Multiple Roots of Nonlinear Equations and Basin Attractors for Various Methods, Adv. Num. An., 2014, vol. 2014, Article ID 963878; https://doi.org/10.1155/2014/963878.

Sharma, J.R. and Sharma, R., Modified Chebyshev–Halley Type Method and Its Variants for Computing Multiple Roots, Num. Algor., 2012, vol. 61, pp. 567–578.

Dong, C., A Basic Theorem of Constructing an Iterative Formula of the Higher Order for Computing Multiple Roots of an Equation,Math. Num. Sinica, 1982, vol. 11, pp. 445–450.

Homeier, H.H.H., On Newton-Type Methods for Multiple Roots with Cubic Convergence, J. Comput. Appl. Math., 2009, vol. 231, no. 1, pp. 249–254.

Kumar, S., Kanwar, V., and Singh, S., On Some Modified Families of Multipoint Iterative Methods for Multiple Roots of Nonlinear Equations, Appl. Math. Comput., 2012, vol. 218, pp. 7382–7394.

Li, S., Li, H., and Cheng, L., Some Second-Derivative-Free Variants of Halley’s Method for Multiple Roots, Appl. Math. Comput., 2009, vol. 215, iss. 6, pp. 2192–2198.

Neta, B., New Third Order Nonlinear Solvers for Multiple Roots,Appl. Math. Comput., 2008, vol. 202, pp. 162–170.

Victory, H.D. and Neta, B., A Higher Order Method for Multiple Zeros of Nonlinear Functions, Int. J. Comput. Math., 1983, vol. 12, nos. 3/4, pp. 329–335.

Zhou, X., Chen, X., and Song, Y., Families of Third and Fourth Order Methods for Multiple Roots of Nonlinear Equations, Appl. Math. Comput., 2013, vol. 219, pp. 6030–6038.

Dong, C., A Family of Multipoint Iterative Functions for Finding Multiple Roots of Equations, Int. J. Comput. Math., 1987, vol. 21, nos. 3/4, pp. 363–367.

Li, S.G., Cheng, L.Z., and Neta, B., Some Fourth-Order Nonlinear Solvers with Closed Formulae for Multiple Roots, Comput. Math. Appl., 2010, vol. 59, no. 1, pp. 126–135.

Li, S.G., Liao, X., and Cheng, L.Z., A New Fourth-Order Iterative Method for Finding Multiple Roots of Nonlinear Equations,Appl. Math. Comput., 2009, vol. 215, pp. 1288–1292.

Neta, B., Extension of Murakami’s High-Order Non-Linear Solver to Multiple Roots, Int. J. Comput. Math., 2010, vol. 87, no. 5, pp. 1023–1031.

Neta, B. and Johnson, A.N., High-Order Nonlinear Solver for Multiple Roots, Comput. Math. Appl., 2008, vol. 55, no. 9, pp. 2012–2017.

Ostrowski, A.M., Solution of Equations and Systems of Equations, New York: Academic Press, 1966.

Wolfram, S., The Mathematica Book, 5th ed., Wolfram Media, 2003.

Jay, L.O., A Note on Q-Order of Convergence, BIT Num. Math., 2001, vol. 41, pp. 422–429.

Scott, M., Neta, B., and Chun, C., Basin Attractors for Various Methods, Appl. Math. Comput., 2011, vol. 218, pp. 2584–2599.

Magreñan, Á.A., A New Tool to Study Real Dynamics: The Convergence Plane, Appl. Math. Comput., 2014, vol. 248, pp. 215–224.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Translated from Sibirskii Zhurnal Vychislitel’noi Matematiki, 2021, Vol. 24, No. 2, pp. 213–227.https://doi.org/10.15372/SJNM20210207.

Rights and permissions

About this article

Cite this article

Sharma, J.R., Arora, H. A Family of Fifth-Order Iterative Methods for Finding Multiple Roots of Nonlinear Equations. Numer. Analys. Appl. 14, 186–199 (2021). https://doi.org/10.1134/S1995423921020075

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1134/S1995423921020075