Abstract

It is noted that the conventional interpretation of systematic errors (or, equivalently, the list of varieties of systematic errors) generally reflects the actual situation. This does not preclude additions and refinements as new types of measurements appear and existing ones become more sophisticated. These additions should not mean the abolition of the general, time-tested metrological classification. In recent decades, trends have appeared that replace the meanings of terms and definitions with meanings uncharacteristic of them, or that introduce into metrological practice terms with no real meaning at all. This, in turn, has led to erroneous concepts of ensuring the accuracy of the results of quantitative chemical analysis and to far from perfect regulatory documents, owing, among other reasons, to an uncritical attitude toward international standards. Such concepts introduce inaccuracies and erroneous recommendations into monographs and textbooks containing sections on the metrological aspects of chemical analysis.

Some general remarks. By the middle of the 20th century, a problem had appeared: experimental data obtained for identical samples under nominally identical conditions, but in different laboratories by different researchers, were frequently inconsistent. These included data on the composition of substances, on the values of physicochemical parameters characterizing numerous indicators (kinetic, thermodynamic, etc.) of processes, and on fundamental constants. The problem has remained relevant and is becoming increasingly important for three reasons: an exponentially growing number of substances is involved in research and practical applications, requirements for the performance of high-duty materials and for the quality of foods are increasing, and environmental standards are tightening. The problem is common to various measurements. It is aggravated by the difficulty or impossibility of ensuring sufficient control over a significant part of the experimental conditions, in other words, by many uncontrolled and difficult-to-control sources of errors. These sources ultimately shift an entire series of measurement results away from the true value of the measured quantity, thus forming an error that acts as a systematic error under stable experimental conditions. Systematic errors have increasingly become the predominant factor in the total error of measurement results, and this leads to the discrepancies noted in the experimental data.

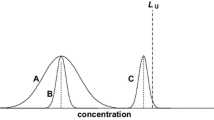

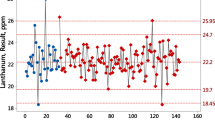

As for the data characterizing the results of quantitative chemical analysis, the problem of their validity (completeness of the exclusion of systematic errors) is common and relevant for various types of measurements, but here it becomes most acute, especially for low and trace concentrations. This is explained by three factors: the complexity of the processes on which chemical analysis, as a measurement process, is based; the numerous sources of errors; and the superposition of their effects [1]. As we move toward ever-lower analyte concentrations, the relative errors of the results of determinations increase, often reaching the order of the determined concentrations or even exceeding them (see, for example, [2–6]). Estimating such errors when validating the results of analysis is a challenging task. Accuracy control is even more complicated for low and trace concentrations because interfering factors that remain beyond control play a larger role. This significantly decreases the efficiency of the standard addition method and of interlaboratory experiments. The dominant role of systematic errors causes large discrepancies between the results of the laboratories participating in an interlaboratory experiment, and these discrepancies make it increasingly difficult to create certified reference materials (CRMs).

Varieties of systematic errors (components of the systematic error of a measurement result) were listed in Fundamentals of Metrology, a classic work by M.F. Malikov [7], and in educational literature [8, 9]. The classification of the causes of systematic errors in the results of quantitative analysis is multifaceted. Their sources are numerous, and they can often be either different or the same for different test samples, methods, and implementations of methods in analytical procedures. Sources of errors typical of quantitative chemical analysis were listed in a number of publications, for example, [1, 10, 11]. The sources with the greatest effect on the quantitative determination of low concentrations were systematized in [11]. A detailed list of systematic errors, specifying and supplementing the general metrological classification in relation to chemical analysis, was presented in [1]. However, this does not mean the abolition of the conventional classification. All the sources listed in it reflect the actual situation, which does not preclude additions and refinements where necessary as new types of measurements appear, existing ones become more complicated, and the range of tasks expands. The terms and definitions used in metrological practice (or newly developed ones) should not contradict the general, time-tested classification of errors. Nevertheless, trends have appeared in recent decades that replace the meanings of terms and definitions with meanings uncharacteristic of them, or that introduce into metrological practice terms with no actual meaning at all. This, in turn, has led to erroneous but persistent concepts in validating the accuracy of the results of analysis and in creating regulatory and technical documents. Careful adherence to such documents can lead to results that have nothing in common with reality.
This situation arises because many researchers lack well-defined ideas about the sources of systematic errors common to all kinds of measurements and about the many causes specific to chemical analysis. Erroneous concepts, confusion of concepts, and low-quality terminology in publications and normative documents can be traced back to a missing connection between two bodies of work. One is the essential work on the theory of measurements in general. The other is the work that developed the fundamentals of chemical metrology and also resolved many fundamental issues of quantitative analysis as a measurement process.

Below is our view of the current situation in interrelated directions.

Incorrect terms and definitions related to the problem of the accuracy of measurement results. Erroneous conclusions and recommendations. To characterize the current situation objectively, it is sufficient to confine ourselves to a few typical examples of terms, definitions, recommendations, and prescriptions that are fundamentally inconsistent with the basic provisions of metrology.

As our primary example, we consider the international standard ISO 5725-1:1994 (Russian version GOST R ISO 5725-1-2002) [12]. We restrict ourselves to this first part of the standard, which contains the main provisions and definitions.

Considering the terms recommended by the ISO standard, we first focus on the term “reproducibility standard deviation” (Clause 3.19, Section 3. Definitions).

The reproducibility of measurements characterizes the closeness of individual values in a series of measurement results obtained under different conditions. In other words, it is expressed by the spread of the results about the average of the series, i.e., by the standard deviation.

It would seem that everything is clear. However, a new term appeared that did not previously exist in the domestic regulatory and technical documents: the “standard deviation of reproducibility,” defined as “the standard (root-mean-square) deviation of the results of measurements (or tests) obtained under the conditions of reproducibility” (Clause 15). The name of a term must correspond to its definition; this needs no proof. If the term is interpreted from its wording alone, we arrive at an absurdity. According to the wording, reproducibility itself acts as a random variable, so a series of reproducibility values would be required to determine the “standard deviation of reproducibility.” This is so far from mathematical rigor that an analogy with the well-known “salty salt” suggests itself. The term “reproducibility condition” is defined as “the condition under which the results of measurements (or tests) are obtained by the same method on identical test samples in different laboratories by different operators using different equipment” (Clause 3.18, Section 3. Definitions). However, acceptable reproducibility can also be achieved when an interlaboratory experiment is performed using different methods (we emphasize, in the region of analytically accessible concentrations). This circumstance is taken into account in the Russian regulatory document GOST 16263-70 [13]. There, the reproducibility of measurements is defined as the quality of measurements reflecting the closeness of the results of measurements performed under different conditions (at different times, in different places, by different methods and means). As is known, different laboratories use different methods to determine the certified characteristics of certified reference materials. The very term “reproducibility conditions” does not seem appropriate from the standpoint of linguistic norms either.
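The following minimal Python sketch shows what the ISO quantity amounts to numerically. It computes repeatability, between-laboratory and reproducibility standard deviations from one set of interlaboratory results. It assumes a balanced design, in which every laboratory reports the same number n of replicates, and follows the one-way variance decomposition of ISO 5725-2, which is not discussed in this article. The function name `precision_estimates` and the data are hypothetical. The “reproducibility standard deviation” is obtained from a single data set as \(s_R^2 = s_L^2 + s_r^2\). It is therefore simply the spread of single results obtained in different laboratories.

```python
from statistics import mean, variance

def precision_estimates(results_by_lab):
    """Return (s_r, s_L, s_R) for a balanced interlaboratory data set.

    results_by_lab: list of lists, one inner list of n replicate results
    per laboratory (hypothetical helper, balanced design assumed).
    """
    n = len(results_by_lab[0])
    if any(len(r) != n for r in results_by_lab):
        raise ValueError("this sketch assumes the same number of replicates per lab")
    # Repeatability variance: pooled (averaged) within-laboratory variance.
    s_r2 = mean(variance(r) for r in results_by_lab)
    # Variance of the laboratory means.
    s_d2 = variance([mean(r) for r in results_by_lab])
    # Between-laboratory variance component, truncated at zero if negative.
    s_L2 = max(s_d2 - s_r2 / n, 0.0)
    # Reproducibility variance: between-lab plus within-lab components.
    s_R2 = s_L2 + s_r2
    return s_r2 ** 0.5, s_L2 ** 0.5, s_R2 ** 0.5

# Hypothetical duplicate results from three laboratories.
s_r, s_L, s_R = precision_estimates([[10.0, 10.2], [10.4, 10.6], [9.8, 10.0]])
```

Only one interlaboratory data set enters the calculation. No “series of reproducibilities” is involved.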

Examining the terms proposed by the ISO standard, it is evident that their names rarely reflect their meaning because of a mix-up of concepts. Thus, in Section 1. Scope (Clause 3.9), the term “laboratory bias” is named among the recommended terms. It is defined as the difference between the mathematical expectation of the measurement results obtained in a particular laboratory and the accepted reference value. If the certified value of the determined component in the standard sample is taken as the reference value, the certified value must be reproduced under the conditions of this laboratory to obtain this difference. In other words, the reference material should be analyzed as an unknown sample, and the average of the results of replicate determinations should then be found. However, such a difference is, by definition, nothing more than an estimate of the systematic error of the measurement result obtained in a given laboratory using a particular method for analyzing a particular sample. If we apply the concept of “laboratory systematic error” to a diverse set of samples, procedures, and concentrations, we end up with a multitude of “laboratory systematic errors.” This can only cause chaos, because one of the key notions of metrology, the systematic error of the measurement result, has been replaced with the term “laboratory bias.” Moreover, the factors causing systematic errors in a given laboratory can also act under the conditions of other laboratories. The processes that lead to errors in quantitative analysis are complex and inseparable, so it is not possible to isolate the errors exclusive to this laboratory from those common to other laboratories.
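The definition above can be sketched in a few lines of Python. The sketch assumes one laboratory and one method applied to two CRMs, with invented replicate results and certified values; the helper `laboratory_bias` and the labels `CRM-A` and `CRM-B` are hypothetical. Each sample yields its own value, so the “laboratory bias” is really a sample- and procedure-specific estimate of the systematic error of the result.

```python
from statistics import mean

def laboratory_bias(replicates, reference_value):
    """Hypothetical helper: mean of one laboratory's replicate results minus
    the accepted reference (e.g., certified) value."""
    return mean(replicates) - reference_value

# Invented replicate results of ONE laboratory, SAME method, two CRMs:
# each entry is (replicate results, certified value).
crm_runs = {
    "CRM-A": ([0.52, 0.55, 0.53], 0.50),
    "CRM-B": ([1.96, 1.94, 1.98], 2.00),
}
# One "laboratory bias" per sample: +0.033 for CRM-A, -0.04 for CRM-B.
biases = {name: laboratory_bias(x, ref) for name, (x, ref) in crm_runs.items()}
```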

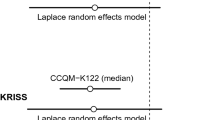

In Clause 3.10 and subsequent clauses, the term “bias of the measurement method” is used. It is defined as “the difference between the mathematical expectation of the results of measurements obtained in all laboratories using this method and the true (or, in its absence, the accepted reference) value of the measured characteristic,” that is, in practice, the reference value. (We assume that “all laboratories” means only those taking part in the interlaboratory experiment, not all the laboratories of the world. It would also be helpful to learn from the compilers of the standard when the “presence” of the true value, which always remains unknown, is ensured.) Let us consider whether this difference can be of cognitive and applied value. The analyst is interested in the final error of the result of analysis when this method is implemented in a particular laboratory, and in the error limits, for a given confidence probability, that can be relied on when implementing the procedure. Note 6 to Clause 3.10 states: “The systematic error of a measurement method is estimated by the deviation of the mean value of the results of measurements obtained from numerous different laboratories using the same method.” It is unclear from which value the mean deviates; apparently, from the reference value. The certified concentration in the standard sample is the most reliable means of controlling accuracy, so suppose it is taken as the reference value. To find the difference between the mathematical expectation and the reference value, the material of the standard sample must then undergo interlaboratory analysis as an unknown sample. Moreover, as the standard provides, the method should be the same for all laboratories. We then determine the difference between the overall average obtained and the certified concentration.
However, even when one and the same method is used, the results are still dominated by many uncontrolled sources of errors unrelated to the essence of this method. The results of laboratories remain inconsistent even when the same method is used [14]. The certified value of a standard sample is itself established as a generalized average of the data of an interlaboratory experiment, even if that experiment used different methods. Therefore, the difference between the overall average obtained and the certified value may be insignificant, which gives reason to doubt its cognitive and applied value.
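The following Python sketch illustrates this point with invented numbers. It assumes five laboratories using the same method on a CRM certified at 1.00 (arbitrary units). The helper `method_bias` is hypothetical and implements the ISO 5725-1 definition as the grand mean minus the reference value. The estimated method bias is essentially zero, yet individual laboratories deviate from the certified value by up to 0.20.

```python
from statistics import mean, stdev

def method_bias(lab_means, reference_value):
    """Hypothetical helper: estimate of the ISO 5725-1 'bias of the measurement
    method', i.e., the grand mean of the laboratory means minus the reference value."""
    return mean(lab_means) - reference_value

# Invented laboratory means (same method) for a CRM certified at 1.00.
lab_means = [0.80, 1.15, 0.95, 1.20, 0.90]
bias = method_bias(lab_means, 1.00)                # essentially zero
spread = stdev(lab_means)                          # between-laboratory spread, about 0.17
worst = max(abs(m - 1.00) for m in lab_means)      # largest single-laboratory deviation
```

A near-zero `bias` says little about the error a particular laboratory actually makes, which is what `worst` and `spread` reflect.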

Another term introduced by GOST R ISO 5725-1-2002, “laboratory component of bias,” is defined as “the difference between the laboratory bias and the measurement method bias.” Given the real components of this difference, such a term cannot be considered meaningful. In addition, introducing it into metrological usage gives us, as with “laboratory systematic error,” a set of “laboratory components of the systematic error.”
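The algebra behind this term can be checked with a minimal Python sketch. It assumes a balanced design with equal laboratory weights, invented laboratory means, and a hypothetical helper `bias_components`. The reference value cancels out, so the “laboratory component of bias” reduces to the deviation of a laboratory mean from the grand mean of the participants. With equal weights, these components always sum to zero.

```python
from statistics import mean

def bias_components(lab_means, reference_value):
    """Hypothetical helper returning (method_bias, laboratory_biases,
    laboratory_components) per the ISO 5725-1 definitions quoted in the text;
    equal laboratory weights are assumed."""
    grand_mean = mean(lab_means)
    method_bias = grand_mean - reference_value
    lab_biases = [m - reference_value for m in lab_means]
    # Laboratory component = laboratory bias - method bias. The reference value
    # cancels, leaving simply (laboratory mean - grand mean).
    lab_components = [b - method_bias for b in lab_biases]
    return method_bias, lab_biases, lab_components

# Invented laboratory means and reference value.
method_b, lab_b, lab_c = bias_components([0.80, 1.15, 0.95, 1.20, 0.90], 1.05)
```

Changing the reference value alters `method_b` and every entry of `lab_b`, but leaves `lab_c` unchanged.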

The very need for such an abundance of terms is also questionable.

The uncritical attitude toward international standards resulted in Russian and interstate regulatory documents that duplicate the shortcomings of the ISO standards, for example, RMG 61-2010 [15]. The set of terms and meanings recommended by this document coincides with the ISO terminology, so the conclusions drawn from the analysis of the ISO terms extend to these recommendations. We additionally note some inaccuracies. The term “assigned analysis accuracy” seems unhelpful, because it can be read as a deterministic value stable in time rather than as a probabilistic estimate. In analytical practice, it is customary, and not without reason, to analyze reference materials again after a certain period when implementing a procedure. This is done to control the accuracy of the results of analysis and to take measures to maintain it. It seems appropriate to indicate the accuracy that can be officially guaranteed (for example, by a standard for an analysis method) instead of the concept of a “fixed” (assigned) error, which allows ambiguous interpretation. Accuracy can be specified by the error limits of the result of analysis, estimated probabilistically.

The insufficient elaboration of the document is also shown by a renaming in the section “General Provisions.” The means for monitoring the accuracy of the result of analysis had always been named according to their metrological purpose. The document renames them “means for monitoring the indicator of the accuracy of the analysis procedure.”

Erroneous recommendations and other inaccuracies can also be found in monographs and textbooks. Below are just a few of the numerous examples that testify to the systemic rather than random nature of the confusion of concepts. Such a situation could hardly have developed without a connection to low-quality regulatory and technical documentation.

For example, in the section “Systematic Errors” of the fundamental textbook [16], the accuracy of analysis is defined as “a collective characteristic of a method or procedure, including their trueness and reproducibility.” However, the accuracy of the results of quantitative chemical analysis depends less on the features of a particular method or procedure than on the conditions under which the analysis procedure is implemented. Therefore, when discussing the accuracy of analysis, it is more correct to use the term “accuracy of the result of analysis (or of the measurement result) obtained using this procedure.” It was noted in the recommendations [17] that {… one cannot abstractly speak about the accuracy of a particular method “in general,” because different methods of analysis of a specific substance often give results of approximately equal accuracy; i.e., after the sources of gross errors are eliminated (‘the procedure has been worked out’ in the words of analysts), several corresponding methods become almost equally accurate.}

The same section of the textbook [16] contains the statement that “The main contribution to the total error is made by methodological errors due to the method of determination. The methodological error includes errors in sampling, converting the sample into a form convenient for analysis (dissolution, fusion, sintering), and errors in the operations of preconcentration and separation of components.”

However, this conclusion conflicts with both the generally accepted definition of the error of a method and the actual state of affairs. Metrology recognizes the method error as a component of the systematic measurement error caused by deficiencies of the method or of the model on which it is based [7, 8]. This definition essentially coincides with the one in GOST R ISO 5725-1-2002, where the method error is defined as “a component of the systematic measurement error due to the imperfection of implementing the accepted measurement principle.” Consequently, most components of the systematic error of the result of analysis do not fall under the definition of the systematic error of the method. Insufficient perfection of the method may be due to the incompleteness of the chemical reaction used in the method, incomplete precipitation, etc. Further in the same section, we read about these very causes: “Particular attention should be paid to the errors related to the chemical reaction used for detecting or determining a component. In gravimetry, such errors are caused by the small but noticeable solubility of the precipitate or by coprecipitation processes.” Such causes of errors can usually be pinpointed, and the error can be reduced to an acceptable level. Even so, large discrepancies can persist when the same method is used in an interlaboratory experiment. This confirms that the main contribution to the error of the result of analysis comes from many, mostly uncontrolled, sources rather than from the imperfection of the method. Sampling errors and errors in converting the sample into a form convenient for analysis (contamination, loss) contribute significantly to the overall systematic error along with other sources and are often common to different methods. They should be attributed to operational rather than methodological errors [1].
For the same reason, instrumental errors cannot be attributed to methodological ones either. We emphasize that the use of even an impeccable procedure in a particular laboratory is accompanied by the effect of numerous sources of errors and their superposition, which requires using such means of accuracy control as certified reference materials.

The study guide [18] includes the section “Systematic Error of the Procedure (Method) of Analysis” and proposes a mathematical model that essentially corresponds to the meaning of the term “systematic error of the analysis method” given by the ISO standard. We quote verbatim:

{The non-excluded systematic error of the analysis procedure can be judged from the results of the analysis of a standard sample (CRM) with a known analyte concentration, obtained in different laboratories using different portions of the same CRM. The systematic error of the CRM thus passes into the category of random ones. In this case, we can accept that

\[\theta = \bar{x}_{\text{meas}} - x_{\text{CRM}},\]

where θ is the total non-excluded systematic error, \(\bar{x}_{\text{meas}}\) is the analysis result for the CRM, and \(x_{\text{CRM}}\) is the analyte concentration in the CRM.}

This difference is actually the difference between two generalized average concentrations obtained in two interlaboratory experiments on an identical sample (see above). This conclusion follows because the certified characteristics of a standard sample are themselves determined as generalized averages of the results of an interlaboratory experiment. In view of the arguments given above for the term “systematic error of the method,” the cognitive value of such a difference seems rather doubtful. The considerable cost of actually repeating the interlaboratory experiment should also be taken into account.
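Both terms of θ are interlaboratory averages with their own uncertainties. A minimal Python sketch shows how easily such a difference turns out to be insignificant. It assumes a convention common in CRM-based trueness checks: compare |θ| with k times the combined standard uncertainty, with k = 2. Neither this rule nor the uncertainties are given in [18]. The helper `crm_difference` and all numbers are hypothetical.

```python
from math import sqrt

def crm_difference(mean_measured, u_measured, certified_value, u_certified, k=2.0):
    """Hypothetical helper: return theta = mean_measured - certified_value, its
    combined standard uncertainty, and whether |theta| exceeds k times it
    (assumed comparison rule, independent uncertainties assumed)."""
    theta = mean_measured - certified_value
    u_theta = sqrt(u_measured ** 2 + u_certified ** 2)
    return theta, u_theta, abs(theta) > k * u_theta

# Invented values: interlaboratory mean 1.02 (u = 0.03), certified 1.00 (u = 0.02).
theta, u_theta, significant = crm_difference(1.02, 0.03, 1.00, 0.02)
```

Here θ = 0.02 against k·u ≈ 0.072, so the difference is not significant even though it required a second interlaboratory experiment.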

Monograph [19] contains the following statement: “Modern research methods (in particular, mass spectrometry) make it possible to determine the relative atomic (molecular) mass with an accuracy that significantly exceeds practical needs.” The quote continues: “Hidden systematic errors are inherent in most methods used to analyze samples of complex composition. However, several methods are practically free from such errors (for example, isotope dilution mass spectrometry), but they are extremely expensive, applicable only to individual cases, and rarely used for routine analyses.”

However, it is known that systematic errors are inherent to all measurements without exception rather than to “most methods,” no matter where and by whom they are carried out. One can only talk about a negligibly small or, depending on the task, an admissible value of the error.

The conclusion that the most efficient methods of analysis measure atomic masses with an accuracy exceeding practical needs cannot be considered true. Significant interlaboratory discrepancies also occur with mass spectrometry methods, as confirmed, for example, by IAEA studies on the determination of trace levels of elements in fish samples [5]. Isotope dilution mass spectrometry, however, was not used in these studies. Even for the most efficient methods, no stage of chemical analysis can be completely controlled, which necessitates the use of certified reference materials. Unfortunately, we could not find objective information about the actual accuracy of results obtained specifically by isotope dilution mass spectrometry. Given the high efficiency of the method, such an assessment would be of interest. The monograph's claim that this method is more accurate than necessary is not persuasive, because it rests on the assumption that all stages of analysis can be completely controlled.

The same monograph uses the term “reliably recorded systematic error of the method.” This term probably arose from the introduction of the term “assigned error of the analysis procedure” (see, for example, [15]). As already noted, the accuracy that can be guaranteed in advance for a specific procedure can only be estimated probabilistically. Consequently, it is appropriate to use the concept of the accuracy that can be guaranteed (for example, by a standard for an analysis method), specifying the error limits.

Summarizing our review of the terms used in modern regulatory and technical documents and publications on the metrological aspects of chemical analysis, we found that these terms often conflict with those adopted in metrology or allow incorrect interpretation. Still, the need to adhere to precise terms should not mean abandoning concepts that are absent from regulatory documents, provided they clearly convey the essence of the matter and do not contradict the precise terms.

Erroneous interpretation of the problem of the adequacy of certified reference materials to test samples. One of the most important parts of the strategy for reference materials is to ensure their adequacy to the test samples. This field, too, is full of statements far removed from the principles of the practical use of certified reference materials. Let us dwell on a single example, published in the reputable Encyclopedia of Modern Natural Science [20]; we quote:

{From the point of view of practical application, certified reference materials of composition are divided into adequate and inadequate with respect to the sample. Adequate certified reference materials are as close as possible in chemical composition to the test sample. They are necessary when using analytical methods that react sharply to the presence of “third” elements, i.e. to all components of the sample, and not only to the elements being determined. These methods include, for example, X-ray fluorescence, spark source atomic emission analysis, solid-state mass spectrometry, etc. Neutron activation analysis, wet chemical and physicochemical methods, and inductively coupled plasma–atomic emission spectrometry do not usually require the use of adequate reference standards. This allows for producing fewer kinds of reference materials than the number of samples that require chemical analysis (steels, alloys, raw materials, environmental samples, etc.).}

However, the use of certified reference materials always requires their adequacy to the samples under study. The choice of a particular standard sample should be determined by how closely it matches the samples. If an acceptable level of adequacy cannot be achieved, those certified reference materials should not be used. Materials that do not adequately represent the samples being analyzed give only distorted information about the analyte concentration. In this case, other certified reference materials should be selected according to the analytical task. The quoted passage claims that activation analysis, wet chemical and physicochemical methods, and inductively coupled plasma–atomic emission spectrometry do not require adequate reference standards. This contradicts the conventional practice of chemical analysis, because a large share, if not the majority, of certified reference materials is used precisely in these methods. As for attempts to reduce the variety of certified reference materials, such reductions are not made possible by a mythical absence of the need to create reference materials adequate to the samples. If a standard sample (or a set of certified reference materials) can be used to analyze several varieties of a specific material (substance), it can be considered a group standard sample or a set of such samples [21]. Their applicability is determined by the principle and features of the method (methods) of sample analysis and by the requirements for the accuracy of its results [21]. Most types and sets of certified reference materials are developed and used precisely as group samples. Such certified reference materials are a valuable resource as long as the growing difference between them and the substances being studied does not lead to incorrect results [21].

Ratio of systematic and random errors in the results of quantitative chemical analysis. Surprisingly, recent textbooks and generalizing monographs do not reflect the dominant role of systematic errors or the growth of this role as the determined concentration decreases. Moreover, in [18], the dominant role is assigned to random rather than systematic errors: “… the need to find out the causes and to eliminate systematic errors does not always arise, but only where they are comparable with a random error and do not allow measurement with a given accuracy.” Thus, according to this text, the systematic error is smaller than the random one unless it becomes comparable to it. Yet the dominant role of systematic errors in many types of measurements, and especially in quantitative chemical analysis, has been repeatedly confirmed since the last quarter of the 19th century. Let us cite just one statement, from the monograph devoted to the spectral analysis of pure substances [22]: “Extensive experimental data indicate that interlaboratory errors, which can be considered a characteristic of systematic errors …, exceed random intralaboratory errors on average by a factor of 3–4, and sometimes by a factor of 10 or more; discrepancies are usually the greater, the lower the concentration of the element being determined.” This situation remains a fact today (see section “Some general remarks”). An assessment of the numerous data in publications and documents has revealed patterns in how interlaboratory errors vary with decreasing concentration, depending on the type of matrix and the method of quantitative analysis [1, 23]. It is precisely the dominance of systematic errors that necessitated the creation of such means of accuracy control as certified reference materials.
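The quoted ratios help explain why replicate determinations within one laboratory cannot compensate for a dominant systematic component. A minimal Python sketch shows this under a simple assumed model. A laboratory's mean of n replicates carries an independent between-laboratory component s_L and a within-laboratory component s_r. The helper `sd_of_lab_mean` and the ratio s_L/s_r = 3 are hypothetical illustrations.

```python
from math import sqrt

def sd_of_lab_mean(s_L, s_r, n):
    """Hypothetical helper: standard deviation, across laboratories, of one
    laboratory's mean of n replicates, assuming independent between-laboratory
    (s_L) and within-laboratory (s_r) components."""
    return sqrt(s_L ** 2 + s_r ** 2 / n)

# Assumed case: interlaboratory component 3 times the intralaboratory one.
s_r, s_L = 1.0, 3.0
for n in (1, 4, 16, 100):
    # Replication shrinks only the s_r**2 / n term; the spread tends to s_L, not 0.
    print(n, round(sd_of_lab_mean(s_L, s_r, n), 3))
```

Going from 1 to 100 replicates reduces the spread only from about 3.16 to about 3.00 in these units.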

Lack of attention to the limitations of the recommended methods for controlling accuracy. The essence, possibilities, and limitations of the known methods for controlling and ensuring accuracy (including the use of certified reference materials, the standard addition method, etc.) have been described in several sources. They were considered most fully in the monographs [1, 17, 21] and publications [23–26], including methods that can serve only to a limited extent as means of accuracy control in a particular laboratory. However, educational and methodological literature often recommends these methods without noting the need to consider the actual situations that limit their capabilities. Such methods are presented as “reference,” “exemplary,” “independent,” “trustworthy,” etc., on the assumption that using them improves the chances of obtaining accurate results. However, even the best methods, used in a particular laboratory, do not solve the problem of proving accuracy. The need for such proof is obvious given the effect of numerous sources of contamination and loss. Developing hundreds of reference, independent, trustworthy, etc. methods is no easy task if a method is understood as its implementation in a specific procedure [6].

The need for an unbiased approach to setting requirements for the accuracy of the result of analysis. Solutions for determining the required accuracy were found at one time for two types of tasks, which differ for objective reasons. These solutions rested on the principle that “… the most important characteristic of the quality of analytical control should not be accuracy taken in isolation but its reliability” [17]. If a generalized assessment is feasible, reliability can be determined from the relationship between several quantities: the accuracy of analysis, the width of the technological tolerance field of the controlled parameter, the actual distribution of this parameter, and sometimes other parameters. Given the projected reliability, this approach makes it possible to determine the required accuracy or, if the accuracy cannot be improved, to expand the tolerance field. In serial production, this is the basis for controlling industries in which chemical composition is a measure of quality. This problem is divided into several particular ones, for which solutions have been found under specific circumstances.
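The following Python sketch illustrates the kind of relationship described above between the tolerance field, the distribution of the controlled parameter, and the accuracy of analysis. It is a generic illustration, not the specific procedure of [17]. It assumes that the true value of the controlled parameter is normally distributed and that the analysis error is normal and unbiased. It then integrates numerically to obtain the probabilities of rejecting conforming items and accepting nonconforming ones. The helper `misclassification_risks`, its parameters, and the numbers are hypothetical.

```python
from statistics import NormalDist

def misclassification_risks(mu, sigma_p, sigma_m, lower, upper, steps=4000):
    """Hypothetical helper: return (false_reject, false_accept) probabilities
    for tolerance limits [lower, upper], assuming the true parameter value is
    N(mu, sigma_p) and the analysis error is unbiased N(0, sigma_m)."""
    product = NormalDist(mu, sigma_p)
    error = NormalDist(0.0, sigma_m) if sigma_m > 0 else None
    lo, hi = mu - 8 * sigma_p, mu + 8 * sigma_p  # integration range for true values
    dx = (hi - lo) / steps
    false_reject = false_accept = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * dx  # midpoint rule over true values
        if error is None:
            p_accept = 1.0 if lower <= x <= upper else 0.0
        else:
            # Probability that the measured value x + e falls inside the limits.
            p_accept = error.cdf(upper - x) - error.cdf(lower - x)
        weight = product.pdf(x) * dx
        if lower <= x <= upper:
            false_reject += weight * (1.0 - p_accept)  # conforming item rejected
        else:
            false_accept += weight * p_accept  # nonconforming item accepted
    return false_reject, false_accept

# Assumed example: tolerance field of +/-2 sigma_p around the mean.
for sigma_m in (0.1, 0.25, 0.5):
    print(sigma_m, misclassification_risks(0.0, 1.0, sigma_m, -2.0, 2.0))
```

Both risks grow with `sigma_m`. Under these assumptions, one could search for the largest analysis error that still keeps the risks at a projected level, or widen the limits if the accuracy cannot be improved.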

Solutions were also found for another class of problems, in which control is individual because the distribution law of the controlled parameter is unknown or unstable. The approaches that make it possible to determine permissible error values are based on establishing relationships between the accuracy of analytical control and the accuracy with which the concentration of the determined component is stated [1, 17], taking into account the concept of the minimum of distinction [27]. These solutions were at one time enshrined in regulatory documents but were later withdrawn for reasons that remain unknown.

The need to ensure reliable quality control of substances and materials also arises in the absence of a standardized value for the concentration of a component, for example, in the analytical control of products whose quality criterion is purity (reagents, pure metals, chemical and pharmaceutical preparations). Separate approaches have been developed for such products as well.

All of the above created the prerequisites for streamlining the system of standards for substances and materials and their test methods. Clearly, the work begun in this direction should be resumed and continued.

These solutions remain relevant and effective (contrary to the claims made in the introduction to the monograph [19]), but a detailed presentation of them is beyond the scope of this publication. They can be found in the primary sources (see, for example, [1, 17, 21]).

Let us consider, using one rather telling example, what the current regulatory and technical documentation offers. According to Clause 5.2.1 of the section “Development of Measurement Methods” of GOST R 8.563-2009 [28], “… requirements for measurement accuracy are established considering all components of the error (methodological, instrumental, introduced by the operator, introduced during sampling and sample preparation).” A special appendix lists the “typical components” of the measurement error. Given the limitations of element-by-element accounting for systematic errors (see, for example, [6, 7]), the approach recommended by the standard can lead to an unjustified expansion of the tolerance field for the concentration of the controlled component and, consequently, to unjustifiably relaxed accuracy requirements.

In view of what has been stated in this paper, we conclude that the current regulatory and technical documents related to quantitative chemical analysis, including those at the international level, should, at a minimum, be revised. Appropriate steps should be based on the fundamentals of metrology and on the recommendations mentioned above for the metrological improvement of the system of standards for substances and materials and of analysis methods.

Researchers and authors of educational and methodological literature should also be familiar with the basic concepts of metrology. Returning to the question of continuity, we also note the need to take into account solutions found in the past, which not only remain relevant but may be in even greater demand as the tasks facing chemical analysis grow more complex.

REFERENCES

1. Shaevich, A.B., Analiticheskaya sluzhba kak sistema (Analytical Service as a System), Moscow: Khimiya, 1981.

2. Dorner, W.G., Chem. Rdsch., 1984, no. 23, p. 25.

3. Department of Earth Sciences, The Open University, GeoPT 11: An International Proficiency Test for Analytical Geochemistry Laboratories, Report, July 11, 2001.

4. Magnusson, B. and Koch, M., in Reference Module in Earth Systems and Environmental Science: Quality of Drinking Water Analysis, 2013, p. 169.

5. IAEA Analytical Quality in Nuclear Applications Series no. 55: Worldwide Interlaboratory Comparison on the Determination of Trace Elements in a Fish Sample, IAEA-MESL-ILC-TE-BIOTA-2017, Vienna: Int. At. Energy Agency, 2018.

6. Shaevich, R.B., Meas. Tech., 2016, vol. 59, no. 9, p. 1017.

7. Malikov, M.F., Osnovy metrologii (Fundamentals of Metrology), part 1: Uchenie ob izmerenii (The Concept of Measurement), Moscow, 1949.

8. Burdun, G.D. and Markov, B.N., Osnovy metrologii (Fundamentals of Metrology), Moscow: Izd. Standartov, 1972.

9. Tyurin, N.I., Vvedenie v metrologiyu (Introduction to Metrology), Moscow: Izd. Standartov, 1973.

10. Prokof’ev, V.K., Fotograficheskie metody kolichestvennogo spektral’nogo analiza metallov i splavov (Photographic Methods for Quantitative Spectral Analysis of Metals and Alloys), part 2, Moscow: Gos. Izd. Tekh.-Teor. Lit., 1951.

11. Korenman, I.M., Analiticheskaya khimiya malykh kontsentratsii (Analytical Chemistry at Low Concentrations), Moscow: Khimiya, 1966.

12. ISO 5725-1:1994: Accuracy (Trueness and Precision) of Measurement Methods and Results, Part 1: General Principles and Definitions.

13. GOST (State Standard) 16263-70: State System of Ensuring the Uniformity of Measurements. Metrology. Terms and Definitions, Moscow: Izd. Standartov, 1986.

14. Kambulatov, N.N., Genshaft, S.A., Nalimov, V.V., and Pines, V.G., Zavod. Lab., 1954, vol. 20, no. 3, p. 374.

15. RMG 61-2010: State System for Ensuring the Uniformity of Measurements. Recommendations for Interstate Standardization. Indicators of Accuracy, Correctness, Precision of Methods of Quantitative Chemical Analysis. Assessment Methods, Moscow: Standartinform, 2013.

16. Osnovy analiticheskoi khimii (Fundamentals of Analytical Chemistry), Zolotov, Yu.A., Ed., Moscow: Akademiya, 2012.

17. Shaevich, A.B., Izmerenie i normirovanie khimicheskogo sostava veshchestv (Measurement and Regulation of the Chemical Composition of Substances), Moscow: Izd. Standartov, 1971.

18. Rodinkov, O.V., Bokach, N.A., and Bulatov, A.V., Osnovy metrologii fiziko-khimicheskikh izmerenii i khimicheskogo analiza. Uchebno-metodicheskoe posobie (Fundamentals of Metrology of Physical and Chemical Measurements and Chemical Analysis: Textbook), St. Petersburg: VVM, 2010.

19. Dvorkin, V.I., Metrologiya i obespechenie kachestva khimicheskogo analiza (Metrology and Quality Assurance of Chemical Analysis), Moscow: Tekhnosfera, 2020.

20. Karpov, Yu.A., in Entsiklopediya “Sovremennoe estestvoznanie” (Encyclopedia “Modern Natural Science”), vol. 6: Obshchaya khimiya (General Chemistry), Moscow: Magistr, 2000, p. 265.

21. Shaevich, A.B., Standartnye obraztsy dlya analiticheskikh tselei (Standard Samples for Analytical Purposes), Moscow: Khimiya, 1987.

22. Spektral’nyi analiz chistykh veshchestv (Spectral Analysis of Pure Substances), Zil’bershtein, Kh.I., Ed., Leningrad: Khimiya, 1971.

23. Shaevich, A.B., Crit. Rev. Anal. Chem., 1985, vol. 15, no. 3, p. 361.

24. Shaevich, A.B., Zavod. Lab., 1987, vol. 53, no. 6, p. 4.

25. Shaevich, A.B., Zh. Anal. Khim., 1989, no. 335, p. 9.

26. Kaplan, B.Ya., Karpov, Yu.A., and Filimonov, L.N., in Problemy analiticheskoi khimii (Problems of Analytical Chemistry), Moscow: Nauka, 1987, vol. 7, p. 41.

27. Borel, É., Probabilité et certitude, Paris: Presses Univ. de France, 1950.

28. GOST (State Standard) R 8.563-2009: State System for Ensuring the Uniformity of Measurements. Procedures of Measurements, Moscow: Standartinform, 2010.

Ethics declarations

The authors declare that they have no conflicts of interest.

Additional information

Translated by O. Zhukova

Cite this article

Shaevich, R.B., Budnikov, H.C., Gromov, I.Y. et al. Systematic Errors in the Results of Quantitative Chemical Analysis: from Incorrect Terms to Erroneous Concepts. J Anal Chem 78, 1097–1104 (2023). https://doi.org/10.1134/S1061934823060102
