Abstract

We consider the problem of maximization of an integral functional on the space of increasing functions, which is motivated by economic concerns for tax mechanisms optimization. An analytical description of the optimal value is obtained, as well as an approximation method for finding the solution.

1. Introduction

Optimization of taxation mechanisms has become a subject of pure mathematical research only comparatively recently. This is natural, because such systems contain a very large number of parameters, especially when considered in detail. In order to overcome this complexity, researchers usually introduce a simple model of an economic environment (we will describe it later) and address the issue within it. For example, the authors of the recent papers [1] and [2] use dynamical methods for analyzing tax function properties. After making some assumptions, it is possible to find an analytical solution to the optimization problem in that simplified environment, which is the main result of the present paper.

Several modifications of the optimization problem have been explored in many papers in mathematical economics; we only mention the celebrated paper [3] and the survey [4] by the Nobel Prize winner Mirrlees and also the papers [5] and [6]. The exact problem considered in this paper has been motivated by the papers [1] and [2] and our discussions with A. Tsyvinski. This problem is concerned with the maximization of an integral functional on the space of increasing functions subject to an additional nonlinear constraint, which generates quite singular objects. This is why a rigorous mathematical analysis of the problem leads to interesting questions in the theory of functions, in contrast to most works in applied economics, where all functions are assumed to be differentiable and to have zero derivatives at all extremum points. It should also be noted that this problem differs from the classical problems of optimal control (see, e.g., [7], [8]).

2. Statement of the Problem

Assume that every economic agent is an object of a certain class completely characterized by its productivity type \(\theta\in\Theta\subset\mathbb R_+\). Each agent’s activity is characterized by labor effort \(l>0\). As a result, we have the agent’s individual income \(u=\theta l\). This income is liable to tax \(T(u)\), which does not exceed the income and does not decrease.

We also introduce the notion of the costs incurred by an agent exerting effort \(l\); it is described by an increasing function \(f(l)\). In this paper, we make a change of variables and consider the problem for variables \(u\) and \(\theta\) instead of \(\theta\) and \(l\), which transforms \(f(l)\) into \(f(u,\theta)\). We impose the following constraints on the functions mentioned above:

- \(f(u,\theta)\) is a nonnegative continuous function defined for \(u\ge 0\) and \(\theta>0\) (or for \(\theta\) belonging to some interval \([\theta_{\min},\theta_{\max}]\subset(0,+\infty)\));

- \(f(0,\theta)=0\) for every \(\theta\); the function \(f(u,\theta)\) increases in \(u\);

- the derivative \(\partial_\theta f(u,\theta)\) exists and is continuous in \(\theta\) and is decreasing in \(u\); there exists a derivative \(\partial_uf(u,\theta)>0\);

- \(T\colon[0,+\infty)\to[0,+\infty)\) is a continuous increasing function, \(T(0)=0\), and the function given by \(u\mapsto u-T(u)\) is increasing.

We denote the class of such functions by \(\mathscr T\).

Under these conditions, \(\partial_\theta f(u,\theta)\le\partial_\theta f(0,\theta)=0\) for all \(u\) and \(\theta\); so \(f(u,\theta)\) is decreasing in \(\theta\).

Suppose also that, for every fixed \(\theta>0\) and all \(u\) large enough, we have

A typical example is the function \(f(u,\theta)=u^2/(2\theta^2)\). For this function, the equality \(\partial_\theta f(u,\theta)=-u^2/\theta^3\) holds.

The class \(\mathscr T\) can be described as the set of all increasing \(1\)-Lipschitz functions vanishing at zero. Indeed, since both \(T(u)\) and \(u-T(u)\) are increasing, for \(u_1\le u_2\) we obtain

$$0\le T(u_2)-T(u_1)\le u_2-u_1.$$

Note that \(T(u)\le u\). For example, the class \(\mathscr T\) contains all linear functions \(ku\) with \(0\le k\le 1\).
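The description of \(\mathscr T\) as the increasing \(1\)-Lipschitz functions vanishing at zero can be probed numerically. The following is a minimal sketch, not a proof: it only tests increments on a finite grid, and the helper name `in_class_T` and the three sample tax functions are hypothetical illustrations.

```python
import math

def in_class_T(T, u_max=10.0, n=2000, tol=1e-12):
    """Grid check of T(0) = 0 and 0 <= T(b) - T(a) <= b - a
    for neighbouring grid points a < b in [0, u_max]."""
    if abs(T(0.0)) > tol:
        return False
    h = u_max / n
    for i in range(n):
        a, b = i * h, (i + 1) * h
        inc = T(b) - T(a)
        # inc < 0 means T decreases; inc > b - a means u - T(u) decreases.
        if inc < -tol or inc > (b - a) + tol:
            return False
    return True

def linear_half(u):
    # Linear tax k*u with k = 1/2, which lies in the class.
    return 0.5 * u

def too_steep(u):
    # Slope 2 > 1, so u - T(u) is decreasing and T is not in the class.
    return 2.0 * u

def log_tax(u):
    # T'(u) = u / (1 + u) takes values in [0, 1), so T is in the class.
    return u - math.log1p(u)

print(in_class_T(linear_half), in_class_T(too_steep), in_class_T(log_tax))
```

Checking neighbouring grid points is enough for all grid pairs, because the increments add up along the grid.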

For a given type \(\theta\) and a fixed tax function \(T\), we consider the quantity

$$\max_{u\ge 0}\bigl(u-T(u)-f(u,\theta)\bigr), \tag{2.1}$$

which corresponds to the maximal net income of the agent after paying taxes and other costs. It has the meaning of the optimal effort of an agent of the given type in the given tax system. Let \(u_T(\theta)\) denote the minimal point of maximum for (2.1). Such a point exists due to the constraints imposed on \(f\) and \(T\). We set \(u_T(0)=0\).

Suppose that we are given a Borel probability measure \(P=p\,dx\) on \((0,+\infty)\). We will assume that either the density \(p\) is positive on \((0,+\infty)\) or the measure \(P\) is concentrated on a bounded interval and the density \(p\) is positive on this interval. We set

We will assume that there is a \(P\)-integrable locally bounded function \(R>0\) for which \(u-f(u,\theta)\le 0\) for all \(u\ge R(\theta)\). Then \(u_T(\theta)\le R(\theta)\), so the estimate \(T(u_T(\theta))\le u_T(\theta)\le R(\theta)\) implies that the functions \(T(u_T(\theta))\) and \(u_T(\theta)\) are integrable with respect to \(P\).

Then we define the government revenue

$$\int_0^{+\infty}T(u_T(\theta))\,P(d\theta),$$

where we adhere to a scenario assuming that each agent exerts optimal effort and strives for increasing net income.

We study the problem of finding the value

$$J(f,P)=\sup_{T\in\mathscr T}\int_0^{+\infty}T(u_T(\theta))\,P(d\theta),$$

that is, of maximizing the collected tax.

Here it is of interest to find both the maximal value and the maximizing mapping \(T\). Our main results give a constructive answer to the first question for a broad class of functions \(f\), and for more special functions, we manage to obtain some information about the optimal mapping \(T\).

We observe that it follows from what has been said above that \(J(f,P)\le\|R\|_{L^1(P)}\).

Note that, although we have to maximize the integral of the composition of \(T\), simply increasing this function \(T\) may decrease the integral, because the point of maximum \(u_T\) may become smaller. For example, when taxation is excessively high, the actually collected tax may be zero.
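This effect can be seen in a small numerical sketch. It assumes the typical cost function \(f(u,\theta)=u^2/(2\theta^2)\), the uniform distribution \(P\) on \([0,1]\), and linear taxes \(T(u)=ku\); the function names `optimal_income` and `revenue` are hypothetical. The agent's choice is found by a grid search on \([0,2\theta^2]\), since \(u-f(u,\theta)\le 0\) beyond that point.

```python
def optimal_income(k, theta, steps=400):
    """Smallest grid maximizer of u - k*u - u^2 / (2 theta^2) on [0, 2 theta^2]."""
    u_max = 2.0 * theta ** 2
    best_u, best_val = 0.0, 0.0          # u = 0 always gives net income 0
    for i in range(1, steps + 1):
        u = u_max * i / steps
        val = (1.0 - k) * u - u ** 2 / (2.0 * theta ** 2)
        if val > best_val:               # strict: keep the smallest maximizer
            best_u, best_val = u, val
    return best_u

def revenue(k, n_theta=200, steps=400):
    """Midpoint rule in theta for the collected tax k * u_T(theta), P uniform on [0, 1]."""
    total = 0.0
    for j in range(n_theta):
        theta = (j + 0.5) / n_theta
        total += k * optimal_income(k, theta, steps)
    return total / n_theta

for k in (0.25, 0.5, 0.75, 1.0):
    print(k, revenue(k))
```

Under these assumptions the agent's choice is \(u_T(\theta)=(1-k)\theta^2\), so the revenue is \(k(1-k)\) times the second moment of \(p\): it grows up to \(k=1/2\), then falls, and vanishes at \(k=1\).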

3. Main Results

Let \(\mathscr U\) denote the set of all increasing left continuous functions \(u\colon[0,+\infty)\to[0,+\infty)\) such that \(u(0)=0\) and, for all \(\theta>0\), the inequality

holds. For every function \(u\in\mathscr U\), we define the right inverse function by the formula

The function \(v\) also increases and is left continuous. In addition, \(u(v(s))=s\) at the points in the range of \(u\), since, for such points, \(v(s)\) is the maximal point in \(u^{-1}(s)\).

The main result of the paper is the following theorem.

Theorem 1.

The following equalities hold:

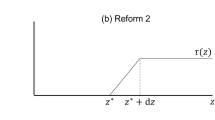

where the supremum can be taken over increasing infinitely differentiable functions \(u\) with \(u'>0\). Approximations for the supremum in \(T\) can be obtained by means of mappings of the form

where \(u\in\mathscr U\) is an infinitely differentiable function and \(v\) is the inverse function for \(u\).

Proof.

Given a fixed mapping \(T\in\mathscr T\), we denote the function \(u_T(\,\cdot\,)\) simply by \(u(\,\cdot\,)\) for brevity. Let us show that the function \(\theta\mapsto u(\theta)\) possesses the following properties:

(1) \(u\in \mathscr U\);

(2) whenever \(\theta>0\) and \(0\le u<u(\theta)\), the inequality

$$u-f(u,\theta)<u(\theta)-f(u(\theta),\theta)$$

holds.

We first verify that the function \(\theta\mapsto u(\theta)\) increases. Let \(\theta_1<\theta_2\). Then

$$u-T(u)-f(u,\theta_1)<u(\theta_1)-T(u(\theta_1))-f(u(\theta_1),\theta_1)$$

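whenever \(u<u(\theta_1)\), because \(u(\theta_1)\) is the minimal point of maximum. In addition, \(f(u,\theta_1)-f(u,\theta_2)\) increases in \(u\) if \(\theta_1<\theta_2\) by the condition that the function \(-\partial_\theta f(u,\theta)\) increases in \(u\). Adding the two estimates, we obtain

$$u-T(u)-f(u,\theta_2)<u(\theta_1)-T(u(\theta_1))-f(u(\theta_1),\theta_2)$$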

for all \(u<u(\theta_1)\), i.e., \(u(\theta_2)\ge u(\theta_1)\).

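We now verify that this function is left continuous. Suppose that \(\lim_{\theta\to\theta_0-0}u(\theta)=u_0<u(\theta_0)\) for some \(\theta_0>0\). For every \(\theta<\theta_0\) we have

$$u(\theta)-T(u(\theta))-f(u(\theta),\theta)\ge u(\theta_0)-T(u(\theta_0))-f(u(\theta_0),\theta),$$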

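since \(u(\theta)\) is a point of maximum of (2.1). Passing to the limit as \(\theta\) tends to \(\theta_0\) from the left and using the continuity of \(T\) and \(f\), we obtain

$$u_0-T(u_0)-f(u_0,\theta_0)\ge u(\theta_0)-T(u(\theta_0))-f(u(\theta_0),\theta_0).$$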

This means that, actually, this is an equality, since \(u(\theta_0)\) is a point of maximum. Hence \(u_0\) is also a point of maximum, which contradicts the fact that \(u(\theta_0)\) is the minimal point of maximum of (2.1). Thus, the function \(u(\,\cdot\,)\) is left continuous. By definition, \(0\le u_T\le R(\theta)\) and \(u_T(0)=0\).

Finally, we show that property (2) is fulfilled. Let \(\theta>0\) and \(0\le u<u(\theta)\). Then

$$u-T(u)-f(u,\theta)<u(\theta)-T(u(\theta))-f(u(\theta),\theta),$$

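because \(u(\theta)\) is the minimal point of maximum of (2.1). It follows that

$$u-f(u,\theta)<u(\theta)-f(u(\theta),\theta)-\bigl(T(u(\theta))-T(u)\bigr)\le u(\theta)-f(u(\theta),\theta),$$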

since \(T\) is increasing. Thus, property (2) is established. It obviously yields the estimate \(\partial_u f(u(\theta),\theta)\le 1\), which completes the proof of the inclusion \(u\in\mathscr U\).

Since \(\max_{u\ge 0}(u-T(u)-f(u,\theta))\ge 0\), we have

Therefore, we have

Let us verify that the function \(r\) is increasing. Let \(\theta_1<\theta_2\). Then \(u(\theta_1)\le u(\theta_2)\) and

because \(u(\theta_2)\) is a point of maximum of (2.1). It follows that

In particular, \(r(\theta_2)\ge r(\theta_1)\), since \(f(u(\theta_1),\theta_1)\ge f(u(\theta_1),\theta_2)\).

However, we have proved even more, namely, the estimate

Let us show that this estimate implies the inequality

in the sense of distributions (see, e.g., [9]), where \(Dr\) does not denote the pointwise derivative, but stands for the measure that is the generalized derivative of the function \(r\) (this measure is finite on all intervals \([0,N]\)). We first observe that the nonnegative increasing function \(r\) has a finite limit \(r_0=\lim_{\theta\to 0+}r(\theta)\). In addition, the increasing function \(r\) has the usual derivative \(r'\) almost everywhere, and \(r'\) is integrable on all intervals \([0,N]\), since \(r_0\) is finite. It follows from this and the estimate \(r'(\theta)\ge -\partial_\theta f(u(\theta),\theta)\ge 0\) that the function \(\partial_\theta f(u(\theta),\theta)\) is integrable in \(\theta\) on all intervals \([0,N]\). We observe that the function \(\partial_\theta f(u,\theta)\) with fixed \(u\) may fail to be integrable in \(\theta\) up to zero: take \(f(u,\theta)=u^2/\theta^2\).

From the estimate proved above for the difference quotient we obtain

Hence, for every smooth function \(g\ge 0\) with support in \((0,+\infty)\), we have the inequality

Making a change of variable, we can write the left-hand side in the form

as \(n\to\infty\). On the right-hand side of the indicated inequality, under the integral sign, we have a nonnegative function whose limit as \(n\to\infty\) equals \(-g(\theta)\,\partial_\theta f(u(\theta),\theta)\). By Fatou’s theorem,

where the right-hand side coincides with the action of the generalized function (distribution) \(Dr\) on \(g\), i.e., equals the integral of \(g\) against the measure \(Dr\).

Thus, the desired inequality in the sense of distributions is established. This inequality, along with the integrability of the function \(\theta\mapsto\partial_\theta f(u(\theta),\theta)\) on all intervals \([0,N]\), implies the inequality

Thus, we have

Therefore, the left-hand side of (3.1) is not greater than the right-hand side. Note that the conditions \(f\ge 0\), \(\partial_\theta f\le 0\), and \(T\ge 0\) yield that, on the right-hand side of the last estimate, every term does not exceed \(u(\theta)\) in absolute value.

We now fix a function \(u\in\mathscr U\). Let us take the sequence of infinitely differentiable functions \(u_n\ge 0\) on \([0,+\infty)\) defined by the formula

where \(\varrho\) is an infinitely differentiable probability density vanishing on \((-\infty,0]\) and positive on \((0,+\infty)\). We observe that

In addition, the values \(u_n(\varphi)\) increase to \(u(\varphi)\) for all \(\varphi\ge 0\). We can assume that \(u\) is not identically zero. Then there exists an interval \([0,a]\) on which the nonnegative measure \(Du\) is not zero. Let \(a_0\) be the infimum of such \(a\). It is seen from the previous formula that \(u_n'(t)>0\) for all \(t>a_0\). If \(a_0>0\), then \(u(\theta)=0\) for all \(\theta\le a_0\) and \(u(\theta)>0\) for all \(\theta>a_0\). Then the functions \(v_n=u_n^{-1}\) exist and are smooth, and \(v_n(0)=a_0\). Let us now consider the smooth functions

We observe that

since \(v_n(s)\ge v(s)\) in view of the estimate \(u_n\le u\), which ensures the inequality

Since \(\partial_u f\ge 0\), we also have \(T_n'(s)\le 1\). In addition, \(T_n(0)=0\), because, for any \(\tau\le a_0\), we have \(u_n(\tau)=0\), whence \(\partial_\theta f(u_n(\tau),\tau)=0\). Thus, \(T_n\in\mathscr T\).

Let us show that, for the mapping \(T_n\), the function \(u_n\) is exactly \(u_{T_n}\). Indeed,

The derivative of this expression equals

By our condition on \(\partial_u f\), the equality to zero is only possible for \(\theta=v_n(u)\), that is, for \(u=u_n(\theta)\).

Let us verify that the integrals of the functions \(T_n(u_n(\theta))\) against the measure \(P\) tend to the integral of \(T(u(\theta))\). We have

Since \(u_n(\theta)\) increases to \(u(\theta)\), the convergence of the integrals holds for the first two terms. The nonpositive functions \(\partial_\theta f(u_n(\tau),\tau)\) decrease to \(\partial_\theta f(u(\tau),\tau)\), so, for every \(\theta\), their integrals over the interval \([0,\theta]\) decrease to the integral of \(\partial_\theta f(u(\tau),\tau)\). Since \(f\ge 0\), \(\partial_\theta f\le 0\), and \(T_n\ge 0\), we have the estimates

Taking into account the integrability of \(u(\theta)\) with respect to \(P\), we obtain the desired convergence of the integrals with respect to the measure \(P\).

4. Conclusions

So far, we have reduced one supremum over a class of functions to another supremum over another class of functions, but without evaluating intermediate maxima, which simplifies the problem, although it does not enable us to find the solution explicitly. However, we now introduce a number of conditions on \(f\) and \(p\), which lead to further simplifications.

For every \(\theta>0\) (or for every \(\theta\) from a given interval on which the measure \(P\) is concentrated), we consider the quantity

Due to our conditions on \(f\), this maximum exists. Suppose that there is a function \(u(\,\cdot\,)\in\mathscr U\) for which this maximum is attained at some point \(u(\theta)\) for every \(\theta>0\). Then, obviously,

If this function \(u(\,\cdot\,)\) is continuously differentiable and has positive derivative, then, as in the proof of the theorem, by means of its inverse \(v\), we can construct a function \(T\in\mathscr T\), for which \(u=u_T\), so \(T\) gives a maximum in our problem. However, one can hardly evaluate \(T\) in nontrivial examples.

Let us consider the special case

$$f(u,\theta)=\frac{u^2}{2\theta^2}.$$

In this case, \(M(\theta)\) is attained at the point

If this function is continuous and increasing, then a straightforward verification shows that it belongs to the class \(\mathscr U\). Therefore,

For a differentiable function \(p\) we arrive at the condition

This condition is fulfilled for the uniform distributions on intervals (and, more generally, for distributions on an interval with nondecreasing densities), for the standard Gaussian measure, for the exponential distribution, and for the Cauchy distribution.

With the aid of the explicitly found function \(u\), it is possible in principle to find the corresponding functions \(v\) and \(T\), but one should not expect to have simple explicit formulas for them. For example, if \(P\) is the uniform distribution in \([0,1]\), then \(p=1\) in \([0,1]\), \(F(\theta)=2-2\theta\), and \(u(\theta)=\theta^3/(2-\theta)\). In this case, \(v=v(s)\) is found from the cubic equation

$$v^3+sv-2s=0.$$

Note that if we take only the linear functions \(T(u)=ku\) for \(f(u,\theta)=u^2/(2\theta^2)\), then \(u_T(\theta)=(1-k)\theta^2\), so the maximum is attained for \(k=1/2\) and equals

$$\frac14\int_0^{+\infty}\theta^2p(\theta)\,d\theta.$$

This quantity is less than the exact maximum indicated above. On the other hand, it follows from the Cauchy inequality that we can obtain more than

$$\frac12\int_0^{+\infty}\theta^2p(\theta)\,d\theta$$

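for no \(T\). Indeed,

$$u\le\frac{u^2}{2\theta^2}+\frac{\theta^2}{2},$$

whence

$$T(u_T(\theta))\le u_T(\theta)-f(u_T(\theta),\theta)\le\frac{\theta^2}{2}.$$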

Thus, the obtained value is between one quarter and one half of the second moment of \(p\).
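For the uniform distribution on \([0,1]\) these bounds can be checked numerically. The sketch below takes \(u(\theta)=\theta^3/(2-\theta)\) from the text and builds a tax function from it under an assumption: we read the construction in the proof of the theorem as giving \(T(0)=0\) and \(T'(s)=1-\partial_u f(s,v(s))\), with \(\partial_u f(u,\theta)=u/\theta^2\). All names in the code are hypothetical, and incomes are searched only on \([0,1]\), the range of \(u\) on the support of \(P\).

```python
import math

def u_opt(theta):
    # Candidate optimal income from the text for P uniform on [0, 1].
    return theta ** 3 / (2.0 - theta)

def v_inv(s):
    # Inverse of u_opt on [0, 1] by bisection (u_opt is increasing, u_opt(1) = 1).
    lo, hi = 0.0, 1.0
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if u_opt(mid) < s:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

N = 2000
grid = [i / N for i in range(N + 1)]           # income grid on [0, 1]

def marginal_tax(s):
    # Assumed form T'(s) = 1 - s / v(s)^2; the value at s = 0 is the limit 1.
    if s == 0.0:
        return 1.0
    return 1.0 - s / v_inv(s) ** 2

rates = [marginal_tax(s) for s in grid]
T = [0.0]
for i in range(N):                              # cumulative trapezoid rule for T
    T.append(T[-1] + 0.5 * (rates[i] + rates[i + 1]) / N)

def chosen_index(theta):
    # Smallest grid maximizer of u - T(u) - u^2 / (2 theta^2).
    best_i, best_val = 0, 0.0
    for i in range(1, N + 1):
        val = grid[i] - T[i] - grid[i] ** 2 / (2.0 * theta ** 2)
        if val > best_val:
            best_i, best_val = i, val
    return best_i

M = 100
thetas = [(j + 0.5) / M for j in range(M)]      # midpoint rule in theta
picks = [chosen_index(th) for th in thetas]
max_gap = max(abs(grid[i] - u_opt(th)) for i, th in zip(picks, thetas))
revenue = sum(T[i] for i in picks) / M
second_moment = 1.0 / 3.0                       # second moment of the uniform density
print(max_gap, revenue, second_moment / 4, second_moment / 2)
```

In this setting the agents' grid choices should agree with \(u(\theta)\) up to the grid step, and the collected tax should come out near \(4\ln 2-8/3\approx 0.106\), which lies between \(1/12\) and \(1/6\).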

Let us consider a more general example. Suppose, in addition, that

If the measure \(P\) is concentrated on an interval, then, under these conditions, \(\theta\) is taken from the corresponding interval. We set

Let us show that if \(G'(\theta)\le 1\) for all \(\theta>0\), then there is a unique point \(u(\theta)\) at which \(M(\theta)\) is attained, and \(M(\theta)\) increases in \(\theta\).

It follows from the indicated conditions that the function

is strictly decreasing (since it has negative derivative). Hence it can have only one zero. Let \(\theta_1<\theta_2\). Then

for all \(u<u(\theta_1)\), because \(u(\theta_1)\) is a point of maximum. Let us show that

increases in \(u\); it will follow from this that

for all \(u<u(\theta_1)\), that is, \(u(\theta_2)\ge u(\theta_1)\). The increase of this function in \(u\) follows from the decrease in \(u\) of the function

The condition \(G'\le 1\) can be written in the form

It is fulfilled for any increasing density on an interval (but is not fulfilled for the typical decreasing densities on the half-line).

Remark.

We can take a sequence of functions \(T_n\in\mathscr T\), for which the integrals of \(T_n(u_{T_n}(\theta))\) with respect to the measure \(P\) increase to \(J(f,P)\). Since these functions are \(1\)-Lipschitz and \(T_n(0)=0\), we can pick a subsequence uniformly converging on bounded intervals to some function \(T\in\mathscr T\). The increasing functions \(u_{T_n}\) are estimated by the function \(R\); hence we can pass to a further subsequence (numbered by the same indices) that converges pointwise to some increasing function \(u\). The function \(u(\theta)-T(u(\theta))-f(u(\theta),\theta)\) is the pointwise limit of the functions \(u_{T_n}(\theta)-T_n(u_{T_n}(\theta))-f(u_{T_n}(\theta),\theta)\). Indeed, it follows from the uniform Lipschitz continuity of the functions \(T_n\) that \(T_n(x_n)\to T(x)\) as \(x_n\to x\). This implies that \(u(\theta)\) is a point of maximum of the function \(x-T(x)-f(x,\theta)\). However, it is not clear whether this point of maximum is minimal. If this is true, then the limit mapping \(T\) gives the maximum of the function under consideration.

References

[1] S. Steinerberger and A. Tsyvinski, Tax Mechanisms and Gradient Flows, arXiv: 1904.13276 (2019).

[2] D. Sachs, A. Tsyvinski, and N. Werquin, “Nonlinear tax incidence and optimal taxation in general equilibrium,” Econometrica 88 (2), 469–493 (2020).

[3] J. A. Mirrlees, “An exploration in the theory of optimum income taxation,” Rev. Econom. Stud. 38 (2), 175–208 (1971).

[4] J. A. Mirrlees, “The theory of optimal taxation,” in Handbooks in Econom. III, Vol. 1: Handbook of Mathematical Economics (North-Holland, Amsterdam, 1986), pp. 1197–1249.

[5] M. F. Hellwig, “Incentive problems with unidimensional hidden characteristics: a unified approach,” Econometrica 78 (4), 1201–1237 (2010).

[6] E. Saez, “Using elasticities to derive optimal income tax rates,” Rev. Econom. Stud. 68, 205–229 (2001).

[7] V. M. Alekseev, V. M. Tikhomirov, and S. V. Fomin, Optimal Control (Consultants Bureau, New York, 1987).

[8] S. M. Aseev and V. M. Veliov, “Another view of the maximum principle for infinite-horizon optimal control problems in economics,” Russian Math. Surveys 74 (6), 963–1011 (2019).

[9] A. N. Kolmogorov and S. V. Fomin, Introductory Real Analysis, Transl. from the 2nd Russian ed. (Dover, New York, 1975).

Acknowledgments

The authors thank A. Tsyvinski for useful discussions.

Funding

This work was supported by the Russian Science Foundation under grant 17-11-01058 at Moscow State University.

Bogachev, T.V., Popova, S.N. On Optimization of Tax Functions. Math Notes 109, 163–170 (2021). https://doi.org/10.1134/S000143462101020X