Abstract

The US government creates astonishingly complete records of policy creation in executive agencies. In this article, we describe the major kinds of data that have proven useful to scholars studying interest group behavior and influence in bureaucratic politics, how to obtain them, and challenges that we as users have encountered in working with these data. We discuss established databases such as regulations.gov, which contains comments on draft agency rules, and newer sources of data, such as ex-parte meeting logs, which describe the interest groups and individual lobbyists that bureaucrats are meeting face-to-face about proposed policies. One challenge is that much of these data are not machine-readable. We argue that scholars should invest in several projects to make these datasets machine-readable and to link them to each other as well as to other databases.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

If the US federal government is unquestionably good at one thing, it is pushing out paper. In theory, governmental records relevant to the creation of policy via agency rulemaking have long been available to researchers. Given its volume and quasi-legislative nature, rulemaking is generally required to be more transparent than more individualized decision-making such as adjudication, enforcement, awarding grants, or making contracts. In practice, however, obtaining information on what is happening during rulemaking has been costly and challenging. In the 1990s and early 2000s, leading research was limited to data on just a few rules (e.g., Golden 1998) or surveys (e.g., Furlong 2004). Since 1994, when the government first released the Federal Register online, data on notice-and-comment rulemaking have become increasingly detailed and now include data on draft policies, the activities of policymakers, and interest group advocacy (see Yackee 2019 for a recent review).

In this article, we describe data sources that have proven useful to scholars studying interest group lobbying of federal agencies, how to obtain them, and also challenges in working with these data. Some of these data sources we discuss are machine-readable records of all agency rules published in the Federal Register, comments posted on regulations.gov, metadata about rules contained in the Unified Agenda, and Office of Management and Budget (OMB) reports. We also describe sources that have become more available in recent years, such as ex-parte meeting logs and individually identified personnel records of nearly all federal employees since 1973. For background theory on lobbying and interest group influence over agency policymaking, see Carpenter and Moss (2013).

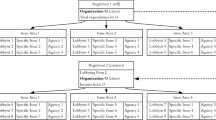

These data sources do not exhaust the kinds of records relevant to researchers. They reflect what is available at present, but new data sources emerge constantly. Thus, we also highlight datasets that may soon become more accessible or available, perhaps after enterprising researchers submit the necessary Freedom Of Information Act (FOIA) requests. Examples include agency press releases (see, e.g., Libgober 2019; Libgober and Carpenter 2018) and the Foreign Agents Registration Act (FARA) reports (Shepherd and You 2019).Footnote 1 To orient potential students of agency policymaking to available sources of data, we identify four units of analysis: (1) participants, (2) policymakers, (3) policy texts, and (4) metadata (e.g., policy timing). We describe where to find data on each in roughly the order that they appear in the process of developing a rule.

Background: rulemaking and the Administrative Procedures Act

For decades, the volume of legal requirements emerging from executive agencies has dwarfed the lawmaking activity of Congress. Each year, agencies publish three thousand or more regulations. In doing so, agencies must generally follow a process prescribed by the Administrative Procedures Act (APA), 5 U.S.C. §553(c). For each rule, agencies create a collection of documents at each stage of their policymaking process. Section 553 requires agencies to issue a Notice of Proposed Rulemaking (NPRM) in the Federal Register to notify potentially affected parties that the regulatory environment or program administration may change. Following the NPRM, agencies post these proposed rules and comments from interested stakeholders and individuals on their websites or regulations.gov. Agencies are required to consider public comments but are not required to alter rules based on them. These documents are organized in a ‘docket’ folder with a unique name and ID number. Once kept in literal folders in each agency’s ‘docket room,’ where visitors could make photocopies, these documents are now online. For example, the Centers for Medicare & Medicaid Services rulemaking docket on health plans disclosing costs can be accessed at https://www.regulations.gov/docket?D=CMS-2019-0163. The fact that the APA requires agencies to assemble a comprehensive record of who sought to influence the policymaking process—and that such records are relatively complete—is unusual for federal policymaking. This makes agency policymaking particularly exciting for interest group scholars.

Who participates in the rulemaking process?

Public attention in rulemaking is remarkably skewed. A few rules, such as the Federal Communication Commission’s rules on net neutrality, receive millions of comments. In contrast, half of the proposed rules open for comment on https://www.regulations.gov/ received no comments at all (Libgober 2020). Such dramatic variation in public participation challenges the idea of a “typical” rule or rulemaking participant. Participants include businesses, public interest groups, trade associations, unions, law firms, and academics (Cuéllar 2005; Yackee and Yackee 2006). While businesses are the most consistent and influential participants in most rulemakings (Yackee and Yackee 2006; Libgober 2020), most comments come from public pressure campaigns targeting a relatively small number of rules; at least 39 million of the 48 million comments on proposed rules on regulations.gov were mobilized by just 100 advocacy organizations such as the Sierra Club (Judge-Lord 2019).

Because interest groups seek to influence rules, scholars are interested in patterns of participation in rulemaking. For example, You (2017) finds that half of all spending on lobbying legislation occurs after a bill becomes law. Participation in this process can begin even before a proposed rule is issued. de Figureido and Kim (2004) show that meetings between agency officials and firms spike before an agency issues a policy. Libgober (2019) finds that firms that meet with federal regulators before a rule is issued may receive abnormally high stock market returns upon its release. Leveraging high-frequency trading data, Libgober shows that in the minutes and hours following the publication of proposed rules at the Federal Reserve, the firms that met with the Board during rule-development significantly outperformed matched market competitors that did not obtain such early access. These findings are consistent with the analysis of qualitative researchers that commenters who participate early in the rulemaking process can shape the content of the rule (Naughton et al. 2009).

Scholars are also interested in how participation in rulemaking may affect a rule’s ultimate fate when it is sent to the White House Office of Management and Budget or in judicial review. Interest groups can secure policy concessions by lobbying during OMB review (Haeder and Yackee 2015). They also use the rulemaking record to build a case for litigation. Yet, there are few large-N studies linking lobbying in rulemaking to litigation or court decisions. Libgober and Rashin (2018) analyzed comments submitted to financial regulators and found that threats of litigation were rare, and even comments that seemed to “threaten” litigation rarely culminated in a judicial decision. Yet, most court decisions involving challenges to rules did follow comments that threatened litigation. Judge-Lord (2016) found no relationship between the number of comments and the likelihood that the Supreme Court upheld or struck down an agency rule.

To analyze patterns of participation, scholars use data from a variety of sources. Sources for data on participation are agency rulemaking dockets (Golden 1998; Yackee 2006; Young et al. 2017; Ban and You 2019), the Federal Register (Balla 1998; West 2004), and regulations.gov (Balla et al. 2019; Gordon and Rashin nd). Though commenters are not generally required to disclose their names and affiliations, many do. The best current data sources for obtaining the names of the organizations that submit comments on federal regulations are regulations.gov and the websites of the independent agencies themselves.

Obtaining and working with data on comment participants

Scholars wishing to obtain data on comments and commenters from executive agencies generally use the website regulations.gov. Obtaining these data requires overcoming several technical and bureaucratic hurdles. First, retrieving bulk data from regulations.gov requires the use of an Application Programming Interface (API).Footnote 2 Second, getting an accurate count of comments is not straightforward as agencies have different policies regarding duplicated commentsFootnote 3 and confidential business information (Lubbers 2012).

Third, downloading these data can be time-consuming. As of February 29, 2020, Regulations.gov has 12,227,522 public submission documents representing over 70 million public comments, over 80,000 rules, and nearly 1.5 million other documents. Regulations.gov is subject to a rate limit of 1000 queries per hour, making it difficult for scholars seeking to analyze rules with tens of thousands of comments. This limit is particularly problematic for rules where the majority of comments are attachments, as downloading an individual document requires calling the API twice (once for the docket information, which includes the attachment URL(s), and then a second time to download the linked file). Fourth, not all federal agencies post rulemaking documents to regulations.gov. For these agencies, scholars studying participation in rulemaking can often obtain data from the agency’s website.Footnote 4

After obtaining comment data, scholars must choose how they want to preprocess the names of the organizations that comment. This step is vital as organizations such as Goldman Sachs and the American Bar Association, for example, submit comments using multiple versions of their names. These decisions can have a substantial impact on the analysis as misidentifying organizations may result in them being dropped or double-counted. Fuzzy matching, using an algorithm to identify the similarity (or “distance”) between two strings of text, can help but is not a panacea. For example, the algorithm will show a small distance between Goldman Sachs and Goldman Sachs & Co but can show similarly small distances between distinct entities and it cannot separate multiple organizations with the same acronym (e.g., the American Bankers’ Association and American Bar Association both comment as the ABA).

Who writes rules?

Political scientists have long used personal identity and social networks to understand political outcomes. In the last decade, the emergence of new data sources made it easier to learn about rulewriters’ identities and networks. Some of the most important data sources in this regard are the US Office of Personnel Management’s (OPM) data on government employees (e.g., Bolton et al. 2019),Footnote 5 Open Secrets’ lobbying (e.g., Baumgartner et al. 2009) and revolving door databases (e.g., i Vidal et al. 2012; Bertrand et al. 2014), machine-readable lobbying disclosure act reports (e.g., Boehmke et al. 2013; You 2017; Dwidar 2019), meeting logs (Libgober 2019), and datasets of corporate board membership such as Boardex (e.g., Shive and Forster 2016). Carrigan and Mills (2019) illustrate the possibilities of these new data sources. They find that the number of job functions of the bureaucrats who write the rules is associated with both decreases in the time an agency takes to promulgate a rule and increases in the probability that the rule will be struck down in court. In an innovative study of unionization, Chen and Johnson (2015) link OPM data to Adam Bonica’s DIME ideology scores to classify the ideology of agency staff over time. These early efforts suggest that there are exciting opportunities to study who writes the rules and how the identities of the rulewriters affect policy.

Obtaining and working with personnel and lobbying data

Scholars can obtain data on agency personnel from the BuzzFeed personnel data release, which contains information such as employee salaries, job titles, and demographic data from 1973 through 2016.Footnote 6 These are the most comprehensive personnel records publicly accessible, but they have limitations. First, not all agencies and occupations are a part of this release.Footnote 7 Second, some of the employees in these data do not have unique identification numbers, and common names such as ‘John Smith’ match multiple employees. For example, the Veterans Health Administration employed 24 John Smiths in 2014, five with the same middle initial.

To obtain machine-readable data on the identities of domestic lobbyists, scholars use two databases from Open Secrets—a nonprofit that tracks spending on politics—on administrative and Congressional lobbying. Downloading the lobbying data is straightforward; it only requires an account to access the ‘bulk data’ page. The lobbying data comes from the required disclosures under the Lobbying Disclosure Act of 1995 (LDA). Note that the reporting threshold varies by type of firm (in-house have a higher minimum reporting threshold than lobbying firms) and over time.Footnote 8 The lobbying data cover 1999 through 2018 and are broken up into seven machine-readable tables.Footnote 9 We prefer the Open Secrets data to the raw Senate data as the Open Secrets version contains more machine-readable information. Lobbying data are subject to several limitations; the reports do not contain exact monetary amounts and some lobbyists do not disclose required contacts.Footnote 10 Open Secrets also has a database on revolving door employees that shows the career paths of federal government workers that went to private sector work that depends on interacting with the federal government.Footnote 11

Lobbyists advocating for foreign clients are required to disclose these contacts under the Foreign Agent Registration Act. Foreign agents often lobby bureaucratic agencies; Israel, for example, retained law firm Arnold & Porter for advice on registering securities with the Securities and Exchange Commission (SEC).Footnote 12 In addition to the participants, the reports also contain the nature of the contact and the specific officials contacted. These data are astonishingly complete—the website contains all 6264 FARA registrants and their foreign contacts since 1942.Footnote 13 The FARA data are not all in machine-readable form.Footnote 14 Consequently, scholars using these data only focus on a subset such as You (2019) who focuses on lobbying activities by Colombia, Panama, and South Korea. We caution users that the same participant may appear under multiple names.

Corporate executives, lawyers, and lobbyists often have contacts with agency officials, called ex-parte communications (Lubbers 2012), to discuss rules outside of the public comment process. While record-keeping practices for these meetings vary considerably, they are often publicly available on agency websites. Because meetings data are held in different places on each agency’s website, obtaining these data requires writing a web scraper for each agency. As there are no uniform standards for reporting meeting data, these data differ substantially from agency to agency in both content and organization. The Federal Reserve, for example, groups meetings by subject, not by rule,Footnote 15 the SEC groups meetings by rule, and the Commodity Futures Trading Commission (CFTC) and Federal Communication Commission (FCC) meeting records are not grouped at all.Footnote 16 Meeting participants are typically individually identified, but some agencies only report the names of the organization represented, and others report meeting topics but not participants. We suspect that missingness varies by agency, but are not aware of any study evaluating missingness in meeting record disclosures.

What do the rules say?

The literature on rulemaking in political science and public administration has focused on whether public participation influences the content of rules (e.g., Balla 1998; Cuéllar 2005; Yackee and Yackee 2006; Naughton et al. 2009; Wagner and Peters 2011; Haeder and Yackee 2015, 2018; Gordon and Rashin nd; Rashin 2018). Knowing what the rules say and how these texts have changed from proposal to finalization is crucial studying commenter influence. Rule preambles, which describe what the agency has tried to accomplish and how it has engaged with stakeholders, are especially useful. The legally operative text is often less useful to social scientists because interpreting legal text usually requires substantial domain expertise. However, where interpretation is straightforward, such as rate-setting, scholars can capture variation in legally operative texts (Balla 1998; Gordon and Rashin nd).

Obtaining and working with data on rule text

The federal government publishes all rules in the Federal Register, accessible via FederalRegister.gov. This database is comprehensive and has machine-readable records of all regulations published after 1994 and PDF versions from 1939–1993.Footnote 17 The Federal Register API does not have any rate limits. The Federal Register office assigns every regulatory action a document number. In our experience, this ‘FR Doc Number’ is the only truly unique and consistently maintained identifier for proposed and final rules.

While the raw text of rules is relatively straightforward to obtain, there are several thorny theoretical and methodological issues that scholars must overcome.Footnote 18 First, the standard path from NPRM to public comments to final rule is not always straightforward. Some rules are withdrawn before a final rule. Other agencies issue interim final rules subject change. For studies that seek to compare the proposed and final rules, the most problematic rules are the ones where one proposed rule gets broken up into a few smaller final rules or the reverse, where short rules become bundled into one final rule. These rules pose challenges for inference as the processes that lead to amalgamation or separation are not well understood. Second, scholars must determine how to preprocess the rulemaking data before feeding these data to a text analysis algorithm.

Obtaining and working with rule metadata

Rulemaking metadata, such as the time rules are released, allow scholars to answer questions about factors that affect the rulemaking environment. Scholars use this data to study questions about regulatory delay (e.g., Acs and Cameron 2013; Thrower 2018; Potter 2017; Carrigan and Mills 2019; Carpenter et al. 2011), agenda-setting (e.g., Coglianese and Walters 2016), and the financial impact of rules (e.g., Libgober 2019). These scholars relied on data from the Office of Information and Regulatory Affairs (OIRA) (Acs and Cameron 2013; Carrigan and Mills 2019), the Unified Agenda (Coglianese and Walters 2016; Potter 2017), the Federal Register (Thrower 2018), press releases (Libgober and Carpenter 2018), and the Food and Drug Administration’s drug approval and postmarket experience database (Carpenter et al. 2011).

Obtaining data from the Unified Agenda is relatively straightforward as all of these documents since 1995 are available online in machine-readable form.Footnote 19 The Unified Agenda contains many of the proposed regulations that agencies plan to issue in the near future, making it an extremely useful data source for studying questions about agenda setting and timing (e.g., Potter 2019). There are, however, significant limitations to these data. First, agencies report early stage rulemaking to the Unified Agenda strategically (Nou and Stiglitz 2016). Second, agencies do not list all ‘failed’ rules that did not become final rules in the Unified Agenda (Yackee and Yackee 2012). Third, Coglianese and Walters (2016) note that the Unified Agenda misses much of the regulatory agency’s work, including enforcement actions, adjudicatory actions, and decisions not to act.

As addressed elsewhere in this issue (Haeder and Yackee 2020 in this issue), many rules, especially rules that are controversial or deemed economically significant, are reviewed by OIRA (a subagency of OMB). Obtaining OIRA data is relatively straightforward, as all of these documents since 1981 are available online in machine-readable form. These data include the date on which OIRA received the draft rule from the agency, whether the review was expedited, and whether the rule is determined to be ‘economically significant’ or affect ‘federalism.’

In addition to disclosures mandated by law, agencies often issue press releases for agency actions. Much like the meetings data discussed above, policies regarding the storage and dissemination of press releases differ from agency to agency. The Federal Reserve’s website, for example, lists all press releases since 1996, while the SEC only has them since 2012.Footnote 20 When working with press releases, scholars often need data on the exact time documents were made available to the general public (see, e.g., Libgober 2020). Press release metadata, such as the exact time a press release becomes public, can often be extracted from Really Simple Syndication (RSS) feeds.

Each data source has different types of missingness and different limitations regarding the information it contains about each rule. For example, data from OIRA only contains rules reviewed by OMB. The Unified Agenda covers a broader scope of policies, but due to strategic reporting and frequent reporting errors, desired cases may be missing. For published draft and final rules, these missing cases may be found in a more reliable source like the Federal Register, OIRA reports, or regulations.gov. The Federal Register is the most reliable source for rule texts but contains the least amount of rule metadata and only includes published draft and final rules. Some rulemaking projects that did not reach the published draft (NPRM) stage may be missing from all datasets. More importantly, the diversity of these data sources means that, for a given query, one source will often include cases that a second does not, while the second source includes variables that first does not.

Discussion: assembling complete databases

The US government releases troves of data on rulemaking. Yet, these data require substantial effort from scholars to be useful for research. Scholars working on bureaucratic politics face two primary data tasks: (1) assembling complete, machine-readable datasets of agency rulemaking activity and (2) linking observations across datasets. In the near term, we see four major projects to make data more accessible, prevent duplicated efforts to download and clean data, and thus increase the efficiency of research efforts. First, a complete database is needed to link commenting activity throughout the Federal government. Researchers should be able to query and download these data in bulk, including comment text and metadata. Second, a comprehensive database could link observations of the revolving door for rulewriters and participants across OPM personnel records, LDA disclosure forms, and FARA data. This database could be augmented with data from networking sites like LinkedIn. Third, a database could link all meeting activities throughout the federal government. Finally, creating unique identifiers for each commenter would allow researchers to link commenting behavior across datasets and to other information about these individuals and organizations. These projects would allow scholars to pursue novel research on political participation, influence, and public management.

Notes

We link to more relevant data sources on our GitHub page: https://github.com/libgober/regdata/blob/master/README.md.

An API is a set of procedures that allow a user to access data from a website in a structured way. Some websites, like regulations.gov, limit API usage by requiring users to get an API key, see https://regulationsgov.github.io/developers/.

The difference between the number of reported comments and the number of comments on regulations.gov is often because some agencies group mass-comment campaigns into a single document. A small number of comments on regulations.gov are duplicates posted in error. To resolve a discrepancy, we recommend searching the agency’s website or contacting the agency directly.

For example, the Federal Energy Regulatory Commission has posted all comments they receive on their eLibrary website, but not all of these appear on regulations.gov. Unlike regulations.gov, most agency sites do not have an API and thus require bespoke web scrapers. We offer examples of scrapers for regulations.gov and several of these agencies on https://github.com/libgober/regdata.

Note that the dataset (Bolton et al. 2019) used is not public.

Available at https://archive.org/details/opm-federal-employment-data/page/n1. Updated personnel files are available through 2018 from the OPM itself here: https://www.fedscope.opm.gov/datadefn/index.asp.

These data exclude at least 16 agencies and, within the covered agencies, law enforcement officers, nuclear engineers, and certain investigators (Singer-Vine 2017).

See https://lobbyingdisclosure.house.gov/ldaguidance.pdf for details.

These data are here: https://www.opensecrets.org/bulk-data/download?f=Lobby.zip.

See https://www.opensecrets.org/revolving/methodology.php. Unlike their lobbying database, this dataset has no bulk data option and must be scraped.

Only the metadata (e.g., names) are machine-readable.

However, because rules are usually published as PDFs, converting them to raw text for analysis introduces errors.

The Unified Agenda from 1983 through 1994 is available in the Federal Register.

References

Acs, A., and C. Cameron. 2013. Does White House Regulatory Review Produce a Chilling Effect and “OIRA Avoidance” in the Agencies? Presidential Studies Quarterly 43(3): 443–467.

Balla, S. 1998. Administrative Procedures and Political Control of the Bureaucracy. American Political Science Review 92(3): 663–673.

Balla, S., A. Beck, W. Cubbison, and A. Prasad. 2019. Where’s the Spam? Interest Groups and Mass Comment Campaigns in Agency Rulemaking. Policy & Internet 11(4): 460–479.

Ban, P., and H.Y. You. 2019. Presence and Influence in Lobbying: Evidence from Dodd–Frank. Business and Politics 21(2): 267–295.

Baumgartner, F., J. Berry, M. Hojnacki, D. Kimball, and B. Leech. 2009. Lobbying and Policy Changes: Who Wins, Who Loses, and Why. Chicago: University of Chicago Press.

Bertrand, M., M. Bombardini, and F. Trebbi. 2014. Is It Whom You Know or What You Know? An Empirical Assessment of the Lobbying Process. American Economic Review 104(12): 3885–3920.

Boehmke, F., S. Gailmard, and J. Patty. 2013. Business as Usual: Interest Group Access and Representation Across Policy-Making Venues. Journal of Public Policy 33(1): 3–33.

Bolton, A., J. D. Figueiredo, and D. Lewis. 2019. Elections, Ideology, and Turnover in the U.S. Federal Government, Working Paper, National Bureau of Economic Research.

Carpenter, D., J. Chattopadhyay, S. Moffitt, and C. Nall. 2011. The Complications of Controlling Agency Time Discretion: FDA Review Deadlines and Postmarket Drug Safety. American Journal of Political Science 56(1): 98–114.

Carpenter, D., and D. Moss. 2013. Preventing Regulatory Capture: Special Interest Influence and How to Limit it. Cambridge: Cambridge University Press.

Carrigan, C., and R. Mills. 2019. Organizational Process, Rulemaking Pace, and the Shadow of Judicial Review. Public Administration Review 79(5): 721–736.

Chen, J., and T. Johnson. 2015. Federal Employee Unionization and Presidential Control of the Bureaucracy. Journal of Theoretical Politics 27(1): 151–174.

Coglianese, C., and D. Walters. 2016. Agenda-Setting in the Regulatory State: Theory and Evidence. Administrative Law Review 68(1): 865–890.

Cuéllar, M.-F. 2005. Rethinking Regulatory Democracy. Administrative Law Review 57(2): 411–499.

de Figureido, J., and J. Kim. 2004. When do Firms Hire Lobbyists? The Organization of Lobbying at the Federal Communications Commission. Industrial and Corporate Change 13(6): 883–900.

Dwidar, M. 2019. (Not So) Strange Bedfellows? Lobbying Success and Diversity in Interest Group Coalitions. In Southern Political Science Association Annual Conference.

Furlong, S. 2004. Interest Group Participation in Rule Making: A Decade of Change. Journal of Public Administration Research and Theory 15(3): 353–370.

Golden, M. 1998. Interest Groups in the Rule-Making process: Who Participates? Whose Voices Get Heard? Journal of Public Administration Research and Theory 8(2): 245–270.

Gordon, S., and S. Rashin. nd. Stakeholder Participation in Policymaking: Evidence from Medicare Fee Schedule Revisions. Journal of Politics. https://doi.org/10.1086/709435.

Haeder, S., and S.W. Yackee. 2015. Influence and the Administrative Process: Lobbying the U.S. President’s Office of Management and Budget. American Political Science Review 109(3): 507–522.

Haeder, S., and S.W. Yackee. 2018. Presidentially Directed Policy Change: The Office of Information and Regulatory Affairs as Partisan or Moderator? Journal of Public Administration Research and Theory 28(4): 475–488.

i Vidal, J.B., M. Draca, and C. Fons-Rosen. 2012. Revolving Door Lobbyists. American Economic Review 102(7): 3731–3748.

Judge-Lord, D. 2016. Why Courts Defer to Administrative Agency Judgement? In Midwest Political Science Association Annual Conference.

Judge-Lord, D. 2019. Why Do Agencies (Sometimes) Get So Much Mail? In Southern Political Science Association Annual Conference.

Libgober, B. 2019. Meetings, Comments, and the Distributive Politics of Administrative Policymaking. In Southern Political Science Association Annual Conference.

Libgober, B. 2020. Strategic Proposals, Endogenous Comments, and Bias in Rulemaking. Journal of Politics 82: 642–656.

Libgober, B. and D. Carpenter. 2018. What’s at Stake in Rulemaking? Financial Market Evidence for Banks’ Influence on Administrative Agencies. https://libgober.files.wordpress.com/2018/09/libgober-and-carpenter-unblinded-what-at-stake-in-rulemaking.pdf.

Libgober, B. and S. Rashin. 2018. What Public Comments During Rulemaking Do (and Why). In Southern Political Science Association Annual Conference.

Lubbers, J.S. 2012. A Guide to Federal Agency Rulemaking. Chicago: American Bar Association.

Naughton, K., C. Schmid, S.W. Yackee, and X. Zhan. 2009. Understanding Commenter Influence During Agency Rule Development. Journal of Policy Analysis and Management 28(2): 258–277.

Nou, J., and E. Stiglitz. 2016. Strategic Rulemaking Disclosure. Southern California Law Review 89: 733–786.

Potter, R.A. 2017. Slow-Rolling, Fast-Tracking, and the Pace of Bureaucratic Decisions in Rulemaking. The Journal of Politics 79(3): 841–855.

Potter, R.A. 2019. Bending the Rules. Chicago: The University of Chicago Press.

Rashin, S. 2018. Private Influence over the Policymaking Process. In Southern Political Science Association Annual Conference.

Shepherd, M., and H.Y. You. 2019. Exit Strategy: Career Concerns and Revolving Doors in Congress. American Political Science Review 114(1): 270–284.

Shive, S., and M. Forster. 2016. The Revolving Door for Financial Regulators. Review of Finance 21(4): 1445–1484.

Singer-Vine, J. 2017. We’re Sharing a Vast Trove of Federal Payroll Records. https://www.buzzfeednews.com/article/jsvine/sharing-hundreds-of-millions-of-federal-payroll-records.

Thrower, S. 2018. Policy Disruption Through Regulatory Delay in the Trump Administration. Presidential Studies Quarterly 48(3): 517–536.

Wagner, W., K. Barnes, and L. Peters. 2011. Rulemaking in the Shade: An Empirical Study of EPA’s Air Toxic Emissions Standards. Adminstrative Law Review 63(1): 99–158.

West, W. 2004. Formal Procedures, Informal Processes, Accountability, and Responsiveness in Bureaucratic Policy Making: An Institutional Policy Analysis. Public Administration Review 64(1): 66–80.

Yackee, J.W., and S.W. Yackee. 2006. A Bias Towards Business? Assessing Interest Group Influence on the U.S. Bureaucracy. The Journal of Politics 68(1): 128–139.

Yackee, J.W., and S.W. Yackee. 2012. An Empirical Examination of Federal Regulatory Volume and Speed, 1950–1990. George Washington Law Review 80: 1414–92.

Yackee, S.W. 2006. Sweet-Talking the Fourth Branch: The Influence of Interest Group Comments on Federal Agency Rulemaking. Journal of Public Administration Research and Theory 16(1): 103–124.

Yackee, S.W. 2019. The Politics of Rulemaking in the United States. Annual Review of Political Science 22(1): 37–55.

You, H.Y. 2017. Ex Post Lobbying. The Journal of Politics 79(4): 1162–1176.

You, H. Y. 2019. Dynamic Lobbying: Evidence from Foreign Lobbying in the US Congress. Working Paper.

Young, K., T. Marple, and J. Heilman. 2017. Beyond the Revolving Door: Advocacy Behavior and Social Distance to Financial Regulators. Business and Politics 19(2): 327–364.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Carpenter, D., Judge-Lord, D., Libgober, B. et al. Data and methods for analyzing special interest influence in rulemaking. Int Groups Adv 9, 425–435 (2020). https://doi.org/10.1057/s41309-020-00094-w

Published:

Issue Date:

DOI: https://doi.org/10.1057/s41309-020-00094-w