Abstract

To date, pricing and revenue management literature has mostly concerned itself with how firms can maximize revenue growth and minimize opportunity cost. Rarely has the ethical and legal nature of the field been subjected to substantial comment and discussion. This viewpoint article draws attention to some inherent ethical concerns and legal challenges that may come with future developments in pricing, in particular online personalized pricing, thereby seeking to initiate a broader discussion about issues such as dishonesty, unfairness, injustice, and misconduct in pricing and revenue management practices. Reflecting on how legislators and regulators in Europe seek to limit recent developments in personalized pricing, we argue that not much is to be expected from the legal system, at least not in the short run, with regard to guiding the pricing and revenue field in setting and implementing minimum standards of behavior. Scholarly attention should however not only be directed to the legal challenges of new forms of direct price discrimination, such as algorithmic personalized dynamic pricing, but also to the ethical and legal implications of more granular forms of indirect price discrimination, through which consumers will be allowed to ‘freely’ sort themselves into different microsegments, especially when the ‘self-selection’ is enticed by deceptive personalized applications of psychological pricing and neuromarketing.

Introduction

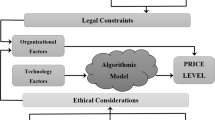

Algorithmic pricing is on the rise. Fueled by technological advances, the effectiveness of big data analytics, and innovations in e-commerce, particularly with regard to online retailing, automated algorithms increasingly support firms in dynamically optimizing prices either at the market (segment) level or the personal level (Seele et al. 2019). Capable of independently setting and changing prices dynamically and personally over time, the algorithms are inherently value-laden, meaning that their use is not divorced from ethical consequences (Martin 2019). While ethical concerns with regard to dynamic pricing have received little research attention (e.g., Haws and Bearden 2006), personalized pricing seems to spur a vigorous debate. As Yeoman (2016, p. 1) observes, “many in the public policy community are aligned with consumerist lobbies in being at least suspicious of (if not directly hostile to) personalized pricing—seeing something dangerously Orwellian in this whole evolution.” Or, in the words of Miller (2014, p. 103):

The secrecy of pricing decisions contributes to the popular feeling that they are deceptive, harmful to consumers, and unfair. The social injustices and market harms that are caused by price discrimination go untreated because public scrutiny is unavailable. This must change. Price discrimination has become a matter of serious public concern. The public is entitled to answers from the companies that buy and sell their information.

Although research in this field is still in its infancy, work on mathematical modeling lends support for some fairness concerns regarding the use of personal information for pricing. For example, Esteves (2009) and Chen and Zhang (2009) show that the use of behavioral data reduces price competition even if consumers behave strategically. De Nijs (2017) shows that if competitors share these data, profit increases at the expense of consumers. Esteves and Cerqueira (2017) and Esteves and Resende (2019) demonstrate that in a duopoly, all consumers are expected to pay higher prices when firms use personalized pricing through targeted advertising, with detrimental outcomes for consumer welfare. Also, Belleflamme and Vergote (2016) show that when a firm is able to personalize prices, consumers may be collectively better off without privacy-protecting measures as consumers who cannot hide their digital activities pay the (higher) price. Conversely, on examining the impact of privacy policies, Baye and Sappington (2019, p. 13) found that policies that “best protect unsophisticated consumers may do so at the expense of sophisticated consumers,” thereby reducing both total welfare and firm profit. Thus, overall there is evidence for concerns regarding distributive justice (Wagner and Eidenmuller 2019).

This article draws attention to some inherent ethical concerns and legal challenges that may emerge with future developments in pricing and revenue management, in particular online personalized pricing. The intention is to initiate a broader discussion about issues such as dishonesty, unfairness, injustice, and misconduct in pricing and revenue management practices.

Legal challenges

Limiting our analysis to Europe, few legal provisions speak directly to online personalized pricing. However, four specific areas of law may influence the extent to which this discriminatory pricing practice is limited, although it is doubtful whether this will happen, at least in the short run (Sears 2020).

For example, there are major general hurdles to overcome before a personalized pricing claim can be successful under antitrust law. One of the obstacles is the extent to which competition law will actually be enforced against business-to-consumer (B2C) transactions, inasmuch as there are no “uniform measures for reporting discriminatory practices” or a “harmonised approach to collective redress” (European Data Protection Supervisor 2014, p. 31). Also, ‘exploitative abuses,’ under which personalized pricing is most likely to be challenged, only amount to a fraction of the abuse of dominance cases enforced under competition law over the past decades (OECD 2018). Moreover, even if a claim succeeds, compensation will be limited to the ‘actual loss with interest,’ so, given the legal fees, it may not be worth pursuing. As such, not much is to be expected from antitrust legislation, even if, as Botta and Wiedemann (2019) argue, a case-by-case approach is utilized, given that a dominant position in the market must still be found first, which may be the greatest hurdle.

As part of European consumer law, there is the Unfair Contract Terms Directive 93/13/EEC (UCTD), the Unfair Commercial Practices Directive 2005/29/EC (UCPD), and the Consumer Rights Directive 2011/83/EU (CRD). These directives could potentially be applicable to limit personalized pricing practices. In this context, the UCTD is unlikely to impact online personalized pricing as the adequacy of a price is not a factor in itself to assess whether the terms of a contract are unfair. Furthermore, under the UCPD, which specifically applies to deceptive and aggressive B2C transactions that harm consumers’ economic interests, personalized pricing is permitted so long as firms “duly inform consumers about the prices or how they are calculated” (European Commission 2016a, p. 134). Similarly, the CRD requires consumers to be “clearly informed when the price presented to them is personalised on the basis of automated decision-making” (European Commission 2019, p. 14). However, price framing literature shows that when comparing prices, consumers tend to “only base their decisions on the salient characteristics of the situation rather than on the objective price information” (Bayer and Ke 2013, p. 215), and that a promotion signal alone can be sufficient to induce consumers to choose the promoted product, independent of the relative price information (Inman et al. 1990). In fact, recent behavior-based pricing research shows that “requiring firms to disclose collection and usage of consumer data could hurt consumers and lead to unintended consequences” (Li et al. 2020). Drawing attention to the potential unanticipated effects of information regulation, in light of concerns about the effectiveness of mandated disclosures (Ben-Shahar and Schneider 2014), Van Boom et al. (2020, p. 1) indeed found that when such disclosures hint at one’s self-interest in making a purchase, they may “inadvertently appeal to consumers’ wishes, desires, and in the process of doing so, increase the likelihood of (over)spending.” As such, the positive effects of consumer protection law may currently be limited in this area.

The third area of EU law that may impact the use of online personalized pricing is data protection law. Under the ePrivacy Directive, online retailers need to clearly inform (and obtain the consent of) consumers when they use cookies to collect personal data, which is a primary method of collection used to engage in price discrimination (European Commission 2009). Given that consumers must also be offered the right to refuse the placing of such cookies, most online environments use (tracking-specific) cookie notifications, although few consumers appear to read them (Bakos et al. 2014). Interestingly, when automated decision-making algorithms are used in online personalized pricing, consumers have the right “not to be subject to a decision based solely on automated processing, including profiling” (European Commission 2016b, p. 46). While these rights enhance the level of transparency to consumers, there is little guidance on whether a particular implementation complies; hence, a wide variety of implementations and a lack of uniformity between websites can be observed.

Finally, as algorithmic personalized pricing may also affect protected classes of EU consumers, non-discrimination provisions, such as those aimed at addressing racial or gender equality, or acts discriminating against people on the basis of nationality or place of residence, such as geo-blocking, may apply. Whereas the directives addressing race and gender discrimination have the potential to limit firms’ abilities to engage in online price personalization, difficulties—such as the ability to detect and show the discriminatory practice—may arise in establishing a prima facie case. Also, while various complaints were made under the Services in the Internal Market Directive which resulted in positive outcomes for some individuals, more action is needed to address the actual practice in industry.

At present in the EU, and this may not be so different from other jurisdictions, online personalized pricing tends to be challenged in a limited and indirect way. Even if the ability to personalize prices and engage in online price discrimination becomes more common in the future, it remains to be seen whether this pricing practice will be viewed as undesirable enough to warrant more stringent regulation.

Ethical concerns

The debate about personalized pricing has been fed by ethical concerns, such as a decrease in trust (Garbarino and Lee 2003), price unfairness (Richards et al. 2016), loss of personal freedom (Bock 2016), privacy loss (Zuiderveen Borgesius and Poort 2017), unequal distribution of power (Seele et al. 2019), and welfare loss (Li et al. 2020). In this context, the term personalized pricing refers to first-degree (individual) or third-degree (group) price discrimination where firms use observable consumer characteristics to capture a larger portion—though not necessarily all—of the reservation price. It does not include second-degree (indirect) price discrimination (Bourreau and De Streel 2018) where firms use a pricing scheme to allow consumers to self-select among different price-quantity or price-quality tradeoffs. A formal condition for personalized pricing thus is the absence of arbitrage. But what if firms find new ways to limit the scale of arbitrage, or personalize it without observing consumer characteristics, while offering different product/service options to all consumers and allowing consumers to self-select? What if firms learn to indirectly discriminate against consumers at a more granular (personal) level? Or, what if the designed self-selection devices draw upon common causes of misperception? Would this variation of personalized pricing not be difficult to regulate, let alone challenge in court? Would such variation of personalized pricing be possible, and more importantly would it be ethical?

There are many ways to make consumers feel that they are getting a good deal, so that they shorten their price searches (Lindsey-Mullikin and Petty 2011). Moreover, deceptive tactics such as ‘price-matching refunds’ (e.g., Kukar-Kinney et al. 2007), vague price comparisons (Compeau et al. 2004; Grewal and Compeau 1992), or comparative price advertising (Choi and Coulter 2012) have been a thorn in the side of regulators for years (Compeau et al. 2002; Grewal and Compeau 1999). Bar-Gill (2019, p. 218) argues that when algorithmic price discrimination targets misperceptions, “in particular, when sellers use personalized pricing, regulators should fight fire with fire and seriously explore the potential of personalized law.” We argue that scholarly attention should not only be directed towards the ethical concerns of new forms of direct price discrimination, such as algorithmic personalized dynamic pricing, but should specifically encompass the ethics of indirect price discrimination, through which ‘free will’ enables consumers to sort themselves into different microsegments. This is particularly important when the self-selection is enticed by deceptive applications of psychological pricing and neuromarketing. In other words, what if personalized pricing becomes so complex and ingenious that it is difficult to establish whether there is an issue of dishonesty, unfairness, injustice, or ethical misconduct? Thus far, courts and government agencies have already recognized that truthful advertising can mislead consumers, for example, by using confusing language or omitting important information (Hastak and Mazis 2011). The pricing literature in this area, however, is scarce. For example, research on ‘scanner fraud’ (i.e., ‘accidentally’ forgetting to enter a discount in the cash register) shows that more mistakes are made in wealthy than in poor postal code areas (Goodstein 1994; Hardesty et al. 2014). It is questionable whether ‘electronic shelf labels,’ which would be less susceptible to errors, and which could be used for dynamic pricing, will solve the problem. As with omissions and errors, little is known about the different forms of ‘price confusion’ (Grewal and Compeau 1999). This is particularly worrisome because pricing psychology indicates that there are a vast number of ways to make prices seem lower than they actually are (Kolenda 2016).

But what if neuromarketing, which has been associated in the USA with potential legal issues in the field of tort claims, privacy, and consumer protection (Voorhees et al. 2011), alongside numerous ethical issues (Hensel et al. 2017), enters the stage? Although research into the “neuro-foundations” of pricing is still in its infancy (Hubert and Kenning 2008; Stanton et al. 2017), advances in technology may allow for business opportunities to arise sooner than we can imagine. For example, Plassmann et al. (2007) report that willingness to pay is determined in the medial prefrontal cortex, while Ramsøy et al. (2018) observe that brain activation significantly varies with consumers’ willingness to pay. Adaval and Wyer (2011) show that subliminally presented price anchors have an effect on willingness to pay. Also, Fu et al. (2019) find that deceptive pricing can be associated with more cognitive and decisional conflict and less positive evaluation at the neural level. Another interesting finding from neuromarketing is that the perception of unfair prices, monetary sacrifices, and high prices activates the part of the brain that processes punishment (Sanfey et al. 2003). Conversely, Knutson et al. (2007) find that the brain’s reward system is not only activated by food, but also by price reductions. In other words, there is much ‘food for thought’…

Concluding remarks

There is a paucity of academic research that relates dishonesty, unfairness, injustice, and misconduct to pricing and revenue management practices. This article seeks to bring to the attention of the academic community the need for future research on ethical and legal issues in revenue and pricing management, a significant and as yet under-researched topic. In doing so, it focused on personalized pricing, its legal challenges, and future possibilities to extend the practice of targeted pricing to new forms of indirect price discrimination, through which consumers are allowed to sort themselves into different microsegments, enticed by deceptive personalized applications of psychological pricing and neuromarketing.

We need more high-quality research offering insights into emerging forms of unethical practices in revenue and pricing management. This should help companies to understand how they can meet their objectives without denying consumers (or competitors) a fair market. While our viewpoint focused on pricing, the future research agenda should welcome all work on the ethical and legal aspects of the field. Importantly, this agenda should not be limited to a specific discipline or (empirical) methodology. As a start, literature review articles would fit the aim of the research agenda very well. Practitioner insights should also be accepted, as should surveys among revenue and pricing professionals, as long as there are significant ethical or legal implications. Some suggested research areas are listed below, but this list is far from complete:

Antitrust: empirical analysis of current topics, such as the potential abuse of economic power by large online players through data analysis or contracting.

Automation: ethical dilemmas and consequences of design on people and society.

Deception: insights into how consumers perceive, process, and especially respond to dishonest or unfair psychological effects in pricing and revenue management.

Legal analysis: societal implications of pricing at the level of producer, retailer, and consumer, including competition issues.

Organizational behavior: ethical misconduct by pricing and revenue managers, and the organizational dynamics that foster dishonesty.

Personalized dynamic pricing: insights on business performance or economic effects, and any technical matter, or any ethical or legal issue that may arise from using behavioral data in managing demand.

Privacy: all privacy concerns and human behaviors related to (capturing [big] data via) advanced infrastructures that collect, store, and analyze demand data to automate the optimization of revenue management decisions.

Revenue analytics: questionable metrics or illegal practices to assess revenue management performance.

Rivalry and ethical behavior: greater understanding of the impact of dishonesty, unfairness, injustice, and misconduct in pricing and revenue management on competitive behavior (or vice versa).

Search discrimination: insights into whether search engines are problematic from an ethical perspective.

Subliminal perceptions: recent advances from the field of neuromarketing applicable to the pricing and revenue management field.

Collectively, these research areas may help answer the question of whether pricing professionals should exploit every technological innovation, pricing capability, and marketing opportunity, just because they can, or whether minimum ethical and moral standards are required before (algorithmic) pricing is taken to the next level.

Lastly, for regulators and policy makers, we hope this article provokes further thought and discussion on the gray areas listed above, so that legislative and regulatory guidance on ethical pricing and revenue management practices is further developed to advise businesses that increasingly adopt algorithmic methods in their decision-making processes.

References

Adaval, R., and R.S. Wyer. 2011. Conscious and Nonconscious Comparisons with Price Anchors: Effects on Willingness to Pay for Related and Unrelated Products. Journal of Marketing Research 48 (2): 355–365.

Bakos, Y., F. Marotta-Wurgler, and D.R. Trossen. 2014. Does Anyone Read the Fine Print? Consumer Attention to Standard-Form Contracts. The Journal of Legal Studies 43 (1): 1–35.

Bar-Gill, O. 2019. Algorithmic Price Discrimination When Demand is a Function of Both Preferences and (Mis)perceptions. University of Chicago Law Review 86 (2): 217–254.

Baye, M.R., and D.E.M. Sappington. 2019. Revealing Transactions Data to Third Parties: Implications of Privacy Regimes for Welfare in Online Markets. Journal of Economics & Management Strategy, Online first.

Bayer, R.C., and C. Ke. 2013. Discounts and Consumer Search Behavior: The Role of Framing. Journal of Economic Psychology 39 (December): 215–224.

Belleflamme, P., and W. Vergote. 2016. Monopoly Price Discrimination and Privacy: The Hidden Cost of Hiding. Economics Letters 149: 141–144.

Ben-Shahar, O., and C.E. Schneider. 2014. More than you Wanted to Know—The Failure of Mandated Disclosure. Princeton: Princeton University Press.

Bock, C. 2016. Preserve Personal Freedom in Networked Societies. Nature 537: 9.

Botta, M., and K. Wiedemann. 2019. To Discriminate or Not to Discriminate? Personalised Pricing in Online Markets as Exploitative Abuse of Dominance. European Journal of Law and Economics, Online first.

Bourreau, M., and A. De Streel. 2018. The regulation of personalised pricing in the digital era—Note by Marc Bourreau and Alexandre de Streel. Paris: OECD.

Chen, Y., and Z.J. Zhang. 2009. Dynamic Targeted Pricing with Strategic Consumers. International Journal of Industrial Organization 27 (1): 43–50.

Choi, P., and K.S. Coulter. 2012. It’s Not All Relative: The Effects of Mental and Physical Positioning of Comparative Prices on Absolute versus Relative Discount Assessment. Journal of Retailing 88 (4): 512–527.

Compeau, L.D., D. Grewal, and R. Chandrashekaran. 2002. Comparative Price Advertising: Believe It or Not. Journal of Consumer Affairs 36 (2): 284–294.

Compeau, L.D., J. Lindsey-Mullikin, D. Grewal, and R.D. Petty. 2004. Consumers’ Interpretations of the Semantic Phrases Found in Reference Price Advertisements. Journal of Consumer Affairs 38 (1): 178–187.

De Nijs, R. 2017. Behavior-Based Price Discrimination and Customer Information Sharing. International Journal of Industrial Organization 50 (January): 319–334.

Esteves, R.B. 2009. Customer Poaching and Advertising. The Journal of Industrial Economics 57 (1): 112–146.

Esteves, R.B., and S. Cerqueira. 2017. Behavior-Based Pricing Under Imperfectly Informed Consumers. Information Economics and Policy 40 (September): 60–70.

Esteves, R.B., and J. Resende. 2019. Personalized Pricing and Advertising: Who are the Winners? International Journal of Industrial Organization 63 (March): 239–282.

European Commission. 2009. Directive 2009/136/EC of the European Parliament and of the Council of 25 November 2009 amending Directive 2002/22/EC on universal service and users’ rights relating to electronic communications networks and services, Directive 2002/58/EC concerning the pro.

European Commission. 2016a. Guidance on the Implementation/Application of Directive 2005/29/EC on Unfair Commercial Practices.

European Commission. 2016b. I (Legislative acts) Regulations Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repeal.

European Commission. 2019. Directive (EU) 2019/2161 of the European Parliament and of the Council of 27 November 2019 amending Council Directive 93/13/EEC and Directives 98/6/EC, 2005/29/EC and 2011/83/EU of the European Parliament and of the Council as regards the better enforcem.

European Data Protection Supervisor. 2014. Preliminary Opinion of the European Data Protection Supervisor. www.edps.europa.eu.

Fu, H., H. Ma, J. Bian, C. Wang, J. Zhou, and Q. Ma. 2019. Don’t Trick Me: An Event-Related Potentials Investigation of How Price Deception Decreases Consumer Purchase Intention. Neuroscience Letters 713: 134522.

Garbarino, E., and O.F. Lee. 2003. Dynamic Pricing in Internet Retail: Effects on Consumer Trust. Psychology and Marketing 20 (6): 495–513.

Goodstein, R.C. 1994. UPC Scanner Pricing Systems: Are They Accurate? Journal of Marketing 58 (2): 20–30. https://doi.org/10.1177/002224299405800202.

Grewal, D., and L.D. Compeau. 1992. Comparative Price Advertising: Informative or Deceptive? Journal of Public Policy & Marketing 11 (1): 52–62.

Grewal, D., and L.D. Compeau. 1999. Pricing and Public Policy: A Research Agenda and an Overview of the Special Issue. Journal of Public Policy & Marketing 18 (1): 3–10.

Hardesty, D.M., R.C. Goodstein, D. Grewal, A.D. Miyazaki, and P. Kopalle. 2014. The Accuracy of Scanned Prices. Journal of Retailing 90 (2): 291–300.

Hastak, M., and M.B. Mazis. 2011. Deception by Implication: A Typology of Truthful but Misleading Advertising and Labeling Claims. Journal of Public Policy & Marketing 30 (2): 157–167.

Haws, K.L., and W.O. Bearden. 2006. Dynamic Pricing and Consumer Fairness Perceptions. Journal of Consumer Research 33 (3): 304–311.

Hensel, D., A. Iorga, L. Wolter, and J. Znanewitz. 2017. Conducting Neuromarketing Studies Ethically-Practitioner Perspectives. Cogent Psychology 4 (1): 1–13.

Hubert, M., and P. Kenning. 2008. A Current Overview of Consumer Neuroscience. Journal of Consumer Behaviour 7 (4–5): 272–292.

Inman, J.J., L. McAlister, and W.D. Hoyer. 1990. Promotion Signal: Proxy for a Price Cut? Journal of Consumer Research 17 (1): 74.

Knutson, B., S. Rick, G.E. Wimmer, D. Prelec, and G. Loewenstein. 2007. Neural Predictors of Purchases. Neuron 53 (1): 147–156.

Kolenda, N. 2016. The Psychology of Pricing. Kolenda Entertainment.

Kukar-Kinney, M., L. Xia, and K.B. Monroe. 2007. Consumers’ Perceptions of the Fairness of Price-Matching Refund Policies. Journal of Retailing 83 (3): 325–337.

Li, X., K.J. Li, and X.(Shane) Wang. 2020. Transparency of Behavior-Based Pricing. Journal of Marketing Research 57 (1): 78–99.

Lindsey-Mullikin, J., and R.D. Petty. 2011. Marketing Tactics Discouraging Price Search: Deception and Competition. Journal of Business Research 64 (1): 67–73.

Martin, K. 2019. Ethical Implications and Accountability of Algorithms. Journal of Business Ethics 160 (4): 835–850.

Miller, A. 2014. What Do We Worry About When We Worry About Price Discrimination? The Law and Ethics of Using Personal Information for Pricing. Journal of Technology Law & Policy 19 (1): 41–104.

OECD. 2018. Personalised Pricing in the Digital Era. https://www.oecd.org/competition/personalised-pricing-in-the-digital-era.htm.

Plassmann, H., J. O’Doherty, and A. Rangel. 2007. Orbitofrontal Cortex Encodes Willingness to Pay in Everyday Economic Transactions. Journal of Neuroscience 27 (37): 9984–9988.

Ramsøy, T.Z., M. Skov, M.K. Christensen, and C. Stahlhut. 2018. Frontal Brain Asymmetry and Willingness to Pay. Frontiers in Neuroscience 12: 1–12.

Richards, T.J., J. Liaukonyte, and N.A. Streletskaya. 2016. Personalized Pricing and Price Fairness. International Journal of Industrial Organization 44: 138–153.

Sanfey, A.G., J.K. Rilling, J.A. Aronson, L.E. Nystrom, and J.D. Cohen. 2003. The Neural Basis of Economic Decision-Making in the Ultimatum Game. Science 300 (5626): 1755–1758.

Sears, A.M. 2020. The Limits of Online Price Discrimination in Europe. Columbia Law Journal, Forthcoming.

Seele, P., C. Dierksmeier, R. Hofstetter, and M.D. Schultz. 2019. Mapping the Ethicality of Algorithmic Pricing: A Review of Dynamic and Personalized Pricing. Journal of Business Ethics, Online first.

Stanton, S.J., W. Sinnott-Armstrong, and S.A. Huettel. 2017. Neuromarketing: Ethical Implications of Its Use and Potential Misuse. Journal of Business Ethics 144 (4): 799–811.

Van Boom, W.H., J.I. Van der Rest, K. Van den Bos, and M. Dechesne. 2020. Consumers Beware: Online Personalized Pricing in Action! How the Framing of a Mandated Discriminatory Pricing Disclosure Influences Intention to Purchase. Social Justice Research, Online first.

Voorhees, T., W. Daniel, L. Spiegel, and W.D. Cooper. 2011. Neuromarketing: Legal and Policy Issues.

Wagner, G., and H. Eidenmuller. 2019. Down by Algorithms: Siphoning Rents, Exploiting Biases, and Shaping Preferences: Regulating the Dark Side of Personalized Transactions. University of Chicago Law Review 86 (2): 581–609.

Yeoman, I. 2016. Personalised price. Journal of Revenue and Pricing Management 15 (1): 1.

Zuiderveen Borgesius, F., and J. Poort. 2017. Online Price Discrimination and EU Data Privacy Law. Journal of Consumer Policy 40 (3): 347–366.

Cite this article

van der Rest, JP.I., Sears, A.M., Miao, L. et al. A note on the future of personalized pricing: cause for concern. J Revenue Pricing Manag 19, 113–118 (2020). https://doi.org/10.1057/s41272-020-00234-6

DOI: https://doi.org/10.1057/s41272-020-00234-6