Abstract

Risk is the situation in which the decision outcomes and their probabilities of occurrence are known to the decision-maker, and uncertainty is the situation in which such information is not available to the decision-maker. Research on decision-making under risk and uncertainty has two broad streams: normative and descriptive. Normative research models how decisions should be made under risk and uncertainty, whereas descriptive research studies how decisions under risk and uncertainty are actually made. Descriptive studies have exposed weaknesses of some normative models in describing people’s judgment and decision-making and have compelled the creation of more intricate models that better reflect people’s decisions under risk and uncertainty.

Definition

Risk refers to decision-making situations in which all potential outcomes and their likelihoods of occurrence are known to the decision-maker, and uncertainty refers to situations in which the outcomes, their probabilities of occurrence, or both are unknown to the decision-maker. How decision-makers perceive risk and uncertainty depends on the context of the decision and the characteristics of the decision-maker.

Body of Text

Risk and uncertainty are commonly used terms both in decision-making research and in everyday life. The lay usage of these words, however, diverges significantly from researchers’ definitions. The Oxford English Dictionary defines risk as “a situation involving exposure to danger,” and it defines uncertainty as “the state of being not able to be relied on, not known or definite.” Decision-making researchers define risk quite differently: conceptually, risk defined as variance (or standard deviation) involves not only exposure to danger but also the potential for gain. Researchers’ definition of uncertainty is closer to that of the Oxford English Dictionary, but there are different types of uncertainty that require more detailed description. In this entry, we first discuss the definitions of risk and uncertainty and then outline a few broad streams of research on this topic. Generally speaking, two research perspectives exist in this domain: normative and descriptive. The two perspectives study different aspects of decision-making under risk and uncertainty, and they complement each other.

Early Conception of Risk and Uncertainty

Knight (1921), in defining risk and uncertainty, made an explicit distinction between the two terms. Knight defined risk as decision-making situations in which all potential outcomes are identified and their respective probabilities of occurrence are known. He characterized uncertainty as situations in which either the possible decision outcomes or the specific probabilities associated with such outcomes are unknown to the decision-maker. Luce and Raiffa (1957, p. 13) echoed Knight’s distinction and further distinguished among certainty, risk, and uncertainty. Decision-makers are in the realm of certainty if they know the outcome that their decision will lead to. They are in the domain of risk if each decision is known to lead to one of a set of possible outcomes, each occurring with a known probability, and they are in the realm of uncertainty if the probabilities associated with the outcomes are either unknown or meaningless.

The key distinction between risk and uncertainty lies in whether the possible decision outcomes and the probabilities of their occurrence can be quantified. Uncertainty often refers to aspects of decision-making that are not easily quantified. As Knight argued, there can be uncertainty regarding both the decision outcomes and the probabilities of their occurrence. There can also be uncertainty about the decision-maker’s preferences (March 1978). Quantifiability is the key characteristic of risk, but neither the definition nor the measurement of risk is straightforward. In fact, the process of defining risk in real life often reflects a political process that depends on the decision-maker, the technologies being considered, and the characteristics of the decision problem (Fischhoff et al. 1984). These factors affect the two-step process of defining risk: the first step is to determine which consequences or outcome dimensions to include, and the second step is to construct risk indices based on the consequences selected in the first step.

The St. Petersburg Paradox: Expected Value and Expected Utility

Once the definition and the measurement of risk are available, we need a rule for making decisions under risk. According to statistical decision theory, the expected value rule is considered the best rule to follow when making risky decisions (Raiffa 1968). The expected value rule entails multiplying the value of each outcome by its probability of occurrence and then summing the products. Decisions that have higher values after this calculation are considered better than decisions with lower values. While simple, this rule fails to accurately depict people’s actual decision-making. Consider this coin-flipping game. In this game, a player is asked to guess the face of a coin toss. If she guesses wrong on the first (n – 1) tosses but guesses correctly on the nth toss, she wins $2^n. Winning on the nth toss has probability (1/2)^n, so every possible stopping point contributes (1/2)^n × $2^n = $1 to the expected value. Thus, the expected value of this game is the sum of $1 an infinite number of times, or simply infinity. In other words, under the expected value rule, people should be willing to pay an infinite amount of money to play this game. In reality, however, people are willing to pay only about $2.50 to play this game. This finding is known as the St. Petersburg Paradox, and Bernoulli (1738) offered a different decision rule that can account for this paradox: the expected utility rule. The term utility refers to the psychological value that people assign to certain decision outcomes (Savage 1954; von Neumann and Morgenstern 1944). Utility differs from value in that utility may differ from one context to another for the same decision-maker and from one decision-maker to another; that is, the utility that people assign to certain outcomes is subjective and context dependent. Under the expected utility rule, people’s utility from playing the aforementioned coin-flipping game may differ from the game’s expected value. People’s marginal utility decreases as outcome value increases; that is, their utility function is concave. As a consequence, players may be unwilling to pay an infinite amount of money to play the game. People, however, not only assign subjective utility to outcome values, but they also assign subjective probabilities to achieving those outcomes. The theory of subjective expected utility accounts for this tendency as well (Savage 1954).
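
The gap between the two rules can be illustrated with a short sketch. It assumes the payoff schedule of the coin-flipping game (a prize of $2^n won with probability (1/2)^n) and, purely as an illustrative assumption, a logarithmic utility function in the spirit of Bernoulli’s proposal. The function names and the truncation of the game after a finite number of tosses are ours and are not part of the original argument.

```python
import math

def truncated_expected_value(max_rounds: int) -> float:
    """Expected value of the game if it is cut off after max_rounds tosses."""
    return sum((0.5 ** n) * (2 ** n) for n in range(1, max_rounds + 1))

def log_utility_certainty_equivalent(max_rounds: int) -> float:
    """Sure amount that has the same log utility as the truncated game.

    Assumes u(x) = ln(x) and ignores the player's initial wealth.
    """
    expected_utility = sum(
        (0.5 ** n) * math.log(2 ** n) for n in range(1, max_rounds + 1)
    )
    return math.exp(expected_utility)

for rounds in (10, 20, 40):
    ev = truncated_expected_value(rounds)
    ce = log_utility_certainty_equivalent(rounds)
    print(f"rounds={rounds}: expected value={ev:.1f}, certainty equivalent={ce:.4f}")
```

Under these assumptions the truncated expected value grows by one dollar with every additional toss, whereas the certainty equivalent levels off near $4, which is of the same order as the small amounts people report being willing to pay.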

Two Additional Paradoxes and Different Theories

The Allais Paradox

The theory of expected utility, however, also falls short of accurately depicting people’s decision-making in many cases. The Allais paradox is a classic example of how people’s actual decisions violate the expected utility rule (Allais 1953). Allais presented two different sets of gambles to people and found that the shift in their preferences between the two sets violated the expected utility rule’s notion of consistency. The Allais paradox reflects the expected utility rule’s failure to account for people’s preference for certainty, and alternative theories of risk have been offered to better capture this tendency.
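
A small sketch can make the consistency requirement concrete. The gambles below follow a commonly cited textbook version of Allais’s problem; the specific payoffs and probabilities are an assumption here, since this entry does not list them, and the candidate utility functions are arbitrary illustrations.

```python
import math

# Outcomes (in millions of dollars) mapped to probabilities.
# Textbook version of the Allais gambles; assumed, not taken from this entry.
GAMBLE_1A = {1: 1.00}
GAMBLE_1B = {5: 0.10, 1: 0.89, 0: 0.01}
GAMBLE_2A = {1: 0.11, 0: 0.89}
GAMBLE_2B = {5: 0.10, 0: 0.90}

def expected_utility(gamble, utility):
    """Probability-weighted sum of the utilities of a gamble's outcomes."""
    return sum(p * utility(x) for x, p in gamble.items())

def preference_gaps(utility):
    """Expected-utility advantage of A over B in each pair of gambles."""
    gap_1 = expected_utility(GAMBLE_1A, utility) - expected_utility(GAMBLE_1B, utility)
    gap_2 = expected_utility(GAMBLE_2A, utility) - expected_utility(GAMBLE_2B, utility)
    return gap_1, gap_2

# Arbitrary example utility functions, chosen only for illustration.
CANDIDATE_UTILITIES = {
    "linear": lambda x: x,
    "square root": math.sqrt,
    "log(1 + x)": math.log1p,
}

for name, u in CANDIDATE_UTILITIES.items():
    gap_1, gap_2 = preference_gaps(u)
    print(f"{name}: 1A - 1B = {gap_1:+.4f}, 2A - 2B = {gap_2:+.4f}")
```

For any utility function the two gaps are identical, because both reduce to 0.11·u(1) − 0.10·u(5) − 0.01·u(0). An expected utility maximizer who prefers 1A to 1B must therefore also prefer 2A to 2B, yet many people choose 1A in the first pair and 2B in the second.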

Rank-Dependent Utility Theory and Cumulative Prospect Theory

In reviewing a large number of studies, Weber (1994) pointed out that the assumption of independence between the probability and utility of an outcome, which is essential to expected utility, is often violated in practice. She discussed a class of non-expected utility models for choice under risk and uncertainty, called rank-dependent utility (RDU) models. These models, originally proposed by Quiggin (1982) and Yaari (1987), hold that people evaluate the probabilities of outcomes by first ranking those outcomes and then considering the cumulative probabilities of obtaining them. Quiggin’s (1982) anticipated utility theory extended expected utility theory partly by using a weaker form of the independence of irrelevant alternatives axiom. Quiggin’s theory is essentially a generalization of the expected utility theory set forth by von Neumann and Morgenstern (1944). Quiggin’s theory overweights unlikely extreme outcomes, which is analogous to people’s tendency to overweight low-probability events such as winning a lottery on the one hand and being in a plane accident on the other. Schmeidler’s (1989) extension of the expected utility model covers situations involving uncertainty to account for the Ellsberg paradox (discussed below).

As Weber (1994) noted, RDU models hold that decision weights can be nonlinear, and as such they can describe phenomena that were characterized earlier as optimistic and pessimistic behavior. Indeed, Kahneman and Tversky revised their notion of nonlinear decision weights in prospect theory (1979) into weights based on cumulative probabilities (Tversky and Kahneman 1992) in a way that appears to avoid the problem of violating stochastic dominance.
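
The rank-dependent idea can be sketched in a few lines. The code below ranks the outcomes of a gamble over gains from best to worst and derives each decision weight as a difference of transformed cumulative probabilities. The inverse-S weighting function and its parameter value of 0.61 are assumptions chosen for illustration (a form commonly associated with cumulative prospect theory), and the example lottery is hypothetical.

```python
def inverse_s_weight(p: float, gamma: float = 0.61) -> float:
    """Inverse-S probability weighting function (illustrative form and parameter)."""
    return p ** gamma / (p ** gamma + (1 - p) ** gamma) ** (1 / gamma)

def rank_dependent_weights(gamble, weight=inverse_s_weight):
    """Return (outcome, decision weight) pairs for a gamble over gains.

    gamble: list of (outcome, probability) pairs with probabilities summing to 1.
    Outcomes are ranked from best to worst. Each decision weight is the
    transformed probability of doing at least as well as that outcome minus
    the transformed probability of doing strictly better.
    """
    ranked = sorted(gamble, key=lambda pair: pair[0], reverse=True)
    weights = []
    cumulative = 0.0
    for outcome, prob in ranked:
        previous = weight(cumulative)
        cumulative = min(1.0, cumulative + prob)
        weights.append((outcome, weight(cumulative) - previous))
    return weights

# Hypothetical lottery: a 1% chance of a large prize.
lottery = [(0, 0.90), (10, 0.09), (1000, 0.01)]
for outcome, w in rank_dependent_weights(lottery):
    print(f"outcome={outcome}: decision weight={w:.4f}")
```

Because the weights are differences of a single increasing transformation of cumulative probabilities, they sum to one, and with this particular weighting function the 1% chance of the best outcome receives a decision weight well above 0.01.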

Extreme Events

People’s tendency to overweight unlikely extreme events has many implications. For instance, in finance, a premium for risk (i.e., fat tails) exists due to investors’ fear of disasters (Bollerslev and Todorov 2011a, b). Approximately 5% of the equity premium is thought to be attributable to compensation for rare disaster events. People, however, overweight the probability of rare events only when they are provided with a summary description of possible outcomes; when people make decisions based on their experience, they instead underweight the probability of rare events (Hertwig et al. 2004; Ungemach et al. 2009).

Lampel et al. (2009) argued that rare events have two elements: a probability estimate on the one hand and a non-probability element based on the enacted salience of such events on the other. The latter implies that, in addition to the probability component of rare events, people enact such events by focusing on their unique and unusual features in a constructionist manner that differs from the scientific way of assigning probabilities to them.

Recent research by Ülkümen et al. (2016) suggests that in conceiving of uncertain events, people distinguish between events that are knowable (like the length of the river Nile, which the decision-maker may not know) and those that are completely random (a toss of a fair coin) and that natural language provides us with cues about the way people conceive of these two elements. Extreme events pose a big problem for calculated probabilities of such events (i.e., fat tails), and focusing on the different elements in people’s conceptualization of such events may lead to a better understanding of how subjective estimates of probabilistic events are created.

The Ellsberg Paradox

So far we have addressed people’s decision-making under risk and have not considered their decision-making under uncertainty. Savage (1954) argued that all uncertainties can be reduced to risk. More specifically, under Savage’s theory of subjective expected utility, decision-makers are thought to behave similarly under uncertainty as they do under risk if their subjective assessment of the probabilities of the outcomes is the same in both cases. The Ellsberg (1961) paradox, however, illustrates that this may not be the case. Ellsberg showed that people’s decision-making under risk and uncertainty violates the assumptions of expected utility theory, and he asserted that such violation occurs because people are averse to ambiguity, which refers to a condition between complete ignorance and risk. Such aversion to ambiguity has also been shown empirically in the context of insurance companies’ decisions (Kunreuther et al. 1993) and has been shown to be moderated by the decision-maker’s confidence in his or her judgment and skill (Heath and Tversky 1991). Ambiguity aversion, however, is present only when a person is presented with both clear and vague prospects, and it disappears in a noncomparative context in which comparison between prospects is absent (Fox and Tversky 1995).
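
The conflict with subjective expected utility can be sketched with a two-urn version of Ellsberg’s thought experiment. The setup is an assumption for illustration, as this entry does not describe it: one urn is known to hold 50 red and 50 black balls, the other holds 100 red and black balls in unknown proportion, and the same prize is paid for drawing a named color. With an identical prize, preferring one bet to another amounts to assigning it a higher probability of winning, so the code simply searches for a subjective probability of red in the unknown urn that would justify preferring the known urn for bets on both colors.

```python
KNOWN_URN_RED = 0.5  # known urn: 50 red and 50 black balls (assumed setup)

def consistent_beliefs(step_count: int = 1000):
    """Beliefs about the unknown urn that rationalize both typical choices.

    Returns every grid value q for P(red in unknown urn) under which a bet on
    the known urn has the strictly higher winning probability for red AND for
    black.
    """
    beliefs = []
    for i in range(step_count + 1):
        q_red = i / step_count
        q_black = 1 - q_red
        prefers_known_for_red = KNOWN_URN_RED > q_red
        prefers_known_for_black = (1 - KNOWN_URN_RED) > q_black
        if prefers_known_for_red and prefers_known_for_black:
            beliefs.append(q_red)
    return beliefs

print(consistent_beliefs())  # prints []
```

No single probability can be below one half for red and for black at the same time, so the typical pattern of preferring the known urn for both colors cannot be reconciled with any additive subjective probability.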

Descriptive Approaches

It is difficult to model people’s decision-making under risk and uncertainty. Another stream of research instead describes how people actually make decisions. These researchers focus on how people perceive and interpret risk and uncertainty and on how dispositional characteristics and contextual factors influence their choices under risk and uncertainty. Some researchers attempt to establish attitude toward risk as a stable individual trait that is linked to personality or culture (Douglas and Wildavsky 1982), but there is a lack of consensus and consistency among studies that address the potential link between risk taking and dispositional characteristics (Slovic 1964). Factors other than dispositional characteristics have also been studied for their effect on risk taking. People’s risk preference has been found to hinge on the framing of the decision problem (Tversky and Kahneman 1981) and on people’s mood and feelings at the time of decision-making (Hastorf and Isen 1982; Loewenstein et al. 2001; Rottenstreich and Hsee 2001). Thaler and Johnson (1990) illustrated that prior outcomes affect people’s risk preference. More specifically, people become relatively more risk seeking after a prior gain, which the authors term the house-money effect. The reason is that immediately after a gain, people do not assimilate the gain into their own assets; they treat it as “house money” with which they can afford to be more risk seeking. After a prior loss, people also become more risk seeking if they get a chance to break even, like gamblers on horse races at the end of the day. The authors term this the break-even effect. These effects can be explained by shifts of the reference point or by introducing another reference point such as survival (March and Shapira 1992). Moreover, learning from experience is thought to make people more risk averse over time (March 1996).

Risk Measurement and Absolute Risk

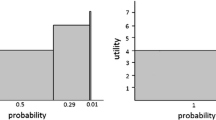

To better assess people’s risk preferences, we need a measurement of risk. Descriptive research on risk measurement has compared and contrasted verbal and numerical measures of risk. Erev and Cohen (1990) found that people are more comfortable expressing risk verbally, but they prefer to receive numerical information about risk. The interpretation of verbally represented outcome probabilities is consistent within a decision-maker but not between different decision-makers (Budescu and Wallsten 1985). Furthermore, when provided with numerical estimates of probabilities, people tend to invoke a rule-based decision-making process, whereas when given verbal measures of risk, people become more associative, and their decisions become more intuitive (Windschitl and Wells 1996). More closely related to the issue of risk measurement, Zimmer (1983) found that people use about five to six expressions to describe the entire range of probabilities. Beyth-Marom (1982) found a seven-category scale to be sufficient to capture risk. In sum, people use a small number of expressions to conceptualize risk; thus, risk measurement may require only a small number of scale categories to capture people’s risk preferences.

The normative perspective on decision-making under risk and uncertainty attempts to avoid the complications that arise from the individual characteristics of decision-makers. Researchers in this tradition have created riskiness measures that are independent of the decision-maker. Aumann and Serrano (2008) defined the riskiness of a gamble based on an individual who is indifferent between taking and not taking the gamble in question. More specifically, they measured risk by taking the reciprocal of the absolute risk aversion of that individual. Foster and Hart (2009), on the other hand, defined risk by identifying the critical wealth level of the decision-maker. When the decision-maker’s wealth falls below that critical level, a gamble is defined to be risky.
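
Both indices can be computed numerically for a simple gamble. The sketch below rests on assumptions not spelled out in this entry: the indifferent individual in the Aumann and Serrano index is taken to have constant absolute risk aversion, so that the index R solves E[exp(−g/R)] = 1, and the Foster and Hart critical wealth R is taken to solve E[log(1 + g/R)] = 0. The gamble, the function names, and the bisection brackets are hypothetical choices that suit this example; the brackets are not guaranteed to work for every gamble.

```python
import math

def bisect_root(f, low, high, iterations=200):
    """Root of f on [low, high], assuming f(low) and f(high) differ in sign."""
    f_low = f(low)
    for _ in range(iterations):
        mid = (low + high) / 2
        f_mid = f(mid)
        if (f_mid > 0) == (f_low > 0):
            low, f_low = mid, f_mid
        else:
            high = mid
    return (low + high) / 2

def max_loss(gamble):
    """Largest possible loss of a gamble given as (outcome, probability) pairs."""
    return -min(x for x, _ in gamble)

def aumann_serrano_riskiness(gamble):
    """R > 0 solving E[exp(-g / R)] = 1 (constant absolute risk aversion assumed)."""
    loss = max_loss(gamble)
    f = lambda r: sum(p * math.exp(-x / r) for x, p in gamble) - 1
    return bisect_root(f, loss / 50, loss * 1e6)

def foster_hart_riskiness(gamble):
    """Critical wealth R > max loss solving E[log(1 + g / R)] = 0."""
    loss = max_loss(gamble)
    f = lambda r: sum(p * math.log1p(x / r) for x, p in gamble)
    return bisect_root(f, loss * (1 + 1e-9), loss * 1e6)

# Hypothetical gamble: win 120 or lose 100 with equal probability.
GAMBLE = [(120, 0.5), (-100, 0.5)]
print("Aumann-Serrano index:", round(aumann_serrano_riskiness(GAMBLE), 2))
print("Foster-Hart critical wealth:", round(foster_hart_riskiness(GAMBLE), 2))
```

For this gamble the Foster and Hart equation can be solved by hand, since (1 + 120/R)(1 − 100/R) = 1 gives R = 600, which offers a check on the numerical routine. Neither index depends on the wealth or utility function of any particular decision-maker; the individual enters only as a benchmark.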

Neural Correlates

Some researchers have taken the descriptive approach to studying people’s decision-making under risk and uncertainty deeper, into the brain. Because the brain is where all decision-making processes take place, these researchers argue that it is important to study brain activity to understand decision-making (Camerer et al. 2005). These researchers investigate where risk attitude is located in the brain and how certain brain activity is related to people’s decision-making under risk and uncertainty. Risk-seeking deviations from optimal financial decisions activate a region of the brain that differs from the regions activated when risk-averse deviations from optimal choices are made (Kuhnen and Knutson 2005). Hsu et al. (2005) have also shown that a high level of ambiguity is associated with higher activation of the amygdala and the orbitofrontal cortex. Furthermore, the amygdala is also responsible for people’s general tendency to be averse to losses (De Martino et al. 2010).

Risk and Uncertainty in Strategy Research

Strategy research often borrows measurements of risk from other fields such as finance and decision theory. Studies that borrow risk measurement from the finance literature use β from the capital asset pricing model, while studies that draw on the decision theory literature construct variance measures of accounting returns such as ROA (Reuer and Leiblein 2000; Ruefli et al. 1999). Such measurements of risk are used to study the relationship between risk and firm strategy or firm performance. Uncertainty, on the other hand, has its own distinct definition in the strategy literature. The strategy literature defines a firm’s uncertainty as arising from conflict and interdependence with elements of its environment, and strategy research studies how firms reduce this type of uncertainty by managing their environmental conflict and interdependence (e.g., Hoffmann 2007; Martin et al. 2015).
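
The two families of risk measures can be sketched as follows. The code estimates β as the sample covariance between firm and market returns divided by the sample variance of market returns, and it computes the sample variance of a series of ROA figures. All numbers are hypothetical, and the studies cited above may use different estimation windows or variants of these measures.

```python
def mean(values):
    """Arithmetic mean of a sequence of numbers."""
    return sum(values) / len(values)

def sample_variance(values):
    """Unbiased sample variance (divides by n - 1)."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / (len(values) - 1)

def capm_beta(firm_returns, market_returns):
    """Beta as sample cov(firm, market) divided by sample var(market)."""
    mean_firm, mean_market = mean(firm_returns), mean(market_returns)
    covariance = sum(
        (f - mean_firm) * (m - mean_market)
        for f, m in zip(firm_returns, market_returns)
    ) / (len(market_returns) - 1)
    return covariance / sample_variance(market_returns)

# Hypothetical periodic returns and annual ROA figures.
market_returns = [0.02, -0.01, 0.03, 0.00, 0.01]
firm_returns = [0.04, -0.02, 0.06, 0.00, 0.02]
roa_series = [0.05, 0.07, 0.03, 0.06, 0.04]

print("beta:", round(capm_beta(firm_returns, market_returns), 4))
print("ROA variance:", round(sample_variance(roa_series), 6))
```

In this constructed example the firm’s returns are exactly twice the market’s, so the estimated β is 2, while the ROA variance summarizes the variability of accounting returns without reference to the market.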

Conclusion

There are many different approaches to studying people’s decision-making under risk and uncertainty. The normative and descriptive approaches are the two main perspectives, and they complement each other. Models of people’s choices under risk and uncertainty are becoming more intricate as we learn more about how people actually make such decisions. Simply put, risk can be considered the subset of uncertainty that is quantifiable or measurable, whereas uncertainty refers to ignorance about potential outcomes or their respective likelihoods of occurrence.

References

Allais, M. 1953. Le comportement de l’homme rationnel devant le risque: Critique des postulats et axiomes de l’école américaine. Econometrica 21 (4): 503–546.

Aumann, R.J., and R. Serrano. 2008. An economic index of riskiness. Journal of Political Economy 116 (5): 810–836.

Bernoulli, D. 1738. Specimen theoriae novae de mensura sortis. Commentarii Academiae Scientiarum Imperiales Petropolitanae 5: 175–192.

Beyth-Marom, R. 1982. How probable is probable? A numerical translation of verbal probability expressions. Journal of Forecasting 1 (3): 257–269. https://doi.org/10.1002/for.3980010305.

Bollerslev, T., and V. Todorov. 2011a. Tails, fears, and risk premia. Journal of Finance 66 (6): 2165–2211. https://doi.org/10.1111/j.1540-6261.2011.01695.x.

Bollerslev, T., and V. Todorov. 2011b. Estimation of jump tails. Econometrica 79 (6): 1727–1783.

Budescu, D.V., and T.S. Wallsten. 1985. Consistency in interpretation of probabilistic phrases. Organizational Behavior and Human Decision Processes 36 (3): 391–405. https://doi.org/10.1016/0749-5978(85)90007-X.

Camerer, C.F., G.F. Loewenstein, and D. Prelec. 2005. Neuroeconomics: How neuroscience can inform economics. Journal of Economic Literature 43 (1): 9–64.

De Martino, B., C.F. Camerer, and R. Adolphs. 2010. Amygdala damage eliminates monetary loss aversion. Proceedings of the National Academy of Sciences of the United States of America 107 (8): 3788–3792. https://doi.org/10.1073/pnas.0910230107.

Douglas, M., and A. Wildavsky. 1982. How can we know the risks we face? Why risk selection is a social process. Risk Analysis 2 (2): 49–58. https://doi.org/10.1111/j.1539-6924.1982.tb01365.x.

Ellsberg, D. 1961. Risk, ambiguity, and the Savage axioms. Quarterly Journal of Economics 75 (4): 643–669.

Erev, I., and B.L. Cohen. 1990. Verbal versus numerical probabilities: Efficiency, biases, and the preference paradox. Organizational Behavior and Human Decision Processes 45 (1): 1–18. https://doi.org/10.1016/0749-5978(90)90002-Q.

Fischhoff, B., S.R. Watson, and C. Hope. 1984. Defining risk. Policy Sciences 17 (2): 123–139. https://doi.org/10.1007/BF00146924.

Foster, D.P., and S. Hart. 2009. An operational measure of riskiness. Journal of Political Economy 117 (5): 785–814.

Fox, C.R., and A. Tversky. 1995. Ambiguity aversion and comparative ignorance. Quarterly Journal of Economics 110 (3): 585–603.

Hastorf, A., and A.M. Isen, eds. 1982. Cognitive social psychology. New York: Elsevier.

Heath, C., and A. Tversky. 1991. Preference and belief: Ambiguity and competence in choice under uncertainty. Journal of Risk and Uncertainty 4 (1): 5–28. https://doi.org/10.1007/BF00057884.

Hertwig, R., G. Barron, E.U. Weber, and I. Erev. 2004. Decisions from experience and the effect of rare events. Psychological Science 15 (8): 534–539.

Hoffmann, W.H. 2007. Strategies for managing a portfolio of alliances. Strategic Management Journal 28 (8): 827–856.

Hsu, M., M. Bhatt, R. Adolphs, D. Tranel, and C.F. Camerer. 2005. Neural systems responding to degrees of uncertainty in human decision-making. Science 310 (5754): 1680–1683. https://doi.org/10.1126/science.1115327.

Kahneman, D., and A. Tversky. 1979. Prospect theory: An analysis of decision under risk. Econometrica 47 (2): 263–292.

Knight, F.H. 1921. Risk, uncertainty, and profit. New York: Hart, Schaffner & Marx.

Kuhnen, C.M., and B. Knutson. 2005. The neural basis of financial risk taking. Neuron 47 (5): 763–770. https://doi.org/10.1016/j.neuron.2005.08.008.

Kunreuther, H., R.M. Hogarth, and J. Meszaros. 1993. Insurer ambiguity and market failure. Journal of Risk and Uncertainty 7 (1): 71–87. https://doi.org/10.1007/BF01065315.

Lampel, J., J. Shamsie, and Z. Shapira. 2009. Experiencing the improbable: Rare events and organizational learning. Organization Science 20 (5): 835–845. https://doi.org/10.1287/orsc.1090.0479.

Loewenstein, G.F., C.K. Hsee, E.U. Weber, and N. Welch. 2001. Risk as feelings. Psychological Bulletin 127 (2): 267–286.

Luce, R.D., and H. Raiffa. 1957. Games and decisions: Introduction and critical survey. New York: Wiley.

March, J.G. 1978. Bounded rationality, ambiguity, and the engineering of choice. Bell Journal of Economics 9 (2): 587–608.

March, J.G. 1996. Learning to be risk averse. Psychological Review 103 (2): 309–319. https://doi.org/10.1037/0033-295X.103.2.309.

March, J.G., and Z. Shapira. 1992. Variable risk preferences and the focus of attention. Psychological Review 99 (1): 172–183.

Martin, G., R. Gözübüyük, and M. Becerra. 2015. Interlocks and firm performance: The role of uncertainty in the directorate interlock-performance relationship. Strategic Management Journal 36 (2): 235–253. https://doi.org/10.1002/smj.2216.

Quiggin, J. 1982. A theory of anticipated utility. Journal of Economic Behavior and Organization 3 (4): 323–343. https://doi.org/10.1016/0167-2681(82)90008-7.

Raiffa, H. 1968. Decision analysis: Introductory lectures on choices under uncertainty. Oxford: Addison-Wesley.

Reuer, J.J., and M.J. Leiblein. 2000. Downside risk implications of multinationality and international joint ventures. Academy of Management Journal 43 (2): 203–214. https://doi.org/10.2307/1556377.

Rottenstreich, Y., and C.K. Hsee. 2001. Money, kisses, and electric shocks: On the affective psychology of risk. Psychological Science 12 (3): 185–190. https://doi.org/10.1111/1467-9280.00334.

Ruefli, T.W., J.M. Collins, and J.R. Lacugna. 1999. Risk measures in strategic management research: Auld lang syne? Strategic Management Journal 20 (2): 167–194.

Savage, L.J. 1954. The foundations of statistics. New York: Wiley.

Schmeidler, D. 1989. Subjective probability and expected utility without additivity. Econometrica 57 (3): 571–587.

Slovic, P. 1964. Assessment of risk taking behavior. Psychological Bulletin 61 (3): 220–233.

Thaler, R.H., and E.J. Johnson. 1990. Gambling with the house money and trying to break even: The effects of prior outcomes on risky choice. Management Science 36 (6): 643–660. https://doi.org/10.1287/mnsc.36.6.643.

Tversky, A., and D. Kahneman. 1981. The framing of decisions and the psychology of choice. Science 211 (4481): 453–458. https://doi.org/10.1126/science.7455683.

Tversky, A., and D. Kahneman. 1992. Advances in prospect theory: Cumulative representation of uncertainty. Journal of Risk and Uncertainty 5 (4): 297–323. https://doi.org/10.1007/BF00122574.

Ülkümen, G., C.R. Fox, and B.F. Malle. 2016. Two dimensions of subjective uncertainty: Clues from natural language. Journal of Experimental Psychology: General 145 (10): 1280–1297. https://doi.org/10.1037/xge0000202.

Ungemach, C., N. Chater, and N. Stewart. 2009. Are probabilities overweighted or underweighted when rare outcomes are experienced (rarely)? Psychological Science 20 (4): 473–479. https://doi.org/10.1111/j.1467-9280.2009.02319.x.

von Neumann, J., and O. Morgenstern. 1944. Theory of games and economic behavior. Princeton: Princeton University Press.

Weber, E.U. 1994. From subjective probabilities to decision weights: The effect of asymmetric loss functions on the evaluation of uncertain outcomes and events. Psychological Bulletin 115 (2): 228–242. https://doi.org/10.1037/0033-2909.115.2.228.

Windschitl, P.D., and G.L. Wells. 1996. Measuring psychological uncertainty: Verbal versus numeric methods. Journal of Experimental Psychology: Applied 2 (4): 343–364. https://doi.org/10.1037/1076-898X.2.4.343.

Yaari, M.E. 1987. The dual theory of choice under risk. Econometrica 55 (1): 95–115. https://doi.org/10.2307/1911158.

Zimmer, A.C. 1983. Verbal versus numerical processing of subjective probabilities. In Decision making under uncertainty, ed. R.W. Scholz, 159–182. Amsterdam: North-Holland.

Copyright information

© 2018 Macmillan Publishers Ltd., part of Springer Nature

About this entry

Cite this entry

Park, K.F., Shapira, Z. (2018). Risk and Uncertainty. In: Augier, M., Teece, D.J. (eds) The Palgrave Encyclopedia of Strategic Management. Palgrave Macmillan, London. https://doi.org/10.1057/978-1-137-00772-8_250

DOI: https://doi.org/10.1057/978-1-137-00772-8_250

Publisher Name: Palgrave Macmillan, London

Print ISBN: 978-0-230-53721-7

Online ISBN: 978-1-137-00772-8

eBook Packages: Business and Management; Reference Module Humanities and Social Sciences; Reference Module Business, Economics and Social Sciences