Abstract

Patent transfer is a common practice for companies to obtain competitive advantages. However, they encounter the difficulty of selecting suitable patents because the number of patents is increasingly large. Many patent recommendation methods have been proposed to ease the difficulty, but they ignore patent quality and cannot explain why certain patents are recommended. Patent quality and recommendation explanations affect companies’ decision-making in the patent transfer context. Failing to consider them in the recommendation process leads to less effective recommendation results. To fill these gaps, this paper proposes an interpretable patent recommendation method based on knowledge graph and deep learning. The proposed method organizes heterogeneous patent information as a knowledge graph. Then it extracts connectivity and quality features from the knowledge graph for pairs of patents and companies. The former features indicate the relevance of the pairs while the latter features reflect the quality of the patents. Based on the features, we design an interpretable recommendation model by combining a deep neural network with a relevance propagation technique. We conduct experiments with real-world data to evaluate the proposed method. Recommendation lists with varying lengths show that the average precision, recall, and mean average precision of the proposed method are 0.596, 0.636, and 0.584, which improve corresponding performance of best baselines by 7.28%, 18.35%, and 8.60%, respectively. Besides, our method interprets recommendation results by identifying important features leading to the results.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

Patent transfer refers to the movement of patent rights from one party to another. With the advent of open innovation, patent transfer has become a common practice for companies to obtain competitive advantages. Both patent owners and demanders have incentives to transfer patents. For the former, patent transfer provides monetary benefits (e.g., royalty payment) and strategic benefits (e.g., strengthening companies’ product market and technological positions)1. For the latter, patent transfer reduces the risks and costs associated with technological innovations, provides industry standards and advanced technologies, and facilitates technological learning2. Previous research has shown that patent transfer has significant impacts not only on companies’ performance but also on national economies2,3. For example, Han and Lee4 demonstrated that patent transfer significantly increases the market value of firms in Korea. Kwon5 found that firms’ patent transfer makes their rivals deter innovative activities. Roessner et al.6 combined university licensing data of the United States (US) from 1996 to 2010 with economic input–output models and found that the contribution of university licensing to the US economy during that period was at least 162.1 billion dollars.

Due to the importance of patent transfer, online patent platforms have been developed to facilitate the transfer process7,8. However, patent demanders still face an information overload problem as the number of patents is getting large. The number of yearly granted patents has exceeded 0.5 million since 2005 and reached 1.5 million in 2019 as recorded by the World Intellectual Property Organization (https://www.wipo.int/publications/en/details.jsp?id=4526&plang = EN). Consequently, the cost of searching for suitable patents is high. Many patent recommendation methods have been developed to suggest suitable patents to users9,10,11,12,13. However, previous methods fail to consider patent quality and explain recommendation results. The quality of patents reflects their value and is essential to firms. Previous studies show that patent quality is positively correlated to firms’ stock returns14, high-quality patents can protect firms from patent trolls and product infringement15, and the uncertainty of patent quality may cost enterprises billions16. Interpretability of the recommendation results also matters. Patents have complex information and cost companies much to select a suitable one. The interpretability increases information transparency and thus promotes persuasiveness and acceptance of recommender systems17. Besides, interpretable recommendations benefit users and platforms because of the improved user-friendliness18. Therefore, providing interpretable recommendations is necessary to improve companies’ decision-making in selecting suitable patents.

To bridge these gaps, this research proposes an interpretable patent recommendation method to facilitate companies to select suitable patents. The proposed method organizes heterogeneous patent information as a knowledge graph, a graph-structured knowledge base that enables efficient integration and semantic interpretation of heterogeneous information19. It then extracts two types of features from the knowledge graph, i.e., connectivity features and quality features that indicate the relevance of company-patent pairs and patent quality. Last, the proposed method builds an interpretable deep learning model for recommending patents and providing explanations to companies. Deep learning is popular in personalized recommendations for its superior performance, but it lacks interpretability. Therefore, this research develops an interpretable deep learning model by combining a deep neural network (DNN) with a layer-wise relevance propagation technique (LRP)20. DNN predicts the probabilities that target companies will select candidate patents while LRP generates interpretations by identifying the features that contribute the most to the predictions. The proposed method is evaluated with data obtained from the United States Patent and Trademark Office (USPTO, https://www.uspto.gov/learning-and-resources/electronic-data-products/patent-assignment-dataset) and PatentsView (http://www.patentsview.org/download/) databases. The evaluation results show that the proposed method not only outperforms the state-of-the-art patent recommendation methods in terms of the precision, recall, and mean average precision (MAP) measures but also provides valid interpretations.

The rest of this paper is organized as follows. Section "Related work" reviews studies on patent recommendations and patent quality analysis. Section "The proposed patent recommendation method" presents the proposed knowledge graph-based method for interpretable patent recommendations. Section "Experimental evaluation" introduces the details of the experimental evaluation. Section "Results and discussions" presents and discusses the evaluation results. Section "Conclusions" concludes this research with major contributions and possible future works.

Related work

Patent recommendations

Recommendation methods have been widely used to solve information overload problems in different contexts by proactively delivering personalized recommendations21. In the patent domain, recommendation methods have also been proposed to help users to find suitable patents. Previous patent recommendation methods differ mainly in application contexts, the information involved in the recommendations, and the techniques applied for the recommendations. The application contexts mainly contain query-driven patent search and patent transfer contexts8. The former provides specific user needs like keywords or patent documents while the latter requires the mining of company needs9,22. Patents have heterogeneous information that can be used for recommendation, including texts (e.g., patent titles and abstracts), categories (e.g., the International Patent Classification), interactions (e.g., patent searching and assignment behavior of users), citations, and inventors of patents23. Besides, the applied techniques of patent recommendations can be divided into content-based filtering (CB), collaborative filtering (CF), and hybrid methods12,24. Table 1 summarizes major patent recommendation studies based on the above aspects.

Several conclusions and research gaps can be identified from Table 1. First, previous studies mainly focus on the query-driven patent search context and pay less attention to the patent transfer context. In the former context, users provide explicit needs and care little about patent quality. For example, patent inventors provide key terms to search relevant prior arts before applying patents28. Patent examiners use patent applications to search missing references and examine the novelty of the applications23. Patent users identify similar patents for a given target patent9. Only several methods have been proposed for the patent transfer context and most of them were proposed recently. This situation indicates the increasing importance of patent transfer and calls for greater attention to improving patent transfer.

Second, previous studies have used various patent information but ignored patent quality. As shown in Table 1, previous studies on patent recommendations have different types of patent information, including texts, categories, citations, inventors, and interactions. However, they failed to consider patent quality, which is important to companies’ value and competitiveness14,15. Ignoring the quality of acquired patents can impede business and result in costly lawsuits16. Therefore, incorporating patent quality is imperative to recommending suitable patents to companies.

Third, CB and hybrid methods gain more popularity than CF methods in patent recommendation. CF methods recommend patents based on like-minded users. For example, Trappey et al.28 used a user-based CF method to identify users with similar patent searching behaviors and recommend patents that are liked by similar users. CF methods are less suitable in the technology transfer context because most patents are transferred only once to only one company. Consequently, CF methods suffer from the data sparsity problem in the technology transfer context. CB methods recommend patents that are similar to the patents liked by target users. For example, Krestel and Smyth29 used a latent Dirichlet allocation model to recommend patents with topics similar to a query patent. Chen and Chiu25 developed an IPC-based vector space model to find similar patents for a given patent. CB methods are suitable for the query-driven context because it mainly requires the matching between patents and queries. However, CB methods have difficulty in involving heterogeneous information and face an over-specialization problem, i.e., they recommend only patents with features previously liked by target users. Hybrid methods combine different methods to overcome their disadvantages. One frequently used hybrid mechanism is to learn a unified model based on the collaborative and content information. For example, Mahdabi and Crestani31 developed a citation query model which combines information including patent text, citations, categories, and inventors. Hybrid methods are popular in patent recommendation because of their advantages in overcoming the drawbacks of CF and CB methods. Consequently, this study proposes a hybrid method that incorporates heterogeneous patent information for patent recommendation.

Last, previous studies ignore the interpretability of patent recommendations. Given the importance of interpretability to companies’ decision-making17,18, there is a need for endowing recommendation results with interpretability. Therefore, this study develops an interpretable patent recommendation method, which can identify the features that contribute the most to each recommendation. Based on the identified features, we can explain why a specific patent is recommended to a specific company.

Patent quality analysis

Patent quality analysis focuses on identifying potential indicators of patent quality. Multiple indicators of patent quality have been proposed34. Among the proposed indicators, we summarize eight frequently used indicators, i.e., the number of forward citations, the number of backward citations, the number of patent claims, patent scope, previous transfer, patent family size, patent generality, and patent originality.

Forward citations are the citations that patents receive. A higher number of forward citations indicates a greater value of current inventions and their importance for subsequent technologies35,36. A patent’s backward citations are the references the patent has. More references indicate the more developed a technological field is and more incrementally the patent contributes to the field. Previous studies36,37 have consistently shown that there is a positive correlation between the number of backward citations and patent quality. A patent’s claims delineate the property rights protected by the patent and a larger number of patent claims indicates higher patent quality38. Patent scope is defined as the number of distinct patent classes to which patents are allocated. The broader the patent scope, the more attractive the patents’ exclusive rights, and hence the higher quality of the patents38. Previous transfer refers to the number of times a patent has been previously traded. It indicates the patent’s value as recognized by the technological market39. Patent family size is the number of countries in which a patented invention is protected. A larger patent family size indicates a higher quality of patents because the patented technologies are protected in a broader geographical scope40. Patent generality measures the generality of a patent’s impact. A patent has a general impact if it is cited by other patents that belong to a wide range of technological fields40,41. Patent originality measures the originality of a patent. A patent is believed to be more original if it relies on diverse knowledge sources (i.e., its backward citations belong to a wide range of technological fields)40,41. According to previous research, this study uses these eight indicators to measure patent quality.

The proposed patent recommendation method

Overview of the proposed method

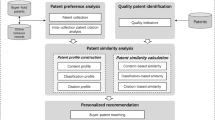

Figure 1 shows the framework of the proposed patent recommendation method. In the proposed method, we organize heterogeneous patent information as a patent knowledge graph that stores the information in the form of semantic relations between entities. The proposed method comprises three modules, namely, knowledge graph construction, feature extraction, and patent recommendation. The first module extracts patent and company information to construct a knowledge graph. The second module extracts, from the knowledge graph, connectivity and quality features that indicate the relevance between companies and patents and the quality of patents, respectively. Given the extracted features, the third module develops an interpretable patent recommendation model to recommend suitable patents and to provide interpretations to target companies. The following subsections present the details of these three modules.

Knowledge graph construction

Concepts and definitions

Definition 1 (Knowledge Graph). A knowledge graph is a graph-structured knowledge base denoted as \(\mathcal{G}=\left\{\left(h,r,t\right)\right\}\subseteq \mathcal{E}\times \mathcal{R}\times \mathcal{E}\), where \(\mathcal{E}\) is a set of entities, \(\mathcal{R}\) is a set of relations, \(h,t\in \mathcal{E}\), and \(r\in \mathcal{R}\). A triple \(\left(h,r,t\right)\) represents the fact that there is a relation \(r\) between entities \(h\) and \(t\). For example, \(\left(paten{t}_{i},has term,ter{m}_{j}\right)\) indicates the fact that \(paten{t}_{i}\) contains \(ter{m}_{j}\).

Definition 2 (Meta Path). A meta path is a type of composite relations between two types of entities. It is denoted as \(P = \left( {H,r_{1} ,E_{1} ,r_{2} , \ldots ,r_{l} ,T} \right)\), where \(H\) and \(T\) are the types of head and tail entities respectively, \({r}_{l}\in \mathcal{R}\) is a relation, \({E}_{1}\) is the type of entities reached through \({r}_{1}\), and \(l\) denotes the length of the meta path. Meta paths capture the semantic connections between entities and can be used to measure relevance between entities. For example, (Patent, has_term, Term, has_term, Patent, is_transfered_to, Company) is a meta path between patents and companies. The meta path indicates that the head patents are relevant to the companies because the head patents have the same terms as the patents transferred to the companies. A meta path can contain multiple concrete paths. For example, (patent1, has_term, term1, has_term, patent2, is_transfered_to, company1) and (patent1, has_term, term2, has_term, patent2, is_transfered_to, company1) are two concrete paths that belong to the above meta path.

Knowledge graph

A domain-specific knowledge graph is constructed for patent recommendation in the current context. The types of facts in the knowledge graph are shown in Fig. 2. Specifically, the knowledge graph comprises seven types of entities (i.e., patents, companies, inventors, terms, categories, countries, and claims) and eight types of relations (i.e., \({patent}_{i}\) cites \({patent}_{j}\), \({patent}_{i}\) is invented by \({inventor}_{j}\), \({patent}_{i}\) is transferred to \({company}_{j}\), \({patent}_{i}\) has term \({term}_{j}\), \({patent}_{i}\) has category \({category}_{j}\), \({patent}_{i}\) is protected in \({country}_{j}\), and \({patent}_{i}\) has claim \({claim}_{j}\)).

The knowledge graph is constructed by extracting the above types of entities and relations from patent databases. For example, we can extract, from Google’s patent database, two patents “5,208,847” and “4,893,327”, a company “Rockstar Bidco”, an inventor “Daniel L. Allen”, two terms “cellular” and “network”, two categories “H04W16/00” and “H04W8/26”, a country “the US”, and a claim “A method for providing an overlay cellular network”. When extracting terms from patents, text-processing techniques developed by Stanford42 are used to converse cases, remove stop words, standardize derivate words, etc. We can further extract relations between these entities, i.e., “5,208,847” cites “4,893,327”, “5,208,847” is transferred to “Rockstar Bidco”, “5,208,847” is invented by “Daniel L. Allen”, “5,208,847” has terms “cellular” and “network”, “5,208,847” has categories “H04W16/00” and “H04W8/26”, “5,208,847” is protected in “the US”, and “5,208,847” has a claim “A method for providing an overlay cellular network”. The knowledge graph is an accumulative knowledge base and can include more facts to be extracted.

Patent data except terms are structured and can be imported from public databases (e.g., the USPTO and PatentsView databases) to the knowledge graph. For patent terms, this study uses the rapid automatic keyword extraction (RAKE) method43 to extract three representative terms from the titles and abstracts of patents. RAKE is an unsupervised and domain-independent method for term extraction. It has higher accuracy and efficiency than other term extraction methods and thus is selected in this study.

Feature extraction

Connectivity features

Connectivity features are the connections between patents and companies and indicate their relevance based on various connecting entities. For example, a path (patent1, cites, patent2, is_transfered_to, company1) indicates that patent1 is relevant to company1 because it cites a patent transferred to the company. We define meta paths that connect companies to patents as the connectivity features. Meta paths involving claims are not considered because patents have unique claims, meaning that these meta paths have no concrete paths connecting any patent to any company. Besides, this study considers only meta paths within a certain length (e.g., 3) because long meta paths provide trivial information while causing high computational costs44. Therefore, from the constructed knowledge graph, this study extracts six connectivity features, which are presented along with their descriptions in Table 2.

The connectivity features need to be quantified before being used for patent recommendations. Given a patent-company pair \(\left({patent}_{i},{company}_{j}\right)\), let \(\#{path}_{ij}\left({P}_{k}\right)\) be the number of concrete paths that connect \({patent}_{i}\) to \({company}_{j}\) and belong to connectivity feature \({P}_{k}\). Then, this study quantifies the feature between \({patent}_{i}\) and \({company}_{j}\) as follows:

where \({Pat}_{j}\) is a set of candidate patents for \({company}_{j}\), \({max}_{r\in {Pat}_{j}}\left(\#{path}_{rj}\left({P}_{k}\right)\right)\) and \({min}_{r\in {Pat}_{j}}\left(\#{path}_{rj}\left({P}_{k}\right)\right)\) are the maximum and minimum numbers of concrete paths that belong to \({P}_{k}\) and connect any \({patent}_{r}\) in \(Pat\) to \({company}_{j}\), and \({x}_{ij}\left({P}_{k}\right)\) equals 0 if \({max}_{r\in Pat}\left(\#{path}_{rj}\left({P}_{k}\right)\right)={min}_{r\in {Pat}_{j}}\left(\#{path}_{rj}\left({P}_{k}\right)\right)\). \({x}_{ij}\left({P}_{k}\right)\) reflects the connection strength between \({patent}_{i}\) and \({company}_{j}\) through \({P}_{k}\) considering all candidate patents that can connect \({company}_{j}\) via \({P}_{k}\).

Quality features

The quality features indicate the quality of patents. As discussed in Section "Patent quality analysis", eight quality indicators are used to quantify patent quality and are mathematically defined as follows.

First, the number of forward citations (NFC). It suffers from the anti-recency problem that older patents have longer periods to accumulate forward citations than newer patents. To address the problem, we consider the publication time of patents and define NFC as follows:

where \(Patent\_set\) is the set of patents in the knowledge graph, \(\#\left({patent}_{j},cites,{patent}_{i}\right)\) equals 1 if \(\left({patent}_{j},cites,{patent}_{i}\right)\) exists in the knowledge graph and 0 otherwise, \({\sum }_{j=1}^{\left|Patent\right|}\#\left({patent}_{j},cites,{patent}_{i}\right)\) counts the number of patents that cite \({patent}_{i}\), and \(y\) is the number of years since the publication of \({patent}_{i}\).

Second, the number of backward citations (NBC). It is defined as follows:

where \({\sum }_{j=1}^{\left|Patent\_set\right|}\#\left({patent}_{i},cites,{patent}_{j}\right)\) counts the number of patents cited by \({patent}_{i}\).

Third, the number of claims (NCL). It is defined as follows:

where \(Claim\_set\) is the set of claims in the knowledge graph and \({\sum }_{j=1}^{\left|Claim\_set\right|}\#\left({patent}_{i},has\_claim,{claim}_{j}\right)\) counts the number of claims contained by \({patent}_{i}\).

Fourth, patent scope (PS). It is defined as follows:

where \(Category\_set\) is the set of categories in the knowledge graph and \({\sum }_{j=1}^{\left|Category\_set\right|}\#\left({patent}_{i},has\_category,{category}_{j}\right)\) counts the number of categories owned by \({patent}_{i}\).

Fifth, previous transfers (PT). It is defined as follows:

where \(Company\_set\) is the set of companies in the knowledge graph and \({\sum }_{j=1}^{\left|Company\_set\right|}\#\left({patent}_{i},is\_transferred\_to,{company}_{j}\right)\) counts the number of companies to which \({patent}_{i}\) has been transferred.

Sixth, patent family size (PFS). It is defined as follows:

where \(Country\_set\) is the set of countries in the knowledge graph and \({\sum }_{j=1}^{\left|Country\_set\right|}\#\left({patent}_{i},is\_protected\_in,{country}_{j}\right)\) counts the number of companies in which \({patent}_{i}\) is protected.

Seventh, patent generality (PG). Eighth, patent originality (PO). These two indicators are defined as follows:

where \({Patent\_set}_{i,f}\) (\({Patent\_set}_{i,b}\)) denotes the set of forward (backward) citations of \({patent}_{i}\), \(\left\{category|\#\left({patent}_{m},ha{s}_{category},category\right)=1,{patent}_{m}\in {Patent\_set}_{i,f}\right\}\) is the set of unique categories to which these forward citations belong, and \(\left|set\right|\) is the number of items in the \(set\). \({PG}_{{patent}_{i}}\) (\({PO}_{{patent}_{i}}\)) counts the average number of unique categories to which these forward (backward) citations belong.

Patent recommendation

In the patent recommendation module, we design an interpretable recommendation model based on DNN and LRP techniques. Given a set of patent-company pairs, the study transmits their connectivity and quality features to a basic DNN, a feed-forward neural network with multiple hidden layers. The DNN maps the features non-linearly from its input layer to a sequence of hidden layers and, at last, its output layer, which produces the probability that a specific company will select a specific patent. Let \({a}^{0}\) be the input vector of the input layer of an L-layer DNN and \({a}^{l}\) (\(l=1, 2, 3, \dots , L\)) be the output vector of its l-th layer (the output of the (l-1)-th layer is the input of the l-th layer). Then, the DNN is defined as follows:

where \({\phi }^{l}\left(\right)\) represents an activation function that performs a nonlinear mapping from the (l-1)-th layer to the l-th layer, \({\omega }^{l}\) is a weight matrix for the mapping, and \({b}^{l}\) is a bias vector for the l-th layer. For hidden layers, we use the rectified linear unit (ReLU) as the activation function because it overcomes gradient vanish. The activation function of the output layer is Sigmoid because it constrains the output value within the range of (0, 1). After training the DNN, we use it to estimate the probabilities that target companies will select candidate patents and thus generate recommendation lists for the target companies.

The DNN is a black-box model, which fails to explain why certain patents are recommended to a target company. To empower the DNN with interpretability, we combine it with the LRP technique, which infers the relevance of input features to the output value of the DNN. The core mechanism of LRP is that it assigns relevance scores to the input features by tracing their contributions, layer by layer, back to the output value. Consequently, we can interpret recommendation results by describing which pieces of information dominate in generating each recommendation. The relevance of each input feature is inferred as follows:

where \({r}_{j}^{l}\) is the relevance score of neuron \(j\) of layer \(l\), \({a}_{i,j}^{l-1}\) is the contribution made by neuron \(i\) of layer \((l-1)\) to neuron \(j\) of layer \(l\) in the forward propagation, and \({\sum }_{k}{a}_{k,j}^{l-1}\) is the total contribution made by all neurons of layer \((l-1)\) to neuron \(j\) of layer \(l\) in the forward propagation. After obtaining the relevance scores of the input features for each recommendation, we can visualize them for better interpretation.

Experimental evaluation

Experimental setup

Experiments are conducted on a computer with a Windows 11 operating system and an AMD Ryzen 5 PRO 4650U @ 2.10 GHz CPU. We implement codes using Python, TensorFlow, and other tools or packages like NumPy, Keras, Pandas, etc. The model optimizer, learning rate, and batch size are set to the Adam optimizer, 0.001, and 128, respectively. Besides, we set the dropout rate of turning off neurons to 0.2 to avoid overfitting.

We evaluate the proposed patent recommendation method with real-world data from the USPTO and PatentsView databases. The former contains information of patent transactions, namely, which patents have been assigned to which patent assignees. The latter includes details of patents, such as their titles, abstracts, categories, inventors, and references. The two share the same identities of patents and thus can be merged based on the identities. To evaluate the proposed method, we randomly select 500 companies that have at least 50 records of patent assignments and collect all patents owned by the companies from the USPTO database. Then, we collect patent information from the PatentsView database, namely, inventors, claims, categories, references, terms, and protected countries. The collected information constitutes a knowledge graph for the selected companies and its statistics are reported in Table 3.

For each selected company, we consider the first 50% of patents it purchased as known information in the knowledge graph and keep the rest unknown for model training or testing. This study randomly chooses 70% of the selected companies, along with their hypothetically unknown patents, as training data and the rest as test data.

Baseline methods

To evaluate the effectiveness of the proposed method (denoted as Ours), we compare it with the following baselines.

DNN+C a deep neural network with the connectivity features, which indicate the relevance between patents and companies. This method evaluates the role of the relevance in recommending suitable patents without considering their quality.

DNN+Q a deep neural network with the quality features, which reflect the quality of patents. The baseline evaluates the role of patent quality in recommending patents to companies without considering their relevance to the companies.

CF a collaborative filtering method that uses a user-item matrix to identify the nearest neighbors of a target user and leverages their preferences to infer the preference of the target user.

CB a content-based filtering method that represents patents and companies as vectors of terms and identifies relevant patents for target companies by calculating the cosine similarities of their vectors.

SVM a support vector machine model with the connectivity and quality features.

RF a random forest model with the connectivity and quality features.

HIN a patent recommendation method that leverages meta paths in knowledge graph for inferring the relevance between companies and patents8.

RippleNet a state-of-the-art recommendation method that leverages knowledge graph and deep learning for recommendations45.

Comparing the proposed method with the first two baselines reflects whether combing the connectivity and quality features outperforms using only one type of features. The remaining baselines are compared to evaluate whether the proposed method has superior performance in recommending patents to companies.

Evaluation metrics

This study selects three common metrics to evaluate the recommendation performance, namely, precision, recall, and MAP. Given a target company, precision shows the percentage of recommended patents that were transferred to the company. Recall represents the ratio of correctly recommended patents to the patents that were transferred to the company. Precision and recall fail to consider the rankings of correctly recommended patents in recommendation lists. MAP overcomes the drawback by considering both the precision and the rankings of recommended patents. A high MAP means that many suitable patents are recommended and they rank high on recommendation lists. We use \(RS\) to denote the set of patents recommended to the target company, \(TS\) the set of patents that were transferred to the target company, and \(RS\cap TS\) the set of patents in both \(RS\) and \(TS\). Then, we define the above metrics as follows:

where @k means the top k patents recommended to companies, \(\left|RS\right|\) and \(\left|TS\right|\) represent the number of patents in corresponding sets, and \(rel\left(i\right)\) equals 1 if the \(i\)-th patent of \(RS\) is in \(TS\) and 0 otherwise.

Results and discussions

Recommendation results

To evaluate the effectiveness of the proposed method, we compare its recommendation performance with the baseline methods. We vary the number of recommended patents from 10 to 50 and present recommendation results in Table 4. The table uses bold numbers to represent the best performance of corresponding metrics and numbers in brackets to indicate the improvement of the proposed method compared to the best baseline method.

Several conclusions can be drawn from the above results. First, the proposed method outperforms the baseline methods in terms of precision, recall, and MAP. This demonstrates the effectiveness and the necessity of considering patent quality in recommending patents to companies. Second, the MAP improvements are higher than the improvements in precision. This means that considering patent quality not only helps to find more patents that are relevant to the companies but also helps to improve the rankings of relevant patents in the recommendation lists. Third, the CF method performs poorly for the patent recommendation. This result meets our expectations because most patents have been transferred to only one company. Hence, the CF method suffers from the data sparsity problem in this recommendation context. Fourth, CF and CB methods perform much worse than the other methods. One likely reason is that only a small part of patent information is involved in the two methods. Fifth, our method outperforms SVM and RF even though they use the same information. This indicates that the deep learning model can better capture companies’ preferences than the traditional machine learning models in the current context.

Interpretability analysis

We conduct qualitative analysis to evaluate the interpretability of our method. Specifically, we design a module that visualizes the importance of features to each recommendation. Figure 3 illustrates the visualization of a randomly selected company and a patent recommended to it. The figure shows the main information based on which the patent is recommended to the company. Specifically, patent family size is the overriding reason, followed by P5 and P3, which can be referred to Table 2. The results show that being protected in multiple countries, having a direct citation relation, as well as having common inventors, with the patents owned by the company are the main reasons why the patent is recommended. In contrast, P6, the number of forward citations, and the number of backward citations barely impact the recommendation. Averagely, the connectivity features contribute more to the recommendation than the quality features.

Ablation study

To analyze the impacts of key components of the proposed method in generating accurate and interpretable results, we conduct additional experiments. First, to examine the role of connectivity and quality features in patent recommendation, we experiment with the proposed method using different features and present results in Fig. 4. The results show that connectivity features generate good performance, meaning that they are helpful in finding relevant patents for companies. On the contrary, the recommendation performance of DNN+Q is about 0, meaning that quality features alone lead to very bad performance. This is because many high-quality patents are irrelevant to the business of target companies and are unnecessary for the companies. Consequently, recommending these patents to the target companies results in low performance. Besides, combining both types of features yields the best performance. This confirms the need of considering both types of features in patent recommendation.

Second, we examine the impacts of important features on recommendation performance. This also helps to evaluate the interpretability of our method. To this end, we remove features identified as important from the proposed method and track its changes in recommendation performance. Specifically, we remove the top five most important features one by one and measure the resulting recommendation performance, which is presented in Fig. 5. Results show that the recommendation performance decreases as the number of removed features increases. However, the drops in performance may simply be caused by discarding features. To demonstrate whether removing the identified important features leads to larger drops compared to removing the unimportant features, we remove the least important features one by one and calculate the resulting recommendation performance.

Figure 6 presents the recommendation results of removing the least important features one by one. The results show that removing the least important features also leads to drops in recommendation performance, but the drops are much smaller than those of removing the most important features. Besides, the performance difference between removing important and unimportant features widens as the number of removed features increases. These observations show that the identified important features do contribute to the recommendations and removing them leads to dramatic drops in precision, recall, and MAP. The results confirm that the identified important features are more significant to recommendation results and can be used to interpret the results.

Based on the above analysis and discussion, we conclude the impact of the proposed method on the research problems. Regarding the problem that previous patent recommendation methods ignore patent quality, the proposed method applies eight quality features to quantify patent quality and involves it in the recommendation process. Experimental results demonstrate that considering patent quality improves patent recommendation performance. In terms of lacking interpretability, the proposed method addresses it by combining a deep neural network with a relevance propagation technique, which can identify important features to each recommendation. Consequently, our method can generate explanations based on the important features and thus ease the lacking of interpretability.

Conclusions

In this study, we propose an interpretable patent recommendation method based on knowledge graph and deep learning. The proposed method organizes various patent information as a knowledge graph. It then defines and extracts connectivity and quality features that indicate the relevance of patent-company pairs and patent quality. Given the extracted features, we design an interpretable recommendation model by combining DNN and LRP techniques. We conduct experiments to evaluate the proposed method and experimental results show that its average precision@k, recall@k, and MAP@k (k = 10, 20, 30, 40, 50) are 0.596, 0.636, and 0.584, respectively. The results are 7.28%, 18.35%, and 8.60% higher than those of best baseline methods. We qualitatively and quantitatively analyze the interpretability of the proposed method. The qualitative and quantitative analyses show that our method can provide interpretable results.

This research extends existing studies on patent recommendations. First, this is the first study that combines knowledge graph, DNN, and LRP techniques for effective and interpretable patent recommendations. Knowledge graph models heterogeneous patent data and facilitates feature extraction and interpretation. DNN enables better patent recommendation by capturing nonlinear and nontrivial relationships between patents and companies. LRP endows our patent recommendation model with interpretability by identifying features that contribute the most to recommendation results. Experiments show that our method is superior to baseline methods. Second, this research incorporates patent quality into patent recommendation. Experiments demonstrate its effectiveness in enhancing recommendation performance. Third, this study provides a practical solution to facilitate technology transfer. Through the proposed patent recommendation method, companies can find suitable patents with explanations.

Future research can extend this study from different aspects. The first one is to incorporate more company information into the patent recommendation model. For example, the industries and business scopes of companies can be used to identify relevant patents. Future work can include such information into knowledge graph and extract more features for patent recommendations. The second one is to include patent portfolios into patent recommendations because companies may consider their existing patents when obtaining new ones. Future research can design new recommendation models with features that indicate the relationships between candidate patents and the existing patents.

Data availability

The data used in this paper are public and can be downloaded from the following links: https://www.uspto.gov/learning-and-resources/electronic-data-products/patent-assignment-dataset and http://www.patentsview.org/download/.

References

Lichtenthaler, U. Technology exploitation in the context of open innovation: Finding the right ‘job’ for your technology. Technovation 30, 429–435 (2010).

Lee, J.-S., Park, J.-H. & Bae, Z.-T. The effects of licensing-in on innovative performance in different technological regimes. Res. Policy 46, 485–496 (2017).

Wang, Y., Ning, L. & Chen, J. Product diversification through licensing: Empirical evidence from Chinese firms. Eur. Manag. J. 32, 577–586 (2014).

Han, J.-S. & Lee, S.-Y.T. The impact of technology transfer contract on a firm’s market value in Korea. J. Technol. Transf. 38, 651–674 (2013).

Kwon, S. How does patent transfer affect innovation of firms?. Technol. Forecast. Soc. Change 154, 119959 (2020).

Roessner, D., Bond, J., Okubo, S. & Planting, M. The economic impact of licensed commercialized inventions originating in university research. Res. Policy 42, 23–34 (2013).

Galasso, A., Schankerman, M. & Serrano, C. J. Trading and enforcing patent rights. RAND J. Econ. 44, 275–312 (2013).

Wang, Q., Du, W., Ma, J. & Liao, X. Recommendation mechanism for patent trading empowered by heterogeneous information networks. Int. J. Electron. Commer. 23, 147–178 (2019).

Chen, J., Chen, J., Zhao, S., Zhang, Y. & Tang, J. Exploiting word embedding for heterogeneous topic model towards patent recommendation. Scientometrics 125, 2091–2108 (2020).

He, X. et al. Weighted meta paths and networking embedding for patent technology trade recommendations among subjects. Knowl. Based Syst. 184, 104899 (2019).

Moeller, A. & Moehrle, M. G. Completing keyword patent search with semantic patent search: introducing a semiautomatic iterative method for patent near search based on semantic similarities. Scientometrics 102, 77–96 (2015).

Trappey, A., Trappey, C. V. & Hsieh, A. An intelligent patent recommender adopting machine learning approach for natural language processing: A case study for smart machinery technology mining. Technol. Forecast. Soc. Change 164, 120511 (2021).

Zhang, Y. et al. Semantic based heterogeneous information network embedding for patent citation recommendation. in 2020 International Conference on Artificial Intelligence and Computer Engineering (ICAICE) 518–527 (2020). https://doi.org/10.1109/ICAICE51518.2020.00106.

Shu, T., Tian, X. & Zhan, X. Patent quality, firm value, and investor underreaction: Evidence from patent examiner busyness. J. Financ. Econ. 143, 1043–1069 (2022).

Trappey, A. J. C., Trappey, C. V., Wu, C.-Y. & Lin, C.-W. A patent quality analysis for innovative technology and product development. Adv. Eng. Inform. 26, 26–34 (2012).

Leiponen, A. & Delcamp, H. The anatomy of a troll? Patent licensing business models in the light of patent reassignment data. Res. Policy 48, 298–311 (2019).

Liu, N. et al. Explainable recommender systems via resolving learning representations. in Proceedings of the 29th ACM International Conference on Information & Knowledge Management 895–904 (Association for Computing Machinery, 2020). https://doi.org/10.1145/3340531.3411919.

Zheng, Y. et al. Disentangling User Interest and Conformity for Recommendation with Causal Embedding. arXiv:2006.11011 [cs] (2021).

Oh, B., Seo, S. & Lee, K.-H. Knowledge graph completion by context-aware convolutional learning with multi-hop neighborhoods. in Proceedings of the 27th ACM International Conference on Information and Knowledge Management 257–266 (2018). https://doi.org/10.1145/3269206.3271769.

Montavon, G., Binder, A., Lapuschkin, S., Samek, W. & Müller, K.-R. Layer-wise relevance propagation: an overview. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning (eds Samek, W. et al.) 193–209 (Springer International Publishing, 2019). https://doi.org/10.1007/978-3-030-28954-6_10.

Deldjoo, Y., Schedl, M., Cremonesi, P. & Pasi, G. Recommender Systems Leveraging Multimedia Content. ACM Comput. Surv. 53, 106:1-106:38 (2020).

Du, W., Jiang, G., Xu, W. & Ma, J. Sequential patent trading recommendation using knowledge-aware attentional bidirectional long short-term memory network (KBiLSTM). J. Inf. Sci. https://doi.org/10.1177/01655515211023937 (2021).

Fu, T., Lei, Z. & Lee, W. Patent citation recommendation for examiners. in 2015 IEEE International Conference on Data Mining 751–756 (2015). https://doi.org/10.1109/ICDM.2015.151.

Deng, W. & Ma, J. A knowledge graph approach for recommending patents to companies. Electron. Commer. Res. https://doi.org/10.1007/s10660-021-09471-2 (2021).

Chen, Y.-L. & Chiu, Y.-T. An IPC-based vector space model for patent retrieval. Inf. Process. Manag. 47, 309–322 (2011).

Ji, X., Gu, X., Dai, F., Chen, J. & Le, C. Patent collaborative filtering recommendation approach based on patent similarity. in 2011 Eighth International Conference on Fuzzy Systems and Knowledge Discovery (FSKD) Vol. 3 1699–1703 (2011).

Oh, S., Lei, Z., Lee, W.-C., Mitra, P. & Yen, J. CV-PCR: a context-guided value-driven framework for patent citation recommendation. in Proceedings of the 22nd ACM International Conference on Conference on Information & Knowledge Management 2291–2296 (ACM, 2013). https://doi.org/10.1145/2505515.2505659.

Trappey, A. J. C., Trappey, C. V., Wu, C.-Y., Fan, C. Y. & Lin, Y.-L. Intelligent patent recommendation system for innovative design collaboration. J. Netw. Comput. Appl. 36, 1441–1450 (2013).

Krestel, R. & Smyth, P. Recommending patents based on latent topics. in Proceedings of the 7th ACM Conference on Recommender Systems 395–398 (ACM, 2013). https://doi.org/10.1145/2507157.2507232.

Oh, S., Lei, Z., Lee, W. & Yen, J. Recommending missing citations for newly granted patents. in 2014 International Conference on Data Science and Advanced Analytics (DSAA) 442–448 (2014). https://doi.org/10.1109/DSAA.2014.7058110.

Mahdabi, P. & Crestani, F. Query-driven mining of citation networks for patent citation retrieval and recommendation. in Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management 1659–1668 (ACM, 2014). https://doi.org/10.1145/2661829.2661899.

Deng, N., Chen, X. & Li, D. Intelligent recommendation of Chinese traditional medicine patents supporting new medicine’s R&D. J. Comput. Theor. Nanosci. 13, 5907–5913 (2016).

Rui, X. & Min, D. HIM-PRS: A patent recommendation system based on hierarchical index-based MapReduce framework. In Advances in Computer Science and Ubiquitous Computing (eds Park, J. J. et al.) 843–848 (Springer Singapore, 2017).

Squicciarini, M., Dernis, H. & Criscuolo, C. Measuring patent. Quality https://doi.org/10.1787/5k4522wkw1r8-en (2013).

Ciaramella, L., Martínez, C. & Ménière, Y. Tracking patent transfers in different European countries: Methods and a first application to medical technologies. Scientometrics 112, 817–850 (2017).

Odasso, C., Scellato, G. & Ughetto, E. Selling patents at auction: An empirical analysis of patent value. Ind. Corp. Change 24, 417–438 (2015).

Reitzig, M. Improving patent valuations for management purposes—Validating new indicators by analyzing application rationales. Res. Policy 33, 939–957 (2004).

Fischer, T. & Leidinger, J. Testing patent value indicators on directly observed patent value—An empirical analysis of Ocean Tomo patent auctions. Res. Policy 43, 519–529 (2014).

Serrano, C. J. The dynamics of the transfer and renewal of patents. RAND J. Econ. 41, 686–708 (2010).

Sreekumaran Nair, S., Mathew, M. & Nag, D. Dynamics between patent latent variables and patent price. Technovation 31, 648–654 (2011).

Mathew, M., Mukhopadhyay, C., Raju, D., Nair, S. S. & Nag, D. An analysis of patent pricing. Decision; Kolkata 39, 28–39 (2012).

Manning, C. et al. The Stanford CoreNLP natural language processing toolkit. in Proceedings of 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations 55–60 (Association for Computational Linguistics, 2014). https://doi.org/10.3115/v1/P14-5010.

Rose, S. J., Cowley, W. E., Crow, V. L. & Cramer, N. O. Rapid automatic keyword extraction for information retrieval and analysis. (2012).

Liu, H., Jiang, Z., Song, Y., Zhang, T. & Wu, Z. User preference modeling based on meta paths and diversity regularization in heterogeneous information networks. Knowl. Based Syst. 181, 104784 (2019).

Wang, H. et al. RippleNet: Propagating user preferences on the knowledge graph for recommender systems. in Proceedings of the 27th ACM International Conference on Information and Knowledge Management 417–426 (ACM, 2018). https://doi.org/10.1145/3269206.3271739.

Acknowledgements

We acknowledge the financial support from the Natural Science Foundation of Guangdong Province (No. 2022A1515011363), the Guangdong Philosophy and Social Science Planning Project (No.GD21CTS05), the Guangzhou Science and Technology Plan Project (No. 202002030384), and the 2021 Major Cultivation Project of Philosophy and Social Sciences in South China Normal University.

Author information

Authors and Affiliations

Contributions

H.C. wrote the manuscript, W.D. proposed the method, conducted experiments, and analyzed results. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, H., Deng, W. Interpretable patent recommendation with knowledge graph and deep learning. Sci Rep 13, 2586 (2023). https://doi.org/10.1038/s41598-023-28766-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-28766-y

- Springer Nature Limited

This article is cited by

-

A Knowledge Graph Based Disassembly Sequence Planning For End-of-Life Power Battery

International Journal of Precision Engineering and Manufacturing-Green Technology (2024)

-

From PARIS to LE-PARIS: toward patent response automation with recommender systems and collaborative large language models

Artificial Intelligence and Law (2024)

-

Knowledge graph enhanced citation recommendation model for patent examiners

Scientometrics (2024)

-

A deep learning method for recommending university patents to industrial clusters by common technological needs mining

Scientometrics (2024)