Abstract

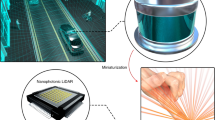

Thanks to its superior imaging resolution and range, light detection and ranging (LiDAR) is fast becoming an indispensable optical perception technology for intelligent automation systems including autonomous vehicles and robotics1,2,3. The development of next-generation LiDAR systems critically needs a non-mechanical beam-steering system that scans the laser beam in space. Various beam-steering technologies4 have been developed, including optical phased array5,6,7,8, spatial light modulation9,10,11, focal plane switch array12,13, dispersive frequency comb14,15 and spectro-temporal modulation16. However, many of these systems continue to be bulky, fragile and expensive. Here we report an on-chip, acousto-optic beam-steering technique that uses only a single gigahertz acoustic transducer to steer light beams into free space. Exploiting the physics of Brillouin scattering17,18, in which beams steered at different angles are labelled with unique frequency shifts, this technique uses a single coherent receiver to resolve the angular position of an object in the frequency domain, and enables frequency–angular resolving LiDAR. We demonstrate a simple device construction, control system for beam steering and frequency domain detection scheme. The system achieves frequency-modulated continuous-wave ranging with an 18° field of view, 0.12° angular resolution and a ranging distance up to 115 m. The demonstration can be scaled up to an array realizing miniature, low-cost frequency–angular resolving LiDAR imaging systems with a wide two-dimensional field of view. This development represents a step towards the widespread use of LiDAR in automation, navigation and robotics.


Main

The optical beam-steering device is a crucial component of a scanning light detection and ranging (LiDAR) system19. To develop the next-generation LiDAR technology, it is necessary to replace the current mechanical scanners with non-mechanical beam-steering devices that have the advantages of compactness, robustness, speed and cost4. Methods of non-mechanical optical beam steering are generally based on diffractive or dispersive principles4. Diffractive methods control the wavefront of the optical beam through a synthetic aperture that emits light with a tunable phase front towards a controlled direction. Such an artificial aperture can be created using an optical phased array (OPA)5,6,7,8 or a spatial light modulator (SLM)9,10,11. An alternative technology is the focal plane switch array (FPSA)12,13, in which beams from an array of emitters placed at the focal plane of a lens are refracted to different angles. To achieve a large steering angle and a high angular resolution, OPA, SLM and FPSA universally require a large array of discrete, wavelength-scale elements12,13,20, each individually controlled. The sophisticated control systems and the complex fabrication processes needed are outstanding challenges faced by these technologies.

On the other hand, dispersive optical elements, such as prisms and gratings, direct light of different wavelengths in different directions. Therefore, beam steering can be achieved by using a tunable laser, a broadband source or a frequency comb as the light source14,15,16. These sources, however, are sophisticated and expensive. In principle, beam steering could also be realized by tuning the dispersive property of the optical element rather than the wavelength. The dispersion of an optical element depends on the properties of its material (for example, for a prism) and its structure (for example, for a grating). It is, however, impractical to tune these over a range wide enough for realistic beam steering.

Interestingly, nature provides a means to generate a dynamically tunable index grating: acoustic waves propagating in a material mechanically undulate its refractive index and thus produce a moving index grating17. For a given material, the spatial period of this grating is determined by the acoustic wavelength, and the phase contrast is controlled by the acoustic intensity. This moving grating can scatter light through Brillouin scattering17,18. Given the acoustic frequency (Ω) and phase velocity (v), momentum conservation (or equivalently, a phase-matching condition) determines the angle of light scattering, whereas energy conservation dictates that the frequency of the scattered light is shifted by the acoustic frequency. These principles have been utilized in acousto-optic deflectors, switches, frequency shifters and filters21. Conventional acousto-optic devices use bulk crystals and MHz frequencies to realize a large optical aperture and achieve a high angular resolution22, but deflect light only by a small angle. A paradigm shift came with guided-wave acousto-optic devices, which confine both optical and acoustic waves in planar waveguiding structures, leading to substantially enhanced light–sound interaction and, consequently, improved performance and efficiency23,24,25,26,27. In those devices, however, the scattered light remains mainly in the plane of the two-dimensional (2D) waveguide.

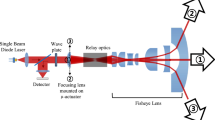

If the acoustic frequency is sufficiently high, the acoustic wavenumber K = Ω/v will be large enough to scatter an optical waveguide mode into the light cone, thereby steering a beam into free space28,29. Figure 1a illustrates this effect, which is the basis of the acousto-optic beam steering (AOBS) that we report here. The dispersion diagram in Fig. 1b depicts the phase-matching condition of AOBS: \(k_0\cos\theta = k_{\mathrm{g}} - K\), where kg is the guided optical mode wavenumber, k0 = ω0/c is the free-space wavenumber and θ is the scattering angle measured from the surface of the waveguide. A perturbation theory treatment of the scattering is included in Supplementary Information 1. Meanwhile, the scattered light frequency is shifted up to the anti-Stokes sideband at ω0 + Ω. Therefore, one can find a frequency–angular relation (Ω–θ):

\[\Omega = k_0 v\left(n_{\mathrm{e}} - \cos\theta\right) = \frac{\omega_0 v}{c}\left(n_{\mathrm{e}} - \cos\theta\right) \qquad (1)\]

where ne = kg/k0 is the effective mode index of the waveguide mode. The relationship in equation (1) has two implications. First, the scattering angle θ is controlled by the acoustic frequency Ω, such that beam steering out of the substrate (that is, \(\cos\theta < 1\)) can be achieved with Ω/2π in the GHz range for typical planar waveguides (for example, with ne > 1.5) and near-infrared (IR) light. Second, because the frequency of the scattered light is shifted up to ω0 + Ω, one can measure the frequency of the light reflected from an object and use equation (1) to resolve the angular position θ of the object. An image of the object can thereby be reconstructed from frequency-domain measurements as AOBS scans the steered beam across the scene. Combining these two principles, we propose the frequency–angular resolving (FAR) LiDAR, illustrated in Fig. 1a, which consists of a transmitter using AOBS and a coherent receiver that measures the frequency of the reflected light. FAR LiDAR has several important advantages. First, because the angular position of the object is ‘labelled’ by the frequency of the reflected light, the receiver can image the object entirely in the frequency domain. This scheme thus allows the transmitter and the receiver to be separated in a bistatic configuration and to operate asynchronously, affording much flexibility in designing the receiver to improve the signal-to-noise ratio (SNR) and detection speed. Second, AOBS uses a single microwave drive to excite the acoustic wave, and the drive frequency determines the steering angle. Random access is achieved by changing the drive frequency arbitrarily within the system bandwidth. Third, because AOBS uses coherent acoustic waves, multiple tones of different frequencies can copropagate in the device to steer light in multiple directions simultaneously, enabling parallel scanning and detection of multiple beams.
Finally, as the acoustic frequency used is in the GHz range, FAR LiDAR affords sufficient bandwidth to perform coherent frequency-modulated continuous-wave (FMCW) ranging. Combining FAR and FMCW enables full three-dimensional (3D) imaging. In this paper, we demonstrate these capabilities with a prototype FAR LiDAR system based on a chip-scale AOBS device.
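To make the frequency–angular relation concrete: combining the phase-matching condition k0 cos θ = kg − K with K = Ω/v and kg = ne k0 gives Ω = k0 v (ne − cos θ), the relation that equation (1) expresses. The minimal Python sketch below evaluates Ω/2π for a given θ and inverts it. The defaults (ne = 1.8 from Fig. 1b, v = 3,100 m s−1 for the Rayleigh mode, λ = 1.55 μm) are taken from this paper, but treating them as fixed constants is a simplifying assumption: ne and v are dispersive, and the experiment relies on the measured calibration of Fig. 2d rather than on this formula. The function names are illustrative only.

```python
import math


def acoustic_freq_hz(theta_deg, n_eff=1.8, v_ac=3100.0, wavelength_m=1.55e-6):
    """Omega/2pi for steering angle theta, from Omega = k0 * v * (n_e - cos theta).

    Assumes nominal, non-dispersive n_eff and v_ac (illustrative values only).
    """
    k0 = 2 * math.pi / wavelength_m                  # free-space wavenumber (rad/m)
    omega = k0 * v_ac * (n_eff - math.cos(math.radians(theta_deg)))
    return omega / (2 * math.pi)


def steering_angle_deg(freq_hz, n_eff=1.8, v_ac=3100.0, wavelength_m=1.55e-6):
    """Invert the same relation for theta (measured from the waveguide surface)."""
    k0 = 2 * math.pi / wavelength_m
    cos_theta = n_eff - 2 * math.pi * freq_hz / (k0 * v_ac)
    if not -1.0 <= cos_theta <= 1.0:
        # The scattered wave would not fall inside the light cone of air.
        raise ValueError("frequency does not phase-match into free space")
    return math.degrees(math.acos(cos_theta))


f30 = acoustic_freq_hz(30.0)
print(f"{f30 / 1e9:.3f} GHz")                  # 1.868 GHz
print(f"{steering_angle_deg(f30):.1f} deg")    # 30.0 deg
```

With these nominal values the 30° design angle corresponds to roughly 1.87 GHz, consistent with the GHz-range drive stated above. Differentiating the same relation gives dθ/dΩ = 1/(k0 v sin θ), so a given acoustic bandwidth sweeps a wider angle at smaller θ.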

a, Schematic illustration of the FAR LiDAR scheme based on AOBS. b, Dispersion diagram of the acousto-optic Brillouin scattering process. The dispersion curve of the TE0 mode of the LN planar waveguide is simulated and plotted as the red curve. At frequency ω0 (wavelength 1.55 μm), the mode wavenumber is 1.8k0 (red circle). The counter-propagating acoustic wave (green arrow) scatters the light into the light cone of air (purple circle in the grey shaded area). For clarity, the frequency axis is not to scale. Inset: momentum vector relation of the Brillouin scattering. The light is scattered into space at an angle θ from the surface. c, Photograph of an LNOI chip with ten AOBS devices. d, Scanning electron microscope image of the IDT. The period is chirped from 1.45 to 1.75 μm. e, Finite-element simulation of the AOBS process showing that light is scattered into space at 30° from the surface.

We build the AOBS devices on a lithium niobate on insulator (LNOI) substrate. Figure 1c shows an array of ten AOBS devices fabricated on an LNOI chip. Each AOBS device has a very simple construction of only two components. On one end, an interdigital transducer (IDT) (Fig. 1d) is patterned to excite acoustic waves by utilizing the strong piezoelectricity of lithium niobate (LN). We chose the x-cut, y-propagation configuration for acoustic wave generation, which offers the best combination of propagation loss and electromechanical coupling efficiency. On the other end, an optical grating coupler is patterned in hydrogen silsesquioxane. The grating couples light from a laser into the transverse electric (TE) mode of the planar waveguide formed by the LN layer. The space between the IDT and the grating coupler, which is w = 100 μm wide and l = 2 mm long, is the nominal aperture of the AOBS. The acoustic waves generated by the IDT propagate to fill this aperture, scattering the counter-propagating light. From the dispersion relation of the TE mode at 1.55 μm optical wavelength (Fig. 1b), we calculate the required acoustic wavenumber from equation (1). At a given frequency, the acoustic wavelength (Λ) and wavenumber (K) are determined by the period of the IDT and the phase velocity (v) of the acoustic mode that is excited. Of interest for AOBS is the Rayleigh-type mode (Extended Data Fig. 1), which is confined in the LN layer and thus interacts efficiently with the TE mode. Unlike the shear modes27,30, the Rayleigh mode scatters light into free space mainly through the boundary movement effect, which is two orders of magnitude more effective than the photoelastic effect. To achieve a large field of view (FOV), the AOBS device needs a widely tunable acoustic frequency. We use a broadband IDT design with chirped periods to achieve a bandwidth of Δf = 350 MHz at a central frequency of 1.8 GHz. Our simulation (Fig. 1e) shows that the TE mode is scattered into free space at an angle θ of 30°, with a theoretical FOV of 20°. Another important metric for acousto-optic devices is the number of resolvable spots22, given by N = Δf × τ, where τ = l/v is the acoustic transit time across the aperture of length l and v = 3,100 m s−1 is the phase velocity of the Rayleigh mode. The theoretical value is N = 226, comparable to bulk acousto-optic devices despite a much smaller aperture.
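The resolvable-spot counts are simple arithmetic, and the short Python check below reproduces them from the quoted values; it is a consistency check of the numbers, not a device model. The theoretical count uses N = Δf × l/v; the measured count discussed with Fig. 2 is the 18° FOV divided by the 0.12° average beam divergence. The helper name is illustrative.

```python
def resolvable_spots(bandwidth_hz, aperture_m, v_ac):
    """Time-bandwidth product N = delta_f * tau, with tau = l / v."""
    tau = aperture_m / v_ac          # acoustic transit time across the aperture (s)
    return bandwidth_hz * tau


n_theory = resolvable_spots(350e6, 2e-3, 3100.0)   # 350 MHz, 2 mm, 3,100 m/s
n_measured = 18.0 / 0.12                           # FOV / average divergence (deg/deg)
print(round(n_theory), round(n_measured))          # 226 150
```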

The beam-steering results of the AOBS device are shown in Fig. 2. A fibre-coupled, near-IR diode laser is used as the light source. An IR camera placed at the focal plane of a lens images the steered beam in momentum space (k space) (Extended Data Fig. 2). Figure 2a shows the superimposed images captured by the camera, with 66 spots as the beam is scanned from 22° to 40° (18° FOV). The variation in spot intensity is attributed to the uneven electromechanical conversion efficiency within the bandwidth of the IDT (Extended Data Fig. 1). Figure 2b shows the detailed profile of one spot, which has an angular divergence of 0.11° (full width at half maximum) along the kx axis and 1.6° along the ky axis. The elliptical spot shape is diffraction-limited by the rectangular aperture. The average kx-axis angular divergence of the beams across the FOV is 0.12°, so the number of resolvable spots along the kx axis is N = 150, lower than the theoretical value. Figure 2c shows the real-space image of light scattered from the AOBS aperture. The intensity of the scattered light decays from the front edge (x = 0) of the IDT towards the grating coupler. Because the optical propagation loss of the TE mode is expected to be low, this decay is attributed to the acoustic wave: fitting the results in Fig. 2c with a model (Supplementary Information 3) reveals a high acoustic loss, with a propagation length (1/e) of approximately 0.6 ± 0.1 mm, which reduces the effective AOBS aperture length. In comparison, acoustic waves of similar frequencies in bulk LiNbO3 have propagation lengths of centimetres31,32. The relatively high acoustic loss can be attributed to the bonding interfaces of the LNOI wafer and leakage into the substrate. By using a free-standing LN membrane or LN on sapphire substrates, in which the acoustic wave is confined in the LN layer30,33, the acoustic loss can be substantially reduced.
Based on the same model fit to Fig. 2c, the highest overall beam-steering efficiency is 2.8% at 30° when 20 dBm of microwave power is applied. The factors currently limiting the efficiency include the low electromechanical conversion efficiency of the broadband IDT, only approximately 6.4% (Extended Data Fig. 1 and Supplementary Information 4), and the small effective aperture resulting from the high acoustic loss. The efficiency can therefore be improved by reducing the acoustic loss to enlarge the effective aperture. Our simulation (Fig. 1e and Supplementary Information 2) shows that the efficiency on LNOI can be improved to 50% with a 5 mm aperture length, 100 μm aperture width and a moderate acoustic power of 23 dBm, exceeding that of other solid-state beam-steering technologies.
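The propagation length in Fig. 2c comes from fitting the decay of the scattered intensity along x. The actual model is given in Supplementary Information 3 and is not reproduced here; the Python sketch below assumes the simplest case, a single exponential I(x) = I0 exp(−x/Lp) fitted by linear least squares on ln I, applied to synthetic (not measured) data generated with Lp = 0.6 mm. It then converts Lp into the acoustic transit time across the effective aperture using v = 3,100 m s−1. The function name and the noise level are hypothetical.

```python
import numpy as np


def fit_decay_length(x_m, intensity):
    """Fit ln(I) = ln(I0) - x / L_p by linear least squares; return L_p (m).

    Assumes a single-exponential decay (a simplification of the paper's model).
    """
    slope, _intercept = np.polyfit(x_m, np.log(intensity), 1)
    return -1.0 / slope


# Synthetic profile (assumption): L_p = 0.6 mm with 5% multiplicative noise.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 2e-3, 200)              # position along the 2 mm aperture (m)
profile = np.exp(-x / 0.6e-3) * (1 + 0.05 * rng.standard_normal(x.size))

l_p = fit_decay_length(x, profile)
transit_us = l_p / 3100.0 * 1e6              # acoustic transit time over L_p (us)
print(f"L_p = {l_p * 1e3:.2f} mm, transit time = {transit_us:.2f} us")
```

Fitting ln I rather than I weights the weak tail of the profile as heavily as the bright front edge; a nonlinear fit on I would be the alternative if the noise were additive rather than multiplicative.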

a, Superimposed image of the focal plane when the beam is scanned across a FOV from 22° to 40°, showing 66 well-resolved spots. b, Magnified image of one spot at 38.8°. The beam angular divergence is 0.11° along kx (bottom inset) and 1.6° along ky (left inset), owing to the rectangular AOBS aperture. c, Real-space image of light scattering from the AOBS aperture. The light intensity decays exponentially from the front of the IDT (x = 0), owing to the propagation loss of the acoustic wave. Fitting the integrated intensity along the x axis (bottom inset, yellow line) gives an acoustic propagation length of 0.6 ± 0.1 mm. d, The measured frequency–angle relation when the beam is steered by sweeping the acoustic frequency. a.u., arbitrary units. e–h, Dynamic multi-beam generation and arbitrary programming: 16 beams (e), beams at odd (f) and even (g) sites, and beams forming the American Standard Code for Information Interchange (ASCII) code of the characters ‘WA’ (h).

The AOBS frequency–angular relation θ(Ω) described by equation (1) is measured and plotted in Fig. 2d. This measurement provides the calibration needed for using the AOBS in FAR LiDAR. In addition, multiple acoustic tones can copropagate in the AOBS aperture to generate multiple beams simultaneously, as demonstrated in Fig. 2e–h. Each beam is independently controlled in phase and amplitude by the corresponding tone of the radio-frequency drive. We drive the IDT with multi-tone waveforms consisting of 16 equally spaced frequency components to generate an array of 16 beams (Fig. 2e). To demonstrate arbitrary programming of the beam array, we generate beams at odd and even sites (Fig. 2f,g) and in a sequence representing the American Standard Code for Information Interchange (ASCII) code of ‘WA’ (Fig. 2h). It is worth noting that the multi-beam steering capability of acousto-optic deflectors plays an important role in neutral atom and trapped ion quantum computing for performing parallelized quantum gates34,35. The LNOI platform supports a broad optical spectral range, including the wavelengths used in atom and ion quantum computing. We therefore expect that the integrated AOBS system reported here will also benefit quantum technology.
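Programming the beam array amounts to choosing which tones appear in the drive waveform. The Python sketch below builds such a multi-tone waveform for the ‘WA’ pattern, assuming (hypothetically) that each of the 16 beam sites carries one bit of the two 8-bit ASCII codes, most significant bit first. The tone grid (16 tones spaced 20 MHz from 1.65 GHz), the 10 GS/s sample rate, the equal amplitudes and the random tone phases are all illustrative choices, not the experimental settings; random phases are one common way to keep the peak-to-average power ratio of a multi-tone drive moderate.

```python
import numpy as np


def ascii_bits(text):
    """8-bit ASCII code of each character, most significant bit first."""
    return [int(b) for ch in text for b in format(ord(ch), "08b")]


def multitone(freqs_hz, enabled, fs_hz, duration_s, seed=0):
    """Sum of equal-amplitude cosines for the enabled sites, normalized to peak 1.

    Random phases (hypothetical choice) limit the peak-to-average power ratio.
    """
    t = np.arange(int(round(fs_hz * duration_s))) / fs_hz
    phases = np.random.default_rng(seed).uniform(0.0, 2 * np.pi, len(freqs_hz))
    wave = np.zeros_like(t)
    for f, phi, on in zip(freqs_hz, phases, enabled):
        if on:
            wave += np.cos(2 * np.pi * f * t + phi)
    if not any(enabled):
        return t, wave                       # all sites off: silent drive
    return t, wave / np.max(np.abs(wave))


# Hypothetical tone grid: 16 equally spaced drive frequencies (one per beam site).
tones = 1.65e9 + 20e6 * np.arange(16)
bits = ascii_bits("WA")                      # 'W' = 0x57, 'A' = 0x41
print("".join(map(str, bits)))               # 0101011101000001
t, drive = multitone(tones, bits, fs_hz=10e9, duration_s=1e-6)
```

Because every tone completes an integer number of cycles in the 1 μs record, each one falls exactly on an FFT bin, which makes the spectrum easy to inspect when checking which sites are on.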

We then demonstrate 2D LiDAR imaging. Figure 3a shows the schematic of the FAR LiDAR system. In the transmitter, an AOBS device steers the laser beam at an angle θ(Ω) towards the object in the far field. When FMCW ranging is performed, an electro–optic phase modulator (EOM) is used to generate a chirped light source. Without FMCW, the frequency of the steered beam is shifted to the anti-Stokes frequency ω0 + Ω. To resolve this frequency shift, we use a coherent receiver scheme. The receiver taps 1% of the laser source as the local oscillator (LO). The light reflected from the object is collected with a lens and a single-mode fibre whose end is placed in front of the focal plane so that it accepts light from within the FOV, although the collection efficiency of approximately −55 dB is low (Supplementary Information 7). The other end of the fibre is connected to a 50/50 fibre coupler, in which the received signal combines with the LO. A balanced photodetector (BPD) detects their beating signal at frequency Ω, which is digitized and analysed. Figure 3b shows various beating signals measured at the receiver as the AOBS scans the beam across the FOV. Using the measured frequency–angle relation (Fig. 2d), we transform each frequency Ω to an angle θ and reconstruct an image of the object. In Fig. 3c, we demonstrate imaging of a 60 × 50 mm cutout of a husky dog logo made of retroreflective film, placed 1.8 metres from the LiDAR. Because AOBS scans the beam only in the horizontal dimension, a galvo mirror is used to scan in the vertical direction. The position of each pixel is resolved from the corresponding beating frequency and its brightness from the signal intensity. Figure 3d,e shows the signals of two pixels, centred at 1.6575 GHz and 1.7125 GHz, respectively. We also measured the point-to-point switching speed of AOBS. The measured rise time is 1.5 μs (Extended Data Fig. 3), which is probably limited by the electronic system because it is much longer than the acoustic transit time of approximately 0.19 μs across the effective aperture.
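Each FAR pixel therefore reduces to locating the beat-note peak and looking its frequency up in the θ(Ω) calibration of Fig. 2d. The Python sketch below does this with a Hann-windowed FFT peak search and linear interpolation. The calibration table is hypothetical (made-up points spanning the 22° to 40° FOV, standing in for the measured curve), as are the sample rate and record length, so the printed angle illustrates the procedure only; the 1.6575 GHz test tone simply mirrors the pixel of Fig. 3d.

```python
import numpy as np

# Hypothetical calibration (acoustic frequency in Hz -> steering angle in degrees),
# standing in for the measured curve of Fig. 2d. These are NOT measured values.
CAL_FREQ_HZ = np.array([1.60e9, 1.70e9, 1.80e9, 1.90e9, 1.95e9])
CAL_ANGLE_DEG = np.array([22.0, 26.0, 30.5, 35.5, 40.0])


def beat_peak(samples, fs_hz):
    """Return (frequency, power) of the strongest non-DC bin of a Hann-windowed FFT."""
    spec = np.abs(np.fft.rfft(samples * np.hanning(samples.size))) ** 2
    spec[0] = 0.0                                   # discard the DC bin
    k = int(np.argmax(spec))
    return np.fft.rfftfreq(samples.size, 1.0 / fs_hz)[k], spec[k]


def far_pixel(samples, fs_hz):
    """Map one beat-note record to (angle in degrees, brightness)."""
    f_peak, power = beat_peak(samples, fs_hz)
    angle = np.interp(f_peak, CAL_FREQ_HZ, CAL_ANGLE_DEG)   # frequency -> angle
    return angle, power


# Synthetic record: a 1.6575 GHz beat note plus white noise.
fs = 10e9                                   # hypothetical sample rate (10 GS/s)
t = np.arange(20_000) / fs                  # 2 us record -> 0.5 MHz bins
rng = np.random.default_rng(1)
record = 0.1 * np.cos(2 * np.pi * 1.6575e9 * t) + 0.01 * rng.standard_normal(t.size)
angle, brightness = far_pixel(record, fs)
print(f"angle = {angle:.2f} deg")           # angle = 24.30 deg
```

Linear interpolation is the simplest lookup; a smooth fit of the measured calibration would serve equally well if the curve is densely sampled.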

a, Schematics of the FAR LiDAR system. The transmitter includes a fixed-wavelength, fibre-coupled laser source, an EOM for FMCW (used in Fig. 4) and an AOBS device driven by a radio frequency source to steer the beam. An additional mirror is used to deflect the light towards the object. The coherent receiver uses homodyne detection to resolve the frequency shift of the reflected light by beating it with the LO, which is tapped from the laser source. A BPD is used to measure the beating signal, which is sampled by a digital data acquisition (DAQ) system or analysed by a real-time spectrum analyser. As a demonstration, a 60 × 50 mm cutout of a husky dog image made of retroreflective film is used as the object. It is placed 1.8 metres from the LiDAR system. b, Spectra of the beating signal at the receiver when the AOBS scans the beam across the FOV. Using the measured frequency–angle relation in Fig. 2d, the beating frequency can be transformed to the angle of the object. c, FAR LiDAR image of the object. The position and brightness of each pixel are resolved from the beating frequency and power of the signal, respectively. d,e, The raw beating signal of two representative pixels (orange, d; purple, e).

To achieve 3D imaging, we add FMCW ranging to the FAR LiDAR. The AOBS affords enough bandwidth to accommodate both FAR and FMCW. To chirp the optical frequency, we drive the EOM (Fig. 3a) at frequency Δω, which creates two sidebands at ω0 ± Δω. Either sideband can serve as the chirped source when the drive frequency is modulated with a triangular waveform Δω(t) at a chirping rate g = d(Δω/2π)/dt. The receiver measures the beating frequency fB between the local reference signal and the reflected light to determine the distance of the object: \(d = c f_{\mathrm{B}}/(2g) - d_0\), where d0 is the extra optical path length difference. Figure 4a shows the time–frequency spectrogram of the chirped optical source at the transmitter and of the reflected optical signal at the receiver when a small frequency chirp excursion fE = 10 MHz is used. The chirp rate is g = 1 MHz μs−1 and the integration time is 2 μs. Note that the reflected signal has an additional frequency offset equal to the acoustic frequency Ω/2π and is delayed by the time of flight 2d/c. The beating frequency between the reference and the signal thus alternates between Ω/2π ± fB (Fig. 4b, upper panel). However, because both sidebands are involved and their frequencies are chirped in opposite directions, the beating signal at the receiver contains both frequencies Ω/2π ± fB at all times. The frequency Ω/2π is also present owing to the unsuppressed carrier, as shown in Fig. 4b (lower panel). When measuring a target at a distance, fE is increased to 1 GHz while g is kept unchanged. Figure 4c shows the spectra of the beating signal when three different acoustic frequencies Ω/2π are used to steer beams in different directions, where they are reflected by reflectors placed at different distances up to 3 metres.
The spectra contain a frequency component at the acoustic frequency Ω/2π, which is used for FAR imaging, and a component at Ω/2π + fB (Ω/2π − fB is not shown), where the beating frequency fB is in the MHz range and increases with the distance of the reflector. By resolving all these frequency components, FAR and FMCW measurements can be made simultaneously and a full 3D LiDAR image can be acquired in one scan. We demonstrate this in Fig. 4d, in which we image a 1/2–20 stainless steel bolt and nut (Fig. 4d, inset) placed 0.5 m from the LiDAR. The acquired point cloud in Fig. 4d clearly shows the shapes of the two objects, which are separated by 8 cm in depth. Figure 4e shows the raw data for two points, A on the bolt and B on the nut, and Fig. 4f shows the FMCW beating signals of Fig. 4e in detail. To improve the SNR, each data point is averaged over 20 chirping periods, giving an integration time of 80 ms per point. The distance measurement resolution in Fig. 4d is 7.5 cm, which is mainly determined by the frequency excursion fE (refs. 36,37). In Extended Data Fig. 4, we increase fE to 10 GHz and consequently improve the distance resolution to 0.75 cm. In Extended Data Fig. 5, we demonstrate a longer LiDAR ranging distance of up to 11.5 m with an SNR greater than 23 dB. By fitting the measured dependence of SNR on target distance with a 1/R² model, we extrapolate to an SNR of 3 dB at 115 metres, which is the ranging-distance limit of the current system (Supplementary Information 5).
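The FMCW read-out can be sketched in a few lines of Python. Given the three spectral peaks at Ω/2π and Ω/2π ± fB, the centre peak is the FAR angle tag and half the spacing of the outer peaks is fB, from which d = c fB/(2g) − d0. The peak values below and the offset d0 = 0 are hypothetical; in the real set-up d0 absorbs the extra path (which is why the reported fB is in the MHz range), whereas d0 = 0 here gives a 20 kHz beat for 3 m. The second function reproduces the 115 m extrapolation under the simplifying assumption that the linear SNR scales as 1/R² from a single 23 dB point at 11.5 m, rather than from the full fit of Supplementary Information 5. Function names are illustrative.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)


def decode_fmcw(f_lower_hz, f_center_hz, f_upper_hz, chirp_rate_hz_per_s, d0_m=0.0):
    """Split the peaks at Omega/2pi and Omega/2pi +/- fB into (FAR tag, distance)."""
    f_b = 0.5 * (f_upper_hz - f_lower_hz)          # beat frequency fB
    distance = C * f_b / (2 * chirp_rate_hz_per_s) - d0_m
    return f_center_hz, distance


def range_at_snr(snr_ref_db, r_ref_m, snr_target_db):
    """Range at which SNR drops to snr_target_db if linear SNR scales as 1/R^2."""
    return r_ref_m * 10 ** ((snr_ref_db - snr_target_db) / 20)


g = 1e12                                  # 1 MHz/us expressed in Hz/s
tag, d = decode_fmcw(1.7e9 - 20e3, 1.7e9, 1.7e9 + 20e3, g)   # hypothetical peaks
print(f"tag = {tag / 1e9:.2f} GHz, d = {d:.2f} m")           # tag = 1.70 GHz, d = 3.00 m
print(f"{range_at_snr(23.0, 11.5, 3.0):.0f} m")              # 115 m
```

Using the spacing of the two outer peaks, rather than either peak alone, makes fB independent of any error in the assumed acoustic frequency.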

a, Time–frequency map of the transmitted light (bottom, Tx) and received light (top, Rx), both chirped by a triangular waveform. The chirping rate is g = 1 MHz μs−1. The frequency of the received light is upshifted by the acoustic frequency Ω/2π (RBW, resolution bandwidth). a.u., arbitrary units. b, Top, schematic illustration of the frequency of the FMCW signal as a function of time. The frequency alternates between Ω/2π ± fB. Bottom, measured time–frequency map of the FMCW signal. Because the EOM generates both an upper and a lower sideband, the FMCW frequencies Ω/2π ± fB are present at all times. Also present is the frequency component at Ω/2π, which is from the unsuppressed optical carrier and used for FAR imaging. c, Spectra of FMCW signals when different acoustic frequencies (red, 1.6 GHz; green, 1.7 GHz; purple, 1.8 GHz) are used to steer the beam to reflectors placed at different angles and distances. d, 3D LiDAR image of a stainless steel bolt and a nut, placed 8.0 cm apart from each other, acquired by combining FAR and FMCW schemes. The FMCW chirping rate is g = 1 MHz μs−1 and frequency excursion fE = 1 GHz. Inset: photograph of the bolt and nut as the imaging objects. e, FMCW spectra of two representative points (A and B) in d, showing signals at Ω/2π (offset to zero frequency) and Ω/2π ± fB (offset to ±fB). f, Zoomed-in view of the FMCW signals at fB for points A and B. g, Our vision of a monolithic, multi-element AOBS system for 2D scanning, which, with a coherent receiver array (not shown), can realize 2D LiDAR imaging.

In conclusion, we have demonstrated 3D imaging using a FAR and FMCW LiDAR scheme enabled by an AOBS device. AOBS uniquely maps the angle of the steered light onto its frequency, enabling imaging in the frequency domain. The single AOBS prototype has an electronics-limited switching time of 1.5 μs, corresponding to an imaging rate of 0.67 megapixels per second when high-speed detectors are used for time-of-flight or FMCW detection. Using 16 channels (Fig. 2e), one AOBS device can provide an imaging rate of more than 10 megapixels per second. There is much room to improve the performance of the system. 2D scanning can be achieved with an array of AOBS devices (Fig. 1c) placed at the focal plane of a cylindrical lens38, each scanning independently to cover the horizontal dimension, as illustrated in Fig. 4g. In the receiver, using an array of coherent receivers13,39 or fibre bundles38 to collect light within the FOV can substantially improve the collection efficiency (Supplementary Information 6). Advanced IDT designs, such as single-phase unidirectional transducers (SPUDTs)40,41, can increase the acoustic bandwidth, and thus the FOV, as well as the beam-steering efficiency. By using higher-quality material platforms30,33, the acoustic loss can be reduced so that a much longer aperture can be achieved (Supplementary Information 2). Moreover, the electro–optic modulator needed for FMCW can be co-integrated on the LN platform (Fig. 4g)33,42, making a fully monolithic transmitter module. With these improvements, a multi-element, chip-scale AOBS system can afford efficient 2D beam steering over a large FOV. The combined advantages of simple device structure, simple beam-steering control, frequency-domain resolving capability, miniature form factor and low cost make the demonstrated AOBS-based LiDAR a promising technology.
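The quoted imaging rates follow from two idealizing assumptions, made explicit in the Python arithmetic below: one pixel per 1.5 μs switching interval, and linear scaling with the number of simultaneous beams (detection time, overheads and the vertical scan are ignored).

```python
switch_time_s = 1.5e-6        # electronics-limited point-to-point switching time
channels = 16                 # simultaneous beams, as in Fig. 2e
single = 1 / switch_time_s    # pixels per second with one beam (assumption: 1 pixel/switch)
parallel = channels * single  # assumes every channel runs at the same rate
print(f"{single / 1e6:.2f} Mpixel/s, {parallel / 1e6:.1f} Mpixel/s")  # 0.67 Mpixel/s, 10.7 Mpixel/s
```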

Methods

Device fabrication

The AOBS devices were fabricated on an x-cut LNOI substrate consisting of a 300-nm-thick LN layer and a 2-μm-thick silicon dioxide layer on a 500-μm-thick silicon substrate. The optical grating coupler was patterned by electron-beam lithography using the negative resist hydrogen silsesquioxane. The IDT was then patterned by electron-beam lithography using a positive resist (ZEP-520), followed by lift-off of a 180-nm-thick aluminium film. The IDT has periods chirped from 1.45 to 1.75 μm and a total of 45 pairs of electrode fingers for better impedance matching.

Optical characterization setup

The beam-steering patterns and profiles shown in Fig. 2 were captured with the optical measurement setups shown in Extended Data Fig. 6. An IR camera (Xenics Xeva 320) was used to capture the images. For real-space imaging of the beam profile at the AOBS aperture (Fig. 2c), a 4f system as shown in Extended Data Fig. 6a was used. For k-space imaging of the beam profiles across the whole FOV, a ×10 near-IR objective lens was used to project the steered beam onto the Fourier plane, where the patterns were captured by a 4f imaging system with tunable magnification. To calibrate the k-space measurement, a collimated laser beam was directed onto a reflective diffraction grating (Thorlabs GR1325) at an incident angle θi, and the measured diffraction pattern was used to calibrate the system via the standard grating equation.
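The calibration step can be sketched in Python as follows. Under one common sign convention the grating equation reads sin θm = sin θi + mλ/Λg for order m and groove period Λg; the sketch predicts first-order angles for several incidence angles and fits a linear map from camera pixel to sin θ, which assumes the objective obeys the sine condition (Fourier-plane position proportional to sin θ). The groove density (600 lines per mm), the order m = −1, the wavelength and the pixel positions are all hypothetical: the pixels are synthesized from an assumed linear map so that the fit has a known answer.

```python
import numpy as np


def diffraction_angle_deg(theta_i_deg, wavelength_m, grooves_per_mm, order=1):
    """Grating equation (one sign convention): sin(theta_m) = sin(theta_i) + m * lambda / period."""
    period_m = 1e-3 / grooves_per_mm
    s = np.sin(np.radians(theta_i_deg)) + order * wavelength_m / period_m
    if np.any(np.abs(s) > 1):
        raise ValueError("requested order is evanescent")
    return np.degrees(np.arcsin(s))


# Hypothetical calibration run: 600 lines/mm, 1.55 um light, order m = -1.
theta_i = np.array([30.0, 40.0, 50.0, 60.0])
theta_m = diffraction_angle_deg(theta_i, 1.55e-6, 600, order=-1)

# Hypothetical spot positions (pixels), synthesized from an assumed linear map.
pixels = 240.0 + 400.0 * np.sin(np.radians(theta_m))

# Fit sin(theta) = a * pixel + b (valid if the objective obeys the sine condition).
a, b = np.polyfit(pixels, np.sin(np.radians(theta_m)), 1)


def pixel_to_angle_deg(px):
    """Convert a camera pixel coordinate to a steering angle via the fitted map."""
    return np.degrees(np.arcsin(a * px + b))
```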

Coherent receiver setup

The FAR and FMCW measurements shown in Figs. 3 and 4 were performed with a coherent receiver in the configuration shown in Fig. 3a. The output of a diode laser (Thorlabs ULN15PC) was first modulated by an EOM (Thorlabs LNP6118) to generate two modulation sidebands, which were chirped for the FMCW measurement (this step is not needed for the FAR measurement). The EOM was driven by an arbitrary waveform generator (AWG; Tektronix AWG70000B) and an amplifier. One per cent of the optical power was tapped off as the LO for the receiver, and the remaining power was coupled into the AOBS, which was driven by an amplified microwave source, either an AWG or a vector network analyser (Keysight E8362B) for multi-beam or single-beam steering, respectively. The light reflected from the object was collected with a lens (focal length f = 75 mm; aperture D = 50 mm) and a single-mode fibre (NA = 0.14). The end of the fibre was placed in front of the focal plane (d = 66.3 mm) to collect reflected light within the FOV, although the collection efficiency is low, at an estimated −55 dB (Supplementary Information 7). The signal was amplified by a low-noise erbium-doped fibre amplifier (Pritel LNHP-PMFA-23) and fed into a 50/50 fibre coupler, where it beat with the LO. The beat signal was measured with a BPD (Thorlabs PDB482C-AC), whose output was digitized and analysed with a spectrum analyser (Tektronix RSA5100B).
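To put the −55 dB collection efficiency in context, the Python sketch below estimates the beat amplitude at the balanced detector using the standard heterodyne relation for an ideal 50/50 coupler with perfect mode overlap, i = 2R√(P_LO P_s). Only the −55 dB figure comes from the text; the returned optical power, the amplifier gain, the LO power and the responsivity are hypothetical placeholders, so the printed numbers illustrate the scaling rather than the experiment.

```python
import math


def db_to_linear(db):
    """Convert a power ratio in dB to a linear factor."""
    return 10 ** (db / 10)


def beat_current_amplitude(p_lo_w, p_sig_w, responsivity_a_per_w=1.0):
    """Peak differential photocurrent of a balanced detector after an ideal 50/50
    coupler: i = 2 R sqrt(P_LO * P_s), assuming perfect mode overlap."""
    return 2 * responsivity_a_per_w * math.sqrt(p_lo_w * p_sig_w)


# Hypothetical link budget (only the -55 dB collection efficiency is from the text).
p_returned = 1e-3                       # assumed power returned toward the receiver (W)
eta_collect = db_to_linear(-55.0)       # collection efficiency into the fibre
gain = db_to_linear(20.0)               # assumed EDFA gain
p_lo = 0.5e-3                           # assumed LO power at the coupler (W)

p_sig = p_returned * eta_collect * gain
i_beat = beat_current_amplitude(p_lo, p_sig)
print(f"P_s = {p_sig * 1e6:.2f} uW, beat amplitude = {i_beat * 1e6:.1f} uA")  # P_s = 0.32 uW, beat amplitude = 25.1 uA
```

Because the beat amplitude scales with √(P_LO P_s), a strong LO partially compensates for the weak collected signal, which is one motivation for coherent detection here.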

Data availability

Source data are provided with this paper.

Code availability

No custom computer code or mathematical algorithm was used to generate the results that are reported in this study.

References

Amann, M.-C., Bosch, T. M., Lescure, M., Myllylae, R. A. & Rioux, M. Laser ranging: a critical review of unusual techniques for distance measurement. Opt. Eng. 40, 10–19 (2001).

Berkovic, G. & Shafir, E. Optical methods for distance and displacement measurements. Adv. Opt. Photonics 4, 441–471 (2012).

Schwarz, B. Mapping the world in 3D. Nat. Photon. 4, 429–430 (2010).

Kim, I. et al. Nanophotonics for light detection and ranging technology. Nat. Nanotechnol. 16, 508–524 (2021).

Sun, J., Timurdogan, E., Yaacobi, A., Hosseini, E. S. & Watts, M. R. Large-scale nanophotonic phased array. Nature 493, 195–199 (2013).

Poulton, C. V. et al. Coherent solid-state LIDAR with silicon photonic optical phased arrays. Opt. Lett. 42, 4091–4094 (2017).

Liu, Y. & Hu, H. Silicon optical phased array with a 180-degree field of view for 2D optical beam steering. Optica 9, 903–907 (2022).

Hutchison, D. N. et al. High-resolution aliasing-free optical beam steering. Optica 3, 887–890 (2016).

McManamon, P. F. et al. Optical phased array technology. Proc. IEEE 84, 268–298 (1996).

Shaltout, A. M. et al. Spatiotemporal light control with frequency-gradient metasurfaces. Science 365, 374–377 (2019).

Li, S. Q. et al. Phase-only transmissive spatial light modulator based on tunable dielectric metasurface. Science 364, 1087–1090 (2019).

Zhang, X., Kwon, K., Henriksson, J., Luo, J. & Wu, M. C. A large-scale microelectromechanical-systems-based silicon photonics LiDAR. Nature 603, 253–258 (2022).

Rogers, C. et al. A universal 3D imaging sensor on a silicon photonics platform. Nature 590, 256–261 (2021).

Trocha, P. et al. Ultrafast optical ranging using microresonator soliton frequency combs. Science 359, 887–891 (2018).

Riemensberger, J. et al. Massively parallel coherent laser ranging using a soliton microcomb. Nature 581, 164–170 (2020).

Jiang, Y., Karpf, S. & Jalali, B. Time-stretch LiDAR as a spectrally scanned time-of-flight ranging camera. Nat. Photon. 14, 14–18 (2020).

Brillouin, L. Diffusion of light and X-rays by a transparent homogeneous body. Ann. Phys. 17, 88–122 (1922).

Boyd, R. W. Nonlinear Optics 3rd edn (Academic Press, 2008).

Behroozpour, B., Sandborn, P. A., Wu, M. C. & Boser, B. E. Lidar system architectures and circuits. IEEE Commun. Mag. 55, 135–142 (2017).

Poulton, C. V. et al. Coherent LiDAR with an 8,192-element optical phased array and driving laser. IEEE J. Sel. Top. Quantum Electron. 28, 1–8 (2022).

Korpel, A. Acousto-optics 2nd edn (Marcel Dekker, 1997).

Lohmann, A. W., Dorsch, R. G., Mendlovic, D., Zalevsky, Z. & Ferreira, C. Space–bandwidth product of optical signals and systems. J. Opt. Soc. Am. A 13, 470–473 (1996).

Shelby, R. M., Levenson, M. D. & Bayer, P. W. Guided acoustic-wave Brillouin scattering. Phys. Rev. B Condens. Matter 31, 5244–5252 (1985).

Tsai, C. S. Guided-wave Acousto-optics: Interactions, Devices, and Applications (Springer-Verlag, 1990).

Liu, Q., Li, H. & Li, M. Electromechanical Brillouin scattering in integrated optomechanical waveguides. Optica 6, 778–785 (2019).

Li, H., Liu, Q. & Li, M. Electromechanical Brillouin scattering in integrated planar photonics. APL Photonics 4, 080802 (2019).

Shao, L. et al. Integrated microwave acousto-optic frequency shifter on thin-film lithium niobate. Opt. Express 28, 23728–23738 (2020).

Hinkov, V. Proton exchanged waveguides for surface acoustic waves on LiNbO3. J. Appl. Phys. 62, 3573–3578 (1987).

Smalley, D. E., Smithwick, Q. Y., Bove, V. M. Jr., Barabas, J. & Jolly, S. Anisotropic leaky-mode modulator for holographic video displays. Nature 498, 313–317 (2013).

Mayor, F. M. et al. Gigahertz phononic integrated circuits on thin-film lithium niobate on sapphire. Phys. Rev. Appl. 15, 014039 (2021).

Tsai, C. Guided-wave acoustooptic Bragg modulators for wide-band integrated optic communications and signal processing. IEEE Trans. Circuits Syst. 26, 1072–1098 (1979).

Bajak, I., McNab, A., Richter, J. & Wilkinson, C. Attenuation of acoustic waves in lithium niobate. J. Acoust. Soc. Am. 69, 689–695 (1981).

Xu, Y. et al. Bidirectional interconversion of microwave and light with thin-film lithium niobate. Nat. Commun. 12, 4453 (2021).

Endres, M. et al. Atom-by-atom assembly of defect-free one-dimensional cold atom arrays. Science 354, 1024–1027 (2016).

Barnes, K. et al. Assembly and coherent control of a register of nuclear spin qubits. Nat. Commun. 13, 2779 (2022).

Kunita, M. Range measurement in ultrasound FMCW system. Electron. Commun. Jpn 3 90, 9–19 (2007).

Hao-Hsien, K., Kai-Wen, C. & Hsuan-Jung, S. Range resolution improvement for FMCW radars. In Proc. 5th European Radar Conference 352–355 (IEEE, 2008).

Li, C. et al. Blind zone-suppressed hybrid beam steering for solid-state Lidar. Photonics Res. 9, 1871–1880 (2021).

Simpson, M. L. et al. Coherent imaging with two-dimensional focal-plane arrays: design and applications. Appl. Opt. 36, 6913–6920 (1997).

Morgan, D. P. Surface Acoustic Wave Filters: With Applications to Electronic Communications and Signal Processing 2nd edn (Academic Press, 2007).

Lehtonen, S., Plessky, V. P., Hartmann, C. S. & Salomaa, M. M. Unidirectional SAW transducer for gigahertz frequencies. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 50, 1404–1406 (2003).

Zhang, M., Wang, C., Kharel, P., Zhu, D. & Lončar, M. Integrated lithium niobate electro-optic modulators: when performance meets scalability. Optica 8, 652–667 (2021).

Acknowledgements

This work is supported by the Convergence Accelerator programme of the National Science Foundation (award no. ITE-2134345) and the DARPA MTO SOAR programme. Part of this work was conducted at the Washington Nanofabrication Facility/Molecular Analysis Facility, a National Nanotechnology Coordinated Infrastructure (NNCI) site at the University of Washington with partial support from the National Science Foundation via award nos. NNCI-1542101 and NNCI-2025489.

Author information

Contributions

M.L. conceived and supervised the research. B.L. and Q.L. designed and fabricated the devices, performed the experiments, conducted the simulation and derived the theory. All authors jointly analysed the data and wrote the manuscript.

Ethics declarations

Competing interests

B.L., Q.L. and M.L. have filed a patent application (application serial number PCT/US2023/061401) on the FAR LiDAR based on the AOBS technology presented in this paper. M.L. has filed a US patent application (application number 17/602,415) on the integrated AOBS technology presented in this paper.

Peer review

Peer review information

Nature thanks Linbo Shao, Kan Wu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 Acoustic mode characteristics.

a, RF reflection coefficient S11 and electromechanical conversion efficiency of the chirped IDT, obtained from the modified Butterworth–Van Dyke (mBVD) model. The red shaded area marks the Rayleigh-mode acoustic wave that is responsible for acousto-optic beam steering in our device. This mode has an electromechanical conversion efficiency of ~6.4% over a 350-MHz bandwidth. The grey shaded area marks several bulk-mode bands. Although these modes are excited more efficiently, they are ineffective for acousto-optic beam steering because they are largely confined in the SiO2 layer. b, Simulation of the Rayleigh-mode acoustic wave, showing that the mode is confined in the LN layer.
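The mBVD model referred to in panel a represents a transducer as a static capacitance C0 with a series loss R0, in parallel with a motional R–L–C branch. The motional resistance Rm stands for power radiated as acoustic waves. A minimal Python sketch of one common form of this circuit follows. It computes S11 against a 50 Ω port and a conversion efficiency, which is defined here (our assumption) as the fraction of incident RF power dissipated in Rm.

All element values and the 2 GHz series resonance are hypothetical and chosen only for illustration. They are not the fitted parameters of the chirped IDT. A single motional branch also cannot reproduce the chirped, multi-mode response shown in the figure.

```python
import math

# Hypothetical mBVD element values (illustration only, not the device in this paper).
F_SERIES = 2.0e9           # assumed series-resonance frequency of the motional branch, Hz
C0 = 1.0e-12               # static (clamped) IDT capacitance, F
R0 = 2.0                   # loss resistance in series with C0, ohm
RM = 40.0                  # motional resistance representing acoustic radiation, ohm
LM = 100e-9                # motional inductance, H
CM = 1.0 / ((2 * math.pi * F_SERIES) ** 2 * LM)  # motional capacitance giving F_SERIES
Z0 = 50.0                  # reference impedance of the RF port, ohm


def branch_impedances(f):
    """Return (static, motional) branch impedances at frequency f in Hz."""
    w = 2 * math.pi * f
    z_static = R0 + 1 / (1j * w * C0)
    z_motional = RM + 1j * w * LM + 1 / (1j * w * CM)
    return z_static, z_motional


def s11(f):
    """Complex reflection coefficient of the two branches in parallel, referenced to Z0."""
    z_s, z_m = branch_impedances(f)
    z = 1 / (1 / z_s + 1 / z_m)
    return (z - Z0) / (z + Z0)


def conversion_efficiency(f):
    """Fraction of incident RF power dissipated in RM (one possible definition)."""
    z_s, z_m = branch_impedances(f)
    # Both branches see the same voltage, so V = 1 suffices for power ratios (P = R / |Z|^2).
    p_motional = RM / abs(z_m) ** 2
    p_static = R0 / abs(z_s) ** 2
    accepted = 1 - abs(s11(f)) ** 2   # power not reflected back to the source
    return accepted * p_motional / (p_motional + p_static)


def series_resonance():
    """Series-resonance frequency of the motional branch, Hz."""
    return 1 / (2 * math.pi * math.sqrt(LM * CM))


if __name__ == "__main__":
    for f_ghz in (1.8, 1.9, 2.0, 2.1, 2.2):
        f = f_ghz * 1e9
        print(f"{f_ghz:.1f} GHz  |S11| = {20 * math.log10(abs(s11(f))):6.2f} dB  "
              f"efficiency = {conversion_efficiency(f):.3f}")
```

Because both branches contain positive resistances, the network is passive. As a result, |S11| never exceeds 1, and the efficiency defined this way stays between 0 and 1.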

Extended Data Fig. 2 Beam profile analysis.

a, k-space beam profiles (grey circles) and their Lorentzian fits (orange lines) at two different steering angles. b, Experimental (grey circles) and theoretical (orange line) frequency–angle relation of the AOBS device. The theoretical results were calculated from the SAW dispersion and the TE0-mode effective index simulated with COMSOL, and they are in excellent agreement with the experimental results. c, Fitted beam width (FWHM) of the measured beam spots at different angles (blue circles) and theoretical beam width (orange line), showing an average divergence angle of 0.123° ± 0.022°.
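Panel b compares the measured frequency–angle relation with a calculation based on the SAW dispersion and the TE0 effective index. The phase-matching condition behind such a calculation can be sketched as sin θ = n_eff − λf/v_SAW. This form rests on simplifying assumptions that are ours rather than the paper's:

- light is emitted into air;
- θ is measured from the surface normal;
- the in-plane wavevector of the scattered light is k0·n_eff − 2πf/v_SAW;
- both n_eff and v_SAW are constants.

N_EFF and V_SAW below are hypothetical placeholders, not the COMSOL results. The inverse function shows how a frequency shift labels a steering angle, which is what allows a single coherent receiver to resolve angle in the frequency domain.

```python
import math

WAVELENGTH = 1.55e-6   # vacuum optical wavelength, m (assumed)
N_EFF = 1.80           # effective index of the guided TE0 mode (hypothetical)
V_SAW = 3700.0         # SAW phase velocity, m/s, treated as dispersion-free (hypothetical)


def emission_angle_deg(f_rf):
    """Emission angle into air, from the surface normal, for RF drive frequency f_rf in Hz."""
    s = N_EFF - WAVELENGTH * f_rf / V_SAW
    if abs(s) >= 1.0:
        raise ValueError("no radiating solution: the scattered light stays guided")
    return math.degrees(math.asin(s))


def drive_frequency(theta_deg):
    """Inverse map: frequency shift (equal to the RF drive frequency) labelling angle theta_deg."""
    return V_SAW * (N_EFF - math.sin(math.radians(theta_deg))) / WAVELENGTH


if __name__ == "__main__":
    for f_ghz in (2.8, 2.9, 3.0, 3.1, 3.2):
        print(f"{f_ghz:.1f} GHz -> {emission_angle_deg(f_ghz * 1e9):6.2f} deg")
```

With these placeholder values, sweeping f from 2.8 to 3.2 GHz moves the beam roughly from 39° to 27°. The angles of a real device depend on its measured dispersion.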

Extended Data Fig. 3 AOBS dynamic response.

The temporal response of one AOBS pixel was characterized by measuring the output optical power of the beam (b) while the RF drive signal was modulated by a square wave (a). The measured 20–80% rise time is 1.53 μs.
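A 20–80% rise time like the one quoted here is read from the rising edge of the optical power trace. The minimal sketch below makes three assumptions:

- the trace is a list of time and power samples covering one clean rising edge;
- the trace minimum and maximum serve as the low and high reference levels;
- values between samples are found by linear interpolation.

Practical analyses usually average the baseline and plateau first and smooth the trace, because a first-crossing search is sensitive to noise. The function names are hypothetical.

```python
import math


def _first_crossing(t, p, level):
    """Linearly interpolated time at which p first rises through level."""
    for i in range(1, len(p)):
        if p[i - 1] < level <= p[i]:
            frac = (level - p[i - 1]) / (p[i] - p[i - 1])
            return t[i - 1] + frac * (t[i] - t[i - 1])
    raise ValueError("trace never rises through the requested level")


def rise_time_20_80(t, p):
    """20-80 % rise time of a single rising edge sampled at times t."""
    low, high = min(p), max(p)
    span = high - low
    return _first_crossing(t, p, low + 0.8 * span) - _first_crossing(t, p, low + 0.2 * span)


if __name__ == "__main__":
    # Synthetic first-order step response with an assumed time constant tau (not measured data).
    tau = 1.0e-6
    t = [i * tau / 1000 for i in range(20001)]
    p = [1 - math.exp(-ti / tau) for ti in t]
    # For 1 - exp(-t/tau) the exact 20-80 % rise time is tau * ln(4).
    print(rise_time_20_80(t, p), tau * math.log(4))
```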

Extended Data Fig. 4 3D imaging of an object.

a, 3D point-cloud image, obtained with FMCW ranging and FAR LiDAR, of a letter "W" cut-out made of retroreflective film and placed 1.8 m away. The frequency chirp excursion is increased to fE = 10 GHz to improve the depth measurement resolution. b, Photograph of the "W" cut-out, which is 4.6 cm wide and 5.0 cm tall. The cut-out is tilted to demonstrate depth measurement. c, X–Z plane projection of the captured image, showing a depth measurement resolution (standard deviation, shaded area) of 7.5 mm. The standard deviation varies because of the finite spot size on the target (3.0 mm horizontally and 3.8 mm vertically) and the geometry of the target.
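For a linear frequency chirp with excursion fE, the nominal FMCW range resolution is c/(2fE). That is about 15 mm for fE = 10 GHz. The 7.5 mm quoted in panel c is a standard deviation of the measured depth, which is a different quantity from this two-target resolution.

The sketch below also converts a ranging beat frequency to distance for a stationary target. T_CHIRP is a hypothetical chirp duration that is not given in this caption. Doppler shifts and the acousto-optic frequency label are ignored here.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s
T_CHIRP = 10e-6    # hypothetical chirp duration, s (not from the paper)


def range_resolution(f_excursion):
    """Nominal two-target range resolution c / (2 fE) of a linear chirp, in m."""
    return C / (2 * f_excursion)


def beat_frequency(distance, f_excursion, t_chirp=T_CHIRP):
    """Beat frequency from a stationary target: chirp slope times round-trip delay."""
    slope = f_excursion / t_chirp
    return slope * 2 * distance / C


def distance_from_beat(f_beat, f_excursion, t_chirp=T_CHIRP):
    """Inverse of beat_frequency: distance in m for a measured beat frequency in Hz."""
    slope = f_excursion / t_chirp
    return C * f_beat / (2 * slope)


if __name__ == "__main__":
    f_e = 10e9
    print(f"range resolution: {range_resolution(f_e) * 1e3:.2f} mm")
    fb = beat_frequency(1.8, f_e)
    print(f"beat for 1.8 m: {fb / 1e6:.2f} MHz -> {distance_from_beat(fb, f_e):.3f} m")
```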

Extended Data Fig. 5 Demonstration of longer-range LiDAR imaging.

a, FAR LiDAR signal from a target at distances between 2 and 12 m. The SNR remains >20 dB over this range. b, Photograph of our laboratory LiDAR demonstration, in which targets as far as ~12 m away are imaged by our LiDAR system. c, Dependence of the LiDAR signal SNR on the distance R, fitted with 1/R². Extrapolating the fit to an SNR of 3 dB indicates a measurement distance of up to 115 m. d,e, 3D LiDAR images of a circular target (a driveway marker) (d) and a triangular target (a retroreflective tape cut-out) (e) at 8.55 m.
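Panel c fits the SNR with a 1/R² law and extrapolates the fit to 3 dB. Assume the SNR is a power ratio quoted in dB. A 1/R² law then becomes a straight line with a fixed slope of −20 dB per decade of distance, so only the intercept needs fitting.

The sketch below fits that intercept by least squares and solves for the range at which the fit reaches a chosen threshold. The data in the usage example are synthetic and generated from the model itself. They are not the measurements shown in the figure.

```python
import math


def fit_inverse_square_db(ranges_m, snr_db):
    """Least-squares intercept a of SNR_dB = a - 20*log10(R), slope fixed by the 1/R^2 law."""
    # With the slope fixed, the least-squares intercept is the mean of SNR_dB + 20*log10(R).
    shifted = [s + 20 * math.log10(r) for r, s in zip(ranges_m, snr_db)]
    return sum(shifted) / len(shifted)


def extrapolated_range(intercept_db, threshold_db=3.0):
    """Distance in m at which the fitted SNR drops to threshold_db."""
    return 10 ** ((intercept_db - threshold_db) / 20)


if __name__ == "__main__":
    # Synthetic, noise-free data generated from an assumed 40 dB intercept.
    ranges = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
    snr = [40.0 - 20 * math.log10(r) for r in ranges]
    a = fit_inverse_square_db(ranges, snr)
    print(f"intercept {a:.2f} dB, range at 3 dB SNR: {extrapolated_range(a):.1f} m")
```

Fixing the slope reflects the assumed physics. Freeing it would turn the fit into an ordinary linear regression in log R, and its extrapolation would be more sensitive to noise at the longest measured distances.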

Extended Data Fig. 6 Schematics of optical characterization setups.

a, 4f system directly imaging the real-space beam profile at the emission aperture. b, Setup for imaging the k-space beam profile.

Supplementary information

Supplementary Sections 1–9, including Figs. 1–10 and references; see Table of Contents for details.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, B., Lin, Q. & Li, M. Frequency–angular resolving LiDAR using chip-scale acousto-optic beam steering. Nature 620, 316–322 (2023). https://doi.org/10.1038/s41586-023-06201-6

DOI: https://doi.org/10.1038/s41586-023-06201-6

© Springer Nature Limited
