Abstract

The Joint Task Force for Clinical Trial Competency (JTF) conducted a global survey of clinical research professionals, asking respondents to self-assess their competencies in each of the eight domains of its Core Competency Framework version 3.1. The results were analyzed based upon role, years of experience, educational level, professional certification, institutional affiliation, and continuing education participation. Respondents with professional certification self-assessed their competencies in all domains at higher levels than those without professional certification. The survey demonstrated that irrespective of role, experience, or educational level, training curricula in both pre-professional and continuing professional education should include additional content relating to research methods, protocol design, medical product development and regulation, and data management and informatics. These results validate and extend the recommendations of a similar 2016 JTF survey and of other surveys. We further recommend that clinical and translational research organizations and clinical sites assess training needs locally, using both subjective and objective measures of skill and knowledge.

Introduction

In 2014, the Joint Task Force for Clinical Trial Competency (JTF) published its Core Competency Framework [1] that described eight domains and 51 core competencies to define the knowledge, skills, and attitudes necessary for professionals to conduct ethical, high quality, and safe clinical research. Several issues relating to the conduct of clinical research prompted the JTF to develop the Framework: there was little or no standardization of role descriptions; no generally accepted knowledge base or skill set that defined an entry level, skilled, or expert professional; no required educational preparation for entry into the field; and hiring and promotions were based primarily on previous experience with no definition of experiential content. Since its original publication, the JTF Framework has been utilized internationally by academic institutions, corporate entities, professional associations, educational and training programs, and regulatory agencies [2,3,4,5,6,7,8,9] and integrated into their activities. In 2017, the Multi-Regional Clinical Trials Center of Brigham and Women’s Hospital and Harvard (MRCT Center) assumed responsibility for the JTF and helped to broaden the recognition and integration of the Framework into the clinical research enterprise through facilitation of an ongoing update process, translation of the Framework into Spanish and Japanese, and creation of a comprehensive and highly interactive web site (https://mrctcenter.org/clinical-trial-competency/). The JTF has continually updated and expanded the scope of the Framework to include additional roles (e.g., project management) and reflect changes in the scientific understanding and methodology utilized in conducting clinical research. In 2018, the Framework version 3.1 was expanded to reflect the different competencies required of Entry (Fundamental) level, Mid-level (Skilled), and Expert (Advanced) level professionals [10].

In 2016, the JTF published the results of a global survey [11] in which members of the clinical research enterprise self-assessed their competency based upon the JTF Framework. The results of that survey were used to help better define the varying roles within the clinical research enterprise and inform the major educational and training needs within the workforce. Given the further global dissemination of the Framework over time, its partitioning into Fundamental, Skilled, and Advanced competencies, and the significant expansion and societal recognition of the clinical research enterprise, the JTF wished to reassess the potential of the Framework to guide ongoing professional development. The JTF also considered it important to characterize the current competencies of different roles in this workforce to determine how integration of the Framework will continue to impact the enterprise. Consequently, the JTF developed a second iteration of the global survey of self-assessed competency utilizing the latest version of the Framework, to determine how self-assessed competency varies across the roles, experience, professional certifications, and academic preparation of the workforce. This publication describes that survey, the global response, and current recommendations for use of the Framework to guide the ongoing education and training of the clinical research workforce.

Methods

Survey Tool and Participant Recruitment

An electronic survey tool was developed on the REDCap online survey platform. It was based on the 2016 survey but asked respondents to self-assess competence in each domain rather than for each specific competency. These modifications enabled comparison of the results to the 2016 survey while minimizing survey fatigue and increasing the ease of digital distribution and response. The survey was provided in both English and Spanish. The survey included a self-assessment of perceived personal competence in each of the eight domains of the JTF Core Competency Framework. Responses were requested on a Likert scale from 1 to 10, and specific examples of competency for each domain were described at the Fundamental Level (1–3), the Skilled Level (4–7), and the Expert Level (8–10). A demographic component of the survey followed, asking for professional role, experience, academic degree level, and geographic location, along with several questions concerning participation in education and training programs. Respondents were also asked whether they held certifications from recognized professional bodies in clinical research, since such certification is a common mechanism used to recognize qualification in the field.
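The banding of the 1 to 10 scale described above can be written as a small lookup. The following Python sketch is illustrative only: the function name `competency_level` is hypothetical and is not part of the survey instrument or of the REDCap project used in the study.

```python
def competency_level(score: int) -> str:
    """Map a 1-10 self-assessment score to the level band stated in the survey.

    Bands (from the survey description): Fundamental 1-3, Skilled 4-7, Expert 8-10.
    The function itself is an illustrative assumption, not the authors' code.
    """
    # Reject anything that is not a whole-number score on the 1-10 scale.
    if isinstance(score, bool) or not isinstance(score, int) or not 1 <= score <= 10:
        raise ValueError("score must be an integer from 1 to 10")
    if score <= 3:
        return "Fundamental"
    if score <= 7:
        return "Skilled"
    return "Expert"
```

For instance, a respondent who selects 7 in a domain falls in the Skilled band, while a respondent who selects 8 falls in the Expert band.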

A copy of the survey instrument is provided as Supplemental Table 1.

Individuals working in clinical research, inclusive of the roles of principal/co-principal investigator (PI/Co-PI), clinical research associate/monitor (CRA), clinical research coordinator/nurse (CRC/CRN), regulatory affairs professional (RA), clinical project manager/research manager (PM/RM), and educator/trainer were targeted as survey participants.

A snowball sampling approach was used for survey dissemination that included outreach through professional contacts, social media, and the collaboration of related professional organizations. The survey was launched on June 1, 2020 and closed on November 30, 2020. Participation in the survey was anonymous and no record of the IP addresses of respondents was collected. Because this survey was devised as a snowball sample, population denominators could not be estimated, and the results were not interpreted as being representative of the clinical and translational research workforce considered as a whole. Descriptive statistics were used to evaluate the distribution of respondents’ assessment scores within and across subgroups of the workforce.
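As a rough illustration of the descriptive approach, the sketch below averages self-assessment scores for one domain within each subgroup. It is a minimal example under stated assumptions: the records in `SAMPLE` are invented for illustration and are not survey data, and the function name `mean_score_by_group` and the record layout are hypothetical rather than the analysis code used in the study.

```python
from collections import defaultdict
from statistics import mean

# Invented example records (NOT survey data); field names are assumptions.
SAMPLE = [
    {"role": "CRC/CRN", "Data Management and Informatics": 5},
    {"role": "CRC/CRN", "Data Management and Informatics": 7},
    {"role": "PM/RM", "Data Management and Informatics": 8},
]


def mean_score_by_group(responses, group_field, domain):
    """Return the mean score for `domain` within each value of `group_field`."""
    grouped = defaultdict(list)
    for response in responses:
        # Collect each respondent's domain score under their subgroup label.
        grouped[response[group_field]].append(response[domain])
    return {group: mean(scores) for group, scores in grouped.items()}


result = mean_score_by_group(SAMPLE, "role", "Data Management and Informatics")
```

The same grouping could be repeated with years of experience, degree level, certification status, or organizational affiliation as the grouping field. Because the sample was recruited by snowball sampling, such means describe the respondents only and should not be read as population estimates.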

The research protocol was approved as “exempt” research by the Massachusetts General Brigham (Partners) Institutional Review Board.

Results

Survey responses were received from 825 individuals across the globe. A total of 661 (80%) completed the entire survey; only the results of completed surveys were analyzed.

Perceptions of Competency by Role

Table 1 shows the average level of self-assessed competency by domain and by role. As expected, roles with supervisory or education/training responsibilities scored higher. Based upon the average rating, all members of the clinical research team rate themselves as functioning within the Skilled or Advanced category. Respondents reported having the lowest levels of self-confidence in the domains of Scientific Concepts and Research Design, Investigational Product Development and Regulation, and in Data Management and Informatics.

Competency by Experience

Table 2 shows the average level of self-assessed competency by experience for each of the domains of the JTF Competency Framework for individuals working in clinical research. As would be expected, the competency level rises as years of experience increase. Generally, the hiring requirements for positions working with sponsors and CROs state that applicants must have more than 2 years of experience [12]. The Association of Clinical Research Professionals (ACRP) and the Society of Clinical Research Associates (SoCRA), the two major professional certifying bodies for clinical research professionals, require 2 years of experience to qualify to take their certification examinations [13, 14]. It is notable that respondents with 0–2 years of experience self-assess at levels much lower than those with 3 or more years of experience for all domains.

Competency by Academic Degree Level

Table 3 shows the average self-assessed competency level by academic degree level for the eight domains of the JTF Competency Framework. Considered overall, the level of self-assessed competency increases steadily for each domain with the level of academic degree. Respondents holding no academic degree rated themselves at lower levels of competency in all of the domains. Irrespective of educational degree, lower levels of competency are observed in the domains of Ethical and Safety Considerations, Investigational Product Development and Regulation, and Data Management and Informatics. Ratings of Study and Site Management were more variable.

Professional Certification and Self-assessed Competency

Two professional credentialing organizations for the clinical research profession are the Association of Clinical Research Professionals (ACRP) and the Society of Clinical Research Associates (SoCRA). Of the respondents who completed the survey, 274 reported being certified by ACRP, SoCRA, or both, and 306 reported holding no professional certification. Table 4 shows the average self-assessed competency ratings of ACRP and SoCRA certified and non-certified clinical research professionals for each of the JTF Competency Framework domains. Respondents with no professional certification rate themselves lower in each of the JTF Framework domains than those who are professionally certified by ACRP and/or SoCRA.

Competency by Organizational Affiliation

The clinical research enterprise is composed of sponsors, contract research organizations (CROs), clinical sites (both in academic institutions and private), and academic institutions that educate clinical research professionals. Table 5 shows the average self-assessed competency level by organizational affiliation and by domain. As can be seen, the averaged self-assessments are at a high level for all domains, approaching Expert in many domains, and there is little variation in self-assessed competency between organizational affiliations. In general, respondents reported lower levels of self-assessed competency in Scientific Concepts and Research Design, Investigational Product Development and Regulation, and Data Management and Informatics regardless of their organizational affiliation. Respondents in teaching-related academic organizations reported higher levels of competency in Scientific Concepts and Research Design than other respondents. Respondents in corporate pharmaceutical organizations reported higher levels of competency in Investigational Product Development and Regulation and in Communication and Teamwork, while individuals in private clinical sites reported lower levels of competency in the Scientific Concepts and Research Design and the Communication and Teamwork domains.

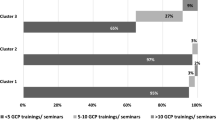

Competency and Education/Training

One of the original objectives of the JTF in developing the Core Competency Framework was to help make clinical research professionals aware of their education and training needs and to stimulate further efforts to enhance their competency. Of the 661 respondents who completed the survey, only 174 (35.7%) indicated that they had attended academic coursework and/or continuing education or training (“coursework/training”) in the past 2 years. Table 6 shows the percentage of respondents who received coursework/training in each of the domains. Among those who did attend coursework or training, the domains with the highest percentages of attendance were Clinical Study Operations (GCPs) (57%) and Ethical and Safety Considerations (46%). These were the domains showing the highest levels of competency in almost all groups. The domains with the lowest percentages of attendance were Data Management and Informatics (25%), Investigational Product Development and Regulation (28%), Communication and Teamwork (28%), and Scientific Concepts and Research Design (30%), which are the domains consistently showing the lowest levels of competency for almost all groups.

Discussion

The 2016 study published by the JTF [11] concluded that the workforce assessed itself as competent in the domains of Ethical and Safety Considerations, Clinical Study Operations (GCPs), and Leadership and Professionalism, but there were significant gaps in the self-assessed competency of individuals employed in the enterprise for other domains, irrespective of role, experience, or educational level. The domains where lower levels of competency were noted were Scientific Concepts and Research Design and Investigational Product Development and Regulation. Competency levels in the domains of Study and Site Management, Data Management and Informatics, and Communication and Teamwork were found to be dependent on role, educational level, and experience.

The findings of this study update and extend this past work in important ways. As with the 2016 study, the results of the 2020 study support the claim that the self-confidence of the clinical and translational research workforce is lowest in the domains of Scientific Concepts and Research Design and Investigational Product Development and Regulation. Similarly, the results of this study suggest that key portions of the workforce lack some confidence in skills associated with Data Management and Informatics and Ethical and Safety Considerations.

Like the 2016 study, the current work also found substantive differences in the self-confidence of respondents based on their role on a study team. Further definition of the JTF Domains to include Fundamental, Skilled, and Advanced levels of competency enabled their inclusion in the current study questionnaire. By the average rating, all member roles of the clinical research team rated themselves as functioning within the Skilled or Advanced categories in each domain; the 2020 results, however, suggest that both Educators/Trainers and Project Managers/Research Managers report higher levels of confidence across domains than respondents whose roles may include more direct contact with study participants. In the case of Project Managers/Research Managers, the self-assessments are higher than those of PIs/Co-PIs. Comparatively high levels of variation in the domain scores were reported by Regulatory Affairs Professionals. And while 2020 respondents working in teaching-related academic organizations reported having a higher level of competency in Scientific Concepts and Research Design, those in corporate pharmaceutical organizations reported higher levels of competency in Investigational Product Development and Regulation and in Communication and Teamwork.

Key differences in the confidence of the workforce persist across education and experience, as was also found in the 2016 study. Specifically, respondents with higher levels of postsecondary education reported higher levels of self-confidence in their research skills, as did respondents who are professionally certified by ACRP and/or SoCRA compared to those who were not. Interestingly, 2020 respondents’ self-confidence in their research skills increased with experience, but not uniformly across all domains, with the scores in the domains of Clinical Study Operations (GCPs) and Data Management and Informatics showing more variability with experience than the other domains. Taken together, these results suggest that the clinical and translational workforce may need further training not only in the domains of Scientific Concepts and Research Design and Investigational Product Development and Regulation, but also in the domains of Data Management and Informatics and Ethical and Safety Considerations. In addition, this evidence indicates that the composition of clinical research teams should be carefully considered, since researchers in specific roles may have considerable expertise in specific areas that their colleagues lack. For example, there may be opportunities for experienced Regulatory Affairs Professionals working in corporate pharmaceutical/biotech organizations to impart their distinctive skills in Investigational Product Development and Regulation to their colleagues, and for experienced Educators/Trainers working in academic teaching organizations to do so in the domain of Scientific Concepts and Research Design. The results of this study reinforce the value of formal education and ACRP/SoCRA certification in increasing researchers’ confidence in skills in every domain.

To inform new recommendations for further enhancing the professional development of the clinical and translational workforce, this study also assessed respondents’ professional development activities. Notably, only 35.7% of respondents reported participating in education/training in the past 2 years. Additionally, the respondents were least likely to report participating in training related to the domains showing the lowest levels of self-confidence. Comparatively low percentages of respondents reported that they received training in Investigational Product Development and Regulation (28%) and Scientific Concepts and Research Design (30%), whereas higher percentages of respondents reported participating in training in Ethical and Safety Considerations (46%) and Clinical Study Operations (GCPs) (57%). Additional training correlated with higher levels of reported self-confidence. This study may be used to guide the professional development of the workforce and to benchmark progress over time, but with certain limitations.

Limitations

The limitations of this study relate to both the methods and applicability of the findings. First, the survey was administered using a snowball sampling procedure given the inherent difficulties in identifying a sample of individuals that would be representative of the clinical and translational research workforce considered as a whole. While the use of snowball sampling was convenient, its use in this study necessarily has the potential to limit the reliability and validity of the results. For this reason, the recommended training needs identified in this study should be further validated by replicating this study within research organizations and groups before being used to guide relevant professional development or advancement opportunities provided to their members.

Second, there are inherent limitations associated with the use of self-assessments for the purpose of measuring clinical and translational research skills and knowledge, since these types of assessments are not objective [15]. Specifically, although subjective and objective competency-assessment scores have been found to increase as a result of completing clinical research training programs [16,17,18,19,20,21,22] and as a result of accruing research experience [23,24,25], some empirical studies have also found few or no significant differences between the self-assessed competence of more advanced and less advanced clinical research professionals [26,27,28]. Although the known-groups validity or between-groups validity [29,30,31] of the assessment used in this study can be supported by comparing the results to those found by other validation studies of these types of assessments, rigorous empirical research demonstrates that the results of subjective and objective assessments of skills often diverge [32, 33]. This limitation reinforces the need not only to replicate this study within clinical and translational research organizations and groups, but also to compare the results of self-assessments with objective assessments of research skills. To date, very few published studies of clinical research competency have utilized rigorous, objective, and reproducible methods of assessment. Such methods of evaluation may be utilized to assess competence in corporate environments, but they are not cited in the professional literature.

Conclusions

Over the past decade, there has been a significant increase in both the number and the complexity of clinical trials [34]. The result has been a growing global demand for clinical research professionals to support workforce needs, which has led to a severe shortage of personnel [35].

Clinical research is one of the few health professions where there is no entry level educational requirement. In addition, there is no required educational background or defined set of competencies that are required to become a clinical research professional [12] and a majority of the current workforce has been trained “on the job.” Although an increasing number of sponsors, clinical sites, and CROs have acknowledged that professional certification improves the level of competency [36] and are requiring professional certification for their new employees, this requirement is not yet standard across the field of clinical and translational science.

This dynamic has motivated efforts by many professional organizations to develop frameworks of defined competencies for the many roles within the clinical research enterprise [37,38,39,40]. The 2014 Joint Task Force for Clinical Trial Competency (JTF) Framework has been widely adopted and utilized to standardize role descriptions [2], define onboarding training and continuing education content [3, 41], inform upward mobility and promotion criteria [5], and define the content for academic programs that educate and train new clinical research professionals [42].

The 2016 JTF global survey of clinical research competency [11] concluded that the workforce self-assessed as generally competent in the domains of Ethical and Safety Considerations, Clinical Study Operations (GCPs), and Leadership and Professionalism. These are the most common domains where continuous training is offered by professional organizations and required by regulatory bodies. The findings of the current survey demonstrate how self-assessed competency in the JTF domains varies by role, experience, education, certification, and organizational type of the respondents. The results inform the recommendation that training be provided in the domains of Scientific Concepts and Research Design, Investigational Product Development and Regulation, and Data Management and Informatics, irrespective of role, educational level, or experience. Equally important is the recommendation that clinical and translational research organizations and clinical sites assess training needs locally, using both subjective and objective measures of skill and knowledge.

The 2016 survey questioned the efficacy of the “on the job” training model and recommended that education in research methods should be required for physicians in their medical school curriculum and that clinical research coordinators and monitors be required to have basic education in the sciences prior to employment. The current survey validates those recommendations and those of others [43, 44] that training curricula include additional content relating to research methods, protocol design and medical product development and regulation, and extends those recommendations to include further training in data management and informatics. The JTF Framework will continue to inform, identify, assess, and address the need for relevant and rigorous training for the workforce.

References

Sonstein SA, Seltzer J, Li R, et al. Moving from compliance to competency: a harmonized core competency framework for the clinical research professional. Clin Res. 2014;28(3):17–235.

Brouwer RN, Deeter C, Hannah D, et al. Using competencies to transform clinical research job classifications. J Res Admin. 2017;48(2):11–25.

Behar-Horenstein LS, Potter JE, Prikhidko A, et al. Training impact on novice and experienced research coordinators. Qual Rep. 2017. https://doi.org/10.46743/2160-3715/2017.3192.

Calvin-Naylor NA, Jones CT, Wartak M, et al. Education and training of clinical and translational study investigators and research coordinators: a competency-based approach. J Clin Transl Sci. 2017;1(1):16–25. https://doi.org/10.1017/cts.2016.2.

Stroo M, Asfaw K, Deeter C, et al. Impact of implementing a competency-based job framework for clinical research professionals on employee turnover. J Clin Transl Sci. 2020; 4(4):331–335. https://doi.org/10.1017/cts.2020.22.

Saunders J, Pimenta K, Zuspan S, et al. Inclusion of the Joint Task Force competency domains in onboarding for CRC’s. Clin Res. 2017. https://doi.org/10.14524/CR-17-0007.

Zozus M, Lazarov A, Smith L. Analysis of professional competencies for the clinical research data management profession: implications for training and professional certification. J Inform Health Biomed. 2017;24(4):737–45.

Shimoda K, Watanabe H. Joint Task Force for Clinical Trial Competency (Japanese translation) Joint Task Force for Clinical Trial Competency 日本語訳. Rinsho/Yakuri Jpn J Clin Pharmacol Ther. 2020. https://doi.org/10.3999/jscpt.51.131.

Lee JY, Lensing SV, Botello-Harbaum MT, Medina R, Zozus M. Assessing clinical investigator’s perceptions of relevance and competency of clinical trial skills: an international AIDS Malignancy Consortium (AMC) study. J Clin Transl Sci. 2020;5:e28. https://doi.org/10.1017/cts.2020.520.

Sonstein SA, Namenek Brouwer RJ, Gluck W, et al. Leveling the Joint Task Force Core competencies for clinical research professionals. Ther Innov Regul Sci. 2018. https://doi.org/10.1177/2168479018799291.

Sonstein S, Silva H, Thomas-Jones C, et al. Global self-assessment of competencies, role relevance, and training needs among clinical research professionals. Clin Res. 2016;30(6):42–9.

Hinkley T. Workforce innovation: let’s focus on competency, not tenure. Clinical Researcher. 2017. https://acrpnet.org/2017/02/01/workforce-innovation-lets-focus-on-competency-not-tenure/. Accessed on 23 May 2021.

ACRP-CP Certification. https://acrpnet.org/certifications/acrp-cp-certification/. Accessed on 1 Nov 2021.

SoCRA Candidate Eligibility. https://www.socra.org/certification/ccrp-certification-exam/candidate-eligibility/. Accessed on 1 Nov 2021.

Ianni PA, Samuels EM, Eakin BL, et al. Assessments of research competencies for clinical investigators: a systematic review. Eval Health Prof. 2019. https://doi.org/10.1177/0163278719896392.

Awaisu A, Kheir N, Alrowashdeh HA, et al. Impact of a pharmacy practice research capacity-building programme on improving the research abilities of pharmacists at two specialised tertiary care hospitals in Qatar: a preliminary study. J Pharm Health Serv Res. 2015;6(3):155–64. https://doi.org/10.1111/jphs.12101.

Ellis JJ, McCreadie SR, McGregory M, et al. Effect of pharmacy practice residency training on residents’ knowledge of and interest in clinical research. Am J Health Syst Pharm. 2007;64(19):2055–63. https://doi.org/10.2146/ajhp070063.

Jeffe DB, Rice TK, Boyington JEA, et al. Development and evaluation of two abbreviated questionnaires for mentoring and research self-efficacy. Ethn Dis. 2017;27(2):179–88. https://doi.org/10.18865/ed.27.2.179.

Lowe B, Hartmann M, Wild B, et al. Effectiveness of a 1-year resident training program in clinical research: a controlled before-and-after study. J Gen Intern Med. 2008;23(2):122–8. https://doi.org/10.1007/s11606-007-0397-8.

Patel MS, Tomich D, Kent TS, et al. A program for promoting clinical scholarship in general surgery. J Surg Educ. 2018;75(4):854–60. https://doi.org/10.1016/j.jsurg.2018.01.001.

Robinson GFWB, Moore CG, McTigue KM, et al. Assessing competencies in a master of science in clinical research program: the comprehensive competency review. Clin Transl Sci. 2015;8(6):770–5. https://doi.org/10.1111/cts.12322.

Robinson GFWB, Switzer GE, Cohen ED, et al. A shortened version of the clinical research appraisal inventory: CRAI-12. Acad Med. 2013;88(9):1340–5. https://doi.org/10.1097/ACM.0b013e31829e75e5.

Lipira L, Jeffe DB, Krauss M, et al. Evaluation of clinical research training programs using the clinical research appraisal inventory. Clin Transl Sci. 2010;3(5):243–8. https://doi.org/10.1111/j.1752-8062.2010.00229.x.

Mullikin EA, Bakken LL, Betz NE. Assessing research self-efficacy in physician-scientists: the clinical research appraisal inventory. J Career Assess. 2007;15(3):367–87. https://doi.org/10.1177/1069072707301232.

Streetman DS, McCreadie SR, McGregory M, et al. Evaluation of clinical research knowledge and interest among pharmacy residents: survey design and validation. Am J Health Syst Pharm. 2006;63(23):2372–7. https://doi.org/10.2146/ajhp060099.

Cruser DA, Brown SK, Ingram JR, et al. Learning outcomes from a biomedical research course for second year osteopathic medical students. Osteopath Med Prim Care. 2010;4:4. https://doi.org/10.1186/1750-4732-4-4.

Cruser DA, Dubin B, Brown SK, et al. Biomedical research competencies for osteopathic medical students. Osteopath Med Prim Care. 2009;3:10. https://doi.org/10.1186/1750-4732-3-10.

Ameredes BT, Hellmich MR, Cestone CM, et al. The Multidisciplinary Translational Team (MTT) model for training and development of translational research investigators. Clin Transl Sci. 2015;8(5):533–41. https://doi.org/10.1111/cts.12281.

DeVellis RF. Scale development. In: Theory and applications. 2nd ed. Newbury Park: Sage; 2003.

Kane MT. An argument-based approach to validity. Psychol Bull. 1992;112(3):527–35.

Sullivan GM. A primer on the validity of assessment instruments. J Med Grad Educ. 2011;3(2):119–20.

Dunning D, Heath C, Suls JM. Flawed self-assessment: implications for health, education, and the workplace. Psychol Sci Public Interes. 2004;5(3):69–106. https://doi.org/10.1111/j.1529-1006.2004.00018.x.

Dunning D. The Dunning–Kruger effect: on being ignorant of one’s own ignorance. In: Advances in experimental social psychology, vol. 44. Cambridge: Academic Press; 2011. p. 247–96.

Centerwatch. (n.d.). Report: global clinical trial service market will reach $64B by 2020. http://www.centerwatch.com/news-online/2017/02/08/report-global-clinical-trial-service-market-will-reach-64b-2020/. Accessed on 19 May 2021.

Sonstein SA, Jones CT. Joint task force for clinical trial competency and clinical research professional workforce development. Front Pharmacol. 2018. https://doi.org/10.3389/fphar.2018.01148.

Vulcano D. CPI certification as predictor of clinical investigators’ regulatory compliance. Drug Inf J. 2012;46(1):84–7.

Silva H, Stonier P, Buhler F, et al. Core competencies for pharmaceutical physicians and drug development scientists. Front Pharmacol. 2013;4(105):1–7.

Koren M, Koski G, Reed DP, et al. APPI physician investigator competencies statement. Monitor. 2011;25(4):79–82.

Association of Clinical Research Professionals. ACRP core competency guidelines for clinical research coordinators. 2017. https://acrpnet.org/acrp-partners-in-workforce-advancement/core-competency-guidelines-clinical-research-coordinators-crcs/. Accessed on 1 Nov 2021.

Regulatory Affairs Professionals Society. Regulatory competency framework. (2016). https://www.raps.org/careers/regulatory-competency-framework. Accessed on 1 Nov 2021.

Ji P, Wang H, Zhang C, et al. A survey on clinical research training status and needs in public hospitals from Shenzhen. J Educ Train Stud. 2017;12:56–49.

CAAHEP—Clinical Research. (n.d.). https://www.caahep.org/CAAPCR.aspx. Accessed on 25 May 2021.

Shanley TP, Calvin-Naylor NA, Divecha R, et al. Enhancing clinical research professionals’ training and qualification (ECRPTQ): recommendations for good clinical practice (GCP) training for investigators and study coordinators. J Clin Transl Sci. 2017. https://doi.org/10.1017/cts.2016.1.

Moskowitz J, Thompson JN. Enhancing the clinical research pipeline: training approaches for a new century. Acad Med. 2001;76(4):307–15.

Funding

There was no external funding received related to this study.

Author information

Contributions

SS: participation in study concept, questionnaire development, analysis of results, and manuscript development. ES: participation in analysis of results and manuscript development. CA: participation in questionnaire development, IRB submission, analysis of results, and manuscript development. SW: participation in questionnaire development, analysis of results, and manuscript development. BB: participation in study concept, questionnaire development, IRB submission, analysis of results, and manuscript development.

Ethics declarations

Conflict of interest

None of the authors declare any potential conflict of interest relevant to this work.

Cite this article

Sonstein, S.A., Samuels, E., Aldinger, C. et al. Self-assessed Competencies of Clinical Research Professionals and Recommendations for Further Education and Training. Ther Innov Regul Sci 56, 607–615 (2022). https://doi.org/10.1007/s43441-022-00395-z

DOI: https://doi.org/10.1007/s43441-022-00395-z