Abstract

Moving from the historical experience of the Free Banking Era characterizing the US banking system from 1837 to 1863, the purpose of this paper is to investigate the rationale for the endogenous emergence of a clearinghouse, that is, a centralized institution established to manage monetary payments among many economic units. To this end, we propound an agent-based network model suitable for analysing the economic dynamics which develop whenever decentralized agents must decide on the type of payment settlement that they can perform by means of coordinated optimizing behaviours. We test the model for competing settlement modes, different economic scenarios and payment networks. We find that the topology and the density of the payment network influence the choice of agents as much as the interest rate and the probability that the clearinghouse will fail.

1 Introduction

The payment system is one of the key components of a modern economy. Its purpose is to enable the exchange of goods and services while avoiding the complications and the inefficiencies tied to barter. According to the different procedures used to orchestrate the clearance of monetary obligations between two or more agents, payment systems are usually divided between two archetypal types of settlement architecture: real-time gross settlement (RTGS) and net settlement (NS).Footnote 1 RTGS is a system in which credits and debts between agents are regulated through payments as soon as they arise. The main advantage of RTGS is the elimination of the insolvency risk, since contracts among the parties involved are settled through immediate payments. However, the adoption of the RTGS system entails liquidity costs due to the need to employ financial resources when entering into a financial obligation, to which the operational risk during the execution of the payment should be added. NS deviates from RTGS in both timing and regulatory procedures. In specific terms, the structure of NS makes it possible to clear two or more obligations at the same time through a bilateral netting settlement (BNS) or a multilateral netting settlement (MNS).Footnote 2 Netting procedures make it possible to lower the level of exposure among agents, shrinking the liquidity needed at the time of execution. Thus, netting reduces both liquidity costs and operational risk. However, postponing the execution of the payment gives rise to a credit risk, which discourages the use of the procedure.

In order to deal with credit, liquidity and operational risks, the actual functioning of payment systems usually relies on an economic institution, i.e. a central clearing counterparty or clearinghouse, whose roles are those of securing the execution of trades, providing insurance and disclosing information. While there is a large body of literature on the optimal design of clearinghouses (Duffie and Zhu 2011; Duffie et al. 2015; France and Kahn 2016), far less attention has been paid to the preconditions favouring the endogenous emergence of such an institutional device in the first place.

To address this issue, we take inspiration from the historical experience of the New York Clearinghouse, a private arrangement established in 1853 during the American Free Banking Era as described by Chauduri (2014). Theoretically speaking, free banking consists of a monetary system with no barriers to entry into the banking sector and where all banks can issue their own currency, the value of which is determined by market forces just like the value of a commodity. The most renowned cases of free banking occurred in Scotland (1716–1845) and in the USA (1837–1863). While the former is considered a success story, the latter is regarded as a case of high monetary instability which led to the spontaneous formation of some key financial institutions, like regional central clearing counterparts and the Federal Reserve System, intended to provide emergency liquidity and coinsurance arrangements (Anderson et al. 2018; Gorton 1984, 1985; Hoag 2011; Jaremsky 2015, 2018).

In this paper, we employ agent-based simulations to identify what circumstances enable the endogenous formation of a clearinghouse when BNS and MNS protocols are in competition with each other. We have two purposes: (i) to reflect on whether the multilateral netting may spread once the netting circuit is managed by a clearinghouse and (ii) to study under what conditions such an institution is likely to emerge spontaneously. We frame our analysis in terms of experiments performed in a network-based computational laboratory, an approach that has been largely applied to the study of financial systems, with emphasis on systemic risk (Battiston et al. 2012a, b; Grilli et al. 2015), financial-real market interactions (Delli Gatti et al. 2010; Riccetti et al. 2013; Bargigli et al. 2014) and the analysis of interbank markets (Tedeschi et al. 2012; Gaffeo and Molinari 2015, 2016; Gaffeo et al. 2019).

The novelty of our work consists mainly in its presentation of a process of endogenous formation of a central clearing counterpart vis-à-vis the extant literature on netting rules, which usually considers the presence of clearinghouses as exogenously determined.Footnote 3 In this regard, our computational agent-based model is suitable for analysing the economic dynamics which emerge in the payment systems whenever the agents can freely decide the type of settlement to which they resort. We test the model for two competing systems of settlement, namely the BNS and the MNS, and for different economic scenarios, payment networks and transaction costs.

Therefore, our model fits into the literature on payment systems. A large part of this literature focuses on the trade-off between net and gross settlement.

Without pretending to be exhaustive, we recall some of the main contributions. Angelini (1998) investigates how, in RTGS systems, banks tend to postpone interbank payments in order to save the costs of reserves. Such rational behaviour for the debtor banks turns into a problem for the creditor banks, which must increase their reserves in order to cope with the uncertainty about their inflows. Chakravorti (2000) develops a model in which banks use an MNS in order to analyse the liquidity cost-default risk trade-off. The main result of the model consists in identifying the maximum number of defaults sustainable by the banking system compatible with an orderly functioning of the payments settlement. Kahn and Roberds (2001) investigate the liquidity constraint induced by an RTGS system in an interbank network. They show how, in the absence of intraday credit, RTGS distorts the interbank market, causing suboptimal liquidity allocations. Galbiati and Soramaki (2013) analyse how the topology of a financial network can influence a clearinghouse’s need for margins. In general, clearinghouses require margins from debtors in order to mitigate credit risk. The authors find that the need for margins is higher for tiered networks than for highly concentrated ones. This is mainly due to the fact that a tiered network displays unbalanced positions towards the clearinghouse. Gaffeo et al. (2019) study how, for banking networks hit by shocks on external assets, the GSFootnote 4 and BNS systems perform differently in terms of the number of defaults and losses absorbed by equity and bank deposits respectively. Considering the different levels of capitalization, depth and topologies of the interbank markets, the authors find the BNS to be clearly dominant on the metrics considered. This result does not appear to be straightforward for liquidity shocks (Gaffeo and Gobbi 2021).

Furthermore, our work can be included within the literature dealing with market evolution. In particular, our paper pertains to the literature that analyses the competition between two institutional systems. Among others, Kugler et al. (2006) investigate the competition between decentralized bargaining and centralized markets. The authors show how the choice of the system by the agents depends crucially on their set of preferences and on the fact that these can change endogenously over time. Moreover, Neeman and Vulkan (2005) show that agents tend to prefer centralized markets over decentralized ones. Nevertheless, exchanges on decentralized real markets are not zero. This may depend on the presence of assets that are not perfectly homogeneous, on risk-averse agents, as well as on the fact that transaction and operational costs are higher in the centralized case than in the decentralized one. Gerber and Bettzuege (2007) study the competition between two markets in the presence of trading frictions and liquidity effects. Their main findings are that in an evolutionary process when the costs of choosing one or the other market are low, agents tend to choose only one of them. Conversely, whenever costs exceed a certain threshold, agents spread over both.

The presence of several institutional models in which the same type of goods or services are exchanged is a very common case in reality. Alós-Ferrer et al. (2010) try to explain this phenomenon through a model that develops the competition between market designers by comparing it with the case in which there is only one monopolistic designer. The authors show that whenever traders can choose between multiple platforms, they tend to coordinate on the platform with prices that are structurally higher than the market clearing price. The implication of this phenomenon is that market designers tend to develop non-market clearing platforms, while the monopolist-market designer systematically introduces a market clearing platform.

The long-term persistence of inefficient institutions has also been investigated by Alós-Ferrer and Kirchsteiger (2006) and Alós-Ferrer et al. (2021). Alós-Ferrer and Kirchsteiger (2006) study a pure exchange economy in which there are several trading institutions that lead to rationing and only one characterized by market clearing. Their artificial economy is populated by backward-looking traders who choose which institution to join according to the performance achieved in the previous period. In this context, the authors find that the market clearing institution is achieved independently of the features of the other non-market clearing institutions. Nonetheless, some non-market clearing institutions are stochastically stable. Alós-Ferrer et al. (2021) build a system in which two institutional frameworks coexist. One is a double-auction institution where buyers and sellers trade without a specific auctioneer. The other is an institution where the seller is the price maker and the buyer can accept the offer or not. The paper shows that when sellers face decreasing returns to scale, there is a rapid convergence towards the double-auction market. Conversely, where sellers are characterized by constant returns to scale, both institutional models persist.

Finally, we developed our model in order to explain a specific historical case. Nevertheless, our framework could be used to explain the clearinghouse formation mechanism for B2B networks, as in the case of the WIR Cooperative Bank and the Sardex clearing circuit (Stodder 2009; Stodder and Lietaer 2016; Lucarelli and Gobbi 2016).

The remainder of the paper is divided into three further sections. In Section 2, we describe the technical aspects of the model and its underlying dynamics. Section 3 illustrates and discusses simulation outcomes. The last section is devoted to final remarks.

2 The model

Gorton (1984) provides a neat description of how, during the first phase of the American Free Banking Era, the financial system was populated by small-size local banksFootnote 5 issuing checks to their account holders. Checks played a particularly prominent role in the large US cities where most economic activities were concentrated, for businesspeople considered checks to be more practical and secure means to regulate their transactions compared to cash. The following quotation vividly illustrates how the check clearing mechanism in operation among free banks worked:

Before 1850 banks cleared checks with a daily exchange and settlement - each bank sent a porter to make the round of all the other banks. The porter carried a ledger book, checks drawn on the other banks, and bags of gold. At each stop the porter turned over checks drawn on that bank and picked up checks drawn on his bank. If the value of the checks he presented exceeded the value of those he picked up, he collected the difference in gold. If the balance netted out against his bank, he paid in gold. Porters crossed and recrossed each other’s tracks, lugging bags of gold, hoping to reach each of the other banks by the end of the day. The system had the simplicity of Indian camps in which each tepee had a path leading to every other tepee. But as the number of banks grew, these paths became a tangled web. Gorton (1984, p. 4)

In light of this narrative, such a payment system can be categorized as a bilateral netting arrangement whereby banks cleared the net value of their mutual exposures in cash (i.e. gold). Besides liquidity costs, the “porter” system was subject to the operational risk of a robbery during the settlement tour. Accordingly, very quickly the number of transactions involved in the system increased to the point that inefficiencies due to the parallel escalation in transaction, liquidity and operational costs led banks to conduct their settlements multilaterally, at a specific time and in a specific place. Hence, in 1853 the first US clearinghouse saw the light of day in New York, and thereafter, many others came into being across the country. Therefore, in accordance with Gorton’s argument, as soon as the payment network became complex, multilateral netting governed by a centralized settlement device overtook decentralized bilateral netting.

The methodology used for the analysis conducted in this paper is rooted in network theory. Such a methodology is well-suited to the study of credit networks. It is widely employed for the analysis of both financial and real marketsFootnote 6, and it has been already applied for empirical investigations into the main characteristics of the American Free Banking system (Calomiris and Carlson 2017).

2.1 A network approach to payment systems

The web of credit and debit relations among the participants in a financial system is exactly what network analysis enables us to detect, and it allows us to identify what obligations can be cleared bilaterally or multilaterally. In this sub-section, we present the network model, and we show some basic dynamics which guide the simulations. We first describe the shaping of the payment network and, subsequently, the agents’ behavioural schemes. The aim of our inquiry is to reveal what forces compel agents either to join a multilateral netting circuit or to regulate their transactions through a bilateral one.

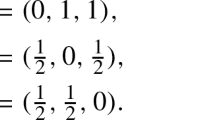

To this end, we build an artificial economic system composed of N nodes, where each node represents a bank, while nodes are linked to each other by debit-credit obligations. The simplest way to model a network of this type is through an adjacency matrix X composed of N rows and N columns in which each entry \(x_{i,j}\), with i, j ∈ {1, …, N}, takes the value one in the presence of a debt-credit relationship between banks i and j, and zero in its absence. In particular, the i-th row represents the credit agreements that agent i holds towards other agents, while the j-th column represents agent j's debit contracts towards the rest of the network. It follows that, by construction, the main diagonal of X is composed of zeros, as shown in Fig. 1. For expositional purposes, the matrix Xexample has been generated randomly, among all the possible adjacency matrices with 3 banks, so that \(x_{1,2}=1\), \(x_{1,3}=1\), \(x_{2,1}=1\), \(x_{2,3}=1\), \(x_{3,1}=0\), \(x_{3,2}=1\) and the principal diagonal is zero.

A necessary parameter for the attribution of contracts is the probability \(p_{i,j}\) that two agents i and j are connected to each other, so that p is the main driver determining the topology of payments. In the present study, we consider one specific network topology commonly known as Erdos-Renyi (Erdős and Rényi 1959). This type of network is characterized by the fact that the probability of two agents being connected to each other is the same for every pair (Fig. 2).
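
As an illustration of this topology, the following minimal sketch draws a directed 0/1 adjacency matrix in which every ordered pair of distinct banks is linked with the same probability p. The function name `erdos_renyi_adjacency`, the `seed` argument and the choice of drawing each direction independently are assumptions made for the example, not details taken from the model.

```python
import random

def erdos_renyi_adjacency(n, p, seed=None):
    """Return an n x n 0/1 matrix; x[i][j] = 1 means bank i holds a credit towards bank j.

    Assumption: each ordered pair (i, j), i != j, is drawn independently with probability p.
    """
    rng = random.Random(seed)
    return [
        [1 if i != j and rng.random() < p else 0 for j in range(n)]
        for i in range(n)
    ]

# 25 banks, connection probability 30% (illustrative values)
X = erdos_renyi_adjacency(25, 0.3, seed=42)
density = sum(map(sum, X)) / (25 * 24)  # share of possible links actually present
print(f"realised density: {density:.2f}")
```

The main diagonal is zero by construction, and the realised density fluctuates around p from one draw to the next.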

Following the identification of the adjacency matrix, attributing value to the obligations between agents is necessary in order to define the payment network. We therefore define the weighted adjacency matrix by means of a procedure which is split into three phases. The first one consists in identifying the total value of the obligations in the system, TV. Once TV has been defined, a debt quota of TV/N is attributed to each bank. In the last phase, for each bank, the debt quota is divided equally among its creditors. The number of creditors of a bank is obtained by summing the values of the corresponding adjacency matrix column. This procedure yields a network characterized by agents who have an equal total debt value divided into a different number of contracts, whose individual values are therefore heterogeneous. The net financial position of each agent, calculated as the sum of its receivables minus the sum of its debts, may be in debit, in credit or balanced.

In order to facilitate understanding of the procedure, we give an example. Consider the topology defined by the adjacency matrix Xexample and a TV value equal to 300. Following the aforementioned procedure, we first identify the quota of debts for each agent as equal to 100 (300/3). We then divide the said quotas by the number of creditors of each agent, respectively 1, 2 and 2. The weighted adjacency matrix X’ in Fig. 3 represents the outcome of the process. As a result, agent 1 has a balanced position of 0 towards the system, as it has a credit of 50 towards each of agents 2 and 3 and a debt of 100 towards agent 2. Agent 2, on the other hand, has a positive net position equal to 50 given that the value of its credits (150) is higher than the value of its debt (100). Finally, agent 3 has a negative position of 50 given that its total credit is 50 and its debit has a value of 100.
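
The three-phase procedure and the numerical example can be reproduced with a short sketch. The function name `weighted_adjacency` is hypothetical, and the treatment of a bank with no creditors (it simply carries no debt) is an assumption, since the text does not discuss that case.

```python
def weighted_adjacency(X, total_value):
    """Split an equal debt quota TV/N of each bank evenly among its creditors.

    X[i][j] = 1 means bank i is a creditor of bank j, so column j lists j's creditors.
    """
    n = len(X)
    quota = total_value / n
    W = [[0.0] * n for _ in range(n)]
    for j in range(n):
        creditors = sum(X[i][j] for i in range(n))  # column sum = number of creditors of j
        if creditors == 0:
            continue  # assumption: a bank without creditors has no debt
        for i in range(n):
            if X[i][j]:
                W[i][j] = quota / creditors
    return W

# Xexample from the text (banks 1, 2, 3 are indices 0, 1, 2)
X_example = [[0, 1, 1],
             [1, 0, 1],
             [0, 1, 0]]
W = weighted_adjacency(X_example, 300)
# net position = receivables (row sum) minus debts (column sum)
net = [sum(W[i]) - sum(W[r][i] for r in range(3)) for i in range(3)]
print(W)    # [[0.0, 50.0, 50.0], [100.0, 0.0, 50.0], [0.0, 50.0, 0.0]]
print(net)  # [0.0, 50.0, -50.0]
```

The printed net positions match the balanced, creditor and debtor positions of agents 1, 2 and 3 described in the example.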

In Sub-section 3.4, we remove the hypothesis of equality of the level of aggregated interbank debts in order to verify whether the results obtained can be generalized to a network of heterogeneous banks.

In particular, we assign random values to interbank obligations, calibrating them in order to obtain networks of a size comparable to that of banks with homogeneous debt levels. The procedure consists in increasing or decreasing the value of all financial obligations randomly assigned until the size of the network is around those previously tested.

As already said, the analysis focuses on the Erdos-Renyi topology for various density levels. The choice of this topology was determined by the historical case considered. Nevertheless, for completeness of analysis, we will try to test the model for small-world and scale-free topologies.

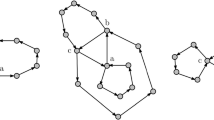

As regards the small-world structure, we follow the procedure of Watts and Strogatz (1998). We start from a ring of 25 banks with a mean degree of 2*K (Fig. 4), to which we apply different levels of the rewiring probability beta. Rewiring is a procedure that removes a link between nodes A and B and reassigns it to nodes A and C. For beta = 0, the ring remains unchanged; for beta = 1, the ring becomes a random network.

However, the Watts and Strogatz algorithm produces a symmetric adjacency matrix. In our case, this implies that every pair of connected banks is mutually exposed, that is, each bank is at once creditor and debtor of the other. In this context, the BNS tends to be clearly preferred over the MNS. Therefore, we apply a light pruning to the adjacency matrix in order to test the two payment systems in topologies characterized by a high clustering coefficient and short average path length. The pruning procedure consists in cutting edges with a fixed probability. Figure 5 shows two networks before and after the pruning procedure.
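
A minimal sketch of the ring, rewiring and pruning steps is given below. It is only one possible reading of the procedure: the function name `small_world_pruned`, the parameter `prune_p`, the choice of rewiring targets uniformly among unlinked nodes, and the independent pruning of each direction of a link are all assumptions made for the example.

```python
import random

def small_world_pruned(n, k, beta, prune_p, seed=None):
    """Ring of n banks with mean degree 2*k, rewired with probability beta,
    then pruned entry by entry with probability prune_p (assumed scheme)."""
    rng = random.Random(seed)
    # ring lattice: each bank is linked to its k nearest neighbours on each side
    edges = {(a, (a + d) % n) for a in range(n) for d in range(1, k + 1)}

    def linked(u, v):
        return (u, v) in edges or (v, u) in edges

    # rewiring: link A-B is replaced by link A-C with probability beta
    for a, b in sorted(edges):
        if rng.random() < beta:
            candidates = [c for c in range(n) if c != a and not linked(a, c)]
            if candidates:
                edges.remove((a, b))
                edges.add((a, rng.choice(candidates)))

    # symmetric adjacency matrix, with each directed entry cut with probability prune_p
    X = [[0] * n for _ in range(n)]
    for a, b in edges:
        for i, j in ((a, b), (b, a)):
            if rng.random() >= prune_p:
                X[i][j] = 1
    return X

# 25 banks, K = 2, 10% rewiring, 20% pruning (illustrative values)
X_sw = small_world_pruned(25, 2, beta=0.1, prune_p=0.2, seed=7)
one_way = sum(X_sw[i][j] != X_sw[j][i] for i in range(25) for j in range(i))
print("links that became one-directional after pruning:", one_way)
```

With `prune_p = 0` the matrix stays symmetric, which is the case in which every obligation has a reciprocal one; pruning breaks part of that symmetry.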

Finally, we test a scale-free topology. Using the algorithm of Barabási and Albert (1999), we obtain networks characterized by a scale-free link distribution generated by preferential attachment. Our procedure begins with a network populated by a core group of m0 banks. Then, we let the network grow by adding the remaining 25 − m0 banks one at a time, connecting each newly added node to the existing nodes. Figure 6 represents a scale-free network generated by a core group of five banks.
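
The growth process can be sketched as follows. Several details are assumptions introduced only for illustration: the core of m0 banks is taken to be fully connected, each new bank attaches to a hypothetical number `m` of existing banks, targets are drawn with probability proportional to their current degree, and the resulting matrix is left symmetric (no orientation or pruning of links is applied).

```python
import random

def scale_free_adjacency(n, m0, m, seed=None):
    """Grow a symmetric 0/1 adjacency matrix by preferential attachment.

    Assumptions: fully connected core of m0 banks; every new bank links to m
    distinct existing banks chosen with probability proportional to degree.
    """
    if m0 < 2 or not 1 <= m <= m0 <= n:
        raise ValueError("need 2 <= m0 <= n and 1 <= m <= m0")
    rng = random.Random(seed)
    X = [[0] * n for _ in range(n)]
    for u in range(m0):              # core group
        for v in range(m0):
            if u != v:
                X[u][v] = 1
    for new in range(m0, n):         # add the remaining banks one at a time
        existing = list(range(new))
        degrees = [sum(X[v]) for v in existing]
        targets = set()
        while len(targets) < m:      # draw m distinct targets
            targets.add(rng.choices(existing, weights=degrees)[0])
        for t in targets:
            X[new][t] = X[t][new] = 1
    return X

# 25 banks grown from a core of five, two links per new bank (illustrative values)
X_sf = scale_free_adjacency(25, m0=5, m=2, seed=3)
print("largest degree:", max(sum(row) for row in X_sf))
```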

2.2 Settlement methods

Once the payment matrix has been determined, we proceed by describing the possible settlement methods that agents are allowed to use. Two systems are considered: the BNS and the MNS procedures. For the payment network identified by X’, we obtain the payment matrix X” associated with a BNS scheme by netting bilaterally all the credit and debit obligations for each bank. Technically, the bilateral positions are compensated according to the formula \(x_{i,j} - x_{j,i}\) (Fig. 7).
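
The bilateral netting formula can be applied to the three-bank weighted matrix of the earlier example as in the sketch below. Keeping only the positive part of each difference, so that every entry is the residual credit of bank i towards bank j, is a presentation choice assumed here; the function name `bilateral_netting` is hypothetical.

```python
def bilateral_netting(W):
    """Net each pair of reciprocal obligations: entry (i, j) becomes the residual
    credit of i towards j, i.e. max(w[i][j] - w[j][i], 0)."""
    n = len(W)
    return [[max(W[i][j] - W[j][i], 0.0) for j in range(n)] for i in range(n)]

# weighted matrix of the three-bank example (TV = 300)
W_example = [[0.0, 50.0, 50.0],
             [100.0, 0.0, 50.0],
             [0.0, 50.0, 0.0]]
B = bilateral_netting(W_example)
gross = sum(map(sum, W_example))  # value to be paid without any netting
netted = sum(map(sum, B))         # value to be paid after bilateral netting
print(B)              # [[0.0, 0.0, 50.0], [50.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(gross, netted)  # 300.0 100.0
```

In this example the reciprocal obligations between banks 2 and 3 cancel out completely, and the payments to be executed fall from 300 to 100.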

In turn, X”’ represents the outcome of MNS. Crucially, a feature of a multilateral netting architecture is the existence of a new institutional arrangement within the payment system, a clearinghouse, that behaves like a middleman between the two parties involved in a financial transaction, so that banks can decide to join a clearinghouse if they wish to compensate their positions. Usually, this procedure takes the form of a novation agreement, that is, a transformation of bilateral exposures between two agents into a single net position towards the clearinghouse itself. Therefore, the task of the clearinghouse is to offset all the debit and credit positions of the agents and, as a consequence, to collect from its own debtors and to pay its own creditors. Each i-th row of the payment matrix now represents the account of the i-th agent with the clearinghouse, while by construction, the sum of the exposures to the clearinghouse for the whole network of participants is zero. This is due to the fact that agent i's credit towards agent j is at the same time agent j's debt towards agent i. Thus, when all exposures are added, agent i's credit towards agent j is cancelled by agent j's debt towards agent i.
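
The novation into a single net position per bank, and the fact that these positions sum to zero, can be checked on the three-bank weighted matrix of the earlier example. This is a minimal sketch; the function name `multilateral_netting` is hypothetical, and a positive value is read as a credit towards the clearinghouse.

```python
def multilateral_netting(W):
    """Net position of each bank towards the clearinghouse:
    receivables (row sum) minus debts (column sum)."""
    n = len(W)
    return [sum(W[i]) - sum(W[r][i] for r in range(n)) for i in range(n)]

# weighted matrix of the three-bank example (TV = 300)
W_example = [[0.0, 50.0, 50.0],
             [100.0, 0.0, 50.0],
             [0.0, 50.0, 0.0]]
positions = multilateral_netting(W_example)
to_pay = sum(-v for v in positions if v < 0)  # liquidity that net debtors must deliver
print(positions)       # [0.0, 50.0, -50.0]
print(sum(positions))  # 0.0
print(to_pay)          # 50.0
```

Here only bank 3 has to deliver liquidity (50), which the clearinghouse passes on to bank 2, while bank 1 settles all its obligations without any payment.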

2.3 Model operability, time frame and variables

Having set out the payment network and the settlement protocols, we now describe the procedure according to which each agent selects, among the settlement schemes offered, the one that it prefers. The model that we present serves to investigate the dynamics underneath the activation of a clearinghouse, and how this can be influenced by transaction costs, payment topologies, credit risk and credit costs. In our model, the clearinghouse is a non-profit public entity that charges a fee in order to cover management and operational costs and behaves like a club, membership of which can be freely requested. Upon the club’s activation, there exists a mass of agents who rationally decide to join it on the basis of their preferences, the economic context and the topology of the credit network. For this reason, the choice of static networks and optimizing behaviour of the agents can provide a good approximation of the phenomenon of coordination of a group of agents. We admit that our model is not suitable for the analysis of the functioning and growth in size of a clearinghouse once the institution has been set up. For this kind of analysis, it would be necessary to use dynamic models and networks characterized by the strategic and repeated interactions among players. To be more precise, in what follows, we limit ourselves to investigating the agents’ behaviour in the case of a binary choice between BNS and MNS.

The system is made up of two periods, and each of them is, for explanatory purposes, divided into two more sub-intervals. (I) Within the first half of the first interval, exchanges arise among agents, and credit and debit obligations are assigned to each agent. (II) Within the second half of the first interval, agents are required to decide whether to settle their positions by means of BNS or to compensate them through the MNS instead. As shown in Sub-section 2.4, the MNS system is activated only if each agent has the incentive to settle its obligations in the clearinghouse. Agents then ask for the amount of liquidity (i.e. gold) needed to regulate their transactions and eventually to pay the fee to the clearinghouse. (III) In the first half of the second interval, if the clearinghouse has been activated in the previous period, it compensates the positions among its members, and it levies a commission from all the members for the service provided. In particular, it recovers credits from the debtors, and, if it does not fail, it makes payments to its creditors. (IV) In the second half of the second interval, agents receive the liquidity for their net credits if the clearinghouse has not failed. On the other hand, whenever the clearinghouse is not activated in period one, during the first part of period two payments among agents are executed. Then, in the second part of the second period, all agents receive the money, and all credit and debit obligations among agents are settled. Figure 8 depicts the model’s sequence.

2.4 Decision-making process

In this section, we illustrate the variables of the model and the analytics of the decision-making process of agents.

First, we establish that the utility function of the agents depends solely on the monetary variable in linear manner. Agents decide which payment system to use, jointly analysing a set of variables such as interest rate, probability of default, transaction costs, fees and net exposure.

Interest rate (i) represents the cost necessary to obtain liquidity (gold) that the agents must bear. In our model, agents are not provided with initial liquidityFootnote 7; however, we hypothesize that they are not credit constrained and that they can borrow money from banks located outside the system. The borrowed currency can only be spent on settling interbank debts. Agents are aware that the interest rate is constant during the entire process.

The variable pd represents the probability of default by the clearinghouse, a term that we have introduced in order to increase the realism of our model, given the significant number of failures registered during the American Free Banking Era. Operationally, we assume that the clearinghouse is always able to obtain payment from its debtors in the first half of period 2, but, for technological reasons or because of fraud, it may fail before the liquidation of its own debts to agents. Since we model participants as being aware of the clearinghouse’s probability of failure, we are able to incorporate credit risk into our framework without considering the possibility of agent’s failure. Therefore, potential creditors of the clearinghouse must carefully assess the risk of the clearinghouse going bankrupt, given that in the event of a manifestation of this risk, the entire credit would become uncollectable. Extensions of our model could include margins, collaterals as insurance tools and agent’s default.

The variable fee represents a sum to be paid in order to benefit from clearing services, and it can be seen as the payment that has to be made to be admitted to the club. This fee is applied proportionally to the volume of obligations effectively cleared, and it encompasses also the cost of collecting the funds externally.

The transaction-operational cost (trc) is a variable that comprises all the costs related to the activity carried out by the porters in the system described by Gorton (1984), including the operational cost entailed by the procedure. This cost is proportional to the value of the payments that every bank has to make. This is for three reasons. The first is that the operational risk is directly connected to the amount of gold carried by the porters, which in turn is directly connected to the value of the payments to be made. The second reason is that, in the banking system that we are mimicking, the size of all the banks is similar;Footnote 8 as a consequence, it seems reasonable to assume that the variance of the payment amounts is quite low. This implies that the higher the value of the payments to be made, the larger the number of banks to which the porter has to go, thus increasing transaction costs. Finally, the third reason is that the greater the value of the payments to be made in gold, the more expensive transportation of the commodity becomes.

The last variable is the net exposure ∆OFootnote 9. It indicates the net value of each agent’s obligations. The lower the value of ∆O, the greater the clearing potential in reducing liquidity costs. This variable critically depends on the topology and the density of the interbank network and the value of the interbank payments.

During period 1, each agent needs to decide whether to take part in the clearinghouse according to Conditions (1) and (2) below, which apply to the net creditors and the net debtors, respectively. We define each single credit from the agent i to agent s as Li, s and each single debt as Bi, s.

for \(\sum_{s=1}^y{L}_{i,s}-\sum_{s=1}^y{B}_{i,s}<0\), i, s ∈ (1, 2, 3…size), i ≠ s; y = size

Given that \(\sum_{s=1}^y{L}_{i,s}-\sum_{s=1}^y{B}_{i,s}=\Delta {O}_{i,s}\), Conditions (1) and (2) can be written as:

The left side of (1) and (3) includes the net creditor’s expected payoff if it makes use of the BNS to regulate its payment relationships. This payoff consists of the monetary value deriving from net credits minus the cost of obtaining the liquidity and transaction costs for each bilateral netted debt. On the right side of Equations (1) and (3), the expected payoff in the event of subscription to the clearinghouse is computed. Its value depends on the net position on conclusion of the procedure minus the expected loss on net credits and the commission fee due to the clearinghouse. The commission fee is in turn proportional to the offset values.

For the case of a net debtor (∆Oi < 0), Conditions (2) and (4) replicate the method for calculating payoffs by applying it to the BNS type of settlement. Therefore, nothing changes for the left side of the Conditions (2) and (4) with respect to (1) and (3). Considering the right side of Equations (2) and (4), it is apparent that the total settlement cost consists in the negative net position towards the clearinghouse, plus the liquidity costs on the net position and the fee charged on the volume of the compensated obligations. In order to identify the dynamics driving the agents’ choices in relation to the system to be implemented, we propose a set of Monte Carlo simulations based on an Erdos-Renyi network composed of 25 agents for each connectivity level (0 < p < 100). The total asset value of the system is equal to 100,000 tokens. The two options available to agents are the BNS-MNS pair.

Figure 9 shows the mean of the number of agents that ask for membership of the clearinghouse as the interconnectedness among them varies, for three different levels of the probability that the clearinghouse itself defaults. In this example, we assume that the clearinghouse is activated when all the agents have an incentive to enter.

Fig. 9 Number of agents that apply for the clearinghouse (mean value) as its probability of default varies. On the x-axis is the percentage level of interconnectedness (p) and on the y-axis the number of agents who apply for the clearinghouse for different levels of pd. For y = 25 the clearinghouse is activated. fee = 0.5%, trc = 1% and i = 5%

A non-linear relationship between the level of interconnectedness and applications for membership of the clearinghouse is clearly apparent. The reason for this phenomenon is that whenever the probability of interconnectedness among agents rises, MNS becomes more profitable, but a concomitant rise in reciprocal exposures occurs. This increases the number of positions that can be netted bilaterally, thus reducing the cost of non-participation in the clearinghouse. In detail, as connectivity grows from the lower end of the domain to the upper one, the net debits to be settled first increase, until they reach a maximum for an average connectivity level, and then fall almost symmetrically in the second half of the probability domain. This explains why the incentive to take part in the clearinghouse increases in the first half of the probability domain, and why it decreases in the second half. The same dynamic occurs for each of the three default probability curves observed. In particular, the clearinghouse is never activated for the highest level of default probability (10%), while for a low default probability (1%), the clearinghouse is always activated for an interconnectedness range between 20 and 60%. For a probability of default of 5%, the average of the simulations never reaches 25. This does not mean, however, that in our simulations the activation of the clearinghouse has never occurred.

Figure 10 shows how the choice dynamic varies for different liquidity costs. We consider three different levels of liquidity costs, i.e. 1%, 5% and 10%. Whenever the liquidity costs are low (1%), the clearinghouse cannot be activated, while for high liquidity costs (10%) and a range of interconnectedness between 20 and 60%, a clearinghouse is almost always established. For a liquidity cost of 5%, the average of the simulations never reaches 25.

Number of agents who apply for the clearinghouse (mean value) as the interest rate on liquid means varies. On the x-axis is the percentage level of connectivity (p) and on the y-axis the number of agents who apply for the clearinghouse for different levels of i. For y = 25 the clearinghouse is activated. fee = 0.5%, trc = 1% and pd = 5%

3 Simulation outputs

In this section, we present the results of a scenario in which the BNS and the MNS payment systems are in competition with each other as illustrated in Section 2. In particular, we investigate the process of endogenous formation of a clearinghouse in the context of an interbank payment network whose features resemble those of the Free Banking Era. For this purpose, we focus on homogeneous topologies that better represent the relations among banks of similar size, and which are generally characterized by payment orders with a low variance. Furthermore, since clearing procedures occur weekly, we assume interest rate curves to be flat. We also take into account three levels of transaction costs and three default probabilities for the clearinghouse, as shown in Table 1.

We identify four effects which, once combined, contribute to determining the results of our simulations.

Effect 1

The first effect concerns the interconnectedness probability. As described in Section 2, in the case of the BNS, the probability of connection between the agents engenders an incentive for formation of the clearinghouse in the first half of the interconnectedness domain. In the second half, on the contrary, effect 1 obstructs the formation of the clearinghouse due to the possibility of netting a high number of obligations in a bilateral manner.

Effect 2

The second effect concerns the liquidity cost. We test three interest rates: low, medium and high. With the increase of the interest rate from low to high, the liquidity costs increase too; as a consequence, the incentive to join the clearinghouse grows. Hence, effect 2 shows a positive association with activation of the clearinghouse.

Effect 3

The third effect concerns the operational and transaction costs. As illustrated in Section 2, as transaction costs within the BNS increase, the incentives for formation of the clearinghouse increase too. Therefore, effect 3 also shows a positive association with activation of the clearinghouse.

Effect 4

The fourth effect concerns the default probability of the clearinghouse. The higher the default probability, the lower the incentive to join the clearinghouse. Effect 4 thus shows a negative association with formation of the clearinghouse.
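The direction of effects 2, 3 and 4 can be made concrete with a toy cost comparison for a single agent. The two cost functions below are hypothetical stand-ins chosen only to reproduce the signs described above; they are not the payoff functions of the model in Section 2, and the example agent's positions are invented for illustration. The parameter names i, trc, fee and pd follow the figure captions.

```python
def bns_cost(bilateral_net_debits, i, trc):
    """Toy BNS cost: liquidity plus transaction cost on every bilateral net debit."""
    return (i + trc) * sum(bilateral_net_debits)

def mns_cost(net_position, gross_volume, i, fee, pd):
    """Toy MNS cost: liquidity on the multilateral net debit, a fee on gross volume,
    and the expected loss on a net credit if the clearinghouse defaults."""
    net_debit = max(-net_position, 0.0)
    net_credit = max(net_position, 0.0)
    return i * net_debit + fee * gross_volume + pd * net_credit

def applies_for_clearinghouse(bilateral_net_debits, net_position, gross_volume,
                              i, trc, fee, pd):
    # Ties go to the MNS, in line with the tie-breaking assumption stated in Section 3.1.
    return mns_cost(net_position, gross_volume, i, fee, pd) <= bns_cost(
        bilateral_net_debits, i, trc)

# Hypothetical agent: owes 10 and 5 bilaterally, is a multilateral net creditor of 5.
AGENT = dict(bilateral_net_debits=[10.0, 5.0], net_position=5.0, gross_volume=35.0)

if __name__ == "__main__":
    print(applies_for_clearinghouse(**AGENT, i=0.05, trc=0.01, fee=0.005, pd=0.05))
    print(applies_for_clearinghouse(**AGENT, i=0.01, trc=0.01, fee=0.005, pd=0.05))
    print(applies_for_clearinghouse(**AGENT, i=0.05, trc=0.01, fee=0.005, pd=0.20))
```

In this sketch a higher interest rate or a higher transaction cost raises the BNS cost and tips the agent towards the clearinghouse, while a higher default probability raises the MNS cost and tips the agent away from it.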

In the “porter” system that we are simulating, according to the exposition put forward by Gorton (1984), as the number of transactions between financial intermediaries increased, the escalation of operational and transaction costs would have made the bilateral netting system unsustainable. From a theoretical point of view, the result is not so obvious. The increase in the number of transactions certainly increases the cost of transactions, but it could also increase the possibility of netting bilaterally. This trade-off is the basis of our investigation.

Table 2 presents the results for each scenario tested, reporting the probability of activating a clearinghouse. Table 5 in the Appendix shows the mean number of agents that apply for the clearinghouse and its variance, while Figures 11, 12 and 13 present heat maps for three different cases. Below we analyse the results of each scenario in relation to the cases of a high, medium and low default probability of the clearinghouse.
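The two kinds of statistics are easy to confuse, so a small sketch may help. It assumes that the clearinghouse is activated in a run only when all agents apply (25 in the captions of Section 2), and it uses invented applicant counts rather than simulation output; the function name is hypothetical.

```python
from statistics import mean, pvariance

def summarise(applicants_per_run, n_agents):
    """Mean and variance of applicants, plus the share of runs with unanimous application."""
    activated = [count == n_agents for count in applicants_per_run]
    return {
        "mean_applicants": mean(applicants_per_run),
        "variance": pvariance(applicants_per_run),
        "activation_probability": sum(activated) / len(activated),
    }

if __name__ == "__main__":
    # Invented counts for five runs with 25 agents each.
    print(summarise([25, 20, 18, 25, 22], n_agents=25))
```

In this invented sample the mean number of applicants stays below 25 even though the clearinghouse is activated in two of the five runs, which is why a mean below the threshold does not rule out activation in individual simulations.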

3.1 High default probability of the clearinghouse

The first case to be considered is the one in which the clearinghouse is marked by a high default probability. When liquidity costs are low, the clearinghouse is not activated at any of the tested levels of operational and transaction costs, nor at any of the tested levels of interconnectedness among the banks participating in the system. As a result, in this scenario, the liquidity and default probability effects (effects 2 and 4) significantly prevail over the interconnectedness and operational and transaction cost effects (effects 1 and 3). In the second scenario, where liquidity costs are 3.5%, the activation of the clearinghouse can be observed in a few cases. In particular, when operational and transaction costs rise from 0.1 to 1%, the probability of activation reaches 4.9% at a 30% connection probability. For all levels of operational and transaction costs, the maximum probability of activation occurs at the 30% connection level (among those represented in Table 2). This is due to effect 1 (interconnectedness effect), which crucially contributes to limiting the activation of the clearinghouse at connection levels higher than 40–45%. In particular, at a connectivity level of 90%, the clearinghouse never appears at any level of transaction and liquidity costs. In the third scenario (liquidity cost of 5%), the probability of the clearinghouse’s activation is relatively high. Indeed, the clearinghouse is activated in 25.7% of the simulations for transaction costs of 1%.

The heat map in Fig. 11 shows the most favourable case for BNS, in which a clearinghouse is never activated. For interconnection values between p = 0.01 and p = 0.05, the number of agents applying for the clearinghouse is high. This is due to the assumption that agents with equal BNS and MNS payoffs opt for the MNS.

3.2 Medium default probability of the clearinghouse

Where the clearinghouse is marked by a 3% default probability, the impact of effect 4 (default effect) is generally less intense than in the previous case. With a liquidity cost of 2%, at any level of operational and transaction costs, the clearinghouse is rarely activated. When liquidity costs rise to 3.5%, the dominance of effects 1 (interconnectedness effect) and 2 (liquidity effect) determines a considerable probability of the clearinghouse’s activation at 30% and 50% connection levels. At high connection levels (70% and 90%), effect 1 (interconnectedness effect) tends to reduce the activation probability, while for low connection levels and medium and high transaction costs, effects 1 and 3 push the activation probability to a peak of 41.5%. The dynamics of the third scenario are similar to those of the former ones.

The heat map in Fig. 12 shows the case in which all variables except for connectivity are at their intermediate level. It is striking how the density of the network can affect the outcome of our simulations. In particular, for the range of interconnectedness between 20 and 45%, clearinghouse activation is at its highest.

3.3 Low default probability of the clearinghouse

When the default probability of the clearinghouse is 1%, the default effect tends to raise the average probability of activation. There are numerous cases in which the activation probability of the clearinghouse is higher than 90%. When liquidity costs equal 2%, the activation probability reaches 74% at an interconnectedness of 30% when transaction costs are high, by virtue of effect 1 (interconnectedness effect). On lowering transaction costs, we still detect a positive activation probability at medium and low connection levels, even for low operational and transaction costs. At high connection levels, effect 1 (interconnectedness effect) keeps the probability of the clearinghouse’s activation low. For a liquidity cost of 3.5%, the probability of the clearinghouse’s activation is high due to the combination of the liquidity and default effects. In this case, the default effect eventually prevails over the interconnectedness effect, making the probability of activation reach 34.8% at an interconnectedness level of 70%. Finally, in the scenario characterized by liquidity costs of 5%, the activation of the clearinghouse proves to be structural, except for the interconnectedness effect at high connection levels. Figure 13 shows the most favourable case for activation of the clearinghouse. For a wide range of interconnectedness (25–60%), the activation probability is higher than 90% (see Table 2).

3.4 Heterogeneity in debt level

In this section, we present the results obtained by eliminating the hypothesis of a homogeneous total amount of interbank debts. This investigation helped us to understand how generalizable our model is to contexts different from the free banking case. To this end, we identified three cases to be compared with those commented on in the previous section. We first analysed the worst case for the MNS. Therefore, we simulated the case with pd = 5%, liq = 2% and trc = 0.1%.

Figure 14 shows that the clearinghouse is not activated in any of the thousands of simulations, even though the non-linear activation dynamic is preserved. Indeed, the heterogeneity of the interbank debt structure makes coordination among all agents more difficult. Figure 15 shows the different interbank debt structures in the two cases. Although in both cases the interbank obligations differ, and there are some banks in surplus and others in deficit, in the case of banks with heterogeneous interbank debt structures the net balance between surplus and deficit increases. In this context, it is very difficult for all agents to make the same choice. The ability to offset fewer interbank debts and the fear of a clearinghouse default push net creditors towards the BNS to a greater extent than in the case with homogeneous banks.
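A three-bank sketch illustrates why heterogeneous debts leave larger net balances. The obligation amounts and function names below are invented for illustration and are not taken from the simulations. The code computes the total left to pay after bilateral netting and the sum of net deficits after multilateral netting.

```python
def bilateral_net_debits(obligations):
    """Total still owed after each pair of banks offsets its mutual obligations."""
    total = 0.0
    seen = set()
    for (debtor, creditor), amount in obligations.items():
        if (creditor, debtor) in seen:
            continue  # this pair has already been netted
        seen.add((debtor, creditor))
        reverse = obligations.get((creditor, debtor), 0.0)
        total += abs(amount - reverse)
    return total

def multilateral_net_debits(obligations):
    """Sum of the deficits once every bank nets all its claims and debts together."""
    net = {}
    for (debtor, creditor), amount in obligations.items():
        net[debtor] = net.get(debtor, 0.0) - amount
        net[creditor] = net.get(creditor, 0.0) + amount
    return sum(-value for value in net.values() if value < 0)

# Invented examples: a ring of equal debts versus a ring of unequal debts.
HOMOGENEOUS = {("A", "B"): 10.0, ("B", "C"): 10.0, ("C", "A"): 10.0}
HETEROGENEOUS = {("A", "B"): 30.0, ("B", "C"): 10.0, ("C", "A"): 5.0}

if __name__ == "__main__":
    for name, debts in (("homogeneous", HOMOGENEOUS), ("heterogeneous", HETEROGENEOUS)):
        print(name, bilateral_net_debits(debts), multilateral_net_debits(debts))
```

With equal debts the ring clears completely under multilateral netting, whereas with unequal debts one bank remains in deficit and two remain net creditors. In this toy setting it is those net creditors who bear the exposure to a clearinghouse default.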

Figure 16 investigates the clearinghouse activation dynamics for the case in which pd = 3%, liq = 3.5% and trc = 0.5%. Comparing Fig. 14 with Fig. 16 shows that the non-linear activation dynamic is preserved in this case too. Furthermore, with the interconnection probability between 25 and 40%, there are more simulations in which the number of agents approaches or reaches the threshold level for activation.

Finally, Fig. 17 shows the most favourable case for activating the clearinghouse. Comparing this case with the same one for homogeneous banks, it clearly appears that the probability of activation is lower. Nevertheless, the activation dynamics are preserved, and establishment of the clearinghouse is not an event with a probability higher than 1%.

3.5 Other topologies

In this section, we investigate how the topology of the interbank network can affect the results obtained in the random networks. First, we consider networks with small-world characteristics built with the procedures described in Sub-section 2.1. Table 3 displays the results for different levels of rewiring (beta parameter) and average connection (the K parameter implies a mean node degree of 2K). In particular, we assign to the beta parameter values equal to 0.3, 0.4 and 0.5 and to the connection parameter K values equal to 4, 5, 6 and 7.
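For readers who want to see how K and beta enter the construction, the following is a minimal sketch of the standard Watts and Strogatz (1998) procedure. It is an assumption that the procedure of Sub-section 2.1 follows this textbook form; the function name and the choice of 25 nodes are illustrative. Each node is first linked to its K nearest neighbours on each side of a ring, and each link is then rewired to a random node with probability beta.

```python
import random

def small_world(n, K, beta, seed=0):
    """Watts-Strogatz style graph: ring lattice with K neighbours per side, then rewiring."""
    rng = random.Random(seed)
    neighbours = {v: set() for v in range(n)}
    # Step 1: regular ring lattice (requires n > 2 * K).
    for v in range(n):
        for d in range(1, K + 1):
            w = (v + d) % n
            neighbours[v].add(w)
            neighbours[w].add(v)
    # Step 2: rewire each lattice link with probability beta.
    for d in range(1, K + 1):
        for v in range(n):
            w = (v + d) % n
            if rng.random() < beta and w in neighbours[v]:
                candidates = [u for u in range(n)
                              if u != v and u not in neighbours[v]]
                if candidates:
                    u = rng.choice(candidates)
                    neighbours[v].discard(w)
                    neighbours[w].discard(v)
                    neighbours[v].add(u)
                    neighbours[u].add(v)
    return neighbours

def mean_degree(neighbours):
    return sum(len(adj) for adj in neighbours.values()) / len(neighbours)

if __name__ == "__main__":
    for K in (4, 5, 6, 7):
        print(K, mean_degree(small_world(25, K, beta=0.4)))
```

Rewiring moves links but never creates or destroys them, so the mean degree stays at 2K for every beta. This is consistent with K, rather than beta, governing the density of the network.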

The main driver of our simulations appears to be connection parameter K. Indeed, the probability of the clearinghouse’s activation does not undergo large variations for the different levels of beta given K. Parameter K shows the trade-off BNS vs. MNS detected for Erdős-Rényi random networks. For values of K between 4 and 6, the parameter shows a positive relationship with the activation probability, which reaches 24.8% for beta = 0.4. For values of K equal to 7, the probability of activation of the clearinghouse decreases for each level of beta. This implies that density has reached a point where the BNS prevails over the MNS.

Finally, we analyse the scale-free topology obtained with the Barabási and Albert algorithm for m0 = 5 and mean degree values of the core banks equal to 2 and 3. Table 4 displays the average number of banks that apply for the clearinghouse. Looking at the table, we deduce that for all scenarios at least one bank opts for the BNS. This is not surprising given the difference between hub banks and others. Figure 18 compares the net interbank exposures in the case of a scale-free network and an Erdős-Rényi one. We believe that this difference is the main cause of the BNS’s absolute dominance.
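The gap between hub banks and the others follows directly from preferential attachment, as the sketch below suggests. It is a generic implementation of the Barabási and Albert (1999) growth rule under assumptions made here for illustration: the network starts from a complete graph on m0 nodes, each entering bank attaches m links, and there are 25 banks. The function names are hypothetical and the paper's exact parametrization may differ.

```python
import random

def barabasi_albert(n, m0, m, seed=0):
    """Grow a graph by preferential attachment; returns the list of undirected links."""
    rng = random.Random(seed)
    # Assumed seed network: complete graph on the first m0 nodes.
    edges = [(a, b) for a in range(m0) for b in range(a + 1, m0)]
    # Each node appears in `stubs` once per incident link, so a uniform draw
    # from `stubs` selects a node with probability proportional to its degree.
    stubs = [node for edge in edges for node in edge]
    for new in range(m0, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(stubs))
        for target in sorted(targets):
            edges.append((new, target))
            stubs.extend((new, target))
    return edges

def degrees(edges, n):
    deg = [0] * n
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return deg

if __name__ == "__main__":
    deg = degrees(barabasi_albert(25, m0=5, m=2), 25)
    print("max degree:", max(deg), "mean degree:", sum(deg) / len(deg))
```

Early entrants accumulate links while late entrants keep only the m links they were born with, so the degree distribution is uneven by construction. This is the kind of asymmetry between hub banks and peripheral banks that the text points to.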

4 Concluding remarks

In this paper, we have developed an agent-based model in order to investigate the process of formation of a clearinghouse that manages payments. In particular, we have focused on the formation of the New York Clearinghouse during the Free Banking Era, as described by Gorton (1984). Our results confirm the thesis put forward by Gorton that a clearinghouse for the management of interbank payments emerged endogenously as a private institution intended to mitigate liquidity and transaction costs.

Moreover, our analysis has shown how the topology and the density of payments play an essential role in the formation of a clearinghouse. As far as we know, ours is the first study that considers this variable in addition to the probability of default and the cost of liquidity. Finally, our model could serve to analyse the process by which a clearinghouse is formed in more general contexts. In this case, it would be necessary to enrich the model by considering maintenance margins, the use of collateral, clearinghouses as private institutions and the possibility of default by agents.

Notes

See Chiu and Lai (2007) for settlement definitions.

See Chiu and Lai (2007) for a literature review.

Gross settlement is a payment method in which the two contractors agree to close a financial obligation on a fixed date and for the nominal value of the contract.

The rule was that of single-branch banks.

In addition to the references reported above, for a comprehensive review, see Neveu (2018).

It would be the same if we provided a sufficient initial liquidity buffer to all agents and considered the opportunity cost of the different settlement systems. We have instead decided to consider agents without an initial liquidity buffer. Therefore, agents are forced to request gold from units outside the system. Hence, the described model is of partial equilibrium. If we considered agents with initial liquidity, we would have a general equilibrium model.

During the Free Banking Era, basically all banks operated as single-branch firms.

∆O stands for “delta obligations”.

References

Alós-Ferrer C, Kirchsteiger G (2006) General equilibrium and the emergence of (non) market clearing trading institutions. Economic Theory 44:339–360

Alós-Ferrer C, Kirchsteiger G, Walzl M (2010) On the evolution of market institutions: the platform design paradox. Econ J 120:215–243

Alós-Ferrer C, Buckenmaier J, Kirchsteiger G (2021) Do traders learn to select efficient market institutions? Exp Econ. https://doi.org/10.1007/s10683-021-09710-1

Anderson H, Calomiris C, Jaremski M, Richardson G (2018) Liquidity risk, bank networks, and the value of joining the Federal Reserve System. J Money Credit Bank 50:173–201

Angelini P (1998) An analysis of competitive externalities in gross settlement systems. J Bank Financ 22:1–18

Barabási A, Albert R (1999) Emergence of scaling in random networks. Science 286:509–512

Bargigli L, Gallegati M, Riccetti L, Russo A (2014) Network analysis and calibration of the “leveraged network-based financial accelerator”. J Econ Behav Organ 99:109–125

Battiston S, Delli Gatti D, Gallegati M, Greenwald B, Stiglitz J (2012a) Liaisons dangereuses: increasing connectivity, risk sharing, and systemic risk. J Econ Dyn Control 36:1121–1141

Battiston S, Delli Gatti D, Gallegati M, Greenwald B, Stiglitz J (2012b) Default cascades: when does risk diversification increase stability? J Financ Stab 8:138–149

Calomiris C, Carlson M (2017) Interbank networks in the National Banking Era: their purpose and their role in the panic of 1893. J Financ Econ 125:434–453

Chakravorti S (2000) Analysis of systemic risk in multilateral net settlement systems. J Int Financ Mark Inst Money 10:9–30

Chaudhuri R (2014) The changing face of American banking. Palgrave Macmillan, New York

Chiu J, Lai A (2007) Modelling payments systems: a review of the literature. Bank of Canada Staff Working Paper, No.07/28

Delli Gatti D, Gallegati M, Greenwald B, Russo A, Stiglitz J (2010) The financial accelerator in an evolving credit network. J Econ Dyn Control 34:1627–1650

Duffie D, Zhu H (2011) Does a central clearing counterparty reduce counterparty risk? Rev Asset Pric Stud 1:74–95

Duffie D, Scheicher M, Vuillemey G (2015) Central clearing and collateral demand. J Financ Econ 116:237–256

Erdős P, Rényi A (1959) On random graphs. I. Publ Math 6:290–297

European Central Bank (2021) Payments and markets glossary. https://www.ecb.europa.eu/services/glossary/html/act7e.en.html

France V, Kahn C (2016) Law as a constraint on bailouts: emergency support for central counterparties. J Financ Intermed 28:22–31

Gaffeo E, Gobbi L (2021) Achieving financial stability during a liquidity crisis: a multi-objective approach. Risk Manag 23:48–74

Gaffeo E, Molinari M (2015) Interbank contagion and resolution procedures: inspecting the mechanism. Quant Finan 15:637–652

Gaffeo E, Molinari M (2016) Macroprudential consolidation policy in interbank networks. J Evol Econ 26:77–99

Gaffeo E, Gobbi L, Molinari M (2019) The economics of netting in financial networks. J Econ Interac Coord 14:595–622

Galbiati M, Soramäki K (2013) Central counterparties and the topology of clearing networks. Bank of England Working Paper No. 480

Gerber A, Bettzuege M (2007) Evolutionary choice of markets. Economic Theory 30:453–472

Gorton G (1984) Private clearinghouses and the origins of central banking. Business Review of the Federal Reserve Bank of Philadelphia, Issue Jan/Feb, 3–12

Gorton G (1985) Banking theory and free banking history: a review essay. J Monet Econ 16:267–276

Grilli R, Tedeschi G, Gallegati M (2015) Markets connectivity and financial contagion. J Econ Interac Coord 10:287–304

Hoag C (2011) Clearinghouse membership and deposit contraction during the Panic of 1893. Cliometrica 5:187–203

International Monetary Fund (2001) Developing government bond markets. The World Bank

Jaremski M (2015) Clearinghouses as credit regulators before the Fed? J Financ Stab 17:10–21

Jaremski M (2018) The (dis)advantages of clearinghouses before the Fed. J Financ Econ 127:435–458

Kahn CM, Roberds W (2001) Real-time gross settlement and the costs of immediacy. J Monet Econ 47:299–319

Kugler T, Neeman Z, Vulkan N (2006) Markets versus negotiations: an experimental analysis. Games Econ Behav 56:121–134

Lucarelli S, Gobbi L (2016) Local clearing unions as stabilizers of local economic systems: a stock flow consistent perspective. Camb J Econ 40:1397–1420

Neeman Z, Vulkan N (2005) Markets versus negotiations: the predominance of centralized markets. http://archive.dimacs.rutgers.edu/Workshops/Games/neeman.pdf

Neveu A (2018) A survey of network-based analysis and systemic risk measurement. J Econ Interac Coord 13:241–281

Riccetti L, Russo A, Gallegati M (2013) Leveraged network-based financial accelerator. J Econ Dyn Control 37:1626–1640

Srinivasan V, Kim YH (1986) Payments netting in international cash management: a network optimization approach. J Int Bus Stud 17:1–20

Stodder J (2009) Complementary credit networks and macroeconomic stability: Switzerland’s Wirtschaftsring. J Econ Behav Organ 72:79–95

Stodder J, Lietaer B (2016) The macro-stability of Swiss WIR-Bank Credits: balance, velocity, and leverage. Comp Econ Stud 58:570–605

Tedeschi G, Mazloumian A, Gallegati M, Helbing D (2012) Bankruptcy cascades in interbank markets. PLoS One 7:e52749

Watts DJ, Strogatz SH (1998) Collective dynamics of ‘small-world’ networks. Nature 393:440–442

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Appendix

Cite this article

Gaffeo, E., Gallegati, M. & Gobbi, L. Endogenous clearinghouse formation in payment networks. Rev Evol Polit Econ 3, 109–136 (2022). https://doi.org/10.1007/s43253-021-00054-3
