Abstract

This article studies estimation of a stationary autocovariance structure in the presence of an unknown number of mean shifts. Here, a Yule–Walker moment estimator for the autoregressive parameters in a dependent time series contaminated by mean shift changepoints is proposed and studied. The estimator is based on first order differences of the series and is proven consistent and asymptotically normal when the number of changepoints m and the series length N satisfy \(m/N \rightarrow 0\) as \(N \rightarrow \infty\).

1 Introduction

Time series dynamics often change due to external events or internal systematic fluctuations. One common structural change is the mean shift, and changepoint analyses allow the researcher to identify whether and when abrupt changes in the mean of the series take place. Evolving from the original treatise for a single location parameter shift in Page (1954), the majority (but not all) of changepoint analyses check for shifts in the mean of the series. Since Page (1954), considerable changepoint work has been conducted, including recursive segmentation algorithms such as binary segmentation and wild binary segmentation (Fryzlewicz 2014), dynamic programming based approaches such as Jackson et al. (2005) and Killick et al. (2012), moving sum (MOSUM) procedures (Eichinger and Kirch 2018; Chen et al. 2021), and simultaneous multi-scale changepoint estimators (SMUCE; Frick et al. 2014). Additional changepoint work includes applications in climatology (Hewaarachchi et al. 2017), economics (Norwood and Killick 2018), and disease modelling (Hall et al. 2000).

Many changepoint techniques assume independent and identically distributed (IID) model errors; however, time series data are typically correlated (e.g., daily temperatures, stock prices, and DNA sequences; Chakravarthy et al. 2004). Changepoint techniques tend to overestimate the number of changepoints should positive autocorrelation be ignored (Shi et al. 2022). In addition, some multiple changepoint models for time series allow all model parameters, including those governing the correlation structure of the series, to change at each changepoint time. These scenarios are easier to handle computationally as dynamic programming techniques can quickly optimize penalized likelihood objective functions; see Killick et al. (2012) and Maidstone et al. (2017). In these cases, the objective function optimized is additive in its segments (regimes). A more parsimonious model allows series means to shift with each changepoint time, but keeps error autocovariances constant across all regimes. These models do not lead to objective function additivity and fast dynamic programming techniques cannot be directly applied (see Shi et al. 2022).

Remedies typically seek to incorporate the autocorrelation structure in the changepoint analysis or to pre-whiten the series prior to any changepoint analysis. In either case, one needs to quantify the autocovariance structure and/or long-run variance of the series. With a good estimate of the series’ autocovariance structure, one-step-ahead prediction residuals can be computed, and these residuals are always uncorrelated (independent up to estimation error for Gaussian series). Indeed, a principle of Shi et al. (2022) and Robbins et al. (2011) is that good multiple changepoint detection routines can be devised by applying IID methods to the series’ one-step-ahead prediction residuals (also called pre-whitening). Perhaps owing to this, considerable recent research has sought to find changepoints in dependent time series. Among these, Dette et al. (2020) estimate the long-run variance of the error process via a difference-type variance estimator calculated from local means from different blocks; this estimate is then used to modify SMUCE for dependent data. Chen et al. (2021) propose a robust covariance estimation procedure from \(M\)-estimation to modify a moving sum procedure. Other proposed long-run variance (or time-average variance) estimators for mean shift problems based on robust methods include those of Chan (2022), Romano et al. (2021), and Chakar et al. (2017).

This paper studies autocovariance and long-run variance estimation in the presence of mean shifts in more detail. We devise a method based on first order differencing that outperforms robust and rolling window methods. The scenario is asymptotically quantified when the model errors obey a causal autoregressive (AR) process.

The rest of this paper proceeds as follows. The next section describes our setup and discusses approaches to the problem. Section 3 then develops an estimation technique based on lag one differences of the series. Section 4 proves consistency and asymptotic normality of these estimators and Sect. 5 assesses their performance in simulations. Section 6 applies the results to an annual precipitation series and Sect. 7 concludes with brief comments.

2 Model and estimation approaches

Suppose that \(\{ X_t \}_{t=1}^N\) is a time series having an unknown number of mean shift changepoints, denoted by m, occurring at the unknown ordered times \(1< \tau _1< \tau _2< \cdots < \tau _m \le N\). These m changepoints partition the series into \(m+1\) distinct segments, each segment having its own mean. The model is written as

\[X_t = \kappa _{s(t)} + \epsilon _t, \qquad t = 1, \ldots , N. \tag{1}\]

Here, s(t) denotes the series’ regime number at time t, which takes values in \(\{0, 1, \ldots , m \}\). Then \(\kappa _{s(t)} = \mu _i\) is constant for all times in the \(i\mathrm{th}\) regime:

\[\kappa _{s(t)} = \begin{cases} \mu _0, & 1 \le t < \tau _1, \\ \mu _1, & \tau _1 \le t < \tau _2, \\ \ \vdots & \\ \mu _m, & \tau _m \le t \le N. \end{cases}\]

We assume that \(\{ \epsilon _t \}\) is a stationary causal AR(p) time series that applies to all regimes. The AR order p is assumed known for the moment; BIC penalties will be examined later to select the order of the autoregression should it be unknown. While more general ARMA(p, q) \(\{ \epsilon _t \}\) could be considered, we work with AR(p) errors because this model class is dense in all stationary short-memory series (Brockwell and Davis 1991), and estimation, prediction, and forecasting are easily conducted. Adding a moving-average component \(q \ge 1\) induces considerably more work and is less commonly found in changepoint applications. The AR(p) \(\{ \epsilon _t \}\) obeys

\[\epsilon _t = \phi _1 \epsilon _{t-1} + \cdots + \phi _p \epsilon _{t-p} + Z_t, \tag{2}\]

where \(\{ Z_t \}\) is IID white noise with a zero mean, variance \(\sigma ^2\), and a finite fourth moment (this enables consistent estimation of the autoregressive parameters \(\phi _1, \ldots , \phi _p\)).
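A short simulation can make this model concrete. The sketch below is illustrative only: it assumes a piecewise constant mean plus AR(p) noise with Gaussian \(\{ Z_t \}\), and the function name `simulate_mean_shift_ar`, its arguments, and the burn-in length are hypothetical choices rather than anything specified in the text.

```python
import numpy as np

def simulate_mean_shift_ar(n, phi, taus, mus, sigma=1.0, burn_in=500, seed=None):
    """Simulate a piecewise constant mean plus causal AR(p) noise.

    taus: sorted changepoint times (1-based); regime k starts at time taus[k-1].
    mus:  the m + 1 segment means mu_0, ..., mu_m.
    """
    rng = np.random.default_rng(seed)
    phi = np.atleast_1d(np.asarray(phi, dtype=float))
    p = phi.size
    total = n + burn_in
    z = rng.normal(0.0, sigma, size=total)  # Gaussian white noise (an assumption)
    eps = np.zeros(total)
    for t in range(total):
        k = min(p, t)  # only use lags that exist at the start of the recursion
        eps[t] = z[t] + np.dot(phi[:k], eps[t - k:t][::-1])
    eps = eps[burn_in:]  # discard the burn-in so the noise is close to stationary
    times = np.arange(1, n + 1)
    # Regime number s(t) = number of changepoint times that are <= t.
    regime = np.searchsorted(np.asarray(taus), times, side="right")
    return np.asarray(mus, dtype=float)[regime] + eps
```

For instance, `simulate_mean_shift_ar(1000, [0.5], [300, 700], [0.0, 1.0, -1.0], seed=1)` returns a length 1000 series with AR(1) errors and two mean shifts.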

The next section develops a difference based moment estimation procedure for the mean shift setting. Under this scenario, first-order differences of the series will have a non-zero mean only at the changepoint times. At this point, it might seem prudent to apply ARMA estimation methods that are robust to outliers to the differenced series. Indeed, many previous authors have considered outlier-robust estimators for ARMA models. For example, the M-estimators of Muler et al. (2009) are shown to be consistent and tractable and the bounded influence propagation (BIP) \(\tau\)-estimators in Muma and Zoubir (2017) merit mention. However, these estimators require the ARMA series to be causal and invertible. In our application, the differenced series has a unit root in its MA component and is hence not invertible. Perhaps worse, the simulations in Sect. 5 demonstrate that BIP \(\tau\)-estimators do not perform well in our setting.

3 Moment estimates based on differencing

This section derives a system of linear equations that relate the autocorrelations of the differenced series to the AR(p) coefficients. First-order differencing a series eliminates any piecewise constant mean except at times where shifts occur. Authors have previously used differencing to estimate global parameters in the changepoint literature. For example, Tecuapetla-Gómez and Munk (2017) discuss a class of difference-based estimators for autocovariances in nonparametric changepoint segment regression when the errors are from a stationary m-dependent process. Fryzlewicz (2014) uses differencing to get an estimate of \(\text {Var}(X_t)\), although IID errors are assumed in this work. The estimator in (16) below comes from Chakar et al. (2017) and is also based on differencing. This said, there seems to be no previous literature using differencing to estimate AR(p) parameters in a setting corrupted by mean shifts. As an aside, differencing also detrends a time series; the estimators below perform well if a time series has both changepoints and a linear trend.

Let \(\{ X_t \}\) be a stationary series satisfying the causal AR(p) difference equation

\[X_t = \phi _1 X_{t-1} + \cdots + \phi _p X_{t-p} + Z_t, \tag{3}\]

with \(\{ Z_t \}\) a zero mean IID sequence with a finite fourth moment. Since \(X_t\) may be causally expressed in terms of \(Z_t, Z_{t-1}, \ldots\), the autoregressive coefficients are uniquely determined by the pth order recursion

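and its boundary conditions (Brockwell and Davis 1991). Here, \(\gamma _X(h)=\text {Cov}(X_t,X_{t-h})\) and we use the analogous notation \(\rho _X(h)=\text {Corr}(X_t, X_{t-h})\). Consider the sequence of first differences defined by \(d_t=X_t-X_{t-1}\). Then \(\{ d_t \}\) is stationary with

\[\gamma _d(h) = 2\gamma _X(h) - \gamma _X(h-1) - \gamma _X(h+1) \tag{5}\]

and

\[\rho _d(h) = \frac{2\rho _X(h) - \rho _X(h-1) - \rho _X(h+1)}{2\left( 1-\rho _X(1)\right) }. \tag{6}\]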

One can also show that \(\{ d_t \}\) satisfies an ARMA(p, 1) difference equation with a first-order moving average parameter of \(-1\). We now show that \(\phi _1, \ldots , \phi _p\) can be recovered from the autocorrelation function of the differences.
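As a quick check on how the differences encode the AR structure, consider the AR(1) special case. This is a worked illustration under the assumption \(\rho _X(h)=\phi ^{|h|}\) with \(|\phi |<1\), using the standard identity \(\gamma _d(h)=2\gamma _X(h)-\gamma _X(h-1)-\gamma _X(h+1)\) for first differences of a stationary series:

\[\rho _d(h) = \frac{2\phi ^h-\phi ^{h-1}-\phi ^{h+1}}{2(1-\phi )} = -\frac{\phi ^{h-1}(1-\phi )^2}{2(1-\phi )} = -\frac{(1-\phi )\,\phi ^{h-1}}{2}, \qquad h \ge 1.\]

In particular, \(\rho _d(1) = -(1-\phi )/2\) lies in \((-1, 0)\) and determines \(\phi\) uniquely.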

Let \(P(A\Vert B)\) denote the best linear predictor (BLP) of a random variable A from linear combinations of elements in the set B. We assume that B includes a constant term to allow for cases with a nonzero mean. It is well known that for a stationary causal ARMA process, the linear representation of the best linear prediction of future series values from past series values is unique (Brockwell and Davis 1991). Equations determining the autoregressive coefficients can be derived by equating two different expressions for the BLP.

Executing on the above, (3) gives

where \(\kappa =1-\sum _{j=1}^p\phi _j\) (\(\kappa \ne 0\) by causality). Substituting \(X_{p-j}=X_p-\sum _{i=0}^{j-1}d_{p-i}\) for \(j=1, \ldots , p-1\) into the last line above yields

To express the BLP in terms of \(\mathbf {d}=(d_p, \ldots , d_1)^T\) only, use the prediction equations to obtain

where \(\varvec{\Gamma }_d\) is the \(p \times p\) covariance matrix of \(\mathbf {d}\), which is known to be invertible for a causal stationary ARMA \(\{ d_t \}\) (see Proposition 5.1 in Brockwell and Davis 1991). Combining the above gives

with \((v_1,v_2,\ldots ,v_p)=\text {Cov}(X_p,\mathbf {d})\varvec{\Gamma }_d^{-1}\).

The coefficients \(\mathbf {v}^T=(v_1,\ldots , v_p)\) can be written in terms of the correlation function of the differences in (6):

where \(\mathbf{R}_d\) is the \(p \times p\) autocorrelation matrix of \(\mathbf{d}\) and

can be extracted from (5) and the relation

A second representation of the BLP is given by the prediction equations:

where the predicting coefficients are \((u_1, u_2, \ldots , u_p) = \text {Corr}(d_{p+1}, \mathbf {d})\mathbf{R}_d^{-1}\). Here, \(u_p\) is the lag p partial autocorrelation of \(\{ d_t \}\). Equating the coefficient of \(d_1\) in (7) and (9) yields \(- \kappa v_p= u_p\). If \(v_p \ne 0\), which we tacitly assume to avoid trifling work, we can set \(\kappa =-u_p/v_p\). Equating the coefficients on the right hand sides of (7) and (9), and solving for \(\varvec{\phi }\) produces an expression of the autoregressive coefficients in terms of the autocorrelations of \(\{ d_t \}\):

where \(v_0=1\) and \(u_0=-1\). If \(\{ X_t \}\) satisfies (3), then \(\phi _1, \ldots , \phi _p\) satisfy (10). Now let \(\{ d_t \}\) be a stationary sequence with \(v_p \ne 0\) and suppose that \(\varvec{\phi }^T=(\phi _1,\ldots , \phi _p)\) satisfies (10):

with

Since \(u_p\) is the partial correlation of \(\{ d_t \}\) at lag p,

with \(\varvec{\rho }_d^T=\left( \rho _d(1), \ldots , \rho _d(p) \right)\). Substituting this into the above linear equation for \(\varvec{\phi }\) and simplifying gives

where

with \(\mathbf {c}^*=\left( -1/2, 1/2 + \rho _d(1), \ldots , 1/2 + \sum _{k=1}^{p-1} \rho _d(k) \right) ^T\). Note that each element in \(\varvec{M}\) is a function of \(\rho _d(1), \ldots , \rho _d(p)\).

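The \(p=1\) case will shed light on the above calculations. Here, (10) and (6) give

\[\phi _1 = 1 + 2\rho _d(1) = \frac{\rho _X(1)-\rho _X(2)}{1-\rho _X(1)},\]

which exceeds unity whenever \(\rho _X(2) < 2\rho _X(1)-1\), which can happen for some AR(p) models. However, if \(\{ X_t \}\) follows

\[X_t = \phi X_{t-1} + Z_t\]

and \(|\phi |< 1\),

\[\phi _1 = \frac{\phi -\phi ^2}{1-\phi } = \phi .\]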

In general, if \(\varvec{\phi }\) is from a causal AR(p) model satisfying (4), then (11) provides a one-to-one transformation between \(\rho _{d}(1), \ldots , \rho _d(p)\) and \(\varvec{\phi }\). However, if \(\{X_t\}\) does not follow a causal AR(p) recursion, there is no guarantee that \(\varvec{\phi }\) satisfying (10) corresponds to a causal AR(p) model. In practice, this presents no issue since it is easy to check to see if a fitted AR(p) model is causal. If our fitted model is not causal, this is an indication that \(\{X_t\}\) is inadequately described by an AR(p) series. In this case, we simply change p and refit until causality is achieved.
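For \(p=1\), the relation between the differences and the AR coefficient is simple enough to try numerically: for an AR(1) series \(\rho _X(h)=\phi ^h\), so the lag one autocorrelation of the differences equals \(-(1-\phi )/2\) and hence \(\phi = 1 + 2\rho _d(1)\). The following minimal sketch applies this to a simulated series; the helper names and all simulation settings are hypothetical, and the comparison with the naive lag one autocorrelation of the undifferenced series is included only for illustration.

```python
import numpy as np

def sample_autocorr(x, lag):
    """Lag-`lag` sample autocorrelation (mean-corrected, 1/n normalisation)."""
    xc = np.asarray(x, dtype=float) - np.mean(x)
    return float(np.dot(xc[lag:], xc[:-lag]) / np.dot(xc, xc))

def diff_ar1_estimate(x):
    """AR(1) coefficient from first differences: phi = 1 + 2 * rho_d(1)."""
    d = np.diff(np.asarray(x, dtype=float))
    return 1.0 + 2.0 * sample_autocorr(d, 1)

# Illustration (settings are assumptions): AR(1) noise with phi = 0.5
# plus three mean shifts of size 2 at times 5000, 10000 and 15000.
rng = np.random.default_rng(0)
n, phi = 20000, 0.5
z = rng.normal(size=n)
eps = np.zeros(n)
for t in range(1, n):
    eps[t] = phi * eps[t - 1] + z[t]
mean = np.zeros(n)
mean[5000:10000] = 2.0
mean[15000:] = 2.0
x = mean + eps

phi_diff = diff_ar1_estimate(x)    # based on differences, barely affected by shifts
phi_naive = sample_autocorr(x, 1)  # ignores the shifts and is inflated by them
```

In this setup the difference-based value should land near the true 0.5, whereas the naive estimate absorbs the mean shifts as extra positive correlation.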

Given observations \(X_1, \ldots , X_N\), we estimate the lag h sample autocorrelation of the differences from

Here, \(\bar{d}= (N-1)^{-1}\sum _{t=2}^{N} d_t\) is the sample mean of the \(N-1\) observed differences. The AR(p) model will be fit using (10) with \(\gamma _d(h)\) replaced by the sample version \(\hat{\gamma }_d(h)\):

where \({\hat{\mathbf{{c}}}}\) is obtained from (8) by replacing all elements with their estimates.

To ensure that the estimated \(v_p\) is not zero, one simply checks this in practice. It is also recommended to check to see if the fitted AR(p) model is causal.
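The causality check mentioned here can be done numerically by locating the zeros of the AR polynomial \(1 - \phi _1 z - \cdots - \phi _p z^p\), all of which must lie strictly outside the unit circle. A minimal sketch follows; the function name `is_causal` and the tolerance are hypothetical choices.

```python
import numpy as np

def is_causal(phi, tol=1e-10):
    """Return True if 1 - phi_1 z - ... - phi_p z^p has all zeros outside the unit circle."""
    phi = np.atleast_1d(np.asarray(phi, dtype=float))
    # np.roots expects coefficients ordered from the highest power down to the constant.
    coeffs = np.concatenate((-phi[::-1], [1.0]))
    zeros = np.roots(coeffs)
    return bool(np.all(np.abs(zeros) > 1.0 + tol))
```

For example, `is_causal([0.5])` is true, while `is_causal([1.2])` and `is_causal([0.5, 0.6])` are false.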

Summarizing, our proposed algorithm for fitting an AR(p) model using differences is

1. Compute \({\hat{\mathbf{{u}}}}\) and \({\hat{\mathbf{{v}}}}\). If \(\hat{v}_p=0\), reduce the AR order to \(p-1\) and refit.

2. Use (10) with \(\mathbf {u}={\hat{\mathbf{{u}}}}\) and \(\mathbf {v}={\hat{\mathbf{{v}}}}\) to find \(\hat{\varvec{\phi }}\), and check to see that the estimates correspond to a causal model. If the solution is non-causal, change p and refit.

The above algorithm produces a \(\hat{\varvec{\phi }}\) for a causal AR(p) process satisfying (11):

where each element in \(\hat{\mathbf{M}}\) corresponds to an element of \(\mathbf{M}\) with \(\rho _d(h)\) replaced by \(\hat{\rho }_d(h)\) for each h. For any stationary sequence of first differences \(\{ d_t \}\), each element of \(\hat{\mathbf{M}}\) converges almost surely to its theoretical value. In particular, as \(N \rightarrow \infty\), \(\hat{\mathbf{M}} \rightarrow \mathbf{M}\) in the almost sure sense.

We end this section by estimating \(\sigma ^2\). There are several moment equations that can be used to estimate \(\sigma ^2\). For example, multiplying both sides of the ARMA(p, 1) difference equation,

by \(d_t\), taking expectations, and solving for \(\sigma ^2\) yields,

A moment based estimator of the variance is hence

In the next section, we show that \(\hat{\sigma }^2\) is a \(\sqrt{N}\)-consistent estimator of \(\sigma ^2\).

4 Asymptotic normality

This section shows that if \(m=m(N)\) grows slowly enough in N, the estimators in the last section will be consistent and asymptotically normal. If the number of changepoints m is small relative to N, then the mean shifts should have a negligible impact on the estimated autocovariance of the differences, since \(X_t-X_{t-1} = \epsilon _t-\epsilon _{t-1}\) except at the changepoint times \(\tau _1, \ldots , \tau _m\). In particular, to obtain asymptotic normality, we assume that as \(N \rightarrow \infty\), for some finite B,

A.1 \(\max _{0 \le k\le m(N)-1} \mid \mu _{k+1}-\mu _k \mid \le B\).

A.2 \(m(N)=o(\sqrt{N})\).

Condition A.1 imposes existence of some bound on the mean shift sizes and Condition A.2 regulates the number of changepoints that can occur.

We begin with asymptotic normality of the autocorrelations for first-order differences in the general ARMA(p, q) case, which may be of independent interest. The asymptotic normality of the AR(p) estimators is a corollary to Theorem 1.

Theorem 1

If \(\{ X_t \}_{t=1}^N\) obeys (1) with \(\{\epsilon _t \}\) satisfying (2) where \(\{ Z_t \}\) is IID white noise having a finite fourth moment, then for each fixed positive integer k, as \(N \rightarrow \infty\),

Here, the elements in the \((k+1) \times (k+1)\) dimensional \(\mathbf {W}\) are from Bartlett’s formula for the asymptotic covariance matrix of \((\hat{\rho }_\epsilon (1), \ldots , \hat{\rho }_\epsilon (k+1))^T\), (see Chapter 8 of Brockwell and Davis 1991) and \(\mathbf {B}\) is \(k \times (k+1)\) dimensional with form

Proof

We first show that the changepoints have negligible impact on estimated autocorrelations in the limit. To do this, write \(d_t=X_t-X_{t-1}= (\epsilon _t - \epsilon _{t-1}) +\delta _t\), with \(\delta _t=\sum _{k=1}^{m}(\mu _k-\mu _{k-1}) I_{[t=\tau _k]}\), and \(I_A\) the indicator of the set A. Letting

then

where \(\mathcal{T} = \{ \tau _1, \ldots , \tau _m \}\) denotes the set of all changepoint times. The term on the right hand side converges to zero if \(N^{-1/2}m \rightarrow 0\) as \(N \rightarrow \infty\) (this is Condition A.2) and the sum is bounded in probability (this is guaranteed by Conditions A.1, A.2, and the properties of \(\{ \epsilon _t \}\)). We see that the asymptotic distribution of \(\hat{\gamma }_d(h)\) is the same as that of \(\tilde{\gamma }_d(h)\).

It is easy to see that

where \(o_P(1)\) denotes a term that converges to zero in probability as \(N \rightarrow \infty\). Using the above and \(\hat{\gamma }_d(0)/\gamma _\epsilon (0) \rightarrow 2(1-\rho _\epsilon (1))\) in the almost sure sense, we have

for each \(h = 1, \ldots , k\). Hence,

Theorem 1 now follows from classic results for asymptotic normality for sample autocorrelations of ARMA processes (see Chapter 8 of Brockwell and Davis 1991). \(\square\)

Corollary 2

Suppose that \(\{ X_t \}\) follows (1) with \(\{ \epsilon _t \}\) satisfying (2) with \(\{ Z_t \}\) IID white noise having a finite fourth moment. For the estimator in (11), as \(N \rightarrow \infty\),

Here, \(\varvec{\Sigma }=\mathbf {M} \mathbf {B W} (\mathbf {M} \mathbf {B})^T\).

Proof of Corollary 2

Since \(\{ d_t \}\) is stationary and ergodic, the elements of \(\hat{\varvec{M}}\) converge to those in \(\varvec{M}\) in the almost sure sense; specifically, (15) gives

The conclusion of Corollary 2 now follows. \(\square\)

Theorem 1 and Corollary 2 imply that \({\hat{\varvec{\rho }}}_d\) and \(\hat{\varvec{\phi }}\) are both consistent estimators, so that \(\hat{\sigma }^2\) given by (14) is a consistent estimator of the white noise variance.

5 A simulation study

A simulation study with AR(p) errors is now conducted. Our Yule–Walker moment estimator based on first-order differencing is compared to several estimators, including the robust AR(1) estimator of Chakar et al. (2017), the BIP \(\tau\)-estimators of Muma and Zoubir (2017), and the rolling window methods employed in Beaulieu and Killick (2018).

The paper Chakar et al. (2017) studies the AR(1) case and proposes an estimator that is robust to mean shifts:

It is not clear how to extend this work to cases where \(p > 1\).

The rolling window methods of Beaulieu and Killick (2018) estimate autocorrelations via window-based methods as follows. For a window length w, with \(w \le N\), a moving window scheme generates \(N-w+1\) subsegments, the \(i\mathrm{th}\) subsegment containing the data at times \(i, \ldots , i+w-1\). Each subsegment is treated as a stationary series (even though some may contain mean shifts and are thus truly nonstationary) and the time series parameters are estimated in subsegment i from the data in this subsegment only. The final estimates are taken as medians of the estimates over all subsegments. The hope is that most windows will be “changepoint free”, and medians over all subsegments will not be heavily influenced by the few windows containing changepoints. Of course, such a scheme may not use all data efficiently in estimation. Moreover, Beaulieu and Killick (2018) demonstrate that the success of this procedure depends heavily on the choice of w. As we show below, these robust autocovariance estimation methods do not perform particularly well for this problem.
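The rolling window scheme just described can be sketched for the AR(1) case as follows. This is a simplified illustration, not the implementation of Beaulieu and Killick (2018): it assumes that each window is summarized by its lag one sample autocorrelation (the Yule–Walker AR(1) estimate), and the function names are hypothetical.

```python
import numpy as np

def lag1_autocorr(x):
    """Lag one sample autocorrelation of a (mean-corrected) window."""
    xc = np.asarray(x, dtype=float) - np.mean(x)
    return float(np.dot(xc[1:], xc[:-1]) / np.dot(xc, xc))

def rolling_window_ar1(x, w):
    """Median of per-window AR(1) estimates over all N - w + 1 windows of length w."""
    x = np.asarray(x, dtype=float)
    n = x.size
    estimates = [lag1_autocorr(x[i:i + w]) for i in range(n - w + 1)]
    return float(np.median(estimates))
```

With `w` equal to the series length there is a single window and the result is just the ordinary lag one autocorrelation; shorter windows trade robustness to mean shifts against noisier per-window estimates.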

In each simulation, the series length is \(N=1000\) and m is randomly generated from the discrete uniform distribution \(\text {Uniform} \{ 0, 1, \ldots , 10 \}\), which roughly corresponds to the changepoint frequency in our data example in the next section. All changepoint times are generated randomly within \(\{ 2, 3, \ldots , N \}\) with equal probability; we do not impose any minimal spacing between successive changepoint times. The segment means \(\mu _i\) are randomly generated from a \(\text {Uniform}(-1.5,1.5)\) distribution. Ten thousand independent runs are conducted for all cases.

We first consider AR(1) errors, simulating \(\phi\) randomly from the \(\text {Uniform}(-0.95, 0.95)\) distribution and \(\{ Z_t \}\) as Gaussian white noise with a unit variance. Our Yule–Walker difference estimator in (12) is denoted by Diff in future figures. This estimator will be compared to a variety of alternative approaches. The robust AR(1) estimator in (16) is denoted by AR1seg. Averaged rolling window estimators, using different window lengths, are denoted by their lengths: N, N/2, N/5, N/10, N/20, and N/50. We also compare to the general ARMA robust estimator of Muma and Zoubir (2017) applied to the differenced data, which is denoted by BIP. Here, we fit a general ARMA(1,1) model for the errors, which does not take into account that the MA(1) parameter should be \(-1\). This extra flexibility should make the BIP method appear better than it truly is. Finally, we include an estimator based on our approach but with the outlying observations in \(\{ d_t \}\) first removed, which we denote by Outlier. Since our method is “corrupted” by non-zero means at the changepoint times, removing outliers (which are likely to occur at the changepoint observations) should improve our approach. For outlier detection, we use a simple nonparametric Tukey fence and acknowledge that other detection schemes could be used.
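A Tukey fence on the differenced series can be sketched as below. The text does not state the fence multiplier or how flagged differences are subsequently handled, so the conventional multiplier 1.5 and the function name `tukey_fence_mask` are assumptions, and the sketch stops at flagging.

```python
import numpy as np

def tukey_fence_mask(d, k=1.5):
    """Boolean mask marking values outside [Q1 - k*IQR, Q3 + k*IQR].

    k = 1.5 is the conventional choice (an assumption here).
    """
    d = np.asarray(d, dtype=float)
    q1, q3 = np.percentile(d, [25, 75])
    iqr = q3 - q1
    return (d < q1 - k * iqr) | (d > q3 + k * iqr)

# Toy differences with one large jump (illustrative values only).
d = np.array([0.1, -0.2, 0.0, 0.3, -0.1, 0.2, 5.0, -0.3])
flagged = np.flatnonzero(tukey_fence_mask(d))  # indices of suspected shift times
```

In the toy example only the jump of size 5.0 falls outside the fences.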

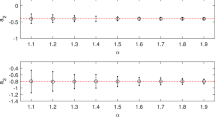

Our simulation results are summarized in Fig. 1. The clear winner is the Yule–Walker estimator based on first-order differencing: it shows no visible bias and has the smallest variance. The AR1seg estimator also appears unbiased; however, it is more variable than the Yule–Walker difference estimator. The performance of the rolling window estimators depends on the choice of the window length, but appears to be inferior to the difference based estimator, even with the optimal window size selected (which is likely somewhere between N/20 and N/50 in this simulation). It is hard to decide the optimal window length in practice and smaller window lengths considerably increase computation time. While the general BIP robust estimator appears unbiased, it has a much larger variance than all other estimators. Indeed, this estimator seems to be the worst of all. Our outlier removal approach has a slightly positive bias and slightly larger variance, likely induced by the tendency to remove true observations as outliers.

We now move to AR(2) errors. In each AR(2) simulation, the AR coefficients were uniformly generated from the triangular region guaranteeing model causality: \(\phi _1 + \phi _2 < 1\), \(\phi _2 - \phi _1 < 1\), and \(|\phi _2 |< 1\). In these simulations, the changepoint total is fixed at \(m=9\) and all segments have equal lengths. All mean shifts alternate in sign with an absolute magnitude of 2.0, the first shift moving the series upwards. The series length varies with \(N \in \{ 1000, 2000, 5000, 10000, 20000 \}\). Since \(p > 1\), the AR1seg estimator is not applicable. The rolling-window estimator and general BIP robust estimator were dropped from consideration due to their poor AR(1) performance and computational time requirements. The simulation results (Fig. 2) show that estimator bias and variance decrease as the length of the series increases, reinforcing the consistency results in the last section.
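One simple way to draw AR(2) coefficients uniformly from this causality triangle is rejection sampling from its bounding box. The sketch below is one possible approach under that assumption (the text does not say how the draws were made), and the function name is hypothetical.

```python
import numpy as np

def sample_causal_ar2(rng, size):
    """Draw (phi_1, phi_2) uniformly from the AR(2) causality triangle by rejection."""
    draws = []
    while len(draws) < size:
        phi1 = rng.uniform(-2.0, 2.0)  # bounding box of the triangle
        phi2 = rng.uniform(-1.0, 1.0)
        if phi1 + phi2 < 1.0 and phi2 - phi1 < 1.0:
            draws.append((phi1, phi2))
    return np.array(draws)

coefs = sample_causal_ar2(np.random.default_rng(0), 200)
```

The triangle occupies half of the bounding box, so on average two proposals are needed per accepted draw.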

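Moving to AR(4) simulations, to meet model causality requirements, the AR(4) characteristic polynomial is factored into its four roots, denoted by \(1/r_1, 1/r_2, 1/r_3\), and \(1/r_4\). That is,

\[\phi (z) = 1 - \phi _1 z - \phi _2 z^2 - \phi _3 z^3 - \phi _4 z^4 = (1 - r_1 z)(1 - r_2 z)(1 - r_3 z)(1 - r_4 z).\]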

Causality implies that all \(r_i\) should lie inside the complex unit circle. To meet this, \(r_1\) and \(r_2\) will be randomly generated from the Uniform\((-0.9, 0.9)\) distribution, and \(r_3\) is a randomly generated complex number with modulus \(\vert r_3 \vert <0.9\). The root \(r_4\) is taken as the complex conjugate of \(r_3\). This mixes real and complex roots in the AR(4) characteristic polynomial. All other simulation settings are identical to those in the AR(2) case. Figure 3 shows our results, which exhibit the same pattern as the AR(2) case, with decreasing bias and variance as N increases.
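The root-based construction can be sketched as follows, using the factorization of the AR(4) polynomial into the four factors \(1 - r_i z\). The text does not specify how the complex \(r_3\) is distributed, so drawing it uniformly on the disc of radius 0.9 is an assumption, as is the function name.

```python
import numpy as np

def random_causal_ar4(rng, bound=0.9):
    """Build causal AR(4) coefficients from two real and two conjugate inverse roots."""
    r1, r2 = rng.uniform(-bound, bound, size=2)
    modulus = bound * np.sqrt(rng.uniform())  # assumption: uniform on the disc
    angle = rng.uniform(0.0, 2.0 * np.pi)
    r3 = modulus * np.exp(1j * angle)
    r = np.array([r1, r2, r3, np.conj(r3)])
    # np.poly gives z^4 + c1 z^3 + ... + c4 with zeros r_i, and
    # prod(1 - r_i z) = 1 + c1 z + ... + c4 z^4, hence phi_j = -c_j.
    c = np.poly(r)
    phi = -np.real(c[1:])
    return phi, r

phi, r = random_causal_ar4(np.random.default_rng(0))
```

Because \(r_4\) is the conjugate of \(r_3\), the resulting coefficients are real up to rounding error, which is why only the real part is kept.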

Our next simulation returns to the AR(1) setting and conducts a sensitivity analysis to mean shift sizes. Here, estimator accuracy is more greatly influenced by the magnitude of the mean shifts than changepoint locations. We take all mean shifts to have the same size \(\Delta\) and introduce the signal-to-noise ratio (SNR), defined as the absolute mean shift magnitude over the marginal series standard deviation of \(X_t\):

For simplicity, \(\sigma ^2\) is set to unity. The number of changepoints is fixed at \(m=9\) and their locations are randomly generated over \(\{ 2, \ldots , N \}\) with \(N=1000\). In each run, the true \(\phi\) is simulated from the \(\text {Uniform}(-0.95, 0.95)\) distribution. The nine mean shifts alternate signs, with \(|\Delta |\) varied in [0,5]. Boxplots of the difference between the estimated \(\hat{\phi }\) and the true \(\phi\) are presented in Fig. 4.

The horizontal line in Fig. 4 marks zero bias in \(\hat{\phi }\); the solid curve depicts the average differences between \(\hat{\phi }\) and \(\phi\). Obviously, the larger the mean shift magnitude, the more our estimator degrades. This said, in practice, larger mean shift sizes can usually be identified as outliers in the differenced series (Chen and Liu 1993; McQuarrie and Tsai 2003) or can easily be identified in the original series, despite the AR contamination. As such, the essential challenge lies with estimating the AR(p) parameters in the presence of smaller mean shifts.

Two more simulations are included. Our first simulation shows how AR(p) processes can approximate MA(q) errors in changepoint problems. The specifications of the series and changepoints are the same as the first AR(1) case of this section, but the model errors obey the MA(1) model

\[\epsilon _t = Z_t + \theta Z_{t-1}\]

with \(\theta = 0.5\). The plot in Fig. 5 shows the autocorrelation function of our fitted AR(10) process from one simulation run only. Notice that the fitted AR(10) autocovariance is essentially zero at most lags that exceed unity, indicating the overall quality of the AR(10) approximation (an MA(1) model is characterized by an autocovariance that is non-zero only at lag 1).
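For reference, the target autocorrelation function that the fitted AR(10) model is approximating can be written down directly. Assuming the common sign convention \(\epsilon _t = Z_t + \theta Z_{t-1}\) (the opposite convention flips the sign of the lag one value), with \(\theta = 0.5\),

\[\rho _\epsilon (1) = \frac{\theta }{1+\theta ^2} = \frac{0.5}{1.25} = 0.4, \qquad \rho _\epsilon (h) = 0 \ \text{ for } h \ge 2.\]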

Our final simulation considers order selection of p for AR errors by adding the Bayesian Information Criterion (BIC) penalty \((p+1)\ln (N)\) to minus two times the log likelihood of the model. The mean shifts in \(\{ X_t \}\) “contaminate” the likelihood for \(\{ X_t \}\) away from a likelihood for an AR series with a fixed (constant) mean. Our remedy here is to demean \(\{ X_t \}\) before estimating p. While other methods of order estimation are possible, this procedure worked the best amongst several that were experimented with. More specifically, the AR(p) coefficients are first estimated via \(\{ d_t \}\) for each candidate AR order \(p \in \{ 1, 2, \ldots , p_\mathrm{{max}} \}\), where \(p_\mathrm{{max}}\) is some preset maximum AR order to consider. Then, one-step-ahead prediction residuals are computed and the changepoint configuration is estimated by some changepoint technique. The pruned exact linear time (PELT) algorithm of Killick et al. (2012) is used here. The estimated changepoint configuration is then used to demean \(\{ X_t \}\). The likelihood and BIC scores are then calculated from the demeaned series for each order p and the order with the smallest penalized likelihood BIC score is selected.

In our simulation, \(N=1000\), nine equally-spaced mean shifts of size 2.5 corrupt the series, and the errors are generated from a causal AR(4) process with coefficients \(\varvec{\phi }=(0.3, -0.3, -0.2, -0.1)\). The estimated AR order for 1,000 simulations is plotted in the Fig. 6 histogram. While BIC selects \(p=4\) the majority of the time, it is also prone to overestimation, selecting the order 5 in more than 20% of the runs. AR order overestimation by BIC is well known even in changepoint-free settings (Brockwell and Davis 1991).

6 Applications

6.1 Changepoints in AR(p) Series

As previously discussed, most changepoint techniques mistakenly flag changepoints when underlying positive dependence is ignored. For example, Lund and Shi (2020) argue that shifts identified in the London house price series of Fryzlewicz (2020) may be more attributable to the positive correlations in the series than to actual mean shifts. CUSUM based techniques are known to degrade with positive correlation (Shi et al. 2022). To remedy this, authors recommend detecting changepoints from estimated versions of the one-step-ahead prediction residuals of the series (Bai 1993; Robbins et al. 2011). This requires estimation of the autocovariance structure of the series in the presence of the unknown changepoints. As such, a major application of our methods serves to decorrelate (pre-whiten) series without any prior knowledge of the changepoint configuration of the series. IID-based changepoint techniques, applied to the estimated one-step-ahead prediction residuals, can then be used to estimate any mean shifts in the series. The Yule–Walker difference estimator proposed here is extensively used in Shi et al. (2022) to do just this. In addition, our difference estimator supplies a long-run variance estimate needed in the changepoint methods in Eichinger and Kirch (2018), Romano et al. (2021), and Dette et al. (2020).
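The pre-whitening step can be sketched as follows. This is a minimal illustration that assumes AR coefficients have already been estimated; `one_step_residuals` is a hypothetical helper name, and the first p observations are simply dropped rather than predicted from shorter histories.

```python
import numpy as np

def one_step_residuals(x, phi):
    """Residuals x_t - sum_j phi_j * x_{t-j} for t = p+1, ..., N (first p values dropped)."""
    x = np.asarray(x, dtype=float)
    phi = np.atleast_1d(np.asarray(phi, dtype=float))
    p, n = phi.size, x.size
    pred = np.zeros(n - p)
    for j in range(1, p + 1):
        # x[p - j : n - j] holds the lag-j values aligned with x[p:], ..., x[n-1]
        pred += phi[j - 1] * x[p - j:n - j]
    return x[p:] - pred
```

Within a regime whose mean is \(\mu\), these residuals have mean \((1-\sum _{j=1}^p \phi _j)\mu\), so the mean shifts survive the filtering (rescaled) while the autocorrelation is removed; an IID-based changepoint method can then be applied to the output.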

Table 1 demonstrates the improved performance of two popular multiple changepoint methods, wild binary segmentation (WBS; Fryzlewicz 2014) and PELT (Killick et al. 2012). In each run, an AR(1) series of length \(N=500\) is simulated with \(\phi\) fixed within \(\{ 0.25, 0.50, 0.75 \}\), and \(\sigma ^2=1\). The series has either no changepoints or three equally spaced changepoints; all mean shift sizes are the same, are denoted by \(\Delta\), and are chosen to induce the constant signal-to-noise requirement of \(\text {SNR}=2\) in (17). All simulations are aggregated from 1,000 independent runs. In Table 1, \(\overline{\hat{m}}\) and \(SE_{\hat{m}}\) denote the average and standard error of the estimated number of changepoints when WBS and PELT are directly applied to the series. The quantities \(\overline{\hat{m}^d}\) and \(SE_{\hat{m}^d}\) denote the average and standard error of the estimated number of changepoints from the one-step-ahead prediction residuals after fitting an AR(1) series to the differences by our methods.

It is apparent that IID based WBS and PELT methods overestimate the number of changepoints in a dependent series when positive correlation is ignored; PELT appears to be more resistant to dependence issues than WBS. In contrast, with the help of the proposed Yule–Walker difference estimator and decorrelation techniques, both WBS and PELT become much more accurate.

6.2 New Bedford precipitation

Annual precipitations from New Bedford and Boston, Massachusetts are studied in Li and Lund (2012). The data are available from https://w2.weather.gov/climate/xmacis.php?wfo=box. The ratio of these series (New Bedford to Boston) is displayed in Fig. 7, along with a fitted mean of a model that allows for both multiple changepoints and AR errors. Three documented changepoints, occurring at the years 1886, 1917, and 1967, are indicated. After adjusting for four regime means, Fig. 8 shows the sample ACF plot of the precipitation ratio series, suggesting that the series is correlated. The Bayesian Information Criterion estimates \(p=1\) as the AR order. Although this order does not seem to adequately describe all non-zero autocorrelations, we use it anyway to illustrate our points.

The AR(1) parameter estimates vary widely across methods. Specifically, our difference Yule–Walker estimator and the BIP \(\tau\)-estimator produce antipodal estimates, as can be seen in Table 2. Our estimate agrees closely with an estimate computed by assuming that the three changepoint times are known, but the level of autocorrelation is significantly less than that estimated by a Yule–Walker scheme that ignores all three changepoint times. The results show that one needs to be careful in changepoint problems with correlated data: mean shifts and correlation can inject similar features into time series.
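The contrast between ignoring the changepoints and adjusting for known changepoint times can be illustrated on synthetic data. The sketch below does not use the New Bedford series and does not reproduce Table 2; the regime levels, the value of \(\phi\), and all function names are assumptions chosen for illustration. For an AR(1) model, the Yule–Walker estimate is the lag-one sample autocorrelation, computed here either on the raw series or after subtracting each regime's sample mean.

```python
import numpy as np


def lag1_autocorr(z):
    """Lag-one sample autocorrelation (the AR(1) Yule-Walker estimate)."""
    z = z - np.mean(z)
    return np.sum(z[1:] * z[:-1]) / np.sum(z * z)


def yw_ignoring_changepoints(x):
    """Yule-Walker AR(1) estimate that treats the mean as constant."""
    return lag1_autocorr(np.asarray(x, dtype=float))


def yw_known_changepoints(x, changepoints):
    """Yule-Walker AR(1) estimate after removing each regime's sample mean.

    changepoints holds the indices at which a new regime starts.
    """
    adjusted = np.asarray(x, dtype=float).copy()
    bounds = [0, *changepoints, len(adjusted)]
    for start, stop in zip(bounds[:-1], bounds[1:]):
        adjusted[start:stop] -= adjusted[start:stop].mean()
    return lag1_autocorr(adjusted)


def synthetic_series(n, phi, levels, seed=0):
    """Hypothetical test series: AR(1) errors plus equally spaced regime levels."""
    rng = np.random.default_rng(seed)
    errors = np.zeros(n)
    for t in range(1, n):
        errors[t] = phi * errors[t - 1] + rng.normal()
    changepoints = [k * n // len(levels) for k in range(1, len(levels))]
    mean = np.repeat(levels, np.diff([0, *changepoints, n]))
    return mean + errors, changepoints


if __name__ == "__main__":
    x, cps = synthetic_series(n=2000, phi=0.3, levels=[0.0, 2.0, 0.5, 2.5])
    print("ignoring changepoints:", round(yw_ignoring_changepoints(x), 3))
    print("known changepoints:   ", round(yw_known_changepoints(x, cps), 3))
```

In this synthetic setting the estimate that ignores the shifts is pulled upward, because unmodelled mean shifts resemble positive autocorrelation.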

7 Conclusions

Differencing methods can effectively be used to estimate the autocovariance structure of an AR(p) series corrupted by mean shift changepoints. Our Yule–Walker estimator for autoregressive models is easy to implement, computationally fast, consistent, and asymptotically normal. While the proposed estimator is adversely impacted by large mean shifts, large shifts appear as large outliers in the differenced series and can be removed. When changepoints are present, the difference methods developed here significantly improve changepoint techniques developed for IID errors. The techniques are also applicable if the series has a linear trend (constant across all regimes) with intercept shifts.
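A short derivation indicates why a linear trend that is constant across regimes does not interfere with differencing. The notation is assumed here for illustration and may differ from the symbols used earlier in the article: \(\mu _t\) is the piecewise-constant regime mean, \(\beta\) is the trend slope, and \(\epsilon _t\) is the stationary AR(p) error.

\[
X_t = \mu _t + \beta t + \epsilon _t, \qquad d_t = X_t - X_{t-1} = (\mu _t - \mu _{t-1}) + \beta + (\epsilon _t - \epsilon _{t-1}).
\]

The term \(\mu _t - \mu _{t-1}\) is zero except at the m changepoint times, so \(d_t\) has constant mean \(\beta\) elsewhere. Centering the differences at their sample mean therefore removes the trend and leaves the same differenced error process as in the trend-free case.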

References

Bai, J. (1993). On the partial sums of residuals in autoregressive and moving average models. Journal of Time Series Analysis, 14(3), 247–260.

Beaulieu, C., & Killick, R. (2018). Distinguishing trends and shifts from memory in climate data. Journal of Climate, 31, 9519–9543.

Brockwell, P. J., & Davis, R. A. (1991). Time series: Theory and methods (2nd ed.). New York City: Springer.

Chakar, S., Lebarbier, E., Lévy-Leduc, C., & Robin, S. (2017). A robust approach for estimating change-points in the mean of an \(\text{AR}(1)\) process. Bernoulli, 23(2), 1408–1447.

Chakravarthy, N., Spanias, A., Iasemidis, L. D., & Tsakalis, K. (2004). Autoregressive modeling and feature analysis of DNA sequences. EURASIP Journal on Advances in Signal Processing, 2004(1), 1–16.

Chan, K. W. (2022). Mean-structure and autocorrelation consistent covariance matrix estimation. Journal of Business & Economic Statistics, 40(1), 201–215. https://doi.org/10.1080/07350015.2020.1796397.

Chen, C., & Liu, L.-M. (1993). Joint estimation of model parameters and outlier effects in time series. Journal of the American Statistical Association, 88(421), 284–297.

Chen, L., Wang, W., & Wu, W. B. (2021). Inference of breakpoints in high-dimensional time series. Journal of the American Statistical Association (just-accepted), 1–33.

Dette, H., Eckle, T., & Vetter, M. (2020). Multiscale change point detection for dependent data. Scandinavian Journal of Statistics, 47(4), 1243–1274.

Eichinger, B., & Kirch, C. (2018). A MOSUM procedure for the estimation of multiple random change points. Bernoulli, 24(1), 526–564.

Frick, K., Munk, A., & Sieling, H. (2014). Multiscale change point inference. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 76(3), 495–580.

Fryzlewicz, P. (2014). Wild binary segmentation for multiple change-point detection. The Annals of Statistics, 42(6), 2243–2281.

Fryzlewicz, P. (2020). Detecting possibly frequent change-points: Wild binary segmentation 2 and steepest-drop model selection. Journal of the Korean Statistical Society, 49, 1027–1070.

Hall, C. B., Lipton, R. B., Sliwinski, M., & Stewart, W. F. (2000). A change point model for estimating the onset of cognitive decline in preclinical Alzheimer’s disease. Statistics in Medicine, 19(11–12), 1555–1566.

Hewaarachchi, A. P., Li, Y., Lund, R., & Rennie, J. (2017). Homogenization of daily temperature data. Journal of Climate, 30(3), 985–999.

Jackson, B., Scargle, J. D., Barnes, D., Arabhi, S., Alt, A., Gioumousis, P., et al. (2005). An algorithm for optimal partitioning of data on an interval. IEEE Signal Processing Letters, 12(2), 105–108.

Killick, R., Fearnhead, P., & Eckley, I. A. (2012). Optimal detection of changepoints with a linear computational cost. Journal of the American Statistical Association, 107(500), 1590–1598.

Li, S., & Lund, R. (2012). Multiple changepoint detection via genetic algorithms. Journal of Climate, 25(2), 674–686.

Lund, R., & Shi, X. (2020). Commentary on: Detecting possibly frequent change-points: Wild binary segmentation 2 and steepest-drop model selection. Journal of the Korean Statistical Society, 49, 1090–1095.

Maidstone, R., Hocking, T., Rigaill, G., & Fearnhead, P. (2017). On optimal multiple changepoint algorithms for large data. Statistics and Computing, 27(2), 519–533.

McQuarrie, A. D., & Tsai, C.-L. (2003). Outlier detections in autoregressive models. Journal of Computational and Graphical Statistics, 12(2), 450–471.

Muler, N., Pena, D., & Yohai, V. J. (2009). Robust estimation for ARMA models. The Annals of Statistics, 37(2), 816–840.

Muma, M., & Zoubir, A. M. (2017). Bounded Influence Propagation \(\tau\)-Estimation: A New Robust Method for ARMA Model Estimation. IEEE Transactions on Signal Processing, 65(7), 1712–1727.

Norwood, B., & Killick, R. (2018). Long memory and changepoint models: A spectral classification procedure. Statistics and Computing, 28(2), 291–302.

Page, E. S. (1954). Continuous inspection schemes. Biometrika, 41(1–2), 100–115.

Robbins, M., Gallagher, C., Lund, R., & Aue, A. (2011). Mean shift testing in correlated data. Journal of Time Series Analysis, 32(5), 498–511.

Romano, G., Rigaill, G., Runge, V., & Fearnhead, P. (2021). Detecting abrupt changes in the presence of local fluctuations and autocorrelated noise. Journal of the American Statistical Association (to appear).

Shi, X., Gallagher, C., Lund, R., & Killick, R. (2022). A comparison of single and multiple changepoint techniques for time series data. Computational Statistics and Data Analysis, 170. https://doi.org/10.1016/j.csda.2022.107433.

Tecuapetla-Gómez, I., & Munk, A. (2017). Autocovariance estimation in regression with a discontinuous signal and M-dependent errors: A difference-based approach. Scandinavian Journal of Statistics, 44(2), 346–368.

Acknowledgements

Rebecca Killick gratefully acknowledges funding from Grants EP-R01860X-1, EP-T014105-1, NE-T012307-1, and NE-T006102-1. Robert Lund and Xueheng Shi acknowledge support from grant NSF DMS 2113592. Comments from two referees and the Associate Editor greatly improved this manuscript.

Colin Gallagher, Rebecca Killick, Robert Lund and Xueheng Shi contributed equally to this work.

Cite this article

Gallagher, C., Killick, R., Lund, R. et al. Autocovariance estimation in the presence of changepoints. J. Korean Stat. Soc. 51, 1021–1040 (2022). https://doi.org/10.1007/s42952-022-00173-5

DOI: https://doi.org/10.1007/s42952-022-00173-5