Abstract

In this paper, we propose a stochastic approximation to the well-studied expectation–maximization (EM) algorithm for finding the maximum likelihood (ML)-type estimates in situations where missing data arise naturally and a proportion of individuals are immune to the event of interest. A flexible family of three-parameter exponentiated Weibull (EW) distributions is assumed to characterize lifetimes of the non-immune individuals as it accommodates both monotone (increasing and decreasing) and non-monotone (unimodal and bathtub) hazard functions. To evaluate the performance of the proposed algorithm, an extensive simulation study is carried out under various parameter settings. Using likelihood ratio tests, we also carry out model discrimination within the EW family of distributions. Furthermore, we study the robustness of the proposed algorithm with respect to outliers in the data and the choice of initial values to start the algorithm. In particular, we show that our proposed algorithm is less sensitive to the choice of initial values when compared to the EM algorithm. For illustration, we analyze a real survival data set on cutaneous melanoma. Through these data, we illustrate the applicability of the likelihood ratio test toward rejecting several well-known lifetime distributions that are nested within the wider class of the proposed EW distributions.

1 Introduction

Immune or cured individuals in the context of survival analysis refer to subjects who would not encounter the event of interest under study, e.g., death due to a disease or relapse of a condition or getting admitted to a hospital. Consequently, the observed lifetimes for the immune individuals would always concur with the length of the study. Hence, the immune individuals would be indiscernible from the censored yet non-immune or susceptible individuals. Ordinary survival analysis techniques ignore the presence of the fraction of individuals who are cured, commonly known as the cure fraction or cure rate. Therefore, several modified modeling techniques (known as cure rate models) to analyze time-to-event data marked by the presence of a cure fraction have been studied over the years (see [5, 10, 43, 46, 54]). Cure rate models have been applied extensively on cancer survival data for cancers with relatively better prognosis (e.g., melanoma, breast cancer, leukemia, and prostate cancer), recidivism studies, engineering reliability, and defaulting on a loan in credit risk assessment studies.

The mixture cure rate model, also called the Bernoulli cure rate model, is probably the most widely used cure rate model; see the recently published monograph by Peng and Yu [52]. Under the mixture cure rate model, the overall population lifetime Y is defined as

$$\begin{aligned} Y = \eta Y_s + (1-\eta ) Y_c, \end{aligned}$$(1)

where \(Y_s\) denotes the survival time for any susceptible individual, \(Y_c = \infty \) denotes the survival time for any cured individual and \(\eta \) is a random variable taking the value 1 or 0 depending on whether an individual is susceptible or immune, respectively. The model in (1) can be further represented by

$$\begin{aligned} S_p(y) = \pi _0 + (1-\pi _0) S_s(y), \end{aligned}$$(2)

where \(S_p(.)\) and \(S_s(.)\) are the respective survival functions corresponding to Y and \(Y_s\), and \(\pi _0=P(\eta =0)\) is the cure rate. Interestingly, Meeker [33] used the mixture cure model to study reliability of integrated circuits but called it the limited failure population model. The mixture cure rate model has been explored in detail with various assumptions and extensions. For nonparametric approaches to cure rate estimation, interested readers may refer to López-Cheda et al. [29] and Amico et al. [1]. Furthermore, for new approaches to mixture cure rate parameter estimation, one may refer to the recent works of Musta et al. [36] and Patilea and Van Keilegom [50].

An alternative representation of the cure rate model, namely, the promotion time cure rate model was suggested by Yakovlev et al. [60] and was later investigated by Chen et al. [19]. In a promotion time cure rate model, the case of 0 or 1 risks (causes) is extended to a more general case. In particular, a random variable M is introduced to denote the number of competing causes and, in this particular case, it is distributed as Poisson. Furthermore, letting \(Y_j, j=1, \dots , M\), denote the promotion time or survival time corresponding to the j-th cause, the overall population survival function \(S_p(.)\) can be expressed as:

$$\begin{aligned} S_p(y) = P(Y>y) = \sum _{m=0}^{\infty } P(M=m) \{S(y)\}^m = {{\tilde{g}}}(S(y)), \end{aligned}$$(3)

where, given \(M=m\), \(Y_j, j=1, \dots , m\), are independently and identically distributed with a common survival function S(.), and \({{\tilde{g}}}(.)\) is the probability generating function of M. Note that, in (3), M is unobserved, \(Y_j, j=1, \dots , M,\) are independent of M, and \(Y=\min \{Y_0, Y_1, \dots , Y_M\}\) is the actual lifetime of an individual with \(P(Y_0=\infty )=1\). In cancer studies, competing causes may refer to the tumor cells that can potentially metastasize and cause detectable cancer. Several authors [19] have assumed M to follow a Poisson distribution, whereas [2, 5, 7, 31, 44, 47, 54] have modeled M by a flexible Conway–Maxwell (COM) Poisson distribution. When M is assumed to follow a Poisson distribution with mean \(\theta \), \(S_p(y)\) in (3) reduces to \(S_p(y) = e^{-\theta F(y)}\) and the cure rate is given by \(\pi _0=e^{-\theta }\), where \(F(\cdot )=1-S(\cdot )\).
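The Poisson reduction stated above follows in one line from the probability generating function. As a short worked step, using only the quantities already defined (M Poisson with mean \(\theta \), common survival function S and \(F=1-S\)):

$$\begin{aligned} {{\tilde{g}}}(s)&= E\left( s^M\right) = \sum _{m=0}^{\infty } s^m \frac{e^{-\theta }\theta ^m}{m!} = e^{-\theta (1-s)}, \\ S_p(y)&= {{\tilde{g}}}(S(y)) = e^{-\theta \{1-S(y)\}} = e^{-\theta F(y)}, \qquad \pi _0 = \lim _{y \rightarrow \infty } S_p(y) = e^{-\theta }. \end{aligned}$$

The limit uses \(F(y) \rightarrow 1\) as \(y \rightarrow \infty \), i.e., the lifetime distribution attached to each competing cause is proper.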

The survival function \(S_s(y)\) in (2) or S(y) in (3) for any susceptible individual could be modeled and estimated by both parametric and nonparametric methods. From the statistical literature, positive valued continuous distributions like Weibull, gamma, generalized gamma and log-normal distributions have been applied to model \(Y_s\) or \(Y_j\) (see [6, 8, 9]). Semi-parametric generalizations to the model by assuming a proportional hazards structure for \(Y_s\) or \(Y_j\) have been discussed by [19, 51, 55], whereas a class of semi-parametric transformation models has been studied by [30, 62, 63], among others. Applications of piecewise constant and linear functions to estimate the baseline hazard function under the proportional hazards model were discussed by [4, 62].

Missing data arise in many forms. While it is common, especially in the modeling of lifetimes, to face missing covariates, censoring can give rise to other forms of missing information. In the analysis of data with cure fraction, the incompleteness in the data is twofold. Firstly, if censored, the information on the actual survival time of an individual is missing. Secondly, the information on the cured status is also missing for all individuals whose lifetimes are censored. Therefore, parameter estimation may be challenging for the cure rate models. Several methods of estimating the model parameters as well as the baseline hazard or survival functions have been implemented, including ordinary maximum likelihood (ML) estimation, Monte Carlo approximation of a marginal likelihood, profile likelihood, restricted nonparametric ML estimation [57], unbiased estimating equations [11, 30] and projected nonlinear conjugate gradient technique-based estimation [48, 49]. The expectation–maximization (EM) algorithm is also a commonly used estimation algorithm in the context of cure rate models. Taylor [56] developed an EM algorithm for the mixture cure model where a Kaplan–Meier-type approach was used to model the latency part of the model. Sy and Taylor [55] developed an EM algorithm for joint estimation of the incidence and latency regression parameters in the mixture cure model using the nonparametric form of the likelihood. Kuk and Chen [28] developed an EM algorithm to estimate the baseline survival function of their proposed semi-parametric mixture cure model and proposed to estimate the regression parameters by maximizing a Monte Carlo approximation of the marginal likelihood function. Peng and Dear [51] developed an EM algorithm for a nonparametric mixture cure model where they estimated the baseline survival using a Breslow-type estimator. Balakrishnan and Pal [5] first developed an EM algorithm for the Conway–Maxwell Poisson cure model that includes the mixture cure model as a special case; see also [3, 6, 8, 10, 27]. Very recently, Davies et al. [20] have introduced a stochastic version of the EM algorithm, called the stochastic EM algorithm (SEM), in the context of cure rate models where \(Y_j\) is modeled by a generalized exponential distribution for every \(j=1, \dots , M\). Interested readers may also refer to Pal [40] for a computationally efficient SEM algorithm developed in the context of the cure rate model with negative binomial competing risks.

For the mixture cure model, \(M=0\) or 1, and \(Y=\min \{Y_0, Y_1\}\). In this manuscript, our main contribution is the development of an SEM algorithm to find the estimates of the parameters of the mixture (Bernoulli) cure rate model. In this regard, we propose to model the lifetime \(Y_1\) by the flexible family of exponentiated Weibull (EW) distributions, which has not been studied before in the context of cure rate models. Introduced by Celeux and Diebolt [16], the SEM algorithm was designed to estimate parameters precisely in cases where the log-likelihood function has multiple stationary points and the EM algorithm does not guarantee convergence to the significant local maxima. Unlike the EM algorithm, the SEM technique is less sensitive to the initial parameter choices, and the implementation is less cumbersome since it does not involve derivation of explicit expected values [15, 18]. In fact, we show in this paper that our proposed SEM algorithm is more robust to the choice of initial values when compared to the EM algorithm. This is the main motivation behind the development of the SEM algorithm.

The probability density function (pdf) of \(Y_1\), under the assumption of EW distribution, is expressed as:

$$\begin{aligned} f(y_1; \alpha , k, \lambda ) = \frac{\alpha k}{\lambda } \left( \frac{y_1}{\lambda }\right) ^{k-1} e^{-(y_1/\lambda )^k} \left\{ 1-e^{-(y_1/\lambda )^k}\right\} ^{\alpha -1}, \end{aligned}$$(4)

where \(y_1>0\) is the support of the distribution, \(\alpha >0\) and \(k >0\) are the shape parameters, and \(\lambda >0\) denotes the scale parameter characterizing the distribution. The EW distribution was introduced by Mudholkar and Srivastava [35] as an extension of the Weibull distribution obtained by adding a second shape parameter to the model. As pointed out by Mudholkar and Hutson [34] and Khan [26], modeling failure times by an EW distribution is parsimonious as it accommodates both monotone increasing (\(k\alpha \ge 1, k\ge 1\)) or decreasing (\(k \alpha \le 1, k \le 1\)), and non-monotone unimodal (\(k\alpha >1, k<1\)) or bathtub shaped (\(k\alpha <1, k>1\)) hazard functions. Moreover, EW encompasses many well-known lifetime distributions as special cases, e.g., exponential (\(\alpha =k=1\)), Rayleigh (\(\alpha =1, k=2\)), Weibull (\(\alpha =1\)), generalized or exponentiated exponential (\(k=1\)), and Burr type X (\(k=2\)) distributions. As a result, one can carry out hypothesis tests and model discrimination to validate if the sub-models fit better. Furthermore, EW serves as an alternative to the generalized gamma distribution, which is known to accommodate both monotone and non-monotone hazard functions.

The remainder of this manuscript is arranged in the following manner. We provide our model descriptions for the mixture cure model and basic properties of the EW distribution in Sect. 2. Section 3 deals with the structure of the observed data and development of the likelihood function. In Sect. 4, we discuss the implementation of the SEM algorithm for estimating the model parameters and their standard errors. An extensive simulation study with carefully chosen parameter settings is carried out in Sect. 5 to examine the robustness and accuracy of the proposed estimation technique. A model discrimination using likelihood-based criterion is performed to assess the flexibility of the EW distribution and the performance of the likelihood ratio test to correctly identify the true distribution. In Sect. 6, the flexibility of the proposed model and the performance of the estimation method are further substantiated based on real-life data collected from a malignant melanoma study. Finally, we provide some concluding remarks and scope of future research in Sect. 7.

2 Model Descriptions

2.1 Exponentiated Weibull Lifetime Distribution

We assume the lifetime of the susceptible individuals to follow an EW distribution. Hence, the cumulative distribution function (cdf), survival function and hazard function of the susceptible lifetime \(Y_1\) have the following forms:

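$$\begin{aligned} F(y_1; \alpha , k, \lambda ) = \left\{ 1-e^{-(y_1/\lambda )^k}\right\} ^{\alpha }, \end{aligned}$$(5)

$$\begin{aligned} S(y_1; \alpha , k, \lambda ) = 1-\left\{ 1-e^{-(y_1/\lambda )^k}\right\} ^{\alpha } \end{aligned}$$(6)

and

$$\begin{aligned} h(y_1; \alpha , k, \lambda ) = \frac{\frac{\alpha k}{\lambda } \left( \frac{y_1}{\lambda }\right) ^{k-1} e^{-(y_1/\lambda )^k} \left\{ 1-e^{-(y_1/\lambda )^k}\right\} ^{\alpha -1}}{1-\left\{ 1-e^{-(y_1/\lambda )^k}\right\} ^{\alpha }}, \end{aligned}$$(7)

respectively, where \(y_1>0\), \(\alpha>0, k>0\) and \(\lambda >0\). One interesting interpretation of the EW distribution is in the area of reliability. If there are n components in a parallel system and the lifetimes of the components are independently and identically distributed as EW, then the system lifetime also follows an EW distribution. As pointed out by Nadarajah et al. [37], EW finds applications in a wide variety of problems, e.g., modeling extreme value data on water discharge arising due to river floods, data on optimal accelerated life test plans under type I censoring, firmware system failures, software release times, fracture toughness of materials, bus motor failures and number of ozone peaks, among others. From Mudholkar and Srivastava [35] and Mudholkar and Hutson [34], we note that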

(a) If \(\alpha =k=1\), the hazard rate is constant;

(b) If \(\alpha =1\), the hazard rate is increasing for \(k>1\) and decreasing for \(k<1\);

(c) If \(k=1\), the hazard rate is increasing for \(\alpha >1\) and decreasing for \(\alpha <1\).

Additionally, the combinations of the two shape parameters, as presented in Table 1, render various shapes to the hazard function.
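The hazard shapes listed above can be checked numerically. The following is a minimal sketch, not the authors' code: the function names (`log1mexp`, `ew_cdf`, `ew_survival`, `ew_pdf`, `ew_hazard`, `hazard_shape`), the evaluation grid and the tolerance are all illustrative assumptions. It implements the EW cdf \(\{1-e^{-(y/\lambda )^k}\}^{\alpha }\), the corresponding survival, density and hazard functions, and labels the hazard by its behavior on a finite grid only.

```python
import math


def log1mexp(z):
    """Return log(1 - exp(-z)) for z > 0, choosing the stable branch."""
    if z <= math.log(2.0):
        return math.log(-math.expm1(-z))
    return math.log1p(-math.exp(-z))


def ew_cdf(y, alpha, k, lam):
    """EW cdf: F(y) = [1 - exp(-(y/lam)^k)]^alpha, y > 0."""
    return math.exp(alpha * log1mexp((y / lam) ** k))


def ew_survival(y, alpha, k, lam):
    """EW survival function S(y) = 1 - F(y)."""
    return -math.expm1(alpha * log1mexp((y / lam) ** k))


def ew_pdf(y, alpha, k, lam):
    """EW density with shape parameters alpha, k and scale lam."""
    z = (y / lam) ** k
    return ((alpha * k / lam) * (y / lam) ** (k - 1) * math.exp(-z)
            * (-math.expm1(-z)) ** (alpha - 1))


def ew_hazard(y, alpha, k, lam):
    """EW hazard h(y) = f(y) / S(y)."""
    return ew_pdf(y, alpha, k, lam) / ew_survival(y, alpha, k, lam)


def hazard_shape(alpha, k, lam=1.0, tol=1e-9):
    """Label the hazard on the grid 0.05, 0.10, ..., 5.00 (numerical check only)."""
    grid = [0.05 * i for i in range(1, 101)]
    h = [ew_hazard(y, alpha, k, lam) for y in grid]
    diffs = [b - a for a, b in zip(h, h[1:])]
    if all(abs(d) < tol for d in diffs):
        return "constant"
    if all(d > -tol for d in diffs):
        return "increasing"
    if all(d < tol for d in diffs):
        return "decreasing"
    return "non-monotone"


if __name__ == "__main__":
    for a, kk in [(1.0, 1.0), (1.0, 2.0), (1.0, 0.5), (4.0, 0.5)]:
        print(a, kk, hazard_shape(a, kk))
```

A grid check of this kind cannot distinguish unimodal from bathtub shapes or certify monotonicity outside the grid; it is only meant as a quick sanity check on a chosen pair \((\alpha , k)\).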

The general expression for the q-th-order raw moment for a random variable \(Y_1\) following the EW distribution has been derived by [39], which is given by

where N denotes the set of natural numbers.

2.2 Mixture (Bernoulli) Cure Rate Model

On assuming the number of competing causes M to follow a Bernoulli distribution, i.e., there is either a single cause that can result in an event of interest or there is no cause resulting in a cure, the probability mass function (pmf) of M can be expressed as:

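$$\begin{aligned} p(m; \nu ) = P(M=m) = \left( \frac{\nu }{1+\nu }\right) ^{m} \left( \frac{1}{1+\nu }\right) ^{1-m}, \ \ m=0, 1, \end{aligned}$$(9)

where \(\nu >0\). The survival function of the random variable \(Y=\min \{Y_0,Y_1\}\), also referred to as the population survival function, can be obtained by combining (3) and (6) and is given by

$$\begin{aligned} S_p(y) = \frac{1+\nu S(y)}{1+\nu } = \frac{1+\nu \left[ 1-\left\{ 1-e^{-(y/\lambda )^k}\right\} ^{\alpha }\right] }{1+\nu }. \end{aligned}$$(10)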

Further, note that

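$$\begin{aligned} \pi _0 = \underset{y \rightarrow \infty }{{\lim }} S_p(y) = \frac{1}{1+\nu } \end{aligned}$$(11)

is the cure rate or cure probability of any individual in the population. Hence, the population density function can be derived from (10) as:

$$\begin{aligned} f_p(y) = -\frac{d S_p(y)}{dy} = \frac{\nu }{1+\nu } \, \frac{\alpha k}{\lambda } \left( \frac{y}{\lambda }\right) ^{k-1} e^{-(y/\lambda )^k} \left\{ 1-e^{-(y/\lambda )^k}\right\} ^{\alpha -1}. \end{aligned}$$(12)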

3 Form of the Data and Likelihood Function

The right censoring scheme is considered in our study. For \(i=1, \dots , n\) with n denoting the sample size, let \(Y_i\) and \(C_i\), respectively, denote the actual survival time and the censoring time for individual i. Let \(\delta _i=I(Y_i \le C_i)\) be the censoring indicator and \(T_i=\min \{Y_i, C_i\}\) be the observed lifetime for the i-th individual. Therefore, the observed survival data are represented in the form of triplets denoted by \(\{(t_i, \delta _i, {\varvec{x}}^*_i): i=1, \dots , n\}\), where \(t_i\) is a realization of \(T_i\) and \({\varvec{x}}_i^{*}=(x_{1i}, \dots , x_{di})^{\tiny \mathrm T} \in {\mathbb {R}}^d\) is the d-dimensional covariate vector specific to the i-th subject. Let \({\varvec{x}}_i=\left( 1, {\varvec{x}}_i^{*\tiny \mathrm T}\right) ^{\tiny \mathrm T} \in {\mathbb {R}}^{d+1}\). We further denote \({\varvec{X}}=\left( {\varvec{x}}_1, \dots , {\varvec{x}}_n \right) ^{\tiny \mathrm T} \in {\mathbb {R}}^{n \times (d+1)}\), \({\varvec{\delta }}=(\delta _1, \dots , \delta _n)^{\tiny \mathrm T}\) and \({\varvec{t}}=(t_1, \dots , t_n)^{\tiny \mathrm T} \in {\mathbb {R}}^{n}_{>0}\). In order to associate the effect of covariates to the cure rate for every \(i=1, \dots , n\), we use the log-linear function \(\nu _i=e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}}\) to link the parameter \(\nu >0\) with the covariate vector \({\varvec{x}}_i\), where \({\varvec{\beta }}=(\beta _0, \beta _1, \dots , \beta _d)^{\tiny \mathrm T}\) is the respective \((d+1)\)-dimensional vector of regression parameters. Note that this readily implies that the cure rate is linked to the covariates through a logistic function, i.e., we have

$$\begin{aligned} \pi _0({\varvec{x}}^*_i; {\varvec{\beta }}) = \frac{1}{1+\nu _i} = \frac{1}{1+e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}}}, \ \ i=1, \dots , n. \end{aligned}$$(13)

We define \({\varvec{\theta }}=\left( {\varvec{\beta }}^{\tiny \mathrm T}, \alpha , k, \lambda \right) ^{\tiny \mathrm T} \in {\varvec{\Theta }}\subset {\mathbb {R}}^{d+4}\) as the unknown parameter vector and \({\varvec{\Theta }}\) as the parameter space. Therefore, the likelihood function \(L_O({\varvec{\theta }}; {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}})\) based on the observed data is given by

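$$\begin{aligned} L_O({\varvec{\theta }}; {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}}) = \prod _{i=1}^n \left\{ f_p(t_i; {\varvec{\theta }}, \delta _i, {\varvec{x}}^*_i)\right\} ^{\delta _i} \left\{ S_p(t_i; {\varvec{\theta }}, \delta _i, {\varvec{x}}^*_i)\right\} ^{1-\delta _i}, \end{aligned}$$(14)

where \(S_p(.; {\varvec{\theta }}, \delta _i, {\varvec{x}}^*_i)\) and \(f_p(.; {\varvec{\theta }}, \delta _i, {\varvec{x}}^*_i)\) denote the population survival and density functions, respectively, for individual i, and can be obtained from (10) and (12), respectively, with some notation adjustments. Hence, the observed data log-likelihood function is expressed as:

$$\begin{aligned} l_O({\varvec{\theta }}; {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}}) = \sum _{i=1}^n \left[ \delta _i \log f_p(t_i; {\varvec{\theta }}, \delta _i, {\varvec{x}}^*_i) + (1-\delta _i) \log S_p(t_i; {\varvec{\theta }}, \delta _i, {\varvec{x}}^*_i)\right] . \end{aligned}$$(15)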

Let us define \(\Delta _1=\{i: \delta _i=1\}, \Delta _0=\{i: \delta _i=0\}, n_1=|\Delta _1|\) and \(F_w(t_i; k, \lambda )=1-e^{-(t_i/\lambda )^k}\) for \(i=1, \dots , n\).

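From (10), (12), (15) and using \(\nu _i=e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}}, i=1, \dots , n\), the log-likelihood function for the mixture cure rate model takes the following form:

$$\begin{aligned} l_O({\varvec{\theta }}; {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}})&= n_1 \log \left( \frac{\alpha k}{\lambda }\right) + \sum _{i \in \Delta _1} \left[ {\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }} + (k-1) \log \left( \frac{t_i}{\lambda }\right) - \left( \frac{t_i}{\lambda }\right) ^k + (\alpha -1) \log F_w(t_i; k, \lambda )\right] \nonumber \\&\quad + \sum _{i \in \Delta _0} \log \left[ 1+e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}} \left\{ 1-F_w(t_i; k, \lambda )^{\alpha }\right\} \right] - \sum _{i=1}^n \log \left( 1+e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}}\right) . \end{aligned}$$(16)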

The expressions of the first-order and second-order derivatives of \(l_O({\varvec{\theta }}; {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}})\) with respect to \({\varvec{\theta }}\) are presented in Section A1 of the supplementary material. These expressions would allow interested researchers to directly maximize \(l_O({\varvec{\theta }}; {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}})\) to obtain an estimate of \({\varvec{\theta }}\). However, the presence of missing data (due to censoring) strongly motivates us to develop algorithms that can handle such missingness of data. In this paper, we derive both EM and SEM algorithms but we focus on SEM because of the reasons already specified (and demonstrated empirically later in Sect. 5.4). For interested readers, the steps involved in the development of the EM algorithm are presented in the Appendix. Note that our EM algorithm is developed under the assumption of the flexible EW distribution to model the lifetime of the susceptible subjects and hence is different from the existing EM algorithms in the literature.
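For readers who want to evaluate the observed data log-likelihood numerically, the following is a minimal sketch under stated assumptions rather than the authors' implementation. It assumes the mixture cure model with EW lifetimes described above, with population survival function \(\{1+\nu _i S(t_i)\}/(1+\nu _i)\), population density \(\nu _i f(t_i)/(1+\nu _i)\) and \(\nu _i=e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}}\). The function name `observed_loglik` and the parameter layout `theta = (beta_0, ..., beta_d, alpha, k, lam)` are illustrative choices.

```python
import math


def observed_loglik(theta, t, delta, X):
    """Observed-data log-likelihood of the EW mixture cure model.

    theta : sequence (beta_0, ..., beta_d, alpha, k, lam)  [assumed layout]
    t     : observed times t_i > 0
    delta : censoring indicators (1 = event observed, 0 = right censored)
    X     : rows x_i = (1, x_1i, ..., x_di)
    """
    *beta, alpha, k, lam = theta
    ll = 0.0
    for ti, di, xi in zip(t, delta, X):
        lin = sum(b * x for b, x in zip(beta, xi))   # x_i' beta
        nu = math.exp(lin)                           # nu_i = exp(x_i' beta)
        z = (ti / lam) ** k
        fw = -math.expm1(-z)                         # F_w(t_i; k, lam)
        if di == 1:
            # log f_p(t_i) = log(nu_i / (1 + nu_i)) + log f(t_i)
            log_f = (math.log(alpha * k / lam) + (k - 1) * math.log(ti / lam)
                     - z + (alpha - 1) * math.log(fw))
            ll += lin - math.log1p(nu) + log_f
        else:
            # log S_p(t_i) = log(1 + nu_i * S(t_i)) - log(1 + nu_i)
            surv = 1.0 - fw ** alpha
            ll += math.log1p(nu * surv) - math.log1p(nu)
    return ll


if __name__ == "__main__":
    # Toy data (hypothetical values, for illustration only)
    t = [1.0, 2.5, 0.7]
    delta = [1, 0, 1]
    X = [[1.0, 1.0], [1.0, 2.0], [1.0, 4.0]]
    print(observed_loglik((0.2, 0.3, 1.5, 1.2, 2.0), t, delta, X))
```

A function of this form could be passed, with its sign flipped, to a general-purpose optimizer for direct maximization, or used to rank the iterates of a stochastic algorithm by their observed data log-likelihood values.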

4 Development of the SEM Algorithm

As defined in Sect. 1, let \(\eta _i=1\) if an individual is not cured and \(\eta _i=0\) if an individual is cured, for \(i=1, \dots , n\). It can be seen that \(\eta _i=1\) for \(i \in \Delta _1\) and \(\eta _i\) is unknown (hence, is missing) for \(i \in \Delta _0\). The data we observe are partial, and hence, the problem can be treated as an incomplete data problem. Therefore, the EM or EM-like algorithms can be applied for the ML or ML-type estimation of \({\varvec{\theta }}\). Note that the convergence rate of the EM algorithm depends on factors such as the choice of initial parameter values and the flatness of the likelihood surface. Furthermore, for likelihood surfaces characterized by several stationary points including saddle points, the EM algorithm does not guarantee convergence to the significant local maxima. Moreover, the analytical steps in deriving conditional expectations involve computation of integrals, which is often intensive, complex, and in some cases, intractable. In our considered modeling framework, computation of the conditional expectations is not complicated. However, the EM may be quite sensitive to the choice of initial values, which motivates the development of an alternate estimation algorithm, i.e., the SEM algorithm.

The SEM algorithm works on the idea of simulating pseudo values to replace the missing values. The SEM comprises two steps, namely, the S-step and the M-step. The S-step involves generating a pseudo sample from the conditional distribution of the missing data given the observed information and current parameter values. The M-step involves finding the parameter value which maximizes the complete data log-likelihood function based on the pseudo sample [15, 17]. The random generation of values to impute missing data allows the SEM algorithm to avoid getting trapped in an insignificant local maximum or saddle point [14, 15]. A discussion on the asymptotic properties based on a mixture model reveals that the sequence of estimates generated by the SEM algorithm converges to a stationary Gaussian distribution whose mean is the consistent ML estimator of the mixing proportion [21].

Define \({{\tilde{H}}}_1=\{i: \eta _i=1\}\) and \(\tilde{H}_0=\{i: \eta _i=0\}\). Note that \({{\tilde{H}}}_0\) is unobserved and \({{\tilde{H}}}_1\) is only partially observed. Hypothetically, assuming that we completely observe \({{\tilde{H}}}_0\) and \({{\tilde{H}}}_1\), then, for any individual \(i \in {{\tilde{H}}}_0\), \(Y_i>C_i\) and the contribution by i to the likelihood function would be through the cure rate \(\pi _0({\varvec{x}}^*_i; {\varvec{\beta }})\). Again, for any \(i \in {{\tilde{H}}}_1\), \(Y_i > C_i\) or \(Y_i \le C_i\), \(T_i=\min \{Y_i, C_i\}\) and contribution to the likelihood function by i would be through the population density function \(f_p(t_i; {\varvec{\theta }}, \delta _i, {\varvec{x}}^*_i)\). For the latter, the information on the actual lifetime is missing if the individual is right censored, and observed when not censored. Therefore, we would stochastically generate both cured status \(\eta _i\) and subject’s actual lifetime \(y_i^*\), and hence, generate pseudo data of the form \(\left\{ (y^*_i, \delta _i, {\varvec{x}}^*_i, \eta _i): i = 1, \dots , n\right\} \).

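To implement the SEM algorithm, the complete data likelihood and log-likelihood functions are expressed as

$$\begin{aligned} {{\tilde{L}}}_{C}({\varvec{\theta }}; {\varvec{y}}^{*}, {\varvec{\delta }}, {\varvec{X}}, {\varvec{\eta }}) = \prod _{i \in {{\tilde{H}}}_0} \pi _0({\varvec{x}}^*_i; {\varvec{\beta }}) \prod _{i \in {{\tilde{H}}}_1} f_p(y^*_i; {\varvec{\theta }}, \delta _i, {\varvec{x}}^*_i) \end{aligned}$$(17)

and

$$\begin{aligned} {{\tilde{l}}}_{C}({\varvec{\theta }}; {\varvec{y}}^{*}, {\varvec{\delta }}, {\varvec{X}}, {\varvec{\eta }}) = \sum _{i \in {{\tilde{H}}}_0} \log \pi _0({\varvec{x}}^*_i; {\varvec{\beta }}) + \sum _{i \in {{\tilde{H}}}_1} \log f_p(y^*_i; {\varvec{\theta }}, \delta _i, {\varvec{x}}^*_i), \end{aligned}$$(18)

respectively, where \(y_i^*\) denotes the actual lifetime generated stochastically for \(i \in \Delta _0\), and \({\varvec{y}}^*=\left( y_1^*, \dots , y^*_n\right) ^{\tiny \mathrm T}. \) For the mixture cure rate model, (18) becomes

$$\begin{aligned} {{\tilde{l}}}_{C}({\varvec{\theta }}; {\varvec{y}}^{*}, {\varvec{\delta }}, {\varvec{X}}, {\varvec{\eta }})&= \sum _{i \in {{\tilde{H}}}_1} \left[ {\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }} + \log \left( \frac{\alpha k}{\lambda }\right) + (k-1) \log \left( \frac{y^*_i}{\lambda }\right) - \left( \frac{y^*_i}{\lambda }\right) ^k + (\alpha -1) \log F_w(y^*_i; k, \lambda )\right] \nonumber \\&\quad - \sum _{i=1}^n \log \left( 1+e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}}\right) . \end{aligned}$$(19)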

4.1 Steps Involved in the SEM Algorithm

Start the iterative process for the SEM algorithm with a reasonable initial choice \({\varvec{\theta }}^{(0)}=\left( {\varvec{\beta }}^{(0)}, \alpha ^{(0)}, k^{(0)}, \lambda ^{(0)}\right) ^{\tiny \mathrm T}\) of the parameter \({\varvec{\theta }}\). For some pre-defined \(R \in {\mathbb {Z}}^+\) and \(r=0, 1, \dots , R\), assume \({\varvec{\theta }}^{(r)}=\left( {\varvec{\beta }}^{(r)}, \alpha ^{(r)}, k^{(r)}, \lambda ^{(r)}\right) ^{\tiny \mathrm T}\) as the estimate of the parameter \({\varvec{\theta }}\) for the r-th step. The steps below permit the computation of the ML-type estimate of \({\varvec{\theta }}\) by applying the SEM algorithm.

1. S-Step: There are two sub-steps to be followed in the stochastic step of the implementation.

A. Generating cure status \(\eta _i^{(r+1)}\) for \(i=1, \dots , n\):

(i) For \(i \in \Delta _1\), \(\eta ^{(r+1)}_i=1\).

(ii) For \(i \in \Delta _0\), generate \(\eta _i^{(r+1)}\) from a Bernoulli distribution with conditional probability of success \(p^{(r+1)}_{s,i}\) given by

$$\begin{aligned} p^{(r+1)}_{s,i}&= P\left\{ \eta _i^{(r+1)}=1 \Big \vert \left( {\varvec{\theta }}^{(r)}, Y_i>t_i, {\varvec{x}}^*_i, i \in \Delta _0 \right) \right\} \nonumber \\&= 1- \frac{ \pi _0\left( {\varvec{x}}^*_i; {\varvec{\beta }}^{(r)}\right) }{S_p\left( t_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) }. \end{aligned}$$(20)

B. Generating actual lifetime \(y^{*(r+1)}_i\) for \(i=1, \dots , n\):

(i) For \(i \in \Delta _1\), \(y^{*(r+1)}_i = t_i\) is the actual lifetime.

(ii) For \(i \in \Delta _0\) and if \(\eta ^{(r+1)}_i=0\) from step 1A, \(y^{*(r+1)}_i=\infty \) since the individual is cured with respect to the event of interest.

(iii) For \(i \in \Delta _0\) and if \(\eta ^{(r+1)}_i=1\) from step 1A, we only observe the censoring time \(t_i=c_i\) since the actual lifetime \(Y_i>t_i\). Hence, the actual lifetime \(y^{*(r+1)}_i\) is generated from a truncated EW distribution with density \(g(.; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i)\), where

$$\begin{aligned}&g\left( y^{*(r+1)}_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) \nonumber \\&\quad =\frac{f_p\left( y^{*(r+1)}_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) }{S_p\left( t_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) }, \ \ y^{*(r+1)}_i>t_i \text { with } \delta _i=0. \end{aligned}$$(21)

Let \(G(.; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i)\) denote the cdf corresponding to \(g(.; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i)\). It can be noted that \(G(.; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i)\) is not a proper cdf as

$$\begin{aligned} G\left( y^{*(r+1)}_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) = 1- \frac{S_p\left( y^{*(r+1)}_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) }{S_p\left( t_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) } \end{aligned}$$(22)

and

$$\begin{aligned} \underset{ y_i^{*(r+1)}\rightarrow \infty }{{\lim }} G\left( y^{*(r+1)}_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) = 1- \frac{\pi _0\left( {\varvec{x}}^*_i; {\varvec{\beta }}^{(r)}\right) }{S_p\left( t_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) } = b_i^{(r+1)}, \end{aligned}$$(23)

where \( b_i^{(r+1)}=1\) only if \(\pi _0\left( {\varvec{x}}^*_i; {\varvec{\beta }}^{(r)}\right) =0\). In this case, two schemes could be followed for generating \(y^{*(r+1)}_i\).

(a) Generate \(u_i^{(r+1)}\) randomly from \(\text {uniform}\left( 0, b_i^{(r+1)}\right) \) and take an inverse transformation to find \(y^{*(r+1)}_i=G^{-1}\left( u_i^{(r+1)}; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) \).

(b) Generate \(m_i^{(r+1)}\) using \(p\left( m_i^{(r+1)}; e^{{\varvec{x}}_i^{\tiny \mathrm T}{\varvec{\beta }}^{(r)}}\right) \) given in (9), i.e., from a Bernoulli distribution with success probability \(\left\{ \frac{e^{{\varvec{x}}_i^{\tiny \mathrm T}{\varvec{\beta }}^{(r)}}}{1+e^{{\varvec{x}}_i^{\tiny \mathrm T}{\varvec{\beta }}^{(r)}}}\right\} \). If \(m_i^{(r+1)}=1\), then simulate \(y_i^{*(r+1)}\) from the pdf \(g(.; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i)\), which is the pdf of a truncated EW distribution given in (21), truncated at \(t_i\).

2. M-Step: Once the pseudo complete data \(\left\{ \left( y^{*(r+1)}_i, \delta _i, {\varvec{x}}^*_i, \eta _i^{(r+1)}\right) : i = 1, \dots , n\right\} \) is obtained, find the updated estimate by

$$\begin{aligned} {\varvec{\theta }}^{(r+1)}= & {} \left( {\varvec{\beta }}^{(r+1)}, \alpha ^{(r+1)}, k^{(r+1)}, \lambda ^{(r+1)}\right) ^{\tiny \mathrm T} \nonumber \\= & {} \underset{{\varvec{\theta }}}{{\arg \max }} \text { } {{\tilde{l}}}_{C}({\varvec{\theta }}; {\varvec{y}}^{*(r+1)}, {\varvec{\delta }}, {\varvec{X}}, {\varvec{\eta }}^{(r+1)}), \end{aligned}$$(24)

where \({\varvec{y}}^{*(r+1)}=\left( y_1^{*(r+1)}, \dots , y^{*(r+1)}_n\right) ^{\tiny \mathrm T}\) and \({\varvec{\eta }}^{(r+1)}=\left( \eta _1^{(r+1)}, \dots , \eta ^{(r+1)}_n\right) ^{\tiny \mathrm T}\). The maximization part can be carried out using multidimensional unconstrained optimization methods such as Nelder–Mead simplex search algorithm or quasi Newton methods such as BFGS algorithm (see [24]). These algorithms are available in statistical software R version 4.0.3 under General Purpose Optimization package called optimr().

3. Repeat steps 1 and 2 a certain number of times, say R, to obtain a sequence of estimates \(\left\{ {\varvec{\theta }}^{(r)}\right\} _{r=1}^R\). As pointed out by Diebolt and Celeux [21], the sequence \(\underset{R \rightarrow \infty }{{\lim }} \left\{ {\varvec{\theta }}^{(r)}\right\} _{r=1}^R\) does not converge pointwise, and hence, the implementation of the SEM algorithm will not result in the consistent ML estimator. However, the ergodic Markov chain \(\left\{ {\varvec{\theta }}^{(r)}\right\} _{r=1}^R\) generated by the implementation of the SEM algorithm converges to a normal distribution. It was further established by [21] that the mean of the normal distribution is the consistent ML estimate of \({\varvec{\theta }}\) under some mild technical assumptions. Based on this result and arguments provided by Celeux et al. [15] and Davies et al. [20], the SEM estimate \(\hat{{\varvec{\theta }}}_{SEM}\) may be obtained by one of the following two approaches:

(a) Calculate the SEM estimate by

$$\begin{aligned} \hat{{\varvec{\theta }}}_{SEM}= \{R-R^*\}^{-1} \sum _{r=R^*+1}^R {\varvec{\theta }}^{(r)}, \end{aligned}$$(25)

where iterations \(r=1, \dots , R^*\) represent the “burn-in” or “warm-up” period to reach the stationary regime, and the estimates \({\varvec{\theta }}^{(r)}, r=1, \dots , R^*\) are discarded. Marschner [32] indicated that a point estimate of \({\varvec{\theta }}\) can be calculated by taking the average over the estimates obtained from iterations of the SEM algorithm after a sufficiently long burn-in period. Both Marschner [32] and Ye et al. [61] used the first 100 iterations of the algorithm as the burn-in period, and considered an additional 900–1000 iterations for obtaining the SEM estimates. However, it is recommended to draw a trace plot of the sequence of estimates against iteration numbers to examine the trend in the behavior of the estimates, and thereby choose an appropriate burn-in period.

(b) Carry out \(R^*\) iterations as “warm-up” and derive the sequence \(\left\{ {\varvec{\theta }}^{(r)}\right\} _{r=1}^{R^*}\) by implementing the SEM algorithm. Find

$$\begin{aligned} \hat{{\varvec{\theta }}}_{SEMI}= \underset{\{{\varvec{\theta }}^{(r)}, r=1, \dots , R^*\}}{{\arg \max }} \text { } l_{O}\left( {\varvec{\theta }}; {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}}\right) . \end{aligned}$$(26)

By taking \(\hat{{\varvec{\theta }}}_{SEMI}\) as the starting value, the EM algorithm is implemented to derive the ML estimate \(\hat{{\varvec{\theta }}}\) (see [15, 17]).

Note that approach (b) above requires the development of both SEM and EM algorithms and hence may not be a preferred approach to calculate the estimates. On the other hand, approach (a) above may result in under-estimation of the variances of the estimators, see [22]. In fact, in our model fitting study, as presented in Sect. 5.1, we have encountered the problem with under-estimated variances. The variances did improve when the sample size is very large and when the cure proportions are very small. In this paper, we propose to take each \({\varvec{\theta }}^{(r)}\), \(r=R^*+1,\cdots ,R,\) and evaluate the observed data log-likelihood function. Then, we take that \({\varvec{\theta }}^{(r)}\) as the estimate of \({\varvec{\theta }}\) for which the log-likelihood function value is the maximum.
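The S-step with scheme (a) and the final selection rule described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the names `ew_survival`, `ew_survival_inverse`, `s_step` and `select_estimate`, the parameter layout `theta = (beta_0, ..., beta_d, alpha, k, lam)` and the small numerical guard are all hypothetical choices. The cure status is drawn with success probability \(1-\pi _0/S_p(t_i)\) as in (20), and a censored susceptible lifetime is obtained by inverting G in (22) at a uniform draw on \((0, b_i)\). The M-step (numerical maximization of the complete data log-likelihood) is deliberately left out.

```python
import math
import random


def log1mexp(z):
    """log(1 - exp(-z)) for z > 0, using the stable branch."""
    if z <= math.log(2.0):
        return math.log(-math.expm1(-z))
    return math.log1p(-math.exp(-z))


def ew_survival(y, alpha, k, lam):
    """EW survival function S(y) = 1 - [1 - exp(-(y/lam)^k)]^alpha."""
    return -math.expm1(alpha * log1mexp((y / lam) ** k))


def ew_survival_inverse(s, alpha, k, lam):
    """Solve S(y) = s for y, with 0 < s < 1."""
    w_complement = -math.expm1(math.log1p(-s) / alpha)   # 1 - F(y)^(1/alpha)
    return lam * (-math.log(w_complement)) ** (1.0 / k)


def s_step(theta, t, delta, X, rng):
    """One stochastic step: draw cure status eta_i and lifetime y*_i."""
    *beta, alpha, k, lam = theta
    eta, y_star = [], []
    for ti, di, xi in zip(t, delta, X):
        if di == 1:                      # event observed: susceptible, y* = t_i
            eta.append(1)
            y_star.append(ti)
            continue
        nu = math.exp(sum(b * x for b, x in zip(beta, xi)))
        pi0 = 1.0 / (1.0 + nu)                                  # cure rate
        sp_t = (1.0 + nu * ew_survival(ti, alpha, k, lam)) / (1.0 + nu)
        b = 1.0 - pi0 / sp_t             # success probability in (20), limit in (23)
        if rng.random() < b:             # susceptible but censored
            u = rng.uniform(0.0, b)      # scheme (a): u ~ uniform(0, b)
            sp_y = (1.0 - u) * sp_t      # from G(y) = 1 - S_p(y) / S_p(t_i) = u
            s_y = ((1.0 + nu) * sp_y - 1.0) / nu
            s_y = max(s_y, 1e-300)       # guard against rounding to zero
            eta.append(1)
            y_star.append(ew_survival_inverse(s_y, alpha, k, lam))
        else:                            # cured: lifetime is infinite
            eta.append(0)
            y_star.append(math.inf)
    return eta, y_star


def select_estimate(chain, loglik, burn_in):
    """Post burn-in iterate with the largest observed-data log-likelihood."""
    return max(chain[burn_in:], key=loglik)


if __name__ == "__main__":
    rng = random.Random(2024)
    theta0 = (0.5, -0.2, 1.5, 1.2, 2.0)   # hypothetical current estimate
    print(s_step(theta0, [1.0, 2.0, 0.5], [1, 0, 0],
                 [[1, 1], [1, 2], [1, 3]], rng))
```

In a full implementation, each call to `s_step` would be followed by an M-step that maximizes the complete data log-likelihood over \({\varvec{\theta }}\), and `select_estimate` would be applied to the stored iterates with the observed data log-likelihood as `loglik`.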

It is also important to discuss the differences between the SEM algorithm and the Monte Carlo EM (MCEM) algorithm [12, 13]. In the MCEM algorithm, the E-step, i.e., the conditional expectation of the missing data, is approximated by the Monte Carlo mean based on, say, N samples drawn from the conditional distribution of the missing data. The resulting conditional expected log-likelihood function is maximized (M-step) to obtain an improved set of estimates. Then, the E-step and M-step are repeated iteratively until some convergence criterion is achieved. On the other hand, in the SEM algorithm, the E-step is replaced by just a single draw from the conditional distribution of the missing data (the S-step), followed by the M-step. Then, the S-step and M-step are repeated a fixed number of times, say, R. Since the SEM is based on drawing one sample from the conditional distribution of the missing data in each of R iterations, whereas the MCEM is based on drawing multiple samples (may be taken as 500 or 1000) in each iteration to approximate the conditional mean, it is clear that the MCEM is computationally more expensive than the proposed SEM. This was recently demonstrated empirically in [40], where it was shown that the time taken by MCEM is roughly 5 to 6 times the time taken by SEM; see Table 9 in [40]. In addition, it was also shown that the MCEM leads to coverage probabilities that exceed the nominal level; see Table 8 in [40]. Given these recent findings, the proposed SEM is considered to be the preferred algorithm even though it is possible to develop the MCEM in our setting.

5 Simulation Study

5.1 Model Fitting

To assess the performance of the proposed SEM algorithm in the context of mixture cure model with EW lifetimes, we carry out an extensive simulation study. For this purpose, we mimic the cutaneous melanoma data (analyzed later in Sect. 6) with the nodule category (taking values 1, 2, 3 and 4) as the only covariate in our application. As can be seen from the results in Sect. 6, the cure rate is monotonically decreasing with nodule category. So, along the same lines, in this simulation study we include a covariate effect x in the form of \(x = j\) for \(j = 1,2,3,4\), and consider the cure rate to be decreasing in the covariate. From hereon in, we refer to the observations associated with covariate value j as belonging to group j. We also link the cure rate \(\pi _0\) to the covariate x through the relation \(\pi _0(x,\varvec{\beta })=\left\{ 1+e^{\beta _0+\beta _1x}\right\} ^{-1}\). In order to determine the values of the regression parameters, two cure rates need to be fixed. With this purpose, we fix the values of \(\pi _0(x=1,\varvec{\beta })\) (for group 1) and \(\pi _0(x=4,\varvec{\beta })\) (for group 4) as \(\pi _{01}\) and \(\pi _{04}\), respectively. This results in the following expressions for the regression parameters \(\beta _0\) and \(\beta _1\):

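\[
\beta _1=\frac{1}{3}\left[ \log \left( \frac{1-\pi _{04}}{\pi _{04}}\right) -\log \left( \frac{1-\pi _{01}}{\pi _{01}}\right) \right] , \qquad \beta _0=\log \left( \frac{1-\pi _{01}}{\pi _{01}}\right) -\beta _1. \qquad (27)
\]

Using (27), the cure rates for groups 2 and 3 can be easily calculated as \(\pi _{02}=\left\{ 1+e^{\beta _0+2\beta _1}\right\} ^{-1}\) and \(\pi _{03}=\left\{ 1+e^{\beta _0+3\beta _1}\right\} ^{-1}\), respectively.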
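Because the logistic link can be inverted in closed form, the regression parameters and the intermediate cure rates follow directly from the two fixed cure rates. A minimal sketch (the function names are hypothetical):

```python
import math


def regression_from_cure_rates(pi01: float, pi04: float) -> tuple:
    """Solve pi0(x) = 1 / (1 + exp(b0 + b1 * x)) at x = 1 and x = 4 for (b0, b1)."""
    logit1 = math.log((1.0 - pi01) / pi01)  # equals b0 + b1
    logit4 = math.log((1.0 - pi04) / pi04)  # equals b0 + 4 * b1
    b1 = (logit4 - logit1) / 3.0
    b0 = logit1 - b1
    return b0, b1


def cure_rate(x: float, b0: float, b1: float) -> float:
    """Logistic-link cure rate pi0(x, beta)."""
    return 1.0 / (1.0 + math.exp(b0 + b1 * x))


b0, b1 = regression_from_cure_rates(0.50, 0.20)  # cure rates of groups 1 and 4
rates = [round(cure_rate(x, b0, b1), 3) for x in (1, 2, 3, 4)]
```

With cure rates 0.50 and 0.20 for groups 1 and 4, this gives \((\beta _0,\beta _1)\approx (-0.462, 0.462)\) and group cure rates of about 0.5, 0.386, 0.284 and 0.2, which agree with the values quoted in Sect. 5.2.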

For sample sizes \(n = 200\) and 400, we vary the lifetime distribution parameters, cure rates and censoring proportions. For cure rates, we consider two levels, which we refer to as “High” and “Low.” In the high setting, we fix the cure rates of groups 1 and 4 as 0.50 and 0.20, respectively, and in the low setting, we fix them as 0.40 and 0.10, respectively. Finally, as mentioned in Sect. 3, we allow for observations to be right censored. To incorporate this mechanism, we fix the overall censoring proportion for each group (\(p_j, j=1,2,3,4\)). In the high setting, these are fixed as (0.65,0.50,0.40,0.30) and in the low setting, as (0.50,0.40,0.30,0.20). With these values, for each group, censoring times can be generated from an exponential distribution with rate parameter \(\gamma \), which, for fixed censoring proportion p and cure rate \(\pi _0\), can be found by solving the following equation:

\[
p=\pi _0+(1-\pi _0)\,\gamma \int _0^{\infty } S(c)\,e^{-\gamma c}\,dc,
\]

where, under the mixture cure rate model in (9), \(1-\pi _0=\frac{\nu }{1+\nu }\). Note that \(S(\cdot )\) is the survival function of the EW distribution, as defined in (6). From here, assuming that the cure rates and censoring proportions have been predetermined for each group, the following steps are followed to generate the observed lifetime, T, under our model. First, a value of M is generated from a Bernoulli distribution with \(P[M=1] = 1-\pi _0\), and a censoring time C is generated from an exponential distribution with rate parameter \(\gamma \). If \(M = 0\), there is no risk and the true lifetime is infinite with respect to the event of interest, so the observed lifetime is \(T = C\). If \(M = 1\), there is risk, so a true lifetime, Y, is generated from the EW distribution with parameters (\(\alpha ,\lambda ,k\)), and the observed lifetime is \(T=\text {min}\left\{ Y, C\right\} \). Finally, if \(T = Y\), the right censoring indicator, \(\delta \), is taken as 1; otherwise, it is taken as 0.
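The generation steps above can be sketched as follows. The sketch assumes the EW distribution function has the form \(F(t)=\left[ 1-e^{-(t/\lambda )^k}\right] ^{\alpha }\), since (6) is not restated in this section, and it takes the censoring rate \(\gamma \) as given rather than solving for it; the value 0.4 used below is a hypothetical placeholder, and the function names are illustrative.

```python
import math
import random


def ew_quantile(u: float, alpha: float, lam: float, k: float) -> float:
    """Inverse of the assumed EW cdf F(t) = [1 - exp(-(t / lam)**k)]**alpha."""
    return lam * (-math.log1p(-(u ** (1.0 / alpha)))) ** (1.0 / k)


def generate_observation(pi0, gamma, alpha, lam, k, rng):
    """Return (T, delta) for one subject following the steps in the text."""
    m = 1 if rng.random() < 1.0 - pi0 else 0      # M = 1: susceptible
    c = rng.expovariate(gamma)                    # censoring time C
    if m == 0:
        return c, 0                               # cured: T = C, censored
    y = ew_quantile(rng.random(), alpha, lam, k)  # true lifetime Y
    return (y, 1) if y <= c else (c, 0)           # T = min(Y, C)


rng = random.Random(1)
# gamma = 0.4 is a hypothetical rate, not one solved from a target censoring proportion.
sample = [generate_observation(0.5, 0.4, 1.0, 1.5, 2.0, rng) for _ in range(200)]
censored_share = sum(1 - d for _, d in sample) / len(sample)
```

In a full simulation, `gamma` would first be obtained numerically for each group so that the expected value of `censored_share` matches the target proportion \(p_j\).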

For the parameters of the lifetime distribution of Y, we consider three different settings: 1: \((\alpha ,\lambda ,k)=(2,1.5,1)\), 2: (1,1.5,2) and 3: (1,0.5,1.5). For these choices of lifetime parameters, we consider different combinations of cure rates and sample sizes, resulting in 12 different settings. These settings are presented in Table 2. For a given sample of observations, once the parameter estimates are obtained, we numerically approximate the Hessian matrix and invert it to estimate the standard errors of the estimators, with the goal of constructing confidence intervals. As will be seen in the tables, this allows for the calculation of the associated coverage probabilities. For each parameter setting, we generate K = 500 samples using Monte Carlo simulation. Within the SEM algorithm, we choose \(R=1500\) runs and use the first 500 as burn-in. To find the initial values of the model parameters, we randomly choose a value in the parameter space within 10% of the true value. In Table 3, and in Tables A2.1 and A2.2 in the supplementary material, we summarize the performance of the SEM algorithm in estimating the model parameters. The tables include the estimates (and standard errors), bias, root mean square error (RMSE), and two coverage probabilities (90% and 95%). We first observe that as n increases, with everything else fixed, the bias and RMSE both decrease, and the coverage probabilities improve. Subsequently, in Table 4, and in Tables A2.3 and A2.4 in the supplementary material, we summarize the corresponding results for the estimation of cure rates. Across all parameter settings, the estimates of the cure rates show negligible bias.
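The summary measures reported in these tables can be computed from the K replicates as in the sketch below. It assumes Wald-type intervals of the form estimate \(\pm z\times \) SE (with \(z\approx 1.960\) for 95% and \(z\approx 1.645\) for 90%), which is an assumption about how the intervals are formed; the function name and the toy numbers are hypothetical.

```python
import math
from typing import Sequence


def summarize(estimates: Sequence[float], std_errors: Sequence[float],
              true_value: float, z: float = 1.959964) -> dict:
    """Bias, RMSE and Wald-interval coverage over Monte Carlo replicates."""
    n = len(estimates)
    bias = sum(estimates) / n - true_value
    rmse = math.sqrt(sum((e - true_value) ** 2 for e in estimates) / n)
    covered = sum(
        1 for e, s in zip(estimates, std_errors)
        if e - z * s <= true_value <= e + z * s
    )
    return {"bias": bias, "rmse": rmse, "coverage": covered / n}


# Toy replicates (hypothetical numbers, not results from the paper).
result = summarize([1.0, 1.2, 0.8], [0.1, 0.05, 0.2], true_value=1.0)
```

In the toy example the second replicate has a small standard error and misses the true value, so two of the three intervals cover it.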

5.2 Robustness Study with Respect to the Presence of Outliers

In this section, we study the performance of the SEM algorithm when there are outliers present in the data. We consider a scenario where the generated data contain 5% outliers. For this purpose, we generate 95% of the data with the true parameter setting \((\beta _0,\beta _1,\alpha ,\lambda ,k) = (-0.462,0.462,1,1.5,2)\), which corresponds to \((\pi _{01},\pi _{04}) = (0.5,0.2)\) and \((p_1,p_2,p_3,p_4) = (0.65,0.50,0.40,0.30)\). The remaining 5% of the data are outliers and are generated from \((\beta _0,\beta _1,\alpha ,\lambda ,k) = (-0.192,0.597,1,1,0.3)\), which corresponds to \((\pi _{01},\pi _{04}) = (0.4,0.1)\) and \((p_1,p_2,p_3,p_4) = (0.50,0.40,0.30,0.20)\). As far as the lifetime distribution is concerned, the true parameter setting results in a mean of 1.329, whereas the setting used to generate outliers results in a mean of 9.260. Then, for the entire data set, we use the SEM algorithm to estimate the true model parameters \((\beta _0,\beta _1,\alpha ,\lambda ,k) = (-0.462,0.462,1,1.5,2)\) and the true cure rates \((\pi _{01},\pi _{02},\pi _{03},\pi _{04}) = (0.5,0.386,0.284,0.2)\). Based on 500 Monte Carlo simulations, we present the estimation results of model parameters in Table 5 and of the cure rates in Table 6. From Table 5, it is clear that the presence of outliers results in biased estimates, which is more pronounced for the lifetime parameters and specifically for the parameter k. This is due to the choice of the parameters from which the outliers were generated. Note that there is also a significant under-coverage for the lifetime parameters. The increase in sample size helps in the reduction of the standard errors and the RMSEs. It also helps in the reduction of bias for all model parameters except for the parameter k. From Table 6, we note that the estimates of cure rates contain little bias when compared to the results in Sect. 5.1 where there were no outliers.
A slight under-coverage is also noticed for some cure rates. Finally, the performances of the SEM and EM algorithms are similar in the presence of outliers.
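The two lifetime means quoted in this section can be checked in closed form, because \(\alpha =1\) reduces the EW distribution to a Weibull distribution. The sketch assumes that \(\lambda \) enters as a scale parameter, i.e., \(F(t)=1-e^{-(t/\lambda )^k}\) when \(\alpha =1\); this form is not restated in this section and is an assumption that the quoted means happen to support. The function name is hypothetical.

```python
import math


def ew_mean_alpha_one(lam: float, k: float) -> float:
    """Mean of the EW distribution when alpha = 1 (Weibull with scale lam, shape k)."""
    return lam * math.gamma(1.0 + 1.0 / k)


mean_true = ew_mean_alpha_one(1.5, 2.0)     # setting (alpha, lambda, k) = (1, 1.5, 2)
mean_outlier = ew_mean_alpha_one(1.0, 0.3)  # setting (alpha, lambda, k) = (1, 1, 0.3)
```

These evaluate to about 1.329 and 9.26, respectively, so the outlying lifetimes are on average roughly seven times longer than the regular ones.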

5.3 Model Discrimination

As mentioned in Sects. 1 and 2, the EW family of distributions includes many well-known lifetime distributions. Consequently, it makes sense to carry out a model discrimination study across the sub-models through the general EW distribution. The idea is to evaluate the performance of the likelihood ratio test in discriminating among the sub-models. For this purpose, we choose the setting with “Low” cure rates and a sample of size 400. The EW scale parameter \(\lambda \) is chosen to be 2.5.

Data from the mixture cure rate model are generated with the lifetimes coming from five special cases (true models) of the EW family, namely, the exponential (\(\alpha =1, k=1\)), Rayleigh (\(\alpha =1, k=2\)), Weibull (\(\alpha =1, k=1.5\)), generalized exponential (\(\alpha =2, k=1\)) and Burr type X (\(\alpha =2, k=2\)) distributions. For data generated from every true model, all five sub-models are fitted and parameter estimation is carried out by applying the SEM algorithm. In particular, we carry out the following hypothesis tests corresponding to the five sub-models:

- Exponential: \(H_{0}: \alpha =k=1\) vs. \(H_{1}\): at least one inequality in \(H_{0}\);
- Rayleigh: \(H_{0}: \alpha =1, k=2\) vs. \(H_{1}\): at least one inequality in \(H_{0}\);
- Weibull: \(H_{0}: \alpha =1\) vs. \(H_{1}: \alpha \ne 1\);
- Generalized exponential: \(H_{0}: k=1\) vs. \(H_{1}: k \ne 1\);
- Burr type X: \(H_{0}: k=2\) vs. \(H_{1}: k \ne 2\).

Let \({{\hat{l}}}\) and \({{\hat{l}}}_0\) denote the unrestricted maximized log-likelihood value and the maximized log-likelihood value obtained under \(H_0\), respectively. Then, by Wilks’ theorem, \(\Lambda =-2\left( {{\hat{l}}}_0 - {{\hat{l}}}\right) {\sim } \chi ^2_{q^*}\) asymptotically under \(H_0\), where \(\chi ^2_{q^*}\) represents a chi-squared distribution with \(q^*\) degrees of freedom and \(q^*\) denotes the difference in the number of parameters estimated to obtain \({{\hat{l}}}\) and \({{\hat{l}}}_0\). The p values for the tests are compared against a significance level of 0.05 to decide whether or not to reject \(H_0\). The proportions of rejections of \(H_0\) based on 1000 Monte Carlo runs are reported in Table 7 for every combination of the true and fitted models.
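The test decision can be sketched as below. The statistic is computed as twice the gap between the unrestricted and restricted maximized log-likelihoods, which is non-negative. Only 1 and 2 degrees of freedom are handled, using the closed-form chi-squared tail probabilities for those cases, which covers the five tests listed above; the log-likelihood values in the usage line are hypothetical.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper tail probability of a chi-squared variable for df = 1 or 2."""
    if x <= 0.0:
        return 1.0
    if df == 1:
        return math.erfc(math.sqrt(x / 2.0))
    if df == 2:
        return math.exp(-x / 2.0)
    raise ValueError("this sketch only covers df = 1 and df = 2")


def likelihood_ratio_test(loglik_full: float, loglik_null: float,
                          df: int, level: float = 0.05):
    """Return (statistic, p value, reject H0?) for nested models."""
    stat = 2.0 * (loglik_full - loglik_null)  # non-negative for nested fits
    p_value = chi2_sf(stat, df)
    return stat, p_value, p_value < level


# Hypothetical maximized log-likelihoods for an EW fit and a two-constraint sub-model.
stat, p_value, reject = likelihood_ratio_test(-514.0, -517.0, df=2)
```

For a general number of degrees of freedom, a library routine for the chi-squared distribution would be used in place of `chi2_sf`.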

The observed significance level corresponding to every true lifetime distribution is close to the nominal significance level of 0.05. This implies that the chi-squared distribution provides a good approximation to the null distribution of the likelihood ratio test statistic. Next, we observe that when the true lifetime model is either exponential or Rayleigh, the rejection rates for the fitted Weibull model are 0.052 and 0.040, respectively. These rejection rates are close to 0.05 because both the exponential and Rayleigh distributions are special cases of the Weibull distribution. On the other hand, when the true lifetime is generalized exponential or Burr type X, the rejection rates for the fitted Weibull lifetime are 0.542 and 0.596, respectively. These rejection rates are moderate because the Weibull distribution does not accommodate the generalized exponential or Burr type X distributions as special cases. Based on some of the high rejection rates, we can conclude that the likelihood ratio test can discriminate between the following models: exponential and Rayleigh, Burr type X and exponential, Burr type X and generalized exponential, and generalized exponential and Rayleigh. As such, for a given data set, the likelihood ratio test should be employed to choose the correct sub-model, if possible. If none of the sub-models provides an adequate fit, the proposed EW model should be used.

5.4 Comparison of the SEM Algorithm with the EM Algorithm

In this section, we compare the proposed SEM algorithm with the EM algorithm through the robustness of these algorithms with respect to the choice of initial values. Specifically, we study cases where the initial guesses of the model parameters are far away from the true values. For this purpose, for each model parameter, we provide an initial guess that differs from its true value by at least 50% and by at most 75%. Then, we run the SEM and EM algorithms using the same choice of initial values to make sure that the comparison between the two algorithms is fair. In Table 8, we present the percentage of divergent samples based on 500 Monte Carlo runs for different parameter settings. It is easy to see that for any considered parameter setting, the divergence percentage corresponding to the SEM algorithm is much less when compared to the EM algorithm. This clearly shows that the EM algorithm is sensitive to the choice of initial values, whereas the SEM algorithm is more robust. This, certainly, is a big advantage of the SEM algorithm and, hence, the SEM algorithm can be considered a preferred algorithm over the EM algorithm. It is interesting to note that when the true lifetime parameters are as considered in either setting 1 or setting 3, the percentage of divergent samples decreases with an increase in sample size. However, this is not true when the true lifetime parameters are as in setting 2. Similarly, for lifetime parameters as in settings 1 and 3, and for the SEM algorithm, the divergence percentages are smaller when the true cure rates are low. In this regard, for the EM algorithm, the divergence percentages are smaller for low cure rates, irrespective of the lifetime parameters.
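One way to produce initial guesses that are far from the truth, in the sense described above, is sketched below. The direction of each perturbation is not specified in the text, so drawing it with equal probability is an assumption; the function name is hypothetical and the true values are those of the setting quoted in Sect. 5.2.

```python
import random
from typing import List, Sequence


def far_initial_values(true_values: Sequence[float], rng: random.Random,
                       low: float = 0.50, high: float = 0.75) -> List[float]:
    """Perturb each true value by a relative amount drawn from [low, high]."""
    inits = []
    for value in true_values:
        rel = rng.uniform(low, high)    # relative deviation between 50% and 75%
        sign = rng.choice((-1.0, 1.0))  # direction: assumed equally likely
        inits.append(value * (1.0 + sign * rel))
    return inits


rng = random.Random(7)
theta_true = [-0.462, 0.462, 1.0, 1.5, 2.0]  # (beta0, beta1, alpha, lambda, k)
theta_init = far_initial_values(theta_true, rng)
```

Since the relative deviation never exceeds 75%, a positive lifetime parameter stays positive after perturbation. The same `theta_init` would be passed to both algorithms so that the comparison starts from identical points.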

It is also of interest to compare the divergence rates of the SEM and EM algorithms in terms of the proportion of missing data. For this comparison, the initial values of the model parameters are chosen to be close to the true values. Noting that missing data arise due to censoring, we study both high and low censoring cases. In Table 9, we present the divergence rates for \(n=200\). For other sample sizes, the observations are similar and hence are not reported. It is clear that in all scenarios the divergence rate corresponding to the SEM algorithm is lower than that corresponding to the EM algorithm.

6 Analysis of Cutaneous Melanoma Data

Data description: An illustration of our proposed model with EW lifetime and proposed estimation technique is presented in this section. Motivated by an example provided in Ibrahim et al. [25] which showed influences of cure fraction, we consider the data set on cutaneous melanoma (a type of malignant skin cancer) studied by the Eastern Cooperative Oncology Group (ECOG) where the patients were observed for the period between 1991 and 1995. The objective of the study was to assess the efficacy of the postoperative treatment with high dose of interferon alpha-2b drug to prevent recurrences of the cancer. Observed survival time (t, in years) representing either exact lifetime or censoring time, censoring indicator (\(\delta =0, 1\)) and nodule category (\(x=1, 2, 3, 4\)) based on tumor thickness are selected as the variables of interest for demonstrating the performance of our model. There are 427 observations in the data set; each observation corresponds to a patient in the study with respective nodule category information. Analysis is performed based on 417 patients’ data due to missing information on tumor thickness for the remaining 10 patients. Nodule category is taken as the only covariate for our illustration. A descriptive summary of the observed survival time categorized by censoring indicator and nodule category is presented in Table 10.

Assignment of initial parameter values: Let us define \(\pi _{0x}, x=1, 2, 3, 4\), to be the cure rate for the x-th nodule category. As indicated before and as can also be seen from Table 10, \(x=1\) represents the group which is likely to have the best prognosis (i.e., highest cure rate), whereas \(x=4\) represents the group likely to have the worst prognosis (i.e., lowest cure rate). Further, the censoring rates for \(x=1\) and \(x=4\) are 0.675 and 0.329, respectively. Using the monotone nature of the logistic-link function and assuming all censored individuals are cured, we obtain initial estimates of \(\beta _0\) and \(\beta _1\) by simultaneously solving

\[
\left\{ 1+e^{\beta _0+\beta _1}\right\} ^{-1}=0.675 \quad \text {and} \quad \left\{ 1+e^{\beta _0+4\beta _1}\right\} ^{-1}=0.329.
\]

Hence, the initial estimates \(\beta _0^{(0)}\) and \(\beta _1^{(0)}\) are \(-1.212\) and 0.481, respectively. On the other hand, the initial estimates for the EW lifetime parameters \(\alpha , \lambda \) and k are obtained in two steps. In the first step, we consider (6) and note that

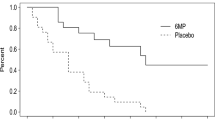

is linear in \(\log t_i\), where \(i \in \Delta _1\). Hence, fixing \(\alpha =\alpha _0\), ordinary least squares estimates \(\lambda _0\) of \(\lambda \) and \(k_0\) of k are obtained by fitting a simple linear regression model with \({{\hat{\psi }}}(t; \alpha , \lambda , k)\) as the response and \(\log t\) as the predictor. Here, \({{\hat{\psi }}}(t_i; \alpha , \lambda , k)=\log \left[ -\log \left\{ 1-\left( 1-{{\hat{S}}}_s(t_i; \alpha , \lambda , k)\right) ^{1/\alpha }\right\} \right] \) and \({{\hat{S}}}_s(t_i; \alpha , \lambda , k)\) is the Kaplan–Meier estimate of the survival function evaluated at \(t_i\) for the i-th individual with \(i \in \Delta _1\). In our case, \(\alpha _0\) is chosen as 2. The Kaplan–Meier plots of the survival probabilities for the four nodule categories are presented in Fig. 1. In the second step, using (4), we define a likelihood function \(L_s(\alpha , \lambda , k; {\varvec{t}}^*)\) as

where \({\varvec{t}}^*=\{(t_i; i \in \Delta _1)\}\). From here, \(\log L_s(\alpha , \lambda , k; {\varvec{t}}^*)\) is maximized with respect to \(\alpha , \lambda \) and k using a numerical optimization routine in R with \(\alpha _0\), \(\lambda _0\) and \(k_0\) as initial parameter guesses. The resulting ML estimates of \(\alpha \), \(\lambda \) and k are \(\alpha ^{(0)}=1.983, \lambda ^{(0)}=1.326\) and \( k^{(0)}=1.214\). Hence, \({\varvec{\theta }}^{(0)}=\left( \beta _0^{(0)}, \beta _1^{(0)}, \alpha ^{(0)}, \lambda ^{(0)}, k^{(0)}\right) = (-1.212, 0.481, 1.983, 1.326, 1.214)\) is taken as the initial parameter guess for starting the iterative process of the SEM algorithm.
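The first step of this procedure can be sketched as a least squares fit. The sketch assumes the EW survival function \(S_s(t)=1-\left[ 1-e^{-(t/\lambda )^k}\right] ^{\alpha }\) (an assumed form of (6), which is not restated here), under which the transformed survival probability equals \(k\log t-k\log \lambda \), so the regression is run on \(\log t\) with slope k and intercept \(-k\log \lambda \). The function name and the test parameters are hypothetical, and the survival estimates passed in must lie strictly between 0 and 1.

```python
import math
from typing import Sequence, Tuple


def ew_initial_scale_shape(times: Sequence[float], surv: Sequence[float],
                           alpha0: float) -> Tuple[float, float]:
    """OLS of psi on log(t); returns (lambda0, k0) for fixed alpha = alpha0."""
    xs = [math.log(t) for t in times]
    ys = [math.log(-math.log(1.0 - (1.0 - s) ** (1.0 / alpha0))) for s in surv]
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    k0 = sxy / sxx                   # slope estimates k
    intercept = y_bar - k0 * x_bar   # intercept estimates -k * log(lambda)
    lam0 = math.exp(-intercept / k0)
    return lam0, k0


# Check on exact survival values from a hypothetical EW(alpha=2, lambda=1.3, k=1.2).
ts = [0.2, 0.5, 1.0, 2.0, 3.5]
ss = [1.0 - (1.0 - math.exp(-((t / 1.3) ** 1.2))) ** 2.0 for t in ts]
lam0, k0 = ew_initial_scale_shape(ts, ss, alpha0=2.0)
```

With exact survival values the fit recovers the generating scale and shape. With Kaplan–Meier estimates it only gives rough starting values, which is why the procedure refines them by maximizing the likelihood in the second step.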

Model fitting: As discussed in Sect. 4, the model parameters are estimated by the SEM method. Point estimates, standard errors (SE), and 95% confidence intervals (CI) are displayed in Table 11 for both the model parameters and the cure rates of all nodule categories. The standard errors of the cure rate estimates are obtained using the delta method. No overlap is observed between the confidence intervals for \(\pi _{01}\) and \(\pi _{04}\), suggesting that the cure rates for these groups are significantly different. Figure 2 presents plots corresponding to the overall population survival function \(S_p(.; {\varvec{\theta }})\), where

\[
S_p(t_i; {\varvec{\theta }})=\pi _0(x_i,\varvec{\beta })+\left\{ 1-\pi _0(x_i,\varvec{\beta })\right\} S_s(t_i; \alpha , \lambda , k),
\]

evaluated at the observed \(t_i\) for \(i =1, \dots , n\). The plots show a pattern similar to that of the Kaplan–Meier plots in Fig. 1. The overall survival probability plots level off at values much higher than 0 (even when patients were followed up for more than 6 years), strongly indicating the presence of significant cure fractions.
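The leveling-off behavior follows from the mixture structure: the population survival function is the cure rate plus the susceptible share times the susceptible survival function, so it tends to the cure rate as t grows. The sketch below assumes the EW survival function \(S_s(t)=1-\left[ 1-e^{-(t/\lambda )^k}\right] ^{\alpha }\) and, for illustration only, plugs in the initial guess \({\varvec{\theta }}^{(0)}\) quoted earlier rather than the fitted estimates of Table 11; the function names are hypothetical.

```python
import math


def ew_survival(t, alpha, lam, k):
    """Assumed EW survival function S_s(t) = 1 - [1 - exp(-(t / lam)**k)]**alpha."""
    return 1.0 - (1.0 - math.exp(-((t / lam) ** k))) ** alpha


def population_survival(t, x, beta0, beta1, alpha, lam, k):
    """Mixture cure population survival: pi0 + (1 - pi0) * S_s(t)."""
    pi0 = 1.0 / (1.0 + math.exp(beta0 + beta1 * x))
    return pi0 + (1.0 - pi0) * ew_survival(t, alpha, lam, k)


# Initial guess theta^(0) from the text, evaluated for nodule category x = 1.
theta0 = (-1.212, 0.481, 1.983, 1.326, 1.214)
curve = [population_survival(t, 1, *theta0) for t in (0.0, 1.0, 3.0, 6.0, 50.0)]
```

The curve starts at 1, decreases, and flattens near 0.675, the cure rate implied by \({\varvec{\theta }}^{(0)}\) for category 1.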

Burn-in period: For the real data set, 10,000 iterations of the SEM algorithm are carried out. The SEM estimate of each parameter is plotted against the iteration index in Fig. 3. All parameters show similar random behavior around the horizontal line with no discernible pattern, except for \(\alpha \). Although the plot for \(\alpha \) does not show an upward, downward, or any other obvious pattern, the variability around the middle horizontal line is large and does not show any obvious diminishing trend. This explains the large standard error that we have obtained for \(\alpha \). The middle horizontal lines correspond to the parameter values that return the maximized log-likelihood value after a burn-in period of 5000 iterations. The random oscillation with almost constant variance around the horizontal line indicates convergence of the SEM estimates to a stationary distribution. However, the large variability in the estimates of \(\alpha \) is a concern, and simply taking the average over the iterations after the burn-in period results in an under-estimated variance. So, it is reasonable to take as the SEM parameter estimates those that return the maximized log-likelihood value after the burn-in period (see [38]).

Model discrimination: The cutaneous melanoma data set is further analyzed by fitting all nested sub-models of the EW lifetime distribution. The parameter estimates and corresponding standard errors are presented in Table 12. To verify the appropriateness of fitting the EW lifetime distribution to the melanoma data under the mixture cure rate setup, maximized log-likelihood (\({{\hat{l}}}\)) values are calculated for all sub-models and formal hypothesis tests are carried out to test whether the sub-models deviate significantly from the model with the EW lifetime distribution. By using Wilks’ theorem, i.e.,

\[
-2\left( {{\hat{l}}}_{sub}-{{\hat{l}}}_{EW}\right) \sim \chi ^2_{\zeta } \quad \text {asymptotically under the sub-model},
\]

where \({{\hat{l}}}_{EW}\) and \({{\hat{l}}}_{sub}\) are the respective maximized log-likelihood values under the EW model (alternative model) and sub-model (null model), and \(\zeta \) is the difference in the number of parameters estimated, respective p values for all sub-models are obtained (Table 12). The p values indicate that all nested models, except the one fitted with generalized exponential distribution, are significantly different from the EW model, and hence are rejected. Further, Akaike information criterion

\[
AIC=-2\,{{\hat{l}}}_{fit}+2q
\]

values for each fitted model are also presented in the same table, where \({{\hat{l}}}_{fit}\) is the maximized log-likelihood value under the fitted model and q denotes the number of parameters estimated. The AIC values suggest that the generalized exponential model (AIC = 1036.642) provides the best fit. Hence, for the considered cutaneous melanoma data, the EW lifetime distribution reduces to the generalized exponential distribution. Note the closeness of the generalized exponential model to the EW model based on the AIC values.

We also check the goodness-of-fit or adequacy of the proposed model using the estimated normalized randomized quantile residuals [23], where the residuals are estimated using the SEM estimates. Figure 4 presents the quantile–quantile (QQ) plot, which suggests that the proposed mixture cure model with EW lifetime distribution provides an adequate fit to the cutaneous melanoma data. We also test for the normality of the residuals using the Kolmogorov–Smirnov test. The p value corresponding to the test turns out to be 0.934, so there is no evidence against the normality of the residuals.

Comparison with semi-parametric piecewise linear mixture cure model: Our proposed mixture cure model with exponentiated Weibull lifetime distribution for the susceptible individuals is further compared (through AIC value and running time) with the mixture cure model where the susceptible lifetimes are estimated semi-parametrically. For this purpose, we approximate the hazard function of the susceptible lifetime by a piecewise linear function. A piecewise linear approximation (PLA) of the common hazard function \(h_s(t)\) of susceptible subjects is based on three factors, namely, the number of lines to be fitted (\(L^*\)), the chosen set of cut points \(\{\tau _0, \tau _1, \dots , \tau _{L^*}\}\) related to \(L^*\) and the set of initial estimates \(\{\phi _0, \phi _1, \dots , \phi _{L^*}\}\) of the hazard function at the cut points. Note that \(\tau _0\le \tau _1 \le \dots \le \tau _{L^*}\) and \(\underset{t \rightarrow \tau _l}{\lim } h_s(t)=\phi _{l}\) for \(l=0, 1, \dots , L^{*}\) for ensuring continuity at the cut points [4]. Therefore, for \(t \ge 0\),

\[
h_s(t)=\sum _{l=1}^{L^*}\left( \nu _l+\mu _l t\right) I_{[\tau _{l-1},\tau _l]}(t),
\]

where \(\mu _l=\frac{\phi _l-\phi _{l-1}}{\tau _{l}-\tau _{l-1}}\) is the slope, \(\nu _l=\phi _l-\mu _l \tau _l\) is the intercept, and \(I_{[\tau _{l-1},\tau _l]}(t)=1\) only if \(t \in [\tau _{l-1},\tau _l]\), and is 0 elsewhere, for \(l=1, \dots , L^*\). For \(l =1, \dots , L^*\), \(\mu _l\) and \(\nu _l\) are obtained such that \(h_s(t) \ge 0\) for any \(t \ge 0\). The cure model under this setup is characterized by the parameter vector \((\beta _0, \beta _1, \phi _0, \phi _1, \dots , \phi _{L^*})^{\tiny \mathrm T}\). The estimate of the hazard function may vary depending on the choice of cut points \(\tau _l\) and corresponding \(\phi _l\) for \(l=0, 1, \dots , L^*\). Following [4], for our analysis, \(L^*\) is taken from \(\{1, 2, \dots , 6\}\), indicating that up to six lines are used for the hazard function approximation. For \(l=1, \dots , (L^*-1)\), \(\tau _l\) is chosen as a suitable sample quantile of the uncensored lifetimes \(t_i\) for \(i \in \Delta _1\), with \(\tau _0=0\) and \(\tau _{L^*}=\max \{t_i; i=1, \dots , n\}\). Then, for \(l=0, 1, \dots , L^*\), initial estimates of \(\phi _l\) are obtained by applying a kernel-based hazard estimate (using the muhaz function in R) and approximating the hazard values at the cut points by interpolation. For \(t \ge \tau _{L^*}\), we take \(h_s(t)=\nu _{L^*}+\mu _{L^*} t\). We present a comparison of our proposed cure model with EW lifetime and the cure model with PLA of the susceptible hazard function in Table 13 using the cutaneous melanoma data, where the parameters in both models are estimated by the SEM algorithm.
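Evaluating such a piecewise linear hazard from the cut points and the hazard values at them can be sketched as follows. The function name and the numbers are hypothetical, and clamping the extended last line at zero is an added safeguard that is not taken from the text.

```python
from typing import Sequence


def pla_hazard(t: float, taus: Sequence[float], phis: Sequence[float]) -> float:
    """Continuous piecewise linear hazard through the points (tau_l, phi_l)."""
    if len(taus) != len(phis) or len(taus) < 2:
        raise ValueError("need matching cut points and hazard values, L* >= 1")
    last = len(taus) - 1
    # Piece l satisfies tau_{l-1} <= t <= tau_l; the last line is extended beyond tau_{L*}.
    l = next((j for j in range(1, last + 1) if t <= taus[j]), last)
    mu = (phis[l] - phis[l - 1]) / (taus[l] - taus[l - 1])  # slope mu_l
    nu = phis[l] - mu * taus[l]                             # intercept nu_l
    return max(nu + mu * t, 0.0)  # clamp at zero: an assumption of this sketch


# Hypothetical cut points and hazard values for L* = 3 lines.
taus = [0.0, 1.0, 2.5, 6.0]
phis = [0.10, 0.30, 0.20, 0.05]
values = [pla_hazard(t, taus, phis) for t in (0.5, 1.0, 2.5, 7.0)]
```

At each cut point the function returns the corresponding \(\phi _l\), which is the continuity condition stated above; between cut points it interpolates linearly.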

Results in Table 13 are based on 1000 iterations of the SEM algorithm with a burn-in period of 500 iterations. Larger numbers of iterations and longer burn-in periods are not considered since they do not improve the estimation results and would lead to excessive computation time for the PLA-based models (Table 13). It can be observed that on increasing the number of cut points and lines for the PLA, the maximized log-likelihood value generally increases. However, on comparing the AIC values, we note that the PLA cure model with \(L^*=2\) provides the lowest AIC value among the PLA models. When compared to the proposed EW model, and noting that the EW model reduced to a generalized exponential model, the AIC value for the EW (GE) model turns out to be even smaller. Thus, the proposed mixture cure model with flexible EW lifetime distribution provides a better fit to the melanoma data when compared to the PLA-based semi-parametric mixture cure model. Another advantage of the EW model over the semi-parametric PLA-based model concerns the computation time (system running time in hours). As \(L^*\) increases, the computation time for the PLA model increases almost exponentially (0.4307 h for \(L^*=1\) and 9.3056 h for \(L^*=6\)). For the PLA model (\(L^*=2\)) with minimum AIC, the computation time is almost 27 times higher than that of the EW (GE) model. Moreover, to apply the PLA models, the cut points and corresponding initial hazard estimates should be chosen carefully to get optimum results. These factors provide justification in favor of applying a fully parametric model such as the one proposed in this paper, specifically when the parametric model is reasonably flexible and general.

7 Concluding Remarks

The main contribution of this manuscript is the development of the SEM algorithm in the context of mixture cure rate model when the lifetimes of the susceptible individuals are modeled by the EW family of distributions. While we chose to demonstrate the performance of the SEM algorithm using the wider class of EW distributions, the proposed SEM algorithm can be easily extended to other flexible choices of lifetime distributions; see Pal and Balakrishnan [42] and Wang and Pal [58]. Different approaches of computing the estimates under the SEM framework have been discussed. An extensive Monte Carlo simulation study demonstrates the accuracy of the SEM algorithm in estimating the unknown model parameters. When compared with the well-known EM algorithm, we have shown that the proposed SEM algorithm is more robust to the choice of initial values than the EM algorithm. This can be seen as an advantage of the SEM algorithm over the EM algorithm. A detailed model discrimination study using the likelihood ratio test clearly shows that different sub-distributions of the EW distribution can be easily discriminated. Hence, blindly assuming a distribution for the lifetime is not recommended. Through the real cutaneous melanoma data, we have illustrated the flexibility of the proposed EW distribution. In this regard, we have seen that the assumption of the EW distribution allows formal tests of hypotheses to be performed to select the generalized exponential distribution as the best fitted distribution. In particular, we have seen that all other special cases of the EW distribution get rejected. When compared to the PLA-based semi-parametric mixture cure model, we have seen that our proposed parametric model with EW distribution provides a better fit in terms of smaller AIC value. 
As potential future work, we can consider more complicated cure models, such as the ones that look at the elimination of risk factors after an initial treatment [41, 45, 53], and investigate the performance of the SEM algorithm. We can also think of extending the current framework, as studied in this paper, to accommodate interval censored data, as opposed to the commonly used right censored data [44, 59]. We are currently looking at some of these open problems and we hope to report our findings in future manuscripts.

References

Amico M, Van Keilegom I, Legrand C (2019) The single-index/Cox mixture cure model. Biometrics 75:452–462

Balakrishnan N, Barui S, Milienos F (2017) Proportional hazards under Conway–Maxwell–Poisson cure rate model and associated inference. Stat Methods Med Res 26(5):2055–2077

Balakrishnan N, Barui S, Milienos FS (2022) Piecewise linear approximations of baseline under proportional hazards based COM-Poisson cure models. Commun Stat Simul Comput. https://doi.org/10.1080/03610918.2022.2032157

Balakrishnan N, Koutras M, Milienos F (2016) Piecewise linear approximations for cure rate models and associated inferential issues. Methodol Comput Appl Probab 18(4):937–966

Balakrishnan N, Pal S (2012) EM algorithm-based likelihood estimation for some cure rate models. J Stat Theory Pract 6:698–724

Balakrishnan N, Pal S (2013) Lognormal lifetimes and likelihood-based inference for flexible cure rate models based on COM-Poisson family. Comput Stat Data Anal 67:41–67

Balakrishnan N, Pal S (2014) COM-Poisson cure rate models and associated likelihood-based inference with exponential and Weibull lifetimes. In: Frenkel I, Karagrigoriou A, Lisnianski A, Kleyner A (eds) Applied reliability engineering and risk analysis: probabilistic models and statistical inference. Wiley, Chichester, pp 308–348

Balakrishnan N, Pal S (2015) An EM algorithm for the estimation of parameters of a flexible cure rate model with generalized gamma lifetime and model discrimination using likelihood-and information-based methods. Comput Stat 30:151–189

Balakrishnan N, Pal S (2015) Likelihood inference for flexible cure rate models with gamma lifetimes. Commun Stat Theory Methods 44(19):4007–4048

Balakrishnan N, Pal S (2016) Expectation maximization-based likelihood inference for flexible cure rate models with Weibull lifetimes. Stat Methods Med Res 25:1535–1563

Barui S, Grace YY (2020) Semiparametric methods for survival data with measurement error under additive hazards cure rate models. Lifetime Data Anal 26(3):421–450

Bedair KF, Hong Y, Al-Khalidi HR (2021) Copula-frailty models for recurrent event data based on Monte Carlo EM algorithm. J Stat Comput Simul 91(17):3530–3548

Bedair KF, Hong Y, Li J, Al-Khalidi HR (2016) Multivariate frailty models for multi-type recurrent event data and its application to cancer prevention trial. Comput Stat Data Anal 10(11):61–173

Cariou C, Chehdi K (2008) Unsupervised texture segmentation/classification using 2-d autoregressive modeling and the stochastic expectation-maximization algorithm. Pattern Recogn Lett 29(7):905–917

Celeux G, Chauveau D, Diebolt J (1996) Stochastic versions of the EM algorithm: an experimental study in the mixture case. J Stat Comput Simul 55(4):287–314

Celeux G, Diebolt J (1985) The SEM algorithm: a probabilistic teacher algorithm derived from the EM algorithm for the mixture problem. Comput Stat Q 2:73–82

Celeux G, Diebolt J (1992) A stochastic approximation type EM algorithm for the mixture problem. Stoch Int J Probab Stoch Process 41(1–2):119–134

Chauveau D (1995) A stochastic EM algorithm for mixtures with censored data. J Stat Plan Inference 46(1):1–25

Chen M-H, Ibrahim JG, Sinha D (1999) A new Bayesian model for survival data with a surviving fraction. J Am Stat Assoc 94:909–919

Davies K, Pal S, Siddiqua JA (2021) Stochastic EM algorithm for generalized exponential cure rate model and an empirical study. J Appl Stat 48:2112–2135

Diebolt J, Celeux G (1993) Asymptotic properties of a stochastic EM algorithm for estimating mixing proportions. Stoch Model 9(4):599–613

Diebolt J, Ip EH (1995) A stochastic EM algorithm for approximating the maximum likelihood estimate. https://www.osti.gov/biblio/49148

Dunn PK, Smyth GK (1996) Randomized quantile residuals. J Comput Graph Stat 5:236–244

Fletcher R (2013) Practical methods of optimization. Wiley, New York

Ibrahim JG, Chen M-H, Sinha D (2005) Bayesian survival analysis. Wiley, New York

Khan SA (2018) Exponentiated Weibull regression for time-to-event data. Lifetime Data Anal 24(2):328–354

Kosovalic N, Barui S (2022) A hard EM algorithm for prediction of the cured fraction in survival data. Comput Stat 37:817–835

Kuk AY, Chen C-H (1992) A mixture model combining logistic regression with proportional hazards regression. Biometrika 79:531–541

López-Cheda A, Cao R, Jácome MA, Van Keilegom I (2017) Nonparametric incidence estimation and bootstrap bandwidth selection in mixture cure models. Comput Stat Data Anal 105:144–165

Lu W, Ying Z (2004) On semiparametric transformation cure models. Biometrika 91(2):331–343

Majakwara J, Pal S (2019) On some inferential issues for the destructive COM-Poisson-generalized gamma regression cure rate model. Commun Stat Simul Comput 48(10):3118–3142

Marschner IC (2001) On stochastic versions of the EM algorithm. Biometrika 88(1):281–286

Meeker WQ (1987) Limited failure population life tests: application to integrated circuit reliability. Technometrics 29(1):51–65

Mudholkar GS, Hutson AD (1996) The exponentiated Weibull family: some properties and a flood data application. Commun Stat Theory Methods 25(12):3059–3083

Mudholkar GS, Srivastava DK (1993) Exponentiated Weibull family for analyzing bathtub failure-rate data. IEEE Trans Reliab 42(2):299–302

Musta E, Patilea V, Van Keilegom I (2020) A presmoothing approach for estimation in mixture cure models. arXiv:2008.05338

Nadarajah S, Cordeiro GM, Ortega EM (2013) The exponentiated Weibull distribution: a survey. Stat Pap 54(3):839–877

Nielsen SF (2000) The stochastic EM algorithm: estimation and asymptotic results. Bernoulli 6(3):457–489

Pal M, Ali MM, Woo J (2006) Exponentiated Weibull distribution. Statistica (Bologna) 66(2):139–147

Pal S (2021) A simplified stochastic EM algorithm for cure rate model with negative binomial competing risks: an application to breast cancer data. Stat Med 40:6387–6409

Pal S, Balakrishnan N (2016) Destructive negative binomial cure rate model and EM-based likelihood inference under Weibull lifetime. Stat Probab Lett 116:9–20

Pal S, Balakrishnan N (2017) An EM type estimation procedure for the destructive exponentially weighted Poisson regression cure model under generalized gamma lifetime. J Stat Comput Simul 87(6):1107–1129

Pal S, Balakrishnan N (2017) Expectation maximization algorithm for Box–Cox transformation cure rate model and assessment of model misspecification under Weibull lifetimes. IEEE J Biomed Health Inform 22:926–934

Pal S, Balakrishnan N (2017) Likelihood inference for COM-Poisson cure rate model with interval-censored data and Weibull lifetimes. Stat Methods Med Res 26:2093–2113

Pal S, Balakrishnan N (2017) Likelihood inference for the destructive exponentially weighted Poisson cure rate model with Weibull lifetime and an application to melanoma data. Comput Stat 32:429–449

Pal S, Balakrishnan N (2018) Likelihood inference based on EM algorithm for the destructive length-biased Poisson cure rate model with Weibull lifetime. Commun Stat Simul Comput 47:644–660

Pal S, Majakwara J, Balakrishnan N (2018) An EM algorithm for the destructive COM-Poisson regression cure rate model. Metrika 81(2):143–171

Pal S, Roy S (2020) A new non-linear conjugate gradient algorithm for destructive cure rate model and a simulation study: illustration with negative binomial competing risks. Commun Stat Simul Comput. https://doi.org/10.1080/03610918.2020.1819321

Pal S, Roy S (2021) On the estimation of destructive cure rate model: a new study with exponentially weighted Poisson competing risks. Stat Neerl 75(3):324–342

Patilea V, Van Keilegom I (2020) A general approach for cure models in survival analysis. Ann Stat 48:2323–2346

Peng Y, Dear KB (2000) A nonparametric mixture model for cure rate estimation. Biometrics 56(1):237–243

Peng Y, Yu B (2021) Cure models: methods, applications and implementation. Chapman and Hall/CRC, London

Rodrigues J, de Castro M, Balakrishnan N, Cancho VG (2011) Destructive weighted Poisson cure rate models. Lifetime Data Anal 17:333–346

Rodrigues J, de Castro M, Cancho VG, Balakrishnan N (2009) COM-Poisson cure rate survival models and an application to a Cutaneous Melanoma data. J Stat Plan Inference 139:3605–3611

Sy JP, Taylor JM (2000) Estimation in a Cox proportional hazards cure model. Biometrics 56:227–236

Taylor JMG (1995) Semi-parametric estimation in failure time mixture models. Biometrics 51:899–907

Tsodikov A, Ibrahim J, Yakovlev A (2003) Estimating cure rates from survival data. J Am Stat Assoc 98:1063–1078

Wang P, Pal S (2022) A two-way flexible generalized gamma transformation cure rate model. Stat Med 41(13):2427–2447

Wiangnak P, Pal S (2018) Gamma lifetimes and associated inference for interval-censored cure rate model with COM-Poisson competing cause. Commun Stat Theory Methods 47(6):1491–1509

Yakovlev AY, Tsodikov AD, Asselain B (1996) Stochastic models of tumor latency and their biostatistical applications (1). World Scientific, Singapore

Ye Z, Ng HKT (2014) On analysis of incomplete field failure data. Ann Appl Stat 8(3):1713–1727

Yin G, Ibrahim JG (2005) Cure rate models: a unified approach. Can J Stat 33(4):559–570

Zeng D, Yin G, Ibrahim JG (2006) Semiparametric transformation models for survival data with a cure fraction. J Am Stat Assoc 101:670–684

Acknowledgements

The authors express their thanks to the Guest Editor and three anonymous reviewers for their careful reviews and useful comments and suggestions on an earlier version of this manuscript which led to this improved version.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Appendix: Development of the EM Algorithm

To implement the EM algorithm, we define the complete data likelihood function as:

where \({\varvec{\eta }}=\left( \eta _1, \dots , \eta _n\right) ^{\tiny \mathrm T}\) and \(\pi _0({\varvec{x}}^*_i; {\varvec{\beta }})=\left\{ 1+e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}}\right\} ^{-1}\) is the cure rate. Equivalently, the expression for the complete data log-likelihood function is obtained as:

For the mixture cure rate model, the expression given in (33) takes the following form:
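As a hedged sketch, this form can be recovered from the E-step expression in (35). Since (35) is the conditional expectation of the complete data log-likelihood, and that log-likelihood is linear in the latent indicators \(\eta _i\), replacing each conditional expectation in (35) by \(\eta _i\) should give the expression below. The sets \(\Delta _1\) and \(\Delta _0\) and the count \(n_1\) are used exactly as in (35). The coefficient of \(\eta _i\) in the logistic part is taken to be \({\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}\), which is what \(\pi _0({\varvec{x}}^*_i; {\varvec{\beta }})=\left\{ 1+e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}}\right\} ^{-1}\) implies, because \(\log (1-\pi _0) - \log \pi _0 = {\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}\).

$$\begin{aligned} l_{C}({\varvec{\theta }}; {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}}, {\varvec{\eta }})&= \text {constant}+ n_1 (\log \alpha +\log k - k \log \lambda ) + (k-1)\sum _{i \in \Delta _1} \log t_i \\&\quad - \sum _{i \in \Delta _1} \left( \frac{t_i}{\lambda }\right) ^k + (\alpha -1) \sum _{i \in \Delta _1} \log \left\{ 1 - e^{-(t_i/\lambda )^k}\right\} \\&\quad + \sum _{i \in \Delta _1} {\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}- \sum _{i=1}^n \log \left( 1+ e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}} \right) + \sum _{i \in \Delta _0} \eta _i \, {\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }} \\&\quad + \sum _{i \in \Delta _0} \eta _i \log \left\{ 1- \left[ 1 - e^{-(t_i/\lambda )^k}\right] ^{\alpha } \right\} . \end{aligned}$$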

1.1 Steps Involved in the EM Algorithm

Begin the iterative process with an initial estimate \({\varvec{\theta }}^{(0)}=\left( {\varvec{\beta }}^{(0)}, \alpha ^{(0)}, k^{(0)}, \lambda ^{(0)}\right) ^{\tiny \mathrm T}\) of \({\varvec{\theta }}\). The choice of \({\varvec{\theta }}^{(0)}\) should be justified using background knowledge and some sample real-life data. For \(r=0, 1, 2, \dots \), let \({\varvec{\theta }}^{(r)}\) be the estimate of \({\varvec{\theta }}\) at the r-th step of the iteration. Then, \({\varvec{\theta }}^{(r+1)}\) is obtained using the following steps:

1. E-Step: Find the conditional expectation \(Q\left( {\varvec{\theta }}; {\varvec{\theta }}^{(r)} \right) = E\left\{ l_{C}({\varvec{\theta }}; {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}}, {\varvec{\eta }}) \big \vert \left( {\varvec{\theta }}^{(r)}, {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}} \right) \right\} \), which is given by

$$\begin{aligned} Q\left( {\varvec{\theta }}; {\varvec{\theta }}^{(r)} \right)&= \text {constant}+ n_1 (\log \alpha +\log k - k \log \lambda ) + (k-1)\sum _{i \in \Delta _1} \log t_i \\&\quad - \sum _{i \in \Delta _1} \left( \frac{t_i}{\lambda }\right) ^k + \sum _{i \in \Delta _1} (\alpha -1) \log \left\{ 1 - e^{-(t_i/\lambda )^k}\right\} \\&\quad + \sum _{i \in \Delta _1} {\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}- \sum _{i=1}^n \log \left( 1+ e^{{\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}} \right) \\&\quad + \sum _{i \in \Delta _0} E\left\{ \eta _i \big \vert \left( {\varvec{\theta }}^{(r)}, {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}} \right) \right\} {\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }} \\&\quad + \sum _{i \in \Delta _0} E\left\{ \eta _i \big \vert \left( {\varvec{\theta }}^{(r)}, {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}} \right) \right\} \log \left\{ 1- \left[ 1 - e^{-(t_i/\lambda )^k}\right] ^{\alpha } \right\} , \end{aligned}$$ (35)

where

$$\begin{aligned} E\left\{ \eta _i \big \vert \left( {\varvec{\theta }}^{(r)}, {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}} \right) \right\}&= P\left\{ \eta _i = 1\big \vert \left( {\varvec{\theta }}^{(r)}, {\varvec{t}}, {\varvec{\delta }}, {\varvec{X}} \right) \right\} \\&=P\left\{ \eta _i = 1\big \vert \left( {\varvec{\theta }}^{(r)}, Y_i>t_i, {\varvec{x}}^*_i, i \in \Delta _0 \right) \right\} \\&=\frac{P\left\{ Y_i>t_i\big \vert \left( \eta _i=1, {\varvec{\theta }}^{(r)}, {\varvec{x}}^*_i, i \in \Delta _0 \right) \right\} P\left\{ \eta _i=1 \big \vert \left( {\varvec{\theta }}^{(r)}, {\varvec{x}}^*_i, i \in \Delta _0\right) \right\} }{P\left\{ Y_i>t_i\big \vert \left( {\varvec{\theta }}^{(r)}, {\varvec{x}}^*_i, i \in \Delta _0 \right) \right\} } \\&=\frac{S_p\left( t_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) - \pi _0\left( {\varvec{x}}^*_i; {\varvec{\beta }}^{(r)}\right) }{S_p\left( t_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) } \\&=1- \frac{ \pi _0\left( {\varvec{x}}^*_i; {\varvec{\beta }}^{(r)}\right) }{S_p\left( t_i; {\varvec{\theta }}^{(r)}, \delta _i, {\varvec{x}}^*_i\right) }. \end{aligned}$$ (36)

2. M-Step: Find

$$\begin{aligned} {\varvec{\theta }}^{(r+1)}=\left( {\varvec{\beta }}^{(r+1)}, \alpha ^{(r+1)}, k^{(r+1)}, \lambda ^{(r+1)}\right) ^{\tiny \mathrm T} = \underset{{\varvec{\theta }}}{{\arg \max }} \text { }Q\left( {\varvec{\theta }}; {\varvec{\theta }}^{(r)} \right) . \end{aligned}$$ (37)

The maximization step can be carried out using multidimensional unconstrained optimization methods such as the Nelder–Mead simplex search algorithm or quasi-Newton methods such as the BFGS algorithm. These algorithms are available in the statistical software R (version 4.0.3) through the general-purpose optimization routine optimr().

3. Convergence: Check whether the stopping (convergence) criterion for the iterative process is met. For our analysis, we consider that the EM algorithm has converged to a local maximum if

$$\begin{aligned} \underset{1 \le k' \le d+4}{{\max }}\text { }\left| \frac{\theta ^{(r+1)}_{k'}- \theta ^{(r)}_{k'}}{\theta ^{(r)}_{k'}} \right| < \epsilon , \end{aligned}$$ (38)

where \(\theta ^{(r)}_{k'}\) and \(\theta ^{(r+1)}_{k'}\) are the \(k'\)-th components of \({\varvec{\theta }}^{(r)}\) and \({\varvec{\theta }}^{(r+1)}\), respectively, and \(\epsilon \) is a tolerance such as 0.001.

If the condition in (38) is satisfied, then the iterative process is stopped and \({\varvec{\theta }}^{(r)}\) is considered as the ML estimate of \({\varvec{\theta }}\).
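The three steps above can be sketched in code. The following is a minimal illustration in Python, not the authors' R implementation: all function names are hypothetical, each covariate row is assumed to carry a leading 1 for the intercept, and \({\varvec{\theta }}\) is assumed to be stored as a flat list \((\beta _0, \dots , \beta _d, \alpha , k, \lambda )\). The weight attached to censored observations in the logistic part is taken as \({\varvec{x}}_i^{\tiny \mathrm T} {\varvec{\beta }}\), which is what the definition of \(\pi _0\) implies. The M-step uses SciPy's `scipy.optimize.minimize` with `method="Nelder-Mead"` as a stand-in for the R optimizer, imported lazily so that the E-step helpers run without SciPy.

```python
import math


def cure_prob(x, beta):
    """Cure rate pi_0 = 1 / (1 + exp(x' beta)) for one covariate row x."""
    return 1.0 / (1.0 + math.exp(sum(xj * bj for xj, bj in zip(x, beta))))


def ew_survival(t, alpha, k, lam):
    """EW survival function 1 - [1 - exp(-(t/lam)^k)]^alpha."""
    return 1.0 - (-math.expm1(-((t / lam) ** k))) ** alpha


def e_step(theta, t, delta, X):
    """Weights E{eta_i | ...} from (36): 1 - pi_0/S_p if censored, 1 if observed."""
    *beta, alpha, k, lam = theta
    weights = []
    for ti, di, xi in zip(t, delta, X):
        if di == 1:
            weights.append(1.0)  # an observed event implies a susceptible subject
            continue
        pi0 = cure_prob(xi, beta)
        s_pop = pi0 + (1.0 - pi0) * ew_survival(ti, alpha, k, lam)
        weights.append(1.0 - pi0 / s_pop)
    return weights


def q_function(theta, w, t, delta, X):
    """Q(theta; theta_r) as in (35), up to the additive constant."""
    *beta, alpha, k, lam = theta
    if min(alpha, k, lam) <= 0:
        return -math.inf  # outside the parameter space
    q = 0.0
    for wi, ti, di, xi in zip(w, t, delta, X):
        lin = sum(xj * bj for xj, bj in zip(xi, beta))
        z = (ti / lam) ** k
        q -= math.log1p(math.exp(lin))
        if di == 1:
            q += (math.log(alpha) + math.log(k) - k * math.log(lam)
                  + (k - 1) * math.log(ti) - z
                  + (alpha - 1) * math.log(-math.expm1(-z)) + lin)
        else:
            q += wi * (lin + math.log(ew_survival(ti, alpha, k, lam)))
    return q


def converged(new, old, eps=1e-3):
    """Criterion (38); assumes no component of `old` is exactly zero."""
    return max(abs((a - b) / b) for a, b in zip(new, old)) < eps


def em(theta0, t, delta, X, eps=1e-3, max_iter=500):
    """Iterate E- and M-steps until (38) holds or max_iter is reached."""
    from scipy.optimize import minimize  # lazy import; only the M-step needs it

    theta = list(theta0)
    for _ in range(max_iter):
        w = e_step(theta, t, delta, X)

        def neg_q(th):
            try:
                return -q_function(th, w, t, delta, X)
            except (ValueError, OverflowError):
                return math.inf  # treat numerically invalid points as infeasible

        new = list(minimize(neg_q, theta, method="Nelder-Mead").x)
        if converged(new, theta, eps):
            return new  # the two iterates agree to within eps
        theta = new
    return theta
```

A call such as `em(theta0, t, delta, X)` would take lists of observed times, censoring indicators (1 for an event, 0 for censored), and covariate rows. Returning infinity for non-positive \(\alpha \), \(k\), or \(\lambda \) is one simple way to keep an unconstrained search inside the parameter space; optimizing on the log scale would be an alternative design.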

Cite this article

Pal, S., Barui, S., Davies, K. et al. A Stochastic Version of the EM Algorithm for Mixture Cure Model with Exponentiated Weibull Family of Lifetimes. J Stat Theory Pract 16, 48 (2022). https://doi.org/10.1007/s42519-022-00274-8

DOI: https://doi.org/10.1007/s42519-022-00274-8