Abstract

In this paper, we discuss the estimation of the unknown parameters, together with the survival and hazard functions, of a three-parameter Burr-XII distribution based on unified hybrid censored data. Maximum likelihood and Bayesian methods are used to obtain the estimates. The Fisher information matrix is used to construct approximate confidence intervals. The Bayes estimates of the unknown parameters are obtained by the Markov chain Monte Carlo (MCMC) method, and credible intervals are constructed from the MCMC samples. Finally, we analyze a real data set to illustrate the proposed methods.


1 Introduction

The Burr-XII distribution was originally introduced by Burr [5] and has been fitted to a wide range of observational data in areas such as meteorology, finance and hydrology [7]. For more details about applications of the Burr-XII distribution, see Ali Mousa and Jaheen [1] and Burr [5]. Shao [20] extended the three-parameter Burr-XII distribution (TPBXIID) and studied its maximum likelihood estimation, and Shao et al. [21] studied models for extremes based on the TPBXIID with application to flood frequency analysis. Wu et al. [24] studied estimation problems for the Burr-XII distribution based on progressive type-II censoring with random removals. Silva et al. [22] proposed a location-scale regression model based on the Burr-XII distribution. Paranaiba et al. [19] proposed the beta Burr-XII distribution, and Paranaiba et al. [18] discussed the Kumaraswamy Burr-XII distribution. Mead [15] presented the beta exponentiated Burr-XII distribution. Al-Saiari et al. [2] studied the Marshall–Olkin extended Burr-XII distribution.

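Recently, Gomes et al. [13] discussed the theory and practice of two extended Burr models (the McDonald Burr-XII), and Mead and Afify [16] studied the properties and applications of a five-parameter Burr-XII distribution, called the Kumaraswamy exponentiated Burr-XII distribution. The TPBXIID has the following cumulative distribution function (CDF)

$$ F(x;\alpha ,\theta ,\gamma )=1-\left[1+\left(\frac{x}{\alpha }\right)^\theta \right]^{-\gamma }, \qquad x>0,\ \alpha ,\theta ,\gamma >0, \tag{1.1} $$

and probability density function (PDF)

$$ f(x;\alpha ,\theta ,\gamma )=\theta \gamma \alpha ^{-\theta }x^{\theta -1}\left[1+\left(\frac{x}{\alpha }\right)^\theta \right]^{-(\gamma +1)}, \qquad x>0. \tag{1.2} $$

The survival function S(t) is given by

$$ S(t)=\left[1+\left(\frac{t}{\alpha }\right)^\theta \right]^{-\gamma }, \qquad t>0, \tag{1.3} $$

and the hazard function h(t) is given by

$$ h(t)=\theta \gamma \alpha ^{-\theta }t^{\theta -1}\left[1+\left(\frac{t}{\alpha }\right)^\theta \right]^{-1}, \qquad t>0. \tag{1.4} $$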

Here, \(\gamma \) and \( \theta \) are the shape parameters and \(\alpha \) is a scale parameter. It is important to note that when \( \theta =1 \), the TPBXIID reduces to the Lomax distribution and its density function is L-shaped, whereas when \( \theta >1 \), the density function is upside-down bathtub shaped (unimodal) with mode at \( x=\alpha \left[(\theta -1) / (\theta \gamma +1) \right]^{\frac{1}{\theta }} \).
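The four functions of the TPBXIID and the mode formula can be checked numerically with a short sketch. The Python code below is only an illustration: it uses the forms of S(t) and h(t) that appear in the MCMC algorithm of Sect. 4.1, and the function names (`sf`, `cdf`, `hazard`, `pdf`, `mode`) are our own choices rather than anything defined in the paper. The helper `_log1p_pow` evaluates \(\ln [1+(x/\alpha )^\theta ]\) on the log scale, which we assume is useful because \(\theta \) can be fairly large.

```python
import math

def _log1p_pow(x, alpha, theta):
    """Numerically stable ln[1 + (x/alpha)**theta] for x, alpha, theta > 0."""
    z = theta * math.log(x / alpha)
    return z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))

def sf(t, alpha, theta, gamma):
    """Survival function S(t) = [1 + (t/alpha)^theta]^(-gamma)."""
    return math.exp(-gamma * _log1p_pow(t, alpha, theta))

def cdf(x, alpha, theta, gamma):
    """CDF F(x) = 1 - S(x)."""
    return 1.0 - sf(x, alpha, theta, gamma)

def hazard(t, alpha, theta, gamma):
    """Hazard h(t) = theta*gamma*alpha^(-theta)*t^(theta-1) / [1 + (t/alpha)^theta]."""
    log_h = (math.log(theta * gamma) - theta * math.log(alpha)
             + (theta - 1.0) * math.log(t) - _log1p_pow(t, alpha, theta))
    return math.exp(log_h)

def pdf(x, alpha, theta, gamma):
    """Density obtained from the identity f(x) = h(x) * S(x)."""
    return hazard(x, alpha, theta, gamma) * sf(x, alpha, theta, gamma)

def mode(alpha, theta, gamma):
    """Mode alpha*[(theta-1)/(theta*gamma+1)]^(1/theta), defined for theta > 1."""
    if theta <= 1:
        raise ValueError("an interior mode exists only for theta > 1")
    return alpha * ((theta - 1.0) / (theta * gamma + 1.0)) ** (1.0 / theta)
```

For instance, `pdf(mode(2.0, 3.0, 1.5), 2.0, 3.0, 1.5)` should be at least as large as the density evaluated slightly to either side of the mode.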

The unified hybrid censoring scheme (UHCS) was introduced by Balakrishnan et al. [3] as a mixture of the generalized type-I and type-II hybrid censoring schemes (HCS), and it can be described as follows. Fix \(r, k \in \{ 1, \ldots , n\} \) with \( k< r < n \) and \( T_1, T_2 \in (0, \infty )\) with \(T_2 > T_1 \). If the kth failure occurs before time \(T_1\), the experiment is terminated at min{max\( \{X_{r:n}, T_1\}, T_2 \)}; if the kth failure occurs between \( T_1 \) and \( T_2 \), the experiment is terminated at min{\( X_{r:n}, T_2 \)}; and if the kth failure occurs after time \( T_2 \), the experiment is terminated at \( X_{k:n} \). Under this censoring scheme, the test is guaranteed to finish by time \( T_2 \) with at least k failures; otherwise, it finishes with exactly k failures. Accordingly, the UHCS gives rise to the following six cases:

(1) \(0< x_{k:n}< x_{r:n}< T_1 < T_2\),

(2) \( 0< x_{k:n}< T_1< x_{r:n} < T_2 \),

(3) \( 0< x_{k:n}< T_1< T_2 < x_{r:n} \),

(4) \( 0< T_1< x_{k:n}< x_{r:n} < T_2 \),

(5) \( 0< T_1< x_{k:n}< T_2 < x_{r:n} \),

(6) \( 0< T_1< T_2< x_{k:n} < x_{r:n} \).

In these six cases, the test ends at \( T_1 \), \( x_{r:n} \), \( T_2 \), \(x_{r:n} \), \( T_2 \) and \(x_{k:n}\), respectively. Several papers have dealt with the UHCS; see, among others, Ghazal and Hasaballah [10,11,12].
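The stopping rule and the six cases can be made concrete with a small sketch. The Python function below is a hypothetical helper (the name `uhcs_stop` and its return convention are ours): given all n lifetimes, it applies the three termination rules stated above and returns the stopping time C, the number of observed failures R, and the case number. In a real experiment only the failures up to C would be observed; the full sample is passed here purely for illustration.

```python
def uhcs_stop(x, k, r, T1, T2):
    """Return (C, R, case) for a unified hybrid censored life test.

    x      : all n lifetimes (illustration only; in practice only x <= C is seen)
    k, r   : integers with 1 <= k < r <= n
    T1, T2 : time thresholds with 0 < T1 < T2
    """
    xs = sorted(x)
    if not (1 <= k < r <= len(xs)) or not (0 < T1 < T2):
        raise ValueError("need 1 <= k < r <= n and 0 < T1 < T2")
    xk, xr = xs[k - 1], xs[r - 1]
    if xk < T1:                      # kth failure before T1: cases 1-3
        C = min(max(xr, T1), T2)
        case = 1 if xr < T1 else (2 if xr < T2 else 3)
    elif xk < T2:                    # kth failure between T1 and T2: cases 4-5
        C = min(xr, T2)
        case = 4 if xr < T2 else 5
    else:                            # kth failure after T2: case 6
        C, case = xk, 6
    R = sum(1 for v in xs if v <= C)  # failures observed up to the stopping time
    return C, R, case
```

With the toy lifetimes 1, 2, ..., 10, the call `uhcs_stop(range(1, 11), 3, 5, 6.5, 8)` falls in case (1) and stops at \(T_1 = 6.5\) with six observed failures.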

The rest of this paper is organized as follows. In Sect. 2, the maximum likelihood estimators (MLEs) of the unknown parameters of the TPBXIID, as well as of S(t) and h(t), are presented. Section 3 deals with approximate confidence intervals (ACIs) based on the MLEs. In Sect. 4, MCMC techniques are used to obtain the Bayes estimates under three different loss functions and to construct credible intervals (CRIs) for the TPBXIID. A real data set is analyzed in Sect. 5 to illustrate our techniques. Monte Carlo simulation results are presented in Sect. 6. Finally, conclusions appear in Sect. 7.

2 Maximum Likelihood Estimation

We derive the MLEs of the TPBXIID parameters when \(\alpha , \theta \) and \(\gamma \) are all unknown. Let (\(x_1, \ldots , x_n\)) be a random sample of size n from the TPBXIID. Then, the likelihood function for the six cases of the UHCS is given by:

where R denotes the total number of failures in the test up to time C (the stopping time), and \( d_1 \) and \( d_2 \) denote the numbers of failures that occur before the time points \( T_1 \) and \( T_2 \), respectively. From (1.1), (1.2) and (2.1), we have

where \(K=\frac{n!}{(n-R)!}\).

The log-likelihood function for the TPBXIID corresponding to Eq. (2.2) is

Taking the first derivatives of Eq. (2.3) with respect to \( \alpha , \theta \) and \(\gamma \) and setting each of them equal to zero, we obtain

and

From (2.6), we obtain the MLE \( {\hat{\gamma }} \) as

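Because Eqs. (2.4) and (2.5) cannot be solved in closed form, the Newton–Raphson iteration method is used to obtain the estimates. For more details, see EL-Sagheer [9]. Moreover, the MLEs of S(t) and h(t) are obtained by replacing \( \alpha , \theta \) and \( \gamma \) by their MLEs \({\hat{\alpha }},{\hat{\theta }}\) and \({\hat{\gamma }}\) as follows:

$$ {\hat{S}}(t)=\left[1+\left(\frac{t}{{\hat{\alpha }}}\right)^{{\hat{\theta }}}\right]^{-{\hat{\gamma }}}, \qquad t>0, \tag{2.8} $$

and

$$ {\hat{h}}(t)={\hat{\theta }}{\hat{\gamma }}{\hat{\alpha }}^{-{\hat{\theta }}}t^{{\hat{\theta }}-1}\left[1+\left(\frac{t}{{\hat{\alpha }}}\right)^{{\hat{\theta }}}\right]^{-1}, \qquad t>0. \tag{2.9} $$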
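As a rough illustration of the estimation step, the sketch below codes a log-likelihood and the value of \(\gamma \) that maximizes it for fixed \(\alpha \) and \(\theta \). It is not a transcription of Eqs. (2.1)–(2.7): it assumes the usual censored-sample form \(L \propto \prod _{i=1}^{R} f(x_i)\,[S(C)]^{n-R}\) (dropping the constant K), and the function names are hypothetical. Under that assumption the score equation for \(\gamma \) has a closed-form root, so a numerical search is needed only over \(\alpha \) and \(\theta \).

```python
import math

def log1p_pow(x, alpha, theta):
    """Numerically stable ln[1 + (x/alpha)**theta]."""
    z = theta * math.log(x / alpha)
    return z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))

def loglik(alpha, theta, gamma, x_obs, C, n):
    """Log-likelihood (up to a constant) under the ASSUMED form
    prod f(x_i) * S(C)^(n-R), with x_obs the R observed failures."""
    R = len(x_obs)
    s = sum(log1p_pow(x, alpha, theta) for x in x_obs)
    return (R * math.log(theta * gamma) - R * theta * math.log(alpha)
            + (theta - 1.0) * sum(math.log(x) for x in x_obs)
            - (gamma + 1.0) * s
            - gamma * (n - R) * log1p_pow(C, alpha, theta))

def gamma_hat(alpha, theta, x_obs, C, n):
    """Maximizer of loglik in gamma for fixed alpha and theta
    (root of the gamma score equation under the assumed likelihood)."""
    R = len(x_obs)
    denom = (sum(log1p_pow(x, alpha, theta) for x in x_obs)
             + (n - R) * log1p_pow(C, alpha, theta))
    return R / denom
```

Substituting `gamma_hat` back into `loglik` gives a two-parameter profile function of \(\alpha \) and \(\theta \), which could then be handed to Newton–Raphson or any general-purpose optimizer.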

3 Approximate Confidence Interval

The asymptotic variance–covariance matrix of the MLEs of the parameters \(\alpha , \theta \) and \( \gamma \) is given by the inverse of the Fisher information matrix, whose elements are the negative expected second partial derivatives of the log-likelihood function. The Fisher information matrix is defined as:

However, exact closed-form expressions for the above expectations are very hard to obtain. Hence, the asymptotic variance–covariance matrix is approximated as follows:

with

Then, \((1-\eta )100\%\) CIs for parameters \(\alpha , \theta \) and \( \gamma \) are, respectively, given as

where \(Z_{\eta /2}\) is the upper \(\eta /2\) percentile of the standard normal distribution. Moreover, to construct the ACIs of S(t) and h(t), which are functions of the parameters \(\alpha , \theta \) and \( \gamma \), we need their variances. To approximate the variances of \({{\hat{S}}}(t)\) and \({{\hat{h}}}(t) \), we use the delta method, which is a general approach for computing ACIs for functions of ML estimates; see Greene [14]. By this method, the variances of \({{\hat{S}}}(t)\) and \({{\hat{h}}}(t) \) are, respectively, given by \( {\hat{\sigma }}_{S(t)}^2 = \left[\nabla {{\hat{S}}}(t)\right]^T\left[ {{\hat{V}}}\right] \left[\nabla {{\hat{S}}}(t) \right]\) and \( {\hat{\sigma }}_{h(t)}^2 = \left[\nabla {{\hat{h}}}(t)\right]^T\left[ {{\hat{V}}}\right] \left[\nabla {{\hat{h}}}(t) \right]\), where \(\nabla {{\hat{S}}}(t)\) and \(\nabla {{\hat{h}}}(t) \) are the gradients of \({{\hat{S}}}(t)\) and \({{\hat{h}}}(t)\), respectively, with respect to \(\alpha ,\theta \) and \( \gamma \), evaluated at the MLEs, and \({{\hat{V}}} = I^{-1}({\hat{\alpha }}, {\hat{\theta }}, {\hat{\gamma }})\).

Thus, the \( (1-\eta )100\%\) ACIs for S(t) and h(t) are obtained as

\(\left({{\hat{S}}}(t)\pm Z_{\eta /2} \sqrt{{\hat{\sigma }}_{S(t)}^2}\right)\) and \(\left({{\hat{h}}}(t)\pm Z_{\eta /2} \sqrt{{\hat{\sigma }}_{h(t)}^2}\right)\).
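The delta-method interval can be sketched in a few lines. The Python code below is an illustration only: it approximates the gradient by central differences instead of analytic derivatives (our simplification, not the paper's approach), takes the estimated variance–covariance matrix `V` as a given input, and uses hypothetical function names. The default `z = 1.959964` corresponds to a 95% interval.

```python
import math

def surv(t, alpha, theta, gamma):
    """S(t) = [1 + (t/alpha)^theta]^(-gamma)."""
    return (1.0 + (t / alpha) ** theta) ** (-gamma)

def grad(f, params, rel_step=1e-6):
    """Central-difference gradient of f at params (a list of floats)."""
    out = []
    for i, p in enumerate(params):
        h = rel_step * max(abs(p), 1.0)
        up = list(params); up[i] = p + h
        dn = list(params); dn[i] = p - h
        out.append((f(*up) - f(*dn)) / (2.0 * h))
    return out

def delta_ci(f, mle, V, z=1.959964):
    """Delta-method interval f(mle) +/- z*sqrt(g' V g); returns (lower, upper, variance)."""
    g = grad(f, mle)
    d = len(mle)
    var = sum(g[i] * V[i][j] * g[j] for i in range(d) for j in range(d))
    est = f(*mle)
    half = z * math.sqrt(var)
    return est - half, est + half, var
```

A call such as `delta_ci(lambda a, th, g: surv(5.0, a, th, g), mle, V)` would give the ACI of S(5) once `mle` and `V` are available. Note that such a normal-approximation interval is not guaranteed to stay inside [0, 1].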

4 Bayes Estimation

In this section, we obtain the Bayes estimates of the unknown parameters of the TPBXIID under the squared error (SE), linear exponential (LINEX) and general entropy (GE) loss functions. It is assumed that the parameters \(\alpha , \theta \) and \( \gamma \) are independent and follow gamma prior distributions as

Here, all the hyperparameters \( a_1, a_2, a_3, b_1, b_2, \) and \( b_3 \) are assumed to be known and nonnegative.

The joint prior distribution for \(\alpha , \theta \), and \(\gamma \) is

From (2.2) and (4.1) the joint posterior density function can be written as follows:

Clearly, (4.2) cannot be evaluated analytically, because closed forms for the posterior distributions of the individual parameters are very hard to obtain. We therefore propose using the MCMC method to approximate (4.2) and to compute the Bayes estimates under the SE, LINEX and GE loss functions.

4.1 MCMC Method

The MCMC method is used to obtain the Bayes estimates of \(\alpha \), \(\theta , \gamma \), S(t) and h(t), and to construct the CRIs from the generated posterior samples. Several papers have dealt with the MCMC technique, such as Chen and Shao [8], EL-Sagheer [9] and Ghazal and Hasaballah [10,11,12]. From (4.2), the conditional posterior density functions of each parameter given the other two can be written as

and

Therefore, the full conditional posterior density function of \(\gamma \) given \(\alpha \) and \( \theta \) is a gamma density with shape parameter \( (a_3+R )\) and rate (inverse scale) parameter \( \left\{b_3+\sum _{i=1}^{R}{\ln \left[ 1+\left(\frac{x_i}{\alpha }\right)^\theta \right]}\right\} \), so samples of \(\gamma \) can be easily generated using any gamma-generating routine. Furthermore, the posterior density function of \(\alpha \) given \(\theta \) and \( \gamma \) in (4.3) and the posterior density function of \(\theta \) given \(\alpha \) and \( \gamma \) in (4.4) cannot be reduced analytically to well-known distributions, so direct sampling by standard methods is not possible. However, plots of both posterior distributions show that they are similar to the normal distribution; see Figs. 1 and 2. To generate random numbers from these two distributions, we therefore propose using the Metropolis–Hastings algorithm with a normal proposal distribution; see Metropolis et al. [17]. We now apply the following MCMC algorithm to draw samples from the posterior density (4.2) and, in turn, to obtain the Bayes estimates of the parameters \(\alpha , \theta \) and \(\gamma \) and of any function of them, such as S(t) and h(t), together with the corresponding CRIs.

Metropolis–Hastings algorithm:

1. Choose initial values of \(\alpha , \theta \) and \(\gamma \), say \(\alpha ^{(0)}, \theta ^{(0)} \) and \(\gamma ^{(0)}\), respectively, and let M denote the burn-in length.

2. Set \(j = 1\).

3. Generate \(\gamma ^{(j)}\) from Gamma \( \left( a_3+R, b_3+\sum _{i=1}^{R}{\ln \left[ 1+\left(\frac{x_i}{\alpha ^{(j-1)}}\right)^{\theta ^{(j-1)}} \right]} \right) \).

4. Using Metropolis–Hastings, generate \(\alpha ^{(j)}\) and \(\theta ^{(j)}\) from \(\pi ^*_1(\alpha |\theta , \gamma ,{\underline{x}})\) and \(\pi ^*_2(\theta |\alpha , \gamma ,{\underline{x}})\) with the normal proposal distributions \(N(\alpha ^{(j-1)},var(\alpha ))\) and \(N(\theta ^{(j-1)},var(\theta ))\), where \( var(\alpha )\) and \( var(\theta ) \) are obtained from the variance–covariance matrix, and where \(\alpha ^*\) and \(\theta ^*\) denote the proposed values:

(i) Compute the acceptance probabilities

$$ r_1= {\text {min}} \left[1, \frac{{\pi }^*_1(\alpha ^*|\theta ^{(j-1)},\gamma ^{(j)},{\underline{x}})}{{\pi }^*_1(\alpha ^{(j-1)}|\theta ^{(j-1)},\gamma ^{(j)},{\underline{x}})} \right], $$

$$ r_2= {\text {min}} \left[1, \frac{{\pi }^*_2(\theta ^*|\alpha ^{(j)},\gamma ^{(j)},{\underline{x}})}{ {\pi }^*_2(\theta ^{(j-1)}|\alpha ^{(j)},\gamma ^{(j)},{\underline{x}})} \right]. $$

(ii) Generate \( u_1 \) and \( u_2 \) from a uniform (0, 1) distribution.

(iii) If \( u_1\le r_1 \), accept the proposal and set \( \alpha ^{(j)}=\alpha ^* \); otherwise, set \(\alpha ^{(j)} = \alpha ^{(j-1)}\).

(iv) If \( u_2\le r_2 \), accept the proposal and set \( \theta ^{(j)}=\theta ^* \); otherwise, set \(\theta ^{(j)} = \theta ^{(j-1)}\).

5. Calculate S(t) and h(t) as

$$ S^{(j)}(t)=\left[1+\left(\frac{t}{\alpha ^{(j)}}\right)^{\theta ^{(j)}}\right]^{-\gamma ^{(j)}}, \qquad t>0, $$

$$ h^{(j)}(t)=\theta ^{(j)}\gamma ^{(j)} [\alpha ^{(j)}]^{-\theta ^{(j)}}t^{\theta ^{(j)}-1}\left[1+\left(\frac{t}{\alpha ^{(j)}}\right)^{\theta ^{(j)}}\right]^{-1},\qquad t>0. $$

6. Set \( j = j + 1\).

7. Repeat Steps 3–6 N times and obtain \(\alpha ^{(j)},\theta ^{(j)}, \gamma ^{(j)}, S^{(j)}(t)\) and \( h^{(j)}(t) , j= M+1,\ldots , N \).

8. To calculate the CRIs of \(\alpha , \theta , \gamma , S(t)\) and h(t), order \(\alpha ^{(j)}, \theta ^{(j)},\gamma ^{(j)}, S^{(j)}(t)\) and \( h^{(j)}(t) , j= M+1,\ldots , N \), as \(\left(\alpha ^{(1)}< \cdots < \alpha ^{(N - M)}\right), \left(\theta ^{(1)}< \cdots <\theta ^{(N - M)}\right), \left(\gamma ^{(1)}< \cdots <\gamma ^{(N - M)}\right), \left( S^{(1)}(t)< \cdots < S^{(N - M)}(t)\right)\) and \(\left( h^{(1)}(t)< \cdots < h^{(N - M)}(t)\right)\). Then, the \(100(1-\eta )\%\) CRIs of \(\zeta =[ \alpha , \theta ,\gamma , S(t),h(t)]\) are \(\left(\zeta ^{((N- M)\eta /2)}, \zeta ^{((N- M)(1-\eta /2))}\right)\), that is, the ordered values at positions \((N-M)\eta /2\) and \((N-M)(1-\eta /2)\).

The Bayes estimates of \(\zeta =[ \alpha , \theta ,\gamma , S(t),h(t)]\) under the SE loss function are obtained by

$$ {\hat{\zeta }}_{BS}=E[\zeta |{\underline{x}}]=\frac{1}{N-M}\sum _{i=M+1}^N\zeta ^{(i)}, \tag{4.6} $$

the Bayes estimates of \(\zeta \) under the LINEX loss function are obtained by

$$ {\hat{\zeta }}_{BL}= -\frac{1}{a} \ln \left[\frac{1}{N-M}\sum _{i=M+1}^N e^{-a\zeta ^{(i)}} \right], \tag{4.7} $$

and the Bayes estimates of \(\zeta \) under the GE loss function are obtained by

$$ {\hat{\zeta }}_{BG}= \left[\frac{1}{N-M}\sum _{i=M+1}^N [\zeta ^{(i)}]^{-a} \right]^{\frac{-1}{a}}, \tag{4.8} $$

where \(a \ne 0\) is the parameter of the corresponding loss function.

For more details about Eqs. (4.6), (4.7) and (4.8), see Basu and Ebrahimi [4], Varian [23], Zellner [25] and Calabria and Pulcini [6].
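The algorithm above can be sketched as a Metropolis-within-Gibbs loop. The Python code below is a simplified illustration under stated assumptions, not the authors' implementation: it treats the uncensored case R = n (so the likelihood is just the product of the densities), assumes gamma priors of the form \(\pi (\phi ) \propto \phi ^{a-1}e^{-b\phi }\), uses the joint log-posterior as the target for each Metropolis–Hastings step (it is proportional to the corresponding full conditional), and takes the proposal standard deviations `sd` as user-supplied tuning values. All function names are hypothetical.

```python
import math
import random

def log1p_pow(x, alpha, theta):
    """Numerically stable ln[1 + (x/alpha)**theta]."""
    z = theta * math.log(x / alpha)
    return z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))

def log_target(alpha, theta, gamma, x, prior):
    """Log joint posterior up to a constant (ASSUMES R = n and gamma priors)."""
    if alpha <= 0 or theta <= 0:
        return -math.inf                      # outside the parameter space
    (a1, b1), (a2, b2) = prior["alpha"], prior["theta"]
    n = len(x)
    s = sum(log1p_pow(v, alpha, theta) for v in x)
    return ((a1 - 1) * math.log(alpha) - b1 * alpha
            + (a2 - 1) * math.log(theta) - b2 * theta
            + n * math.log(theta) - n * theta * math.log(alpha)
            + (theta - 1) * sum(math.log(v) for v in x)
            - (gamma + 1) * s)

def mh_step(cur, logp, sd, rng):
    """One random-walk Metropolis step with a normal proposal centred at cur."""
    prop = rng.gauss(cur, sd)
    u = 1.0 - rng.random()                    # u in (0, 1], so log(u) is finite
    return prop if math.log(u) <= logp(prop) - logp(cur) else cur

def sampler(x, prior, init, sd, N, M, seed=0):
    """Return the N - M post-burn-in draws of (alpha, theta, gamma)."""
    rng = random.Random(seed)
    alpha, theta, gamma = init
    a3, b3 = prior["gamma"]
    draws = []
    for j in range(N):
        # Step 3: gamma | alpha, theta is Gamma(shape a3 + R, rate b3 + sum ln[...])
        rate = b3 + sum(log1p_pow(v, alpha, theta) for v in x)
        gamma = rng.gammavariate(a3 + len(x), 1.0 / rate)  # gammavariate takes a scale
        # Step 4: Metropolis-Hastings updates of alpha, then theta
        alpha = mh_step(alpha, lambda a: log_target(a, theta, gamma, x, prior), sd[0], rng)
        theta = mh_step(theta, lambda t: log_target(alpha, t, gamma, x, prior), sd[1], rng)
        if j >= M:
            draws.append((alpha, theta, gamma))
    return draws
```

S(t) and h(t) can then be evaluated at each retained draw, as in Step 5. Working on the log scale is a design choice we assume is sensible here because the posterior ratios in \(r_1\) and \(r_2\) can under- or overflow for moderate n.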

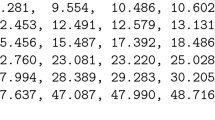

5 Real Life Data

We analyze a real data set produced by the National Climatic Data Center (NCDC) in Asheville, USA. The data are the daily average wind speeds, measured in knots, for Cairo city over 100 days, taken from June 1, 2017 to September 28, 2017, as follows:

8.8 | 8.2 | 9.5 | 10.1 | 8.8 | 8.0 | 8.3 | 8.5 | 7.9 | 8.3 | 6.9 | 5.7 | 5.9 | 7.7 | 9.1 |

7.8 | 8.9 | 8.3 | 7.2 | 10.7 | 9.5 | 8.2 | 6.5 | 7.1 | 7.2 | 7.6 | 8.9 | 13.5 | 10.8 | 8.5 |

6.9 | 7.4 | 8.1 | 7.5 | 7.2 | 8.0 | 7.5 | 9.7 | 9.9 | 7.9 | 8.8 | 6.8 | 7.4 | 8.1 | 7.9 |

6.6 | 7.1 | 9.9 | 8.7 | 7.4 | 6.6 | 6.8 | 7.3 | 6.4 | 7.6 | 6.8 | 7.5 | 7.6 | 7.4 | 11.6 |

8.1 | 9.8 | 8.5 | 9.4 | 10.0 | 9.2 | 8.5 | 8.2 | 7.4 | 7.0 | 6.4 | 7.5 | 9.1 | 10.7 | 7.3 |

6.9 | 8.3 | 8.6 | 8.4 | 10.8 | 10.5 | 8.9 | 12.1 | 11.1 | 9.8 | 10.1 | 7.5 | 7.1 | 5.1 | 6.4 |

5.4 | 5.2 | 8.0 | 10.9 | 10.8 | 9.4 | 9.0 | 9.4 | 8.9 | 8.9 |

We used the Kolmogorov–Smirnov (K–S) test to check whether the TPBXIID fits the data. The K–S statistic for the TPBXIID is 0.059201, which is smaller than the critical value of 0.13403 at the 5% significance level for \(n = 100\), and the corresponding P value is 0.853984. Hence, the TPBXIID fits these data very well. The empirical S(t) and the fitted S(t) are plotted in Fig. 3, which also indicates that the TPBXIID is a good model for these data.
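For readers who wish to repeat this kind of goodness-of-fit check, the one-sample K–S statistic can be computed as in the following sketch. The function name `ks_statistic` is ours, and `cdf` stands for any fitted CDF, for example the TPBXIID CDF evaluated at the estimated parameters. The sketch makes no attempt to reproduce the value 0.059201 reported above.

```python
def ks_statistic(sample, cdf):
    """One-sample Kolmogorov-Smirnov statistic D_n = sup_x |F_n(x) - F(x)|.

    sample : iterable of observations
    cdf    : callable returning the hypothesised CDF value F(x)
    """
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        F = cdf(x)
        # the empirical CDF jumps from (i-1)/n to i/n at the ith ordered value
        d = max(d, i / n - F, F - (i - 1) / n)
    return d
```

The statistic is then compared with a tabulated critical value. The familiar large-sample approximation \(1.36/\sqrt{n}\) for the 5% level gives 0.136 at \(n = 100\), which is close to the tabulated value quoted above.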

Now, we consider the case when the data are censored. We have the following six cases:

Case I: \(T_1 =9.6, T_2 = 9.9, k = 70, r = 75 \). In this case: \( R = 80, C = T_1 = 9.6 \).

Case II: \(T_1 =9.7, T_2 = 9.9, k = 70, r = 83\). In this case: \( R = 83, C = x_{r:n} = 9.8 \).

Case III: \(T_1 =9.88, T_2 = 9.9, k = 70, r = 87\). In this case: \(R = 85, C = T_2 = 9.9 \).

Case IV: \(T_1 = 8.9, T_2 = 11, k = 75, r = 88\). In this case: \( R = 88, C = x_{r:n} = 10.15 \).

Case V: \(T_1 =8.9, T_2 = 10.55, k = 80, r = 91\). In this case: \(R = 90, C = T_2 = 10.55\).

Case VI: \(T_1 =8.9, T_2 =9.15, k = 92, r = 94\). In this case: \( R = 92, C = x_{k:n} = 10.8\).

In all six cases, we estimate the unknown parameters of the TPBXIID, as well as the survival and hazard functions, using the MLEs and the Bayes estimates obtained by the MCMC method. From (2.4), (2.5), (2.6), (2.8) and (2.9), we obtain the ML estimates of \(\alpha , \theta , \gamma , S(t) \) and h(t), and the results are displayed in Table 2. We also computed the 95% CIs, and the results are given in Table 1. The Bayes estimates of \(\alpha , \theta , \gamma , S(t) \) and h(t) are computed under the SE, LINEX and GE loss functions, together with the 95% CRIs, by applying the MCMC method with 10,000 MCMC samples and discarding the first 1000 values as 'burn-in'; the results are given in Tables 1 and 2.
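Once the chain has been generated and the burn-in values discarded, the point estimates (4.6)–(4.8) and the equal-tail CRI are simple summaries of the retained draws. The sketch below shows one possible way to compute them in Python. The function name and the rounding rule used to pick the ordered values are our assumptions, and `a` is the loss-function parameter, whose value has to be chosen by the analyst.

```python
import math

def bayes_summaries(draws, a=1.0, eta=0.05):
    """SE, LINEX and GE estimates and an equal-tail CRI from post-burn-in draws.

    draws : list of positive floats for one quantity (e.g. alpha or S(t))
    a     : loss-function parameter, a != 0
    eta   : 1 - eta is the credible level
    """
    m = len(draws)
    se = sum(draws) / m                                            # Eq. (4.6)
    linex = -math.log(sum(math.exp(-a * z) for z in draws) / m) / a  # Eq. (4.7)
    ge = (sum(z ** (-a) for z in draws) / m) ** (-1.0 / a)          # Eq. (4.8)
    srt = sorted(draws)
    k_lo = max(1, round(m * eta / 2))            # 1-based position of the lower limit
    k_hi = min(m, round(m * (1 - eta / 2)))      # 1-based position of the upper limit
    return {"SE": se, "LINEX": linex, "GE": ge, "CRI": (srt[k_lo - 1], srt[k_hi - 1])}
```

With 10,000 samples and a burn-in of 1000, `draws` would hold the 9000 retained values of one quantity, and the 95% CRI would be read off at ordered positions 225 and 8775.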

6 Simulation Study

In this section, we present some simulation results to compare the performance of the different methods proposed in this paper. We mainly compare the ML estimates and the Bayes estimates, under three different loss functions, of the unknown parameters of the TPBXIID as well as of the survival and hazard functions in terms of mean squared errors (MSEs), and we compare the corresponding interval estimates in terms of their lengths and coverage probabilities (CPs). The simulation study was carried out as follows.

1. For given hyperparameters \( a_1, b_1, a_2, b_2, a_3\) and \(b_3 \), generate random values of \( \alpha , \theta \) and \( \gamma \) from (4.3), (4.4) and (4.5).

2. For given values of n (and R), with the values of \( \alpha , \theta \) and \( \gamma \) obtained in Step 1, generate random samples from the TPBXIID by inverting its CDF and then order them.

3. Obtain the ML estimates of \( \alpha , \theta \) and \( \gamma \) by solving the three nonlinear Eqs. (2.4), (2.5) and (2.6) numerically, and compute the 95% CIs using the observed Fisher information matrix.

4. Obtain the ML estimates of S(t) and h(t) from Eqs. (2.8) and (2.9), and compute the corresponding 95% ACIs at \( t=5 \).

5. Compute the Bayes estimates of \( \alpha , \theta , \gamma \), S(t) and h(t), as well as the 95% CRIs, by applying the MCMC method with 10,000 observations under the SE loss function, given by (4.6), the LINEX loss function, given by (4.7), and the GE loss function, given by (4.8).

6. Compute the quantities \((\phi - {\hat{\phi }})^2 \), where \({\hat{\phi }} \) stands for an estimate of \( \phi \) (ML or Bayes).

7. Repeat Steps 1–6 at least 1000 times.

Samples were generated from the TPBXIID with \( \alpha =7.7165 , \theta =10.9436 , \gamma =0.6775 \) and \( n=100 \). The simulation was carried out for different choices of k, r, \( T_1 \) and \( T_2\). We estimated \( \alpha , \theta , \gamma \) as well as S(t) and h(t) using the MLEs and computed the MSEs, CPs and lengths of the CIs when \( T_2= 10\) and \( T_1= 8.5 \). We also estimated \( \alpha , \theta , \gamma \) as well as S(t) and h(t) using the Bayes estimates under the SE, LINEX and GE loss functions, with informative gamma priors for both the shape and scale parameters and hyperparameters \( a_1 = 0.9, b_1=0.5, a_2 = 0.8, b_2 = 1.0, a_3=0.6\) and \(b_3=0.1\), and computed the 95% CRIs along with the MSEs, CPs and interval lengths, again when \( T_2= 10\) and \( T_1= 8.5 \). All results are displayed in Tables 3, 4, 5, 6 and 7.

The MSEs of the estimates were computed as

$$ {\text {MSE}}({\hat{\phi }}) = \frac{1}{1000}\sum _{i=1}^{1000}({\hat{\phi }}_i -\phi )^2. \tag{6.1} $$
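Two ingredients of the simulation, the inverse-CDF sampling in Step 2 and the MSE in Eq. (6.1), are easy to sketch. The Python code below is a minimal illustration with hypothetical function names. It inverts \(F(x)=1-[1+(x/\alpha )^\theta ]^{-\gamma }\), which gives \(x=\alpha [(1-u)^{-1/\gamma }-1]^{1/\theta }\) for a uniform variate u.

```python
import random

def rtpbxii(n, alpha, theta, gamma, rng):
    """Draw n TPBXIID variates by inverting the CDF and return them ordered."""
    out = []
    for _ in range(n):
        u = rng.random()   # u in [0, 1), so 1 - u lies in (0, 1]
        out.append(alpha * ((1.0 - u) ** (-1.0 / gamma) - 1.0) ** (1.0 / theta))
    return sorted(out)

def mse(estimates, truth):
    """Average squared error of replicated estimates around the true value."""
    return sum((e - truth) ** 2 for e in estimates) / len(estimates)
```

For example, `rtpbxii(100, 7.7165, 10.9436, 0.6775, random.Random(1))` produces one ordered sample with the parameter values used in the simulation study, and `mse` would be applied to the 1000 replicated estimates of each quantity.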

7 Conclusions

In this paper, based on unified hybrid censored data, the MLEs and the Bayes estimates of the unknown parameters, as well as of the survival and hazard functions, of the TPBXIID have been obtained. The Bayes estimators cannot be obtained in explicit form, so we used the MCMC method to compute the Bayes estimates under three different loss functions. We applied the developed techniques to a real data set. A simulation study was carried out to examine and compare the performance of the proposed methods for different choices of (r, k) and for the different Cases (I, II, III, IV, V, VI). From the results, we note the following:

1. It is clear from Tables 3, 4, 5, 6 and 7 that the Bayes estimates of \(\alpha , \theta , \gamma , S(t) \) and h(t) perform better than the MLEs in terms of MSEs.

2. It is observed from Tables 3, 4, 5, 6 and 7 that, for Cases (I, II, III), the MSEs and the lengths decrease and the CP increases for \(\alpha , \theta , \gamma , S(t) \) and h(t) when \( T_2 \) and k are fixed and \( T_1 \) and r increase.

3. It is evident from Tables 3, 4, 5, 6 and 7 that, for Cases (IV, V, VI), the MSEs and the lengths decrease and the CP increases for \(\alpha , \theta , \gamma , S(t) \) and h(t) when \( T_1 \) and r are fixed and \( T_2 \) and k increase.

4. It is observed from Tables 3, 6 and 7 that the MSEs for \( \alpha \), S(t) and h(t) under the LINEX and GE loss functions are almost identical to the MSEs under the SE loss function.

5. It is evident from Tables 4 and 5 that the MSEs for \( \theta \) and \( \gamma \) under the LINEX and GE loss functions are relatively close to the MSEs under the SE loss function.

6. From Tables 3, 4, 5, 6 and 7, it can be seen that the Bayes estimates under the GE loss function with \( a=7 \) outperform all other methods in the sense of having smaller MSEs.

7. From Tables 3, 4, 5, 6 and 7, the lengths of the CRIs of the Bayes estimates of \(\alpha , \theta , \gamma , S(t) \) and h(t) are smaller than the corresponding lengths of the CIs of the MLEs; on the other hand, the CPs of the Bayes estimates of \(\alpha , \theta , \gamma , S(t) \) and h(t) are greater than the corresponding CPs of the MLEs.

References

1. Ali Mousa MAM, Jaheen ZF (2002) Statistical inference for the Burr model based on progressively censored data. Comput Math Appl 43:1441–1449

2. Al-Saiari AY, Baharith LA, Mousa SA (2014) Marshall–Olkin extended Burr type XII distribution. Int J Stat Probab 3:78–84

3. Balakrishnan N, Rasouli A, Sanjari Farsipour N (2008) Exact likelihood inference based on an unified hybrid censored sample from the exponential distribution. J Stat Comput Simul 78:475–788

4. Basu AP, Ebrahimi N (1991) Bayesian approach to life testing and reliability estimation using asymmetric loss function. J Stat Plan Inference 29:21–31

5. Burr IW (1942) Cumulative frequency functions. Ann Math Stat 13:215–232

6. Calabria R, Pulcini G (1994) An engineering approach to Bayes estimation for the Weibull distribution. Microelectron Reliab 34:789–802

7. Chen Y, Li Y, Zhao T (2015) Cause analysis on eastward movement of Southwest China vortex and its induced heavy rainfall in South China. Adv Meteorol 2:1–22

8. Chen M, Shao Q (1999) Monte Carlo estimation of Bayesian credible and HPD intervals. J Comput Graph Stat 8:69–92

9. EL-Sagheer RM (2018) Estimation of parameters of Weibull–Gamma distribution based on progressively censored data. Stat Pap 59:725–757

10. Ghazal MGM, Hasaballah HM (2017) Exponentiated Rayleigh distribution: a Bayes study using MCMC approach Based on unified hybrid censored data. J Adv Math 12:6863–6880

11. Ghazal MGM, Hasaballah HM (2017) Bayesian estimations using MCMC approach under exponentiated Rayleigh distribution based on unified hybrid censored scheme. J Stat Appl Probab 6:329–344

12. Ghazal MGM, Hasaballah HM (2018) Bayesian prediction based on unified hybrid censored data from the exponentiated Rayleigh distribution. J Stat Appl Probab Lett 5:103–118

13. Gomes AE, da-Silva CQ, Cordeiro GM (2015) Two extended Burr models: theory and practice. Commun Stat Theory Methods 44:1706–1734

14. Greene WH (2000) Econometric analysis, 4th edn. Prentice Hall, New York

15. Mead ME (2014) The beta exponentiated Burr-XII distribution. J Stat Adv Theory Appl 12:53–73

16. Mead ME, Afify AZ (2017) On five parameter Burr-XII distribution: properties and applications. S Afr Stat J 51:67–80

17. Metropolis N, Rosenbluth AW, Rosenbluth MN, Teller AH, Teller E (1953) Equations of state calculations by fast computing machines. J Chem Phys 21:1087–1091

18. Paranaiba PF, Ortega EMM, Cordeiro GM, de Pascoa M (2013) The Kumaraswamy Burr-XII distribution: theory and practice. J Stat Comput Simul 83:2117–2143

19. Paranaiba PF, Ortega EMM, Cordeiro GM, Pescim RR (2011) The beta Burr-XII distribution with application to lifetime data. Comput Stat Data Anal 55:1118–1136

20. Shao Q (2004) Notes on maximum likelihood estimation for the three-parameter Burr-XII distribution. Comput Stat Data Anal 45:675–687

21. Shao Q, Wong H, Xia J (2004) Models for extremes using the extended three parameter Burr-XII system with application to flood frequency analysis. Hydrol Sci J 49:685–702

22. Silva GO, Ortega EMM, Garibay VC, Barreto ML (2008) Log-Burr-XII regression models with censored data. Comput Stat Data Anal 52:3820–3842

23. Varian HR (1975) A Bayesian approach to real estate assessment. North Holland, Amsterdam, pp 195–208

24. Wu SJ, Chen YJ, Chang CT (2007) Statistical inference based on progressively censored samples with random removals from the Burr type XII distribution. J Stat Comput Simul 77:19–27

25. Zellner A (1986) A Bayesian estimation and prediction using asymmetric loss function. J Am Stat Assoc 81:446–451

Acknowledgements

The authors would like to express their thanks to the editor, the associate editor and the referees for their useful and valuable comments on improving the contents of this paper.


Cite this article

EL-Sagheer, R.M., Mahmoud, M.A.W. & Hasaballah, H.M. Bayesian Estimations Using MCMC Approach Under Three-Parameter Burr-XII Distribution Based on Unified Hybrid Censored Scheme. J Stat Theory Pract 13, 65 (2019). https://doi.org/10.1007/s42519-019-0066-3


DOI: https://doi.org/10.1007/s42519-019-0066-3