Abstract

This study compares the social engagement behaviors of children with autism spectrum disorder (ASD) when interacting with a kid-size humanoid robot and with a human partner in a motor imitation task. Two high-functioning children with ASD took part. The evaluation measures (e.g., eye gaze direction, gaze shifting, completeness/correctness of the movements, initiation with/without prompt, verbal instruction, and time differences between starting/finishing the robot’s movement and the child’s movement) are extracted from the recorded videos of the trial sessions and analyzed to assess the engagement of the children in interaction with the robot or the human partner. The results indicate that, for Child 1, the eye gaze duration toward the robot, the frequency of initiation without prompt, and the frequency of complete and correct movements are higher in the robot condition than in the human condition, whereas for Child 2 these measures are slightly lower in the robot condition than in the human condition. Also, the frequency of gaze shifting for Child 1 is lower in the robot condition than in the human condition, while for Child 2 it is slightly higher. Moreover, both children show desirable results for the frequency of required verbal instructions, and most of the time they started their movements after the robot and finished them before the robot. Finally, the study suggests that child–robot interaction may improve the social engagement of some children with ASD.

1 Introduction

Autism spectrum disorder (ASD) is a developmental disorder characterized by impairments in social interaction and in verbal and nonverbal communication, and by a tendency to engage in restricted, repetitive, or stereotyped behaviors. The disorder affects normal brain development in the context of social interactions and communication skills due to gene mutation [1]. Although most parents report the symptoms of the disorder within the first 2 years of their children’s lives, the early symptoms emerge gradually from 6 months of age and continue into adulthood. Although these children have impairments in some aspects, some of them have abilities at a normal level or even better than typically developing children [2,3,4].

Although there is no definitive explanation for the rising prevalence of ASD, the reported statistics show an increasing prevalence rate [5,6,7,8,9]. Over the decades, psychologists and therapists have delivered most behavioral therapy for children with ASD. However, in the last decade, many studies have focused on the positive effects of social robots in helping to improve developmental impairments, given the reluctance of children with ASD to communicate with humans [10,11,12,13]. Many studies have reported that individuals with ASD perform better in a predictable environment, such as interaction with a social robot [14], and respond with faster movements when primed by a robotic movement than by a human movement [15]. Several reasons explain the better performance of children with ASD when cued by a robotic movement rather than a human movement. Children with ASD are more sensitive than typically developing children to variations in how actions are performed and therefore respond better to repetitive, predictable actions such as those of a robot [15], which can be explained by a mechanism known as “mirror systems” [16].

Imitation, as an early emerging skill in life, plays a critical role in the development of cognitive, language, social, and communication skills [17]. Children with ASD exhibit significant deficits in imitation, which can lead to broader impairments in other aspects, for example, language, social, and communication skills [18, 19]. The interactions between robot and child in the forms of imitation games can teach the child imitation behaviors better than the human [10,11,12, 15, 20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37].

In this paper, we investigate how children with ASD demonstrate social engagement behaviors (e.g., gaze direction, imitation, initiation with/without prompt) in interaction with a humanoid robot called ARC [38] or a human partner when imitating the robot’s or the human’s movements. For this purpose, we conducted several trial sessions in two different conditions (human condition and robot condition) with some children with ASD. During the sessions, the children were asked to imitate the human’s movements (in the human condition) or the robot’s movements (in the robot condition). The sessions were recorded to extract measures for assessing the social engagement behaviors of the children during the imitation tasks. We used the same movements in both conditions. Based on these evaluation measures, we expected the children to demonstrate more engagement in interaction with the robot than with the human partner, including (1) longer duration of gaze directed to the robot; (2) lower frequency of gaze shifting between the human and the robot; (3) higher frequency of complete and correct movements; (4) more initiation without prompt; and (5) fewer required verbal instructions. The children were not informed about the next movement of the robot. Therefore, we expected that the children would usually start after the robot and that the time difference between the start of the robot’s movement and the start of the child’s movement would be high. Moreover, since the children move faster than the robot, we expected that most of the time the children would finish before the robot.

The remainder of this paper is organized as follows. We review the related works in Sect. 2. In Sect. 3, the trial procedures and the characteristics of the participants are described. Section 4 discusses the experimental results. Finally, Sect. 5 summarizes the obtained results and describes future work.

2 Related works

Imitation is a primary social-cognitive skill that emerges early in development and plays a critical role in the development of cognitive, social, and communication skills. Children with autism exhibit significant impairments in imitation skills. These deficits have been reported on a variety of tasks, including body movements, vocalizations, facial expressions, and joint attention [17,18,19]. The association between imitation impairments and deficits in social and communication skills in children with ASD has led many studies to focus on designing imitation interventions for children with this deficit. Specifically, in recent years, child–robot interactions in the form of imitation tasks have yielded promising results in teaching imitation behaviors to children with ASD [10,11,12, 15, 20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37].

In a study associated with the AURORA project, the humanoid robot Robota was employed to engage children with ASD in imitative interaction games [10,11,12]. Robins et al. [11, 12] conducted a longitudinal study in which four children with ASD were exposed to Robota over several months to encourage imitation and social interaction skills. The results were evaluated based on social skills criteria such as eye gaze, touch, and imitation, and revealed that long-term studies could promote the social interaction skills of children with ASD. Pierno et al. [15] showed that interacting with robots can facilitate imitation behavior in children with ASD. For this purpose, they asked two groups, children with ASD and children with typical development, to observe either a robotic arm model or a human performing a reach-to-grasp action toward an object and then perform the same action. Children with ASD demonstrated faster movement duration and peak velocity when primed by a robotic model rather than a human.

Michaud et al. [20] and Duquette et al. [21] compared the effects of interacting with a mobile robot as a mediator, rather than a human, on shared attention (visual contact/eye gaze/physical proximity), shared conventions (facial expressions, gestures, and actions toward the mediator), and repetitive and stereotyped play patterns with favorite toys or objects in four children with ASD. They reported that, with the robot as a mediator, shared attention and imitation of facial expressions increased more than with a human mediator. However, the forms of shared conventions (imitation of body movements) were higher for the children paired with a human than for the children paired with a robot. Moreover, the children paired with a robot demonstrated reduced repetitive play patterns with the toys.

Tapus et al. [22] conducted several trials with four children with ASD to investigate whether children with ASD show more initiations and social engagement behaviors when interacting with a Nao robot compared to a human partner in a motor imitation task. They analyzed the video data of the experiments using behavioral variables including eye gaze, gaze shifting, free initiations/prompted initiations of arm movements, and smile/laughter. The results revealed high variability in reactions to the Nao robot: two children showed more eye gaze and smile/laughter in the interaction with the robot than with the human partner, while the other two children showed no effect of the robot on the target variables.

A graded cueing feedback model was applied to an imitation game played between a Nao robot and a child with ASD and evaluated with 12 children with ASD [24]. The study demonstrated that the graded cueing feedback model did not reduce imitative accuracy in comparison with a non-adaptive condition. Zheng et al. [25, 26] developed a dynamic, adaptive, and autonomous robot-mediated learning system on a Nao robot for imitation skills, with real-time evaluation of the imitated gestures and feedback. They compared the efficacy of the system with that of a human therapist in two groups: children with ASD and typically developing children. The results revealed that the children with ASD were more engaged with the robotic system than with a human therapist and also performed better in the robot imitation tasks than in the human sessions.

The authors in [27] conducted trials focused on imitation skills using a Nao robot with three children with ASD and intellectual disabilities. They suggested that socially assistive robots are an effective tool for promoting the imitation skills of children with ASD. The effects of the sensory profiles of children with ASD on imitation in interaction with a Nao robot were assessed with 12 children with ASD [28]. The experimental results showed a strong correlation between an overreliance on proprioceptive information (an ability to determine the body segment positions), hyporeactivity to visual motions (eye contact, following the gaze of others, joint attention), difficulties in imitation tasks, and, in turn, more engagement in interactions with a robot.

Taheri et al. [29] reported some improvement in social and communication skills, joint attention, and stereotyped behaviors through robot-assisted imitation games for a pair of twins with ASD, one of whom was high functioning and the other low functioning. Silva et al. [30] developed a robotic system to promote the imitation skills of children with ASD by encouraging them to engage in physical exercise and imitate the robot’s motions. They proposed a camera-based image processing algorithm that enables the robot to imitate the child’s motions. The results indicated that the algorithm imitates human motions accurately. The authors then extended their system [31, 32] by proposing a real-time algorithm for evaluating the child’s motion. Overall, in their proposed system, the robot teaches a task to the child, the child imitates the task, and the robot mimics the child’s motion and evaluates it. If the child’s motion is correct, the robot congratulates the child; otherwise, it explains her/his mistakes and teaches the task again. The experimental results with four children with ASD indicated that interaction with robots could promote their imitation skills. An anthropomorphic robot named CHARLIE (CHild-centered Adaptive Robot for Learning in an Interactive Environment) is presented in [33] for engaging children with ASD in imitation games using hand and face tracking.

Srinivasan et al. [34] examined the effects of robot–adult–child interactions using the humanoid robot Isobot on the social attention of 15 typically developing (TD) children and two children with ASD over an 8-session imitation protocol. For both the TD children and the children with ASD, the “percent duration of attention” to the robot, to the trainer, to the tester, and elsewhere, and the “percent duration of verbalization” to the trainer/tester, including spontaneous and responsive verbalization, were evaluated throughout the training and testing sessions. The results indicated that both groups directed most of their attention toward the robot rather than toward the trainer/tester or elsewhere during the testing and training sessions. Moreover, the child–robot interaction can facilitate spontaneous verbalization between the child and the trainer/tester for both groups, and both groups engaged in spontaneous verbalization more than in responsive verbalization.

In another work, Srinivasan et al. [35] evaluated praxis errors at pretest and posttest using a task-specific robot imitation test and a generalized test of praxis with 15 typically developing children and one child with ASD. The experimental results revealed that all children improved in imitation tasks and generalized praxis. Although the child with ASD made more errors than the TD children when imitating the robot and human actions during the pretest, he improved his imitation of robot actions and human actions in the generalized test of praxis during the posttest. In their next study [23], the authors extended this work to a larger sample of children with ASD and compared the effects of rhythm and robotic interventions using a Nao robot to a standard-of-care comparison intervention on the imitation/praxis, interpersonal synchrony skills, and overall motor performance of 36 children with ASD. The results indicated that all three groups showed improvements in imitation/praxis and that both the rhythm and robot groups demonstrated improvements in interpersonal synchrony performance, whereas the comparison group improved in fine motor performance.

Bucenna et al. [37] investigated the influence of the type of partner (i.e., adults, TD children, and children with ASD) on robot learning during the imitation tasks. During the imitation tasks, at first, the robot produced a random posture, and the partner imitated the robot. After this phase, the roles were reversed, and the robot imitated the posture of the partner via a sensory-motor architecture based on a neural network. The experimental results showed that the robot learned the postures of the adults more easily than the other groups (TD children and children with ASD). Also, the number of required neurons for learning the postures of the children with ASD was more compared to the other groups. Furthermore, learning with children with ASD enabled generalization to be easier.

The attitude of children with ASD toward a robot was assessed in terms of eye gaze duration, imitation, and the frequency of stereotyped behaviors through one interaction with a robot and another interaction with a human [36]. The results revealed more positive attitudes of the children with ASD toward the robot and a stronger preference to interact/play with the robot than with the human. Moreover, the percentage of eye gaze duration toward the robot was higher than toward the human, the frequency of stereotyped behavior in the robot condition was lower than in the human condition, and the children imitated the robot more than the human.

3 Experimental setting

The trial sessions were conducted in Pooyesh Primary School in Tabriz, Iran, a school with approximately 30 pupils with ASD. The school has six classrooms with, on average, five pupils in each class. The pupils are assigned to these classes according to their course level. Although the textbooks of these pupils differ from those of typically developing pupils, the children are taught from the books for their course level. In addition to the educational classes, the pupils participate in various sessions, including behavioral and play therapy interventions.

The trials were undertaken in two different conditions: the human condition and the robot condition. The children were asked to imitate the movements of the human partner in the human condition and of a humanoid robot in the robot condition. We conducted 24 sessions across both conditions (12 sessions for each condition). The sessions took 3 months (4 weeks for the human condition and 7 weeks for the robot condition), and 2 months intervened between the two conditions.

3.1 The experimental setup

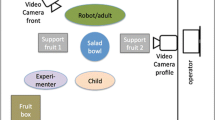

We conducted the trials in one of the rooms at the school. The room had one door and two windows overlooking the school’s backyard. The sessions were recorded with two video cameras. In the human condition, one stationary camera was placed in front of the child at a distance to capture the child’s movements, and another was placed in the corner of the room to capture the child’s movements from a different angle. In the robot condition, a video camera embedded in the head of the robot captured the movements of the child in front of the robot, and, as in the human condition, a stationary camera in the corner of the room captured the child’s movements from a different angle. Although we used two cameras in all of the sessions, there were periods in which the children moved outside the range of the cameras, because they were free to move around the room.

Two adults were in the room during all of the sessions. The one who performed the simple movements and gave the children verbal instructions for doing the movements is called the “human partner,” and the other, who sat on a chair in the corner of the room to observe the sessions, is called the “human observer.” The children were not familiar with the human partner or the human observer before the sessions started. A picture of the experimental setup of the robot condition is shown in Fig. 1.

3.2 Robot

We used a kid-size humanoid robot named ARC, which was built in our Humanoid Robots and Cognitive Technology research laboratory (HRCT) at the University of Tabriz, Iran [38]. The robot is 54.0 cm high, weighs 2.9 kg, and has 2 DOF for its head, 3 DOF for its arms, and 6 DOF for its legs. The main processor of the robot is a Mini PC with an Intel Core i5 6260U, which works together with one 32-bit ARM Cortex-M3 processor and an open-source CM9.04 board. The ARC robot is lightweight, cheap, and user-friendly, and it is equipped with a video camera with a 90° field of view. The specifications of the robot are described in detail in [38].

3.3 Participants

Five children (four boys and one girl) diagnosed with ASD based on DSM criteria [2, 3], aged 9–11 years and with IQ ≥ 70, were originally recruited from a primary school for children with autism to participate in the trials. However, three children (two boys and one girl) did not complete the study because they were unwilling or refused to do the movements. Therefore, only two children completed the study. The characteristics of the participants are reported in Table 1. The children’s behaviors are described below:

- Child 1: age 9 years and 3 months, high functioning. Although he can speak relatively fluently, he is not interested in communicating with other children of the same age. He prefers to communicate with older persons or younger children. He also showed stereotyped behaviors in several sessions.

- Child 2: age 9 years and 1 month, high functioning. Although he understands everything, he uses only a few words to express some needs. He does not like to play with other children. He likes to walk around the room and talk to himself, repeating some words or sounds. Therefore, it is very hard to get him to concentrate on tasks, and he needs to be prompted by another person to initiate them.

3.4 Trial session procedures

Although we conducted 12 sessions for each condition and each participant (3 sessions in a week for the human condition and 2 sessions in a week for the robot condition), some sessions were missed because of the absence or unwillingness of the children. The details of the number of sessions for each participant and the average duration with the standard deviation of the sessions’ duration for each condition are listed in Table 2.

Before the trials started, the parents of the pupils were asked to sign consent forms for the participation of their child. We conducted the trials for the human condition before the robot condition. The movements were designed to progress from very simple movements to more difficult ones, each of which was repeated several times by the human or the robot. The movements were the same for both conditions, mainly involved the upper body, and were limited to arm movements. Each movement is a combination of the left and right arms (L and R, respectively) taking one of the following four positions: D: down; U: up; T: in T; and P: in ψ form. Figure 2 shows the main positions of the arm movements. The movements, which are sequences of these positions, are listed in Table 3. Each movement is repeated ten times (for M1, M2, M4, M5, M7, M9, and M11) or eight times (for M3, M6, M8, M10, and M12).
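The position coding and repetition counts described above can be sketched in a few lines of Python. This is only an illustration, not the software used in the study: the `ArmPosition` and `Pose` names and the example sequence are hypothetical (the actual sequences are those listed in Table 3), and the total assumes that all twelve movements are performed in one session.

```python
from enum import Enum
from typing import NamedTuple


class ArmPosition(Enum):
    """The four arm positions named in the text."""
    D = "down"
    U = "up"
    T = "in T"
    P = "in psi form"


class Pose(NamedTuple):
    """One step of a movement: a position for the left and the right arm."""
    left: ArmPosition
    right: ArmPosition


# Hypothetical example sequence; the real sequences are listed in Table 3.
example_movement = [
    Pose(ArmPosition.D, ArmPosition.D),
    Pose(ArmPosition.U, ArmPosition.U),
    Pose(ArmPosition.D, ArmPosition.D),
]

# Repetition counts as stated in the text.
TEN_TIMES = ["M1", "M2", "M4", "M5", "M7", "M9", "M11"]
EIGHT_TIMES = ["M3", "M6", "M8", "M10", "M12"]
repetitions = {**{m: 10 for m in TEN_TIMES}, **{m: 8 for m in EIGHT_TIMES}}

# Assumption: every movement is performed in the session at its stated count.
total_repetitions = sum(repetitions.values())
print(total_repetitions)  # 7 * 10 + 5 * 8 = 110
```

Under that assumption, a full session contains 110 repetitions, which is the kind of denominator needed when movement counts are later expressed as percentages.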

The robot was connected to a laptop and placed on a table in front of the child. Before each trial, the video cameras and the robot (for the robot condition) were activated by pressing a button on the laptop. During the sessions, the children were asked to imitate either the robot’s or the human’s movements. Since the robot is slower than the human, the sessions for the robot condition were approximately twice as long as those for the human condition. The first session of each condition was designed to familiarize the children with the process of the trials. During each session, the human partner gave the child the necessary verbal instructions to imitate her or the robot.

3.5 Evaluation measures

Evaluating the quality of human–robot interaction is a critical issue. It involves several aspects of the interaction, such as attention to and interest in the robot, joint attention, imitation behaviors, and social tasks, and it yields information that is useful for improving the engagement of children with ASD in interaction with a robot [22, 40, 41]. Based on our procedure, we defined the following evaluation measures to assess the engagement of the children in interaction with the robot.

1. Eye gaze direction refers to the duration for which the child gazes at the human partner/human observer/robot/others (the room or the objects in the room) [22].

2. Eye gaze shifting refers to shifts of the child’s eye gaze among the human partner/human observer/robot/others [22].

3. Completeness and correctness of the child’s movements refer to whether a movement is “complete_correct,” “complete_incorrect,” or “incomplete_incorrect.” This coding is described in Table 4.

4. Initiation of the child’s movement with/without prompt refers to whether the child starts a movement with or without being triggered by a verbal prompt (e.g., calling the child’s name or giving him simple instructions such as “look at me” or “look at the robot”) [22].

5. Verbal instructions to the child refer to the verbal instructions given by the human partner to the child (e.g., “up your arm,” “down your arm,” or “put your arm like this position”).

6. Time differences between the beginning/finishing of the robot’s arm movement and the beginning/finishing of the child’s arm movement are measured as durations [40].

Table 4 The description of the coding related to the completeness/correctness of the movements
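The last measure, the time differences between the robot’s and the child’s arm movements, can be illustrated with a short sketch. The `Interval` type, the timestamps, and the sign convention (child minus robot) are assumptions made for this example and are not taken from the study’s annotation files.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Interval:
    """Start and end of one arm movement, in seconds from the video start."""
    start: float
    end: float


def time_differences(robot: Interval, child: Interval) -> Tuple[float, float]:
    """Return (start_lag, finish_lag), each computed as child minus robot.

    A positive start_lag means the child started after the robot; a negative
    finish_lag means the child finished before the robot.
    """
    return child.start - robot.start, child.end - robot.end


# Hypothetical annotation of a single repetition.
robot_move = Interval(start=10.0, end=14.5)
child_move = Interval(start=11.0, end=13.0)

start_lag, finish_lag = time_differences(robot_move, child_move)
print(start_lag, finish_lag)  # 1.0 -1.5
```

With this convention, a child who starts after the robot and finishes before it yields a positive start lag and a negative finish lag.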

4 Experimental results

All of the recorded videos were annotated manually with the video annotation software ANVIL [42], which segmented each video into \(\frac{1}{10}\) second intervals. The intervals without any movement were ignored and not coded. The coded data extracted from the videos of each trial were combined to calculate the following measures for each child:

1. The percentage of the total duration of the eye gaze direction of the child to the human partner/human observer/robot/others

2. The percentage of the total number of eye gaze shifting

3. The percentage of completeness and correctness of the child’s movements

4. The percentage of the total number of initiation of the child’s movement with/without prompt

5. The percentage of the total number of the verbal instructions given to the child

6. The percentage of time differences between the beginning/finishing the robot’s arm movement and the beginning/finishing the child’s arm movement

As all of the videos varied in duration, the total duration of the eye gaze direction to the human partner/human observer/robot/others was transformed to proportional representation of the corresponding value relative to the total duration of eye gaze direction for that video. Similarly, the total numbers of the completeness, correctness, and initiation of the child’s movements with/without prompt were transformed to proportional representations of the corresponding values relative to the total number of repeated movements for each video. Furthermore, the total number of eye gaze shifting and the total number of the verbal instructions were transformed to the proportional representation of the corresponding values relative to the total duration of the movements for that video. Finally, the total time differences between the starting/finishing the robot’s arm movement and the starting/finishing the child’s arm movement were transformed to the proportional representation of the corresponding value relative to the total duration of time differences for that video.
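The normalizations described in this paragraph can be sketched as follows. This is a minimal illustration under stated assumptions: the function names and all numbers are hypothetical, each repeated movement is assumed to be coded in exactly one category, and the movement duration is assumed to be expressed as a number of 1/10 s annotation intervals.

```python
def to_percentages(values):
    """Express each value as a percentage of the sum of all values."""
    total = sum(values.values())
    if total == 0:
        return {key: 0.0 for key in values}
    return {key: 100.0 * value / total for key, value in values.items()}


def percent_per_interval(count, n_intervals):
    """Express an event count relative to the number of coded intervals."""
    if n_intervals <= 0:
        raise ValueError("n_intervals must be positive")
    return 100.0 * count / n_intervals


# Hypothetical gaze durations (seconds) for one video.
gaze_seconds = {"robot": 70, "human partner": 15, "human observer": 5, "others": 10}
gaze_percent = to_percentages(gaze_seconds)

# Hypothetical movement codes for one video (10 repeated movements).
movement_counts = {"complete_correct": 6, "complete_incorrect": 3, "incomplete_incorrect": 1}
movement_percent = to_percentages(movement_counts)

# Hypothetical: 30 gaze shifts during 600 intervals of 1/10 s (60 s of movement).
shift_percent = percent_per_interval(30, 600)

print(gaze_percent["robot"], movement_percent["complete_correct"], shift_percent)
# 70.0 60.0 5.0
```

Expressing every measure relative to its own video makes sessions of different length comparable, which is the purpose of the transformation described above.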

4.1 Eye gaze direction

As mentioned, we performed several sessions for each condition. The trends of the eye gaze toward the human partner/human observer/others/robot per session and the variances of the percentages of the gaze duration are reported in Figs. 3 and 4 for Child 1 and in Figs. 5 and 6 for Child 2. As Fig. 3 shows, the levels of the gaze direction to the human partner and others are markedly lower in the robot condition than in the human condition, and most of the gaze in the robot condition is directed to the robot, approximately 80% of the total imitation time. The visual analysis of the eye gaze in Fig. 3a indicates larger fluctuations in the trend of the gaze toward the human partner and others in the human condition, compared to relatively stable low levels in the robot condition in Fig. 3b. Moreover, the levels of the gaze direction to the human observer are relatively high in the first sessions of the human condition and then decrease markedly in the following sessions, while we observed relatively stable low levels in the robot condition. Figure 4 shows the variability of the percentages of the gaze duration for each actor during the sessions of both conditions for Child 1, which confirms the results of Fig. 3. The variances of the target variables are considerably higher in the human condition than in the robot condition, which indicates higher behavioral variability of Child 1 during the human condition. Although the variances of the results for the robot condition are low, Fig. 4 shows two outlier points, related to the gaze toward the others and the gaze toward the robot.

Box plot comparing the percentages of the eye gaze duration for Child 1. Lower and upper box boundaries denote the 25th and 75th percentiles, respectively; the line inside the box denotes the median; the cross marks the mean; the lower and upper error lines denote the 10th and 90th percentiles, respectively; and the filled circles falling outside the plot denote the outlier points

Box plot (see caption of Fig. 4 for the explanation of box-plot) comparing the percentages of the eye gaze duration for Child 2
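The summary statistics named in the box-plot captions can be computed with the standard library alone. The sketch below is an assumption-laden illustration: it uses linear interpolation for percentiles (the paper does not state which method was used), treats values outside the 10th to 90th percentile range as outlier points, and runs on hypothetical per-session percentages rather than the study’s data.

```python
from statistics import mean


def percentile(sorted_values, p):
    """Linearly interpolated percentile (0 <= p <= 100) of pre-sorted data."""
    if not sorted_values:
        raise ValueError("no data")
    rank = (len(sorted_values) - 1) * p / 100.0
    lower = int(rank)
    upper = min(lower + 1, len(sorted_values) - 1)
    fraction = rank - lower
    return sorted_values[lower] + fraction * (sorted_values[upper] - sorted_values[lower])


def box_plot_summary(values):
    """Return the quantities drawn in the box plots described in the caption."""
    data = sorted(values)
    p10, p90 = percentile(data, 10), percentile(data, 90)
    return {
        "p10": p10,                       # lower error line
        "q1": percentile(data, 25),       # lower box boundary
        "median": percentile(data, 50),   # line inside the box
        "q3": percentile(data, 75),       # upper box boundary
        "p90": p90,                       # upper error line
        "mean": mean(data),               # cross
        # Assumption: points beyond the error lines are drawn as outliers.
        "outliers": [v for v in data if v < p10 or v > p90],
    }


# Hypothetical per-session percentages (not data from the study).
sessions = [0, 10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
summary = box_plot_summary(sessions)
print(summary["q1"], summary["median"], summary["q3"], summary["outliers"])
# 25.0 50.0 75.0 [0, 100]
```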

As Fig. 5 shows, the levels of the gaze direction to the human partner are markedly lower in the robot condition than in the human condition. Also, the levels of the gaze direction to the robot in the robot condition are markedly higher than those to the human partner, observer, and others, as shown in Fig. 5b. The visual analysis in Fig. 5 reveals stable low levels of the gaze direction to the observer and others, with no notable difference between the two conditions. Compared to the other trends in both conditions, the strongly fluctuating trend of the gaze toward the robot and the relatively stable low-level trend of the gaze toward the observer in Fig. 5b are consistent with the high and low variances of the corresponding results in Fig. 6, respectively. Although the variances of the gaze to the human observer and the gaze to the others in the robot condition are low, we can observe two outlier points among the corresponding results.

4.2 Eye gaze shifting

As mentioned before, the percentage of the eye gaze shifting is a proportional representation of the number of eye gaze shifts per \(\frac{1}{10}\) second interval. Figures 7 and 8 illustrate the trends of the gaze shifting per session for Child 1 and Child 2, respectively, and Fig. 9 compares the variances of the percentages of the target variable for both children. As shown in Fig. 7, the trend of the variable fluctuates considerably in both conditions, with lower levels of gaze shifting in the robot condition than in the human condition. For Child 2, we see relatively stable low levels of gaze shifting, with slightly higher levels in the robot condition, as demonstrated in Fig. 8a, b. Figure 9 shows that the variance of the eye gaze shifting values of Child 1 is higher during the human condition sessions than during the robot condition sessions, while Child 2 shows the reverse pattern. Also, the values of the eye gaze shifting are higher for Child 1 than for Child 2 in both conditions, which indicates that Child 1 shifts his eye gaze frequently and cannot easily concentrate on a specific object.

Box plot (see caption of Fig. 4 for the explanation of box plot) comparing the percentages of the eye gaze shifting per 1/10 s for both children
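Counting gaze shifts from interval-coded annotations can be sketched as below. The assumptions are that each 1/10 s interval carries exactly one gaze-target label and that a shift is any change of label between consecutive intervals; the label names and the sequence are hypothetical, and the actual ANVIL export format is not reproduced here.

```python
def count_gaze_shifts(labels):
    """Count changes of gaze target between consecutive coded intervals."""
    return sum(1 for previous, current in zip(labels, labels[1:]) if previous != current)


# Hypothetical coding of one second of video (ten 1/10 s intervals).
labels = [
    "robot", "robot", "partner", "partner", "robot",
    "others", "others", "robot", "robot", "robot",
]

shifts = count_gaze_shifts(labels)
shift_percent = 100.0 * shifts / len(labels)
print(shifts, shift_percent)  # 4 40.0
```

Dividing by the number of coded intervals gives a rate that does not depend on the length of the video, in line with the per-interval definition given in the text.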

4.3 Completeness/correctness of the movements

We divided the completeness and the correctness of the movements into three categories: complete_correct, complete_incorrect, and incomplete_incorrect. Table 4 describes this coding in detail. The trends of the completeness/correctness of the movements per session and the variance of the percentages of the target variable for the participants are shown in Figs. 10, 11, 12 and 13. The visual analysis in Fig. 10a, b reveals a slightly downward trend in the levels of the complete_correct movements in the human condition and a slightly upward trend in the robot condition, with markedly better performance in the robot condition. Figure 10 shows a strongly fluctuating trend for the complete_incorrect movements and relatively stable low levels of incomplete_incorrect movements in both conditions. As Fig. 11 shows, the variances of the complete_correct and complete_incorrect movements are lower in the robot condition than in the human condition. Moreover, although the percentage of the incomplete_incorrect movements is slightly higher in the robot condition than in the human condition, the percentage of the complete_correct movements is slightly higher and the percentage of the complete_incorrect movements is slightly lower in the robot condition, which indicates a positive effect of the robot condition on performance.

Fig. 11 Box plot (see caption of Fig. 4 for the explanation of box plot) comparing the percentages of the completeness/correctness of the movements during each session for Child 1

Fig. 13 Box plot (see caption of Fig. 4 for the explanation of box plot) comparing the percentages of the completeness/correctness of the movements for Child 2

For Child 2, the visual analysis in Fig. 12 reveals highly fluctuating trends in both conditions for all variables. Figure 13 shows that, although the levels of the correct movements are lower in the robot condition than in the human condition, the levels of the incomplete_incorrect movements are also slightly lower in the robot condition than in the human condition.
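The per-session percentages discussed in this subsection can be illustrated with a minimal Python sketch. It assumes that each movement in a session has already been coded with exactly one of the three categories; the function name `category_percentages` and the example session are hypothetical and are not taken from the study's data or tooling.

```python
from collections import Counter

# The three coding categories named in the text.
CATEGORIES = ("complete_correct", "complete_incorrect", "incomplete_incorrect")


def category_percentages(labels):
    """Return the percentage of each movement category within one session.

    `labels` is a list with one category string per coded movement
    (an assumed input format, not the study's actual annotation export).
    """
    if not labels:
        raise ValueError("a session needs at least one coded movement")
    unknown = set(labels) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    counts = Counter(labels)
    total = len(labels)
    # Categories that never occur get 0.0 because Counter returns 0 for them.
    return {cat: 100.0 * counts[cat] / total for cat in CATEGORIES}


# Hypothetical session with ten coded movements (illustrative values only).
session = (
    ["complete_correct"] * 6
    + ["complete_incorrect"] * 3
    + ["incomplete_incorrect"] * 1
)
percentages = category_percentages(session)
print(percentages)
```

Computing one such dictionary per session and per condition would give the series that a trend plot or box plot of these percentages is built from.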

4.4 Initiation with/without prompt

The children initiated the movements with or without prompt. The trends of the initiation with/without prompt per session and the percentage of the target variable for the participants are illustrated in Figs. 14, 15, 16 and 17. For Child 1, the visual analysis in Fig. 14 reveals relatively stable, high levels of the initiation without prompt and low levels of the initiation with prompt throughout both conditions. In the human condition, the level of the initiation without prompt decreases in the third session, then gradually increases again and remains steady at a high level, while in the robot condition, the corresponding values stay at relatively stable, high levels. The variance of the percentages of the movements initiated without prompt is slightly lower in the robot condition than in the human condition, as shown in Fig. 15. Also, one outlier point can be seen for each variable in both conditions.

Fig. 15 Box plot (see caption of Fig. 4 for the explanation of box plot) comparing the percentages of the initiation with/without prompt for Child 1

Fig. 17 Box plot (see caption of Fig. 4 for the explanation of box plot) comparing the percentages of the initiation with/without prompt for Child 2

The visual analysis in Fig. 16 demonstrates highly fluctuating trends for both variables in both conditions. Also, Fig. 16b shows that the trends of the target variables for the robot condition converge at a single point (session 10). Although the visual analysis in Fig. 17 indicates better performance in the human condition than in the robot condition, the percentage of the initiation without prompt in the robot condition is higher than the percentage of the initiation with prompt, which suggests that the robot has no negative effect on the initiation of the movements for Child 2.

4.5 Verbal instructions

As mentioned before, we transformed the total number of verbal instructions to the percentage of verbal instructions per \(\frac{1}{10}\) second. The trends of the percentage of verbal instructions per session and the comparison of the variances of the percentages of the target variables for both participants are illustrated in Figs. 18, 19 and 20. In line with our expectations, the levels of the verbal instructions diminished in the robot condition for both children. For Child 1, Fig. 18 shows a highly fluctuating trend in the human condition and a relatively ascending trend in the robot condition. The results in Fig. 20 reveal that both the variance and the values of the verbal instructions for Child 1 are slightly lower in the robot condition than in the human condition.

Fig. 20 Box plot (see caption of Fig. 4 for the explanation of box plot) comparing the percentages of the verbal instructions per 1/10 s for both children

For Child 2, Fig. 19 shows a relatively descending trend in the human condition, while an ascending trend can be observed in the robot condition. Also, the visual analysis in Fig. 20 indicates that the robot condition had a significant positive effect on diminishing the verbal instructions given to Child 2.

4.6 Starting/finishing time of the movements

The synchrony of the child–robot movements and the responses to the robot’s movements can be considered one of the measures for evaluating the quality of imitation tasks. Therefore, the time difference between the onset of the robot’s arm movement and that of the child’s arm movement can indicate how effectively the robot engages the child socially [40].

Since the starting and finishing times of the child’s movements and the robot’s movements differ, the time differences between these parameters are computed and transformed to the percentage of the time differences relative to the total differences. The trends of the percentage of the differences between the starting/finishing time of the child’s movements and the robot’s movements per session and the comparison of the variances of the percentages of the target variables are shown in Figs. 21, 22 and 23. The visual analysis in Fig. 21 shows highly fluctuating trends for all target variables in both conditions. In contrast, the visual analysis in Fig. 22 shows highly fluctuating trends for the graphs of “the child first finished” and “the robot first started,” and relatively stable, low-level trends for the graphs of “the child first started” and “the robot first finished.” The visual analysis in Fig. 23 indicates that, for both children, the highest variance and the largest time differences occur when the child finished the movement before the robot. Moreover, the lowest variance and the smallest time differences occur for Child 1 when the robot finished before the child, and for Child 2 when the child started before the robot.
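The transformation of time differences into percentages can be sketched as follows. This is only one possible reading of the description above: it assumes that absolute start and finish differences are summed per case (child started first, robot started first, child finished first, robot finished first) and then divided by the sum over all four cases. The function name, the tuple layout, and the trial timings are hypothetical.

```python
def time_difference_percentages(trials):
    """Share of the total child-robot time difference attributed to each case.

    `trials` is a list of (child_start, child_end, robot_start, robot_end)
    tuples in seconds (an assumed layout, not the study's annotation format).
    """
    totals = {
        "child_started_first": 0.0,
        "robot_started_first": 0.0,
        "child_finished_first": 0.0,
        "robot_finished_first": 0.0,
    }
    for child_start, child_end, robot_start, robot_end in trials:
        start_diff = child_start - robot_start
        if start_diff < 0:
            totals["child_started_first"] += -start_diff
        else:
            totals["robot_started_first"] += start_diff
        end_diff = child_end - robot_end
        if end_diff < 0:
            totals["child_finished_first"] += -end_diff
        else:
            totals["robot_finished_first"] += end_diff
    grand_total = sum(totals.values())
    if grand_total == 0:
        # Perfect synchrony in every trial: no difference to distribute.
        return {case: 0.0 for case in totals}
    return {case: 100.0 * value / grand_total for case, value in totals.items()}


# Hypothetical timings for three movements in one session (seconds).
trials = [
    (2.0, 5.0, 0.0, 9.0),   # robot starts first, child finishes first
    (1.0, 8.0, 0.0, 10.0),  # robot starts first, child finishes first
    (0.0, 7.0, 0.5, 6.5),   # child starts first, robot finishes first
]
shares = time_difference_percentages(trials)
print(shares)
```

Under this reading, a slow robot would show up as a large share for the case in which the child finished first, since the child completes the arm movement while the robot is still moving.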

Fig. 23 Box plot (see caption of Fig. 4 for the explanation of box plot) comparing the percentages of the time differences between the starting/finishing time of the child’s movements and the robot’s movements

5 Conclusion and discussion

Our study aimed to compare the effects of interaction with a humanoid robot and with a human partner on the social engagement behaviors of children with ASD during imitation tasks. Although the children showed different behaviors during the imitation tasks, we can draw some conclusions from the observations and the obtained results. Both children were interested in interacting with the robot and imitating the robot’s movements. In the robot condition, both children showed interest in imitating the robot during the first minutes of each session, and this interest diminished gradually toward the end of the session. We also observed a similar decline from the first sessions of the robot condition toward the final sessions.

Based on the assumption that robots can attract children with ASD, we expected the duration of the eye gaze to the robot to be higher than that to the human partner. The results indicated that the levels of the gaze direction to the human partner were significantly lower in the robot condition than in the human condition, and that, for both children, the levels of the gaze direction to the robot in the robot condition were significantly higher than those to the human partner, the observer, and others. The results also revealed that Child 1 gazed longer at the robot than at the human partner, while the gaze duration to the robot for Child 2 was slightly lower than that to the human partner. Child 1 gazed more toward the others than toward the human partner in both conditions. Additionally, Child 2 gazed least at the human observer in both conditions, with no significant difference between the two conditions.

We expected the percentage of the gaze shifting per unit time between the human partner/human observer/robot/others to be lower in the robot condition than in the human condition. This expectation was confirmed for Child 1. For Child 2, however, the level of the gaze shifting in the robot condition was higher than in the human condition, although the levels of the eye gaze shifting for Child 2 were lower than the corresponding values for Child 1 in both conditions.

We expected the percentage of the complete and correct movements to be higher in the robot condition than in the human condition. The results confirmed this prediction for Child 1, for whom the percentage of the incorrect movements was also lower in the robot condition than in the human condition. Although Child 2 showed slightly better performance in the human condition than in the robot condition, the percentage of the incomplete_incorrect movements was lower in the robot condition than in the human condition.

We also expected the percentage of the initiations without prompt to be higher in the robot condition than in the human condition, and to be higher than the percentage of the initiations with prompt in each condition. The results indicated that the levels of the initiation without prompt were higher than the levels of the initiation with prompt in both conditions for both children. Also, the robot was a better facilitator of the target variable than the human partner for Child 1.

We expected fewer verbal instructions to be given to the children in the robot condition than in the human condition. The obtained results show that this prediction was confirmed for both participants.

We expected the children to finish the movement before the robot and to start after the robot because of the robot’s slow speed. Indeed, comparing the time differences between the starting/finishing time of the robot’s movement and the child’s movement, we can see that, for both children, the largest differences occurred when the child finished the movement before the robot, and the second largest differences occurred when the child started after the robot. Moreover, the smallest differences were obtained for Child 1 when the robot finished before the child, and for Child 2 when the robot started after the child.

We should note that our results are drawn from the behaviors of two subjects, which makes it difficult to generalize our conclusions to a larger population. Besides, children with ASD exhibit a wide range of behaviors, and their reactions can be spread across a wide spectrum. It is therefore to be expected that their social behaviors during the interactions differ from each other; indeed, we observed that the behaviors of Child 1 differ from those of Child 2 for some measures. However, the obtained results showed that (1) both children gazed at the robot more than at the human partner/observer/others, (2) for one of the children, the percentage of the complete_correct movements was higher in the robot condition than in the human condition, and for the other, the percentage of the incomplete_incorrect movements was lower in the robot condition than in the human condition, (3) for both children, the level of the initiations of the movements without prompt in the robot condition was higher than that of the initiations with prompt, and (4) the robot condition had a significant positive effect on reducing the verbal instructions given to both children.

While there was high variability in the behaviors during interaction with the robot, as has also been reported in several studies [12, 43], the general conclusions above indicate that both children were attracted to the robot and that their performance was often better when interacting with the robot than with a human. Therefore, although our sample size is very small, the participants’ attraction to the robot leads us to suggest that human–robot interaction may promote the social engagement of at least a subgroup of children with ASD.

In the future, we will work toward determining the best sequence of movements for the robot by evaluating the engagement of the children in interaction with the robot.

References

Muhle R, Trentacoste SV, Rapin I (2004) The genetics of autism. Pediatrics. https://doi.org/10.1016/j.spen.2004.07.003

American Psychiatric Association (2013) Diagnostic and statistical manual of mental disorders, 5th edition (DSM-5). Diagnostic Stat Man Ment Disord 4th Ed TR 280. https://doi.org/10.1176/appi.books.9780890425596.744053

American Psychiatric Association (2016) DSM 5 update: diagnostic and statistical manual of mental disorders, fifth edition. Psychiatr News. https://doi.org/10.1176/appi.pn.2016.5a20

Carpenter L (2013) DSM-5 Autism Spectrum Disorder. Dsm-5 1–7

Christensen DL, Baio J, Braun KVN, Bilder D, Charles J, Constantino JN, Daniels J, Durkin MS, Fitzgerald RT, Kurzius-Spencer M, Lee L-C, Pettygrove S, Robinson C, Schulz E, Wells C, Wingate MS, Zahorodny W, Yeargin-Allsopp M (2016) Prevalence and characteristics of Autism Spectrum Disorder among children aged 8 years - autism and developmental disabilities monitoring network, 11 Sites, United States, 2012. Morb Mortal Wkly report Surveill Summ 65:1–23. https://doi.org/10.15585/mmwr.ss6503a1

Elsabbagh M, Divan G, Koh Y, Kim YS, Kauchali S, Marcín C, Montiel-nava C, Patel V, Paula CS, Wang C, Yasamy MT, Fombonne E (2012) Global prevalence of autism and other pervasive developmental disorders. Int Soc Autism Res Wiley Period Inc. https://doi.org/10.1002/aur.239

Kim YS, Leventhal BL, Koh Y-J, Fombonne E, Laska E, Lim E-C, Cheon K-A, Kim S-J, Kim Y-K, Lee H, Song D-H, Grinker RR (2011) Prevalence of Autism Spectrum Disorders in a total population sample. Am J Psychiatry 168:904–912. https://doi.org/10.1176/appi.ajp.2011.10101532

Taylor B, Jick H, MacLaughlin D (2013) Prevalence and incidence rates of autism in the UK: time trend from 2004–2010 in children aged 8 years. BMJ Open 3:e003219. https://doi.org/10.1136/bmjopen-2013-003219

Waugh I (2018) The prevalence of Autism (including Asperger Syndrome) in school age children in Northern Ireland 2018. Community Inf Branch Inf Anal Dir Dep Heal (DHSSPS), Belfast, North Irel

Dautenhahn K, Billard A (2002) Games children with autism can play with robota, a humanoid robotic doll. In: 1st Cambridge workshop on universal access and assistive technology (CWUAAT), pp 179–190

Robins B, Dautenhahn K, Boekhorst R, Billard A (2004) Effects of repeated exposure of a humanoid robot on children with autism – Can we encourage basic social interaction skills? Designing a more inclusive world. Springer, London, pp 225–236. https://doi.org/10.1007/978-0-85729-372-5_23

Robins B, Dautenhahn K, Te Boekhorst R, Billard A (2005) Robotic assistants in therapy and education of children with autism: can a small humanoid robot help encourage social interaction skills? Univers Access Inf Soc 4:105–120. https://doi.org/10.1007/s10209-005-0116-3

Kim ES, Berkovits LD, Bernier EP, Leyzberg D, Shic F, Paul R, Scassellati B (2013) Social robots as embedded reinforcers of social behavior in children with autism. J Autism Dev Disord 43:1038–1049. https://doi.org/10.1007/s10803-012-1645-2

Dautenhahn K, Werry I (2004) Towards interactive robots in autism therapy: background, motivation and challenges. Pragmat Cogn 12:1–35. https://doi.org/10.1075/pc.12.1.03dau

Pierno AC, Mari M, Lusher D, Castiello U (2008) Robotic movement elicits visuomotor priming in children with autism. Neuropsychologia 46:448–454. https://doi.org/10.1016/j.neuropsychologia.2007.08.020

Pacherie E, Dokic J (2006) From mirror neurons to joint actions. Cogn Syst Res 7:101–112. https://doi.org/10.1016/j.cogsys.2005.11.012

Ingersoll B (2008) The social role of imitation in autism. Infants Young Child 21:107–119. https://doi.org/10.1097/01.iyc.0000314482.24087.14

Ingersoll B (2010) Brief report: pilot randomized controlled trial of reciprocal imitation training for teaching elicited and spontaneous imitation to children with autism. J Autism Dev Disord 49:1154–1160. https://doi.org/10.1016/j.pain.2013.06.005.Re-Thinking

Ingersoll B (2012) Brief report: effect of a focused imitation intervention on social functioning in children with autism. J Autism Dev Disord 42:1768–1773. https://doi.org/10.1007/s10803-011-1423-6.Brief

Michaud F, Salter T, Duquette A, Mercier H, Lauria M, Larouche H, Larose F (2007) Assistive technologies and child–robot interaction. In: Presented at AAAI spring symposium on multidisciplinary collaboration for socially assistive robotics, Stanford, CA

Duquette A, Michaud F, Mercier H (2008) Exploring the use of a mobile robot as an imitation agent with children with low-functioning autism. Auton Robots 24:147–157. https://doi.org/10.1007/s10514-007-9056-5

Tapus A, Peca A, Aly A, Pop C, Jisa L, Pintea S, Rusu AS, David DO (2012) Children with autism social engagement in interaction with Nao, an imitative robot: a series of single case experiments. Interact Stud 13:315–347. https://doi.org/10.1075/is.13.3.01tap

Srinivasan SM, Kaur M, Park IK, Gifford TD, Marsh KL, Bhat AN (2015) The effects of rhythm and robotic interventions on the imitation/praxis, interpersonal synchrony, and motor performance of children with autism spectrum disorder (ASD): a pilot randomized controlled trial. Autism Res Treat 2015:1–18. https://doi.org/10.1155/2015/736516

Greczek J, Kaszubski E, Atrash A, Matari M (2014) Graded cueing feedback in robot-mediated imitation practice for children with autism spectrum disorders. In: The 23rd IEEE international symposium on robot and human interactive communication. IEEE, pp 561–566

Zheng Z, Das S, Young EM, Swanson A, Warren Z, Sarkar N (2014) Autonomous robot - mediated imitation learning for children with autism. In: IEEE international conference on robotics & automation (ICRA), pp 2707–2712

Zheng Z, Young EM, Swanson AR, Weitlauf AS, Warren ZE, Sarkar N (2016) Robot-mediated imitation skill training for children with autism. IEEE Trans Neural Syst Rehabil Eng 24:682–691. https://doi.org/10.1109/TNSRE.2015.2475724.Robot-mediated

Conti D, Nuovo S Di, Buono S, Trubia G, Nuovo A Di (2015) Use of robotics to stimulate imitation in children with Autism Spectrum Disorder : a pilot study in a clinical setting. In: 24th IEEE international symposium on robot and human interactive communication (RO-MAN). IEEE, pp 1–6

Chevalier P, Raiola G, Martin J-C, Isableu B, Bazile C, Tapus A (2017) Do sensory preferences of children with autism impact an imitation task with a robot? In: Proceedings of 2017 ACM/IEEE international conference on human–robot interaction - HRI ’17, pp 177–186. https://doi.org/10.1145/2909824.3020234

Taheri A, Meghdari A, Alemi M, Pouretemad HR, Sciences B (2018) Clinical interventions of social humanoid robots in the treatment of a pair of high- and low-functioning autistic Iranian twins. Sci Iran 25:1197–1214. https://doi.org/10.24200/sci.2017.4337

Silva PRS De, Matsumoto T, Saito A, Lambacher SG, Higashi M (2009) The development of an assistive robot as a therapeutic device to enhance the primal imitation skills of autistic. In: Proceeding of IEEE/RSJ international conference on intelligent robots and systems, Japan

Fujimoto I, Matsumoto T, De Silva PRS (2010) Study on an assistive robot for improving imitation skill of children with autism. International Conference on Social Robotics, Springer-Verlag, Berlin Heidelberg 2010:232–242

Fujimoto I, Matsumoto T, De Silva PRS, Kobayashi M, Higashi M (2011) Mimicking and evaluating human motion to improve the imitation skill of children with autism through a robot. Int J Soc Robot 3:349–357. https://doi.org/10.1007/s12369-011-0116-9

Boccanfuso L, Kane JMO (2011) CHARLIE : an adaptive robot design with hand and face tracking for use in autism therapy. Int J Soc Robot 3:337–347. https://doi.org/10.1007/s12369-011-0110-2

Srinivasan S, Bhat A (2013) The effect of robot–child interactions on social attention and verbalization patterns of typically developing children and children with autism between 4 and 8 years. Autism Open Access 03:1–10. https://doi.org/10.4172/2165-7890.1000111

Srinivasan SM, Lynch KA, Bubela DJ, Gifford TD, Bhat AN (2013) Effect of interactions between a child and a robot on the imitation and praxis performance of typically developing children and a child with autism: a preliminary study. Percept Mot Skills 116:885–904. https://doi.org/10.2466/15.10.PMS.116.3.885-904

Costa A, Schweich T, Charpiot L, Steffgen G (2018) Attitudes of children with autism towards robots: an exploratory study. arXiv:180607805

Boucenna S, Anzalone S, Tilmont E, Cohen D, Chetouani M (2014) Learning of social signatures through imitation game between a robot and a human partner. IEEE Trans Auton Ment Dev 6:213–225. https://doi.org/10.1109/TAMD.2014.2319861

Saeedvand S, Aghdasi HS, Baltes J (2018) Novel lightweight odometric learning method for humanoid robot localization. Mechatronics 55:38–53. https://doi.org/10.1016/j.mechatronics.2018.08.007

Montgomery JM, Newton B, Smith C (2008) Test review: GARS-2: Gilliam autism rating scale-second edition. J Psychoeduc Assess 26:395–401. https://doi.org/10.1177/0734282908317116

Anzalone SM, Boucenna S, Ivaldi S, Chetouani M (2015) Evaluating the engagement with social robots. Int J Soc Robot Springer 1–14

Mascarell-Maricic L, Lee J, Schuller BW, Rudovic O, Picard RW (2017) Measuring engagement in robot-assisted autism therapy: a cross-cultural study. Front Robot AI. https://doi.org/10.3389/frobt.2017.00036

ANVIL (2020) The video annotation research tool. http://www.anvil-software.org/. Accessed 29 Feb 2020

Hamzah MSJ, Shamsuddin S, Azfar M (2014) Development of interaction scenarios based on pre-school curriculum in robotic intervention for children with autism. Procedia Comput Sci 42:214–221. https://doi.org/10.1016/j.procs.2014.11.054

Acknowledgements

We are grateful to the parents, participant pupils, and teaching staff at Autism Pooyesh Primary School in Tabriz for allowing and supporting us to recruit participants and collect data at the school.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This study was carried out with the approval of the Ethics Committee of the University of Tabriz, Faculty of Electrical and Computer Engineering (approval number: 01/96) and the Special Education Organization of East Azerbaijan Province, Tabriz, Iran (approval number: 46/96). Before the study started, all of the parents/caretakers/legal guardians and the children received a full explanation of the study. Because the children were underage and had difficulties in communicating, their parents/caretakers/legal guardians signed informed consent forms on their behalf for participation in the study, which would be discontinued if any significant sign of distress or discomfort was observed. Moreover, the parents agreed to the sessions being video-recorded and to the results being shared with the scientific community, provided that anonymity and confidentiality were guaranteed.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Aryania, A., Aghdasi, H.S., Beccaluva, E.A. et al. Social engagement of children with autism spectrum disorder (ASD) in imitating a humanoid robot: a case study. SN Appl. Sci. 2, 1085 (2020). https://doi.org/10.1007/s42452-020-2802-4
