Abstract

Feature selection (FS) is an effective data pre-processing method that reduces the dimensionality of datasets and is used in bioinformatics, finance, and medicine. Traditional FS approaches, however, frequently struggle to identify the most important features when dealing with high-dimensional data. To alleviate the imbalance between the exploration and exploitation abilities of the Whale Optimization Algorithm (WOA), we propose an enhanced WOA, namely SCLWOA, that incorporates sine chaos and comprehensive learning (CL) strategies. The CL mechanism improves the ability to explore, while the sine chaos is used to enhance the exploitation capacity and help the optimizer obtain a better initial solution. The performance of SCLWOA was evaluated comprehensively on the IEEE CEC2017 test functions, including a qualitative analysis and comparisons with other optimizers. The results demonstrate that SCLWOA is more accurate and converges faster than the compared algorithms. In addition, a binary variant of SCLWOA (BSCLWOA) and other binary optimizers obtained through a mapping function were evaluated on 12 UCI data sets. BSCLWOA proved very competitive in classification precision and feature reduction.

1 Introduction

Data collection and storage technology has been transformed by the rapid development of information technology, image processing, natural language processing [1, 2], and other fields. As the core part of Knowledge Discovery in Databases (KDD) [3], data mining [4,5,6] aims to automatically extract hidden information from vast amounts of collected data. The acquired data sets are usually high-dimensional and contain irrelevant and redundant features. Processing them without any reduction consumes substantial computational resources and ultimately degrades the performance of the learning algorithm. Feature selection, as a representative data preprocessing method, has received wide attention in recent decades. It performs well in lowering the data’s feature dimension [7] and increasing prediction accuracy.

Filter [8, 9], wrapper [10, 11], and hybrid [12,13,14,15] methods are the three main categories [16] of conventional feature selection techniques. The filter approach assesses and chooses feature subsets directly from the characteristics of the training data [5], without involving a learning algorithm. Thus, it is fast to compute, but its average deviation is large. The wrapper method builds many models with different subsets of input features and selects the subset whose model performs best according to the chosen performance metrics. Therefore, the wrapper method is usually better than the filter method at selecting smaller feature subsets. Hybrid methods combine two or more feature selection methods to inherit the advantages of filters and wrappers and ultimately obtain a better subset of features [7, 17,18,19].

Researchers widely favor the wrapper method because it improves search efficiency and accuracy by using various search strategies to obtain a better subset. Early search strategies include branch-and-bound search [20], which improves search efficiency by pruning many redundant features; Sequential Forward Search (SFS), which generates feature subsets by adding features to an empty set [21]; and Sequential Backward Search (SBS), which generates feature subsets by removing features [22] from the complete set [23]. Population-based search strategies are particularly suitable for feature selection problems because a group of non-dominated solutions (feature subsets) can be found in a single run. Meta-heuristic search can quickly obtain high-quality solutions [9] across the whole search space, so it is increasingly used to solve FS.

Deterministic optimization methods such as gradient descent have long been preferred for their precision and repeatability, but they may not always be sufficient when dealing with complex problems [24,25,26]. In such cases, evolutionary methodologies such as genetic algorithms, which explore a wide range of potential solutions, can offer a promising alternative [27]. However, it is important to note that these approaches can be computationally expensive and time-consuming. Moreover, optimizing complex systems requires consideration of multiple objectives [28, 29], as single-objective optimization may not always yield desired outcomes [30]. Nevertheless, multi-objective optimization is not always necessary or appropriate [31], and a combination of single-objective and multi-objective approaches may prove more effective depending on the problem at hand [32]. Therefore, it is crucial to carefully evaluate the problem requirements before selecting an optimization methodology.

At present, many Meta-heuristic Algorithms (MAs) have been applied to practical problems, such as Particle Swarm Optimizer (PSO) [33, 34], Ant Colony Optimization (ACO) [35], Grey Wolf Optimization (GWO) [36], Harris Hawks Optimizer (HHO) [37], Grasshopper Optimization Algorithm (GOA) [38], Differential Evolution (DE) [39], Slime Mould Algorithm (SMA) [40, 41], Colony Predation Algorithm (CPA) [42], Runge Kutta optimizer (RUN) [43], Rime Optimization Algorithm (RIME) [44], Hunger Games Search (HGS) [45] and Sine Cosine Algorithm (SCA) [46]. No single algorithm, however, is appropriate for every problem, owing to the diversity and complexity of practical problems. As a result, it is crucial to research optimization techniques for various situations. Therefore, many improved MAs have been proposed, including Nelder-Mead simplex [47], Opposition-based Marine Predators Algorithm (MPA-OBL) [48], Random Learning Slime Mould Optimization (ISMA) [49], Improved Seagull Optimization Algorithm (ISOA) [50], Enhanced Elephant Herding Optimization (EEHO) [51], Improved Archimedes Optimization Algorithm (I-AOA) [52], Opposition-based Levy Flight Chimp Optimizer (ICHOA) [53], Multi-population Cooperative Coevolutionary Whale Optimization Algorithm (MCCWOA) [54], Adaptive Chaotic Grey Wolf Optimization (ACGWO) [55], Memory-based Harris Hawks Optimization (MEHHO) [56], and Hybrid Wind Driven-based Fruit Fly Optimization (WDFO) [57]. MAs have been applied to solve many problems, such as bankruptcy prediction [58], economic emission dispatch [59], economic load dispatch [60], feature selection [61,62,63], constrained multi-objective optimization [64], global optimization [65], large-scale complex optimization [66], feed-forward neural networks [67], scheduling optimization [68, 69], multi-objective optimization [70], and dynamic multi-objective optimization [71].

The use of MAs for feature selection has also attracted much investigation. Al-tashi et al. [72] proposed a discrete BGWOPSO that combined GWO with Particle Swarm Optimization (PSO) to perform feature selection on text. Abd et al. [73] proposed a hybrid algorithm of the Marine Predators Algorithm (MPA) and KNN to solve feature selection problems. Xue et al. [74] proposed an Adaptive Particle Swarm Optimization (SAPSO) and applied it to solve FS. Samy et al. [75] proposed a new binary WOA using the Optimal Path Forest (OPF) technique as an objective function to select the optimal subset for the classification problem. Hussien et al. [76] proposed two binary WOA variants for feature selection, which reduced the complexity of the system and improved its performance. Tubishat et al. put forward a Dynamic Butterfly Optimization Algorithm (DBOA), which is an enhanced Butterfly Optimization Algorithm (BOA) [77]. Fang et al. [78] proposed a hybrid Nonlinear Binary Grasshopper Whale Optimization Algorithm (NL-BGWOA) to solve the feature selection problem and achieved good results on most high-dimensional datasets. Hassanien et al. [79] proposed a method to recognize human emotions using an elephant herding optimization algorithm and support vector regression and verified its effectiveness. Houssein et al. [80] proposed an improved Beluga Whale Optimization (BWO) algorithm based on dynamic candidate solutions to solve feature selection problems of different dimensions. Barshandeh et al. [81] used the extended Learning-Automata mechanism to re-integrate and develop the Jellyfish Search algorithm and Marine Predator Algorithm (MPA). They verified the advantages of the proposed algorithm on 38 test functions and 10 data sets. Emary et al. [82] presented an extension of Ant-lion Optimization (ALO) with Levy flight (LALO) for feature selection. Sayed et al. [83] proposed a new Chaotic Dragonfly Feature Selection Algorithm (CDA) that embeds a chaotic map in the dragonfly search iterations. Abualigah et al. [84] used an Improved Particle Swarm Optimization Algorithm (FSPSOTC) as an FS method, and experiments validated that it improved the effectiveness of text clustering techniques by producing a new subset of informative features. Thom et al. [85] presented a discrete Coyote Optimization Algorithm (COA) called Binary COA (BCOA). Using a hyperbolic transfer function within a wrapper model, the optimal feature subset of the dataset is selected for classification. Rajalaxmi et al. [86] developed a wrapper-based Binary Improved Grey Wolf Optimization (BIGWO) method for classifying Parkinson’s disease with optimal feature sets. Li et al. [87] proposed an Improved Sticky Binary PSO (ISBPSO) algorithm for feature selection.

These methods are efficient ways for FS. One of the population-based MAs, namely WOA [88], focuses on mathematical modeling by simulating the humpback whale’s bubble-net hunting style. WOA has few model parameters, fast convergence speed, and easy implementation compared to other optimizers. Therefore, it has been widely used in engineering technology [89,90,91,92] and other fields.

Although WOA performs well on simple nonconvex and similar problems, it struggles with complex optimization problems and tends to fall into local optima. Searching around the best individual and searching around random individuals are the two basic search modes of WOA. The contraction and spiral update process around the current optimum is crucial to ensuring that the algorithm has excellent local search ability and fast convergence speed. However, it also makes it difficult to move beyond a local optimum at a later stage. Therefore, introducing appropriate, feasible strategies to enhance WOA’s ability to explore globally and exploit locally is a direction worthy of study.

This research develops a comprehensive learning whale algorithm that addresses the imbalance between WOA’s exploration and exploitation. First, sine chaos is introduced into WOA. The sine chaos strategy generates a chaotic sequence that replaces pseudorandom numbers when generating the initial population. Experiments show that sine chaos yields an initial population with a more uniform distribution, which helps the algorithm obtain better results in the early evolution stage. This work also proposes a comprehensive learning strategy to enrich the diversity of the swarm. The CL strategy contains three mutually exclusive equations [93], which give the algorithm more ways to escape from local optima and maintain the balance between exploration and exploitation. Given the search performance of SCLWOA, this paper considers its application to FS in high-dimensional feature spaces. By changing the encoding mode of SCLWOA for feature selection, its effectiveness is verified on 12 UCI high-dimensional medical data sets. This paper adopts K Nearest Neighbor (KNN) as the classifier for experimental evaluation. KNN shows excellent performance in training speed and classification accuracy [94, 95], so it is widely utilized to classify small sample data. The specific contributions of this paper are as follows:

1. Propose an SCLWOA with sine chaos and comprehensive learning mechanisms to strike a new balance between exploration and exploitation for WOA.

2. SCLWOA has better global search capability than other established and improved optimization algorithms.

3. The improved binary version based on SCLWOA has an excellent performance in feature optimization compared to other algorithms.

The structure of this article is as follows: Sect. 2 describes the exploration and exploitation processes of the standard WOA in detail. Section 3 introduces an improved SCLWOA incorporating sine chaos and CL strategies and proposes BSCLWOA for feature selection tasks. The experimental setup and results analysis of this study are shown in Sect. 4. Finally, the conclusions and a description of the work are given in Sect. 5.

2 An Overview of Whale Optimization Algorithm (WOA)

WOA is an MA inspired by the humpback whales’ predatory behavior, which was proposed in 2016. It consists of two main stages, exploration and exploitation. The term "exploration" refers to the algorithm’s ability to search globally, during the iterative process, for regions with potential optimal solutions that have not yet been visited. In contrast, the term "exploitation" refers to the algorithm’s ability to focus on local search within the regions that have already been found [96]. During the exploitation phase, WOA mainly models the humpback whales’ bubble-net behavior mathematically.

2.1 Exploration

In the exploration phase, each agent updates its position with reference to a randomly selected individual. This stage allows the algorithm to perform a global search to obtain a better solution [88],

where \(X_{rand}\) is the random agent selected from the current population, C and A are two coefficients. The specific equations of A and C are presented in Eqs. (3) and (4).

where \(a\) is a parameter that decreases linearly from 2 to 0 during the evolving process, and \(r\) is a random number in [0,1].
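For reference, the exploration update of the standard WOA [88] is commonly written as below. The notation (\(t\) for the iteration index, \(D\) for the distance vector, element-wise absolute value and product) is an assumption based on the usual presentation of the algorithm, and the mapping of these four lines to Eqs. (1) to (4) is inferred from the surrounding text.

```latex
\begin{aligned}
D &= \left| C \cdot X_{rand}(t) - X(t) \right| \\
X(t+1) &= X_{rand}(t) - A \cdot D \\
A &= 2a \cdot r - a \\
C &= 2 \cdot r
\end{aligned}
```

Since \(a\) decays from 2 to 0, \(A\) takes values in \([-a, a]\), so large \(|A|\) values (and hence long moves relative to \(X_{rand}\)) occur only in the early iterations.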

2.2 Exploitation

In the exploitation stage of WOA, a bubble-net search mechanism is adopted, embodied in two methods, namely the shrinking encircling strategy and the spiral position update mechanism.

2.2.1 Contraction Encirclement Mechanism

If A lies within [− 1,1], the updated position of an individual can fall anywhere between its current position and the prey.

where \(X_{best}\) represents the current optimal solution.

2.2.2 Spiral Update Position

The whales drive their prey through bubble nets and constantly update their position. This process will first calculate the distance between the current individual and the best individual:

where \(b\) is a constant that defines the shape of the logarithmic spiral, and \(l\) is a random number in [− 1, 1]. To balance the two search strategies, a random parameter \(p \in \left[ {0,1} \right]\) is introduced as follows:
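For reference, the exploitation updates and the switching rule of the standard WOA [88] are commonly written as below. The symbols \(D\), \(D'\) and \(t\) and the threshold 0.5 follow the usual presentation of the algorithm and are assumptions here; the correspondence with Eqs. (5) to (9) is inferred from the surrounding text.

```latex
\begin{aligned}
D &= \left| C \cdot X_{best}(t) - X(t) \right|, &
X(t+1) &= X_{best}(t) - A \cdot D \\
D' &= \left| X_{best}(t) - X(t) \right|, &
X(t+1) &= D' \cdot e^{bl} \cos(2\pi l) + X_{best}(t)
\end{aligned}

X(t+1) =
\begin{cases}
X_{best}(t) - A \cdot D, & p < 0.5 \\
D' \cdot e^{bl} \cos(2\pi l) + X_{best}(t), & p \ge 0.5
\end{cases}
```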

WOA’s pseudo-code is shown in Algorithm 1.
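The update rules of Sect. 2 can be sketched in a few lines of Python. This is a minimal illustration of the standard WOA step under stated assumptions, not the authors' MATLAB implementation: the function name `woa_step`, the default `b=1.0`, the 0.5 threshold on `p`, and the use of one scalar `A` and `C` per agent are choices made for this example.

```python
import math
import random


def woa_step(population, best, a, b=1.0, rng=random):
    """Return new positions after one standard WOA update (illustrative sketch)."""
    new_population = []
    for x in population:
        A = 2.0 * a * rng.random() - a   # coefficient A, lies in [-a, a]
        C = 2.0 * rng.random()           # coefficient C, lies in [0, 2]
        p = rng.random()                 # switches between the two strategies
        if p < 0.5:
            # |A| < 1: shrink toward the best agent (exploitation);
            # otherwise move relative to a random agent (exploration).
            target = best if abs(A) < 1.0 else rng.choice(population)
            new_x = [t - A * abs(C * t - xi) for t, xi in zip(target, x)]
        else:
            # Logarithmic spiral around the best agent.
            l = rng.uniform(-1.0, 1.0)
            spiral = math.exp(b * l) * math.cos(2.0 * math.pi * l)
            new_x = [abs(bj - xi) * spiral + bj for bj, xi in zip(best, x)]
        new_population.append(new_x)
    return new_population
```

A caller would decrease `a` linearly from 2 to 0 over the run, evaluate the fitness of the returned positions, and update `best` before the next call; boundary handling is omitted here.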

3 Proposed Comprehensive Learning WOA Algorithm

The proposed SCLWOA adds two main strategies: (1) the idea of chaos optimization is introduced into WOA, and the initial population is generated more uniformly by exploiting the randomness, ergodicity, and regularity of sine chaos; (2) a CL mechanism is added to enrich the individuals’ diversity using three mutually exclusive equations. Therefore, the algorithm can avoid falling into the local optimum trap. Besides, the feature selection problem can be solved by converting SCLWOA to a binary version.

3.1 Sine Chaotic Mapping

Global optimization means searching the solution space for the minimal or maximal fitness of an objective function. The global optimum is more likely to be reached if a variety of randomly chosen points in the solution space can be supplied. Therefore, stochasticity is the key to dealing with global optimization problems [97]. Chaotic mapping is characterized by uncertainty, irreducibility, and unpredictability; it is seemingly random, irregular motion that arises in a deterministic system. Common chaotic mappings include Logistic mapping, Sine mapping, Gaussian mapping, Tent mapping, Cubic mapping, and so on. We mainly use the simple sine mapping to realize population initialization, and its mathematical form is given by Eq. (10).
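A minimal sketch of chaotic initialization is given below. It assumes the widely used form of the sine map, \(x_{k+1} = \frac{a}{4}\sin(\pi x_k)\) with \(0 < a \le 4\), which may differ in detail from the paper's Eq. (10). The function names, the seed value `x0=0.7`, and the choice to fill the whole population from a single sequence are illustrative assumptions.

```python
import math


def sine_map_sequence(x0, length, a=4.0):
    """Iterate x_{k+1} = (a / 4) * sin(pi * x_k); values stay in [0, 1] for 0 < a <= 4."""
    seq, x = [], x0
    for _ in range(length):
        x = (a / 4.0) * math.sin(math.pi * x)
        seq.append(x)
    return seq


def sine_chaos_population(n, dim, lower, upper, x0=0.7):
    """Map one chaotic sequence of n * dim values onto the box [lower, upper]^dim."""
    chaos = sine_map_sequence(x0, n * dim)
    return [
        [lower + chaos[i * dim + j] * (upper - lower) for j in range(dim)]
        for i in range(n)
    ]
```

For example, `sine_chaos_population(30, 30, -100.0, 100.0)` produces 30 agents of dimension 30 inside a box of the kind used by many benchmark suites; the bounds here are placeholders.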

3.2 Comprehensive Learning Strategy

Balancing the exploration and exploitation phases is crucial to determining whether the algorithm can find the optimal agent. Compared to other optimizers, WOA has good exploitation performance but is weak in global space search. Therefore, a comprehensive learning mechanism is incorporated to strengthen the search behavior of WOA. This is manifested in three mutually exclusive equations:

The Levy distribution is a continuous probability distribution of non-negative random variables, which is well suited to exploring large unknown search spaces and escaping local optima. Therefore, in Eq. (11), the Levy flight mechanism is introduced to WOA to get rid of the local optimum. The specific formula is expressed as follows:

where \(\gamma\) is the Levy flight parameter, and the product \(\oplus\) represents term-by-term multiplication.
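Levy-distributed step lengths are often generated in metaheuristics with Mantegna's algorithm. The sketch below shows that generator only; it is not a reconstruction of Eq. (11). The stability index `beta=1.5` and the function names are assumptions for illustration, and this generator yields symmetric (signed) steps.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Scale of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)


def levy_step(dim, beta=1.5, rng=random):
    """Draw one heavy-tailed step per dimension: u / |v|^(1/beta)."""
    sigma = levy_sigma(beta)
    return [
        rng.gauss(0.0, sigma) / abs(rng.gauss(0.0, 1.0)) ** (1.0 / beta)
        for _ in range(dim)
    ]
```

Most draws are small, with occasional very long jumps; those long jumps are what let an agent leave the neighborhood of a local optimum. A term-by-term product with a position difference, as the \(\oplus\) notation suggests, would be applied by the caller.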

The spiral mechanism helps to avoid premature convergence, and the mean value processing scheme is adopted in Eq. (12). In each dimension, solutions are updated according to a differential vector pointing to the overall average value of all solutions. By learning from the average behavior and effectively using the information results of all schemes, the population diversity of the algorithm is maintained, and the local optimum can be avoided. The expression of the updated equation is shown as Eq. (12).

Equation (13) guides individuals to approach the optimal direction by learning from the optimal solution to gain a better solution to enhance the local search ability.

The pseudo-code of SCLWOA is given in Algorithm 2. The detailed flowchart is shown in Fig. 1.

3.3 Binary SCLWOA

Feature selection is a search optimization problem. For a feature set of size dim, the search space consists of \({2}^{dim}-1\) possible non-empty subsets, which is far too large to search exhaustively. As an improved algorithm of the original WOA, the proposed SCLWOA has greatly improved search performance. Therefore, this study applies it to obtain a better feature subset.

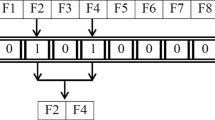

An effective population-based strategy is to search the potential feature space and convert the feature subset into binary population individuals. Feature selection is a discrete combinatorial optimization problem, and the primary task of the method is to discretize the search strategy. The transfer function and position update equation selected in this paper are shown in Eqs. (14) and (15). Under the FS strategy based on SCLWOA, each individual \(x_{i} = (x_{i,1} ,x_{i,2} ,...,x_{i,dim} )\) is regarded as a feature subset, and the individual dimension dim represents the number of features in the original data set. If a feature is chosen, the corresponding dimension value is 1; otherwise, the value is 0 [98].
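The discretization step can be illustrated as follows. The sketch assumes an S-shaped (sigmoid) transfer function with stochastic thresholding, which is a common choice for binary metaheuristics; the paper's Eqs. (14) and (15) may use a different transfer function. The names `sigmoid_transfer` and `binarize` and the clamping range are hypothetical.

```python
import math
import random


def sigmoid_transfer(v):
    """Map a continuous coordinate to a selection probability in (0, 1)."""
    v = max(-60.0, min(60.0, v))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-v))


def binarize(position, rng=random):
    """Select feature j (bit 1) with probability sigmoid_transfer(position[j])."""
    return [1 if rng.random() < sigmoid_transfer(v) else 0 for v in position]
```

Each resulting bit vector encodes one feature subset: bit j equal to 1 means feature j is passed to the classifier.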

The fitness function measures the merit of a selected subset, and its choice directly affects whether the algorithm can eventually find the optimal subset. FS aims to select the subset with the fewest features while preserving classification accuracy. To reflect these two objectives, the adopted fitness function is shown in Eq. (16):

where \(\gamma_{R} (D)\) represents the classification error rate of the KNN classifier, D denotes the number of features in the original data set, \(\alpha \in \left[ {0,1} \right],\,\beta = 1 - \alpha\), R denotes the length of the selected feature subset. This paper selects the best point \(\alpha =0.05\) through experiments.
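A weighted-sum fitness of this kind can be sketched as below. The form \(\alpha \cdot \gamma_{R}(D) + \beta \cdot |R| / |D|\) is the one commonly used in wrapper-based FS and is assumed here; in particular, which of the two terms \(\alpha\) weights in Eq. (16) is an assumption taken from the order in which the symbols are defined. The function name and argument names are hypothetical.

```python
def fs_fitness(error_rate, n_selected, n_total, alpha=0.05):
    """Weighted sum of classification error and selected-feature ratio (lower is better).

    Assumption: alpha weights the error rate and beta = 1 - alpha weights the ratio.
    """
    beta = 1.0 - alpha
    return alpha * error_rate + beta * (n_selected / n_total)
```

With an error rate of 0.1 and 5 of 20 features selected, this gives 0.05 * 0.1 + 0.95 * 0.25 = 0.2425. In a wrapper setting, `error_rate` would come from evaluating the KNN classifier on the selected columns, for instance with cross-validation.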

3.4 Computational Complexity Analysis

The time complexity of SCLWOA is mainly determined by four steps: population initialization, sine chaos, fitness calculation, and position updating with the comprehensive learning strategy. Here n indicates the number of agents, Dim denotes the dimensionality of the agents, and T means the maximum number of iterations. The time spent calculating the fitness value depends on the chosen optimization problem, so its complexity is provisionally set to \(O(T*n)\). The population initialization depends primarily on the number of agents and the dimensionality, so its time complexity is \(O(n*Dim)\). The time complexity of sine chaos is \(O(n*Dim),\) and the computational complexity of updating the positions is \(O\left(T*n*Dim\right)\). Besides, the comprehensive learning strategy costs \(O(T*n*Dim)\). Finally, the time complexity of SCLWOA is \(O((T+3)*n*Dim+T*n)\), which is dominated by the \(O(T*n*Dim)\) term (Table 1).

4 Experimental Results and Analysis

To confirm the performance of SCLWOA, a set of targeted experiments is carried out in this paper, and the comparative results are analyzed comprehensively. Ensuring fair comparison is a crucial component of evaluating the effectiveness of models or algorithms in the AI literature [99, 100]. To ensure impartiality, all models must use identical datasets that accurately represent the problem domain [101]. Additionally, it is crucial to employ appropriate assessment measures while accounting for any potential biases that might exist in the data or the process of assessment [102, 103]. By including these aspects, one may reach more meaningful judgments about the effectiveness of models and develop AI systems comprehensively [104]. To decrease the influence of external factors, every task in this work is conducted in the same setting. Regarding the parameter setting of the meta-heuristic algorithms, a total of 30 search agents were set up, and 300,000 iterations were completed. To reduce the influence of experimental contingency, each algorithm was executed 30 times on each benchmark function. The results obtained in this paper are evaluated according to the Average Value of Optimal Function (AVG) and the Standard Deviation Value (STD). The best result for each experiment is shown in bold for clarity. In addition, a non-parametric Wilcoxon signed-rank test at a significance level of 5% is adopted to determine whether the differences between methods are statistically significant. The symbols ‘+’, ‘=’ and ‘-’ indicate that the performance of SCLWOA is better than, equal to, or weaker than that of a competitor, respectively.

Section 4.1 presents a qualitative analysis of SCLWOA, including the balance between exploration and exploitation and the diversity of the population during the iterative process. In Sect. 4.2, we investigate the ability of the sine chaos strategy to initialize the population and the influence of the CL strategy on the final search for the global optimal solution. In Sect. 4.3, given the multi-parameter nature of the CL strategy, we perform a parameter sensitivity analysis. In Sect. 4.4, SCLWOA is compared with seven other standard MAs in terms of convergence speed and accuracy on the benchmark functions. In Sect. 4.5, eight variants of the WOA were selected for the competition. Section 4.6 compares SCLWOA to seven recently developed advanced algorithms. In Sect. 4.7, 12 data sets are selected from the UCI machine learning library to test the performance of binary SCLWOA in feature selection. In Sect. 4.8, the advantages and disadvantages of SCLWOA are summarized and analyzed based on the experimental results.

All experiments were performed on a 2.50 GHz Intel i7 CPU equipped with 16 GB RAM and Windows 10 OS, programmed in MATLAB R2019b.

4.1 The Qualitative Analysis

This section conducted a comprehensive qualitative analysis of SCLWOA. Figure 2 shows the outcomes of unimodal, multimodal, hybrid, and composition functions. Here, column (a) is each algorithm’s three-dimensional position distribution map. Column (b) is the two-dimensional search history of SCLWOA in various functions. Column (c) is the track of the search agent. Column (d) is the average fitness value in the optimization process, which records its convergence curve.

The red dots in Fig. 2b represent the locations of the global optimal solutions. It can be seen that the locations explored are widely distributed, which indicates that SCLWOA has a strong exploration ability and can reach more of the global space. Further, the visited locations are most concentrated near the optimal locations. This indicates that SCLWOA can search intensively around the optimal location and finally obtain the global optimal solution. In Fig. 2c, the motion trajectories of the individuals fluctuate strongly during the iterative process, which indicates the high exploration ability of SCLWOA.

In MAs [105,106,107], the exploration–exploitation balance is a common problem [108]. An algorithm’s balance depends on its behavior throughout the search process and is analyzed through how its effort is divided between exploration and exploitation. Another way to measure the exploration–exploitation balance is to use a population diversity metric. The above experiments have shown that SCLWOA has a strong exploration ability, so further research on its exploration–exploitation balance is necessary. Figure 3 shows the analysis of the balance and diversity performance of SCLWOA and WOA.

Through the side-by-side comparison of columns (a) and (b) in Fig. 3, we can see that SCLWOA spends a substantially larger share of its effort in the exploration phase, whether on unimodal, multimodal, hybrid, or composition functions. This is also supported by the diversity analysis in Fig. 3c. The curve of SCLWOA decreases more slowly than that of the original WOA, because SCLWOA sacrifices part of the convergence rate in exchange for enhanced population diversity. Throughout the evaluation process, the fluctuation of SCLWOA is always larger than that of WOA. Especially in the middle and late iterations, it still maintains considerable population diversity, which effectively helps the algorithm avoid local optima and seek higher-quality solutions. This also makes SCLWOA more robust to problems of different natures. From the analysis of balance and diversity, it is clear that with the combination of sine chaos and CL, SCLWOA can further balance exploration and exploitation, find better-quality individuals, and maintain a notable convergence rate.

4.2 Cross-evaluation of the Proposed SCLWOA

This section further verifies that SCLWOA has better search performance than the single-strategy variants and the original WOA.

Sections 3.1 and 3.2 introduce two strategies, sine chaos and comprehensive learning (CL), into the original WOA. In this section, the performance of each combination of the two strategies is tested and compared. In Table 2, "1" means the mechanism is selected, and "0" means it is not. We refer to the WOA combined with the CL strategy as CLWOA and to the fusion of WOA and the sine chaos strategy as SWOA. In this section, 30 functions from CEC 2017 are used to test and evaluate the performance of the SCLWOA optimizer. See Table 17 in "Appendix" for the specific contents of the functions. Dim is the dimension of the function, and the "search range" is the boundary of the search space of the current function.

From the horizontal comparison in Table 3, it is clear that SCLWOA, which integrates sine chaos and CL, is significantly better than the original WOA in optimization. The curves for F9 and F30 in Fig. 4 provide further evidence: SWOA and SCLWOA, whose initial agents are generated with sine chaos, significantly outperform the other algorithms at the starting stage and find the best solution in the end. These results show that a good initial solution benefits the search for the global optimum by accelerating the population’s convergence and improving the final solution’s accuracy. CLWOA, which incorporates the Levy flight, significantly improves the exploration capability of the original WOA, allowing it to escape from local optima and thus obtain better solutions, especially on multimodal problems. The CL strategy, which considers all three behaviors, also increases the overall population diversity, allowing the algorithm to cope with more complex problems. SCLWOA combines the advantages of both strategies while retaining the fast convergence of the original WOA. The Wilcoxon signed-rank results in Table 3 show more directly that SCLWOA gives the best solution for most problems relative to the single-mechanism variants. Bold indicates that the method achieved the best results among all the methods compared in this experiment. In summary, the SCLWOA incorporating sine chaos and CL strategies is the variant with the best performance.

4.3 Influence of Parameters

This section examines the effects of the algorithm parameters, including the number of evaluations and the population size. The proposed comprehensive learning strategy is the key to balancing the exploration and exploitation phases, and it has a large impact on the performance of the algorithm. The parameter p mainly determines the stage at which the CL strategy is executed, so it is valuable to analyze the value of p. The CL strategy includes three mutually exclusive equations to balance the exploration and exploitation phases of the optimizer, and the introduced parameters r1 and r2 determine which equation is adopted in the update. Because the adopted CL strategy profoundly affects the algorithm’s balance between exploration and exploitation, the values of r1 and r2 also need to be verified. The parameter comparison experiments in this section are conducted under the same evaluation framework: the 30 test functions of CEC 2017, 30 search agents (n), dimension 30 (Dim), 300,000 evaluations (T), and 30 repetitions per test function. The default parameter settings are shown in Table 1.

As noted in Sect. 3.2, WOA performs strongly in terms of exploitation capability but is prone to falling into local optimum (LO) values, so the CL strategy is introduced to reduce this risk. Four ranges of p are set in the experiments to evaluate the best stage for the CL strategy, namely \(p>0.6,p>0.7,p>0.8,p>0.9\). Among them, the default is \(p>0.8\). The remaining optimizer parameters are shown in Table 1, and the results for the different p ranges are shown in Fig. 5. It can be found that changing the range of p has no significant impact on the overall performance of SCLWOA. Therefore, we keep the default and apply the CL strategy at \(p>0.8\).

The remaining parameter comparison experiments use the default setting \(p>0.8\). The benchmark r1 and r2 values are shown in Table 4, and the detailed parameter settings of the remaining optimizers are shown in Table 1.

From Fig. 5, it is easy to see that the algorithm with the benchmark r1 and r2 values performs more strongly on the unimodal function F2, the multimodal function F6, and the hybrid function F14, and maintains a certain lead on the remaining functions. The experimental results indicate that the CL strategy contributes most to the algorithm when \(r1=0.1,r2=0.4\).

4.4 Comparison with Other Well-known MAs

To further assess the optimization performance of SCLWOA, we selected seven well-known MAs for comparison, namely BA [109], WOA [88], PSO [110], SCA [46], FA [111], GWO [36] and HHO [37]. Table 5 shows the settings of their parameters. In this section, 30 benchmark functions from IEEE CEC2017, recently adopted by most of the literature in the evolution community, are used for comparison. All experiments in this section are carried out under the evaluation framework. The population size is 30, the dimension is 30, and the maximum number of evaluations is 300,000. Each function is executed 30 times.

In this paper, the final numerical result of each algorithm is assessed by the average (AVG) and the standard deviation (STD), where ‘+/−/=’ stands for better than/worse than/equal to the benchmark optimizer, respectively. The Wilcoxon signed-rank test at the significance level of 0.05 was adopted to determine whether the differences between methods are statistically significant. A p-value lower than 0.05 indicates that the difference is statistically significant. Moreover, we conducted the Friedman [112] test to summarize the final experimental statistics and give the final average ranking.
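The pairwise comparison described above can be sketched in a few lines. The following is a simplified Wilcoxon signed-rank test using the normal approximation, without tie or continuity corrections, so its p-values may differ slightly from those of a statistics package. The helper `compare` and its use of the mean to decide the direction are assumptions made for illustration, and lower values are assumed to be better.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values ascending (1 = smallest); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sided p-value for paired samples via the normal approximation."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences dropped
    n = len(diffs)
    if n == 0:
        return 1.0  # identical samples: no evidence of a difference
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    std = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / std
    return min(1.0, math.erfc(abs(z) / math.sqrt(2)))


def compare(proposed: Sequence[float], rival: Sequence[float],
            alpha: float = 0.05) -> str:
    """Return '+', '-', or '=' for the proposed method (minimization assumed)."""
    if wilcoxon_signed_rank(proposed, rival) >= alpha:
        return "="
    mean_p = sum(proposed) / len(proposed)
    mean_r = sum(rival) / len(rival)
    if mean_p == mean_r:
        return "="
    return "+" if mean_p < mean_r else "-"
```

Applied to the 30 paired run results of two optimizers on one function, `compare` yields one of the three symbols, and counting the symbols over all functions gives a ‘+/−/=’ summary of the kind reported in the tables.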

Table 6 shows each algorithm’s optimal function values and standard deviations on the IEEE CEC2017 function test. Bold indicates that the method achieved the best results among all the methods compared in this experiment. From the final ARV and RANK values, it can be found that SCLWOA ranks first with a minimum average value of 1.8667, a considerable improvement over WOA’s 5.1667. HHO is the best of the other algorithms, but there is still a certain gap in search performance compared with SCLWOA. This reflects the powerful search performance of SCLWOA. Although SCLWOA is not optimal for the multimodal functions from F5 to F10, its minimum value is close to the optimal value. SCLWOA also performs well on mixed problems and complex problems, and it outperforms the high-performance optimization algorithms GWO, HHO, and WOA on the composition functions F23 to F30.

Table 7 shows the average p-values of SCLWOA and other MAs on 30 competition functions, where ‘+/−/=’ indicates the number of functions whose p-value is ‘greater than/less than/equal to’ 0.05. The experimental results in the table show that the p-value of SCLWOA against the other MAs is far less than 0.05 on most functions, which indicates that SCLWOA has clear advantages over the other MAs.

Figure 6 shows the convergence curves during the iterative process. The figure shows that BA converges faster on the F1 function but quickly becomes trapped in a local optimum. In contrast, SCLWOA still has outstanding exploitation ability in the later stage, mainly because the CL strategy helps the algorithm maintain good population diversity and explore more regions. The convergence plots of F13, F15, and F19 show that SCLWOA exhibits fast convergence and finally obtains the optimal solution. SCLWOA is slightly better than HHO on F30. SCLWOA has a better initial position at the beginning of the run, which mainly benefits from the sine chaos strategy.
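To make the role of chaotic initialization concrete, the following sketch generates an initial population from a sine map. It assumes the commonly used form \(x_{k+1}=\mu \sin(\pi x_k)\) with \(\mu=0.99\); the exact map and constants used by SCLWOA are those defined in the method section and may differ. The function name, the seeding scheme, and the bounds used in the usage note are illustrative.

```python
import math
import random
from typing import List


def sine_chaos_population(pop_size: int, dim: int,
                          lower: float, upper: float,
                          mu: float = 0.99, seed: int = 0) -> List[List[float]]:
    """Build an initial population by iterating a sine map per dimension.

    ASSUMPTION: the map is x_{k+1} = mu * sin(pi * x_k) on (0, 1); this is
    a common form of the sine map, not necessarily the paper's exact one.
    """
    rng = random.Random(seed)
    # One chaotic state per dimension, seeded away from the fixed point at 0.
    state = [rng.uniform(0.05, 0.95) for _ in range(dim)]
    population = []
    for _ in range(pop_size):
        # Advance every dimension one step along its chaotic sequence.
        state = [mu * math.sin(math.pi * s) for s in state]
        # Map the chaotic value from [0, 1] into the search bounds.
        population.append([lower + s * (upper - lower) for s in state])
    return population
```

For example, `sine_chaos_population(30, 30, -100.0, 100.0)` produces 30 agents of dimension 30 inside example bounds of [-100, 100], and these agents would replace uniformly random agents as the starting population.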

4.5 Compared with the Improved WOA Variants

In this section, we further compare the performance of SCLWOA with eight advanced WOA variants on CEC 2017. These variants include CWOA [113], CCMWOA [114], LWOA [115], IWOA [116], BWOA [117], BMWOA, OBWOA [118], and ACWOA [119].

Based on the final ranking in Table 8, it can be seen that SCLWOA still ranks first in comparison with the WOA variants. Bold indicates that the method achieved the best results among all the methods compared in this experiment. SCLWOA achieves global optimality on most of the tested functions. This benefits from the population diversity maintained by the CL strategy, which helps the algorithm explore more promising regions as the number of evaluations grows. LWOA ranked first on the unimodal function F2 and the multimodal functions F4 and F5. Although SCLWOA did not converge to the best value on these functions, it ranked second. On the composition functions, the STD values of CCMWOA and BWOA reach 0, indicating a more stable performance than the other algorithms on these functions. However, SCLWOA maintains a low STD value on all functions, which indicates that SCLWOA has better robustness and can handle more complex problems.

Table 9 shows the results of Wilcoxon’s signed-rank test. It can be seen that the p-values of SCLWOA against the other WOA variants are much lower than 0.05 on most functions, which demonstrates that SCLWOA is significantly improved compared with the different variants. Nevertheless, the p-value is 1 on some composition functions because the initial population has random behavior.

Figures 7 and 8 show the convergence curves of each optimizer during the evaluation process. The figures show that SCLWOA frequently manages to escape local optima and locate better solutions than the other algorithms, which prematurely enter a local optimum. This is because the Levy flight mechanism in the CL strategy helps the algorithm avoid local optima and search a broader space. On F7, SCLWOA has relatively high-quality solutions in the initial stage. This is attributed to the sine chaos initialization, which scatters the initial solutions more evenly and gives a better chance of finding the global optimum. Based on the combined performance considerations above, SCLWOA is the superior variant.
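The escape behavior attributed to Levy flights can be illustrated with Mantegna's algorithm, a widely used way to draw heavy-tailed step lengths. The sketch below assumes a stability index of \(\beta=1.5\) and a hypothetical step scale of 0.01; how SCLWOA embeds the Levy step in its CL equations is not reproduced here.

```python
import math
import random
from typing import List, Sequence


def mantegna_sigma(beta: float = 1.5) -> float:
    """Standard deviation of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(rng: random.Random, beta: float = 1.5) -> float:
    """Draw one heavy-tailed step: mostly small moves, occasionally a long jump."""
    u = rng.gauss(0.0, mantegna_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # guard against division by zero
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def levy_perturb(position: Sequence[float], rng: random.Random,
                 scale: float = 0.01, beta: float = 1.5) -> List[float]:
    """Perturb each coordinate with an independent Levy step.

    ASSUMPTION: `scale` is a hypothetical step-size factor for illustration.
    """
    return [x + scale * levy_step(rng, beta) for x in position]
```

Because the step distribution has heavy tails, most perturbations refine the current solution while rare long jumps move an agent far from a local optimum, which matches the behavior described for the convergence curves.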

4.6 Compared with State-of-the-art Advanced Evolutionary Algorithms

To more effectively verify SCLWOA’s powerful exploration ability, other well-known advanced evolutionary algorithms were included in the comparison, namely CGPSO [120], SCADE [121], OBSCA [122], CLSCA [123], CGSCA, CMFO and OBLGWO [124]. The experiments in this section are done in the evaluation framework, with a population size of 30 individuals and 300,000 evaluations, and each algorithm is executed ten times. The experimental results were checked with the Wilcoxon signed-rank test, where a p-value less than 0.05 implies a significant improvement compared to the other algorithms. The Friedman test ranks the final performance of the algorithms based on the Average Ranking Value (ARV).
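The ARV used for the final ranking can be sketched as follows: on each function the algorithms are ranked by their result, and the ranks are averaged over all functions. The sketch assumes that lower values are better and that ties share the mean rank; the function names are hypothetical, and the sketch does not compute the Friedman test statistic itself.

```python
from typing import Dict, List


def rank_row(values: List[float]) -> List[float]:
    """Ascending ranks (1 = lowest/best); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def average_ranking_values(results: Dict[str, List[float]]) -> Dict[str, float]:
    """Average each algorithm's per-function rank over all functions."""
    names = list(results)
    n_functions = len(results[names[0]])
    totals = {name: 0.0 for name in names}
    for f in range(n_functions):
        row = [results[name][f] for name in names]
        for name, rank in zip(names, rank_row(row)):
            totals[name] += rank
    return {name: totals[name] / n_functions for name in names}


def final_ranking(arv: Dict[str, float]) -> List[str]:
    """Order algorithms from best (lowest ARV) to worst."""
    return sorted(arv, key=arv.get)
```

An ARV close to 1 means that the algorithm ranked first on nearly every function, which is how the average values in the ranking tables can be read.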

As shown in Table 10, SCLWOA continues to rank first in the comparison with the advanced evolutionary algorithms. Bold indicates that the method achieved the best results among all the methods compared in this experiment. SCLWOA reaches the global minimum on the unimodal function F3 and the multimodal functions F5 and F9. On F4, F6, F7, and F8, SCLWOA does not reach the global optimum solution but stays closest to it. This is due to the incorporation of Levy flights in the CL strategy, which gives the algorithm the ability to jump out of local optima. The behavior of optimal vector learning is also added to balance the exploration–exploitation capabilities. SCLWOA has an absolute advantage on the hybrid functions. This shows that SCLWOA can solve problems well in different situations with better robustness.

Table 11 shows the p-values of the Wilcoxon signed-rank test. The p-values against most competing algorithms are far less than 0.05 on the 30 functions, indicating the strong search performance of SCLWOA.

As seen in Fig. 9, the overall performance of SCLWOA is outstanding. On the multimodal function F9, SCLWOA is already ahead of its rivals in the early stage. This is because the sine chaos mechanism gives the algorithm better initial random agents: the individuals are scattered more evenly in the global space and have a better chance of approaching the global optimal solution. However, on F1, F12, and F13, CGPSO showed better convergence ability but soon became trapped in local optima. In contrast, SCLWOA tends to converge to better solutions because the CL strategy helps the algorithm improve population diversity. The Levy flight mechanism allows the algorithm to jump out of the local optimum and explore more of the search space. On the other functions, SCLWOA shows its powerful global search ability. The algorithm can obtain the optimal value and ensure convergence speed by learning from the optimal scheme.

4.7 Feature Selection Experiment

This section presents a more comprehensive study of the proposed SCLWOA in binary form, following the feature selection rules. The experiment selected 12 medical datasets from the UCI database and used a KNN classifier to test the quality of the selected optimal feature subset. To avoid possible deviations [125] in the feature selection process on high-dimensional data, we carried out tenfold cross-validation [126] for each data set, and the results reported in the tables are the averages.
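The tenfold cross-validation protocol can be sketched with index splits alone. In the sketch below, each sample appears in exactly one test fold, and the reported score is the mean over the ten folds. The function names and the `evaluate` callback are hypothetical, and the shuffling seed is an arbitrary choice.

```python
import random
from typing import Callable, Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int = 10,
                   seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of nearly equal size.
    folds = [indices[i::k] for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


def cross_validated_mean(n_samples: int,
                         evaluate: Callable[[List[int], List[int]], float],
                         k: int = 10, seed: int = 0) -> float:
    """Average a per-fold score, e.g. the classification error of one subset."""
    scores = [evaluate(train, test)
              for train, test in k_fold_indices(n_samples, k, seed)]
    return sum(scores) / len(scores)
```

In a feature selection setting, `evaluate` would train the classifier on the training indices using only the selected features and return its error on the test indices.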

4.7.1 K-nearest Neighbor Classification

As a supervised machine learning algorithm, the k-nearest neighbor (KNN) method is mainly applied to classification and regression problems [127]. The core idea of KNN is to calculate the distance between the test sample and every labeled sample in the training set. Straight-line distance (Euclidean distance) is usually adopted as the basis for distance calculation. The calculation formula is shown in Eq. (17). Then, the K training samples nearest to the test sample are selected according to the chosen K value. The category to which most of these K training samples belong is the final category of the test sample.
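The KNN procedure described above can be sketched directly. The example uses the standard Euclidean distance and a majority vote among the K nearest training samples. The default `k=5` and the toy data in the usage note are illustrative choices, not the settings used in the experiments.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_x: Sequence[Sequence[float]],
                train_y: Sequence[Hashable],
                query: Sequence[float], k: int = 5) -> Hashable:
    """Predict the label of `query` by majority vote of its k nearest samples."""
    # Sort training samples by distance to the query and keep the k closest.
    neighbours = sorted(zip(train_x, train_y),
                        key=lambda pair: euclidean(pair[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    # On a tied vote, the label seen first (the nearest neighbour's) wins.
    return votes.most_common(1)[0][0]
```

For instance, with three samples labeled "a" near the origin and three labeled "b" near (5, 5), `knn_predict(..., (0.2, 0.2), k=3)` returns "a". In a wrapper feature selection method, only the selected feature columns would be passed to `euclidean`.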

4.7.2 The Preparatory Work

Section 3.3 proposes a binary-based BSCLWOA to improve the performance of the classifier. This section will perform a reliability study to carefully evaluate whether the proposed BSCLWOA performs reliably on feature selection problems compared to other well-known optimizers. The experiment collected 12 data sets from the UCI data repository [128], as shown in Table 12.
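To illustrate how a continuous optimizer can be mapped into binary space for feature selection, the following sketch uses an S-shaped (sigmoid) transfer function, which is a common choice for binary metaheuristics. This is an assumption made for illustration: the mapping function actually used by BSCLWOA is the one defined in Section 3.3. The function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def to_binary(position: Sequence[float], rng: random.Random) -> List[int]:
    """Map a continuous position to a 0/1 feature mask.

    ASSUMPTION: each coordinate is selected (1) with probability
    sigmoid(coordinate); other transfer functions are equally possible.
    """
    return [1 if rng.random() < sigmoid(x) else 0 for x in position]


def apply_mask(sample: Sequence[float], mask: Sequence[int]) -> List[float]:
    """Keep only the features whose mask bit is 1."""
    return [value for value, bit in zip(sample, mask) if bit == 1]
```

Each agent's mask then defines the feature subset on which the classifier is trained and scored.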

To assess the performance of the optimizer more comprehensively, the selected datasets contain data with both low- and high-dimensional features. As can be seen from the table, the number of samples in the selected benchmark data sets ranges from 70 to 2500, the feature dimension ranges from 15 to 16,000, and the number of classes ranges from 2 to 7. Lung_Cancer, Prostate_Tumor, Tumors_11, and Tumors_14 are all high-dimensional, small-sample data sets. Evaluation results on this type of data are susceptible to extreme samples and therefore provide a more demanding test of classification accuracy.

4.7.3 Results and Analysis

This paper selects six common binary heuristic algorithms for comparison, including BGWO [129], BALO [130], BBA [131], BSSA [132], BWOA [133], and BQGWO. The experimental results mainly refer to the final fitness value, the classification error rate, the number of selected features, and the time consumption, and the results of these four items are shown in Tables 13, 14, 15 and 16.

In the above tables, for the convenience of observation, bold indicates that the method achieved the best results among all the methods compared in this experiment. Table 13 shows that BSCLWOA has a significant advantage over the other six competitors on all 12 data sets in the test. From the final ARV, the average fitness values obtained by BSCLWOA were much lower than those of its peers, which shows that BSCLWOA has superior performance compared to the other algorithms. Comparing the STD of each data set across algorithms, BBA and BSSA perform better on segment and Tumors_14, but on the rest of the data sets the STD of BSCLWOA is much lower than that of the other algorithms, which indicates that BSCLWOA has better stability.

According to the final RANK values in Table 14, the classification accuracy obtained by BSCLWOA still far exceeds that of the other algorithms. BSCLWOA achieved a zero classification error rate on four of the 12 test data sets. On the others, its error rate is much lower than that of its rivals. Compared with the standard WOA, the average error rate of the proposed algorithm is reduced by nearly 0.02, a substantial improvement.

The ultimate goal of feature selection is to improve prediction accuracy and reduce the dimensionality [134] of prediction results. Obtaining the optimal feature subset by eliminating features with little or no predictive information and strongly correlated redundant features [135] is the core of this work. Table 15 shows that BSCLWOA obtains a subset of features with minimum dimensionality on each dataset, indicating that BSCLWOA has a better feature selection capability. Combined with the classification error rate in Table 14, BSCLWOA consistently selects fewer features while keeping a low error rate.
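The two goals stated above, low error and few features, are commonly combined into a single fitness value to be minimized. The sketch below uses a weighted sum that is widely used in wrapper feature selection. Both this form and the weight `alpha = 0.99` are assumptions made for illustration; the fitness function actually used by BSCLWOA is the one defined in the method section.

```python
def fs_fitness(error_rate: float, n_selected: int, n_total: int,
               alpha: float = 0.99) -> float:
    """Weighted sum of classification error and feature ratio (lower is better).

    ASSUMPTION: alpha is a hypothetical weight favouring accuracy over
    subset size; it is not taken from the paper.
    """
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    if not 0 <= n_selected <= n_total:
        raise ValueError("n_selected must lie in [0, n_total]")
    return alpha * error_rate + (1.0 - alpha) * (n_selected / n_total)
```

With this form, two subsets with the same error rate are separated by their size, so `fs_fitness(0.1, 5, 10)` is lower than `fs_fitness(0.1, 10, 10)`, which is consistent with reporting fitness, error rate, and number of selected features side by side.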

To further demonstrate the excellent performance of the proposed algorithm on high-dimensional data sets, we compare the classification error rate and the number of features of the six high-dimensional data sets in the experiment. From Fig. 10, SCLWOA maintains a minimum classification error rate in classifying these six high-dimensional data sets. In Fig. 11, which compares the performance of the seven algorithms in feature dimensionality reduction, the proposed SCLWOA also gives more satisfactory results.

The comparison of time consumption in Table 16 shows that BSCLWOA ranks sixth, which means it took longer than most binary optimizers. This is because both the sine chaos and CL strategies add to the time cost. Although BSCLWOA consumes more time, the cost is worthwhile considering the overall performance in Tables 13, 14, and 15. BSCLWOA outperforms the other six binary optimizers in handling the feature selection problem. How to reduce the computing time of SCLWOA while preserving performance remains a direction for our future research.

Convergence plots are considered a reasonable measure of the performance of an optimization algorithm. The best fitness values during the iterative process are presented as convergence curves to make the experimental results more intuitive and clear. Figure 12 shows the convergence curves of the compared algorithms on the 12 data sets. The Y-axis shows the average fitness value under ten independent executions, and the X-axis indicates the number of iterations. It is not difficult to find that BGWO is close to BSCLWOA except for Breast, Dermatology, and segment. The convergence value of BSCLWOA is much smaller than that of the other algorithms on all the datasets, especially on the high-dimensional ones. All of this benefits from the variety of update methods provided by the CL strategy, which ensures the diversity of the population and gives the algorithm more opportunities to explore optimal regions.
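The curves described above can be built from raw run logs in two steps: convert each run's fitness history into a best-so-far curve, then average the curves over the independent runs at each iteration. The following is a minimal sketch with hypothetical function names, assuming that lower fitness is better.

```python
from typing import List, Sequence


def best_so_far(fitness_history: Sequence[float]) -> List[float]:
    """Running minimum of one run's per-iteration fitness values."""
    curve = []
    best = float("inf")
    for value in fitness_history:
        best = min(best, value)
        curve.append(best)
    return curve


def mean_convergence(runs: Sequence[Sequence[float]]) -> List[float]:
    """Average best-so-far fitness over independent runs at each iteration."""
    curves = [best_so_far(run) for run in runs]
    n_iter = min(len(curve) for curve in curves)  # align runs of unequal length
    return [sum(curve[t] for curve in curves) / len(curves)
            for t in range(n_iter)]
```

The resulting list gives the Y-axis values of one algorithm's curve, indexed by iteration on the X-axis.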

Handling the balance between global exploration and local exploitation search phases is a significant factor that makes BSCLWOA superior to other algorithms. The experimental conclusion shows that the powerful search capability enables BSCLWOA to perform excellently on a wide range of complex problems.

4.8 Experiment Summary

The experiments for this study have been described above. Both qualitative and parametric experiments were designed specifically for SCLWOA, and the outcomes demonstrated that SCLWOA outperforms the original WOA in balancing exploration and exploitation. The comparative experiments on the mechanisms show that the sine chaos and comprehensive learning strategies significantly strengthen the algorithm’s optimization capacity. However, the sine chaos initialization does not work well on some test functions. This does not mean that sine chaos gives unsatisfactory optimization results; rather, the sine chaos initialization carries a certain randomness. SCLWOA also shows relatively stable performance in comparison with other metaheuristics, such as WOA and the improved WOA versions, and finds the best solution in most cases. However, SCLWOA, which focuses on exploration capability, makes certain sacrifices in terms of convergence speed. Compared with some faster-converging algorithms, SCLWOA often takes more time, but this sacrifice of speed improves accuracy on FS tasks. Therefore, how to further speed up convergence while preserving the accuracy of SCLWOA is a question we will continue to consider in the next phase. The binary variant of SCLWOA, BSCLWOA, shows excellent performance in feature selection. From the experimental results, it can be observed that BSCLWOA usually finds the most effective and relatively small feature subsets for classification. The results are consistent with the purpose of feature selection, so it is meaningful to use BSCLWOA to address the feature selection problem.
Furthermore, the proposed BSCLWOA can also be applied to more cases in future work, such as optimization of machine learning models [136], fine-grained alignment [137], Alzheimer’s disease identification [138], medical signals [139,140,141], power distribution network [142], MRI reconstruction [143], renewable energy generation [144], location-based services [145, 146] and information retrieval services [147,148,149,150], and iris or retinal vessel segmentation [151, 152].

5 Conclusions and Future Directions

In this paper, SCLWOA is proposed to address the exploration–exploitation imbalance of the original WOA. Sine chaos and CL strategies are integrated into WOA to form SCLWOA, and the algorithm is applied to CEC2017 global optimization problems. The global optimization performance of SCLWOA is verified by comparing it to other advanced algorithms. The experimental results show that the enhanced SCLWOA has excellent exploration ability, which helps the algorithm jump out of LO values and accurately explore more promising regions in most cases. In addition, we map SCLWOA into binary space by the mapping function, and the resulting BSCLWOA also shows strong optimization performance on feature selection tasks. On selected UCI datasets of different dimensions, BSCLWOA obtains the most accurate feature subset and the smallest number of features compared to the other algorithms. This has important implications in terms of reducing data dimensionality and improving computing performance. Given SCLWOA’s outstanding exploration and global optimization capabilities, we will apply it to other industrial engineering problems in the future.

Data Availability

The data involved in this study are all public data, which can be downloaded through public channels.

References

Joshi, A. K. (1991). Natural language processing. Science (New York), 253, 1242–1249. https://doi.org/10.1126/science.253.5025.1242

Bitter, C., Elizondo, D. A., & Yang, Y. J. (2010). Natural language processing: A prolog perspective. Artificial Intelligence Review, 33, 151–173. https://doi.org/10.1007/s10462-009-9151-4

Wang, C. C., Zhu, K. Y., Hedström, P., Li, Y., & Xu, W. (2022). A generic and extensible model for the martensite start temperature incorporating thermodynamic data mining and deep learning framework. Journal of Materials Science & Technology, 128, 31–43. https://doi.org/10.1016/j.jmst.2022.04.014

Ara Shaikh, A., Nirmal Doss, A., Subramanian, M., Jain, V., Naved, M., & Khaja Mohiddin, M. (2022). Major applications of data mining in medical. Materials Today: Proceedings, 56, 2300–2304. https://doi.org/10.1016/j.matpr.2021.11.642

Mitroshin, P., Shitova, Y., Shitov, Y., Vlasov, D., & Mitroshin, A. (2022). Big data and data mining technologies application at road transport logistics. Transportation Research Procedia, 61, 462–466. https://doi.org/10.1016/j.trpro.2022.01.075

Guarascio, M., Manco, G., & Ritacco, E. (2019). Knowledge discovery in databases. In S. Ranganathan, M. Gribskov, K. Nakai, & C. Schönbach (Eds.), Encyclopedia of Bioinformatics and Computational Biology (pp. 336–341). Oxford: Academic Press. https://doi.org/10.1016/B978-0-12-809633-8.20456-1

Adamu, A., Abdullahi, M., Junaidu, S. B., & Hassan, I. H. (2021). An hybrid particle swarm optimization with crow search algorithm for feature selection. Machine Learning with Applications, 6, 100108.

Barddal, J. P., Enembreck, F., Gomes, H. M., Bifet, A., & Pfahringer, B. (2019). Merit-guided dynamic feature selection filter for data streams. Expert Systems with Applications, 116, 227–242.

Zhang, Y., Gong, D. W., Hu, Y., & Zhang, W. Q. (2015). Feature selection algorithm based on bare bones particle swarm optimization. Neurocomputing, 148, 150–157. https://doi.org/10.1016/j.neucom.2012.09.049

Kohavi, R., & John, G. H. (1997). Wrappers for feature subset selection. Artificial Intelligence, 97, 273–324.

González, J., Ortega, J., Damas, M., Martín-Smith, P., & Gan, J. Q. (2019). A new multi-objective wrapper method for feature selection–accuracy and stability analysis for bci. Neurocomputing, 333, 407–418.

Zhang, J. X., Xiong, Y. M., & Min, S. G. (2019). A new hybrid filter/wrapper algorithm for feature selection in classification. Analytica Chimica Acta, 1080, 43–54.

Ben Brahim, A., & Limam, M. (2018). Ensemble feature selection for high dimensional data: A new method and a comparative study. Advances in Data Analysis and Classification, 12, 937–952.

Pes, B. (2020). Ensemble feature selection for high-dimensional data: A stability analysis across multiple domains. Neural Computing and Applications, 32, 5951–5973.

Ghosh, M., Adhikary, S., Ghosh, K. K., Sardar, A., Begum, S., & Sarkar, R. (2019). Genetic algorithm based cancerous gene identification from microarray data using ensemble of filter methods. Medical & Biological Engineering & Computing, 57, 159–176.

Guyon, I., & Elisseeff, A. (2003). An introduction to variable and feature selection. Journal of Machine Learning Research, 3, 1157–1182.

Li, X., Zhao, Z., Zhu, Y. D., Zhao, Q., Li, J., & Feng, F. L. (2022). Automatic sleep identification using the novel hybrid feature selection method for hrv signal. Computer Methods and Programs in Biomedicine Update, 2, 100050.

Li, Y., Lu, B. L., & Wu, Z. F. (2006). A hybrid method of unsupervised feature selection based on ranking. In 18th International Conference on Pattern Recognition (ICPR'06) (pp. 687–690). IEEE.

Solorio-Fernández, S., Carrasco-Ochoa, J. A., & Martínez-Trinidad, J. F. (2016). A new hybrid filter–wrapper feature selection method for clustering based on ranking. Neurocomputing, 214, 866–880.

Narendra, P. M., & Fukunaga, K. (1977). A branch and bound algorithm for feature subset selection. IEEE Transactions on Computers, 26, 917–922.

Guan, S.-U., Liu, J., & Qi, Y. (2004). An incremental approach to contribution-based feature selection. Journal of Intelligent Systems, 13, 15–42.

Gasca, E., Sánchez, J. S., & Alonso, R. (2006). Eliminating redundancy and irrelevance using a new mlp-based feature selection method. Pattern Recognition, 39, 313–315.

Kabir, M. M., Shahjahan, M., & Murase, K. (2012). A new hybrid ant colony optimization algorithm for feature selection. Expert Systems with Applications, 39, 3747–3763. https://doi.org/10.1016/j.eswa.2011.09.073

Li, Z., Wang, J., Huang, J., & Ding, M. (2023). Development and research of triangle-filter convolution neural network for fuel reloading optimization of block-type htgrs. Applied Soft Computing, 136, 110126.

Zhang, J., Tang, Y., Wang, H., & Xu, K. (2022). Asro-dio: Active subspace random optimization based depth inertial odometry. IEEE Transactions on Robotics, 39, 1496–1508.

Ni, Q., Guo, J., Wu, W., & Wang, H. (2022). Influence-based community partition with sandwich method for social networks. IEEE Transactions on Computational Social Systems, 10, 819–830.

Xu, X., Wang, C., & Zhou, P. (2021). Gvrp considered oil–gas recovery in refined oil distribution: From an environmental perspective. International Journal of Production Economics, 235, 108078.

Cao, B., Zhao, J., Lv, Z., & Yang, P. (2020). Diversified personalized recommendation optimization based on mobile data. IEEE Transactions on Intelligent Transportation Systems, 22, 2133–2139.

Mao, Y., Zhu, Y., Tang, Z., & Chen, Z. (2022). A novel airspace planning algorithm for cooperative target localization. Electronics, 11, 2950.

Cao, B., Li, M., Liu, X., Zhao, J., Cao, W., & Lv, Z. (2021). Many-objective deployment optimization for a drone-assisted camera network. IEEE Transactions on Network Science and Engineering, 8, 2756–2764.

Cao, B., Yan, Y., Wang, Y., Liu, X., Lin, J.C.-W., Sangaiah, A. K., & Lv, Z. (2022). A multiobjective intelligent decision-making method for multistage placement of pmu in power grid enterprises. IEEE Transactions on Industrial Informatics, 19, 7636–7644.

Cao, B., Fan, S., Zhao, J., Tian, S., Zheng, Z., Yan, Y., & Yang, P. (2021). Large-scale many-objective deployment optimization of edge servers. IEEE Transactions on Intelligent Transportation Systems, 22, 3841–3849.

Cao, B., Gu, Y., Lv, Z., Yang, S., Zhao, J., & Li, Y. (2020). Rfid reader anticollision based on distributed parallel particle swarm optimization. IEEE Internet of Things Journal, 8, 3099–3107.

Tian, J., Hou, M., Bian, H., & Li, J. (2022). Variable surrogate model-based particle swarm optimization for high-dimensional expensive problems. Complex & Intelligent Systems. https://doi.org/10.1007/s40747-022-00910-7

Dorigo, M., Maniezzo, V., & Colorni, A. (1996). Ant system: Optimization by a colony of cooperating agents. IEEE Transactions on Systems, Man, and Cybernetics Part B (Cybernetics), 26, 29–41.

Mirjalili, S., Mirjalili, S. M., & Lewis, A. (2014). Grey wolf optimizer. Advances in Engineering Software, 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Heidari, A. A., Mirjalili, S., Faris, H., Aljarah, I., Mafarja, M., & Chen, H. L. (2019). Harris hawks optimization: Algorithm and applications. Future Generation Computer Systems, 97, 849–872.

Saremi, S., Mirjalili, S., & Lewis, A. (2017). Grasshopper optimisation algorithm: Theory and application. Advances in Engineering Software, 105, 30–47.

Storn, R., & Price, K. (1997). Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. Journal of Global Optimization, 11, 341–359.

Chen, H., Li, C., Mafarja, M., Heidari, A. A., Chen, Y., & Cai, Z. (2022). Slime mould algorithm: A comprehensive review of recent variants and applications. International Journal of Systems Science, 54, 204–235.

Li, S., Chen, H., Wang, M., Heidari, A. A., & Mirjalili, S. (2020). Slime mould algorithm: A new method for stochastic optimization. Future Generation Computer Systems, 111, 300–323. https://doi.org/10.1016/j.future.2020.03.055

Tu, J., Chen, H., Wang, M., & Gandomi, A. H. (2021). The colony predation algorithm. Journal of Bionic Engineering, 18, 674–710. https://doi.org/10.1007/s42235-021-0050-y

Ahmadianfar, I., Asghar Heidari, A., Gandomi, A. H., Chu, X., & Chen, H. (2021). Run beyond the metaphor: An efficient optimization algorithm based on Runge Kutta method. Expert Systems with Applications. https://doi.org/10.1016/j.eswa.2021.115079

Su, H., Zhao, D., Asghar Heidari, A., Liu, L., Zhang, X., Mafarja, M., & Chen, H. (2023). Rime: A physics-based optimization. Neurocomputing. https://doi.org/10.1016/j.neucom.2023.02.010

Yang, Y., Chen, H., Heidari, A. A., & Gandomi, A. H. (2021). Hunger games search: Visions, conception, implementation, deep analysis, perspectives, and towards performance shifts. Expert Systems with Applications, 177, 114864. https://doi.org/10.1016/j.eswa.2021.114864

Mirjalili, S. (2016). Sca: A sine cosine algorithm for solving optimization problems. Knowledge-Based Systems, 96, 120–133.

Rios, L. M., & Sahinidis, N. V. (2013). Derivative-free optimization: A review of algorithms and comparison of software implementations. Journal of Global Optimization, 56, 1247–1293.

Houssein, E. H., Hussain, K., Abualigah, L., Abd Elaziz, M., Alomoush, W., Dhiman, G., Djenouri, Y., & Cuevas, E. (2021). An improved opposition-based marine predators algorithm for global optimization and multilevel thresholding image segmentation. Knowledge-Based Systems. https://doi.org/10.1016/j.knosys.2021.107348

Weng, X. M., Heidari, A. A., Liang, G. X., Chen, H. L., & Ma, X. S. (2021). An evolutionary nelder–mead slime mould algorithm with random learning for efficient design of photovoltaic models. Energy Reports, 7, 8784–8804. https://doi.org/10.1016/j.egyr.2021.11.019

Abdelhamid, M., Houssein, E. H., Mahdy, M. A., Selim, A., & Kamel, S. (2022). An improved seagull optimization algorithm for optimal coordination of distance and directional over-current relays. Expert Systems with Applications. https://doi.org/10.1016/j.eswa.2022.116931

Ismaeel, A. A. K., Elshaarawy, I. A., Houssein, E. H., Ismail, F. H., & Hassanien, A. E. (2019). Enhanced elephant herding optimization for global optimization. IEEE Access, 7, 34738–34752. https://doi.org/10.1109/ACCESS.2019.2904679

Houssein, E. H., Helmy, B. E. D., Rezk, H., & Nassef, A. M. (2021). An enhanced archimedes optimization algorithm based on local escaping operator and orthogonal learning for pem fuel cell parameter identification. Engineering Applications of Artificial Intelligence. https://doi.org/10.1016/j.engappai.2021.104309

Houssein, E. H., Emam, M. M., & Ali, A. A. (2021). An efficient multilevel thresholding segmentation method for thermography breast cancer imaging based on improved chimp optimization algorithm. Expert Systems with Applications. https://doi.org/10.1016/j.eswa.2021.115651

Zhao, F. Q., Bao, H. Z., Wang, L., Cao, J., & Tang, J. X. (2022). A multipopulation cooperative coevolutionary whale optimization algorithm with a two-stage orthogonal learning mechanism. Knowledge-Based Systems, 246, 108664.

Hao, P., & Sobhani, B. (2021). Application of the improved chaotic grey wolf optimization algorithm as a novel and efficient method for parameter estimation of solid oxide fuel cells model. International Journal of Hydrogen Energy, 46, 36454–36465. https://doi.org/10.1016/j.ijhydene.2021.08.174

Too, J. W., Liang, G. X., & Chen, H. L. (2021). Memory-based Harris hawk optimization with learning agents: A feature selection approach. Engineering with Computers. https://doi.org/10.1007/s00366-021-01479-4

Ibrahim, I. A., Hossain, M., & Duck, B. C. (2022). A hybrid wind driven-based fruit fly optimization algorithm for identifying the parameters of a double-diode photovoltaic cell model considering degradation effects. Sustainable Energy Technologies and Assessments, 50, 101685.

Zhang, Y., Liu, R., Heidari, A. A., Wang, X., Chen, Y., Wang, M., & Chen, H. (2021). Towards augmented kernel extreme learning models for bankruptcy prediction: Algorithmic behavior and comprehensive analysis. Neurocomputing, 430, 185–212.

Dong, R., Chen, H., Heidari, A. A., Turabieh, H., Mafarja, M., & Wang, S. (2021). Boosted kernel search: Framework, analysis and case studies on the economic emission dispatch problem. Knowledge-Based Systems, 233, 107529. https://doi.org/10.1016/j.knosys.2021.107529

Deb, S., Abdelminaam, D. S., Said, M., & Houssein, E. H. (2021). Recent methodology-based gradient-based optimizer for economic load dispatch problem. IEEE Access, 9, 44322–44338. https://doi.org/10.1109/ACCESS.2021.3066329

Liu, Y., Heidari, A. A., Cai, Z., Liang, G., Chen, H., Pan, Z., Alsufyani, A., & Bourouis, S. (2022). Simulated annealing-based dynamic step shuffled frog leaping algorithm: Optimal performance design and feature selection. Neurocomputing, 503, 325–362. https://doi.org/10.1016/j.neucom.2022.06.075

Xue, Y., Xue, B., & Zhang, M. J. (2019). Self-adaptive particle swarm optimization for large-scale feature selection in classification. ACM Transactions on Knowledge Discovery from Data (TKDD), 13, 1–27.

Xue, Y., Cai, X., & Neri, F. (2022). A multi-objective evolutionary algorithm with interval based initialization and self-adaptive crossover operator for large-scale feature selection in classification. Applied Soft Computing, 127, 109420. https://doi.org/10.1016/j.asoc.2022.109420

Liang, J., Qiao, K., Yu, K., Qu, B., Yue, C., Guo, W., & Wang, L. (2022). Utilizing the relationship between unconstrained and constrained Pareto fronts for constrained multiobjective optimization. IEEE Transactions on Cybernetics. https://doi.org/10.1109/TCYB.2022.3163759

Deng, W., Xu, J., Gao, X. Z., & Zhao, H. (2022). An enhanced MSIQDE algorithm with novel multiple strategies for global optimization problems. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 52, 1578–1587. https://doi.org/10.1109/TSMC.2020.3030792

Huang, C., Zhou, X., Ran, X., Liu, Y., Deng, W., & Deng, W. (2023). Co-evolutionary competitive swarm optimizer with three-phase for large-scale complex optimization problem. Information Sciences, 619, 2–18. https://doi.org/10.1016/j.ins.2022.11.019

Xue, Y., Tong, Y., & Neri, F. (2022). An ensemble of differential evolution and Adam for training feed-forward neural networks. Information Sciences, 608, 453–471. https://doi.org/10.1016/j.ins.2022.06.036

Wen, X., Wang, K., Li, H., Sun, H., Wang, H., & Jin, L. (2021). A two-stage solution method based on NSGA-II for green multi-objective integrated process planning and scheduling in a battery packaging machinery workshop. Swarm and Evolutionary Computation, 61, 100820. https://doi.org/10.1016/j.swevo.2020.100820

Wang, G., Fan, E., Zheng, G., Li, K., & Huang, H. (2022). Research on vessel speed heading and collision detection method based on AIS data. Mobile Information Systems, 2022, 1–10.

Zhao, C., Zhou, Y., & Lai, X. (2022). An integrated framework with evolutionary algorithm for multi-scenario multi-objective optimization problems. Information Sciences, 600, 342–361. https://doi.org/10.1016/j.ins.2022.03.093

Yu, K., Zhang, D., Liang, J., Chen, K., Yue, C., Qiao, K., & Wang, L. (2022). A correlation-guided layered prediction approach for evolutionary dynamic multiobjective optimization. IEEE Transactions on Evolutionary Computation. https://doi.org/10.1109/TEVC.2022.3193287

Al-Tashi, Q., Kadir, S. J. A., Rais, H. M., Mirjalili, S., & Alhussian, H. (2019). Binary optimization using hybrid grey wolf optimization for feature selection. IEEE Access, 7, 39496–39508. https://doi.org/10.1109/ACCESS.2019.2906757

Abd Elminaam, D. S., Nabil, A., Ibraheem, S. A., & Houssein, E. H. (2021). An efficient marine predators algorithm for feature selection. IEEE Access, 9, 60136–60153. https://doi.org/10.1109/ACCESS.2021.3073261

Xue, Y., Xue, B., & Zhang, M. J. (2019). Self-adaptive particle swarm optimization for large-scale feature selection in classification. ACM Transactions on Knowledge Discovery from Data. https://doi.org/10.1145/3340848

Samy, A., Hosny, K. M., & Zaied, A.-N. H. (2020). An efficient binary whale optimisation algorithm with optimum path forest for feature selection. International Journal of Computer Applications in Technology, 63, 41–54.

Hussien, A. G., Oliva, D., Houssein, E. H., Juan, A. A., & Yu, X. (2020). Binary whale optimization algorithm for dimensionality reduction. Mathematics. https://doi.org/10.3390/math8101821

Tubishat, M., Alswaitti, M., Mirjalili, S., Al-Garadi, M. A., Alrashdan, M. T., & Rana, T. A. (2020). Dynamic butterfly optimization algorithm for feature selection. IEEE Access, 8, 194303–194314. https://doi.org/10.1109/ACCESS.2020.3033757

Fang, L. L., & Liang, X. Y. (2023). A novel method based on nonlinear binary grasshopper whale optimization algorithm for feature selection. Journal of Bionic Engineering, 20, 237–252. https://doi.org/10.1007/s42235-022-00253-6

Hassanien, A. E., Kilany, M., Houssein, E. H., & AlQaheri, H. (2018). Intelligent human emotion recognition based on elephant herding optimization tuned support vector regression. Biomedical Signal Processing and Control, 45, 182–191. https://doi.org/10.1016/j.bspc.2018.05.039

Houssein, E. H., & Sayed, A. (2023). Dynamic candidate solution boosted beluga whale optimization algorithm for biomedical classification. Mathematics. https://doi.org/10.3390/math11030707

Barshandeh, S., Dana, R., & Eskandarian, P. (2021). A learning automata-based hybrid MPA and JS algorithm for numerical optimization problems and its application on data clustering. Knowledge-Based Systems. https://doi.org/10.1016/j.knosys.2021.107682

Emary, E., & Zawbaa, H. M. (2019). Feature selection via Lévy antlion optimization. Pattern Analysis and Applications, 22, 857–876.

Sayed, G. I., Tharwat, A., & Hassanien, A. E. (2019). Chaotic dragonfly algorithm: An improved metaheuristic algorithm for feature selection. Applied Intelligence, 49, 188–205. https://doi.org/10.1007/s10489-018-1261-8

Abualigah, L. M., Khader, A. T., & Hanandeh, E. S. (2018). A new feature selection method to improve the document clustering using particle swarm optimization algorithm. Journal of Computational Science, 25, 456–466. https://doi.org/10.1016/j.jocs.2017.07.018

Thom de Souza, R. C., de Macedo, C. A., dos Santos Coelho, L., Pierezan, J., & Mariani, V. C. (2020). Binary coyote optimization algorithm for feature selection. Pattern Recognition, 107, 107470. https://doi.org/10.1016/j.patcog.2020.107470

Rajalaxmi, R. R., Mirjalili, S., Gothai, E., & Natesan, P. (2022). Binary grey wolf optimization with mutation and adaptive k-nearest neighbor for feature selection in Parkinson’s disease diagnosis. Knowledge-Based Systems. https://doi.org/10.1016/j.knosys.2022.108701

Li, A. D., Xue, B., & Zhang, M. J. (2021). Improved binary particle swarm optimization for feature selection with new initialization and search space reduction strategies. Applied Soft Computing, 106, 107302. https://doi.org/10.1016/j.asoc.2021.107302

Mirjalili, S., & Lewis, A. (2016). The whale optimization algorithm. Advances in Engineering Software, 95, 51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008

Li, M. N., Porter, A. L., Suominen, A., Burmaoglu, S., & Carley, S. (2021). An exploratory perspective to measure the emergence degree for a specific technology based on the philosophy of swarm intelligence. Technological Forecasting and Social Change, 166, 120621.

Maleki, A. (2022). Optimization based on modified swarm intelligence techniques for a stand-alone hybrid photovoltaic/diesel/battery system. Sustainable Energy Technologies and Assessments, 51, 101856.

Liu, K. H., Alam, M. S., Zhu, J., Zheng, J. K., & Chi, L. (2021). Prediction of carbonation depth for recycled aggregate concrete using ANN hybridized with swarm intelligence algorithms. Construction and Building Materials, 301, 124382.

Shao, Y., Wang, J., Zhang, H., & Zhao, W. (2021). An advanced weighted system based on swarm intelligence optimization for wind speed prediction. Applied Mathematical Modelling, 100, 780–804. https://doi.org/10.1016/j.apm.2021.07.024

Farah, A., Belazi, A., Benabdallah, F., Almalaq, A., Chtourou, M., & Abido, M. A. (2022). Parameter extraction of photovoltaic models using a comprehensive learning Rao-1 algorithm. Energy Conversion and Management. https://doi.org/10.1016/j.enconman.2021.115057

Nadimi-Shahraki, M. H., Zamani, H., & Mirjalili, S. (2022). Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Computers in Biology and Medicine, 148, 105858. https://doi.org/10.1016/j.compbiomed.2022.105858

Yedukondalu, J., & Sharma, L. D. (2022). Cognitive load detection using circulant singular spectrum analysis and binary Harris hawks optimization based feature selection. Biomedical Signal Processing and Control. https://doi.org/10.1016/j.bspc.2022.104006

Yang, H., Yu, Y., Cheng, J., Lei, Z., Cai, Z., Zhang, Z., & Gao, S. (2022). An intelligent metaphor-free spatial information sampling algorithm for balancing exploitation and exploration. Knowledge-Based Systems, 250, 109081. https://doi.org/10.1016/j.knosys.2022.109081

Kutlu Onay, F., & Aydemir, S. B. (2022). Chaotic hunger games search optimization algorithm for global optimization and engineering problems. Mathematics and Computers in Simulation, 192, 514–536. https://doi.org/10.1016/j.matcom.2021.09.014

Faris, H., Mafarja, M. M., Heidari, A. A., Aljarah, I., Al-Zoubi, A. M., Mirjalili, S., & Fujita, H. (2018). An efficient binary salp swarm algorithm with crossover scheme for feature selection problems. Knowledge-Based Systems, 154, 43–67.

Xie, X., Xie, B., Xiong, D., Hou, M., Zuo, J., Wei, G., & Chevallier, J. (2022). New theoretical ISM-K2 Bayesian network model for evaluating vaccination effectiveness. Journal of Ambient Intelligence and Humanized Computing. https://doi.org/10.1007/s12652-022-04199-9

Lu, S., Guo, J., Liu, S., Yang, B., Liu, M., Yin, L., & Zheng, W. (2022). An improved algorithm of drift compensation for olfactory sensors. Applied Sciences, 12, 9529.

Dang, W., Guo, J., Liu, M., Liu, S., Yang, B., Yin, L., & Zheng, W. (2022). A semi-supervised extreme learning machine algorithm based on the new weighted kernel for machine smell. Applied Sciences, 12, 9213.

Qin, X., Liu, Z., Liu, Y., Liu, S., Yang, B., Yin, L., Liu, M., & Zheng, W. (2022). User OCEAN personality model construction method using a BP neural network. Electronics, 11, 3022.

Lu, S., Liu, S., Hou, P., Yang, B., Liu, M., Yin, L., & Zheng, W. (2023). Soft tissue feature tracking based on deep matching network. Computer Modeling in Engineering and Sciences, 136, 363–379.

Huang, C.-Q., Jiang, F., Huang, Q.-H., Wang, X.-Z., Han, Z.-M., & Huang, W.-Y. (2022). Dual-graph attention convolution network for 3-D point cloud classification. IEEE Transactions on Neural Networks and Learning Systems. https://doi.org/10.1109/TNNLS.2022.3162301

Wu, Y. (2021). A survey on population-based meta-heuristic algorithms for motion planning of aircraft. Swarm and Evolutionary Computation, 62, 100844.

Li, S. J., Gong, W. Y., & Gu, Q. (2021). A comprehensive survey on meta-heuristic algorithms for parameter extraction of photovoltaic models. Renewable and Sustainable Energy Reviews, 141, 110828.

Ganesan, V., Sobhana, M., Anuradha, G., Yellamma, P., Devi, O. R., Prakash, K. B., & Naren, J. (2021). Quantum inspired meta-heuristic approach for optimization of genetic algorithm. Computers & Electrical Engineering, 94, 107356.

Osuna-Enciso, V., Cuevas, E., & Castañeda, B. M. (2022). A diversity metric for population-based metaheuristic algorithms. Information Sciences, 586, 192–208.

Yang, X. S. (2010). A new metaheuristic bat-inspired algorithm. In J. R. González, D. A. Pelta, C. Cruz, G. Terrazas, & N. Krasnogor (Eds.), Nature Inspired Cooperative Strategies for Optimization (NICSO 2010) (pp. 65–74). Berlin: Springer.

Poli, R., Kennedy, J., & Blackwell, T. (2007). Particle swarm optimization. Swarm Intelligence, 1, 33–57.

Yang, X. S. (2009). Firefly algorithms for multimodal optimization. In International Symposium on Stochastic Algorithms (pp. 169–178). Springer.

Alcalá-Fdez, J., Sanchez, L., Garcia, S., del Jesus, M. J., Ventura, S., Garrell, J. M., Otero, J., Romero, C., Bacardit, J., & Rivas, V. M. (2009). KEEL: A software tool to assess evolutionary algorithms for data mining problems. Soft Computing, 13, 307–318.

Kaur, G., & Arora, S. (2018). Chaotic whale optimization algorithm. Journal of Computational Design and Engineering, 5, 275–284.

Luo, J., Chen, H. L., Heidari, A. A., Xu, Y. T., Zhang, Q., & Li, C. Y. (2019). Multi-strategy boosted mutative whale-inspired optimization approaches. Applied Mathematical Modelling, 73, 109–123.

Ling, Y., Zhou, Y. Q., & Luo, Q. F. (2017). Lévy flight trajectory-based whale optimization algorithm for global optimization. IEEE Access, 5, 6168–6186.

Tubishat, M., Abushariah, M. A., Idris, N., & Aljarah, I. (2019). Improved whale optimization algorithm for feature selection in Arabic sentiment analysis. Applied Intelligence, 49, 1688–1707.

Chen, H. L., Xu, Y. T., Wang, M. J., & Zhao, X. H. (2019). A balanced whale optimization algorithm for constrained engineering design problems. Applied Mathematical Modelling, 71, 45–59.

Wang, W. L., Li, W. K., Wang, Z., & Li, L. (2019). Opposition-based multi-objective whale optimization algorithm with global grid ranking. Neurocomputing, 341, 41–59.

Elhosseini, M. A., Haikal, A. Y., Badawy, M., & Khashan, N. (2019). Biped robot stability based on an a–c parametric whale optimization algorithm. Journal of Computational Science, 31, 17–32.

Sun, T.-Y., Liu, C.-C., Tsai, S.-J., Hsieh, S.-T., & Li, K.-Y. (2010). Cluster guide particle swarm optimization (CGPSO) for underdetermined blind source separation with advanced conditions. IEEE Transactions on Evolutionary Computation, 15, 798–811.

Alambeigi, F., Pedram, S. A., Speyer, J. L., Rosen, J., Iordachita, I., Taylor, R. H., & Armand, M. (2019). SCADE: Simultaneous sensor calibration and deformation estimation of FBG-equipped unmodeled continuum manipulators. IEEE Transactions on Robotics, 36, 222–239.

Abd Elaziz, M., Oliva, D., & Xiong, S. W. (2017). An improved opposition-based sine cosine algorithm for global optimization. Expert Systems with Applications, 90, 484–500.

Huang, H., Heidari, A. A., Xu, Y. T., Wang, M. J., Liang, G. X., Chen, H. L., & Cai, X. D. (2020). Rationalized sine cosine optimization with efficient searching patterns. IEEE Access, 8, 61471–61490.

Heidari, A. A., Ali Abbaspour, R., & Chen, H. L. (2019). Efficient boosted grey wolf optimizers for global search and kernel extreme learning machine training. Applied Soft Computing, 81, 105521. https://doi.org/10.1016/j.asoc.2019.105521

Krawczuk, J., & Lukaszuk, T. (2016). The feature selection bias problem in relation to high-dimensional gene data. Artificial Intelligence in Medicine, 66, 63–71. https://doi.org/10.1016/j.artmed.2015.11.001

Li, Q., Chen, H. L., Huang, H., Zhao, X. H., Cai, Z. N., Tong, C. F., Liu, W. B., & Tian, X. (2017). An enhanced grey wolf optimization based feature selection wrapped kernel extreme learning machine for medical diagnosis. Computational and Mathematical Methods in Medicine. https://doi.org/10.1155/2017/9512741