Abstract

In this work, we study the problem of learning the volatility under market microstructure noise. Specifically, we consider noisy discrete time observations from a stochastic differential equation and develop a novel computational method to learn the diffusion coefficient of the equation. We take a nonparametric Bayesian approach, where we a priori model the volatility function as piecewise constant. Its prior is specified via the inverse Gamma Markov chain. Sampling from the posterior is accomplished by incorporating the Forward Filtering Backward Simulation algorithm in the Gibbs sampler. Good performance of the method is demonstrated on two representative synthetic data examples. We also apply the method on a EUR/USD exchange rate dataset. Finally we present a limit result on the prior distribution.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let the one-dimensional stochastic differential equation (SDE)

be given. Here W is a standard Wiener process and b and s are referred to as the drift function and volatility function, respectively. We assume (not necessarily uniformly spaced) observation times \(\{t_i: 1\le i\le n\}\) and observations \( {\mathscr {Y}}_n =\{Y_1, \ldots , Y_{n}\}, \) where

and \(\{ V_i \}\) is a sequence of independent and identically distributed random variables, independent of W. Our aim is to learn the volatility s using the noisy observations \(\mathscr {Y}_n\). Knowledge of the volatility is of paramount importance in financial applications, specifically in pricing financial derivatives, see, e.g., Musiela and Rutkowski (2005), and in risk management.

The quantity \(\varDelta t_i = t_i - t_{i-1}\) is referred to as the observation density, especially if the time instants are equidistant, and then \(1/\varDelta t_i\) will be the frequency of the observations. Small values of \(\varDelta t_i\) correspond to high frequency, dense-in-time data. Intraday financial data are commonly thought to be high frequency data. In this high frequency financial data setting, which is the one we are interested in the present work, the measurement errors \(\{V_{i}\}\) are referred to as microstructure noise. Their inclusion in the model aims to reflect such features of observed financial time series as their discreteness or approximations due to market friction. Whereas for low-frequency financial data these can typically be neglected without much ensuing harm, empirical evidence shows that this is not the case for high frequency data; cf. Mykland and Zhang (2012).

There exists a large body of statistics and econometrics literature on nonparametric volatility estimation under microstructure noise. See, e.g., Hoffmann et al. (2012), Jacod et al. (2009), Mykland and Zhang (2009), Reiß (2011), Sabel et al. (2015); a recent overview is Mykland and Zhang (2012). The literature predominantly deals with estimation of the integrated volatility \(\int _0^t s^2(u){{\textrm{d}}}u\), although inference on s has also been studied in several of these references. Various methods proposed in the above-mentioned works share the property of being frequentist in nature. An important paper (Mancini et al., 2015) is a mix of theoretical and practical results, with a clear predominance of the former. Its main purpose (see page 262) is proposing a unifying frequentist approach to spot volatility estimation, from which many previously existing approaches would be derived as special cases. This allows comparison between them. As our approach is Bayesian, our results do not fall under the general umbrella of Mancini et al. (2015).

In this paper, our main and novel contribution is the development of a practical nonparametric Bayesian approach to volatility learning under microstructure noise. We specify an inverse Gamma Markov chain prior (Cf. Cemgil & Dikmen 2007) on the volatility function s and reduce our model to the Gaussian linear state space model. Posterior inference in the latter is performed using Gibbs sampling including a Forward Filtering Backward Simulation (FFBS) step. We demonstrate good performance of our method on two representative simulation examples. The first example uses a benchmark function, popular in nonparametric regression, see Fan and Gijbels (1995), as the volatility function. In the second example we consider a well known and widely used stochastic volatility model, the Heston model, see Heston (1993), or (Filipovic 2009, Section 10.3.3) and (Brigo & Mercurio 2006, Chapter 19, Appendix A). In both examples our approach shows accurate results. We also apply our method to a real data set of EUR/USD exchange rates and we deduce a clear and understandable variation in the volatility over time.

In general, a nonparametric approach reduces the risk of model misspecification; the latter may lead to distorted inferential conclusions. The presented nonparametric method is not only useful for an exploratory analysis of the problem at hand (cf. Silverman 1986), but also allows honest representation of inferential uncertainties (cf. Müller & Mitra 2013). Attractive features of the Bayesian approach include its internal coherence, automatic uncertainty quantification in parameter estimates via Bayesian credible sets, and the fact that it is a fundamentally likelihood-based method. For a modern monographic treatment of nonparametric Bayesian statistics see Ghosal and van der Vaart (2017); an applied perspective is found in Müller et al. (2015).

The paper is organised as follows: in Sect. 2 we introduce in detail our approach, followed by Sect. 3 where the limiting behaviour of the prior on the squared volatility is derived under mesh refinement. In Sect. 4 we test its practical performance on synthetic data examples. Section 5 applies our method on a real data example. Section 6 summarises our findings. Finally, Appendix A gives further implementational details.

1.1 Notation

We denote the inverse Gamma distribution with shape parameter \(\alpha >0\) and scale parameter \(\beta >0\) by \({\text {IG}}(\alpha ,\beta )\). Its density is

By \(N(\mu ,\sigma ^2)\) we denote a normal distribution with mean \(\mu \in \mathbb {R}\) and variance \(\sigma ^2>0\). The uniform distribution on an interval [a, b] is denoted by \({\text {Uniform}}(a,b).\) For a random variate X, the notation \(X\sim p\) stands for the fact that X is distributed according to a density p, or is drawn according to a density p. Conditioning of a random variate X on a random variate Y is denoted by \(X\mid Y\). By \(\lfloor x \rfloor \) we denote the integer part of a real number x. The notation \(p \propto q\) for a density p denotes the fact that a positive function q is an unnormalised density corresponding to p: p can be recovered from q as \(q/\int q\). Finally, we use the shorthand notation \(a_{k:\ell }=(a_k,\ldots ,a_{\ell })\).

2 Methodology

In this section we introduce our methodology for inferring the volatility. We first recast the model into a simpler form that is amenable to computational analysis, next specify a nonparametric prior on the volatility, and finally describe an MCMC method for sampling from the posterior.

2.1 Linear state space model

Let \(t_0=0\). By Eq. (1), we have

We derive our method under the assumption that the “true”, data-generating volatility s is a deterministic function of time t. Next, if the “true” s is in fact a stochastic process, we apply our procedure without further changes, as if s were deterministic. As shown in the example of Sect. 4.2, this works in practice. That this is the case is easiest to understand in the situation where one can discern a two-stage procedure. First the stochastic volatility is generated, and given a realization of it, the observations are generated by an independent Brownian motion W. In Kanaya and Kristensen (2016) such an approach is used for simulation.

Over short time intervals \([{t_{i-1}},{t_{i}}]\), the term \(\int _{t_{i-1}}^{t_{i}} s(t){{\textrm{d}}}W_t\) in (3), roughly speaking, will dominate the term \(\int _{t_{i-1}}^{t_{i}}b(t,X_t){{\textrm{d}}}t\), as the latter scales as \(\varDelta t_i\), whereas the former as \(\sqrt{\varDelta t_i}\) (due to the properties of the Wiener process paths). As our emphasis is on learning s rather than b, following (Gugushvili et al., 2020, 2019b) we act as if the process X had a zero drift, \(b\equiv 0\). The justification of this procedure is explained (Gugushvili et al., 2020). A similar idea is often used in frequentist volatility estimation procedures in the high frequency financial data setting; see Mykland and Zhang (2012), Sect. 2.1.5 for an intuitive exposition. Formal results why this works in specific settings rely on Girsanov’s theorem, see, e.g., Gugushvili et al. (2020), Hoffmann et al. (2012), Mykland and Zhang (2009). Further reasons why one would like to set \(b=0\) are that b is a nuisance parameter, in specific applications its appropriate parametric form might be unknown, and finally, a single observed time series is not sufficient to learn b consistently (see Ignatieva & Platen 2012).

We thus assume \( X_{t_{i}}=X_{t_{i-1}}+U_{i}, \) where \( U_{i}=\int _{t_{i-1}}^{t_{i}} s(t){{\textrm{d}}}W_t. \) Note that then

and also that \(\{U_{i}\}\) is a sequence of independent random variables. To simplify our notation, write \(x_i=X_{t_{i}}\), \(y_i=Y_{i}\), \(u_i=U_{i}\), \(v_i=V_{i}\). The preceding arguments and (2) allow us to reduce our model to the linear state space model

where \(i = 1, \dots , n\). The first equation in (5) is the state equation, while the second equation is the observation equation. We assume that \(\{v_i\}\) is a sequence of independent \(N(0,\eta _v)\) distributed random variables, independent of the Wiener process W in (1), so that \(\{v_i\}\) is independent of \(\{u_i\}\). For justification of such assumptions on the noise sequence \(\{v_i\}\) from a practical point of view, see Sabel et al. (2015), page 229. We endow the initial state \(x_0\) with the \(N(\mu _0,C_0)\) prior distribution. Then (5) is a Gaussian linear state space model. This is very convenient computationally. Had we not followed this route, we would have had to deal with an intractable likelihood, which constitutes the main computational bottleneck for Bayesian inference in SDE models; see, e.g, Papaspiliopoulos et al. (2013) and van der Meulen and Schauer (2017) for discussion.

2.2 Prior

For the measurement error variance \(\eta _v\), we assume a priori \(\eta _v \sim {\text {IG}}(\alpha _v,\beta _v)\). The construction of the prior for s is more complex and follows (Gugushvili et al., 2019b), that in turn relies on Cemgil and Dikmen (2007). Fix an integer \(m<n\). Then we have a unique decomposition \(n=mN+r\) with \(0\le r<m\), where \(N=\lfloor {n}/{m}\rfloor \). Now define bins \(B_k=[t_{m(k-1)},t_{mk})\), \(k=1,\ldots ,N-1\), and \(B_N=[t_{m(N-1)},T]\). We model s as

where N (the number of bins) is a hyperparameter. Then \( s^2=\sum _{k=1}^{N} \theta _k \textbf{1}_{B_k}, \) where \(\theta _k=\xi _k^2\). We complete the prior specification for s by assigning a prior distribution to the coefficients \(\theta _{1:N}\). For this purpose, we introduce auxiliary variables \(\zeta _{2:N}\), and suppose the sequence \(\theta _1,\zeta _2,\theta _2,\ldots ,\zeta _k,\theta _k,\ldots ,\zeta _N,\theta _N\) forms a Markov chain (in this order of variables). The transition distributions of the chain are defined by

where \(\alpha _1,\beta _1,\alpha \) are hyperparameters. We refer to this chain as an inverse Gamma Markov chain, see Cemgil and Dikmen (2007). The corresponding prior on \(\theta _{1:N}\) will be called the inverse Gamma Markov chain (IGMC) prior. The definition in (7) ensures that \(\theta _1,\ldots ,\theta _N\) are positively correlated, which imposes smoothing across different bins \(B_k\). Simultaneously, it ensures partial conjugacy in the Gibbs sampler that we derive below, leading to simple and tractable MCMC inference. In our experience, an uninformative choice \(\alpha _1,\beta _1\rightarrow 0\) performs well in practice. We also endow \(\alpha \) with a prior distribution and assume \(\log \alpha \sim N(a,b)\), with hyperparameters \(a\in \mathbb {R},b>0\) chosen so as to render the hyperprior on \(\alpha \) diffuse. As explained in Gugushvili et al. (2020), Gugushvili et al. (2019b), the hyperparameter N (or equivalently m) can be considered both as a smoothing parameter and the resolution at which one wants to learn the volatility function. Obviously, given the limited amount of data, this resolution cannot be made arbitrarily fine. On the other hand, as shown in Gugushvili et al. (2019b) (see also Gugushvili et al. 2019a), inference with the IGMC prior is quite robust with respect to a wide range of values of N, as the corresponding Bayesian procedure has an additional regularisation parameter \(\alpha \) that is learned from the data. Statistical optimality results in Munk and Schmidt-Hieber (2010) suggest that in our setting N should be chosen considerably smaller than in the case of an SDE observed without noise (that was studied via the IGMC prior in Gugushvili et al. (2019b)).

2.3 Likelihood

Although an expression for the posterior of s can be written down in closed form, it is not amenable to computations. This problem is alleviated by following a data augmentation approach, in which \(x_{0:n}\) are treated as missing data, whereas the \(y_{1:n}\) are the observed data; cf. Tanner and Wong (1987). An expression for the joint density of all random quantities involved is easily derived from the prior specification and (5). We have

Except for \(p(x_{0:n} \mid \theta _{1:N})\), all the densities have been specified directly in the previous subsections. To obtain an expression for the latter, define (with \(\varDelta _i\equiv \varDelta t_i\))

and set \(m_k=m\) for \(k=1,\ldots ,N-1\), and \(m_N=m+r\). Then

2.4 Gibbs sampler

We use the Gibbs sampler to sample from the joint conditional distribution of \((x_{0:n}, \theta _{1:N}, \zeta _{2:N}, \eta _v, \alpha )\) given \(y_{1:n}\). The full conditionals of \(\theta _{1:N}\), \(\zeta _{2:N}\), \(\eta _v\) are easily derived from Sect. 2.3 and recognised to be of the inverse Gamma type, see Sect. A.2. The parameter \(\alpha \) can be updated via a Metropolis-Hastings step. For updating \(x_{0:N}\), conditional on all other parameters, we use the standard Forward Filtering Backward Simulation (FFBS) algorithm for Gaussian state space models (cf. Section 4.4.3 in Petris et al. 2009). The resulting Gibbs sampler is summarised in Algorithm 1. For details, see Appendix A.

3 Asymptotics for the prior on the squared volatility

We first provide in Proposition 1 results on the prior conditional mean and variance of the \(\theta _k\) as in Sect. 2.2. These results will be exploited to find an asymptotic regime for the \(\theta _k\) when the number of bins tends to infinity. In this section we depart from the original setting with \(\alpha \) as a random hyperparameter (having a lognormal distribution), but instead we take it as a deterministic one that we let grow to infinity to obtain our asymptotic results.

Proposition 1

If \(\alpha >2\) is a fixed parameter then the IGMC prior of Sect. 2.2 satisfies

where \(\mathbb {E}_k\) and \(\mathbb {V}_k\) respectively denote expectation and variance, conditional on \(\theta _k\). Consequently, the conditional mean squared error \(\mathbb {E}_k(\theta _{k+1}-\theta _k)^2\) equals \(\frac{2(\alpha +1)\theta _k^2}{(\alpha -1)(\alpha -2)}\).

Proof

We will use that for \(Z\sim {\text {IG}}(a,b)\) it holds that

First we consider the conditional mean. Since \(\theta _{k+1} \mid \zeta _{k+1} \sim {\text {IG}}(\alpha , \alpha \zeta _{k+1}^{-1})\) we have

provided \(\alpha >1\). Exploiting that the sequence \(\theta _1,\zeta _2,\theta _2,\ldots ,\zeta _k,\theta _k,\ldots ,\zeta _N,\theta _N\) forms a Markov chain (in this order of variables), one has

where we used (11) at the last equality sign. Using (10) and \(\zeta _{k+1}\mid \theta _k \sim {\text {IG}}(\alpha , \alpha \theta _k^{-1})\) one obtains

which is equivalent to (8).

Next we calculate the conditional variance. We have

provided \(\alpha >2\). We need the following variation on the law of total variance. If \(X\in L^2(\varOmega ,{\mathscr {F}},\mathbb {P})\), and \({\mathscr {G}}\), \({\mathscr {H}}\) are subsigma-algebras of \({\mathscr {F}}\) with \({\mathscr {H}}\subset {\mathscr {G}}\). Then, with \(X^{\mathscr {G}}=\mathbb {E}[X\mid {\mathscr {G}}]\), it holds that

We use this result with \(X=\theta _{k+1}\), \({\mathscr {G}}=\sigma (\theta _k,\zeta _{k+1})\), \({\mathscr {H}}=\sigma (\theta _k)\), obtaining

as now \(X^{\mathscr {G}}=\mathbb {E}[\theta _{k+1}\mid \theta _k,\zeta _{k+1}]=\mathbb {E}[\theta _{k+1}\mid \zeta _{k+1}]\) and \(\mathbb {V}\textrm{ar}(\theta _{k+1}\mid \theta _k,\zeta _{k+1})=\mathbb {V}\textrm{ar}(\theta _{k+1}\mid \zeta _{k+1})\) in view of the Markov property. Hence, in our abbreviated notation,

which establishes (9). The expression for the mean squared error follows from (8) and (9). \(\square \)

The expression for the conditional mean squared error in Proposition 1 shows that it decreases in \(\alpha \), which illustrates the regularising property of this parameter. Therefore we are interested in the behaviour of the prior distribution on the \(\theta _k\) for large values of \(\alpha \). We will scale \(\alpha \) with the number of bins N to obtain a limit result by applying Donsker’s theorem (Cf. Corollary VII.3.11 in Jacod & Shiryaev 2013), upon letting \(N\rightarrow \infty \). We give some heuristics to derive the limit behaviour.

Take \(\alpha = \gamma N\), where in the latter expression \(\gamma \) is a positive scaling factor. As the law of the \(\theta _k\) depends on \(\gamma N\), we are thus interested in the law of \(\theta _k\) for \(N\rightarrow \infty \). This entails simultaneously increasing the number of bins as also the dependence of the values on the bins. Below we argue that under the IGMC-prior, with \(\theta _1\) fixed, the process \( t\mapsto s^2(t)\), with \(s^2(t)=\sum _{k=1}^{N} \theta _k \textbf{1}_{B_k}(t)\), converges weakly to the continuous time process \(t\mapsto \theta _1 Z_t\) where

The expressions for conditional mean and variance of Proposition 1 are for large N (in particular then \(\alpha =\gamma N>2\)) approximately equal to

These properties are shared with \(\theta _k\) generated by the recursion

where, for all k, \(z_k\) is independent of \(\theta _1,\ldots ,\theta _k\), \(\mathbb {E}z_k=0\), \(\mathbb {V}\textrm{ar}\, z_k=1\). The \(z_k\) can be seen as martingale differences, or even as IID random variables. Solving the recursion starting from \(\theta _1\) after taking logarithms leads to

where the approximation is based on a second order Taylor expansion of the logarithm and makes sense for large values of N. Introduce the processes \(W^N=\{W^N_t, t\in [0,1]\}\) and \(A^N=\{A^N_t, t\in [0,1]\}\) by

By Donsker’s theorem, one has weak convergence of \(W^n\) to W, a standard Brownian motion. Furthermore, \(A^N\) converges uniformly in probability to A, \(A_t=t\). It follows that \(\sqrt{\frac{2}{\gamma }}W^N-\frac{1}{\gamma }A^N\) weakly converges to \(\sqrt{\frac{2}{\gamma }}W-\frac{1}{\gamma }A\). Note that A is the quadratic variation process \(\langle W\rangle \). Hence \(\sqrt{\frac{2}{\gamma }}W^N-\frac{1}{\gamma }A^N\) converges weakly to \(\sqrt{\frac{2}{\gamma }}W-\frac{1}{2}\langle \sqrt{\frac{2}{\gamma }}W\rangle \).

Consequently, assuming tN is an integer, for \(k=tN\) one finds that the distribution of \(\log \theta _k-\log \theta _0\) is approximately (for large N) that of \(\sqrt{\frac{2}{\gamma }}W_t-\frac{1}{\gamma }t\), which is normal \(N(-\frac{t}{\gamma },\frac{2t}{\gamma })=N(-\frac{k}{\gamma N},\frac{2k}{\gamma N})\). The \(\theta _k\) can also approximately be generated by the recursion, yielding log-normal random variables,

where the \(\xi _k\) are IID random variables with common \(N(-\frac{1}{\gamma N},\frac{2}{\gamma N})\) distribution. Indeed for this recursion one finds \(\mathbb {E}_k \theta _{k+1} = \theta _k\) and \(\mathbb {V}_k (\theta _{k+1}) = \theta _k^2 (\exp (\frac{2}{\gamma N})-1)\approx \frac{2\theta _k^2}{\gamma N}\), which coincide with the earlier found expressions (14) and (15) for conditional mean and variance.

Moreover the continuous time approximation Z of the \(\theta _k/\theta _1\), with Z as in (13), is the Doléans exponential \({\mathscr {E}}\left( \sqrt{\frac{2}{\gamma }} W\right) \) and thus satisfies the stochastic differential equation

In Sect. 4.2 we will provide results for the Heston model with the product of \(\theta _1\) and this limit process as the squared volatility process.

4 Synthetic data examples

In this section we test the practical performance of our method on challenging synthetic data examples. The goal is to illustrate the ability of our method to recover the volatility in a controlled setting where the ground truth is known and thus the quality of inferential results can be assessed directly. We also show good practical performance of the method in a situation which formally does not fall under the derivations made in Sect. 2; see Sect. 4.2 below.

The simulation setup is as follows: we take the time horizon \(T=1\) and generate \(n=4\, 000\) observations as follows. First, using a fine grid of \(10n+1\) time points which are sampled from the \({\text {Uniform}}(0,1)\) distribution, conditional on including 0 and 1, a realisation of the process X is obtained via Euler’s scheme, see Glasserman (2004) or Kloeden and Platen (1992). The n time points \(\{ t_i \}\) are then taken as a random subsample of those times, conditional on including 1. The settings used for the Gibbs sampler are given in Appendix A.4. In each example below, we plot the posterior mean and \(95\%\) marginal credible band (obtained from the central posterior intervals for the coefficients \(\xi _k=\sqrt{\theta }_k\) in (6)). The former gives an estimate of the volatility, while the latter provides a means for uncertainty quantification.

All the computations are performed in the programming language Julia, see Bezanson et al. (2017), and we provide the code used in our examples. The hardware and software specifications for the MacBook used to perform simulations are: CPU: Intel(R) Core(TM) M-5Y31 CPU @ 0.90GHz; OS: macOS (x86_64-apple-darwin14.5.0).

4.1 Fan and Gijbels function

Suppose the volatility function is given by

This is a popular benchmark in nonparametric regression, which we call the Fan & Gijbels function (see Fan & Gijbels 1995). This function was already used in the volatility estimation context in Gugushvili et al. (2020). We generated data using the drift coefficient \(b(x) = -x\). For the noise level we took \(\eta _v=0.01\), which is substantial. Given the general difficulty of learning the volatility from noisy observations, one cannot expect to infer it on a very fine resolution (cf. the remarks in Sect. 2.2), and thus we opted for \(N=40\) bins. Experimentation showed that the results were rather robust w.r.t. the number of bins.

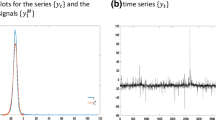

Inference results are reported in Fig. 1. It can be seen from the plot that we succeed in learning the volatility function. Note that the credible band does not cover the entire volatility function, but this had to be expected given that this is a marginal band. Quick mixing of the Markov chains can be visually assessed via the trace plots in Fig. 2. The algorithm took about 11 min to complete.

Posterior and pointwise \(95\%\) credible band for the example of Sect. 4.1. The true volatility function is plotted in red, the black step function gives the posterior mean, and the credible band is shaded in blue (color figure online)

Trace plots for the Fan and Gijbels function of Sect. 4.1. Left: first 10,000 samples. Right: subsequent 20,000 samples. Top: \(\alpha \), middle: \(\eta _v\), bottom: \(\theta _{20}\)

4.2 Heston model

The Heston model (see Heston 1993, or (Filipovic 2009, Section 10.3.3) and (Brigo & Mercurio 2006, Chapter 19, Appendix A)) is a widely used stochastic volatility model. It stipulates that the price process of a certain asset, denoted by S, evolves over time according to the SDE

where the process Z follows the CIR or square root process (see Cox et al. 1985),

Here W and B are correlated Wiener processes with correlation \(\rho \). Following a usual approach in quantitative finance, we work not with S directly, but with its logarithm \(X_t=\log S_t\). According to Itô’s formula it obeys a diffusion equation with volatility \(s(t)=\sqrt{Z_t}\),

We assume high-frequency observations on the log-price process X with additive noise \(V_i \sim N(0,\eta _v)\). In this setup the volatility s is a random function. We assume no further knowledge of the data generation mechanism. This setting is extremely challenging and puts our method to a serious test. To generate data, we used the parameter values \(\mu = 0.05,\) \(\kappa = 7,\) \(\theta = 0.04,\) \(\sigma = 0.6,\) \(\rho = -0.6\), that mimick the ones obtained via fitting the Heston model to real data (see Table 5.2 in van der Ploeg 2006). Furthermore, the noise variance was taken equal to \(\eta _v = 10^{-6}.\) The latter choice ensures a sufficiently large signal-to-noise ratio in the model (5), that can be quantified via the ratio \(w_i / \eta _v\) of the intrinsic noise level \(w_i\) to the external noise level \(\eta _v\). Finally, the parameter choice \(\mu =0.04\) corresponds to a \(4\%\) log-return rate, which is a reasonable value.

We report inference results with \(N=80\) bins in Fig. 3. These are surprisingly accurate, given a general difficulty of the problem and the amount of assumptions that went into the learning procedure. Note that the number of bins to get accurate results is higher than in the previous example. This is due to the fact that the volatility here is much rougher, Hölder continuous of order less than \(\tfrac{1}{2}\). The Markov chains mix rapidly, as evidenced by the trace plots in Fig. 4. The simulation run took about 12 min to complete. Finally, we note that the Heston model does not formally fall into the framework under which our Bayesian method was derived (deterministic volatility function s). We expect that a theoretical validation why our approach also works in this case requires the use of intricate technical arguments, which lie beyond the scope of the present, practically-oriented paper.

Posterior mean and pointwise \(95\%\) credible band for the example of Sect. 4.2. The true volatility function is plotted in red, the black step function gives the posterior mean, and the credible band is shaded in blue (color figure online)

Trace plots for the Heston model of Sect. 4.2. Left: first 10,000 samples. Right: subsequent 20,000 samples. Top: \(\alpha \), middle: \(\eta _v\), bottom: \(\theta _{40}\)

We have also run some experiments for a variation of the above Heston model with the original CIR squared volatility according to (18) replaced with a squared volatility equal to the limit process \(\theta _1 Z\), where Z is as in (13), equivalently as in (16), with \(\sqrt{\frac{2}{\gamma }}=0.6\). The experiments showed the influence of the number of bins N and the starting values, illustrated by Figs. 5 and 6. One sees that the results with the lower number of bins \(N=40\) combined with a low initial value are less satisfactory than with the higher \(N=80\) and higher starting value.

Posterior results for the Heston model where the CIR volatility is replaced by the root of the continuous time limit of the prior, \(N=40\), starting value of the volatility is 0.1. The colors have the same meaning as in Fig. 3 (color figure online)

5 Exchange rate data

Unlike daily stock quotes or exchange rate series that can easily be obtained via several online resources (e.g., Yahoo Finance), high frequency financial data are rarely accessible for free for academia. In this section, we apply our methodology to infer volatility of the high frequency foreign exchange rate data made available by Pepperstone Limited, the London based forex broker.Footnote 1 As we shall see below, the inferred volatility looks plausible, and while there is substantial uncertainty surrounding the inferential results left (as quantified by the marginal credible band), nontrivial conclusions can nevertheless be drawn.

Specifically, we use the EUR/USD tick data (bid prices) for 2 March 2015. As the independent additive measurement error model (2) becomes harder to justify for highly densely spaced data, we work with the subsampled data, retaining every 10th observation. Applying various amounts of subsampling is a common strategy in this context; see, e.g., Section 5 in Mancini et al. (2015) for a practical example. In our case subsampling results in a total of \(n=13\, 025\) observations over one day, about 9 per minute. See (Mykland & Zhang 2012, Section 2.5) for further motivation and explanation of subsampling and (Zhang et al. 2005, Section 1.2) where it is shown that subsampling (and averaging) is motivated by a substantial decrease in the bias of their estimator. As in Sect. 4.2, we apply the log-transformation on the observed time series, and assume the additive measurement error model (2). The data are displayed in Fig. 7, top panel.

Top: Natural logarithm of the EUR/USD exchange rate data for 2 March 2015 analysed in Sect. 5. Bottom: Posterior mean (black curve) and pointwise 95% credible band (blue band) for the volatility function. The time axis is rescaled to [0, 1] (color figure online)

Financial time series often contain jumps, accurate detection of which is a delicate task. As this section serves illustrative purposes only, for simplicity we ignore jumps in our analysis. For high frequency data, volatility estimates are expected to recover quickly after infrequent jumps in the underlying process. This should in particular be the case for our learning procedure, given that we model the volatility as a piecewise constant function, which can be viewed as an estimate of the “histogramised” true volatility. Indeed, effects of infrequent jumps in the underlying process are likely to get smoothed out by averaging (we make no claim of robustness of our procedure with respect to possible presence of large jumps in the process X, or densely clustered small jumps). An alternative here would have been to use a heuristic jump removal technique, such as the ones discussed in Sabel et al. (2015); next one could have applied our Bayesian procedure on the resulting “cleaned” data.

We report inference results in the bottom panel of Fig. 7, where time is rescaled to the interval [0, 1], so that \(t=0\) corresponds to the midnight and \(t=0.5\) to noon. We used \(N=96\) bins. As seen from the plot, there is considerable uncertainty in volatility estimates. Understandably, the volatility is lower during the night hours. It also displays two peaks corresponding to the early morning and late afternoon parts of the day. Finally, we give several trace plots of the generated Markov chains in Fig. 9. The algorithm took about 33 min to complete. In Figure 8 we give inference results obtained via further subsampling of the data, retaining \(50\%\) of the observations. The posterior mean is quite similar to that in Fig. 7, whereas the wider credible band reflects greater inferential uncertainty due to a smaller sample size. The figure provides a partial validation of the model we use.

Posterior mean (black curve) and pointwise 95% credible band (blue band) for the volatility of the further subsampled EUR/USD exchange rate data analysed in Sect. 5. The time axis is rescaled to [0, 1] (color figure online)

Log trace plots for the exchange rate data example of Sect. 5. Top: \(\alpha \), middle: \(\eta _v\), bottom: \(\theta _{20}\)

6 Discussion

In this paper we studied a practical nonparametric Bayesian approach to volatility learning under microstructure noise. From the statistical theory point of view, the problem is much more difficult than volatility learning from noiseless observations. Hence, accurate inference on volatility under microstructure noise requires large amounts of data. Fortunately, these are available in financial applications. On the other hand, design of a learning method that scales well with data becomes important. Our specification of the prior and a deliberate, but asymptotically harmless misspecification of the drift by taking \(b\equiv 0\) are clever choices that enable us to combine our earlier work in Gugushvili et al. (2019b) with the FFBS algorithm for Gaussian linear state space models. This gives a conceptually simple and fast algorithm (Gibbs sampler) to obtain samples from the posterior, from which inferences can be drawn in a straightforward way. A very interesting topic for future research within our approach is to explicitly account for the possible presence of jumps in financial time series.

Code availability

The computer code to reproduce numerical examples in this article is available at Gugushvili et al. (2022).

Notes

As of 2020, data are not available from the Pepperstone website any more, but can be obtained directly from the present authors. The data are stored as csv files, that contain the dates and times of transactions and bid and ask prices. The data over 2019 are available for download (after a free registration) at https://www.truefx.com/truefx-historical-downloads.

References

Bezanson, J., Edelman, A., Karpinski, S., & Shah, V. B. (2017). Julia: A fresh approach to numerical computing. SIAM Review, 59(1), 65–98.

Brigo, D., & Mercurio, F. (2006). Interest rate models-theory and practice: With smile, inflation and credit (Vol. 2). Springer.

Cemgil, A. T., & Dikmen, O. (2007). Conjugate gamma Markov random fields for modelling nonstationary sources. In M. E. Davies, C. J. James, S. A. Abdallah, & M. D. Plumbley (Eds.), Independent component analysis and signal separation: 7th International Conference, ICA 2007, London, UK, September 9–12, 2007. Proceedings (pp. 697–705). Springer.

Cox, J. C., Ingersoll, J. E., & Ross, S. A. (1985). A theory of the term structure of interest rates. Econometrica, 53(2), 385–407.

Fan, J., & Gijbels, I. (1995). Data-driven bandwidth selection in local polynomial fitting: Variable bandwidth and spatial adaptation. Journal of Royal Statistics Society Series B, 57(2), 371–394.

Filipovic, D. (2009). Term-structure models. A graduate course. Springer.

Ghosal, S., & van der Vaart, A. (2017). Fundamentals of nonparametric Bayesian inference, Cambridge series in statistical and probabilistic mathematics (Vol. 44). Cambridge: Cambridge University Press.

Glasserman, P. (2004). Monte Carlo methods in financial engineering (Vol. 53). Springer.

Gugushvili, S., van der Meulen, F., Schauer, M., & Spreij, P. (2019). Fast and scalable non-parametric Bayesian inference for Poisson point processes. RESEARCHERS.ONE. https://www.researchers.one/article/2019-06-6

Gugushvili, S., van der Meulen, F., Schauer, M., & Spreij, P. (2019). Nonparametric Bayesian volatility estimation. In J. de Gier, C. E. Praeger, & T. Tao (Eds.), 2017 MATRIX annals (pp. 279–302). Springer.

Gugushvili, S., van der Meulen, F., Schauer, M., & Spreij, P. (2020). Nonparametric Bayesian estimation of a Hölder continuous diffusion coefficient. Brazilian Journal of Probability and Statistics, 34(3), 537–579. https://doi.org/10.1214/19-BJPS433.

Gugushvili, S., van der Meulen, F., Schauer, M., & Spreij, P. (2022). Julia code for nonparametric Bayesian volatility learning under microstructure noise. https://doi.org/10.5281/zenodo.6801410

Heston, S. L. (1993). A closed-form solution for options with stochastic volatility with applications to bond and currency options. Review of Financial Studies, 6(2), 327–343.

Hoffmann, M., Munk, A., & Schmidt-Hieber, J. (2012). Adaptive wavelet estimation of the diffusion coefficient under additive error measurements. Annales de l’IHP Probabilités et Statistiques, 48(4), 1186–1216.

Ignatieva, K., & Platen, E. (2012). Estimating the diffusion coefficient function for a diversified world stock index. Computer Statistics Data Analysis, 56(6), 1333–1349.

Jacod, J., Li, Y., Mykland, P., Podolskij, M., & Vetter, M. (2009). Microstructure noise in the continuous case: The pre-averaging approach. Stochastic Processes Application, 119(7), 2249–2276.

Jacod, J., & Shiryaev, A. (2013). Limit theorems for stochastic processes (Vol. 288). Springer.

Kanaya, S., & Kristensen, D. (2016). Estimation of stochastic volatility models by nonparametric filtering. Econometric Theory, 32(4), 861–916.

Kloeden, P. E., & Platen, E. (1992). Numerical solution of stochastic differential equations, Applications of Mathematics (New York) (Vol. 23). Springer. https://doi.org/10.1007/978-3-662-12616-5.

Mancini, C., Mattiussi, V., & Renò, R. (2015). Spot volatility estimation using delta sequences. Finance and Stochastics, 19(2), 261–293.

Müller, P., & Mitra, R. (2013). Bayesian nonparametric inference—Why and how. Bayesian Analyses, 8(2), 269–302.

Müller, P., Quintana, F. A., Jara, A., & Hanson, T. (2015). Bayesian nonparametric data analysis. Springer Series in Statistics. Springer.

Munk, A., & Schmidt-Hieber, J. (2010). Lower bounds for volatility estimation in microstructure noise models. Borrowing strength: Theory powering applications—A Festschrift for Lawrence D. Brown, Inst. Math. Stat. (IMS) Collect. (Vol. 6, pp. 43–55). Institute of Mathematics Statistics.

Musiela, M., & Rutkowski, M. (2005). Martingale methods in financial modelling. Stochastic modelling and applied probability (2nd ed., Vol. 36). Springer.

Mykland, P. A., & Zhang, L. (2009). Inference for continuous semimartingales observed at high frequency. Econometrica, 77(5), 1403–1445.

Mykland, P. A., & Zhang, L. (2012). The econometrics of high-frequency data. In C. R. C. Press (Ed.), Statistical methods for stochastic differential equations, Monogr. Statist. Appl. Probab. (Vol. 124, pp. 109–190). CRC Press.

Papaspiliopoulos, O., Roberts, G. O., & Stramer, O. (2013). Data augmentation for diffusions. Journal of Computational and Graphical Statistics, 22(3), 665–688.

Petris, G., Petrone, S., & Campagnoli, P. (2009). Dynamic linear models with R. Use R! Springer.

Reiß, M. (2011). Asymptotic equivalence for inference on the volatility from noisy observations. Annals of Statistics, 39(2), 772–802.

Sabel, T., Schmidt-Hieber, J., & Munk, A. (2015). Spot volatility estimation for high-frequency data: Adaptive estimation in practice. Modeling and stochastic learning for forecasting in high dimensions, Lect. Notes Stat. (Vol. 217, pp. 213–241). Springer.

Silverman, B. W. (1986). Density estimation for statistics and data analysis. Monographs on statistics and applied probability. Chapman & Hall.

Tanner, M. A., & Wong, W. H. (1987). The calculation of posterior distributions by data augmentation. Journal of American Statistics Association, 82(398), 528–550 (With discussion and with a reply by the authors).

Tierney, L. (1994). Markov chains for exploring posterior distributions. Annals of Statistics, 22(4), 1701–1762.

van der Ploeg, A. P. C. (2006). Stochastic volatility and the pricing of financial derivatives. Ph.D. thesis, University of Amsterdam.

van der Meulen, F., & Schauer, M. (2017). Bayesian estimation of discretely observed multi-dimensional diffusion processes using guided proposals. Electronics Journal of Statistics, 11(1), 2358–2396.

Wilkinson, D. J. (2012). Metropolis Hastings MCMC when the proposal and target have differing support. https://darrenjw.wordpress.com/2012/06/04/metropolis-hastings-mcmc-when-the-proposal-and-target-have-differing-support/. Accessed 23 Dec 2017

Zhang, L., Mykland, P. A., & Aït-Sahalia, Y. (2005). A tale of two time scales: Determining integrated volatility with noisy high-frequency data. Journal of the American Statistical Association, 100(472), 1394–1411.

Funding

The research leading to the results in this paper has received funding from the European Research Council under ERC Grant Agreement 320637.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Details on update steps in the Gibbs sampler

Details on update steps in the Gibbs sampler

1.1 Drawing \(x_{0:n}\)

We first describe how to draw the state vector \(x_{0:n}\) conditional on all other parameters in the model and the data \(y_{1:n}\). Note that for \(u_i\) in (5) we have by (4) that \(u_i \sim N(0,w_i)\), where

By Eq. (4.21) in Petris et al. (2009) (we omit dependence on \(\theta _{1:N}, \eta _v\) in our notation, as they stay fixed in this step),

where the factor with \(i=n\) in the product on the righthand side is the filtering density \(p( x_n | y_{1:n})\). This distribution is in fact \(N(\mu _n,C_n)\), with the mean \(\mu _n\) and variance \(C_n\) obtained from Kalman recursions

Here

is the Kalman gain. Furthermore, \( e_i=y_i-\mu _{i-1} \) is the one-step ahead prediction error, also referred to as innovation. See Petris et al. (2009), Section 2.7.2. This constitutes the forward pass of the FFBS.

Next, in the backward pass, one draws backwards in time \({\widetilde{x}}_n \sim N(\mu _n,C_n)\) and \({\widetilde{x}}_{n-1},\ldots {\widetilde{x}}_0\) from the densities \(p( x_i | {\widetilde{x}}_{i+1}, y_{1:n})\) for \(i=n-1,n-2,\ldots ,0\). It holds that \(p( x_i | {\widetilde{x}}_{i+1:n}, y_{1:n})=p( x_i | {\widetilde{x}}_{i+1}, y_{1:n})\), and the latter distribution is \(N(h_i,H_i)\), with

For every i, these expressions depend on a previously generated \({\widetilde{x}}_{i+1}\) and other known quantities only. The sequence \({\widetilde{x}}_0,{\widetilde{x}}_1,\ldots ,{\widetilde{x}}_n\) is a sample from \(p(x_{0:n}|y_{1:n})\). See Section 4.4.1 in Petris et al. (2009) for details on FFBS.

1.2 Drawing \(\eta _v\), \(\theta _{1:N}\) and \(\zeta _{2:N}\)

Using the likelihood expression from Sect. 2.3 and the fact that \(\eta _v\sim {\text {IG}}(\alpha _v,\beta _v)\), one sees that the full conditional distribution of \(\eta _v\) is given by

Similarly, using the likelihood expression from Sect. 2.3 and the conditional distributions in (7), one sees that the full conditional distributions for \(\theta _{1:N}\) are

The full conditional distributions for \(\zeta _{2:N}\) are

1.3 Drawing \(\alpha \)

The unnormalised full conditional density of \(\alpha \) is

The corresponding normalised density is nonstandard, and the Metropolis-within-Gibbs step (see, e.g., Tierney 1994) is used to update \(\alpha \). The specific details are exactly the same as in Gugushvili et al. (2019b).

1.4 Gibbs sampler

Settings for the Gibbs sampler in Sect. 4 are as follows: we used a vague specification \(\alpha _1,\beta _1\rightarrow 0\), and also assumed that \(\log \alpha \sim N(1,0.25)\) and \(\eta _v \sim {\text {IG}}(0.3,0.3)\) in Sect. 4.1. For the Heston model in Sect. 4.2 we used the specification \(\eta _v \sim {\text {IG}}(0.001,0.001)\). Furthermore, we set \(x_0 \sim N(0,25)\). The Metropolis-within-Gibbs step to update the hyperparameter \(\alpha \) was performed via an independent Gaussian random walk proposal (with a correction as in Wilkinson (2012)) with scaling to ensure the acceptance rate of about \(30-50\%\). The Gibbs sampler was run for \(30 \, 000\) iterations, with the first third of the samples dropped as burn-in.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Gugushvili, S., van der Meulen, F., Schauer, M. et al. Nonparametric Bayesian volatility learning under microstructure noise. Jpn J Stat Data Sci 6, 551–571 (2023). https://doi.org/10.1007/s42081-022-00185-9

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42081-022-00185-9

Keywords

- Forward Filtering Backward Simulation

- Gibbs sampler

- High frequency data

- Inverse Gamma Markov chain

- Microstructure noise

- State-space model

- Volatility