Abstract

The cardiovascular system comprises the heart, blood and blood vessels. Cardiovascular diseases (CVD) are a leading cause of death worldwide among both women and men. About 9.4 million deaths are attributed to high blood pressure (BP) alone, of which 51% are due to strokes and 45% to coronary heart disease. The electrocardiogram (ECG) reflects the cardiac health of the subject (patient), since it records the electrical conduction of the heart, which appears as P-QRS-T waves. Analysis of these waves is tedious, however, because of the presence of different noises/artifacts. A Computer Aided Diagnosis (CAD) system is therefore required in practical medical scenarios for better, automated ECG signal analysis and to compensate for human errors. In general, implementing a CAD system for ECG signal analysis requires preprocessing, feature extraction and classification. In the existing literature, some authors have used time domain techniques, which yield good performance for clean ECG signals, i.e., those without noise/artifacts. Others have later used frequency domain techniques, but these suffer from spectral leakage, making them unsuitable for real-time/pathological datasets. The existing techniques from both domains are unable to analyze the nonlinear behavior of ECG signals effectively. These limitations have motivated this work, in which Continuous Wavelet Transform (CWT), Spectrogram and Autoregressive (AR) techniques are used collectively for interpreting nonlinear and non-stationary features of ECG signals. In this paper, both the Massachusetts Institute of Technology-Beth Israel Hospital Arrhythmia database (MB Ar DB) and a Real-time database (RT DB) have been used. The performance of the proposed method is compared with that of previous studies on the basis of sensitivity (SE) and detection rate (D.R). The proposed technique yields SE of 99.90% and D.R of 99.81% for MB Ar DB, and SE of 99.77% and D.R of 99.87% for RT DB. The proposed technique therefore shows promise as a diagnostic tool for improving health informatics in cardiology labs/hospitals.

1 Introduction

Cardiovascular disease (CVD) has been identified as the principal cause of death of about 17 million people worldwide every year [1]. CVD mainly refers to diseases related to the heart [2]. The heart is a cone-shaped organ which requires a constant supply of oxygen and nutrients [3,4,5]. It is responsible for supplying blood to the different organs of the body and it contracts at regular intervals [6]. Any obstruction in the supply of blood leads to heart attacks (or heart diseases), which cause many deaths every year [7, 8]. Unfortunately, the analysis of CVD is not a simple task, as it involves many complexities such as hypertensive, pulmonary, valvular, inflammatory cardiomyopathy, etc. [9,10,11]. An electrocardiogram (ECG) signal can provide a correct assessment of them [12,13,14]. This signal is generated by the active tissues of the heart, which generate electrical currents. The ECG signal is not random in nature; it follows a regular pattern in terms of time period, shape, and heart rate [15]. It is one of the earliest and cheapest non-invasive diagnostic tools in the medical field for detecting CVDs based on examination of its P-QRS-T waves [16,17,18,19]. These waves arise due to chemical, electrical and mechanical processes within the heart [20, 21]. Any continuous alterations in these waves indicate possible cardiac arrhythmia [22,23,24] and require a different kind of clinical diagnostic observation [25,26,27,28,29,30]. Early detection of such alterations is essential to assess the patient's health condition in time and to reduce the overall mortality rate [31]. Heart rate variability (HRV) is another factor that plays an important role in assessing the correct status of cardiac health as a preliminary diagnosis method [14, 24, 32,33,34,35,36,37].

The early-stage detection of cardiac arrhythmia is of prime importance [1]. During the acquisition of ECG data, however, different types of noise get involved; they hide important characteristics of the signal, mislead its analysis and introduce non-linearity [38, 39]. Analysis of this nonlinear signal requires automation, as provided by computer-aided diagnosis (CAD). This, in turn, requires efficient techniques to handle the current incidence of CVDs worldwide [40,41,42]. Also, personal ECG monitoring is now emerging among patients of different age groups worldwide [43]. Therefore, there is a need to develop a framework involving efficient techniques for all stages, viz. preprocessing, feature extraction, and classification, in the domain of biomedical signal processing [44, 45]. In the existing literature, some authors have used time domain techniques, which show good performance for clean ECG signals, i.e. those without noise/artifacts. Later, some authors reported frequency domain techniques, which have limited application due to spectral leakage. However, the techniques from both these domains were not able to analyze the nonlinear behavior of ECG signals effectively [46, 47]. For instance, in [48], Christov, I.I. et al. and in [49], Hamilton, P.S. & Tompkins, W.J. proposed heuristic methods for classifying an ECG signal, but the outcome was found to be highly dependent on proper selection of the band pass filter (3 dB frequencies). In [30], Kumar, M. et al. proposed a decision support system for atrial fibrillation using a flexible analytic wavelet transform. They tested the performance of the proposed technique on the basis of accuracy (ACC), sensitivity (SEN), and specificity (SPE) with a random forest classifier. In [50], Rao, K.D. proposed R-peak detection based on the discrete wavelet transform (DWT). In that research, R–R interval and data compression steps for ECG signals were presented along with R-peak detection.
The main obstacle in the application of DWT is its frequency resolution, which is reduced during resampling [51]. Traditionally, computationally efficient DWT-based techniques have been reported in the literature for inverting system transfer function models, computing piecewise constant system responses to arbitrary excitations, and fractional system analysis [52,53,54]. In [45], Asadur Rahman, Md. proposed preprocessing techniques for ECG signals using a simple statistical approach. In that article, MATLAB was used to develop the set-up for this kind of preprocessing. In [55], Hanumantha Rao, G. and Rekha, S. presented a transconductor-capacitor filter for biomedical signal applications. They proposed a low-voltage, low-power transconductor (5.85 nS, 0.8 V). This work was validated using a second-order Butterworth low-pass filter with a cutoff frequency of 100 Hz. In [56], Kora, P. proposed detection of myocardial infarction using Hybrid Firefly and Particle Swarm Optimization (FFPSO), which was used to optimize the raw ECG signal. However, the main drawback of PSO is its low convergence rate [57]. In [58], Pachori, R.B. et al. proposed a classification-based technique for analyzing datasets of diabetic and normal subjects based on the RR-interval. In that research article, empirical mode decomposition (EMD), a least square-support vector machine (LS-SVM) classifier, and Radial Basis Function (RBF), Morlet wavelet, and Mexican hat wavelet kernels were utilized. In [59], Jain, S. et al. used adaptive filters for QRS complex detection, but an appropriate reference signal was needed for their operation. In [9, 47, 60], Gupta, V. et al. proposed chaos theory as a feature extraction tool for ECG signals and used both real-time and standard datasets for demonstration.
The main limitation of this approach is the need for proper selection of the time delay dimension (embedding), correlation dimension, Lyapunov exponent and entropy, which is still a challenge. In [61], Hema Jothi, S. and Helen Prabha, K. proposed analysis of fetal ECG on the basis of adaptive neuro-fuzzy inference systems and the undecimated wavelet transform. In that article, performance was evaluated by comparing the reported technique with the standard discrete wavelet transform technique on the basis of mean square error (MSE). In [62], automated identification of normal and diabetic heart rate signals was proposed using approximate entropy, but it required knowledge of the previous amplitude values. In [63], Das, M.K. and Ari, S. proposed a denoising technique based on the Stockwell transform. The reported technique was validated using various normal and abnormal files of MB Ar DB. They added white Gaussian noise to the selected records of MB Ar DB to investigate the effectiveness of their technique. Various performance parameters, viz. SNR, RMSE and PRD, were estimated for comparison.

In the existing literature, most of the techniques are not suitable for handling high-frequency components efficiently. These techniques tend to trim the amplitudes of the QRS peaks, increasing false detections and duplicate detections of the peaks [64]. This problem motivated the present authors to explore efficient techniques that can provide better frequency information and result in more efficient and accurate detection of R-peaks by effectively filtering out the high-frequency noise components.

In this paper, spectrogram (obtained using short-time Fourier transform) has been used, because it helps in effective measurement of time, frequency and power intensity information simultaneously through time–frequency analysis. Also, continuous wavelet transform (CWT) has been used for enhancing both time and frequency resolutions as compared to that provided by the spectrogram [65]. The benefit of using a spectrogram is due to the fact that Fourier transform has been known to be a good candidate for analyzing stationary signals.

The wavelet transform represents a nonlinear signal by translations and dilations of a window. It is of two types: CWT and discrete wavelet transform (DWT). DWT is not a good candidate for the present application because its frequency resolution is reduced during resampling at each decomposition level. CWT, on the other hand, provides a good and consistent frequency resolution. It also allows a sufficient and dominant scale to be estimated for each component of the ECG signal in each dataset, which further helps in estimating each component separately from the selected ECG dataset [66]. Furthermore, the proposed use of the AR technique compensates for the limitations of the spectrogram and CWT and provides enhanced time and frequency resolution simultaneously. The proposed technique helps in obtaining clearer frequency information, which is important for filtering out the high-frequency noise components.

The paper is structured as follows: Sect. 2 describes the materials and methods, and Sect. 3 presents and analyzes the simulated results in detail, followed by conclusions at the end.

2 Materials and methods

The methodology proposed in this paper is shown in Figs. 1 and 2.

2.1 ECG dataset (recording)

MIT-BIH Arrhythmia and Real-time databases have been used for validating the proposed methodology.

2.1.1 MIT-BIH arrhythmia database

Massachusetts Institute of Technology-Beth Israel Hospital Arrhythmia database (MB Ar DB) [70, 71] has been considered in this study. It has 48 recordings sampled at 360 Hz, with durations of 30–60 min, using two-lead arrangements. In this paper, all 48 datasets of MB Ar DB were downloaded from the PhysioNet database and used directly for this study.

In this paper, 12 real-time recordings (RT DB) were also used to establish the performance of the proposed methodology in a practical scenario. The use of two databases in this paper is in line with other studies in the existing literature that made use of a variety of databases for validating their work [39, 72,73,74,75].

2.1.2 Real-time ECG database

In this paper, 27 real-time recordings (RT DB) were also acquired at a sampling rate of 360 Hz, with durations of 10–30 min, using two-lead arrangements under the supervision of a skilled lab technician. The data were acquired after obtaining permission from the research ethics committee of NIT, Jalandhar, India, and consent from each volunteering subject before ECG acquisition. The 27 participating subjects were aged between 23 and 72 years and included research scholars, retired professors, and college students. Unfortunately, only 12 ECG recordings were suitable for analysis. These ECG datasets were stored directly on a personal computer using Biopac MP35/36 equipment.

Figure 1 shows the recording set-up along with a recorded real-time ECG signal. The acquired data remain stored on a computer and may be used for data interpretation in the future.

2.2 Preprocessing

The presence of different types of noise/artifacts during ECG signal acquisition makes the analysis of the ECG signal more complex and difficult [76, 77]. In such situations, cardiologists/physicians/doctors face distinct problems in assessing the clinical datasets of patients with CVDs [63, 78, 79]. These noises/artifacts may be due to motion, respiration, poor electrode condition, baseline wander (BLW), muscle noise, and power line interference (PLI) [63, 80,81,82]. In this paper, Savitzky–Golay digital filtering (SGDF) has been used for preprocessing of the MIT-BIH Arrhythmia datasets as described in [83, 84]. It is a digital filter used for smoothing the raw ECG signal [60] while preserving all important clinical attributes after filtering [85].

SGDF is characterized by matrix \([\mathrm{g}]\) which has \(\mathrm{D}+1\) rows and \(2\mathrm{N}+1\) columns. Mathematically, it is represented as

where \({\alpha }\), x, n, W, and k denote the filtering coefficients, the input signal, the column index (2N + 1 columns), the weighting matrix, and the row index (D + 1 rows), respectively.
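To make the role of the \((\mathrm{D}+1)\times (2\mathrm{N}+1)\) matrix concrete, the following is a minimal sketch of Savitzky–Golay smoothing built from an unweighted least-squares polynomial fit. It is an illustration under stated assumptions rather than the exact filter used in this paper: the half-width N = 7, the polynomial degree D = 3, the identity weighting, the edge padding, and the function names `savgol_matrix` and `savgol_smooth` are all hypothetical choices, and the demonstration signal is synthetic.

```python
import numpy as np

def savgol_matrix(half_width, degree):
    """Least-squares matrix g with degree+1 rows and 2*half_width+1 columns."""
    n = np.arange(-half_width, half_width + 1, dtype=float)
    # Column d of A holds n**d, so A @ coeffs evaluates the polynomial on the window.
    A = np.vander(n, degree + 1, increasing=True)
    # Unweighted fit (identity weighting is an assumption of this sketch).
    return np.linalg.pinv(A)

def savgol_smooth(x, half_width=7, degree=3):
    """Replace each sample by the value of the local polynomial fit at the window centre."""
    x = np.asarray(x, dtype=float)
    h = savgol_matrix(half_width, degree)[0]      # row 0 gives the fitted value at n = 0
    padded = np.pad(x, half_width, mode="edge")   # repeat the end samples at the borders
    return np.convolve(padded, h[::-1], mode="valid")

# Illustrative use on a synthetic noisy trace (not ECG data).
rng = np.random.default_rng(0)
t = np.arange(720) / 360.0
clean = np.sin(2 * np.pi * 1.2 * t)
noisy = clean + 0.1 * rng.standard_normal(t.size)
smoothed = savgol_smooth(noisy)
```

Row 0 of the matrix holds the smoothing coefficients, and the remaining rows would give the fitted derivatives. Because the fit is exact for any polynomial up to degree D, narrow features such as QRS peaks are attenuated less than with a moving average of the same length.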

2.3 Feature extraction

The methods used for feature extraction in this paper are presented in the following subsections.

2.3.1 Continuous wavelet transform (CWT)

CWT helps in the analysis of non-stationary signals at multiple scales by considering an analysis window to extract signal segments [86]. Mathematically, the CWT [16] of a signal \(y(t)\) using a family of wavelet functions, \({\Psi }_{{\alpha },\upbeta }(\mathrm{t})\), is given by:

\[\mathrm{CWT}(\alpha ,\beta ) = \frac{1}{\sqrt{|\alpha |}}\int_{-\infty }^{\infty } y(t)\,{\psi }^{*}\left(\frac{t-\beta }{\alpha }\right)\mathrm{d}t\]

where β is the translation factor, α is the scale factor, * denotes the complex conjugate, and ψ ∗ is the translated and scaled complex-conjugated mother wavelet function.
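As an illustration of how the scale factor α and the translation factor β act on a signal, the sketch below evaluates the CWT by direct numerical correlation of the signal with scaled copies of a mother wavelet. The Mexican hat wavelet, the three scales, the truncation of the wavelet at ±5α, and the synthetic single-bump signal are assumptions made for the example only; the paper does not state which mother wavelet or scales were used.

```python
import numpy as np

def mexican_hat(u):
    """Real-valued Mexican hat mother wavelet (unnormalised)."""
    return (1.0 - u**2) * np.exp(-0.5 * u**2)

def cwt(y, scales, dt):
    """Return W[i, k], the wavelet coefficient at scale scales[i] and shift k*dt."""
    y = np.asarray(y, dtype=float)
    W = np.empty((len(scales), y.size))
    for i, a in enumerate(scales):
        half = int(np.ceil(5.0 * a / dt))      # the wavelet is negligible beyond +/- 5a
        tau = np.arange(-half, half + 1) * dt
        psi = mexican_hat(tau / a) / np.sqrt(a)
        # The wavelet is real, so its complex conjugate is the wavelet itself.
        W[i] = np.correlate(y, psi, mode="same") * dt
    return W

# Synthetic trace: one narrow bump at t = 1 s, sampled at 360 Hz (not ECG data).
fs = 360.0
t = np.arange(720) / fs
y = np.exp(-0.5 * ((t - 1.0) / 0.01) ** 2)
scales = [0.01, 0.02, 0.04]                    # in seconds
W = cwt(y, scales, 1.0 / fs)
peak_index = int(np.argmax(np.abs(W[1])))      # shift with the largest modulus at scale 0.02 s
```

In this toy case the modulus of the coefficients peaks at the sample where the bump is centred, which is the property exploited when the CWT modulus is used to locate R-peaks.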

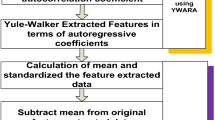

2.3.2 Autoregressive (AR) technique

Among existing time–frequency analysis (TFA) techniques, auto-regressive (AR) technique offers good time–frequency resolution [87]. It estimates the order of the model of the considered ECG dataset to provide better results [88]. The order of this model is important as it indicates its number of poles [89]. AR analysis provides both power spectrum density (PSD) description and TFA [90]. For a signal \(\mathrm{y}[\mathrm{k}]\), if \(\mathrm{m}\) is the model order, δ[k] is the zero-mean white noise, then the AR process is written as [90]

where \({{\alpha }}_{\mathrm{j}}\) are the jth coefficients of AR process, and m denotes time delay index.

The power spectrum is given by [90]
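The following sketch shows one common way to estimate AR coefficients and the corresponding power spectrum. It assumes the sign convention \(y[k]=\sum_{j=1}^{m}{\alpha }_{j}\,y[k-j]+\delta [k]\), for which the spectrum is proportional to \({\sigma }^{2}/{|1-\sum_{j=1}^{m}{\alpha }_{j}{e}^{-i2\pi fj/{f}_{s}}|}^{2}\); the convention of [90] may differ in sign. The Yule–Walker estimator, the function names, and the simulated second-order test signal are choices made for this example and are not taken from the paper.

```python
import numpy as np

def yule_walker(y, order):
    """Estimate AR coefficients and noise variance from the biased autocorrelation."""
    y = np.asarray(y, dtype=float)
    y = y - y.mean()
    n = y.size
    r = np.array([np.dot(y[: n - k], y[k:]) / n for k in range(order + 1)])
    # Toeplitz system R @ alpha = r[1:], from r[k] = sum_j alpha_j * r[k - j].
    R = np.array([[r[abs(i - j)] for j in range(order)] for i in range(order)])
    alpha = np.linalg.solve(R, r[1:])
    sigma2 = r[0] - np.dot(alpha, r[1:])
    return alpha, sigma2

def ar_power_spectrum(alpha, sigma2, freqs, fs):
    """AR power spectrum (up to a constant scale factor) at the given frequencies in Hz."""
    lags = np.arange(1, len(alpha) + 1)
    phase = np.exp(-2j * np.pi * np.outer(freqs, lags) / fs)
    return sigma2 / np.abs(1.0 - phase @ alpha) ** 2

# Simulated AR(2) process with known coefficients (not ECG data).
rng = np.random.default_rng(1)
true_alpha = np.array([0.75, -0.5])
noise = rng.standard_normal(20000)
y = np.zeros(noise.size)
for k in range(2, y.size):
    y[k] = true_alpha[0] * y[k - 1] + true_alpha[1] * y[k - 2] + noise[k]

alpha_hat, sigma2_hat = yule_walker(y, order=2)
freqs = np.linspace(0.0, 180.0, 181)
psd = ar_power_spectrum(alpha_hat, sigma2_hat, freqs, fs=360.0)
```

The model order m sets the number of poles and therefore how many spectral peaks the estimate can resolve, so the same code can be rerun with different values of `order` to compare the resulting spectra.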

2.3.3 Spectrogram technique

A spectrogram provides a time-varying spectral density description of the ECG signal. It shows the signal in the time–frequency domain. Mathematically, it is given by the squared magnitude of the short time Fourier transform (STFT) of the signal as in [91]

\[\mathrm{Spectrogram}(t,w) = {\left|\mathrm{STFT}(t,w)\right|}^{2}\]

where t denotes time (in sec) and w denotes frequency (in rad/sec).

STFT estimates sinusoidal frequency and phase content of the local segments of a signal as it changes over time. It converts long length signal into small segments and computes Fourier transform of each [92]. Therefore, spectrogram represents time–frequency-intensity spectrum for a short time duration [93, 94].
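The segment-and-transform procedure described above can be sketched as follows. The Hann window, the segment length, the hop size, and the pure 30 Hz test tone are assumptions chosen for illustration; the paper does not specify its window settings, and frequencies are returned here in Hz rather than rad/sec.

```python
import numpy as np

def spectrogram(y, fs, nperseg=256, hop=128):
    """Squared magnitude of the STFT: one row per segment, one column per frequency bin."""
    y = np.asarray(y, dtype=float)
    window = np.hanning(nperseg)
    starts = range(0, y.size - nperseg + 1, hop)
    frames = np.stack([y[s:s + nperseg] * window for s in starts])
    stft = np.fft.rfft(frames, axis=1)              # Fourier transform of each segment
    power = np.abs(stft) ** 2
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)    # bin centres in Hz
    times = (np.array(list(starts)) + nperseg / 2) / fs
    return times, freqs, power

# Synthetic 30 Hz tone sampled at 360 Hz for 3 s (not ECG data).
fs = 360.0
t = np.arange(int(3 * fs)) / fs
y = np.sin(2 * np.pi * 30.0 * t)
times, freqs, power = spectrogram(y, fs, nperseg=360, hop=180)
dominant = freqs[np.argmax(power, axis=1)]          # strongest frequency in each segment
```

A longer segment gives finer frequency bins but coarser time localisation, which is the resolution trade-off that motivates complementing the spectrogram with CWT and AR analysis.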

2.3.4 Classification

After preprocessing using SGDF and feature extraction using CWT, spectrogram, and AR modeling techniques, the main task that remains is classification, which is a crucial step in detecting the exact R-peaks in the ECG signal. The K-Nearest Neighbor (KNN) classifier is selected here as it yields sufficiently accurate classification results. It works on the assumption that similar things tend to lie near each other, which can be implemented easily using simple distance computations in the feature space. In the past, some authors avoided the KNN classifier because it is a lazy learner. But in a CAD-based system, it is accuracy that matters for saving the life of a subject (patient) in an emergency. Moreover, KNN does not rely on building a model and is thus able to yield more versatile responses [10]. This is the reason for the emergence of CAD in the field of health informatics.

In KNN, the Euclidean distance metric (EDM) is generally preferred [95], because it does not require assigning weights to the various features. EDM is measured between the test sample and the training sample as

\[\mathrm{EDM}(P,Q) = \sqrt{\sum_{i}{\left({P}_{i}-{Q}_{i}\right)}^{2}}\]

where P and Q denote the test and training samples, respectively, in class L, and the sum runs over the feature components i. Each test sample is then classified on the basis of its K nearest training samples. An odd value of K is usually preferred [96]. An appropriate value of K gives low test error rates, but it may increase the number of iterations. Therefore, the strategy is to use the same dataset for both testing and training purposes. K-fold cross validation is also applied for validation of the dataset as in [97]. Figure 3 indicates the steps involved in the KNN classification algorithm.

Fig. 3 Steps involved in the KNN classification algorithm [10]
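The KNN procedure can be sketched in a few lines: compute the Euclidean distance from the test sample to every training sample, keep the K nearest, and take a majority vote. The two-dimensional feature vectors, the class labels, and K = 3 below are made-up values for illustration and do not correspond to the features extracted in this paper.

```python
from collections import Counter

import numpy as np

def knn_predict(train_X, train_y, test_X, k=3):
    """Label each test sample by majority vote among its k nearest training samples."""
    train_X = np.asarray(train_X, dtype=float)
    train_y = np.asarray(train_y)
    predictions = []
    for p in np.atleast_2d(np.asarray(test_X, dtype=float)):
        distances = np.sqrt(np.sum((train_X - p) ** 2, axis=1))  # Euclidean distance
        nearest = np.argsort(distances)[:k]
        votes = Counter(train_y[nearest].tolist())
        predictions.append(votes.most_common(1)[0][0])
    return np.array(predictions)

# Hypothetical two-feature training set with two classes (0 and 1).
train_X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
train_y = [0, 0, 0, 1, 1, 1]
predicted = knn_predict(train_X, train_y, [[0.2, 0.1], [5.5, 5.2]], k=3)
```

With two classes, an odd K guarantees that the vote cannot end in a tie, which is one practical reason for preferring odd values.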

2.3.5 Classification parameters

For evaluating the performance of the proposed methodology, two important parameters, viz. sensitivity (SE) and detection rate (D.R), are considered in this paper. They are defined as follows [98]:

Sensitivity (SE): the ratio of true positives (TP) to all actual positives (TP + FN). It estimates the proportion of actual positives, TP/(TP + FN), that are accurately detected.

Detection Rate (D.R): the ratio of the total number of true positives (TP) to the total number of actual peaks.

Mathematically, these classification parameters are defined as [66, 79, 81, 99,100,101,102,103]

\[\mathrm{SE} = \frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FN}}\times 100\%\]

\[\mathrm{D.R} = \frac{\mathrm{TP}}{\text{total actual peaks}}\times 100\%\]

where TP denotes true positives, FP denotes false positives, and FN denotes false negatives, which are illustrated below:

True positives (TP): These are events that are detected as positive when they are actually present. For example, if a patient is diagnosed with a disease from which they are actually suffering, it is a TP.

False positives (FP): Also known as Type-I errors, these are wrongly detected events. For example, if a patient is diagnosed with a disease from which they are not suffering, it is an FP.

False negatives (FN): Also known as Type-II errors, these are wrongly missed events. For example, if a patient is not diagnosed with a disease from which they are actually suffering, it is an FN. Various techniques used in the existing literature are summarized in Table 1 along with their pros and cons.

3 Results and analysis

Any deviations in a signal make the recognition of existing patterns difficult. The spectrogram can represent these deviations effectively only if the signal has a high SNR. Otherwise, both the time domain and spectrogram approaches fail, and phase analysis using CWT works better [94], as shown in Fig. 4. It shows two peaks in the left panel and three peaks in the right panel for the 103m dataset. The modulus (magnitude response) of the CWT clearly reveals all the actual peaks, and the angle (phase response) reveals their characteristics.

The AR technique strengthens the CAD system by measuring the amount of peak power that is associated with each of the constituent frequency components. This distribution is known as the power spectrum. Any noise that remains after preprocessing is further investigated using the power spectrum. The corresponding coefficients help to identify the type of heart disease, as done in [72, 95]. Figure 5a, b shows the power spectrum and AR coefficients for the MIT-BIH Arrhythmia database (record no. 103m) at model order 5. Figure 5c, d shows the power spectrum and AR coefficients for the same record at model order 6.

A contour plot is used to describe an ECG signal in terms of its time–frequency content for differentiating (i) noisy and filtered ECG datasets and (ii) normal and abnormal ECG datasets. Figure 6 shows the contour plot of MB Ar DB (record no. 103m) for differentiating noisy and filtered ECG datasets, where the vertical axis is frequency, the horizontal axis is time, and power is indicated by the color intensity [94]. The existing approaches based on the power spectrum gave wrong outcomes due to their limited time–frequency resolution. They estimated the same frequency output using different windows of identical size for both normal subjects' and heart patients' ECG datasets. The spectrogram technique, however, provides an effective signal estimate in both the time and frequency segments of the ECG datasets.

The AR technique has a multiresolution capability that can identify all the actual peaks as well as the noise present in the recorded ECG signal. Figure 7 shows the detected R-peaks in record 103m at model order 6 using the AR technique. Here, the R-peaks in the three-dimensional view are obtained with a time interval of 1 s and a frequency resolution of 20.09 Hz/point. All amplitudes are obtained in decibels (dB) during R-peak detection using the AR modeling technique.

In this paper, the proposed technique has obtained SE of 99.90% and D.R of 99.81% for MB Ar DB, and SE of 99.77% and D.R of 99.87% for RT DB. Tables 2 and 3 show the analysis results for RT DB and MB Ar DB, respectively. Table 4 shows that the proposed technique outperforms the existing techniques. In the future, these results are expected to help extend the applications of the proposed methodology in expert systems.

Table 2 shows that out of 25,426 actual R-peaks, the proposed technique detects 25,406 R-peaks, with TP of 25,394, FN of 58, and FP of 35.

Table 3 shows that out of 110,043 actual R-peaks, the proposed technique detects 109,875 R-peaks, with TP of 109,833, FN of 109, and FP of 83. For several records of MB Ar DB, the proposed technique yields FN of 0 (MB-124, MB-207, MB-217, MB-219, MB-220, MB-222) or FP of 0 (MB-201, MB-202, MB-205, MB-207, MB-208, MB-210, MB-215, MB-217, MB-223, MB-231).
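As a quick arithmetic check, the reported SE and D.R values can be recomputed from the counts quoted above, taking SE as TP/(TP + FN) and D.R as TP divided by the number of actual R-peaks. The helper names below are illustrative; only the counts come from the text.

```python
def sensitivity(tp, fn):
    """SE in percent: true positives over all actual positives."""
    return 100.0 * tp / (tp + fn)

def detection_rate(tp, actual_peaks):
    """D.R in percent: true positives over the total number of actual peaks."""
    return 100.0 * tp / actual_peaks

# Counts quoted in the text for Tables 2 and 3.
reported = {
    "MB Ar DB": {"actual": 110043, "tp": 109833, "fn": 109},
    "RT DB": {"actual": 25426, "tp": 25394, "fn": 58},
}

results = {}
for name, c in reported.items():
    se = sensitivity(c["tp"], c["fn"])
    dr = detection_rate(c["tp"], c["actual"])
    results[name] = (round(se, 2), round(dr, 2))
    print(f"{name}: SE = {se:.2f}%, D.R = {dr:.2f}%")
# prints "MB Ar DB: SE = 99.90%, D.R = 99.81%"
# prints "RT DB: SE = 99.77%, D.R = 99.87%"
```

These values agree with the SE and D.R figures stated for both databases.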

The existing techniques have SE of 99.69%, 99.68%, 82.75%, 99.8%, 98.01%, 99.81%, 98.32%, 99.84%, 99.65%, 95.8%, 98.84%, 99.29%, 99.75%, and 99.66% in He et al. [57], Jain et al. [59], Narina et al. [112], Rai et al. [113], Rajesh and Dhuli [70], Saini et al. [97], Van et al. [114], Lin et al. [64], Das and Ari [63], Kumar et al. [30], Kaya et al. [115], Kaya and Pehlivan [116], Pan and Tompkins [117], and Liu et al. [118], respectively. Table 4 shows that the proposed technique (with SE of 99.90%) outperforms all of these existing techniques.

The existing techniques have D.R of 99.81%, 99.89%, 99.45%, 99.20%, 99.92%, 99.57%, 99.66%, and 99.87%, and FN + FP of 412, 249, 784, 1598, 219, 957, 758, and 245 in Phukpattaranont et al. [119], Sharma and Sharma [120], Pan and Tompkins [117], Dohare et al. [121], Manikandan and Soman [122], Nallathambi and Príncipe [123], Pandit et al. [124], and Yazdani and Vesin [125], respectively. It can be observed that several techniques, such as [120, 122] and [125], have slightly higher D.R than that obtained with the proposed methodology. However, the proposed technique outperforms all the other existing techniques on the basis of low false detection rate (FN + FP) with comparable D.R, as shown in Table 5.

The existing techniques have false detection rate (FN + FP) of 586, 479, 372, 594, 459 in Zidelmal et al. [126], Christov [127], Bouaziz et al. [128], Choi et al. [129], and Sahoo et al. [51], respectively. The proposed technique outperforms all other existing techniques on the basis of low false detection rate (FN + FP) as shown in Table 6 where all the datasets of MB Ar DB are considered. It is further concluded that the proposed technique outperforms all other existing techniques for most of the datasets viz. MB-104, MB-105, MB-108, MB-116, MB-200, MB-201, MB-202, MB-203, MB-205, MB-207, MB-208, MB-210, MB-215, MB-217, MB-222, MB-228, and MB-233.

4 Conclusion

This paper analyzed RT DB and MB Ar DB using the CWT, Spectrogram and Autoregressive techniques together. It has been demonstrated that the proposed technique outperforms the existing state-of-the-art techniques. The performance of the proposed methodology, i.e., SE of 99.90% and D.R of 99.81% (for MB Ar DB) and SE of 99.77% and D.R of 99.87% (for RT DB), indicates its applicability in the emerging medical informatics field in practical emergency situations. It is expected to help in properly classifying different kinds of arrhythmias promptly at an early stage. It has also been shown that the spectrogram gives important frequency analysis that can detect existing arrhythmias. The AR technique has yielded good time and frequency resolution simultaneously on the basis of selected features such as PSD, time–frequency analysis (TFA) and model order.

The proposed technique identifies the frequency information quite clearly, which was shown to be important for filtering out the high-frequency noise components. The proposed approach promises a ready-to-use methodology in any critical surgery or cardiology lab due to its robustness.

Abbreviations

- CVD: Cardiovascular Disease
- STFT: Short-time Fourier Transform
- CWT: Continuous Wavelet Transform
- MB Ar DB: Massachusetts Institute of Technology-Beth Israel Hospital Arrhythmia Database
- RT DB: Real-time Database
- CAD: Computer Aided Diagnosis
- ECG: Electrocardiogram
- SGDF: Savitzky–Golay Digital Filtering
- KNN: K-Nearest Neighbor
- AR: Autoregressive
- TFA: Time–Frequency Analysis
- EDM: Euclidean Distance Metric
- PSD: Power Spectral Density
- SE: Sensitivity
- DR: Detection Rate
- TP: True Positive
- FP: False Positive
- FN: False Negative
- WHO: World Health Organization
- SNR: Signal to Noise Ratio
- RMSE: Root Mean Square Error
- PRD: Percent Root Mean Square Difference
- ACC: Accuracy
- SPE: Specificity

References

Kumar, M., et al.: An efficient automated technique for CAD diagnosis using flexible analytic wavelet transform and entropy features extracted from HRV signals. Expert Syst Appl (2016). https://doi.org/10.1016/j.eswa.2016.06.038

Martis, R.J., et al.: Current methods in electrocardiogram characterization. Comp Biol Med 48, 133–149 (2014)

Gupta, V., et al.: Performance evaluation of various pre-processing techniques for R-peak detection in ECG signal. IETE J Res (2020). https://doi.org/10.1080/03772063.2020.1756473

Sharma, L.D., Sunkaria, R.K.: Myocardial infarction detection and localization using optimal features based lead specific approach. IRBM 41, 58–70 (2020)

Gupta, V., et al.: R-peak detection using chaos analysis in standard and real time ECG databases. IRBM 40(6), 341–354 (2019)

Wong, N.D.: Epidemiological studies of CHD and the evolution of preventive cardiology. Nat Rev Cardiol 11, 276–289 (2014)

Sahoo, S., et al.: Machine learning approach to detect cardiac arrhythmias in ECG signals: a survey. IRBM (2019). https://doi.org/10.1016/j.irbm.2019.12.001

Kora, P., Kalva, S.R.: Improved Bat algorithm for the detection of myocardial infarction. SpringerPlus 4, 666 (2015). https://doi.org/10.1186/s40064-015-1379-7

Gupta, V., Mittal, M.: QRS complex detection using STFT, chaos analysis, and PCA in standard and real-time ECG databases. J Inst Eng India Ser B (2019). https://doi.org/10.1007/s40031-019-00398-9

Gupta, V., Mittal, M.: Efficient R-peak detection in electrocardiogram signal based on features extracted using hilbert transform and burg method. J Inst Eng India Ser B (2020). https://doi.org/10.1007/s40031-020-00423-2

M.C. Helen, M., et al.: Changes in scale-invariance property of electrocardiogram as a predictor of hypertension. Internat J Med Eng Inform (IJMEI) 12(3), 228–236 (2020)

Ripoll, V.J.R., et al.: Assessment of electrocardiograms with pretraining and shallow networks. J Comput Cardiol 4, 1061–1064 (2014)

Chandra, S., et al.: A comparative analysis of performance of several wavelet based ECG data compression methodologies. IRBM (2020). https://doi.org/10.1016/j.irbm.2020.05.004

Mary, M.C., et al.: Assessment of scale invariance changes in heart rate signal during postural shift. IETE J Res 1, 1604172 (2019)

Gupta, V., Mittal, M.: Dimension reduction and classification in ECG signal interpretation using FA & PCA: A Comparison. Jangjeon Mathemat Soc 21(4), 765–777 (2018)

Aouinet, A., Adnane, C.: Electrocardiogram denoised signal by discrete wavelet transform and continuous wavelet transform. J Signal Proc Internat J (SPIJ) 8, 1–9 (2014)

Gupta, V., Mittal, M.: Respiratory signal analysis using PCA, FFT and ARTFA. In: 2016 International Conference on Electrical Power and Energy Systems (ICEPES), Maulana Azad National Institute of Technology, Bhopal, India, pp. 221–225 (2016)

Gupta, V., Mittal, M.: ECG (Electrocardiogram) signals interpretation using chaos theory. J Adv Res Dyn Cont Sys (JARDCS) 10(2), 2392–2397 (2018)

Rahhal, M.M.A., et al.: Deep learning approach for active classification of electrocardiogram signals. Internat J Inform Sci 345, 340–354 (2016)

Zhang, X.S., et al.: New approach to studies on ECG dynamics: extraction and analyses of QRS complex irregularity time series. J Med Biol Eng Comput 5, 467–473 (1997)

Subramanian, B., Ramasamy, A.: Investigation on the compression of electrocardiogram signals using dual tree complex wavelet transform. IETE J Res (2017). https://doi.org/10.1080/03772063.2016.1275988

Zhang, J., et al.: ECG signals denoising method based on improved wavelet threshold algorithm. IEEE Internat Conf 1, 1779–1784 (2016)

Yu, S.N., Chou, K.T.: Integration of independent component analysis and neural networks for ECG beat classification. J Expert Syst Appl 34, 2841–2846 (2008)

Krummen, D.E.: Accurate ECG diagnosis of atrial tachyarrhythmias using quantitative analysis: a prospective diagnostic and cost-effectiveness study. J Cardiov Electrophys 21, 11 (2010)

Annavarapu, A., Kora, P.: ECG-based atrial fibrillation detection using different orderings of conjugate symmetric-complex hadamard transform. Internat J Cardiov Acad 12, 151–154 (2016)

Aqil, M., et al.: ECG-waves: analysis and detection by continuous wavelet transform. J Telecommun J Electronic Computer Eng 9, 45–52 (2010)

Lin, C.: Heart Rate Variability Analysis using Windows and Wavelet Transform. Internat J Cardiol 109(1), 101–107 (2006)

Chen, S., et al.: Heartbeat classification using projected and dynamic features of ECG Signal. Biomed Signal Process Control 31, 165–173 (2017)

Gupta, V., Mittal, M.: Principal component analysis and factor analysis as an enhanced tool of pattern recognition. Int J Elec Electr Eng Telecoms 1(2), 73–78 (2015)

Kumar, M., et al.: Automated diagnosis of atrial fibrillation ECG signals using entropy features extracted from flexible analytic wavelet transform. Biocybern Biomed Eng (2018). https://doi.org/10.1016/j.bbe.2018.04.004

Alshebly, Y.S., Nafea, M.: Isolation of fetal ECG signals from abdominal ECG using wavelet analysis. IRBM (2019). https://doi.org/10.1016/j.irbm.2019.12.002

Chazal, P.D., et al.: Automatic classification of heartbeats using ECG morphology and heartbeat interval features. IEEE Trans Biomed Eng 51, 1196–1206 (2004)

Jonnagaddala, J., et al.: Coronary artery disease risk assessment from unstructured electronic health records using text mining. J Biomed Inform 58, 203–210 (2015)

Martínez, J.P., et al.: Wavelet based ECG delineator: evaluation on standard databases. IEEE Trans Biomed Eng 51, 570–581 (2004)

Robert, K., Colleen, E.C.: Basis and treatment of cardiac arrhythmias, 1st edn. Springer-Verlag, New York (2006)

Mokeddem, F., et al.: Study of murmurs and their impact on the heart variability. Internat J Med Eng Inform (IJMEI) 12(3), 291–301 (2020)

Webster, J.G.: Medical Instrumentation: application and design, 3rd edn. John Wiley & Sons, London (2008)

Mortezaee, M., et al.: An improved SSA-based technique for EMG removal from ECG. IRBM 40, 62–68 (2019)

Kumar, M.: Identifying heart-brain interactions during internally and externally operative attention using conditional entropy. Biomed Signal Process Control 57, 101826 (2020). https://doi.org/10.1016/j.bspc.2019.101826

Acharya, U.R., et al.: A deep convolutional neural network model to classify heartbeats. Comput Biol Med 89, 389–396 (2017)

Gupta, V., et al.: R-peak detection based chaos analysis of ECG signal. Analog Integr Circ Sig Process 102, 479–490 (2020)

Xingyuan, W., Juan, M.: Wavelet-based hybrid ECG compression technique. Analog Integr Circ Sig Process 59(3), 301–308 (2009)

Gandhi, B., Raghava, N.S.: Fabrication techniques for carbon nanotubes based ECG electrodes: a review. IETE J Res (2020). https://doi.org/10.1080/03772063.2020.1768909

Daamouche, A., et al.: A wavelet optimization approach for ECG signal classification. Biomed Signal Process Control 7, 342–349 (2012)

Rahman, A.: A statistical designing approach to MATLAB based functions for the ECG signal preprocessing. Iran J Computer Sci (2019). https://doi.org/10.1007/s42044-019-00035-0

Chakraborty, M.: Quantitative assessment of arrhythmia using non-linear approach: a non-invasive prognostic tool. J Inst Eng India Ser B (2017). https://doi.org/10.1007/s40031-017-0307-3

Gupta, V., Mittal, M., Mittal, V.: Chaos theory: an emerging tool for arrhythmia detection. Sens Imaging 21(10), 1–22 (2020). https://doi.org/10.1007/s11220-020-0272-9

Christov, I.I.: Real time electrocardiogram QRS detection using combined adaptive threshold. Biomed Eng Online 3, 28 (2004). https://doi.org/10.1186/1475-925X-3-28

Hamilton, P.S., Tompkins, W.J.: Quantitative investigation of QRS detection rules using MIT/BIH Arrhythmia database. IEEE Trans BME 33, 1157–1165 (1986)

Rao, K.D.: DWT based detection of r-peaks and data compression of ECG Signals. IETE J Res 43(5), 345–349 (1997)

Sahoo, S., et al.: Multiresolution wavelet transform based feature extraction and ECG classification to detect cardiac abnormalities. Measurement 108, 55–66 (2017)

Mittal, M.: A computationally efficient piecewise constant solution for system transfer function inversion using orthogonal functions. J Adv Res Dynam Control Syst 9, 2398–2404 (2018)

Mittal, V., Mittal, M.: Haar wavelet based numerical approach for computing system response to arbitrary excitations. J Adv Res Dynamical Control Syst 2, 2433–2439 (2018)

Valli, T., Mittal, M.: Analysis of Fractional Systems using Haar Wavelet. Int J Innov Technol Exploring Eng (IJITEE) 8(9), 455–459 (2019)

Rao, H., Rekha, S.: A 0.8-V, 5.51-dB DR, 100 Hz low-pass filter with low-power PTAT for bio-medical applications. IETE J Res (2019). https://doi.org/10.1080/03772063.2019.1682074

Kora, P.: ECG based myocardial infarction detection using hybrid firefly algorithm. Comput Methods Programs Biomed (2017). https://doi.org/10.1016/j.cmpb.2017.09.015

He, R., et al.: A novel method for the detection of R-peaks in ECG based on K-Nearest Neighbors and Particle Swarm Optimization. EURASIP J Adv Sig Proc 82, 4 (2017). https://doi.org/10.1186/s13634-017-0519-3

Bilas, R., et al.: An improved online paradigm for screening of diabetic patients using RR-interval signals. J Mech Med Biol 16(1), 1640003 (2016)

Jain, S., et al.: QRS detection using adaptive filters: a comparative study. ISA Trans 66, 362–375 (2017)

Gupta, V., Mittal, M.: A novel method of cardiac arrhythmia detection in electrocardiogram signal. IJMEI (2019) (in press)

Jothi, S.H., Prabha, K.H.: Fetal electrocardiogram extraction using adaptive neuro-fuzzy inference systems and undecimated wavelet transform. IETE J Res 58(6), 469–475 (2012)

Acharya, U.R., et al.: Automated identification of normal and diabetes heart rate signals using nonlinear measures. Comp Biol Med 43, 1523–1529 (2013)

Das, M.K., Ari, S.: Analysis of ECG signal denoising method based on S-transform. IRBM (2013). https://doi.org/10.1016/j.irbm.2013.07.012

Lin, C.C., et al.: A novel wavelet-based algorithm for detection of QRS complex. Appl Sci 12, 4 (2019)

Addison, P.S.: Wavelet transforms and the ECG: a review. Physiol Meas 26, 155–199 (2005)

Ghaffari, A., et al.: A new mathematical based QRS detector using continuous wavelet transform. Comput Electr Eng 34, 81–91 (2008)

Free hospital cartoons. (Accessed on 17 Dec 2019).

Computer analysis free technology items. Available: www.all-free-download.com. (Accessed on 28 Oct 2019).

MP35 Biopac system. www.biopac.com.

Rajesh, K., Dhuli, R.: Classification of ECG heartbeats using nonlinear decomposition methods and support vector machine. J Comput Biol Med 87, 271–284 (2017)

Rangayyan, R.M.: Biomedical signal analysis: a case-study approach. Wiley-Interscience, New York (2001)

Gupta, V., Mittal, M.: R-Peak Detection in ECG Signal Using Yule-Walker and Principal Component Analysis. IETE J Res (2019). https://doi.org/10.1080/03772063.2019.1575292

Gupta, V., Mittal, M.: A novel method of cardiac arrhythmia detection in electrocardiogram signal. Internat J Med Eng Inform (IJMEI) 12, 18 (2020)

Luz, E.J.S., et al.: ECG-based heartbeat classification for arrhythmia detection: a survey. Comp Met Prog Biom 127, 144–164 (2016)

Physionet database/MIT-BIH Arrhythmia database/ (Accessed on Nov 22, 2017).

Giorgio, A., et al.: Improving ECG signal denoising using wavelet transform for the prediction of malignant arrhythmias. Internat J Med Eng Inform 12(2), 135–150 (2020)

Mostafi, M., et al.: Discrimination of signals phonocardiograms by using SNR report. Internat J Med Eng Inform (IJMEI) 11(4), 386–403 (2019)

Haque, Z.U., et al.: Analysis of ECG Signal Processing and Filtering Algorithms. Int J Adv Comp Sci Appl 10, 3 (2019). https://doi.org/10.14569/IJACSA.2019.0100370

Li, H., et al.: Novel ECG signal classification based on KICA nonlinear feature extraction. J Circuits Syst Signal Process (2004). https://doi.org/10.1007/s00034-015-0108-3

Gupta, V., et al.: Principal component and independent component calculation of ECG signal in different posture. AIP Conf Proc 1414, 102–108 (2011)

Nikan, S., et al.: Pattern recognition application in ECG arrhythmia classification. In: Proceedings of the 10th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC), pp. 48–56 (2017)

Priyadarshini, S.H., et al.: Processing of wrist pulse signals using linear and nonlinear techniques – a review. Internat J Eng Sci Computing 4, 7477–7482 (2016)

Bromba, M.U.A., Ziegler, H.: Application hints for Savitzky-Golay digital smoothing filters. Anal Chem 53, 1583–1586 (1981)

Jha, C., Kolekar, M.H.: Empirical mode decomposition and wavelet transform based ECG data compression scheme. IRBM (2020). https://doi.org/10.1016/j.irbm.2020.05.008

Guiñón, J.L.: Moving average and Savitzky-Golay smoothing filters using Mathcad. In: International Conference on Engineering Education (ICEE), Coimbra, Portugal, pp. 1–4 (2007)

http://www.robots.ox.ac.uk/~gari/teaching/cdt/A3/8_A3_BSP_Time_Freq.pdf.

Gupta, V., et al.: Auto-regressive time frequency analysis (ARTFA) of electrocardiogram (ECG) signal. Internat J Appl Eng Res 13(6), 133–138 (2019)

https://content.nexosis.com/blog/what-are-autoregressive-models.

Evaristo, R.M., et al.: Mathematical model with autoregressive process for electrocardiogram signals. J Commun Nonlinear Sci Numer Simulat 57, 415–421 (2018)

https://dsp.stackexchange.com/questions/9518/what-are-autoregressive-coefficients.

Emresoy, M.K., Jaroudi, A.E.: Signal Proc 64, 157–165 (1998)

Rohini, R., et al.: A new paradigm for plotting spectrogram. J Inform Syst Commun 3, 158–161 (2012)

Vlad, S., et al.: Efficient ECG signal parameters extraction using multiresolution analysis. In: International Conference on Advancements of Medicine and Health Care through Technology, Romania (2009)

Gupta, V., Mittal, M.: KNN and PCA classifier with autoregressive modelling during different ECG signal interpretation. Procedia Computer Sci Elsevier 125, 18–24 (2018)

Acharya, U.R., et al.: Automated characterization and classification of coronary artery disease and myocardial infarction by decomposition of ECG signals: a comparative study. J Inform Sci 377, 17–29 (2017)

Saini, I., et al.: QRS detection using K-Nearest Neighbor algorithm (KNN) and evaluation on standard ECG databases. J Adv Res 4(4), 331–344 (2013)

Confusion matrix. https://towardsdatascience.com/taking-the-confusion-out-of-confusion-matrices-c1ce054b3d3e.

Acharya, U.R., et al.: Automatic identification of cardiac health using modeling techniques: a comparative study. J Inform Sci 178, 4571–4582 (2008)

Bogunovic, N., Jovic, A.: Processing and analysis of biomedical nonlinear signals by data mining methods. In: IWSSIP 2010 - 17th International Conference on Systems, Signals and Image Processing, pp. 276–279 (2010)

Elhaj, F.A., et al.: Arrhythmia recognition and classification using combined linear and nonlinear features of ECG signals. J Computer Methods Programs Biomed 127, 52–63 (2016)

Marinho, L.B., et al.: A novel electrocardiogram feature extraction approach for cardiac arrhythmia classification. Fut Gen Comp Syst 97, 564–577 (2019)

Mukherjee, S., et al.: Malignant melanoma detection using multi layer perceptron with visually imperceptible features and PCA components from Med-Node dataset. Internat J Med Eng Inform (IJMEI) 12(2), 151–168 (2020)

Mehta, S.S., Lingayat, N.S.: Development of SVM based ECG pattern recognition technique. IETE J Res 54(1), 5–11 (2008)

Mehta, S.S., Lingayat, N.S.: SVM-based algorithm for recognition of QRS complexes in electrocardiogram. IRBM 29, 310–317 (2008)

Nayak, C., et al.: An efficient QRS complex detection using optimally designed digital differentiator. Circuits Syst Signal Proc (2019). https://doi.org/10.1007/s00034-018-0880-y

Dasgupta, H.: Human age recognition by electrocardiogram signal based on artificial neural network. Sens Imaging 17(4), 1–15 (2016)

Jangra, M., et al.: ECG arrhythmia classification using modified visual geometry group network (mVGGNet). J Intel Fuzzy Syst 38, 3151–3165 (2020)

Gupta, V., Mittal, M.: R-peak based arrhythmia detection using Hilbert transform and principal component analysis. In: 2018 3rd International Innovative Applications of Computational Intelligence on Power, Energy and Controls with their Impact on Humanity (CIPECH), pp. 116–119. https://doi.org/10.1109/cipech.

Halder, B.: Classification of complete myocardial infarction using rule-based rough set method and rough set explorer system. IETE J Res (2019). https://doi.org/10.1080/03772063.2019.1588175

Sheetal, A., et al.: QRS detection of ECG signal using hybrid derivative and MaMeMi filter by effectively eliminating the baseline wander. Analog Integr Circ Sig Process 98(1), 1–9 (2019)

Narina, A., et al.: Investigating the performance improvement of HRV Indices in CHF using feature selection methods based on backward elimination and statistical significance. J Computers Biol Med 45, 72–79 (2014)

Rai, H.M., et al.: R-peak detection using Daubechies wavelet and ECG signal classification using radial basis function neural network. J Inst Eng India Ser B 95(1), 63–71 (2014)

Phy, J.: Algorithm for detection the QRS complexes based on support vector machine. J Phy IOP Conf Series 929, 1–5 (2017)

Kaya, Y., et al.: Effective ECG beat classification using higher order statistic features and genetic feature selection. J Biomed Res 28, 7594–7603 (2017)

Kaya, Y., Pehlivan, H.: Classification of premature ventricular contraction in ECG. Int J Adv Com Sci Appl 6, 34–40 (2015)

Pan, J., Tompkins, W.J.: A real-time QRS detection algorithm. IEEE Trans Biomed Eng 32, 230–236 (1985)

Liu, X., et al.: A novel R-peak detection method combining energy and wavelet transform in electrocardiogram signal. J Biom Eng 26, 1–9 (2014)

Phukpattaranont, P.: QRS detection algorithm based on the quadratic filter. Exp Sys with Appl 42(11), 4867–4877 (2015)

Sharma, T., Sharma, K.K.: QRS complex detection in ECG signals using locally adaptive weighted total variation denoising. Comp Biol Med 87, 187–199 (2017)

Dohare, A.K., et al.: An efficient new method for the detection of QRS in electrocardiogram. Comput Electr Eng 40(5), 1717–1730 (2014)

Manikandan, M.S., Soman, K.P.: A novel method for detecting R-peaks in the electrocardiogram (ECG) signal. Biom Sig Proc Cont 7(2), 118–128 (2012)

Nallathambi, G., Príncipe, J.C.: Integrate and fire pulse train automaton for QRS detection. IEEE Trans Biomed Eng 61(2), 317–326 (2014)

Pandit, D., et al.: A lightweight QRS detector for single lead ECG signals using a max-min difference algorithm. Comput Methods Prog Biomed 144, 61–75 (2017)

Yazdani, S., Vesin, J.M.: Extraction of QRS fiducial points from the ECG using adaptive mathematical morphology. Dig Sig Proc 56, 100–109 (2016)

Zidelmal, Z., et al.: QRS detection based on wavelet coefficients. Comp Meth Prog Biomed 107(3), 490–496 (2012)

Christov, I.I.: Real time electrocardiogram QRS detection using combined adaptive threshold. Biomed Eng Online 3, 28 (2004). http://www.biomedical-engineering-online.com/content/3/1/28

Bouaziz, F.: Multiresolution wavelet-based QRS complex detection algorithm suited to several abnormal morphologies. IET Signal Proc 8(7), 774–782 (2014)

Choi, S., et al.: Development of ECG beat segmentation method by combining lowpass filter and irregular R-R interval checkup strategy. Exp Syst Appl 37(7), 5208–5218 (2010)

About this article

Cite this article

Gupta, V., Mittal, M., Mittal, V. et al. ECG signal analysis using CWT, spectrogram and autoregressive technique. Iran J Comput Sci 4, 265–280 (2021). https://doi.org/10.1007/s42044-021-00080-8

DOI: https://doi.org/10.1007/s42044-021-00080-8