Abstract

Two phenomena that are central to simulation research on opinion dynamics are opinion divergence (the result that individuals interacting in a group do not always collapse to a single viewpoint) and group polarization (the result that average group opinions can become more extreme after discussions than they were to begin with). Standard approaches to modeling these dynamics have typically assumed that agents have an influence bound, such that individuals ignore opinions that differ from theirs by more than some threshold, and thus converge to distinct groups that remain uninfluenced by other distinct beliefs. Additionally, models have attempted to account for group polarization either by assuming the existence of recalcitrant extremists, who draw others to their view without being influenced by them, or by assuming negative reaction, that is, movement in opinion space away from those one disagrees with. Yet these assumptions are not well supported by existing social/cognitive theory and data, and insofar as there are data, they are often mixed. Moreover, an alternative cognitive assumption is able to produce both of these phenomena: the need for consistency within a set of related beliefs. Via simulation, we show that assumptions about knowledge or belief spaces and conceptual coherence naturally produce both convergence to distinct groups and group polarization, providing an alternative cognitively grounded mechanism for these phenomena.

Simulation of consensus, divergence, and polarization

In the research area sometimes known as opinion dynamics, a growing number of modeling paradigms have sought to understand how insights from models of physical systems can inform our understanding of the formation and transmission of opinion and belief within organizations, groups, and society. Much of this progress has centered on simple agents with simple knowledge, interacting according to simple rules that together produce the complex behavior observed in or hypothesized about the real world. For example, in statistical mechanics, the Ising model [1] was developed to examine phase change in ferromagnetic physical systems, but it has been adapted to study group opinion formation [2], providing interesting new insights into the social phenomenon. The Ising model is considered relevant because it is analogous to many social systems, in that a group with initially distinct opinions may converge to a consensus because of their close interaction, just as a disorganized lattice of charged particles may align their polarity and come to a stable state.

Yet the simplicity of these simulations can come at a cost. Although simplified assumptions about knowledge representation make simulation easier and convergence proofs possible, they sometimes force theorists to make ad hoc and unfounded assumptions about interaction rules in order to create tractable convergence distributions. With a simplistic knowledge representation akin to the Ising model, in which all agents are completely described by whether or not they hold a belief (i.e., they are either −1 or 1), there is little room for understanding influence and belief change at an individual level, other than change from complete agreement with a premise to complete disagreement. Strategies for augmenting these representations have become an important area of exploration within opinion dynamics research.

Although opinion dynamics has established itself as an independent field, research on opinion dynamics continues to be heavily influenced by physical models [3, 4]. It has been argued that translations of physical models to social systems can often require unprincipled assumptions governing both belief representation and interaction rules [5], and we argue this happens because of assumptions at both the sociological and psychological level. These are sometimes simply atheoretical conveniences that are used in order to produce end-state distributions that are intuitive and reasonable. But these assumptions sometimes replace psychological or social theory, producing an observed behavior but not providing a reasonable or testable account of the phenomena.

In this paper, we examine several common phenomena studied within opinion dynamics models, and the assumptions they rely on to produce these results, including (1) consensus formation, (2) opinion divergence (the formation of distinct stable cultures of a belief within an interacting group), and (3) group polarization. We then propose a cognitive and knowledge-based account that can explain these phenomena, and also identify testable hypotheses which can help discriminate alternative theories.

Consensus formation in interacting groups

Typical models of opinion dynamics assume that beliefs are fragile, and can be easily influenced through interactions and discussions with others. Although these models are typically intended to capture consensus formation as a function only of internal rules and dynamics (without considering exogenous influences from outside the system), they are interesting because they demonstrate phenomena that are well documented in the real world. Such models can account fairly easily for fads and fashions [6], information cascades [7], and various examples of herd mentality and groupthink (see [8] for some remarkable examples). Furthermore, such models can be deployed in automated distributed intelligent systems such as swarm robotics where consensus formation is necessary, but there is no central leader to dictate a group decision [9]. A basic simulation showing consensus formation or “collective cognitive collapse” [10, 11] is shown in Fig. 1.

In this simulation, the representation dimension is expanded from the simple binary state discussed earlier to a continuous value between 0 and 1. This representation has been used extensively in the opinion dynamics literature (see [12] for a review of the history of these assumptions). One hundred agents were given random initial states drawn from a uniform distribution between 0 and 1. On each step, two agents were chosen, and their current states were compared. New beliefs were chosen for each agent so that it moved slightly toward the other chosen agent’s belief. The results match what should be an intuitive result: the population quickly converges to a single state, and has no ability to escape that belief after convergence.
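The pairwise update described above can be sketched in a few lines of Python. This is a minimal illustration and not the code used to produce Fig. 1: the step size `rate`, the number of steps, and the random seed are assumptions chosen for the example, and the function name `simulate_consensus` is hypothetical.

```python
import random


def simulate_consensus(n_agents=100, n_steps=20000, rate=0.1, seed=0):
    """Pairwise averaging on a continuous [0, 1] opinion scale.

    `rate`, `n_steps`, and `seed` are illustrative assumptions,
    not values taken from the text.
    """
    rng = random.Random(seed)
    # Random initial beliefs, uniform between 0 and 1.
    beliefs = [rng.random() for _ in range(n_agents)]
    for _ in range(n_steps):
        # Choose two distinct agents and compare their current states.
        i, j = rng.sample(range(n_agents), 2)
        diff = beliefs[j] - beliefs[i]
        # Each agent moves slightly toward the other's belief.
        beliefs[i] += rate * diff
        beliefs[j] -= rate * diff
    return beliefs


final = simulate_consensus()
print(f"final spread: {max(final) - min(final):.6f}")
print(f"final mean:   {sum(final) / len(final):.3f}")
```

Because each interaction in this sketch moves the two agents by equal and opposite amounts, the population mean is preserved (up to rounding) while the spread shrinks, so the group settles near the center of the opinion space.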

Although such a model has applications, it fails to capture many observations about beliefs and attitudes in human groups. Most specifically, although some customs, norms, and beliefs appear to converge within a community, others remain stably at odds within a group, which we will refer to as opinion divergence. Furthermore, the stable groups are often believed to form at the extremes of the opinion spectrum, rather than more moderate points, which has been referred to as group polarization. The simplest agent-based systems like the one in Fig. 1 cannot account for either effect, because they tend to converge to a single group at the center of the opinion space.

To account for these effects, additional assumptions are made. First, models typically suppose that agents have bounded influence, which assumes that agents are not affected by or do not interact with other agents whose beliefs are too far from their own. Second, models sometimes assume one of two explanations for group polarization: either the presence of recalcitrant extremists (individuals who both hold extreme views and are also less willing than a typical agent to change those views), or some sort of negative reaction to those who hold an opposing view. The recalcitrant extremist and the negative reaction hypotheses are distinct (and in some ways inconsistent), but either one appears capable of producing polarization. However, the assumptions leading to the behavior may not be justifiable from a psychological perspective. Although it is difficult to rule out that these processes might come into play in different situations and for different individuals, we believe that these behaviors may also stem from other assumptions about knowledge representation and transmission, and will show that by augmenting knowledge representation in ways consistent with cognitive theory the same results can be produced without these assumptions.

Next, we will discuss each behavioral phenomenon in greater detail, with a focus on the assumptions used to produce them in simulation models.

Opinion divergence and the bounded influence conjecture

One of the most common assumptions made in opinion dynamics simulations is what we will refer to as the “Bounded Influence Conjecture”: that agents are not affected by, or do not interact with, other agents whose opinions differ from theirs by a large amount. Within social simulation, bounded influence can be traced back at least to Axelrod’s model [13] that produced local convergence and global polarization, but it has been explored, discussed, and modified extensively since that time (e.g., [14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30]). It is often referred to as “bounded confidence” [31, 32], reflecting the notion that experts interacting may have a range of confidence in either their own opinion or their opinion of others; however, this label does not map cleanly onto many psychological notions of confidence (cf. [33,34,35]), which typically view confidence as independent of response and not directly linked to the scale or range of the opinion value, so we will use the term influence, which has fewer implications for psychological theory.

The bounded influence conjecture is typically operationalized within agent-based simulations, where agents have belief structures that are represented on a unidimensional bipolar continuous scale as in the simulation presented earlier, often anchored by 0.0 and 1.0 or −1.0 and 1.0. This standard model, introduced by [17], remains the starting point for much recent research (see [12]). As described before, the basic models allow interaction between any two agents, which causes each agent to shift its beliefs toward the other agent (at least occasionally). Bounded influence institutes an additional bound (e.g., \(\delta\)), such that the shift is only carried out if the two belief values differ by less than \(\delta\). Under the right conditions, bounded influence can create opinion divergence: stable groups of agreement that are at odds with one another within a single larger belief space. This makes sense because it creates gulfs (usually slightly larger than \(\delta\)) between local clusters, permitting individuals to converge locally to stable opinions.
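To make the role of the bound concrete, the sketch below adds the \(\delta\) check to a simple pairwise update on a 0 to 1 scale. It is an illustration under stated assumptions, not the implementation of [17]: the parameter values (`delta`, `rate`, number of steps, seed) are chosen only for the example, and `simulate_bounded_influence` and `count_clusters` are hypothetical helper names.

```python
import random


def simulate_bounded_influence(n_agents=100, n_steps=50000, rate=0.1,
                               delta=0.1, seed=0):
    """Pairwise averaging that is skipped when beliefs differ by delta or more.

    All default values are illustrative assumptions.
    """
    rng = random.Random(seed)
    beliefs = [rng.random() for _ in range(n_agents)]
    for _ in range(n_steps):
        i, j = rng.sample(range(n_agents), 2)
        diff = beliefs[j] - beliefs[i]
        # The shift is carried out only if the two values differ by less than delta.
        if abs(diff) < delta:
            beliefs[i] += rate * diff
            beliefs[j] -= rate * diff
    return beliefs


def count_clusters(beliefs, gap):
    """Count groups of beliefs separated by more than `gap` (hypothetical helper)."""
    ordered = sorted(beliefs)
    return 1 + sum(1 for a, b in zip(ordered, ordered[1:]) if b - a > gap)


for delta in (1.0, 0.1):
    final = simulate_bounded_influence(delta=delta)
    print(f"delta={delta}: {count_clusters(final, gap=0.05)} cluster(s)")
```

With a bound at least as wide as the whole scale, every pair interacts and the sketch reduces to the unbounded model; narrowing the bound is what allows separate clusters to stabilize.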

Although it has been described in a number of different ways, one simple way of defining bounded influence is that we ignore beliefs that are extremely different from our own. It is perhaps a formal implementation of the concept of “groupthink”, and resonates with aphorisms like “birds of a feather flock together”, and so has much intuitive appeal. But it is also problematic, for both theoretical and empirical reasons. For example, there is little direct empirical evidence supporting the conjecture, and indeed there are reasons to believe that the opposite can hold. Furthermore, on its own, it fails to produce group polarization, which must be accounted for by additional assumptions. These deficiencies may call for a rethinking of the bounded influence conjecture as a source of opinion divergence.

There is certainly anecdotal evidence in support of the bounded influence conjecture. Political debates often appear to be a discussion between two groups with polar opposite beliefs, who simply ignore each other’s arguments. Similarly, a common finding in the social psychology literature is one of in-group bias: preferential treatment given to those who belong to the same group [36], with each group often defined by heritage, geography, affiliation, and the like.

Yet many of these behaviors, even if accepted as true, could have other explanations. The bounded influence conjecture should not be confused with homophily [37], which is the tendency to interact with those who are generally similar by external measures (as opposed to internal belief state). This is akin to the distinction made by Lazarsfeld and Merton [38] between status and value homophily. Furthermore, theories of homophily describe whether two people or agents will associate, not whether they will take on one another’s beliefs. So, in-group effects may not stem from shared beliefs per se, but instead from group membership itself. In fact, in-group effects can be found for even arbitrary group memberships, where prior beliefs are uncorrelated with group identity (e.g., [39, 40]). Thus, homophily may still mediate opinion dynamics, but perhaps opinion itself does not define homophily.

Moreover, just as anecdotes appear to support bounded influence, others appear to violate it. For example, the tradition of western political discourse and debate means that those with extreme views often do know and listen to the opposing views quite well, better than those with moderate views. The mechanisms of influence may be much more complicated than simple exposure, and understanding those processes may be critical for making predictive models of opinion dynamics.

Notions of bounded influence are often attributed to Festinger’s social comparison theory [41], whose Hypothesis III stated “The tendency to compare oneself with some other specific person decreases as the difference between his opinion or ability and one’s own increases”. It should be noted that for Festinger, the primary purpose of comparison was self-evaluation. Festinger gave as an example that a novice chess player is unlikely to compare themselves to a master of the game. To judge one’s own competence at chess, one is more likely to compare oneself to another novice than to a champion, which may give a better self-assessment: you can know if you are progressing well by evaluating whether you are good or bad for a novice chess player. However, secondary hypotheses and corollaries offered by Festinger suggest that in the presence of differences, individuals will try to change their own opinions, the opinions of others in the group, or the membership of the group to achieve agreement, which is relevant to bounded influence.

Yet the evidence offered in support of social comparison theory often does not support bounded influence. For example, Festinger cited a now-famous study by Stanley Schachter [42], who found that a confederate dissenter in a group discussion about the punishment of a child would initially be the focal target of discussion in the group, but this would taper off over time—putative evidence for bounded influence. This seems to indicate that the dissenter is eventually ignored because of his extreme views, but for both the original result and a recent replication [43], the dissenter remained the focus of the most discussion throughout the session, even at the end of the 75-min discussion. Group members continued talking to the dissenter throughout (and presumably listening to him), and although the amount decreased, he still dominated discussion. Other examples offered by Festinger as support for his Hypothesis III are similarly inconsistent with bounded influence: they tend to involve cases where most of the communication was directed toward the person with the divergent opinion, in an attempt to influence them. Thus, we suggest that there are processes and mechanisms that allow individuals to communicate extensively with extreme dissenters and not be influenced by them, but these may not have been settled by Festinger’s social comparison theory.

In fact, a number of classic studies in decision making suggest that there are cases when the opposite of bounded influence might be true. For example, heuristics-and-biases research has identified the “anchor and adjustment” phenomena [44], in which extreme initial anchors have the largest impacts on participants’ estimates. If bounded influence were really at play, one might expect extreme anchors to have less of an influence than intermediate anchors. To be sure, these may be highly context dependent, as moderate anchors have sometimes been found to produce larger effects (see [45]). However, the larger impact of extreme anchors has even been demonstrated in adversarial opinion situations, such as the courtroom (e.g., [46]), a result that seems highly relevant to opinion dynamics simulations.

Bounded influence models also require that changes in attitude will be gradual, drifting smoothly along the continuum from one extreme to another as one interacts with individuals of different beliefs. But this is in opposition to empirical findings in the scientific literature [47], and phenomena such as religious and political conversion or deconversion which involve wholesale adoption of a large set of beliefs distinct from those previously held. There is in fact a long-standing debate in the sociology of religion about whether conversion is a gradual process (called the ‘drift model’) or a quantum process (called the ‘brainwashing’ model), and some have argued that both are important paths of conversion [48]. Bounded influence ignores one of these critical routes.

Also relevant is recent empirical research that has begun to test the assumptions of opinion dynamics models, looking at the conditions under which humans are willing to change their opinion or an estimate, as a function of both the size of the difference and their confidence in their knowledge [34, 49, 50]. Contrary to the assumptions of bounded influence, Moussaid and colleagues [34] found that when opinion differences were small, this typically resulted in no change; as they grew larger, a change (either a compromise estimate or adoption of the other’s value) became relatively more likely, although the chance of adopting another’s estimate wholesale (in contrast to a compromise value) went down as the opinion difference got very large. This suggests that it is smaller differences in estimates that are ignored: larger differences will lead to compromise or (as long as they are not too extreme) adoption of the other’s estimate wholesale. To be fair, this last finding is consistent with the bounded influence conjecture, but may also be consistent with other accounts. Similarly, Kerckhove [49] measured how easily participants were influenced by group judgments, and found that those who differed the most from the group judgment were most influenced, and Takacs [50] similarly showed that larger differences of opinion lead to larger overall changes.

In our view, the most central challenge for bounded influence regards not just the empirical support for the notion, but the logic of the cognitive process itself: for an extreme opinion’s influence to be bounded, it first must be attended to, processed, and then subsequently ignored; otherwise, how should one know that it should be ignored? The alternatives might be (1) we deliberately avoid listening to people, media sources, social connections, or other sources of information that are correlated with an opposing opinion; or (2) the world tends to provide only those ideas about which we agree in the first place; or (3) we listen to and then discount the information that is too extreme. But we view each of these as problematic. The first uses external cues and identity, rather than the message itself, to filter out-of-bounds information, and so is essentially a homophily explanation. The second has been discussed in popular culture as the Filter Bubble hypothesis [51], and to the extent it is true prevents opinions from being influenced at all. But it places the influence bound at a level outside the control of the two individuals, which we do not think is the intent of bounded influence models. Alternatively, it might be thought to place the role in the geography or social network, which is a reasonable account but again not the assumption of the bounded influence model. The third explanation relies on evidence discounting, which has been studied in a number of contexts (see [52, 53]), and is distinct from bounded influence. Such research suggests that misinformation is not easily discounted, and can be difficult to ignore, even when discredited [54].

Consequently, we argue that although bounded influence may be a useful and adequate computational shortcut for producing behavior akin to these processes, it might also provide us with improper insights into social influence or the cognitive aspects of opinion change. There are certainly other assumptions that have been made that can produce opinion divergence in simulation models. For example, some researchers have considered how geography [13] and social connection [14, 16, 21, 55] constrain who communicates with whom, and so can produce opinion divergence. These accounts also have face validity: small groups can collapse to a single attitude state, and if they are sufficiently isolated from others whose opinions differ, they will not be influenced by them. However, many of these models also assume bounded influence, which raises the question of how easily network connectivity alone can produce opinion divergence. Similarly, the DIAL model [56] provides means for isolating and segregating communities via a number of comparison and communication processes.

Later, we will show that bounded influence per se is not necessary to produce opinion divergence, and suggest that divergence can be a natural consequence of certain kinds of multi-attribute opinion spaces. But first, we will examine group polarization, a related phenomenon that bounded influence cannot account for but that is viewed as a second important behavior of opinion dynamics models.

Group polarization, the recalcitrant extremist, and the negative reaction hypothesis

Group polarization is a phenomenon that has been discussed and studied in both the social psychological literature and the opinion dynamics literature [57,58,59]. Group polarization occurs when a group of individuals arrives at a consensus view that is more extreme than their average initial beliefs. Historically, social psychological research has narrowed down the sources of group polarization to two processes: social comparison and persuasive argumentation [60]. Social comparison is thought to drive like-minded groups to be more extreme by influencing individuals to compare their beliefs to others and align or contrast with them; the persuasive argumentation account holds that the proportion and content of the available ideas influence polarization.

Most recent opinion dynamics models can produce distinct subcultures of belief that differ from one another (often because of the bounded influence conjecture). However, without additional assumptions, these typically converge to a moderate belief close to the average of the initial beliefs, rather than ones more extreme than their starting positions [24, 25, 31, 61, 62]. Consequently, additional assumptions have been needed to produce group polarization.

A typical assumption made to produce such phenomena is one we will call the “Recalcitrant Extremist” hypothesis, which is made up of two related assumptions: a (usually small) number of unwavering individuals exist, and these individuals also tend to hold extreme opinions. This has also been discussed in terms of committed minorities [63], individual inflexibility [64], close-minded agents [65], narrow-minded agents [61, 62], extremists [14, 16, 18, 66, 67], and stubborn agents or actors [68,69,70].

Both extremism and recalcitrance are typically needed to prevent consensus groups from forming at moderate levels. An extremist who is willing to change will draw the average opinion toward him or her, but will also be drawn toward the other group members, and will not produce polarization. On the other hand, stubborn agents that are not extremists may draw more pliant agents toward them, and this will help to produce opinion divergence, but not polarization.
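The interplay of extremism and recalcitrance can be illustrated with a small sketch. It is a toy example under stated assumptions and is not drawn from any of the cited models: a hypothetical population of 95 moderates (beliefs between 0.4 and 0.6) and 5 agents at the extreme value 1.0 interacts by pairwise averaging, with no influence bound, and the agents listed in `stubborn` never update. The function name, population sizes, `rate`, and seeds are all illustrative.

```python
import random


def simulate_with_stubborn(initial, stubborn, n_steps=60000, rate=0.1, seed=0):
    """Pairwise averaging in which agents whose index is in `stubborn` never move.

    `n_steps`, `rate`, and `seed` are illustrative assumptions.
    """
    rng = random.Random(seed)
    beliefs = list(initial)
    for _ in range(n_steps):
        i, j = rng.sample(range(len(beliefs)), 2)
        diff = beliefs[j] - beliefs[i]
        if i not in stubborn:
            beliefs[i] += rate * diff
        if j not in stubborn:
            beliefs[j] -= rate * diff
    return beliefs


def mean(values):
    return sum(values) / len(values)


# Hypothetical population: 95 moderates followed by 5 extremists at 1.0.
init_rng = random.Random(1)
initial = [init_rng.uniform(0.4, 0.6) for _ in range(95)] + [1.0] * 5

scenarios = {
    "pliant extremists": set(),
    "stubborn moderates": set(range(0, 5)),
    "stubborn extremists": set(range(95, 100)),
}
print(f"initial mean: {mean(initial):.3f}")
for label, stubborn in scenarios.items():
    final = simulate_with_stubborn(initial, stubborn)
    print(f"{label}: final mean {mean(final):.3f}")
```

In this sketch, only the combination of extreme position and refusal to update pulls the group average well beyond its starting value; pliant extremists are absorbed into the group, and stubborn moderates hold the group at moderate values.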

Recent empirical evidence [33, 34, 71] suggests that contrary to this hypothesis, most people are in fact “stubborn”. For example, [33] found that 53%-61% of participants did not change their answer in an estimation task in response to new information; [49] incorporated an ‘influencability’ parameter in their analysis model, and concluded that most participants overweighted their own opinion compared to new information, having a reluctance to change. Although it seems reasonable on its face that those with extreme opinions are not easily swayed, this represents an additional assumption that to our knowledge has never been tested or demonstrated empirically. In terms of psychological validity, this account is most similar to a persuasive argumentation process, although research on persuasive argumentation has often focused on linguistic factors and argument structure [72]. However, most empirical social psychology research on group polarization ignores the possibility of it being driven by recalcitrant extremists, probably because polarization is apparently easy to produce with groups of relatively moderate homogeneous opinion-holders.

Personality theory includes concepts akin to recalcitrance, and so it may also speak to whether extremists are less willing to change their attitudes than are others. For example, Cattell’s 16-factor model [73] includes two dimensions (“Openness to change” and “Deference/Dominance”), and the classic Big Five model [74] includes “Openness to experience”; these appear to be similar to recalcitrance. But they appear not to be correlated with extremism per se (at least in the political domain). Rather, they map asymmetrically onto a general liberal-conservative spectrum [75,76,77]: conservatives are unwilling to consider alternative views, but liberals are more willing to do so. Similarly, [71] found that agreeableness (perhaps the opposite of recalcitrance) was not significantly correlated with behaviors related to opinion shifts across time during a group discussion. Furthermore, the Schachter study discussed earlier [42] demonstrated that not only do participants spend more time talking to extremists, they tend not to be swayed by them.

An alternative account for group polarization makes the opposite assumption: rather than being drawn toward an opinion, people have a negative reaction to opinions that differ from their own, which pushes them away from the extreme. The concept may have its origins in the psychodynamic concept of reaction formation, and it has some similarity to assimilation-contrast effects [78, 79]. Others have found similar effects of attitude change in response to in-group/out-group manipulations; for example, Wood et al. [80] showed that in some circumstances, individuals reverse their attitudes away from a minority-group opinion. However, this was not a negative reaction to an opinion; rather, it appears related to social identity and minority/majority group membership.

The social psychological literature on group polarization has apparently not given the negative reaction hypothesis much weight in general, because polarization appears even when there is no negative foil to react against [60]. Rather, some have explained it as a need to differentiate and appear more socially desirable than the average of the group [81], and studies that include exposure to opposing ideas typically show depolarization—moving toward a more moderate viewpoint [82], which is similar to anchoring-and-adjustment results by [44]. Finally, recent empirical studies [33, 34] have shown that opinion was drawn toward the more extreme alternate opinion, not away from it. To be fair, the estimation methods used by these recent studies were unlikely to lead to a negative reaction effect, but this illustrates that there are many situations for which negative reaction may not apply. However, perhaps the most relevant research is a recent study by [50], whose title asserts “Discrepancy and disliking do not induce negative opinion shifts”. This found that positive influence increased as the discrepancy increased.

So, the evidence for negative reaction is limited. Some of the results taken as evidence for a negative reaction involve opinion shifts based on group identity, not opinion per se, and there are also examples that look like accommodation of and positive influence by opposite ideas. Thus, although negative reaction may occur in some settings, it may be questionable as a universal assumption about opinion dynamics. Regardless, a number of past models have adopted negative reaction mechanisms of one type or another, partly because they are both simple and useful. This assumption has been discussed with respect to ‘commitment’ [59], ‘heterophobia’ [83], ‘rejection’ [83,84,85], ‘latitude of rejectance’ [86,87,88], and ‘repulsion’ [67, 89]. It should be pointed out that negative reaction does not require extremists. For example, [30] has demonstrated how agents can move to more extreme values by interacting with more moderate agents, and similarly, [59] assumed that interacting agents that differed on multiple issues would shift farther apart. Finally, [37] considered a related concept called distancing, which asserted that people are sometimes drawn toward opinions that are held by a smaller proportion of a group.
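As a concrete illustration of what a negative reaction rule can look like, the following sketch shows one simple variant for a single pair of agents on a 0 to 1 scale. It is a hypothetical rule written for this example, not the mechanism of any specific cited model: the `threshold` that separates attraction from repulsion, the step size `rate`, and the clipping to the scale limits are all assumptions.

```python
def negative_reaction_update(a, b, rate=0.1, threshold=0.5):
    """Return updated opinions for two agents on a [0, 1] scale.

    Hypothetical rule: opinions closer than `threshold` attract each other,
    while opinions farther apart repel. `rate` and `threshold` are assumptions.
    """
    diff = b - a
    if abs(diff) <= threshold:
        # Attraction: each agent moves slightly toward the other.
        a_new, b_new = a + rate * diff, b - rate * diff
    else:
        # Negative reaction: each agent moves away from the other.
        a_new, b_new = a - rate * diff, b + rate * diff

    def clip(x):
        # Keep opinions on the assumed [0, 1] scale.
        return min(1.0, max(0.0, x))

    return clip(a_new), clip(b_new)


print(negative_reaction_update(0.40, 0.60))  # similar opinions move closer
print(negative_reaction_update(0.20, 0.90))  # dissimilar opinions move apart
```

Note that in this sketch no agent needs to start at an extreme or be stubborn: repeated repulsion between dissimilar agents is enough to push both toward the ends of the scale.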

As part of the simulation model we will present next, we will show that neither of these explanations is necessary to produce group polarization, and that the same assumptions that produce opinion divergence can also produce polarization: both can arise from a multivariate opinion space when self-consistency of the opinion space is important to individuals.

Interim summary

We have examined two important phenomena that have been extensively simulated in opinion dynamics research: opinion divergence despite local consensus formation, and group polarization. We argued that the mechanisms typically adopted to account for these phenomena have questionable grounding in cognitive and social empirical research, stemming in part, at least historically, from a desire for simple systems that are mathematically tractable. This has traditionally meant adopting a belief or knowledge representation that is highly simplified and likely at odds with human knowledge and attitudes. For example, the simplest models using a single binary value (e.g., [2]) closely match physical models of electric charge, but may be too impoverished to provide a compelling account of human attitudes or opinions.

Certainly, many other alternatives rooted in social psychological theory have also been proposed, but perhaps not explored fully. For example, researchers have considered emotion [90, 91]; social network connections [92, 93]; and argumentation and dialog [56]. In addition, a number of richer representations of opinion have been examined. For example, a number of models have emerged that use a 2-dimensional or multi-dimensional continuous opinion vector [56, 94,95,96,97]. Although such spatial or geometric knowledge representations are not unknown in the memory and psychological literature (notably, the LSA model of [98]), [99] leveled a famous criticism against such geometric models of knowledge representation, favoring a feature-based approach. This critique was based on the fact that judgments of similarity consistently violate each of the axioms upon which the geometric models are based (symmetry, minimality, and the triangle inequality).

Feature-based approaches have also appeared in the opinion dynamics literature. For example, [100] used a binary opinion vector, [101] used a discrete multi-value feature approach, and others [102, 103] used simplicial complexes to represent more complex sets of agreement among features. Despite the fact that more complex representations have been explored, few of these models appear to have selected these representations on psychological grounds. Next, we will show how it is possible to develop a coherent model that produces both opinion divergence and group polarization using the same mechanism, based on a psychological theory of knowledge representation.

A belief-space account of opinion divergence and group polarization

Research on knowledge representation suggests that there may be a single process that can drive both opinion divergence and group polarization: the logical or traditional association between beliefs. This hypothesis has its origins in research by [104], which argued that theories are critical in defining and organizing categories and concepts. In the present context, this means that exogenous factors such as explanatory reasoning, logic, and causal reasoning will constrain whether features are associated with one another.

As an example, in the United States, political parties subscribe to a series of beliefs and attitudes that are interrelated, and sometimes attributed to a single underlying principle. The Republican party officially opposes abortion, opposes nationalized health care, supports gun rights, opposes unionized labor, supports capital punishment, and so on, while the Democratic party has opposite stances on each of these issues. Members of each party will often differ from their party consensus on a small number of issues [105], but as a whole the platform stands together and members support it. In general, these are held together by a small number of underlying principles (small government, justice, etc.), and although they may not always be logically inconsistent, certain combinations of beliefs may be viewed by most individuals as incoherent or inconsistent with a higher-order theory. As such, a set of views is associated with one another, either logically or by tradition, to such an extent that an individual may not be able to repudiate one belief without disavowing others.

Issues may be related for logical reasons (if one supports lower taxes for everyone, one also supports lower taxes for middle-class citizens); but others may be supported more by an underlying principle (“pro-life” attitudes may appear logically at odds with support for the death penalty, but are reconciled by a consistent and coherent view on punishment [106]). Others may be associated for more traditional reasons, such as support for gun rights and support for an amendment banning the burning of the flag; or support for free speech and support for higher marginal tax rates. We suspect that tradition and logic govern a perceived coherence among attitudes, and that individuals feel pressure to maintain consistency among their related beliefs, which are reinforced by other members of a group tending to hold the same constellation of beliefs.

Such a need for consistency may underlie the observed phenomena typically modeled with bounded influence and recalcitrant extremists or negative reactions. According to this view, opposing opinions are not edited or ignored automatically based on one’s beliefs, and agents can be influenced by ideas that come from any source. However, one must consider an entire belief system rather than just a single component. If the resulting belief set is inconsistent, movement to that state may be prohibited as taboo, forbidden, or illogical. This prevents consensus, and produces opinion divergence. It also provides a mechanism for polarization. A set of individuals who share most of their ideals but defect from their belief group on a small number of issues will almost necessarily find a consensus that is polarized from their average. For example, if a group of like-minded individuals attempted to come to agreement on ten related issues, but each person only supported nine (randomly chosen), the consensus opinion would likely converge to the extreme of 10/10, whereas the individual average is 9/10.
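The ten-issue example can be made concrete with a small sketch. It assumes, purely for illustration, that consensus is formed by a per-issue majority (an aggregation rule that is not part of the model described here) and that each of ten hypothetical group members rejects a different single issue.

```python
# Illustrative only: per-issue majority stands in for "consensus";
# the group size and the pattern of dissent are assumptions.
N_ISSUES = 10

# Member i supports every issue except issue i (9 of 10 supported).
group = [[0 if issue == member else 1 for issue in range(N_ISSUES)]
         for member in range(N_ISSUES)]

# Average number of issues supported by an individual member.
mean_individual_support = sum(sum(beliefs) for beliefs in group) / len(group)

# An issue enters the consensus if more than half of the group supports it.
consensus = [1 if sum(member[issue] for member in group) > len(group) / 2 else 0
             for issue in range(N_ISSUES)]

print(mean_individual_support)  # average individual support, out of 10
print(sum(consensus))           # number of issues in the majority consensus
```

Because each issue is rejected by only one member, every issue keeps a majority, so the aggregated position is more extreme than the position of any single member.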

To explore the role of knowledge coherence in social simulation, we conducted a simulation study from a model first introduced by [107]. In this model, we assumed that opinion-based beliefs are a multidimensional set of binary belief features (e.g., agree versus disagree) on a number of related issues. The motivation for this representation is past research on how humans acquire knowledge from distinct memory episodes [108], and use this knowledge to make decisions [53] and solve problems [109]. Thus, although the representation remains somewhat simplified from these cognitive models, it retains important critical aspects of how knowledge is represented in humans.

Without losing the spirit of the model’s implementation, one could assume that beliefs might take on a number of discrete positions on a particular issue (e.g., if there were three: support, ambivalence, and opposition), or that individuals have a continuous strength in each belief that will result in either support or opposition at any given time, but the binary feature representation simplifies simulation. Regardless, our approach is at odds with the typical assumption that opinion strength is an infinitely discriminable scale between two opposite extremes (see [35] for an empirical demonstration suggesting humans are not capable of even consistently discriminating between discrete levels of confidence about a perceptual state). However, even this distinction is somewhat soft, because one can easily create a scale of arbitrary precision by combining sets of binary opinions, just as one can represent any irrational or rational number with arbitrary precision using a set of binary digits.

The model assumes that the values of these opinions are not arbitrary, but rather are valenced with some higher-order set of traditions or principles, to represent agreement with one consistent set of beliefs, and disagreement with a second.

In the simulations, a knowledge or belief space is first constructed which defines a set of ‘legal’ or ‘consistent’ combinations of beliefs. Doignon & Falmagne [110] developed the mathematical groundwork for this kind of representation, and have applied it extensively to knowledge-based applications, such as in education. In these contexts, the resulting space is referred to as a knowledge space and each node a knowledge state. For the current application, the nodes represent belief states, and the lattice represents a belief space, more akin to how [111] used the same formalism. The only distinction between these terms is whether the elements have some sort of objective or normative truth (e.g., the rules of long division), in which case they represent knowledge one can attain. If, rather, the elements represent attitudes that one can hold (e.g., do you think lowering taxes on corporations will have long-term benefits to society?), these are referred to as belief states and belief spaces.

In the simulations, 20 binary features were used to describe beliefs, which provides \(2^{20}=1,048,576\) possible states within a knowledge space. From these possible states, 15 unique states were sampled along the valence spectrum (each simulation used a different sampling method), but the two most extreme states were always chosen (i.e., all 0 and all 1). The different simulations we present use different schemes for sampling these states. The knowledge space lattice [110, 112] describing these states can be formed through complement arithmetic [111, 113, 114], in order to identify which states are subsets or supersets of other states. The choice of the number of features and size of the knowledge space is somewhat arbitrary, and although clearly these choices can impact convergence properties, they serve as a proof-by-example of the behaviors we are interested in.

Set of binary features describing each of 15 states (left panel), and resulting knowledge space lattice (right panel) created by sampling belief states distributed uniformly across the range of agreement/disagreement with an extreme view. Arrows indicate that a higher-level state fully encompasses the beliefs of a lower-level state, representing the space as a partially-ordered set. The model does not restrict movement to transitions between nodes connected by arrows; the arrows only visualize paths that would involve adding or dropping beliefs from the belief set

The simulation model assumes that pairs of individual agents may interact and, when they do, each has the potential to adopt any of the opposing beliefs of the agent they interacted with. For example, in Fig. 2, an agent in State 8 might interact with one in State 9. Although these agents agree on many issues, they differ in their agreement with Features (i.e., issues) B, C, F, G, R, and T (6 issues). If either agent changes its view on all of those issues, it will hold a coherent set of attitudes (by virtue of the fact that it is a belief state in the belief space). An agent in State 8 could also transition to State 9 by interacting with other agents, but that is less likely because it must change only those beliefs that are consistent with State 9 and none of the others. In the model, once a candidate belief set is generated, it will only be adopted if it corresponds to a state within the belief space. Otherwise, the agent will remain at its initial state. The belief comparison/transition occurs in parallel, such that each agent interacts with another agent and they both have the potential to shift to a new state after this interaction. As such, the ease with which agents transition between states depends on how many potential states exist. With a sparse set of 15 states in this example, agents will typically not move between states. If there are many more ways in which individual beliefs can diverge from others, transitions will be more frequent.

Simulation 1: convergence and polarization in distributed knowledge spaces

The first simulation shows how using a knowledge space can produce one of the main phenomena used to justify the bounded influence conjecture: convergence to multiple stable groups. In this simulation (and the others), the first step was to create a knowledge space (as shown in Fig. 2) based on 20 binary features with 15 total knowledge states, always including the two most extreme states (i.e., all [00000000000000000000] and all [11111111111111111111]). Each knowledge state was chosen by first sampling a single value p with a uniform random distribution between 0 and 1, and then using p to determine whether each of 20 binary features should be 0 or 1 [0 with probability p, 1 with probability \((1-p)\)].

This approach tends to create nodes distributed along the continuum between the two extremes, allowing both moderate and extreme viewpoints to emerge. Furthermore, because there are relatively few possible knowledge states at the extremes (compared to the bulk of possible states that are moderate), this uniform sampling over-represents extreme views, especially considering that the most extreme views are always represented. In contrast, if states were chosen at random, the distribution would approximate a binomial distribution with \(p=.5\).
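The sampling scheme described above can be sketched as follows. This is a minimal illustration rather than the original implementation: the function name, the tuple representation, the rejection of duplicate states, and the final sort are all assumptions made for the example.

```python
import random

def sample_belief_space(n_features=20, n_states=15, rng=None):
    """Sample a belief space that always contains the two extreme states."""
    rng = rng or random.Random()
    # The all-0 and all-1 states are always part of the space.
    states = {(0,) * n_features, (1,) * n_features}
    while len(states) < n_states:
        p = rng.random()  # one value of p per state, uniform on [0, 1)
        # Each feature is 0 with probability p and 1 with probability 1 - p.
        state = tuple(0 if rng.random() < p else 1 for _ in range(n_features))
        states.add(state)  # a duplicate leaves the set unchanged and is resampled
    # Order states by extremeness index (number of features equal to 1).
    return sorted(states, key=lambda s: (sum(s), s))

space = sample_belief_space(rng=random.Random(1))
print([sum(state) for state in space])  # extremeness index of each sampled state
```

Drawing a single p per state, rather than per feature, is what spreads the sampled states along the whole spectrum instead of concentrating them near the middle.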

For each simulation, 100 agents were distributed randomly across the fifteen knowledge states, and during the simulation they were allowed to interact and influence belief according to the following procedure:

1. Two agents were chosen at random to interact, without regard to individual beliefs.
2. Each binary belief element for one agent was independently selected for inclusion in the “discussion” (with probability \(\mu =.3\)).
3. A new candidate belief for the first agent was created by changing its discussed beliefs to those of the other agent in the discussion.
4. If the new belief state was valid (that is, it was one of the pre-selected belief states), this new state is adopted by the agent.
5. Steps 2-4 (one cycle) were repeated for the second agent in the discussion.
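The five steps above can be sketched in code. The function names and the tuple representation are hypothetical, and the sketch assumes that both agents compare against each other's pre-interaction state (one reading of the "parallel" update); the original implementation may differ.

```python
import random

def candidate_state(own, other, discussed):
    """Step 3: copy the other agent's value on every discussed feature."""
    return tuple(o if d else s for s, o, d in zip(own, other, discussed))

def update_agent(own, other, belief_space, mu=0.3, rng=random):
    """Steps 2-4 for one agent: discuss, build a candidate, adopt it if legal."""
    discussed = [rng.random() < mu for _ in own]    # step 2
    candidate = candidate_state(own, other, discussed)
    # Step 4: only states that belong to the belief space may be adopted.
    return candidate if candidate in belief_space else own

def interaction_cycle(agents, belief_space, mu=0.3, rng=random):
    """Steps 1-5: pick two agents at random and update each of them."""
    i, j = rng.sample(range(len(agents)), 2)        # step 1
    state_i, state_j = agents[i], agents[j]         # assumption: parallel update
    agents[i] = update_agent(state_i, state_j, belief_space, mu, rng)
    agents[j] = update_agent(state_j, state_i, belief_space, mu, rng)
```

Passing `belief_space` as a set of tuples keeps the validity check in step 4 a constant-time lookup, which matters when the cycle is repeated many thousands of times.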

This process was repeated many thousands of times. Every 1000 interaction cycles, the distribution over knowledge states was examined for signs of convergence, and the simulation was discontinued either once a convergence criterion was met, or 125,000 rounds were simulated. The convergence criteria were: (a) the population was all in a single knowledge state, or (b) the population was distributed across an identical set of knowledge states for 25 consecutive 1000-interaction rounds, ignoring any states with five or fewer agents. These low-frequency states were ignored because there is usually an opportunity for singleton agents to jump to a third state whenever more than two states exist, and since we evaluated convergence only every 1000 interactions, a strict convergence criterion would ignore some of these small deviations that happened between samples anyway.
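The two convergence criteria can be expressed as a small check run every 1000 interaction cycles. The helper names are hypothetical, and treating an empty set of well-populated states as non-convergence is an added assumption that the text does not specify.

```python
from collections import Counter

def occupied_states(agents, min_agents=5):
    """States held by more than `min_agents` agents; smaller groups are ignored."""
    counts = Counter(agents)
    return frozenset(s for s, n in counts.items() if n > min_agents)

def has_converged(agents, snapshots, window=25):
    """`snapshots` holds one occupied_states() result per 1000-interaction check."""
    # Criterion (a): every agent holds the same state.
    if len(set(agents)) == 1:
        return True
    # Criterion (b): the same set of well-populated states for `window`
    # consecutive checks (assumption: an empty set does not count).
    recent = snapshots[-window:]
    return (len(recent) == window
            and len(set(recent)) == 1
            and len(recent[0]) > 0)

agents = ["A"] * 60 + ["B"] * 37 + ["C"] * 3   # toy population of 100 agents
snapshot = occupied_states(agents)
print(sorted(snapshot), has_converged(agents, [snapshot] * 25))
```

In the toy population, state "C" holds only three agents and is therefore ignored when the occupied sets are compared across checks.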

Dynamics across two sample runs of Simulation 1. At each iteration (horizontal position), the vertical distance between lines represents the proportion of agents with a particular belief (the lines themselves are not meaningful, unlike in Fig. 1). In the top panel, the system meets convergence criteria after about 80,000 iterations, with most agents in a single state. In the bottom panel, stable convergence to two states occurs

Figure 3 illustrates the dynamics of two simulation runs across time. For each panel, each vertical slice represents a distribution across states, from one extreme (all features equal to 0, at the bottom) to the other (all 1, on the top). Over time, agents converge to fewer states. In the top panel, two primary states exist by 50,000 iterations, and these two states are both fairly extreme, with one dominating. In the bottom panel, two states (again relatively extreme) dominate by 20,000 iterations, with a slightly more moderate state absorbing the most extreme state from around iteration 10,000 to 20,000. In the top panel, the system converges to primarily one state, whereas the bottom panel stays fairly stable. These two examples illustrate that convergence criteria can be met when multiple states are present, and that the time to converge ranges substantially. Figure 4 shows two aspects of the average behavior of this system across 1000 runs. First, out of 1000 runs, only 69 failed to meet either of the convergence criteria after 125,000 rounds. The solid black line in the figure illustrates the cumulative convergence distribution. Most simulations did not converge until about 50,000 iterations, but most had converged by 100,000 iterations. Figure 4 also shows the number of states converged to. The 0 state indicates non-convergence; and 1 through 4 indicate that the simulations converged to between 1 and 4 groups (with more than five agents in each). Most often the system converged to two states, although a substantial minority also converged to 1 or 3 states. These simulations show that such representations can produce stable opinion divergence.

It is also important to examine the states that the simulations converged to. In traditional opinion dynamics models, groups of agents tend to converge to opinions at the center of a “basin” whose size and extremity are controlled by the bounded influence parameter [24]. Thus, we might expect groups to converge to the center of the belief space, especially because agents in this simulation are essentially blind to the extremeness of their own and others’ opinions. In other words, once the knowledge space is set up, beliefs could be arbitrarily recoded from 0 to 1 or vice versa, and the simulation would produce the same result because the mean extremeness of a belief is never computed or used to guide behavior.

Figure 5 shows that despite the fact that agents do not explicitly use extremeness of belief, they produce group polarization, converging to the more extreme belief states. In the figure, the horizontal axis shows the spectrum of belief for possible belief states—essentially how many of a state’s binary values were 1 versus 0. The thick black line with round symbols indicates the probability that a belief space contained a belief state with N positive features. Because there were always 15 states and the two most extreme were always present, they have on average 1.0 per simulation, and all other cases are approximately \(13/19=.68\). For comparison, the solid grey line shows the distribution of potential belief states across the belief spectrum, with the great majority falling in the middle region. The thin U-shaped line shows the probability that a state was held by more than five agents at convergence. Here, despite the initial uniform distribution across the belief space, the agents converged to the extreme ends of the space.

Convergence distributions across belief states for Simulation 1. Black line with filled points indicates mean number of initial states with specified agreement with extreme view (extremeness index). Thick grey line shows the distribution of possible belief states. Thin U-shaped line indicates proportion of simulations that converged to given extremeness index

This simulation illustrates that the use of a belief space can produce the same basic phenomenon that the combination of bounded influence conjecture and recalcitrant extremists or negative reaction have typically been used to produce: multiple groups that tend to converge to opposite ends of the belief spectrum. This happens despite lacking the bounded influence conjecture: agents are willing to interact with any other agent regardless of belief, yet the system does not converge to a single belief state.

The knowledge-space or belief-space account produces these results for several reasons. First, two agents with extremely different views influence one another only rarely. This is not because they never listen to one another, and not because they ignore the extreme information, but rather because the discussion must result in moving one agent from their current state to another legal state, which constitute only \(15/1,048,576=0.0014\%\) of the possible states. This creates a gulf between most knowledge states (even intermediate ones) that is difficult to overcome, because only the proper message can move one agent to a logically consistent state. Furthermore, clustering in the extremes is encouraged because of the substantial oversampling of extreme states, which are necessarily close to one another and, thus, have a chance of moving opinion of an agent.
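A short calculation illustrates how rare such transitions are. It follows from the interaction procedure that an agent lands exactly on its partner's state only if every feature on which the two differ is selected for discussion, which happens with probability \(\mu^d\) for \(d\) differing features. The function name is hypothetical, and the sketch ignores the separate possibility of landing on some third legal state.

```python
N_FEATURES = 20
N_LEGAL_STATES = 15
MU = 0.3  # per-feature probability of entering the discussion

# Share of all 2^20 feature combinations that are legal belief states.
legal_fraction = N_LEGAL_STATES / 2 ** N_FEATURES

def prob_adopt_partner_state(n_differing, mu=MU):
    """Chance that every differing feature is discussed in a single update."""
    return mu ** n_differing

print(f"{legal_fraction:.4%}")
print(prob_adopt_partner_state(6))   # e.g. two states that differ on 6 issues
print(prob_adopt_partner_state(20))  # the two most extreme states
```

The probability falls off geometrically with the number of differing features, so nearby states exchange agents far more readily than distant ones even though no agent ever refuses to listen.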

Overall, Simulation 1 illustrates the basic premise that belief states, restricted because of a need for consistency between concepts, can indeed produce the same type of convergence phenomena that the bounded influence conjecture does. It also produces group polarization by default, with no additional assumptions. These results raise two questions, which will be explored in Simulations 2 and 3. First, do the convergence properties depend on the belief space being highly polarized in the first place? This will be explored in Simulation 2. Second, would we still see group polarization if the starting distribution were not so broadly distributed across the belief spectrum in a (perhaps) unrealistic manner? This will be explored in Simulation 3.

Simulation 2: convergence in random belief spaces

The first simulation showed how assumptions about the structure of knowledge, rather than the interaction rules of naive agents, can produce situations in which an interacting group can be influenced by any other agent’s opinions but still converge to distinct belief groups, and not collapse to a single group. It also showed that despite the fact that the initial beliefs were distributed across the range of the belief space, the stable groups tended to converge to the extremes. This raises a couple of related questions: (1) will groups still tend to converge to extremes in belief spaces that do not oversample the extremes of the space? and (2) will agents still tend to converge to multiple groups in these situations, or will they collapse to a single group? The present simulation investigates both.

The basic steps in Simulation 2 were identical to Simulation 1, except that the initial distribution of states was chosen as follows: the two most extreme states were always included, but instead of choosing a value for p that ranged between 0 and 1 uniformly, p was chosen uniformly between .4 and .6 for each belief state. The parameter p was then used to sample each feature of a belief state. This convolution of a uniform and binomial distribution was used to expand the center region of the belief space, biasing it only slightly in the direction of the extreme views. A p fixed at .5 would produce a space that is identical to an unvalenced space, where no dimensions have any real coherence with any underlying principle. Otherwise, the simulation was identical to Simulation 1.

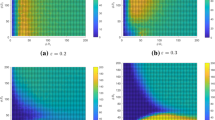

Convergence distributions across belief states for Simulation 2. Black line with filled points indicates mean number of initial states with specified agreement with extreme view (extremeness index). Thick grey line shows the distribution of possible belief states. Thin peaked (blue) line indicates proportion of simulations that converged to given extremeness index

Figure 6 shows the basic convergence properties of Simulation 2. Again, most simulations (all but 18) had converged by 125,000 cycles. Like Simulation 1, simulations converged to two states more often than others, but unlike Simulation 1, a considerable proportion of simulations converged to one and three states. This is interesting because without the heavy oversampling at the extremes, one might expect the simulations to tend to converge to a single state near the center of the extremity index, but this did not happen.

Figure 7 shows that the simulations do indeed tend to converge near the center of the belief space (thin peaked line). But they still tended to converge to multiple states. Convergence to moderate states occurs because, similar to Simulation 1, there is a large gulf between moderate states and the extreme states which often prevents agents from moving to the extremes. But convergence to multiple states is interesting, because one might assume that heavier sampling of moderate states would serve as an attractor that would encourage consensus.

The explanation for this outcome is that just because two states are equally moderate does not mean that they are similar. For example, consider the two following states:

Both might be considered moderate, yet they are in complete disagreement. In fact, each has more similarity to the two most extreme belief states (10/20 matches) than they do to one another (0/20 matches). Thus, it is fairly easy for Simulation 2 to converge to multiple moderate belief states that still have gulfs between them wide enough to prevent convergence to a single state.
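The match counts quoted above can be checked with a pair of complementary states. The two moderate states below are hypothetical, chosen only because each has ten features set to 1 and the two disagree on every feature; they are not necessarily the states used in the original example.

```python
def matches(a, b):
    """Number of features on which two belief states agree."""
    return sum(x == y for x, y in zip(a, b))

# Hypothetical moderate states (an assumption), plus the two extremes.
state_a = (1,) * 10 + (0,) * 10
state_b = (0,) * 10 + (1,) * 10
all_zero = (0,) * 20
all_one = (1,) * 20

print(matches(state_a, state_b))                               # moderate vs moderate
print(matches(state_a, all_zero), matches(state_a, all_one))   # moderate vs extremes
print(matches(state_b, all_zero), matches(state_b, all_one))
```

Both states have the same extremeness index of 10, which shows that the index alone says nothing about how far apart two moderate states are.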

The combined results of Simulations 1 and 2 suggest that belief spaces can provide a powerful explanation for consensus formation and stable disagreement within an interacting community. Simulation 1 also produced a polarization effect that was not present in Simulation 2, whereby the belief a group converges to is more extreme than their average belief before convergence. Simulation 3 examines group polarization in more detail.

Simulation 3: group polarization in consensus formation

Simulation 1 produced an unexpected result: not only did beliefs converge to multiple stable groups, the groups tended to stabilize at the extremes of the belief space. This result is akin to classic group polarization effects. However, this polarization might be somewhat of an artifact: because agents were initially spread broadly across the belief space, the convergence patterns might just appear extreme in comparison to the starting conditions. Traditionally, polarization effects can occur for a group that is already in general agreement, such that the consensus reached after discussion is still more extreme than the average of initial opinions.

Simulation 3 addresses this point by creating a belief space that is already highly polarized. In this simulation, rather than choosing the value p uniformly between 0 and 1 (as in Simulation 1) or uniformly between .4 and .6 (as in Simulation 2), p was chosen to be either .1 or .9 for each belief state. This configuration tended to converge to two stable states, so in this simulation, we ended each run whenever only two states remained. As before, the two most extreme states were always present in the belief space.

Figure 8 shows the outcome of this simulation. The critical comparison is between the thin black line (starting distribution) and the dashed line (ending distribution). As before, the simulations tended to converge to extreme states, and these states were in fact more extreme than the already polarized starting configurations. Each grey filled circle represents the number of agents holding a belief of one extremity index, across all simulations. The lowest thick U-shaped line is the mean of these values across all simulations.

Observed distribution showing group polarization. Thin black line shows average of initial distributions of belief states, which were binomially distributed with \(p=.1\) or \(p=.9\). Solid thick line shows the mean of all outcome distributions after convergence to two states, and the dashed line shows the proportion of trials that a particular state was converged to

This simulation illustrates a novel account of the group polarization phenomenon. In the simulation, group polarization occurs because the more extreme views are the ones that are most central to the less extreme beliefs around them, in that they are closest to the most other states. Typically, movement between two less extreme belief states is less likely than movement to an extreme. Indeed, an agent can move to an extreme after interacting with another agent that may be less extreme than the first agent, if it happens to adopt beliefs of the second agent that are more extreme. This differs fundamentally from many other accounts of group polarization because it does not assume that the effect stems from social processes such as leadership or unwillingness to change opinion. Rather, polarization stems from the structure of the belief space. In these cases, the most extreme view is actually the most central view amongst extreme views, and so the easiest to agree upon. Less extreme views, which may represent more moderate beliefs that match the starting distribution better, are more difficult to converge upon because they tend to involve greater average belief change, even among initially moderate agents (who are simply moderate on different dimensions).
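The centrality of the extreme state can be illustrated with expected distances. The sketch assumes that each feature of a sampled state is independently 0 with probability p, as in the sampling scheme with \(p=.1\), and counts distance as the number of differing features; the function names are hypothetical.

```python
def expected_distance_to_extreme(n_features, p):
    """Expected number of features differing from the all-1 extreme state."""
    # A feature differs from the extreme exactly when it was sampled as 0.
    return n_features * p

def expected_distance_between_samples(n_features, p):
    """Expected number of differing features between two independent samples."""
    # Two samples differ on a feature when exactly one of them drew a 0.
    return n_features * 2 * p * (1 - p)

n, p = 20, 0.1
print(expected_distance_to_extreme(n, p))
print(expected_distance_between_samples(n, p))
```

Under these assumptions a near-extreme state is, on average, closer to the extreme than to another near-extreme state, because two such states usually dissent on different features.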

Discussion

Overall, these simulations illustrate an alternative approach to accounting for group-level processes in social simulations. They rely on the notion of a belief or knowledge space, which provides a richer representation through which to simulate belief transmission than unidimensional representations typically offer. We argue that group-level behaviors related to consensus formation, stable disagreement, and group polarization can be understood as consequences of a more complex belief space, even without making assumptions about bounded influence or recalcitrant extremists. The basic insight is that in unidimensional opinion spaces, drift and collapse are almost inevitable, and so additional obstacles that prevent this influence must be adopted by the modelers. The bounded influence conjecture is one such obstacle. The present research suggests that another obstacle is conceptual coherence [104]. It also suggests alternate strategies for influence and persuasion, and mechanisms for the propagation of misinformation. The bounded influence model suggests that large-scale change must happen gradually, with people following a trail of breadcrumbs from one belief, through intermediate beliefs, each time being influenced only by someone whose attitudes are fairly similar to their current attitudes. The belief space model suggests that the largest obstacle to attitude change is coherence, and that an entire belief system might need to be modified at once. Alternatively, one might examine intermediate states that an individual is unwilling to accept, and try to convince them that those states are not incoherent or inconsistent. Similarly, misinformation may be incorporated and adopted to the extent that it is coherent and consistent with other beliefs, attitudes, and knowledge.

Curiously, the model’s account for group polarization is a natural consequence of the same process: people reach agreement at more extreme positions because that position is the only thing they can agree upon, or at least that is the easiest position for them to reach agreement. This echoes the explanation of group polarization by [115], who argued that groups converge on the prototypical response, which is more extreme than the average response: the prototype is the belief most similar to each individual response and, in many belief spaces, it is more extreme than most individual beliefs.

Figure 9 illustrates the basic differences between the belief spaces used in the three simulations. The top panel shows a single belief space sampled using the methods in Simulation 1. In it, there are a number of states near the extremes which tend to be close to one another (and thus enable easier movement between), with others distributed across the spectrum that tend to be fairly isolated. The center panel shows a belief space similar to Simulation 2, in which states tend to be nearer the center, yet they are also isolated from one another. The bottom panel shows belief spaces similar to Simulation 3, which are polarized to begin with, and so are all close to one another.

Whether the results here can apply to real-world groups depends upon whether beliefs in those organizations can be reasonably approximated by a multi-dimensional binary belief space with valenced values having a limited number of intermediate states that can logically exist. Clearly, this would not be true for some classes of beliefs in arbitrary groups of people. A general broad-based opinion survey about consumer products might have essentially arbitrary valences about unrelated topics, and so would produce a space more akin to Simulation 2. But other domains may be more relevant. For example, [111] showed an example in the domain of religious beliefs that fit this description well, and identified an empirical belief space that both had an overall valence (in terms of religiousness) and individual intermediate states that were logically or theistically coherent.

Another example comes from work using statistical methods to identify groups within a population [105]. They examined the 2006 U.S. Senate votes on 19 issues identified by the AFL-CIO as important for labor union interests. Not surprisingly, beliefs clustered at the extremes, with Democrats largely in support of union issues and Republicans largely opposing union issues. However, there was quite substantial divergence from the “most extreme” ends of the scale. The same data can be examined in terms of their distribution of agreement with the AFL-CIO, as shown in Fig. 10.

Senate voting distribution on 19 issues identified by the AFL-CIO as important for union interests [105]. Horizontal axis indicates number of issues in agreement with the union

The Senate voting shows a fairly polarized set of beliefs, but with substantial deviations from the most extreme views in both directions. Yet it illustrates that there do indeed exist the types of polarized belief spaces simulated in Simulation 3. The degree of polarization is remarkable yet probably not unexpected. The present simulations might predict even greater polarization and tighter consensus groups, but Senators are also highly influenced by opinions of people outside of the Senate body (especially their donors and constituents), which may drive some individuals away from the typical positions.

Nevertheless, the simulation approach described here has offered a clear alternative to the standard bounded influence conjecture. In addition, it offers the possibility of representing more complex knowledge structures, and making close contact to data that can be obtained using surveys and other similar methodologies. It also provides a single account of opinion divergence and group polarization, and its account of group polarization is novel.

Testing the bounded influence conjecture

It is important to recognize that these simulations do not show that the bounded influence conjecture is wrong, or that alternative accounts are correct. We view this as an empirical question that should be settled via experiments. In the last five years, the opinion dynamics community has begun to generate and collect empirical data that test specific assumptions of their models [33, 34, 49, 71]. We believe that there are ways in which bounded influence differs from the belief space model, and these could be subject to similar experimental testing. Identifying critical differences between these theories and testing them may also be useful because it can help clarify what the central assumptions of each approach really are. Furthermore, there are a number of assumptions outside the scope of the models that can account for some of these effects. Notions of social network, geographical distance, and group membership may greatly constrain both models. Several of the tacit assumptions of the bounded influence approach are compared to those of the belief space approach in Table 1. These assumptions constitute distinct differences between the two approaches that could lead to testable hypotheses, which in turn may discriminate between the two theories using behavioral experimentation.

It is unlikely that the assumptions embodied in either of the columns in Table 1 are universals. Rather, they may apply to specific situations or contexts. Within any setting, it may be possible to test some of these assumptions explicitly, and determine the most relevant model. Understanding the contexts under which different assumptions hold will be an important contributor to a more predictive science of opinion dynamics.
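For concreteness, the bounded influence assumption under discussion can be illustrated with a pairwise update rule in the style of the continuous bounded confidence models cited here (e.g., [17]). This is a sketch under stated assumptions, not the code used for the present simulations: the function names, the threshold `epsilon`, the convergence rate `mu`, and the default settings are illustrative choices of ours.

```python
import random


def bounded_confidence_step(opinions, epsilon, mu, rng):
    """Pick two distinct agents at random; if their opinions differ by
    less than epsilon, move each toward the other by a fraction mu of
    the difference. Otherwise they ignore one another."""
    i, j = rng.sample(range(len(opinions)), 2)
    diff = opinions[j] - opinions[i]
    if abs(diff) < epsilon:
        opinions[i] += mu * diff
        opinions[j] -= mu * diff


def simulate(n_agents=100, epsilon=0.2, mu=0.5, steps=20000, seed=0):
    """Run the pairwise rule from uniform random opinions on [0, 1).
    All defaults are hypothetical values chosen for illustration."""
    rng = random.Random(seed)
    opinions = [rng.random() for _ in range(n_agents)]
    for _ in range(steps):
        bounded_confidence_step(opinions, epsilon, mu, rng)
    return opinions


# A threshold wider than the opinion range lets every pair interact,
# while a threshold of zero blocks all influence.
unbounded = simulate(epsilon=1.1)
frozen = simulate(epsilon=0.0)
```

In this rule the threshold `epsilon` is the only thing that keeps groups apart, which is the tacit assumption that the belief space approach replaces with restrictions on which belief states are coherent.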

Models with similar or alternate assumptions

The current simulations focus on how simple constraints of knowledge representations might produce global behaviors. Thus, we refrained from examining other constraints or assumptions that might also produce these behaviors. One such realistic constraint is a basic result from research on ‘small world’ phenomena [116]. In real groups, influence and interaction are non-uniform and asymmetric. Some individuals have stronger influence than others, and individuals may trust or mistrust others for specific reasons. And certainly, the format and structure of discussions can matter, and content of the interactions is not randomly selected.

For models of opinion dynamics to make richer and more useful predictions, they may need to incorporate all these factors. Recent agent-based modeling has attempted to incorporate many of these factors [56], and other social/psychological factors including norms, emotion, social comparison, segmented networks, etc. have been examined as well [90, 91, 117]. There are also existing models that make some assumptions similar to the present model, although they have not been used to demonstrate group polarization. For example, several models have entertained more complex and more realistic knowledge representations. Thiriot & Kant [118] proposed a model of innovation diffusion that used more complex networks of nodes to represent the conceptual space. They used the iPhone as an example, and along with having a number of binary properties of the iPhone (similar to our representation), allowed these features to be associated with other features for a richer representation. Furthermore, each association could have continuous-valued belief, which also differs from the present approach. Nevertheless, the richer representation is akin to our previous work relating acquisition of complex knowledge structures via incremental learning of episodic information [108] that inspired the present model, and similar learning mechanisms could be implemented within our framework to represent more gradual influence and more complex knowledge structures. Similarly, a number of models have examined more complex representational structures, especially multi-dimensional analogs to the bounded confidence models (e.g., [26, 27, 29, 30, 59, 85, 100,101,102,103, 117]).

Limitations

The particular model we used here has assumptions similar to a number of other models, and we have identified some other psychological assumptions present in other models that we have not implemented. One related question regards the extent to which these simulations produced behavior that is representative of other parameter settings within the model. For example, we fixed the number of features to 20, the number of intermediate belief states to 15, and the number of agents to 100. We have not explored fully how robust these results are. We know that as the number of intermediate states increases, the system tends to converge to a single intermediate state more often. This makes sense: with more intermediate states there are more paths between positions, and if all states were feasible, nothing would prevent this eventual convergence. Furthermore, the number of agents sampled might have an instrumental effect on polarization, which could be important because most research on polarization has arisen from studies of small group interactions. However, despite these limitations, the present study offers a proof of concept that knowledge coherence may underlie some of these group-level processes, and our view is that more empirical work is needed to test this more specifically.

Summary

Overall, these simulations challenge one common approach in understanding belief and opinion transmission through an organization. Much previous theorizing has assumed that a divergence of belief itself prevents agents from interacting. This bounded influence assumption arose in part because of the impoverished representations typically used by these models. By expanding the notion of belief to a multivariate complex, and restricting the legal states within the resulting space, the present models can produce convergence to multiple groups almost by default, and also produce group polarization with no further assumptions. Future work in opinion dynamics should take more seriously cognitive aspects of belief, and look to cognitive theory, in contrast to physical systems, for inspiration and guidance.

Notes

Complete source code for these simulations is available at https://www.openabm.org/model/5808/version/1/view, and hypothetical interaction in a simple system is described in the Appendix.

References

Brush, S. G. (1967). History of the Lenz-Ising model. Reviews of Modern Physics, 39(4), 883–893.

Sznajd-Weron, K., & Sznajd, J. (2000). Opinion evolution in closed community. International Journal of Modern Physics C-Physics and Computer, 11(6), 1157–1166.

Castellano, C., Fortunato, S., & Loreto, V. (2009). Statistical physics of social dynamics. Reviews of Modern Physics, 81(2), 591–646.

Martins, A. C. (2015). Opinion particles: classical physics and opinion dynamics. Physics Letters A, 379(3), 89–94.

Sobkowicz, P. (2009). Modelling opinion formation with physics tools: Call for closer link with reality. Journal of Artificial Societies and Social Simulation, 12(1), 11. http://jasss.soc.surrey.ac.uk/12/1/11.html.

Weisbuch, G., & Stauffer, D. (2000). Hits and flops dynamics. Physica A: Statistical Mechanics and its Applications, 287(3–4), 563–576.

Anderson, L. R., & Holt, C. A. (1997). Information cascades in the laboratory. The American Economic Review, 87(5), 847–862.

Mackay, C. (1852). Memoirs of Extraordinary Popular Delusions and the Madness of Crowds. London: Office of the National Illustrated Library. http://www.gutenberg.org/ebooks/24518.

DeGroot, M. H. (1974). Reaching a consensus. Journal of the American Statistical Association, 69(345), 118–121. http://www.jstor.org/stable/2285509.

Parunak, H. V., Belding, T. C., Hilscher, R., & Brueckner, S. (2008). Modeling and managing collective cognitive convergence. In: Proceedings of the 7th international joint conference on Autonomous agents and multiagent systems-Volume 3, International Foundation for Autonomous Agents and Multiagent Systems (pp. 1505–1508), Estoril, Portugal. http://portal.acm.org/citation.cfm?id=1402821.1402910.

Parunak, H. (2009). A mathematical analysis of collective cognitive convergence. In: Proceedings of The 8th International Conference on Autonomous Agents and Multiagent Systems-Volume 1, International Foundation for Autonomous Agents and Multiagent Systems (pp. 473–480).

Lorenz, J. (2007). Continuous opinion dynamics under bounded confidence: A survey. International Journal of Modern Physics C, 18(12), 1819–1838.

Axelrod, R. (1997). The dissemination of culture: A model with local convergence and global polarization. The Journal of Conflict Resolution, 41(2), 203–226. http://dx.doi.org/10.2307/174371.

Amblard, F., & Deffuant, G. (2004). The role of network topology on extremism propagation with the relative agreement opinion dynamics. Physica A: Statistical Mechanics and its Applications, 343, 725–738.

Carletti, T., Fanelli, D., Grolli, S., & Guarino, A. (2006). How to make an efficient propaganda. Europhysics Letters, 74(2), 222–228.

Deffuant, G. (2006). Comparing extremism propagation patterns in continuous opinion models. Journal of Artificial Societies and Social Simulation, 9(3), 8. http://jasss.soc.surrey.ac.uk/9/3/8.html.

Deffuant, G., Neau, D., & Amblard, F. (2000). Mixing beliefs among interacting agents. Advances in Complex Systems, 3, 87–98.

Deffuant, G., Amblard, F., Weisbuch, G., & Faure, T. (2002). How can extremism prevail? A study based on the relative agreement interaction model. Journal of Artificial Societies and Social Simulation, 5(4).

Fortunato, S. (2005). Monte Carlo simulations of opinion dynamics. In: Complexity, metastability and nonextensivity. Proceedings of the 31st workshop of the international school of solid state physics (pp. 301–305). Erice, Sicily, Italy, 20–26 July 2004. https://doi.org/10.1142/9789812701558_0034.

Fortunato, S., & Stauffer, D. (2006). Computer simulations of opinions and their reactions to extreme events. In: S. Albeverio, V. Jentsch, & H. Kantz (Eds.) Extreme events in nature and society. The Frontiers Collection. Berlin, Heidelberg: Springer. https://doi.org/10.1007/3-540-28611-X_11.

Franks, D. W., Noble, J., Kaufmann, P., & Stagl, S. (2008). Extremism propagation in social networks with hubs. Adaptive Behavior, 16(4), 264–274.

Gómez-Serrano, J., Graham, C., & Le Boudec, J.-Y. (2012). The bounded confidence model of opinion dynamics. Mathematical Models and Methods in Applied Sciences, 22(02), 1150007. http://www.worldscientific.com/doi/abs/10.1142/S0218202511500072.

Groeber, P., Schweitzer, F., & Press, K. (2009). How groups can foster consensus: The case of local cultures. Journal of Artificial Societies and Social Simulation, 12(2), 4. http://jasss.soc.surrey.ac.uk/12/2/4.html.

Hegselmann, R. K., & Krause, U. (2002). Opinion dynamics and bounded confidence models, analysis and simulation. Journal of Artificial Societies and Social Simulation, 5(3). http://jasss.soc.surrey.ac.uk/5/3/2.html.

Lorenz, J. (2006). Consensus strikes back in the Hegselmann-Krause model of continuous opinion dynamics under bounded confidence. Journal of Artificial Societies and Social Simulation, 9(1), 8. http://jasss.soc.surrey.ac.uk/9/1/8.html.

Lorenz, J. (2006). Continuous opinion dynamics of multidimensional allocation problems under bounded confidence: More dimensions lead to better chances for consensus. European Journal of Economic and Social Systems, 19(2), 213–227.

Lorenz, J. (2008). Fostering consensus in multidimensional continuous opinion dynamics under bounded confidence. In: D. Helbing (Ed.) Managing Complexity: Insights, Concepts, Applications. Understanding Complex Systems. Berlin, Heidelberg: Springer.

Lorenz, J. (2010). Heterogeneous bounds of confidence: Meet, discuss and find consensus! Complexity, 15(4), 43–52.

Urbig, D., & Malitz, R. (2005). Dynamics of structured attitudes and opinions. In: K. G. Troitzsch (Ed.) Representing social reality. Pre-Proceedings of the Third Conference of the European Social Simulation Association (ESSA) (pp. 206–212), September 5–9, 2005, Koblenz, Germany. https://www.researchgate.net/profile/Diemo_Urbig/publication/228360096_Dynamics_of_structured_attitudes_and_opinions/links/00b4952ff206292534000000.pdf.

Urbig, D., & Malitz, R. (2007). Drifting to more extreme but balanced attitudes: Multidimensional attitudes and selective exposure. Presented at the Fourth Conference of the European Social Simulation Association (ESSA), September 10–14, Toulouse.

Deffuant, G., Amblard, F., & Weisbuch, G. (2004). Modelling group opinion shift to extreme: The smooth bounded confidence model. Presented to the 2nd ESSA Conference (Valladolid, Spain), September 2004.

Dittmer, J. C. (2001). Consensus formation under bounded confidence. Nonlinear Analysis-Theory Methods and Applications, 47(7), 4615–4622.

Chacoma, A., & Zanette, D. H. (2015). Opinion formation by social influence: From experiments to modeling. PLoS One, 10(10), 1–16. https://doi.org/10.1371/journal.pone.0140406.

Moussaïd, M., Kämmer, J. E., Analytis, P. P., & Neth, H. (2013). Social influence and the collective dynamics of opinion formation. PLoS One, 8(11), e78433.

Mueller, S. T., & Weidemann, C. T. (2008). Decision noise: An explanation for observed violations of signal detection theory. Psychonomic Bulletin & Review, 15(3), 465–494.

Tajfel, H. (1970). Experiments in intergroup discrimination. Scientific American, 223(5), 96–102.

Mark, N. P. (2003). Culture and competition: Homophily and distancing explanations for cultural niches. American Sociological Review, 68(3), 319–345.

Lazarsfeld, P. F., & Merton, R. K. (1954). Friendship as a social process: A substantive and methodological analysis. Freedom and Control in Modern Society, 18(1), 18–66.

Ferguson, C. K., & Kelley, H. H. (1964). Significant factors in overevaluation of own-group’s product. The Journal of Abnormal and Social Psychology, 69(2), 223–228.

Brewer, M. B. (1979). In-group bias in the minimal intergroup situation: A cognitive-motivational analysis. Psychological Bulletin, 86(2), 307–324.

Festinger, L. (1954). A theory of social comparison processes. Human Relations, 7(2), 117–140.

Schachter, S. (1951). Deviation, rejection, and communication. The Journal of Abnormal and Social Psychology, 46(2), 190–207.

Wesselmann, E. D., Williams, K. D., Pryor, J. B., Eichler, F. A., Gill, D. M., & Hogue, J. D. (2014). Revisiting Schachter’s research on rejection, deviance, and communication (1951). Social Psychology, 45, 164–169.