Abstract

The purpose of this design and development case is to document the design, development, and evaluation of a statistics course tailored for educational technology doctoral students in an online program. The course is intended to prepare online doctoral students to both consume and produce quantitative research in the field of educational technology using relevant and authentic learning experiences. This paper first outlines the course features used to engage students in the learning materials. Twenty doctoral students enrolled in this online statistics course provided relevant data on three occasions (pre-, mid-, and post-surveys) during a 16-week academic semester. Drawing from both quantitative and qualitative data sources, this paper demonstrates that the use of authentic and relevant datasets based on peer-reviewed publications from the field of educational technology coupled with engaging practice assignments, designed for students to exercise the use of various statistical techniques using SPSS, was a successful strategy. Students found learning about analysis of covariance (ANCOVA) along with factorial and repeated measures analysis of variance (ANOVA) in the same module overwhelming. Results showed that students’ level of statistics anxiety significantly decreased in three key areas. The discussion provides interpretations of the findings as well as our lessons learned.

Introduction

Perceived as a difficult subject to study by many graduate students (Onwuegbuzie 2004), statistics courses continue to be a required component in many graduate programs in the social sciences (Thompson et al. 2019), increasing the demand for introductory and advanced statistics courses in both online and face-to-face formats (DeVaney 2010; Songsore and White 2018). Despite the growing interest in statistics courses to support learners’ development of quantitative skills and critical thinking to deal with professional demands and everyday challenges (Songsore and White 2018), there is little understanding of how the teaching and learning of statistics can be targeted to specific learner populations (e.g., graduate students in educational technology), especially in online learning environments.

Online statistics courses are not new to the academy (Mills and Raju 2011); however, with the increase in online courses and programs (Seaman et al. 2018), the necessity to share experiences about teaching online statistics courses to target populations becomes increasingly important. The target population in the present study is students in an online educational technology doctoral program offered at a large, public southeastern research university in the USA. While we know there is great variability across graduate programs in our field (Ku et al. 2012; West et al. 2017), those institutions offering online doctoral programs typically require coursework in introductory and advanced statistics to prepare graduates to conduct independent scholarship, solve problems using the scientific method, and draw inferences from various datasets to inform theory and practice (Ku et al. 2012).

Scholars in the field of educational technology regularly share expertise on the design of our graduate programs. For instance, Bolliger and Martindale (2001) discovered that instructor variables, course management, and technical issues were the main factors for student satisfaction in an online master’s program. Dixon et al. (2012) stated that proper course sequences, units, certificate options, and a high level of consistency of hardware and software programs are crucial for future programs in educational technology. Additionally, the field also shares experiences surrounding the design of educational technology doctoral programs (Dawson and Kumar 2014; Kumar and Ritzhaupt 2014; Kung and Logan 2014), even publishing a special issue on online doctoral programs in TechTrends (Dawson and Kumar 2014). Despite scholarly communication about online doctoral programs in our field, there is a scarcity of research regarding how to teach statistics to these online doctoral students.

To address this gap, this study describes the design, development, facilitation, and evaluation of an online statistics course. This course aimed at increasing the relevance and applications of statistical concepts as they relate to common practices in educational technology problems and research. Furthermore, this study explores how students’ attitudes toward statistics changed from the beginning to the end of the semester and whether these changes align with learners’ perceptions of the course design and learning materials. Framed as a design and development case (Richey and Klein 2014), this study draws upon both quantitative and qualitative data to systematically evaluate the first offering of an intermediate level statistics course designed specifically for educational technology doctoral students. The course was designed to be asynchronous; however, students could also participate in synchronous activities with the instructor and peers. For example, students could optionally participate in virtual office hours and rewatch these at a later time. This paper first provides an overview of the course design, topics, and learning activities. Next, we systematically evaluate the course in light of the design and draw inferences about elements that worked and did not work within the course. We conclude with some lessons learned and suggestions for others seeking to teach online statistics as part of their programs.

Course Design

As an overarching goal, the course instructor wanted the learners to gain thorough knowledge and skills in quantitative data analysis through instructional activities that were engaging and relevant to the learners’ field and practice. This would help learners best prepare to complete rigorous and relevant dissertations. This goal informed the various topics in the course, the way the instructor structured and organized this content in the course, and the way in which the instructional materials were delivered to be most beneficial to practicing online doctoral students in educational technology.

Course Content

In order to make this course as useful to the learners as possible, basic and more advanced concepts such as descriptive statistics and exploratory factor analysis, respectively, were included in the curriculum. The course was designed for a traditional 16-week academic semester. Table 1 provides the course modules, topics, and duration for the course. These topics were established based on reviewing the topics of face-to-face statistics courses offered at the same university, considering the researchers’ own knowledge of quantitative methods, and the constraints regarding delivering a course online during a 16-week semester. The customized curriculum, featuring 14 broad topics in nine modules, allowed learners to gain knowledge and skills in this single online course that would usually require multiple statistics courses to cover.

Field’s (2013) Discovering Statistics Using SPSS was chosen as the textbook used for this course. This textbook was recommended to the instructor designing the course by various statistics instructors and experts for a course of this nature. It was ultimately chosen because it had positive reviews (was considered thorough and engaging) and included a comprehensive coverage of quantitative data analysis methods topics selected for inclusion in the course. Not all quantitative methods textbooks cover the topics included in the curriculum, so Field’s textbook was attractive in that it was a single resource that learners could use for the entirety of the course.

Course Structure and Organization

Because this course was designed to be delivered entirely in the online environment, structure and organization were essential to support learners (Lee et al. 2012). Each module was designed to last 2 weeks (aside from Module 5 and Module 9, which only consisted of mid-term and final examinations). Each 2-week module included the following elements: a module overview page, short instructional videos (ranging from approximately 5 min to no more than 20 min each), real-world datasets and associated journal publications derived from these datasets, a practice assignment, a concept quiz, an open discussion forum for learners to ask questions, and a link to scheduled virtual office hour meetings. Module 1, Module 4, and Module 8 also included focused discussion prompts about relevant topics (e.g., instrument design) to further learners’ engagement with the topics. The course was organized within the Canvas™ learning management system. Below, each element is described in detail (for an overview of the elements, their functions, and their formats, see Table 2).

Module Overview Page

Module overview pages included various instructional elements and resources assembled into a coherent format. They included a brief textual introduction to what to expect from that module, serving as an advance organizer. This introduction included transitions from the previous module and what to expect in the current module. The module overview page also included learning outcomes, which notified learners about what they could expect to be able to do by the end of the current module. The module overview page also included all of the resources that learners needed for each module, which included the textbook reading assignments along with corresponding PowerPoint presentations, instructional videos, and datasets and associated journal articles. The reading assignments and PowerPoint presentations both came from the textbook used in the course (Field 2013). These readings and presentations were mostly conceptual in nature. Learners’ understanding of the concepts presented was assessed using the concept quizzes in the course. Finally, the module overview pages concluded with descriptions of the deliverables that were due at the end of that module.

Instructional Videos

The instructional videos were recorded by the instructor of the course (one of the researchers on the team) and consisted of practical applications of the concepts. These instructional videos consisted of the instructor analyzing data while recording his computer screen and explaining the concepts and techniques included in that module. The datasets found in the module overview page were those analyzed in the instructional videos, so learners were able to follow along during the demonstrations (Wang 2006). The sources of these datasets (studies in the field of educational technology) were always included as well. The instructional videos would first assess the assumptions of the statistical methods employed (e.g., normality), apply a statistical method to the dataset (e.g., ANOVA), interpret the output of the statistical program, and demonstrate how to report the results in APA format.

Real-World Datasets and Journal Articles

A goal of the course was to make the learning experiences relevant and authentic. Thus, the instructor used real-world educational technology datasets in published journal articles to demonstrate the concepts. The instructional videos would use one sample dataset to illustrate the concepts in a module, and the practice assignment would use a similar but different dataset for the students to practice the concepts using SPSS. At all times, the actual journal publications derived from these datasets were available as a reference to the students to check their work and to seek guidance if they were stuck on a practice assignment.

Practice Assignment

Practice assignments were used to assess learners’ ability to apply the concepts and skills in authentic, real-world situations. Each practice assignment included a dataset to download, a description of the dataset and its source, and detailed instructions for the data analysis. These datasets came from published educational technology studies, so they were relevant to the learners and aligned with the types of analyses and contexts that the learners may encounter in their own future research (Lombardi 2007). The practice assignments consisted of open-ended questions that learners answered in a Microsoft Word document (e.g., “Using SPSS, conduct a dependent sample t-test [e.g., Paired Samples t-test in SPSS] between the pre-test and post-test. Are the results statistically significant? Interpret the meaning of these results for readers. Place the interpretation and statistics under ‘Results’ following APA guidelines.”).

Concept Quizzes

The concept quizzes, on the other hand, assessed the learners’ grasp of the concepts discussed in the course. These quizzes consisted of 20 multiple-choice items that focused on the learners’ understanding of the textbook readings, PowerPoint presentations, and instructional videos (e.g., “Which of the following is a scenario in which you would use a paired-samples t-test?”). Quiz items and distractors were written following best practices of item writing for multiple selection item formats (Miller et al. 2013). Additionally, item-level feedback was provided for each question to provide both response correctness and an elaboration as feedback to the learners to assist in their mastery of the content.

Open Discussion Forum

Open discussion forums were simply a blank discussion forum to provide a space where the learners and the instructor could easily communicate. Often, this included learners asking and answering questions. Learners answered each other’s questions often, and the instructor also chimed in to provide answers within this discussion space. This discussion space was an important affordance of the course to support social and instructor presence in the online learning environment (Mandernach et al. 2006). From a theoretical standpoint, this space helped scaffold students’ learning experiences at motivational, affective, cognitive, and metacognitive levels (Reiser and Tabak 2014). Scaffolding is a pedagogical practice rooted in sociocultural perspectives of teaching and learning, where learning is considered a result of cognitive processes facilitated by social interaction (Vygotsky 1978). An important hallmark of the sociocultural perspectives is the theory of zone of proximal development (ZPD) proposed by Vygotsky (1978). According to the ZPD theory, knowledge is co-constructed and facilitated by social interactions. Thus, ZPD describes the stage between signs already internalized by the learner and those currently beyond his or her current level of independent problem-solving capabilities. From a practical standpoint, this space supported the expansion of students’ knowledge based on social interactions with more knowledgeable others, which is the foundational framework for scaffolding. Additionally, it reduced the number of times the instructor had to answer the same question since all of the students could access the discussion forum and see both the questions and answers.

Focused Discussion Prompt

The focused discussion prompts had learners engage in academic discussions about relevant topics in the course to foster learning (Andresen 2009). Learners were required to answer the prompt with an initial post and then respond to at least two other classmates’ posts. These prompts were designed to have learners think critically and creatively about aspects of the course. For example:

We have explored several important topics in these past few modules, including things like independent and dependent variables, between-subject conditions and repeated measures, hypothesis testing, and several statistical methods to examine these types of research problems. In this discussion, you are charged with proposing a small research design utilizing these concepts for an educational technology problem. Propose a study with clearly identified independent and dependent variables, and state the hypotheses for this study. Also, provide a short description of which statistical methods you would employ to test the hypothesis. Do not write a dissertation here. The goal is to show that you understand how these concepts can be operationalized in the educational technology context. Be sure to review two of your peers' ideas and provide some feedback on their research designs.

Focused discussion prompts such as these were only implemented in Module 1 (Introduction discussion), Module 4 (Research design discussion), and Module 8 (Instrument design discussion).

Link to Virtual Office Hours

Each module included a link to a scheduled video conferencing meeting for virtual office hours. This was a predetermined date and time at which the instructor made sure to be present for a virtual meeting to answer any questions that learners had. This was a space in which learners and the instructor could discuss questions and answers in more depth than in the open discussion forum, as the video conferencing technology afforded synchronous interactions and the ability to share screens (e.g., to show where problems were occurring or demonstrate a data analysis technique). The video conferencing software used in the course was Zoom™ (Zoom 2020). Notably, the students would generate a list of questions before each Zoom session to focus the discussion.

Mid-term and Final Examinations

Module 5 and Module 9 lasted only 1 week (as opposed to 2 weeks like the other modules) because they included the mid-term and final examinations, respectively. These were summative assessments designed to evaluate overall student learning performance for the first half and second half of the semester. In order to do this, a published educational technology article and corresponding dataset were provided, and learners were asked (in an open-ended manner) to provide a detailed data analysis plan and results section with interpretations of findings (e.g., “This final examination will require you to apply the concepts you have learned in the previous three modules of the course. You will be employing a combination of descriptive and inferential statistics to test complex hypotheses and explore relationships among these data. You are expected to use appropriate graphics and tables to describe your data. Further, you are responsible for evaluating the missingness of the dataset, and statistical assumptions of the methods you employ….You are expected to write a detailed data analysis plan to address the stated research goals by carefully evaluating the assumptions and outcomes of the statistical analyses employed. You are also expected to write a succinct and meaningfully organized results section using appropriate tables, figures, statistical details about the nature of these data in light of the research goals. Your interpretation of the statistical information will be equally important in assessing whether you met the learning outcomes for this final examination. Your full report should be no longer than 10 double-spaced pages following APA guidelines. You are allowed to include an Appendix in addition to the 10 double-spaced pages.”). These examinations required that learners connect and apply the various concepts and skills learned throughout the course.

Course Context and Facilitation

As this course was designed for online doctoral students in educational technology, instruction, activities, and examples were designed to be delivered through an educational technology lens. In order to achieve this goal, all datasets used in instructional videos, practice assignments, and mid-term and final examinations came from peer-reviewed studies in educational technology. With each dataset, the source (the peer-reviewed paper) was attached to provide more information to the learners. Content within the instructional videos was also tailored to the educational technology context, with examples, language, and ideas relevant to educational technology framing the presentation and approach. This created instruction that was contextually meaningful, relevant, and authentic to the learners, which can foster more engagement and be beneficial instructional events (Herrington et al. 2004).

During the 16-week academic semester, the course instructor exercised a strict communication and interaction policy with students to grade practice assignments within 48 h of the due date and to grade the mid-term and final examinations within 1 week of the delivery due date. The instructor also placed emphasis on providing timely responses to student emails, messages, and discussion posts in Canvas—typically, within a 24-h period. To ensure consistency in grading and evaluation, rubrics were employed and connected to the student learning outcomes in the course for each module. The instructor used the virtual office hours and discussions as a way to enhance both teaching and social presence within the course. Facilitation of the course was clearly outlined on the course syllabus to establish the ground rules of the course.

Research Questions

Based on the development of educational technology as a prominent area of practice and research (Dawson and Kumar 2014; Kumar and Ritzhaupt 2014; Kung and Logan 2014), this study contributes to the field by exploring how the design, development, and implementation of a customized curriculum may influence learners’ evaluation of the learning tools, overall learning experience, and levels of statistics anxiety in an intermediate online statistics course. Understanding how design and instructional elements influence learners’ experiences within the domains of statistics and educational technology in an online learning environment is particularly important because (a) statistics courses are required by most graduate programs in social sciences (Thompson et al. 2019); (b) as many as 80% of graduate students have been found to experience anxiety related to statistics (Onwuegbuzie 2004); (c) the design and instructional elements employed in the course at hand, particularly the use of authentic datasets and contextualized tasks, have been shown to be beneficial to students in various ways (Grimshaw 2015; Herrington et al. 2004); and (d) there is limited understanding of how statistics courses can be implemented in the context of educational technology programs to prepare future educational technologists to think critically as they learn about and apply statistical analyses to address authentic problems of practice and research (Herrington et al. 2004). To address these issues, the present study was informed by the following research questions:

1. How do learners evaluate the modules, learning resources, and topics covered in the course design and format?

2. How do learners describe their perceptions of the course design, learning materials, and learning activities as well as their overall learning experience in the course?

3. Do learners’ levels of statistics anxiety change over the semester in this course?

Evaluation Method

Participants

Within the online statistics course, 20 out of the 23 graduate students participated in this study. The largest group of participants identified as Caucasian (45%), and most self-identified as female (70%). Participants’ age ranged between 28 and 62 (M = 42.32, SD = 9.04). In response to a “Select all that apply” question about the reasons for enrolling in the course, most students selected the options “Is required for major (program)” (80%), “Fulfills course requirement” (65%), “Will be useful to me in other courses” (55%), and “Will help improve my academic skills” (55%). The option “Is required of all students at the department” was selected by 45% of the students (for full results of the reasons that participants were enrolled in the online statistics course, see Table 3).

Data Collection and Instruments

Following the IRB-approved research protocol, we collected data on students’ demographics, levels of statistics anxiety, and perceptions of the course in three instances using pre, mid-term, and post-surveys in Qualtrics™. To make it easier for students to remember the specifics of each module, Modules 1 to 4 were evaluated in the mid-term survey, and Modules 6 to 8 were evaluated in the post-survey. Below, the nature of the surveys’ prompts is discussed.

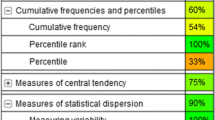

Modules’ Need for Improvement

Students were asked to rate the modules based on their need for improvement. A Likert-type scale from 1 “Doesn’t need improvement” to 5 “Needs significant improvement” was used to collect this feedback. The survey followed a polar point format starting at 1 to guide learners’ interpretation of the scale points (Toepoel and Dillman 2011). If 60% or more of the students indicated that a given module needed improvement, the module was considered to have an “unacceptable” quality level.

Overwhelming Modules

Using an “all that apply” item, students were asked to select which modules they perceived as overwhelming based on the number of complex concepts these modules covered in a 2-week period. If a given module was selected by 60% or more of the students, it was considered unnecessarily difficult, implying that further revisions in terms of content presentation and organization are warranted.

Resources and Their Facilitation of Learning

Using a polar point format scale starting at 1, students were asked to rate the various course resources based on how well they facilitated learning in the course using a Likert-type scale item from 1 “Does not facilitate learning” to 5 “Facilitates learning.” If 60% or more of the students indicated that a given resource facilitated their learning, the resource was considered a useful learning tool.

Challenging Topics

Using a drag-and-drop function in Qualtrics™, students ranked specific topics (statistical concepts, statistical equations, and using SPSS) according to how challenging they perceived them to be. A rank of one was used for the most challenging topic and a rank of four for the least challenging topic. The option “Other” was also available to give students a chance to discuss topics that were not listed. If 60% or more of the students indicated that a given topic was the first or second most challenging topic, the topic was considered unnecessarily challenging, implying that further revisions in terms of content presentation are warranted.
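
The 60% decision rule used for this item (and, in the same form, for the other survey items above) can be illustrated with a minimal Python sketch. It is not the authors' code: the function names and the example ranks are hypothetical and serve only to show the arithmetic of the rule.

```python
from typing import Callable, Sequence

THRESHOLD = 0.60  # the 60% cut-off stated in the text


def share_meeting(responses: Sequence[int], condition: Callable[[int], bool]) -> float:
    """Return the proportion of responses for which `condition` holds."""
    if not responses:
        raise ValueError("at least one response is required")
    return sum(1 for r in responses if condition(r)) / len(responses)


def is_flagged(responses: Sequence[int], condition: Callable[[int], bool],
               threshold: float = THRESHOLD) -> bool:
    """Apply the rule: flag the item when the share reaches the threshold."""
    return share_meeting(responses, condition) >= threshold


# Hypothetical ranks given to one topic by 10 students
# (1 = most challenging, 4 = least challenging). Not data from the study.
example_ranks = [1, 2, 1, 3, 2, 4, 1, 2, 3, 2]

# Share of students who ranked the topic first or second most challenging.
example_share = share_meeting(example_ranks, lambda rank: rank <= 2)
example_flag = is_flagged(example_ranks, lambda rank: rank <= 2)
```

Passing a different condition (for instance, a cut-off on a 1 to 5 rating) would apply the same rule to the Likert-type items; which scale points count toward the 60% is not specified here and would have to be chosen by the analyst.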

Topics Covered

Using an “All that apply” item, students provided feedback on other statistical topics that they would like to see covered in the course. The list included “experimental designs,” “logistic regression,” “nonparametric tests,” and “confirmatory factor analysis.” The options “None” and “Other” were also available for those who thought no other topics should be included or to give students a chance to suggest topics that were not listed, respectively.

Open-Ended Questions

Additionally, open-ended items such as “Please explain/suggest possible areas of improvement for Module 8. Multivariate Analysis of Variance (MANOVA)” and “Please provide any additional comments about your experience in this statistics course” were used to understand learners’ perceptions regarding specific modules, resources, and their overall learning experience in the course.

Statistical Anxiety Rating Scale

The Statistical Anxiety Rating Scale (STARS) instrument (Cruise and Wilkins 1980) was used to collect students’ level of statistics anxiety at the beginning and at the end of the semester. The STARS instrument was selected for this study because it addresses several constructs related to statistics anxiety that are pertinent to the context of statistics courses. More specifically, it addresses class/test anxiety, interpretation anxiety (anxiety felt when interpreting or making decisions based on statistical results), anxiety in asking for help, computation anxiety (negative attitudes toward mathematics), worth of statistics anxiety (negative attitudes toward statistics), and fear of statistics teachers (negative attitudes toward statistics instructors). It is worth noting that STARS has been used in several studies, with different types of learners, and in different contexts (DeVaney 2016; Hanna et al. 2008; Papousek et al. 2012). For the present study, the scores also suggest good internal consistency (Table 4).

Despite the lower Cronbach’s alpha coefficient related to “Fear of asking for help” in the post-survey (α = 0.59), most of the constructs had high Cronbach’s alpha (α) coefficients (> 0.80) corroborating other studies in which confirmatory factor analyses provided further evidence to support the instrument’s original six-factor structure as well as its construct validity and internal consistency reliability (DeVaney 2016; Papousek et al. 2012).
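
For readers less familiar with the coefficient, Cronbach's alpha for a construct with k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of respondents' total scores. The Python sketch below illustrates that standard formula; it is not the authors' code (their analyses were run in R), and the function name and example scores are hypothetical.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha, where items[i][j] is respondent j's score on item i."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per respondent")
    # Total score of each respondent across all items of the construct.
    totals = [sum(item[j] for item in items) for j in range(n)]
    item_variance_sum = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical scores: three items answered by five respondents (not study data).
example_items = [
    [4, 3, 5, 2, 4],
    [5, 3, 4, 2, 4],
    [4, 2, 5, 3, 3],
]
example_alpha = cronbach_alpha(example_items)
```

Items that move together across respondents push alpha toward 1, which is why a construct with few or weakly related items can yield a lower coefficient.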

Data Analysis

Quantitative

The researchers used R© (3.5.2) to organize the data, build graphs, calculate descriptive statistics, and run statistical analyses. Paired-samples t-tests were used to compare learners’ pre and post statistics anxiety scores, and the Shapiro-Wilk test of normality was used to assess the distribution of the data. We then used the paired-samples Wilcoxon test (also known as the Wilcoxon signed-rank test) to account for non-normal distributions among the STARS constructs.
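
To make the two paired comparisons concrete, the following Python sketch computes the paired-samples t statistic and the Wilcoxon signed-rank sums for a pair of score lists. It is an illustration only: the authors ran their analyses in R, the function names and scores below are hypothetical, and p-values and the Shapiro-Wilk test are left out because they require a statistics package.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t_statistic(pre: Sequence[float], post: Sequence[float]) -> float:
    """t statistic of the post-minus-pre differences (df = n - 1)."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [b - a for a, b in zip(pre, post)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


def wilcoxon_signed_rank(pre: Sequence[float], post: Sequence[float]) -> Tuple[float, float]:
    """Return (W_plus, W_minus): rank sums of positive and negative differences."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    # Zero differences carry no sign information and are dropped.
    diffs = sorted((b - a for a, b in zip(pre, post) if b != a), key=abs)
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(diffs):
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < len(diffs) and abs(diffs[j + 1]) == abs(diffs[i]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for position in range(i, j + 1):
            ranks[position] = average_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return w_plus, w_minus


# Hypothetical pre and post anxiety scores for six students (not study data).
example_pre = [30, 28, 35, 40, 33, 29]
example_post = [25, 27, 30, 41, 28, 29]

example_t = paired_t_statistic(example_pre, example_post)
example_w_plus, example_w_minus = wilcoxon_signed_rank(example_pre, example_post)
```

The t statistic depends on the size of each difference, whereas the signed-rank sums depend only on their order and sign, which is why the rank-based test is the usual fallback when the differences are not normally distributed.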

Qualitative

For the qualitative data analysis, we used the constant comparative method framework, which involves four iterative processes: (1) comparing incidents applicable to each category, (2) integrating categories and their properties, (3) delimiting the theory, and (4) writing the theory (Glaser 1965). Two of the authors coded the data separately following the processes above where each incident in the data (e.g., student reports dissatisfaction with the textbook) was compared to previously identified codes until the theoretical properties of the category were found (first stage). After continuous comparisons of instances, codes, and categories (second stage), the categories themselves were compared and integrated to illuminate patterns in the data (e.g., 72 incidents related to instructor or instructional approaches). Then, the categories were modified and combined based on their most relevant properties (third stage) to support logical clarity and address theoretical saturation. Finally, with the major themes identified, the writing was conducted based on codes, memos, and theory (fourth stage).

Results

Research Question One

As a reminder, our first research question was “How do learners evaluate the modules, learning resources, and topics covered in the course?”. To address this question, we used five survey items concerning areas for improvement, perceived difficulty level of the modules and topics, course resources, and suggestions for additional topics.

Modules’ Need for Improvement

According to students’ ratings, most modules did not need improvements or only needed minor improvements (Fig. 1). As a reminder, the Likert-type scale used to collect this feedback ranged from 1 “Doesn’t need improvement” to 5 “Needs significant improvement.” For Module 1 (Descriptive and Inferential Statistics), Module 2 (Exploring Data with Graphs), and Module 7 (Exploratory Factor Analysis and Reliability), 75% of the students were satisfied with the modules and their contents. A similar trend was seen for Module 6 (Correlation and Linear Regression Models) and Module 8 (Multivariate Analysis of Variance), for which 80% of the students were satisfied. However, for Module 3 (Comparing Means and Analysis of Variance) and Module 4 (Analysis of Covariance, and Factorial and Repeated Measures ANOVA), the evaluation was in the opposite direction, with 40% and 50% of the students, respectively, indicating that these modules needed more substantial improvements.

Overwhelming Modules

Aligned with the results above, most students (85%) in the mid-term survey selected Module 3 (Comparing Means and Analysis of Variance) and/or Module 4 (Analysis of Covariance, and Factorial and Repeated Measures ANOVA) as overwhelming in terms of the number of complex concepts to be covered in a 2-week period (Fig. 2). Importantly, no students selected Module 1 (Descriptive and Inferential Statistics). As for Modules 6 to 8, the majority of students (75%) selected the option “None,” and no students selected Module 8 (Multivariate Analysis of Variance). These results indicate that the contents related to “Descriptive and Inferential Statistics” and “Multivariate Analysis of Variance” covered in Module 1 and Module 8 were more manageable to students than the content related to “Comparing Means and Analysis of Variance” and “Analysis of Covariance, and Factorial and Repeated Measures ANOVA” covered in Module 3 and Module 4, which may require further revisions to split the content into smaller instructional units.

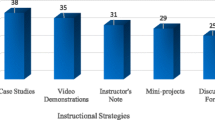

Resources and their Facilitation of Learning

When asked about which course resources facilitated their learning, a large percentage (45%) of students indicated that the textbook did not facilitate their learning (Table 5). On the other hand, the instructional videos (85%), sample datasets (60%), and practice assignments (80%) were selected by the students as the resources that most facilitated their learning in the course. These results suggest that while students were generally satisfied with several of the resources in the course, the textbook was not as helpful to students as the instructor expected.

Challenging Topics

Students found that statistics concepts (85%) and statistical equations (85%) were the first or second most challenging topics in the course (Table 6). The two students (10%) who selected the option “Other” as the most challenging mentioned that “reading the text. It’s full of junk that is distracting” and “Putting everything together” were the most challenging aspects in the course. Only one student who selected “Other” mentioned the “interpretation of data” as a somewhat challenging topic. Eighty-five percent of students selected the option “Other” for what they thought was the least challenging topic in the course. These students either did not offer details about their selection or mentioned “textbook,” “Understanding assumptions and what those meant,” and “Select the most appropriate statistic model for a real problem” as the least challenging topics in the course.

Topics Covered

Finally, when asked what other statistics-related topics they would like to see covered in the course, 35% of the students selected “Experimental designs,” and 15% selected both “Experimental designs” and “Confirmatory Factor Analysis.” The option “None” was selected by 20% of the students. These results suggest that the inclusion of topics such as experimental designs would be welcomed by the students. A more in-depth understanding of learners’ perceptions regarding the course, its content, design, and learning materials was addressed in the qualitative data analysis described in “Research Question Two.”

Research Question Two

The constant comparative method (Glaser 1965) and its iterative process of data analysis were applied to answer “Research Question Two,” which asked, “How do learners describe their perceptions of course design, learning materials, and learning activities as well as their overall learning experience in the course?” This analysis culminated in three categories (design, materials, and facilitation) and five related properties (organization/structure, content, assessments, resources, and instructor/instruction) that made up these categories. This framework helped illustrate the similar and diverse components identified through the constant comparative method, in which insights about various aspects of the course were found within and across responses from individuals. The categories and properties that emerged from the data are displayed in Table 7, along with descriptions, examples, and frequencies of responses that included them. These themes are further elaborated in the following section.

Design

One category that emerged from the data was course design. Participant responses that were categorized as design were those regarding design decisions that were established prior to the course’s launch. Analysis resulted in two main properties regarding design: course organization and structure and course content.

Organization and Structure

Responses that were coded as regarding organization and structure touched on the course layout. The structure and duration of the course as a 16-week course design, including the 2-week modules, was a recurrent topic in the data. Students discussed how setting up the course in this manner impacted them in terms of completing the coursework:

Thank goodness this is a 16-week course! I felt that the two-week modules were doable, but they were very time-consuming.

I do like having 2 weeks to work on each assignment. That organization part works very well. You don't feel rushed but it also allows more time for analysis and hoping you didn't miss anything.

Corroborating the evaluation results regarding Modules 3 and 4, which were perceived as overwhelming, participants described how the organization of the course made study time challenging given the number of topics covered:

I think that there is too much information over too many weeks. I think smaller chunks of information per week may be worth trying. For module 3 it was still manageable, but Module 4, it was not.

The amount of time to navigate the videos from the textbook, the actual textbook, the online resources, the Power Points, as well as the instructor's videos, articles, assignments, quizzes, and online sessions and discussions is difficult for most working professionals.

Another aspect of the course organization that emerged from the data was the timing set for certain assessments. There were multiple mentions of how having timed quizzes and 1-week mid-terms and finals impacted students in terms of stress and anxiety:

It was hard to ascertain what was salient and anticipate what's on the quiz. 20 minutes didn't feel long enough.

I am a little nervous about finishing the midterm exam in one week.

Content

Responses related to the content included those mentioning the nature of the subject matter that made up the course. One topic that emerged from the data was the idea that there was not enough emphasis on making sure learners had sufficient prior knowledge when entering the course. Several participants mentioned that they could have used a “refresher” or that the course “assumed some baseline knowledge that I didn’t have”:

I could have used a mini-lecture(s) on the basics of descriptive and inferential statistics to help level set given the students' various statistics and math backgrounds (and/or lack of). Perhaps a pretest or an assessment that we all can take could guide the content of this mini-lecture(s).

Include a review of the concepts we covered in the first [previous] course this summer, do comprehension checks, needs analysis, or pre-test to determine what we already know and where the gaps may be.

Another topic that emerged was the manner in which the course content included authentic, real-world tasks relevant to the learners (e.g., conducting research, writing and publishing peer-reviewed articles). Learners discussed how they enjoyed different authentic aspects of the design of the course, such as the preparation for learners “to submit results sections” of academic papers and working “with real (or mostly real) datasets and access(ing) the research article” from which those datasets came. Suggestions to make the content even more applicable to practice were described in students’ feedback:

The course is good. I really like that you set it up as an applied course: Use SPSS to conduct an analysis, use Excel to refine graphs, and write it up in APA format.

[…] the connection/application to research was appropriate. I might reference more articles where these are clearly highlighted to make a clearer connection what that looks like in practice.

Many participants mentioned the content would describe what they were supposed to do, but did not include reasons behind it. As one learner stated, “I feel like I’m ascertaining the what, but not the why”:

Good module but, in each module it may be beneficial to explain a little more about why some options are not used in the analysis.

I also have difficulty understanding how to interpret the statistics. I can use the software all day, but I'm not sure why I'm doing what I'm doing at this point. It is slowly coming together but it is so very difficult.

This course was designed to cover 14 topics within a semester. This ambitious approach may have contributed to students’ feedback regarding the need for greater connections between the content and other components of the course:

For this course, I wonder if covering less, but going deeper with those key concepts would be possible/fruitful? I almost would rather make connections between the module concepts and an actual article that uses the statistics topic we're concerned with, go over the tutorial/practice running the test in SPSS, then apply what was learned to a write-up.

For each module it would help if the instructor used one hour of the class meetings as a high level review of the big concepts in each module, to help connect the concepts with the theory. For example, a clearer example of exactly why you would use certain analysis.

Materials

Another category that emerged from the data was materials. Participant responses that fell under the materials category expressed perceptions of assessments and resources in relation to the course and participants’ learning.

Resources

While many learners acknowledged that the video tutorials and the connections of the learning resources with applications to research were helpful, most learners criticized the textbook used in the course stating that it presented distracting information and jokes:

The videos were great, but the textbook chapters were overwhelming with extraneous personal information about the author that I did not want to know. I just wanted the nuts and bolts. The quiz was especially stressful because it was timed (I ran out of time) and I had no idea what to expect (thank you for changing the time constraints on the future quizzes).

The feedback above was echoed by another student who criticized the textbook but also emphasized the value of the video tutorials and the benefit of collaboration among learners who created questions to make the synchronous online meetings carried out by the instructor more productive:

The instructor's videos are amazing - I could not get through the course without them. The online sessions are great as well and made better because one cohort member starts a list of questions for the professor. Honestly, I'm amazed that I've made it this far! However, I'm just trying to take it one day at a time, devote as much time as possible to the assignments and reading, etc. to get through. The textbook is crazy, yet online it is recommended by several professors - the only thing I can imagine is they like the "extras." We are overrun with too much nonsense and pulling out what is important is just too time consuming. There must be a better text or someone needs to write one.

Assessments

Positive and negative feedback about quizzes, assignments, and exams were also frequent codes in the data. For example, a student said “I think the assignments are 100% on par with getting us ready to submit results sections,” which highlights the perceived alignment between the learning material and the practical use of statistics. Another student provided a different angle when discussing alignment while maintaining a positive tone about the “hands-on” nature of the activities in the course:

I feel the PowerPoint slides, practice assignment and quiz for each module could be better aligned. Right now, they appeared to be isolated and unrelated segments.

Love the practice assignments, mid-term and final exam. These hands-on activities really helped me to connect the dots and apply what I learned to practice.

While assessment items such as quizzes and the mid-term exam were described as “too tricky” for beginners or “really anxiety producing,” respectively, the learning materials and other resources in the course were perceived as “massively helpful,” contributing to positive affective outcomes:

The how to videos are amazing and have contributed the most to my learning. Completing the write ups gives me a massive sense of accomplishment that I enjoy, even if they're challenging at times.

A narrated Powerpoint by [the instructor] to the textbook slides would be a great addition, given how massively helpful the live Q&As are. […]Overall, I'm understanding more than I thought I would, but wonder how much better I'd be if there were better learning materials.

Facilitation

The final category that emerged from the qualitative data was facilitation. Participant responses regarding facilitation typically touched on instructor guidance (or lack thereof) and instructional strategies to support learning outcomes. Instructional strategies mentioned included those already used by the instructor or suggestions from learners. For example, feedback about the implementation of reviews as an instructional strategy to support learners at the start of the course was mentioned as a way “to provide a few more core references on basic statistics as a refresher for participants who may need it.”

Common suggestions by the students included the review of specific topics (e.g., statistical assumptions), “an overview of the module with videos summarizing concepts in each chapter from the readings and how they fit with one another,” and the implementation of reviews during the office hours to clarify concepts and analysis:

For each module it would help if the instructor used one hour of the class meetings as a high level review of the big concepts in each module, to help connect the concepts with the theory. For example, a clearer example of exactly why you would use certain analysis.

To improve scaffolding (Reiser and Tabak 2014), reviews were also proposed for modules and concepts perceived as more challenging:

This [module 3] is where the course becomes more difficult. Providing a videotaped lecture would be helpful to provide clarity on more difficult concepts. I feel as though I'm having to teach myself. For people who are not comfortable with stats or mathematics in general, more instructor scaffolding is needed.

After reflecting on the difficulty of Module 4, the following student suggested that more guidance about models and related outputs was necessary to support students’ understanding of the similarities and differences between them:

As you said, this was the most difficult module [4]. Some of the models we ran in this module had similar output to the models (t-tests) we ran in the previous modules, so I think more emphasis needs to be made on which models we can run in which situation and why. I think I have a tendency to run all the models just to be safe, which is really redundant and not necessary.

The same student reported that the overview of all modules in an online meeting was a helpful resource “that really helped clarify things” for her.

Taken together, these findings align with students’ evaluation of the learning materials, modules, and resources addressed in “Research Question One”. Furthermore, they contribute to a deeper understanding of how design choices and instructional strategies influenced students’ perceptions and overall learning experience in the course. It is worth noting that although anxiety was present in students’ feedback, it was not clear whether and how students’ level of anxiety changed throughout the semester. Thus, “Research Question Three,” presented below, was helpful to uncover how the different facets of statistics may have influenced learners’ affective outcomes.

Research Question Three

To address this question (Do learners’ levels of statistics anxiety change over the semester in this course?), we used the pre and post data from the STARS to document changes in the students’ level of anxiety before and after the course experience. Results from the paired samples t tests showed that students’ statistics anxiety decreased over the semester for three of the statistics anxiety constructs, namely, “test and class,” “interpretation,” and “computation anxiety.” More specifically, we found a statistically significant difference (t(19) = 2.60, p = .018) between students’ pre and post anxiety scores related to test and class anxiety (pre: M = 3.21, SD = 1.03; post: M = 2.75, SD = 0.87), which indicates that students’ perceived anxiety related to statistical tests and statistics classes decreased from the beginning to the end of the semester. Similarly, there was a statistically significant difference (t(19) = 3.44, p = .003) between students’ pre and post anxiety scores related to interpretation anxiety (pre: M = 2.55, SD = 0.71; post: M = 2.07, SD = 0.60). This suggests that students’ perceived anxiety related to interpreting statistical results, or to making decisions based on statistical results, decreased over the semester.

Finally, there was a statistically significant difference (t(19) = 2.43, p = .025) between students’ pre and post computation anxiety scores (pre: M = 1.94, SD = 0.99; post: M = 1.69, SD = 0.76), which indicates that as the semester progressed, there was a reduction in students’ negative attitudes toward mathematics. Because computation anxiety did not meet the assumption of normality, we also ran the Wilcoxon signed-rank test, which showed a statistically significant result (p = .012) similar to our previous result. In other words, the median score at the beginning of the semester was higher (Mdn = 1.50) than at the end of the semester (Mdn = 1.43). Thus, albeit not robust, this difference was consistent and statistically significant for both the parametric and nonparametric tests. We found no statistically significant differences between pre and post scores for the “ask help,” “worth of statistics,” and “fear of statistics teachers” constructs (Fig. 3).
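To make the two procedures reported above concrete, the following is a minimal sketch of a paired samples t test and a Wilcoxon signed-rank test written with the Python standard library only. It is not the procedure used in the study, where the analyses were run in SPSS. The function names and the small pre/post arrays are hypothetical and serve only as an illustration. The Wilcoxon p value here relies on a plain normal approximation without tie or continuity corrections, so it may differ from what a statistics package reports.

```python
import math
from statistics import NormalDist, mean, stdev


def paired_t(pre, post):
    """Return (t, df) for a paired samples t test on the pre - post differences."""
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean(diffs) / se, n - 1


def wilcoxon_signed_rank(pre, post):
    """Return (w_plus, w_minus, p) using a normal approximation (assumption:
    no tie or continuity correction is applied)."""
    # Zero differences carry no sign information and are dropped.
    diffs = sorted((a - b for a, b in zip(pre, post) if a != b), key=abs)
    n = len(diffs)
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < n and abs(diffs[j + 1]) == abs(diffs[i]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mu) / sigma
    p = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided approximate p value
    return w_plus, w_minus, p


# Hypothetical anxiety ratings for six students (not data from the study).
pre_scores = [3, 4, 2, 5, 4, 3]
post_scores = [2, 4, 1, 3, 3, 3]

t_stat, df = paired_t(pre_scores, post_scores)
w_plus, w_minus, p_approx = wilcoxon_signed_rank(pre_scores, post_scores)
print(f"t({df}) = {t_stat:.2f}")
print(f"W+ = {w_plus}, W- = {w_minus}, approximate p = {p_approx:.3f}")
```

A positive t value under this sign convention (pre minus post) corresponds to lower scores at the end of the semester, which matches the direction of the results reported above. With samples as small as the illustrative one, an exact Wilcoxon p value would be preferable to the normal approximation.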

Discussion and Lessons Learned

As more academic programs make the ambitious transition to online learning, we must ensure there is useful and frequent dialog in our communities about these teaching experiences to share ideas, lessons learned, and caveats in order to help other educators and improve our academic programs. This study provided one such example for the educational technology community about how to teach relevant and authentic online statistics to an increasingly important target population: doctoral students in an online educational technology degree program. This paper has provided a brief overview of the course design and development and evaluation results from the first offering of the course. While we believe that the results from this study are transferable to similar situations and contexts, we strongly advise against attempting to generalize the findings from this study to other academic programs or students. First, the sample in this study represents a single online course at a single point in time. Further, all of the students enrolled in this online doctoral program are employed full-time in educational technology professional positions and eventually have to defend a dissertation in their professional context as an independent piece of scholarship. These circumstances may differ from those of a statistics course taught in political science or for an undergraduate audience. In light of this caution, we believe there are many important findings from this systematic evaluation worth discussing and several lessons learned worth noting.

We believe an important feature of the course is the focus on providing the doctoral students relevant and authentic learning experiences designed to prepare them for independent scholarship. This was achieved with a customized curriculum that contextualized the quantitative methods commonly employed to address educational technology research problems. A common criticism of traditional quantitative method courses taught across our academic institutions is that the methods learned are often based on contrived datasets that meet all statistical assumptions and are devoid of any relevant context for the students. Often, examples are provided from different fields or the datasets used for practice are not “messy” like real-world datasets. To avoid this potential problem, a number of course design features were employed to create both relevant and authentic learning experiences. Early in the design of the course, the team decided to employ real-world datasets from the field, based on peer-reviewed publications, that students could explore and reference to check their work or compare results. These instructional resources were carefully sequenced in learning activities that would ensure initial learning, near transfer of learning, and far transfer of learning.

It is notable that each module in the course presented two different datasets for the students. The first dataset was the “initial” learning dataset, which the instructor-created instructional videos referenced and used as a worked example to demonstrate how to conduct the analysis and interpret the results. Students were encouraged to download this dataset and follow along in the instructional videos for practice. The second dataset in each module was carefully aligned with the practice assignment, in which the students would demonstrate what they had learned from the instructional videos on a different dataset using the same statistical techniques. The practice assignments in each module served as a form of “near” transfer of learning in which the students used what they had learned in a similar but different situation. Finally, in Module 5 (mid-term examination) and Module 9 (final examination), students were expected to demonstrate “far” transfer of learning by fully analyzing yet another dataset with only a research goal and associated publication available. In this situation, the students were expected to carefully select a statistical analysis technique to properly analyze the dataset based on what they had learned up to that point in the course. This carefully sequenced set of learning experiences and materials appeared to “work” since students rated these activities as helpful in facilitating their learning.

While several of the course modules were designated as “acceptable” for the students and as not requiring substantive revisions, one module in particular stood out from our systematic evaluation: Module 4: Analysis of Covariance, and Factorial and Repeated Measures ANOVA. By this point in the course, students had studied both t tests and one-way ANOVA using the real-world datasets and practice assignments. Module 4 required the students to examine the ANCOVA model and both factorial and repeated measures ANOVA. This design may have been too ambitious in hindsight, and several of the students struggled with some of the research design aspects of these statistical techniques. For example, students had to clearly master both between-subject and within-subject (a.k.a., repeated measure) variables and designs to fully understand why a specific statistical technique was selected for an analysis. In fact, the mid-term examination required the students to employ a mixed design involving both between-subject and within-subject variables in the same model. The presentation of all of these concepts concurrently appeared to confuse students about which data analysis technique was most appropriate for the research problem. As a lesson learned, we plan to split this module into two modules to better “chunk” the contents and to not overwhelm the students on these difficult conceptual ideas and techniques.

One finding that emerged from our qualitative data was that a “review” of the prerequisite materials, as either a required or optional learning experience within the course design, would be beneficial to learners. While this specific course is taken as a second-level statistics course in their academic program, the first course focused more on general research design ideas like objectivity, validity, and reliability and less on the appropriate statistical methods for a given research problem. As evidenced by the variability in anxiety levels in the pre-survey administration, students differed considerably from one another when they entered the course. As a possible solution to this problem, the design team has decided to take two actions. First, the prerequisite course will be adjusted to include more statistical analysis techniques aligned to specific research designs (e.g., experimental) and will employ new course features similar to those adopted in this course. Second, this course will provide an optional review module and encourage students to complete this module prior to engaging in the required modules to better prepare for the learning sequence and contents. As we learned, we cannot assume that all students will enter this course with the same levels of prerequisite knowledge.

Another aspect of the course design that took us by surprise was the overall negative response to the selected textbook in the course, which was a common theme in both the quantitative and qualitative analyses. While Field’s (2013) Discovering Statistics Using SPSS textbook is widely used in the academy both as a required textbook in quantitative method courses and as a resource for doctoral students during their dissertation, the students found that his use of inappropriate jokes and superfluous content (meant to make the topic of statistics more engaging to learners) distracted them from the important content. One of the primary reasons this textbook was adopted is that it is one of the few textbooks available that cover the statistical techniques and their assumptions using SPSS. Other textbooks cover similar contents, but do not address some of the statistical analysis techniques (e.g., several textbooks did not have chapters on Exploratory Factor Analysis) identified as essential by the instructor and design team. Plans are already in the works to find an alternative to this textbook before the course is offered again. While this is proving more difficult than it sounds, the results from our systematic evaluation provide conclusive evidence that the textbook was a problem and hindered the student learning experience.

A few smaller problems were identified in the facilitation of the course and were adjusted as the semester progressed based on student feedback and discussion. Two examples are the use of time limits on the concept quizzes and the lack of a clear agenda for the virtual office hours facilitated in each module of the course. First, the use of time limits on the concept quizzes was elected as a mechanism to ensure that the students had completed the assigned readings before attempting the quiz. That is, we were trying to avoid students completing the quiz without having studied first and to prevent the behavior of simply “looking up” answers. However, after the first timed quiz in Module 1, it became clear that students needed more time and that the time limit was causing unnecessary anxiety among the students. Students actively raised this concern during the virtual office hours in Module 2, and several students confirmed that this experience was widely shared. Thus, the team decided to remove the time limit on the subsequent concept quizzes to provide a necessary accommodation. Another problem was that the first virtual office hour session was not structured, which resulted in an inefficient use of time. The instructor assumed students would all come to the virtual office hours session with questions available. One student suggested that all students compile a list of questions each module prior to the virtual office hours and that the session be spent answering these questions, which appeared to focus the time and agenda. While attendance at virtual office hours was optional (sessions were recorded), attendance in each module was very high. Both of these small problems represented inaccurate assumptions by the design team but are also examples of how small problems can be addressed in facilitation of the actual course.

The results from the pre and post administration of the STARS provided the design team and instructor encouraging outcomes for the first offering of the course. As a reminder, three of the sub-constructs of the STARS resulted in statistically significant decreases in statistics anxiety among the students from the pre-survey to the post-survey: “test and class anxiety,” “interpretation anxiety,” and “computation anxiety.” The test and class anxiety subscale assesses anxiety related to students taking statistics courses and completing the examinations in such courses. The interpretation anxiety sub-construct is the anxiety a student experiences when attempting to interpret statistical results. Finally, the computation anxiety sub-construct has to do with the anxiety a student experiences when solving mathematical equations and problems associated with the calculation of statistics. Importantly, students’ feedback about equations and statistical concepts suggests that further scaffolding of the content (Reiser and Tabak 2014; Vygotsky 1978) can be used for these topics. While no comparison group was used as a control, these preliminary findings suggest that the approach taken with the course design and facilitation may have reduced students’ statistics anxiety, which can have a long-lasting effect on the students choosing to use quantitative methods in their dissertations and their future professional careers. While the other three subscales (teacher anxiety, worth anxiety, and ask for help anxiety) did not result in statistically significant differences, none of the sub-constructs appeared to move in the opposite direction, toward increased statistics anxiety. These findings are important as many of the students may need to employ quantitative methods to answer their research questions in their dissertations.

Prior to starting this project, we intentionally scanned the literature in the broad field of educational technology for sample publications addressing how to design and teach an online statistics course to doctoral students. However, we were unable to pinpoint papers focused on this topic, which was an impetus for the present study. In this paper, we have documented an approach to teaching an intermediate statistics course to online doctoral students in the field of educational technology. Using both quantitative and qualitative data, we systematically evaluated this course based on the response from students on its first offering. As outlined in this discussion, we have learned a great deal from this experience and have made substantive plans to revise the course in light of this feedback prior to the next offering. Our hope in sharing this experience with the broader educational technology community is to continue and expand the tradition of sharing dialog on our teaching experiences to help other educators. At minimum, this manuscript is a call for future efforts to document and share experiences teaching online statistics courses to students in our field.

References

Andresen, M. A. (2009). Asynchronous discussion forums: Success factors, outcomes, assessments, and limitations. Journal of Educational Technology & Society, 12(1), 249–257.

Bolliger, D., & Martindale, T. (2001). Student satisfaction in an online master’s degree program in instructional technology (pp. 4–7). Atlanta: Proceedings of the National Convention of the Association for Educational Communications and Technology.

Cruise, R. J., & Wilkins, E. M. (1980). STARS: Statistical anxiety rating scale. Unpublished Manuscript, Andrews University, Berrien Springs, MI, 20.

Dawson, K., & Kumar, S. (2014). An analysis of professional practice Ed.D. dissertations in educational technology. TechTrends, 58(4), 62–72.

DeVaney, T. A. (2010). Anxiety and attitude of graduate students in on-campus vs. online statistics courses. Journal of Statistics Education, 18(1), 1–15. https://doi.org/10.1080/10691898.2010.11889472.

DeVaney, T. A. (2016). Confirmatory factor analysis of the statistical anxiety rating scale with online graduate students. Psychological Reports, 118(2), 565–586. https://doi.org/10.1177/0033294116644093.

Dixon, M., Beveridge, P., Farrior, C., Williams, B. A., Sugar, W., & Brown, A. (2012). Development of an online orientation for an instructional technology masters program. TechTrends, 56(6), 44–48.

Field, A. (2013). Discovering statistics using IBM SPSS statistics (4th ed.). Sage Publications Ltd.

Glaser, B. G. (1965). The constant comparative method of qualitative analysis. Social Problems, 12(4), 436–445. https://doi.org/10.2307/798843.

Grimshaw, S. D. (2015). A framework for infusing authentic data experiences within statistics courses. The American Statistician, 69(4), 307–314. https://doi.org/10.1080/00031305.2015.1081106.

Hanna, D., Shevlin, M., & Dempster, M. (2008). The structure of the statistics anxiety rating scale: A confirmatory factor analysis using UK psychology students. Personality and Individual Differences, 45(1), 68–74. https://doi.org/10.1016/j.paid.2008.02.021.

Herrington, J., Reeves, T. C., Oliver, R., & Woo, Y. (2004). Designing authentic activities in web-based courses. Journal of Computing in Higher Education, 16(1), 3–29. https://doi.org/10.1007/BF02960280.

Ku, H. Y., Plantz-Masters, S., Hosler, K., Diteeyont, W., Akarasriworn, C., & Lin, T. Y. (2012). An analysis of educational technology-related doctoral programs in the United States. In M. Orey, S. Jones, & R. Branch (Eds.), Educational media and technology yearbook (pp. 99–112). New York: Springer.

Kumar, S., & Ritzhaupt, A. D. (2014). Adapting the community of inquiry survey for an online graduate program: Implications for online programs. E-learning and Digital Media. Special Issue: Community of Inquiry, 11(1), 59–71. https://doi.org/10.2304/elea.2014.11.1.59.

Kung, M., & Logan, T. J. (2014). An overview of online and hybrid doctoral degree programs in educational technology. TechTrends, 58(4), 16–18.

Lee, C. Y., Dickerson, J., & Winslow, J. (2012). An analysis of organizational approaches to online course structures. Online Journal of Distance Learning Administration, 15(1), 1–4.

Lombardi, M. M. (2007). Authentic learning for the 21st century: An overview. Educause Learning Initiative, 1, 1–12.

Mandernach, B. J., Gonzales, R. M., & Garrett, A. L. (2006). An examination of online instructor presence via threaded discussion participation. Journal of Online Learning and Teaching, 2(4), 248–260.

Miller, M. D., Linn, R. L., & Gronlund, N. E. (2013). Measurement and assessment in teaching (11th ed.). Upper Saddle River: Prentice-Hall.

Mills, J. D., & Raju, D. (2011). Teaching statistics online: A decade's review of the literature about what works. Journal of Statistics Education, 19(2), 1–28. https://doi.org/10.1080/10691898.2011.11889613.

Onwuegbuzie, A. J. (2004). Academic procrastination and statistics anxiety. Assessment & Evaluation in Higher Education, 29(1), 3–19.

Papousek, I., Ruggeri, K., Macher, D., Paechter, M., Heene, M., Weiss, E. M., et al. (2012). Psychometric evaluation and experimental validation of the statistics anxiety rating scale. Journal of Personality Assessment, 94(1), 82–91. https://doi.org/10.1080/00223891.2011.627959.

Reiser, B., & Tabak, I. (2014). Scaffolding. In The Cambridge Handbook of the Learning Sciences, Second Edition (pp. 44–62). Cambridge University Press. https://doi.org/10.1017/CBO9781139519526.005.

Richey, R. C., & Klein, J. D. (2014). Design and development research. In J. Spector, M. Merrill, J. Elen, & M. Bishop (Eds.), Handbook of research on educational communications and technology (pp. 141–150). New York: Springer.

Seaman, J. E., Allen, I. E., & Seaman, J. (2018). Grade increase: Tracking distance education in the United States. Babson Survey Research Group. http://www.onlinelearningsurvey.com/highered.html

Songsore, E., & White, B. J. G. (2018). Students’ perceptions of the future relevance of statistics after completing an online introductory statistics course. Statistics Education Research Journal, 17(2), 120–140.

Thompson, C. J., Leonard, L., & Bridier, N. (2019). Online discussion forums: Quality interactions for reducing statistics anxiety in graduate education students. International Journal of E-Learning & Distance Education, 34(1), 1–31.

Toepoel, V., & Dillman, D. A. (2011). Words, numbers, and visual heuristics in web surveys: Is there a hierarchy of importance? Social Science Computer Review, 29(2), 193–207. https://doi.org/10.1177/0894439310370070.

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Cambridge: Harvard University Press.

Wang, S. K. (2006). Learning hands-on skills in an online environment: The effectiveness of streaming demonstration animation. Journal of Interactive Online Learning, 5(1), 1–14.

West, R. E., Thomas, R. A., Bodily, R., Wright, C., & Borup, J. (2017). An analysis of instructional design and technology departments. Educational Technology Research and Development, 65(4), 869–888. https://doi.org/10.1007/s11423-016-9490-1.

Zoom for video, conference rooms, and phone (2020). Retrieved from https://zoom.us/.

Ethics declarations

The authors declare that they have no conflicts of interest. This research involved human participants and was approved by an Institutional Review Board (IRB) prior to data collection. Ethical standards were followed in all phases of the study.

Cite this article

Ritzhaupt, A.D., Valle, N. & Sommer, M. Design, Development, and Evaluation of an Online Statistics Course for Educational Technology Doctoral Students: a Design and Development Case. J Form Des Learn 4, 119–135 (2020). https://doi.org/10.1007/s41686-020-00051-5

DOI: https://doi.org/10.1007/s41686-020-00051-5