Abstract

This study aimed to examine changes in Healthy Eating Index-2010 (HEI-2010) scores, components, and energy intake between automated Bite Counter (Bite) and traditional diet tracking mobile app (App) groups. This was a secondary analysis of the DIET Mobile study, a 6-month weight loss intervention. Assessments were conducted at baseline, 3 and 6 months. Twenty-four-hour dietary recall data were collected. Overweight/obese adults (N = 81) were randomized to Bite or App groups. The intervention was delivered through behavioral podcasts. Participants were provided customized calorie/bite goals and used their device to track intake. We assessed changes in HEI-2010 scores from baseline to 6 months between groups. t tests, chi-square, and repeated measures ANOVA were performed. Models included time, group, and group×time interaction, controlling for no other covariates. There were no significant changes in HEI-2010 scores, components, or energy intake between groups at 3 or 6 months. This study found that both the Bite and App groups were able to reduce their energy intake and there was no difference in changes in diet quality between groups, which provides some support for using the less intensive, more automated method (Bite Counter) for long-term dietary self-monitoring. The study had a low sample size according to power calculations. Future interventions aimed at improving diet quality through mHealth technology should investigate the potential to develop a new app or modify an existing app that would allow for dietary self-monitoring that provides specific feedback on how users’ diets align with diet quality components in the HEI to improve overall diet quality.

Introduction

Obesity is one of the most burdensome and costly chronic health conditions worldwide, affecting over one-third (36.5%) of US adults (Lehnert et al. 2013; Ogden et al. 2015). Burgeoning evidence supports the use of mobile health (mHealth) technology as an innovative and effective strategy for promoting weight loss through self-monitoring (Hutchesson et al. 2015) by providing customized and detailed feedback on eating behavior, including caloric, macronutrient, and micronutrient information (Hingle and Patrick 2016).

More frequent feedback on dietary intake is associated with higher self-monitoring adherence and greater weight loss (Turk et al. 2013). mHealth technology has the capacity to facilitate changes in dietary intake, such as increasing the intake of fruits, vegetables, and whole grains, and decreasing added salt and sugar, which can result in significant public health improvements (Hingle and Patrick 2016). Diet quality, the overall pattern of eating, captures the synergistic effect of foods as they are consumed as complex meals (Messina et al. 2001).

Much of the current mHealth technology depends on self-reported data input into mobile apps (Turner-McGrievy et al. 2013); however, emerging technology is incorporating wearable devices to capture real-time data (Dong et al. 2012; Sun et al. 2014). The Bite Counter, a wearable bite-counting device, provides a more automated approach to dietary self-monitoring compared to traditional diet apps (Dong et al. 2012). The Bite Counter provides an average estimation of energy intake based on the number of bites taken using a kilocalories per bite (KPB) formula that is customized to participants’ demographic characteristics. It aims to decrease the burden of daily self-monitoring, which has the potential to increase longevity of self-monitoring compared to more detailed and intensive methods (e.g., diet tracking apps). While these newer technologies aim to be more objective in capturing real-time data than current methods, they are unable to provide the high level of dietary feedback that traditional mobile diet apps provide (i.e., specific energy, macronutrient, and micronutrient intake) (Boushey et al. 2016).

Given that wearable diet tracking methods may be less burdensome, there is a need to examine how these methods compare to a standard diet app. The goal of the present study was to examine differences in dietary intake and diet quality between individuals randomized to track their diet using a Bite Counter (Bite) or a diet-tracking mobile app (App) as part of a remotely delivered 6-month behavioral weight loss intervention. The primary hypotheses were: (1) the Bite group would have a greater reduction in energy intake (total kcals) and (2) the App group would have greater improvements in diet quality (via the Healthy Eating Index-2010). These hypotheses were based on the idea that the Bite group would receive immediate feedback regarding the number of bites taken during each eating occasion, while the App group would receive more detailed dietary feedback once items were entered into the app.

Methods

The Dietary Intervention to Enhance Tracking with mobile devices (DIET Mobile) study was a 6-month randomized weight loss trial comparing two different mobile diet self-monitoring methods: a traditional diet app (Calorie Counter by FatSecret) and a wearable bite-tracking device (Bite Counter). Overweight or obese (BMI 25–49.9 kg/m²) adults in South Carolina were recruited through worksite listserv messages, fliers, and newspaper ads. Participants were eligible if they met the weight criteria, were 18–65 years old, were interested in losing weight, owned an Android or iPhone, had a stable medical status (e.g., no uncontrolled thyroid conditions or diabetes), and were willing to accept random assignment to a diet self-monitoring condition.

During orientation, participants signed an informed consent form, completed baseline questionnaires, had their height and weight assessed, and completed a 24-h dietary recall (representing a weekday) using the Automated Self-Administered 24-h dietary recall (ASA24™) (Kirkpatrick et al. 2014). Participants were contacted later to complete a second unannounced dietary recall (representing a weekend day). ASA24 recall data were used to calculate Food Patterns Equivalents Database values before using the ASA24 HEI scoring macro provided by the National Cancer Institute to calculate HEI-2010 scores per individual across both days of recalls (National Cancer Institute 2016). HEI-2010 scores measure adherence to the 2010 Dietary Guidelines for Americans (Guenther et al. 2013; U.S. Department of Agriculture and U.S. Department of Health and Human Services 2010). The HEI-2010 comprises 12 dietary components, including nine adequacy components (areas where current consumption among the general population is too low) and three moderation components (areas where current consumption is too high) (Guenther et al. 2013). Adequacy components include: total fruit, whole fruit, total vegetables, greens and beans, whole grains, dairy, total protein foods, seafood and plant proteins, and ratio of unsaturated to saturated fatty acids. Moderation components include: refined grains, sodium, and empty calories. A major benefit of the HEI is that it uses density standards for scoring (i.e., intake per 1000 kcals), which are independent of an individual’s energy requirement. Total HEI-2010 scores range from 0 to 100. The average total HEI score, presented as mean ± SE, for U.S. adults 18–64 years is 58.3 ± 0.98 (United States Department of Agriculture (USDA) n.d.). Prior to revealing group assignment, data assessors were blinded to study conditions. The University of South Carolina Institutional Review Board approved the study and all participants provided written informed consent.
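The density-based scoring described above can be sketched in a few lines. This is only an illustration of the idea (intake expressed per 1000 kcal, then prorated linearly between a minimum and a maximum standard); it is not the ASA24 HEI scoring macro. The function names and the numeric standards passed in below are placeholders chosen for illustration, and the actual HEI-2010 standards are those given in Guenther et al. (2013).

```python
def density_per_1000_kcal(amount, energy_kcal):
    """Express an intake amount per 1000 kcal of energy intake."""
    if energy_kcal <= 0:
        raise ValueError("energy_kcal must be positive")
    return amount / energy_kcal * 1000.0


def score_adequacy(density, standard_for_max, max_points):
    """Adequacy component: 0 points at zero intake, rising linearly to
    max_points once the density reaches standard_for_max."""
    fraction = min(max(density / standard_for_max, 0.0), 1.0)
    return max_points * fraction


def score_moderation(density, standard_for_max, standard_for_zero, max_points):
    """Moderation component: max_points at or below standard_for_max,
    falling linearly to 0 at or above standard_for_zero."""
    if density <= standard_for_max:
        return float(max_points)
    if density >= standard_for_zero:
        return 0.0
    span = standard_for_zero - standard_for_max
    return max_points * (standard_for_zero - density) / span


# Hypothetical day: 1.0 cup-equivalents of fruit on 2500 kcal, scored against
# an illustrative standard of 0.8 cup-equivalents per 1000 kcal (5 points).
fruit_density = density_per_1000_kcal(1.0, 2500)
fruit_score = score_adequacy(fruit_density, standard_for_max=0.8, max_points=5)
```

Because the input is a density rather than an absolute amount, two people with different energy requirements who eat the same mix of foods receive the same component score, which is the property the paragraph above highlights.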

The 6-month weight loss intervention was delivered remotely. Participants received behavioral weight loss information through twice-weekly podcasts based on Social Cognitive Theory (Bandura 2004) and the Diabetes Prevention Program (Diabetes Prevention Program (DPP) 2002). Participants in the App group used a traditional diet app (Calorie Counter by FatSecret) to track all foods and beverages consumed each day. To facilitate 1–2 lb of weight loss each week, participants received customized calorie goals that have been successfully used in previous weight loss interventions (1200 kcal/d for participants weighing ≤ 90 kg, 1500 kcal/d for participants weighing 91–112 kg, and 1800 kcal/d for participants weighing ≥ 113 kg) (Jakicic et al. 2012). Participants in the Bite group were provided a Bite Counter, a wearable device worn like a watch, which utilizes microelectromechanical (MEMS) gyroscope technology to track wrist motion, automatically detecting when a bite of food is eaten (Dong et al. 2012). Users wear the device on their dominant hand and turn on the device at the beginning of an eating event to track all foods and beverages containing calories. While eating, the device displays the bite count for the current eating event, while allowing users to view the total bites consumed throughout the day (Scisco et al. 2011). They were instructed to use their non-dominant hand to drink non-caloric beverages, such as water or diet soda. Similar to the App group, Bite group participants received a personalized bites/day goal based on the same kcal/d goals used for the App group. These bites/day goals were developed from a formula that predicts an individual’s kilocalories per bite (KPB) based on demographic characteristics (i.e., gender, height, weight, and age): KPB (male) = 0.0967 × height (cm) + 0.0992 × weight (kg) − 0.2478 × age; KPB (female) = 0.0528 × height (cm) + 0.0640 × weight (kg) − 0.0534 × age. This formula has been validated in previous studies and described in detail elsewhere (Salley 2013; Scisco et al. 2014; Shen et al. 2016). Participants used their respective mHealth tools (either App or Bite Counter) to track dietary intake throughout the study period. Additional details on intervention activities have been published elsewhere (Turner-McGrievy et al. 2017).
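As a rough illustration of how a bites/day goal might follow from the KPB formula and the calorie tiers quoted above, consider the sketch below. The coefficients and tiers come from the text; everything else is an assumption made for the example: age is taken to be in years, weights falling between the stated tier boundaries (e.g., 90.5 kg) are placed in the middle tier, and the bite goal is assumed to be the calorie goal divided by KPB and rounded, which the text does not state explicitly. The function names are hypothetical.

```python
def kcal_goal(weight_kg):
    """Daily calorie goal by body weight, using the tiers quoted in the text.
    Assumption: weights strictly between 90 and 113 kg get the middle tier."""
    if weight_kg <= 90:
        return 1200
    if weight_kg < 113:
        return 1500
    return 1800


def kcal_per_bite(sex, height_cm, weight_kg, age_years):
    """Predicted kilocalories per bite (KPB) from the published coefficients.
    Assumption: age is expressed in years."""
    if sex == "male":
        return 0.0967 * height_cm + 0.0992 * weight_kg - 0.2478 * age_years
    if sex == "female":
        return 0.0528 * height_cm + 0.0640 * weight_kg - 0.0534 * age_years
    raise ValueError("sex must be 'male' or 'female'")


def bites_per_day_goal(sex, height_cm, weight_kg, age_years):
    """Assumed conversion: daily calorie goal divided by KPB, rounded."""
    kpb = kcal_per_bite(sex, height_cm, weight_kg, age_years)
    if kpb <= 0:
        raise ValueError("predicted KPB is not positive for these inputs")
    return round(kcal_goal(weight_kg) / kpb)


# Hypothetical participant: a 45-year-old woman, 165 cm tall, weighing 85 kg.
example_kpb = kcal_per_bite("female", 165, 85, 45)        # about 11.7 kcal/bite
example_goal = bites_per_day_goal("female", 165, 85, 45)  # bites per day
```

Under these assumptions the hypothetical participant would receive a goal of roughly 100 bites per day in place of a 1200 kcal/d goal.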

Statistical Analysis

To examine differences in baseline demographic characteristics between groups, independent sample t tests were used for continuous variables and χ² tests of independence were used for categorical data. Repeated measures ANOVA models were used to assess changes in HEI scores, HEI components that comprise the total HEI-2010 score, and energy intake (kcals) using PROC MIXED in SAS (SAS version 9.4, SAS Institute Inc., Cary, North Carolina, 2013). Each outcome (total HEI score, HEI components, and energy intake) was modeled with group, a three-level time variable (i.e., baseline, 3, and 6 months), and the group × time interaction, controlling for no other covariates. Changes in total HEI-2010 score, HEI components, and energy intake between groups were assessed with baseline-to-three-month and baseline-to-six-month effects from the single point in time model. The statistical models used full information maximum likelihood estimation with robust standard errors to utilize all available data when attrition is present.
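The quantity tested by the group × time interaction can be illustrated as a difference in changes from baseline between the two groups. The sketch below computes that contrast from simple cell means; it is not the PROC MIXED model used in the study, and with missing follow-up data a likelihood-based estimate would generally differ from these raw means. The function names are hypothetical and the scores are made-up numbers, not study data.

```python
from statistics import mean


def cell_means(records):
    """Average the outcome within each (group, month) cell.
    records: iterable of (group, month, value); value None means missing."""
    cells = {}
    for group, month, value in records:
        if value is not None:
            cells.setdefault((group, month), []).append(value)
    return {key: mean(values) for key, values in cells.items()}


def change_from_baseline(means, group, month):
    """Mean at the follow-up month minus the baseline (month 0) mean."""
    return means[(group, month)] - means[(group, 0)]


def interaction_contrast(means, month, group_a="Bite", group_b="App"):
    """Difference between groups in their change from baseline."""
    return (change_from_baseline(means, group_a, month)
            - change_from_baseline(means, group_b, month))


# Made-up HEI totals for two participants per group (None = missed visit).
toy_records = [
    ("Bite", 0, 50.0), ("Bite", 0, 54.0),
    ("Bite", 3, 52.0), ("Bite", 3, 56.0),
    ("Bite", 6, 53.0), ("Bite", 6, None),
    ("App", 0, 48.0), ("App", 0, 52.0),
    ("App", 3, 55.0), ("App", 3, 57.0),
    ("App", 6, 56.0), ("App", 6, 58.0),
]
toy_means = cell_means(toy_records)
contrast_3mo = interaction_contrast(toy_means, month=3)
contrast_6mo = interaction_contrast(toy_means, month=6)
```

A contrast near zero corresponds to the two groups changing by similar amounts; the mixed model additionally supplies a standard error for each contrast so that it can be tested.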

Results

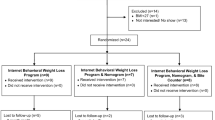

Data were collected between 2015 and 2016. The full CONSORT diagram has been described elsewhere (Turner-McGrievy et al. 2017). Briefly, a total of 306 people completed the online screening questionnaire and 164 were excluded, mainly because they could not be reached, had diabetes treated with oral medications or insulin, or had lost more than 10 lbs in the past 6 months. One hundred forty-two people were invited to orientation and 81 completed all baseline measures and were randomized to either the Bite group (n = 39) or App group (n = 42). Attrition was 16% at the 3-month assessment and 25% at the 6-month assessment. Baseline demographics are presented in Table 1. There were no differences between groups for any of the baseline characteristics. There were no differences in age, baseline body mass index (BMI), sex, or race between those who completed all study activities and those who did not participate in the 6-month assessment (p’s all > 0.05).
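The participant flow above can be checked with simple arithmetic. The counts screened, excluded, and randomized are taken from the text; the numbers lost at each assessment are not reported here, so the values computed below are only approximations implied by the stated attrition percentages, and the variable names are hypothetical.

```python
screened = 306
excluded = 164
invited_to_orientation = screened - excluded  # should match the 142 reported

randomized = {"Bite": 39, "App": 42}
total_randomized = sum(randomized.values())   # should match the 81 reported

# Reported attrition proportions at the 3- and 6-month assessments.
attrition = {3: 0.16, 6: 0.25}

# Approximate head counts implied by the percentages (not reported figures).
approx_lost = {month: round(rate * total_randomized)
               for month, rate in attrition.items()}
approx_retained = {month: total_randomized - lost
                   for month, lost in approx_lost.items()}
```

Under this reading, the 3- and 6-month analyses would rest on roughly 68 and 61 participants, respectively, which is relevant to the sample size limitation noted later.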

Results are presented as means ± SE. At baseline, average total HEI scores for both App and Bite groups fell below the average HEI score for US adults (United States Department of Agriculture (USDA), n.d.). Main outcomes of changes in total HEI scores and HEI components are presented by randomization group in Table 2. Compared to baseline, there were no significant changes in HEI-2010 scores, components, or energy intake between groups at 3 or 6 months.

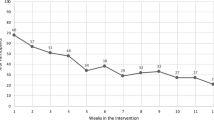

Exploratory within-subjects investigations were conducted for changes in HEI-2010 scores, components, and energy intake within each group at 3 and 6 months. All changes at 3 and 6 months are in comparison to values at baseline. As shown in Table 2 (superscripts a–c), both Bite and App groups had significant reductions in energy intake at 3 and 6 months. The App group’s HEI score increased at both 3 and 6 months. The App group increased their intake of total vegetables, total fruits, and whole grains, and decreased their intake of seafood and plant proteins at 3 months. Lastly, the App group increased their consumption of empty calories at 3 and 6 months, while the Bite group increased their consumption of empty calories at 6 months only.

Discussion

The current study found no significant difference in changes in HEI-2010 scores, components, or energy intake between Bite and App groups at 3 or 6 months, compared to baseline. Within each group, both Bite and App groups had significantly lower energy intake, yet higher consumption of empty calories at 6 months. Additionally, the App group increased their overall diet quality at 6 months. Overall, there were no differences in changes in diet quality between Bite and App groups from baseline to 6 months.

Our study results differ from previous research that examined dietary self-monitoring and diet quality; however, previous research has not examined changes in diet quality after using a wearable diet tracking device. For example, Carpenter and colleagues (2004) conducted a randomized controlled trial (N = 98) and found that individuals who kept weekly food logs and attended weekly group meetings significantly improved their overall diet quality score and specific diet components (i.e., fruit, total fat, cholesterol, and sodium) compared with a group receiving informational mailings and a usual care control group. While food logs were associated with improved diet quality, there is conflicting evidence around whether mHealth technology is related to improved diet quality. In terms of diet quality components, Acharya and colleagues (2011) found that participants who used a personal digital assistant for dietary self-monitoring increased their intake of fruit, vegetables, and whole grains and decreased their intake of refined grains when compared to participants who used paper. In regard to overall diet quality, in an 8-week feasibility study comparing three dietary self-monitoring methods, there were no significant differences in changes in diet quality between participants using a mobile app, the memo function on mobile phones, and paper logs (Wharton et al. 2014). Similarly, there were no significant differences for changes in specific HEI categories (i.e., whole grains, total fruit, saturated fat, and sodium) (Wharton et al. 2014).

Using mHealth technology to improve nutrition is a growing area of research; however, the majority of research using mobile technology for dietary self-monitoring has focused on mobile apps, which require users to either search for foods/beverages in a database, use a barcode scanner, or take a picture of the item (Thomas and Bond 2014). Daily self-monitoring can be burdensome (Burke et al. 2011), causing people to decrease monitoring over time (Burke et al. 2009). Emerging mHealth research is evaluating the use of more automated devices for dietary self-monitoring (Hermans et al. 2017). Our study results indicate that there were no significant differences in the reduction of energy intake between using the Bite Counter, which requires very little effort to track foods, and using an app. This automated approach may help decrease the burden associated with frequent self-monitoring.

The present study has multiple strengths, primarily the use of the Bite Counter as a novel strategy for assessing dietary intake in real-time in the original weight loss trial. The use of the HEI goes beyond a focus on energy intake to include a comprehensive assessment of dietary intake as it pertains to Dietary Guidelines for Americans, which has implications for obesity and other chronic diseases. For each 10-unit increase in total HEI score, there is an 8% lower risk of abdominal obesity in women, and a 15% lower risk in men (Tande et al. 2010). Standardized assessment of diet quality, such as the HEI, allows for equal comparisons across studies.

Limitations

The study’s findings should be interpreted in the context of a few limitations. It is possible that participants underreported their energy intake in the 24-h recalls (Ma et al. 2009). Previous research has suggested that mobile apps for dietary self-monitoring that provide feedback focused on caloric intake, rather than feedback described in relation to diet quality, do not give users enough information to improve the healthfulness of their diet while managing their weight (Wharton et al. 2014). Since the DIET Mobile study was focused on weight loss through dietary self-monitoring and reducing energy intake, the intervention might need an additional component focused on improving and tracking diet quality in relation to the diet components in the HEI to facilitate lasting changes in diet quality. Furthermore, the study had a low sample size according to power calculations.

Implications for Research and Practice

Optimal diet quality has been associated with decreased risk for chronic disease (Appel et al. 1997; Hu et al. 2000) and mortality (Kant et al. 2000). In order to prevent chronic disease, the Dietary Guidelines for Americans recommends increasing fruits, vegetables, and lean protein, and decreasing sodium and saturated fat (U.S. Department of Agriculture and U.S. Department of Health and Human Services 2010). Mobile technology holds promise in being able to improve dietary intake, which could result in significant public health improvements (Hingle and Patrick 2016). This study found that both the Bite and App groups were able to reduce their energy intake and there was no difference in changes in diet quality between groups. This provides some support for using the less intensive, more automated method (Bite Counter) for long-term dietary self-monitoring. Future interventions aimed at improving diet quality through mHealth technology should investigate the potential to develop a new app or modify an existing app that would allow for dietary self-monitoring that provides specific feedback on how users’ diets align with HEI components. This could help transition the field of mobile dietary self-monitoring research from focusing on restricting energy intake for weight loss to increasing healthful diet quality components (i.e., fruits, vegetables, whole grains, and low-fat dairy) and decreasing unhealthy diet quality components (i.e., “empty calories” and saturated fat) that would improve overall diet quality. Health practitioners could suggest the use of technology-based self-monitoring tools to assist clients with regular self-monitoring in conjunction with nutrition education. Future mHealth obesity interventions should also examine factors related to engagement with technology-based self-monitoring tools to better understand how they can be effectively used to improve overall diet quality.

References

Acharya, S. D., Elci, O. U., Sereika, S. M., Styn, M. A., & Burke, L. E. (2011). Using a personal digital assistant for self-monitoring influences diet quality in comparison to a standard paper record among overweight/obese adults. Journal of the American Dietetic Association, 111(4), 583–588. https://doi.org/10.1016/j.jada.2011.01.009.

Appel, L. J., Moore, T. J., Obarzanek, E., Vollmer, W. M., Svetkey, L. P., Sacks, F. M., et al. (1997). A clinical trial of the effects of dietary patterns on blood pressure. New England Journal of Medicine, 336(16), 1117–1124.

Bandura, A. (2004). Health promotion by social cognitive means. Health Education & Behavior, 31(2), 143–164. https://doi.org/10.1177/1090198104263660.

Boushey, C. J., Spoden, M., Zhu, F. M., Delp, E. J., & Kerr, D. A. (2016). New mobile methods for dietary assessment: review of image-assisted and image-based dietary assessment methods. Proceedings of the Nutrition Society, 1–12.

Burke, L. E., Swigart, V., Turk, M. W., Derro, N., & Ewing, L. J. (2009). Experiences of self-monitoring: successes and struggles during treatment for weight loss. Qualitative Health Research, 19(6), 815–828. https://doi.org/10.1177/1049732309335395.

Burke, L. E., Wang, J., & Sevick, M. A. (2011). Self-monitoring in weight loss: a systematic review of the literature. Journal of the American Dietetic Association, 111(1), 92–102. https://doi.org/10.1016/j.jada.2010.10.008.

Carpenter, R. A., Finley, C., & Barlow, C. E. (2004). Pilot test of a behavioral skill building intervention to improve overall diet quality. Journal of Nutrition Education and Behavior, 36(1), 20–26.

Diabetes Prevention Program (DPP). (2002). The diabetes prevention program (DPP). Diabetes Care, 25(12), 2165–2171. https://doi.org/10.2337/diacare.25.12.2165.

Dong, Y., Hoover, A., Scisco, J., & Muth, E. (2012). A new method for measuring meal intake in humans via automated wrist motion tracking. Applied Psychophysiology and Biofeedback, 37(3), 205–215. https://doi.org/10.1007/s10484-012-9194-1.

Guenther, P. M., Casavale, K. O., Reedy, J., Kirkpatrick, S. I., Hiza, H. A. B., Kuczynski, K. J., et al. (2013). Update of the healthy eating index: HEI-2010. Journal of the Academy of Nutrition and Dietetics, 113(4), 569–580.

Hermans, R. C. J., Hermsen, S., Robinson, E., Higgs, S., Mars, M., & Frost, J. H. (2017). The effect of real-time vibrotactile feedback delivered through an augmented fork on eating rate, satiation, and food intake. Appetite, 113, 7–13. https://doi.org/10.1016/j.appet.2017.02.014.

Hingle, M., & Patrick, H. (2016). There are thousands of apps for that: navigating mobile technology for nutrition education and behavior. Journal of Nutrition Education and Behavior, 48(3), 213–218.e1. https://doi.org/10.1016/j.jneb.2015.12.009.

Hu, F. B., Rimm, E. B., Stampfer, M. J., Ascherio, A., Spiegelman, D., & Willett, W. C. (2000). Prospective study of major dietary patterns and risk of coronary heart disease in men. The American Journal of Clinical Nutrition, 72(4), 912–921.

Hutchesson, M. J., Rollo, M. E., Krukowski, R., Ells, L., Harvey, J., Morgan, P. J., … Collins, C. E. (2015). eHealth interventions for the prevention and treatment of overweight and obesity in adults: a systematic review with meta-analysis. Obesity Reviews, 16(5), 376–392. https://doi.org/10.1111/obr.12268.

Jakicic, J. M., Tate, D. F., Lang, W., Davis, K. K., Polzien, K., Rickman, A. D., et al. (2012). Effect of a stepped-care intervention approach on weight loss in adults: a randomized clinical trial. JAMA, 307(24), 2617–2626. https://doi.org/10.1001/jama.2012.6866.

Kant, A. K., Schatzkin, A., Graubard, B. I., & Schairer, C. (2000). A prospective study of diet quality and mortality in women. Journal of the American Medical Association, 283(16), 2109–2115.

Kirkpatrick, S. I., Subar, A. F., Douglass, D., Zimmerman, T. P., Thompson, F. E., Kahle, L. L., … Potischman, N. (2014). Performance of the automated self-administered 24-hour recall relative to a measure of true intakes and to an interviewer-administered 24-h recall. The American Journal of Clinical Nutrition, 100(1), 233–240. https://doi.org/10.3945/ajcn.114.083238.

Lehnert, T., Sonntag, D., Konnopka, A., Riedel-Heller, S., & König, H.-H. (2013). Economic costs of overweight and obesity. Best Practice & Research Clinical Endocrinology & Metabolism, 27(2), 105–115. https://doi.org/10.1016/j.beem.2013.01.002.

Ma, Y., Olendzki, B. C., Pagoto, S. L., Hurley, T. G., Magner, R. P., Ockene, I. S., … Hébert, J. R. (2009). Number of 24-hour diet recalls needed to estimate energy intake. Annals of Epidemiology, 19(8), 553–559. https://doi.org/10.1016/j.annepidem.2009.04.010.

Messina, M., Lampe, J., Birt, D., Appel, L., Pivonka, E., Berry, B., & Jacobs, D. (2001). Reductionism and the narrowing nutrition perspective: time for reevaluation and emphasis on food synergy. Journal of the American Dietetic Association, 101(12), 1416–1419.

National Cancer Institute. (2016). Resources related to the Healthy Eating Index (HEI). Retrieved from http://epi.grants.cancer.gov/asa24/resources/hei.html

Ogden, C. L., Carroll, M. D., Fryar, C. D., & Flegal, K. M. (2015). Prevalence of obesity among adults and youth: United States, 2011–2014. NCHS Data Brief, 219(219), 1–8.

Salley, J. (2013). Accuracy of a bite-count based calorie estimate compared to human estimates with and without calorie information available. Retrieved from http://tigerprints.clemson.edu/all_theses/1680/

SAS Institute Inc. (2013). SAS/STAT (Version 9.4). Cary, NC: SAS Institute Inc.

Scisco, J. L., Muth, E. R., Dong, Y., & Hoover, A. W. (2011). Slowing bite-rate reduces energy intake: an application of the bite counter device. Journal of the American Dietetic Association, 111(8), 1231–1235. https://doi.org/10.1016/j.jada.2011.05.005.

Scisco, J. L., Muth, E. R., & Hoover, A. W. (2014). Examining the utility of a bite-count–based measure of eating activity in free-living human beings. Journal of the Academy of Nutrition and Dietetics, 114(3), 464–469. https://doi.org/10.1016/j.jand.2013.09.017.

Shen, Y., Salley, J., Muth, E., & Hoover, A. (2016). Assessing the accuracy of a wrist motion tracking method for counting bites across demographic and food variables. IEEE Journal of Biomedical and Health Informatics.

Sun, M., Burke, L. E., Mao, Z.-H., Chen, Y., Chen, H.-C., Bai, Y., … Jia, W. (2014). eButton: a wearable computer for health monitoring and personal assistance. Proceedings / Design Automation Conference. Design Automation Conference, 2014, 1–6. https://doi.org/10.1145/2593069.2596678.

Tande, D. L., Magel, R., & Strand, B. N. (2010). Healthy eating index and abdominal obesity. Public Health Nutrition, 13(02), 208. https://doi.org/10.1017/S1368980009990723.

Thomas, J. G., & Bond, D. S. (2014). Review of innovations in digital health technology to promote weight control. Current Diabetes Reports, 14(5), 485. https://doi.org/10.1007/s11892-014-0485-1.

Turk, M. W., Elci, O. U., Wang, J., Sereika, S. M., Ewing, L. J., Acharya, S. D., … Burke, L. E. (2013). Self-monitoring as a mediator of weight loss in the SMART randomized clinical trial. International Journal of Behavioral Medicine, 20(4), 556–561. https://doi.org/10.1007/s12529-012-9259-9.

Turner-McGrievy, G. M., Beets, M. W., Moore, J. B., Kaczynski, A. T., Barr-Anderson, D. J., & Tate, D. F. (2013). Comparison of traditional versus mobile app self-monitoring of physical activity and dietary intake among overweight adults participating in an mHealth weight loss program. Journal of the American Medical Informatics Association, 20(3), 513–518. https://doi.org/10.1136/amiajnl-2012-001510.

Turner-McGrievy, G. M., Wilcox, S., Boutté, A., Hutto, B. E., Singletary, C., Muth, E. R., & Hoover, A. W. (2017). The dietary intervention to enhance tracking with mobile devices (DIET mobile) study: a 6-month randomized weight loss trial. Obesity, 25(8), 1336–1342. https://doi.org/10.1002/oby.21889.

United States Department of Agriculture (USDA). (n.d.). Healthy Eating Index (HEI). Retrieved June 9, 2017, from https://www.cnpp.usda.gov/healthyeatingindex

U.S. Department of Agriculture, & U.S. Department of Health and Human Services. (2010). Dietary guidelines for Americans, 2010 (7th ed.). Washington, D.C: U.S. Government Printing Office. Retrieved from https://health.gov/dietaryguidelines/dga2010/DietaryGuidelines2010.pdf.

Wharton, C. M., Johnston, C. S., Cunningham, B. K., & Sterner, D. (2014). Dietary self-monitoring, but not dietary quality, improves with use of smartphone app technology in an 8-week weight loss trial. Journal of Nutrition Education and Behavior, 46(5), 440–444. https://doi.org/10.1016/j.jneb.2014.04.291.

Funding

This project was funded by the National Cancer Institute of the National Institutes of Health under award number R21CA18792901A1 (PI: Turner-McGrievy). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Ethics declarations

Conflict of Interest

Authors E and F have formed a company, Bite Technologies, to market and sell a bite counting device. Clemson University owns a US patent for intellectual property known as “The Weight Watch”, USA, Patent No. 8310368, filed January 2009, granted November 13, 2012. Bite Technologies has licensed the method from Clemson University. Authors E and F receive royalty payments from bite counting device sales. The remaining authors do not have any conflicts of interest to declare.

Ethical Approval

The University of South Carolina Institutional Review Board approved the study. All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. This article does not contain any studies with animals performed by any of the authors.

Informed Consent

Written informed consent was obtained from all individual participants included in the study.

Cite this article

Boutté, A.K., Turner-McGrievy, G.M., Wilcox, S. et al. Comparing Changes in Diet Quality Between Two Technology-Based Diet Tracking Devices. J. technol. behav. sci. 4, 25–32 (2019). https://doi.org/10.1007/s41347-018-0075-1

DOI: https://doi.org/10.1007/s41347-018-0075-1